Author Contributions

Conceptualization, R.B.S. and H.Z.; methodology, R.B.S.; software, R.B.S.; validation, R.B.S.; formal analysis, R.B.S. and H.Z.; investigation, R.B.S.; resources, R.B.S. and H.Z.; data curation, R.B.S.; writing—original draft preparation, R.B.S.; writing—review and editing, R.B.S. and H.Z.; visualization, R.B.S. and H.Z.; supervision, H.Z. All authors have read and agreed to the published version of the manuscript.

Figure 1.

Images of some of the environments used to capture the plastic bag popping sounds (class 8): (a) along a glass corridor, (b) inside a personal dwelling, (c) between two buildings, (d) beside a building, (e) in an open field, and (f) in an anechoic chamber [16].


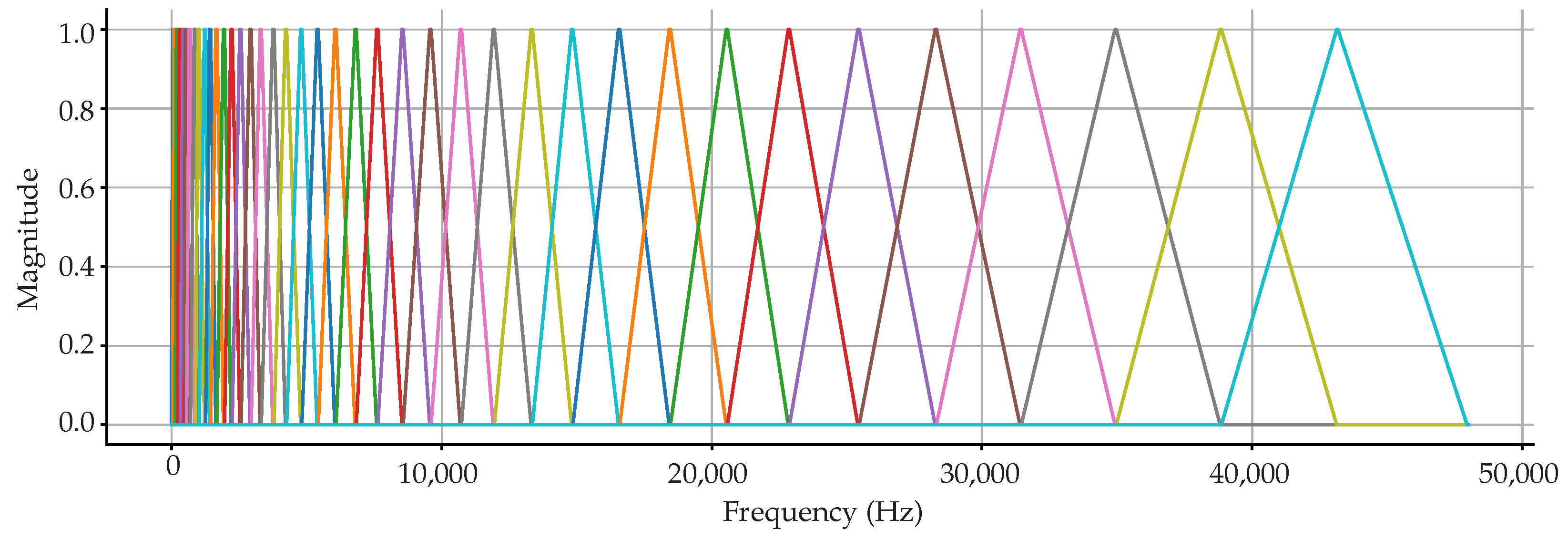

Figure 2.

Uniform Mel-bandwidth filterbank for a 96 kHz sample rate.


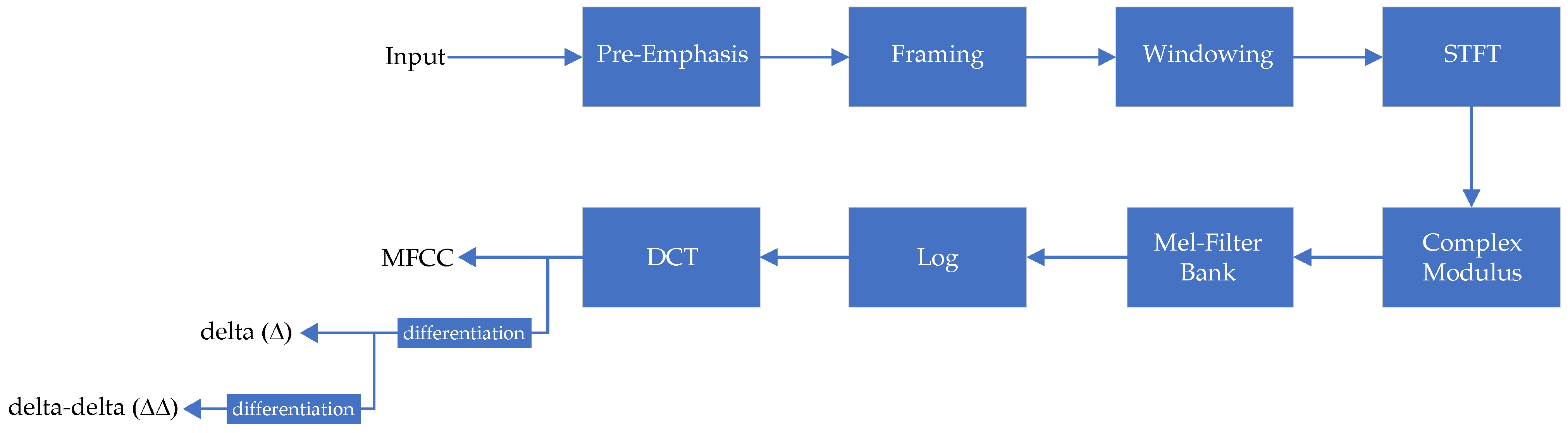

Figure 3.

Block diagram of the MFCC extraction process and its derivatives (Delta and Delta-Delta).

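The pipeline in the block diagram ends in one fixed-length vector per clip. Assuming, consistent with Table 2, that each recording is reduced to the per-filter mean and standard deviation of the MFCCs and of their first and second time derivatives, the 240-element vector can be sketched in NumPy as below. The HTK-style regression delta with a half-window of 2 frames is an assumption (the exact delta window is not stated here), and `delta` and `summary_vector` are hypothetical helper names, not the authors' code.

```python
import numpy as np


def delta(feat: np.ndarray, half_window: int = 2) -> np.ndarray:
    """HTK-style regression delta along the time axis (axis 1).

    d_t = sum_{n=1..N} n * (c_{t+n} - c_{t-n}) / (2 * sum_{n=1..N} n^2),
    with edge frames repeated so the output keeps the input shape.
    """
    n_frames = feat.shape[1]
    padded = np.pad(feat, ((0, 0), (half_window, half_window)), mode="edge")
    denom = 2 * sum(n * n for n in range(1, half_window + 1))
    out = np.zeros(feat.shape, dtype=float)
    for n in range(1, half_window + 1):
        ahead = padded[:, half_window + n : half_window + n + n_frames]   # c_{t+n}
        behind = padded[:, half_window - n : half_window - n + n_frames]  # c_{t-n}
        out += n * (ahead - behind)
    return out / denom


def summary_vector(mfcc: np.ndarray) -> np.ndarray:
    """Collapse a (40, frames) MFCC matrix into the 240 features of Table 2."""
    d1 = delta(mfcc)  # first derivative (Delta)
    d2 = delta(d1)    # second derivative (Delta-Delta)
    parts = []
    for block in (mfcc, d1, d2):
        parts.append(block.mean(axis=1))  # *_MEAN_FLTR0..39
        parts.append(block.std(axis=1))   # *_STDDEV_FLTR0..39
    return np.concatenate(parts)


rng = np.random.default_rng(0)
mfcc = rng.normal(size=(40, 200))  # stand-in for a real 40-coefficient MFCC matrix
features = summary_vector(mfcc)
print(features.shape)  # (240,)
```

The block order (MFCC, Delta, Delta-Delta; mean before standard deviation) is chosen so that index i of the vector matches "feature i" in Table 2.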

Figure 4.

Random forest impurity-based feature importance showing the mean and standard deviation for (a) MFCC, (b) MFCC-Delta, and (c) MFCC-Delta-Delta features, with (d) the 20 most important features in descending order.


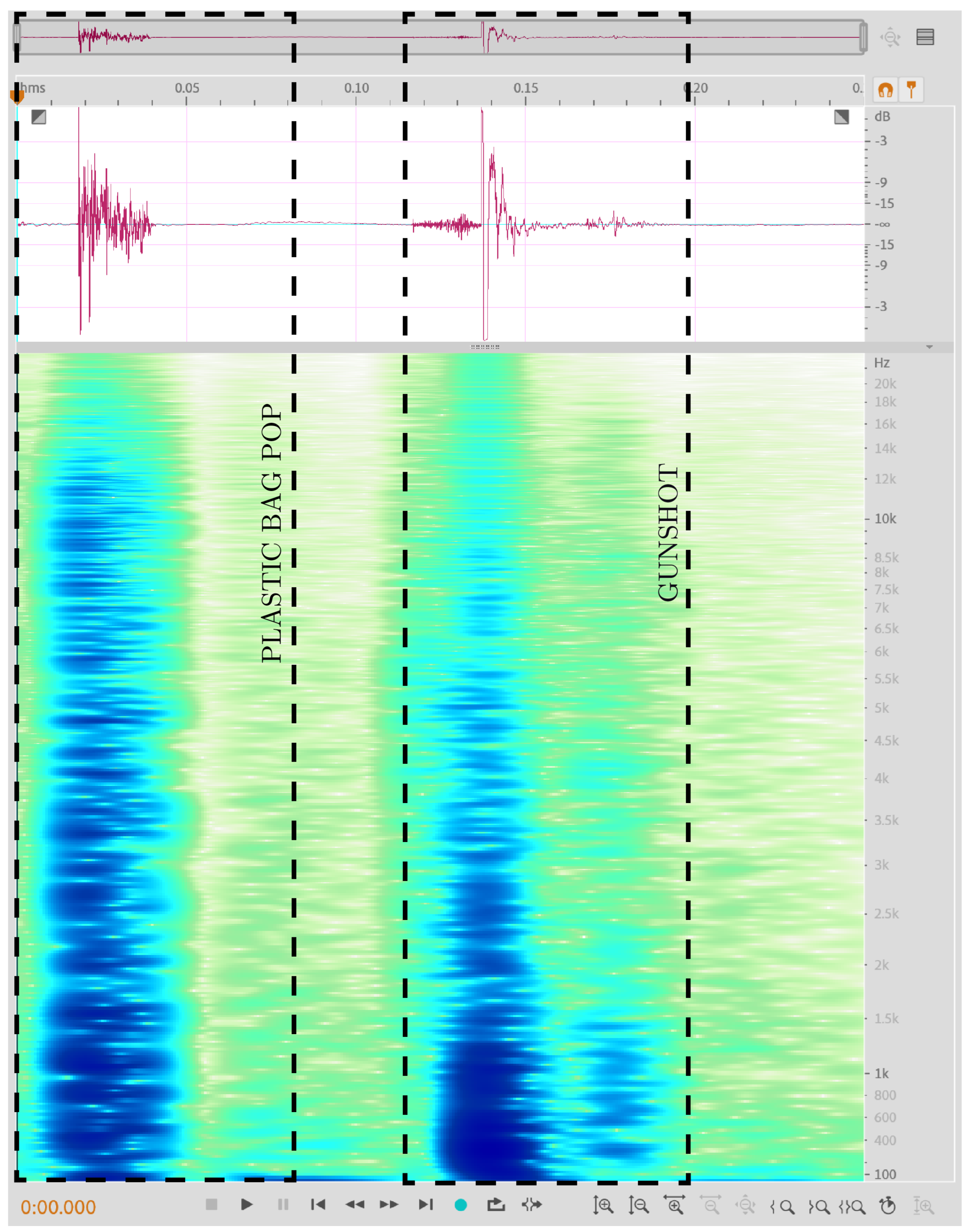
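Impurity-based (MDI) importance in a random forest averages, per feature, each tree's normalized impurity decrease. Assuming the mean and standard deviation in Figure 4 are taken across the trees of the forest (in scikit-learn the per-tree values are the `feature_importances_` of each fitted tree in `estimators_`), the aggregation and the descending top-20 ranking can be sketched as follows. `rank_mdi` and the synthetic per-tree importances are hypothetical.

```python
import numpy as np


def rank_mdi(per_tree: np.ndarray, top_k: int = 20):
    """Aggregate per-tree MDI importances and return the top_k features.

    per_tree: array of shape (n_trees, n_features), each row summing to 1.
    Returns (indices, mean, std) with indices sorted by descending mean.
    """
    mean = per_tree.mean(axis=0)  # forest-level importance
    std = per_tree.std(axis=0)    # spread across trees (error bars)
    order = np.argsort(mean)[::-1][:top_k]
    return order, mean, std


rng = np.random.default_rng(1)
raw = rng.random((100, 240))                     # 100 trees, 240 features
per_tree = raw / raw.sum(axis=1, keepdims=True)  # normalize each tree like MDI
top, mean, std = rank_mdi(per_tree)
for idx in top[:5]:
    print(f"feature {idx}: {mean[idx]:.4f} +/- {std[idx]:.4f}")
```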

Figure 5.

Comparison of zoomed-in spectrograms of a plastic bag pop and a gunshot sound, produced in Adobe Audition.


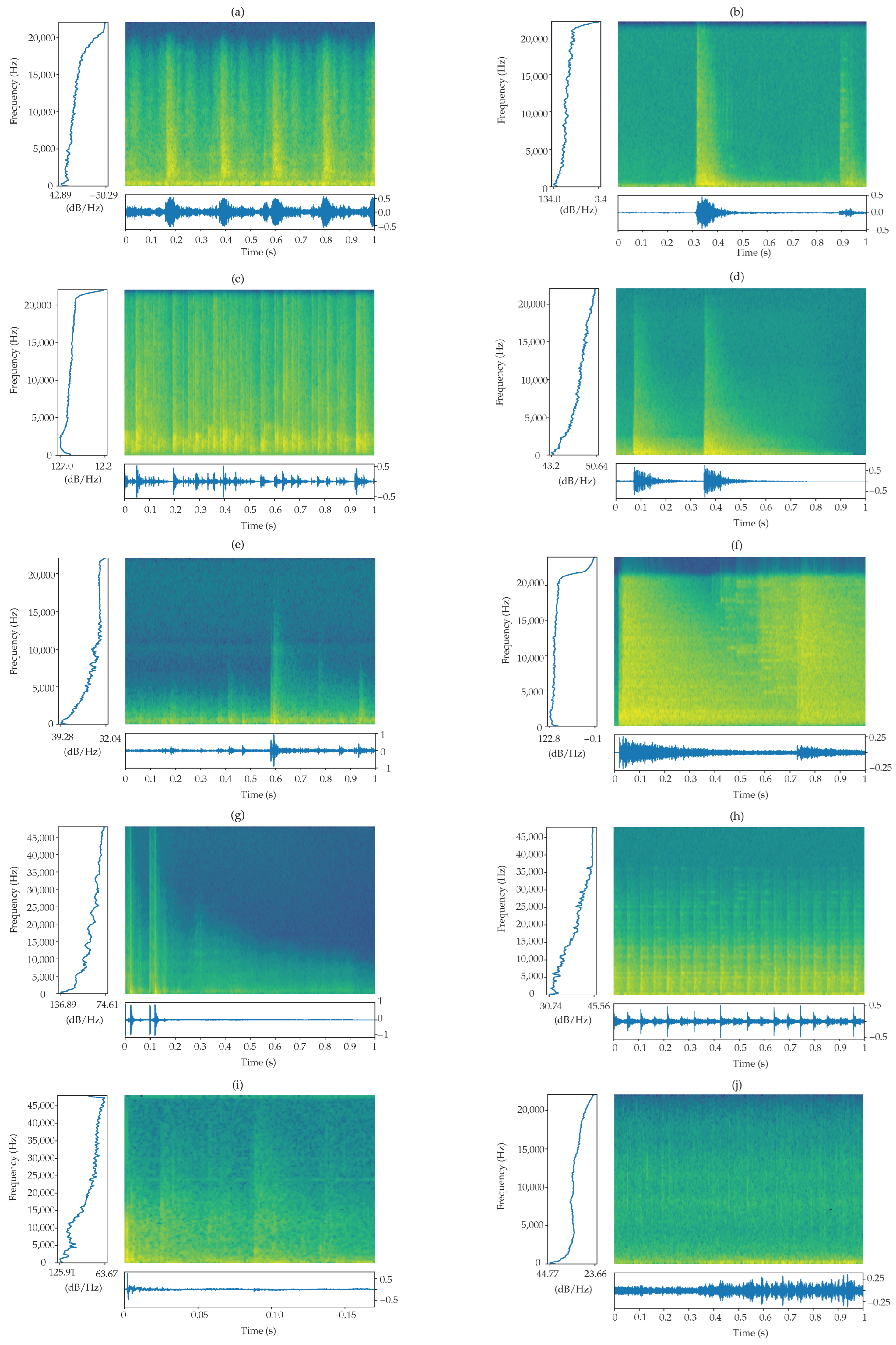
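The spectrograms above were produced in Adobe Audition; an equivalent short-time Fourier transform (STFT) spectrogram can be sketched in NumPy as follows. The frame length, hop size, Hann window, and the idealized impulse standing in for a pop or gunshot are all assumptions, not the settings used for the figure, and `spectrogram_db` is a hypothetical helper.

```python
import numpy as np


def spectrogram_db(x: np.ndarray, fs: float, n_fft: int = 1024, hop: int = 256):
    """Magnitude STFT in dB. Returns (freqs_hz, times_s, S_db[freq, frame])."""
    window = np.hanning(n_fft)
    n_frames = 1 + (len(x) - n_fft) // hop
    frames = np.stack([x[i * hop : i * hop + n_fft] * window for i in range(n_frames)])
    mag = np.abs(np.fft.rfft(frames, axis=1)).T           # (n_fft // 2 + 1, n_frames)
    s_db = 20 * np.log10(mag + 1e-12)                      # small offset avoids log(0)
    freqs = np.fft.rfftfreq(n_fft, d=1 / fs)
    times = (np.arange(n_frames) * hop + n_fft / 2) / fs   # frame centres in seconds
    return freqs, times, s_db


fs = 96_000
x = np.zeros(int(0.1 * fs))
x[4800] = 1.0  # idealized impulse at 50 ms, a crude stand-in for an impulsive sound
freqs, times, s_db = spectrogram_db(x, fs)
loudest_frame = int(np.argmax(s_db.max(axis=0)))
print(f"impulse energy peaks near t = {times[loudest_frame] * 1000:.1f} ms")
```

An ideal impulse has a flat spectrum, so it shows up as a single vertical stripe; real pops and gunshots differ in how that stripe is shaped and how quickly it decays.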

Figure 6.

Comparison of the spectrograms and respective power spectral densities (PSD) for the various classes: (a) carbackfire-0, (b) cardoorslam-1, (c) clapping-2, (d) doorknock_slam-3, (e) fireworks-4, (f) glassbreak_bulb-burst-5, (g) gunshot-6, (h) jackhammer-7, (i) plastic_pop-8, and (j) thunderstorm-9.


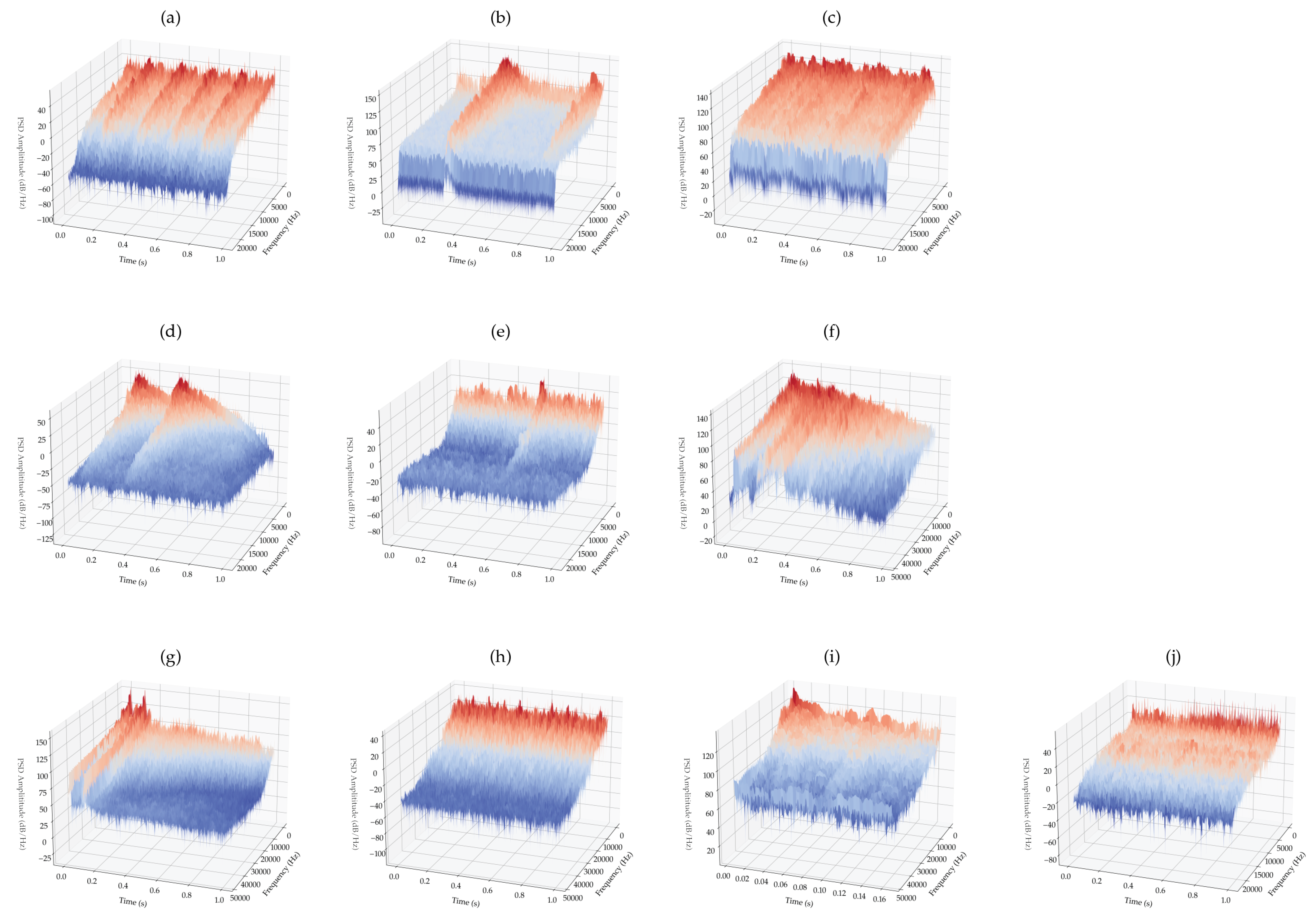
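The PSD panels summarize how each class distributes its energy across frequency. The estimator and parameters used for the figure are not given here; the following NumPy sketch uses Welch's method (averaged, Hann-windowed periodograms with 50% overlap and density scaling) as an assumed, commonly used choice, and checks it on a synthetic 1 kHz tone sampled at 96 kHz. `welch_psd` is a hypothetical helper.

```python
import numpy as np


def welch_psd(x: np.ndarray, fs: float, n_fft: int = 4096):
    """One-sided PSD (units^2/Hz) from averaged, Hann-windowed,
    50 %-overlapped periodograms (Welch's method)."""
    hop = n_fft // 2
    window = np.hanning(n_fft)
    scale = 1.0 / (fs * np.sum(window ** 2))  # density scaling
    starts = range(0, len(x) - n_fft + 1, hop)
    segs = [x[s : s + n_fft] - np.mean(x[s : s + n_fft]) for s in starts]  # remove DC
    spectra = [np.abs(np.fft.rfft(seg * window)) ** 2 * scale for seg in segs]
    psd = np.mean(spectra, axis=0)
    psd[1:-1] *= 2  # fold negative frequencies in (n_fft even: DC and Nyquist once)
    freqs = np.fft.rfftfreq(n_fft, d=1 / fs)
    return freqs, psd


fs = 96_000
t = np.arange(fs) / fs             # 1 s of signal
x = np.sin(2 * np.pi * 1000 * t)   # unit-amplitude 1 kHz tone (mean power 0.5)
freqs, psd = welch_psd(x, fs)
df = freqs[1] - freqs[0]
print(f"peak at {freqs[np.argmax(psd)]:.1f} Hz, total power {psd.sum() * df:.3f}")
```

Integrating the density over frequency recovers the signal's mean power, which is a quick sanity check on the scaling.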

Figure 7.

Waterfall plots of the various classes: (a) carbackfire-0, (b) cardoorslam-1, (c) clapping-2, (d) doorknock_slam-3, (e) fireworks-4, (f) glassbreak_bulb-burst-5, (g) gunshot-6, (h) jackhammer-7, (i) plastic_pop-8, and (j) thunderstorm-9.


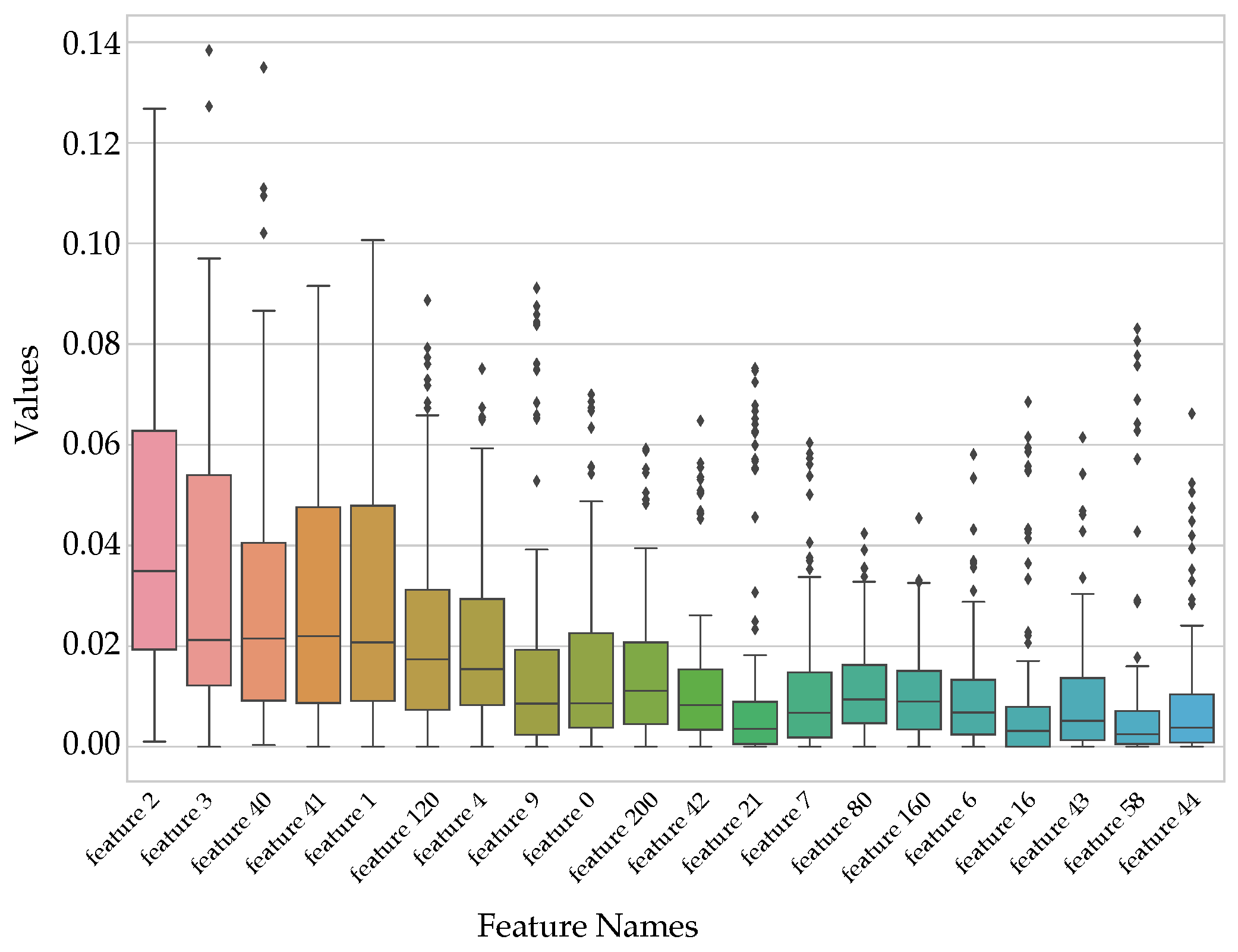

Figure 8.

Box plot of the 20 most important features, sorted by MDI.


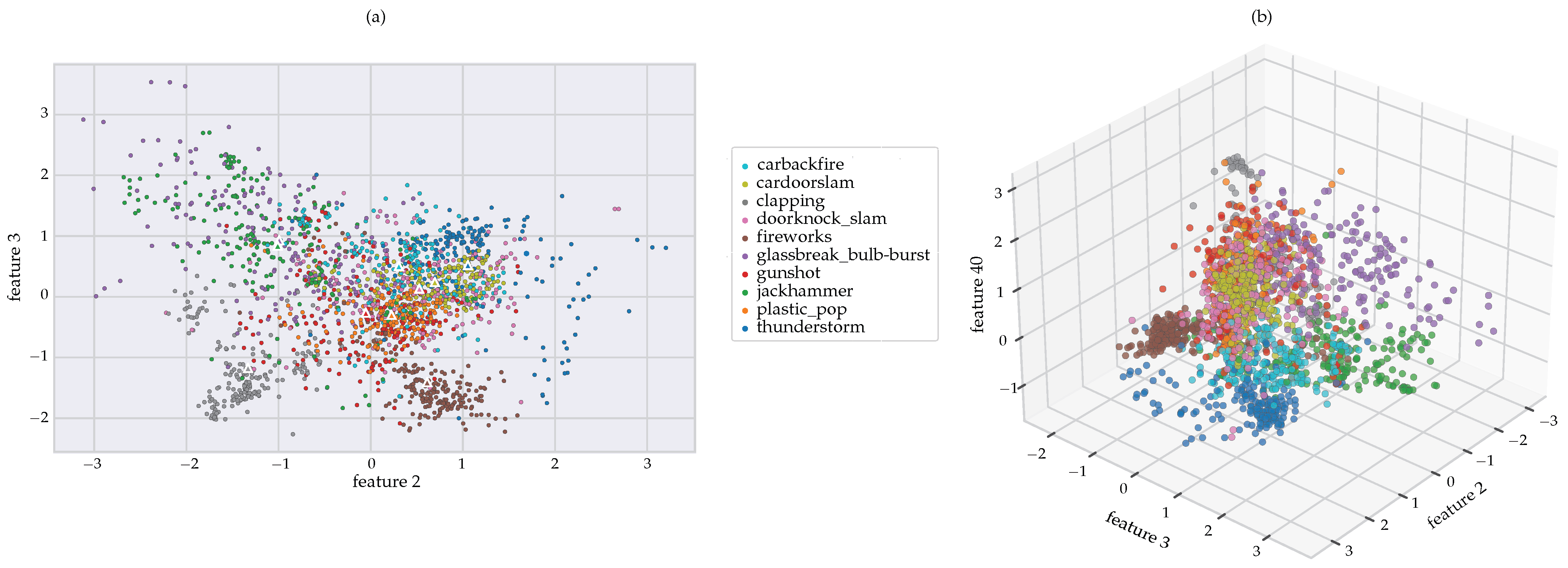

Figure 9.

(a) 2D scatter plot of feature 2 vs feature 3 with centroids and (b) 3D plot of features 2, 3, and 40.


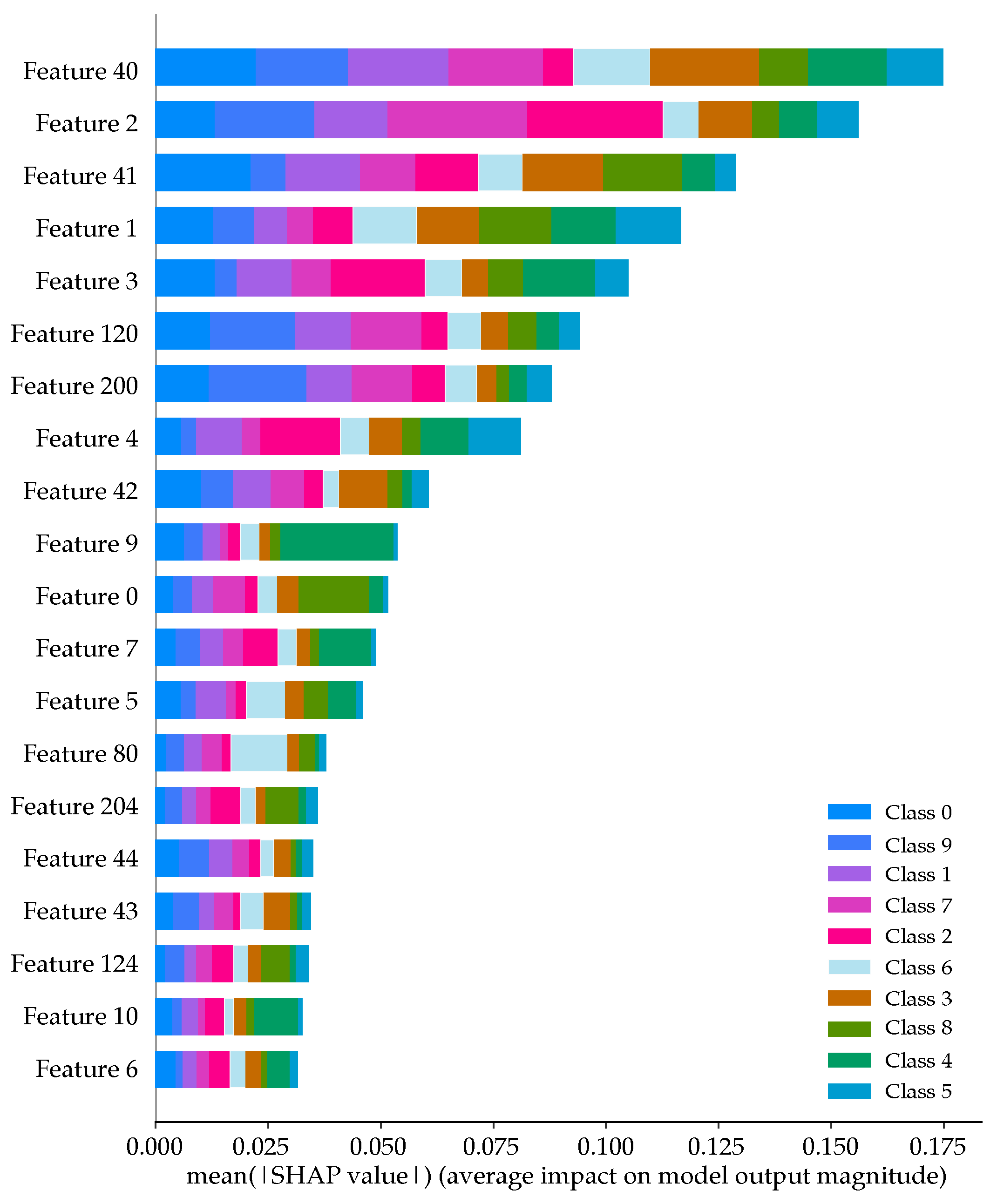

Figure 10.

SHAP summary plot.

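A SHAP summary plot ranks features by their mean absolute SHAP value over all samples; for a multiclass model, as far as we understand the bar form of the `shap` library's summary plot, the per-class contributions are summed. Computing the SHAP values themselves needs a fitted model and an explainer, so the sketch below starts from a hypothetical precomputed array of shape (classes, samples, features) and shows only the ranking step. `shap_global_ranking` and the synthetic values are illustrative.

```python
import numpy as np


def shap_global_ranking(shap_values: np.ndarray, top_k: int = 20) -> np.ndarray:
    """Rank features by mean |SHAP value| per class, summed over classes.

    shap_values: array of shape (n_classes, n_samples, n_features).
    """
    per_class = np.abs(shap_values).mean(axis=1)  # (n_classes, n_features)
    total = per_class.sum(axis=0)                 # (n_features,)
    return np.argsort(total)[::-1][:top_k]


rng = np.random.default_rng(2)
sv = rng.normal(scale=0.01, size=(10, 300, 240))  # 10 classes, 300 clips, 240 features
sv[:, :, 40] *= 50                                # make feature 40 dominant
print(shap_global_ranking(sv)[:3])
```

Unlike MDI, this ranking is computed from per-sample attributions, which is one reason the two top-20 lists need not agree.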

Figure 11.

Model trained on only the 20 most important features according to the SHAP feature importance analysis, showing the resulting (a) confusion matrix, (b) classification report, and (c) ROC curves.


Figure 12.

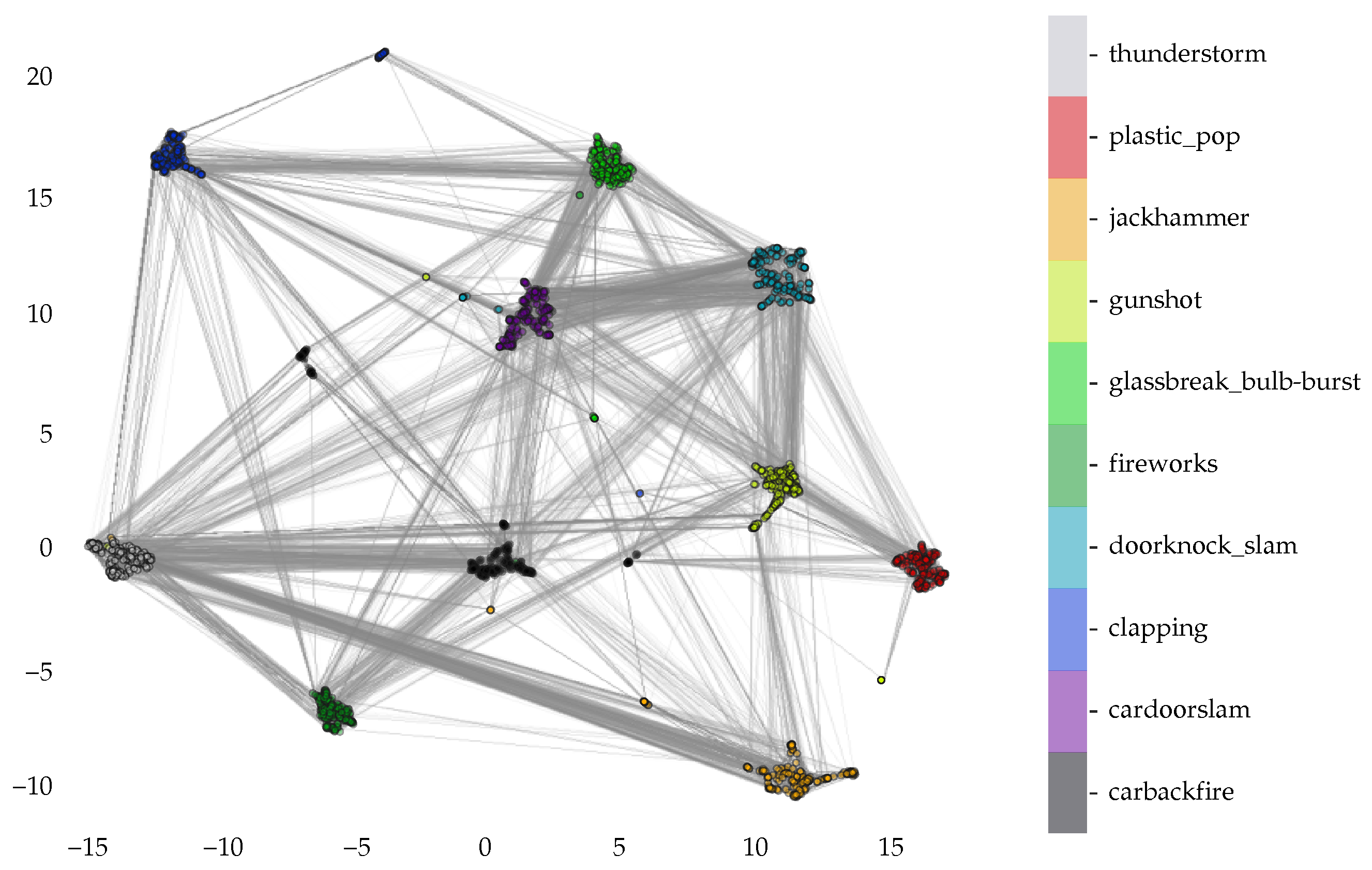

UMAP connectivity for the gunshot and gunshot-like sounds.


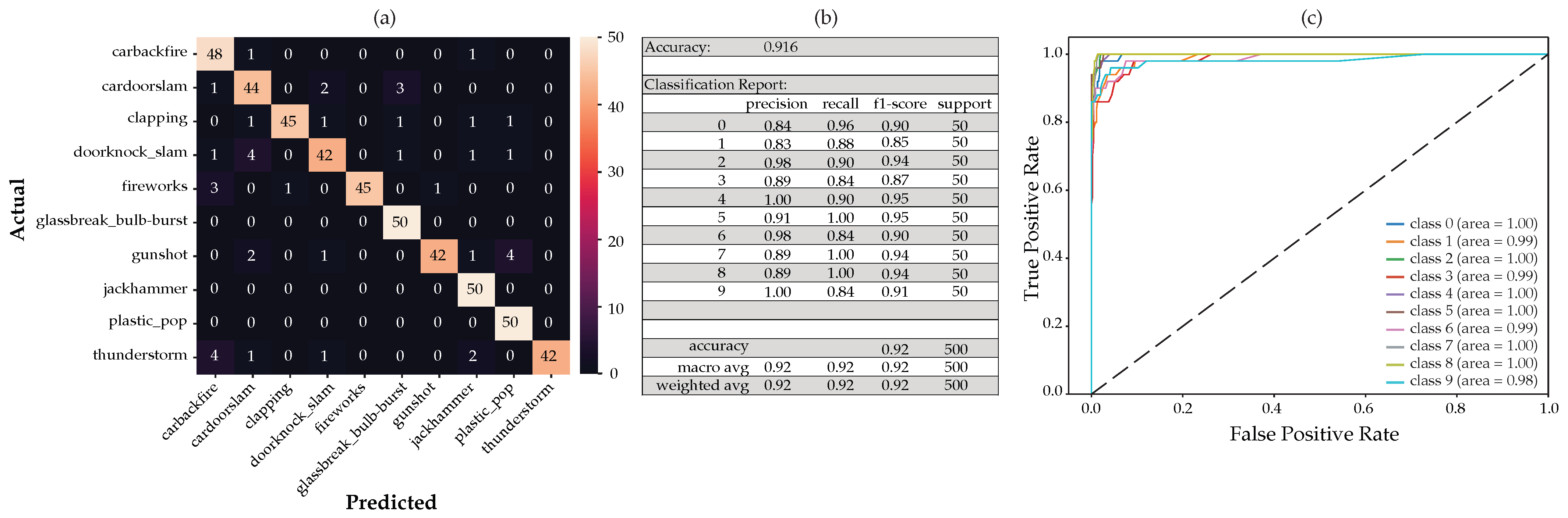

Figure 13.

Full feature analysis showing the (a) confusion matrix, (b) classification report, and (c) ROC curves.


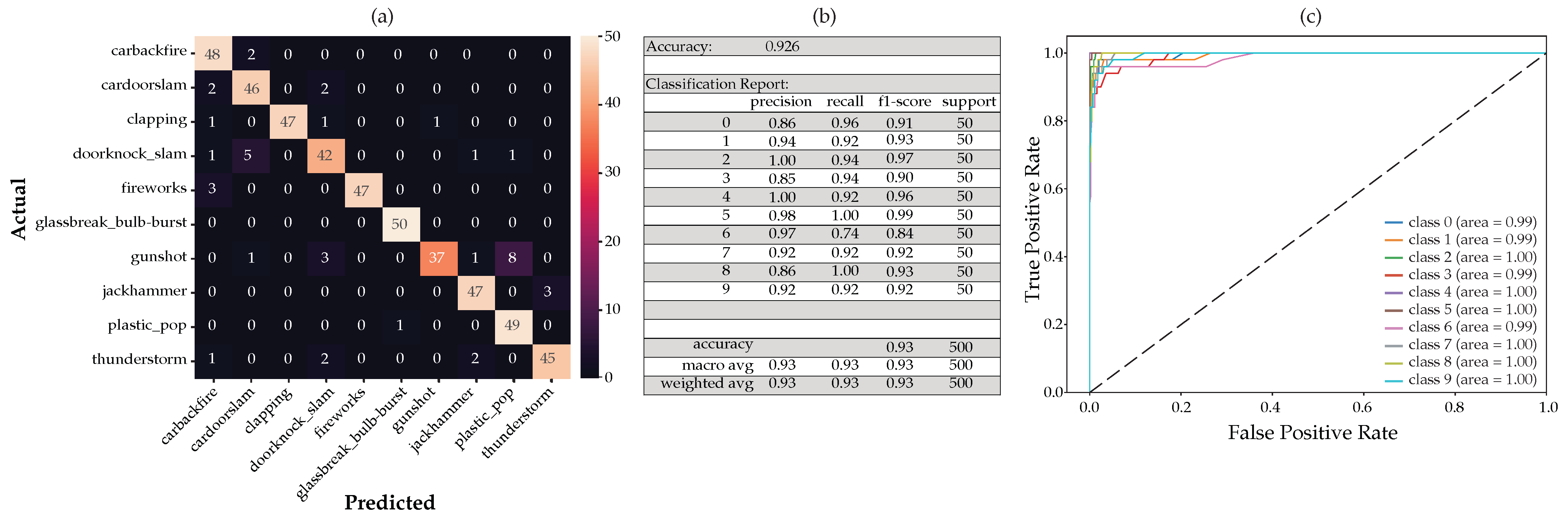

Figure 14.

Model trained on only the 20 most important features according to the MDI feature importance analysis, showing the resulting (a) confusion matrix, (b) classification report, and (c) ROC curves.


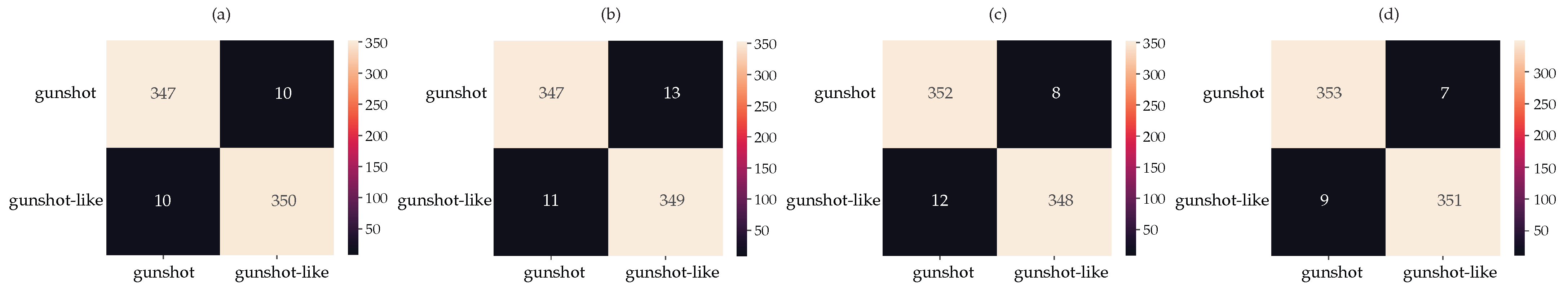

Figure 15.

Confusion matrices for (a) the full dataset, (b) the 16 common features, (c) the RF MDI top 20 features, and (d) the SHAP top 20 features.


Table 1.

Order of the various classes, with a partial list of the audio used for each.


| carbackfire (0) | cardoorslam (1) |
|---|---|
| 1905 Cadillac | 1963 Porsche |
| 1932 Plymouth Roadster car | 1964 Cadillac |
| 1967 Dodge D100 Adventurer truck | 1965 Jaguar E-Type |
| 1971 Ford | 1976 Pontiac Grand Prix car |
| 1976 Ford Pinto 3-cylinder car | 1983 Chevy pickup truck |
| 1979 Ford F600 | 1986 Cadillac DeVille sedan |
| 1980 Datsun 210 | 1988 Chevy pickup truck |
| Chevy Nova drag racer muscle car | 1998 Ford Expedition V8 SUV |
| | Car trunk |
| | U-Haul truck |
| **clapping (2)** | **doorknock_slam (3)** |
| Applause small group | Wood door hits |
| Baseball game | Door pounds |
| Children applause | Elevator door knock |
| Children in classroom | Glass door |
| Church applause small group | Glass and wood door |
| Crowd cheer | Half-glass door impact |
| Crowd clap and stomp | Metal dumpster slam |
| Crowd rhythmic | Metal screen door slam |
| Crowd rhythmic fast | Stairwell door slam |
| Crowd rhythmic scattered | Dublin Castle large wood door slam |
| **fireworks (4)** | **glassbreak_bulb-burst (5)** |
| New Year fireworks in city | Beer bottle break on cement |
| New Year fireworks ambience | Beer smash hit on steel plate |
| Long intensive | Fluorescent tube crash |
| Small fireworks | Glass breaking window frame |
| Mid-distance fireworks | Glass picture solid impact |
| Single burning fireworks bang | Glass safety break |
| Sparkling single fireworks ambience | Large pickle jar break on cement |
| Sparkling single fireworks bang | Light bulb smash |
| | Light bulb smash with hammer |
| | Plates smash against wall |
| **gunshot (6)** | **jackhammer (7)** |
| AK47 bursts | Ambience construction site |
| Beretta M9 | 8th floor construction |
| Glock 9 mm | Urban small construction |
| M4 double tap | Spread-out jackhammer |
| Maverick 88 single shots | Street construction hydraulic jackhammer |
| Pistol | Hotel construction, light hammering |
| Rifle | Short bursts |
| SKS M59 single shots | City industry construction site |
| Submachine gun, 9 mm | |
| Winchester 1300 | |
| **plastic_pop (8)** | **thunderstorm (9)** |
| 0.05 m (2 in) from Yeti mic using 1.89 L (0.5 gal) bags, outdoor park | Deep rumble |
| 0.30 m (1 ft) from Yeti mic using 1.89 L (0.5 gal) bags, side of building | Long and slow rolling bursts |
| 0.91 m (3 ft) from Tascam mic using 9.08 L (2.4 gal) bags, between buildings | Long thunderstorm with hard rain |
| 1.52 m (5 ft) from JLab mic using 1.89 L (0.5 gal) bags, inside lab with curtains | Rain and thunder approaching |
| 3.05 m (10 ft) from Brüel & Kjær mic using 15.14 L (4 gal) bags, inside home | Rolling thunderstorm |
| 4.57 m (15 ft) from JLab mic using 3.03 L (0.8 gal) bags, inside lab with glass walls | Storm with strong thunders |
| 6.10 m (20 ft) from Zoom mic using 1.89 L (0.5 gal) bags, inside home | Strong thunderstorm in city |
| 6.71 m (22 ft) from JLab mic using 9.08 L (2.4 gal) bags, inside home | Thunder rumble with constant rain |
| 7.32 m (24 ft) from iPad mini using 1.89 L (0.5 gal) bags, outdoor park | Thunderstorm in closed car |
| 7.32 m (24 ft) from Samsung S9 phone using 1.89 L (0.5 gal) bags, outdoor park | |
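The metric values in the plastic_pop column are conversions from imperial units. A minimal sketch of that arithmetic, assuming the international foot (0.3048 m), the inch (0.0254 m), and the US liquid gallon (3.785411784 L), all exact by definition, rounded to two decimals as in the table:

```python
FT_TO_M = 0.3048          # international foot, exact
IN_TO_M = 0.0254          # inch, exact
USGAL_TO_L = 3.785411784  # US liquid gallon, exact


def ft_to_m(feet: float) -> float:
    return feet * FT_TO_M


def gal_to_l(gallons: float) -> float:
    return gallons * USGAL_TO_L


for feet in (1, 3, 5, 10, 15, 20, 22, 24):
    print(f"{feet:>2} ft = {ft_to_m(feet):.2f} m")
print(f" 2 in = {2 * IN_TO_M:.2f} m")
for gal in (0.5, 0.8, 2.4, 4):
    print(f"{gal} gal = {gal_to_l(gal):.2f} L")
```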

Table 2.

Feature numbers and their associated meanings.


| Feature Number | Feature Name |
|---|---|
| feature 0 … feature 39 | MFCC_MEAN_FLTR0 … MFCC_MEAN_FLTR39 |
| feature 40 … feature 79 | MFCC_STDDEV_FLTR0 … MFCC_STDDEV_FLTR39 |
| feature 80 … feature 119 | DELTA_MEAN_FLTR0 … DELTA_MEAN_FLTR39 |
| feature 120 … feature 159 | DELTA_STDDEV_FLTR0 … DELTA_STDDEV_FLTR39 |
| feature 160 … feature 199 | DELTA2_MEAN_FLTR0 … DELTA2_MEAN_FLTR39 |
| feature 200 … feature 239 | DELTA2_STDDEV_FLTR0 … DELTA2_STDDEV_FLTR39 |
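Because each block in this table spans exactly 40 filters, a feature number decodes to its name with a single integer division. A small sketch of that mapping (the helper `feature_name` and the tuple `BLOCKS` are hypothetical names):

```python
BLOCKS = (
    "MFCC_MEAN", "MFCC_STDDEV",
    "DELTA_MEAN", "DELTA_STDDEV",
    "DELTA2_MEAN", "DELTA2_STDDEV",
)
N_FILTERS = 40


def feature_name(index: int) -> str:
    """Map a feature number (0-239) to its name in the feature table."""
    if not 0 <= index < len(BLOCKS) * N_FILTERS:
        raise ValueError(f"feature index out of range: {index}")
    block, fltr = divmod(index, N_FILTERS)  # which statistic, which Mel filter
    return f"{BLOCKS[block]}_FLTR{fltr}"


for idx in (2, 40, 58, 120, 204):
    print(idx, feature_name(idx))
```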

Table 3.

Start/stop frequencies of the Mel triangular filters for a sample rate of 96 kHz and 40 Mel coefficients.


| Feature | Start (Hz) | Stop (Hz) | Feature | Start (Hz) | Stop (Hz) | Feature | Start (Hz) | Stop (Hz) | Feature | Start (Hz) | Stop (Hz) |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | 0 | 160.97 | 10 | 1270.02 | 1722.96 | 20 | 4844.28 | 6118.98 | 30 | 14,903.36 | 18,490.79 |
| 1 | 76.31 | 254.80 | 11 | 1484.79 | 1987.10 | 21 | 5448.68 | 6862.35 | 31 | 16,604.36 | 20,582.87 |
| 2 | 160.70 | 358.88 | 12 | 1722.96 | 2280.03 | 22 | 6118.98 | 7686.76 | 32 | 18,490.79 | 22,903.02 |
| 3 | 254.80 | 474.32 | 13 | 1987.10 | 2604.90 | 23 | 6862.35 | 8601.04 | 33 | 20,582.87 | 25,476.10 |
| 4 | 358.88 | 602.33 | 14 | 2280.03 | 2965.18 | 24 | 7686.76 | 9614.99 | 34 | 22,903.02 | 28,329.68 |
| 5 | 474.32 | 744.31 | 15 | 2604.90 | 3364.74 | 25 | 8601.04 | 10,739.48 | 35 | 25,476.10 | 31,494.35 |
| 6 | 602.33 | 901.76 | 16 | 2965.18 | 3807.86 | 26 | 9614.99 | 11,986.55 | 36 | 28,329.68 | 35,004.01 |
| 7 | 744.31 | 1076.37 | 17 | 3364.74 | 4299.28 | 27 | 10,739.48 | 13,369.57 | 37 | 31,494.35 | 38,896.27 |
| 8 | 901.76 | 1270.02 | 18 | 3807.86 | 4844.28 | 28 | 11,986.55 | 14,903.36 | 38 | 35,004.01 | 43,212.85 |
| 9 | 1076.37 | 1484.79 | 19 | 4299.28 | 5448.68 | 29 | 13,369.57 | 16,604.36 | 39 | 38,896.27 | 48,000.00 |
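The edges in this table are consistent with 42 points spaced uniformly on the HTK Mel scale, m = 2595 log10(1 + f/700), between 0 Hz and the 48 kHz Nyquist frequency, with filter k starting at point k, peaking at point k + 1, and stopping at point k + 2. The sketch below recomputes them under that assumption; `mel_filter_edges` is a hypothetical helper, and the Mel formula is inferred from the tabulated values rather than stated in the text.

```python
import numpy as np


def hz_to_mel(f):
    return 2595.0 * np.log10(1.0 + np.asarray(f, dtype=float) / 700.0)


def mel_to_hz(m):
    return 700.0 * (10.0 ** (np.asarray(m, dtype=float) / 2595.0) - 1.0)


def mel_filter_edges(fs: float = 96_000, n_filters: int = 40):
    """(start_hz, stop_hz) of each triangular filter, equally spaced in Mel."""
    mel_points = np.linspace(0.0, hz_to_mel(fs / 2), n_filters + 2)
    hz_points = mel_to_hz(mel_points)
    # filter k rises from point k, peaks at point k+1, falls to zero at point k+2
    return [(float(hz_points[k]), float(hz_points[k + 2])) for k in range(n_filters)]


edges = mel_filter_edges()
for k in (0, 1, 10, 20, 39):
    start, stop = edges[k]
    print(f"filter {k:2d}: {start:10.2f} Hz -> {stop:10.2f} Hz")
```

Because the spacing is uniform in Mel rather than in Hz, the upper filters are several kilohertz wide while the lowest ones span only a few hundred hertz.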

Table 4.

Comparison of the distances from the gunshot class (6) to the gunshot-like classes in the feature 2 vs. feature 3 plane.


| Class | Feature 2 | Feature 3 | Distance from Class 6 | Class Name |
|---|---|---|---|---|
| 6 | −0.0525 | −0.2625 | 0.0000 | gunshot |
| 8 | 0.2264 | −0.2992 | 0.2813 | plastic_pop |
| 3 | 0.5276 | 0.1332 | 0.7022 | doorknock_slam |
| 0 | 0.3052 | 0.5041 | 0.8460 | carbackfire |
| 1 | 0.6965 | 0.2429 | 0.9036 | cardoorslam |
| 4 | 0.6124 | −1.4075 | 1.3241 | fireworks |
| 9 | 1.0548 | 0.5224 | 1.3573 | thunderstorm |
| 7 | −1.0090 | 0.8419 | 1.4610 | jackhammer |
| 5 | −0.9919 | 0.9307 | 1.5186 | glassbreak_bulb-burst |
| 2 | −1.3317 | −1.1709 | 1.5689 | clapping |
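The distance column is consistent with the Euclidean distance between class centroids in the feature 2 vs. feature 3 plane (the same plane as the 2D scatter plot with centroids). The sketch below recomputes it from the tabulated centroids and orders the classes from nearest to farthest; `class_centroids` shows how such centroids could be obtained from per-clip features and labels. Both helpers are hypothetical, not the authors' code.

```python
import numpy as np

# (feature 2, feature 3) centroid per class, copied from the table above
CENTROIDS = {
    0: (0.3052, 0.5041), 1: (0.6965, 0.2429), 2: (-1.3317, -1.1709),
    3: (0.5276, 0.1332), 4: (0.6124, -1.4075), 5: (-0.9919, 0.9307),
    6: (-0.0525, -0.2625), 7: (-1.0090, 0.8419), 8: (0.2264, -0.2992),
    9: (1.0548, 0.5224),
}


def class_centroids(x: np.ndarray, y: np.ndarray) -> dict:
    """Per-class mean of a (n_samples, n_dims) feature matrix."""
    return {int(c): x[y == c].mean(axis=0) for c in np.unique(y)}


def distances_from(ref: int, centroids: dict) -> list:
    """Euclidean distance from centroid `ref` to every class, nearest first."""
    origin = np.asarray(centroids[ref], dtype=float)
    dists = {c: float(np.linalg.norm(np.asarray(p, dtype=float) - origin))
             for c, p in centroids.items()}
    return sorted(dists.items(), key=lambda item: item[1])


for cls, dist in distances_from(6, CENTROIDS):
    print(f"class {cls}: {dist:.4f}")
```

Small differences in the fourth decimal are expected, since the tabulated centroids are themselves rounded.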

Table 5.

Comparison of the RF MDI and SHAP 20 most important features.


| MDI Feature Name | MDI Feature | SHAP Feature | SHAP Feature Name |
|---|---|---|---|
| MFCC_MEAN_FLTR2 | 2 | 40 | MFCC_STDDEV_FLTR0 |
| MFCC_MEAN_FLTR3 | 3 | 2 | MFCC_MEAN_FLTR2 |
| MFCC_STDDEV_FLTR0 | 40 | 41 | MFCC_STDDEV_FLTR1 |
| MFCC_STDDEV_FLTR1 | 41 | 1 | MFCC_MEAN_FLTR1 |
| MFCC_MEAN_FLTR1 | 1 | 3 | MFCC_MEAN_FLTR3 |
| DELTA_STDDEV_FLTR0 | 120 | 120 | DELTA_STDDEV_FLTR0 |
| MFCC_MEAN_FLTR4 | 4 | 200 | DELTA2_STDDEV_FLTR0 |
| MFCC_MEAN_FLTR9 | 9 | 4 | MFCC_MEAN_FLTR4 |
| MFCC_MEAN_FLTR0 | 0 | 42 | MFCC_STDDEV_FLTR2 |
| DELTA2_STDDEV_FLTR0 | 200 | 9 | MFCC_MEAN_FLTR9 |
| MFCC_STDDEV_FLTR2 | 42 | 0 | MFCC_MEAN_FLTR0 |
| MFCC_MEAN_FLTR21 | 21 | 7 | MFCC_MEAN_FLTR7 |
| MFCC_MEAN_FLTR7 | 7 | 5 | MFCC_MEAN_FLTR5 |
| DELTA_MEAN_FLTR0 | 80 | 80 | DELTA_MEAN_FLTR0 |
| DELTA2_MEAN_FLTR0 | 160 | 204 | DELTA2_STDDEV_FLTR4 |
| MFCC_MEAN_FLTR6 | 6 | 44 | MFCC_STDDEV_FLTR4 |
| MFCC_MEAN_FLTR16 | 16 | 43 | MFCC_STDDEV_FLTR3 |
| MFCC_STDDEV_FLTR3 | 43 | 124 | DELTA_STDDEV_FLTR4 |
| MFCC_STDDEV_FLTR18 | 58 | 10 | MFCC_MEAN_FLTR10 |
| MFCC_STDDEV_FLTR4 | 44 | 6 | MFCC_MEAN_FLTR6 |
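Intersecting the two top-20 lists yields the features selected by both methods; there are 16 of them, which presumably form the "16 Common" feature set (an assumption based on the matching count). A minimal check in Python:

```python
MDI_TOP20 = [2, 3, 40, 41, 1, 120, 4, 9, 0, 200, 42, 21, 7, 80, 160, 6, 16, 43, 58, 44]
SHAP_TOP20 = [40, 2, 41, 1, 3, 120, 200, 4, 42, 9, 0, 7, 5, 80, 204, 44, 43, 124, 10, 6]

common = sorted(set(MDI_TOP20) & set(SHAP_TOP20))
mdi_only = sorted(set(MDI_TOP20) - set(SHAP_TOP20))
shap_only = sorted(set(SHAP_TOP20) - set(MDI_TOP20))
print(len(common), common)    # 16 [0, 1, 2, 3, 4, 6, 7, 9, 40, 41, 42, 43, 44, 80, 120, 200]
print("MDI only:", mdi_only)  # MDI only: [16, 21, 58, 160]
print("SHAP only:", shap_only)  # SHAP only: [5, 10, 124, 204]
```

The shared set is dominated by the low-order MFCC means and standard deviations (filters 0 to 4), with the two methods disagreeing only on four features each.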

Table 6.

Accuracy and false positive rate (FPR) using the full dataset, the 16 common features, the RF MDI top 20, and the SHAP top 20 features.


| Feature Set | Accuracy | FPR |
|---|---|---|
| Full Dataset | 0.9681 | 0.028 |
| 16 Common | 0.9667 | 0.031 |
| RF MDI Top 20 | 0.9722 | 0.033 |
| SHAP Top 20 | 0.9778 | 0.025 |
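Both accuracy and FPR can be read off a multiclass confusion matrix. The sketch below assumes FPR is macro-averaged one-vs-rest, FP / (FP + TN) per class; the averaging actually used for these figures is not stated here, so this illustrates the definitions rather than reproducing the tabulated values. `accuracy_and_macro_fpr` and the 3-class matrix are hypothetical.

```python
import numpy as np


def accuracy_and_macro_fpr(cm: np.ndarray):
    """cm[i, j] = number of clips of true class i predicted as class j."""
    cm = np.asarray(cm, dtype=float)
    total = cm.sum()
    tp = np.diag(cm)
    fp = cm.sum(axis=0) - tp  # predicted as class k but truly another class
    fn = cm.sum(axis=1) - tp  # truly class k but predicted as another class
    tn = total - tp - fp - fn
    accuracy = tp.sum() / total
    fpr_per_class = fp / (fp + tn)
    return accuracy, fpr_per_class.mean()


cm = np.array([[8, 1, 1],
               [0, 9, 1],
               [2, 0, 8]])
acc, fpr = accuracy_and_macro_fpr(cm)
print(f"accuracy = {acc:.4f}, macro FPR = {fpr:.4f}")  # accuracy = 0.8333, macro FPR = 0.0833
```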