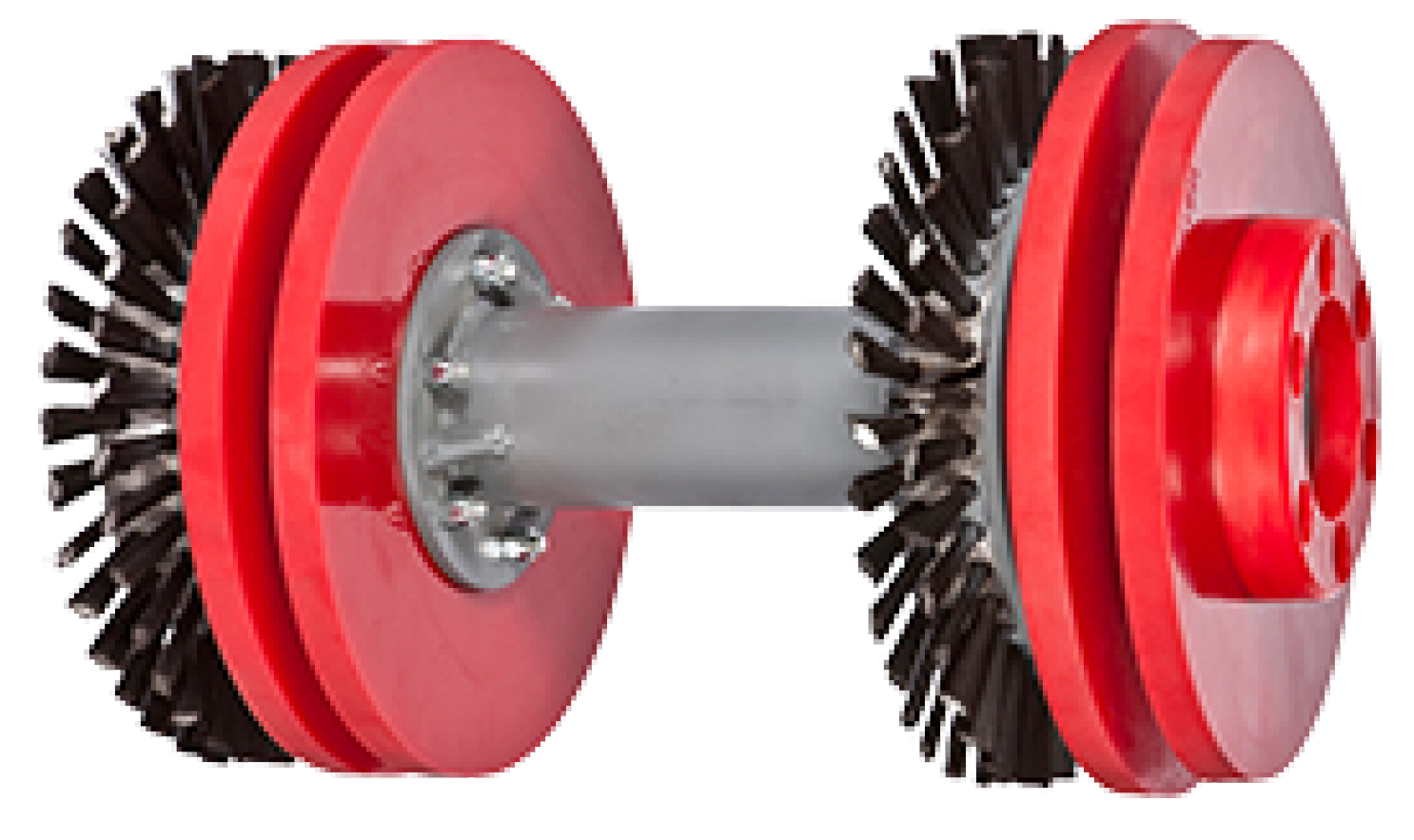

Figure 1.

Example of a cleaning PIG.

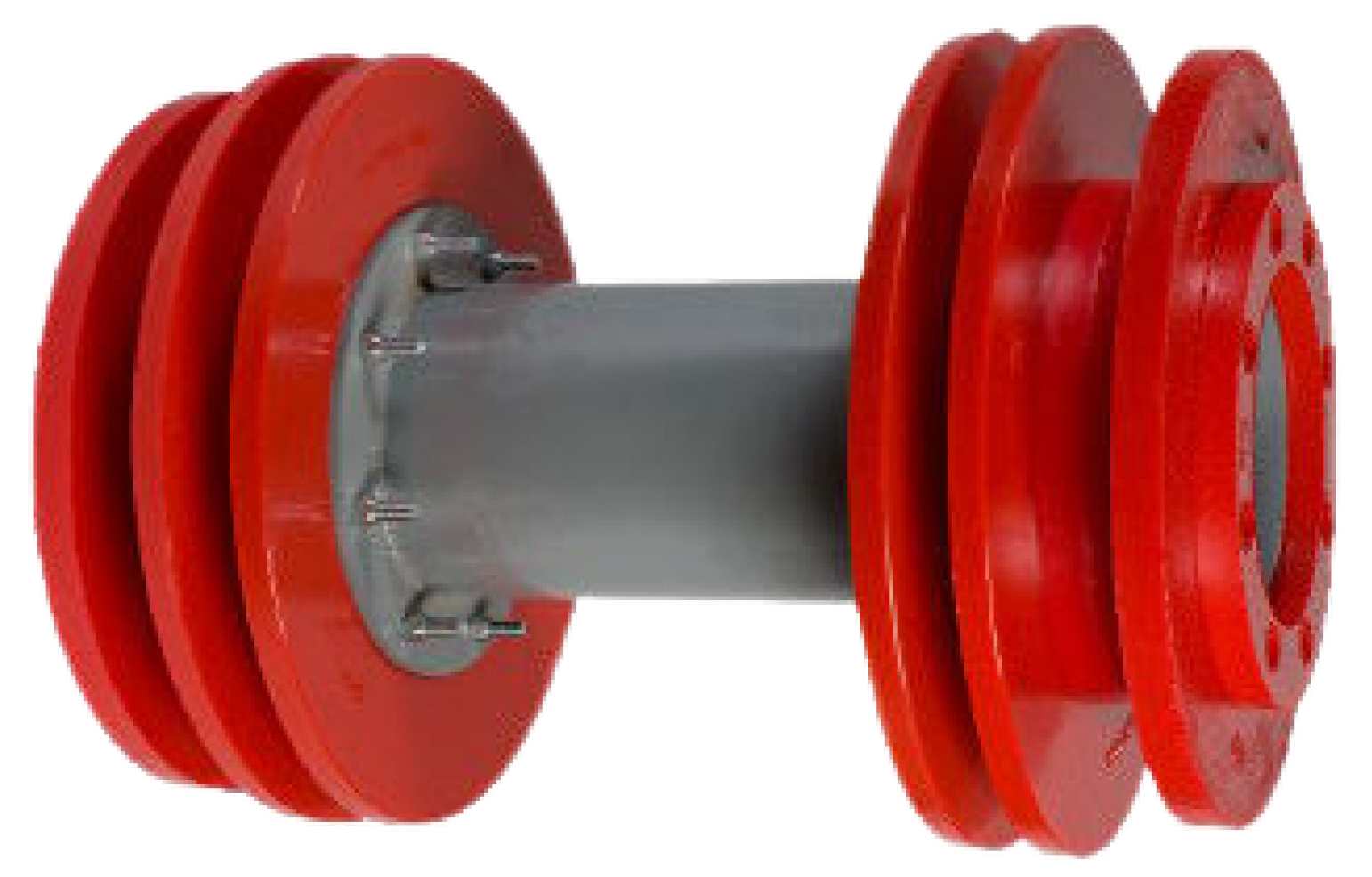

Figure 2.

Example of a sealing PIG.

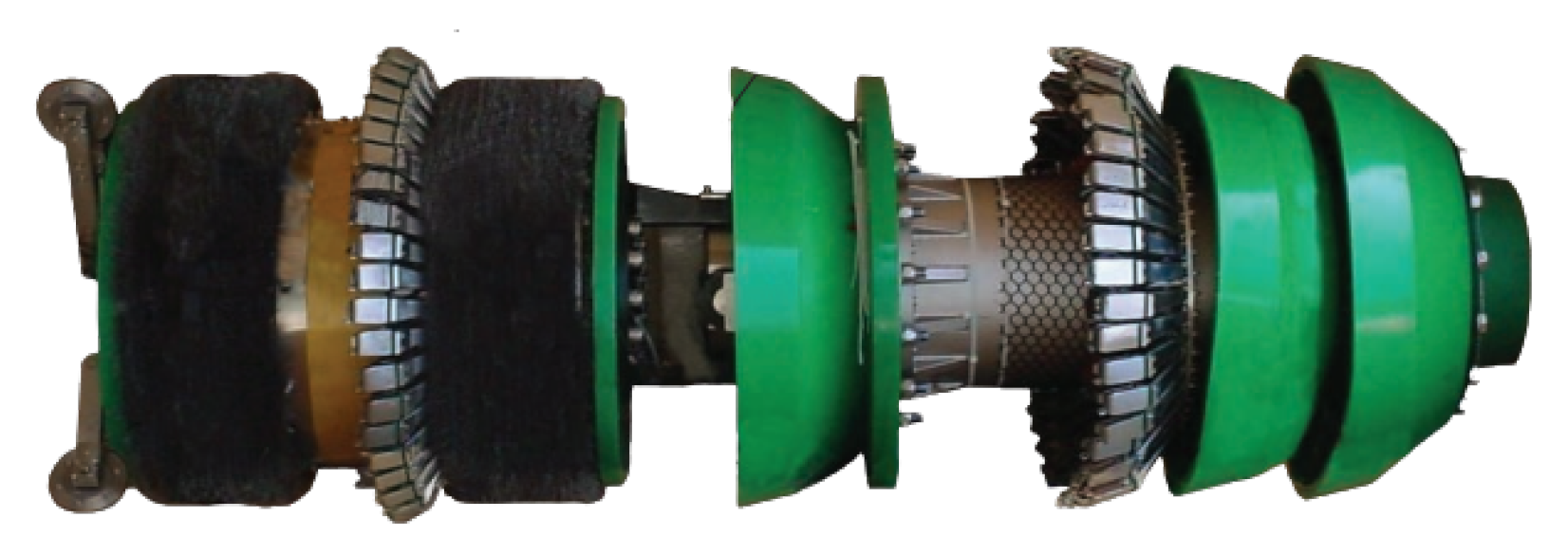

Figure 3.

Example of a smart PIG.

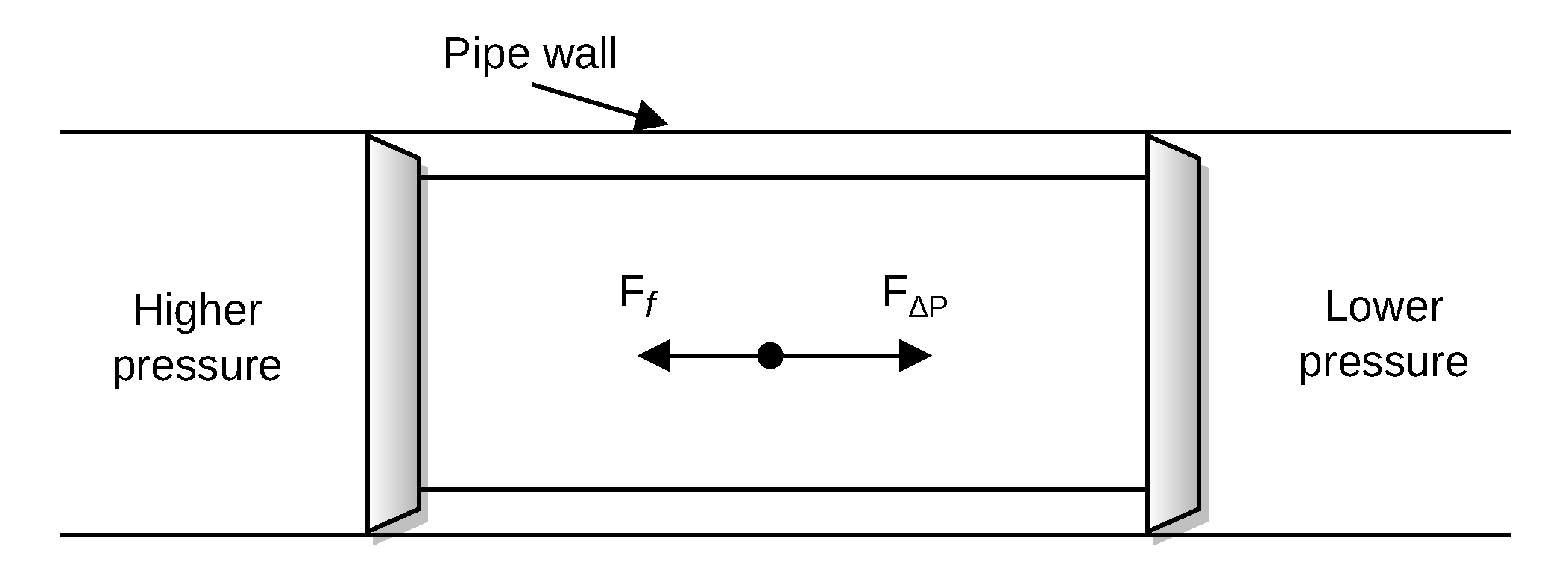

Figure 4.

Forces involved in PIG motion.

Figure 5.

Behavior of velocity (V) and differential pressure (ΔP) with respect to time (t) in the presence of a velocity excursion. PIG velocity.

Figure 6.

Behavior of velocity (V) and differential pressure (ΔP) with respect to time (t) in the presence of a velocity excursion. Differential pressure acting on the PIG.

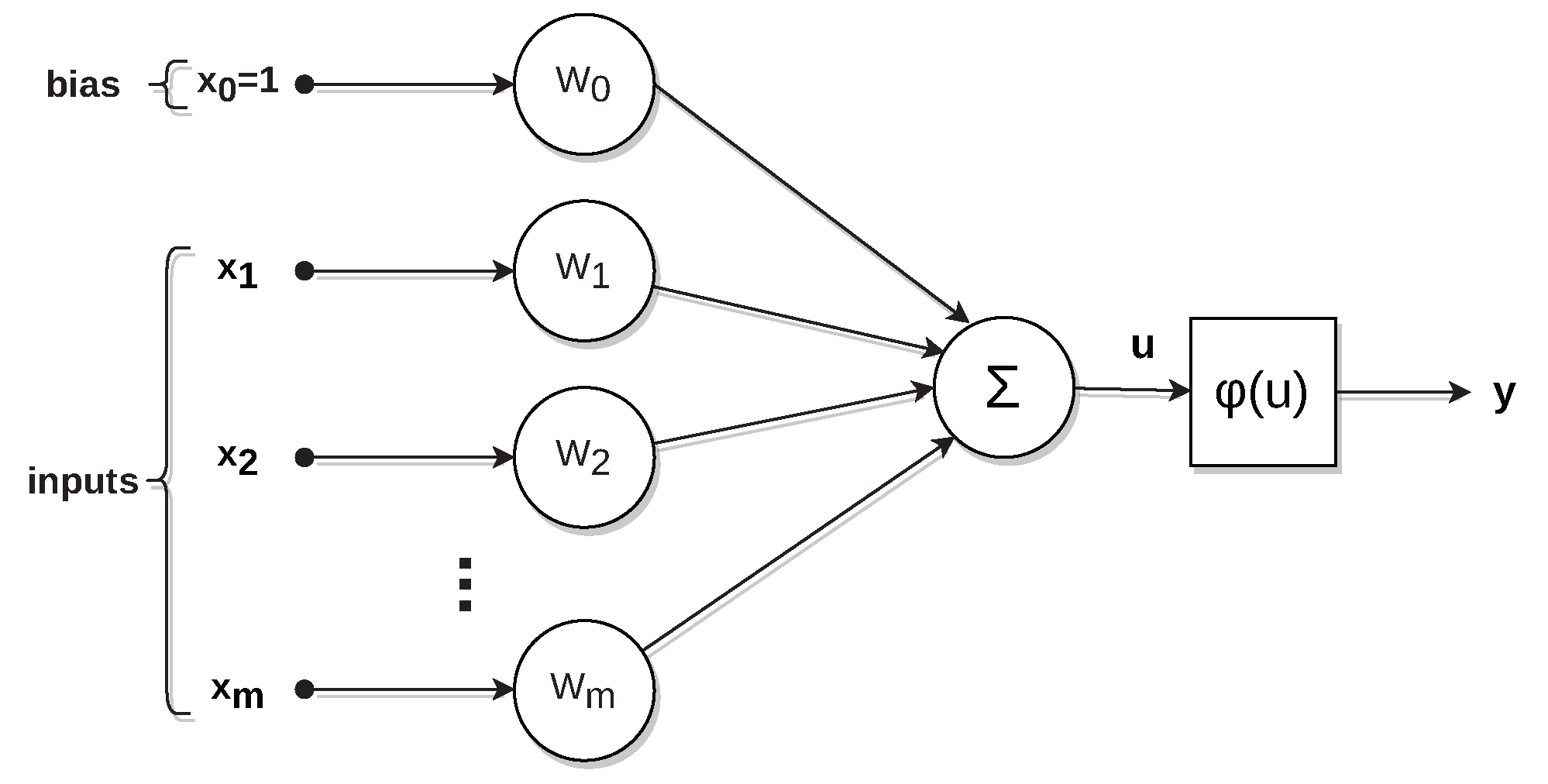

Figure 7.

Artificial neuron model.

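The neuron model in Figure 7 computes a weighted sum of its inputs plus a bias and passes the result through an activation function. The sketch below illustrates that computation; the logistic sigmoid is an assumed choice of activation, and the weights and bias are illustrative values, not parameters from this study.

```python
import math


def sigmoid(z: float) -> float:
    """Logistic activation, mapping any real z into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def neuron(inputs, weights, bias, activation=sigmoid):
    """Output of one artificial neuron: activation(sum_i w_i * x_i + b)."""
    if len(inputs) != len(weights):
        raise ValueError("inputs and weights must have the same length")
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return activation(z)


# Illustrative (hypothetical) weights and bias.
y = neuron([0.5, -1.0, 2.0], [0.4, 0.3, -0.1], bias=0.2)
print(f"{y:.4f}")
```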
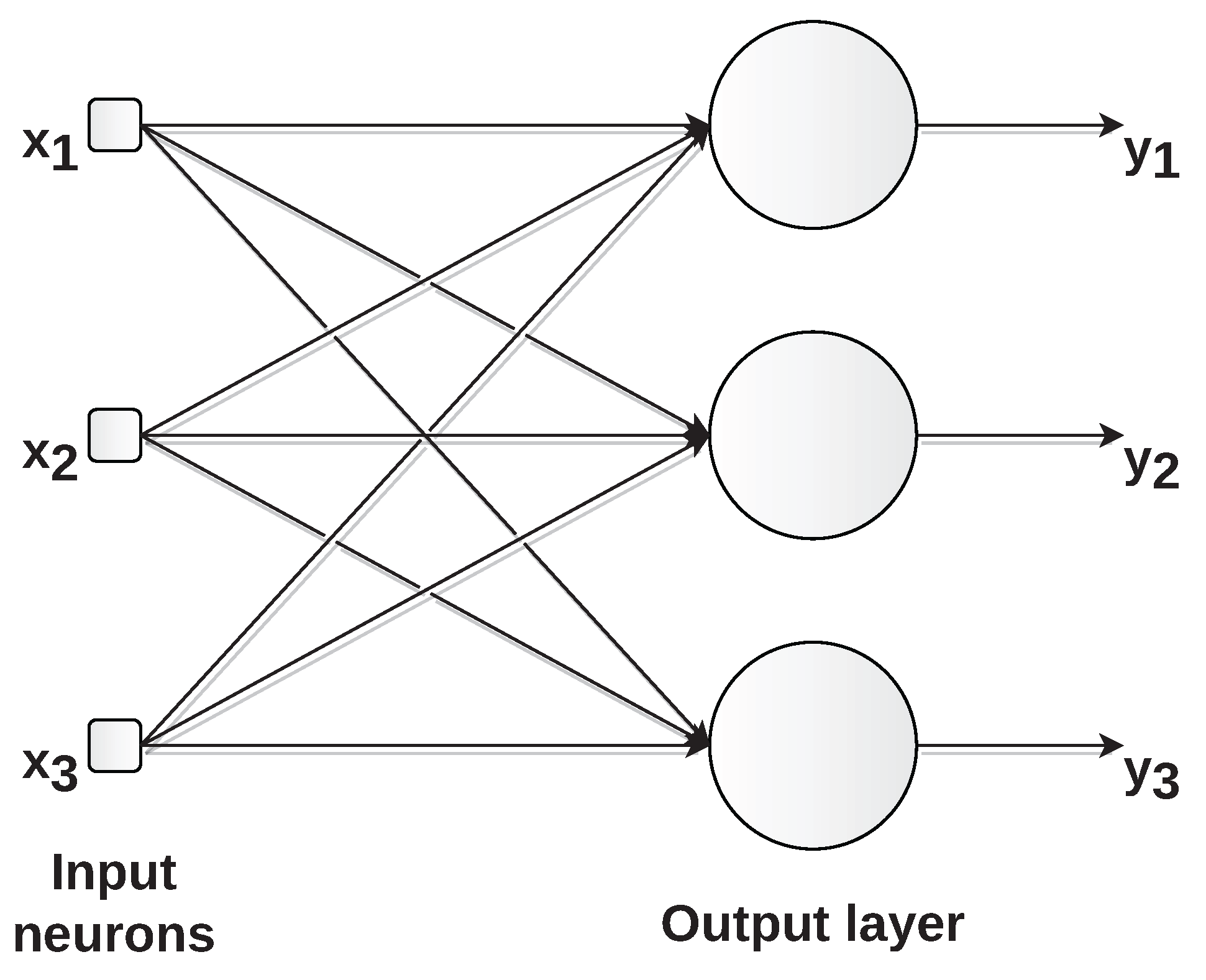

Figure 8.

Example of a single-layer feed-forward network with three inputs and three outputs.

Figure 9.

Example of a multilayer feed-forward network with three inputs, two hidden layers, and two outputs.

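A forward pass through a network shaped like Figure 9 (three inputs, two hidden layers, two outputs) simply chains fully connected layers of neurons. In this sketch the hidden-layer sizes, the tanh hidden activation, the linear output layer, and the random initialization are all assumptions made for illustration; no trained weights from this study are implied.

```python
import math
import random


def dense(x, weights, biases, activation):
    """One fully connected layer: each row of `weights` feeds one neuron."""
    return [activation(sum(w * xi for w, xi in zip(row, x)) + b)
            for row, b in zip(weights, biases)]


def init_layer(n_in, n_out, rng):
    """Small random weights and zero biases (hypothetical initialization)."""
    weights = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out


def identity(z):
    return z


def mlp_forward(x, layers):
    """Propagate x through tanh hidden layers and a linear output layer."""
    *hidden, output = layers
    for weights, biases in hidden:
        x = dense(x, weights, biases, math.tanh)
    weights, biases = output
    return dense(x, weights, biases, identity)


rng = random.Random(0)
# 3 inputs -> 4 hidden -> 4 hidden -> 2 outputs (hidden sizes are assumptions).
layers = [init_layer(3, 4, rng), init_layer(4, 4, rng), init_layer(4, 2, rng)]
print(mlp_forward([0.1, -0.2, 0.3], layers))
```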
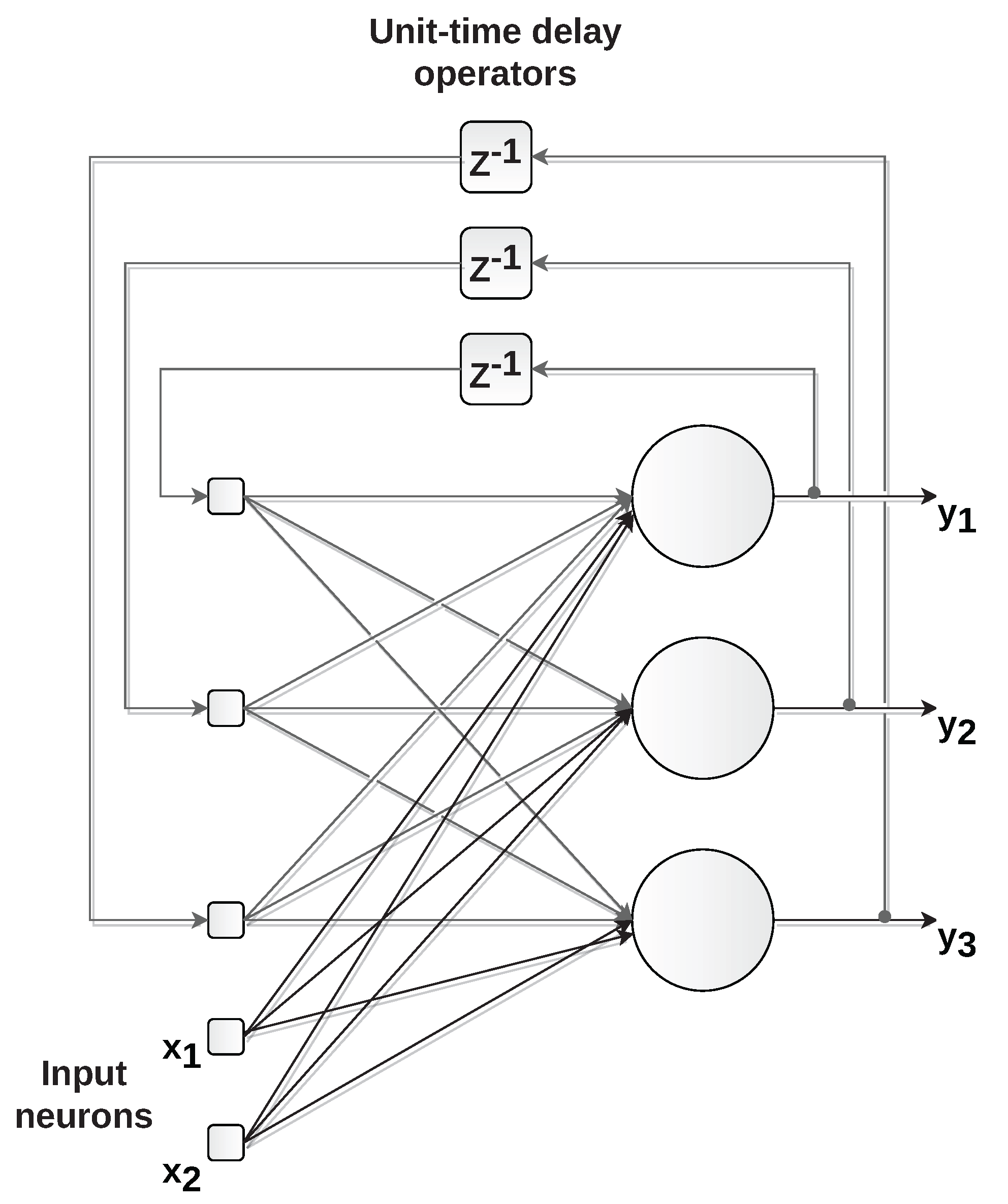

Figure 10.

Example of a recurrent network with two inputs and three outputs. The time-delayed outputs are fed back to the network as inputs.

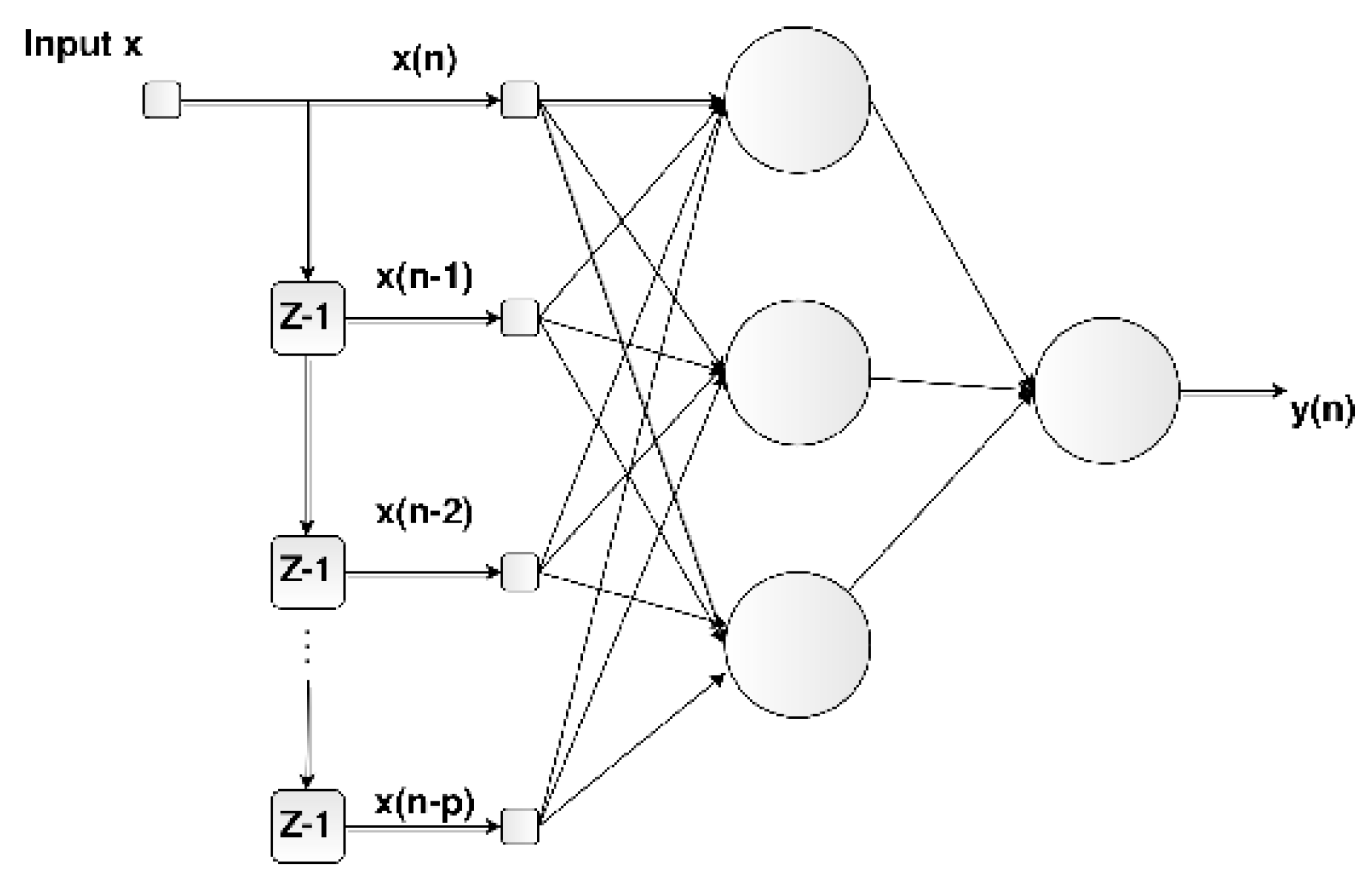

Figure 11.

Time-delay neural network (TDNN).

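A TDNN like the one in Figure 11 sees a short window of the current and past samples through a tapped delay line. The sketch below builds those windows for a single channel; the number of delays and the sample values are hypothetical, and with several sensor channels the windows of each channel would be concatenated into one input vector.

```python
def tapped_delay_inputs(series, delays):
    """Build TDNN input vectors [x(t), x(t-1), ..., x(t-delays)].

    Rows start at t = delays so that every tap refers to an existing sample.
    """
    if delays < 0:
        raise ValueError("delays must be non-negative")
    return [
        [series[t - k] for k in range(delays + 1)]
        for t in range(delays, len(series))
    ]


pressure = [1.0, 1.2, 1.5, 1.4, 1.1]  # hypothetical samples
for row in tapped_delay_inputs(pressure, delays=2):
    print(row)
```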
Figure 12.

Parallel (closed-loop) configuration.

Figure 13.

Series-parallel (open-loop) configuration.

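The parallel (Figure 12) and series-parallel (Figure 13) configurations differ only in what is fed back: the series-parallel form uses the measured past output, which is available during training, while the parallel form feeds back the model's own past prediction. The sketch keeps a single output delay and uses a hypothetical linear one-step model in place of a trained network; a real NARX model would use several delays of both inputs and outputs.

```python
from typing import Callable, List, Sequence

# One-step model: predicts y(t) from the exogenous input u(t) and the previous output.
StepModel = Callable[[float, float], float]


def series_parallel(model: StepModel, u: Sequence[float],
                    y_meas: Sequence[float]) -> List[float]:
    """Open loop: the feedback term is the *measured* previous output."""
    return [model(u[t], y_meas[t - 1]) for t in range(1, len(u))]


def parallel(model: StepModel, u: Sequence[float], y0: float) -> List[float]:
    """Closed loop: the feedback term is the model's own previous prediction."""
    preds, y_prev = [], y0
    for t in range(1, len(u)):
        y_prev = model(u[t], y_prev)
        preds.append(y_prev)
    return preds


def toy_model(u_t: float, y_prev: float) -> float:
    """Hypothetical stand-in for a trained network: y(t) = 0.5 * y(t-1) + u(t)."""
    return 0.5 * y_prev + u_t


u = [0.0, 1.0, 1.0, 1.0]
y_meas = [0.0, 1.0, 1.5, 1.75]
print(series_parallel(toy_model, u, y_meas))
print(parallel(toy_model, u, y0=0.0))
```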
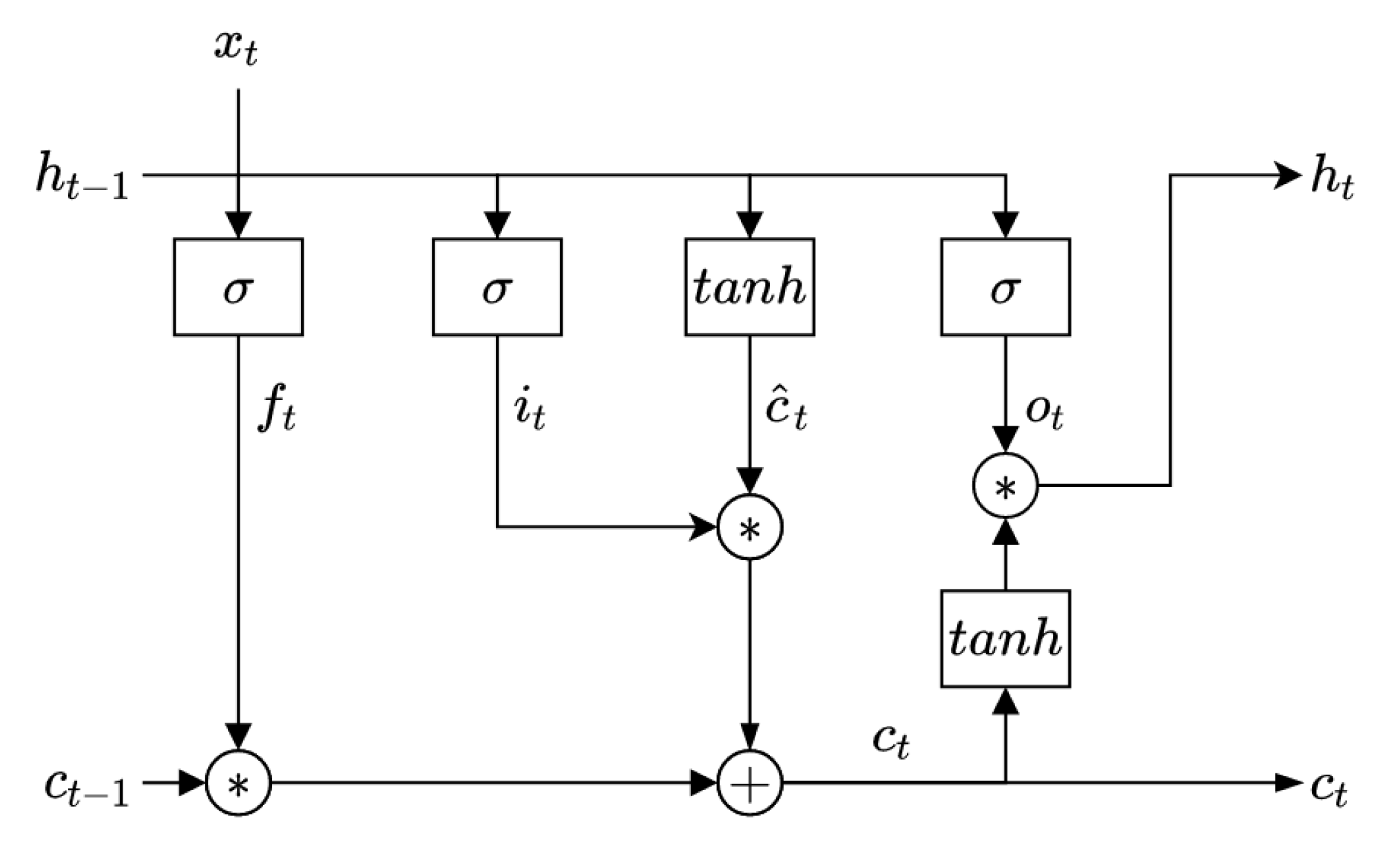

Figure 14.

Representation of the LSTM cell.

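For reference, the widely used LSTM cell with input, forget, and output gates can be written as follows. The notation is assumed here and may differ from the labels in Figure 14: $x_t$ is the input, $h_t$ the hidden state, $c_t$ the cell state, $W$, $U$, and $b$ are input weights, recurrent weights, and biases, $\sigma$ is the logistic sigmoid, and $\odot$ denotes the element-wise product.

$$
\begin{aligned}
f_t &= \sigma\left(W_f x_t + U_f h_{t-1} + b_f\right) \\
i_t &= \sigma\left(W_i x_t + U_i h_{t-1} + b_i\right) \\
o_t &= \sigma\left(W_o x_t + U_o h_{t-1} + b_o\right) \\
\tilde{c}_t &= \tanh\left(W_c x_t + U_c h_{t-1} + b_c\right) \\
c_t &= f_t \odot c_{t-1} + i_t \odot \tilde{c}_t \\
h_t &= o_t \odot \tanh\left(c_t\right)
\end{aligned}
$$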
Figure 15.

Exploded view of the Prototype PIG 2.

Figure 16.

Side view of Prototype PIG 2.

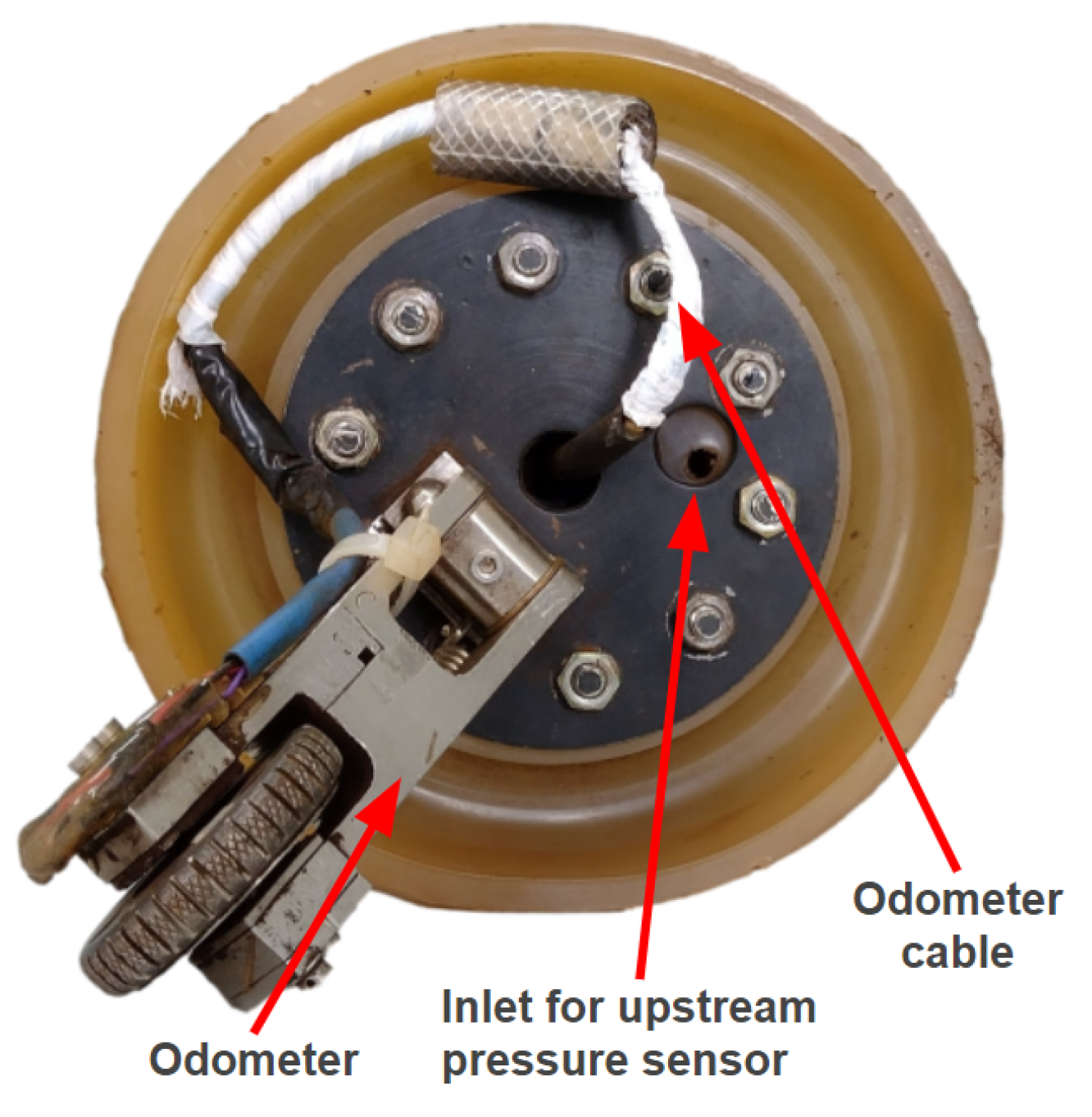

Figure 17.

Front and rear views of Prototype PIG 2. Front view.

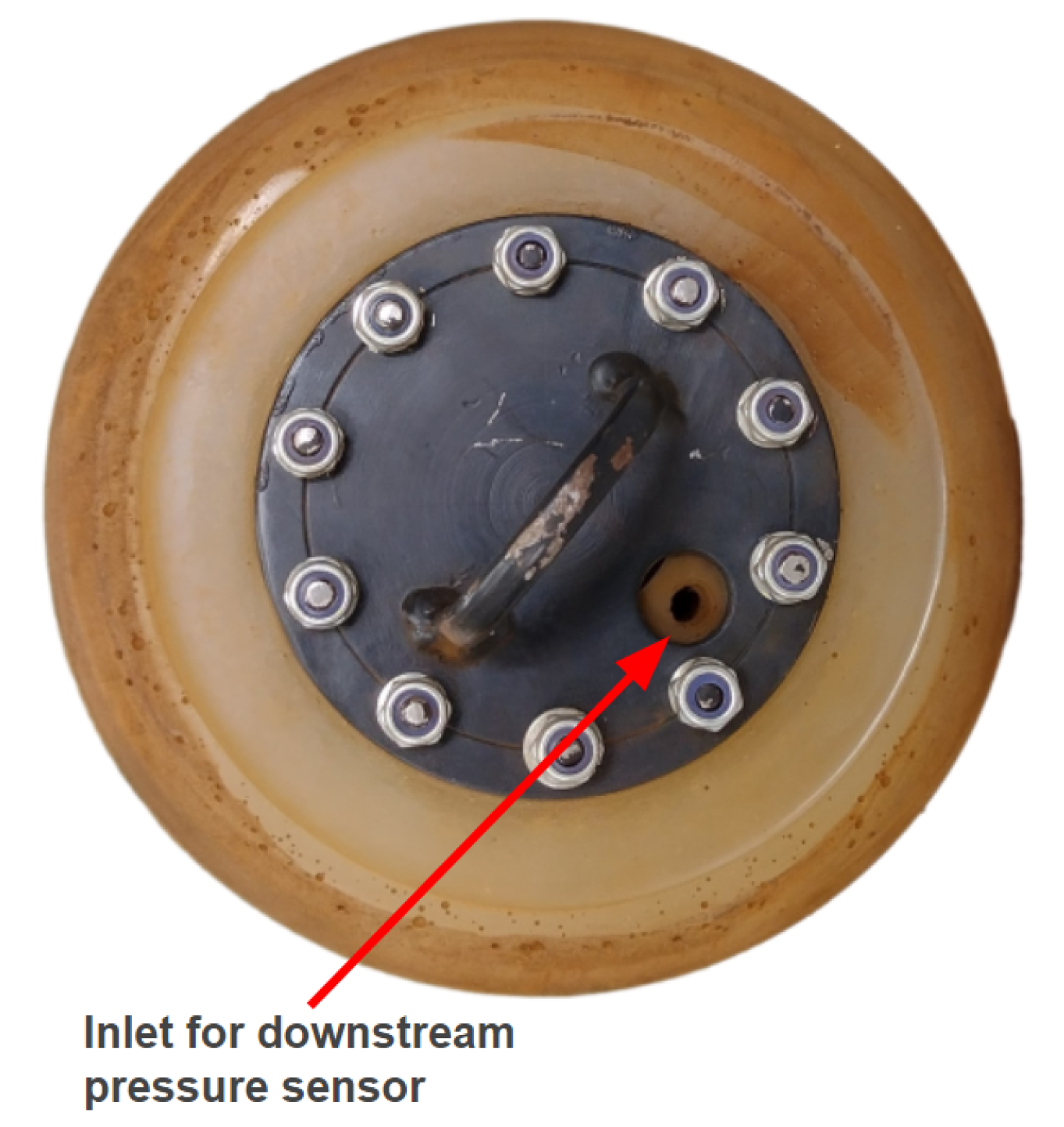

Figure 18.

Front and rear views of Prototype PIG 2. Rear view.

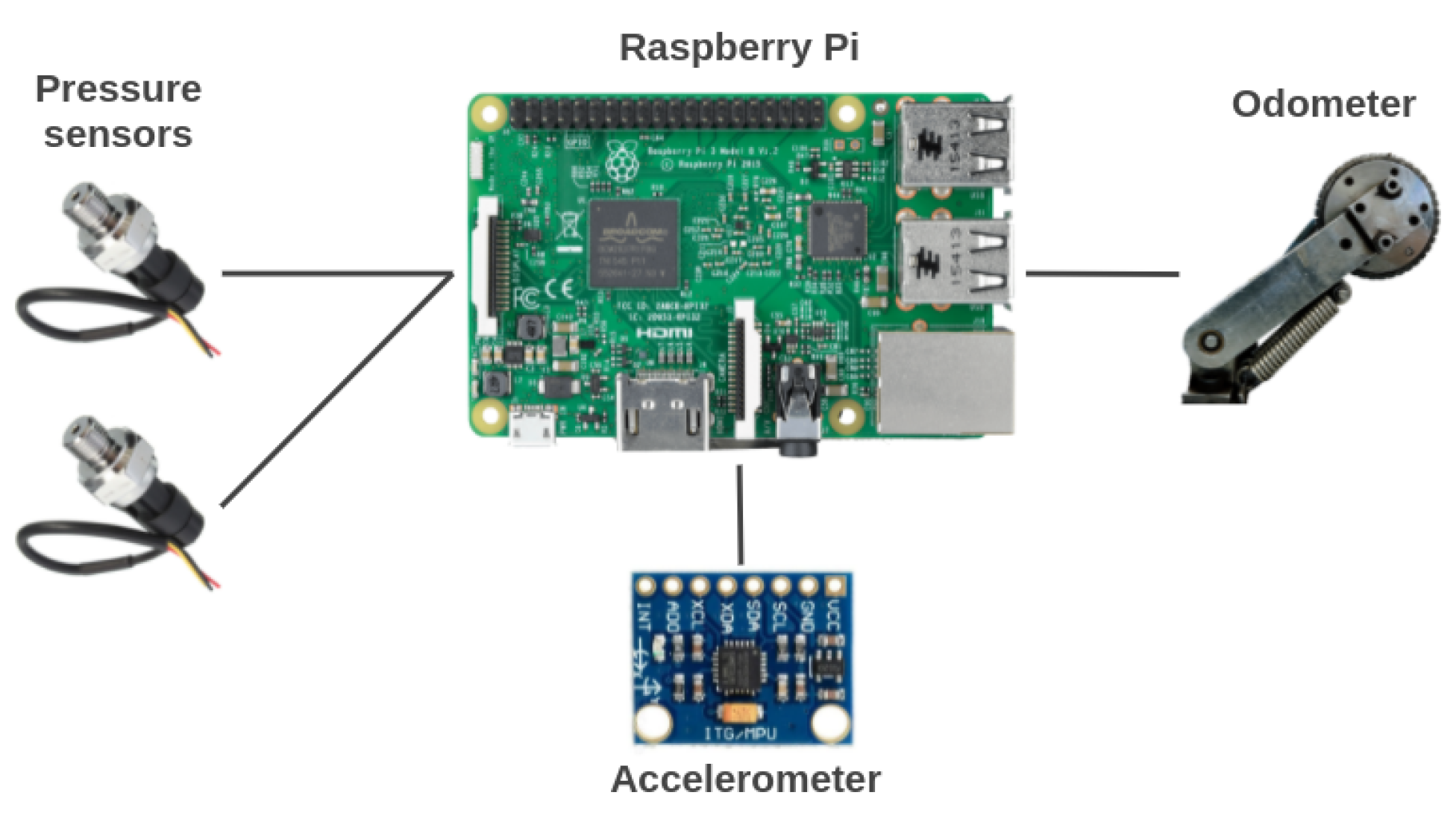

Figure 19.

An overall representation of the embedded system’s elements.

Figure 20.

Top view of the Pi Add-On Board. An analog-to-digital converter (ADC) was used to interface the pressure sensors with the Raspberry Pi.

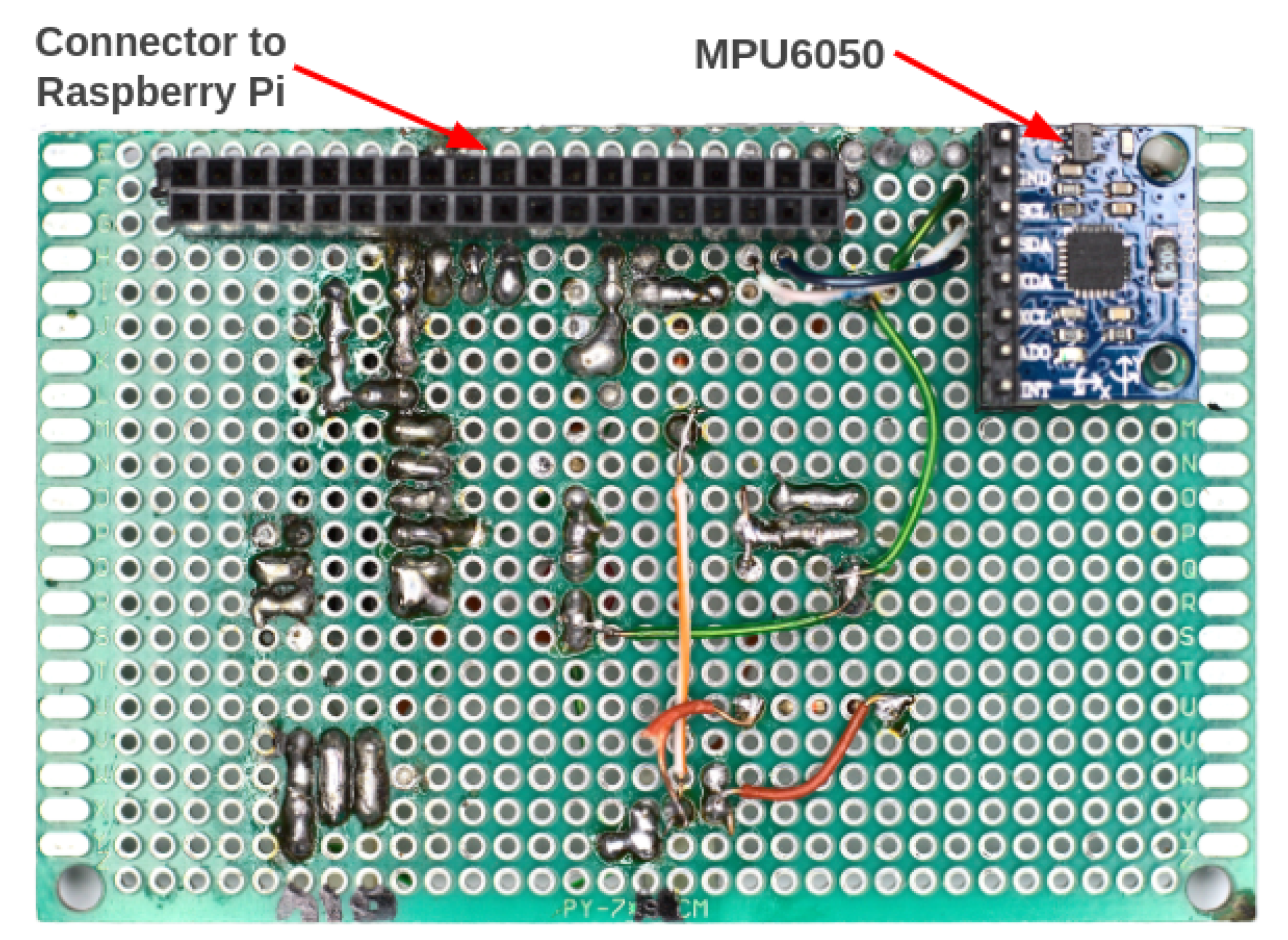

Figure 21.

Bottom view of the Pi Add-On Board. The accelerometer (MPU6050) was mounted on the Pi Add-On Board.

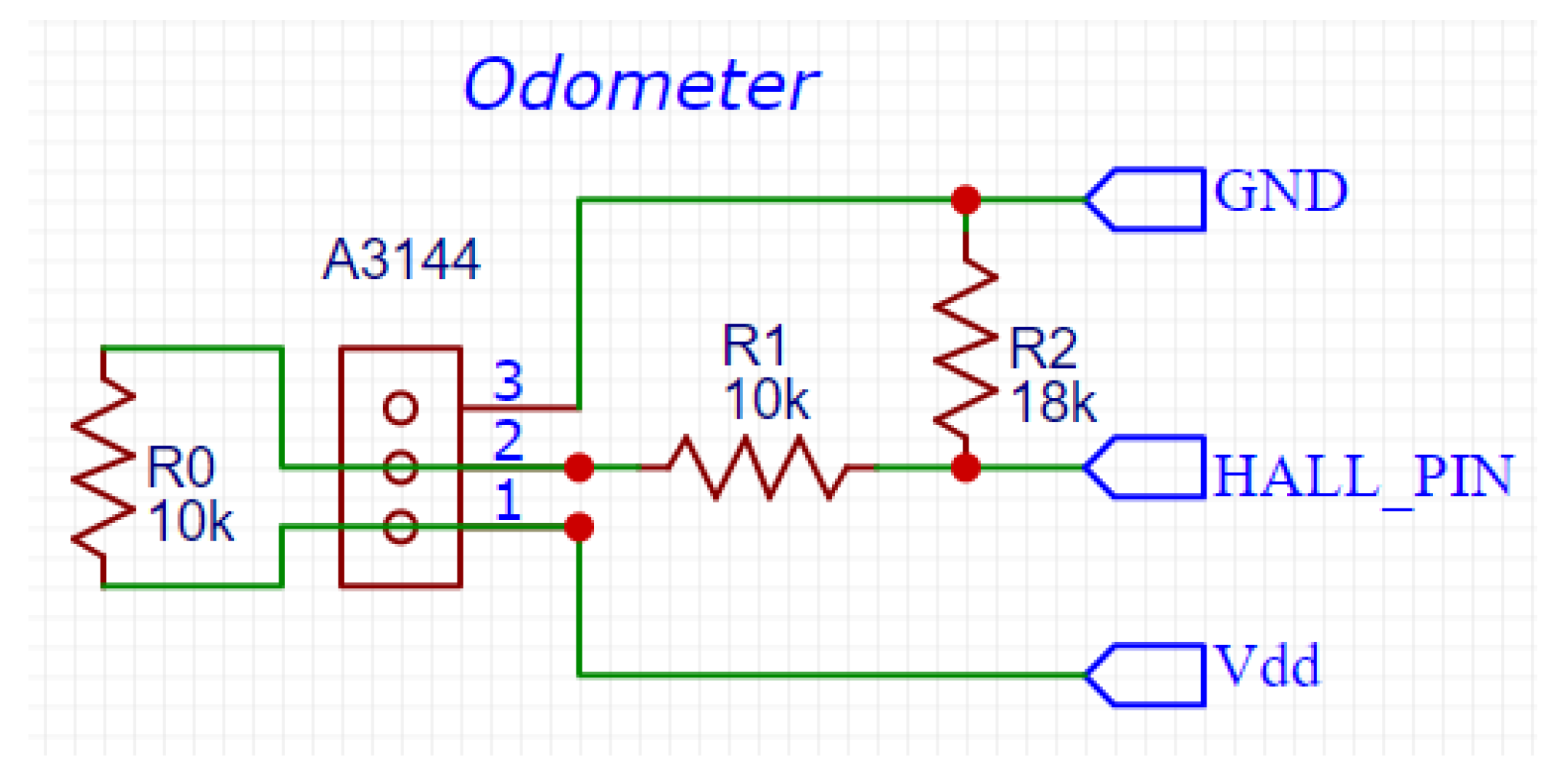

Figure 22.

Voltage divider used to reduce the voltage of the odometer’s output signal.

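The divider in Figure 22 scales the odometer signal by R2/(R1 + R2). The quick check below assumes a 5 V odometer output and hypothetical resistor values (the actual values are not given here), and shows how the divided signal can be kept within the Raspberry Pi's 3.3 V GPIO logic level. It treats the divider as unloaded, i.e., it ignores the input impedance of the GPIO pin.

```python
PI_GPIO_MAX_V = 3.3  # Raspberry Pi GPIO pins use 3.3 V logic and are not 5 V tolerant


def divider_output(v_in: float, r1: float, r2: float) -> float:
    """Voltage across R2 in an unloaded divider (R1 on top, R2 to ground)."""
    return v_in * r2 / (r1 + r2)


# Hypothetical values: 5 V odometer signal, R1 = 2.2 kOhm, R2 = 3.3 kOhm.
v_out = divider_output(5.0, 2200.0, 3300.0)
print(f"{v_out:.2f} V, within GPIO limit: {v_out <= PI_GPIO_MAX_V}")
```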
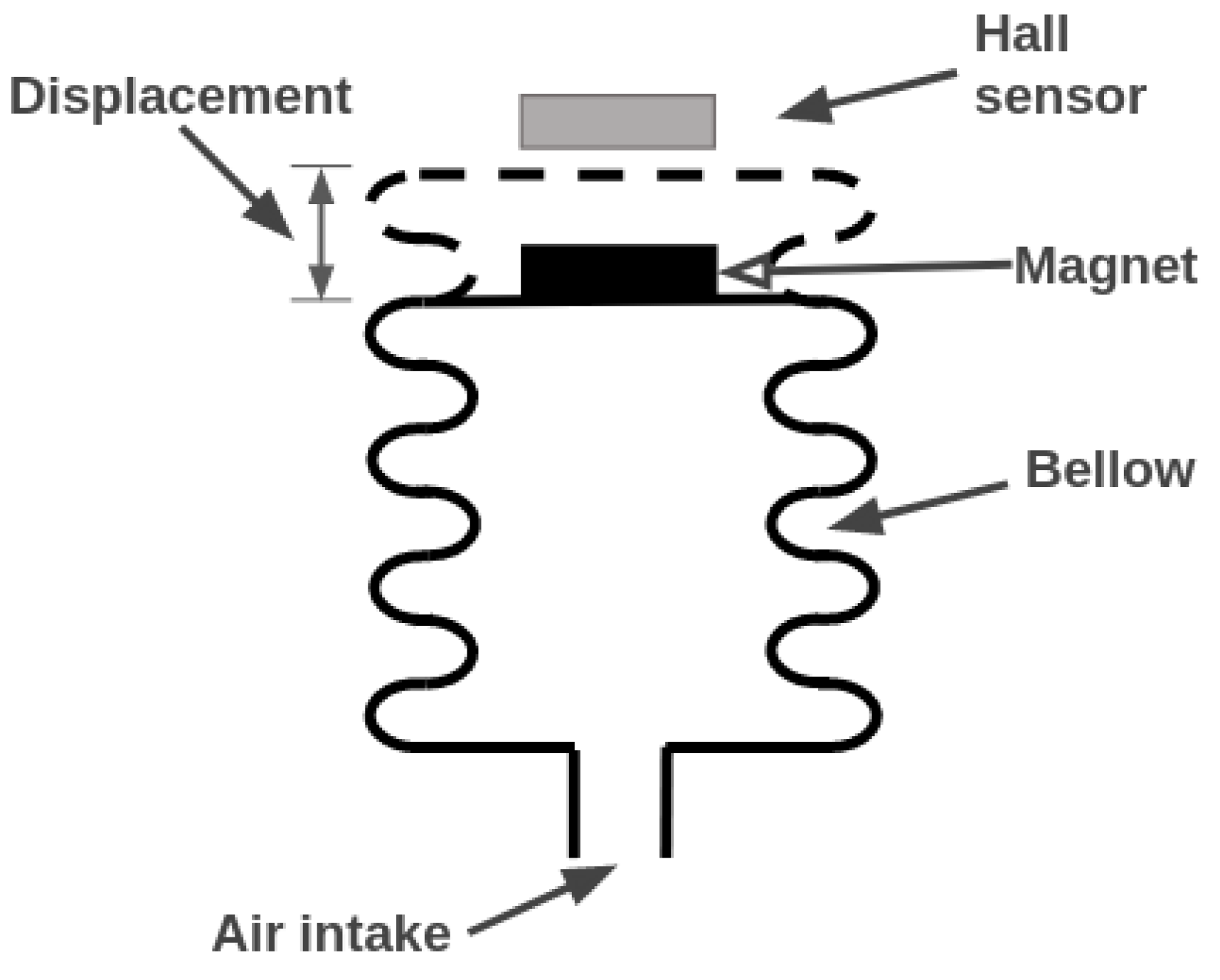

Figure 23.

Working principle of the pressure sensor. The Hall-effect sensor is fixed, while the magnet moves according to the applied pressure.

Figure 24.

Pressure sensor.

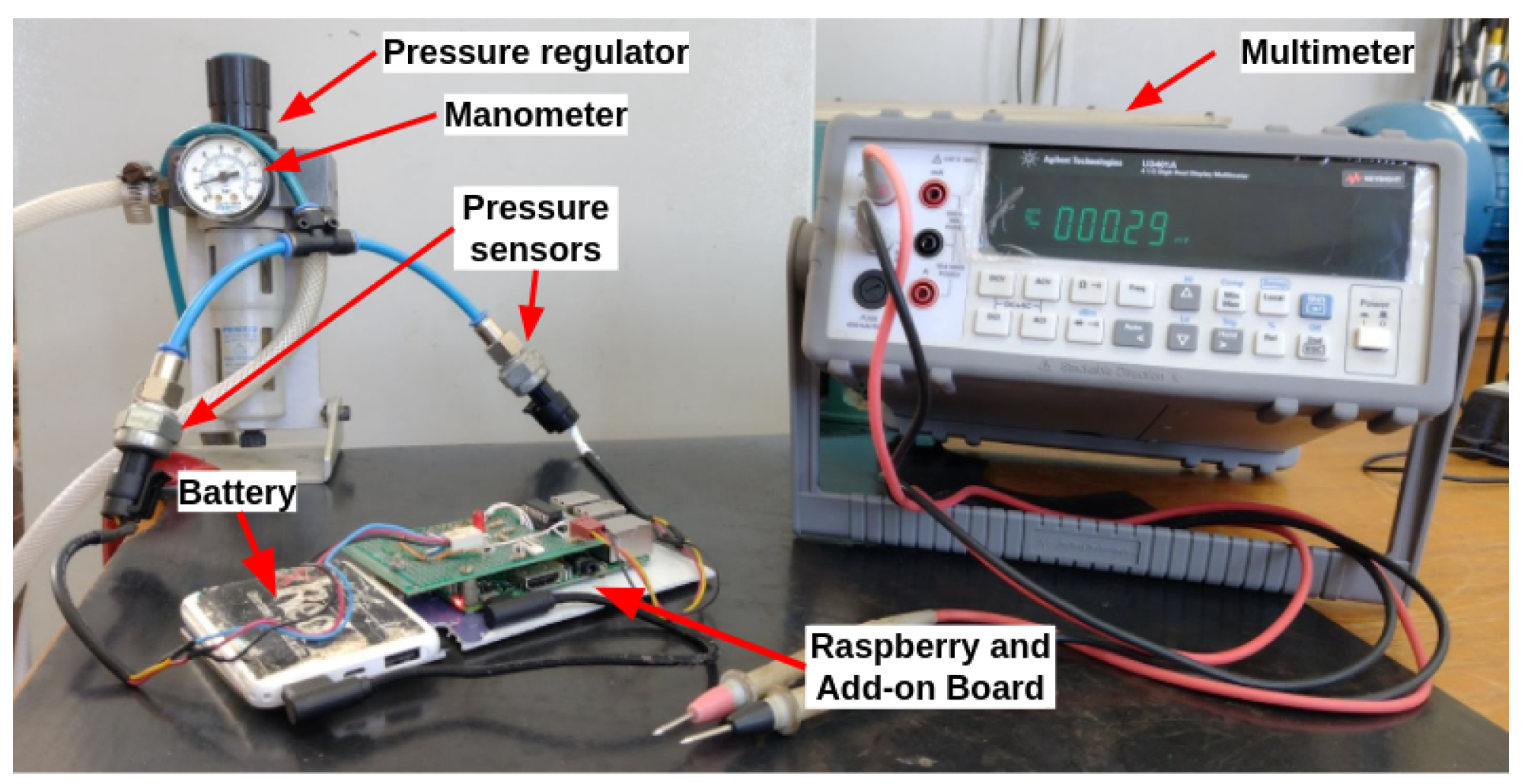

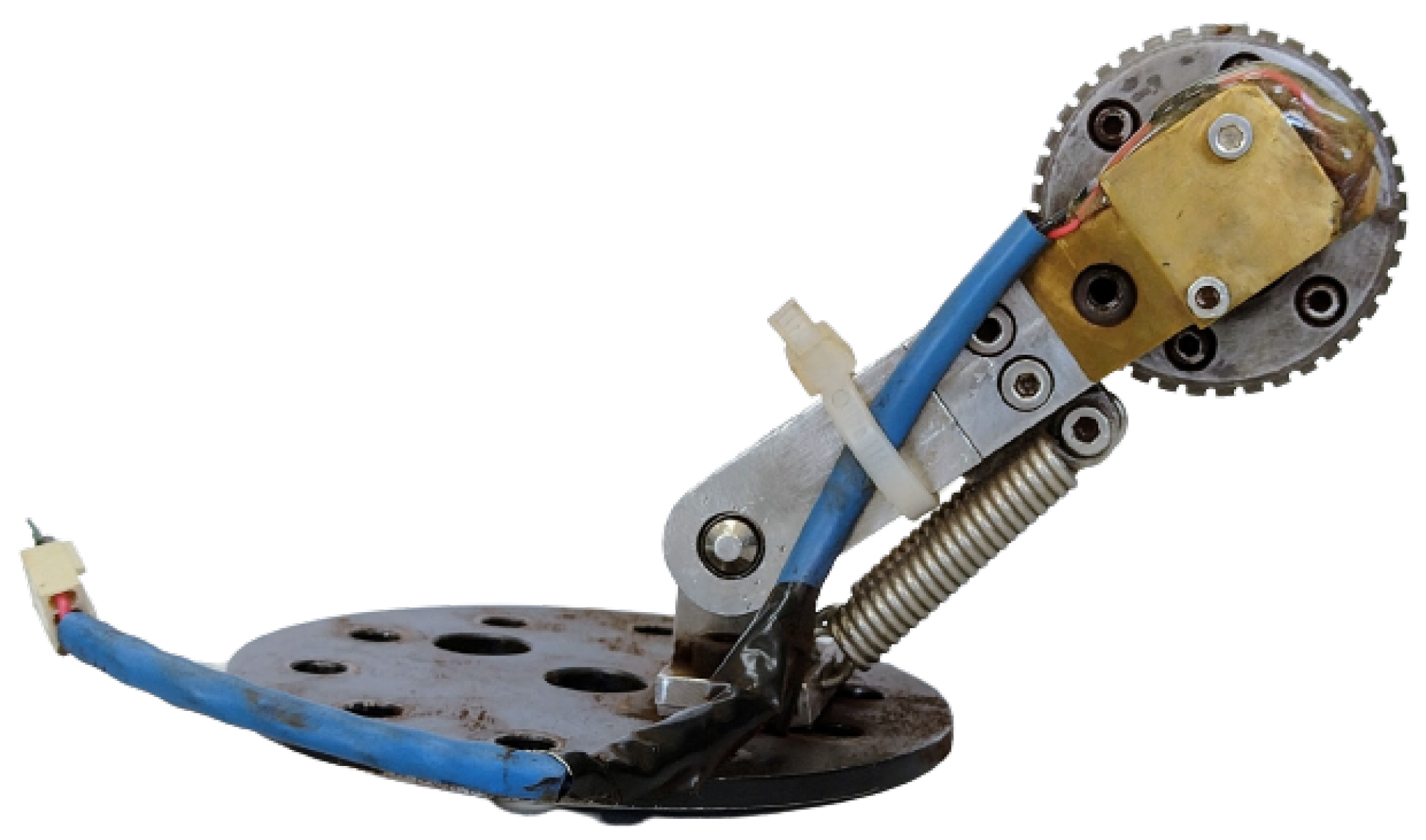

Figure 25.

Devices used in the curve-fitting procedure.

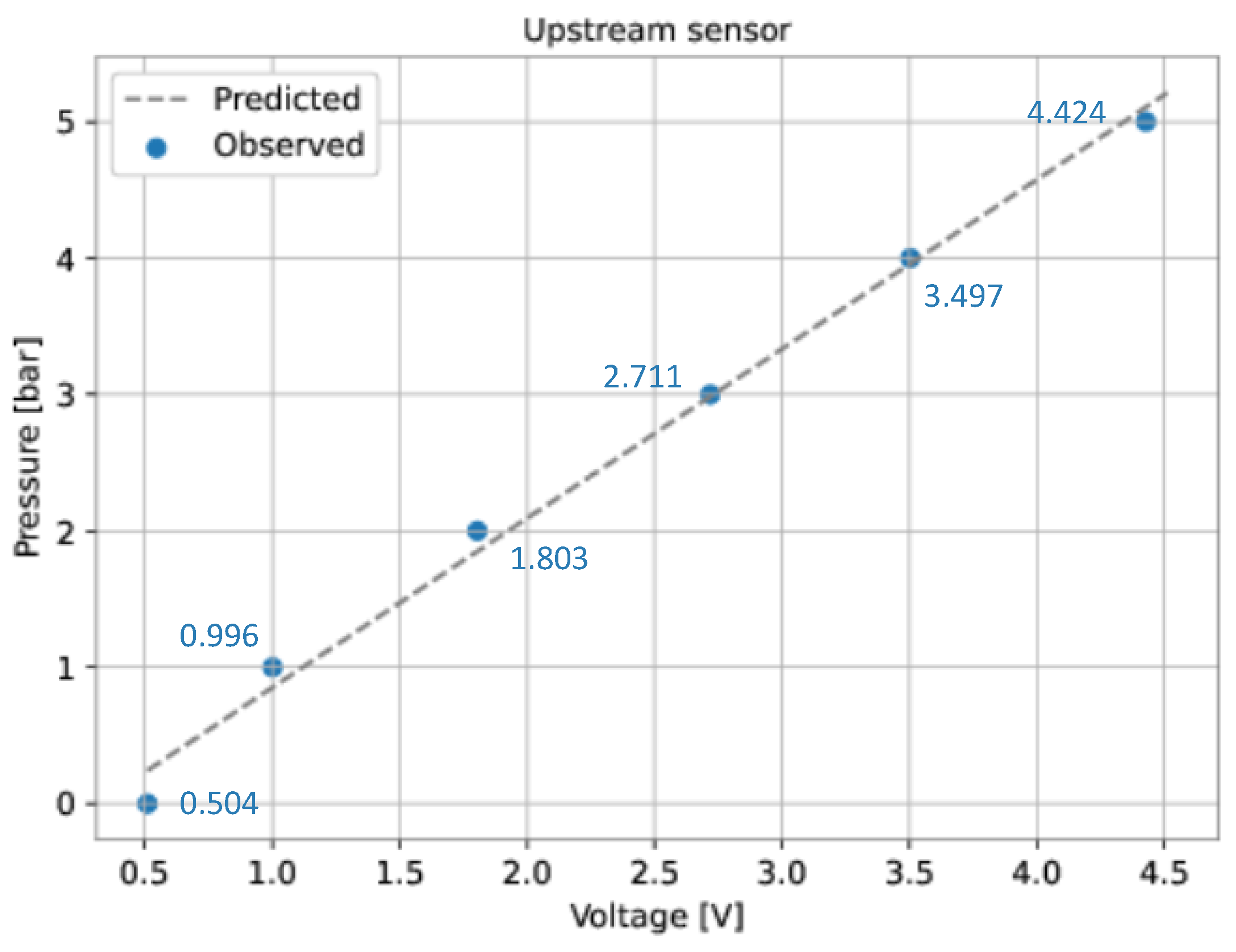

Figure 26.

Curve-fitting for the pressure sensors. Upstream sensor.

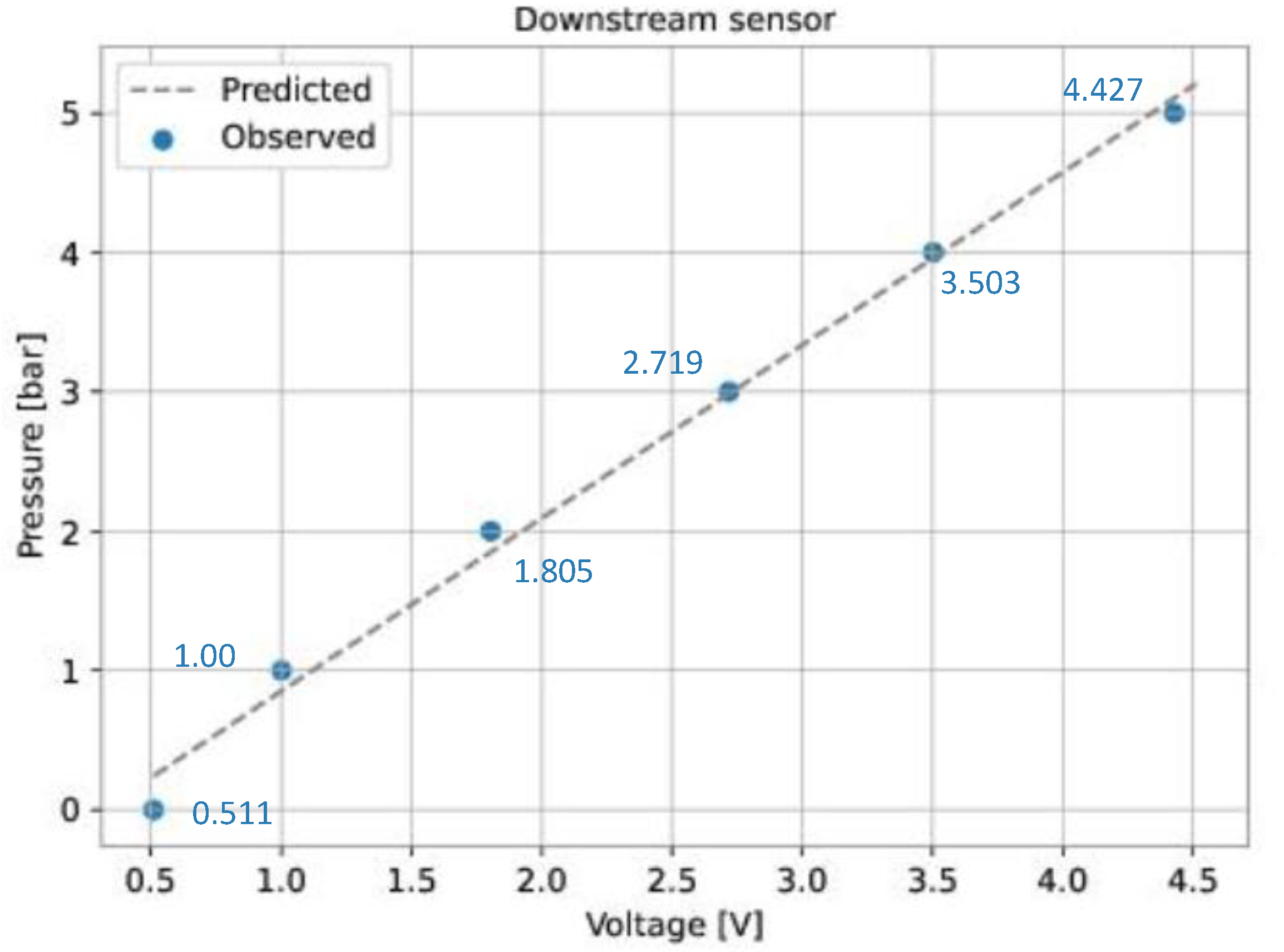

Figure 27.

Curve-fitting for the pressure sensors. Downstream sensor.

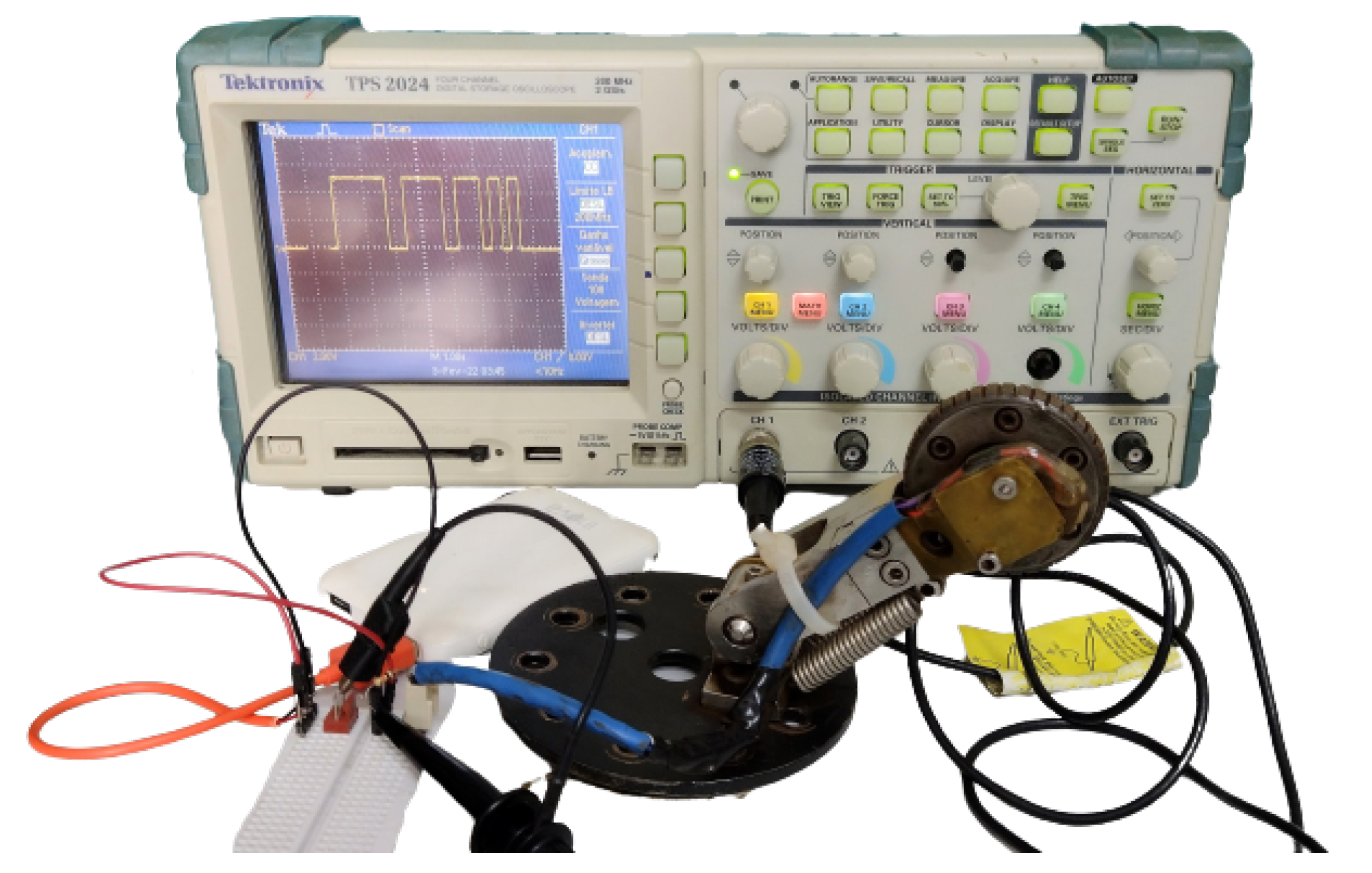

Figure 29.

Experimental setup that illustrates the Hall-effect switch’s output.

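Assuming the odometer is a wheel rolling on the pipe wall whose magnets trigger the Hall-effect switch shown in Figure 29, velocity can be estimated from the pulses counted in a time window. The wheel diameter and the number of pulses per revolution below are hypothetical, and wheel slip is ignored.

```python
import math


def odometer_velocity(pulses: int, dt_s: float, wheel_diameter_m: float,
                      pulses_per_rev: int) -> float:
    """Average velocity (m/s) from Hall-effect pulses counted over dt_s seconds.

    Each pulse is assumed to correspond to 1/pulses_per_rev of a wheel turn,
    with the wheel rolling on the pipe wall without slipping.
    """
    if dt_s <= 0:
        raise ValueError("dt_s must be positive")
    distance_m = pulses * math.pi * wheel_diameter_m / pulses_per_rev
    return distance_m / dt_s


# Hypothetical wheel: 50 mm diameter, 4 magnets (pulses) per revolution.
v = odometer_velocity(pulses=20, dt_s=1.0, wheel_diameter_m=0.05, pulses_per_rev=4)
print(f"{v:.3f} m/s")
```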
Figure 30.

Orientation of the accelerometer inside the PIG.

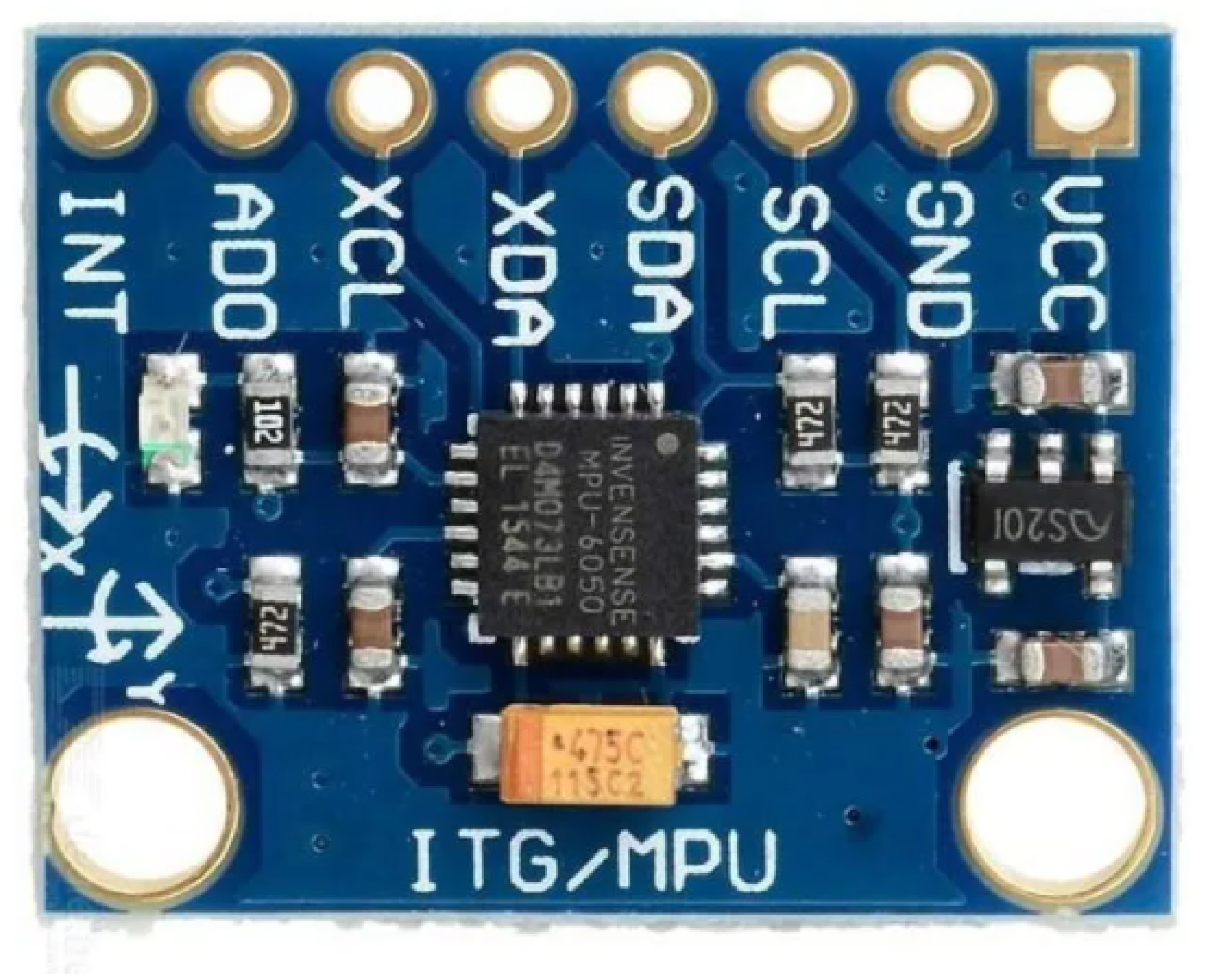

Figure 31.

The MEMS accelerometer MPU6050 was used to measure the PIG’s acceleration.

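Table 5 lists a feature named acc_total, whose definition is not given in this section. One plausible definition, assumed here, is the magnitude of the three acceleration components. The sketch also assumes the MPU6050 is configured for its ±2 g full-scale range, where the datasheet sensitivity is 16384 LSB/g; the range used in the study is not stated here, and the raw sample is hypothetical.

```python
import math

G = 9.80665             # standard gravity, m/s^2
LSB_PER_G_2G = 16384.0  # MPU6050 accelerometer sensitivity at the ±2 g range


def raw_to_ms2(raw: int, lsb_per_g: float = LSB_PER_G_2G) -> float:
    """Convert a signed 16-bit accelerometer reading to m/s^2."""
    return raw / lsb_per_g * G


def acc_total(ax: float, ay: float, az: float) -> float:
    """Euclidean norm of the three acceleration components (assumed definition)."""
    return math.sqrt(ax * ax + ay * ay + az * az)


# Hypothetical raw sample: sensor at rest with gravity along its z axis.
ax, ay, az = (raw_to_ms2(r) for r in (0, 0, 16384))
print(f"{acc_total(ax, ay, az):.3f} m/s^2")
```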
Figure 32.

Representation of the PIG launcher and receiver.

Figure 33.

Top-view drawing of the testing pipeline.

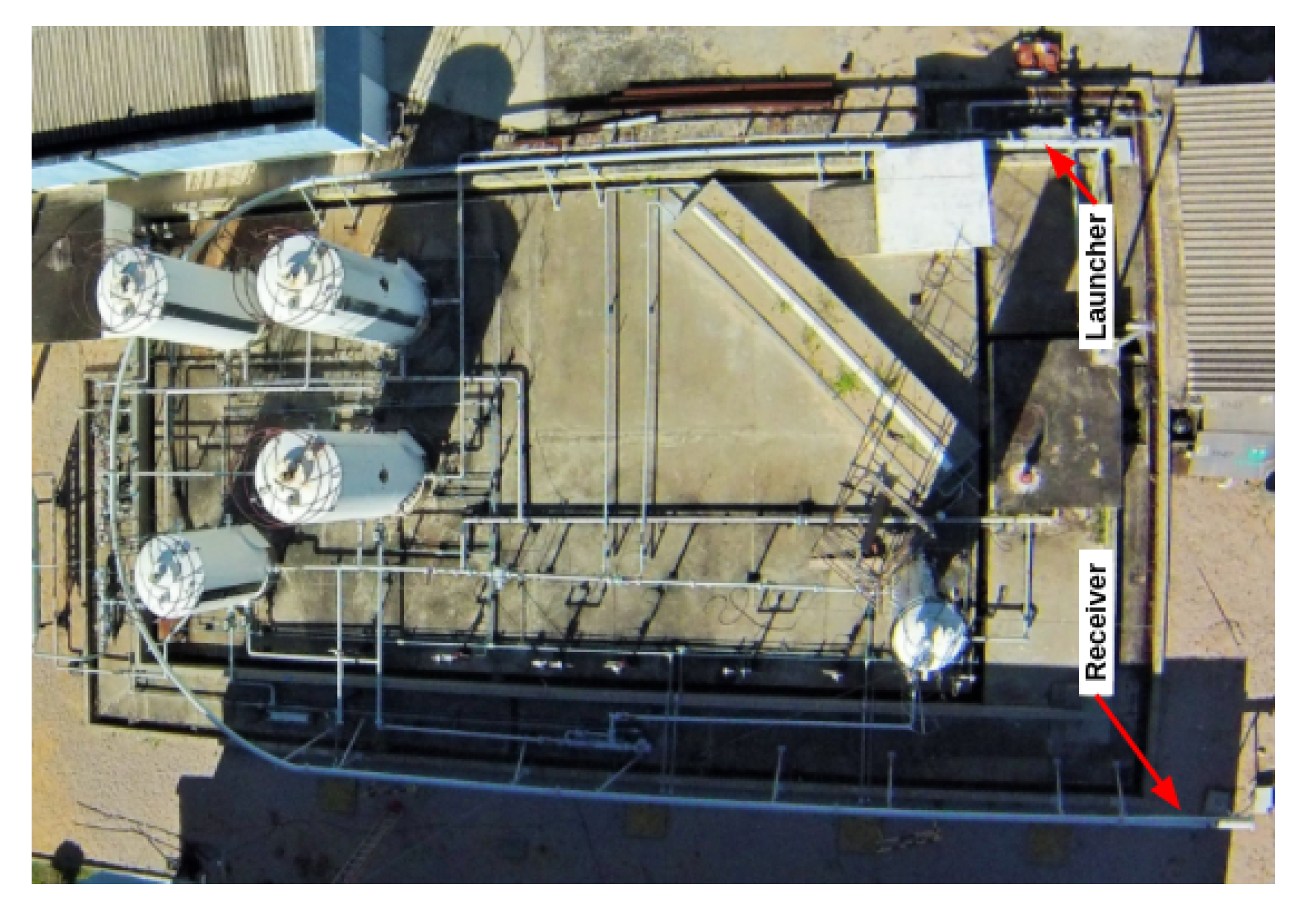

Figure 34.

Aerial photo of the testing pipeline.

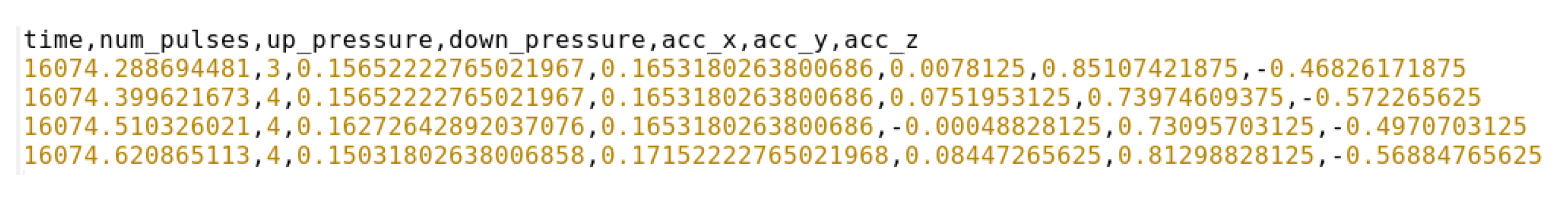

Figure 35.

Example of a comma-separated values (CSV) file used to record the data collected from the sensors.

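Sensor samples can be logged row by row to a CSV file such as the one in Figure 35 with Python's standard `csv` module. The column names and values below are hypothetical and do not reproduce the exact layout of the study's files; an in-memory buffer stands in for the file so the example runs anywhere.

```python
import csv
import io

# Hypothetical column names; the actual layout is the one shown in Figure 35.
FIELDS = ["time_s", "p_up_bar", "p_down_bar", "ax", "ay", "az", "velocity_ms"]


def write_samples(stream, samples):
    """Write a header row followed by one row per sample dictionary."""
    writer = csv.DictWriter(stream, fieldnames=FIELDS)
    writer.writeheader()
    writer.writerows(samples)


buffer = io.StringIO()  # stands in for open(path, "w", newline="")
write_samples(buffer, [
    {"time_s": 0.00, "p_up_bar": 1.20, "p_down_bar": 1.05,
     "ax": 0.01, "ay": -0.02, "az": 9.81, "velocity_ms": 0.0},
])
print(buffer.getvalue())
```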
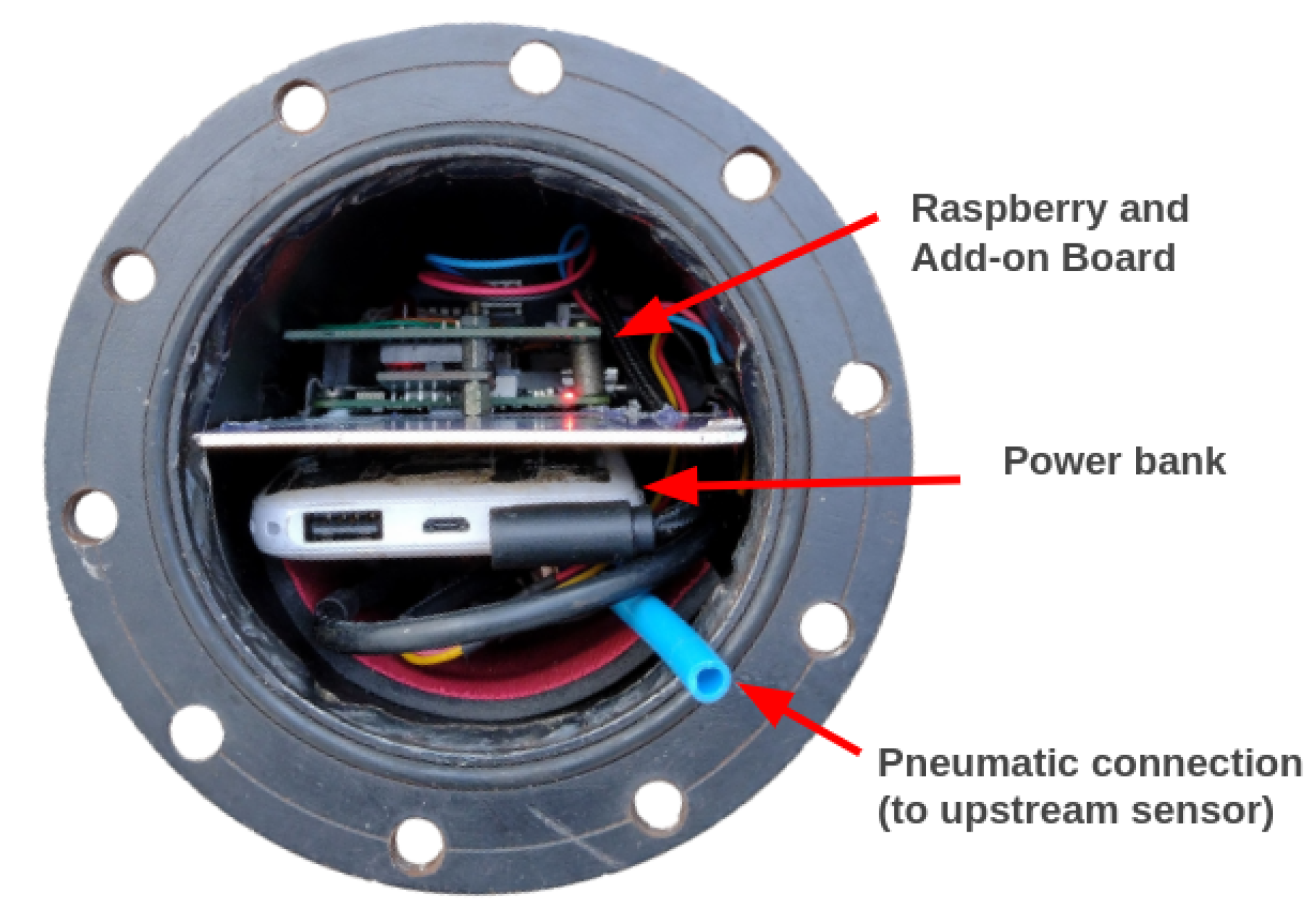

Figure 36.

Rear view of the PIG with the embedded system installed inside.

Figure 37.

Steps of the data collection procedure.

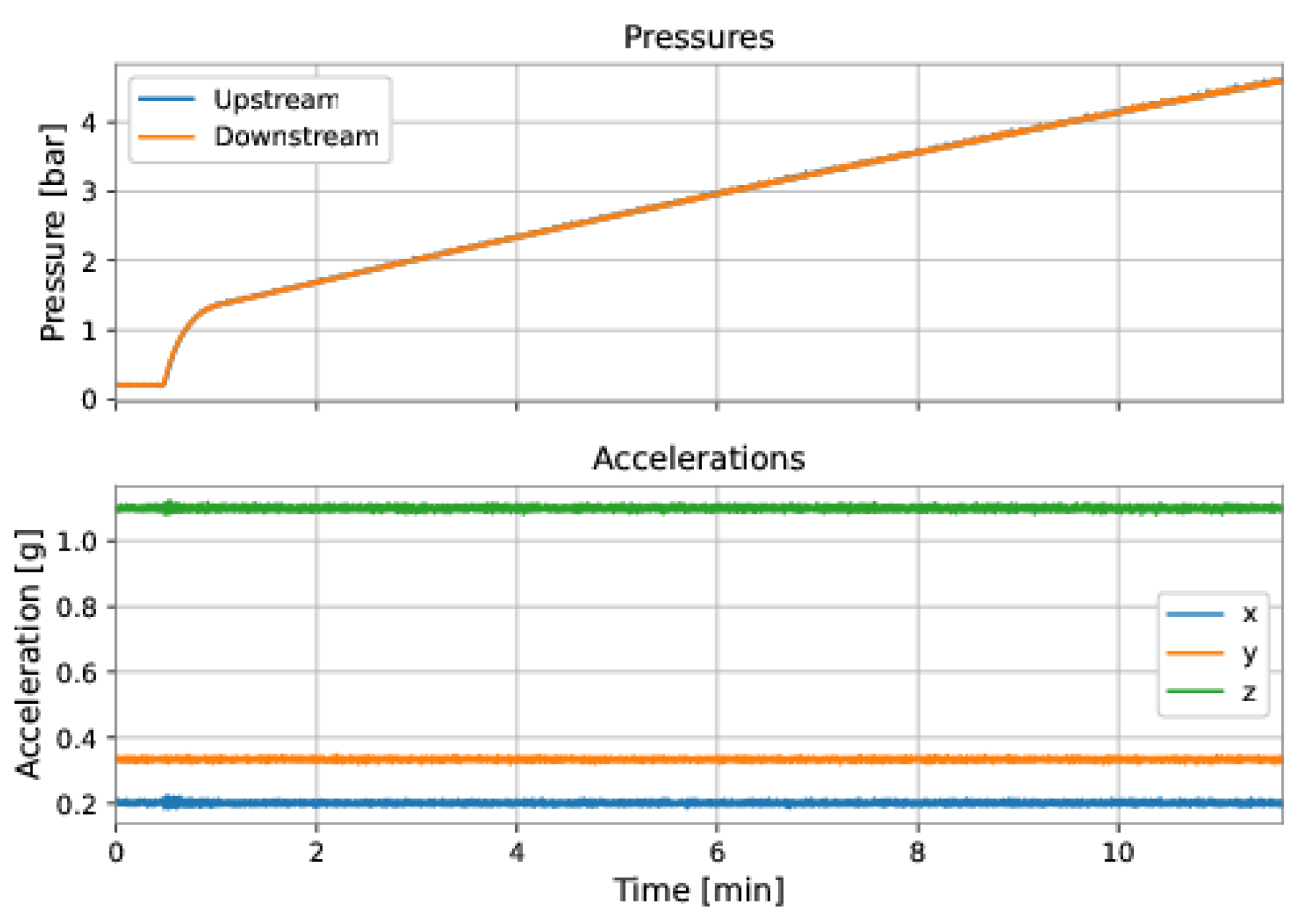

Figure 38.

Examples of samples that did not belong to the regions of interest for training the model and were therefore discarded from the dataset. Initial pressurization of the pipeline.

Figure 39.

Examples of samples that did not belong to the regions of interest for training the model and were therefore discarded from the dataset. PIG’s collision at the end of the pipeline.

Figure 40.

Example of pressure outlier.

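The criterion used in the study to detect pressure outliers like the one in Figure 40 is not stated in this section. As an illustrative, swapped-in technique, the sketch flags samples whose distance from the median exceeds k scaled median absolute deviations (MAD); the threshold k = 3 and the sample values are hypothetical.

```python
import statistics


def mad_outliers(values, k=3.0):
    """Indices of samples farther than k scaled MADs from the median.

    The factor 1.4826 makes the MAD a consistent estimate of the standard
    deviation for normally distributed data.
    """
    med = statistics.median(values)
    mad = statistics.median(abs(v - med) for v in values)
    if mad == 0:
        # More than half the samples equal the median: flag every other value.
        return [i for i, v in enumerate(values) if v != med]
    threshold = k * 1.4826 * mad
    return [i for i, v in enumerate(values) if abs(v - med) > threshold]


pressure_bar = [1.00, 1.10, 0.90, 1.00, 9.00, 1.05]  # hypothetical spike at index 4
print(mad_outliers(pressure_bar))
```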
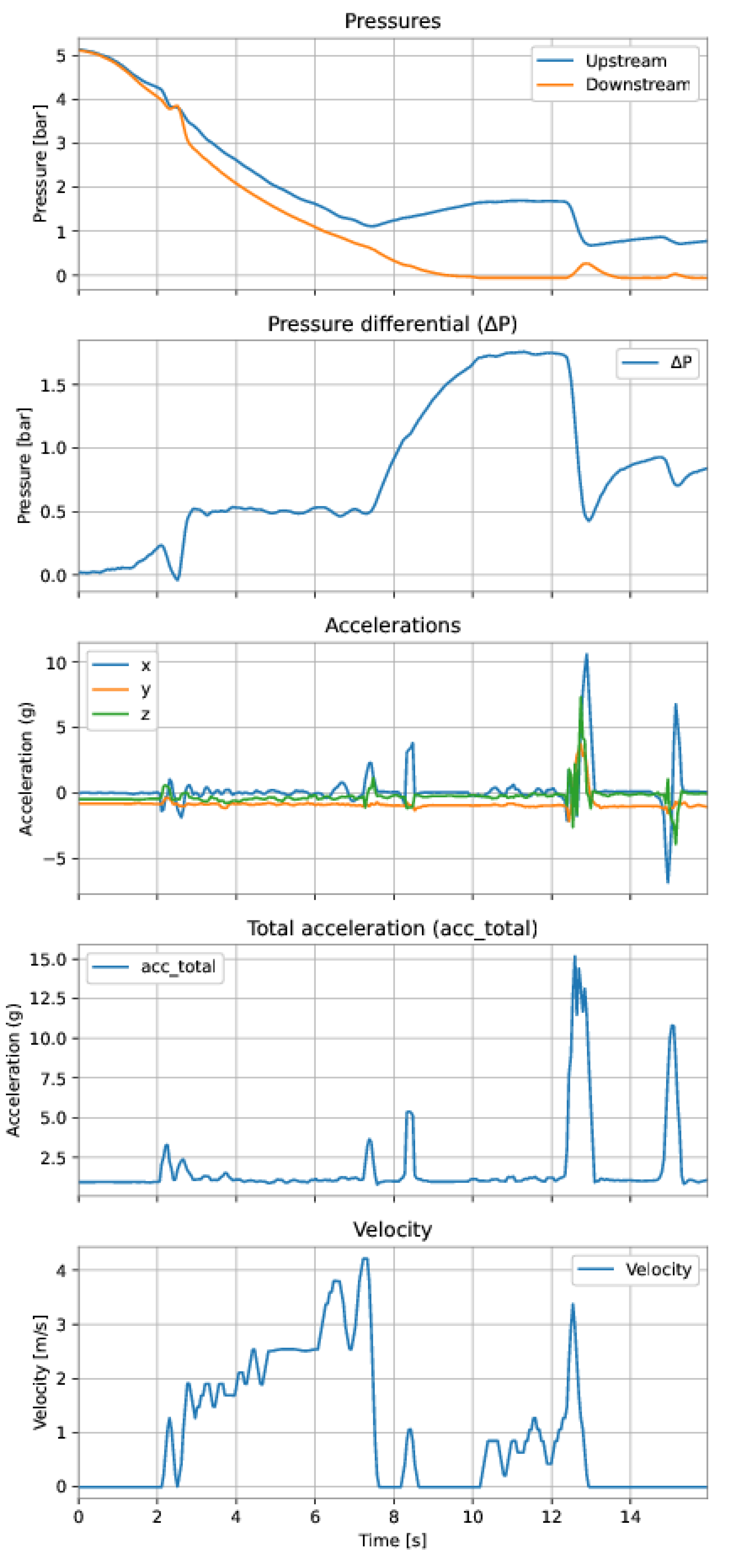

Figure 41.

Training dataset. After t = 14 s, the velocity measurement is probably inconsistent, since the differential pressure and the accelerations varied significantly while the velocity remained zero.

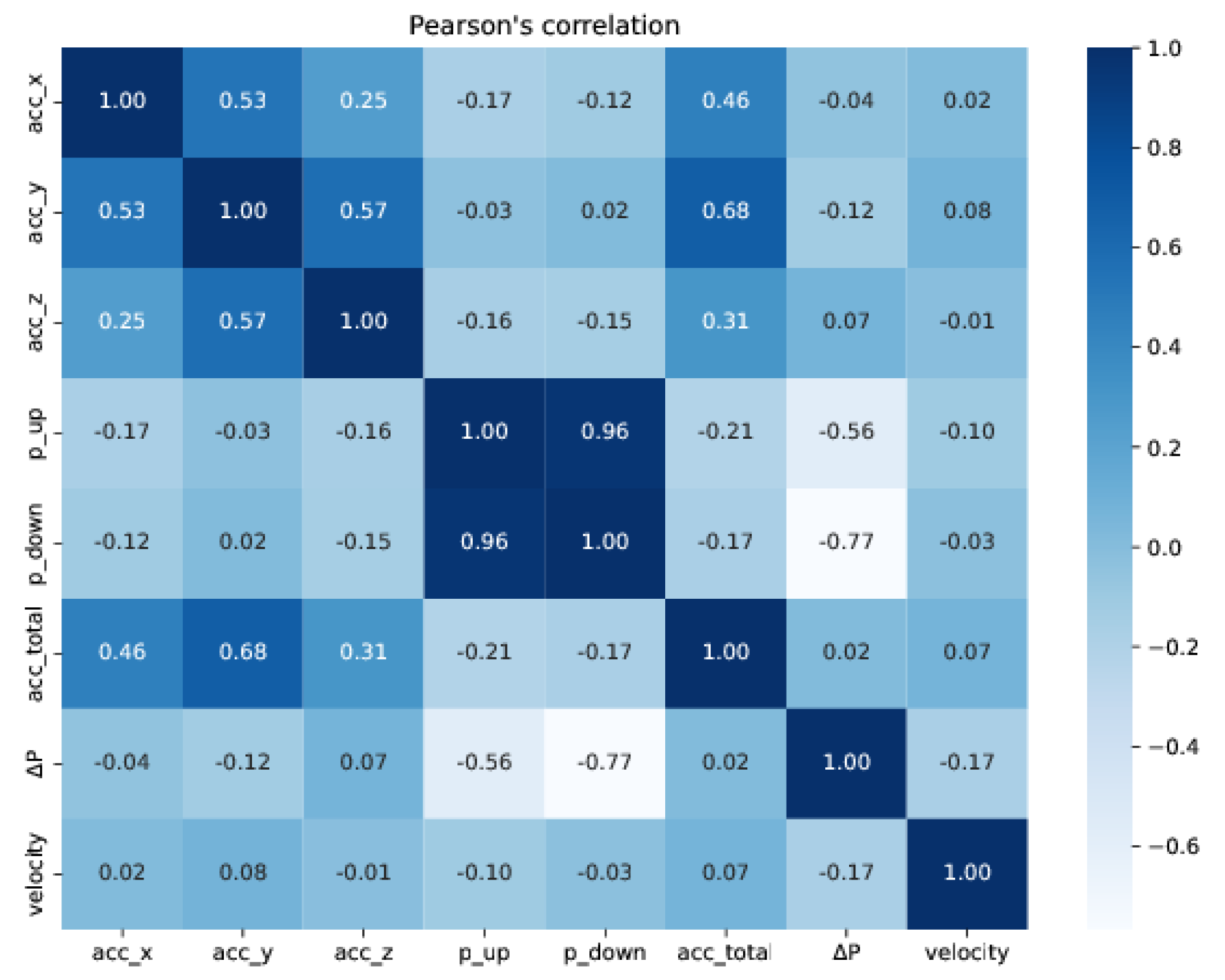

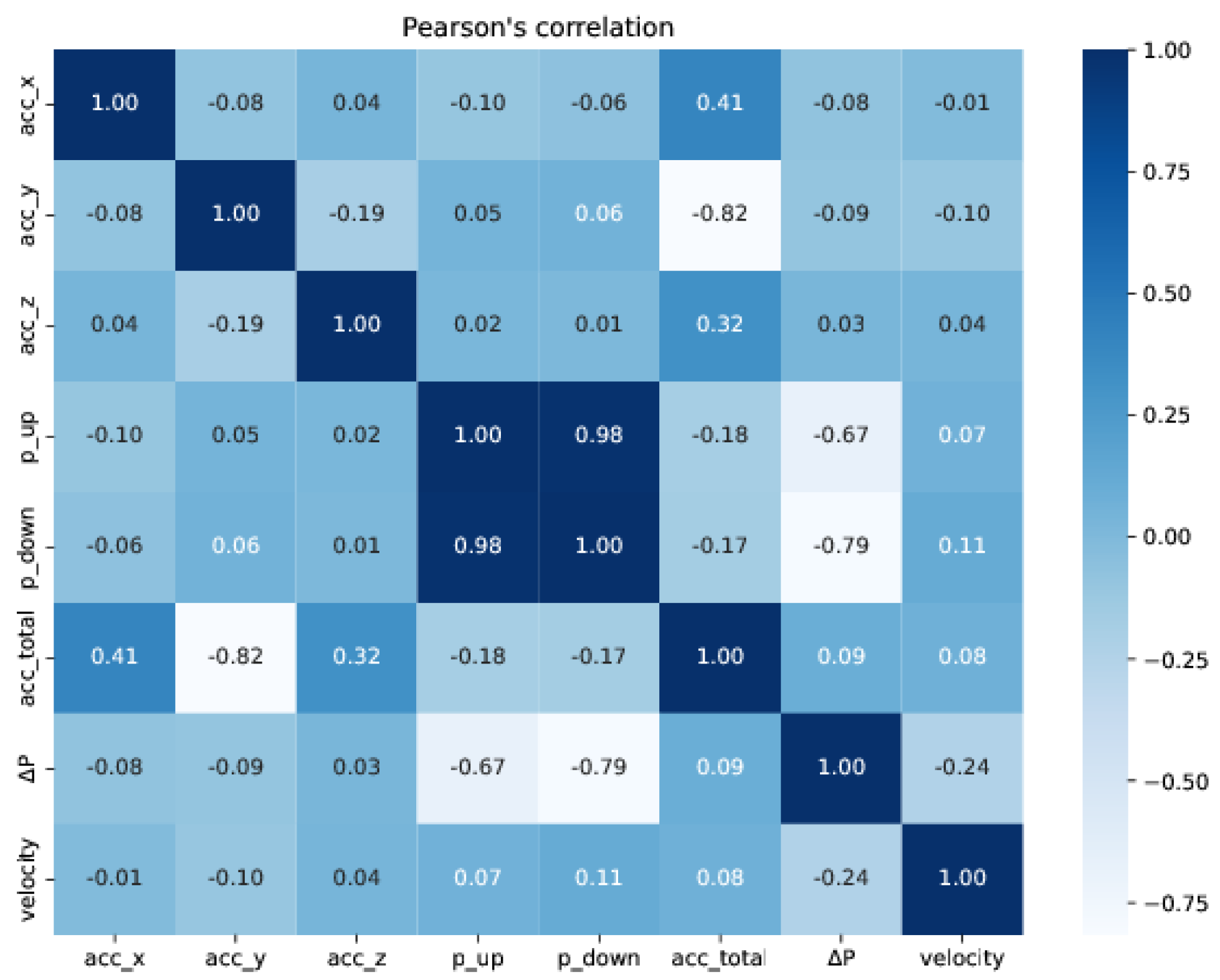

Figure 43.

Heat map representation of Pearson’s correlations for the training set.

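The heat maps in Figures 43 and 44 display pairwise Pearson correlation coefficients between the dataset's variables. A minimal pure-Python sketch of that computation follows; the column names used in the check are hypothetical, and plotting the matrix as a heat map is left to any plotting library.

```python
import math


def pearson(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("need two sequences of the same length (>= 2)")
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    if sx == 0 or sy == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return cov / (sx * sy)


def correlation_matrix(columns):
    """Pairwise Pearson matrix for a dict of named columns (what a heat map plots)."""
    names = list(columns)
    return {a: {b: pearson(columns[a], columns[b]) for b in names} for a in names}


m = correlation_matrix({"dp": [0.1, 0.2, 0.3, 0.4], "v": [0.5, 0.9, 1.4, 1.8]})
print(round(m["dp"]["v"], 3))
```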
Figure 44.

Heat map representation of Pearson’s correlations for the test set.

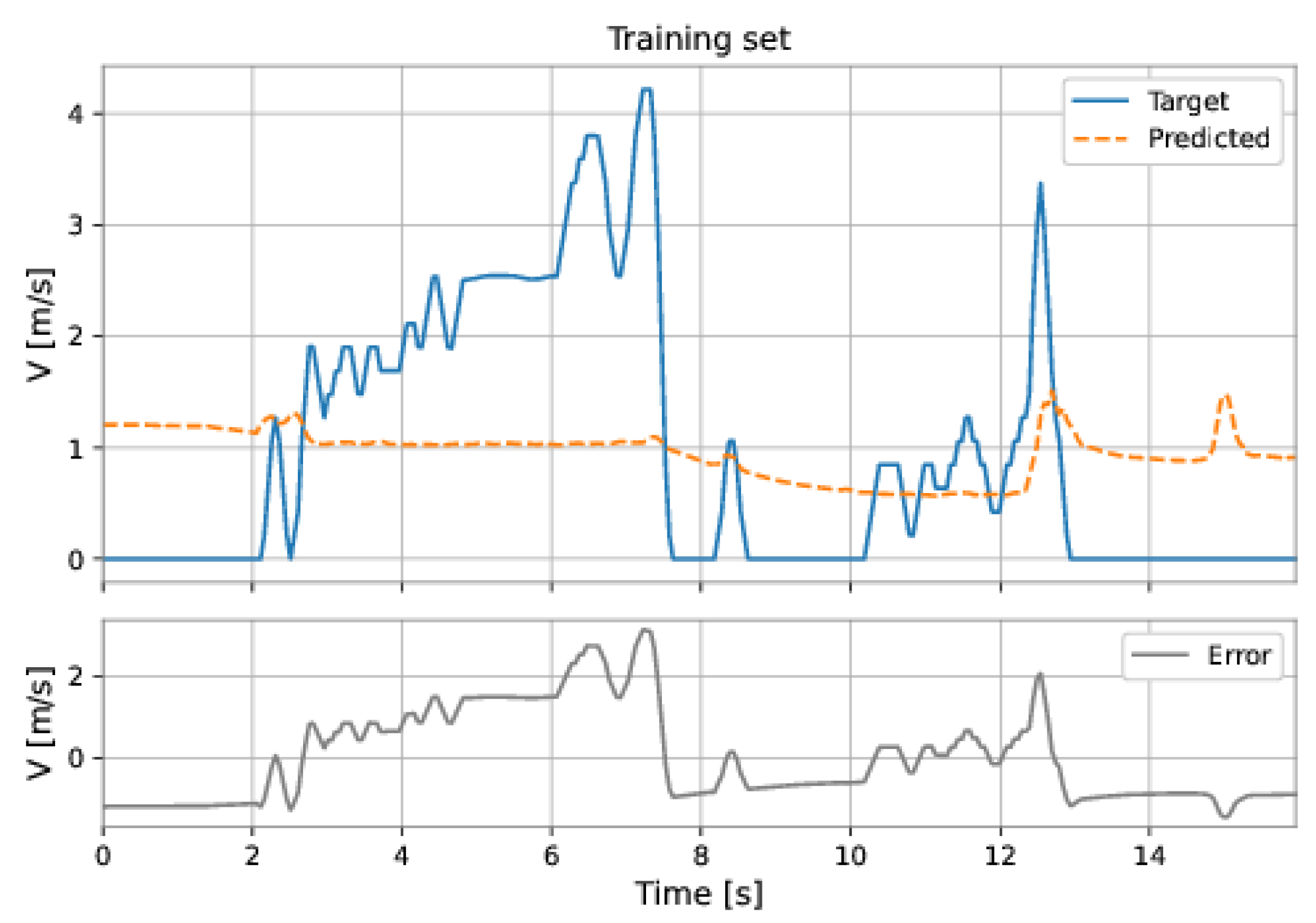

Figure 45.

Linear regression predictions on the training set. The orange dashed line is the velocity predicted by the model, the blue solid line is the target velocity, and the gray line is the absolute error, defined as the target velocity minus the predicted velocity.

Figure 46.

Linear regression predictions on the test set. The orange dashed line is the velocity predicted by the model, the blue solid line is the target velocity, and the gray line is the absolute error, defined as the target velocity minus the predicted velocity.

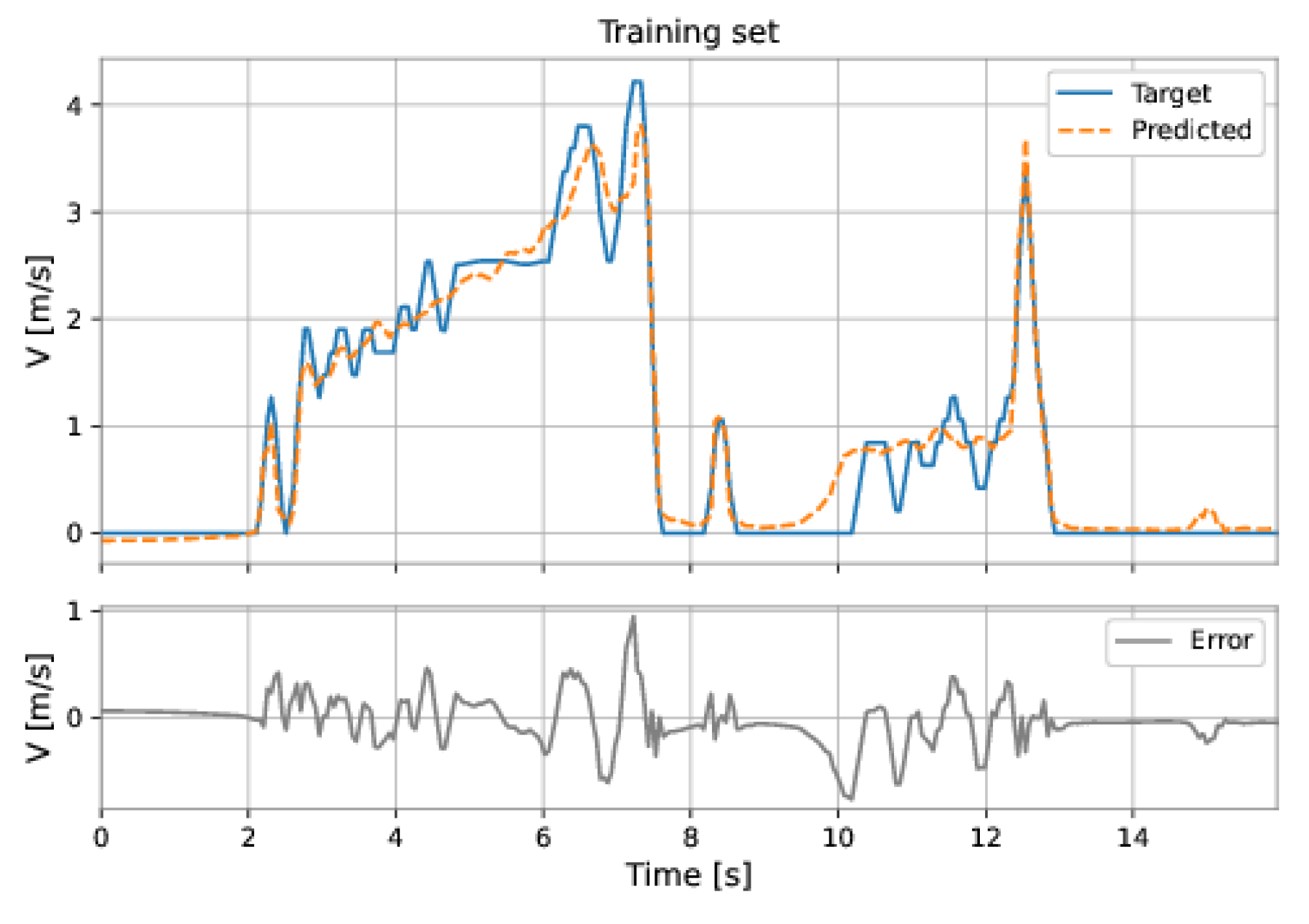

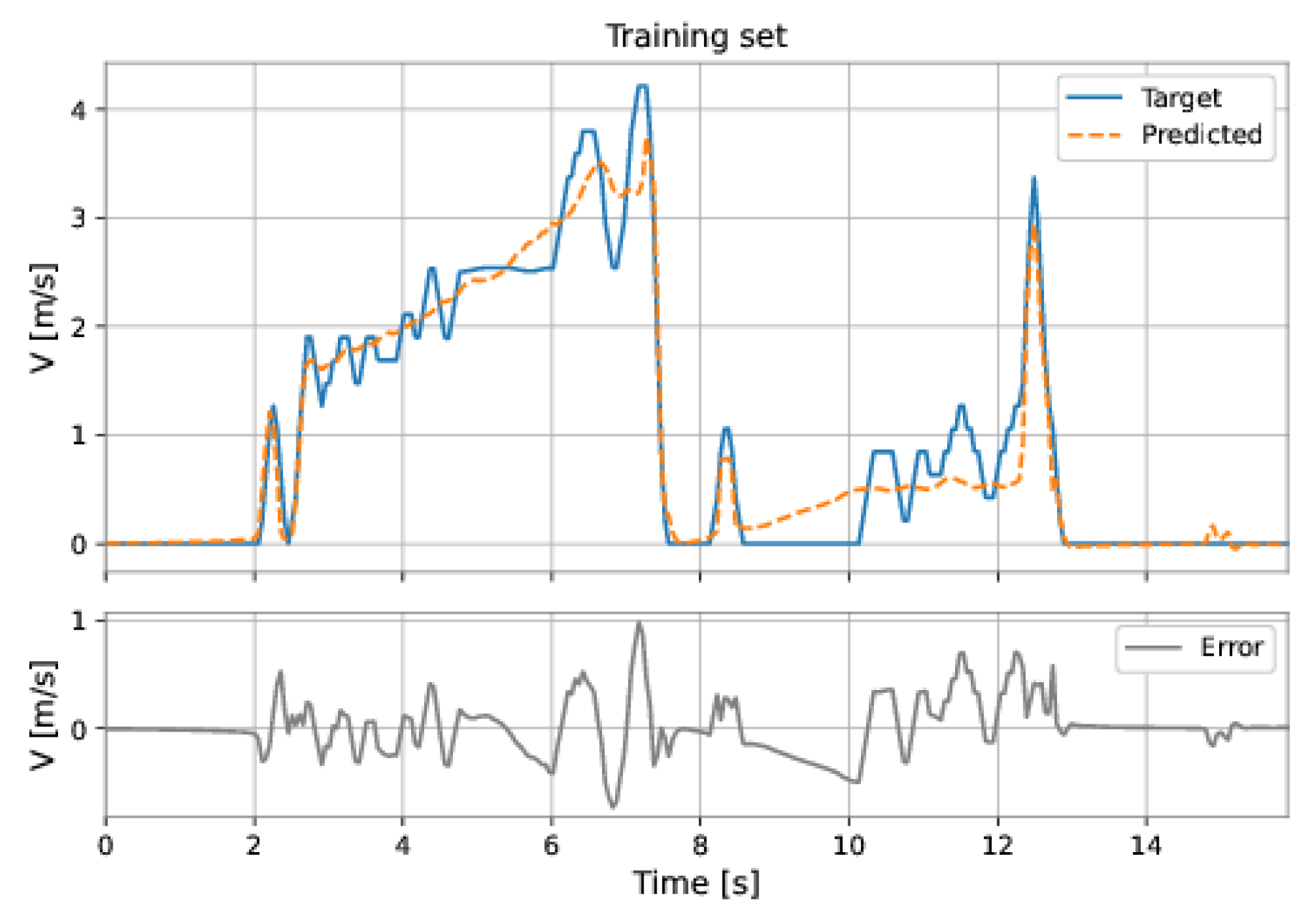

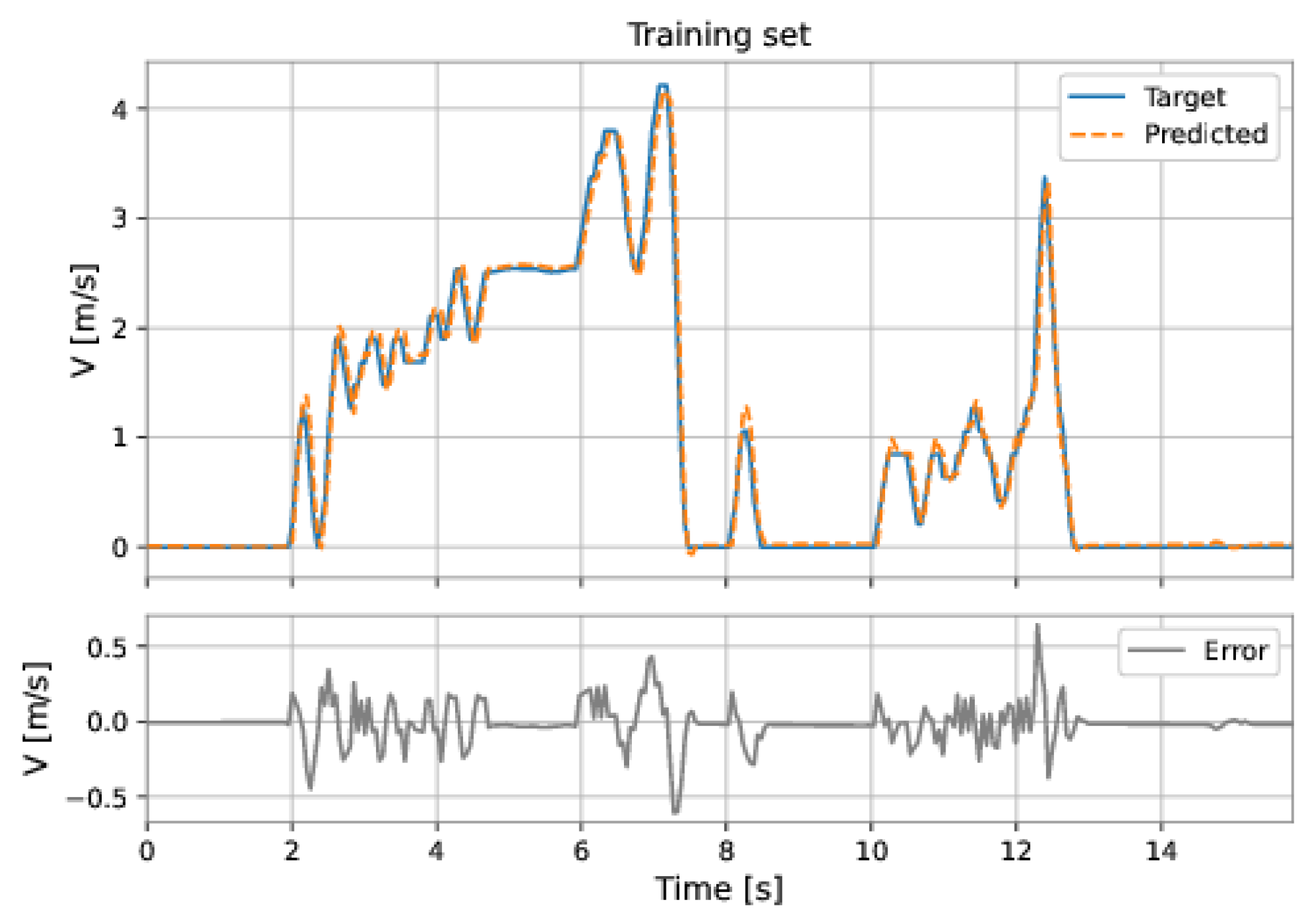

Figure 47.

MLP’s predictions on the training set.

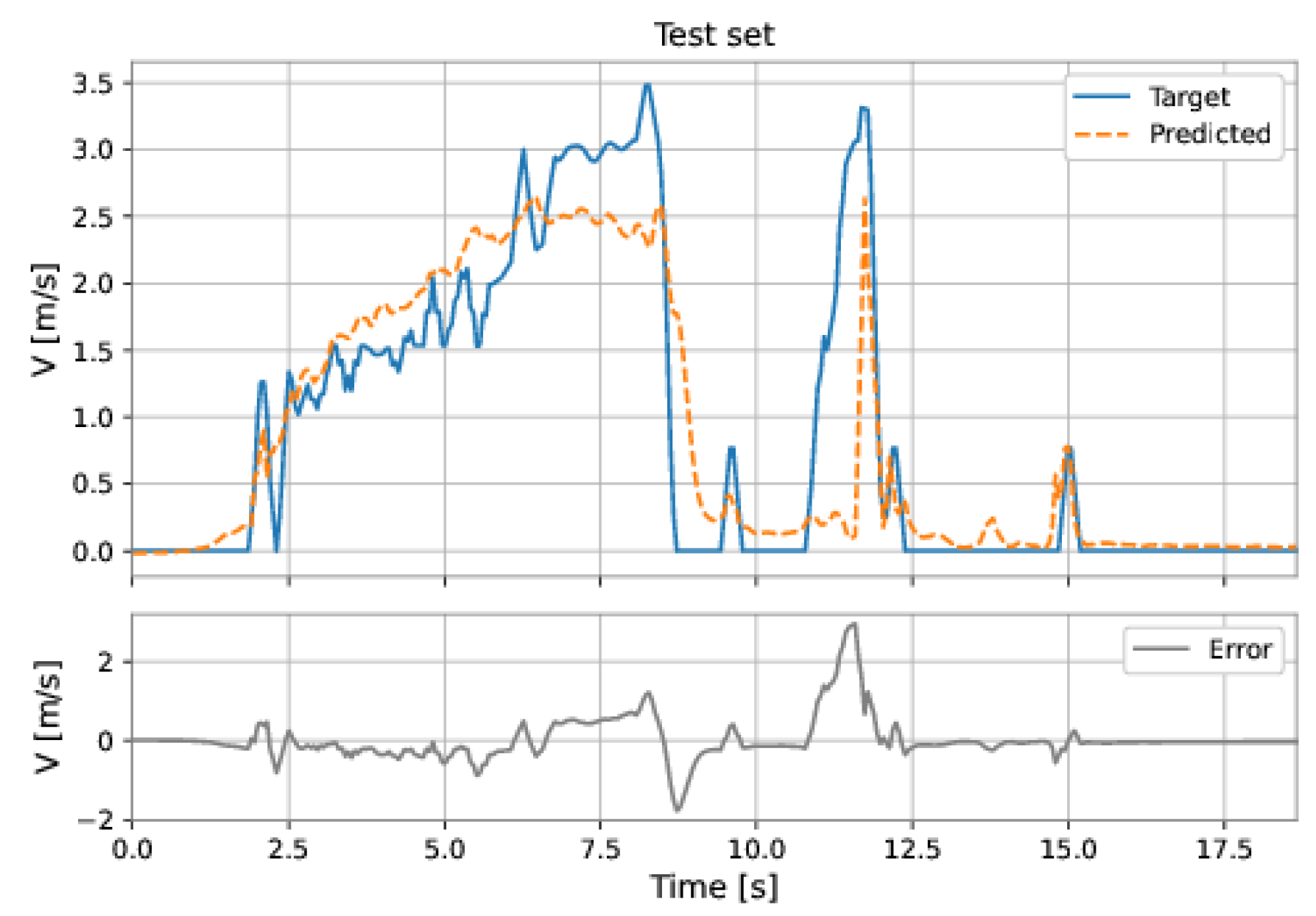

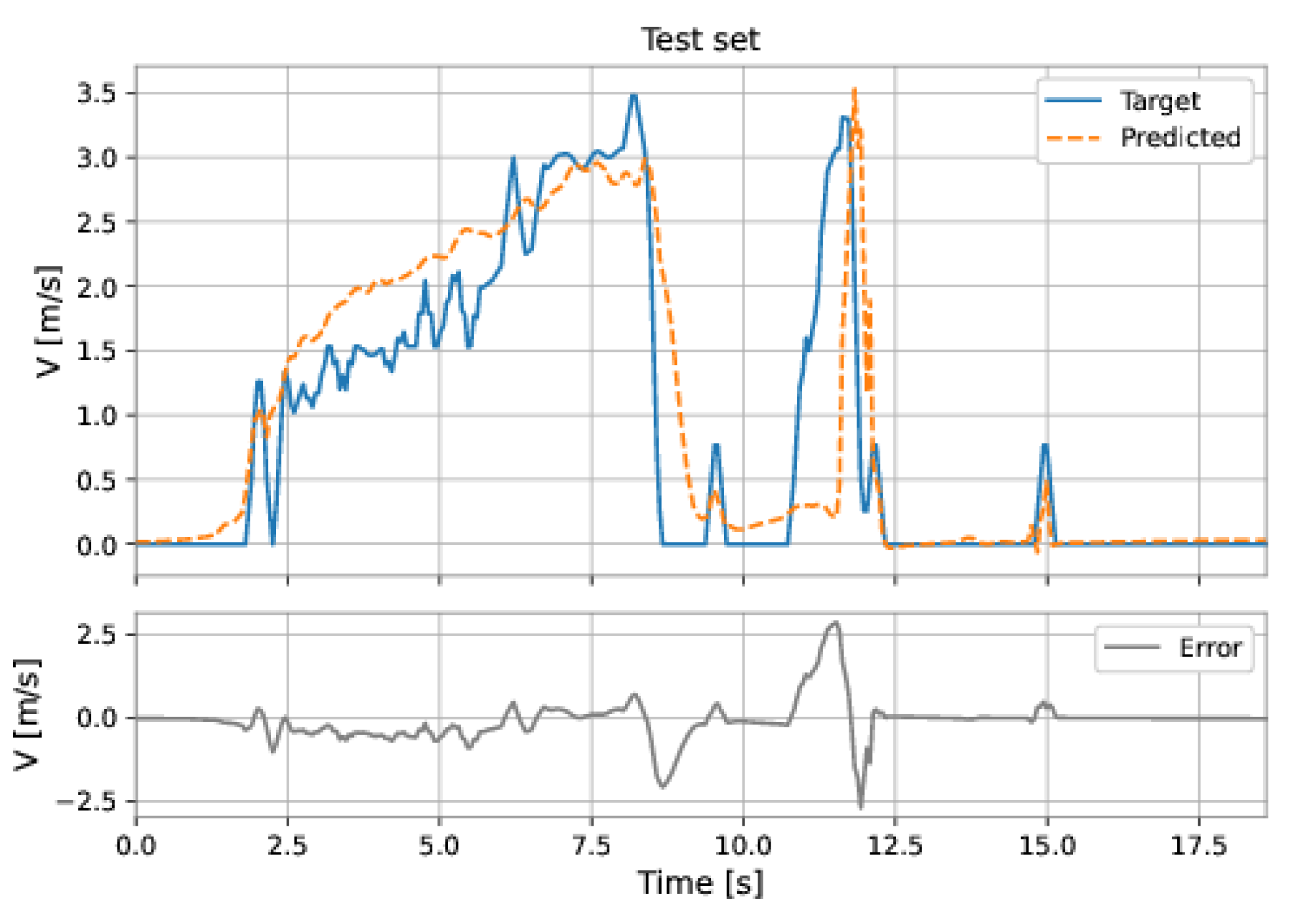

Figure 48.

MLP’s predictions on the test set.

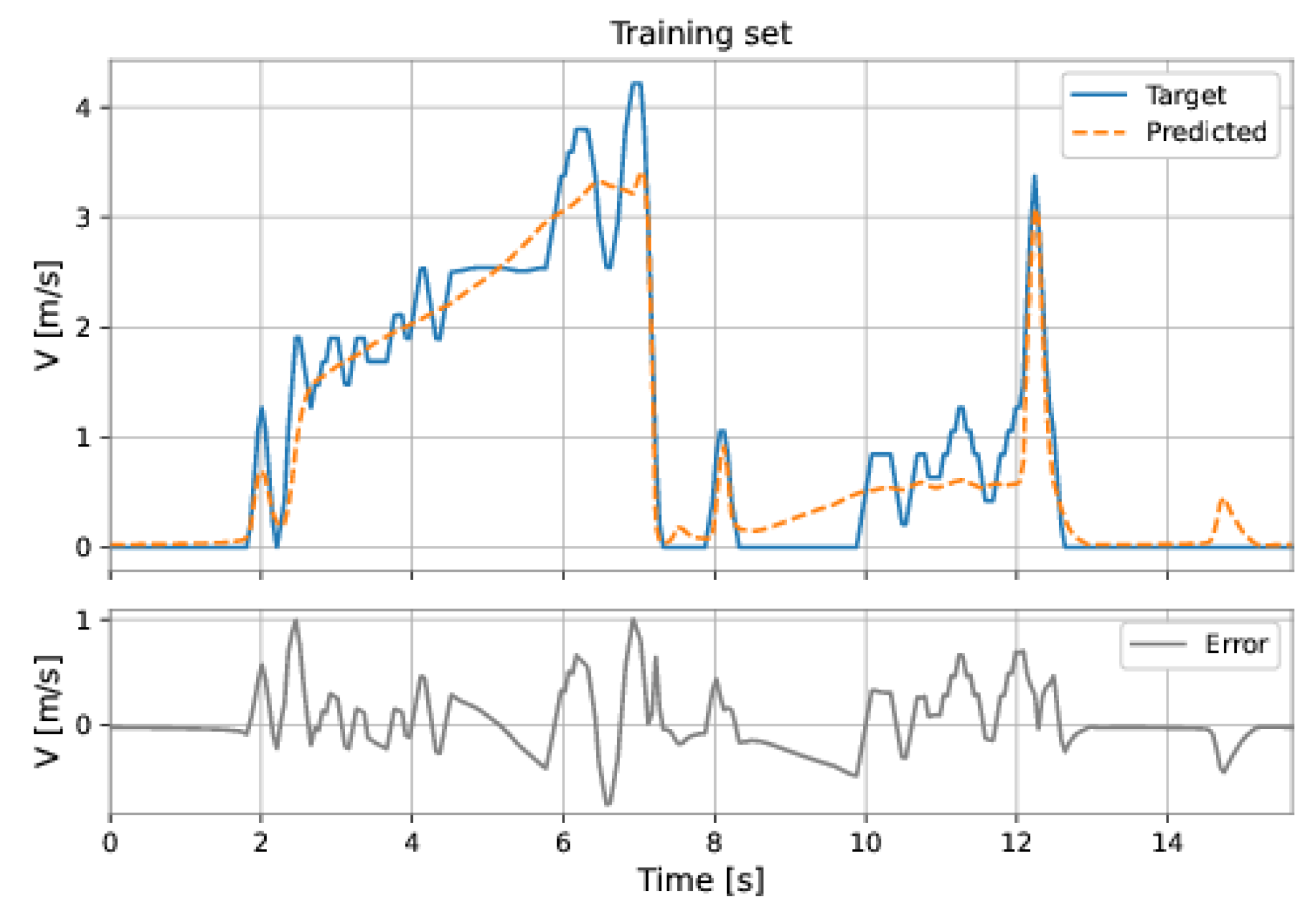

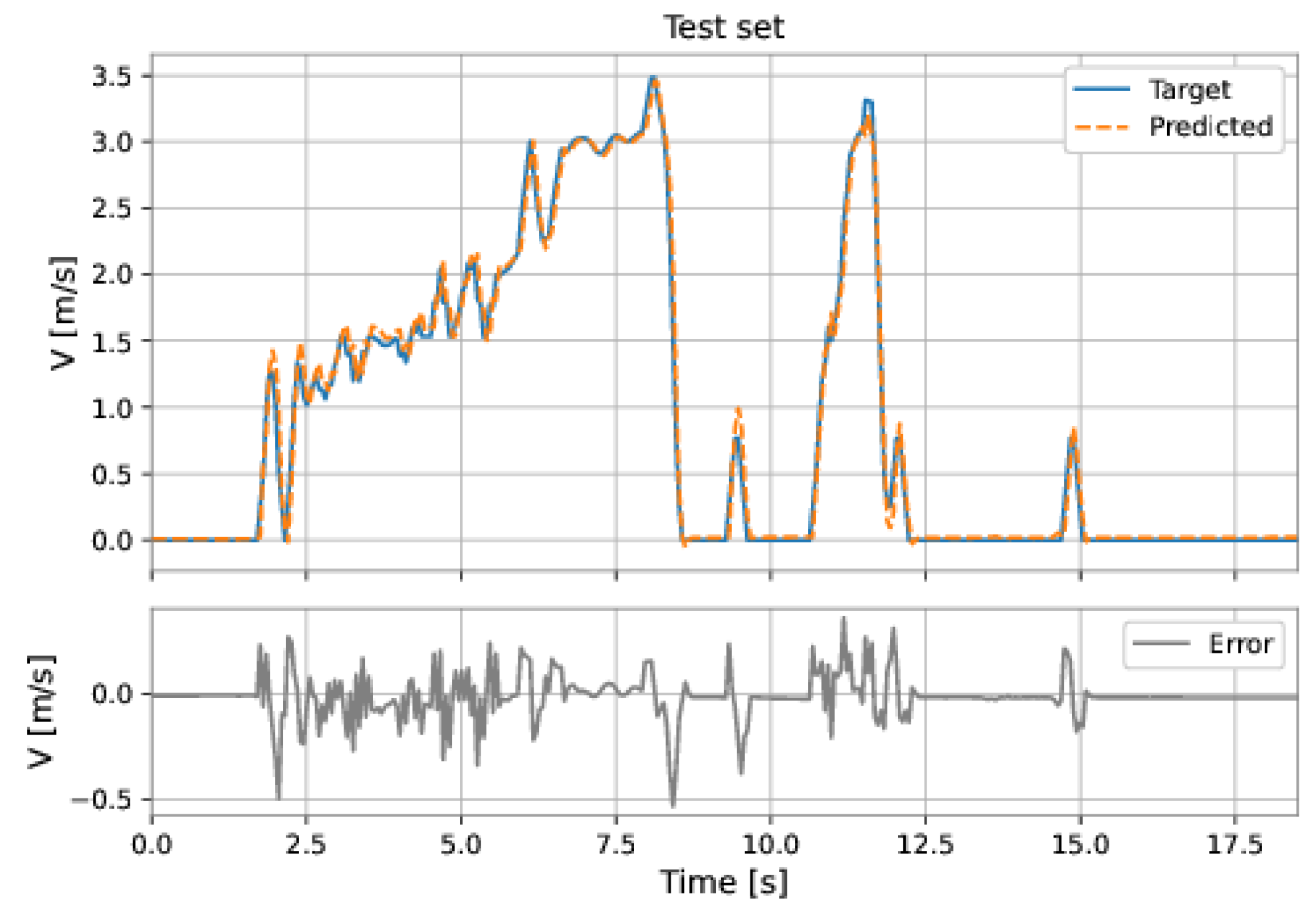

Figure 49.

MLP-TDNN’s predictions on the training set.

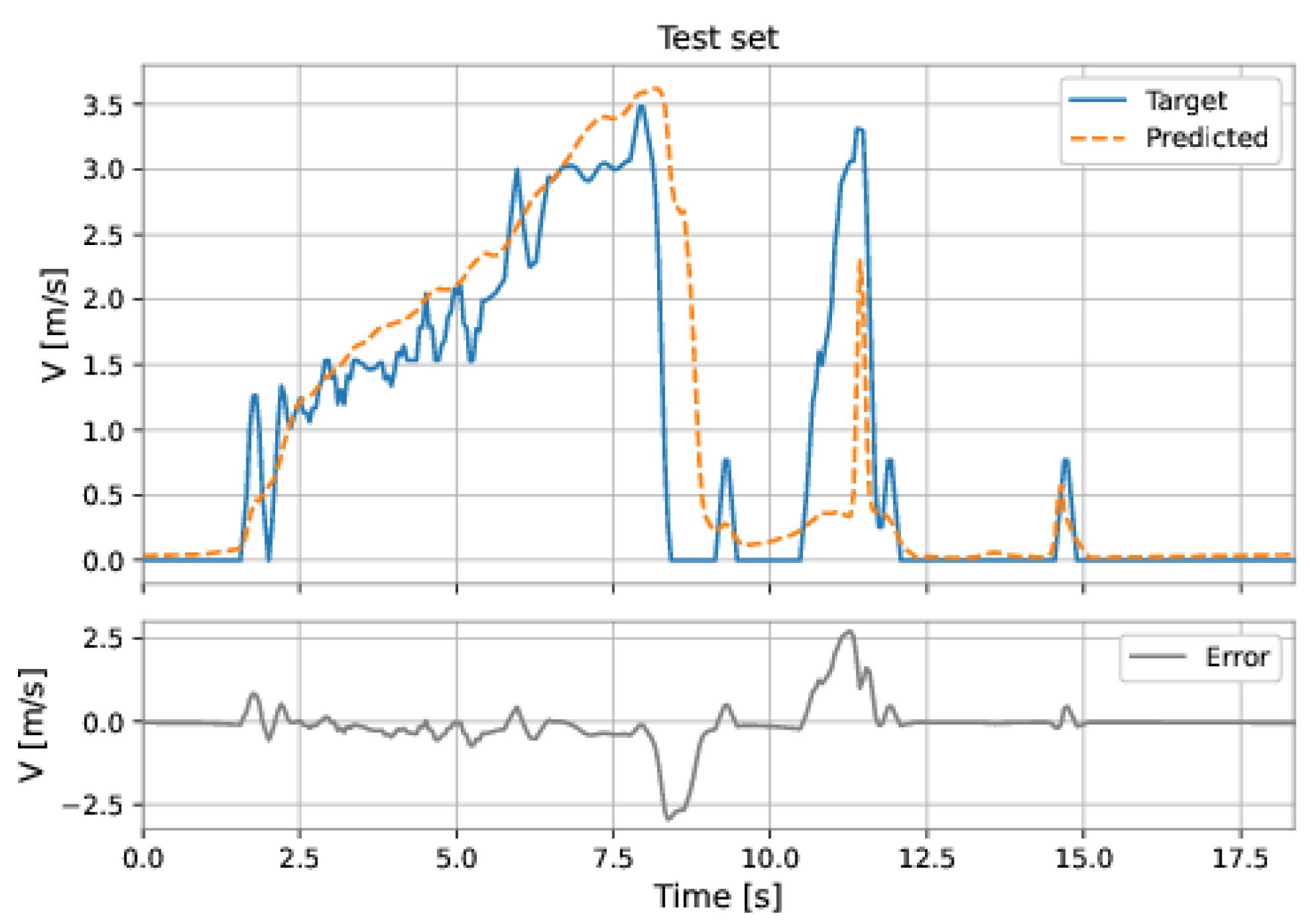

Figure 50.

MLP-TDNN’s predictions on the test set.

Figure 51.

MLP’s predictions on the training set.

Figure 52.

MLP’s predictions on the test set.

Figure 53.

MLP’s predictions on the training set.

Figure 54.

MLP’s predictions on the test set.

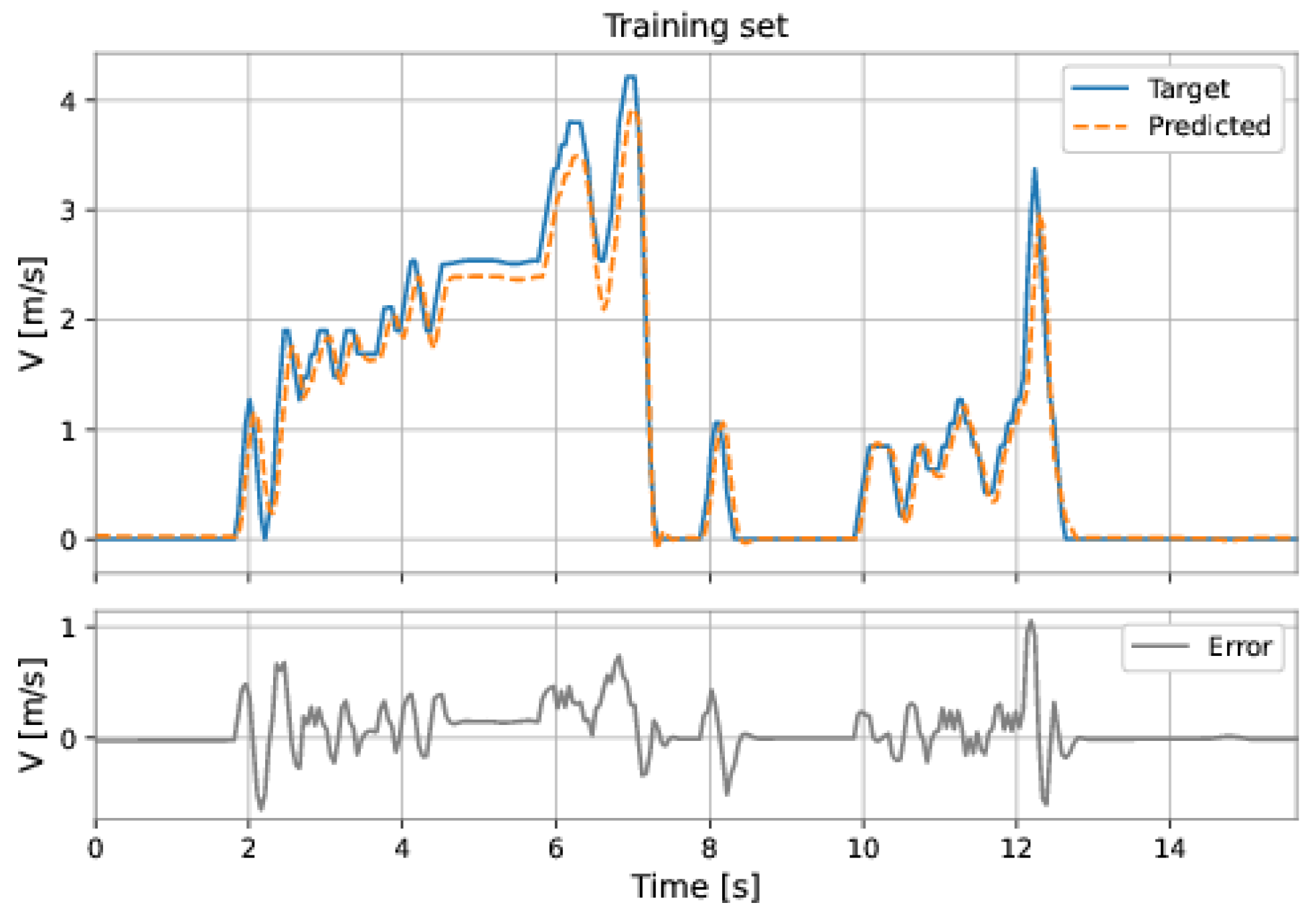

Figure 55.

LSTM-NARX results (series-parallel). Training set.

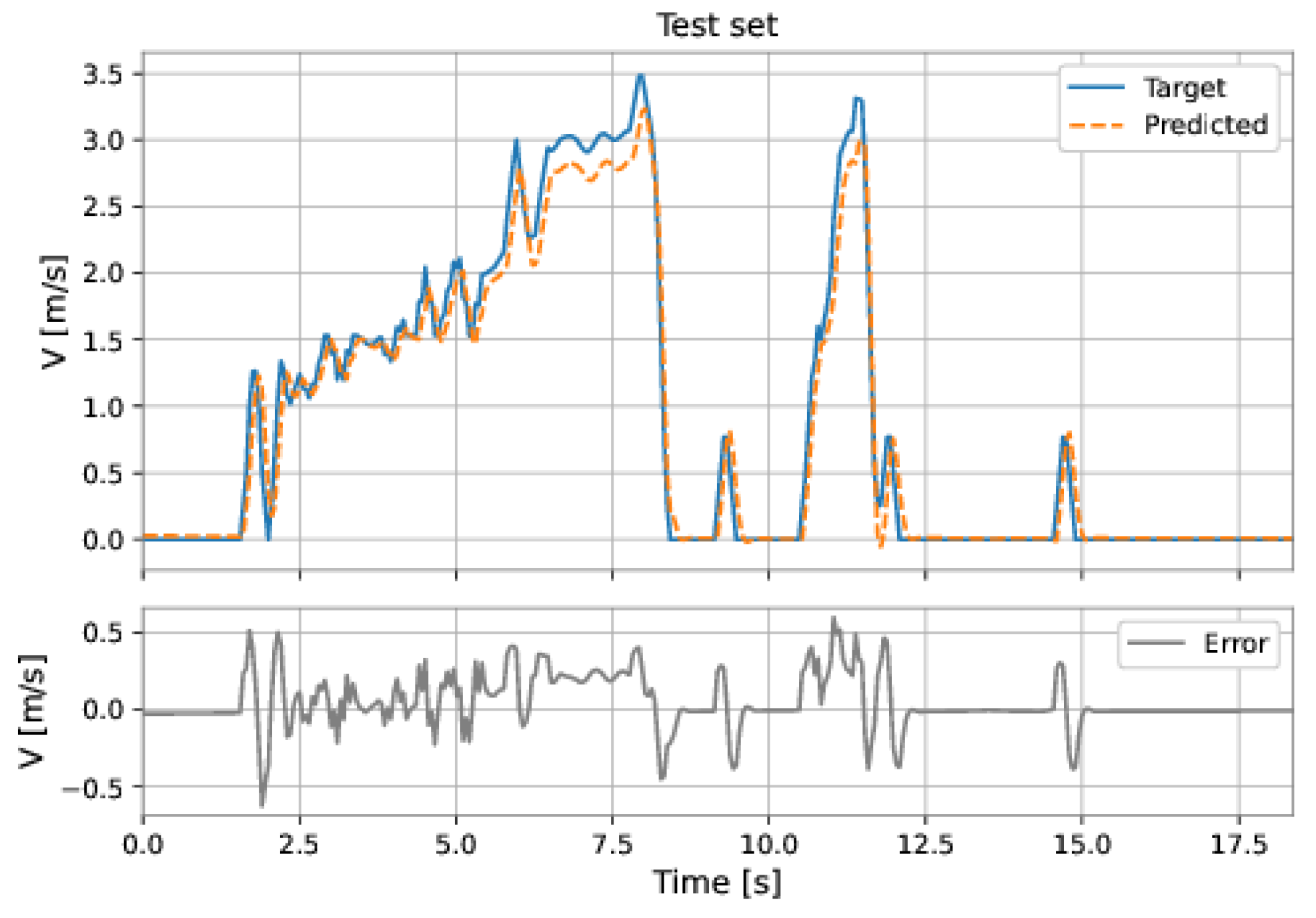

Figure 56.

LSTM-NARX results (series-parallel). Test set.

Table 1.

Main features of the Raspberry Pi used in this investigation (Raspberry Pi 3 Model B+).

| Parameter | Value |
|---|---|
| Operating system | Raspbian GNU/Linux 10 (buster) |
| Processor | Cortex-A53 (ARMv8), 64-bit, quad-core |
| Clock | 1.4 GHz |
| RAM | 1 GB |
| I/O interface | 40 GPIO pins |
| Communication | Bluetooth 4.2, IEEE 802.11 5 GHz, Gigabit Ethernet |
| Dimensions | 85 × 56 × 17 mm |

Table 2.

Features of the pressure sensors.

| Features | Description |
|---|---|
| Working principle | Hall effect |
| Pressure range | 0–5 bar |
| Output voltage | 0.5–4.5 VDC |
| Supply voltage | 5 VDC |
| Response time | 2.0 ms |
| Measurement accuracy | ±1.5% FS (75 mbar) |

Table 3.

Pressures and corresponding voltages for the pressure sensors.

| Pressure (bar) | Upstream Sensor (V) | Downstream Sensor (V) |
|---|---|---|
| 0.0 | 0.504 | 0.511 |
| 1.0 | 0.996 | 1.00 |
| 2.0 | 1.803 | 1.805 |
| 3.0 | 2.711 | 2.719 |
| 4.0 | 3.497 | 3.503 |
| 5.0 | 4.424 | 4.427 |

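Figures 26 and 27 show curve fits to these calibration points. As a sketch, assuming a first-order (linear) model fitted by ordinary least squares (the model order used in the study is not stated here), the upstream data in Table 3 can be fitted and then inverted to read pressure from a measured voltage. The fitted intercept comes out near 0.32 V rather than the nominal 0.5 V at 0 bar, because the points bend at low pressure; a higher-order fit may track that region better.

```python
def fit_line(x, y):
    """Ordinary least-squares fit y = slope * x + intercept."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((a - mx) ** 2 for a in x)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    slope = sxy / sxx
    return slope, my - slope * mx


# Upstream-sensor calibration points from Table 3.
pressure_bar = [0.0, 1.0, 2.0, 3.0, 4.0, 5.0]
upstream_v = [0.504, 0.996, 1.803, 2.711, 3.497, 4.424]

slope, intercept = fit_line(pressure_bar, upstream_v)


def voltage_to_pressure(v, slope=slope, intercept=intercept):
    """Invert the fitted line to read pressure (bar) from a sensor voltage (V)."""
    return (v - intercept) / slope


print(f"V = {slope:.4f} * P + {intercept:.4f}")
print(f"{voltage_to_pressure(2.711):.3f} bar")
```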
Table 4.

Main features of the embedded system’s power bank.

| Feature | Description |
|---|---|
| Battery type | Lithium Polymer (LiPo) |
| Capacity | 5000 mAh |
| Output voltage | 5 VDC |
| Output current | 2 A |

Table 5.

Performance of the linear regression models. Each model used a different combination of features; “All” means that the model used all the features in the datasets.

| Model | Features | Training | Test |
|---|---|---|---|
| 1 | All | 1.1118 | 1.2765 |
| 2 | , acc_total | 1.1498 | 1.0874 |
| 3 | , acc_total | 1.1504 | 1.089 |
| 4 | , , , acc_total | 1.1186 | 1.1186 |

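Table 6 compares the models by root mean square error, RMSE = sqrt((1/N) Σ (v_k − v̂_k)²), where v_k is the target velocity and v̂_k the predicted one. A minimal sketch follows; the velocity values are hypothetical, and the per-sample error uses the same sign convention as Figures 45 and 46 (target minus predicted), which does not affect the RMSE because the errors are squared.

```python
import math


def rmse(target, predicted):
    """Root mean square error between two equal-length sequences."""
    if len(target) != len(predicted) or not target:
        raise ValueError("sequences must be non-empty and of equal length")
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(target, predicted)) / len(target))


target_v = [1.0, 1.5, 2.0, 2.5]      # hypothetical target velocities
predicted_v = [1.1, 1.4, 2.2, 2.3]   # hypothetical model predictions
errors = [t - p for t, p in zip(target_v, predicted_v)]  # target minus predicted
print(f"RMSE = {rmse(target_v, predicted_v):.4f}")
```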
Table 6.

The root mean square error (RMSE) on the training and test sets obtained by each model.

| Model | Training | Test |
|---|---|---|
| MLP | 0.2217 | 0.5457 |
| MLP-TDNN | 0.2548 | 0.6091 |
| LSTM-TDNN | 0.2875 | 0.6591 |
| MLP-NARX | 0.1314 | 0.1057 |
| LSTM-NARX | 0.2248 | 0.1780 |