RGBD Salient Object Detection, Based on Specific Object Imaging

Abstract

1. Introduction

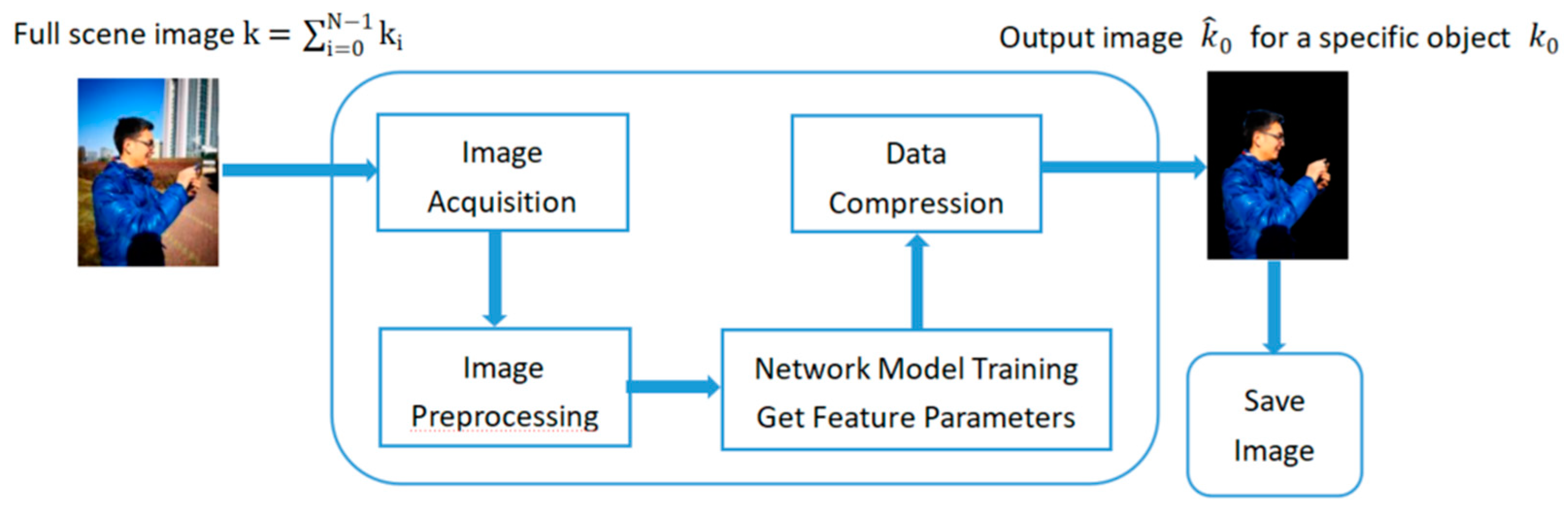

- This paper proposes a salient object detection method based on specific object imaging, which both detects and images salient objects.

- The experiments are carried out on the benchmark SIP dataset. The proposed method can also complete target detection and imaging when only a small sample dataset is used, which greatly reduces the amount of data required while giving better results.

2. Related Work

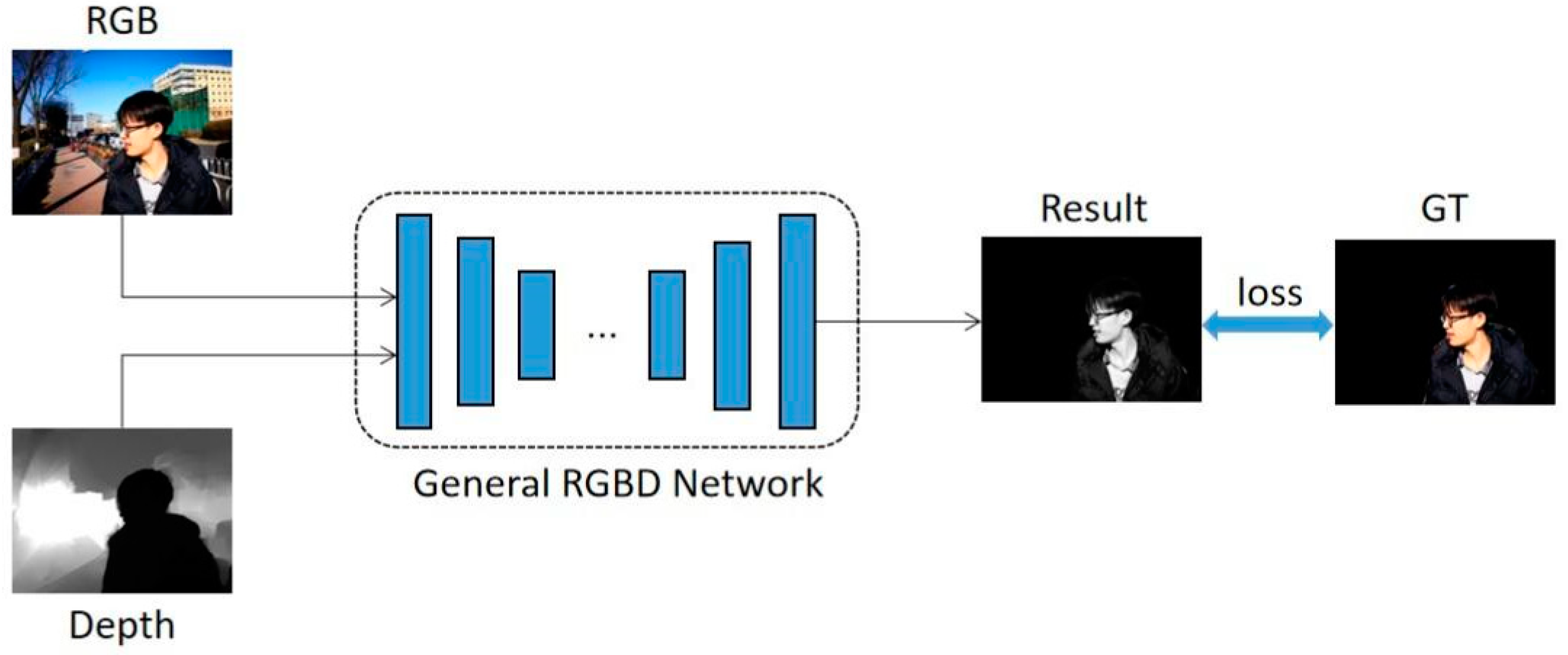

3. RGBD Salient Object Detection, Based on Specific Object Imaging

4. Experiments

4.1. Datasets

4.2. Experimental Setup

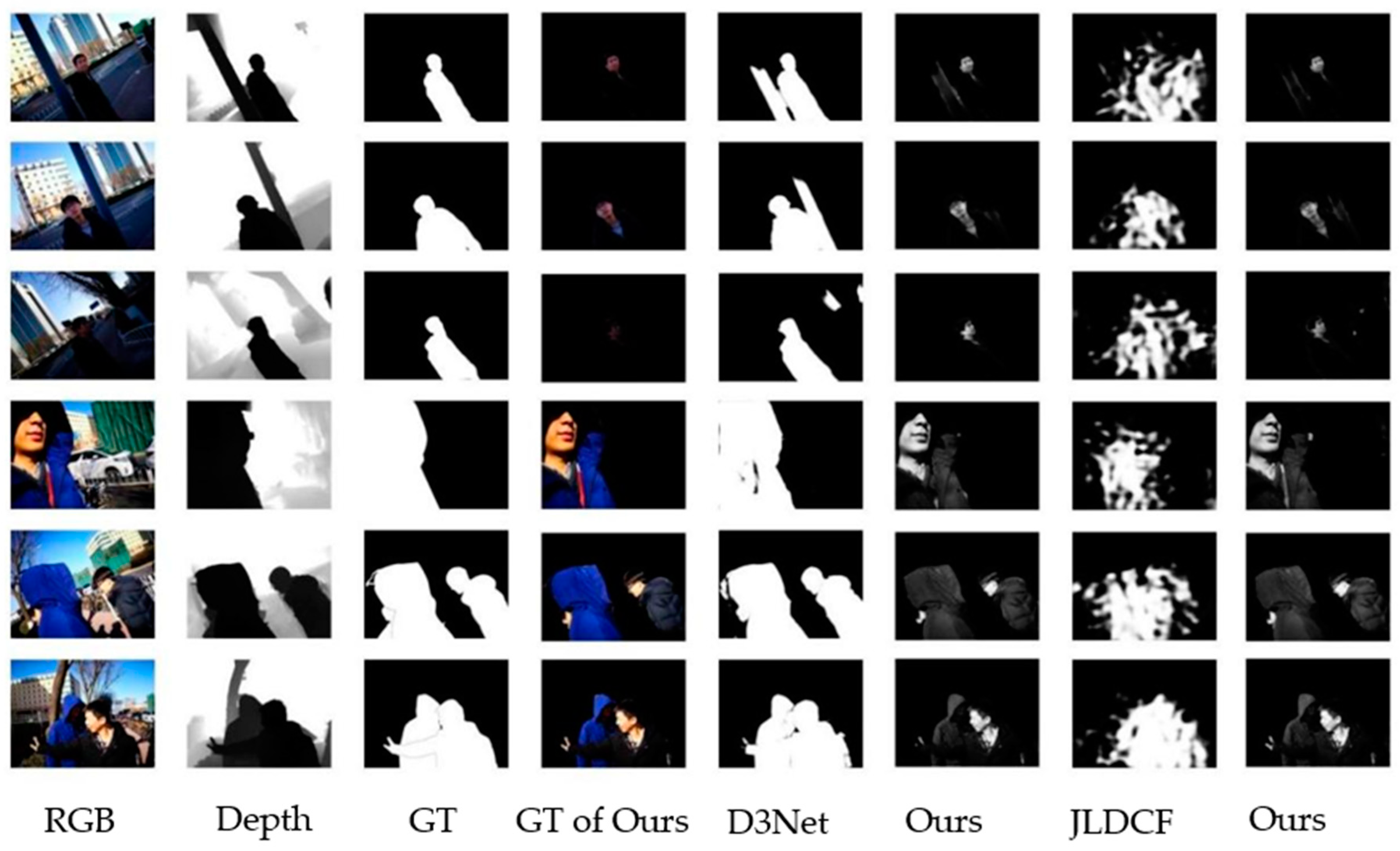

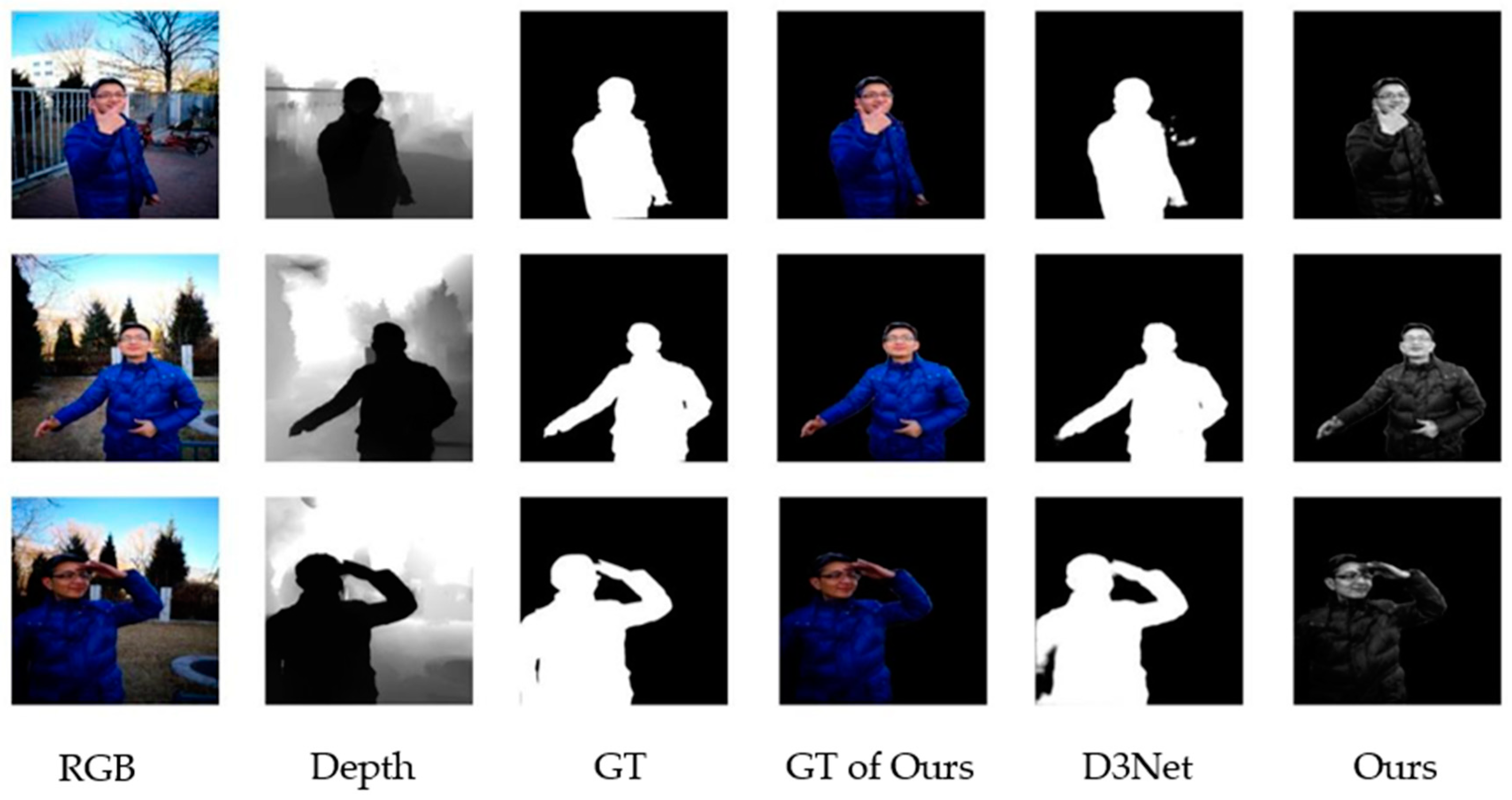

4.3. Result

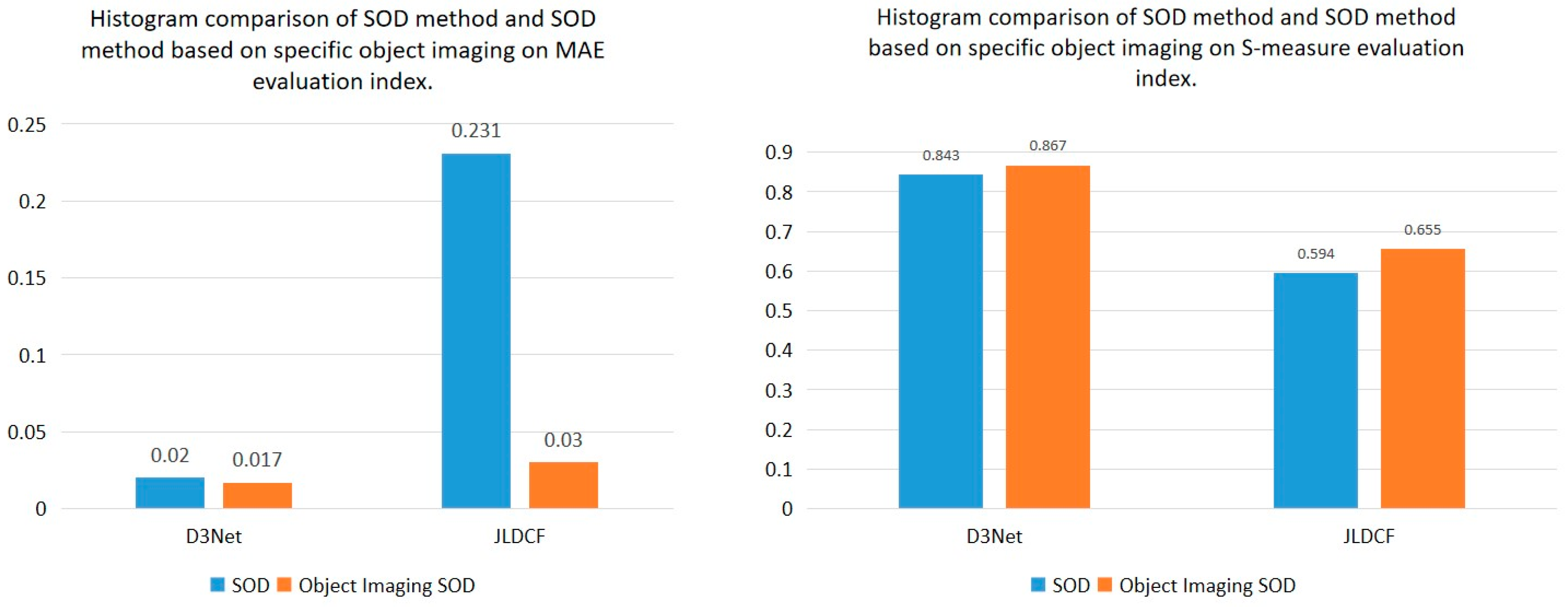

4.4. Comparison

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Liao, X.; Li, J.; Li, L.; Shangguan, C.; Huang, S. RGBD Salient Object Detection, Based on Specific Object Imaging. Sensors 2022, 22, 8973. https://doi.org/10.3390/s22228973