A New SCAE-MT Classification Model for Hyperspectral Remote Sensing Images

Abstract

1. Introduction

- A new stacked convolutional autoencoder network with model transfer (SCAE-MT) is developed;

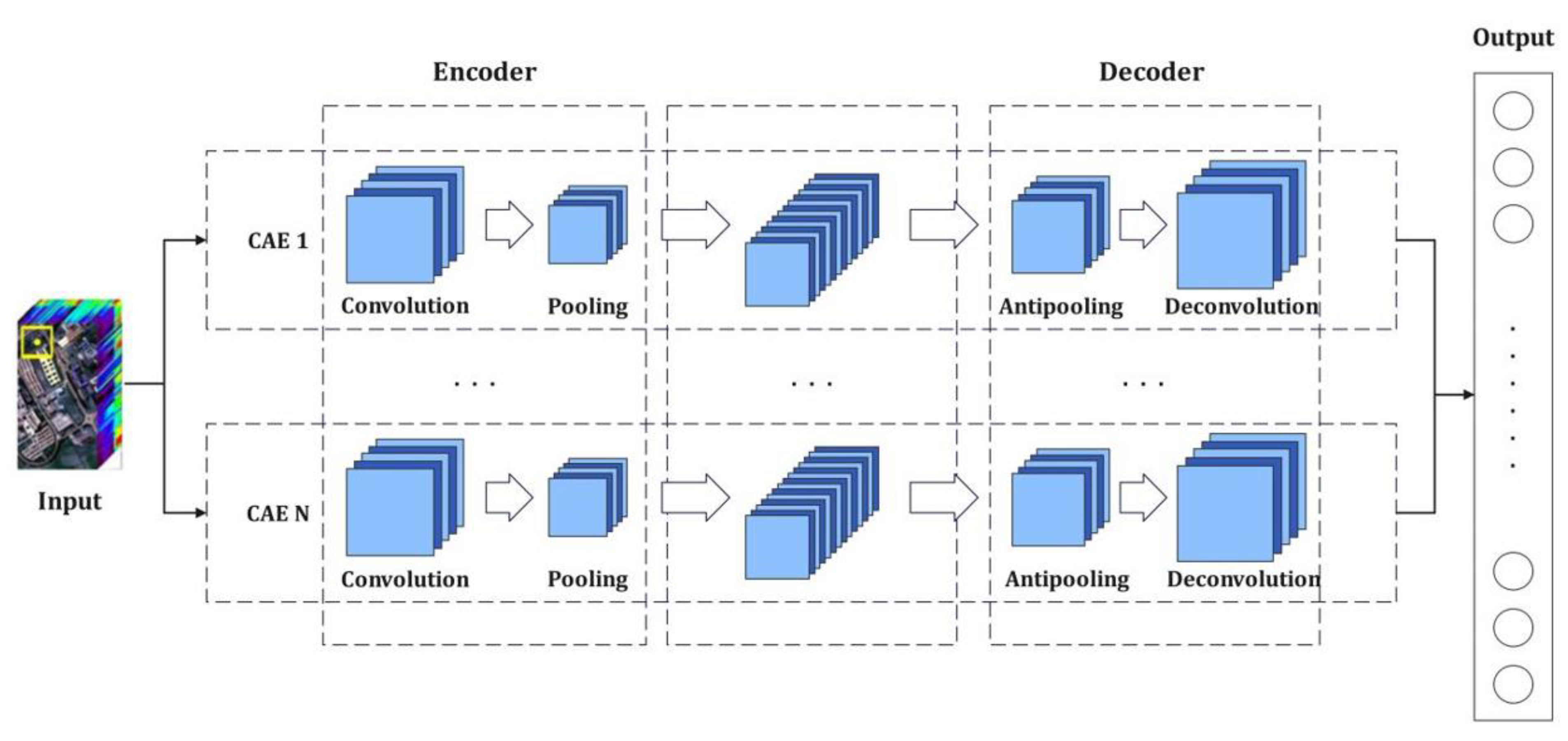

- The stacked convolutional autoencoder (SCAE) network is used to extract the deep features of hyperspectral remote sensing images (HRSI);

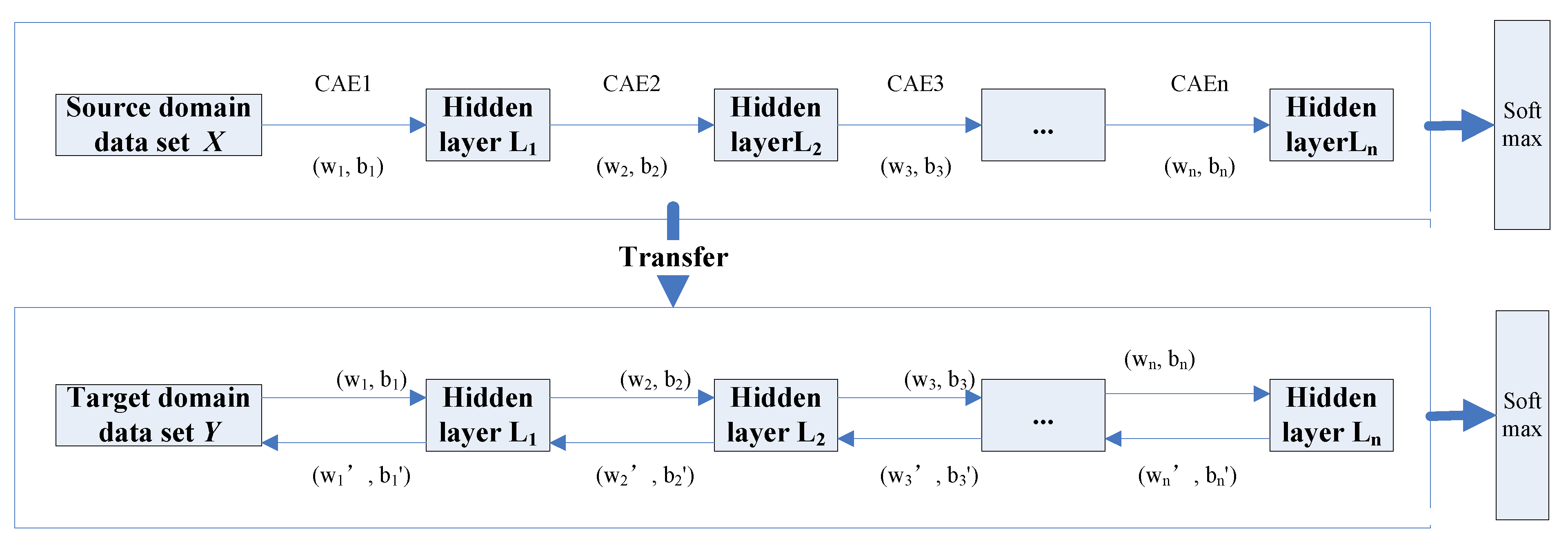

- A transfer learning strategy is employed to transfer the SCAE network model when only a small, limited set of training samples is available;

- A new HRSI classification method based on SCAE-MT is proposed to address the small-sample problem in HRSI classification.
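The contributions above combine a stacked convolutional encoder with model transfer. As a rough illustration only, the following Python sketch shows the general pattern of reusing a pretrained encoder: its weights are copied into a new model and the early layers are marked as frozen, so that only the remaining parameters would be updated on the small target sample set. The class `StackedEncoder`, the function `transfer_encoder`, the 1-D convolution over a single pixel spectrum, the kernel sizes, and the random stand-in weights are all assumptions made for this sketch; they are not the architecture or training procedure of SCAE-MT.

```python
"""Toy sketch of model transfer for a stacked convolutional encoder."""
import copy
import random


def conv1d_relu(signal, kernel):
    """Valid 1-D cross-correlation followed by a ReLU activation."""
    k = len(kernel)
    return [
        max(0.0, sum(signal[i + j] * kernel[j] for j in range(k)))
        for i in range(len(signal) - k + 1)
    ]


class StackedEncoder:
    """Hypothetical stack of 1-D convolution layers; each layer feeds the next."""

    def __init__(self, kernel_sizes, seed=0):
        rng = random.Random(seed)
        # Random weights stand in for weights learned by pretraining.
        self.kernels = [[rng.uniform(-1, 1) for _ in range(k)] for k in kernel_sizes]
        self.trainable = [True] * len(kernel_sizes)

    def encode(self, spectrum):
        features = spectrum
        for kernel in self.kernels:
            features = conv1d_relu(features, kernel)
        return features


def transfer_encoder(source, n_frozen):
    """Copy a pretrained encoder and freeze its first n_frozen layers."""
    target = copy.deepcopy(source)
    target.trainable = [i >= n_frozen for i in range(len(target.kernels))]
    return target


# Assumed source model; in practice it would be trained on a larger dataset.
source_encoder = StackedEncoder(kernel_sizes=[5, 3], seed=42)
target_encoder = transfer_encoder(source_encoder, n_frozen=1)

pixel_spectrum = [0.1 * b for b in range(12)]  # made-up 12-band spectrum
features = target_encoder.encode(pixel_spectrum)
print(len(features), target_encoder.trainable)  # 6 [False, True]
```

Before any fine-tuning, the transferred encoder produces exactly the same features as the source encoder; a training loop, which is omitted here, would update only the layers whose `trainable` flag is `True`.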

2. Image Classification with SCAE-MT

2.1. The Idea of Image Classification

2.2. Processes of Implementation

- Step 1: Deep feature extraction

- Step 2: Classification based on SCAE-MT

3. Experiment Results and Analysis

3.1. Experimental Datasets

3.2. Experiment Environment and Parameter Settings

3.3. Experimental Results and Analysis

4. Conclusions and Prospect

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Data | Indian Pines | Salinas |
|---|---|---|
| Collection location | Indiana, USA | California, USA |
| Collection equipment | AVIRIS | AVIRIS |
| Spectral coverage (μm) | 0.4–2.5 | 0.4–2.5 |
| Data size (pixels) | 145 × 145 | 512 × 217 |
| Spatial resolution (m) | 20 | 3.7 |
| Number of bands | 220 | 224 |
| Number of bands after denoising | 200 | 204 |
| Sample size | 10,249 | 54,129 |
| Number of categories | 16 | 16 |

| Category | Indian Pines: Class Name | Indian Pines: Number of Samples | Salinas: Class Name | Salinas: Number of Samples |
|---|---|---|---|---|
| 1 | Alfalfa | 46 | Brocoli_green_weeds_1 | 2009 |
| 2 | Corn-notill | 1428 | Brocoli_green_weeds_2 | 3726 |
| 3 | Corn-min | 830 | Fallow | 1976 |
| 4 | Corn | 237 | Fallow_rough_plow | 1394 |
| 5 | Grass-pasture | 483 | Fallow_smooth | 2678 |
| 6 | Grass-trees | 730 | Stubble | 3959 |
| 7 | Grass-pasture-mowed | 28 | Celery | 3579 |
| 8 | Hay-windrowed | 478 | Grapes_untrained | 11,271 |
| 9 | Oats | 20 | Soil_vinyard_develop | 6203 |
| 10 | Soybean-notill | 972 | Corn_senesced_green_weeds | 3278 |
| 11 | Soybean-min | 2455 | Lettuce_romaine_4wk | 1068 |
| 12 | Soybean-clean | 593 | Lettuce_romaine_5wk | 1927 |
| 13 | Wheat | 205 | Lettuce_romaine_6wk | 916 |
| 14 | Woods | 1265 | Lettuce_romaine_7wk | 1070 |
| 15 | Bldg-Grass-Tree-Drives | 386 | Vinyard_untrained | 7268 |
| 16 | Stone-Steel-Towers | 93 | Vinyard_vertical_trellis | 1807 |
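To make the small-sample setting concrete, the sketch below estimates how many labelled pixels per class a given sampling percentage would yield for a few Salinas classes taken from the table above. It assumes that the 5%, 10% and 15% settings reported for the Salinas dataset are per-class (stratified) training proportions, that counts are rounded up, and that every class keeps at least one sample; the function name `train_count` is hypothetical, and none of these choices is confirmed by the text.

```python
def train_count(n_samples, percent):
    """Per-class training size: ceil(n * percent / 100), at least 1.

    Integer arithmetic is used so that the rounding is exact.
    """
    return max(1, (n_samples * percent + 99) // 100)


# Class sizes copied from the Salinas columns of the table above.
salinas_subset = {
    "Fallow": 1976,
    "Stubble": 3959,
    "Celery": 3579,
    "Grapes_untrained": 11271,
}

for name, n in salinas_subset.items():
    counts = [train_count(n, p) for p in (5, 10, 15)]
    print(f"{name}: {counts}")
```

Under these assumptions, a class such as Fallow contributes only 99 training pixels at 5%, which illustrates why a model pretrained elsewhere can be useful.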

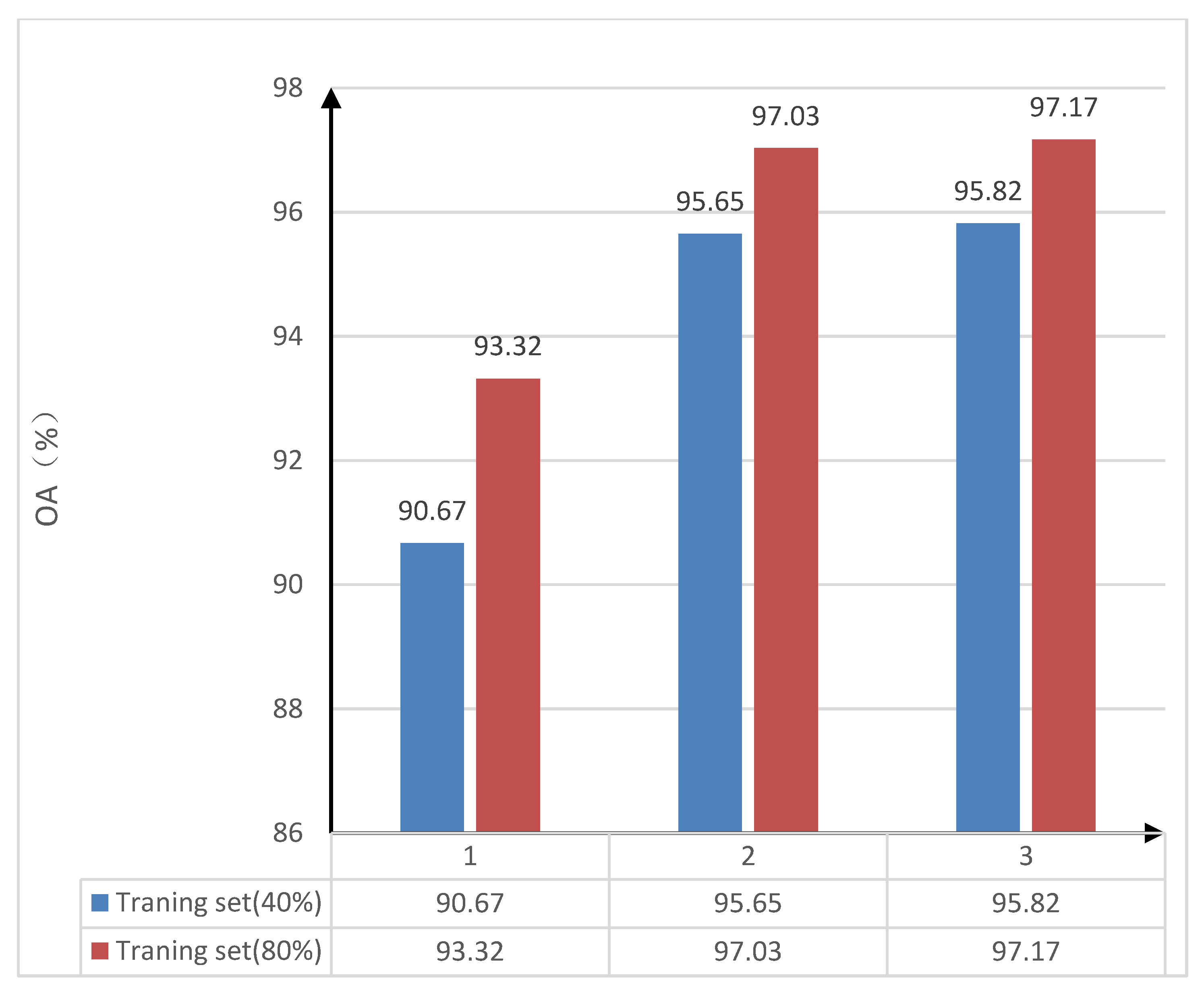

| | Training Set (40%) | | | Training Set (80%) | | |
|---|---|---|---|---|---|---|
| Number | 1 | 2 | 3 | 1 | 2 | 3 |
| OA (%) | 90.67 | 95.65 | 95.82 | 93.32 | 97.03 | 97.17 |
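OA denotes overall accuracy, which is conventionally the percentage of evaluated pixels whose predicted class matches the reference label. The following minimal sketch computes it; the function name `overall_accuracy` and the toy label lists are illustrative assumptions and do not come from the experiments.

```python
def overall_accuracy(y_true, y_pred):
    """Return overall accuracy in percent: 100 * correct / total."""
    if len(y_true) != len(y_pred):
        raise ValueError("label sequences must have the same length")
    if not y_true:
        raise ValueError("at least one sample is required")
    correct = sum(t == p for t, p in zip(y_true, y_pred))
    return 100.0 * correct / len(y_true)


# Made-up reference and predicted labels for eight pixels.
reference = [1, 1, 2, 3, 3, 3, 2, 1]
predicted = [1, 2, 2, 3, 3, 1, 2, 1]
print(overall_accuracy(reference, predicted))  # 75.0
```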

| Index | Method | Salinas Dataset (5%) | Salinas Dataset (10%) | Salinas Dataset (15%) |
|---|---|---|---|---|
| OA (%) | No transfer strategy | 80.58 | 82.74 | 87.13 |
| OA (%) | Introduction of transfer strategy | 83.11 | 88.92 | 90.24 |
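The effect of the transfer strategy can be read off the table as a difference in OA. The short sketch below does this arithmetic for the three Salinas settings, using only the values in the table; the variable names are illustrative.

```python
# OA (%) on the Salinas dataset, copied from the table above.
no_transfer = {5: 80.58, 10: 82.74, 15: 87.13}
with_transfer = {5: 83.11, 10: 88.92, 15: 90.24}

# Gain in percentage points, rounded to the precision of the table.
gains = {p: round(with_transfer[p] - no_transfer[p], 2) for p in no_transfer}

for percent, gain in gains.items():
    print(f"{percent}% setting: +{gain:.2f} percentage points")
```

The gains are 2.53, 6.18 and 3.11 percentage points for the 5%, 10% and 15% settings, respectively, so the largest improvement in this table occurs at 10%.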

| Method | CAE (OA%) | SCAE (OA%) | SCAE-MT (OA%) |
|---|---|---|---|
| 5% | 79.19 | 81.62 | 83.11 |
| 10% | 84.77 | 86.41 | 88.92 |
| 15% | 86.53 | 87.82 | 90.24 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Chen, H.; Chen, Y.; Wang, Q.; Chen, T.; Zhao, H. A New SCAE-MT Classification Model for Hyperspectral Remote Sensing Images. Sensors 2022, 22, 8881. https://doi.org/10.3390/s22228881
