Land-Use and Land-Cover Classification in Semi-Arid Areas from Medium-Resolution Remote-Sensing Imagery: A Deep Learning Approach

Abstract

1. Introduction

- To apply a 2D CNN architecture with fixed hyperparameters for LULC classification in semi-arid regions using medium-resolution remote-sensing imagery (Sentinel-2 data).

- To test the transferability of CNNs for LULC classification across semi-arid regions.

- To evaluate which spectral bands provide maximum class separability, minimize spectral confusion, and reduce the required computational power.

Overview of DL CNNs

2. Materials and Methods

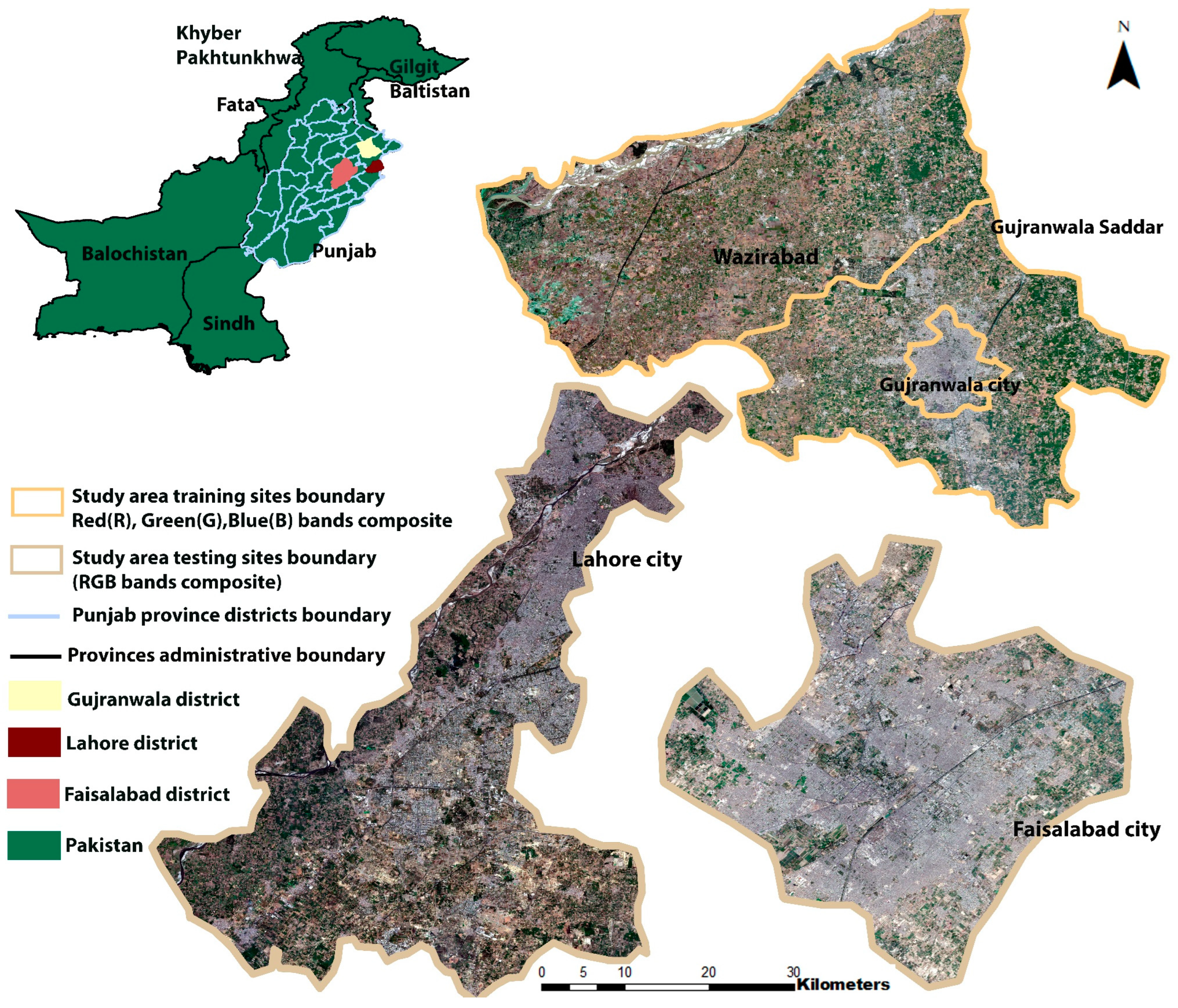

2.1. Study Areas

2.1.1. Training Sites

2.1.2. Testing Sites

2.2. Methodology

2.2.1. Satellite Data Acquisition

2.2.2. Sentinel-2 Data Pre-processing

- A 4-band composite was created from the NIR (B8), green (B3), blue (B2), and red (B4) bands.

- A 10-band composite was created by adding the two SWIR bands (B11, B12) and the four vegetation red-edge bands (B5, B6, B7, B8A) to the 4-band composite.
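
The bullets above do not say how the 20 m red-edge and SWIR bands were brought onto the 10 m grid before stacking. The following is a minimal sketch, assuming nearest-neighbour replication of each 20 m pixel into a 2 × 2 block and a band order of NIR, green, blue, red followed by the six 20 m bands. Both choices, and the helper names `upsample_20m_to_10m` and `make_composites`, are illustrative assumptions rather than the authors' pipeline.

```python
import numpy as np


# Hypothetical helper: bring a 20 m band onto the 10 m grid by nearest-neighbour
# replication (each 20 m pixel becomes a 2 x 2 block of 10 m pixels).
def upsample_20m_to_10m(band_20m: np.ndarray) -> np.ndarray:
    return np.repeat(np.repeat(band_20m, 2, axis=0), 2, axis=1)


def make_composites(bands: dict) -> tuple:
    """Stack Sentinel-2 bands into hypothetical 4-band and 10-band composites.

    `bands` maps band names ("B2" ... "B12") to 2D reflectance arrays:
    10 m bands are H x W, 20 m bands are (H/2) x (W/2).
    """
    four_names = ["B8", "B3", "B2", "B4"]  # NIR, green, blue, red (order assumed)
    extra_names = ["B5", "B6", "B7", "B8A", "B11", "B12"]  # red-edge + SWIR (20 m)
    four_band = np.stack([bands[b] for b in four_names], axis=-1)
    extra = np.stack([upsample_20m_to_10m(bands[b]) for b in extra_names], axis=-1)
    ten_band = np.concatenate([four_band, extra], axis=-1)
    return four_band, ten_band


# Usage with random arrays standing in for a 100 x 100 pixel (1 km x 1 km) subset.
rng = np.random.default_rng(0)
H, W = 100, 100
bands = {b: rng.random((H, W)) for b in ["B2", "B3", "B4", "B8"]}
bands.update({b: rng.random((H // 2, W // 2)) for b in ["B5", "B6", "B7", "B8A", "B11", "B12"]})
four_band, ten_band = make_composites(bands)
print(four_band.shape, ten_band.shape)  # (100, 100, 4) (100, 100, 10)
```

Swapping in bilinear resampling or a pansharpening-style downscaling method would only change `upsample_20m_to_10m`; the stacking logic stays the same.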

2.2.3. Dataset Preparation

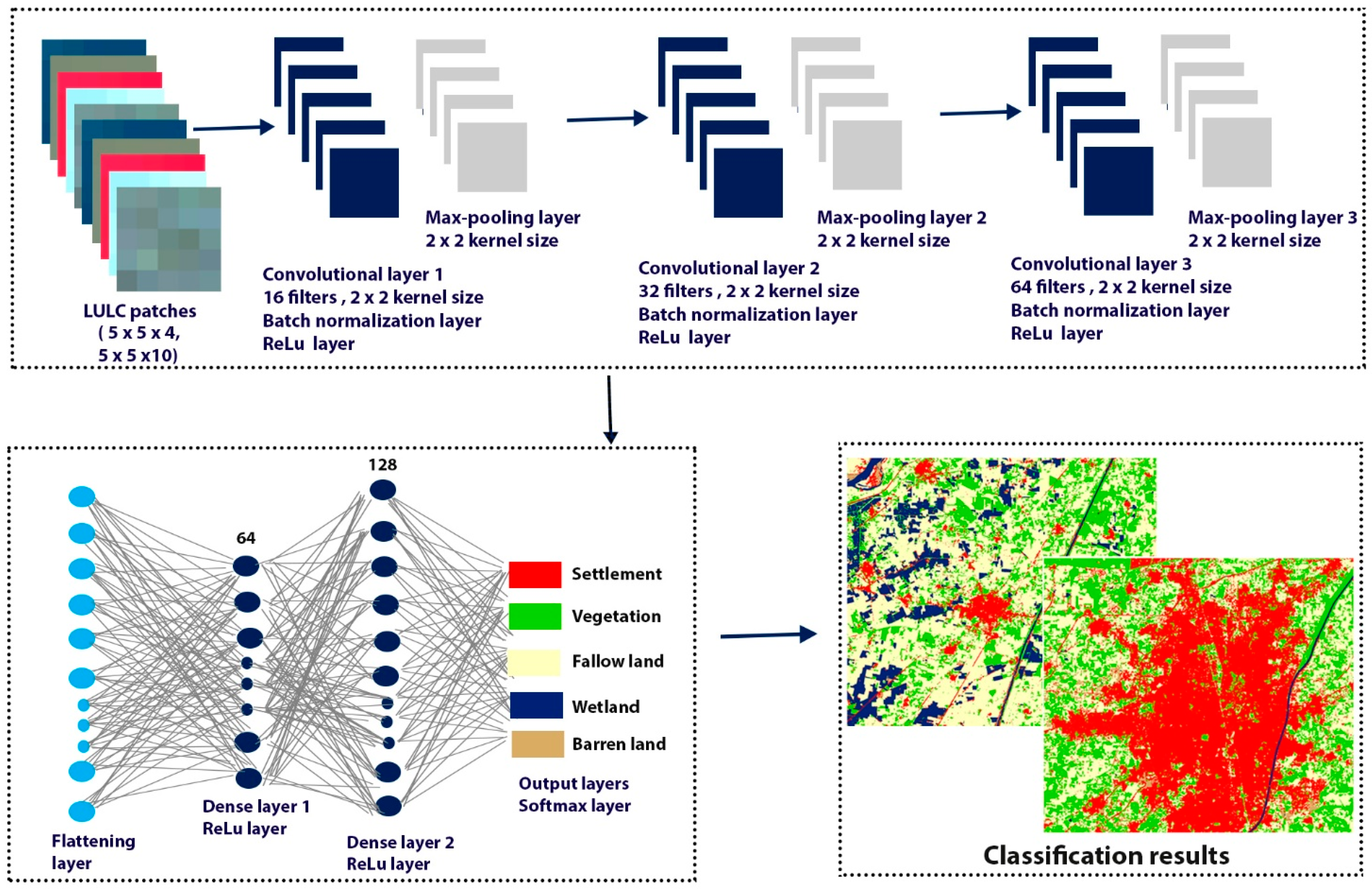

2.2.4. LULC Classification

2.2.5. The Proposed 2D CNN

Parameter Optimization

2.2.6. Performance Evaluation

3. Results

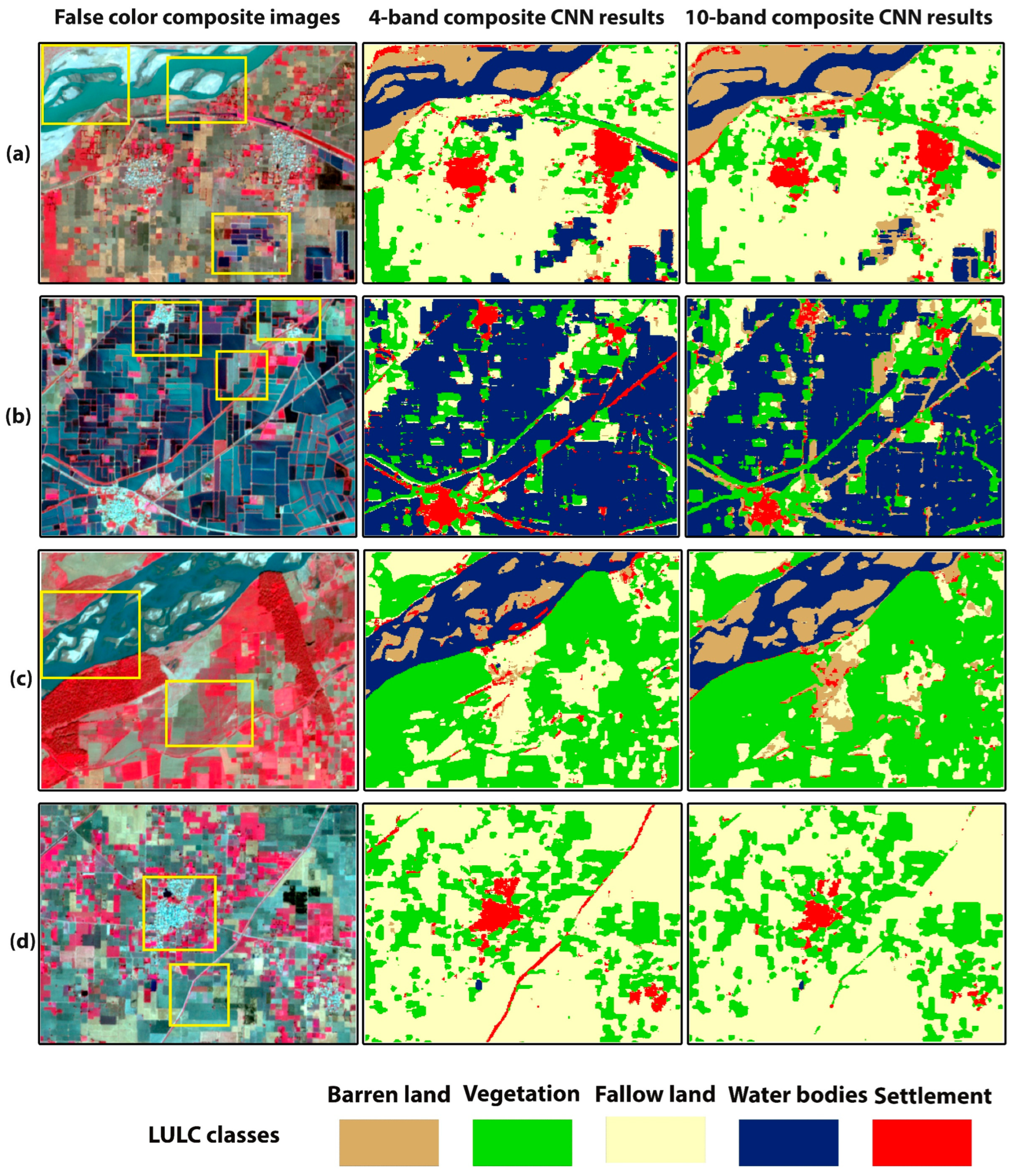

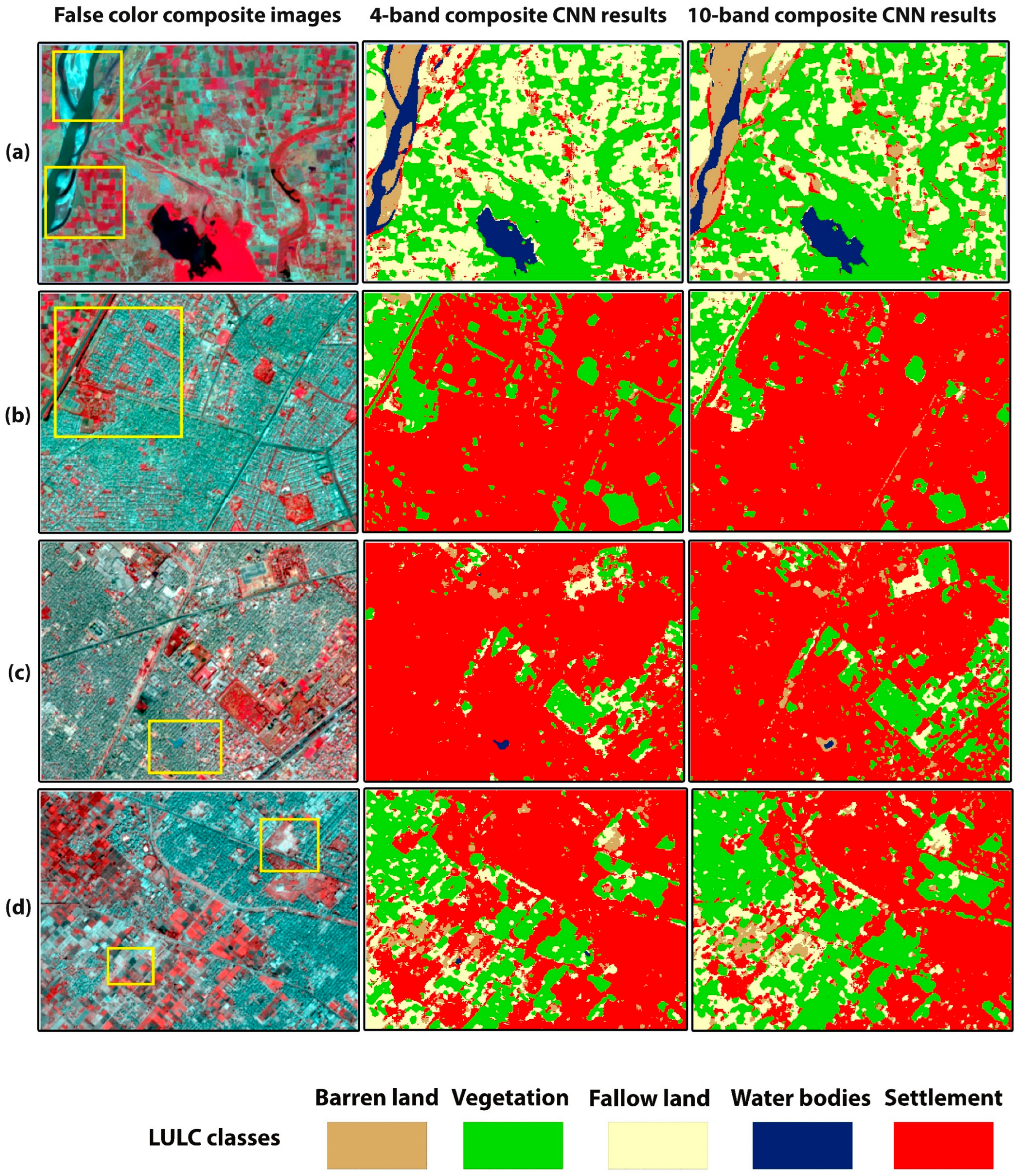

3.1. Qualitative Analysis of Training Site Land Cover Maps

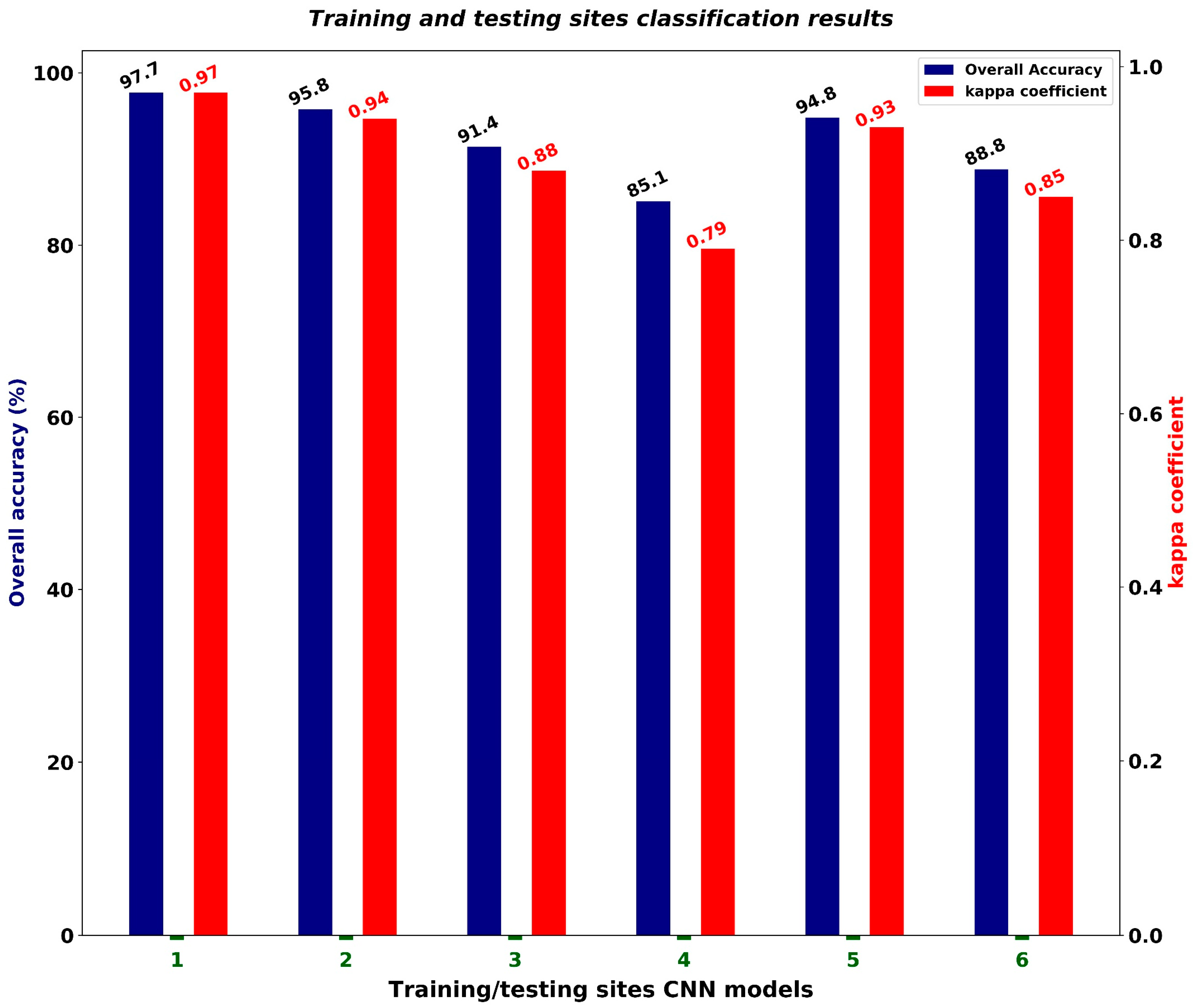

3.2. Quantitative Analysis of Training Site Classification Results

3.3. Predictions of the Trained 4-Band and 10-Band CNN Models on Unseen Sites

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

| Spectral Bands | Central Wavelength (µm) | Spatial Resolution (m) |
|---|---|---|
| Band 2: Blue | 0.490 | 10 |
| Band 3: Green | 0.56 | 10 |
| Band 4: Red | 0.665 | 10 |
| Band 5: Vegetation Red-Edge | 0.705 | 20 |
| Band 6: Vegetation Red-Edge | 0.74 | 20 |
| Band 7: Vegetation Red-Edge | 0.783 | 20 |
| Band 8: Near infrared | 0.842 | 10 |
| Band 8A: Vegetation Red-Edge | 0.865 | 20 |
| Band 11: SWIR | 1.61 | 20 |
| Band 12: SWIR | 2.19 | 20 |

| LULC Classes | Training Patches (5 × 5 pixels) |
|---|---|
| Settlement | 2400 |
| Barren land | 2400 |
| Fallow land | 2400 |
| Vegetation | 2400 |
| Water bodies | 2400 |
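
The table lists 2400 training patches of 5 × 5 pixels for each class. How the patches were cut is not described here, so the sketch below assumes each patch is centred on a labelled reference pixel, that an equal number of centre pixels is drawn per class, and that image borders are reflect-padded. The names `extract_patches` and `sample_balanced` are hypothetical, and the random label raster only stands in for real reference data.

```python
import numpy as np

PATCH = 5          # patch size in pixels, matching the 5 x 5 patches in the table
HALF = PATCH // 2  # 2 pixels on each side of the centre pixel


def extract_patches(image: np.ndarray, rows, cols) -> np.ndarray:
    """Return an (N, PATCH, PATCH, C) array of windows centred on (rows[i], cols[i]).

    Border pixels are handled by reflect-padding, an assumption: the source
    does not say how patches near the image edge were treated.
    """
    padded = np.pad(image, ((HALF, HALF), (HALF, HALF), (0, 0)), mode="reflect")
    # Original pixel (r, c) sits at (r + HALF, c + HALF) in `padded`,
    # so its centred window is padded[r : r + PATCH, c : c + PATCH].
    return np.stack([padded[r:r + PATCH, c:c + PATCH, :] for r, c in zip(rows, cols)])


def sample_balanced(image, labels, n_classes, per_class, rng):
    """Draw `per_class` labelled centre pixels per class (hypothetical helper)."""
    X, y = [], []
    for k in range(n_classes):
        r, c = np.nonzero(labels == k)
        idx = rng.choice(len(r), size=per_class, replace=False)
        X.append(extract_patches(image, r[idx], c[idx]))
        y.append(np.full(per_class, k))
    return np.concatenate(X), np.concatenate(y)


rng = np.random.default_rng(42)
composite = rng.random((200, 200, 4))           # stand-in for a 4-band composite
labels = rng.integers(0, 5, size=(200, 200))    # stand-in label raster, 5 classes
X, y = sample_balanced(composite, labels, n_classes=5, per_class=10, rng=rng)
print(X.shape, y.shape)  # (50, 5, 5, 4) (50,)
```

Setting `per_class=2400` would reproduce the class counts in the table, provided each class has at least that many labelled pixels.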

| Parameter | Value |
|---|---|
| Dropout | 0.2, 0.5 |
| Learning Rate | 0.0001 |
| Epochs | 300 |
| Batch Size | 128 |
| Activation Functions | ReLU, softmax |
| Loss Function | Categorical cross-entropy |
| Optimizer | Adam |
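
The table pairs a softmax output with categorical cross-entropy and the Adam optimizer at a learning rate of 0.0001. The sketch below shows only that loss/optimizer pair in NumPy on a toy linear softmax layer, not the CNN itself (convolution, ReLU, and dropout layers are omitted). Adam's `beta1`, `beta2`, and `eps` are set to the defaults proposed by Kingma and Ba, an assumption because the table gives only the learning rate; the batch of 128 random "patches" is synthetic.

```python
import numpy as np


def softmax(z: np.ndarray) -> np.ndarray:
    z = z - z.max(axis=1, keepdims=True)  # shift by row max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)


def categorical_cross_entropy(y_onehot: np.ndarray, probs: np.ndarray, eps: float = 1e-12) -> float:
    # Batch mean of -sum_k y_k * log(p_k); eps guards against log(0).
    return float(-np.mean(np.sum(y_onehot * np.log(probs + eps), axis=1)))


class Adam:
    """Adam update rule; beta1, beta2 and eps are the defaults proposed by
    Kingma and Ba, assumed here because the table lists only the learning rate."""

    def __init__(self, lr=1e-4, beta1=0.9, beta2=0.999, eps=1e-8):
        self.lr, self.b1, self.b2, self.eps = lr, beta1, beta2, eps
        self.m = None  # first-moment (mean) estimate
        self.v = None  # second-moment (uncentred variance) estimate
        self.t = 0     # step counter for bias correction

    def step(self, w: np.ndarray, grad: np.ndarray) -> np.ndarray:
        if self.m is None:
            self.m, self.v = np.zeros_like(w), np.zeros_like(w)
        self.t += 1
        self.m = self.b1 * self.m + (1 - self.b1) * grad
        self.v = self.b2 * self.v + (1 - self.b2) * grad ** 2
        m_hat = self.m / (1 - self.b1 ** self.t)  # bias-corrected moments
        v_hat = self.v / (1 - self.b2 ** self.t)
        return w - self.lr * m_hat / (np.sqrt(v_hat) + self.eps)


# Toy softmax output layer on one batch of 128 flattened 5 x 5 x 4 patches.
rng = np.random.default_rng(0)
X = rng.random((128, 100))
Y = np.eye(5)[rng.integers(0, 5, size=128)]  # one-hot labels for 5 LULC classes
W = np.zeros((100, 5))
opt = Adam(lr=1e-4)
loss_start = categorical_cross_entropy(Y, softmax(X @ W))  # log(5) for uniform output
for _ in range(10):
    probs = softmax(X @ W)
    grad = X.T @ (probs - Y) / len(X)  # d(loss)/dW for softmax + cross-entropy
    W = opt.step(W, grad)
loss_end = categorical_cross_entropy(Y, softmax(X @ W))
```

Because the gradient of softmax plus cross-entropy with respect to the logits simplifies to `probs - Y`, the two are almost always implemented together; with a learning rate of 0.0001 each Adam step moves a weight by roughly 0.0001, which is why many epochs (300 in the table) are needed.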

| Model | OA (%) | Kappa Coefficient | Training Time |
|---|---|---|---|
| 4-band CNN | 97.7 | 0.97 | 2 min 17 s |
| 10-band CNN | 95.8 | 0.94 | 3 min 42 s |

| LULC Classes | Barren Land | Settlement | Fallow Land | Vegetation | Water Bodies | Sum | UA (%) |
|---|---|---|---|---|---|---|---|
| Barren land | 116 | 1 | 8 | 0 | 0 | 125 | 92.8 |
| Settlement | 5 | 193 | 0 | 0 | 2 | 200 | 96.5 |
| Fallow land | 2 | 0 | 197 | 1 | 0 | 200 | 98.5 |
| Vegetation | 0 | 0 | 0 | 200 | 0 | 200 | 100 |
| Water bodies | 0 | 0 | 0 | 0 | 125 | 125 | 100 |
| Sum | 123 | 194 | 205 | 201 | 127 | 850 | |
| PA (%) | 94.3 | 99.4 | 96 | 99.5 | 98.4 | | |
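
In these confusion matrices the rows are the classified labels (user's accuracy, UA, in the last column) and the columns are the reference labels (producer's accuracy, PA, in the last row). As a check, the sketch below recomputes overall accuracy (OA), Cohen's kappa, UA, and PA from the counts in the matrix above using the standard formulas; the function name `accuracy_metrics` is illustrative. It gives OA ≈ 97.76% and kappa ≈ 0.972, consistent with the 4-band CNN row of the preceding summary table.

```python
import numpy as np

# Rows: classified (map) labels; columns: reference labels, in the order
# barren land, settlement, fallow land, vegetation, water bodies.
cm = np.array([
    [116,   1,   8,   0,   0],
    [  5, 193,   0,   0,   2],
    [  2,   0, 197,   1,   0],
    [  0,   0,   0, 200,   0],
    [  0,   0,   0,   0, 125],
])


def accuracy_metrics(cm: np.ndarray):
    n = cm.sum()
    diag = np.diag(cm)
    row, col = cm.sum(axis=1), cm.sum(axis=0)
    oa = diag.sum() / n                 # observed agreement
    pe = (row * col).sum() / n ** 2     # agreement expected by chance
    kappa = (oa - pe) / (1 - pe)
    ua = diag / row                     # user's accuracy (1 - commission error)
    pa = diag / col                     # producer's accuracy (1 - omission error)
    return oa, kappa, ua, pa


oa, kappa, ua, pa = accuracy_metrics(cm)
print(f"OA = {100 * oa:.2f}%, kappa = {kappa:.4f}")  # OA = 97.76%, kappa = 0.9717
```

The same function applies unchanged to the other confusion matrices in this section.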

| LULC Classes | Barren Land | Settlement | Fallow Land | Vegetation | Water Bodies | Sum | UA (%) |
|---|---|---|---|---|---|---|---|
| Barren land | 110 | 2 | 1 | 0 | 4 | 117 | 94 |
| Settlement | 8 | 186 | 1 | 0 | 4 | 199 | 93.46 |
| Fallow land | 7 | 2 | 202 | 1 | 0 | 212 | 95.28 |
| Vegetation | 1 | 1 | 3 | 197 | 0 | 202 | 97.5 |
| Water bodies | 0 | 0 | 0 | 0 | 120 | 120 | 100 |
| Sum | 126 | 191 | 207 | 198 | 128 | 850 | |
| PA (%) | 87.3 | 97.3 | 97.58 | 99.4 | 93.75 | | |

| Testing Sites | Model | OA (%) | Kappa Coefficient |
|---|---|---|---|
| Lahore city | 4-band CNN | 94.8 | 0.93 |
| Lahore city | 10-band CNN | 88.8 | 0.85 |
| Faisalabad city | 4-band CNN | 91.4 | 0.88 |
| Faisalabad city | 10-band CNN | 85.1 | 0.79 |

| LULC Classes | Barren Land | Settlement | Fallow Land | Vegetation | Water Bodies | Sum | UA (%) |
|---|---|---|---|---|---|---|---|
| Barren land | 104 | 0 | 2 | 0 | 0 | 106 | 98.1 |
| Settlement | 9 | 225 | 2 | 0 | 1 | 237 | 94.9 |
| Fallow land | 2 | 2 | 131 | 13 | 3 | 151 | 86.75 |
| Vegetation | 0 | 0 | 10 | 247 | 0 | 257 | 96.1 |
| Water bodies | 0 | 0 | 0 | 0 | 99 | 99 | 100 |
| Sum | 115 | 227 | 145 | 260 | 103 | 850 | |
| PA (%) | 90.4 | 99.1 | 90.3 | 95 | 96.11 | | |

| LULC Classes | Barren Land | Settlement | Fallow Land | Vegetation | Water Bodies | Sum | UA (%) |
|---|---|---|---|---|---|---|---|
| Barren land | 76 | 1 | 8 | 0 | 12 | 97 | 78.3 |
| Settlement | 16 | 207 | 0 | 0 | 3 | 226 | 91.5 |
| Fallow land | 18 | 12 | 132 | 7 | 2 | 171 | 77.1 |
| Vegetation | 3 | 4 | 9 | 254 | 0 | 270 | 94 |
| Water bodies | 0 | 0 | 0 | 0 | 86 | 86 | 100 |
| Sum | 113 | 224 | 149 | 261 | 103 | 850 | |
| PA (%) | 67.2 | 92.4 | 88.5 | 97.3 | 83.4 | | |

| LULC Classes | Barren Land | Settlement | Fallow Land | Vegetation | Water Bodies | Sum | UA (%) |
|---|---|---|---|---|---|---|---|
| Barren land | 46 | 0 | 0 | 2 | 0 | 48 | 95.8 |
| Settlement | 16 | 319 | 0 | 0 | 0 | 335 | 95.2 |
| Fallow land | 13 | 3 | 89 | 34 | 2 | 141 | 63.1 |
| Vegetation | 0 | 0 | 3 | 213 | 0 | 216 | 98.6 |
| Water bodies | 0 | 0 | 0 | 0 | 110 | 110 | 100 |
| Sum | 75 | 322 | 92 | 249 | 112 | 850 | |
| PA (%) | 61.3 | 99 | 96.7 | 85.5 | 98.2 | | |

| LULC Classes | Barren Land | Settlement | Fallow Land | Vegetation | Water Bodies | Sum | UA (%) |
|---|---|---|---|---|---|---|---|
| Barren land | 32 | 2 | 2 | 0 | 27 | 63 | 50.7 |
| Settlement | 14 | 306 | 0 | 1 | 4 | 325 | 94.1 |
| Fallow land | 23 | 7 | 78 | 30 | 0 | 138 | 56.52 |
| Vegetation | 0 | 4 | 10 | 236 | 2 | 252 | 93.65 |
| Water bodies | 0 | 0 | 0 | 0 | 72 | 72 | 100 |
| Sum | 69 | 319 | 90 | 267 | 105 | 850 | |
| PA (%) | 46.37 | 95.9 | 86.6 | 88.38 | 68.5 | | |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Ali, K.; Johnson, B.A. Land-Use and Land-Cover Classification in Semi-Arid Areas from Medium-Resolution Remote-Sensing Imagery: A Deep Learning Approach. Sensors 2022, 22, 8750. https://doi.org/10.3390/s22228750
