Pilot Validation Study of Inertial Measurement Units and Markerless Methods for 3D Neck and Trunk Kinematics during a Simulated Surgery Task

Abstract

1. Introduction

2. Materials and Methods

2.1. Participants

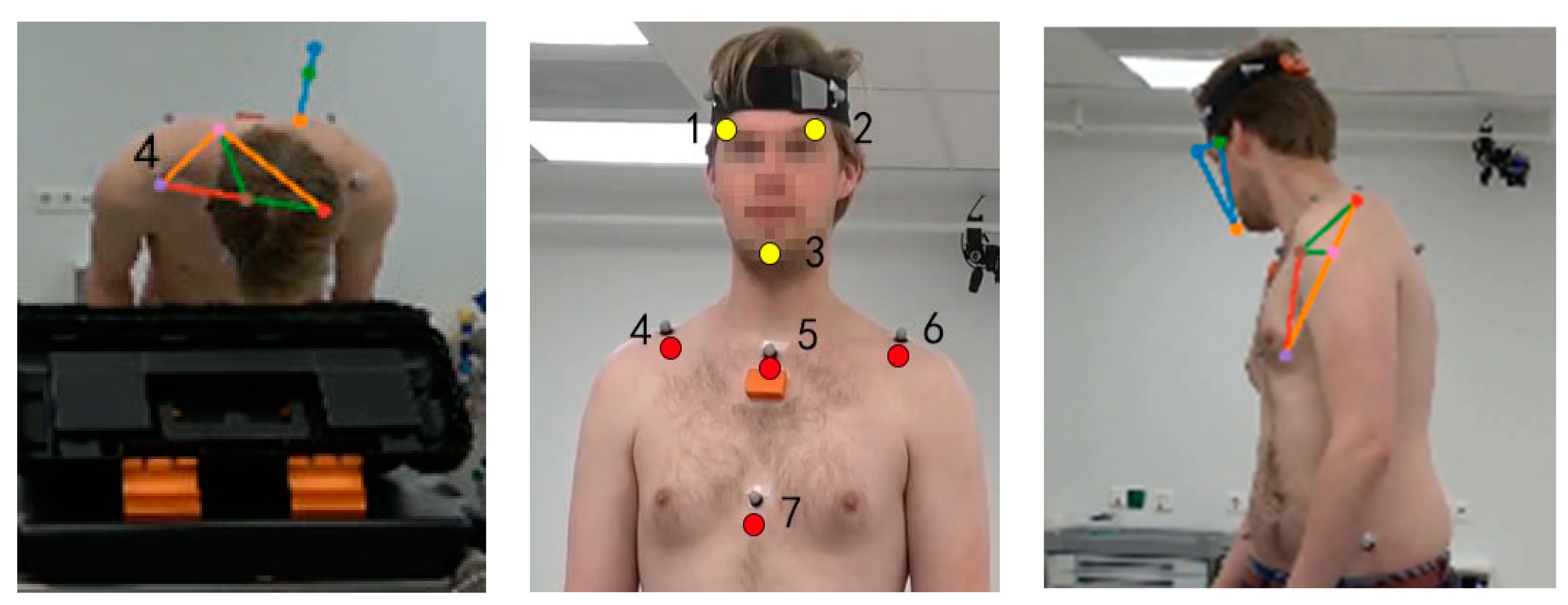

2.2. Materials

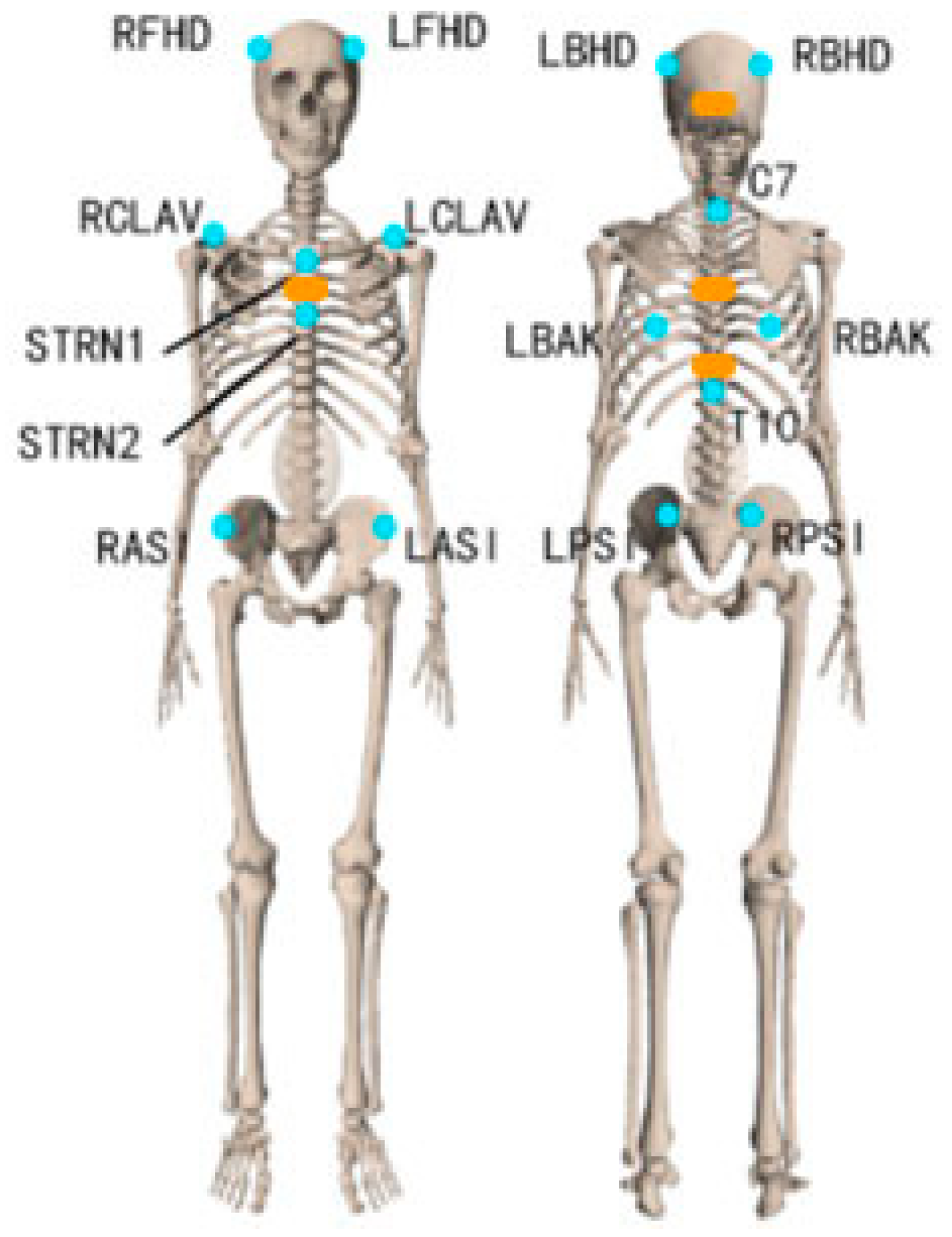

2.2.1. Marker Motion Capture Measurement

2.2.2. IMU Motion Capture Measurement

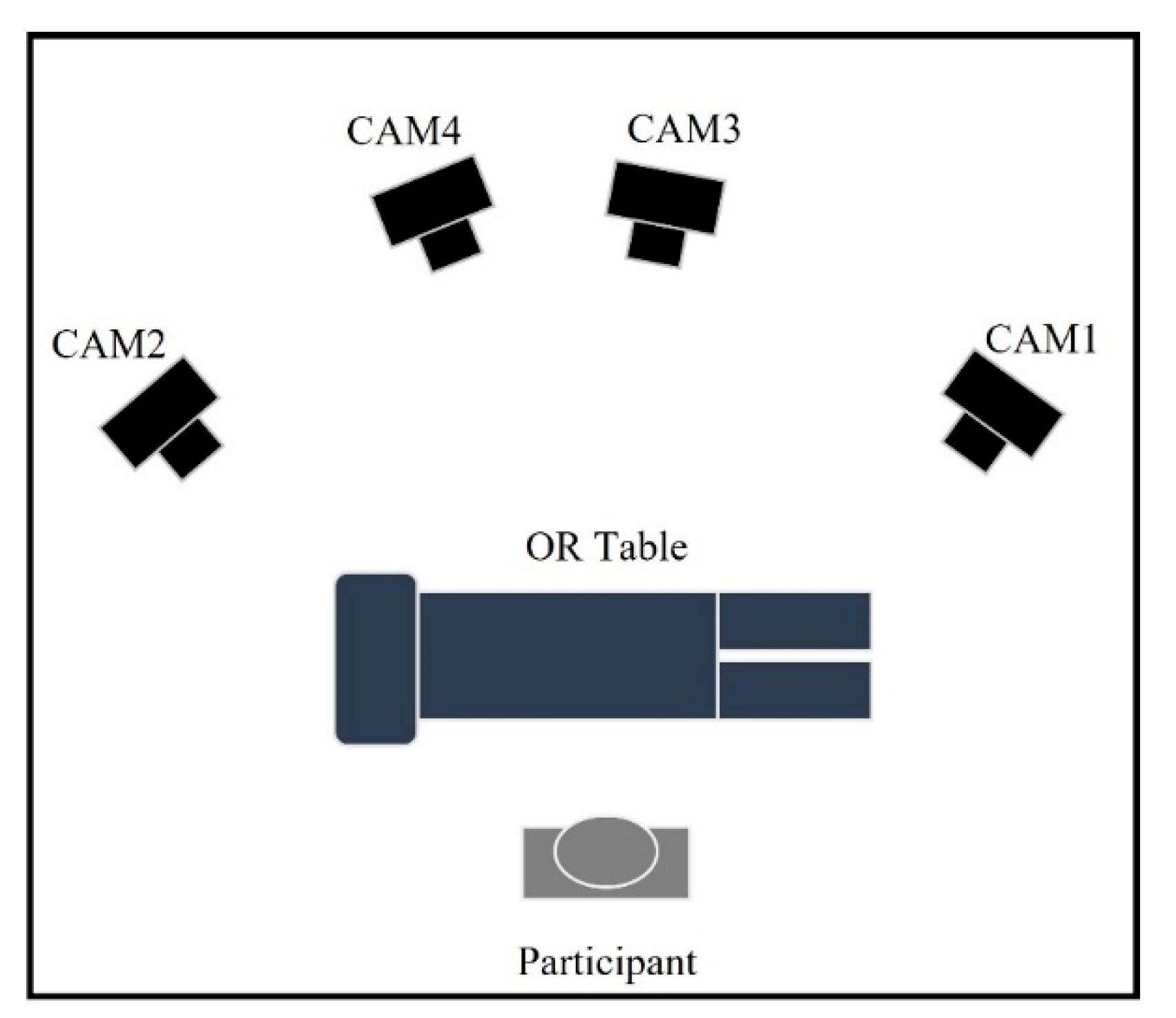

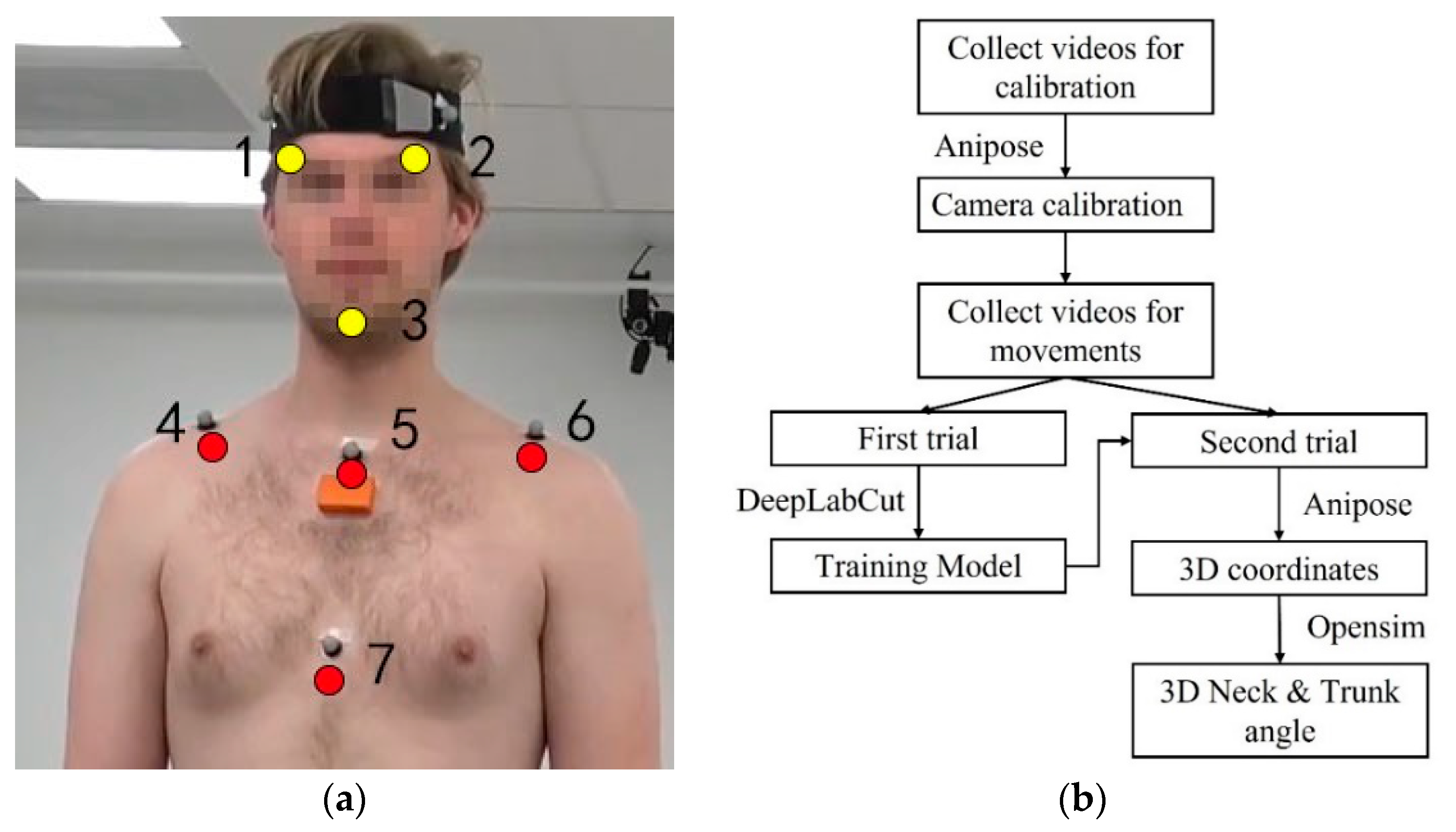

2.2.3. Markerless Motion Capture Measurement

2.3. Study Approach

2.4. Data Analysis

2.4.1. Marker-Based Data Processing

2.4.2. IMU-Based Data Processing

2.4.3. Markerless-Based Data Processing

2.5. Statistical Analysis

- The root mean square error (RMSE) of the 3D neck and trunk angles between the IMU/markerless-based method and the marker-based method over the total movement time.

- The difference in absolute ROM of the 3D neck and trunk angles between the IMU/markerless-based method and the marker-based method. To correct the offset between systems, the first data point of each measurement system's angle-time series was subtracted from that series. For SP-movements, the ROM was defined as the maximum difference between the starting anatomical angle and the maximum angle of the neck and trunk [35]. For the simulated surgery task, the ROM was defined as the difference between the minimum and maximum angle [17].

- Relative ROM error, defined as the ratio of the IMU/markerless ROM difference to the marker-based (gold standard) ROM.

- Paired t-tests on the mean differences in ROM between the IMU/markerless-based method and the marker-based method to identify systematic biases.
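The measures listed above can be sketched in a few lines of Python. This is a minimal illustration using only the standard library, not the authors' processing pipeline. All function names are hypothetical. Reading the SP-movement ROM as the largest absolute excursion from the starting angle, taking the absolute value of the ROM difference in the relative error, and computing the RMSE on offset-corrected series are assumptions made here for illustration. The example angle values are invented.

```python
import math
from statistics import mean, stdev


def remove_offset(angles):
    """Subtract the first sample so the series starts at zero."""
    first = angles[0]
    return [a - first for a in angles]


def rmse(test, reference):
    """Root mean square error between two equally long angle series."""
    if len(test) != len(reference):
        raise ValueError("series must have the same length")
    return math.sqrt(mean((t - r) ** 2 for t, r in zip(test, reference)))


def rom_sp(angles):
    """SP-movement ROM (assumed reading): largest excursion from the start."""
    start = angles[0]
    return max(abs(a - start) for a in angles)


def rom_task(angles):
    """Simulated surgery task ROM: maximum angle minus minimum angle."""
    return max(angles) - min(angles)


def relative_rom_error(rom_test, rom_reference):
    """ROM difference divided by the reference ROM (absolute value assumed)."""
    return abs(rom_test - rom_reference) / rom_reference


def paired_t_statistic(rom_test, rom_reference):
    """Mean paired difference (bias), t statistic, and degrees of freedom."""
    diffs = [t - r for t, r in zip(rom_test, rom_reference)]
    n = len(diffs)
    bias = mean(diffs)
    t = bias / (stdev(diffs) / math.sqrt(n))
    return bias, t, n - 1


if __name__ == "__main__":
    # Invented angle-time series (degrees) for one trial and one axis.
    marker = remove_offset([10.0, 15.0, 30.0, 20.0, 10.0])
    imu = remove_offset([12.0, 18.0, 33.0, 21.0, 12.0])
    print("RMSE:", rmse(imu, marker))
    print("ROM (task):", rom_task(imu), "vs", rom_task(marker))
    print("Relative ROM error:",
          relative_rom_error(rom_task(imu), rom_task(marker)))
    # Invented per-participant ROM values for the paired comparison.
    print(paired_t_statistic([21.0, 34.0, 27.0, 40.0],
                             [20.0, 32.0, 27.0, 37.0]))
```

The p value for the paired t-test would follow from the t distribution with the returned degrees of freedom; a statistics package is normally used for that step.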

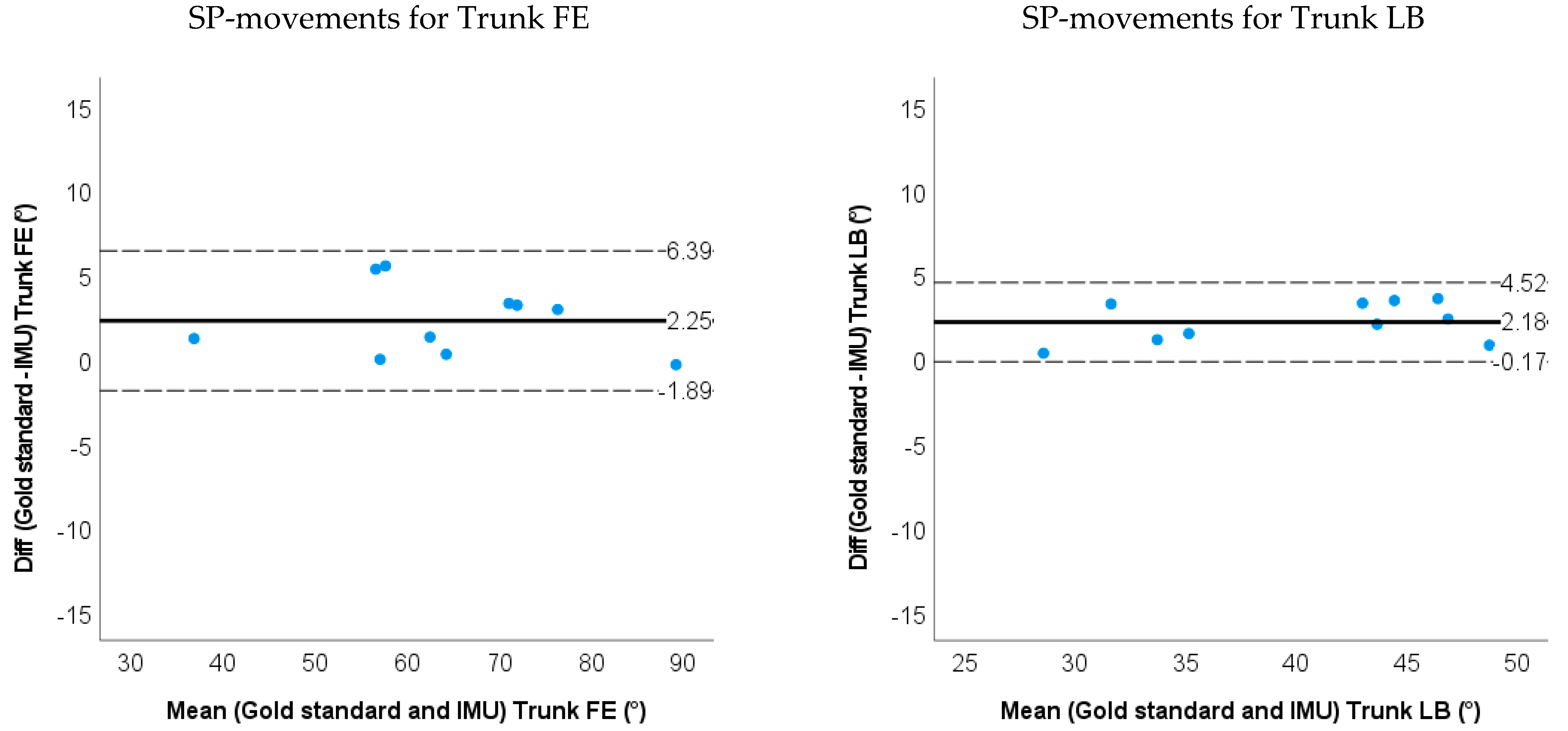

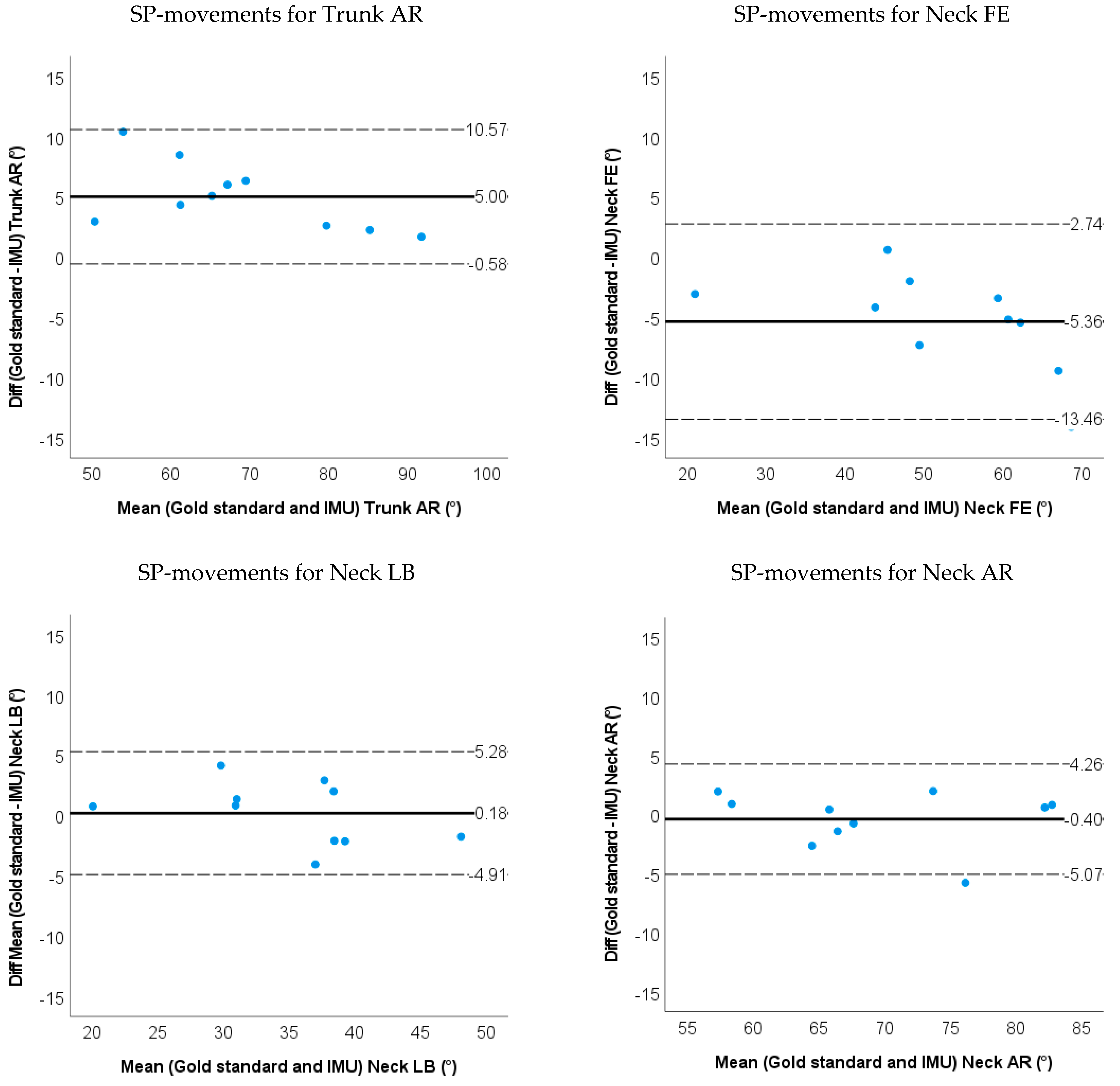

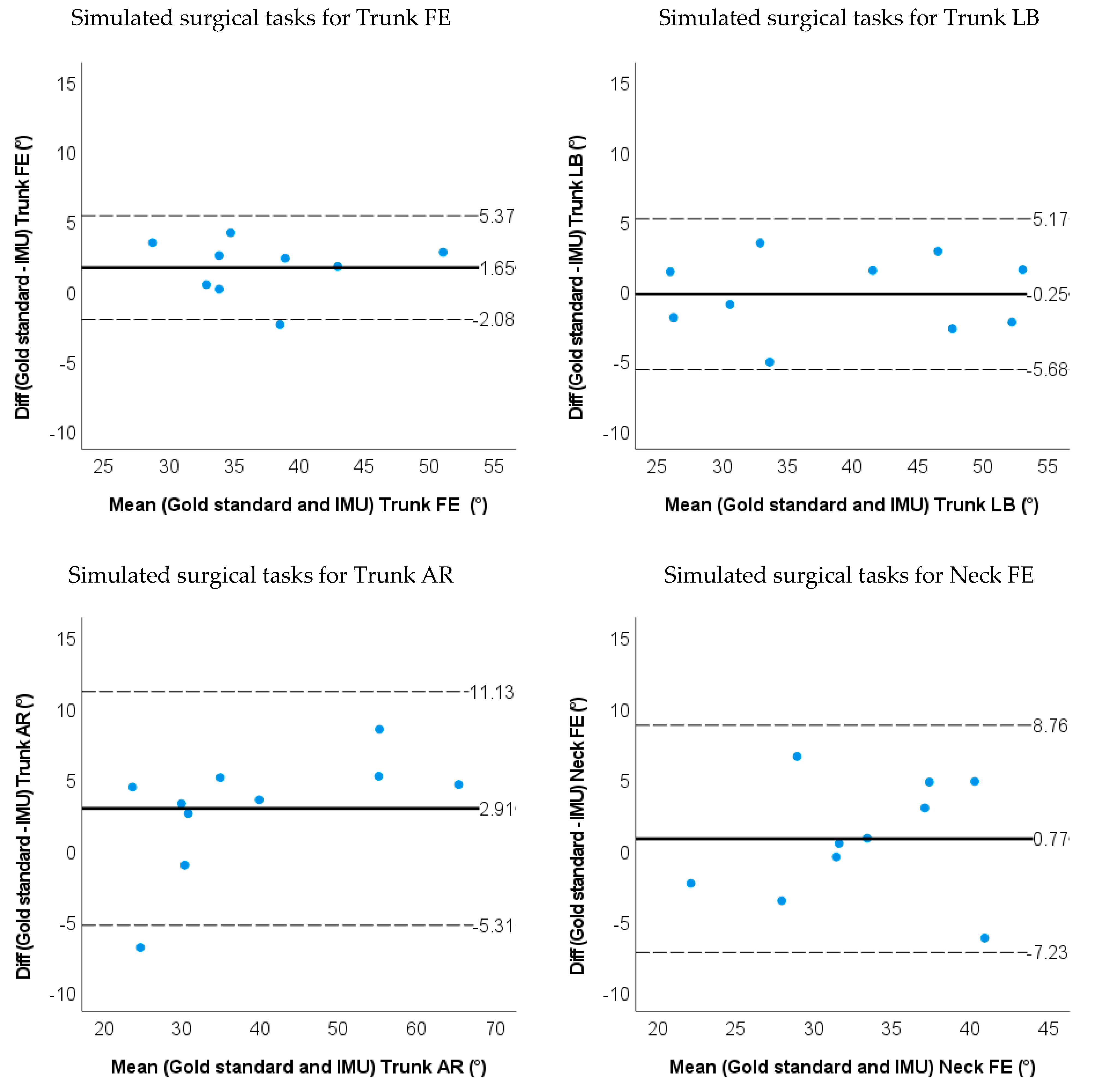

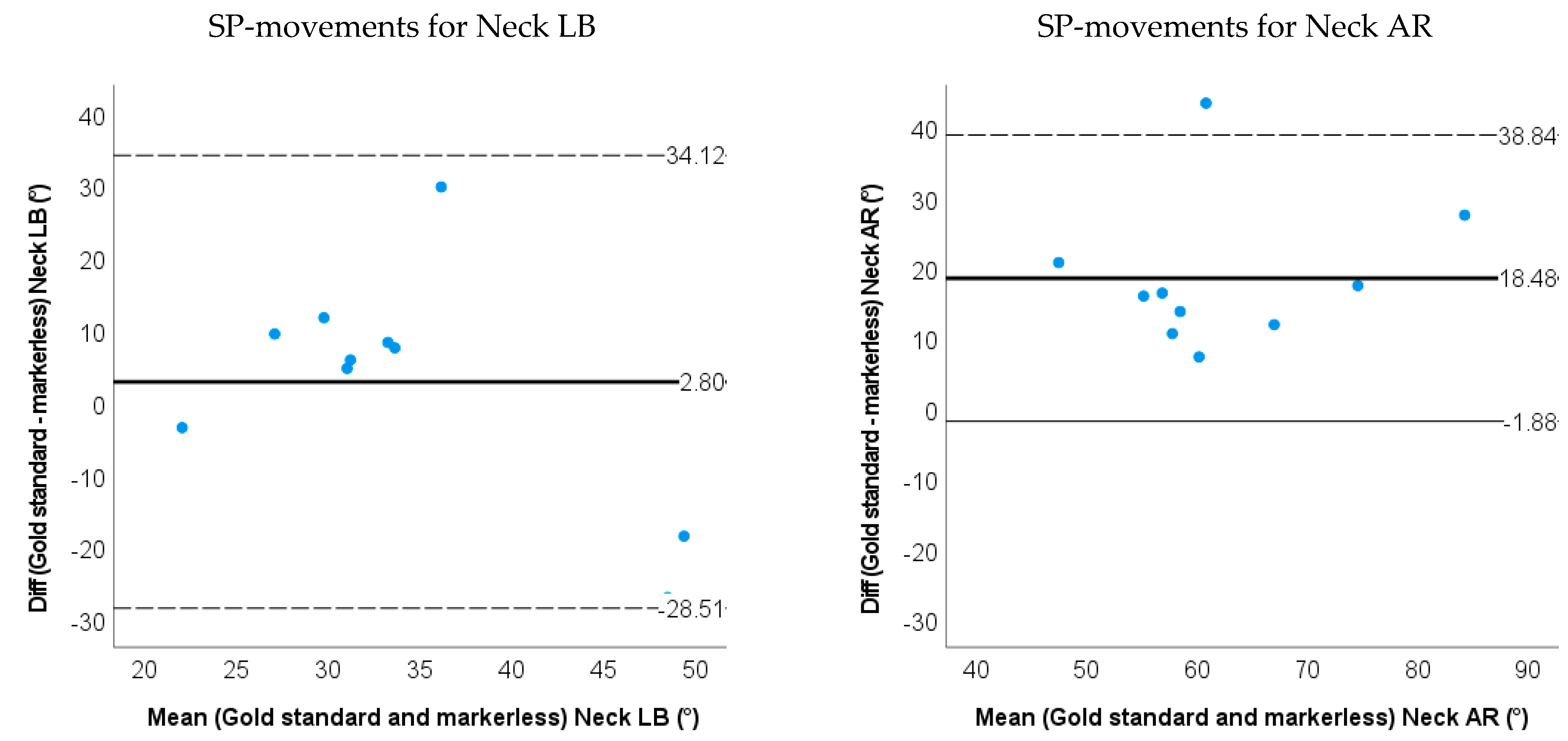

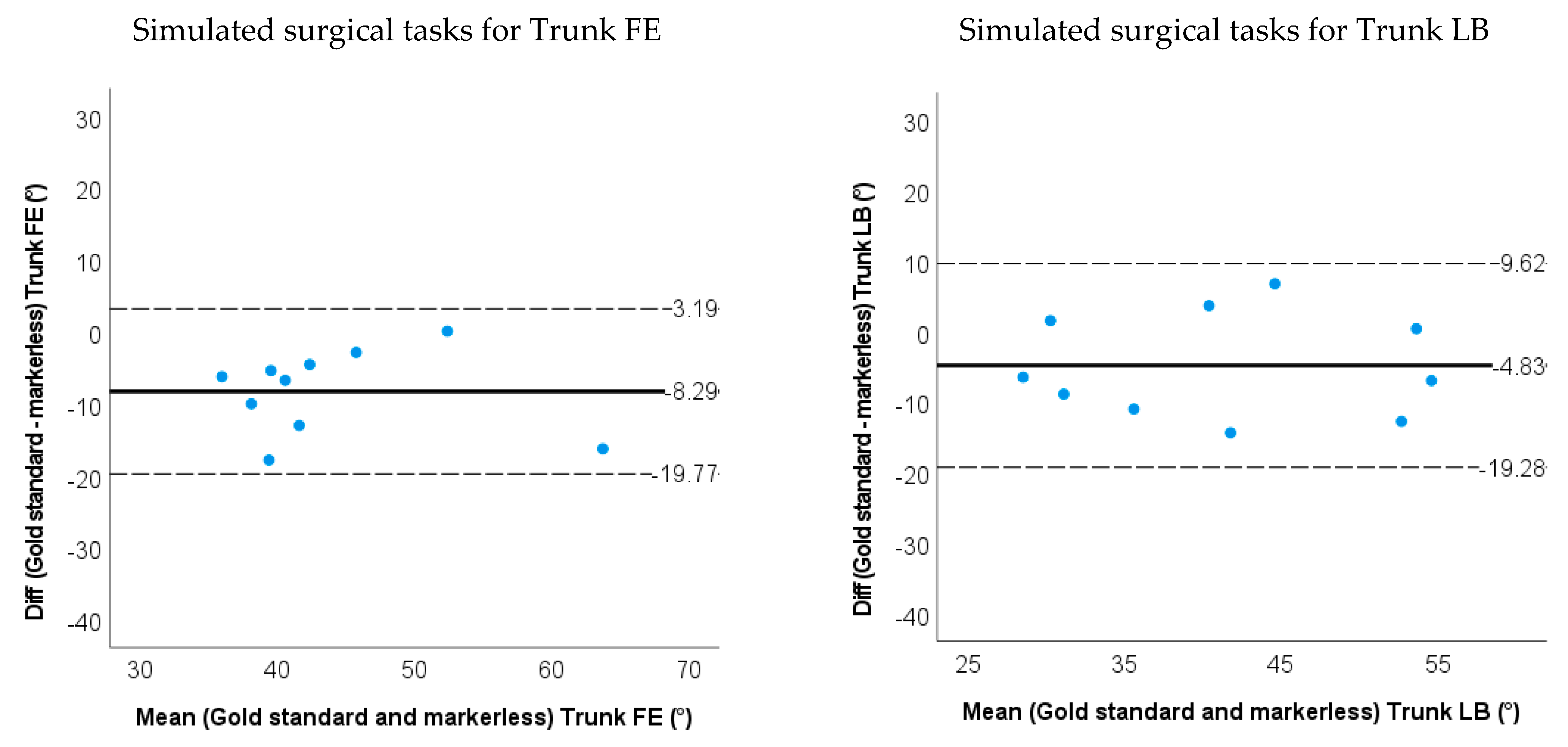

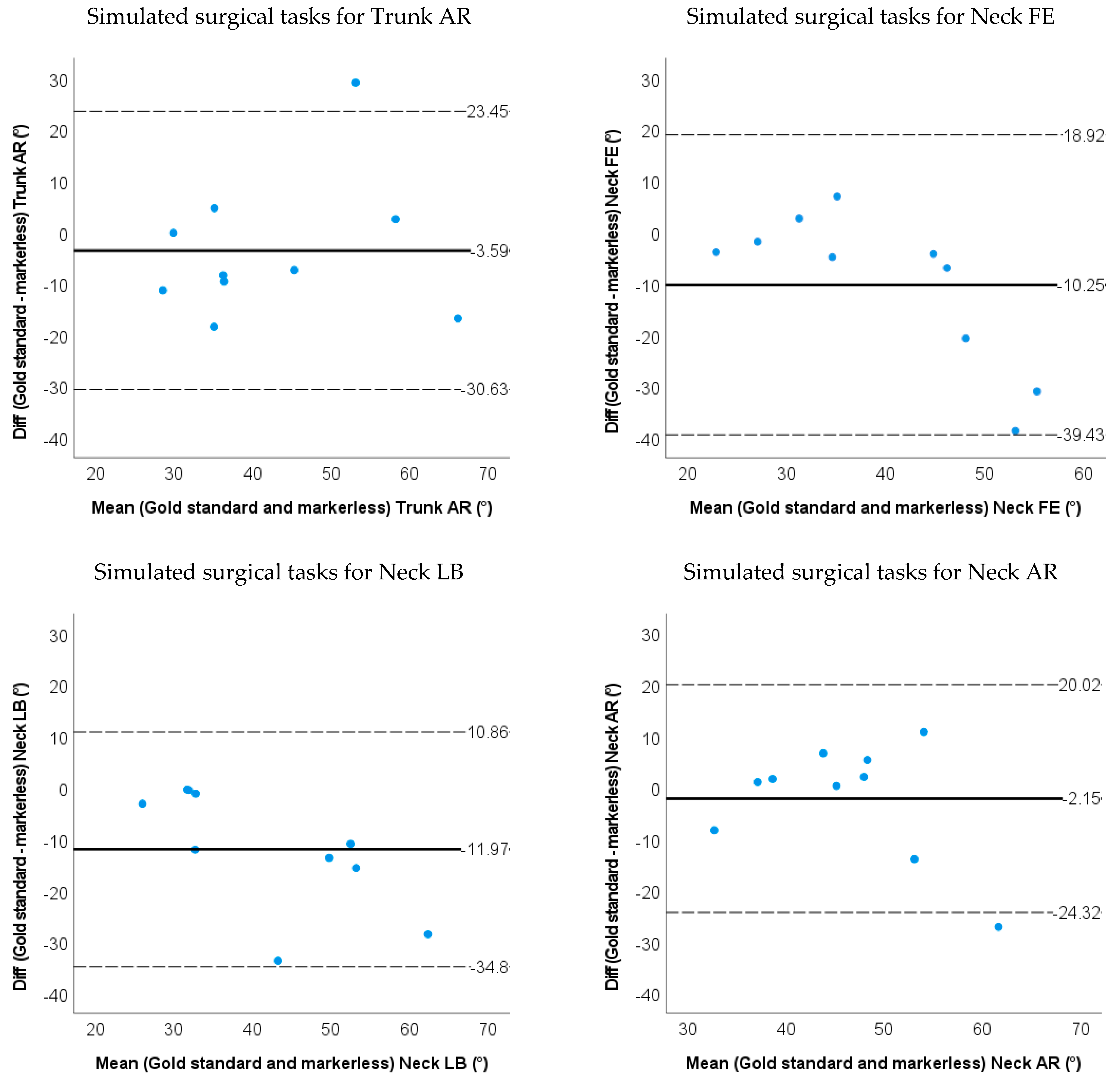

- Bland–Altman plots of the IMU and markerless methods for 3D neck and trunk ROM, used to show the limits of agreement and systematic biases.

- The intraclass correlation coefficient ICC (2,1) for ROM between the IMU/markerless-based method and the marker-based method to establish the validity of the system. ICCs were interpreted as follows: 0.90–1.00 as excellent, 0.70–0.89 as good, 0.40–0.69 as acceptable, and <0.40 as low correlation [36]. The level of significance was set at 0.05.
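As an illustration of the two agreement measures above, the following standard-library sketch computes the Bland–Altman bias with limits of agreement and ICC (2,1) from the two-way ANOVA mean squares given by Shrout and Fleiss [36]. It is not the authors' code. The function names are hypothetical, the limits of agreement are assumed to be the conventional bias ± 1.96 SD of the paired differences, and the ROM values in the example are invented.

```python
from statistics import mean, stdev


def bland_altman(test, reference):
    """Return (bias, lower LOA, upper LOA), assuming bias +/- 1.96 SD."""
    diffs = [t - r for t, r in zip(test, reference)]
    bias = mean(diffs)
    half_width = 1.96 * stdev(diffs)
    return bias, bias - half_width, bias + half_width


def icc_2_1(ratings):
    """ICC (2,1): two-way random effects, absolute agreement, single measure.

    ratings holds one row per participant and one column per system.
    """
    n = len(ratings)
    k = len(ratings[0])
    grand = mean(v for row in ratings for v in row)
    row_means = [mean(row) for row in ratings]
    col_means = [mean(row[j] for row in ratings) for j in range(k)]
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)
    ss_total = sum((v - grand) ** 2 for row in ratings for v in row)
    ss_error = ss_total - ss_rows - ss_cols
    msr = ss_rows / (n - 1)               # between-participants mean square
    msc = ss_cols / (k - 1)               # between-systems mean square
    mse = ss_error / ((n - 1) * (k - 1))  # residual mean square
    return (msr - mse) / (msr + (k - 1) * mse + k * (msc - mse) / n)


def interpret_icc(icc):
    """Map an ICC onto the bands used in this section."""
    if icc >= 0.90:
        return "excellent"
    if icc >= 0.70:
        return "good"
    if icc >= 0.40:
        return "acceptable"
    return "low"


if __name__ == "__main__":
    # Invented ROM values (degrees): IMU versus marker-based, four participants.
    rom_imu = [21.0, 34.0, 27.0, 40.0]
    rom_marker = [20.0, 32.0, 27.0, 37.0]
    print(bland_altman(rom_imu, rom_marker))
    icc = icc_2_1([list(pair) for pair in zip(rom_imu, rom_marker)])
    print(icc, interpret_icc(icc))
```

Because ICC (2,1) measures absolute agreement, a constant offset between systems lowers it through the between-systems mean square, which is why it complements the bias reported by the Bland–Altman analysis.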

3. Results

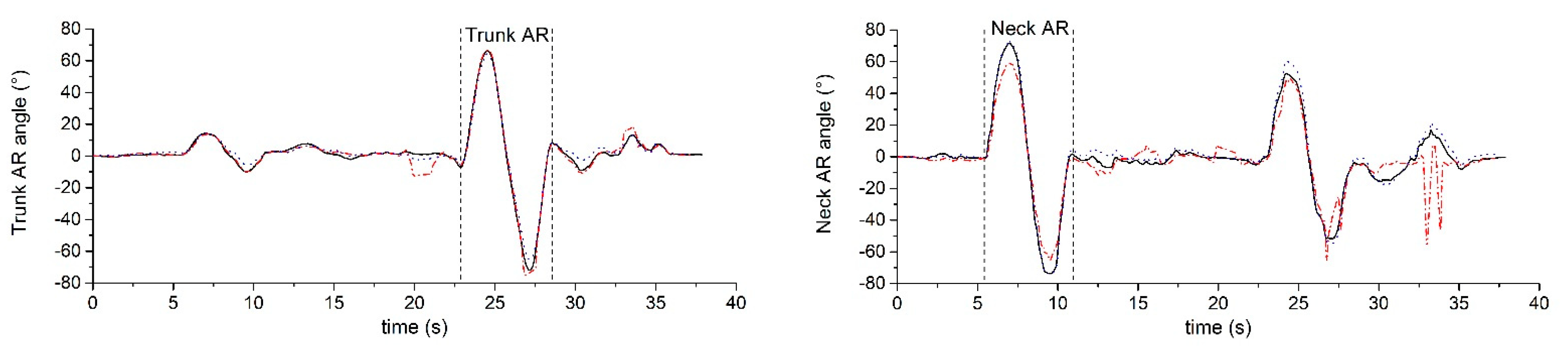

3.1. Accuracy and Validity for IMU-Based Neck and Trunk Kinematics

3.2. Accuracy and Validity for Markerless-Based Neck and Trunk Kinematics

4. Discussion

4.1. IMU Motion Capture Method

4.2. Markerless Motion Capture Method

4.3. Limitations and Recommendation

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Punnett, L.; Wegman, D.H. Work-Related Musculoskeletal Disorders: The Epidemiologic Evidence and the Debate. J. Electromyogr. Kinesiol. 2004, 14, 13–23.

- Dianat, I.; Bazazan, A.; Souraki Azad, M.A.; Salimi, S.S. Work-Related Physical, Psychosocial and Individual Factors Associated with Musculoskeletal Symptoms among Surgeons: Implications for Ergonomic Interventions. Appl. Ergon. 2018, 67, 115–124.

- Knudsen, M.L.; Ludewig, P.M.; Braman, J.P. Musculoskeletal Pain in Resident Orthopaedic Surgeons: Results of a Novel Survey. Iowa Orthop. J. 2014, 34, 190–196.

- Voss, R.K.; Chiang, Y.J.; Cromwell, K.D.; Urbauer, D.L.; Lee, J.E.; Cormier, J.N.; Stucky, C.C.H. Do No Harm, Except to Ourselves? A Survey of Symptoms and Injuries in Oncologic Surgeons and Pilot Study of an Intraoperative Ergonomic Intervention. J. Am. Coll. Surg. 2017, 224, 16–25.e1.

- Adams, S.R.; Hacker, M.R.; McKinney, J.L.; Elkadry, E.A.; Rosenblatt, P.L. Musculoskeletal Pain in Gynecologic Surgeons. J. Minim. Invasive Gynecol. 2013, 20, 656–660.

- Lietz, J.; Kozak, A.; Nienhaus, A. Prevalence and Occupational Risk Factors of Musculoskeletal Diseases and Pain among Dental Professionals in Western Countries: A Systematic Literature Review and Meta-Analysis. PLoS ONE 2018, 13, e0208628.

- Pejčić, N.; Petrović, V.; Marković, D.; Miličić, B.; Dimitrijević, I.I.; Perunović, N.; Čakić, S. Assessment of Risk Factors and Preventive Measures and Their Relations to Work-Related Musculoskeletal Pain among Dentists. Work 2017, 57, 573–593.

- Zarra, T.; Lambrianidis, T. Musculoskeletal Disorders amongst Greek Endodontists: A National Questionnaire Survey. Int. Endod. J. 2014, 47, 791–801.

- Meijsen, P.; Knibbe, H.J.J. Work-Related Musculoskeletal Disorders of Perioperative Personnel in the Netherlands. AORN J. 2007, 86, 193–208.

- Warren, N. Causes of Musculoskeletal Disorders in Dental Hygienists and Dental Hygiene Students: A Study of Combined Biomechanical and Psychosocial Risk Factors. Work 2010, 35, 441–454.

- Norasi, H.; Tetteh, E.; Money, S.R.; Davila, V.J.; Meltzer, A.J.; Morrow, M.M.; Fortune, E.; Mendes, B.C.; Hallbeck, M.S. Intraoperative Posture and Workload Assessment in Vascular Surgery. Appl. Ergon. 2021, 92, 103344.

- Wong, W.Y.; Wong, M.S.; Lo, K.H. Clinical Applications of Sensors for Human Posture and Movement Analysis: A Review. Prosthet. Orthot. Int. 2007, 31, 62–75.

- Bolink, S.A.A.N.; Naisas, H.; Senden, R.; Essers, H.; Heyligers, I.C.; Meijer, K.; Grimm, B. Validity of an Inertial Measurement Unit to Assess Pelvic Orientation Angles during Gait, Sit-Stand Transfers and Step-up Transfers: Comparison with an Optoelectronic Motion Capture System. Med. Eng. Phys. 2016, 38, 225–231.

- Parrington, L.; Jehu, D.A.; Fino, P.C.; Pearson, S.; El-Gohary, M.; King, L.A. Validation of an Inertial Sensor Algorithm to Quantify Head and Trunk Movement in Healthy Young Adults and Individuals with Mild Traumatic Brain Injury. Sensors 2018, 18, 4501.

- Digo, E.; Pierro, G.; Pastorelli, S.; Gastaldi, L. Tilt-Twist Method Using Inertial Sensors to Assess Spinal Posture during Gait; Springer International Publishing: New York, NY, USA, 2020; Volume 980, ISBN 9783030196479.

- Michaud, F.; Lugrís, U.; Cuadrado, J. Determination of the 3D Human Spine Posture from Wearable Inertial Sensors and a Multibody Model of the Spine. Sensors 2022, 22, 4796.

- Morrow, M.M.B.; Lowndes, B.; Fortune, E.; Kaufman, K.R.; Hallbeck, M.S. Validation of Inertial Measurement Units for Upper Body Kinematics. J. Appl. Biomech. 2017, 33, 227–232.

- Xsens Technologies B.V. MVN User Manual; Xsens Technologies B.V.: Enschede, The Netherlands, 2021; 162p.

- Colyer, S.L.; Evans, M.; Cosker, D.P.; Salo, A.I.T. A Review of the Evolution of Vision-Based Motion Analysis and the Integration of Advanced Computer Vision Methods Towards Developing a Markerless System. Sports Med.-Open 2018, 4, 24.

- Nath, T.; Mathis, A.; Chen, A.C.; Patel, A.; Bethge, M.; Mathis, M.W. Using DeepLabCut for 3D Markerless Pose Estimation across Species and Behaviors. Nat. Protoc. 2019, 14, 2152–2176.

- Karashchuk, P.; Rupp, K.L.; Dickinson, E.S.; Walling-Bell, S.; Sanders, E.; Azim, E.; Brunton, B.W.; Tuthill, J.C. Anipose: A Toolkit for Robust Markerless 3D Pose Estimation. Cell Rep. 2021, 36, 109730.

- Cronin, N.J.; Rantalainen, T.; Ahtiainen, J.P.; Hynynen, E.; Waller, B. Markerless 2D Kinematic Analysis of Underwater Running: A Deep Learning Approach. J. Biomech. 2019, 87, 75–82.

- Van den Bogaart, M.; Bruijn, S.M.; Spildooren, J.; van Dieën, J.H.; Meyns, P. Using Deep Learning to Track 3D Kinematics. Gait Posture 2020, 81, 369–370.

- Ota, M.; Tateuchi, H.; Hashiguchi, T.; Kato, T.; Ogino, Y.; Yamagata, M.; Ichihashi, N. Verification of Reliability and Validity of Motion Analysis Systems during Bilateral Squat Using Human Pose Tracking Algorithm. Gait Posture 2020, 80, 62–67.

- Nakano, N.; Sakura, T.; Ueda, K.; Omura, L.; Kimura, A.; Iino, Y.; Fukashiro, S.; Yoshioka, S. Evaluation of 3D Markerless Motion Capture Accuracy Using OpenPose with Multiple Video Cameras. Front. Sports Act. Living 2020, 2, 50.

- Zago, M.; Luzzago, M.; Marangoni, T.; de Cecco, M.; Tarabini, M.; Galli, M. 3D Tracking of Human Motion Using Visual Skeletonization and Stereoscopic Vision. Front. Bioeng. Biotechnol. 2020, 8, 181.

- Vicon Motion Systems Ltd. Vicon® Plug-in Gait Reference Guide; Vicon Motion Systems Ltd.: Oxford, UK, 2017; 164p.

- An, G.H.; Lee, S.; Seo, M.W.; Yun, K.; Cheong, W.S.; Kang, S.J. Charuco Board-Based Omnidirectional Camera Calibration Method. Electronics 2018, 7, 421.

- Box, G.E.P.; Jenkins, G.M.; Reinsel, G.C.; Ljung, G.M. Time Series Analysis: Forecasting and Control; John Wiley & Sons, Inc.: Hoboken, NJ, USA, 1994; ISBN 0-13-060774-6.

- Burkhart, K.; Grindle, D.; Bouxsein, M.L.; Anderson, D.E. Between-Session Reliability of Subject-Specific Musculoskeletal Models of the Spine Derived from Optoelectronic Motion Capture Data. J. Biomech. 2020, 112, 110044.

- Delp, S.L.; Anderson, F.C.; Arnold, A.S.; Loan, P.; Habib, A.; John, C.T.; Guendelman, E.; Thelen, D.G. OpenSim: Open-Source Software to Create and Analyze Dynamic Simulations of Movement. IEEE Trans. Biomed. Eng. 2007, 54, 1940–1950.

- Al Borno, M.; O’Day, J.; Ibarra, V.; Dunne, J.; Seth, A.; Habib, A.; Ong, C.; Hicks, J.; Uhlrich, S.; Delp, S. OpenSense: An Open-Source Toolbox for Inertial-Measurement-Unit-Based Measurement of Lower Extremity Kinematics over Long Durations. J. Neuroeng. Rehabil. 2022, 19, 22.

- Hicks, J.L.; Uchida, T.K.; Seth, A.; Rajagopal, A.; Delp, S.L. Is My Model Good Enough? Best Practices for Verification and Validation of Musculoskeletal Models and Simulations of Movement. J. Biomech. Eng. 2015, 137, 020905.

- Xsens Technologies. MT Manager User Manual Revision 2020.A; Xsens Technologies: Enschede, The Netherlands, 2020.

- Sers, R.; Forrester, S.; Moss, E.; Ward, S.; Ma, J.; Zecca, M. Validity of the Perception Neuron Inertial Motion Capture System for Upper Body Motion Analysis. Meas. J. Int. Meas. Confed. 2020, 149, 107024.

- Shrout, P.E.; Fleiss, J.L. Intraclass Correlations: Uses in Assessing Rater Reliability. Psychol. Bull. 1979, 86, 420–428.

- McGinley, J.L.; Baker, R.; Wolfe, R.; Morris, M.E. The Reliability of Three-Dimensional Kinematic Gait Measurements: A Systematic Review. Gait Posture 2009, 29, 360–369.

- Teufl, W.; Miezal, M.; Taetz, B.; Fröhlich, M.; Bleser, G. Validity, Test-Retest Reliability and Long-Term Stability of Magnetometer Free Inertial Sensor Based 3D Joint Kinematics. Sensors 2018, 18, 1980.

- Kang, G.E.; Gross, M.M. Concurrent Validation of Magnetic and Inertial Measurement Units in Estimating Upper Body Posture during Gait. Meas. J. Int. Meas. Confed. 2016, 82, 240–245.

| Task | Set-Up | Instructions |
|---|---|---|
| Picking up small objects, transferring them and putting them down | An operating table was placed in front of the participant. The height of the operating table was adjusted to the most comfortable position for participants. A box with tilted containers was placed on the operating table. The function of tilted containers is to block the vision of the participants so that they need to look down to see the forceps. Five small objects (bottle caps) were placed inside the box, two forceps were placed on the left table. | (1) Pick up both forceps on the left side of the table (one in each hand). (2) Use the forceps to grasp a bottle cap with the left hand from the left tilted container. (3) Transfer the object from the left-hand forceps to the right-hand forceps. (4) Put the bottle cap into the right tilted container. (5) Put the forceps down at the original position. (6) Get back to normal position. |

| Method | Task | Measure | Trunk FE | Trunk LB | Trunk AR | Neck FE | Neck LB | Neck AR |
|---|---|---|---|---|---|---|---|---|
| IMU method | SP-movements | RMSE | 2.3 (1.3) | 2.1 (0.9) | 4.7 (1.7) | 3.7 (2.2) | 2.0 (1.0) | 2.2 (1.1) |
| IMU method | SP-movements | ROM difference | 2.3 (2.1) | 2.2 (1.2) | 5.0 (2.9) | 5.4 (4.1) | 0.2 (2.6) | 0.4 (2.4) |
| IMU method | SP-movements | LOA | −1.9~6.4 | −0.2~4.5 | −0.6~10.6 | −13.5~2.74 | −4.9~5.3 | −5.1~4.3 |
| IMU method | SP-movements | Relative ROM error | 0.035 | 0.053 | 0.070 | 0.11 | 0.0057 | 0.0058 |
| IMU method | Simulated surgery task | RMSE | 2.3 (1.1) | 2.5 (1.2) | 3.6 (1.8) | 3.6 (2.2) | 3.9 (2.0) | 3.6 (2.1) |
| IMU method | Simulated surgery task | ROM difference | 1.7 (1.9) | 0.3 (2.8) | 2.9 (4.2) | 0.8 (4.1) | 0.3 (2.8) | 2.2 (3.0) |
| IMU method | Simulated surgery task | LOA | −2.1~5.4 | −5.7~5.2 | −5.3~11.1 | −7.2~8.8 | −5.2~5.8 | −8.1~3.7 |
| IMU method | Simulated surgery task | Relative ROM error | 0.043 | 0.0077 | 0.072 | 0.024 | 0.0084 | 0.048 |
| Markerless method | SP-movements | RMSE | 9.6 (12.5) | 4.5 (4.0) | 14.9 (10.1) | 4.7 (3.0) | 7.6 (3.8) | 15.2 (8.2) |
| Markerless method | SP-movements | ROM difference | 6.4 (7.1) | 5.5 (12.1) | 11.7 (13.5) | 2.9 (8.5) | 2.8 (16.0) | 18.5 (10.4) |
| Markerless method | SP-movements | LOA | −7.5~20.4 | −29.2~18.2 | −14.8~38.1 | −13.6~19.5 | −28.5~34.1 | −1.9~38.8 |
| Markerless method | SP-movements | Relative ROM error | 0.10 | 0.13 | 0.16 | 0.058 | 0.080 | 0.26 |
| Markerless method | Simulated surgery task | RMSE | 5.5 (2.1) | 5.6 (3.3) | 8.7 (4.1) | 6.1 (3.2) | 7.0 (4.2) | 7.3 (2.7) |
| Markerless method | Simulated surgery task | ROM difference | 8.3 (5.8) | 4.8 (7.13) | 3.6 (13.8) | 10.3 (14.7) | 12.0 (11.1) | 2.2 (9.9) |
| Markerless method | Simulated surgery task | LOA | −19.8~3.2 | −19.3~9.6 | −30.6~23.5 | −39.4~18.9 | −34.8~10.9 | −24.3~20.0 |
| Markerless method | Simulated surgery task | Relative ROM error | 0.21 | 0.12 | 0.089 | 0.31 | 0.33 | 0.048 |

| Method | Task | Statistic | Trunk FE | Trunk LB | Trunk AR | Neck FE | Neck LB | Neck AR |
|---|---|---|---|---|---|---|---|---|
| IMU method | SP-movements | ICC (2,1) | 0.98 | 0.95 | 0.92 | 0.90 | 0.99 | 0.97 |
| IMU method | SP-movements | 95% CI (p value) | 0.74~1.00 (p < 0.001) | 0.10~0.99 (p < 0.001) | 0.03~0.98 (p < 0.001) | 0.16~0.98 (p < 0.001) | 0.96~1.00 (p < 0.001) | 0.88~0.99 (p < 0.001) |
| IMU method | Simulated surgery task | ICC (2,1) | 0.96 | 0.97 | 0.95 | 0.80 | 0.96 | 0.95 |
| IMU method | Simulated surgery task | 95% CI (p value) | 0.72~0.99 (p < 0.001) | 0.88~0.99 (p < 0.001) | 0.74~0.99 (p < 0.001) | 0.39~0.95 (p < 0.01) | 0.83~0.99 (p < 0.001) | 0.73~0.99 (p < 0.001) |
| Markerless method | SP-movements | ICC (2,1) | 0.83 | 0.59 | 0.08 | 0.86 | 0.09 | 0.28 |
| Markerless method | SP-movements | 95% CI (p value) | 0.25~0.96 (p < 0.001) | 0.041~0.88 (p < 0.05) | −0.25~0.56 (p = 0.351) | 0.57~0.96 (p < 0.001) | −0.61~0.67 (p = 0.408) | −0.10~0.72 (p < 0.05) |
| Markerless method | Simulated surgery task | ICC (2,1) | 0.55 | 0.70 | 0.56 | 0.31 | 0.47 | 0.42 |
| Markerless method | Simulated surgery task | 95% CI (p value) | −0.11~0.88 (p < 0.01) | 0.18~0.92 (p < 0.01) | −0.06~0.87 (p < 0.05) | −0.19~0.75 (p = 0.122) | −0.11~0.83 (p < 0.05) | −0.27~0.82 (p = 0.1) |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zhang, C.; Greve, C.; Verkerke, G.J.; Roossien, C.C.; Houdijk, H.; Hijmans, J.M. Pilot Validation Study of Inertial Measurement Units and Markerless Methods for 3D Neck and Trunk Kinematics during a Simulated Surgery Task. Sensors 2022, 22, 8342. https://doi.org/10.3390/s22218342
