Classification of Activities of Daily Living Based on Grasp Dynamics Obtained from a Leap Motion Controller

Abstract

1. Introduction

2. Materials and Methods

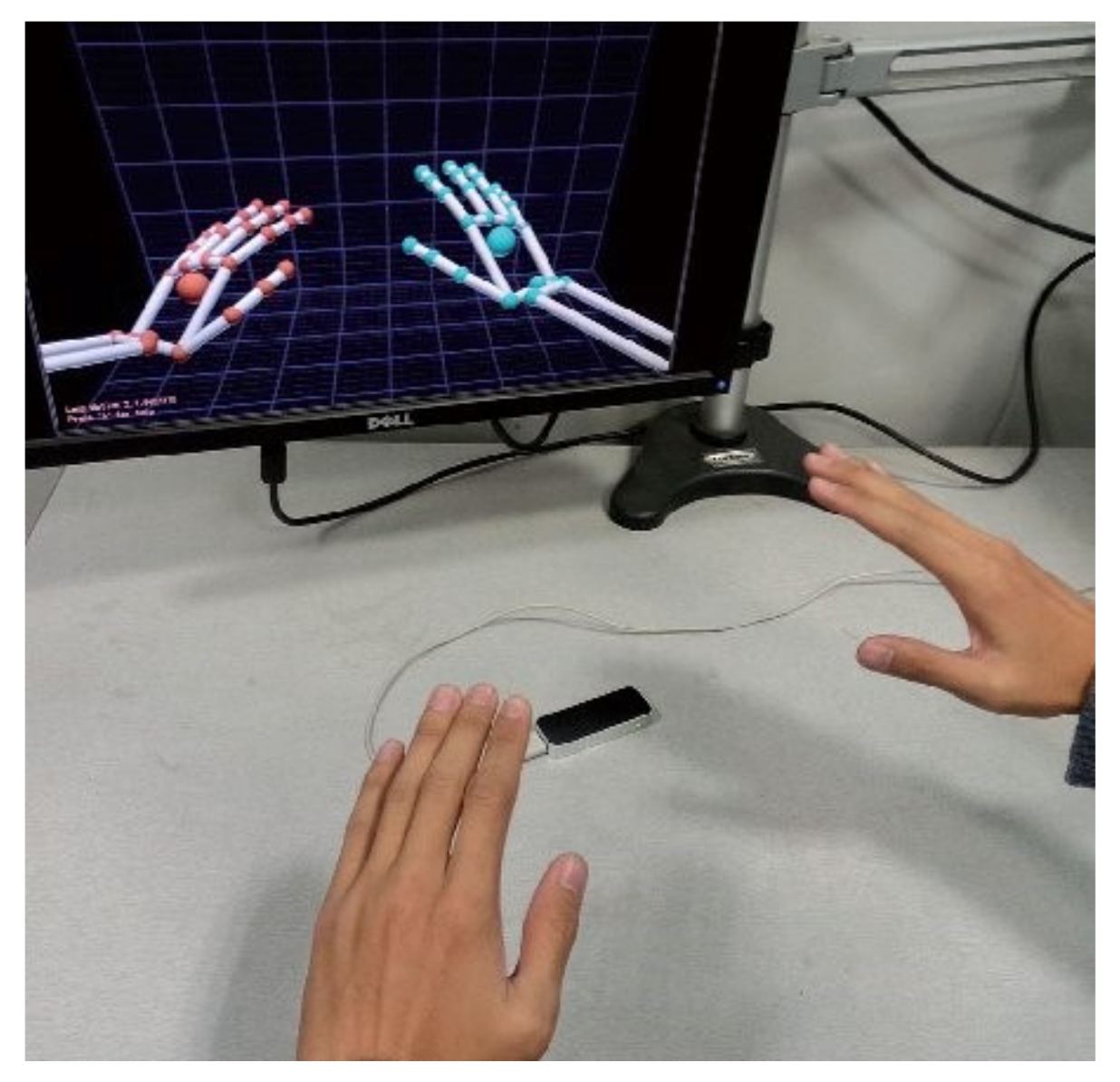

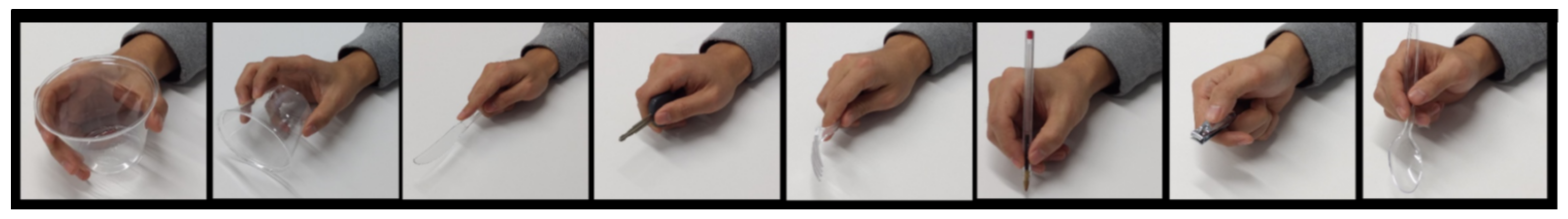

2.1. Subjects and Data Acquisition

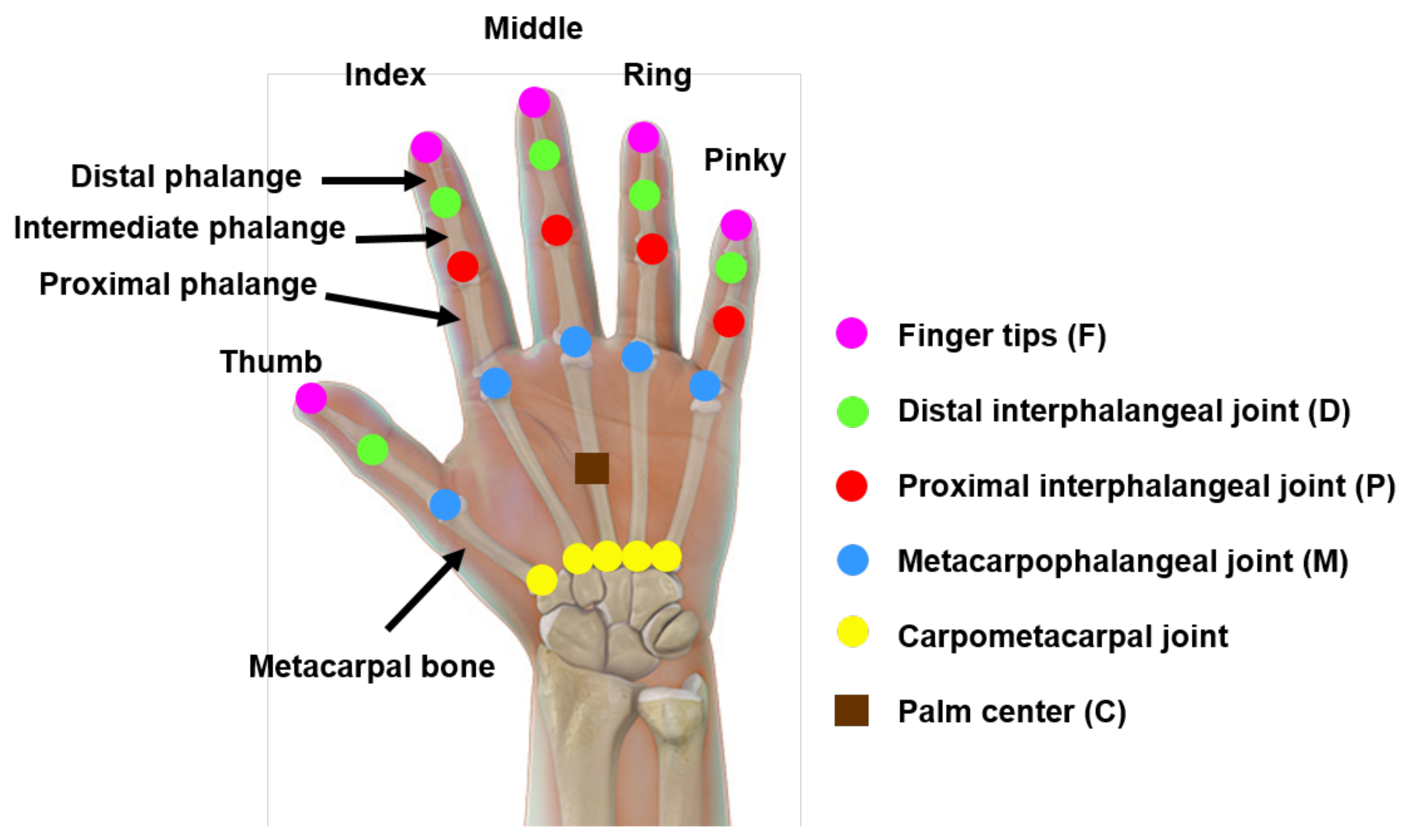

2.2. Preprocessing

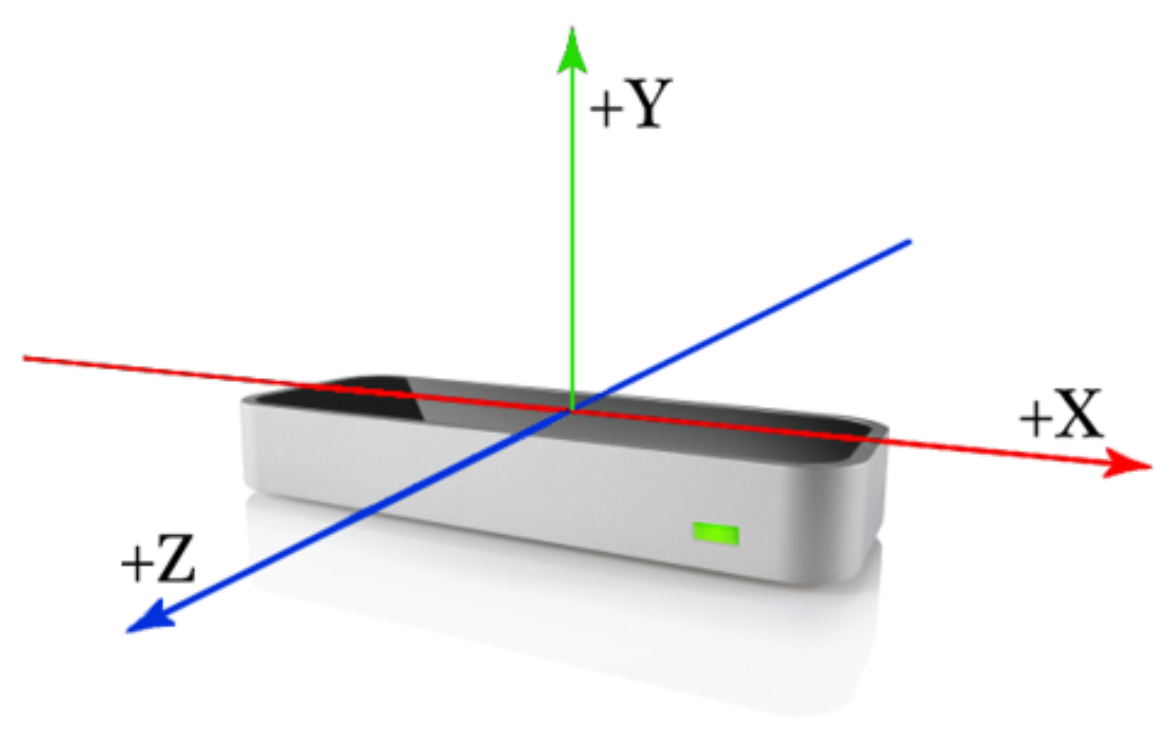

2.2.1. Change of Basis

2.2.2. Filtering

2.3. Features and Classifiers

2.3.1. Feature Extraction

- Adjacent Fingertips Angle (AFA): This feature measures the angle between each pair of adjacent fingertip vectors, i.e., the vectors from the palm center to the fingertips. The AFA is calculated by Equation (3), where $F_i$ represents the location of the $i$-th fingertip. The angles were normalized to the interval [0, 1] by dividing them by $\pi$. Lu et al. [61] achieved a classification accuracy of 74.9% by combining this feature with the hidden conditional neural field (HCNF) classifier.

- Adjacent Tips Distance (ATD): This feature is the Euclidean distance between each pair of adjacent fingertips and is calculated by Equation (4), in which $F_i$ represents the fingertip location. Because the five fingers of a hand form four adjacent pairs, each hand yields four ATDs. This feature was normalized to the interval [0, 1] by dividing the calculated distances by M. Lu et al. [61] achieved an accuracy of 74.9% by combining this feature with HCNF.

- Normalized Palm-Tip Distance (NPTD): This feature is the Euclidean distance between the palm center and each fingertip. The NPTD is calculated by Equation (5), where $F_i$ represents the fingertip location and $C$ is the location of the palm center. This feature was normalized to the interval [0, 1] by dividing the distance by M. Lu et al. [61] achieved an accuracy of 81.9% by combining this feature with HCNF, while Marin et al. [63] achieved an accuracy of 76.1% using a combination of a Support Vector Machine (SVM) with a Radial Basis Function (RBF) kernel and the Random Forest (RF) algorithm.

- Fingertip-$\mathbf{h}$ Angle (FHA): This feature is the angle between $\mathbf{h}$, the finger direction of the hand coordinate system, and the vector from the palm center to the projection of each fingertip onto the palm plane, as presented in Figure 8. FHA is calculated by Equation (7), in which $F_i^{\pi}$ is the projection of $F_i$ onto the palm plane, i.e., the plane that is orthogonal to the palm normal $\mathbf{n}$ and contains the palm center $C$. Dividing the angles by $\pi$ normalizes this feature to the interval [0, 1]. Lu et al. [61] and Marin et al. [63] achieved accuracies of 80.3% and 74.2%, respectively, when classifying FHA features with HCNF and with the combination of RBF-SVM and RF.

- Fingertip Elevation (FTE): Another geometrical feature is the fingertip elevation, which is the signed distance of each fingertip from the palm plane. The FTE is calculated by Equation (8), in which “sgn” is the sign function and $\mathbf{n}$ is the normal vector to the palm plane. As in FHA, $F_i^{\pi}$ is the projection of $F_i$ onto the palm plane. Lu et al. [61] achieved an accuracy of 78.7% by combining this feature with HCNF, while Marin et al. [63] achieved an accuracy of 73.1% when classifying FTE features with the combination of RBF-kernel SVM and RF.
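The five geometric features above can be sketched in plain Python as follows. This is a minimal illustration under stated assumptions, not the authors' implementation. It assumes that the fingertip positions $F_i$ (ordered thumb to pinky), the palm center $C$, the palm normal $\mathbf{n}$, and the finger direction $\mathbf{h}$ are already available as 3-tuples in the hand coordinate system. The normalization constant M is a caller-supplied parameter because its value is not given in this section, and FTE is left unnormalized because the text does not state a normalization for it. The formulas in the comments are one reading of Equations (3), (4), (5), (7), and (8) that is consistent with the definitions above. The function names and the toy input are hypothetical.

```python
import math
from typing import Dict, List, Sequence, Tuple

Vec = Tuple[float, float, float]


def sub(a: Vec, b: Vec) -> Vec:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def dot(a: Vec, b: Vec) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def norm(a: Vec) -> float:
    return math.sqrt(dot(a, a))


def angle(a: Vec, b: Vec) -> float:
    """Unsigned angle in [0, pi] between two non-zero vectors."""
    cos_ab = dot(a, b) / (norm(a) * norm(b))
    return math.acos(max(-1.0, min(1.0, cos_ab)))  # clamp rounding error


def sgn(x: float) -> float:
    """Sign function: -1.0, 0.0, or 1.0."""
    return float((x > 0) - (x < 0))


def project_onto_palm_plane(f: Vec, c: Vec, n: Vec) -> Vec:
    """Orthogonal projection F_i^pi of f onto the plane through c with normal n."""
    t = dot(sub(f, c), n) / dot(n, n)
    return (f[0] - t * n[0], f[1] - t * n[1], f[2] - t * n[2])


def geometric_features(
    tips: Sequence[Vec], c: Vec, n: Vec, h: Vec, m: float
) -> Dict[str, List[float]]:
    """tips: five fingertip positions of one hand (assumed thumb to pinky).

    c: palm center C, n: palm-plane normal, h: finger direction,
    m: normalization constant M (hypothetical parameter here).
    """
    rel = [sub(f, c) for f in tips]  # palm-center-to-fingertip vectors
    proj = [project_onto_palm_plane(f, c, n) for f in tips]
    return {
        # AFA_i = angle(F_i - C, F_{i+1} - C) / pi, four values per hand
        "AFA": [angle(rel[i], rel[i + 1]) / math.pi for i in range(4)],
        # ATD_i = ||F_i - F_{i+1}|| / M, four values per hand
        "ATD": [norm(sub(tips[i], tips[i + 1])) / m for i in range(4)],
        # NPTD_i = ||F_i - C|| / M, five values per hand
        "NPTD": [norm(r) / m for r in rel],
        # FHA_i = angle(F_i^pi - C, h) / pi
        "FHA": [angle(sub(p, c), h) / math.pi for p in proj],
        # FTE_i = sgn((F_i - F_i^pi) . n) * ||F_i - F_i^pi||, left unnormalized
        "FTE": [sgn(dot(sub(f, p), n)) * norm(sub(f, p)) for f, p in zip(tips, proj)],
    }


# Toy (non-anatomical) hand: palm center at the origin, palm plane z = 0.
geo = geometric_features(
    tips=[(1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (-1.0, 0.0, 0.0),
          (0.0, -1.0, 0.0), (0.0, 2.0, 1.0)],
    c=(0.0, 0.0, 0.0), n=(0.0, 0.0, 1.0), h=(0.0, 1.0, 0.0), m=1.0,
)
```

Because `math.acos` returns angles in [0, π], dividing by π maps AFA and FHA into [0, 1], matching the normalization described above. In the toy input only the fifth fingertip lies off the palm plane, so it is the only one with a non-zero FTE.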

2.3.2. Classification

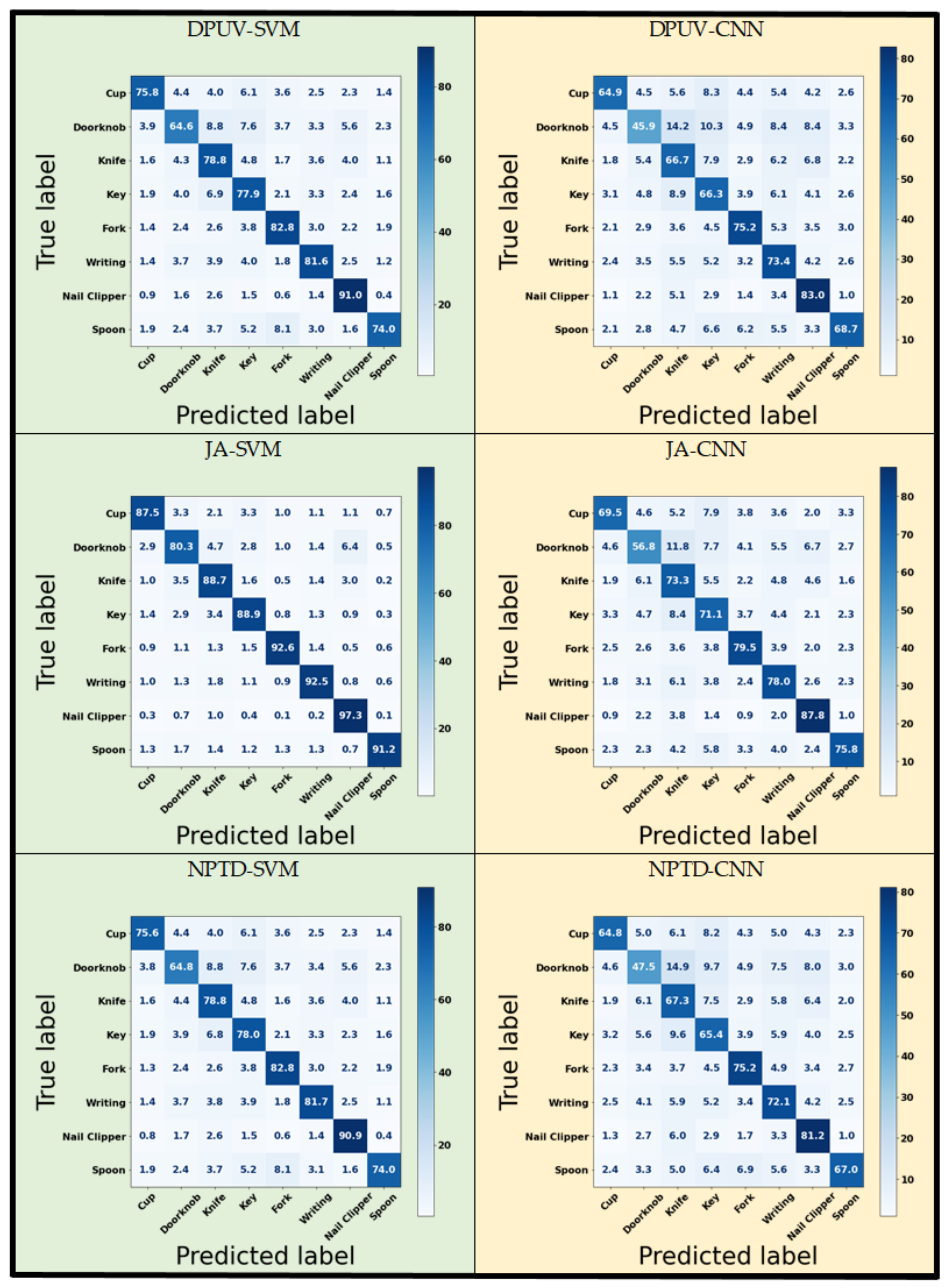

3. Results and Discussion

4. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Acknowledgments

Conflicts of Interest

References

1. Cramer, S.C.; Nelles, G.; Benson, R.R.; Kaplan, J.D.; Parker, R.A.; Kwong, K.K.; Kennedy, D.N.; Finklestein, S.P.; Rosen, B.R. A functional MRI study of subjects recovered from hemiparetic stroke. Stroke 1997, 28, 2518–2527.

2. Hatem, S.M.; Saussez, G.; Della Faille, M.; Prist, V.; Zhang, X.; Dispa, D.; Bleyenheuft, Y. Rehabilitation of motor function after stroke: A multiple systematic review focused on techniques to stimulate upper extremity recovery. Front. Hum. Neurosci. 2016, 10, 442.

3. Langhorne, P.; Bernhardt, J.; Kwakkel, G. Stroke rehabilitation. Lancet 2011, 377, 1693–1702.

4. Available online: http://www.strokeassociation.org/STROKEORG/AboutStroke/Impact-of-Stroke-Stroke-statistics_UCM_310728_Article.jsp#.WNPkhvnytAh (accessed on 12 July 2017).

5. Duruoz, M.T. Hand Function; Springer: Berlin/Heidelberg, Germany, 2016.

6. Demain, S.; Wiles, R.; Roberts, L.; McPherson, K. Recovery plateau following stroke: Fact or fiction? Disabil. Rehabil. 2006, 28, 815–821.

7. Lennon, S. Physiotherapy practice in stroke rehabilitation: A survey. Disabil. Rehabil. 2003, 25, 455–461.

8. Page, S.J.; Gater, D.R.; Bach-y Rita, P. Reconsidering the motor recovery plateau in stroke rehabilitation. Arch. Phys. Med. Rehabil. 2004, 85, 1377–1381.

9. Matheus, K.; Dollar, A.M. Benchmarking grasping and manipulation: Properties of the objects of daily living. In Proceedings of the 2010 IEEE/RSJ International Conference on Intelligent Robots and Systems, Taipei, Taiwan, 18–22 October 2010; IEEE: Piscataway, NJ, USA, 2010; pp. 5020–5027.

10. Katz, S. Assessing self-maintenance: Activities of daily living, mobility, and instrumental activities of daily living. J. Am. Geriatr. Soc. 1983, 31, 721–727.

11. Dollar, A.M. Classifying human hand use and the activities of daily living. In The Human Hand as an Inspiration for Robot Hand Development; Springer: Berlin/Heidelberg, Germany, 2014; pp. 201–216.

12. Lawton, M.P.; Brody, E.M. Assessment of older people: Self-maintaining and instrumental activities of daily living. Gerontologist 1969, 9, 179–186.

13. Mohammed, H.I.; Waleed, J.; Albawi, S. An Inclusive Survey of Machine Learning based Hand Gestures Recognition Systems in Recent Applications. In Proceedings of the IOP Conference Series: Materials Science and Engineering, Sanya, China, 12–14 November 2021; IOP Publishing: Bristol, UK, 2021; Volume 1076, p. 012047.

14. Allevard, T.; Benoit, E.; Foulloy, L. Hand posture recognition with the fuzzy glove. In Modern Information Processing; Elsevier: Amsterdam, The Netherlands, 2006; pp. 417–427.

15. Garg, P.; Aggarwal, N.; Sofat, S. Vision based hand gesture recognition. Int. J. Comput. Inf. Eng. 2009, 3, 186–191.

16. Alonso, D.G.; Teyseyre, A.; Soria, A.; Berdun, L. Hand gesture recognition in real world scenarios using approximate string matching. Multimed. Tools Appl. 2020, 79, 20773–20794.

17. Stinghen Filho, I.A.; Gatto, B.B.; Pio, J.; Chen, E.N.; Junior, J.M.; Barboza, R. Gesture recognition using leap motion: A machine learning-based controller interface. In Proceedings of the 2016 7th International Conference on Sciences of Electronics, Technologies of Information and Telecommunications (SETIT), Hammamet, Tunisia, 18–20 December 2016; IEEE: Piscataway, NJ, USA, 2016.

18. Chuan, C.H.; Regina, E.; Guardino, C. American sign language recognition using leap motion sensor. In Proceedings of the 2014 13th International Conference on Machine Learning and Applications, Detroit, MI, USA, 3–5 December 2014; IEEE: Piscataway, NJ, USA, 2014; pp. 541–544.

19. Chong, T.W.; Lee, B.G. American sign language recognition using leap motion controller with machine learning approach. Sensors 2018, 18, 3554.

20. Mohandes, M.; Aliyu, S.; Deriche, M. Arabic sign language recognition using the leap motion controller. In Proceedings of the 2014 IEEE 23rd International Symposium on Industrial Electronics (ISIE), Istanbul, Turkey, 1–4 June 2014; IEEE: Piscataway, NJ, USA, 2014; pp. 960–965.

21. Hisham, B.; Hamouda, A. Arabic Static and Dynamic Gestures Recognition Using Leap Motion. J. Comput. Sci. 2017, 13, 337–354.

22. Elons, A.; Ahmed, M.; Shedid, H.; Tolba, M. Arabic sign language recognition using leap motion sensor. In Proceedings of the 2014 9th International Conference on Computer Engineering & Systems (ICCES), Vancouver, BC, Canada, 22–24 August 2014; IEEE: Piscataway, NJ, USA, 2014; pp. 368–373.

23. Hisham, B.; Hamouda, A. Arabic sign language recognition using Ada-Boosting based on a leap motion controller. Int. J. Inf. Technol. 2021, 13, 1221–1234.

24. Karthick, P.; Prathiba, N.; Rekha, V.; Thanalaxmi, S. Transforming Indian sign language into text using leap motion. Int. J. Innov. Res. Sci. Eng. Technol. 2014, 3, 5.

25. Kumar, P.; Gauba, H.; Roy, P.P.; Dogra, D.P. A multimodal framework for sensor based sign language recognition. Neurocomputing 2017, 259, 21–38.

26. Kumar, P.; Saini, R.; Behera, S.K.; Dogra, D.P.; Roy, P.P. Real-time recognition of sign language gestures and air-writing using leap motion. In Proceedings of the 2017 Fifteenth IAPR International Conference on Machine Vision Applications (MVA), Nagoya, Japan, 8–12 May 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 157–160.

27. Zhi, D.; de Oliveira, T.E.A.; da Fonseca, V.P.; Petriu, E.M. Teaching a robot sign language using vision-based hand gesture recognition. In Proceedings of the 2018 IEEE International Conference on Computational Intelligence and Virtual Environments for Measurement Systems and Applications (CIVEMSA), Ottawa, ON, Canada, 12–14 June 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 1–6.

28. Anwar, A.; Basuki, A.; Sigit, R.; Rahagiyanto, A.; Zikky, M. Feature extraction for indonesian sign language (SIBI) using leap motion controller. In Proceedings of the 2017 21st International Computer Science and Engineering Conference (ICSEC), Bangkok, Thailand, 15–18 November 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 1–5.

29. Nájera, L.O.R.; Sánchez, M.L.; Serna, J.G.G.; Tapia, R.P.; Llanes, J.Y.A. Recognition of mexican sign language through the leap motion controller. In Proceedings of the International Conference on Scientific Computing (CSC), Albuquerque, NM, USA, 10–12 October 2016; p. 147.

30. Simos, M.; Nikolaidis, N. Greek sign language alphabet recognition using the leap motion device. In Proceedings of the 9th Hellenic Conference on Artificial Intelligence, Thessaloniki, Greece, 18–20 May 2016; pp. 1–4.

31. Potter, L.E.; Araullo, J.; Carter, L. The leap motion controller: A view on sign language. In Proceedings of the 25th Australian Computer-Human Interaction Conference: Augmentation, Application, Innovation, Collaboration, Adelaide, Australia, 25–29 November 2013; pp. 175–178.

32. Guzsvinecz, T.; Szucs, V.; Sik-Lanyi, C. Suitability of the kinect sensor and leap motion controller—A literature review. Sensors 2019, 19, 1072.

33. Castañeda, M.A.; Guerra, A.M.; Ferro, R. Analysis on the gamification and implementation of Leap Motion Controller in the IED Técnico industrial de Tocancipá. Interact. Technol. Smart Educ. 2018, 15, 155–164.

34. Bassily, D.; Georgoulas, C.; Guettler, J.; Linner, T.; Bock, T. Intuitive and adaptive robotic arm manipulation using the leap motion controller. In Proceedings of the ISR/Robotik 2014; 41st International Symposium on Robotics, Munich, Germany, 2–3 June 2014; VDE: Offenbach, Germany, 2014; pp. 1–7.

35. Chen, S.; Ma, H.; Yang, C.; Fu, M. Hand gesture based robot control system using leap motion. In Proceedings of the International Conference on Intelligent Robotics and Applications, Portsmouth, UK, 24–27 August 2015; Springer: Berlin/Heidelberg, Germany, 2015; pp. 581–591.

36. Siddiqui, U.A.; Ullah, F.; Iqbal, A.; Khan, A.; Ullah, R.; Paracha, S.; Shahzad, H.; Kwak, K.S. Wearable-sensors-based platform for gesture recognition of autism spectrum disorder children using machine learning algorithms. Sensors 2021, 21, 3319.

37. Ameur, S.; Khalifa, A.B.; Bouhlel, M.S. Hand-gesture-based touchless exploration of medical images with leap motion controller. In Proceedings of the 2020 17th International Multi-Conference on Systems, Signals & Devices (SSD), Marrakech, Morocco, 28–1 March 2017; IEEE: Piscataway, NJ, USA, 2020; pp. 6–11.

38. Karashanov, A.; Manolova, A.; Neshov, N. Application for hand rehabilitation using leap motion sensor based on a gamification approach. Int. J. Adv. Res. Sci. Eng. 2016, 5, 61–69.

39. Alimanova, M.; Borambayeva, S.; Kozhamzharova, D.; Kurmangaiyeva, N.; Ospanova, D.; Tyulepberdinova, G.; Gaziz, G.; Kassenkhan, A. Gamification of hand rehabilitation process using virtual reality tools: Using leap motion for hand rehabilitation. In Proceedings of the 2017 First IEEE International Conference on Robotic Computing (IRC), Taichung, Taiwan, 10–12 April 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 336–339.

40. Wang, Z.r.; Wang, P.; Xing, L.; Mei, L.p.; Zhao, J.; Zhang, T. Leap Motion-based virtual reality training for improving motor functional recovery of upper limbs and neural reorganization in subacute stroke patients. Neural Regen. Res. 2017, 12, 1823.

41. Li, W.J.; Hsieh, C.Y.; Lin, L.F.; Chu, W.C. Hand gesture recognition for post-stroke rehabilitation using leap motion. In Proceedings of the 2017 International Conference on Applied System Innovation (ICASI), Sapporo, Japan, 13–17 May 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 386–388.

42. Škraba, A.; Koložvari, A.; Kofjač, D.; Stojanović, R. Wheelchair maneuvering using leap motion controller and cloud based speech control: Prototype realization. In Proceedings of the 2015 4th Mediterranean Conference on Embedded Computing (MECO), Budva, Montenegro, 14–18 June 2015; IEEE: Piscataway, NJ, USA, 2015; pp. 391–394.

43. Travaglini, T.; Swaney, P.; Weaver, K.D.; Webster, R., III. Initial experiments with the leap motion as a user interface in robotic endonasal surgery. In Robotics and Mechatronics; Springer: Berlin/Heidelberg, Germany, 2016; pp. 171–179.

44. Qi, W.; Ovur, S.E.; Li, Z.; Marzullo, A.; Song, R. Multi-sensor guided hand gesture recognition for a teleoperated robot using a recurrent neural network. IEEE Robot. Autom. Lett. 2021, 6, 6039–6045.

45. Bachmann, D.; Weichert, F.; Rinkenauer, G. Review of three-dimensional human-computer interaction with focus on the leap motion controller. Sensors 2018, 18, 2194.

46. Nogales, R.; Benalcázar, M. Real-time hand gesture recognition using the leap motion controller and machine learning. In Proceedings of the 2019 IEEE Latin American Conference on Computational Intelligence (LA-CCI), Guayaquil, Ecuador, 11–15 November 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 1–7.

47. Rekha, J.; Bhattacharya, J.; Majumder, S. Hand gesture recognition for sign language: A new hybrid approach. In Proceedings of the International Conference on Image Processing Computer Vision and Pattern Recognition (IPCV), Las Vegas, NV, USA, 18–21 July 2011; p. 1.

48. Rowson, J.; Yoxall, A. Hold, grasp, clutch or grab: Consumer grip choices during food container opening. Appl. Ergon. 2011, 42, 627–633.

49. Cutkosky, M.R. On grasp choice, grasp models, and the design of hands for manufacturing tasks. IEEE Trans. Robot. Autom. 1989, 5, 269–279.

50. Available online: http://new.robai.com/assets/Cyton-Gamma-300-Arm-Specifications_2014.pdf (accessed on 12 July 2022).

51. Yu, N.; Xu, C.; Wang, K.; Yang, Z.; Liu, J. Gesture-based telemanipulation of a humanoid robot for home service tasks. In Proceedings of the 2015 IEEE International Conference on Cyber Technology in Automation, Control and Intelligent Systems (CYBER), Shenyang, China, 8–12 June 2015; IEEE: Piscataway, NJ, USA, 2015; pp. 1923–1927.

52. Available online: https://www.ultraleap.com/product/leap-motion-controller/ (accessed on 12 July 2022).

53. Available online: https://www.ultraleap.com/company/news/blog/how-hand-tracking-works/ (accessed on 12 July 2022).

54. Sharif, H.; Seo, S.B.; Kesavadas, T.K. Hand gesture recognition using surface electromyography. In Proceedings of the 2020 42nd Annual International Conference of the IEEE Engineering in Medicine & Biology Society (EMBC), Montreal, QC, Canada, 20–24 July 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 682–685.

55. Available online: https://www.upperlimbclinics.co.uk/images/hand-anatomy-pic.jpg (accessed on 12 July 2022).

56. Available online: https://developer-archive.leapmotion.com/documentation/python/devguide/Leap_Overview.html (accessed on 12 July 2022).

57. Available online: https://developer-archive.leapmotion.com/documentation/csharp/devguide/Leap_Coordinate_Mapping.html (accessed on 12 July 2022).

58. Craig, J.J. Introduction to Robotics: Mechanics and Control; Pearson Educacion: London, UK, 2005.

59. Change of Basis. Available online: https://math.hmc.edu/calculus/hmc-mathematics-calculus-online-tutorials/linear-algebra/change-of-basis (accessed on 12 July 2022).

60. Patel, K. A review on feature extraction methods. Int. J. Adv. Res. Electr. Electron. Instrum. Eng. 2016, 5, 823–827.

61. Lu, W.; Tong, Z.; Chu, J. Dynamic hand gesture recognition with leap motion controller. IEEE Signal Process. Lett. 2016, 23, 1188–1192.

62. Yang, Q.; Ding, W.; Zhou, X.; Zhao, D.; Yan, S. Leap motion hand gesture recognition based on deep neural network. In Proceedings of the 2020 Chinese Control And Decision Conference (CCDC), Hefei, China, 22–24 August 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 2089–2093.

63. Marin, G.; Dominio, F.; Zanuttigh, P. Hand gesture recognition with jointly calibrated leap motion and depth sensor. Multimed. Tools Appl. 2016, 75, 14991–15015.

64. Avola, D.; Bernardi, M.; Cinque, L.; Foresti, G.L.; Massaroni, C. Exploiting recurrent neural networks and leap motion controller for the recognition of sign language and semaphoric hand gestures. IEEE Trans. Multimed. 2018, 21, 234–245.

65. Fonk, R.; Schneeweiss, S.; Simon, U.; Engelhardt, L. Hand motion capture from a 3d leap motion controller for a musculoskeletal dynamic simulation. Sensors 2021, 21, 1199.

66. Li, X.; Zhou, Z.; Liu, W.; Ji, M. Wireless sEMG-based identification in a virtual reality environment. Microelectron. Reliab. 2019, 98, 78–85.

67. Zhang, Z.; Yang, K.; Qian, J.; Zhang, L. Real-time surface EMG pattern recognition for hand gestures based on an artificial neural network. Sensors 2019, 19, 3170.

68. Khairuddin, I.M.; Sidek, S.N.; Majeed, A.P.A.; Razman, M.A.M.; Puzi, A.A.; Yusof, H.M. The classification of movement intention through machine learning models: The identification of significant time-domain EMG features. PeerJ Comput. Sci. 2021, 7, e379.

69. Abbaspour, S.; Lindén, M.; Gholamhosseini, H.; Naber, A.; Ortiz-Catalan, M. Evaluation of surface EMG-based recognition algorithms for decoding hand movements. Med. Biol. Eng. Comput. 2020, 58, 83–100.

70. Kehtarnavaz, N.; Mahotra, S. Digital Signal Processing Laboratory: LabVIEW-Based FPGA Implementation; Universal-Publishers: Irvine, CA, USA, 2010.

71. Kumar, B.; Manjunatha, M. Performance analysis of KNN, SVM and ANN techniques for gesture recognition system. Indian J. Sci. Technol. 2016, 9, 1–8.

72. Huo, J.; Keung, K.L.; Lee, C.K.; Ng, H.Y. Hand Gesture Recognition with Augmented Reality and Leap Motion Controller. In Proceedings of the 2021 IEEE International Conference on Industrial Engineering and Engineering Management (IEEM), Singapore, 13–16 December 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 1015–1019.

73. Li, F.; Li, Y.; Du, B.; Xu, H.; Xiong, H.; Chen, M. A gesture interaction system based on improved finger feature and WE-KNN. In Proceedings of the 2019 4th International Conference on Mathematics and Artificial Intelligence, Chengdu, China, 12–15 April 2019; pp. 39–43.

74. Sumpeno, S.; Dharmayasa, I.G.A.; Nugroho, S.M.S.; Purwitasari, D. Immersive Hand Gesture for Virtual Museum using Leap Motion Sensor Based on K-Nearest Neighbor. In Proceedings of the 2019 International Conference on Computer Engineering, Network, and Intelligent Multimedia (CENIM), Surabaya, Indonesia, 17–18 November 2020; IEEE: Piscataway, NJ, USA, 2019; pp. 1–6.

75. Ding, I., Jr.; Hsieh, M.C. A hand gesture action-based emotion recognition system by 3D image sensor information derived from Leap Motion sensors for the specific group with restlessness emotion problems. Microsyst. Technol. 2020, 28, 1–13.

76. Nogales, R.; Benalcázar, M. Real-Time Hand Gesture Recognition Using KNN-DTW and Leap Motion Controller. In Proceedings of the Conference on Information and Communication Technologies of Ecuador, Virtual, 17–19 June 2020; Springer: Berlin/Heidelberg, Germany, 2020; pp. 91–103.

77. Available online: https://scikit-learn.org/stable/modules/generated/sklearn.svm.SVC.html (accessed on 30 June 2022).

78. Sha’Abani, M.; Fuad, N.; Jamal, N.; Ismail, M. kNN and SVM classification for EEG: A review. In Lecture Notes in Electrical Engineering; Springer: Berlin/Heidelberg, Germany, 2020; pp. 555–565.

79. Paszke, A.; Gross, S.; Chintala, S.; Chanan, G.; Yang, E.; DeVito, Z.; Lin, Z.; Desmaison, A.; Antiga, L.; Lerer, A. Automatic Differentiation in Pytorch. 2017. Available online: https://openreview.net/forum?id=BJJsrmfCZ (accessed on 30 June 2022).

80. Available online: https://pytorch.org/ (accessed on 30 June 2022).

81. Kritsis, K.; Kaliakatsos-Papakostas, M.; Katsouros, V.; Pikrakis, A. Deep convolutional and lstm neural network architectures on leap motion hand tracking data sequences. In Proceedings of the 2019 27th European Signal Processing Conference (EUSIPCO), A Coruna, Spain, 2–6 September 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 1–5.

82. Naguri, C.R.; Bunescu, R.C. Recognition of dynamic hand gestures from 3D motion data using LSTM and CNN architectures. In Proceedings of the 2017 16th IEEE International Conference on Machine Learning and Applications (ICMLA), Cancun, Mexico, 18–21 December 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 1130–1133.

83. Lupinetti, K.; Ranieri, A.; Giannini, F.; Monti, M. 3d dynamic hand gestures recognition using the leap motion sensor and convolutional neural networks. In Proceedings of the International Conference on Augmented Reality, Virtual Reality and Computer Graphics, Lecce, Italy, 7–10 September 2020; Springer: Berlin/Heidelberg, Germany, 2020; pp. 420–439.

84. Ikram, A.; Liu, Y. Skeleton Based Dynamic Hand Gesture Recognition using LSTM and CNN. In Proceedings of the 2020 2nd International Conference on Image Processing and Machine Vision, Bangkok, Thailand, 5–7 August 2020; pp. 63–68.

| Object | Dynamic Task |
|---|---|
| Cup | Grabbing a cup from the tabletop, bringing it to the mouth to pretend to drink from it, and putting it back on the table |
| Fork | Bringing pretend food from a paper plate on the table to the mouth |
| Key | Locking/unlocking a pretend door lock while holding a car key |
| Knife | Cutting a pretend steak by moving the knife back and forth |
| Nail Clipper | Holding a nail clipper and pressing/releasing its handles |
| Pen | Tracing one line of uppercase letter “A”s in four randomly distributed font sizes |
| Spherical Doorknob * | Rotating a doorknob clockwise and counterclockwise |
| Spoon | Bringing pretend food from a paper plate on the table to the mouth |

| Domain | Type | Features |
|---|---|---|
| Time-domain | Geometrical | AFA, ATD, DPUV, FHA, FTE, JA, NPTD |
| Time-domain | Non-geometrical | MAV, RMS, VAR, WL |
| Frequency-domain | | DFT |

Description of acronyms: Adjacent Fingertips Angle (AFA), Adjacent Tips Distance (ATD), Distal Phalanges Unit Vectors (DPUV), Fingertip-$\mathbf{h}$ Angle (FHA), Fingertip Elevation (FTE), Joint Angle (JA), Normalized Palm-Tip Distance (NPTD), Mean Absolute Value (MAV), Root Mean Square (RMS), Variance (VAR), Waveform Length (WL), Discrete Fourier Transform (DFT).
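The non-geometrical and frequency-domain features in this table are standard signal descriptors that are common in surface-EMG work. A minimal sketch of their textbook definitions, applied to a single one-dimensional channel of the hand-tracking data (for example, one coordinate of one joint over a recording), is shown below. The channels, window lengths, and DFT coefficients the authors actually used are not stated here, so `n_coeffs` and all helper names are hypothetical. VAR is shown as the sample variance about the mean; some EMG papers instead assume a zero-mean signal.

```python
import cmath
import math
import statistics
from typing import Dict, List, Sequence


def mav(x: Sequence[float]) -> float:
    """Mean absolute value: (1/N) * sum |x_k|."""
    return sum(abs(v) for v in x) / len(x)


def rms(x: Sequence[float]) -> float:
    """Root mean square: sqrt((1/N) * sum x_k^2)."""
    return math.sqrt(sum(v * v for v in x) / len(x))


def var(x: Sequence[float]) -> float:
    """Sample variance about the mean (N - 1 denominator)."""
    return statistics.variance(x)


def wl(x: Sequence[float]) -> float:
    """Waveform length: total absolute change between consecutive samples."""
    return sum(abs(b - a) for a, b in zip(x, x[1:]))


def dft_magnitudes(x: Sequence[float], n_coeffs: int) -> List[float]:
    """|X_m| for m = 0..n_coeffs-1, where X_m = sum_k x_k * exp(-2j*pi*k*m/N)."""
    n = len(x)
    return [
        abs(sum(x[k] * cmath.exp(-2j * math.pi * k * m / n) for k in range(n)))
        for m in range(n_coeffs)
    ]


def signal_features(x: Sequence[float], n_coeffs: int = 4) -> Dict[str, object]:
    """Bundle the per-channel features (hypothetical helper)."""
    return {
        "MAV": mav(x),
        "RMS": rms(x),
        "VAR": var(x),
        "WL": wl(x),
        "DFT": dft_magnitudes(x, n_coeffs),
    }


# Toy channel alternating at the Nyquist frequency.
sig = signal_features([1.0, -1.0, 1.0, -1.0])
```

For this alternating toy signal, all spectral energy falls in bin N/2 = 2. The direct O(N²) DFT keeps the sketch dependency-free; with NumPy, `numpy.fft.rfft` computes the same coefficients for real-valued input far more efficiently.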

| Feature (CNN) | Accuracy (%) | Precision (%) | Recall (%) | F-Score (%) |
|---|---|---|---|---|
| Pure data | 63.5 | 50.5 | 40.2 | 41.2 |
| MAV | 85.1 | 80.5 | 80.1 | 80.2 |
| RMS | 84.1 | 78.1 | 77.8 | 77.9 |
| VAR | 34.8 | 32.9 | 23.5 | 23.3 |
| WL | 36.7 | 31.4 | 29.2 | 29.6 |
| AFA | 57.3 | 54.4 | 52.3 | 52.6 |
| ATD | 99.88 | 97.5 | 97.3 | 97.4 |
| DPUV | 72 | 68.8 | 68 | 68.3 |
| FHA | 70.2 | 66.1 | 65.3 | 65.5 |
| FTE | 41.5 | 29 | 25.4 | 24.5 |
| JA | 77.4 | 74.4 | 73.9 | 74.2 |
| NPTD | 71.5 | 68.4 | 67.6 | 67.9 |
| DFT | 58.4 | 53.4 | 50.4 | 51.4 |
| JA+DPUV | 80.4 | 77.1 | 76.6 | 76.8 |
| JA+NPTD | 78.8 | 74.3 | 73.7 | 74 |
| MAV+RMS | 84 | 79.5 | 78.9 | 79.2 |
| MAV+JA+NPTD | 88.4 | 83.8 | 83.6 | 83.7 |
| MAV+JA+NPTD+DPUV | 87.59 | 82.9 | 82.5 | 82.7 |

| Feature (SVM) | Accuracy (%) | Precision (%) | Recall (%) | F-Score (%) |
|---|---|---|---|---|
| Pure data | 68.9 | 70.5 | 67.3 | 68.2 |
| MAV | 79.5 | 81.1 | 77.9 | 78.99 |
| RMS | 76.3 | 78.4 | 74.7 | 75.8 |
| VAR | 24.6 | 61.1 | 21.8 | 20.3 |
| WL | 29.4 | 48.7 | 26.7 | 25.6 |
| AFA | 49.6 | 57.1 | 46.9 | 47.5 |
| ATD | 75.1 | 80.3 | 74.2 | 76.1 |
| DPUV | 79.3 | 79.2 | 78.3 | 78.7 |
| FHA | 64.2 | 66.2 | 62.5 | 63.3 |
| FTE | 30.8 | 50.8 | 29 | 30.4 |
| JA | 90.3 | 90.2 | 89.9 | 90 |
| NPTD | 79.3 | 79.2 | 78.3 | 78.6 |
| DFT | 52.4 | 77.6 | 50 | 54.3 |
| JA+DPUV | 94.7 | 94.4 | 94.4 | 94.4 |
| JA+NPTD | 92.3 | 92.2 | 91.9 | 92 |
| MAV+RMS | 79 | 80.5 | 77.5 | 78.4 |
| MAV+JA+NPTD | 92.5 | 92.3 | 91.9 | 92 |
| MAV+JA+NPTD+DPUV | 95.1 | 94.8 | 94.7 | 94.8 |
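The CNN and SVM tables report accuracy alongside precision, recall, and F-score, but this section does not say how the per-class values were averaged over the eight ADL classes. A minimal sketch that assumes unweighted (macro) averaging, a common choice for multi-class problems, is shown below; the function name and the toy labels are hypothetical. Under macro averaging, the F-score is the mean of the per-class F-scores, so it need not equal the harmonic mean of the averaged precision and recall. That is one possible explanation for rows such as the CNN "Pure data" result, where precision 50.5 and recall 40.2 would give a harmonic mean of about 44.8 rather than the reported 41.2.

```python
from collections import Counter
from typing import Dict, Hashable, Sequence


def classification_metrics(
    y_true: Sequence[Hashable], y_pred: Sequence[Hashable]
) -> Dict[str, float]:
    """Accuracy plus macro-averaged precision, recall, and F-score, in percent."""
    labels = set(y_true) | set(y_pred)
    tp = Counter(t for t, p in zip(y_true, y_pred) if t == p)  # correct per class
    pred_count = Counter(y_pred)  # predictions per class
    true_count = Counter(y_true)  # ground-truth samples per class
    precisions, recalls, f_scores = [], [], []
    for c in labels:
        prec = tp[c] / pred_count[c] if pred_count[c] else 0.0
        rec = tp[c] / true_count[c] if true_count[c] else 0.0
        f = 2 * prec * rec / (prec + rec) if prec + rec else 0.0
        precisions.append(prec)
        recalls.append(rec)
        f_scores.append(f)
    k = len(labels)
    return {
        "Accuracy": 100 * sum(tp.values()) / len(y_true),
        "Precision": 100 * sum(precisions) / k,
        "Recall": 100 * sum(recalls) / k,
        # Mean of per-class F-scores, not F of the averaged P and R.
        "F-Score": 100 * sum(f_scores) / k,
    }


# Toy labels using three of the ADL objects.
scores = classification_metrics(
    ["cup", "cup", "fork", "key"], ["cup", "fork", "fork", "key"]
)
```

In this toy case, macro precision and recall are both about 83.3%, yet the macro F-score is about 77.8%, illustrating the gap described above. scikit-learn's `precision_recall_fscore_support` with `average="macro"` follows the same per-class-then-average definition.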

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Sharif, H.; Eslaminia, A.; Chembrammel, P.; Kesavadas, T. Classification of Activities of Daily Living Based on Grasp Dynamics Obtained from a Leap Motion Controller. Sensors 2022, 22, 8273. https://doi.org/10.3390/s22218273
