Identification and Localisation Algorithm for Sugarcane Stem Nodes by Combining YOLOv3 and Traditional Methods of Computer Vision

Abstract

1. Introduction

2. Overall Algorithm Design

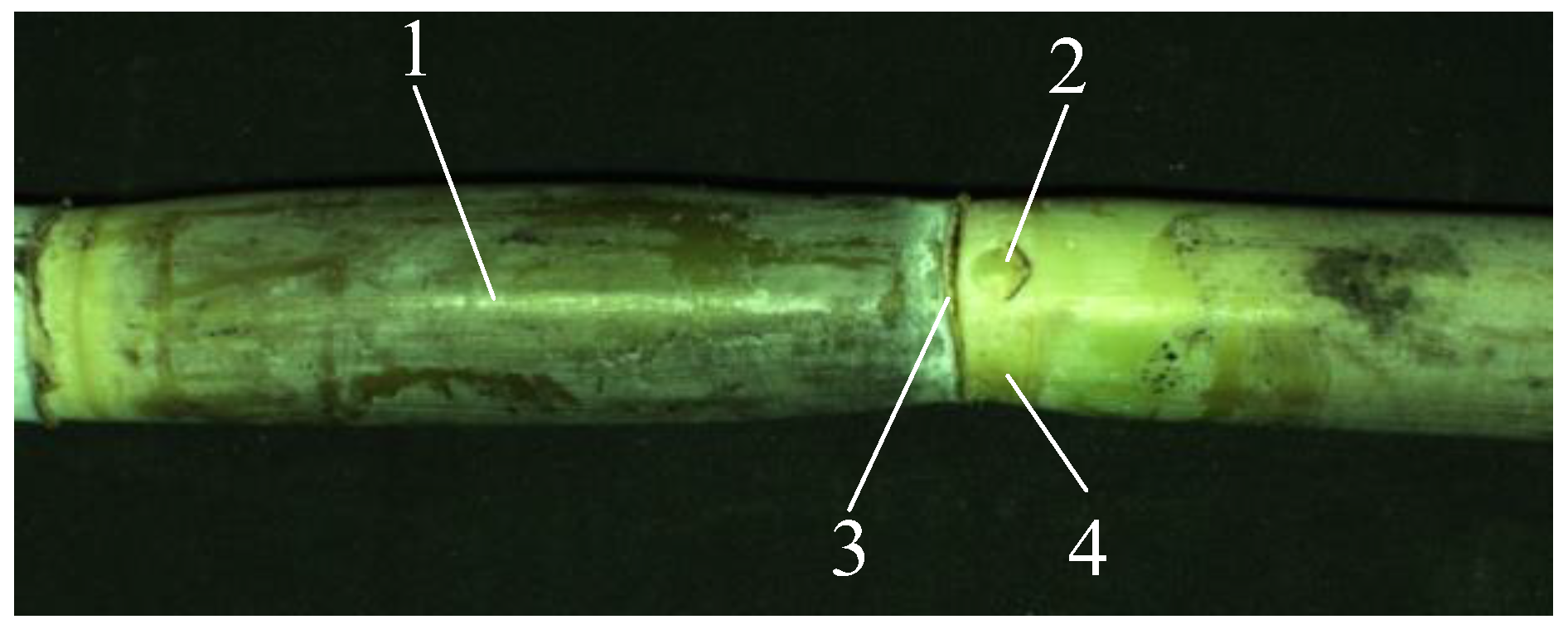

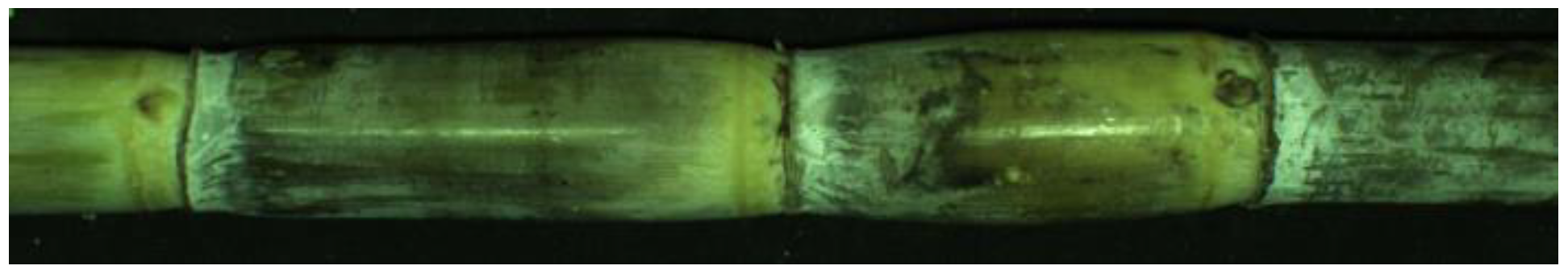

2.1. Analysis of Cane Stem Characteristics

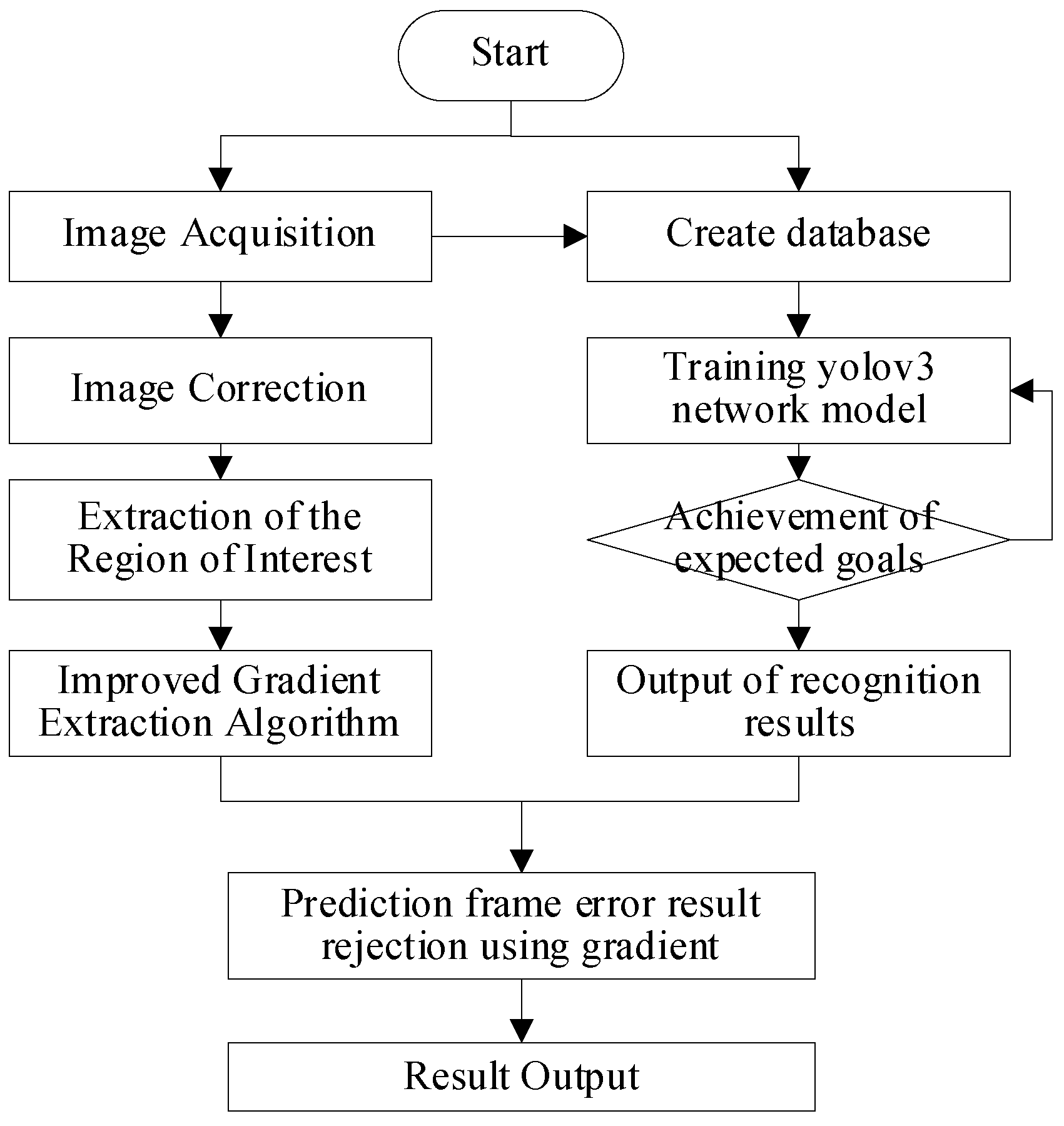

2.2. Design of a Recognition Process

3. Image Preprocessing

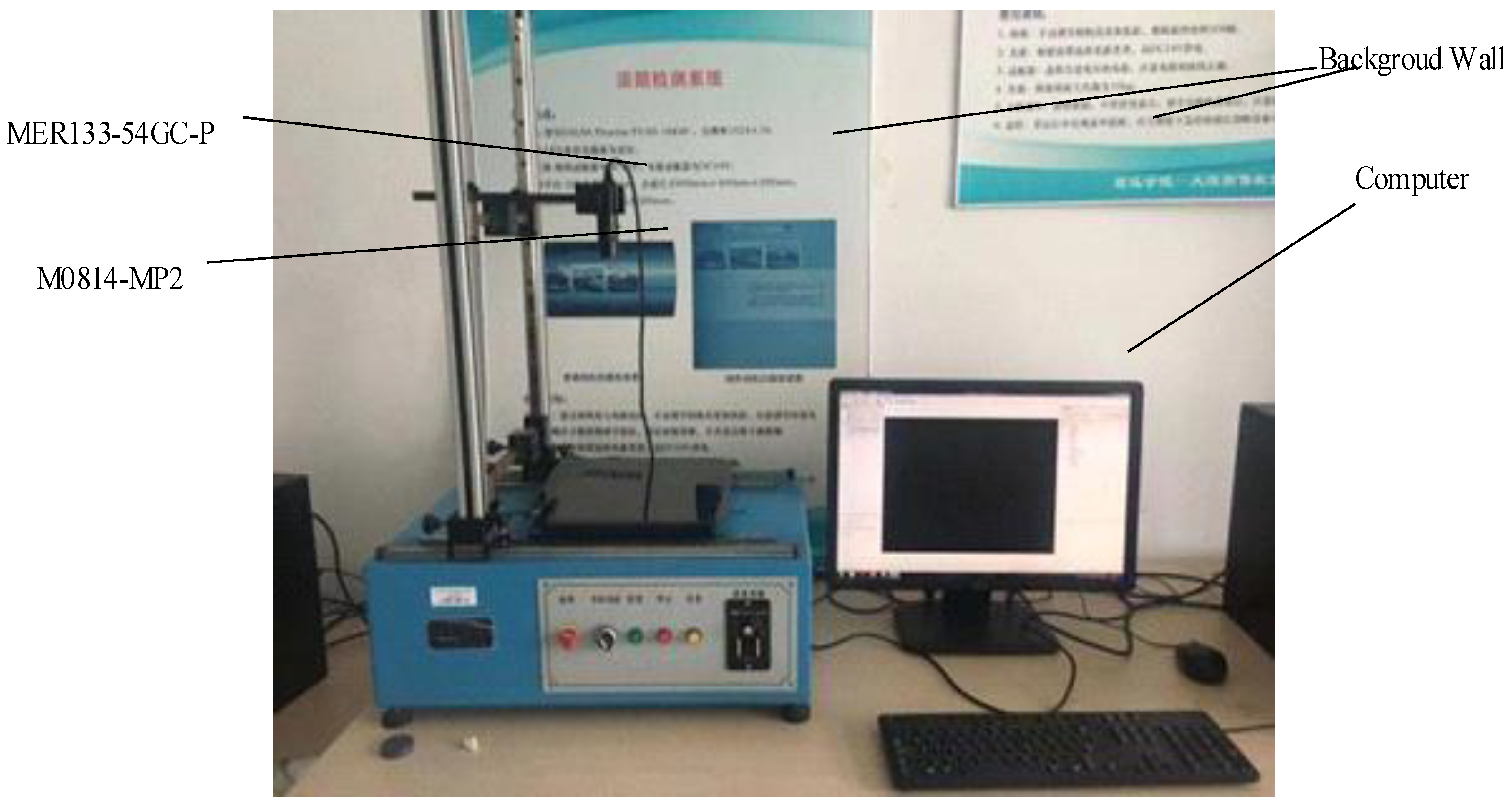

3.1. Image Acquisition

3.2. Image Preprocessing

3.2.1. Image Correction

3.2.2. Extraction of the Region of Interest

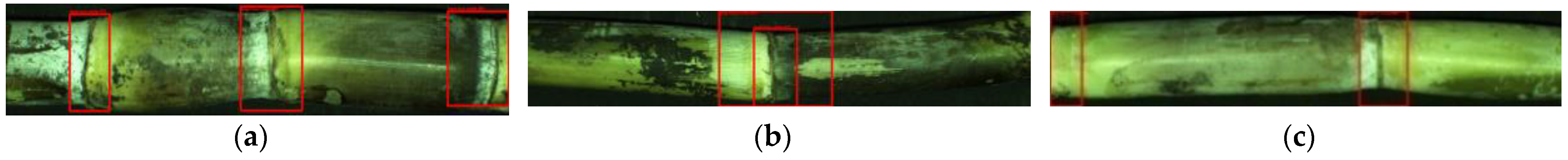
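Section 7, item (3), states that a rotation matrix derived with polar coordinates is used to compute the upper-left and lower-right vertices of the circumscribed rectangle of the sugarcane contour after rotation. The sketch below shows that derivation and the vertex computation for a list of contour points. It assumes that the rotation angle θ (in radians) and the rotation centre are already known; this excerpt does not describe how the authors obtain them. `rotate_point` and `rotated_bounding_box` are hypothetical names, not the authors' code.

```python
import math
from typing import Iterable, List, Tuple

Point = Tuple[float, float]


def rotate_point(p: Point, centre: Point, theta: float) -> Point:
    """Rotate p about centre by theta radians.

    Writing p - centre in polar form as (r*cos(phi), r*sin(phi)), the rotated
    offset is (r*cos(phi + theta), r*sin(phi + theta)). Expanding the
    angle-sum identities gives x' = x*cos(theta) - y*sin(theta) and
    y' = x*sin(theta) + y*cos(theta), i.e. the 2x2 rotation matrix below.
    """
    dx, dy = p[0] - centre[0], p[1] - centre[1]
    c, s = math.cos(theta), math.sin(theta)
    return (centre[0] + c * dx - s * dy, centre[1] + s * dx + c * dy)


def rotated_bounding_box(contour: Iterable[Point], centre: Point,
                         theta: float) -> Tuple[Point, Point]:
    """Return the (upper-left, lower-right) vertices of the axis-aligned
    rectangle circumscribing the contour after rotation.

    In image coordinates the y axis points down, so the upper-left vertex has
    the smallest x and y values and the lower-right vertex has the largest.
    """
    pts: List[Point] = [rotate_point(p, centre, theta) for p in contour]
    xs = [x for x, _ in pts]
    ys = [y for _, y in pts]
    return (min(xs), min(ys)), (max(xs), max(ys))


# Hypothetical example: a 4 x 2 rectangle rotated by 90 degrees about its centre.
ul, lr = rotated_bounding_box([(0, 0), (4, 0), (4, 2), (0, 2)], (2, 1), math.pi / 2)
print(ul, lr)  # approximately (1, -1) and (3, 3)
```

Because image rows grow downwards, a positive θ in this matrix appears as a clockwise rotation on screen; the sign of θ has to be flipped if the angle is measured counter-clockwise.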

4. Initial Recognition and Location of Sugarcane Stem Nodes Based on the YOLOv3 Model

4.1. The Data Set

4.2. Evaluation Criteria

4.3. Analysis of the Model Performance

5. Accurate Recognition and Location of Sugarcane Stem Nodes Based on the Improved Edge Extraction Algorithm and the Localization Algorithm

5.1. Canny Algorithm and Its Defects

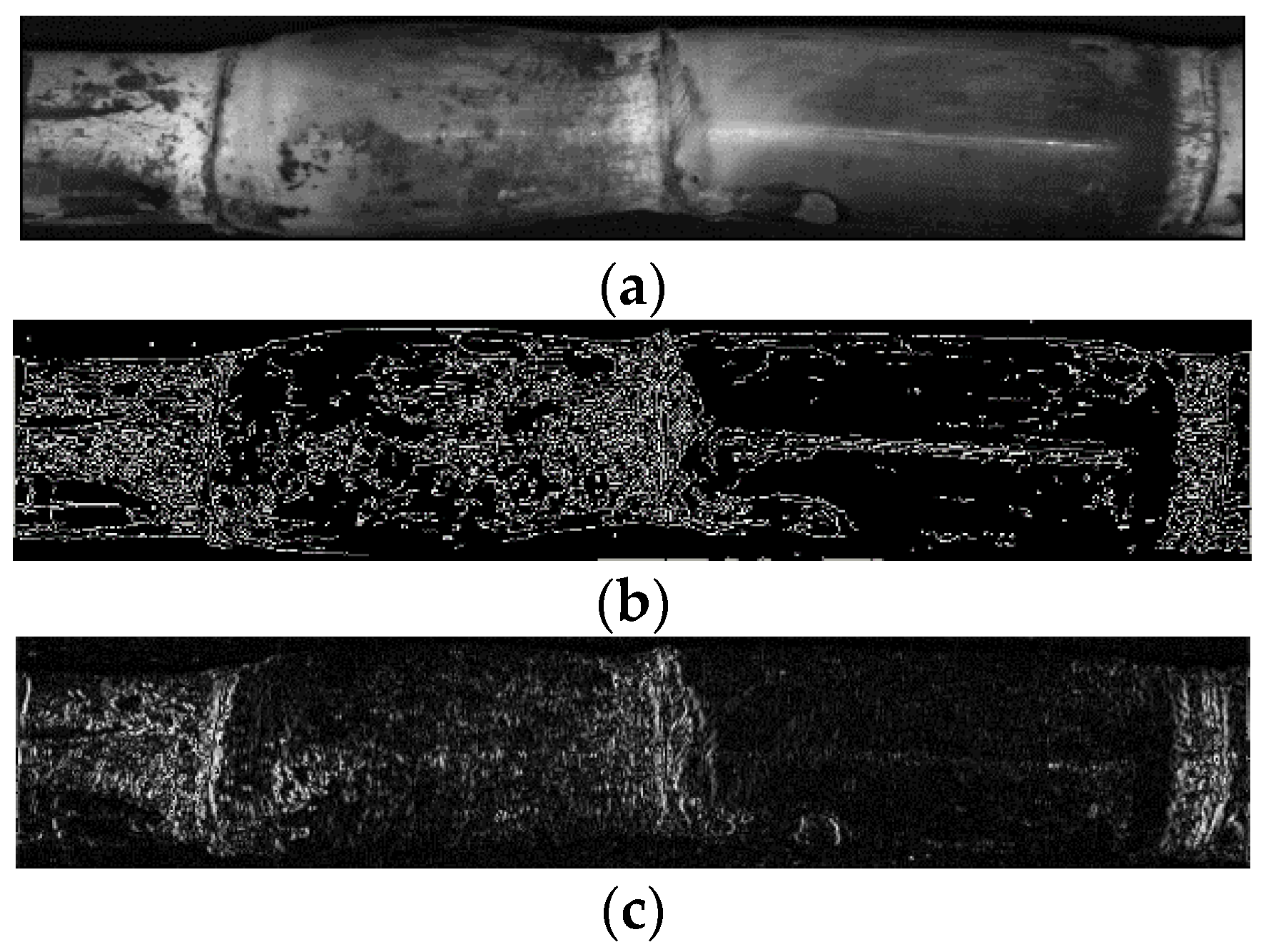

5.2. Improved Gradient Extraction Algorithm

5.2.1. Improved Gradient Operator

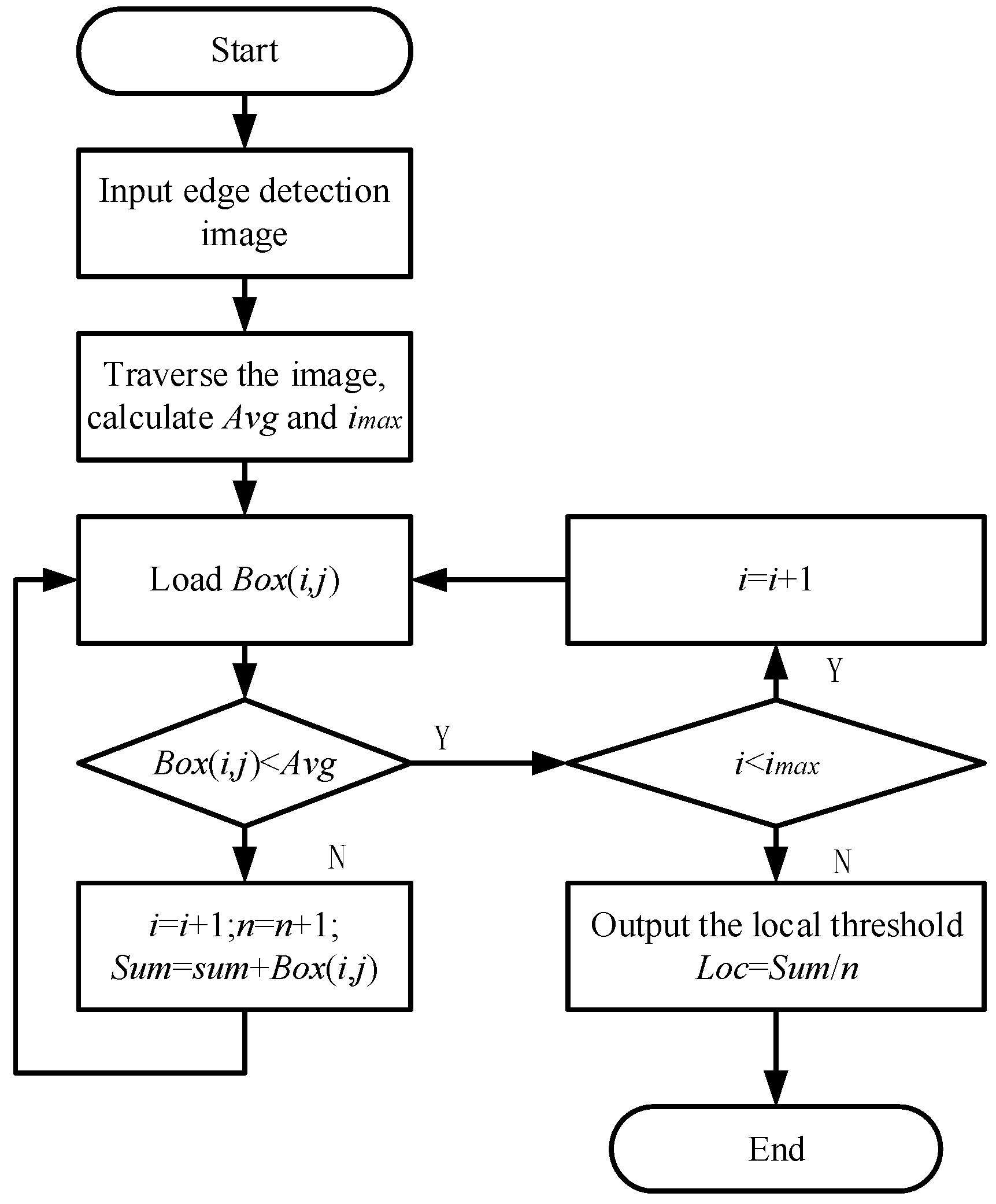

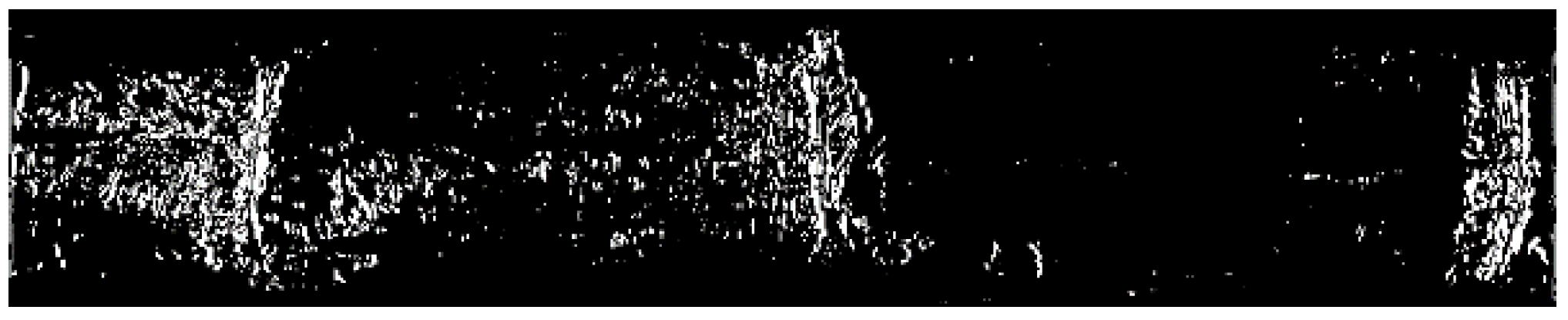

5.2.2. Local Threshold
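Section 7, item (2), summarises the local threshold method: pixels in a prediction box whose gradient values are lower than the mean gradient of the image are discarded, and the mean gradient of the remaining pixels in the box becomes the binarization threshold. A minimal NumPy sketch follows. It assumes the gradient image is a 2-D NumPy array and each prediction box is an (x1, y1, x2, y2) pixel tuple; the function names, the use of `>=` in both comparisons, the 0/1 output, and the fallback for an empty pixel set are assumptions, not details given in the text.

```python
import numpy as np
from typing import Tuple

Box = Tuple[int, int, int, int]  # hypothetical (x1, y1, x2, y2) pixel format


def local_threshold(gradient: np.ndarray, box: Box) -> float:
    """Local threshold for one prediction box.

    1. Discard box pixels whose gradient is below the mean of the whole image.
    2. Take the mean gradient of the remaining box pixels as the threshold.
    """
    x1, y1, x2, y2 = box
    region = gradient[y1:y2, x1:x2]
    image_mean = float(gradient.mean())
    kept = region[region >= image_mean]
    if kept.size == 0:
        # Assumption: fall back to the image mean if no pixel survives step 1.
        return image_mean
    return float(kept.mean())


def binarize_box(gradient: np.ndarray, box: Box) -> np.ndarray:
    """Binarize the edge image inside the box with its local threshold
    (1 = edge, 0 = background)."""
    t = local_threshold(gradient, box)
    x1, y1, x2, y2 = box
    return (gradient[y1:y2, x1:x2] >= t).astype(np.uint8)


g = np.zeros((4, 4))
g[0, 0], g[1, 1] = 8.0, 4.0        # image mean = 12 / 16 = 0.75
print(local_threshold(g, (0, 0, 2, 2)))  # 6.0, the mean of the kept values 8 and 4
```

Computing the threshold per box rather than once per image lets weak internode texture fall below the threshold even when another box contains much stronger node edges.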

5.3. Localisation Algorithm for Stem Nodes

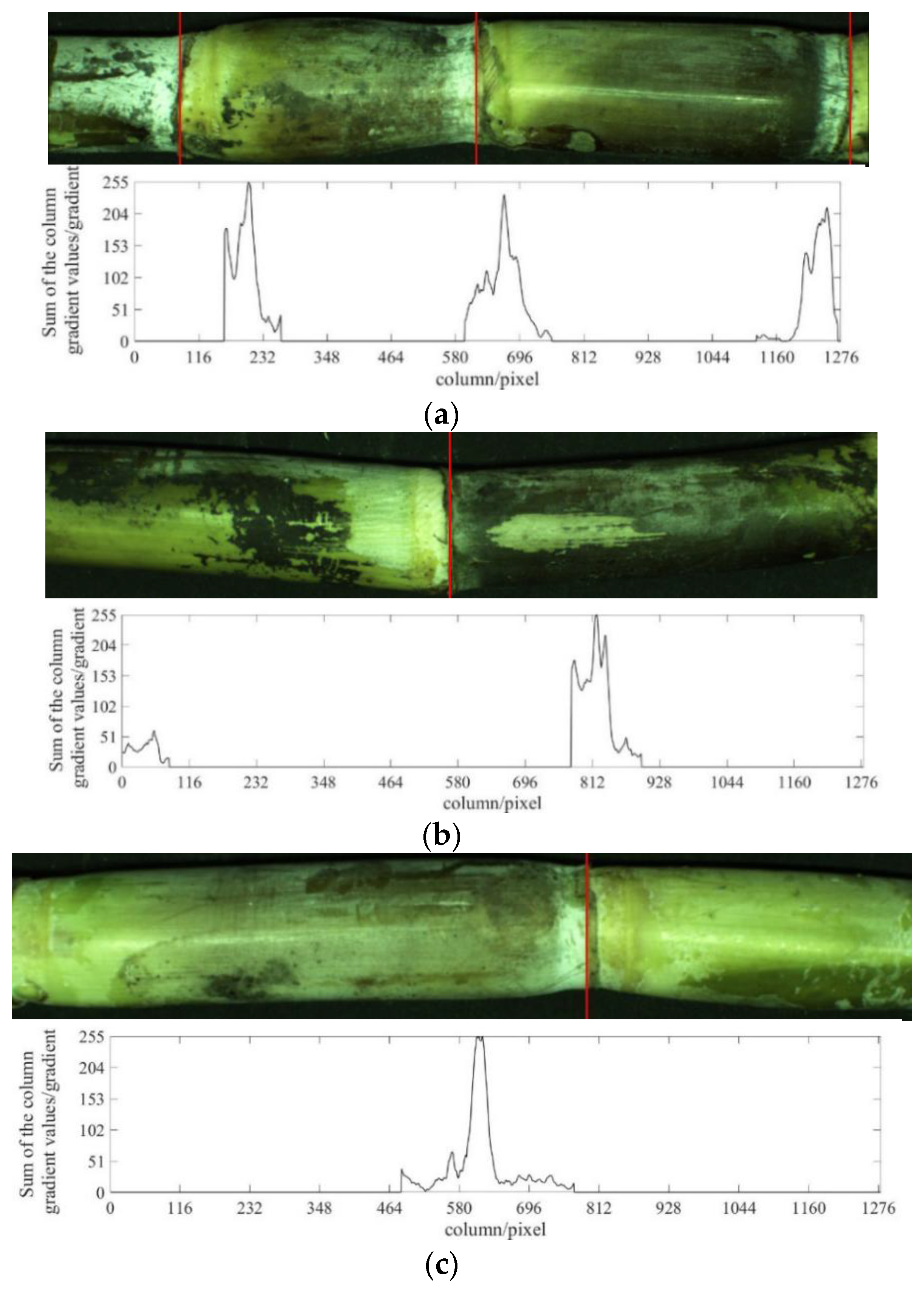

- ① The prediction boxes output by YOLOv3 are taken as the detection regions of stem nodes. If the distance between the centres of two detection regions is less than 50 pixels, the two prediction boxes are merged into a new stem-node detection region, as shown in Figure 8b;

- ② The gradient values in each column of the detection region are summed, and the column with the maximum sum is taken as the position of the suspected stem node;

- ③ If the column gradient sum at the position of the suspected stem node is greater than or equal to 200, the position is judged to be a stem node and marked on the original image; otherwise, it is judged to be a non-stem node.
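The three steps above can be sketched in Python with NumPy. The 50-pixel merge distance and the threshold of 200 come from the text; the (x1, y1, x2, y2) box format, merging two boxes by taking their union, the greedy left-to-right merge order, and the helper names `merge_boxes` and `locate_nodes` are assumptions made for illustration. Summing columns implies that the stem lies horizontally, so a node shows up as a vertical band of strong gradients; the scale of the threshold 200 depends on the gradient image being summed, which this excerpt does not specify.

```python
import numpy as np
from typing import List, Tuple

Box = Tuple[int, int, int, int]  # hypothetical (x1, y1, x2, y2) pixel format

MERGE_DISTANCE = 50   # step 1: centre distance (pixels) below which boxes merge
NODE_THRESHOLD = 200  # step 3: minimum column-gradient sum for a stem node


def box_centre(b: Box) -> Tuple[float, float]:
    return ((b[0] + b[2]) / 2.0, (b[1] + b[3]) / 2.0)


def merge_boxes(boxes: List[Box], max_dist: float = MERGE_DISTANCE) -> List[Box]:
    """Step 1: merge boxes whose centres are closer than max_dist pixels.
    Boxes are visited left to right and compared with the last merged box."""
    merged: List[Box] = []
    for b in sorted(boxes, key=lambda b: b[0]):
        if merged:
            (cx1, cy1), (cx2, cy2) = box_centre(merged[-1]), box_centre(b)
            if np.hypot(cx2 - cx1, cy2 - cy1) < max_dist:
                last = merged[-1]
                merged[-1] = (min(last[0], b[0]), min(last[1], b[1]),
                              max(last[2], b[2]), max(last[3], b[3]))
                continue
        merged.append(b)
    return merged


def locate_nodes(gradient: np.ndarray, boxes: List[Box],
                 threshold: float = NODE_THRESHOLD) -> List[int]:
    """Steps 2 and 3: return the image column of each accepted stem node."""
    nodes: List[int] = []
    for x1, y1, x2, y2 in merge_boxes(boxes):
        col_sums = gradient[y1:y2, x1:x2].sum(axis=0)  # step 2: sum each column
        best = int(np.argmax(col_sums))                # suspected stem node
        if col_sums[best] >= threshold:                # step 3: accept or reject
            nodes.append(x1 + best)
    return nodes


grad = np.zeros((100, 300))
grad[10:90, 120] = 5.0  # one strong edge column (sum 400) inside the first two boxes
boxes = [(100, 0, 150, 100), (110, 0, 160, 100), (250, 0, 290, 100)]
print(locate_nodes(grad, boxes))  # [120]; the third box has no edges and is rejected
```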

6. Experimental Results and Discussion

6.1. Analysis of the Identification Results

6.2. Comparison with Other Methods

7. Conclusions

- (1)

- A new gradient operator was used to extract the edge of a sugarcane R component image. Compared to the Canny operator, the experimental results show that the new operator is better. The stem node has a strong margin and the margin of the internode is thinner, which can greatly highlight slight differences between the stem node and the internode;

- (2)

- A local threshold determination method was proposed, which removes pixels whose gradient values in the prediction box are lower than the average value of the image. Then, the average gradient value of the remaining points in the prediction box is calculated to obtain the local threshold, which is used as the threshold of binarization of the edge detection image. The experimental results show that the noise between stem nodes is obviously reduced after binarization, which means the local threshold binarization has a good denoising effect;

- (3)

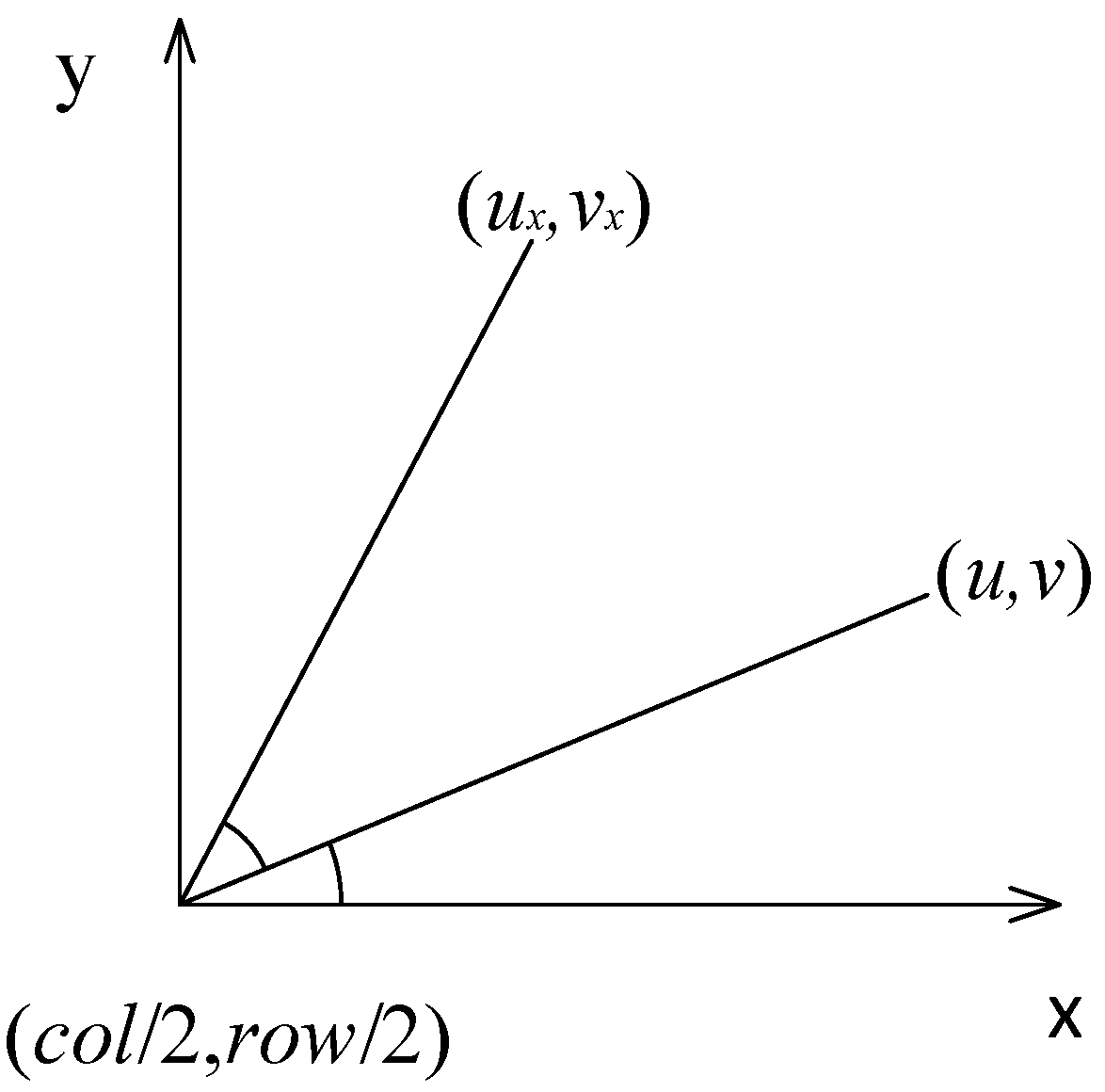

- We used polar coordinates to derive a rotation matrix. The rotation matrix is used to calculate the coordinates of the upper left vertex and the lower right vertex of the circumscribed rectangle of the sugarcane contour after rotation. The circumscribed rectangle of the sugarcane contour is redrawn through two points to obtain a sugarcane image of the region of interest after rotation, eliminating the influence of the background on image recognition; and

- (4)

- We proposed an identification and localization algorithm for sugarcane stem nodes combining a YOLOv3 network and traditional methods of computer vision. The experimental results show that the precision rate of the identification algorithm for sugarcane stem nodes proposed in this paper was 99.68%, the recall rate was 100%, and the harmonic mean was 99.84%. Compared to the original network, the precision rate and harmonic mean were improved by 2.28% and 1.13%, respectively.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Qu, Y. Present situation and countermeasure of whole-process mechanization of sugarcane production in China. Mod. Agric. Equip. 2019, 40, 3–8.

- Tang, Y.; Ma, Z.; Ke, X. Study on sugarcane production model with whole mechanization and moderate refinement: Take Huituo Agriculture Development Co. Ltd. as an example. Sugarcane Canesugar 2021, 50, 6–13.

- Khojastehnazhand, M.; Ramezani, H. Machine vision system for classification of bulk raisins using texture features. J. Food Eng. 2020, 271, 109864.

- Thakur, R.; Suryawanshi, G.; Patel, H.; Sangoi, J. An innovative approach for fruit ripeness classification. In Proceedings of the 2020 4th International Conference on Intelligent Computing and Control Systems, Madurai, India, 13–15 May 2020; pp. 550–554.

- Galata, D.L.; Mészáros, L.A.; Kállai-Szabó, N.; Szabó, E.; Pataki, H.; Marosi, G.; Nagy, Z.K. Applications of machine vision in pharmaceutical technology: A review. Eur. J. Pharm. Sci. 2021, 159, 105717.

- Ying, B.; Xu, Y.; Zhang, S.; Shi, Y.; Liu, L. Weed detection in images of carrot fields based on improved YOLO v4. Traitement Signal 2021, 38, 341–348.

- Nare, B.; Tewari, V.K.; Chandel, A.; Prakash Kumar, S.; Chethan, C.R. A mechatronically integrated autonomous seed material generation system for sugarcane: A crop of industrial significance. Ind. Crops Prod. 2019, 128, 1–12.

- Zhou, D.; Fan, Y.; Deng, G.; He, F.; Wang, M. A new design of sugarcane seed cutting systems based on machine vision. Comput. Electron. Agric. 2020, 175, 105611.

- Moshashai, K.; Almasi, M.; Minaei, S.; Borghei, A.M. Identification of sugarcane nodes using image processing and machine vision technology. Int. J. Agric. Res. 2008, 3, 357–364.

- Lu, S.; Wen, Y.; Ge, W.; Pen, H. Recognition and features extraction of sugarcane nodes based on machine vision. Trans. Chin. Soc. Agric. Mach. 2010, 41, 190–194.

- Huang, Y.; Huang, T.; Huang, M.; Yin, K.; Wang, X. Recognition of sugarcane nodes based on local mean. J. Chin. Agric. Mech. 2017, 38, 76–80.

- Chen, J.; Qiang, H.; Xu, G.; Wu, J.; Liu, X.; Mo, R.; Huang, R. Sugarcane stem nodes based on the maximum value points of the vertical projection function. Ciência Rural 2020, 50, 32–36.

- Garg, D.; Goel, P.; Pandya, S.; Ganatra, A.; Kotecha, K. A deep learning approach for face detection using YOLO. In Proceedings of the 2018 IEEE Punecon, Pune, India, 30 November–2 December 2018; pp. 1–4.

- Izidio, D.M.; Ferreira, A.; Medeiros, H.R.; Barros, E.N.D.S. An embedded automatic license plate recognition system using deep learning. Des. Autom. Embed. Syst. 2020, 24, 23–43.

- Hossain, M.S.; Al-Hammadi, M.; Muhammad, G. Automatic fruit classification using deep learning for industrial applications. IEEE Trans. Ind. Inform. 2018, 15, 1027–1034.

- Militante, S.V.; Gerardo, B.D.; Medina, R.P. Sugarcane Disease Recognition using Deep Learning. In Proceedings of the 2019 IEEE Eurasia Conference on IOT, Yunlin, Taiwan, 3–6 October 2019; pp. 575–578.

- Mohanty, S.P.; Hughes, D.P.; Salathé, M. Using deep learning for image-based plant disease detection. Front. Plant Sci. 2016, 7, 1419.

- Li, S.; Li, X.; Zhang, K.; Li, K.; Yuan, H.; Huang, Z. Increasing the real-time dynamic identification efficiency of sugarcane nodes by improved YOLOv3 network. Trans. Chin. Soc. Agric. Eng. 2019, 35, 185–191.

- Chen, R. Theory and Practice of Modern Sugarcane Breeding; China Agriculture Press: Beijing, China, 2003.

- Guan, Y.; Guan, Y. Research and application of affine transformation based on OpenCV. Comput. Technol. Dev. 2016, 26, 58–63.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition 2016, Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

- Redmon, J.; Farhadi, A. YOLO9000: Better, faster, stronger. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 7263–7271.

- Redmon, J.; Farhadi, A. YOLOv3: An incremental improvement. arXiv 2018, arXiv:1804.02767.

- Huang, F.; Jiang, Y. Optimization of edge extraction algorithm for objects in complex background. In Journal of Physics: Conference Series; IOP Publishing: Bristol, UK, 2021; Volume 1880, p. 012027.

| Model | TP | FP | FN | Precision P/% | Recall R/% | Harmonic Mean F/% | Average Recognition Time/s |
|---|---|---|---|---|---|---|---|
| YOLOv3 | 615 | 16 | 0 | 97.46 | 100 | 98.72 | 0.17 |

| Model | TP | FP | FN | Precision P/% | Recall R/% | Harmonic Mean F/% | Average Recognition Time/s |
|---|---|---|---|---|---|---|---|
| Improved algorithm | 615 | 2 | 0 | 99.68 | 100 | 99.84 | 0.415 |
| YOLOv3 | 615 | 16 | 0 | 97.46 | 100 | 98.72 | 0.17 |
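The precision, recall, and harmonic mean in the tables above are consistent with the standard definitions P = TP/(TP + FP), R = TP/(TP + FN), and F = 2PR/(P + R), expressed in percent. The short check below assumes those definitions (the evaluation-criteria section is not reproduced in this excerpt) and recomputes both rows from their TP, FP, and FN counts; `detection_metrics` and `relative_gain` are illustrative names only.

```python
from typing import Tuple


def detection_metrics(tp: int, fp: int, fn: int) -> Tuple[float, float, float]:
    """Precision, recall and harmonic mean (F1) in percent, rounded to 2 dp."""
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    f = 2 * precision * recall / (precision + recall)
    return round(100 * precision, 2), round(100 * recall, 2), round(100 * f, 2)


def relative_gain(new: float, old: float) -> float:
    """Relative improvement of new over old, in percent, rounded to 2 dp."""
    return round(100 * (new / old - 1), 2)


print(detection_metrics(615, 16, 0))  # YOLOv3 row: (97.46, 100.0, 98.72)
print(detection_metrics(615, 2, 0))   # improved algorithm row: (99.68, 100.0, 99.84)
print(relative_gain(99.68, 97.46), relative_gain(99.84, 98.72))  # 2.28 1.13
```

The last line shows that the 2.28% and 1.13% improvements quoted in the conclusions are relative gains; the absolute differences are 2.22 and 1.12 percentage points.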

| Method | Number of Samples | Method Details | Average Recognition Rate/% | Average Recognition Time/s |
|---|---|---|---|---|
| Zhou et al. [8] | 119 | Searches for potential node positions in the gradient feature vector. | 93.00 | 0.539 |
| Lu et al. [10] | 3200 | Uses clustering analysis to identify the sugarcane node blocks obtained by a support vector machine. | 93.36 | 0.76 |
| Huang et al. [11] | 130 | Takes the position of the maximum average grey value as the position of the sugarcane node. | 90.77 | 0.481 |
| Li et al. [18] | 12,000 | Improves the YOLOv3 network by reducing the residual structures and the number of anchors. | 90.38 | 0.0287 |
| The algorithm in this paper | 750 | YOLOv3 combined with traditional computer vision methods. | 99.84 | 0.415 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhou, D.; Zhao, W.; Chen, Y.; Zhang, Q.; Deng, G.; He, F. Identification and Localisation Algorithm for Sugarcane Stem Nodes by Combining YOLOv3 and Traditional Methods of Computer Vision. Sensors 2022, 22, 8266. https://doi.org/10.3390/s22218266
