Abstract

The current paradigm shift in orthodontic treatment planning is based on facially driven diagnostics. This requires an affordable, convenient, and non-invasive solution for face scanning. Therefore, utilization of smartphones’ TrueDepth sensors is very tempting. TrueDepth refers to front-facing cameras with a dot projector in Apple devices that provide real-time depth data in addition to visual information. There are several applications that tout themselves as accurate solutions for 3D scanning of the face in dentistry. Their clinical accuracy has been uncertain. This study focuses on evaluating the accuracy of the Bellus3D Dental Pro app, which uses Apple’s TrueDepth sensor. The app reconstructs a virtual, high-resolution version of the face, which is available for download as a 3D object. In this paper, sixty TrueDepth scans of the face were compared to sixty corresponding facial surfaces segmented from CBCT. Difference maps were created for each pair and evaluated in specific facial regions. The results confirmed statistically significant differences in some facial regions with amplitudes greater than 3 mm, suggesting that current technology has limited applicability for clinical use. The clinical utilization of facial scanning for orthodontic evaluation, which does not require accuracy in the lip region below 3 mm, can be considered.

1. Introduction

The paradigm shift in orthodontic treatment planning is currently leaning towards soft tissue-driven considerations. Although 3D facial diagnosis is undoubtedly a crucial factor in treatment planning, it has been difficult to capture by the traditional means of 2D digital diagnostics. The 3D diagnostic workflow will soon be considered a routine procedure, but the affordability of high-end facial scanners is slowing this change. The ability to use the smartphone sensor introduced in 2017 in the iPhone X has given the world a new method of affordable and convenient facial scanning. It captures more than 250,000 3D data points of a face in 10 s as the patient slowly turns their head in front of the iPhone or iPad. Despite various professional dental applications proclaiming themselves as reliable and accurate solutions, the clinical accuracy has been questionable [1,2].

Dental imaging is a standard clinical procedure that is an important source of information for various purposes. Soft tissue images of the face are important records for evaluating the maxillofacial area in many fields such as orthodontics, orthognathic surgery, and facial plastic surgery, and are used for diagnostic processing, treatment planning, and outcome analysis, among others. The application of 3D scanning methods in healthcare, especially cone beam computed tomography (CBCT) imaging in the field of maxillofacial surgery and orthodontics, has expanded significantly over the last decade [3,4].

CBCT is a three-dimensional (3D) diagnostic X-ray imaging technique which provides craniofacial imaging with low distortion and higher image accuracy than conventional imaging. When the acquired data is processed in the volume, CBCT generates 3D panoramic and cephalometric pictures [5,6]. CBCT also has certain limitations. These devices are being used in dental care mainly to image the hard tissues of the orofacial structure and have a limited capacity to identify soft tissues due to the lack of contrast resolution and texture, limiting their usage for soft tissue analysis. Furthermore, the use of stabilizing tools for CBCT scanning such as chin rests or forehead restraints could deform the surface anatomy of the facial soft tissue, and it is also susceptible to a variety of artifacts, including metal and motion artifacts, which can have a negative impact on image quality [7,8]. To address the lack of soft tissue data provided by CBCT scanners, 3D facial scanners have been integrated into digital routines such as stereophotogrammetry, laser, and structured-light systems, offering a non-ionizing technique for creating a copy of the facial soft tissue with a precise portrayal of texture and static geometry in three dimensions [9] since the texture and color is significant for treatment planning in orthodontics. These complementary methods could be precise, quick, and easy to use.

Utilization of smartphones in combination with artificial intelligence is a common practice in orthodontics today. Artificial intelligence (AI) in the form of dental monitoring software uses the patient’s cell phone for regular scanning. This has advantages in the pandemic era [10], as well as in self-evaluating coaching tele-health solutions [11]. AI is also currently widely used in the diagnosis of 3D facial scans created with smartphones [12].

3D face scanning is a fast-expanding field with enormous potential in a wide range of uses, but it is still new and quite unexplored. As smartphone availability and capabilities expand, so does the potential for 3D face-scanning apps. They have a variety of uses in medicine and dentistry, such as face identification, emotion capturing, facial cosmetic planning and surgery, and maxillofacial rehabilitation.

Facial scanners can generate a 3D topography of a patient’s facial surface anatomy, which, when paired with a digital study model and a CBCT scan, creates a 3D “virtual patient” for improved diagnosis, treatment planning, and patient outcomes [13,14,15].

Recent innovations of devices such as smartphones and tablets have demonstrated that scanning is also possible using LiDAR and TrueDepth technology.

LiDAR, which stands for light detection and ranging, is a radar-like remote sensing technology. The difference is that radar detects its surroundings using radio waves, while LiDAR uses laser light. A LiDAR sensor emits concentrated light beams and measures the time that elapses until their reflections are detected by the sensor [1,16,17,18].
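The time-of-flight principle described above can be illustrated with a minimal sketch. The function name and the 2 ns example value are assumptions chosen for illustration; they do not come from any device specification.

```python
# Speed of light in vacuum in metres per second (exact by SI definition).
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def tof_distance_m(round_trip_time_s: float) -> float:
    """Distance to a surface from the round-trip time of a light pulse."""
    if round_trip_time_s < 0:
        raise ValueError("round-trip time must be non-negative")
    # The pulse travels to the surface and back, so the path is halved.
    return SPEED_OF_LIGHT_M_PER_S * round_trip_time_s / 2.0


# Hypothetical example: a reflection detected 2 nanoseconds after emission
# corresponds to a surface roughly 0.30 m away.
distance = tof_distance_m(2e-9)
print(f"{distance:.4f} m")
```

At face-scanning distances the round-trip times are on the order of nanoseconds, which is why this kind of ranging depends on very precise timing.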

For the 3D reconstruction, depth sensors are used, which have been utilized for a long time in 3D scanners, game systems (Microsoft Kinect, for example), and lately, in laptops and smartphones. These sensors are resilient in a variety of lighting conditions (day or night, with or without glare and shadows), thereby outperforming other sensor types. Sensors may be positioned at the back and at the front of the device. The depth sensors on the front of the unit have a shorter range and can identify and map hundreds of landmarks in real time; they are primarily used to detect the face and produce its 3D image. Their essential use is for biometric smartphone security (e.g., Apple Face ID), to recognize the face of the Apple device owner.

Facial recognition has improved dramatically in only a few years. As of April 2020, the best face identification algorithm has an error rate of just 0.08% compared to 4.1% for the leading algorithm in 2014, according to tests by the National Institute of Standards and Technology (NIST) [19]. To recognize faces in Apple devices (i.e., iPhone X and later), the front-facing cameras with a dot projector provide depth data in real time along with visual information. The core technology responsible for this process is called TrueDepth. The system uses LEDs to project an irregular grid of over 30,000 infrared dots to record depth within a matter of milliseconds [20,21] and can provide a rapid, reliable, and direct method for producing 3D data [1,22,23,24].

TrueDepth scanning (Bellus3D Dental Pro) performed on an iPhone or iPad with a 3D capture system can use an affordable face-scanning program such as Bellus3D FaceApp, which has simple instructions and was created to be precise and accurate in recognizing facial landmarks [25,26,27]. It is available for Android and iPhone devices, is compatible with the Windows 10 operating system, and allows simple export of STL files. The first such hardware and software solution, which used an accessory camera for Android smartphones, was created in March 2015 in Silicon Valley with the aim of generating detailed 3D face scans [28,29]. Later, the company presented the FaceApp application, dedicated to iPhone X users. The functionality of this app is the same as in the Android version, except that instead of using any additional hardware, it uses the front-facing TrueDepth camera in the same way as Apple Face ID.

Facial scanning can provide useful correlative data for many studies that would benefit from regular, noninvasive evaluations of head and neck soft-tissue morphology, as the change in body mass index is not a very representative value when facial morphology is the point of interest [30,31]. Three-dimensionally printed extraoral orthodontic appliances in growing patients would benefit from regular, noninvasive, and reasonably accessible facial scanning [32]. Tsolakis et al. (2022) also present ideas that can be widely utilized for regular evaluations of growth or therapeutic changes of facial morphology [33].

FaceApp captures more than 250,000 3D data points on a face in 10 s while the user slowly turns their head in front of the camera. The app then reconstructs a virtual high-resolution version of the face that can be rotated, zoomed in or out, and viewed in three dimensions. Additionally, the face model can be viewed with interactive lighting, using the device’s gyro to control viewing angles. Apple recently released iOS 15.4, which improves Face ID on the iPhone 12 and iPhone 13 when the user is wearing a mask.

Mobile phone 3D facial scanning in combination with AI algorithms incorporated in a smartphone app, for example, Face2Gene (FDNA Inc., Boston MA, USA), is currently forming a powerful tool for early diagnostics. Diseases not only manifest as internal structural and functional abnormalities, but also have facial characteristics and appearance deformities. Specific facial phenotypes are potential diagnostic markers, especially for endocrine and metabolic syndromes, genetic disorders, and facial neuromuscular diseases [34,35].

The goal of this work was to assess whether the facial scan created with TrueDepth sensors and compiled with the Bellus3D Dental Pro app is accurate compared to the surface of the face from the CBCT, and, in the case of inaccuracies, to determine which facial regions are incorrectly imaged and to what extent.

2. Materials and Methods

This paper focuses on the analysis of deviations between facial soft tissue scans obtained from CBCT and from the TrueDepth scanner using the Bellus 3D FaceApp application. Specific facial areas, attributes, and points were chosen for evaluation in these scans using the programs Invivo 6 Dental (Anatomage Inc., San Jose, CA, USA) and Meshmixer™ (Autodesk®, Inc., San Rafael, CA, USA).

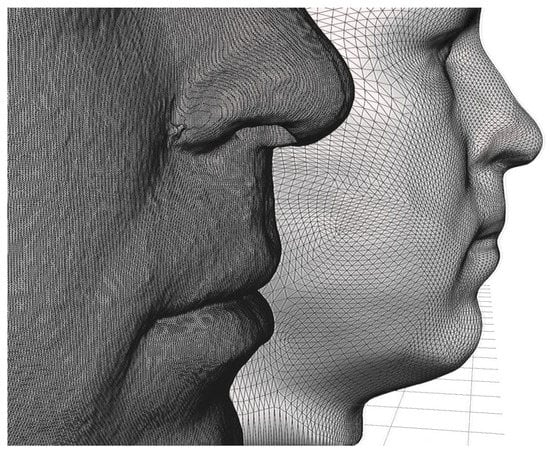

The assessment of the accuracy of the facial scan was based on the CBCT scan, which accurately reflects the true morphology of the face. From the CBCT of the head, a portion representing the face was segmented and exported as an STL shell. The difference between the polygon resolution of the STL from the CBCT and the STL from the face scan (TrueDepth—Bellus3D Dental Pro) is obvious, as shown in Figure 1.

Figure 1.

The difference between the polygon resolution of the STL from the CBCT (in the front) and the STL from the face scan (TrueDepth—Bellus3D Dental Pro) in the back is noticeable.

The methods presented can be divided into 3 parts:

- Collecting scans;

- Modification, positioning, and analysis of the scans;

- Data analysis and comparison.

2.1. Collecting Scans—Selection Criteria

To obtain an extensive set of CBCT–facial scan pairs, it was necessary to apply strict criteria. To avoid differences between the CBCT scan and the facial scan that were unrelated to scanner accuracy, the following criteria were applied:

- Facial expression: only calm, neutral faces with closed mouth and no facial expression were compared;

- Change in BMI: only pairs of scans separated by less than 7 days were included;

- Extreme artifacts: only CBCTs without extensive artifacts were included.

None of the above procedures (CBCT or 3D facial scanning) were performed exclusively for this study; rather, they were part of the diagnostic procedures during orthodontic planning. All included patients signed an informed consent form.

In the first part of our study, we collected 60 CBCT scans of patients (41 women and 19 men) and 60 3D facial scans acquired with the Bellus 3D Dental Pro application using the TrueDepth scanner. Bellus 3D Dental Pro is an application available for iPads and Apple’s iPhone 12 Pro. The patient’s face was scanned from every angle following the application’s instructions. The instructions were clear and easy to follow.

For each scan, patients had to maintain a neutral facial expression and relax both the jaw and eyes into a comfortable resting position. The measurement and display time is less than 10 s while the user rotates the head from left to right, allowing accurate 3D facial models to be created. This application helps capture a person’s face and create a high-resolution STL (Standard Tessellation Language) file, which is a 3D model of that object that can then be loaded into three-dimensional analysis software [25,36,37].

Both types of scans were taken at approximately the same time so that there would be no bias in the results due to weight gain or loss or other physical changes in the patient’s face that might occur over time.

2.2. Modification, Positioning and Analyzing of the Scans

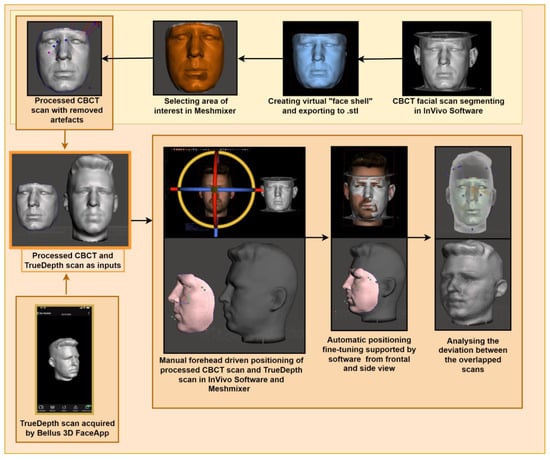

The second part of our research was devoted to modifying and positioning the two scans in the correct positions so that they overlapped and could later be used to analyze specific facial areas, points, and attributes. This process consisted of several steps (Figure 2).

Figure 2.

The schematic approach of the proposed method.

In this work, sixty TrueDepth scans of the face were compared with sixty corresponding facial surfaces segmented from CBCT. The CBCT scans were segmented in Invivo 6 (Anatomage Inc., San Jose, CA, USA) in the Medical Design Studio module. Various programs can be used for the segmentation of CT/CBCT medical data [38]. We used −700 opacity (Isosurface), at which we could clearly see all facial soft tissues. We modified each CBCT scan to create a virtual “face shell” without unnecessary internal head structures. We used the Freehand Volume Sculpting function to remove excess parts of the face. We also changed the subsample in the Isosurface panel to 1. The final scan was saved in the STL file format. This file was opened in Meshmixer™ (Autodesk®, Inc., San Rafael, CA, USA) to process the face scan. We used the Brush function to further modify and select the areas of interest. Then the Optimize and Boundaries functions were used. We used the Invert and Erase functions to obtain the shape of our final face shell. Then, we removed artifacts using the Analysis Inspector function. We then exported this final CBCT scan and saved it again in STL binary format. All 60 CBCT face scans were modified in this way.

We opened the modified final CBCT scans described above and the patients’ 3D TrueDepth model scans together in the Invivo 6 Dental program. We manually positioned them for the best possible overlap. Then, we used the Mesh registrations function to refine the overlap. In this function, we used points that change little between different facial expressions, such as the forehead, temples, and nose. We then exported these positioned TrueDepth model scans in STL binary format.

In the next step, we opened our final CBCT “face shell” model scan and our positioned TrueDepth model scan in the Meshmixer™ (Autodesk®, Inc., San Rafael, CA, USA) program. We used the Analysis–Deviation function with a maximum deviation of 3 mm. This function highlighted specific deviations between the two different scans.
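The idea behind such a deviation map can be sketched in a few lines. This is not Meshmixer's algorithm, which is not described here. It is a simplified stand-in that measures the distance from each vertex of one shell to the nearest vertex of the other (rather than to the nearest point on the surface) and flags distances above 3 mm. All function names and coordinates are hypothetical.

```python
import math


def nearest_distances(test_points, reference_points):
    """For each test point, the distance (mm) to its closest reference point."""
    return [
        min(math.dist(p, q) for q in reference_points)
        for p in test_points
    ]


def exceeds_threshold(distances, threshold_mm=3.0):
    """Flag points whose deviation is greater than the threshold."""
    return [d > threshold_mm for d in distances]


# Hypothetical vertices in millimetres (not study data).
reference = [(0.0, 0.0, 0.0), (10.0, 0.0, 0.0), (0.0, 10.0, 0.0)]
scan = [(0.0, 0.0, 1.0), (10.0, 0.0, 4.0), (0.0, 10.0, 0.5)]

deviations = nearest_distances(scan, reference)  # [1.0, 4.0, 0.5]
flags = exceeds_threshold(deviations)            # [False, True, False]
print(deviations, flags)
```

The brute-force search is quadratic in the number of vertices, so a real mesh with hundreds of thousands of points would need a spatial index. The sketch only shows what the colored map encodes.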

2.3. Data Analysis and Comparison

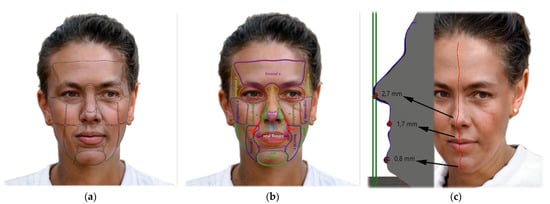

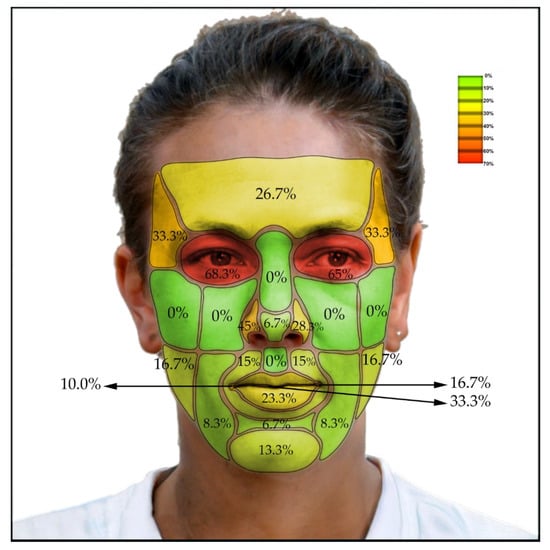

In the third part of the research, we created a chart with different facial areas, points, and attributes. We measured 21 facial locations and the deviations between the CBCT and TrueDepth scans at each of them (Figure 3). For each patient, we recorded whether a deviation was present at each facial location. We focused specifically on the deviations along the Aesthetic and Harmony lines of these scans. We then clinically evaluated the amount of deviation of the TrueDepth scans from the CBCT scans in order to draw conclusions about the potential use of the TrueDepth scanner in dental medicine (Table 1). We considered a deviation of 3 mm or more to be clinically relevant and to call the suitability of the method into question.
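The per-location bookkeeping described above amounts to a presence/absence table that is later summarized as percentages. A minimal sketch, assuming one boolean per patient and location, is shown below. The data layout, function name, and values are illustrative assumptions, not the study's spreadsheet.

```python
def deviation_percentages(records):
    """Percentage of patients with a recorded deviation at each location.

    `records` maps a location name to a list of booleans, one per patient
    (True = a clinically relevant deviation was observed).
    """
    return {
        location: 100.0 * sum(flags) / len(flags)
        for location, flags in records.items()
        if flags  # skip locations without any observations
    }


# Hypothetical records for four patients (not study data).
records = {
    "apex of nose": [False, False, False, False],
    "oral fissure": [True, False, True, False],
    "orbital region (right)": [True, True, True, False],
}

percentages = deviation_percentages(records)
for location, pct in percentages.items():
    print(f"{location}: {pct:.1f}%")
```

With the study's sample size of 60, each patient contributes about 1.7 percentage points, so a value such as 68.3% corresponds to 41 of 60 patients.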

Figure 3.

An AI face generator was used to create this androgynous face. It shows: (a) Observed facial locations; (b) Abbreviations of locations used: region (r.), parotid-masseteric region (pmr), labial commissure (lc), ala nasi (an), apex of nose (apn), sulcus nasolabialis (sn), sulcus mentolabialis (sm), vermillion border (vb), philtrum (phil); (c) Example of comparison of sagittal sections of the facial profile of the CBCT surface shell and the Bellus 3D shell. In this way, the differences between the shells were measured and the results of the differential maps were checked in the median plane.

Table 1.

Clinical evaluation of amount of deviation between CBCT and 3D TrueDepth (Bellus3D Dental Pro) scans.

It is known that even a subtle facial expression may cause significant volumetric changes in the face [39]. As any facial expressions identified on CBCT or facial scans were excluded from the sample, only minimal, unrecognizable differences, estimated at up to two millimeters, could have escaped the attention of the evaluators. It is also known that the skin of the face undergoes circadian volumetric changes throughout the day and is also dependent on water intake and body metabolism, with changes that typically do not exceed 2 mm [40,41,42,43].

As the process of superimposition can be inaccurate, two independent operators performed the superimposition of the 3D mesh pairs using the automated best-fit algorithm. The two operators reached 100% agreement in the evaluation of matching discrepancies between scans, which eliminated the need for another operator or calibration of the evaluators. The mesh surfaces used for alignment by the best-fit algorithm were predominantly the forehead area and other large surfaces of the face, including the cheekbone area, which is not affected by the artifacts at the margins of the CBCT volume or by artifacts caused by metallic dental fillings or prosthetic work. The CBCT scan was considered the reference, similar to a study published by Revilla-León et al. in 2021 [44]. That study evaluated the difference between CBCT and Face Camera Pro Bellus and also confirmed a non-normal distribution of trueness and precision values (p < 0.05).

Following these findings and considerations, a professional clinical orthodontic consideration was made to define differences greater than 3 mm as clinically relevant to compensate for potential bias in the range less than 3 mm that might result from subtle changes in facial expression or variations in skin volume during circadian cycles.

Differences between the aligned 3D meshes were visualized as heatmaps that disregarded positive or negative overlaps in favor of the absolute difference between the mesh surfaces. This absolute difference also does not reference any particular cephalometric points in (x, y, z) directions.

To compare the results in women and men, we used Fisher’s exact test in the contingency tables because the expected frequencies were low in most cases. All tests were performed at a significance level of 0.05.
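For readers unfamiliar with the test, a two-sided Fisher's exact test on a 2×2 contingency table can be sketched as follows. The analysis in this paper was run in statistical software. The function below is only an illustrative reimplementation using the common convention of summing all tables that are no more probable than the observed one, and the counts in the example are hypothetical.

```python
from math import comb


def fisher_exact_two_sided(a, b, c, d):
    """Two-sided Fisher's exact test p-value for the table [[a, b], [c, d]]."""
    row1, row2 = a + b, c + d
    col1 = a + c
    denom = comb(row1 + row2, col1)

    def prob(x):
        # Hypergeometric probability of x in the top-left cell,
        # given fixed row and column totals.
        return comb(row1, x) * comb(row2, col1 - x) / denom

    p_observed = prob(a)
    lowest = max(0, col1 - row2)
    highest = min(row1, col1)
    # Sum the probabilities of all tables no more likely than the observed one;
    # the small relative tolerance guards against floating-point ties.
    p_value = sum(
        prob(x)
        for x in range(lowest, highest + 1)
        if prob(x) <= p_observed * (1 + 1e-9)
    )
    return min(1.0, p_value)


# Hypothetical counts (not study data): rows are men and women,
# columns are "deviation > 3 mm" and "no deviation".
p = fisher_exact_two_sided(10, 9, 8, 33)
print(f"p = {p:.4f}, significant at 0.05: {p < 0.05}")
```

The exact test is preferred over the chi-square approximation when expected cell counts are small, which is the situation described for this sample.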

To compare the scans from the lateral view, we selected seven different sites: the frontal region, nasal tip, philtrum, vermillion border, oral fissure, mentolabial sulcus, and mental region. To compare these seven sites, we used the Friedman test. Since the Friedman test is not parametric, it uses ranks. The higher the value, the higher the rank.
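The ranking step of the Friedman test can be sketched as follows: within each patient, the measured values at the sites are ranked, and the ranks are then averaged per site. The sketch also computes the basic Friedman chi-square statistic without a tie correction, which statistical packages may apply. The function names and numbers are illustrative assumptions.

```python
def average_ranks(values):
    """Rank values ascending (1 = smallest); tied values share the mean rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with the one at position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        shared = (i + j) / 2.0 + 1.0  # mean of the 1-based positions i..j
        for k in range(i, j + 1):
            ranks[order[k]] = shared
        i = j + 1
    return ranks


def friedman(rows):
    """Mean rank per column and the Friedman chi-square (no tie correction)."""
    n, k = len(rows), len(rows[0])
    rank_sums = [0.0] * k
    for row in rows:
        for col, rank in enumerate(average_ranks(row)):
            rank_sums[col] += rank
    mean_ranks = [s / n for s in rank_sums]
    chi2 = 12.0 / (n * k * (k + 1)) * sum(s * s for s in rank_sums) - 3.0 * n * (k + 1)
    return mean_ranks, chi2


# Hypothetical deviations in mm: one row per patient, one column per site.
rows = [
    [0.5, 1.2, 2.8],
    [0.7, 1.0, 3.1],
    [0.4, 1.5, 2.0],
]
mean_ranks, chi2 = friedman(rows)
print(mean_ranks, round(chi2, 3))
```

A site with a higher mean rank tends to show larger deviations than the other sites within the same patient, which is how the mean ranks reported for the seven lateral-view sites should be read.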

The statistics were analyzed in the statistical software IBM SPSS 21.

3. Results

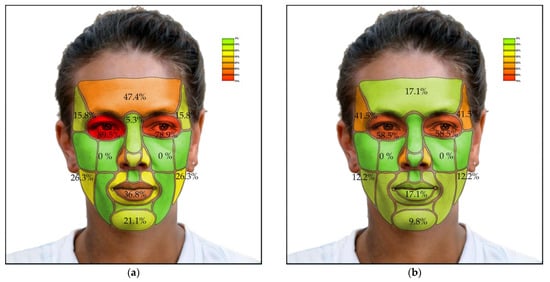

The amount of clinically relevant deviation (differences greater than 3 mm) was measured between the TrueDepth (Bellus3D Dental Pro) scan and the CBCT scan at specific locations on the face (Table 2). The lowest total deviation (less than or equal to 10%) was observed at the tip of the nose, bridge of the nose, nasolabial sulcus, philtrum, mentolabial sulcus, zygomatic bone, infraorbital region, and cheek region. A higher number of deviations (more than 30%) was observed on the right ala nasi, oral fissure, temporal region, and orbital region, with the highest values in the orbital region (65 and 68.3%).

Table 2.

The amount of deviation of TrueDepth (Bellus3D Dental Pro) scans from CBCT scans. First column represents the facial location; second the percentage of men with deviation more than 3 mm between these scans; third column, the same as the second but in women; and the last column represents the difference between men and women evaluated using Fisher’s exact test. In the last column, bold values represent significant differences.

Some differences were found between the right and left parts of some paired regions, such as in the ala nasi and the labial commissure. Significant differences in the amounts of deviations were also observed in males compared with females, statistically confirmed by Fisher’s exact 2-sided test in the frontal and right orbital regions. The amounts of clinically relevant deviations of all measured facial regions between the two scans in men, women, and overall are indicated by colored difference maps in Figure 4a,b and Figure 5. As can be seen in the figures, the deviations are lowest in the mid and lower facial regions, with higher accuracy in the facial prominences and lower accuracy in the deeper structures.

In addition, a double-check comparison was performed in seven regions visible from the lateral view of both aligned facial shells using the Friedman test. Since the Friedman test is non-parametric, it works with ranks. The highest average rank was for the oral fissure (4.94), and the lowest was for the sulcus mentolabialis (2.8) (Table 3).

Table 3.

The comparison of seven locations visible from the side view of the overlapped scans using the Friedman test. The locations are arranged from lowest to highest rank.

Figure 4a shows the percentage of deviation in TrueDepth scans from CBCT scans in men and Figure 4b in women. Figure 5 shows the complete results for both.

Figure 4.

The percentage of deviation in TrueDepth scans from CBCT scans: (a) Results in men; (b) Results in women.

Figure 5.

The percentage of deviation in TrueDepth (Bellus3D Dental Pro) scans from CBCT scans—all results together.

4. Discussion

Our results confirm that scans created with TrueDepth sensors (Bellus3D Dental Pro App) are more accurate than one would think at first glance. The study shows that the prominences of the face are imaged more accurately, whereas the accuracy of the concave structures is significantly worse, and that accuracy is higher in the middle and lower regions of the face. On the other hand, a deviation of 3 mm is still a significant difference from the point of view of orthodontic treatment in terms of micro-aesthetics, though not for the general area of the face. The era of facially driven orthodontics enabled by affordable, noninvasive facial scanning technologies will begin alongside increased capabilities of ubiquitous facial scanners in the form of cell phones and tablets. For macro-proportional facial assessment, the technology is already mature. However, for micro-aesthetic assessment, particularly around the lips, it is not mature yet.

It can be said that the cell phone scanning approach cannot yet compete with CBCT scans in terms of accuracy, although it clearly dominates in terms of availability and noninvasiveness. Higher accuracy in CBCT scans improves the quality of the data recorded by the scanner, which ultimately improves the outcome. The current accuracy of the TrueDepth sensor used with the Bellus3D Dental Pro application is not sufficient to achieve high facial accuracy and therefore cannot be used for detailed orthodontic treatment planning.

CBCT scans, which were used as the gold standard in this study, are not the ideal imaging modality for 3D facial soft tissue evaluations. Primarily, stereophotogrammetry should have been considered for evaluating the accuracy of the captured facial image from the TrueDepth technology of the smartphone. Possible errors in heatmap regional evaluation were reduced with assessment by two independent evaluators that were in agreement on all evaluated pairs, as well as repeated alignment by the described method, which never resulted in significantly different alignments by any factor.

The reproducibility of the 3D images captured using smartphones with Bellus3D Pro was reasonable. With proper posture as a strict requirement of the face-scanning procedure, the resulting facial scans had submillimeter discrepancies. Poor repeatability of facial scanning may negatively affect the validity of the method.

In a recent study by D’Ettorre et al. (2022) [45], the surface-to-surface deviation analysis between Bellus3D and 3dMD (stereophotogrammetry) showed an overlap percentage of 80.01% ± 5.92% within the range of 1 mm discrepancy. A recent systematic review focusing on stereophotogrammetry and smartphone technology by Quinzi et al. (2022) concluded that “Stationary stereophotogrammetry devices showed a mean accuracy that ranged from 0.087 to 0.860 mm, portable stereophotogrammetry scanners from 0.150 to 0.849 mm, and smartphones from 0.460 to 1.400 mm.” [46]. The volumetric estimation errors are typically larger in smartphone scanning than in photogrammetry [47].
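An overlap percentage of this kind is usually read as the share of surface points whose deviation lies within a tolerance. A minimal sketch under that assumption follows. The function name, the use of signed per-point distances, and the values are hypothetical and are not taken from the cited studies.

```python
def overlap_percentage(distances_mm, tolerance_mm=1.0):
    """Share of points (in %) whose absolute deviation is within the tolerance."""
    if not distances_mm:
        raise ValueError("at least one distance is required")
    # The sign of a deviation (in front of or behind the reference surface)
    # is ignored; only its magnitude counts.
    within = sum(1 for d in distances_mm if abs(d) <= tolerance_mm)
    return 100.0 * within / len(distances_mm)


# Hypothetical signed surface-to-surface distances in mm (not study data).
distances = [0.2, -0.8, 1.4, 0.9, -2.1]
print(overlap_percentage(distances))       # 3 of 5 points within 1 mm -> 60.0
print(overlap_percentage(distances, 3.0))  # all points within 3 mm -> 100.0
```

The same summary with a 3 mm tolerance mirrors the clinical threshold used in this study, which makes results reported at different tolerances easier to compare.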

A limitation of this study is the small initial number of participants, where the ratio of women and men was not balanced. This could cause unexpected differences between men and women or between the right and left sides of some paired facial regions.

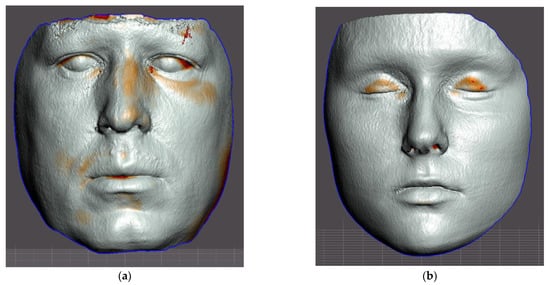

Another limitation of this study is the comparison of the temporal and frontal regions between the CBCT and the TrueDepth scans, as the CBCT scans suffered from major artifacts in these regions. The upper border of the CBCT was typically located at the middle horizontal level of the frontal bone, which produced many artifacts. Despite cropping these artifacts, the data of the upper part of the forehead often contained artifacts or were missing in the CBCT scans (Figure 6a). As for why the discrepancy was observed most frequently in the orbital region, the probable explanation is the difference between closed and open eyelids. Closed eyes were typical in CBCT scans, whereas open eyes in the TrueDepth scan could influence the result (Figure 6b).

Figure 6.

Examples of aligned segmented CBCT face surfaces and face TrueDepth–Bellus3D scans of authors of this paper. On the resulting differential maps (heatmaps), red indicates differences between both scans: (a) Left surface shows frequent disruptive artifacts on the upper border of CBCT scan; (b) The right scan shows frequent differences resulting from eyes closed during the CBCT scanning.

Sensors are frequently utilized in orthodontics [48,49]. According to the recent study of Cho et al. (2022), “From the 3D CT images, 3D models, also called digital impressions, can be computed for CAD/CAM-based fabrication of dental restorations or orthodontic devices. However, the cone-beam angle-dependent artifacts, mostly caused by the incompleteness of the projection data acquired in the circular cone-beam scan geometry, can induce significant errors in the 3D models.” [50]. The research of Pojda et al. (2021) also confirms the need for cheaper and more convenient alternatives for orthodontic facial 3D imaging [51]. Sensors for optical scanning and high-definition CT scanning (microCT) are frequently utilized at the border between orthodontics and forensic dentistry in the identification of dental patterns, opening new possibilities for the identification of human remains [52].

A key context to this discussion is that the TrueDepth scanner available on an Apple smartphone or tablet has an advantage in terms of cost and availability, the scans can be performed in a short time with real-time processing, and, of course, the scans can be performed without the patient receiving radiation. In addition, it could open new opportunities for telemedicine applications in dental practices.

Moreover, the TrueDepth scanner, which may be used on certain Apple devices, has tremendous potential, and could potentially be used in a variety of medical settings in the future. Given the pace of development of smartphones and applications, we can predict that the precision and quality of scans will gradually improve.

5. Conclusions

The results confirmed statistically significant differences between facial surfaces from CBCT and TrueDepth (Bellus3D Dental Pro cell phone application) scans in some facial regions with amplitudes greater than 3 mm. This suggests that the current TrueDepth sensor from Apple has limited clinical use in orthodontic applications. However, clinical application of the described approach in orthodontic facial analysis, which does not require accuracy within 3 mm, can be considered.

Author Contributions

Conceptualization, A.T. and V.K.; Data curation, R.H., K.R. and V.K.; Formal analysis, A.T., R.H., K.R. and V.K.; Funding acquisition, A.T.; Investigation, A.T., K.R. and V.K.; Methodology, A.T. and V.K.; Project administration, A.T.; Resources, A.T.; Software, A.T., R.H. and V.K.; Supervision, A.T. and V.K.; Validation, A.T., R.H., K.R. and V.K.; Visualization, A.T. and V.K.; Writing—original draft, A.T., M.S., R.H., K.R., R.U., J.S. and V.K.; Writing—review & editing, A.T., M.S., R.U. and V.K. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

The analyzed data were anonymized. Because the data collection was part of routine clinical procedures and written informed consent was obtained for each case, IRB approval was waived.

Informed Consent Statement

Written informed consent was obtained from all subjects involved in the study prior to undertaking the orthodontic aligner therapy.

Data Availability Statement

Anonymized data supporting the reported results are freely available at: https://docs.google.com/spreadsheets/d/1wfbPd66RdPE4EIjg6c4jTTp5-kJH5dBWIPBwEODouMw/edit?usp=sharing (accessed on 12 September 2022). The authors ensure that the data shared are in accordance with the consent provided by participants on the use of confidential data.

Acknowledgments

We acknowledge the technological support of the digital dental lab infrastructure of 3Dent Medical Ltd., Bratislava, Slovakia, as well as the dental clinic Sangre Azul Ltd., Bratislava, Slovakia. We also acknowledge L. Wsolová and I. Waczulíkova for their contribution in providing the statistical analysis of our data.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Vogt, M.; Rips, A.; Emmelmann, C. Comparison of iPad Pro®’s LiDAR and TrueDepth Capabilities with an Industrial 3D Scanning Solution. Technologies 2021, 9, 25.

- Breitbarth, A.; Schardt, T.; Kind, C.; Brinkmann, J.; Dittrich, P.-G.; Notni, G. Measurement Accuracy and Dependence on External Influences of the iPhone X TrueDepth Sensor. Photonics Educ. Meas. Sci. 2019, 11144, 27–33.

- Pellitteri, F.; Brucculeri, L.; Spedicato, G.A.; Siciliani, G.; Lombardo, L. Comparison of the Accuracy of Digital Face Scans Obtained by Two Different Scanners: An in Vivo Study. Angle Orthod. 2021, 91, 641–649.

- Alhammadi, M.S.; Al-Mashraqi, A.A.; Alnami, R.H.; Ashqar, N.M.; Alamir, O.H.; Halboub, E.; Reda, R.; Testarelli, L.; Patil, S. Diagnostics Accuracy and Reproducibility of Facial Measurements of Digital Photographs and Wrapped Cone Beam Computed Tomography (CBCT) Photographs. Diagnostics 2021, 11, 757.

- Ezhov, M.; Gusarev, M.; Golitsyna, M.; Yates, J.M.; Kushnerev, E.; Tamimi, D.; Aksoy, S.; Shumilov, E.; Sanders, A.; Orhan, K. Clinically Applicable Artificial Intelligence System for Dental Diagnosis with CBCT. Sci. Rep. 2021, 11, 15006.

- Pauwels, R. What Is CBCT and How Does It Work? In Maxillofacial Cone Beam Computed Tomography: Principles, Techniques and Clinical Applications; Springer: Berlin/Heidelberg, Germany, 2018; pp. 13–42.

- Jain, S.; Choudhary, K.; Nagi, R.; Shukla, S.; Kaur, N.; Grover, D. New Evolution of Cone-Beam Computed Tomography in Dentistry: Combining Digital Technologies. Imaging Sci. Dent. 2019, 49, 179–190.

- Mao, W.-Y.; Lei, J.; Lim, L.Z.; Gao, Y.; Tyndall, D.A.; Fu, K. Comparison of Radiographical Characteristics and Diagnostic Accuracy of Intraosseous Jaw Lesions on Panoramic Radiographs and CBCT. Dentomaxillofac. Radiol. 2021, 50, 20200165.

- Shujaat, S.; Bornstein, M.M.; Price, J.B.; Jacobs, R. Integration of Imaging Modalities in Digital Dental Workflows—Possibilities, Limitations, and Potential Future Developments. Dentomaxillofac. Radiol. 2021, 50, 20210268.

- Thurzo, A.; Urbanová, W.; Waczulíková, I.; Kurilová, V.; Mriňáková, B.; Kosnáčová, H.; Gális, B.; Varga, I.; Matajs, M.; Novák, B. Dental Care and Education Facing Highly Transmissible SARS-CoV-2 Variants: Prospective Biosafety Setting: Prospective, Single-Arm, Single-Center Study. Int. J. Environ. Res. Public Health 2022, 19, 7693.

- Thurzo, A.; Kurilová, V.; Varga, I. Artificial Intelligence in Orthodontic Smart Application for Treatment Coaching and Its Impact on Clinical Performance of Patients Monitored with AI-Telehealth System. Healthcare 2021, 9, 1695.

- Thurzo, A.; Urbanová, W.; Novák, B.; Czako, L.; Siebert, T.; Stano, P.; Mareková, S.; Fountoulaki, G.; Kosnáčová, H.; Varga, I. Where Is the Artificial Intelligence Applied in Dentistry? Systematic Review and Literature Analysis. Healthcare 2022, 10, 1269.

- Dzelzkalēja, L.; Knēts, J.K.; Rozenovskis, N.; Sīlītis, A. Mobile Apps for 3D Face Scanning. Lect. Notes Netw. Syst. 2022, 295, 34–50.

- Amornvit, P.; Sanohkan, S. The Accuracy of Digital Face Scans Obtained from 3D Scanners: An In Vitro Study. Int. J. Environ. Res. Public Health 2019, 16, 5061.

- Gupta, M. Digital Diagnosis and Treatment Planning. In Digitization in Dentistry; Springer Nature: Berlin, Germany, 2021; pp. 29–63.

- BCC Research Editorial. Brief History of LiDAR, Its Evolution and Market Definition. Available online: https://blog.bccresearch.com/brief-history-of-lidar-evolution-and-market-definition (accessed on 30 July 2022).

- Schmid, K.; Carter, J.; Waters, K.; Betzhold, L.; Hadley, B.; Mataosky, R.; Halleran, J. Lidar 101: An Introduction to Lidar Technology, Data, and Applications; NOAA: Washington, DC, USA, 2012.

- Cruz, R.D.; Malong, B. Reality Bytes: It’s Real Life, Only Better; Outlook Publishing India Pvt. Ltd.: New Delhi, India, 2020.

- How Accurate Are Facial Recognition Systems—and Why Does It Matter?|Center for Strategic and International Studies. Available online: https://www.csis.org/blogs/technology-policy-blog/how-accurate-are-facial-recognition-systems-%E2%80%93-and-why-does-it-matter (accessed on 3 September 2022).

- TrueDepth|Apple Wiki|Fandom. Available online: https://apple.fandom.com/wiki/TrueDepth (accessed on 3 September 2022).

- What Is Apple Face ID and How Does It Work? Available online: https://www.pocket-lint.com/phones/news/apple/142207-what-is-apple-face-id-and-how-does-it-work (accessed on 3 September 2022).

- Kołakowska, A.; Szwoch, W.; Szwoch, M. A Review of Emotion Recognition Methods Based on Data Acquired via Smartphone. Sensors 2020, 20, 6367.

- Wu, Y.; Wang, Y.; Zhang, S.; Ogai, H. Deep 3D Object Detection Networks Using LiDAR Data: A Review. IEEE Sens. J. 2020, 21, 1152–1171.

- Ko, K.; Gwak, H.; Thoummala, N.; Kwon, H.; Kim, S. SqueezeFace: Integrative Face Recognition Methods with LiDAR Sensors. J. Sens. 2021, 2021, 4312245.

- Cameron, A.; Custódio, A.L.N.; Bakr, M.; Reher, P. A Simplified CAD/CAM Extraoral Surgical Guide for Therapeutic Injections. J. Dent. Anesthesia Pain Med. 2021, 21, 253–260.

- Alazzam, A.; Aljarba, S.; Alshomer, F.; Alawirdhi, B. The Utility of Smartphone 3D Scanning, Open-Sourced Computer-Aided Design, and Desktop 3D Printing in the Surgical Planning of Microtia Reconstruction: A Step by Step Guide and Concept Assessment. JPRAS Open 2021, 30, 17–22.

- Alisha, K.H.; Batra, P.; Raghavan, S.; Sharma, K.; Talwar, A. A New Frame for Orienting Infants with Cleft Lip and Palate during 3-Dimensional Facial Scanning. Cleft Palate-Craniofacial J. 2021, 59, 946–950.

- Jayaratne, Y.S.; McGrath, C.P.; Zwahlen, R.A. A Comparison of CBCT Soft Tissue with Bellus3D Facial Scan Superimposition. PLoS ONE 2012, 7, e49585.

- Here Is How Face ID with a Mask Works to Unlock Your iPhone|NextPit. Available online: https://www.nextpit.com/how-to-use-face-id-with-mask-ios-iphone (accessed on 3 September 2022).

- Fountoulaki, G.; Thurzo, A. Change in the Constricted Airway in Patients after Clear Aligner Treatment: A Retrospective Study. Diagnostics 2022, 12, 2201.

- Tsolakis, I.A.; Palomo, J.M.; Matthaios, S.; Tsolakis, A.I. Dental and Skeletal Side Effects of Oral Appliances Used for the Treatment of Obstructive Sleep Apnea and Snoring in Adult Patients—A Systematic Review and Meta-Analysis. J. Pers. Med. 2022, 12, 483.

- Tsolakis, I.A.; Gizani, S.; Tsolakis, A.I.; Panayi, N. Three-Dimensional-Printed Customized Orthodontic and Pedodontic Appliances: A Critical Review of a New Era for Treatment. Children 2022, 9, 1107.

- Tsolakis, I.A.; Verikokos, C.; Perrea, D.; Bitsanis, E.; Tsolakis, A.I. Effects of Diet Consistency on Mandibular Growth. A Review. J. Hell. Veter. Med. Soc. 2019, 70, 1603–1610.

- Qiang, J.; Wu, D.; Du, H.; Zhu, H.; Chen, S.; Pan, H. Review on Facial-Recognition-Based Applications in Disease Diagnosis. Bioengineering 2022, 9, 273.

- Latorre-Pellicer, A.; Ascaso, Á.; Trujillano, L.; Gil-Salvador, M.; Arnedo, M.; Lucia-Campos, C.; Antoñanzas-Pérez, R.; Marcos-Alcalde, I.; Parenti, I.; Bueno-Lozano, G.; et al. Evaluating Face2Gene as a Tool to Identify Cornelia de Lange Syndrome by Facial Phenotypes. Int. J. Mol. Sci. 2020, 21, 1042.

- Wu, Z.; Zhang, Q. A Portable 3D Shape Measurement System Developed with a Smartphone. 2019. Available online: https://doi.org/10.13140/RG.2.2.21942.45126 (accessed on 10 October 2022).

- Rudy, H.L.; Wake, N.; Yee, J.; Garfein, E.S.; Tepper, O.M. Three-Dimensional Facial Scanning at the Fingertips of Patients and Surgeons: Accuracy and Precision Testing of iPhone X Three-Dimensional Scanner. Plast. Reconstr. Surg. 2020, 146, 1407–1417.

- Mandolini, M.; Brunzini, A.; Facco, G.; Mazzoli, A.; Forcellese, A.; Gigante, A. Comparison of Three 3D Segmentation Software Tools for Hip Surgical Planning. Sensors 2022, 22, 5242.

- Rawlani, R.; Qureshi, H.; Rawlani, V.; Turin, S.Y.; Mustoe, T.A. Volumetric Changes of the Mid and Lower Face with Animation and the Standardization of Three-Dimensional Facial Imaging. Plast. Reconstr. Surg. 2019, 143, 76–85.

- le Fur, I.; Reinberg, A.; Lopez, S.; Morizot, F.; Mechkouri, M.; Tschachler, E. Analysis of Circadian and Ultradian Rhythms of Skin Surface Properties of Face and Forearm of Healthy Women. J. Investig. Dermatol. 2001, 117, 718–724.

- Reinberg, A.; Koulbanis, C.; Soudant, E.; Nicolai, A.; Mechkouri, M.; Smolensky, M. Day-Night Differences in Effects of Cosmetic Treatments on Facial Skin. Effects on Facial Skin Appearance. Chronobiol. Int. 2009, 7, 69–79.

- Ferrario, V.F.; Sforza, C.; Poggio, C.E.; Schmitz, J.H. Facial Volume Changes During Normal Human Growth and Development. Anat. Rec. 1998, 250, 480–487.

- Mailey, B.; Baker, J.L.; Hosseini, A.; Collins, J.; Suliman, A.; Wallace, A.M.; Cohen, S.R. Evaluation of Facial Volume Changes after Rejuvenation Surgery Using a 3-Dimensional Camera. Aesthetic Surg. J. 2015, 36, 379–387.

- Revilla-León, M.; Zandinejad, A.; Nair, M.K.; Barmak, B.A.; Feilzer, A.J.; Özcan, M. Accuracy of a Patient 3-Dimensional Virtual Representation Obtained from the Superimposition of Facial and Intraoral Scans Guided by Extraoral and Intraoral Scan Body Systems. J. Prosthet. Dent. 2021.

- D’Ettorre, G.; Farronato, M.; Candida, E.; Quinzi, V.; Grippaudo, C. A Comparison between Stereophotogrammetry and Smartphone Structured Light Technology for Three-Dimensional Face Scanning. Angle Orthod. 2022, 92, 358–363.

- Quinzi, V.; Polizzi, A.; Ronsivalle, V.; Santonocito, S.; Conforte, C.; Manenti, R.J.; Isola, G.; Giudice, A. Facial Scanning Accuracy with Stereophotogrammetry and Smartphone Technology in Children: A Systematic Review. Children 2022, 9, 1390.

- Saif, W.; Alshibani, A. Smartphone-Based Photogrammetry Assessment in Comparison with a Compact Camera for Construction Management Applications. Appl. Sci. 2022, 12, 1053.

- Nahajowski, M.; Lis, J.; Sarul, M. The Use of Microsensors to Assess the Daily Wear Time of Removable Orthodontic Appliances: A Prospective Cohort Study. Sensors 2022, 22, 2435.

- Chae, J.M.; Rogowski, L.; Mandair, S.; Bay, R.C.; Park, J.H. A CBCT Evaluation of Midpalatal Bone Density in Various Skeletal Patterns. Sensors 2021, 21, 7812.

- Cho, M.H.; Hegazy, M.A.A.; Cho, M.H.; Lee, S.Y. Cone-Beam Angle Dependency of 3D Models Computed from Cone-Beam CT Images. Sensors 2022, 22, 1253.

- Pojda, D.; Tomaka, A.A.; Luchowski, L.; Tarnawski, M. Integration and Application of Multimodal Measurement Techniques: Relevance of Photogrammetry to Orthodontics. Sensors 2021, 21, 8026.

- Thurzo, A.; Jančovičová, V.; Hain, M.; Thurzo, M.; Novák, B.; Kosnáčová, H.; Lehotská, V.; Moravanský, N.; Varga, I. Human Remains Identification Using Micro-CT, Spectroscopic and A.I. Methods in Forensic Experimental Reconstruction of Dental Patterns after Concentrated Acid Significant Impact. Molecules 2022, 27, 4035.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).