Assessment of the Performance of a Portable, Low-Cost and Open-Source Device for Luminance Mapping through a DIY Approach for Massive Application from a Human-Centred Perspective

Abstract

1. Introduction

- How does a low-cost camera respond, compared with a professional camera photometer, in different controlled environments with different light sources?

- Is there a considerable difference between the luminance values of the low-cost camera and those of the professional one, and can a correction factor differentiated by lighting system be applied?

- Finally, can an even simpler algorithm automatically adjust the luminance distribution of the low-cost system, according to the lighting system, so that it matches that of the professional camera?

2. Materials and Methods

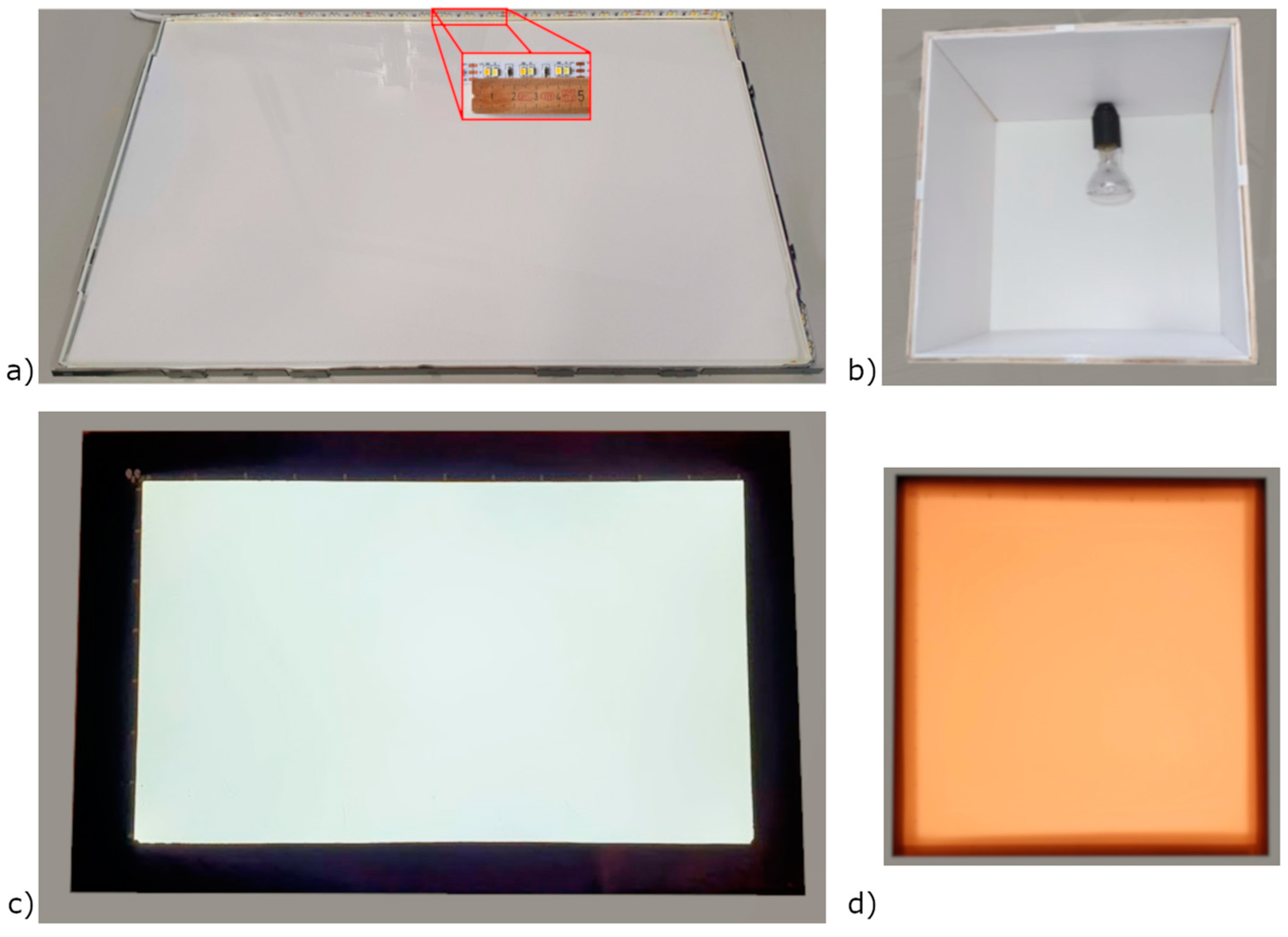

2.1. Lighting Panels Used as a Reference Luminance Source

- An aluminium frame, with the LED strip located on its long sides;

- An ethylene vinyl acetate (EVA) layer;

- A reflective paper;

- A light guide panel;

- A diffuser paper.

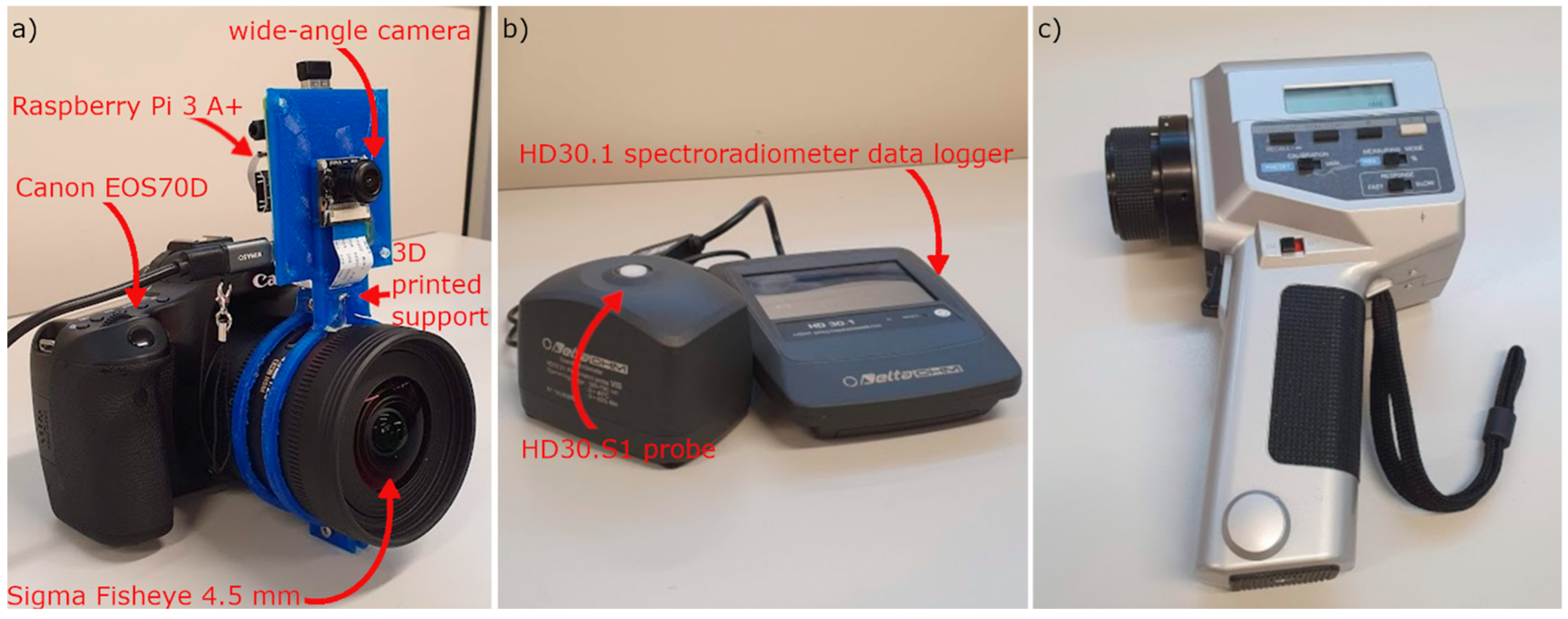

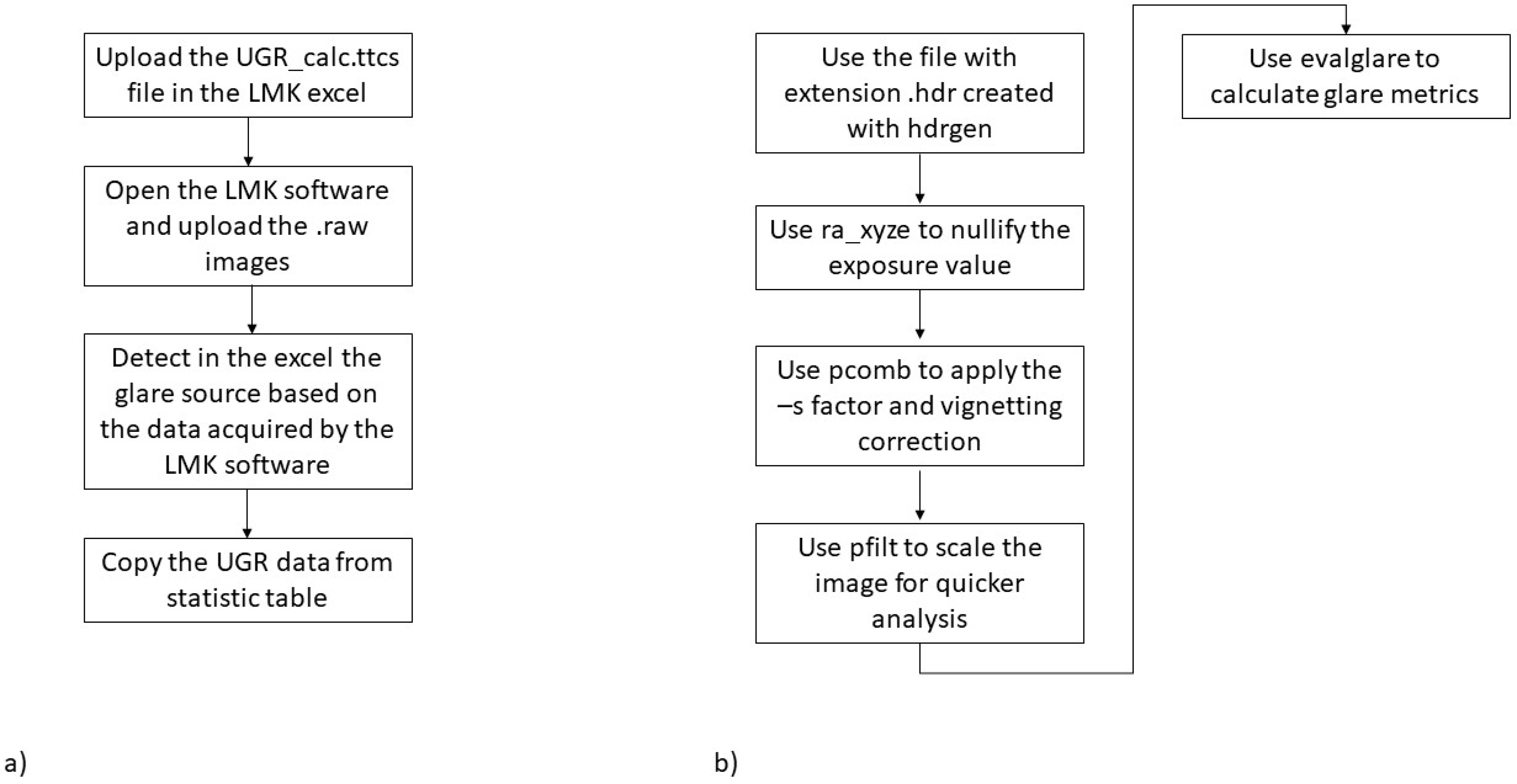

2.2. Equipment Used and Flowchart Used to Acquire the High Dynamic Range Images

2.3. Final Setup

3. Results

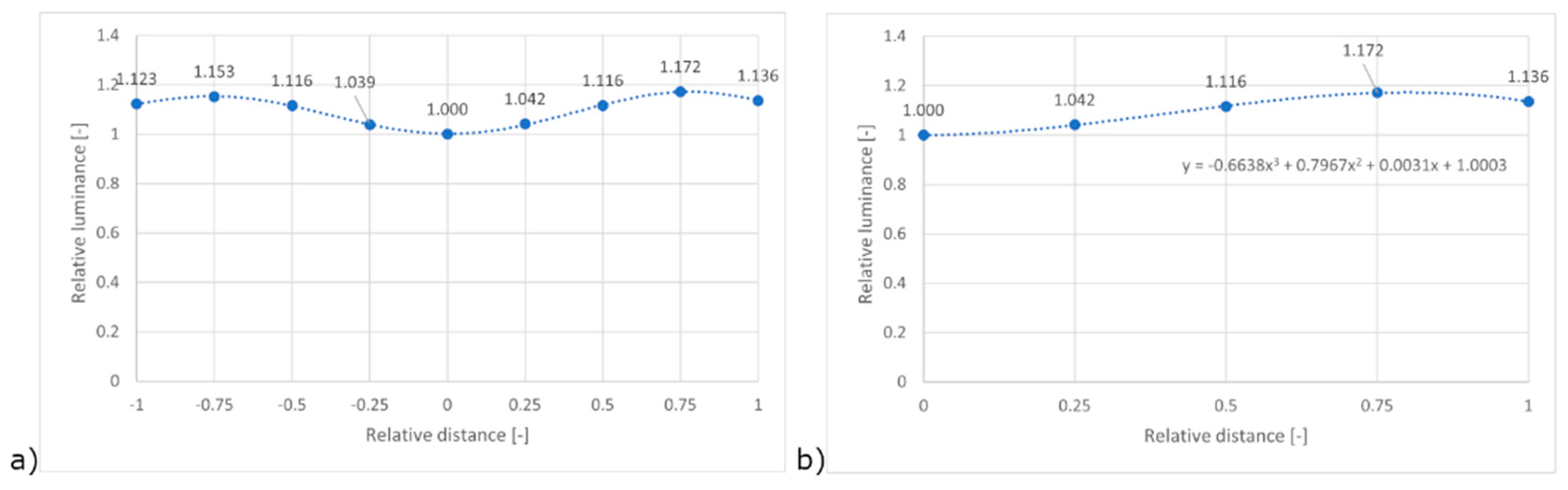

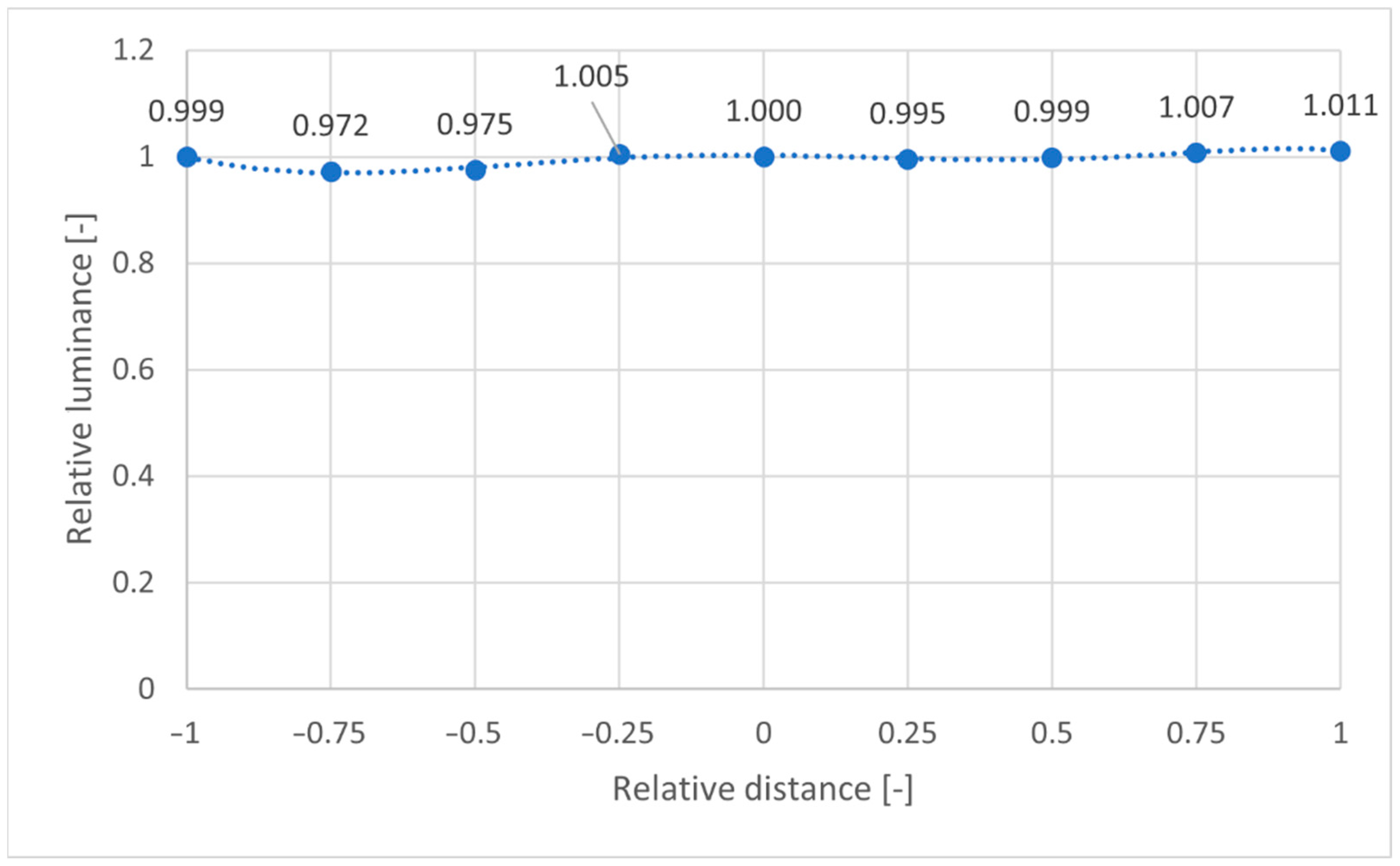

3.1. Vignetting Assessment

- With the software correction of the low-cost camera applied as described above, the centre of the image records lower luminance values than the areas towards the corners of the image.

- It is possible to confirm the symmetrical distribution of the values, in line with expectations.

3.2. Panel and Cube Characterisation with Konica Minolta Luminance Reference Meter

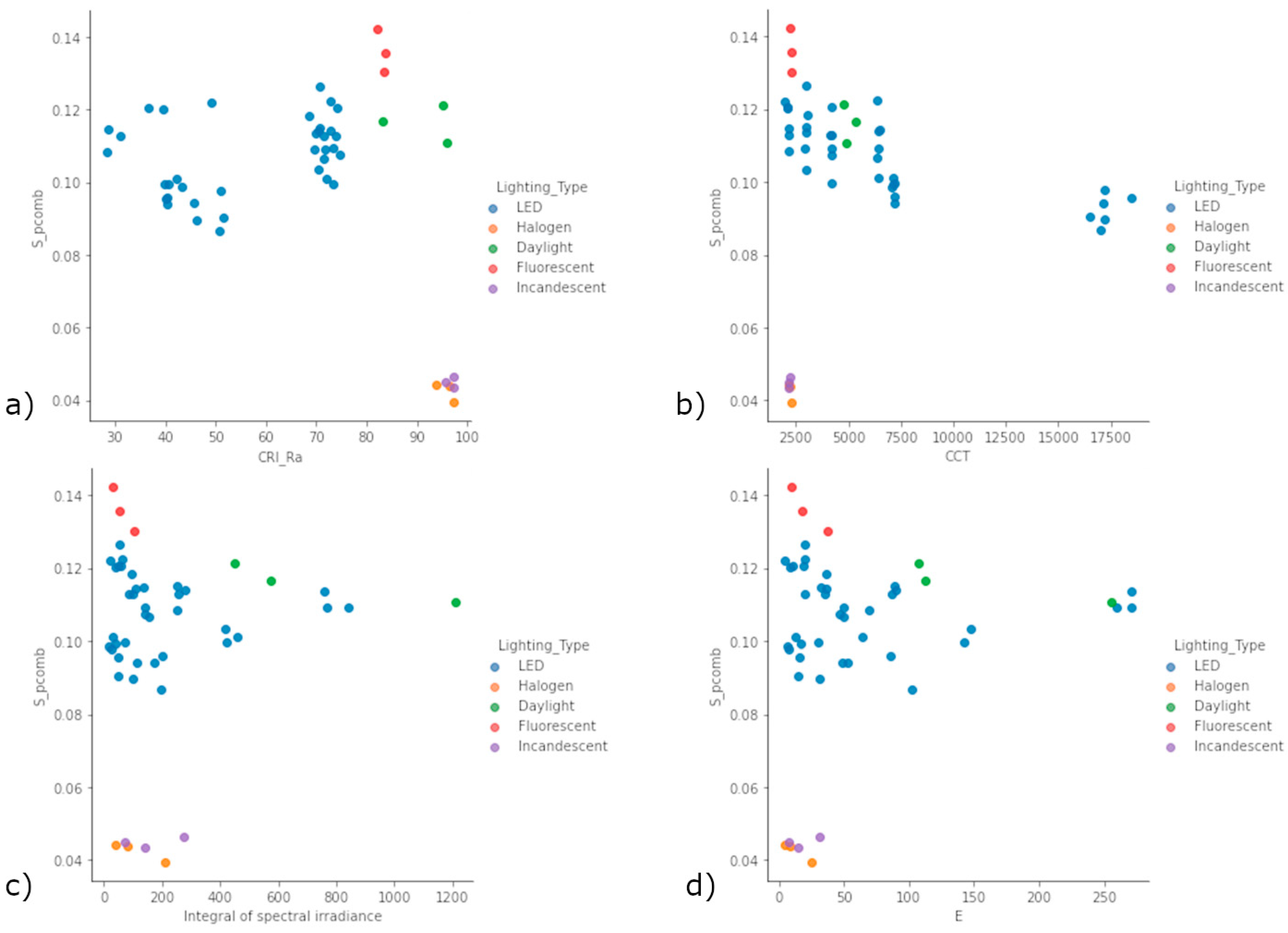

3.3. Camera Photometer and Raspberry Camera Comparison

- IF CRI_Ra ≤ 81 => “LED” => S = 0.105;

- ELSE IF 81 < CRI_Ra ≤ 90 & Integral of spectral irradiance < 300 => “Fluorescent” => S = 0.136;

- ELSE IF CRI_Ra > 90 & Integral of spectral irradiance < 300 => “Incandescent” or “Halogen” => S = 0.043;

- ELSE Daylight => S = 0.116.
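The selection rules listed above can be sketched in Python as follows. This is only an illustrative transcription of the four rules; the function name `select_s_factor` and its argument names are assumptions introduced here, and the integral of spectral irradiance is taken in mW/m2, as in the comparison table.

```python
from typing import Tuple


def select_s_factor(cri_ra: float, spectral_integral: float) -> Tuple[str, float]:
    """Return (light-source label, S factor) following the listed rules.

    cri_ra            -- colour rendering index CRI_Ra [-]
    spectral_integral -- integral of spectral irradiance [mW/m2]
    """
    if cri_ra <= 81:
        return "LED", 0.105
    if cri_ra <= 90 and spectral_integral < 300:
        return "Fluorescent", 0.136
    if cri_ra > 90 and spectral_integral < 300:
        return "Incandescent or Halogen", 0.043
    # Everything else, including 81 < CRI_Ra <= 90 with an integral >= 300
    return "Daylight", 0.116


# Inputs taken from rows 100_C_1, 100_F_4 and 100_H_5 of the comparison
# table and from the daylight-only office scenario.
for cri, integral in [(70.4, 109.36), (82.05, 32.96), (96.5, 80.42), (96.4, 1112.5)]:
    print(cri, integral, select_s_factor(cri, integral))
```

Because the rules are evaluated in order, a measurement such as D_2 (CRI_Ra = 83.2, integral = 576.69) falls through to the final branch and is classified as daylight.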

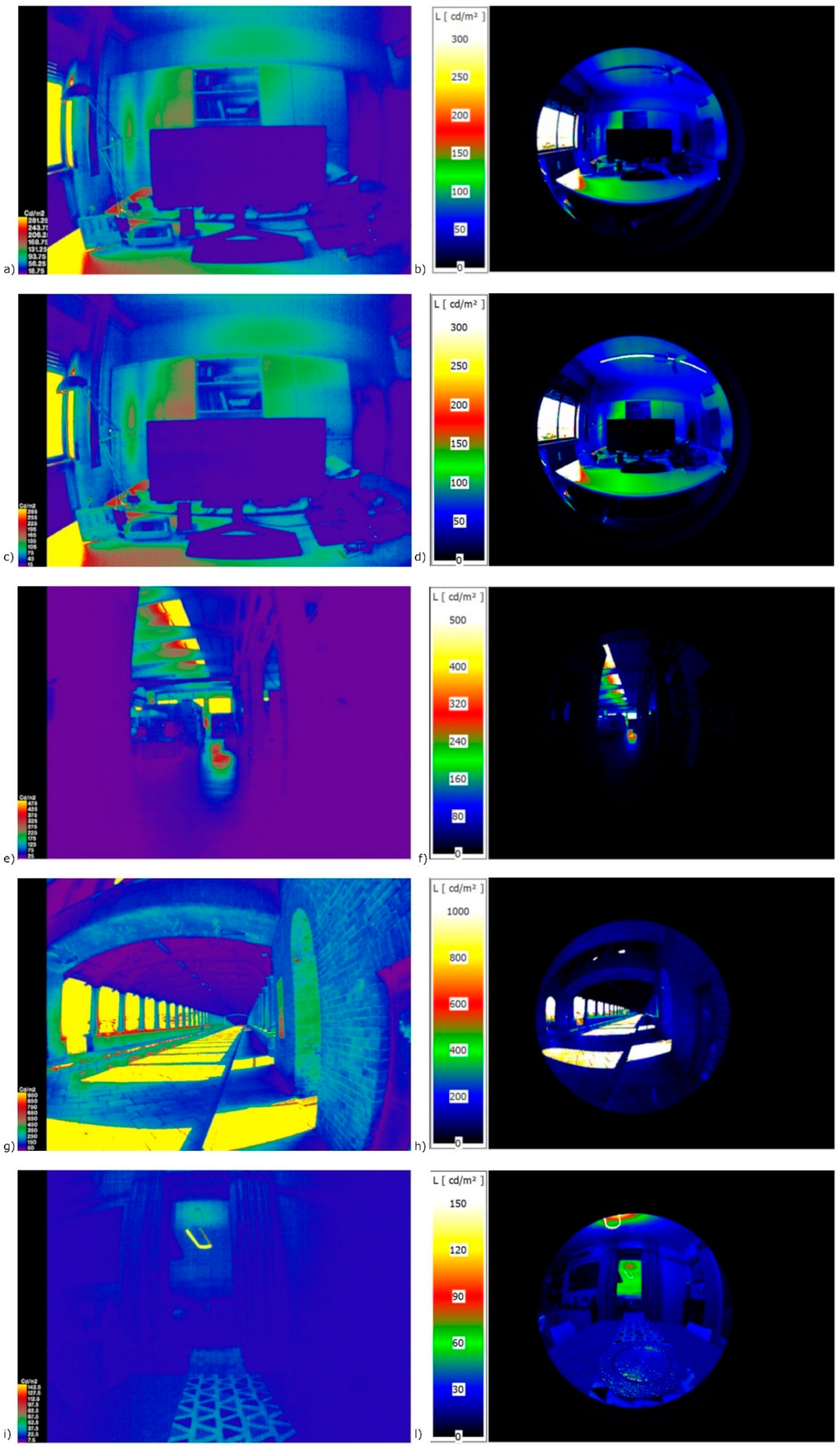

3.4. False-Colour Analysis in Real Cases

- indoor space, office with daylight only (lat: 45.40182, long: 9.24962; date: 07/04/2022; time: 13:02) (CRI_Ra > 90 (96.4) and Integral of spectral irradiance > 300 (1112.5) => “Daylight” => S = 0.116);

- indoor space, office with daylight and fluorescent lamps (lat: 45.40182, long: 9.24962; date: 07/04/2022; time: 13:22) (CRI_Ra > 90 (94.3) and Integral of spectral irradiance > 300 (1226.1) => “Daylight” => S = 0.116);

- indoor space, industrial fabric (lat: 45.40182, long: 9.24962; date: 07/04/2022; time: 14:02) (CRI_Ra > 90 (96.5) and Integral of spectral irradiance > 300 (507.87) => “Daylight” => S = 0.116);

- outdoor space, Ponte Coperto (PV) (lat: 45.180681, long: 9.156303; date: 06/26/2022; time: 08:50) (CRI_Ra > 90 (96.5) and Integral of spectral irradiance > 300 (4680.44) => “Daylight” => S = 0.116);

- indoor space, living room at dusk (lat: 45.163057, long: 9.135930; date: 07/05/2022; time: 21:28) (CRI_Ra ≤ 81 (80.2) => “LED” => S = 0.105).

- The raspicam has a lower resolution and a narrower FoV, as was known in advance;

- Even in a very low-light scenario (living room at dusk), the luminance distributions of the two systems compare well, which supports the criterion used to select the light source and, consequently, the S factor applied to the low-cost HDR image.

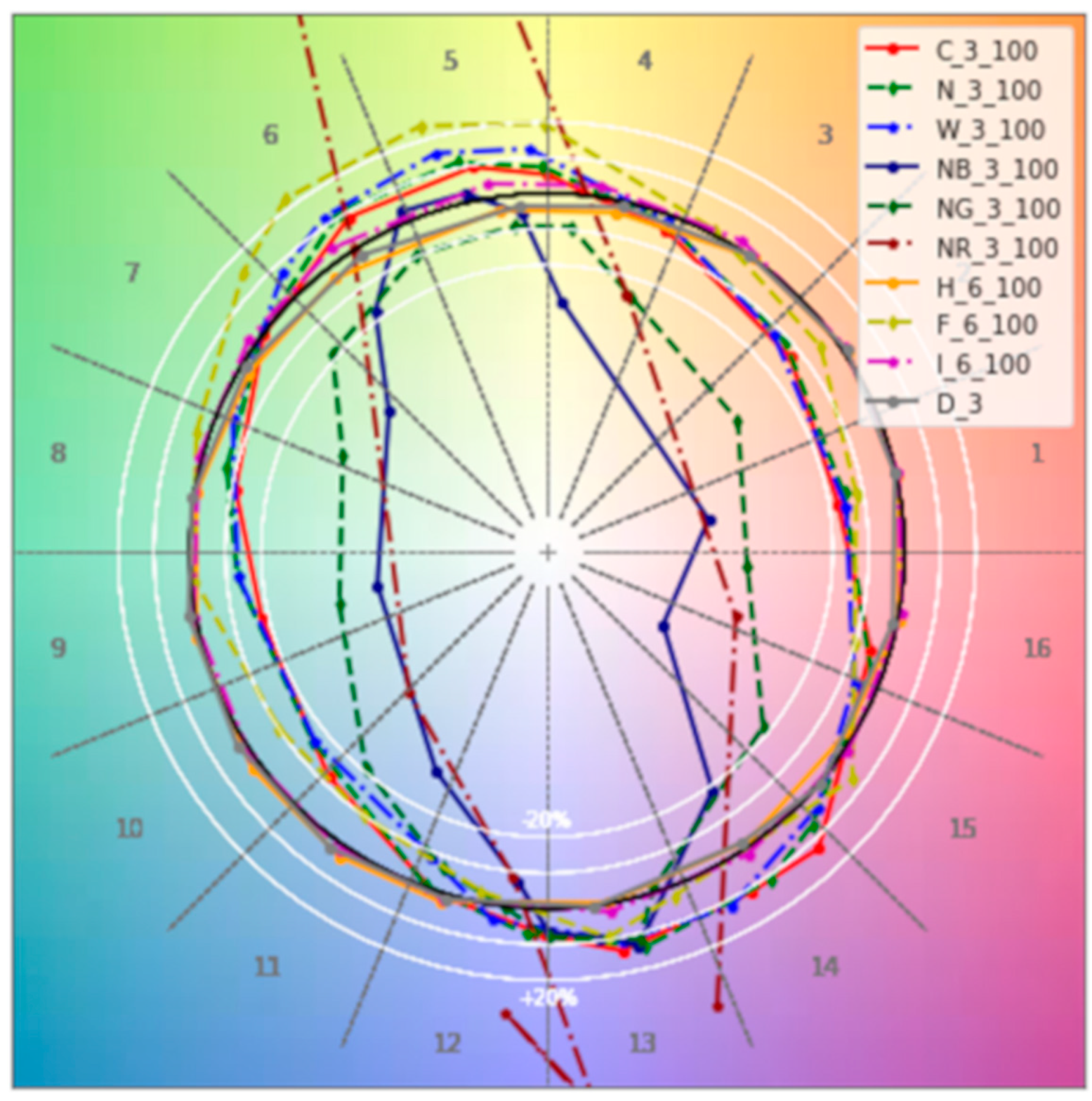

3.5. Glare Index Analysis

- The first one considers a task area, as recommended in Ref [17]. This is a useful approach, especially in scenarios 1, 2 and 5, where users are expected to concentrate their gaze on a specific area. The average luminance of the task area is calculated, and each pixel exceeding this value multiplied by a default factor of 5 [17] is considered a glare source.

- The second approach—especially useful in the case of walking, when users are not concentrated in a specific area—does not consider a task area, in contrast to what is reported in Ref [17]. This allows us to consider the entire area captured. In this case, a constant threshold luminance level equal to 1500 cd/m2 is used. This second method also considers the difference in glare assessment due to the different FoV of the acquired figures. Depending on the derived HDR image, two different approaches are considered (Figure 12).

- a. The first method, the most accurate, is based on the analysis of the overall luminance histogram and sets the first minimum after the first maximum as the luminance threshold level.

- b. The second method is based on using a task area defined in the LMK LabSoft, and the average luminance of the task zone area is defined as the threshold level. The threshold level is multiplied by a factor set to 5.

- c. The third method is based on manually setting a luminance threshold level: 1500 cd/m2 for the first four scenarios and 1000 cd/m2 for the fifth.
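The three threshold rules described above can be illustrated with a short Python sketch. It is not the evalglare or LMK LabSoft implementation: the function names, the flat list of pixel luminances and the `bin_width` parameter are assumptions made here for illustration, since the text does not say how the histogram is binned.

```python
from statistics import mean
from typing import List, Sequence

FIXED_THRESHOLD = 1500.0  # cd/m2, constant threshold quoted in the text


def task_area_threshold(task_luminances: Sequence[float], factor: float = 5.0) -> float:
    """Average task-area luminance multiplied by the factor (5 in the text)."""
    return factor * mean(task_luminances)


def histogram_threshold(luminances: Sequence[float], bin_width: float) -> float:
    """Lower edge of the first histogram minimum that follows the first maximum."""
    n_bins = int(max(luminances) // bin_width) + 1
    counts = [0] * n_bins
    for value in luminances:
        counts[int(value // bin_width)] += 1
    i = 0
    while i + 1 < n_bins and counts[i + 1] >= counts[i]:  # climb to the first maximum
        i += 1
    while i + 1 < n_bins and counts[i + 1] < counts[i]:  # descend to the first minimum
        i += 1
    return i * bin_width


def glare_mask(luminances: Sequence[float], threshold: float) -> List[bool]:
    """True for every pixel whose luminance exceeds the threshold."""
    return [value > threshold for value in luminances]


# Made-up pixel luminances [cd/m2], used only to exercise the functions.
pixels = [50] * 5 + [150] * 8 + [250] * 3 + [550] * 2 + [650]
print(histogram_threshold(pixels, bin_width=100))
print(task_area_threshold([100, 200, 300]))
print(sum(glare_mask([1200, 1600, 2000], FIXED_THRESHOLD)))
```

In practice the threshold is applied by the glare software itself; the sketch only makes explicit which pixels each rule would flag as glare sources.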

- ra_xyze -r -o 20220705_2128.hdr > 20220705_2128_EVinpixel.hdr

- The pcomb function is then used to apply the S factor and the vignetting correction, as in the following example:

- pcomb -f vignettingfilter.cal -s 0.105 -o 20220705_2128_EVinpixel.hdr > 20220705_2128_EVinpixel_0105corr.hdr

- pfilt -1 -e 1 -x 1120 -y 840 20220705_2128_EVinpixel_0105corr.hdr > 20220705_2128_EVinpixel_0105corr.pic

- pfilt -1 -e 1 -x 1120 -y 840 xxx.hdr > xxx.pic (where “xxx” is the name of the initial hdr file)

- When the task area is considered, the following command first calculates the glare indices and then saves a pic file with the task area highlighted:

- evalglare -T 580 350 0.7 -vth -vv 122 -vh 90 -c taskarea.pic 20220704_1302_EVinpixel_0116corr.pic

- In the case of scenarios 3 and 4, typically a walking scenario, the y position of the task area is lowered slightly and set equal to 100, imagining that the user is focused on looking at the area where they will place their feet. Then, the pic file is converted to a more useful tif file by considering the following:

- ra_tiff -z taskarea.pic taskarea.tif

- When the entire captured area is considered, the following command is used:

- evalglare -vth -vv 122 -vh 90 -b 1500 xxx.pic > glare_xxx.txt
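The command sequence above can be assembled programmatically for any acquisition. The following Python sketch only composes the command strings, using the same options and file-naming pattern as the examples; it does not run Radiance. The helper names `s_tag` and `build_commands` are hypothetical, and the sketch assumes that the glare report is named after the corrected pic file.

```python
from typing import List


def s_tag(s_factor: float) -> str:
    """Turn an S factor into the file-name tag used above, e.g. 0.105 -> '0105'."""
    return f"{s_factor:.3f}".replace(".", "")


def build_commands(stem: str, s_factor: float, threshold: int = 1500) -> List[str]:
    """Compose the ra_xyze, pcomb, pfilt and evalglare command lines for one HDR file."""
    raw = f"{stem}.hdr"
    ev = f"{stem}_EVinpixel.hdr"
    corr = f"{stem}_EVinpixel_{s_tag(s_factor)}corr"
    return [
        f"ra_xyze -r -o {raw} > {ev}",
        # S factor and vignetting filter applied in a single pcomb call
        f"pcomb -f vignettingfilter.cal -s {s_factor} -o {ev} > {corr}.hdr",
        f"pfilt -1 -e 1 -x 1120 -y 840 {corr}.hdr > {corr}.pic",
        # Whole-image glare evaluation with a constant luminance threshold [cd/m2]
        f"evalglare -vth -vv 122 -vh 90 -b {threshold} {corr}.pic > glare_{corr}.txt",
    ]


for command in build_commands("20220705_2128", 0.105):
    print(command)
```

Each string can then be passed to a shell on a system where Radiance is installed; keeping the composition separate from the execution makes it easy to check the file names before processing a batch of images.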

4. Discussion and Future Improvements

5. Conclusions

- Luminance mapping can be performed using a low-cost camera if it is subjected to a time-consuming but necessary calibration process;

- The S factor of the pcomb function allows us to consider a correction factor that can be applied to the low-cost system to better match the luminance values of the professional device;

- The S factor can be differentiated by considering different light sources, and in our study, we introduce a rough algorithm that performs this;

- The calibration process could be replicated following a DIY approach to account for the different limitations/improvements, as described in the previous section.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A

| 50_C: y/x [cm] | 0.5 | 3 | 6 | 9 | 12 | 15 | 18 | 21 | 24 | 27 | 30 | 33 | 36 |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0.5 | 440 | 704 | 655 | 731 | 678 | 631 | 568 | 584 | 636 | 640 | 613 | 620 | 479 |

| 3 | 691 | 1040 | 1066 | 1050 | 1030 | 1029 | 1024 | 1009 | 1011 | 1022 | 1074 | 1054 | 680 |

| 6 | 693 | 1009 | 1017 | 1033 | 1028 | 1037 | 1044 | 1052 | 1031 | 1035 | 1033 | 1019 | 706 |

| 9 | 692 | 983 | 1002 | 1009 | 1024 | 1058 | 1083 | 1089 | 1050 | 1027 | 1016 | 1012 | 665 |

| 12 | 654 | 980 | 1001 | 990 | 1001 | 1057 | 1072 | 1083 | 1030 | 1017 | 995 | 975 | 658 |

| 15 | 700 | 961 | 976 | 982 | 990 | 1017 | 1031 | 1036 | 1019 | 1012 | 1002 | 958 | 698 |

| 18 | 748 | 985 | 979 | 984 | 997 | 1018 | 1020 | 1022 | 1010 | 1016 | 1009 | 984 | 682 |

| 21 | 528 | 701 | 759 | 786 | 715 | 812 | 557 | 758 | 439 | 523 | 545 | 568 | 488 |

| 100_C: y/x [cm] | 0.5 | 3 | 6 | 9 | 12 | 15 | 18 | 21 | 24 | 27 | 30 | 33 | 36 |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0.5 | 787 | 1190 | 1231 | 1129 | 1209 | 1099 | 1071 | 1287 | 1284 | 1142 | 1161 | 1376 | 761 |

| 3 | 1527 | 1887 | 1882 | 1897 | 1866 | 1852 | 1846 | 1799 | 1811 | 1823 | 1918 | 1896 | 1269 |

| 6 | 1164 | 1799 | 1807 | 1858 | 1867 | 1874 | 1888 | 1870 | 1858 | 1849 | 1873 | 1792 | 1244 |

| 9 | 1126 | 1755 | 1758 | 1823 | 1855 | 1902 | 1950 | 1916 | 1853 | 1837 | 1820 | 1768 | 1141 |

| 12 | 1171 | 1766 | 1763 | 1797 | 1834 | 1887 | 1926 | 1890 | 1822 | 1805 | 1773 | 1717 | 1160 |

| 15 | 1200 | 1714 | 1725 | 1775 | 1787 | 1825 | 1848 | 1824 | 1809 | 1799 | 1767 | 1702 | 1101 |

| 18 | 1296 | 1752 | 1770 | 1766 | 1790 | 1821 | 1826 | 1802 | 1811 | 1807 | 1770 | 1748 | 1211 |

| 21 | 796 | 1358 | 1288 | 1343 | 1187 | 1671 | 1561 | 1288 | 1291 | 1423 | 1552 | 1278 | 802 |

| 50_W: y/x [cm] | 0.5 | 3 | 6 | 9 | 12 | 15 | 18 | 21 | 24 | 27 | 30 | 33 | 36 |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0.5 | 374 | 682 | 693 | 657 | 661 | 641 | 635 | 626 | 627 | 648 | 636 | 606 | 614 |

| 3 | 699 | 1028 | 1038 | 1060 | 1062 | 1023 | 999 | 989 | 985 | 999 | 1017 | 1048 | 654 |

| 6 | 643 | 989 | 1023 | 1043 | 1051 | 1032 | 1028 | 1019 | 1014 | 1009 | 1024 | 1020 | 666 |

| 9 | 641 | 974 | 1003 | 1017 | 1033 | 1056 | 1075 | 1055 | 1034 | 1021 | 1018 | 1011 | 627 |

| 12 | 654 | 960 | 986 | 988 | 1013 | 1043 | 1073 | 1046 | 1017 | 1008 | 1006 | 997 | 635 |

| 15 | 585 | 931 | 954 | 973 | 1001 | 1012 | 1014 | 1005 | 997 | 992 | 976 | 945 | 664 |

| 18 | 649 | 949 | 977 | 1001 | 1001 | 996 | 994 | 988 | 993 | 985 | 978 | 968 | 662 |

| 21 | 462 | 578 | 559 | 623 | 682 | 663 | 666 | 682 | 669 | 588 | 623 | 705 | 451 |

| 100_W: y/x [cm] | 0.5 | 3 | 6 | 9 | 12 | 15 | 18 | 21 | 24 | 27 | 30 | 33 | 36 |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0.5 | 719 | 1355 | 1356 | 1301 | 1287 | 1231 | 1212 | 1247 | 1314 | 1355 | 1387 | 1340 | 696 |

| 3 | 906 | 1865 | 1878 | 1908 | 1909 | 1852 | 1819 | 1796 | 1792 | 1826 | 1881 | 1916 | 830 |

| 6 | 1122 | 1775 | 1846 | 1878 | 1895 | 1881 | 1874 | 1848 | 1839 | 1843 | 1869 | 1847 | 1009 |

| 9 | 1037 | 1766 | 1822 | 1842 | 1883 | 1912 | 1944 | 1910 | 1871 | 1847 | 1860 | 1845 | 1092 |

| 12 | 1114 | 1771 | 1797 | 1803 | 1833 | 1899 | 1942 | 1878 | 1832 | 1812 | 1806 | 1794 | 1139 |

| 15 | 1006 | 1702 | 1754 | 1786 | 1814 | 1826 | 1821 | 1811 | 1805 | 1799 | 1779 | 1723 | 1095 |

| 18 | 1204 | 1735 | 1745 | 1797 | 1830 | 1820 | 1798 | 1800 | 1810 | 1803 | 1797 | 1808 | 970 |

| 21 | 723 | 1026 | 1062 | 1222 | 1219 | 1116 | 1085 | 1056 | 1099 | 1164 | 1028 | 1220 | 766 |

| 50_N: y/x [cm] | 0.5 | 3 | 6 | 9 | 12 | 15 | 18 | 21 | 24 | 27 | 30 | 33 | 36 |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0.5 | 497 | 642 | 705 | 682 | 665 | 649 | 697 | 654 | 664 | 678 | 718 | 753 | 544 |

| 3 | 573 | 1000 | 1011 | 1016 | 1012 | 990 | 978 | 959 | 964 | 972 | 1007 | 1021 | 596 |

| 6 | 661 | 952 | 989 | 1001 | 1011 | 1010 | 1010 | 996 | 990 | 989 | 1000 | 979 | 524 |

| 9 | 562 | 950 | 977 | 984 | 1008 | 1032 | 1048 | 1025 | 1007 | 990 | 986 | 969 | 534 |

| 12 | 576 | 949 | 960 | 969 | 992 | 1017 | 1038 | 1018 | 995 | 980 | 971 | 953 | 524 |

| 15 | 565 | 924 | 943 | 954 | 968 | 983 | 987 | 979 | 973 | 966 | 950 | 919 | 629 |

| 18 | 652 | 941 | 942 | 957 | 969 | 972 | 967 | 959 | 964 | 962 | 955 | 942 | 632 |

| 21 | 420 | 572 | 627 | 669 | 630 | 606 | 608 | 592 | 594 | 617 | 634 | 656 | 434 |

| 100_N: y/x [cm] | 0.5 | 3 | 6 | 9 | 12 | 15 | 18 | 21 | 24 | 27 | 30 | 33 | 36 |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0.5 | 570 | 996 | 982 | 1019 | 1003 | 886 | 910 | 913 | 1015 | 932 | 948 | 989 | 551 |

| 3 | 783 | 1786 | 1801 | 1820 | 1819 | 1780 | 1754 | 1730 | 1728 | 1750 | 1798 | 1817 | 1227 |

| 6 | 1055 | 1694 | 1769 | 1794 | 1810 | 1809 | 1810 | 1790 | 1771 | 1772 | 1794 | 1769 | 1108 |

| 9 | 1056 | 1695 | 1749 | 1770 | 1805 | 1845 | 1880 | 1841 | 1805 | 1778 | 1776 | 1742 | 1037 |

| 12 | 971 | 1686 | 1719 | 1737 | 1771 | 1825 | 1852 | 1826 | 1789 | 1761 | 1748 | 1710 | 926 |

| 15 | 899 | 1648 | 1690 | 1703 | 1729 | 1755 | 1765 | 1756 | 1749 | 1738 | 1708 | 1643 | 922 |

| 18 | 954 | 1673 | 1688 | 1710 | 1733 | 1737 | 1729 | 1718 | 1731 | 1726 | 1700 | 1666 | 964 |

| 21 | 656 | 999 | 1014 | 1042 | 1031 | 892 | 875 | 847 | 867 | 938 | 1005 | 1015 | 556 |

| Cube_H: y/x [cm] | 0.5 | 3 | 6 | 9 | 12 | 15 | 18 | 21 | 24 | 27 |

|---|---|---|---|---|---|---|---|---|---|---|

| 0.5 | 164 | 180 | 185 | 197 | 198 | 193 | 201 | 193 | 208 | 177 |

| 3 | 251 | 274 | 282 | 292 | 295 | 296 | 295 | 294 | 288 | 218 |

| 6 | 244 | 283 | 291 | 301 | 309 | 312 | 312 | 311 | 301 | 228 |

| 9 | 259 | 297 | 310 | 320 | 324 | 329 | 327 | 324 | 312 | 228 |

| 12 | 278 | 308 | 323 | 331 | 336 | 338 | 342 | 338 | 328 | 231 |

| 15 | 304 | 315 | 328 | 341 | 351 | 355 | 355 | 350 | 342 | 317 |

| 18 | 311 | 325 | 343 | 359 | 366 | 369 | 370 | 370 | 357 | 324 |

| 21 | 292 | 329 | 343 | 358 | 368 | 375 | 376 | 375 | 365 | 290 |

| 24 | 288 | 318 | 334 | 332 | 339 | 347 | 353 | 352 | 343 | 286 |

| 27 | 247 | 286 | 303 | 315 | 320 | 327 | 331 | 325 | 311 | 225 |

| Cube_F: y/x [cm] | 0.5 | 3 | 6 | 9 | 12 | 15 | 18 | 21 | 24 | 27 |

|---|---|---|---|---|---|---|---|---|---|---|

| 0.5 | 323 | 378 | 389 | 414 | 416 | 405 | 422 | 405 | 437 | 372 |

| 3 | 527 | 575 | 592 | 613 | 620 | 622 | 620 | 617 | 605 | 458 |

| 6 | 512 | 594 | 611 | 632 | 649 | 655 | 655 | 653 | 632 | 479 |

| 9 | 544 | 624 | 651 | 672 | 680 | 691 | 687 | 680 | 655 | 479 |

| 12 | 584 | 647 | 678 | 695 | 706 | 710 | 718 | 710 | 689 | 485 |

| 15 | 638 | 662 | 689 | 716 | 737 | 746 | 746 | 735 | 718 | 666 |

| 18 | 653 | 683 | 720 | 754 | 769 | 775 | 777 | 777 | 750 | 680 |

| 21 | 613 | 691 | 720 | 752 | 773 | 788 | 790 | 788 | 767 | 609 |

| 24 | 605 | 668 | 701 | 697 | 712 | 729 | 741 | 739 | 720 | 601 |

| 27 | 519 | 601 | 636 | 662 | 672 | 687 | 695 | 683 | 653 | 473 |

| Cube_I: y/x [cm] | 0.5 | 3 | 6 | 9 | 12 | 15 | 18 | 21 | 24 | 27 |

|---|---|---|---|---|---|---|---|---|---|---|

| 0.5 | 307 | 359 | 369 | 393 | 395 | 385 | 401 | 385 | 415 | 353 |

| 3 | 501 | 547 | 563 | 583 | 589 | 591 | 589 | 587 | 575 | 435 |

| 6 | 487 | 565 | 581 | 600 | 616 | 622 | 622 | 620 | 600 | 455 |

| 9 | 517 | 593 | 618 | 638 | 646 | 656 | 652 | 646 | 622 | 455 |

| 12 | 555 | 614 | 644 | 660 | 670 | 674 | 682 | 674 | 654 | 461 |

| 15 | 606 | 628 | 654 | 680 | 700 | 708 | 708 | 698 | 682 | 632 |

| 18 | 620 | 648 | 684 | 716 | 730 | 736 | 738 | 738 | 712 | 646 |

| 21 | 583 | 656 | 684 | 714 | 734 | 748 | 750 | 748 | 728 | 579 |

| 24 | 575 | 634 | 666 | 662 | 676 | 692 | 704 | 702 | 684 | 571 |

| 27 | 493 | 571 | 604 | 628 | 638 | 652 | 660 | 648 | 620 | 449 |

References

- Hirning, M.; Coyne, S.; Cowling, I. The use of luminance mapping in developing discomfort glare research. J. Light Vis. Environ. 2010, 34, 101–104.

- Scorpio, M.; Laffi, R.; Masullo, M.; Ciampi, G.; Rosato, A.; Maffei, L.; Sibilio, S. Virtual reality for smart urban lighting design: Review, applications and opportunities. Energies 2020, 13, 3809.

- Bellazzi, A.; Danza, L.; Devitofrancesco, A.; Ghellere, M.; Salamone, F. An artificial skylight compared with daylighting and LED: Subjective and objective performance measures. J. Build. Eng. 2022, 45, 103407.

- Pierson, C.; Wienold, J.; Bodart, M. Review of Factors Influencing Discomfort Glare Perception from Daylight. LEUKOS J. Illum. Eng. Soc. N. Am. 2018, 14, 111–148.

- Pierson, C.; Cauwerts, C.; Bodart, M.; Wienold, J. Tutorial: Luminance Maps for Daylighting Studies from High Dynamic Range Photography. LEUKOS J. Illum. Eng. Soc. N. Am. 2021, 17, 140–169.

- Krüger, U.; Blattner, P.; Bergen, T.; Bouroussis, C.; Campos Acosta, J.; Distl, R.; Heidel, G.; Ledig, J.; Rykowski, R.; Sauter, G.; et al. CIE 244: 2021 Characterization of Imaging Luminance Measurement Devices (ILMDs); International Commission on Illumination: Vienna, Austria, 2021; p. 59.

- Filipeflop Raspberry Pi 3 Model B-Raspberry Pi. Available online: https://www.raspberrypi.com/products/raspberry-pi-3-model-b/ (accessed on 8 March 2022).

- LMK Camera Photometer Description. Available online: https://www.technoteam.de/apool/tnt/content/e5183/e5432/e5733/e6645/lmk_ma_web_en2016_eng.pdf (accessed on 16 July 2022).

- Deltaohm Spectroradiometer Description. Available online: https://www.deltaohm.com/product/hd30-1-spectroradiometer-data-logger/ (accessed on 16 July 2022).

- Wolska, A.; Sawicki, D. Practical application of HDRI for discomfort glare assessment at indoor workplaces. Meas. J. Int. Meas. Confed. 2020, 151, 107179.

- Anyhere Software. Available online: anyhere.com (accessed on 16 July 2022).

- Lens Shading Correction for Raspberry Pi Cam. Available online: https://openflexure.discourse.group/t/lens-shading-correction-for-raspberry-pi-camera/682/2 (accessed on 16 July 2022).

- Bowman, R.W.; Vodenicharski, B.; Collins, J.T.; Stirling, J. Flat-Field and Colour Correction for the Raspberry Pi Camera Module. arXiv 2021, arXiv:1911.13295.

- Jacobs, A.; Wilson, M. Determining Lens Vignetting with HDR Techniques. In Proceedings of the XII National Conference on Lighting, Varna, Bulgaria, 10–12 June 2007; pp. 10–12.

- ies-tm30 Files of All Considered Lighting Sources. Available online: https://cnrsc-my.sharepoint.com/personal/francesco_salamone_cnr_it/_layouts/15/onedrive.aspx?id=%2Fpersonal%2Ffrancesco_salamone_cnr_it%2FDocuments%2FDottorato_Vanvitelli%2FPaper_raspi_cam%2FPaper_Annex_ies-tm30&ga=1 (accessed on 1 September 2022).

- Pcomb-Radiance. Available online: https://floyd.lbl.gov/radiance/man_html/pcomb.1.html (accessed on 16 July 2022).

- Evalglare-Radiance. Available online: https://www.radiance-online.org/learning/documentation/manual-pages/pdfs/evalglare.pdf/at_download/file (accessed on 16 July 2022).

- Operation Manual LMK LabSoft. Available online: https://www.technoteam.de/apool/tnt/content/e5183/e5432/e5733/e5735/OperationmanualLMKLabSoft_eng.pdf (accessed on 16 July 2022).

- Pfilt-Radiance. Available online: https://floyd.lbl.gov/radiance/man_html/pfilt.1.html (accessed on 14 March 2022).

- Carlucci, S.; Causone, F.; De Rosa, F.; Pagliano, L. A review of indices for assessing visual comfort with a view to their use in optimization processes to support building integrated design. Renew. Sustain. Energy Rev. 2015, 47, 1016–1033.

- Sawicki, D.; Wolska, A. The Unified semantic Glare scale for GR and UGR indexes. In Proceedings of the IEEE Lighting Conference of the Visegrad Countries, Karpacz, Poland, 13–16 September 2016.

- Mead, A.; Mosalam, K. Ubiquitous luminance sensing using the Raspberry Pi and Camera Module system. Light. Res. Technol. 2017, 49, 904–921.

- Huynh, T.T.M.; Nguyen, T.-D.; Vo, M.-T.; Dao, S.V.T. High Dynamic Range Imaging Using A 2x2 Camera Array with Polarizing Filters. In Proceedings of the 19th International Symposium on Communications and Information Technologies (ISCIT), Ho Chi Minh City, Vietnam, 25–27 September 2019; pp. 183–187.

| Variable | Value |

|---|---|

| Integral spectral mismatch for halogen metal discharge lamps | 2–9 [%] |

| Integral spectral mismatch for high-pressure sodium discharge lamps | 7–13 [%] |

| Integral spectral mismatch for fluorescent lamps | 8–10 [%] |

| Integral spectral mismatch for LED white | 5–12 [%] |

| Calibration uncertainty ΔL | 2.5 [%] |

| Repeatability ΔL | 0.5–2 [%] |

| Uniformity ΔL | ±2 [%] |

| Configuration | Min Luminance [cd/m2] | Mean Luminance [cd/m2] | Max Luminance [cd/m2] |

|---|---|---|---|

| 50_C | 1072 | 1081 | 1089 |

| 100_C | 1890 | 1920 | 1950 |

| 50_W | 1046 | 1062 | 1075 |

| 100_W | 1878 | 1918 | 1944 |

| 50_N | 1018 | 1032 | 1048 |

| 100_N | 1826 | 1849 | 1880 |

| Cube_H | 370 | 373 | 376 |

| Cube_F | 777 | 783 | 790 |

| Cube_I | 738 | 744 | 750 |

| Configuration 1 | Camera Photometer [cd/m2] | Default Raspberry Values [-] | S_Pcomb Factor_Mean [-] | Raspberry Corrected Value [cd/m2] 2 | E [lx] | CCT [K] | CRI_Ra [-] | Integral of Spectral Irradiance [mW/m2] |

|---|---|---|---|---|---|---|---|---|

| 100_C_1 | 1961 | 17163 | 0.114257 | 1802.115 | 36 | 6471 | 70.4 | 109.36 |

| 100_N_1 | 1880 | 16645 | 0.112947 | 1747.725 | 35 | 4143 | 71.5 | 99.42 |

| 100_W_1 | 1944 | 16437 | 0.118270 | 1725.885 | 36 | 3068 | 68.6 | 97.5 |

| 100_C_2 | 1956 | 17144 | 0.114092 | 1800.12 | 90 | 6428 | 72.7 | 279.99 |

| 100_N_2 | 1873 | 16590 | 0.112899 | 1741.95 | 87 | 4215 | 74 | 257.82 |

| 100_W_2 | 1946 | 16929 | 0.114951 | 1777.545 | 89 | 3013 | 70.6 | 251.93 |

| 100_C_3 | 1844 | 16894 | 0.109151 | 1773.87 | 271 | 6430 | 71.8 | 841.3 |

| 100_N_3 | 1768 | 16168 | 0.109352 | 1697.64 | 260 | 4206 | 73.4 | 768.71 |

| 100_W_3 | 1836 | 16156 | 0.113642 | 1696.38 | 271 | 3008 | 69.9 | 758.82 |

| 100_NG_3 | 613 | 6388 | 0.095961 | 670.74 | 86 | 7195 | 40.4 | 203.81 |

| 100_NG_2 | 651 | 6535 | 0.099617 | 686.175 | 30 | 7218 | 40.5 | 72.62 |

| 100_NG_1 | 662 | 6548 | 0.101100 | 687.54 | 13 | 7120 | 42.2 | 33.13 |

| 100_NR_3 | 463 | 4270 | 0.108431 | 448.35 | 69 | 2189 | 28.5 | 253.05 |

| 100_NR_2 | 494 | 4374 | 0.112940 | 459.27 | 20 | 2170 | 31.1 | 85.18 |

| 100_NR_1 | 472 | 4264 | 0.110694 | 447.72 | 9 | 2093 | 39.5 | 41.07 |

| 100_NB_3 | 442 | 4947 | 0.089347 | 519.435 | 102 | 17022 | 50.8 | 195.23 |

| 100_NB_2 | 473 | 5279 | 0.089600 | 554.295 | 31 | 17186 | 46.2 | 100.36 |

| 100_NB_1 | 481 | 5318 | 0.090448 | 558.39 | 15 | 16515 | 51.4 | 50.36 |

| 50_C_1 | 1083 | 8851 | 0.122359 | 929.355 | 20 | 6376 | 72.8 | 62.5 |

| 50_N_1 | 1036 | 8596 | 0.120521 | 902.58 | 19 | 4174 | 74.1 | 57.67 |

| 50_W_1 | 1075 | 8506 | 0.126381 | 893.13 | 20 | 3003 | 70.7 | 56.29 |

| 50_C_2 | 1057 | 9918 | 0.106574 | 1041.39 | 50 | 6398 | 71.6 | 155.53 |

| 50_N_2 | 1017 | 9465 | 0.107448 | 993.825 | 47 | 4201 | 74.6 | 139.9 |

| 50_W_2 | 1058 | 9702 | 0.109050 | 1018.71 | 50 | 2928 | 69.5 | 140.27 |

| 50_C_3 | 988 | 9774 | 0.101085 | 1026.27 | 64 | 6408 | 71.9 | 459.01 |

| 50_N_3 | 937 | 9400 | 0.099681 | 987 | 142 | 4186 | 73.4 | 420.88 |

| 50_W_3 | 990 | 9565 | 0.103502 | 1004.325 | 148 | 3010 | 70.3 | 416.62 |

| 50_NG_3 | 333 | 3543 | 0.093988 | 372.015 | 49 | 7192 | 40.3 | 116.2 |

| 50_NG_2 | 353 | 3552 | 0.099381 | 372.96 | 17 | 7146 | 39.8 | 41.41 |

| 50_NG_1 | 361 | 3660 | 0.098634 | 384.3 | 7 | 7049 | 43.2 | 19.51 |

| 50_NR_3 | 253 | 2209 | 0.114531 | 231.945 | 32 | 2187 | 28.6 | 139.14 |

| 50_NR_2 | 268 | 2224 | 0.120504 | 233.52 | 11 | 2109 | 36.7 | 48.01 |

| 50_NR_1 | 270 | 2213 | 0.122006 | 232.365 | 5 | 1996 | 49.2 | 22.63 |

| 50_NB_3 | 182 | 1932 | 0.094203 | 202.86 | 53 | 17168 | 45.6 | 172.12 |

| 50_NB_2 | 252 | 2640 | 0.095455 | 277.2 | 16 | 18485 | 40.2 | 51.24 |

| 50_NB_1 | 261 | 2671 | 0.097716 | 280.455 | 8 | 17191 | 51 | 28.67 |

| 100_H_4 | 334 | 7566 | 0.044145 | 317.772 | 5 | 2147 | 93.7 | 40.97 |

| 100_H_5 | 332 | 7560 | 0.043915 | 317.52 | 9 | 2205 | 96.5 | 80.42 |

| 100_H_6 | 316 | 8000 | 0.039500 | 336 | 25 | 2272 | 97.2 | 212.55 |

| 100_F_4 | 781 | 5496 | 0.142103 | 747.456 | 10 | 2198 | 82.05 | 32.96 |

| 100_F_5 | 766 | 5649 | 0.135546 | 768.264 | 18 | 2268 | 83.8 | 55.51 |

| 100_F_6 | 757 | 5812 | 0.130282 | 790.432 | 37 | 2262 | 83.5 | 105.71 |

| 100_I_4 | 748 | 16657 | 0.044894 | 749.565 | 8 | 2131 | 95.8 | 71.66 |

| 100_I_5 | 750 | 17242 | 0.043510 | 775.89 | 15 | 2138 | 97.2 | 141.38 |

| 100_I_6 | 749 | 16162 | 0.046312 | 727.29 | 31 | 2221 | 97.3 | 274.74 |

| D_1 | 101 | 912 | 0.110746 | 105.792 | 255 | 4913 | 95.9 | 1210.23 |

| D_2 | 104 | 891 | 0.116723 | 103.356 | 113 | 5369 | 83.2 | 576.69 |

| D_3 | 106 | 875 | 0.121143 | 101.5 | 107 | 4804 | 95.2 | 449.3 |
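The relation between the columns of the table above can be checked numerically. In the tabulated rows, the S factor of each measurement agrees (up to rounding of the photometer reading) with the ratio between the camera photometer value and the default Raspberry value, and the corrected value equals the default Raspberry value multiplied by the S factor chosen for the light source. The Python sketch below reproduces this for the fluorescent and daylight rows; the names `ROWS`, `mean_s_factor` and `corrected_value` are introduced here for illustration only, and the ratio is a reading of the table rather than a formula stated in the text.

```python
from statistics import mean
from typing import Dict, List, Tuple

# (configuration, camera photometer [cd/m2], default Raspberry value [-]),
# copied from the table rows for the fluorescent and daylight measurements.
ROWS: Dict[str, List[Tuple[str, float, float]]] = {
    "Fluorescent": [("100_F_4", 781, 5496), ("100_F_5", 766, 5649), ("100_F_6", 757, 5812)],
    "Daylight": [("D_1", 101, 912), ("D_2", 104, 891), ("D_3", 106, 875)],
}


def mean_s_factor(rows: List[Tuple[str, float, float]]) -> float:
    """Average of the per-measurement ratios photometer / default Raspberry value."""
    return mean(reference / raw for _, reference, raw in rows)


def corrected_value(raw: float, s_factor: float) -> float:
    """Raspberry value rescaled by the S factor [cd/m2]."""
    return raw * s_factor


for source, rows in ROWS.items():
    print(source, round(mean_s_factor(rows), 3))
print(corrected_value(912, 0.116))
```

Rounded to three decimals, the two averages coincide with the S factors of 0.136 and 0.116 used for fluorescent lamps and daylight in the selection rules.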

| Scenario No. | Low-Cost: Method 1 | Low-Cost: Method 2 | Professional: Method a | Professional: Method b | Professional: Method c |

|---|---|---|---|---|---|

| 1 | 20.15 (unacceptable 2) | 20.43 (unacceptable 2) | 21.99 1 (unacceptable 2) | 22.72 1 (just uncomfortable 2) | 21.50 1 (unacceptable 2) |

| 2 | 21.16 (unacceptable 2) | 21.35 (unacceptable 2) | 21.87 1 (unacceptable 2) | 22.43 1 (just uncomfortable 2) | 20.90 1 (unacceptable 2) |

| 3 | 16.42 (just acceptable 2) | 24.08 (just uncomfortable 2) | 19.37 1 (unacceptable 2) | 17.84 1 (just acceptable 2) | 16.22 1 (just acceptable 2) |

| 4 | 21.95 (unacceptable 2) | 27.95 (uncomfortable 2) | 25.99 1 (uncomfortable 2) | 21.56 1 (unacceptable 2) | 22.67 1 (just uncomfortable 2) |

| 5 | 0.00 (imperceptible 2) | 2.13 1 (imperceptible 2) | 1.97 1 (imperceptible 2) | 2.08 1 (imperceptible 2) | 0.00 1 (imperceptible 2) |
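The semantic labels in the table above can be reproduced with a simple lookup. The Python sketch below is a reading of the table and not a scale defined in the text: the class boundaries (10, 13, 16, 19, 22, 25, 28) are assumed from the commonly used glare-index steps, only the classes from "just acceptable" upwards and "imperceptible" can be checked against the tabulated values, and the names `UPPER_BOUNDS`, `LABELS` and `semantic_label` are hypothetical.

```python
from bisect import bisect_left

# Assumed upper bounds of each class (inclusive) and the matching labels.
UPPER_BOUNDS = [10, 13, 16, 19, 22, 25, 28]
LABELS = [
    "imperceptible",
    "just perceptible",
    "perceptible",
    "just acceptable",
    "unacceptable",
    "just uncomfortable",
    "uncomfortable",
]


def semantic_label(index_value: float) -> str:
    """Label of the first class whose upper bound is not below the index value."""
    position = bisect_left(UPPER_BOUNDS, index_value)
    # Values above the last bound are kept in the last class (an assumption).
    return LABELS[min(position, len(LABELS) - 1)]


# Values taken from scenarios 1, 3, 4 and 5 of the table.
for value in (20.15, 22.72, 16.42, 27.95, 0.00):
    print(value, semantic_label(value))
```

With these assumed boundaries, every value in the table receives the label reported next to it.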

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Salamone, F.; Sibilio, S.; Masullo, M. Assessment of the Performance of a Portable, Low-Cost and Open-Source Device for Luminance Mapping through a DIY Approach for Massive Application from a Human-Centred Perspective. Sensors 2022, 22, 7706. https://doi.org/10.3390/s22207706