Trainable Quaternion Extended Kalman Filter with Multi-Head Attention for Dead Reckoning in Autonomous Ground Vehicles

Abstract

1. Introduction

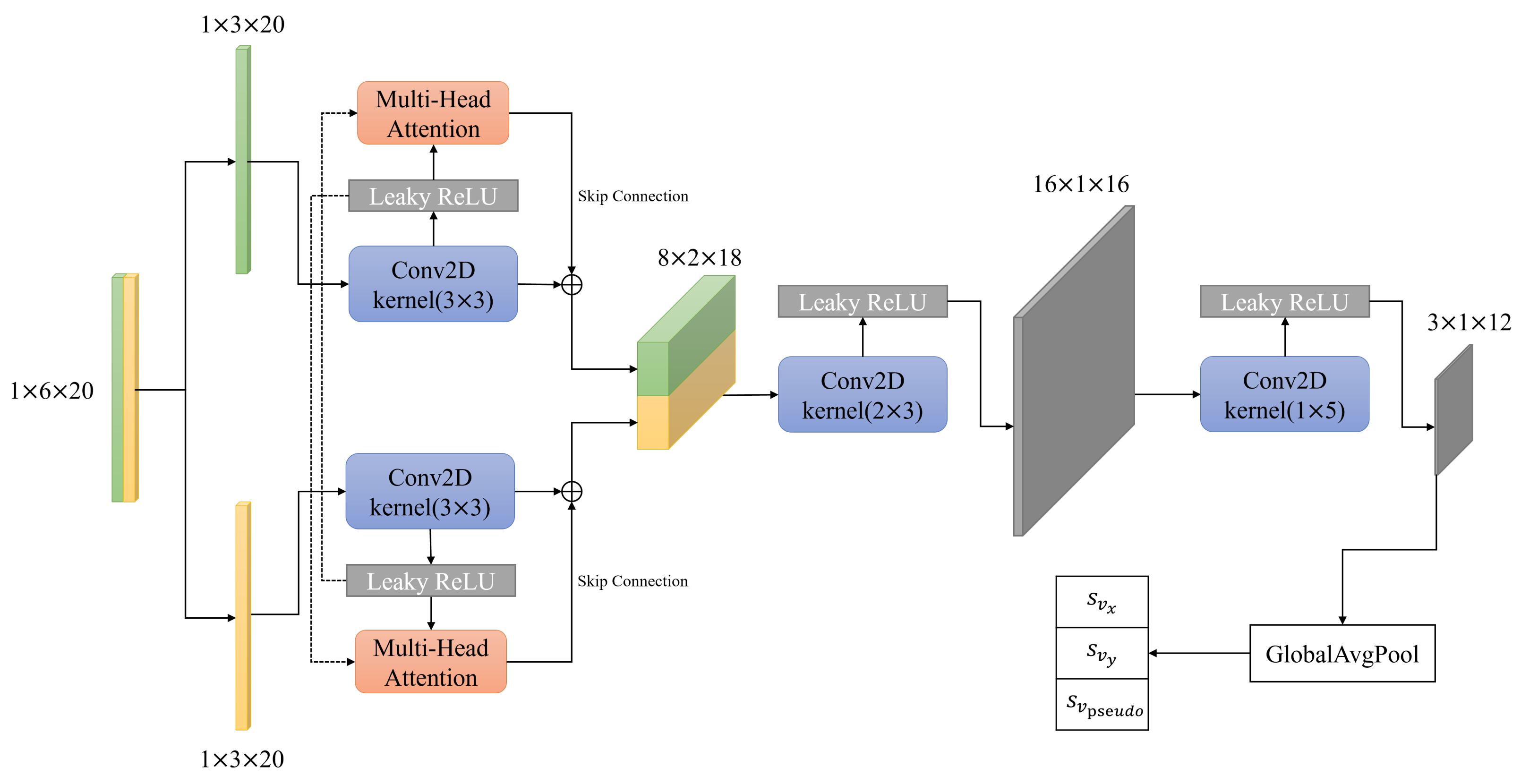

- We propose an approach that improves the accuracy of EKF-based IMU localization with a convolutional neural network (CNN) architecture. Specifically, we design a stable training method that finds the optimal parameters of the system and the observation-noise covariance in real time by reducing the error at each iteration. Unlike many other approaches, such as [12], in which the algorithm is trained offline in batches over multiple epochs, our system is designed and tested for online training: the algorithm is trained continuously while SLAM is running, as each sequence of IMU data points is acquired.

- Our proposed CNN module includes multi-head attention (MHA) layers that model the fusion of different sensing modalities (e.g., multiple IMUs, lidars, etc.). MHA was originally proposed for natural language processing (NLP) [13] and was later shown to be effective in modeling cross-modal interactions [14]. These works inspired us to combine information from different sensors via the attention mechanism.

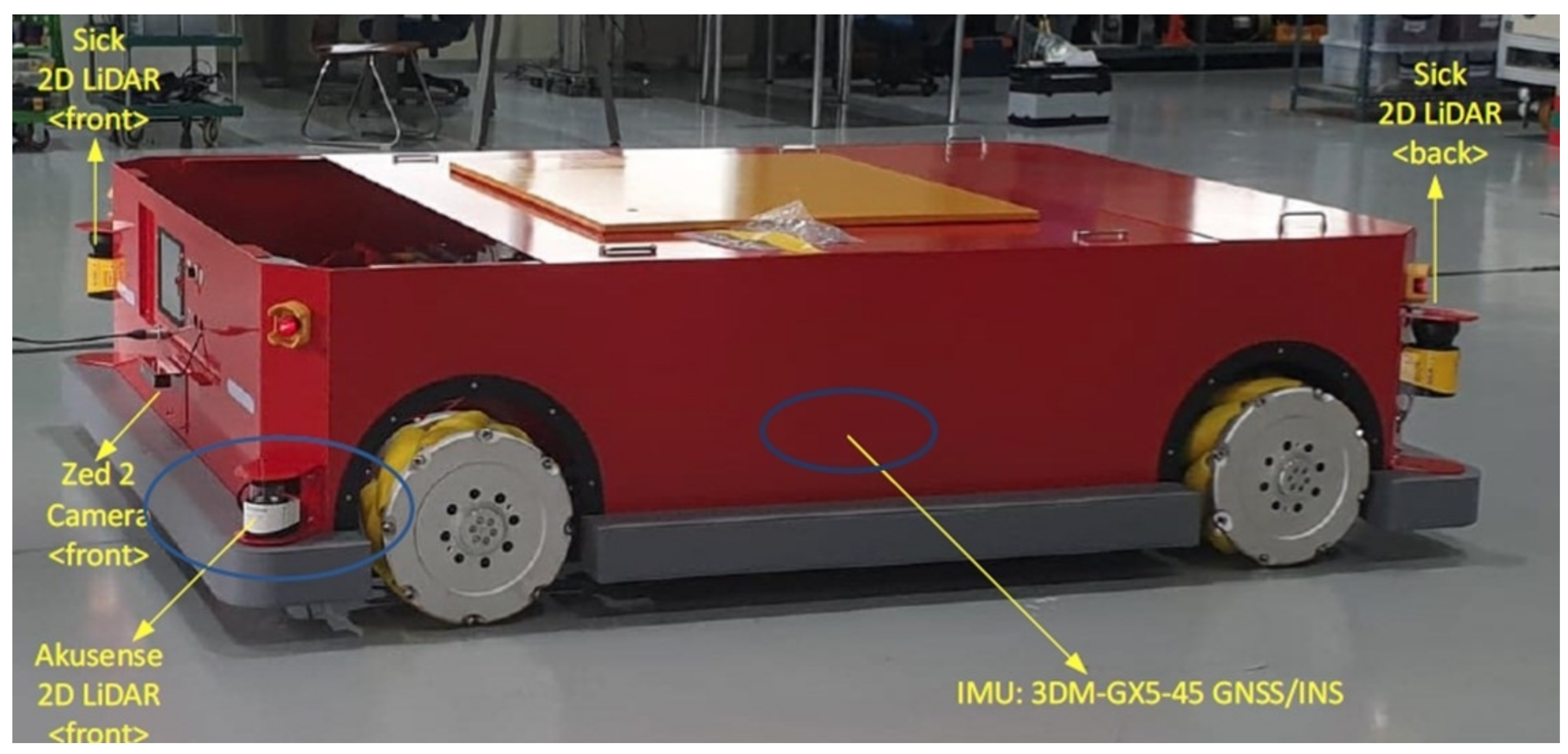

- We conducted extensive experiments on a real robotic platform to assess the effectiveness of our method in the real world (a factory environment in our case). We designed real-world scenarios for online training in which SLAM may fail and only the IMU(s) can provide sensory information to the EKF-based localization module. The algorithm is trained continuously while the robot is online and navigating.
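To make the cross-modal attention idea in the contributions above concrete, the following is a minimal NumPy sketch of multi-head cross-attention in the sense of [13], where feature vectors from one sensor stream attend to those of another. It is not the architecture used in this work: the function name, the embedding size, the sequence lengths, and the random projection matrices (standing in for trained weights) are all assumptions chosen for illustration.

```python
import numpy as np


def softmax(x, axis=-1):
    # Numerically stable softmax along the given axis.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def multi_head_cross_attention(query_seq, context_seq, params, num_heads):
    """Scaled dot-product attention with several heads.

    query_seq:   (T_q, d_model) features of the stream that asks the questions.
    context_seq: (T_c, d_model) features of the stream that is attended to.
    params:      dict with square projection matrices W_q, W_k, W_v, W_o
                 (hypothetical; a trained model would supply these).
    """
    d_model = query_seq.shape[-1]
    assert d_model % num_heads == 0, "d_model must be divisible by num_heads"
    d_head = d_model // num_heads

    def split_heads(x):
        # (T, d_model) -> (num_heads, T, d_head)
        return x.reshape(x.shape[0], num_heads, d_head).transpose(1, 0, 2)

    q = split_heads(query_seq @ params["W_q"])
    k = split_heads(context_seq @ params["W_k"])
    v = split_heads(context_seq @ params["W_v"])

    # Attention weights: one (T_q, T_c) matrix per head, rows sum to 1.
    scores = q @ k.transpose(0, 2, 1) / np.sqrt(d_head)
    weights = softmax(scores, axis=-1)

    # Weighted sum of values, then merge heads back to (T_q, d_model).
    heads = weights @ v
    merged = heads.transpose(1, 0, 2).reshape(-1, d_model)
    return merged @ params["W_o"], weights


# Illustrative inputs: embeddings of two sensor streams (random placeholders).
rng = np.random.default_rng(0)
d_model, num_heads = 16, 4
params = {
    name: rng.normal(scale=d_model ** -0.5, size=(d_model, d_model))
    for name in ("W_q", "W_k", "W_v", "W_o")
}
imu_a = rng.normal(size=(10, d_model))  # 10 time steps from a first sensor
imu_b = rng.normal(size=(12, d_model))  # 12 time steps from a second sensor

fused, weights = multi_head_cross_attention(imu_a, imu_b, params, num_heads)
print(fused.shape, weights.shape)
```

Each head produces its own weighting of the second stream for every time step of the first, so the two streams do not need the same length or sampling rate.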

2. Related Work

3. Quaternion-Based Extended Kalman Filter

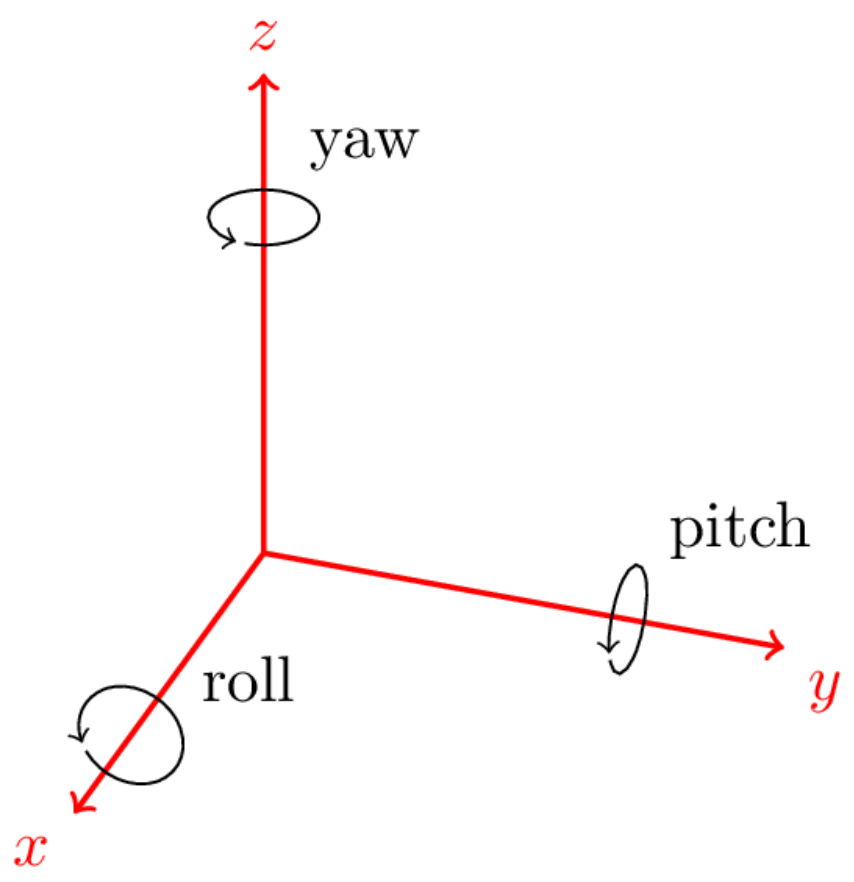

3.1. IMU Inclination Calculation

3.2. IMU Integration Model

3.3. EKF Correction with Measurements

4. Covariance Optimization

4.1. Adjustable Covariance

4.2. Online Training Method

4.3. Implementation Details

5. Experiments and Results

5.1. Dataset

5.2. Experimental Setup

- The ratio of the position error to the total path length when SLAM fails.

- Azimuth angle error relative to the total path length when SLAM fails.

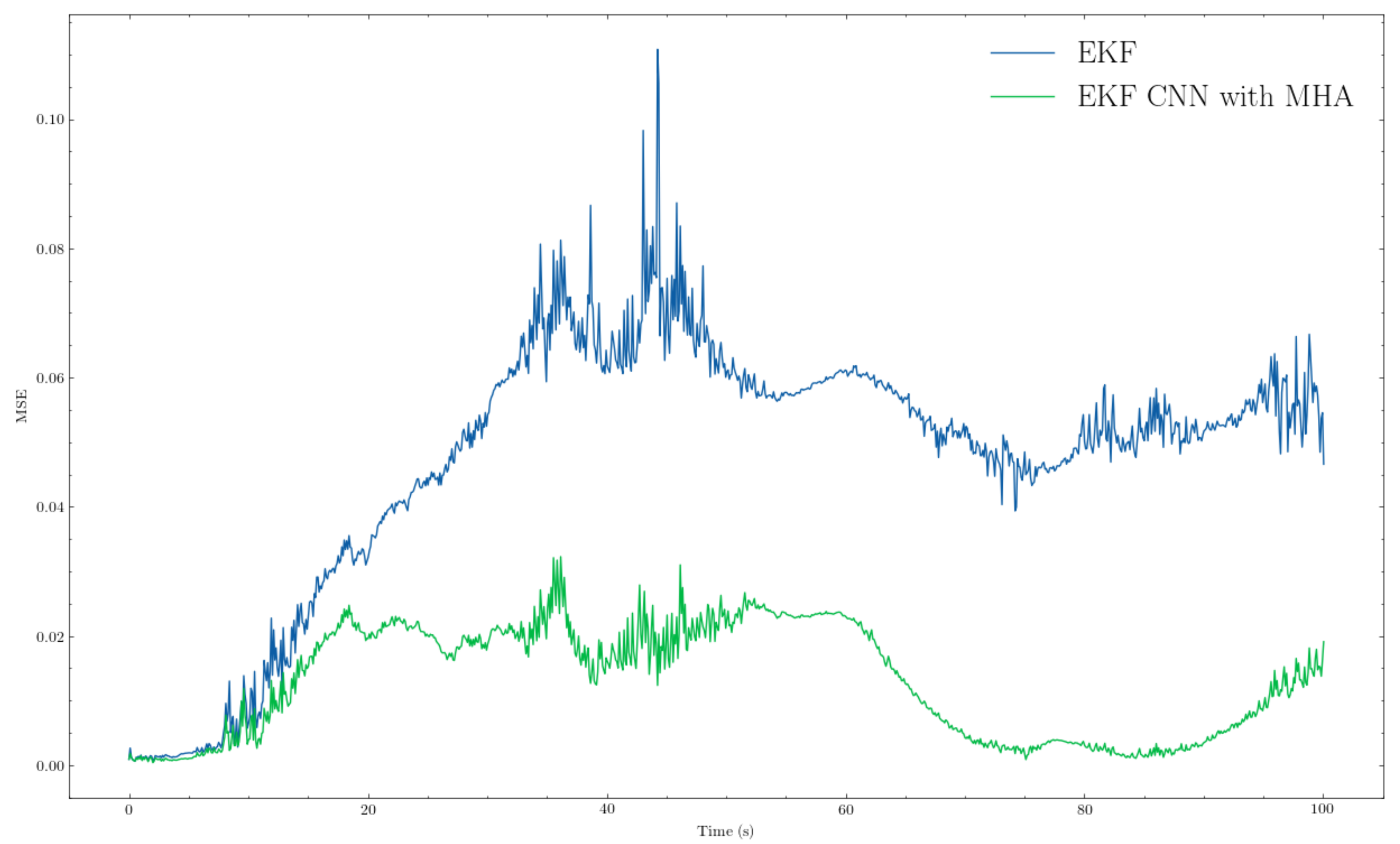

- The mean of the squared errors between the ground truth (SLAM) and the estimate under assessment (estimated position and orientation) when SLAM fails.

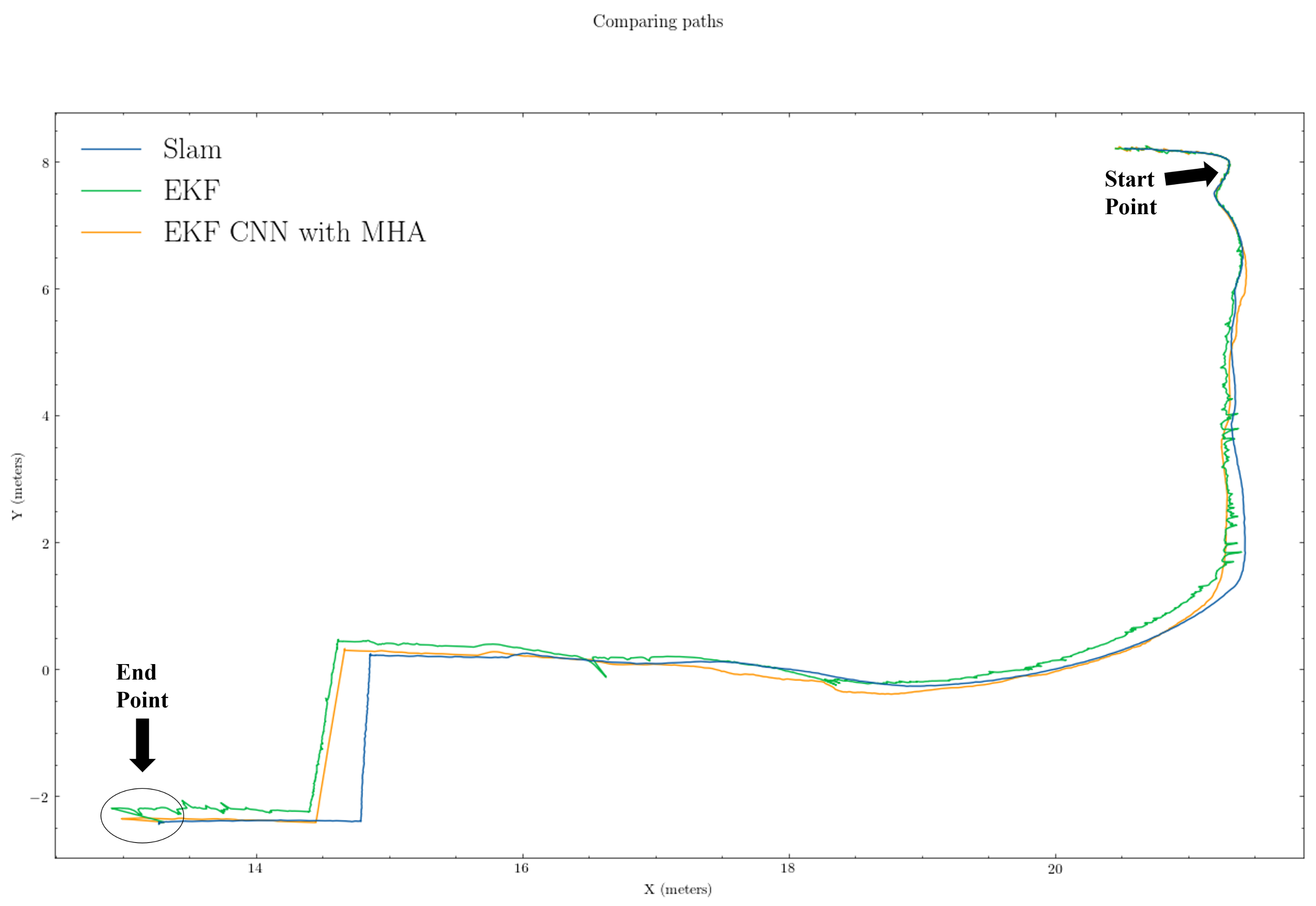

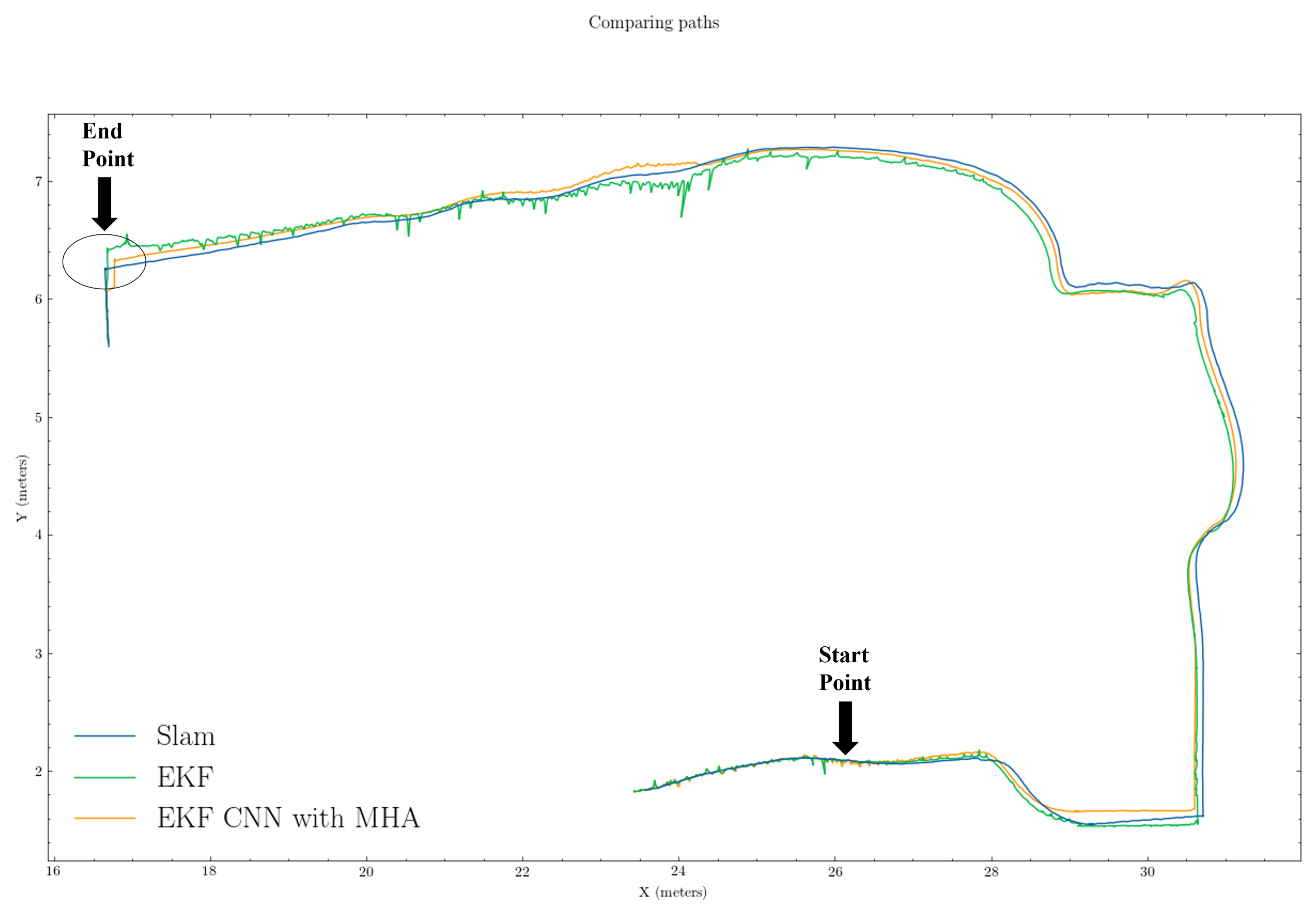
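The three evaluation metrics listed above can be sketched in a few lines of Python. The exact definitions are not given in this section, so the sketch rests on assumptions: the position and azimuth errors are taken at the end of the SLAM-failure segment, the path length is the summed distance between consecutive ground-truth positions, and the mean squared error is computed over paired scalar samples. All function names are hypothetical.

```python
import math


def path_length(points):
    # Summed Euclidean distance between consecutive ground-truth positions.
    return sum(math.dist(a, b) for a, b in zip(points, points[1:]))


def position_error_ratio(est_end, ref_end, length):
    # Position error as a percentage of the travelled path length.
    # Assumption: the error is measured at the end of the failure segment.
    return 100.0 * math.dist(est_end, ref_end) / length


def wrap_deg(angle):
    # Wrap an angle difference into [-180, 180) degrees.
    return (angle + 180.0) % 360.0 - 180.0


def azimuth_error_rate(est_yaw_deg, ref_yaw_deg, length):
    # Absolute azimuth error per metre of path (deg/m).
    return abs(wrap_deg(est_yaw_deg - ref_yaw_deg)) / length


def mean_squared_error(estimates, references):
    # Average of squared differences between paired scalar samples.
    squared = [(e - r) ** 2 for e, r in zip(estimates, references)]
    return sum(squared) / len(squared)


# Toy example with made-up numbers (not data from the experiments).
reference_path = [(0.0, 0.0), (3.0, 4.0), (6.0, 8.0)]
length = path_length(reference_path)

pos_pct = position_error_ratio((6.0, 8.5), reference_path[-1], length)
yaw_rate = azimuth_error_rate(359.0, 1.0, length)
mse = mean_squared_error([1.0, 2.0, 3.0], [1.0, 2.0, 5.0])
print(length, pos_pct, yaw_rate, mse)
```

Wrapping the yaw difference before taking its absolute value avoids reporting a near-360° error when the estimate and the reference lie on opposite sides of the 0°/360° boundary.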

5.3. Result and Analysis

5.4. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Alatise, M.B.; Hancke, G.P. A Review on Challenges of Autonomous Mobile Robot and Sensor Fusion Methods. IEEE Access 2020, 8, 39830–39846.

2. Ott, F.; Feigl, T.; Löffler, C.; Mutschler, C. ViPR: Visual-Odometry-aided Pose Regression for 6DoF Camera Localization. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Seattle, WA, USA, 14–19 June 2020; pp. 42–43.

3. Zhang, E.; Masoud, N. Increasing GPS Localization Accuracy With Reinforcement Learning. IEEE Trans. Intell. Transp. Syst. 2020, 22, 2615–2626.

4. Lidow, A.; De Rooij, M.; Strydom, J.; Reusch, D.; Glaser, J. GaN Transistors for Efficient Power Conversion; John Wiley & Sons: Hoboken, NJ, USA, 2019.

5. Zhao, J. A Review of Wearable IMU (Inertial-Measurement-Unit)-based Pose Estimation and Drift Reduction Technologies. J. Phys. Conf. Ser. 2018, 1087, 9.

6. Thrun, S.; Burgard, W.; Fox, D. Probabilistic Robotics; MIT Press: Cambridge, MA, USA, 2005.

7. Kalman, R.E. A New Approach to Linear Filtering and Prediction Problems. J. Basic Eng. 1960, 82, 35–45.

8. Malyavej, V.; Kumkeaw, W.; Aorpimai, M. Indoor robot localization by RSSI/IMU sensor fusion. In Proceedings of the 2013 10th International Conference on Electrical Engineering/Electronics, Computer, Telecommunications and Information Technology, Krabi, Thailand, 15–17 May 2013; pp. 1–6.

9. Brossard, M.; Bonnabel, S. Learning wheel odometry and IMU errors for localization. In Proceedings of the 2019 International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 20–24 May 2019; pp. 291–297.

10. Yan, Y.; Zhang, B.; Zhou, J.; Zhang, Y.; Liu, X. Real-Time Localization and Mapping Utilizing Multi-Sensor Fusion and Visual–IMU–Wheel Odometry for Agricultural Robots in Unstructured, Dynamic and GPS-Denied Greenhouse Environments. Agronomy 2022, 12, 1740.

11. Jurado, J.; Kabban, C.M.S.; Raquet, J. A regression-based methodology to improve estimation of inertial sensor errors using Allan variance data. Navig. J. Inst. Navig. 2019, 66, 251–263.

12. Brossard, M.; Barrau, A.; Bonnabel, S. AI-IMU dead-reckoning. IEEE Trans. Intell. Veh. 2020, 5, 585–595.

13. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. Adv. Neural Inf. Process. Syst. 2017, 30, 5998–6008.

14. Tsai, Y.H.H.; Bai, S.; Liang, P.P.; Kolter, J.Z.; Morency, L.P.; Salakhutdinov, R. Multimodal transformer for unaligned multimodal language sequences. In Proceedings of the 57th Annual Meeting of the Association for Computational Linguistics, Florence, Italy, 28 July–2 August 2019; Volume 2019, p. 6558.

15. Akhlaghi, S.; Zhou, N.; Huang, Z. Adaptive adjustment of noise covariance in Kalman filter for dynamic state estimation. In Proceedings of the 2017 IEEE Power & Energy Society General Meeting, Chicago, IL, USA, 16–20 July 2017; pp. 1–5.

16. Hu, G.; Gao, B.; Zhong, Y.; Gu, C. Unscented kalman filter with process noise covariance estimation for vehicular ins/gps integration system. Inf. Fusion 2020, 64, 194–204.

17. Hornik, K.; Stinchcombe, M.; White, H. Multilayer feedforward networks are universal approximators. Neural Netw. 1989, 2, 359–366.

18. Haarnoja, T.; Ajay, A.; Levine, S.; Abbeel, P. Backprop kf: Learning discriminative deterministic state estimators. Adv. Neural Inf. Process. Syst. 2016, 29, 4376–4384.

19. Song, F.; Li, Y.; Cheng, W.; Dong, L.; Li, M.; Li, J. An Improved Kalman Filter Based on Long Short-Memory Recurrent Neural Network for Nonlinear Radar Target Tracking. Wirel. Commun. Mob. Comput. 2022, 2022, 8280428.

20. Gao, X.; Luo, H.; Ning, B.; Zhao, F.; Bao, L.; Gong, Y.; Xiao, Y.; Jiang, J. RL-AKF: An adaptive kalman filter navigation algorithm based on reinforcement learning for ground vehicles. Remote Sens. 2020, 12, 1704.

21. Wu, F.; Luo, H.; Jia, H.; Zhao, F.; Xiao, Y.; Gao, X. Predicting the noise covariance with a multitask learning model for Kalman filter-based GNSS/INS integrated navigation. IEEE Trans. Instrum. Meas. 2020, 70, 1–13.

22. Feng, K.; Li, J.; Zhang, X.; Shen, C.; Bi, Y.; Zheng, T.; Liu, J. A new quaternion-based Kalman filter for real-time attitude estimation using the two-step geometrically-intuitive correction algorithm. Sensors 2017, 17, 2146.

23. Kok, M.; Hol, J.D.; Schön, T.B. Using inertial sensors for position and orientation estimation. arXiv 2017, arXiv:1704.06053.

24. Mochnac, J.; Marchevsky, S.; Kocan, P. Bayesian filtering techniques: Kalman and extended Kalman filter basics. In Proceedings of the 2009 19th International Conference Radioelektronika, Bratislava, Slovakia, 22–23 April 2009; pp. 119–122.

25. Ngoc, T.T.; Khenchaf, A.; Comblet, F. Evaluating Process and Measurement Noise in Extended Kalman Filter for GNSS Position Accuracy. In Proceedings of the 2019 13th European Conference on Antennas and Propagation (EuCAP), Krakow, Poland, 31 March–5 April 2019; pp. 1–5.

26. Khairuddin, A.R.; Talib, M.S.; Haron, H. Review on simultaneous localization and mapping (SLAM). In Proceedings of the 2015 IEEE International Conference on Control System, Computing and Engineering (ICCSCE), Penang, Malaysia, 27–29 November 2015; pp. 85–90.

27. LeCun, Y.; Touretzky, D.; Hinton, G.; Sejnowski, T. A theoretical framework for back-propagation. In Proceedings of the 1988 Connectionist Models Summer School, San Mateo, CA, USA, 17–26 June 1988; Volume 1, pp. 21–28.

28. Paszke, A.; Gross, S.; Massa, F.; Lerer, A.; Bradbury, J.; Chanan, G.; Killeen, T.; Lin, Z.; Gimelshein, N.; Antiga, L.; et al. Pytorch: An imperative style, high-performance deep learning library. Adv. Neural Inf. Process. Syst. 2019, 32, 8026–8037.

29. Stanford Artificial Intelligence Laboratory. Robotic Operating System. Available online: https://www.ros.org (accessed on 1 September 2020).

30. Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. arXiv 2014, arXiv:1412.6980.

31. Nam, D.V. Robust Multi-Sensor Fusion-based SLAM using State Estimation by Learning Observation Model. Ph.D. Thesis, Chungbuk National University, Cheongju, Korea, 2022.

| Trajectory | Failure Length (m) | EKF: Position Error (%) | EKF: Azimuth Error (deg/m) | EKF: MSE | Proposed: Position Error (%) | Proposed: Azimuth Error (deg/m) | Proposed: MSE |
|---|---|---|---|---|---|---|---|
| 1 | 18.19 | 1.64180 | 0.01320 | 0.02055 | 0.97901 | 0.00639 | 0.01580 |
| 2 | 23.92 | 3.56478 | 0.10087 | 0.11934 | 2.79777 | 0.04045 | 0.07454 |
| 3 | 22.94 | 1.00380 | 0.22513 | 0.04406 | 0.84346 | 0.01948 | 0.01294 |
| 4 | 24.81 | 0.38022 | 0.02335 | 0.00504 | 0.37035 | 0.04509 | 0.00523 |
| Overall | | 1.64765 | 0.09064 | 0.04725 | 1.24765 | 0.02785 | 0.02713 |

| Model | Position Error (%) | Azimuth Error (deg/m) | MSE |
|---|---|---|---|
| EKF | 1.64765 | 0.09064 | 0.04725 |
| EKF+CNN_1 | 1.25114 | 0.02111 | 0.04084 |
| EKF+CNN_2 | 1.46609 | 0.03109 | 0.03696 |
| EKF+LSTM | 1.35288 | 0.03138 | 0.03573 |
| Proposed | 1.24765 | 0.02785 | 0.02713 |
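As a worked check of the numbers above, the short script below recomputes the "Overall" row as the plain average of the four trajectories and derives the relative reduction from the EKF baseline to the proposed method. The column keys (`position_pct`, `azimuth_deg_per_m`, `mse`) are labels assumed here, on the reading that the three value columns follow the order of the metrics in Section 5.2; the values are copied from the per-trajectory rows.

```python
# Per-trajectory results copied from the table (trajectories 1 to 4).
ekf = {
    "position_pct": [1.64180, 3.56478, 1.00380, 0.38022],
    "azimuth_deg_per_m": [0.01320, 0.10087, 0.22513, 0.02335],
    "mse": [0.02055, 0.11934, 0.04406, 0.00504],
}
proposed = {
    "position_pct": [0.97901, 2.79777, 0.84346, 0.37035],
    "azimuth_deg_per_m": [0.00639, 0.04045, 0.01948, 0.04509],
    "mse": [0.01580, 0.07454, 0.01294, 0.00523],
}


def mean(values):
    # Unweighted average over trajectories.
    return sum(values) / len(values)


overall_ekf = {key: mean(vals) for key, vals in ekf.items()}
overall_proposed = {key: mean(vals) for key, vals in proposed.items()}

# Relative reduction of each overall metric, in percent of the EKF value.
reduction_pct = {
    key: 100.0 * (overall_ekf[key] - overall_proposed[key]) / overall_ekf[key]
    for key in ekf
}

for key in ekf:
    print(f"{key}: EKF {overall_ekf[key]:.5f}, "
          f"proposed {overall_proposed[key]:.5f}, "
          f"reduction {reduction_pct[key]:.1f}%")
```

Under these assumptions the unweighted means reproduce the reported "Overall" values to five decimals, and the overall reductions come out at roughly 24% for position error, 69% for azimuth error, and 43% for MSE. The per-trajectory rows also show that the gain is not uniform: on trajectory 4 the proposed method has a slightly higher azimuth error and MSE than the EKF baseline.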


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Milam, G.; Xie, B.; Liu, R.; Zhu, X.; Park, J.; Kim, G.; Park, C.H. Trainable Quaternion Extended Kalman Filter with Multi-Head Attention for Dead Reckoning in Autonomous Ground Vehicles. Sensors 2022, 22, 7701. https://doi.org/10.3390/s22207701
