Human Activity Recognition Based on Residual Network and BiLSTM

Abstract

1. Introduction

- (1)

- A new model, combining the ResNet with BiLSTM, is proposed to capture the spatial and temporal feature of sensor data. The rationality of this model is explained from the perspective of human lower limb movement and the corresponding IMU signal.

- (2)

- We introduce the BiLSTM into ResNet to extract the forward and backward dependencies of feature sequence which is useful to improve the performance of the network. We analyze the impact of model parameters on classification accuracy. The optimal network parameters are selected through experiments.

- (3)

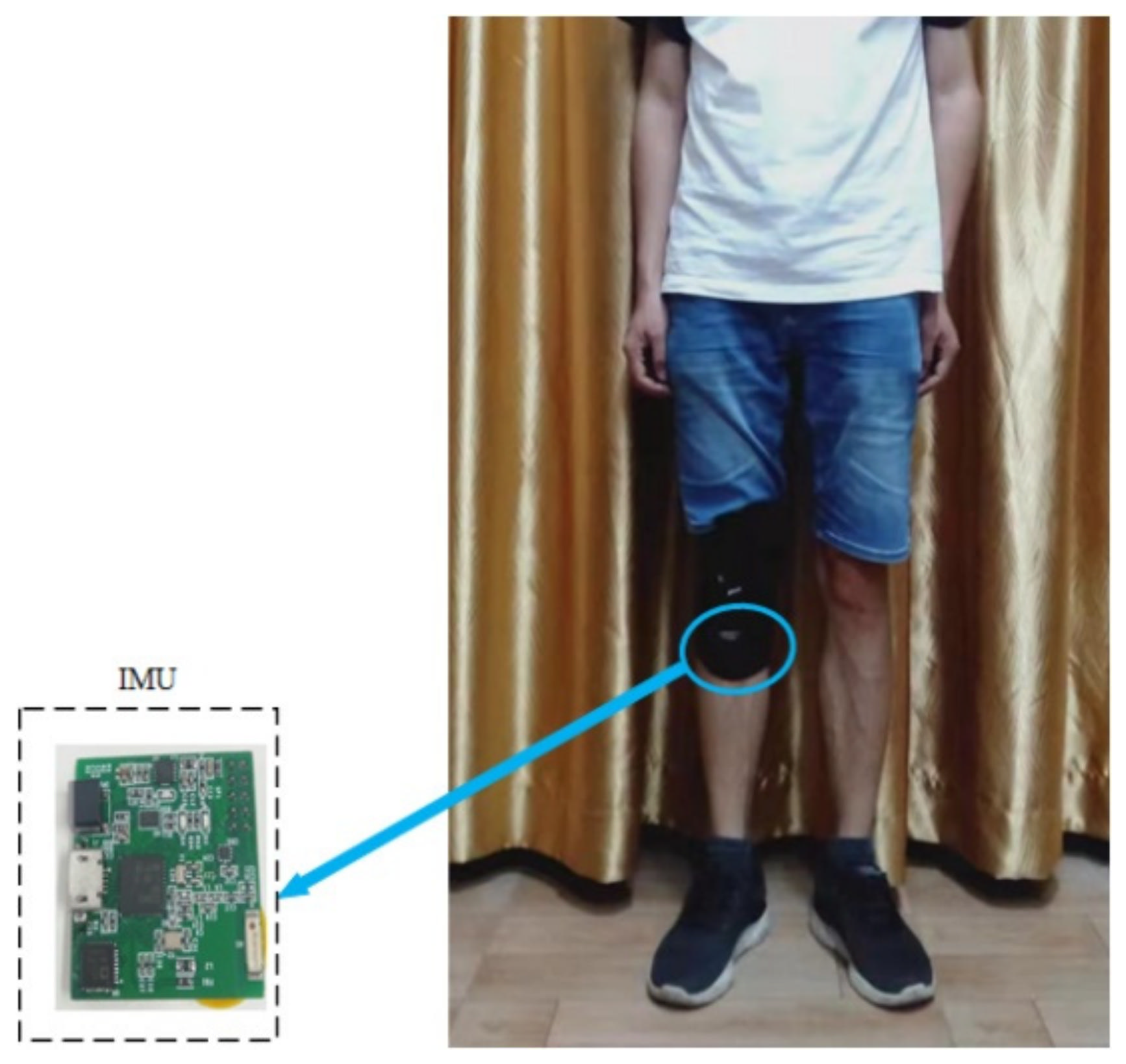

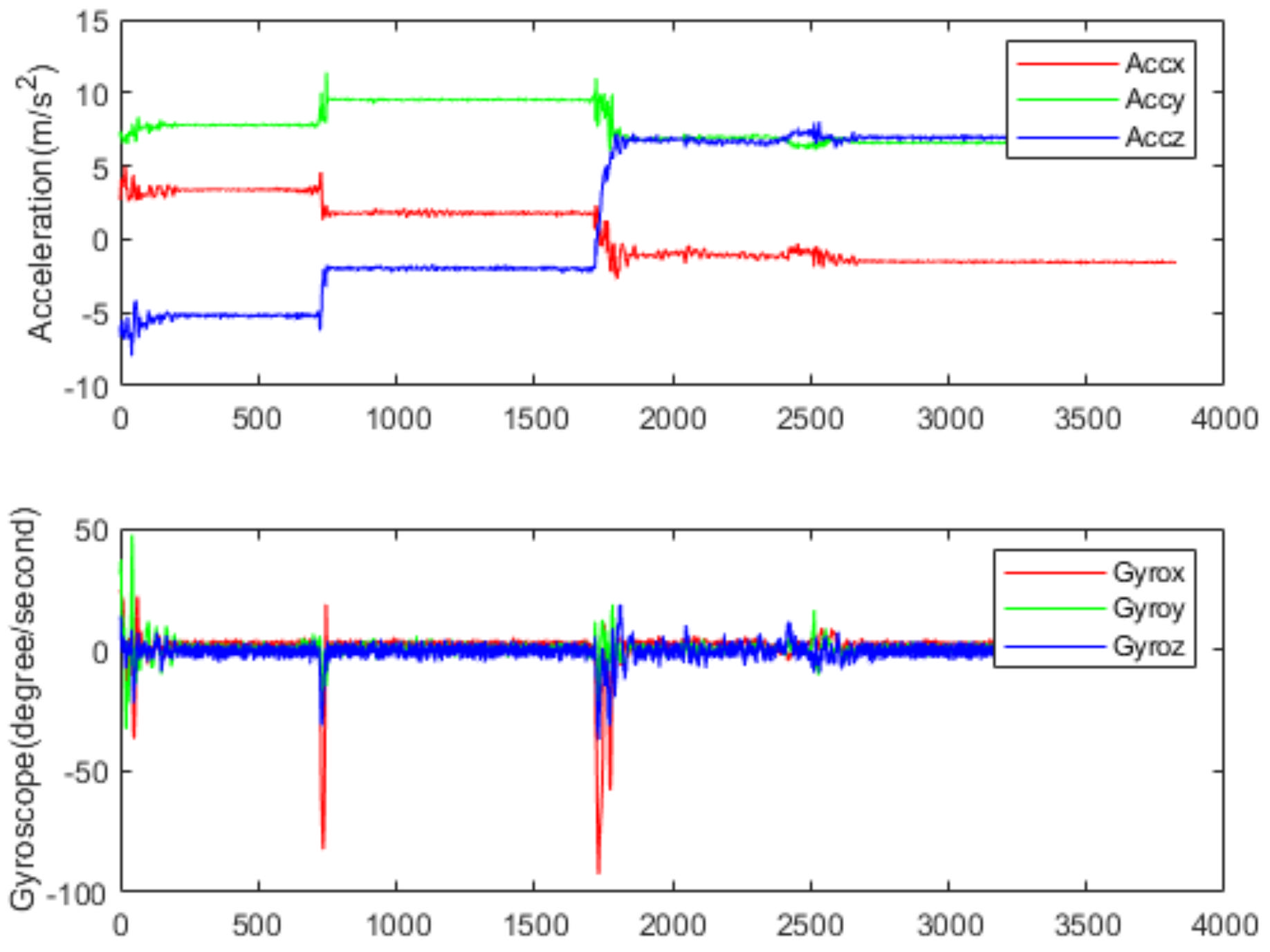

- An HAR dataset, in which the human activity data are collected by a self-developed IMU board, was made. The IMU board is attached to human shank to collect the activity data of the human lower limbs. Our model performs well on this dataset. The proposed model was also tested on both the WISDM and PAMA2 HAR datasets and outperforms existing solutions.

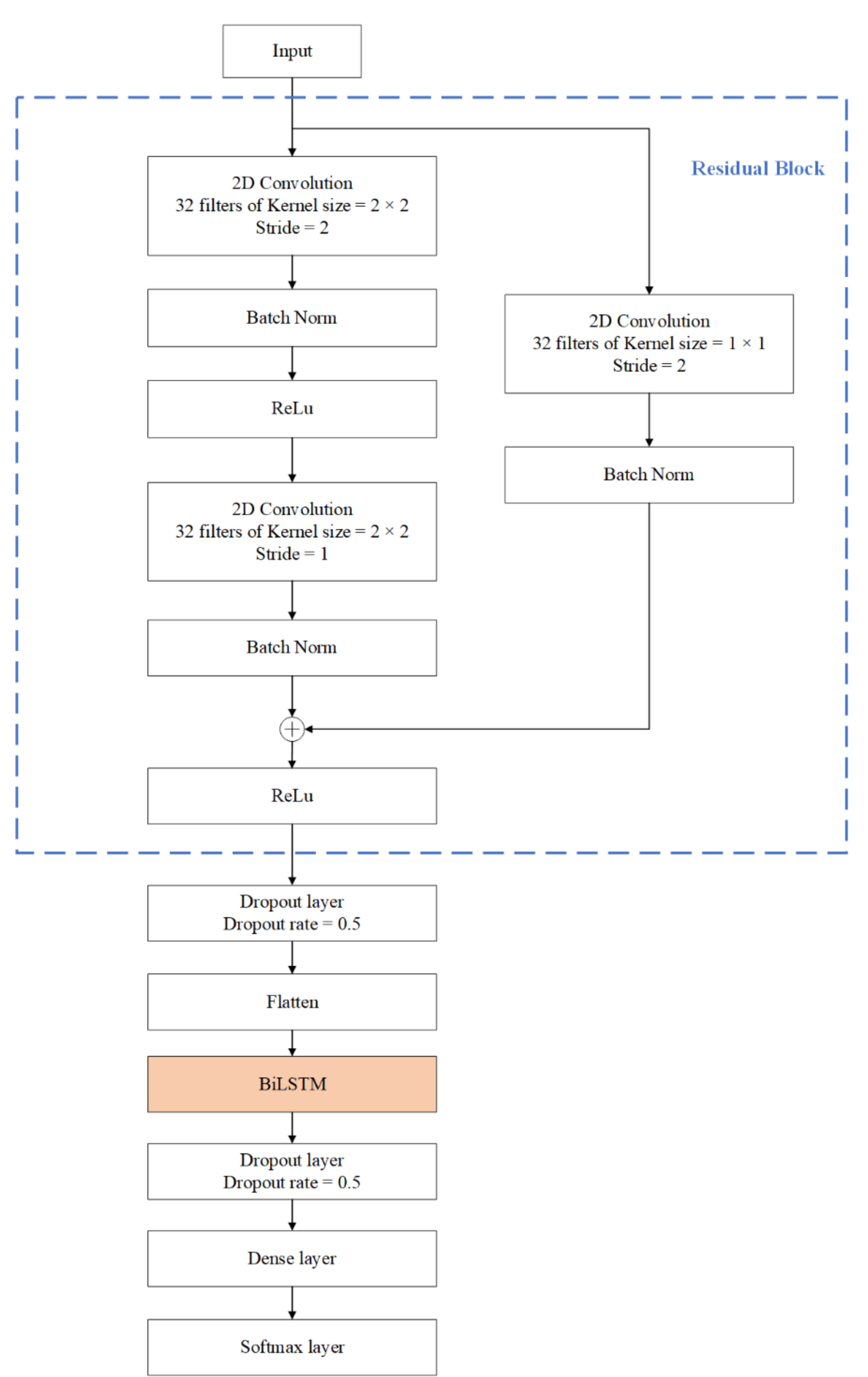

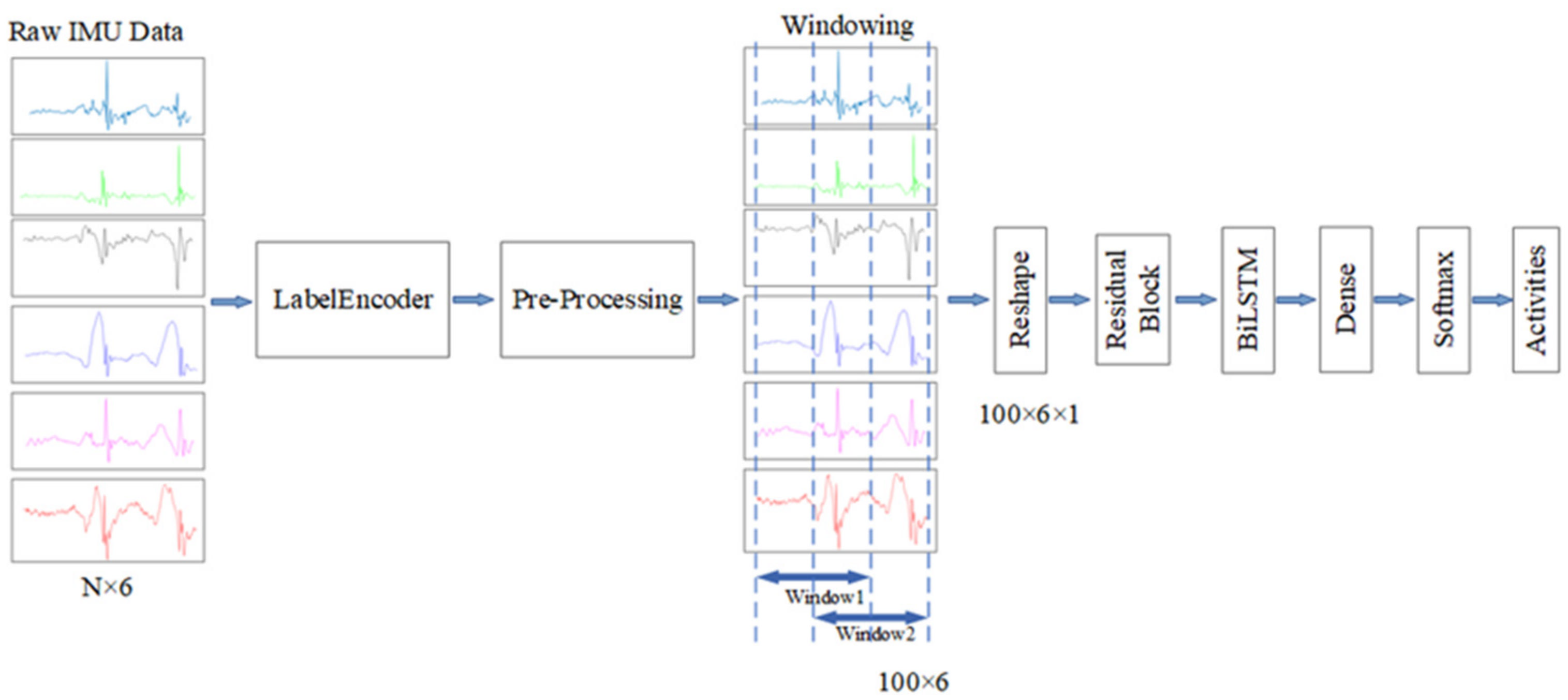

2. Proposed Approach

2.1. Spatial Feature Extraction Based on ResNet

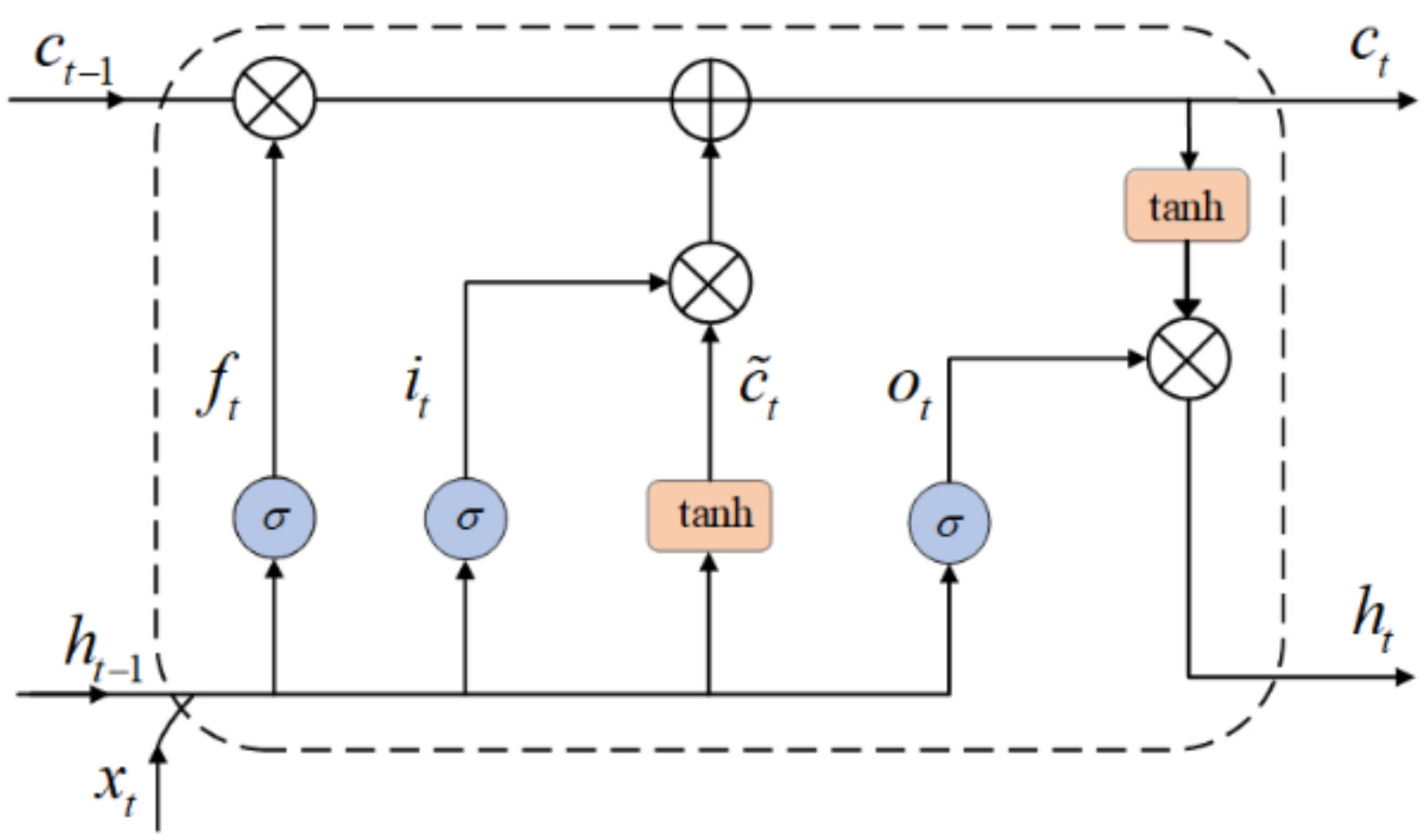

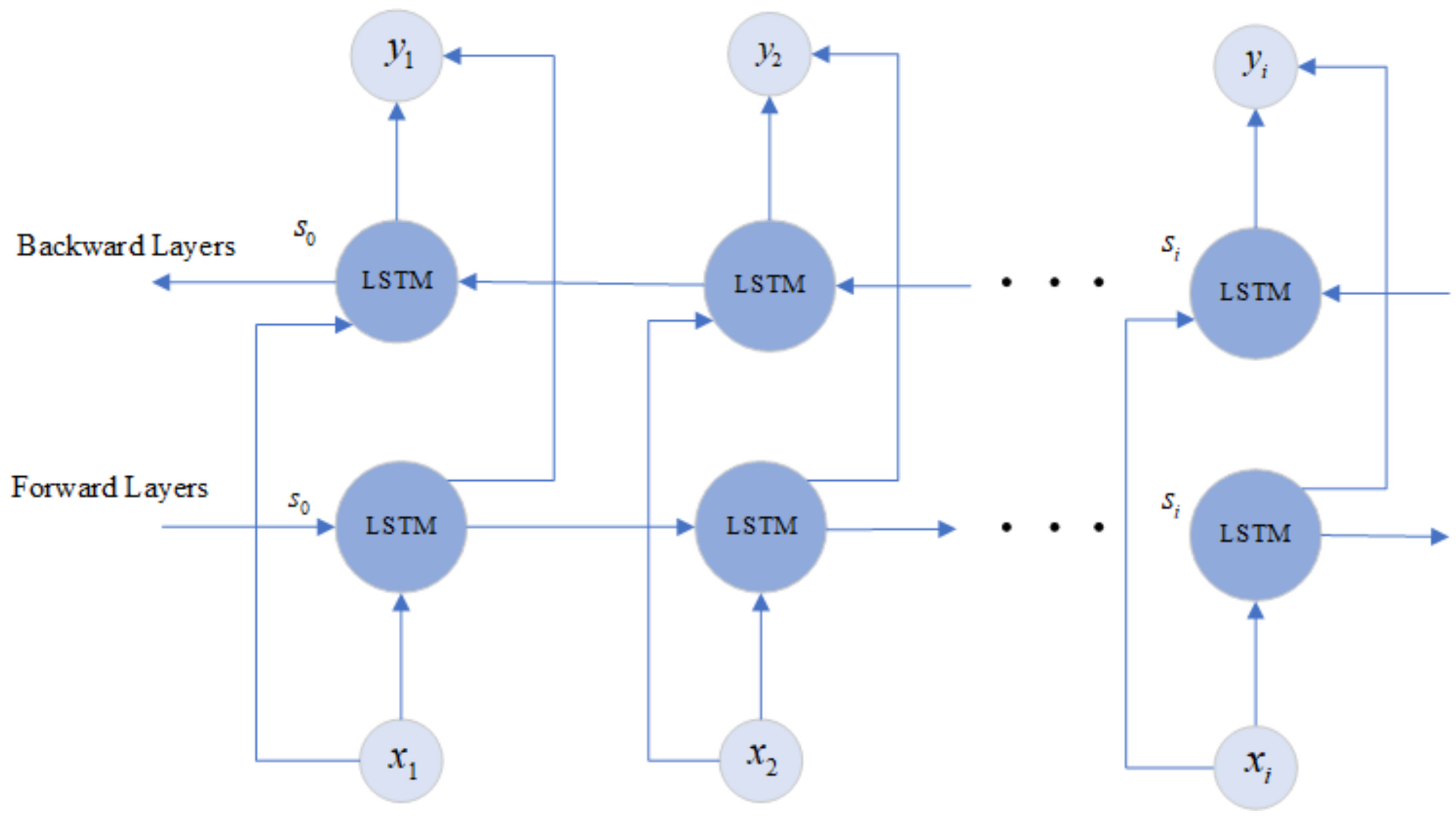
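
Section 2.1 uses a ResNet to extract spatial features. The defining element of a ResNet [30] is the residual block, whose output is y = ReLU(F(x) + x): a small stack of convolutions F learns a correction that is added back to the unchanged input. The sketch below illustrates that idea under simplifying assumptions: a single-channel 1D signal (for example, one IMU axis), an identity shortcut, odd kernel lengths, and no batch normalization. The function names are hypothetical, and the authors' actual block configuration (channels, kernel sizes, normalization) is not reproduced here.

```python
def conv1d_same(x, kernel):
    """Zero-padded ('same') 1D cross-correlation of a single-channel signal.

    Assumes an odd kernel length so the output has the same length as x.
    """
    pad = len(kernel) // 2
    padded = [0.0] * pad + list(x) + [0.0] * pad
    return [sum(w * padded[i + j] for j, w in enumerate(kernel)) for i in range(len(x))]


def relu(v):
    """Element-wise rectified linear unit."""
    return [max(0.0, a) for a in v]


def residual_block(x, kernel1, kernel2):
    """Basic residual block y = ReLU(F(x) + x) with F = conv -> ReLU -> conv."""
    f = conv1d_same(relu(conv1d_same(x, kernel1)), kernel2)
    # Identity shortcut: the input is added back before the final activation.
    return relu([fi + xi for fi, xi in zip(f, x)])


if __name__ == "__main__":
    signal = [1.0, -2.0, 3.0, 0.5]
    print(residual_block(signal, [0.2, 0.5, 0.2], [-0.1, 0.3, -0.1]))
```

With all-zero kernels the block reduces to ReLU(x): the shortcut passes the signal through unchanged, so stacking more blocks cannot make the identity mapping harder to represent, which is the property that makes deep residual networks trainable.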

2.2. BiLSTM Layer
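
Section 2.2 places a BiLSTM layer after the ResNet so that each time step of the feature sequence sees both earlier context (forward pass) and later context (backward pass). The sketch below illustrates the mechanism with a minimal pure-Python LSTM: one LSTM reads the sequence forward, a second reads it in reverse, and the two hidden states are concatenated at every step. Concatenation as the merge rule, the random weight initialization, and the names `LSTM` and `bilstm` are assumptions for illustration; this is not the authors' implementation and it performs no training.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def matvec(w, v):
    """Multiply matrix w (list of rows) by vector v."""
    return [sum(wi * vi for wi, vi in zip(row, v)) for row in w]


class LSTM:
    """Single-layer unidirectional LSTM with small random weights (illustration only)."""

    def __init__(self, input_size, hidden_size, seed=0):
        rng = random.Random(seed)
        self.hidden_size = hidden_size
        n = input_size + hidden_size
        # One weight matrix and bias per gate: input (i), forget (f),
        # candidate cell (g) and output (o); each row acts on [x_t, h_{t-1}].
        self.w = {
            gate: [[rng.uniform(-0.5, 0.5) for _ in range(n)] for _ in range(hidden_size)]
            for gate in "ifgo"
        }
        self.b = {gate: [0.0] * hidden_size for gate in "ifgo"}

    def step(self, x, h, c):
        z = list(x) + list(h)  # concatenate current input and previous hidden state
        pre = {
            gate: [s + b for s, b in zip(matvec(self.w[gate], z), self.b[gate])]
            for gate in "ifgo"
        }
        i = [sigmoid(v) for v in pre["i"]]
        f = [sigmoid(v) for v in pre["f"]]
        cand = [math.tanh(v) for v in pre["g"]]
        o = [sigmoid(v) for v in pre["o"]]
        c_new = [fk * ck + ik * gk for fk, ck, ik, gk in zip(f, c, i, cand)]
        h_new = [ok * math.tanh(ck) for ok, ck in zip(o, c_new)]
        return h_new, c_new

    def run(self, seq):
        """Return the hidden state at every time step of seq."""
        h = [0.0] * self.hidden_size
        c = [0.0] * self.hidden_size
        outputs = []
        for x in seq:
            h, c = self.step(x, h, c)
            outputs.append(h)
        return outputs


def bilstm(seq, forward_lstm, backward_lstm):
    """Concatenate forward and (re-aligned) backward hidden states per time step."""
    fwd = forward_lstm.run(seq)
    bwd = backward_lstm.run(seq[::-1])[::-1]  # reverse back to the original time order
    return [hf + hb for hf, hb in zip(fwd, bwd)]


if __name__ == "__main__":
    seq = [[math.sin(0.5 * t), math.cos(0.5 * t), 0.1 * t] for t in range(6)]
    out = bilstm(seq, LSTM(3, 4, seed=1), LSTM(3, 4, seed=2))
    print(len(out), len(out[0]))  # 6 time steps, 4 forward + 4 backward features each
```

Changing only the last input of the sequence leaves the forward half of the first output untouched but changes its backward half; that backward dependency is exactly what a unidirectional LSTM cannot capture.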

3. Experimental Results and Discussion

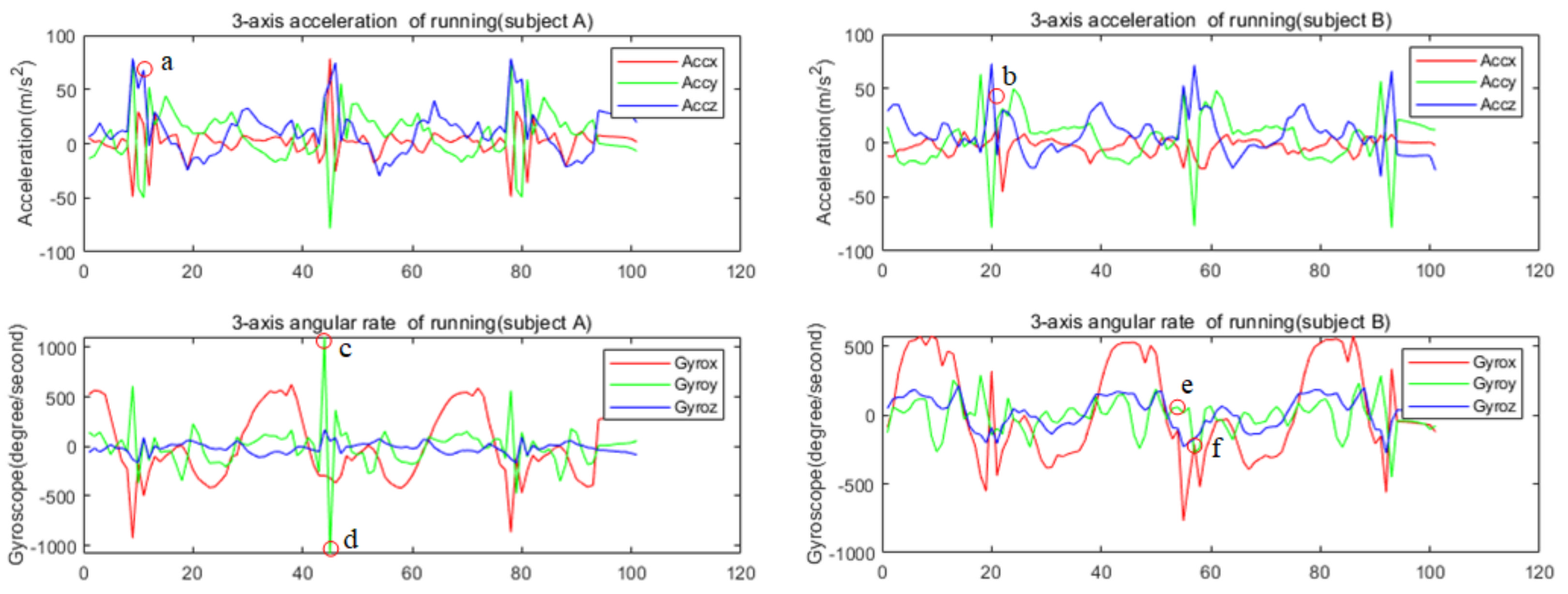

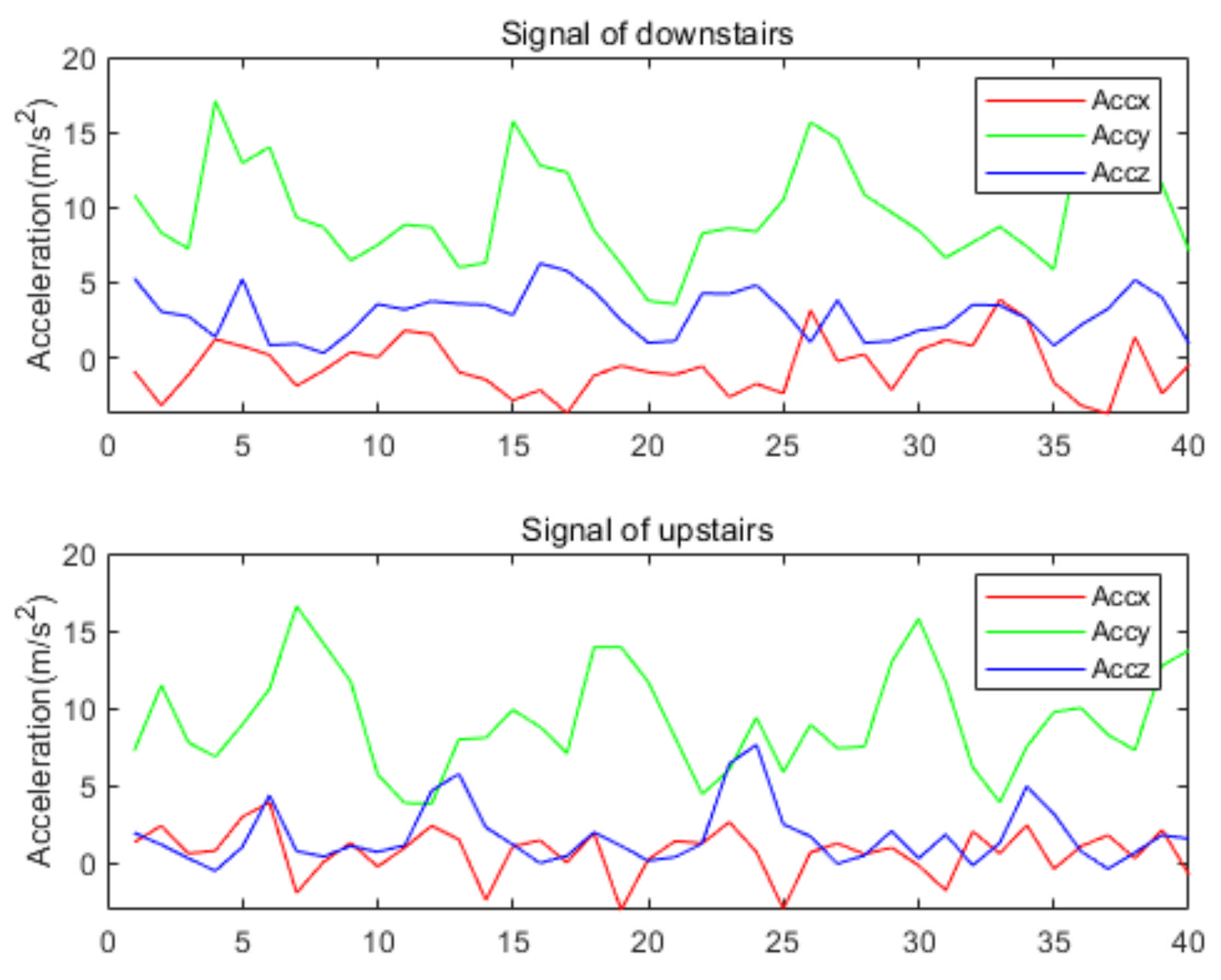

3.1. Data Collection

3.1.1. Collection of the Homemade Dataset

3.1.2. Public Datasets

3.2. Data Preprocessing

3.3. Experimental Environment

3.4. Evaluation Metrics
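
The result tables report per-class precision (PRC), recall (RCL), and F1 score (F1S). Assuming the standard confusion-matrix definitions, with $TP_k$, $FP_k$, and $FN_k$ the true positives, false positives, and false negatives of class $k$, $n_k$ the number of test samples of class $k$, $K$ the number of classes, and $N$ the total number of test samples:

$$
\mathrm{PRC}_k = \frac{TP_k}{TP_k + FP_k}, \qquad
\mathrm{RCL}_k = \frac{TP_k}{TP_k + FN_k}, \qquad
\mathrm{F1S}_k = \frac{2\,\mathrm{PRC}_k\,\mathrm{RCL}_k}{\mathrm{PRC}_k + \mathrm{RCL}_k}
$$

$$
\mathrm{Accuracy} = \frac{1}{N}\sum_{k=1}^{K} TP_k, \qquad
F_w = \sum_{k=1}^{K} \frac{n_k}{N}\,\mathrm{F1S}_k
$$

Reading the Fw column of the comparison table as this support-weighted F1 score is an assumption.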

3.5. Hyperparameter Optimization

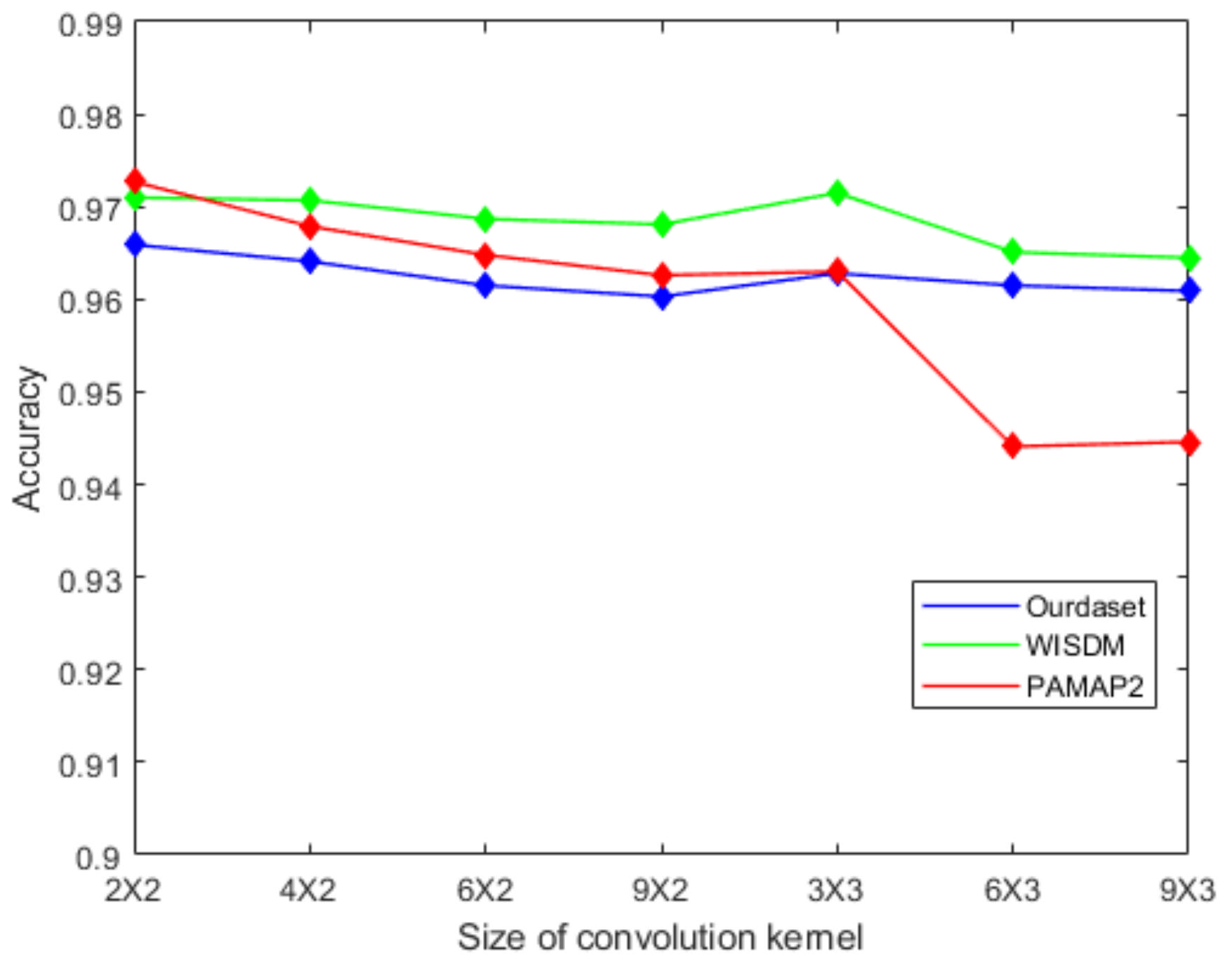

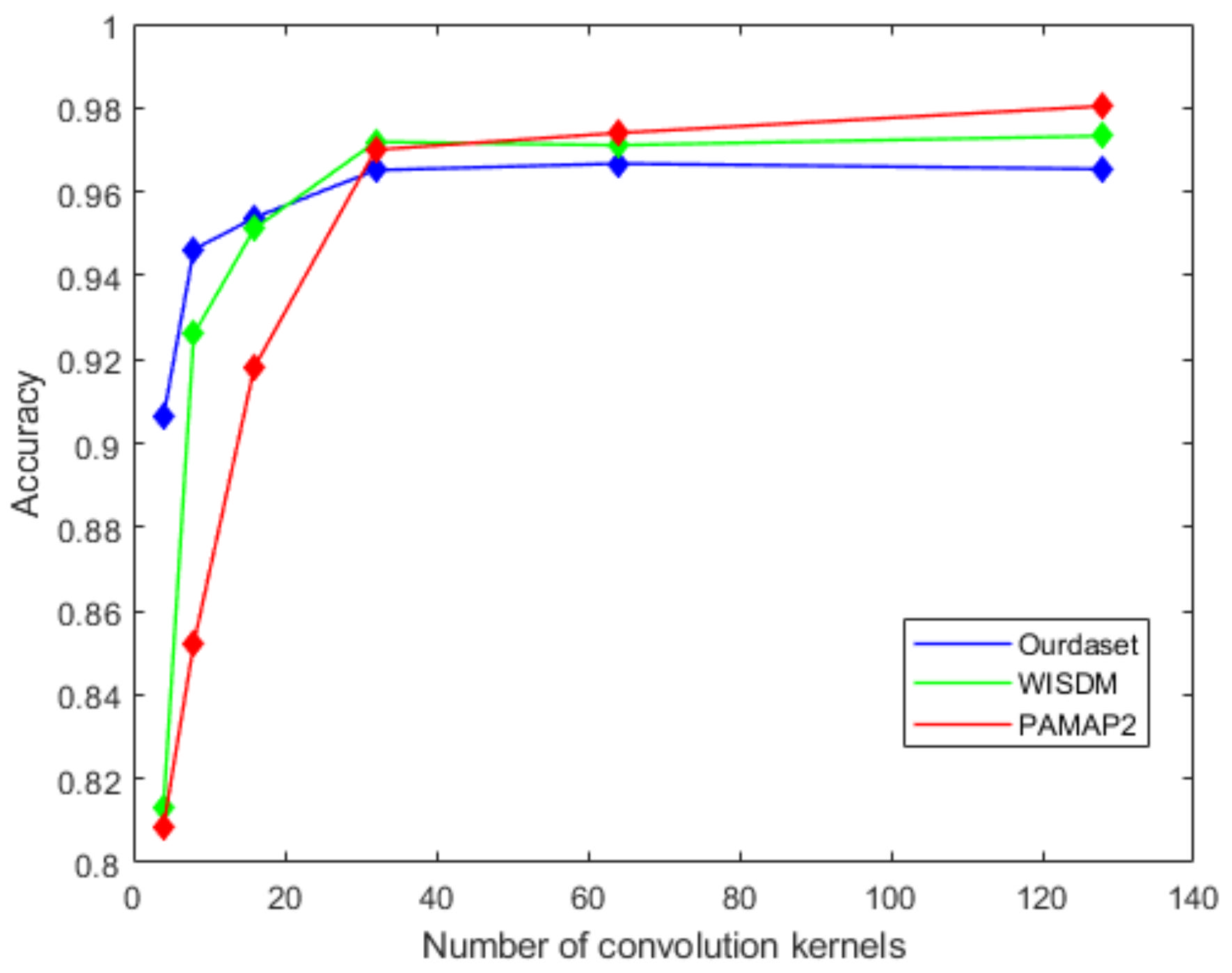

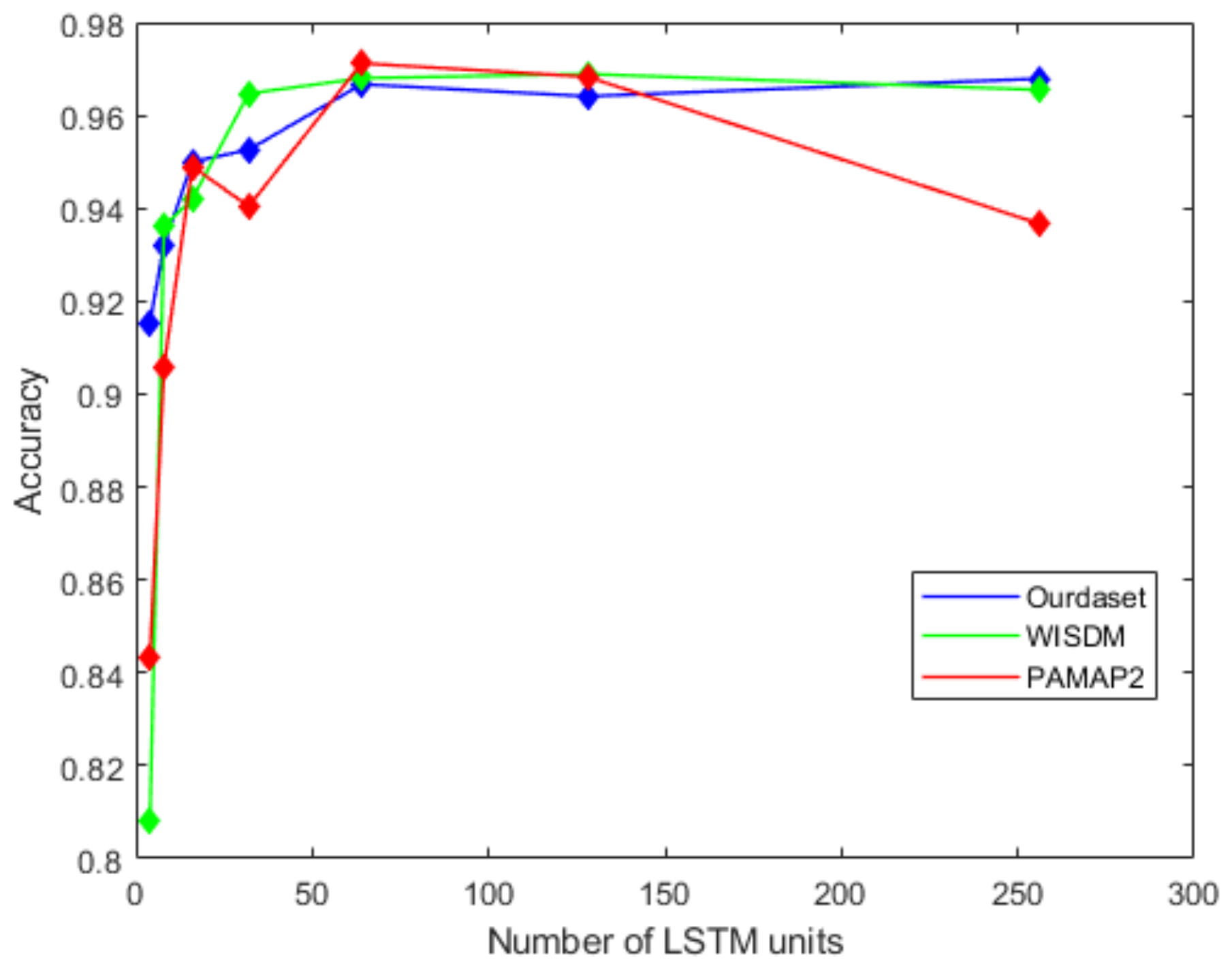

3.5.1. Optimization of Model Parameters

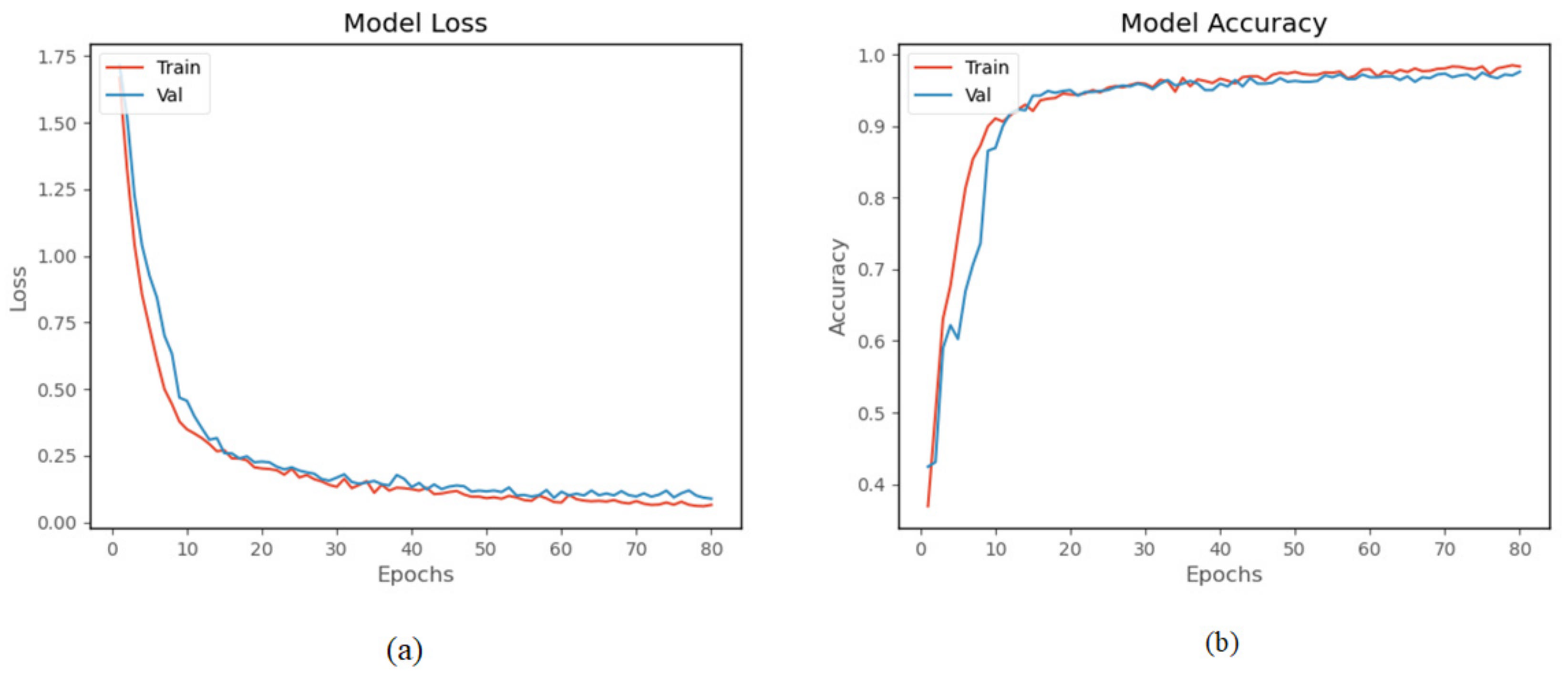

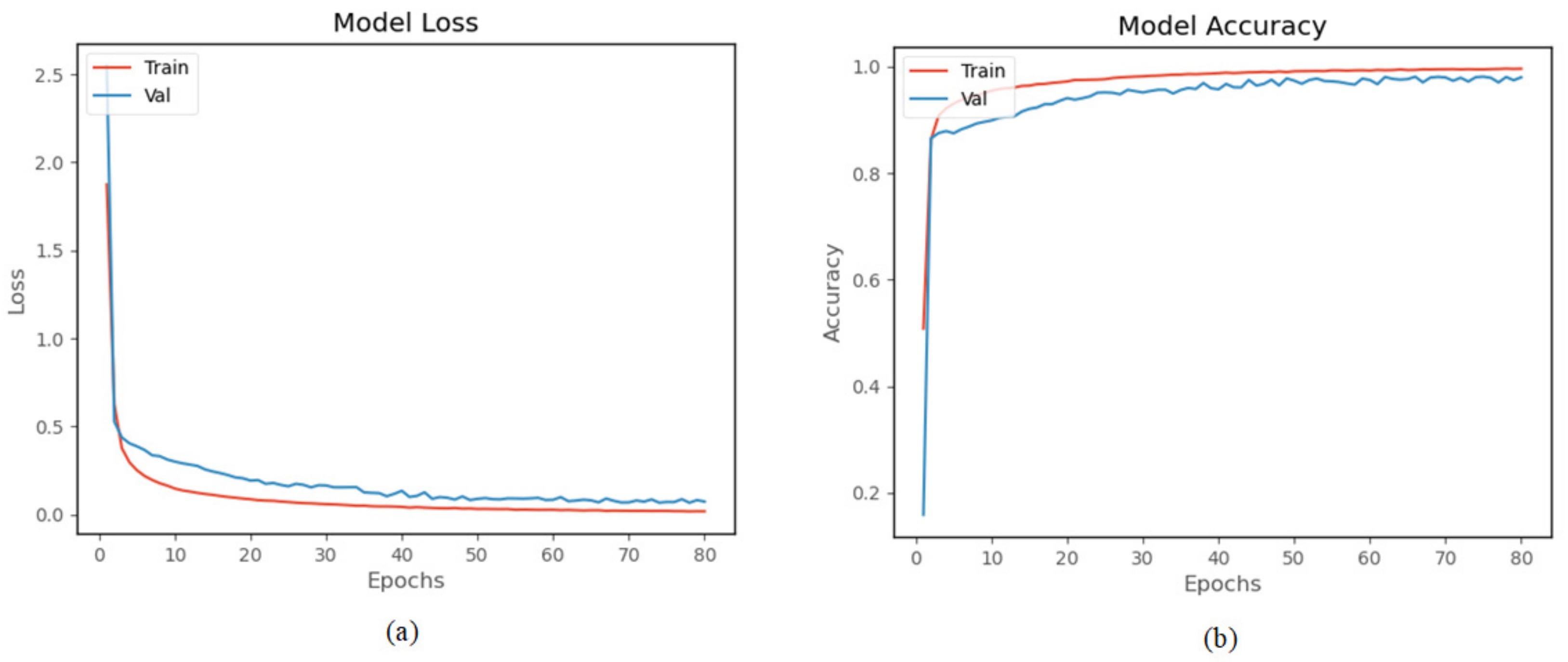

3.5.2. Training Hyperparameters

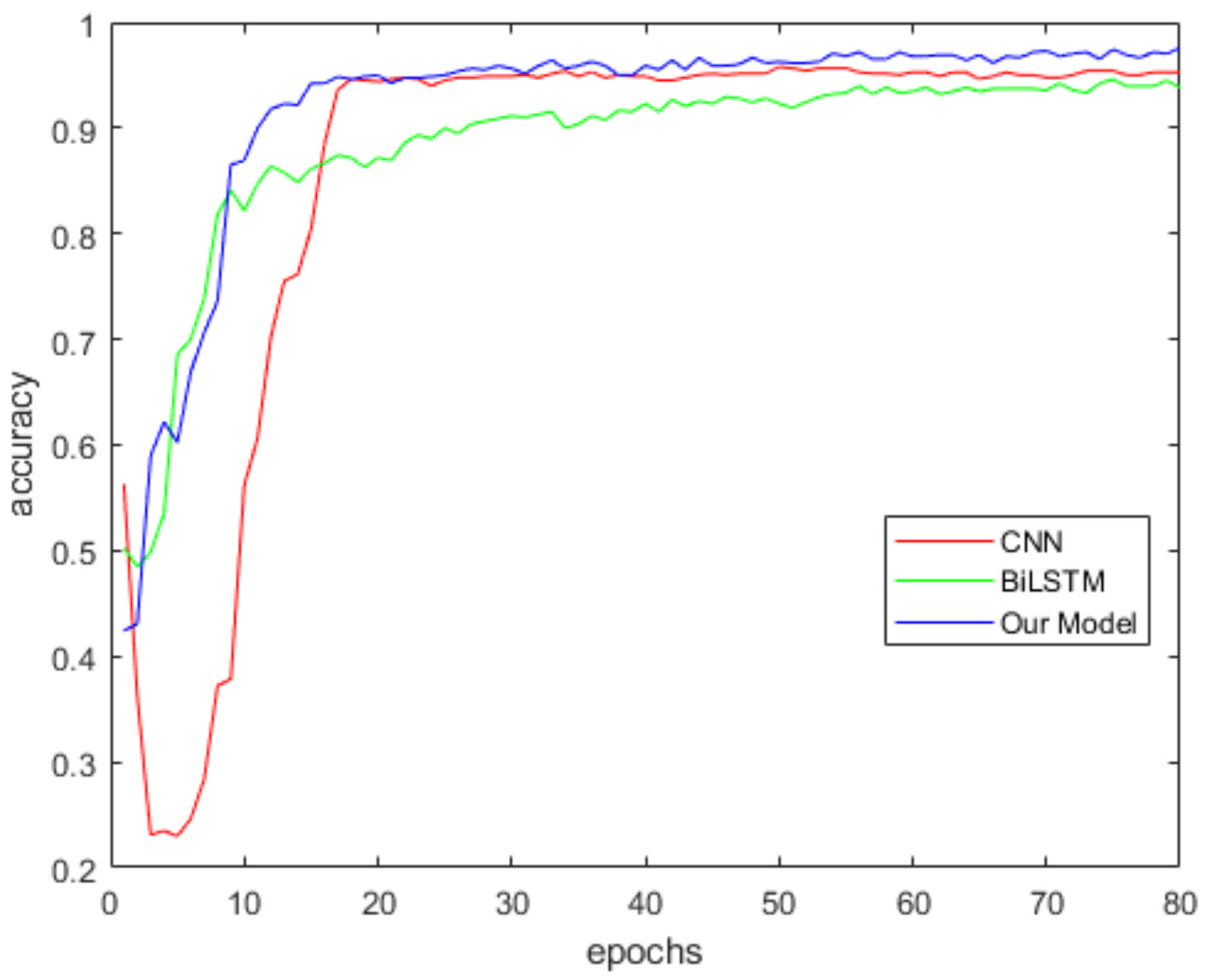

3.6. Experimental Results

3.7. Model Performance on Public Datasets

3.7.1. Performance on WISDM Dataset

3.7.2. Performance on PAMAP2 Dataset

3.8. Comparison with Existing Work

4. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- [1] Qi, J.; Yang, P.; Hanneghan, M.; Tang, S.; Zhou, B. A Hybrid Hierarchical Framework for Gym Physical Activity Recognition and Measurement Using Wearable Sensors. IEEE Internet Things J. 2019, 6, 1384–1393.

- [2] Asghari, P.; Soleimani, E.; Nazerfard, E. Online human activity recognition employing hierarchical hidden Markov models. J. Amb. Intel. Hum. Comp. 2020, 11, 1141–1152.

- [3] Dang, L.M.; Min, K.; Wang, H.; Piran, M.J.; Lee, C.H.; Moon, H. Sensor-based and vision-based human activity recognition: A comprehensive survey. Pattern Recognit. 2020, 108, 1–24.

- [4] Zhang, S.; Wei, Z.; Nie, J.; Huang, L.; Wang, S.; Li, Z. A Review on Human Activity Recognition Using Vision-Based Method. J. Healthc. Eng. 2017, 2017, 1–31.

- [5] Casale, P.; Pujol, O.; Radeva, P. Human Activity Recognition from Accelerometer Data Using a Wearable Device. In Proceedings of the Pattern Recognition and Image Analysis: 5th Iberian Conference, Las Palmas de Gran Canaria, Spain, 8–10 June 2011; Volume 6669, pp. 289–296.

- [6] Alemayoh, T.T.; Lee, J.H.; Okamoto, S. New Sensor Data Structuring for Deeper Feature Extraction in Human Activity Recognition. Sensors 2021, 21, 2814.

- [7] Kwapisz, J.; Weiss, G.; Moore, S. Activity recognition using cell phone accelerometers. SIGKDD Explor. 2011, 12, 74–82.

- [8] Zhang, Y.; Zhang, Z.; Zhang, Y.; Bao, J.; Zhang, Y.; Deng, H. Human Activity Recognition Based on Motion Sensor Using U-Net. IEEE Access 2019, 7, 75213–75226.

- [9] Wu, W.; Dasgupta, S.; Ramirez, E.E.; Peterson, C.; Norman, G.J. Classification Accuracies of Physical Activities Using Smartphone Motion Sensors. J. Med. Internet Res. 2012, 14, 1–9.

- [10] Gomes, E.; Bertini, L.; Campos, W.R.; Sobral, A.P.; Mocaiber, I.; Copetti, A. Machine Learning Algorithms for Activity-Intensity Recognition Using Accelerometer Data. Sensors 2021, 21, 1214.

- [11] Wang, Z.; Wu, D.; Gravina, R.; Fortino, G.; Jiang, Y.; Tang, K. Kernel fusion based extreme learning machine for cross-location activity recognition. Inform. Fusion 2017, 37, 1–9.

- [12] Tran, D.N.; Phan, D.D. Human Activities Recognition in Android Smartphone Using Support Vector Machine. In Proceedings of the 2016 7th International Conference on Intelligent Systems, Modelling and Simulation (ISMS), Bangkok, Thailand, 25–27 January 2016; pp. 64–68.

- [13] Ramanujam, E.; Perumal, T.; Padmavathi, S. Human Activity Recognition With Smartphone and Wearable Sensors Using Deep Learning Techniques: A Review. IEEE Sens. J. 2021, 21, 13029–13040.

- [14] Almaslukh, B.; Al Muhtadi, J.; Artoli, A.M. A robust convolutional neural network for online smartphone-based human activity recognition. J. Intell. Fuzzy Syst. 2018, 35, 1609–1620.

- [15] Ignatov, A. Real-time human activity recognition from accelerometer data using Convolutional Neural Networks. Appl. Soft Comput. 2018, 62, 915–922.

- [16] Panwar, M.; Dyuthi, S.R.; Chandra, P.K.; Biswas, D.; Acharyya, A.; Maharatna, K.; Gautam, A.; Naik, G.R. CNN based approach for activity recognition using a wrist-worn accelerometer. Annu. Int. Conf. IEEE Eng. Med. Biol. Soc. 2017, 2017, 2438–2441.

- [17] Huang, J.; Lin, S.; Wang, N.; Dai, G.; Xie, Y.; Zhou, J. TSE-CNN: A Two-Stage End-to-End CNN for Human Activity Recognition. IEEE J. Biomed. Health Inform. 2020, 24, 292–299.

- [18] Jiang, W.; Yin, Z. Human Activity Recognition Using Wearable Sensors by Deep Convolutional Neural Networks. In Proceedings of the MM ’15: ACM Multimedia Conference, Brisbane, Australia, 26–30 October 2015; pp. 1307–1310.

- [19] Qi, W.; Su, H.; Yang, C.; Ferrigno, G.; De Momi, E.; Aliverti, A. A Fast and Robust Deep Convolutional Neural Networks for Complex Human Activity Recognition Using Smartphone. Sensors 2019, 19, 3731.

- [20] Su, T.; Sun, H.; Ma, C.; Jiang, L.; Xu, T. HDL: Hierarchical Deep Learning Model based Human Activity Recognition using Smartphone Sensors. In Proceedings of the 2019 International Joint Conference on Neural Networks (IJCNN), Budapest, Hungary, 14–19 July 2019.

- [21] Dua, N.; Singh, S.N.; Semwal, V.B. Multi-input CNN-GRU based human activity recognition using wearable sensors. Computing 2021, 103, 1461–1478.

- [22] Ullah, M.; Ullah, H.; Khan, S.D.; Cheikh, F.A. Stacked LSTM Network for Human Activity Recognition Using Smartphone Data. In Proceedings of the 2019 8th European Workshop on Visual Information Processing (EUVIP), Roma, Italy, 28–31 October 2019.

- [23] Zhao, Y.; Yang, R.; Chevalier, G.; Xu, X.; Zhang, Z. Deep Residual Bidir-LSTM for Human Activity Recognition Using Wearable Sensors. Math. Probl. Eng. 2018, 2018, 1–13.

- [24] Alawneh, L.; Mohsen, B.; Al-Zinati, M.; Shatnawi, A.; Al-Ayyoub, M. A Comparison of Unidirectional and Bidirectional LSTM Networks for Human Activity Recognition. In Proceedings of the 2020 IEEE International Conference on Pervasive Computing and Communications Workshops (PerCom Workshops), Austin, TX, USA, 23–27 March 2020; pp. 1–6.

- [25] Nafea, O.; Abdul, W.; Muhammad, G.; Alsulaiman, M. Sensor-Based Human Activity Recognition with Spatio-Temporal Deep Learning. Sensors 2021, 21, 2141.

- [26] Nan, Y.; Lovell, N.H.; Redmond, S.J.; Wang, K.; Delbaere, K.; van Schooten, K.S. Deep Learning for Activity Recognition in Older People Using a Pocket-Worn Smartphone. Sensors 2020, 20, 7195.

- [27] Mekruksavanich, S.; Jitpattanakul, A. Deep Convolutional Neural Network with RNNs for Complex Activity Recognition Using Wrist-Worn Wearable Sensor Data. Electronics 2021, 10, 1685.

- [28] Abdel-Basset, M.; Hawash, H.; Chakrabortty, R.K.; Ryan, M.; Elhoseny, M.; Song, H. ST-DeepHAR: Deep Learning Model for Human Activity Recognition in IoHT Applications. IEEE Internet Things J. 2021, 8, 4969–4979.

- [29] Mahmud, S.; Tonmoy, M.T.H.; Bhaumik, K.K.; Rahman, A.K.M.M.; Amin, M.A.; Shoyaib, M.; Khan, M.A.H.; Ali, A.A. Human Activity Recognition from Wearable Sensor Data Using Self-Attention. arXiv 2020, arXiv:2003.09018.

- [30] He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- [31] Bengio, Y.; Simard, P.; Frasconi, P. Learning long-term dependencies with gradient descent is difficult. IEEE Trans. Neural Netw. 1994, 5, 157–166.

- [32] Mekruksavanich, S.; Jitpattanakul, A. LSTM Networks Using Smartphone Data for Sensor-Based Human Activity Recognition in Smart Homes. Sensors 2021, 21, 1636.

- [33] Radman, A.; Suandi, S.A. BiLSTM regression model for face sketch synthesis using sequential patterns. Neural Comput. Appl. 2021, 33, 12689–12702.

- [34] Reiss, A.; Stricker, D. Introducing a New Benchmarked Dataset for Activity Monitoring. In Proceedings of the 2012 16th International Symposium on Wearable Computers, Newcastle, UK, 18–22 June 2012; pp. 108–109.

- [35] Zhao, C.; Huang, X.; Li, Y.; Yousaf Iqbal, M. A Double-Channel Hybrid Deep Neural Network Based on CNN and BiLSTM for Remaining Useful Life Prediction. Sensors 2020, 20, 7109.

- [36] Yang, D.; Huang, J.; Tu, X.; Ding, G.; Shen, T.; Xiao, X. A Wearable Activity Recognition Device Using Air-Pressure and IMU Sensors. IEEE Access 2019, 7, 6611–6621.

- [37] Singh, S.P.; Sharma, M.K.; Lay-Ekuakille, A.; Gangwar, D.; Gupta, S. Deep ConvLSTM With Self-Attention for Human Activity Decoding Using Wearable Sensors. IEEE Sens. J. 2021, 21, 8575–8582.

- [38] Shi, L.; Xu, H.; Ji, W.; Zhang, B.; Sun, X.; Li, J. Real-Time Human Activity Recognition System Based on Capsule and LoRa. IEEE Sens. J. 2020, 21, 667–677.

- [39] Gao, W.; Zhang, L.; Teng, Q.; He, J.; Wu, H. DanHAR: Dual Attention Network for multimodal human activity recognition using wearable sensors. Appl. Soft Comput. 2021, 111, 107728.

- [40] Xia, K.; Huang, J.; Wang, H. LSTM-CNN Architecture for Human Activity Recognition. IEEE Access 2020, 8, 56855–56866.

- [41] Wan, S.; Qi, L.; Xu, X.; Tong, C.; Gu, Z. Deep Learning Models for Real-time Human Activity Recognition with Smartphones. Mobile Netw. Appl. 2020, 25, 743–755.

| Activity | Sitting | Standing | Walking | Running | Going Upstairs | Going Downstairs |
|---|---|---|---|---|---|---|
| Proportion | 42.4% | 12.7% | 14.4% | 9.6% | 10.9% | 10% |

| Hyperparameter | Value |
|---|---|
| Loss function | Cross entropy |
| Optimizer | Adam |
| Batch size | 64 |
| Learning rate | 0.0003 (our dataset); 0.0006 (WISDM); 0.00003 (PAMAP2) |
| Training epochs | 80 |
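
The training settings in the table fit naturally in a small configuration object. The sketch below also spells out the two named components, softmax cross-entropy and a single Adam update, in plain Python. `TrainConfig`, `config_for`, and the dataset keys are hypothetical names; treating the value 80 as the number of training epochs is an assumption, and the Adam constants (beta1 = 0.9, beta2 = 0.999, eps = 1e-8) are the defaults from the original Adam paper, assumed here because the table does not list them.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class TrainConfig:
    """Hypothetical container for the training settings listed in the table."""
    loss: str = "cross_entropy"
    optimizer: str = "adam"
    batch_size: int = 64
    epochs: int = 80  # assumed to be the number of training epochs
    learning_rate: float = 3e-4


# Per-dataset learning rates taken from the table.
LEARNING_RATES = {"ours": 3e-4, "wisdm": 6e-4, "pamap2": 3e-5}


def config_for(dataset: str) -> TrainConfig:
    return TrainConfig(learning_rate=LEARNING_RATES[dataset])


def cross_entropy(logits, target):
    """Softmax cross-entropy of one sample, using log-sum-exp for numerical stability."""
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[target]


def adam_step(param, grad, state, lr, beta1=0.9, beta2=0.999, eps=1e-8):
    """One Adam update of a scalar parameter; state is (m, v, t)."""
    m, v, t = state
    t += 1
    m = beta1 * m + (1 - beta1) * grad          # first-moment (mean) estimate
    v = beta2 * v + (1 - beta2) * grad * grad   # second-moment estimate
    m_hat = m / (1 - beta1 ** t)                # bias corrections for zero init
    v_hat = v / (1 - beta2 ** t)
    return param - lr * m_hat / (math.sqrt(v_hat) + eps), (m, v, t)


if __name__ == "__main__":
    cfg = config_for("pamap2")
    print(cfg)
    print(cross_entropy([2.0, 0.5, -1.0], target=0))
```

On the first step Adam's bias-corrected update is approximately lr × sign(grad), so with the learning rate used for our dataset a parameter moves by roughly 0.0003 regardless of the gradient's magnitude.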

| Activity | HA1 | HA2 | HA3 | HA4 | HA5 | HA6 | RCL | F1S |
|---|---|---|---|---|---|---|---|---|
| HA1 | 65 | 1 | 2 | 5 | 1 | 1 | 0.87 | 0.92 |
| HA2 | 0 | 112 | 0 | 0 | 0 | 0 | 1.00 | 0.99 |
| HA3 | 0 | 1 | 97 | 1 | 0 | 0 | 0.98 | 0.95 |
| HA4 | 0 | 0 | 3 | 328 | 0 | 0 | 0.99 | 0.99 |
| HA5 | 1 | 0 | 3 | 0 | 77 | 4 | 0.91 | 0.94 |
| HA6 | 1 | 0 | 1 | 0 | 1 | 75 | 0.96 | 0.95 |
| PRC | 0.97 | 0.98 | 0.92 | 0.98 | 0.97 | 0.94 | | |
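
The PRC, RCL, and F1S entries can be recomputed directly from the counts. The sketch below assumes rows are true classes and columns are predicted classes, which is consistent with RCL being computed along rows; `per_class_metrics` and `accuracy` are hypothetical helper names.

```python
# Rows = true class, columns = predicted class (HA1..HA6), copied from the table above.
CONFUSION = [
    [65, 1, 2, 5, 1, 1],
    [0, 112, 0, 0, 0, 0],
    [0, 1, 97, 1, 0, 0],
    [0, 0, 3, 328, 0, 0],
    [1, 0, 3, 0, 77, 4],
    [1, 0, 1, 0, 1, 75],
]


def per_class_metrics(cm):
    """Return (precision, recall, f1) for each class of a square confusion matrix."""
    n = len(cm)
    results = []
    for k in range(n):
        tp = cm[k][k]
        row_total = sum(cm[k])                       # samples whose true class is k
        col_total = sum(cm[i][k] for i in range(n))  # samples predicted as class k
        precision = tp / col_total if col_total else 0.0
        recall = tp / row_total if row_total else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        results.append((precision, recall, f1))
    return results


def accuracy(cm):
    """Fraction of samples on the diagonal."""
    return sum(cm[k][k] for k in range(len(cm))) / sum(map(sum, cm))


if __name__ == "__main__":
    for k, (p, r, f) in enumerate(per_class_metrics(CONFUSION), start=1):
        print(f"HA{k}: PRC={p:.2f} RCL={r:.2f} F1S={f:.2f}")
    print(f"accuracy={accuracy(CONFUSION):.4f}")
```

Running it reproduces the table's PRC, RCL, and F1S values to two decimal places, which is a quick way to check a transcribed confusion matrix.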

| Activity | HA1 | HA2 | HA3 | HA4 | HA5 | HA6 | RCL | F1S |
|---|---|---|---|---|---|---|---|---|
| HA1 | 455 | 3 | 0 | 1 | 29 | 11 | 0.91 | 0.90 |
| HA2 | 10 | 1919 | 0 | 0 | 5 | 7 | 0.98 | 0.99 |
| HA3 | 0 | 0 | 68 | 0 | 1 | 0 | 0.98 | 0.99 |
| HA4 | 0 | 0 | 0 | 53 | 0 | 0 | 1.00 | 0.99 |
| HA5 | 36 | 12 | 0 | 0 | 469 | 10 | 0.88 | 0.90 |
| HA6 | 5 | 0 | 0 | 0 | 7 | 2049 | 0.99 | 0.99 |
| PRC | 0.89 | 0.99 | 1.00 | 0.98 | 0.91 | 0.98 | | |
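
The comparison table reports an Fw score. Assuming Fw is the support-weighted average of the per-class F1 scores (a common convention in HAR work, not stated explicitly here), it can be computed from this second confusion matrix, which appears to hold the WISDM results. The macro average is included for contrast because it weights the small classes HA3 and HA4 as heavily as the large ones. The helper names are hypothetical.

```python
# Rows = true class, columns = predicted class; assumed to be the WISDM test results.
WISDM_CM = [
    [455, 3, 0, 1, 29, 11],
    [10, 1919, 0, 0, 5, 7],
    [0, 0, 68, 0, 1, 0],
    [0, 0, 0, 53, 0, 0],
    [36, 12, 0, 0, 469, 10],
    [5, 0, 0, 0, 7, 2049],
]


def f1_scores(cm):
    """Per-class F1 = 2TP / (support + predicted count), i.e. the harmonic mean of PRC and RCL."""
    n = len(cm)
    scores = []
    for k in range(n):
        tp = cm[k][k]
        support = sum(cm[k])
        predicted = sum(cm[i][k] for i in range(n))
        scores.append(2 * tp / (support + predicted) if support + predicted else 0.0)
    return scores


def weighted_f1(cm):
    """Average of per-class F1 weighted by each class's number of true samples."""
    supports = [sum(row) for row in cm]
    return sum(s * f for s, f in zip(supports, f1_scores(cm))) / sum(supports)


def macro_f1(cm):
    """Unweighted mean of per-class F1."""
    scores = f1_scores(cm)
    return sum(scores) / len(scores)


if __name__ == "__main__":
    print(f"weighted F1 = {weighted_f1(WISDM_CM):.4f}")
    print(f"macro F1    = {macro_f1(WISDM_CM):.4f}")
```

For this matrix the weighted F1 comes out at about 0.973, close to the 97.31% Fw listed for the model on WISDM, while the macro F1 is lower because the weaker classes HA1 and HA5 count as much as the dominant ones.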

| Activity | HA1 | HA2 | HA3 | HA4 | HA5 | HA6 | HA7 | HA8 | HA9 | HA10 | HA11 | HA12 | RCL | F1S |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| HA1 | 493 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1.00 | 0.96 |
| HA2 | 46 | 504 | 10 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0.90 | 0.95 |
| HA3 | 0 | 0 | 459 | 2 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1.00 | 0.99 |
| HA4 | 0 | 0 | 0 | 667 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1.00 | 0.99 |
| HA5 | 0 | 0 | 0 | 6 | 483 | 24 | 0 | 0 | 0 | 0 | 0 | 0 | 0.94 | 0.97 |
| HA6 | 0 | 1 | 0 | 1 | 3 | 490 | 17 | 0 | 0 | 0 | 0 | 0 | 0.96 | 0.96 |
| HA7 | 0 | 0 | 0 | 0 | 0 | 0 | 547 | 0 | 0 | 0 | 0 | 0 | 1.00 | 0.98 |
| HA8 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 295 | 2 | 0 | 0 | 0 | 0.99 | 0.98 |
| HA9 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 246 | 11 | 0 | 0 | 0.93 | 0.96 |
| HA10 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 500 | 9 | 0 | 0.98 | 0.96 |
| HA11 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 19 | 669 | 0 | 0.97 | 0.98 |
| HA12 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 160 | 1.00 | 1.00 |
| PRC | 0.91 | 1.00 | 0.98 | 0.99 | 0.99 | 0.95 | 0.97 | 0.97 | 0.99 | 0.94 | 0.99 | 1.00 | | |

| Dataset | Method | Accuracy | Fw (weighted F1) | Parameters |
|---|---|---|---|---|
| WISDM | CNN [15] | 93.32% | - | - |
| | TSE-CNN [17] | 95.7% | 94.01% | 9,223 |
| | SC-CNN [6] | 97.08% | - | 1,176,972 |
| | CNN-GRU [21] | 97.21% | 97.22% | - |
| | LSTM-CNN [40] | 95.01% | 95.85% | 62,598 |
| | Our Model | 97.32% | 97.31% | 71,462 |
| PAMAP2 | CNN-GRU [21] | 95.27% | 95.24% | - |
| | CNN [41] | 91% | 91.16% | - |
| | Self-Attention [29] | - | 96% | 428,072 |
| | CNN-Attention [39] | 93.16% | - | 3,510,000 |
| | Our Model | 97.15% | 97.35% | 185,376 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Li, Y.; Wang, L. Human Activity Recognition Based on Residual Network and BiLSTM. Sensors 2022, 22, 635. https://doi.org/10.3390/s22020635
