Deep Learning Methods for Speed Estimation of Bipedal Motion from Wearable IMU Sensors

Abstract

1. Introduction

- We recorded IMU data from eight human subjects during walking and running, together with a reference speed measured by a monowheel, and made these data publicly available. We also provide all the code needed to run the proposed methods, to make replication of our results as convenient as possible.

- We test existing deep learning architectures on the task of predicting motion speed from IMU data and show the benefits of approaches based on an auto-encoder topology. Moreover, we propose a novel decoder architecture, motivated by the nature of the IMU signal, that achieves the best results on our datasets.

- We provide sensitivity studies of the methods with respect to: (i) the subjects (via leave-one-out cross-validation over subjects), (ii) the number of IMU sensors on the body and their location, and (iii) the availability of additional knowledge such as leg length. We observed that these factors matter more than the architecture of the neural network.
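Leave-one-out cross-validation over subjects holds out all recordings of one subject for testing and trains on the remaining subjects, so with eight subjects there are eight folds. A minimal sketch of generating those folds, assuming each recording (or window) carries a subject label; the function name and the labels are hypothetical. scikit-learn's `LeaveOneGroupOut` provides equivalent splits.

```python
import numpy as np


def leave_one_subject_out(subjects):
    """Yield (held_out_subject, train_indices, test_indices), one fold per subject.

    `subjects` holds one subject label per recording/window (hypothetical labels).
    """
    subjects = np.asarray(subjects)
    for held_out in np.unique(subjects):
        # Every sample of the held-out subject goes to the test set ...
        test_idx = np.flatnonzero(subjects == held_out)
        # ... and all samples of the other subjects form the training set.
        train_idx = np.flatnonzero(subjects != held_out)
        yield held_out, train_idx, test_idx


for subject, train_idx, test_idx in leave_one_subject_out(["s1", "s1", "s2", "s3", "s2"]):
    print(subject, train_idx, test_idx)
```

Splitting by subject rather than by random windows keeps strides of the test subject out of training, which is what makes the inter-subject sensitivity measurable.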

2. Related Work

2.1. Feature-Based Approaches

2.2. Neural Networks

2.3. Available Datasets

3. Methods

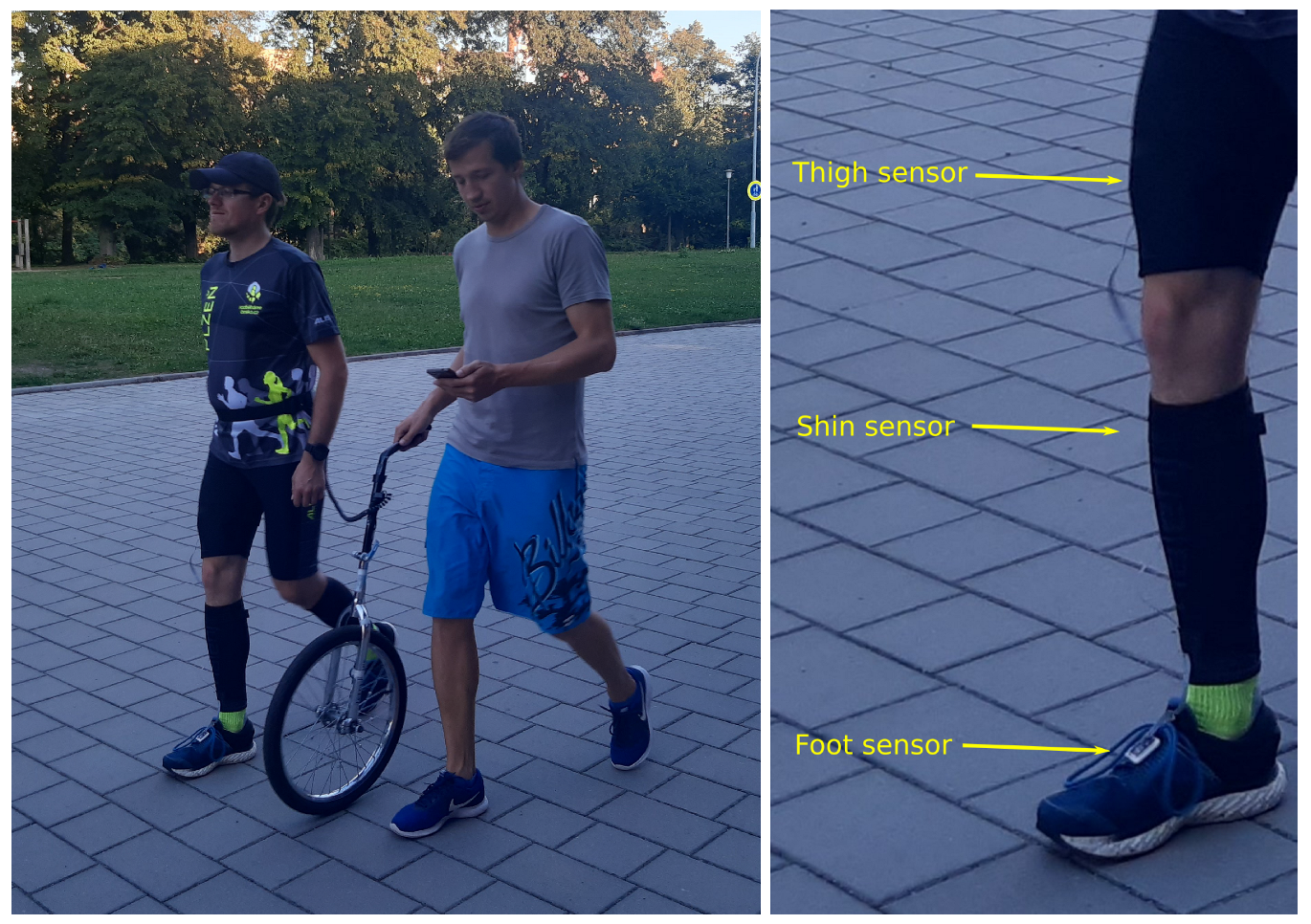

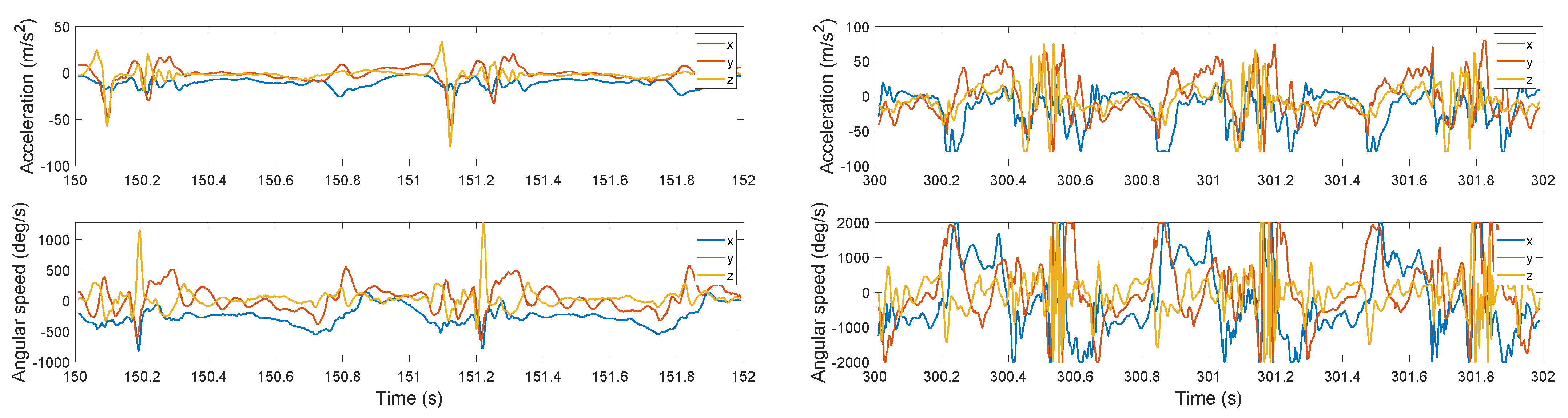

3.1. Data Acquisition: Sensors on a Single Leg

Collected Datasets

- Accelerometer: columns 1–9, in three locations: 1–3 thigh, 4–6 shin, 7–9 foot (converted to m/s² by multiplying by 0.0024).

- Gyroscope: columns 10–18, split into 10–12 thigh, 13–15 shin, 16–18 foot (converted to deg/s by multiplying by 0.061).

- Speed: column 19 (km/h).

- Time: column 20 (s).
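The column layout above can be sketched in Python as follows. The file format is not stated here, so the sketch assumes a recording has already been loaded into a NumPy array of shape (samples, 20), for example with `np.loadtxt`; the function name `split_columns` and the dictionary keys are hypothetical. It maps the 1-based column ranges to 0-based slices and applies the two scale factors.

```python
import numpy as np

ACC_SCALE = 0.0024  # raw accelerometer value -> m/s^2 (factor from the column description)
GYRO_SCALE = 0.061  # raw gyroscope value -> deg/s (factor from the column description)
LOCATIONS = ("thigh", "shin", "foot")


def split_columns(data):
    """Split a (samples, 20) array of raw values into per-location signals."""
    data = np.asarray(data, dtype=float)
    if data.ndim != 2 or data.shape[1] != 20:
        raise ValueError("expected a 2-D array with 20 columns")
    out = {}
    for i, loc in enumerate(LOCATIONS):
        # Accelerometer columns 1-3, 4-6, 7-9 (1-based) -> slices 0:3, 3:6, 6:9.
        out[f"acc_{loc}"] = data[:, 3 * i:3 * i + 3] * ACC_SCALE
        # Gyroscope columns 10-12, 13-15, 16-18 (1-based) -> slices 9:12, 12:15, 15:18.
        out[f"gyro_{loc}"] = data[:, 9 + 3 * i:12 + 3 * i] * GYRO_SCALE
    out["speed_kmh"] = data[:, 18]  # column 19, already in km/h
    out["time_s"] = data[:, 19]     # column 20, already in seconds
    return out
```

Only the inertial channels are scaled; the speed and time columns are described as already being in physical units.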

3.2. Problem Formulation

3.3. Deep Learning Methods

Feed-Forward Networks

3.4. Semi-Supervised Variational Autoencoders

- Conventional Decoder: SVAE-LSTM-CNN

- Proposed Decoder: SVAE-Sine

4. Experiments

4.1. Experimental Protocol

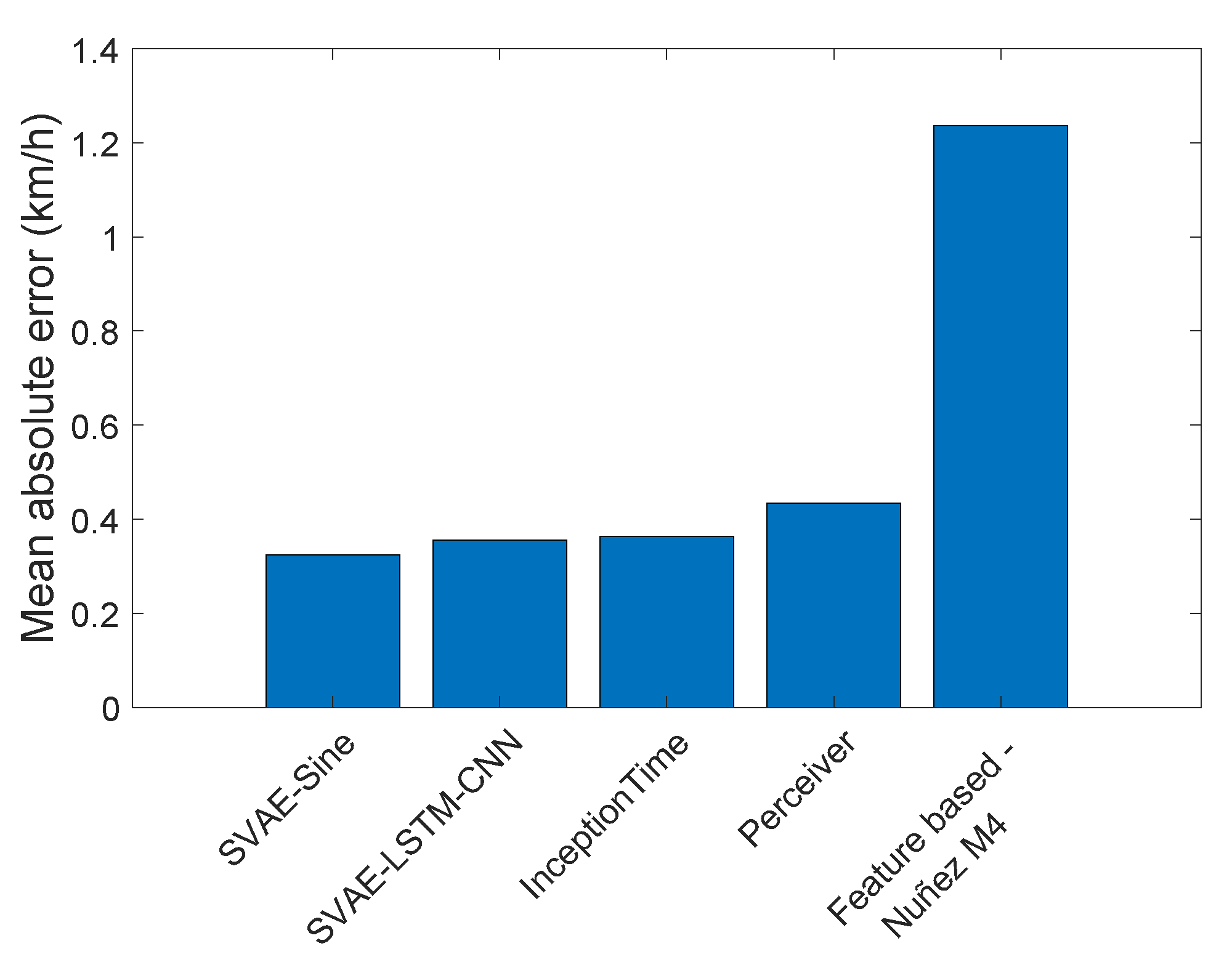

4.2. Conventional Feature-Based Methods

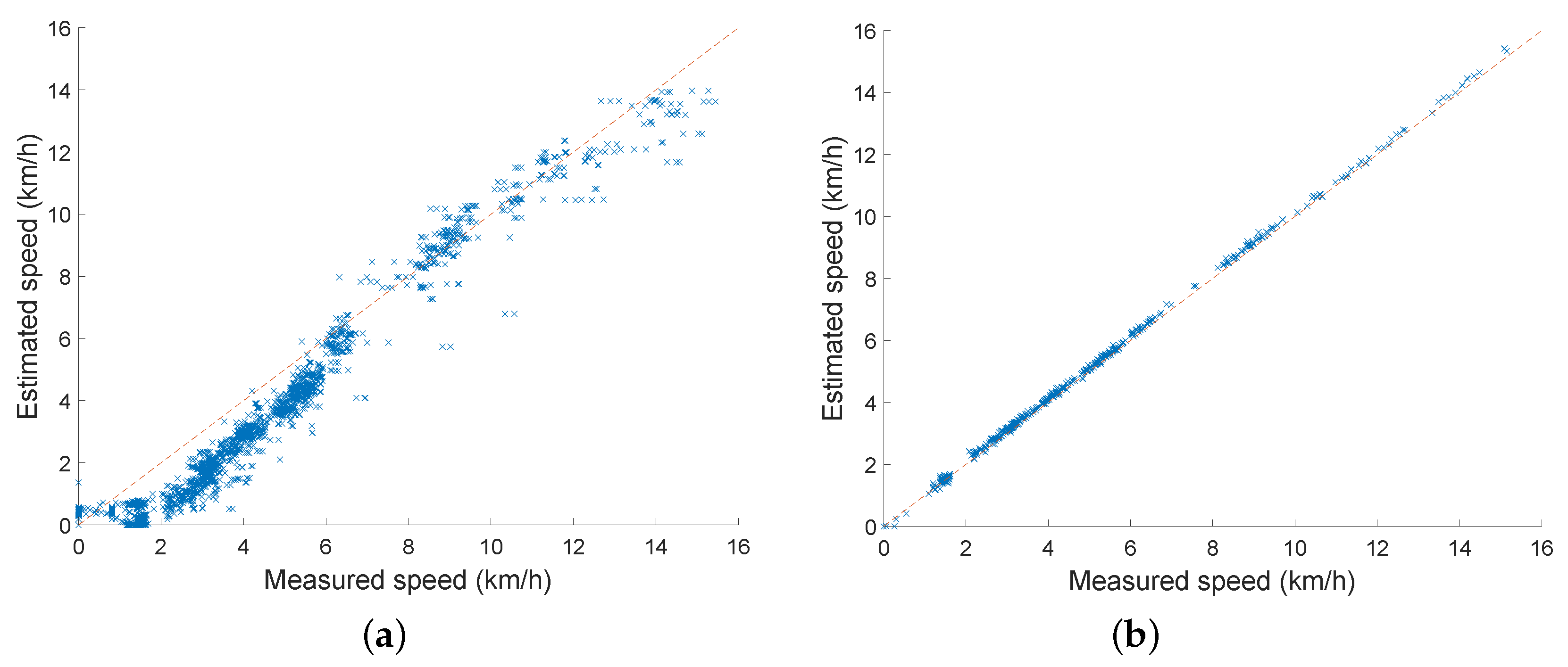

4.3. Deep Learning Methods

4.4. Method Comparison for a Single Foot Sensor

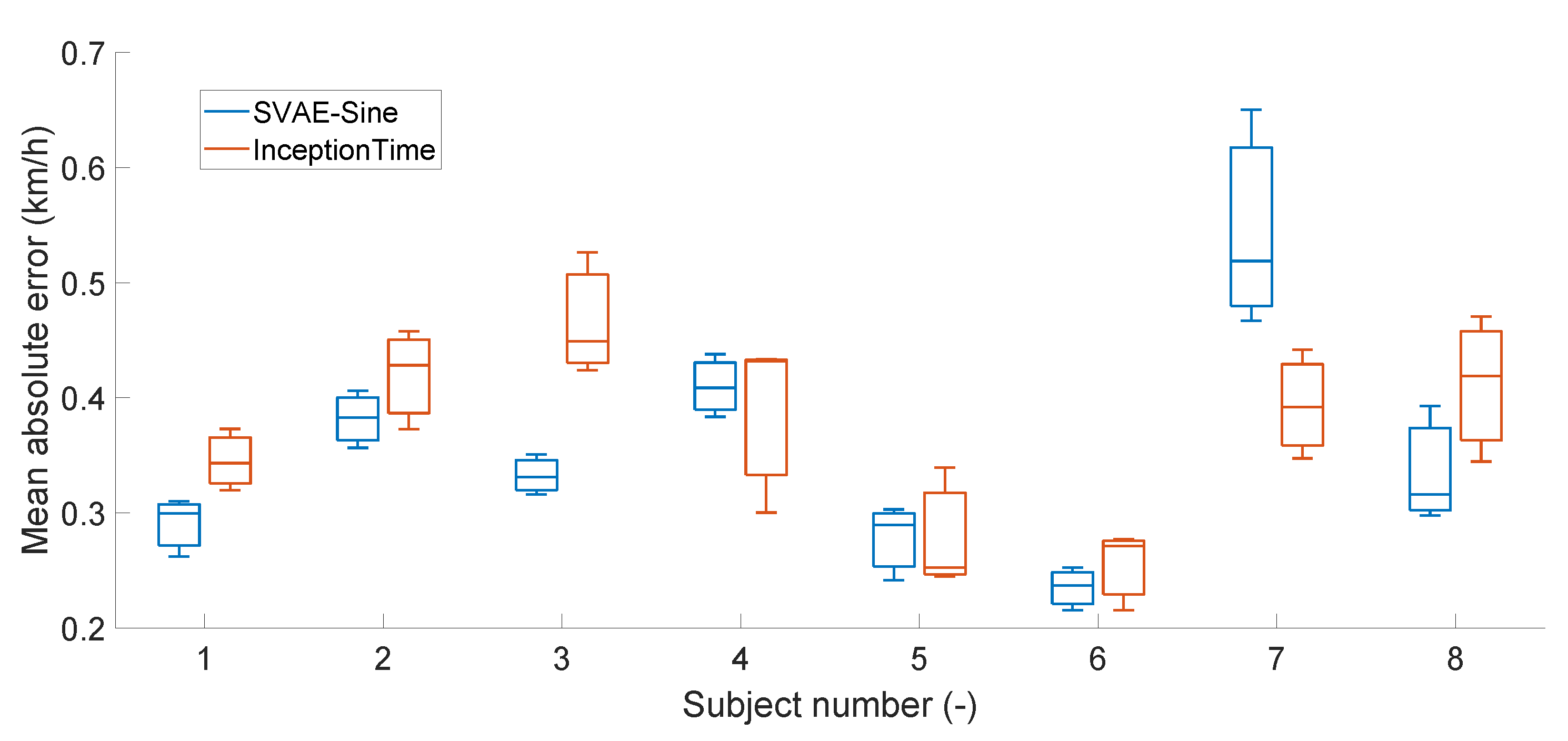

4.5. Inter-Subject Variability

4.6. Sensitivity to the Sensor Location

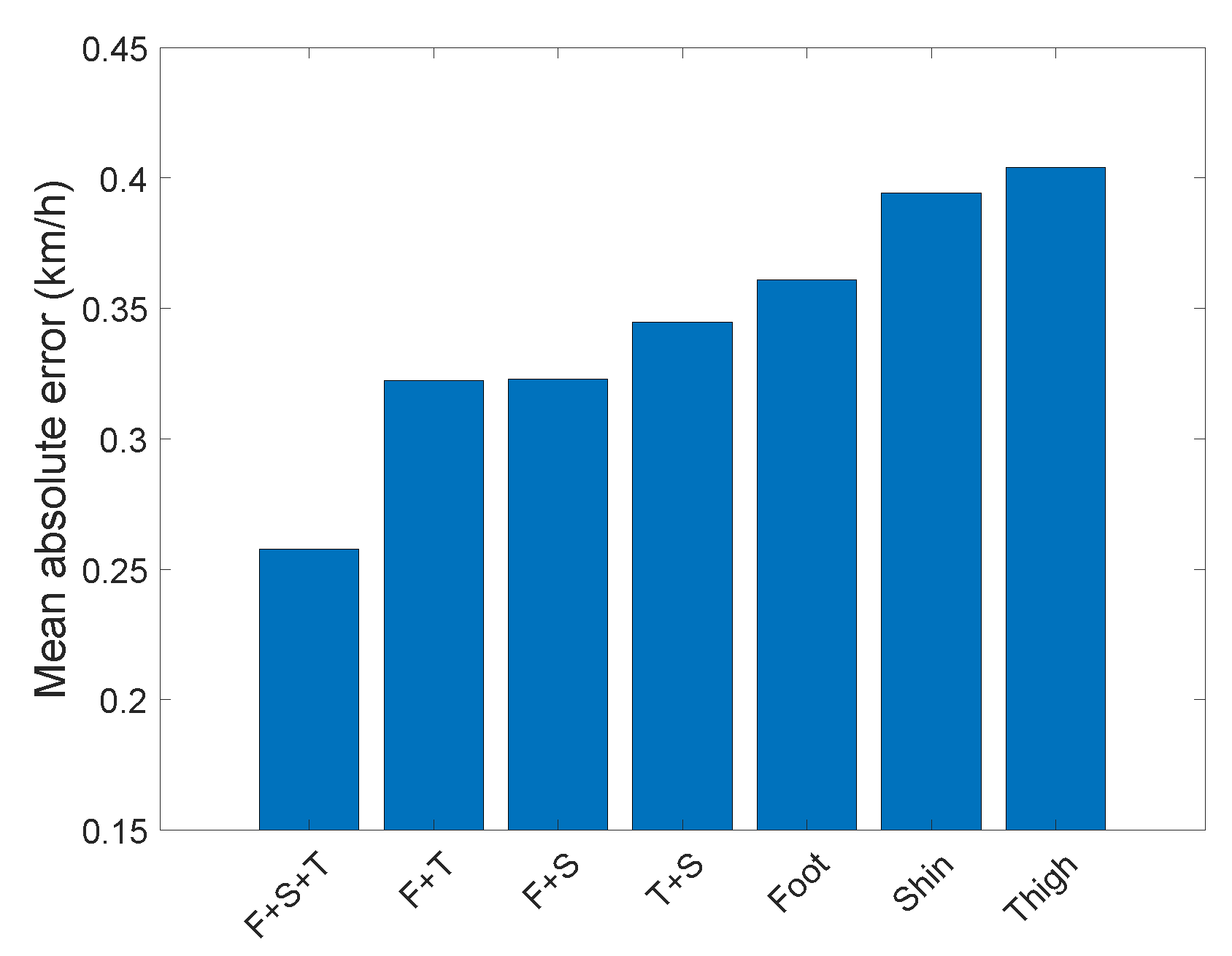

4.7. Additional Biometric Information

5. Discussion and Future Work

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A. Details of Hyperparameter Selection

| Conv_Channels | Hidden_Size | Hidden_Layer_Depth | Latent_Length | Error km/h | ||

|---|---|---|---|---|---|---|

| 8 | 128 | 1 | 64 | 0.1 | 0.3552 | |

| 8 | 128 | 1 | 128 | 0.01 | 0.3814 | |

| 8 | 128 | 1 | 64 | 0.01 | 0.0001 | 0.3844 |

| Conv_Channels | Hidden_Size | Hidden_Layer_Depth | Latent_Length | Predictor_Weight | Sin_Depth | Error km/h |
|---|---|---|---|---|---|---|
| 16 | 256 | 1 | 128 | 0.01 | 100 | 0.3238 |
| 8 | 256 | 1 | 128 | 0.01 | 50 | 0.3640 |
| 8 | 256 | 1 | 64 | 0.1 | 10 | 0.3693 |

| n_Filters | Kernel_Sizes | Bottleneck_Channels | Error km/h |

|---|---|---|---|

| 16 | [21, 41, 81] | 8 | 0.3630 |

| 16 | [11, 21, 41] | 8 | 0.3662 |

| 8 | [21, 41, 81] | 4 | 0.3799 |

| Num_Freq_Bands | Max_Freq | Depth | Num_Latents | Latent_Dim | Cross_Dim | Cross_Dim_Head | Latent_Dim_Head | Error km/h |
|---|---|---|---|---|---|---|---|---|
| 6 | 10.0 | 6 | 256 | 128 | 256 | 32 | 64 | 0.4339 |
| 6 | 15.0 | 6 | 256 | 128 | 512 | 32 | 16 | 0.4691 |
| 12 | 15.0 | 12 | 512 | 256 | 128 | 64 | 64 | 0.4956 |

References

1. McGinnis, R.S.; Mahadevan, N.; Moon, Y.; Seagers, K.; Sheth, N.; Wright, J.A., Jr.; DiCristofaro, S.; Silva, I.; Jortberg, E.; Ceruolo, M.; et al. A machine learning approach for gait speed estimation using skin-mounted wearable sensors: From healthy controls to individuals with multiple sclerosis. PLoS ONE 2017, 12, e0178366.

2. Schimpl, M.; Lederer, C.; Daumer, M. Development and validation of a new method to measure walking speed in free-living environments using the actibelt® platform. PLoS ONE 2011, 6, e23080.

3. Soltani, A.; Dejnabadi, H.; Savary, M.; Aminian, K. Real-world gait speed estimation using wrist sensor: A personalized approach. IEEE J. Biomed. Health Inform. 2019, 24, 658–668.

4. Ojeda, L.; Borenstein, J. Non-GPS navigation for security personnel and first responders. J. Navig. 2007, 60, 391.

5. Yang, S.; Li, Q. Inertial sensor-based methods in walking speed estimation: A systematic review. Sensors 2012, 12, 6102–6116.

6. Zhang, R.; Yang, H.; Höflinger, F.; Reindl, L.M. Adaptive zero velocity update based on velocity classification for pedestrian tracking. IEEE Sens. J. 2017, 17, 2137–2145.

7. Wang, Y.; Shkel, A.M. Adaptive threshold for zero-velocity detector in ZUPT-aided pedestrian inertial navigation. IEEE Sens. Lett. 2019, 3, 1–4.

8. Wagstaff, B.; Peretroukhin, V.; Kelly, J. Improving foot-mounted inertial navigation through real-time motion classification. In Proceedings of the 2017 International Conference on Indoor Positioning and Indoor Navigation (IPIN), Sapporo, Japan, 18–21 September 2017; pp. 1–8.

9. Bai, N.; Tian, Y.; Liu, Y.; Yuan, Z.; Xiao, Z.; Zhou, J. A high-precision and low-cost IMU-based indoor pedestrian positioning technique. IEEE Sens. J. 2020, 20, 6716–6726.

10. Goodfellow, I.; Bengio, Y.; Courville, A. Deep Learning; MIT Press: Cambridge, MA, USA, 2016.

11. Aminian, K.; Robert, P.; Jequier, E.; Schutz, Y. Estimation of speed and incline of walking using neural network. IEEE Trans. Instrum. Meas. 1995, 44, 743–746.

12. Sikandar, T.; Rabbi, M.F.; Ghazali, K.H.; Altwijri, O.; Alqahtani, M.; Almijalli, M.; Altayyar, S.; Ahamed, N.U. Using a Deep Learning Method and Data from Two-Dimensional (2D) Marker-Less Video-Based Images for Walking Speed Classification. Sensors 2021, 21, 2836.

13. Kawaguchi, N.; Nozaki, J.; Yoshida, T.; Hiroi, K.; Yonezawa, T.; Kaji, K. End-to-end walking speed estimation method for smartphone PDR using DualCNN-LSTM. In Proceedings of the International Conference on Indoor Positioning and Indoor Navigation (IPIN), Pisa, Italy, 30 September–3 October 2019; pp. 463–470.

14. Yan, H.; Herath, S.; Furukawa, Y. RoNIN: Robust neural inertial navigation in the wild: Benchmark, evaluations, and new methods. arXiv 2019, arXiv:1905.12853.

15. Feigl, T.; Kram, S.; Woller, P.; Siddiqui, R.H.; Philippsen, M.; Mutschler, C. RNN-aided human velocity estimation from a single IMU. Sensors 2020, 20, 3656.

16. Qian, Y.; Yang, K.; Zhu, Y.; Wang, W.; Wan, C. Combining deep learning and model-based method using Bayesian Inference for walking speed estimation. Biomed. Signal Process. Control 2020, 62, 102117.

17. Li, Y.; Wang, L. Human Activity Recognition Based on Residual Network and BiLSTM. Sensors 2022, 22, 635.

18. Fawaz, H.I.; Lucas, B.; Forestier, G.; Pelletier, C.; Schmidt, D.F.; Weber, J.; Webb, G.I.; Idoumghar, L.; Muller, P.A.; Petitjean, F. InceptionTime: Finding AlexNet for time series classification. Data Min. Knowl. Discov. 2020, 34, 1936–1962.

19. Jaegle, A.; Gimeno, F.; Brock, A.; Zisserman, A.; Vinyals, O.; Carreira, J. Perceiver: General Perception with Iterative Attention. arXiv 2021, arXiv:2103.03206.

20. Donahue, J.; Anne Hendricks, L.; Guadarrama, S.; Rohrbach, M.; Venugopalan, S.; Saenko, K.; Darrell, T. Long-term recurrent convolutional networks for visual recognition and description. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 2625–2634.

21. Yue-Hei Ng, J.; Hausknecht, M.; Vijayanarasimhan, S.; Vinyals, O.; Monga, R.; Toderici, G. Beyond short snippets: Deep networks for video classification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 4694–4702.

22. Lotter, W.; Kreiman, G.; Cox, D. Deep predictive coding networks for video prediction and unsupervised learning. arXiv 2016, arXiv:1605.08104.

23. Alam, M.N.; Munia, T.T.K.; Fazel-Rezai, R. Gait speed estimation using Kalman Filtering on inertial measurement unit data. In Proceedings of the 2017 39th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Jeju Island, Korea, 11–15 July 2017; pp. 2406–2409.

24. Nuñez, E.H.; Parhar, S.; Iwata, I.; Setoguchi, S.; Chen, H.; Daneault, J.F. Comparing different methods of gait speed estimation using wearable sensors in individuals with varying levels of mobility impairments. In Proceedings of the 2020 42nd Annual International Conference of the IEEE Engineering in Medicine & Biology Society (EMBC), Montreal, QC, Canada, 20–24 July 2020; pp. 3792–3798.

25. Arumukhom Revi, D.; De Rossi, S.M.M.; Walsh, C.J.; Awad, L.N. Estimation of Walking Speed and Its Spatiotemporal Determinants Using a Single Inertial Sensor Worn on the Thigh: From Healthy to Hemiparetic Walking. Sensors 2021, 21, 6976.

26. Leng, L.; Zhang, J.; Khan, M.K.; Chen, X.; Alghathbar, K. Dynamic weighted discrimination power analysis: A novel approach for face and palmprint recognition in DCT domain. Int. J. Phys. Sci. 2010, 5, 2543–2554.

27. Huang, C.; Zhang, F.; Xu, Z.; Wei, J. The Diverse Gait Dataset: Gait segmentation using inertial sensors for pedestrian localization with different genders, heights and walking speeds. Sensors 2022, 22, 1678.

28. Barth, J.; Oberndorfer, C.; Pasluosta, C.; Schülein, S.; Gassner, H.; Reinfelder, S.; Kugler, P.; Schuldhaus, D.; Winkler, J.; Klucken, J.; et al. Stride segmentation during free walk movements using multi-dimensional subsequence dynamic time warping on inertial sensor data. Sensors 2015, 15, 6419–6440.

29. Rampp, A.; Barth, J.; Schülein, S.; Gaßmann, K.G.; Klucken, J.; Eskofier, B.M. Inertial sensor-based stride parameter calculation from gait sequences in geriatric patients. IEEE Trans. Biomed. Eng. 2014, 62, 1089–1097.

30. Kluge, F.; Gaßner, H.; Hannink, J.; Pasluosta, C.; Klucken, J.; Eskofier, B.M. Towards mobile gait analysis: Concurrent validity and test-retest reliability of an inertial measurement system for the assessment of spatio-temporal gait parameters. Sensors 2017, 17, 1522.

31. Murata, Y.; Kaji, K.; Hiroi, K.; Kawaguchi, N. Pedestrian dead reckoning based on human activity sensing knowledge. In Proceedings of the 2014 ACM International Joint Conference on Pervasive and Ubiquitous Computing: Adjunct Publication, Seattle, WA, USA, 13–17 September 2014; pp. 797–806.

32. Chen, C.; Zhao, P.; Lu, C.X.; Wang, W.; Markham, A.; Trigoni, N. OxIOD: The dataset for deep inertial odometry. arXiv 2018, arXiv:1809.07491.

33. Yan, H.; Shan, Q.; Furukawa, Y. RIDI: Robust IMU double integration. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 621–636.

34. Khandelwal, S.; Wickström, N. Gait event detection in real-world environment for long-term applications: Incorporating domain knowledge into time-frequency analysis. IEEE Trans. Neural Syst. Rehabil. Eng. 2016, 24, 1363–1372.

35. Kingma, D.P.; Mohamed, S.; Rezende, D.J.; Welling, M. Semi-supervised learning with deep generative models. In Advances in Neural Information Processing Systems; MIT Press: Cambridge, MA, USA, 2014; pp. 3581–3589.

36. Kingma, D.P.; Welling, M. Auto-encoding variational Bayes. arXiv 2013, arXiv:1312.6114.

37. Shi, X.; Chen, Z.; Wang, H.; Yeung, D.Y.; Wong, W.K.; Woo, W.C. Convolutional LSTM network: A machine learning approach for precipitation nowcasting. In Proceedings of the 28th International Conference on Neural Information Processing Systems, Montreal, QC, Canada, 7–12 December 2015; pp. 802–810.

38. Singh, S.P.; Sharma, M.K.; Lay-Ekuakille, A.; Gangwar, D.; Gupta, S. Deep ConvLSTM with self-attention for human activity decoding using wearable sensors. IEEE Sens. J. 2020, 21, 8575–8582.

39. Friedman, J.; Hastie, T.; Tibshirani, R. The Elements of Statistical Learning; Springer Series in Statistics; Springer: Berlin/Heidelberg, Germany, 2001; Volume 1.

40. Wang, J.; Perez, L. The effectiveness of data augmentation in image classification using deep learning. In Convolutional Neural Networks for Visual Recognition; Stanford University: Stanford, CA, USA, 2017.

41. Blecha, T.; Soukup, R.; Kaspar, P.; Hamacek, A.; Reboun, J. Smart firefighter protective suit-functional blocks and technologies. In Proceedings of the 2018 IEEE International Conference on Semiconductor Electronics (ICSE), Kuala Lumpur, Malaysia, 15–17 August 2018; p. C4.

42. Erhan, D.; Courville, A.; Bengio, Y.; Vincent, P. Why does unsupervised pre-training help deep learning? In Proceedings of the Thirteenth International Conference on Artificial Intelligence and Statistics, Sardinia, Italy, 13–15 May 2010; pp. 201–208.

| Method | Year | Public Data | Features | Neural Architecture | Run | Source Code | Placement Study | Note |
|---|---|---|---|---|---|---|---|---|
| [13] | 2019 | Yes | – | CNN-LSTM | No | No | No | Smartphone |
| [9] | 2020 | No | ZUPT | – | No | No | No | |
| [1] | 2017 | No | Custom | – | No | No | No | |
| [7] | 2019 | No | ZUPT | – | Yes | No | – | Adaptive threshold |
| [6] | 2017 | No | ZUPT | – | Yes | No | – | Missing ground truth |
| [16] | 2020 | No | ZUPT+NN | LSTM | No | No | No | |
| [24] | 2020 | No | ZUPT | – | No | Yes | – | Multiple methods |
| [23] | 2017 | No | ZUPT | – | No | No | – | Kalman |
| [8] | 2017 | No | ZUPT | – | Yes | No | – | Adaptive threshold |
| [3] | 2019 | No | Custom | – | Yes | No | No | Wrist, Personalized |
| ours | – | Yes | – | CNN, RNN, VAE | Yes | Yes | Yes | |

| Dataset | Year | Run | Sensor Location | Device | Reference | Available |

|---|---|---|---|---|---|---|

| [31] | 2014 | No | waist, shirt pocket, bag | Smartphone | Human label | Yes |

| [32] | 2018 | No | hand, pocket, bag, trolley | Smartphone | VICON | Yes |

| [34] | 2016 | Yes | ankles | VICON | Treadmill 1 | Yes |

| [14] | 2019 | No | hand, pocket, bag | Smartphone | Visual SLAM | Yes |

| [33] | 2018 | No | hand, pocket, bag, body | Smartphone | Visual SLAM | Yes |

| [28] | 2015 | No | foot | IMUs | Human label | on demand |

| [29] 2 | 2014 | No | foot | IMUs | Human label | on demand |

| [30] | 2017 | No | foot | IMUs | Optical system | Yes |

| ours | - | Yes | foot, shin, thigh | IMUs | Monowheel | Yes |

| InceptionTime Hyper-Parameter | Range | Perceiver Hyper-Parameter | Range |
|---|---|---|---|
| number of filters | [2, 4, 8, 16, 32] | Number of freq. bands | [6, 12] |
| kernel sizes | [[5, 11, 21], [11, 21, 41], [21, 41, 81]] | Maximum frequency | [3, 5, 10, 15] |
| | | Depth | [6, 12] |
| bottleneck channels | [2, 4, 8] | Number of latents | [128, 256, 512] |
| | | Dimension of latents | [64, 128, 256] |
| | | Dimension of cross layer | [512, 256, 128] |
| | | Dim. of att. head for cross layer | [64, 32, 16] |
| | | Dim. of att. head for latents | [64, 32, 16] |
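Read as a search space, the InceptionTime ranges in this table define 5 × 3 × 3 = 45 configurations. A minimal sketch of enumerating them with `itertools.product`, assuming an exhaustive grid search (the text does not state whether the grid was searched exhaustively or sampled); the dictionary keys are hypothetical names modeled on the Appendix A column headers.

```python
import itertools

# Search ranges for InceptionTime, copied from the table (key names are hypothetical).
INCEPTION_GRID = {
    "n_filters": [2, 4, 8, 16, 32],
    "kernel_sizes": [[5, 11, 21], [11, 21, 41], [21, 41, 81]],
    "bottleneck_channels": [2, 4, 8],
}


def expand_grid(grid):
    """Yield one dict per combination of the listed values (Cartesian product)."""
    keys = list(grid)
    for values in itertools.product(*(grid[k] for k in keys)):
        yield dict(zip(keys, values))


configs = list(expand_grid(INCEPTION_GRID))
print(len(configs))
```

The same expansion applied to the Perceiver column gives 2 × 4 × 2 × 3 × 3 × 3 × 3 × 3 = 3888 combinations, so an exhaustive search is far more expensive for that model.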

| Encoder Hyper-Parameter | Range | Decoder Hyper-Parameter | Range |
|---|---|---|---|
| Convolution channels | [1, 2, 4, 8, 16] | Sine: size of hidden layer | [10, 50, 100] |
| Size of hidden layer | [128, 256, 512] | LSTM-CNN: same as encoder | |
| Depth of hidden layer | [1, 2] | | |
| Length of latent z | [64, 128, 256] | | |
| Predictor weight | [0.1, 0.01, 0.001, 0.0001] | | |
| KL weight | [1, 1, 1, 1] | | |
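The predictor weight and KL weight in this table suggest a weighted training objective for the semi-supervised VAE. A minimal sketch, assuming the loss is a reconstruction term plus the Gaussian KL divergence scaled by the KL weight plus a speed-prediction term scaled by the predictor weight; the exact form (mean-squared errors, averaging over the batch) is an assumption, not taken from the text. The function names are hypothetical, and NumPy stands in for the deep learning framework.

```python
import numpy as np


def gaussian_kl(mu, logvar):
    """KL(N(mu, diag(exp(logvar))) || N(0, I)), summed over latent dims, averaged over batch."""
    per_sample = -0.5 * np.sum(1.0 + logvar - mu ** 2 - np.exp(logvar), axis=1)
    return float(np.mean(per_sample))


def svae_loss(x, x_rec, mu, logvar, v_true, v_pred, kl_weight=1.0, predictor_weight=0.01):
    """Hypothetical weighted SVAE objective: reconstruction + KL + speed prediction."""
    recon = float(np.mean((x - x_rec) ** 2))     # IMU window reconstruction error
    pred = float(np.mean((v_true - v_pred) ** 2))  # speed regression error
    return recon + kl_weight * gaussian_kl(mu, logvar) + predictor_weight * pred
```

With a KL weight fixed at 1, the predictor weight alone sets how strongly the speed labels shape the latent space relative to reconstructing the IMU signal.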

| ID | Method Features | Scale 1 | Scale 2 | Cut-Off Freq. | Error [km/h] |

|---|---|---|---|---|---|

| M2 | heel-strike to heel-strike segmentation | 1.0 | 1.2 | 0.82 | 4.9 |

| M4 | mid-stance to mid-stance segmentation | 0.6 | 5.6 | 0.98 | 1.2 |

| M5 | M4 + gravity compensation | 3.2 | −3.0 | 0.80 | 3.7 |

| M7 | mid-swing to mid-swing segmentation | −0.02 | 1.9 | 18.2 | |

| M8 | M7 + outlier elimination | −0.002 | 1.9 | 10.8 |
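The segmentation-based baselines in this table estimate speed by integrating foot acceleration between gait events. A minimal sketch of that idea in its zero-velocity-update (ZUPT) form, as it would apply to mid-stance to mid-stance segments (M4): the foot is assumed to be at rest at both segment boundaries, so any velocity left at the end of the integration is treated as linear drift and removed. This is a generic illustration, not the authors' implementation; it does not reproduce the Scale 1/Scale 2 parameters or the cut-off filter, and it assumes the forward acceleration is already gravity-compensated. The function name is hypothetical.

```python
import numpy as np


def stride_speed_zupt(acc_fwd, fs):
    """Mean forward speed (m/s) over one stride bounded by two zero-velocity instants.

    acc_fwd: forward acceleration in m/s^2, gravity already removed (assumption).
    fs: sampling rate in Hz.
    """
    acc_fwd = np.asarray(acc_fwd, dtype=float)
    if acc_fwd.size < 2:
        raise ValueError("need at least two samples")
    dt = 1.0 / fs
    # Trapezoidal integration of acceleration to velocity, starting from rest.
    v = np.concatenate(([0.0], np.cumsum(0.5 * (acc_fwd[1:] + acc_fwd[:-1]) * dt)))
    # ZUPT at the end of the stride: true velocity is zero again, so the residual
    # v[-1] is drift; remove it assuming it accumulated linearly over the stride.
    v = v - np.linspace(0.0, v[-1], v.size)
    # Mean speed = distance (trapezoidal integral of velocity) / stride duration.
    distance = np.sum(0.5 * (v[1:] + v[:-1])) * dt
    duration = (v.size - 1) * dt
    return distance / duration
```

Multiplying the result by 3.6 converts it to km/h, the unit used for the errors in this table. The linear drift model is what makes a constant accelerometer bias cancel out; non-constant errors such as imperfect gravity compensation are not removed by it.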

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Justa, J.; Šmídl, V.; Hamáček, A. Deep Learning Methods for Speed Estimation of Bipedal Motion from Wearable IMU Sensors. Sensors 2022, 22, 3865. https://doi.org/10.3390/s22103865