Abstract

Agriculture is crucial to the economic prosperity and development of India. Plant diseases can have a devastating effect on food safety and cause considerable losses in agricultural production. Identifying diseases on the plant is essential for long-term agricultural sustainability. Manually monitoring plant diseases is difficult because of time limitations and the diversity of diseases, so automatic characterization of plant diseases is widely required. Among image-processing methods, deep learning is, based on performance, better suited to this task. This work investigates plant diseases in grapevines. Four classes of grape leaves are considered: leaf blight, black rot, black measles, and stable (healthy). Several earlier machine learning proposals were designed to detect one or two diseases in grape leaves; none offers complete detection of all four classes. The images are taken from the PlantVillage dataset and used to retrain the EfficientNet B7 deep architecture by transfer learning. Following transfer learning, the extracted features are reduced using a Logistic Regression technique. Finally, the most discriminant features are classified using state-of-the-art classifiers, reaching the highest stable accuracy of 98.7% after 92 epochs. Based on the simulation findings, an appropriate classifier for this application is also suggested. The effectiveness of the proposed technique is confirmed by a fair comparison with existing procedures.

1. Introduction

The agricultural sector is a significant and relatively new application area for computer vision researchers. The primary goal of agriculture is to produce a wide range of valuable crops and plants. In farming, plant pathogens diminish the quantity and quality of the product, so they must be controlled early [1]. Recently, agricultural researchers have focused on diseases of various fruits and crops and have devised several methods for detecting and classifying them [2,3]. Grapes are a difficult fruit to grow because the plants are constantly attacked by viruses, resulting in a significant reduction in grape production [4]. It is therefore critical to control infected crops before product quality and quantity suffer. Disease diagnosis still relies mostly on human inspection, which is slow, expensive, dependent on the availability of experts, and labor-intensive. Bacterial and fungal diseases manifest primarily on the surface of leaves and fruit. Lesions, like pests, have complicated patterns that make them difficult to interpret. Bacteria, on the other hand, live as single cells with a simpler life cycle; they reproduce by dividing one cell into two, a process called binary fission. Viruses are microscopic particles that contain genes and fibers but no membrane proteins [5]. Diseases such as rust, scab, downy mildew, powdery mildew, leaf blight, black measles, and black rot can occur on grape leaves. Automated systems based on image processing have recently been developed that can efficiently identify and recognize diseases in horticulture. Researchers use image processing to determine the location, color, form, scale, and boundaries of the diseased part. Pre-processing and symptom segmentation are carried out with a variety of new techniques.

In 1970, various image processing techniques were employed to tackle the challenges of naked-eye inspection in agricultural fields. In the agricultural industry, several existing procedures segment distinct crop components such as fruits, stems, and leaves, and then extract and identify the different diseases and spots created by stress [6]. Traditional image processing approaches function well when a large number of training samples is not available. Their drawbacks in plant disease detection include the merging of background and object regions and the similarity of the shapes obtained during feature extraction. Recent deep learning approaches have effectively addressed most of these issues.

Several conventional machine learning approaches have been widely used to identify and diagnose plant lesions, but they are limited to image segmentation, feature extraction, and pattern recognition procedures [7]. Extracting features from a large dataset is impractical with traditional techniques such as manual detection and conventional machine learning. A deep learning model, on the other hand, learns data abstractions in its deep layers that are more useful than the hand-crafted features on which conventional machine learning techniques rely. One drawback of deep architectures is the inclusion of redundant feature information [8]. For classification, the Convolutional Neural Network (CNN) [9] architecture combines convolutional, ReLU, max-pooling, fully connected, and softmax layers [10]. A large amount of data is required to train a CNN model, but no prior segmentation is needed; normally, raw data is fed into the model as input. VGGNet [11], ResNet, AlexNet, Inception V3, and EfficientNet are among the most popular and advanced CNN architectures [12]. PlantVillage is a typical training dataset that contains roughly 54,000 plant leaf images in 38 classes, and models are commonly trained on it. A large dataset is not always available for training. In such cases, researchers employ Transfer Learning (TL), in which a pre-trained model is retrained on the specified dataset starting from the same parameters. Compared with traditional techniques, CNN models trained with transfer learning are preferable because of the number of features they extract. Owing to its excellent generalization ability and robustness, transfer learning with deep learning excels in many areas, including signal processing, face identification and recognition, road crack detection, and medical image analysis.
Furthermore, these techniques have achieved promising results in agriculture, benefiting smallholders and horticultural workers [13] in applications such as crop disease diagnosis [14], weed recognition, fine seed selection, pest detection, fruit counting, and land cover research, all of which build on image classification.

The novel contributions of this work are the pre-processing of digital images and the extraction of their feature planes, followed by classification according to the type of leaf disease. The pre-processing techniques focus the analysis on the diseased leaf region, which contains all of the information about the leaf illness. Color feature information is also added as an image input to determine whether it helps the classifier detect disease correctly, using Hybrid Convolutional Neural Network (Hy-CNN) transfer learning and more accurate feature reduction.

The rest of the paper is organized as follows: Section 2 discusses related work; Section 3 describes the dataset and the proposed Hy-CNN model used to classify leaf stress; Section 4 presents the experimental results in terms of performance analysis; Section 5 compares the proposed model with other models; finally, Section 6 presents the conclusions and future scope.

2. Related Work

Atila et al. [15] discuss an automatic model for detecting and classifying unhealthy areas of plants. The accuracy of the EfficientNet CNN-based state-of-the-art model was compared with that of other architectures for detecting various plant leaf diseases, and the model achieved an accuracy of 96.18%. Ji et al. [16] present a united Convolutional Neural Network for identifying diseases in grape plants. The representational power of the united model is strengthened by high-level feature fusion, allowing it to outperform competing models in grape leaf disease identification. The F-CNN and S-CNN models [17] are trained on full and segmented images, respectively, to detect and classify diseases in plant leaves; the trained CNN model achieves higher accuracy on segmented images than on full images. Azimi et al. [18] compare a 23-layer deep CNN model with other models, including machine learning models, in terms of accuracy; the proposed CNN model classifies nitrogen stress features more easily than other features. Disease causes losses in both crop production and economic growth. Gadekallu et al. [19] introduce a hybrid PCA technique combined with the whale optimization algorithm for feature extraction and evaluate it in terms of accuracy and superiority. Rust and Cercospora are the two primary diseases that affect the quality and productivity of the coffee plant. Sinha et al. [20] extract texture features using k-means and thresholding segmentation algorithms and then identify the relation between infected and healthy parts using texture analysis on the olive plant. Sorte et al. [21] suggest a texture-based pattern recognition algorithm to detect leaf lesions on the coffee plant.
The attributes (local binary attribute and statistical attribute) are calculated and compared with the CNN identification rate. Kallam et al. [22] evaluate the performance of deep learning models with respect to “learning rate”, “batch size”, “activation function type”, and “regularization rate” using a TensorFlow application; with these settings, the authors determine the number of hidden layers together with the test and training loss. Okra plant disease is classified in [23], depending on pod length, using different techniques. AlexNet, GoogleNet, and ResNet50 are used to recognize wormholes, insects, and pests, and ResNet50 achieves better accuracy than the other techniques. Franczyk et al. [24] explain a CNN model with eight hidden layers that performs better than machine learning techniques.

Traditional detection techniques involve high cost, considerable human intervention, and maintenance. Kundu et al. [25] explain that with an “Automatic and Intelligent Data Collector and Classifier”, detecting and visualizing disease becomes easier and more cost-effective. Bacteria, fungi, algae, and nematodes are some of the common causes of disease on plant leaves. A disease analysis expert is required to detect plant diseases at an early stage. Using color feature techniques [26], a feature vector captures the common disease features and passes the values to the proposed classifier for detection and classification of leaf disease. Oyewola et al. [27] balance an unbalanced set of images for training and testing using deep learning techniques and pre-processing; gamma correction and decorrelation are applied for color stretching, and the results show better accuracy with the balanced dataset. Abayomi-Alli et al. [28] employ an image histogram transformation technique in which the training images are modified according to their color distribution and diseases are recognized using a color space transformation. Basavaiah et al. [29] discuss a model for identifying a number of lesions that damage crops and lead to reduced cultivation. PlantVillage datasets of different classes are classified using different techniques, achieving a maximum accuracy of 98%. One of the most significant operations in precision agriculture is image-based fungal disease prediction, which detects the occurrence and quantifies the severity of variability in crops. Abdu et al. [30] discuss a comparative analysis of machine learning models with SVM. Both models were deployed on a large-scale dataset of horticultural leaf lesion images under conventional settings, considering the critical elements of architecture, processing capacity, and training data.
Convolutional networks are a type of neural network that has proven particularly good at image recognition and classification. CNN-based techniques have been used to classify disease from images in many fields, including medical images [31], hand gesture images, disease images, and diabetic images [32]. VGG16, VGG19, ResNet, Inception, MobileNet, and EfficientNet are pre-defined models for better classification of the segmented part. Convolutional Neural Networks offer better accuracy, as obtained with the TTA algorithm with feature extraction and classification.

Table 1 lists, in arbitrary order, the results obtained by various authors using pre-trained deep learning models on the dataset each study employed.

Table 1.

The result of the state-of-art models.

Comparisons with various recent deep learning technologies have also been performed. These studies concentrated on overfitting and computational time, using approaches such as selecting crucial features for accurate classification. Furthermore, instead of using many classifiers for a fair comparison, they focused on a single classifier for feature classification. The works discussed above leave several research gaps in the use of deep architectures for horticultural plant leaf lesion detection, namely the number of classes and optimization tradeoffs, the types of diseases classified, the optimization of epochs, and accuracy. The number of classes handled and the accuracy of a deep learning architecture largely determine the efficiency of the classification. In this work, a high correlation function is used to augment the data and increase the number of training image samples. A normalization technique is then applied to the pixel intensities of each image.

3. Materials and Methods

As the gaps identified in the literature indicate, early detection of diseases in grape leaves is required for proper pest management and fertilizer application. If these biotic stresses are not identified promptly, farmers may obtain low-profit yields despite their hard work. Several image processing algorithms have been developed to detect lesions, alerting farmers and allowing for early diagnosis.

3.1. Data Materials

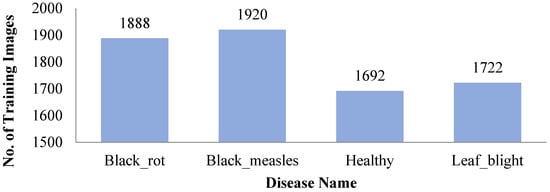

Diseases such as powdery mildew, downy mildew, rust, black rot, and scab [16] are found on grape leaves. The dataset is collected from PlantVillage [33] and is composed of healthy images (2115 images) and diseased images of black rot (caused by an ascomycetous fungus; 2360 images), black measles (caused by the fungus Phaeomoniella aleophilum; 2400 images), and leaf blight (caused by the fungus Pseudocercospora vitis; 2152 images), as shown in Figure 1 below.

Figure 1.

Number of Training Dataset.

All the images are resized to 224 × 224 × 3. A total of 9027 images of the grape crop are split in a ratio of 80:20 for training and testing, as displayed in Table 2 together with the symptoms. This study calculates the accuracy, or performance, of several approaches.
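The 80:20 split described above can be sketched as a per-class (stratified) split. This is only an illustration under stated assumptions: the paper does not say whether the split was stratified, the file names are hypothetical placeholders, and the resulting per-class counts need not match those reported in Table 2.

```python
import random

def stratified_split(items_by_class, train_percent=80, seed=0):
    """Split each class separately so both subsets keep the class balance."""
    rng = random.Random(seed)
    train, test = [], []
    for label, items in items_by_class.items():
        shuffled = list(items)
        rng.shuffle(shuffled)
        # Integer arithmetic avoids floating-point rounding at the cut point.
        cut = len(shuffled) * train_percent // 100
        train += [(name, label) for name in shuffled[:cut]]
        test += [(name, label) for name in shuffled[cut:]]
    return train, test

# Class sizes as stated in Section 3.1; file names are hypothetical.
counts = {"Healthy": 2115, "Black_rot": 2360,
          "Black_measles": 2400, "Leaf_blight": 2152}
data = {label: [f"{label}_{i}.jpg" for i in range(n)]
        for label, n in counts.items()}
train, test = stratified_split(data)
```

Splitting class by class keeps the class proportions of the full dataset in both subsets, which matters when the classes differ in size.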

Table 2.

Description of Training, Testing Data with symptoms.

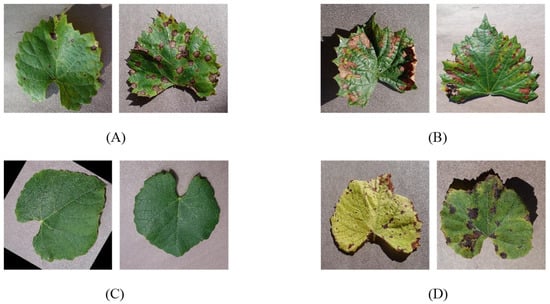

Only the leaf is considered for identifying lesions because the grape flower and fruit are short-lived, while the leaf is present throughout the year. Furthermore, the stem of a grapevine rarely shows disease symptoms promptly, whereas the shape, texture, and color of the leaf are all affected by the state of the plant and typically provide more detail. A few sample images from the PlantVillage grape leaf disease dataset are shown in Figure 2.

Figure 2.

Sample images from the grape leaf dataset. (A) Black rot leaf image; (B) Black_measles leaf image; (C) Healthy leaf image; (D) Leaf_blight leaf image.

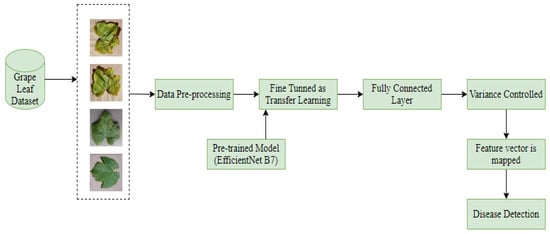

3.2. Proposed Hy-CNN Model

In this part, a “Deep Transfer Learning” approach is proposed for the detection and classification of grape leaf disease. It consists of four steps: data pre-processing (resizing and normalizing the dataset), training of a deep CNN model with transfer learning, feature reduction, and classification of the disease data. The block diagram of the Hy-CNN model is given in Figure 3.

Figure 3.

Hy-CNN model block diagram for grape leaf disease detection and classification.

3.2.1. Image Pre-Processing

According to the literature, image pre-processing is the most significant operation for obtaining appropriate data with no undesired distortions and for highlighting the image properties that are relevant for later processing [34]. Pre-processing changes the representation of an image, i.e., its color, shape, or texture, or removes noise [35]. The images in the dataset are downsized to a resolution of 224 × 224 × 3 so that training runs faster on a dataset of homogeneous images. Another key issue is overfitting, which occurs when the model fits random noise in the training images. To guard against overfitting, training and testing sets of appropriate size and with correct image dimensions are collected. Because the images have different intensity values and scales, all of them are then normalized to the same scale. Image normalization is a technique for rescaling the range of pixel intensity values. Equation (1) shows the general form of normalization.

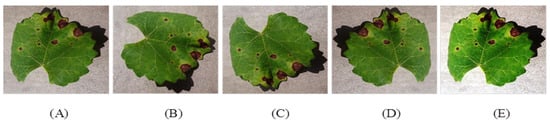

where F denotes the normalized value, Fmin the minimum pixel intensity value, and Fmax the maximum pixel intensity value of an image. To increase the size of the dataset, data augmentation techniques such as rotation, flipping, and brightness adjustment are applied. Figure 4 shows sample images after augmentation.
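The normalization and augmentation steps can be sketched in a few lines of plain Python. The sketch assumes that Equation (1) is the usual min-max form, (F − Fmin) / (Fmax − Fmin), which the text does not state explicitly. Images are represented as nested lists of grayscale intensities purely for illustration, and the function names are hypothetical.

```python
def min_max_normalize(pixels):
    """Rescale a flat list of pixel intensities to the range [0, 1]."""
    f_min, f_max = min(pixels), max(pixels)
    if f_max == f_min:
        # A constant image has no intensity range to rescale.
        return [0.0 for _ in pixels]
    span = f_max - f_min
    return [(p - f_min) / span for p in pixels]

def flip_horizontal(image):
    """Mirror every row (left becomes right)."""
    return [row[::-1] for row in image]

def flip_vertical(image):
    """Reverse the row order (top becomes bottom)."""
    return image[::-1]

def rotate_90_clockwise(image):
    """Rotate the image by 90 degrees clockwise."""
    return [list(col) for col in zip(*image[::-1])]

def brighten(image, factor, max_value=255):
    """Scale intensities by `factor`, clipping at `max_value`."""
    return [[min(max_value, int(p * factor)) for p in row] for row in image]
```

In practice, a library such as OpenCV or Keras would perform these operations on whole image arrays; the list-based version only makes the arithmetic explicit.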

Figure 4.

Data Pre-processing (A) Original image, (B) 90° rotated image, (C) Vertically flipped image, (D) Horizontally flipped image, (E) Intensified image.

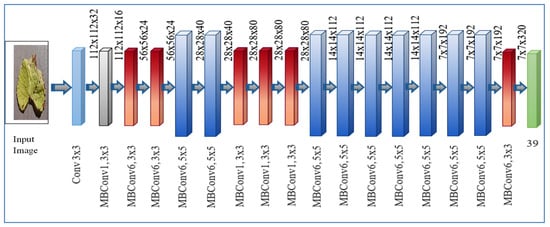

3.2.2. EfficientNet

Since 2012, models trained on the ImageNet dataset have become more complex as their accuracy has increased, although many are inefficient in terms of computational burden. EfficientNet can be regarded as a family of CNN models; it achieves an accuracy of 84.4 percent with 66 M parameters on the ImageNet classification task, making it one of the state-of-the-art models [36]. The family consists of 8 models ranging from B0 to B7; as the model number increases, the number of estimated parameters does not rise significantly, while accuracy increases noticeably. The original EfficientNet uses the Swish activation function in place of the Rectified Linear Unit (ReLU), whereas this work uses the Leaky ReLU activation function. Unlike other state-of-the-art models, EfficientNet produces more efficient results by scaling width, resolution, and depth uniformly when the model is scaled. The initial stage of the compound scaling strategy, under a fixed resource limitation, is a grid search to determine the relationship between the different scaling dimensions of the baseline network [37].

The key building block of EfficientNet is the MBConv bottleneck introduced by MobileNetV2, which EfficientNet employs significantly more than MobileNetV2 because of its increased “Floating point operations per second” (FLOPS) budget. Because MBConv blocks consist of a layer that first expands and then compresses the channels, direct connections are used between the bottlenecks, which have considerably fewer channels than the expansion layers [35]. The depthwise separable design of the layers reduces the computation by a factor of almost k², where k is the kernel size, that is, the width and height of the 2D convolution window.
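The "almost k²" reduction can be checked with a short multiplication count. The sketch below compares a standard convolution with a depthwise separable one (a depthwise k × k convolution followed by a 1 × 1 pointwise convolution). The layer sizes are hypothetical and are not taken from EfficientNet B7.

```python
def standard_conv_mults(h, w, c_in, c_out, k):
    """Multiplications for a standard k x k convolution on an h x w map."""
    return h * w * c_in * c_out * k * k

def depthwise_separable_mults(h, w, c_in, c_out, k):
    """Depthwise k x k convolution followed by a 1 x 1 pointwise convolution."""
    depthwise = h * w * c_in * k * k
    pointwise = h * w * c_in * c_out
    return depthwise + pointwise

def reduction_factor(c_out, k):
    """Closed form of standard / separable: c_out * k^2 / (k^2 + c_out)."""
    return (c_out * k * k) / (k * k + c_out)

# Hypothetical layer: 56 x 56 feature map, 64 -> 128 channels, 3 x 3 kernel.
ratio = (standard_conv_mults(56, 56, 64, 128, 3)
         / depthwise_separable_mults(56, 56, 64, 128, 3))
```

The ratio approaches k² (9 for a 3 × 3 kernel) as the number of output channels grows, which is why the saving is described as a factor of almost k² rather than exactly k².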

Mathematically, EfficientNet is defined in (Equation (2)) as:

where the first term represents a layer that is repeated a given number of times in stage x, and the second term represents the shape of the input tensor Y with respect to layer x. The shape of the image tensor changes from layer to layer. To increase model accuracy, the layers must be scaled with a proportional ratio, optimized subject to the following constraints:

Memory(P) ≤ target_memory

FLOPS(P) ≤ target_flops

The height, breadth, and resolution are represented by x, y, and z in Equation (3). The layers used in the model and their parameters are detailed in Equation (4) and Table 3. A schematic diagram of EfficientNet B7 is shown in Figure 5.
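For reference, the formulation in the original EfficientNet paper can be written as below. This is a sketch in that paper's notation, not a reconstruction of Equations (2) to (4) here: it is an assumption that the network N corresponds to P in this text and that the scaling factors (d, w, r) for depth, width, and resolution correspond to (x, y, z).

```latex
% Network as a composition of s stages; stage i repeats layer F_i L_i times
\mathcal{N} = \bigodot_{i=1}^{s} \mathcal{F}_i^{L_i}\left(X_{\langle H_i, W_i, C_i \rangle}\right)

% Scaling as a constrained optimization over depth d, width w, resolution r
\max_{d,\,w,\,r} \ \mathrm{Accuracy}\big(\mathcal{N}(d, w, r)\big)
\quad \text{s.t.} \quad
\mathrm{Memory}(\mathcal{N}) \le \text{target\_memory}, \quad
\mathrm{FLOPS}(\mathcal{N}) \le \text{target\_flops}

% Compound scaling with a single coefficient \phi
d = \alpha^{\phi}, \quad w = \beta^{\phi}, \quad r = \gamma^{\phi},
\qquad \alpha \cdot \beta^{2} \cdot \gamma^{2} \approx 2, \quad \alpha, \beta, \gamma \ge 1
```

The constants α, β, and γ are found once by grid search on the baseline network, after which the single coefficient φ controls how far all three dimensions are scaled together.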

Table 3.

Model layer and parameter categorization.

Figure 5.

Systematic diagram of EfficientNet B7 architecture for leaf disease detection.

Some of the abbreviations used in this paper are listed in Table 4; they relate to image pre-processing, segmentation, feature extraction, and classification.

Table 4.

Abbreviations used in this work.

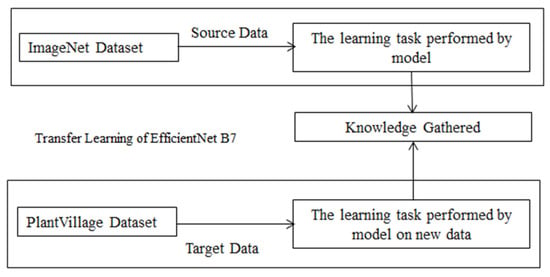

3.2.3. Training of Model Based on Transfer Learning

Deep learning has recently had a major impact in a variety of fields. In agriculture, gathering the large quantity of data needed to train a new model from scratch is difficult, and the training data determines how well a system performs. Transfer learning reduces this dependence on large amounts of data, making it simple to train a model even when the available data is insufficient [38]. In this study, the EfficientNet B7 CNN model is trained on the PlantVillage dataset, and the resulting transfer learning model is used to predict grape leaf disease. The model is trained on raw images resized from 256 × 256 to 224 × 224. Once trained on this raw data, the model has learned to detect disease on plant leaves. The trained model is then applied to the grape leaf dataset for the detection and identification of leaf disease. The retraining of the model based on transfer learning is shown in Figure 6.

Figure 6.

Re-training of proposed model.

3.2.4. Feature Extraction

After the CNN model is retrained, the FC layer is used to activate features, and the Leaky ReLU activation function is used to extract features from this layer. The extracted vector has a one-dimensional length of N × 1000 and a total length of. However, random trials showed that not all extracted features are significant for the final classification, and the presence of these non-essential features reduces the efficiency of the system [39]. The Leaky ReLU function is an improvement on the ReLU activation function. For all input values less than zero, the gradient of ReLU is 0, which deactivates the neurons in that region and can create the dying ReLU problem.

The term “leaky ReLU” was coined to describe a solution to this issue. Instead of defining the activation as 0 for negative inputs x, Leaky ReLU defines it as an extremely small linear component of x. The formula of this activation function is given in Equation (5):

f(x) = x for x > 0, and f(x) = 0.01x otherwise. (5)

If the input is positive, the function returns x; if the input is negative, it returns a very small value, 0.01 times x. As a result, it also outputs negative values. With this small change, the gradient on the left side of the graph has a non-zero value, so there are no more dead neurons in that region.
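A minimal sketch of the Leaky ReLU function and its gradient, using the slope of 0.01 stated in the text; the function names and the `alpha` parameter are illustrative choices.

```python
def leaky_relu(x, alpha=0.01):
    """Return x for positive inputs and alpha * x otherwise."""
    return x if x > 0 else alpha * x

def leaky_relu_grad(x, alpha=0.01):
    """Gradient of Leaky ReLU: 1 for positive inputs, alpha otherwise."""
    return 1.0 if x > 0 else alpha
```

Because the gradient is `alpha` rather than 0 for negative inputs, a neuron that receives negative input still passes a small gradient backward and can recover during training.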

3.2.5. Feature Reduction

Feature relevance scores are important in predictive modelling because they provide insight into the data and the model, and they form the foundation for dimensionality reduction and feature selection, which can improve the efficiency and efficacy of a predictive model [40]. Dimensionality reduction cuts down the time and storage space needed. It helps remove multicollinearity, which improves the interpretation of the parameters of the machine learning model. Data reduced to very low dimensions, such as 2D or 3D, is also easier to visualize.

Feature reduction also avoids the curse of dimensionality. Features are vital in pattern recognition because they describe the object, and their quality is critical for accurate classification. The features extracted from the source images are not by themselves adequate for recognition, for reasons that include feature redundancy and noisy features, so a strategy for feature reduction or optimization is necessary. In this work, Logistic Regression is used for feature reduction. Variance is commonly used to determine how each pixel differs from its neighbors and to classify pixels into distinct areas; it is also useful for comparing the differences between two virtually identical images [41,42]. Logistic regression predicts the output of a categorical dependent variable, so the outcome must be discrete or categorical. It can be 0 or 1, Yes or No, true or false, and so on, but rather than giving exact values of 0 and 1, the model gives probabilistic values that lie between them.

Logistic Regression is very similar to Linear Regression. Linear Regression is employed for regression problems, while Logistic Regression is used for classification problems. The mathematical step for obtaining the logistic regression is given in Equation (6).
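The probabilistic output of logistic regression can be sketched as a linear score passed through the sigmoid function. The paper does not specify how the fitted model is used to reduce features, so the ranking by absolute coefficient in `top_k_features` is an assumption made for illustration, and all names below are hypothetical.

```python
import math

def sigmoid(z):
    """Map a real-valued score to a probability in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def predict_proba(features, weights, bias):
    """Probability of the positive class for one feature vector."""
    z = bias + sum(w * f for w, f in zip(weights, features))
    return sigmoid(z)

def top_k_features(weights, k):
    """Indices of the k features with the largest absolute coefficients.

    Assumption: coefficient magnitude is used as the relevance score.
    """
    ranked = sorted(range(len(weights)),
                    key=lambda i: abs(weights[i]), reverse=True)
    return sorted(ranked[:k])
```

For a four-class problem such as this one, a multinomial or one-vs-rest variant would be needed; the binary form is shown only because it matches the 0-or-1 description in the text.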

Algorithm 1 describes the complete process of the Hybrid Convolutional Neural Network (Hy-CNN) model with the feature extractor and reports the result as 0 or 1.

| Algorithm 1: Training and Testing of Hy-CNN. |

Input: Grape image data loader batch

Output: Trained Model

Processing Steps:

1. If a set is training data set, then follow steps 2 to 5.
2. resize to dimension (256 × 256)
3. pre-processing to resize the image (224 × 224)
4. normalize pixel values [0, 1]
5. standardize pixel values to (256 × 256)
6. If a set is (224 × 224), then follow steps 7 to 9
7. normalize pixel value [0, 1]
8. standardize pixel values to (256 × 256)
9. augment the data with different augmentation techniques
10. Model training with MODEL = EfficientNet B7
11. for a model in MODEL use steps 12 to 13
12. fine-tune with transfer learning
13. use Leaky ReLu activation function
14. for epoch = 30, 50, 70, 100.
15. Set learning rate 0.001 use steps 16 to 17
16. for image in data loader batch:
17. update model parameter
18. end of for loop of step 16
19. if training accuracy does not improve for 9 epochs, follow steps 20 to 21
20.
21. unfreeze feature layers
22.
23. end of for loop of step 14
24. end of for loop of step 11
25. for epochs = 30, 50, 70, 100 use steps 26 to 29
26. for a testing image in data loader batch: update model parameter
27. end of for loop of step 26
28. if testing accuracy does not improve up to 7 layers
29.
30. end of for loop of step 25

The Training and Testing Algorithm (TTA) accepts as input a grape image data loader batch (Bh) of size 256 × 256 × 3 and, after pre-processing, resizes each image to 224 × 224 × 3. The pixel values are then normalized to the range 0 to 1 with the same standard pixel scale.

For the training data, the feature layers are frozen and the model is trained for different numbers of epochs with a learning rate of 0.001. To increase training accuracy, the model parameters are updated for every image in the data loader batch. If training accuracy does not improve with the given parameters, the feature layers are unfrozen. If the testing accuracy does not improve up to 7 layers, the number of layers is increased to achieve the best performance of the CNN model.
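The rule in step 19 of Algorithm 1, unfreezing the feature layers when training accuracy has not improved for 9 epochs, can be sketched as a simple patience counter. The function name and the accuracy history are hypothetical, and the actual training framework calls are deliberately omitted.

```python
def epoch_to_unfreeze(accuracy_per_epoch, patience=9):
    """Return the 1-based epoch at which the feature layers would be
    unfrozen, or None if accuracy keeps improving often enough."""
    best = float("-inf")
    stale = 0  # consecutive epochs without improvement
    for epoch, accuracy in enumerate(accuracy_per_epoch, start=1):
        if accuracy > best:
            best, stale = accuracy, 0
        else:
            stale += 1
            if stale >= patience:
                return epoch
    return None

# Hypothetical history: accuracy plateaus at 0.85 from epoch 2 onward.
history = [0.80, 0.85] + [0.85] * 9
unfreeze_at = epoch_to_unfreeze(history)
```

In a real training loop, the returned epoch would be the point at which the pre-trained layers are made trainable, typically with a reduced learning rate so that the pre-trained weights are not disrupted.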

3.3. Statistical Analysis

A dataset of grape leaf disease images was used with the suggested EfficientNet B7 model and provided the parameters for statistical analysis. With images of size 224 × 224, the results on the entire dataset were statistically significant at the 0.05 level using the one-way ANOVA method (SPSS-2021, IBM, USA). This provides significant evidence against the null hypothesis, since the probability of observing such results if the null hypothesis were true is less than 5%. A difference was considered significant when p < 0.05. The accuracy, efficiency, and precision values were evaluated statistically in terms of their means and standard deviations and were found to be statistically different at p ≤ 0.05, which shows the effect of the proposed model on these parameters. In the data analysis, the four classes of the dataset were examined for general impairment. After 92 epochs, the most discriminant features are identified with the maximum stable accuracy of 98.7% using state-of-the-art classifiers with the suggested EfficientNet B7 model. Based on the confusion matrix of the dataset, this research examined Precision, Recall, and F1-Score. The confusion matrix was therefore chosen as the basis for evaluating the classification outcome.

4. Results

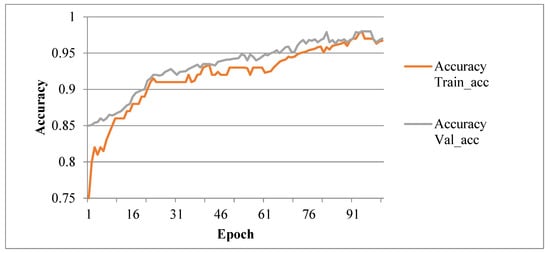

In this work, the CNN-based EfficientNet B7 model is tested and validated for detecting lesions on plant leaves. The Python-based implementation uses Anaconda3 (Python 3.6) with the Keras-GPU library for the CNN and the OpenCV-python3 library for image pre-processing and data augmentation. A GPU (Graphics Processing Unit) system with 12 GB RAM and a 68 GB hard disk is used to accelerate deep CNN training and testing. The grape leaf dataset from PlantVillage is used for training and testing to evaluate the performance of the Hy-CNN approach. The Hy-CNN model is trained for 30, 50, 70, and 100 epochs. The accuracy obtained for each setting is plotted in Figure 7 and listed in Table 5.

Figure 7.

Accuracy Graph of Hybrid Convolutional Neural Network (Hy-CNN).

Table 5.

Accuracy table of Training and Testing with models.

Performance Evaluation

In this work, the performance measures “precision”, “recall”, and “F1 score” are used, as given in Equations (7), (8), and (9), respectively. Precision measures the proportion of samples predicted as belonging to a class that actually belong to it. The precision over the four classes “Black_rot”, “Healthy”, “Black_measles”, and “Leaf_blight” is 0.964.

Precision = TP / (TP + FP) (7)

where TP: True Positive, FP: False Positive.

Recall measures the proportion of the relevant samples of each class in the grape leaf dataset that are correctly detected; it is based on two cases, “True Positive” and “False Negative”.

Recall = TP / (TP + FN) (8)

where TP: True Positive, FN: False Negative.

The F1-score combines Precision and Recall into a single measure for the given dataset.

F1-score = 2 × (Precision × Recall) / (Precision + Recall) (9)

There are four classes of grape leaf: Leaf blight, Healthy, Black measles, and Black rot. “True Positive (TP)”, “True Negative (TN)”, “False Negative (FN)”, and “False Positive (FP)” are the terms used in this context. For a given class, a true positive is an image of that class that is correctly assigned to it, and a true negative is an image of another class that is correctly not assigned to it. A false positive is an image of another class that is incorrectly assigned to the class, and a false negative is an image of the class that is incorrectly assigned to another class [43,44].
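Per-class precision, recall, and F1-score can be computed directly from a confusion matrix, as sketched below. The function name is hypothetical, and the small two-class matrix in the usage lines is illustrative only; it does not contain results from this study.

```python
def per_class_metrics(confusion, labels):
    """Compute (precision, recall, F1) for every class.

    confusion[i][j] is the number of images whose true class is i
    and whose predicted class is j.
    """
    n = len(labels)
    metrics = {}
    for c, label in enumerate(labels):
        tp = confusion[c][c]
        fp = sum(confusion[r][c] for r in range(n) if r != c)
        fn = sum(confusion[c][j] for j in range(n) if j != c)
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = (2 * precision * recall / (precision + recall)
              if precision + recall else 0.0)
        metrics[label] = (precision, recall, f1)
    return metrics

# Illustrative toy matrix with two classes (not data from the paper).
toy = per_class_metrics([[8, 2], [1, 9]], ["diseased", "healthy"])
```

The same function applies unchanged to the 4 × 4 confusion matrix of the four grape leaf classes, with one row and one column per class.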

5. Discussion

Two kinds of convolutional network tasks, “Image Classification” (IC) and “Object Detection” (OD), are discussed in Table 6. Classification and detection are the two basic tasks of image recognition.

Table 6.

Parametric data calculation of precision, F1-Score and recall.

Based on the results shown in Table 6, the Hybrid Convolutional Neural Network (Hy-CNN) model achieves the highest accuracy, 98.7%, for identifying leaf disease after 92 epochs, with the largest F1-score compared with the other models [45]. Comparable results, with specifically constrained recall, were found for EfficientNet B7 trained to detect leaves in the grape crop. The behavior of the Convolutional Neural Network (CNN) model for disease identification was analyzed at different numbers of epochs. Table 7 compares the Hy-CNN model with several deep learning models. Various deep learning models have been used for the detection, recognition, and characterization of lesions in plant leaves. Some authors implement different CNN models, such as EfficientNet-CNN [13], a united deep learning model [15], and F-CNN and S-CNN [17], on different plant leaf images. Some proposed models reach a lower accuracy of 92.01% [18], and a hybrid analysis model [19] reaches an accuracy of 95.1% on plant leaves. For clearer classification, texture image analysis [21] is applied to coffee leaf images.

Table 7.

Comparison of Hy-CNN model with existing deep learning models.

Depending on the number of layers, some deep learning models give better results for the classification of okra plant leaves [23] and coffee leaves [30]. The Hybrid Convolutional Neural Network (Hy-CNN) transfer learning model gives efficient results for the detection of grape leaf lesions using a feature extractor model. EfficientNet-B7 is a convolutional neural network architecture built on compound scaling, a method that uniformly scales network depth, width, and input resolution with a single compound coefficient; however, the different input sizes this produces can create an issue when they are used within the same method. The dropout ratio also increases linearly, from 0.2 for EfficientNet-B0 to 0.5 for B7. EfficientNet-B7 requires about 37 billion FLOPS, and EfficientNet models reduce parameters and FLOPS by an order of magnitude relative to comparable networks (up to 8.4× parameter reduction and up to 16× FLOPS reduction). Even so, the model requires a GPU, and training remains a time-consuming process.
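The compound scaling rule mentioned above can be sketched numerically. The following minimal Python example assumes the base constants reported in the original EfficientNet paper (α = 1.2 for depth, β = 1.1 for width, γ = 1.15 for resolution, chosen so that α·β²·γ² ≈ 2); the function names are hypothetical. It only illustrates the rule: the released B1 to B7 configurations use rounded coefficients and need not match these values exactly.

```python
from typing import Tuple

# Base constants as reported in the EfficientNet paper (assumed here, not taken from this work).
ALPHA = 1.2   # depth multiplier base
BETA = 1.1    # width multiplier base
GAMMA = 1.15  # resolution multiplier base


def compound_scale(phi: float) -> Tuple[float, float, float]:
    """Return (depth, width, resolution) multipliers for compound coefficient phi."""
    return ALPHA ** phi, BETA ** phi, GAMMA ** phi


def flops_factor(phi: float) -> float:
    """Approximate FLOPS growth relative to the baseline network.

    FLOPS scale linearly with depth and quadratically with width and
    resolution, so the factor is (alpha * beta^2 * gamma^2) ** phi.
    """
    return (ALPHA * BETA ** 2 * GAMMA ** 2) ** phi


if __name__ == "__main__":
    for phi in range(0, 8):
        d, w, r = compound_scale(phi)
        print(f"phi={phi}: depth x{d:.2f}, width x{w:.2f}, "
              f"resolution x{r:.2f}, FLOPS x{flops_factor(phi):.1f}")
```

Because α·β²·γ² is close to 2, each unit increase of the compound coefficient roughly doubles the FLOPS, which is why the largest variants are expensive to train.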

6. Conclusions

This paper presents a new deep transfer learning-based model for detecting grape plant leaf lesions. Features are extracted using the FC layer, and the proposed variance technique then eliminates extraneous features from the extracted feature vector. The resulting features are classified using classification algorithms with 98.7% classification accuracy. The findings show that a transfer learning model trains more effectively than a model trained from the ground up. Furthermore, the subsequent feature reduction step improves the classification accuracy. Such algorithms have gained popularity for image processing and classification. The dataset is cleaned by reducing the image resolution. Considering optimization parameters such as dropout ratio, order-of-magnitude FLOPS reduction, processing speed, accuracy, efficiency, and precision, the Hybrid Convolutional Neural Network (Hy-CNN) is superior to other CNN architectures. We also conclude that the fitness function is a suitable feature selection function for calculating the accuracy. The dataset is divided using an 80:20 split.

The fundamental drawback of this work is data availability, which is mitigated in part by incorporating a data augmentation stage. Similarly, selecting the most relevant features is critical; otherwise, the overall classification accuracy may suffer. The model performed well in classifying grape leaf diseases, but a guaranteed, universal strategy for selecting the most valuable features is still absent. The plant leaf disease dataset can be expanded by increasing plant diversity and the number of classification classes. Another future direction is fine-tuning the DL model, for example its number of epochs, to suit the datasets and the classes to be categorized. Morphological properties such as texture, color, shape, and size, as well as spectral features, can also be used for leaf disease detection. This will aid in developing models that can make more accurate predictions for more diverse plant diseases in the future.
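The feature reduction and 80:20 division summarized above can be illustrated with a minimal Python sketch. The paper does not give the details of its variance technique, so a plain variance threshold is used here as a stand-in; the threshold value, the toy feature matrix, and the function names `variance_filter` and `split_80_20` are all hypothetical.

```python
import random
from statistics import pvariance
from typing import List, Sequence, Tuple


def variance_filter(features: Sequence[Sequence[float]],
                    threshold: float) -> Tuple[List[List[float]], List[int]]:
    """Keep only the feature columns whose variance exceeds the threshold.

    Stand-in for the paper's variance technique (an assumption): columns
    that barely change across images carry little discriminative signal.
    """
    n_cols = len(features[0])
    keep = [j for j in range(n_cols)
            if pvariance([row[j] for row in features]) > threshold]
    reduced = [[row[j] for j in keep] for row in features]
    return reduced, keep


def split_80_20(samples: Sequence, labels: Sequence, seed: int = 0):
    """Shuffle indices reproducibly and split them 80:20."""
    idx = list(range(len(samples)))
    random.Random(seed).shuffle(idx)
    cut = (len(idx) * 4) // 5  # first 80% of the shuffled indices
    first, second = idx[:cut], idx[cut:]
    return ([samples[i] for i in first], [labels[i] for i in first],
            [samples[i] for i in second], [labels[i] for i in second])


# Toy feature vectors (hypothetical): column 1 is constant and gets removed.
TOY_FEATURES = [[float(i), 1.0, float(i % 2)] for i in range(10)]
TOY_LABELS = [i % 4 for i in range(10)]  # four class indices

if __name__ == "__main__":
    reduced, kept = variance_filter(TOY_FEATURES, threshold=0.01)
    x_a, y_a, x_b, y_b = split_80_20(reduced, TOY_LABELS)
    print("kept columns:", kept)
    print("split sizes:", len(x_a), len(x_b))
```

In practice the reduced 80% portion would be passed to the chosen classifier and the remaining 20% held out for evaluation; the threshold would need to be tuned on the actual extracted features.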

Author Contributions

Conceptualization, P.K. and S.H.; methodology, P.K., S.H., R.T.; software, P.K., S.H., R.T.; validation, S.H., R.T., S.U. and S.B.; resources, R.T., S.U.; writing—original draft preparation, P.K., S.H., R.T.; writing—review and editing, P.K., S.H., R.T., S.B., A.M.; supervision, S.H., R.T., S.U.; project administration, S.U., S.B., A.M., A.M.A.; funding acquisition, S.B., A.M., A.M.A. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

This study does not involve humans or animals.

Data Availability Statement

The data are publicly available for research purposes at: Hughes, D. P.; Salathe, M. An open access repository of images on plant health to enable the development of mobile disease diagnostics. arXiv preprint arXiv:1511.08060 2015.

Acknowledgments

This work was carried out on GPU-based systems of the University of Petroleum and Energy Studies, Dehradun, Uttarakhand.

Conflicts of Interest

The authors declare no conflict of interest, financial or otherwise. The manuscript has not been submitted anywhere else.

References

- Maddikunta, P.K.R.; Hakak, S.; Alazab, M.; Bhattacharya, S.; Gadekallu, T.R.; Khan, W.Z.; Pham, Q.-V. Unmanned Aerial Vehicles in Smart Agriculture: Applications, Requirements, and Challenges. IEEE Sens. J. 2021, 21, 17608–17619.

- Hang, J.; Zhang, D.; Chen, P.; Zhang, J.; Wang, B. Classification of Plant Leaf Diseases Based on Improved Convolutional Neural Network. Sensors 2019, 19, 4161.

- Basha, S.M.; Rajput, D.S.; Janet, J.; Somula, R.S.; Ram, S. Principles and Practices of Making Agriculture Sustainable: Crop Yield prediction using Random Forest. Scalable Comput. Pract. Exp. 2020, 21, 591–599.

- Zhu, J.; Wu, A.; Wang, X.; Zhang, H. Identification of grape diseases using image analysis and BP neural networks. Multimed. Tools Appl. 2019, 79, 14539–14551.

- Islam, M.; Anh, D.; Wahid, K.; Bhowmik, P. Detection of potato diseases using image segmentation and multiclass support vector machine. In Proceedings of the 2017 IEEE 30th Canadian Conference on Electrical and Computer Engineering (CCECE), Windsor, ON, Canada, 30 April–3 May 2017; pp. 1–4.

- Nagaraju, M.; Chawla, P.; Upadhyay, S.; Tiwari, R. Convolution network model based leaf disease detection using augmentation techniques. Expert Syst. 2021, e12885.

- Sladojevic, S.; Arsenovic, M.; Anderla, A.; Culibrk, D.; Stefanovic, D. Deep Neural Networks Based Recognition of Plant Diseases by Leaf Image Classification. Comput. Intell. Neurosci. 2016, 2016, 3289801.

- Kuwata, K.; Shibasaki, R. Estimating crop yields with deep learning and remotely sensed data. In Proceedings of the 2015 IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Milan, Italy, 26–31 July 2015; pp. 858–861.

- Kaur, P.; Harnal, S.; Tiwari, R.; Alharithi, F.S.; Almulihi, A.H.; Noya, I.D.; Goyal, N. A Hybrid Convolutional Neural Network Model for Diagnosis of COVID-19 Using Chest X-ray Images. Int. J. Environ. Res. Public Health 2021, 18, 12191.

- Hossain, S.M.M.; Tanjil, M.M.M.; Ali, M.A.B.; Islam, M.Z.; Islam, M.S.; Mobassirin, S.; Sarker, I.H.; Islam, S.M.R. Rice Leaf Diseases Recognition Using Convolutional Neural Networks. In Advanced Data Mining and Applications. ADMA 2020; Springer: Cham, Switzerland, 2020; Volume 12447, pp. 299–314.

- Khaldi, Y.; Benzaoui, A.; Ouahabi, A.; Jacques, S.; Taleb-Ahmed, A. Ear Recognition Based on Deep Unsupervised Active Learning. IEEE Sens. J. 2021, 21, 20704–20713.

- Arbaoui, A.; Ouahabi, A.; Jacques, S.; Hamiane, M. Concrete Cracks Detection and Monitoring Using Deep Learning-Based Multiresolution Analysis. Electronics 2021, 10, 1772.

- Mary, N.A.B.; Singh, A.R.; Athisayamani, S. Classification of Banana Leaf Diseases Using Enhanced Gabor Feature Descriptor. In Inventive Communication and Computational Technologies; Springer: Singapore, 2021; Volume 145, pp. 229–242.

- Kaur, P.; Gautam, V. Plant Biotic Disease Identification and Classification Based on Leaf Image: A Review. In Proceedings of 3rd International Conference on Computing Informatics and Networks, Delhi, India, 29–30 July 2020; Volume 167, pp. 597–610.

- Atila, Ü.; Uçar, M.; Akyol, K.; Uçar, E. Plant leaf disease classification using EfficientNet deep learning model. Ecol. Inform. 2021, 61, 101182.

- Ji, M.; Zhang, L.; Wu, Q. Automatic grape leaf diseases identification via UnitedModel based on multiple convolutional neural networks. Inf. Process. Agric. 2019, 7, 418–426.

- Sharma, P.; Berwal, Y.P.S.; Ghai, W. Performance analysis of deep learning CNN models for disease detection in plants using image segmentation. Inf. Process. Agric. 2019, 7, 566–574.

- Azimi, S.; Kaur, T.; Gandhi, T.K. A deep learning approach to measure stress level in plants due to Nitrogen deficiency. Measurement 2020, 173, 108650.

- Gadekallu, T.R.; Rajput, D.S.; Reddy, M.P.K.; Lakshmanna, K.; Bhattacharya, S.; Singh, S.; Jolfaei, A.; Alazab, M. A novel PCA–whale optimization-based deep neural network model for classification of tomato plant diseases using GPU. J. Real-Time Image Process. 2020, 18, 1383–1396.

- Sinha, A.; Shekhawat, R.S. Olive Spot Disease Detection and Classification using Analysis of Leaf Image Textures. Procedia Comput. Sci. 2020, 167, 2328–2336.

- Sorte, L.X.B.; Ferraz, C.T.; Fambrini, F.; Goulart, R.D.R.; Saito, J.H. Coffee Leaf Disease Recognition Based on Deep Learning and Texture Attributes. Procedia Comput. Sci. 2019, 159, 135–144.

- Kallam, S.; Basha, S.M.; Rajput, D.S.; Patan, R.; Balamurugan, B.; Basha, S.A.K. Evaluating the Performance of Deep Learning Techniques on Classification Using Tensor Flow Application. In Proceedings of the 2018 International Conference on Advances in Computing and Communication Engineering (ICACCE), Paris, France, 22–23 June 2018; pp. 331–335.

- Raikar, M.M.; Meena, S.M.; Kuchanur, C.; Girraddi, S.; Benagi, P. Classification and Grading of Okra-ladies finger using Deep Learning. Procedia Comput. Sci. 2020, 171, 2380–2389.

- Franczyk, B.; Hernes, M.; Kozierkiewicz, A.; Kozina, A.; Pietranik, M.; Roemer, I.; Schieck, M. Deep learning for grape variety recognition. Procedia Comput. Sci. 2020, 176, 1211–1220.

- Kundu, N.; Rani, G.; Dhaka, V.; Gupta, K.; Nayak, S.; Verma, S.; Ijaz, M.; Woźniak, M. IoT and Interpretable Machine Learning Based Framework for Disease Prediction in Pearl Millet. Sensors 2021, 21, 5386.

- Almadhor, A.; Rauf, H.; Lali, M.; Damaševičius, R.; Alouffi, B.; Alharbi, A. AI-Driven Framework for Recognition of Guava Plant Diseases through Machine Learning from DSLR Camera Sensor Based High Resolution Imagery. Sensors 2021, 21, 3830.

- Oyewola, D.O.; Dada, E.G.; Misra, S.; Damaševičius, R. Detecting cassava mosaic disease using a deep residual convolutional neural network with distinct block processing. PeerJ Comput. Sci. 2021, 7, e352.

- Abayomi-Alli, O.O.; Damasevicius, R.; Misra, S.; Maskeliūnas, R. Cassava disease recognition from low-quality images using enhanced data augmentation model and deep learning. Expert Syst. 2021, 38, e12746.

- Basavaiah, J.; Anthony, A.A. Tomato Leaf Disease Classification using Multiple Feature Extraction Techniques. Wirel. Pers. Commun. 2020, 115, 633–651.

- Abdu, A.M.; Mokji, M.M.M.; Sheikh, U.U.U. Machine learning for plant disease detection: An investigative comparison between support vector machine and deep learning. IAES Int. J. Artif. Intell. 2020, 9, 670–683.

- Bhattacharya, S.; Maddikunta, P.K.R.; Pham, Q.-V.; Gadekallu, T.R.; Krishnan, S.R.; Chowdhary, C.L.; Alazab, M.; Piran, J. Deep learning and medical image processing for coronavirus (COVID-19) pandemic: A survey. Sustain. Cities Soc. 2020, 65, 102589.

- Gadekallu, T.R.; Khare, N.; Bhattacharya, S.; Singh, S.; Maddikunta, P.K.R.; Srivastava, G. Deep neural networks to predict diabetic retinopathy. J. Ambient. Intell. Humaniz. Comput. 2020, 1–14.

- Hughes, D.P.; Salathe, M. An open access repository of images on plant health to enable the development of mobile disease diagnostics. arXiv 2015, arXiv:1511.08060.

- Joshi, R.C.; Kaushik, M.; Dutta, M.K.; Srivastava, A.; Choudhary, N. VirLeafNet: Automatic analysis and viral disease diagnosis using deep-learning in Vigna mungo plant. Ecol. Inform. 2020, 61, 101197.

- Mukhopadhyay, S.; Paul, M.; Pal, R.; De, D. Tea leaf disease detection using multi-objective image segmentation. Multimed. Tools Appl. 2020, 80, 753–771.

- Jasim, M.A.; Al-Tuwaijari, J.M. Plant Leaf Diseases Detection and Classification Using Image Processing and Deep Learning Techniques. In Proceedings of the 2020 International Conference on Computer Science and Software Engineering (CSASE), Duhok, Iraq, 16–18 April 2020; pp. 259–265.

- Tran, T.-T.; Choi, J.-W.; Le, T.-T.H.; Kim, J.-W. A Comparative Study of Deep CNN in Forecasting and Classifying the Macronutrient Deficiencies on Development of Tomato Plant. Appl. Sci. 2019, 9, 1601.

- Bi, C.; Wang, J.; Duan, Y.; Fu, B.; Kang, J.-R.; Shi, Y. MobileNet Based Apple Leaf Diseases Identification. Mob. Netw. Appl. 2020, 1–9.

- Too, E.C.; Yujian, L.; Njuki, S.; Yingchun, L. A comparative study of fine-tuning deep learning models for plant disease identification. Comput. Electron. Agric. 2019, 161, 272–279.

- Subetha, T.; Khilar, R.; Christo, M.S. A comparative analysis on plant pathology classification using deep learning architecture—Resnet and VGG19. Mater. Today Proc. 2021.

- Jiang, D.; Li, F.; Yang, Y.; Yu, S. A Tomato Leaf Diseases Classification Method Based on Deep Learning. In Proceedings of the 2020 Chinese Control And Decision Conference (CCDC), Hefei, China, 22–24 August 2020; pp. 1446–1450.

- Chen, J.; Chen, J.; Zhang, D.; Sun, Y.; Nanehkaran, Y. Using deep transfer learning for image-based plant disease identification. Comput. Electron. Agric. 2020, 173, 105393.

- Xiong, Y.; Liang, L.; Wang, L.; She, J.; Wu, M. Identification of cash crop diseases using automatic image segmentation algorithm and deep learning with expanded dataset. Comput. Electron. Agric. 2020, 177, 105712.

- Salih, T.A.; Ali, A.J.; Ahmed, M.N. Deep Learning Convolution Neural Network to Detect and Classify Tomato Plant Leaf Diseases. OALib 2020, 7, 1–12.

- Wspanialy, P.; Moussa, M. A detection and severity estimation system for generic diseases of tomato greenhouse plants. Comput. Electron. Agric. 2020, 178, 105701.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).