Abstract

Light Detection and Ranging (LiDAR) systems are novel sensors that provide robust distance and reflection strength measurements using active pulsed laser beams. They have significant advantages over visual cameras because their active depth and intensity measurements are robust to ambient illumination. However, existing systems still pay limited attention to intensity measurements, since the intensity maps output by LiDAR sensors differ from those of conventional cameras and are too sparse. In this work, we propose exploiting the information from both intensity and depth measurements simultaneously to complete LiDAR intensity maps. With the completed intensity maps, mature computer vision techniques can work well on LiDAR data without any specific adjustment. We propose an end-to-end convolutional neural network named LiDAR-Net that jointly completes the sparse intensity and depth measurements by exploiting their correlations. For network training, an intensity fusion method is proposed to generate the ground truth. Experimental results indicate that intensity–depth fusion benefits the task and improves performance. We further apply an off-the-shelf object (lane) segmentation algorithm to the completed intensity maps, which delivers consistent performance that is robust to ambient illumination. We believe that the intensity completion method allows LiDAR sensors to cope with a broader range of practical applications.

1. Introduction

Unlike visual cameras, which passively capture the light emitted or reflected by objects, LiDAR sensors actively project pulsed laser beams and measure the surrounding environment through backscattered echoes [1]. Therefore, LiDAR sensors can function well even in adverse illumination conditions. With robust depth measurements, LiDAR sensors are crucial to many applications such as autonomous vehicles [2,3], classification [4], and instance detection [5,6].

The intensity output of a LiDAR sensor measures the strength of the reflected laser pulses. Owing to this unique mechanism, mobile LiDAR sensors such as the Velodyne VLP-16 can provide richer information about object materials in addition to the depth map. However, the same mechanism yields only sparse intensity measurements arranged in circular scan lines. As a result, existing computer vision techniques such as optical flow [7], relocalization [8], and object (lane) segmentation [9], which have all demonstrated impressive performance on visual images, cannot operate directly on this sparse LiDAR intensity structure.

To acquire dense intensity maps from LiDAR sensors, some works add a more complicated rotation mechanism, such as two-axis scanning with a single laser sensor [10], to obtain a dense intensity map called LiDAR-intensity imagery [11]. Based on this dense map, vision-like navigation [12] and perception [13] can be performed on LiDAR intensity. The shortcoming is that motion and the LiDAR's scanning nature cause distortion, akin to a slow rolling-shutter camera, and this distortion is hard to compensate. Additionally, since the rotation mechanism rotates slowly, many regions are missed when the carrier moves too fast. Another solution is to increase the number of lasers as much as possible, as in the VLS-128. Nevertheless, this still cannot provide image-level density and inevitably leads to unacceptable prices.

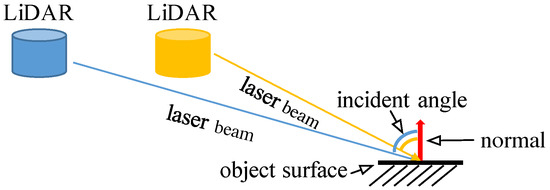

It should be noted that sparse depth measurements also limit performance in tasks such as object detection and navigation. Hence, various works focus on filling in the missing values of a dense depth map using only depth measurements, a task called depth completion [14,15,16,17,18]. By analogy, LiDAR intensity completion may be a complementary solution. To the best of our knowledge, very few methods have been proposed to address LiDAR intensity completion. Current public completion-related LiDAR datasets, such as the KITTI Completion Benchmark [19], do not provide a benchmark for LiDAR intensity completion, so its evaluation is still at a preliminary stage. Meanwhile, intensity is much more complicated than depth. As shown in Figure 1, under the Lambertian reflectance assumption [20], the intensity measured by LiDAR can be decomposed into four main factors: the measurement distance, the surface reflectance, the strength of the incident ray, and the incident angle [21]. These factors make LiDAR intensity completion more challenging. Notably, because the measurement distance is one of these factors, the intensity is correlated with the measured distance.

Figure 1.

Intensity measurements. Attenuation along the travel path and the incident angle both influence the received intensity. Therefore, the same position yields different intensity values when observed from different views.

In this paper, we propose a LiDAR intensity completion method to bridge the gap between LiDAR intensity and conventional computer vision. For LiDAR intensity completion, we propose LiDAR-Net, a convolutional neural network that simultaneously learns from both the intensity and depth information of LiDAR sensors to complete the intensity map. LiDAR-Net consists of an encoder–decoder network with LiDAR information fusion and an inverse network. The encoder consists of a sequence of convolutions that downsample the resolution. The decoder has a reversed structure, with transposed convolutions that upsample the resolution. Long skip connections between the contracting and the symmetric expanding paths improve the sharpness of the completion results. Finally, the inverse network, which has several convolutions, takes the outputs of the encoder–decoder network, including the material-related intensity maps, and produces the completed intensity maps.

To train this network, a vehicle carrying a Velodyne HDL64 LiDAR was used to collect data in two 500 × 500 areas. We then rectified the raw intensity using the Lambertian reflection model and fused the sparse intensity maps from a sequence of data frames to build an intensity dataset. To further validate the potential of the dense completed intensity maps, an object segmentation method was used to detect the lanes on the road.

The main contributions of this work can be summarized as follows:

- LiDAR-Net, a novel intensity completion method using intensity–depth fusion, is proposed. The experimental results show that the proposed method provides competitive performance compared with state-of-the-art completion methods.

- A LiDAR intensity fusion method is proposed to generate the intensity ground truth for training. Using multiple types of intensity data from the proposed method for training can improve the performance of the LiDAR intensity completion.

- The proposed method is tested in object (lane) segmentation based on completed intensity maps. The result shows that off-the-shelf computer vision techniques can operate on the completed LiDAR intensity maps. Moreover, the LiDAR intensity completion provides more robust lane segmentation results than visual cameras under adverse conditions.

The rest of the paper is organized as follows. Section 2 reviews related work. Section 3 first introduces the proposed intensity fusion method for ground truth generation, then discusses the proposed architecture and how to train the network on the self-built dataset. Furthermore, Section 4 presents the experimental results in detail. Finally, conclusions are drawn in Section 5.

2. Related Work

Perception technologies can be divided into two types, passive and active, according to the energy source used for detection. Visual cameras and LiDAR sensors are representative of these two classes, respectively. Since LiDAR sensors function well even in adverse lighting conditions, they are crucial to many applications, among which 3D object detection is an important task [22,23,24,25,26]. However, objects without spatial volume, such as road signs on the ground, cannot be detected from the point cloud geometry. Therefore, LiDAR intensity can be a valuable information source.

2.1. LiDAR Intensity

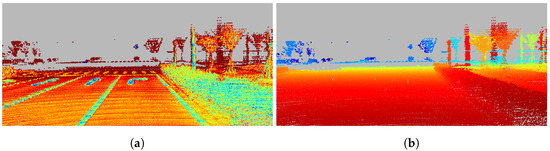

LiDAR intensity measurements have implicit correlations with depth measurements, as shown in Figure 2. Moreover, LiDAR intensity is consistently available even in some adverse conditions, since LiDAR sensors are robust to lighting variation [27]. Therefore, intensity measurements are a potential information source. Nonetheless, intensity measurements depend on multiple factors and are therefore difficult to model. If the observed surface is assumed not to contain mixed microstructures and to conform to the Lambertian reflectance assumption [20], the factors affecting the intensity measured by LiDAR consist of three main parts: the surface reflectance, the strength of the incident ray, and the incidence angle.

Figure 2.

Densified intensity and depth maps obtained from multiple frames: (a) densified intensity; (b) densified depth.

Following the Lambertian assumption, researchers have proposed corresponding theoretical methods to correct geometric effects [28,29,30]. However, the parameters of these theoretical models are difficult to compute accurately, and the models are hard to apply at short distances (e.g., 10 m) [27]. Hence, Höfle and Pfeifer adopted an empirical quadratic function of the depth to correct intensity [31]. Moreover, refs. [28,32] normalized the intensity so that it depends only on surface reflectivity. The limitation is that these methods require dense depth maps, which prevents their adoption for sparse scanners such as vehicle-mounted LiDAR.

2.2. Sparse to Dense

Intensity carries much valuable information, so some works have used it to accomplish various tasks [1]. Ref. [1] presents several examples: ref. [33] detected damage and degradation of concrete structures, and ref. [34] detected road markings and maintenance holes. However, most of these works rely on dense intensity maps, which a LiDAR mounted on a moving car usually cannot provide directly.

To obtain dense intensity maps, two kinds of approaches have been proposed: hardware-based and algorithm-based methods. Hardware-based methods ensure that the laser traverses the entire area by adding a complex rotating mechanism [12]. Algorithm-based methods use the correlation between discrete sample points to estimate the missing information. Asvadi [35] proposed using Delaunay triangulation to interpolate the missing intensity. Melotti [36] generated dense depth and intensity maps with a bilateral filter and used them as input to a CNN for pedestrian classification. These methods interpolate the missing data from neighboring measurements, which requires sufficient information near each interpolated point. This requirement limits their application to sparser structures.

Algorithm-based LiDAR intensity completion methods are similar to the methods used for depth completion [17,18,37,38]. These depth completion methods employ hand-crafted features or kernels to complete the missing values. Most of them are designed explicitly for Kinect sensors, which use a different sensing technique from LiDAR and inherently provide denser depth maps. Recently, learning-based approaches have shown promising performance thanks to rapid advances in deep learning. Uhrig [19] proposed enhancing sparse LiDAR depth measurements via a sparsity-invariant convolution layer. Moreover, Eldesokey [39] modeled confidence propagation through the layers to reduce the number of model parameters.

The works mentioned above accomplish depth completion using depth information only. However, it was soon found that information from other modalities, e.g., color images, can significantly improve performance [14]. Recently, more network designs have been explored to harness the power of deep neural networks [15,16,40,41,42,43]. Nevertheless, none of these methods can be applied directly to LiDAR intensity completion. Compared with depth, LiDAR intensity is more complicated, since it is determined by the incidence angle, surface reflectance, and distance from the sensor, which makes it difficult for the network to grasp the essence with limited training data.

3. Method

This section first introduces the proposed intensity fusion method, which generates densified intensity ground truth because the raw LiDAR measurements are too sparse. It then introduces LiDAR-Net, a novel supervised neural network that completes depth and intensity simultaneously.

3.1. Intensity Fusion for Ground-Truth Generation

Since the LiDAR sensor moves, each data frame is obtained from a different view. The challenge is that raw intensity describes the strength of the reflected pulse, which is inconsistent across views: the same position yields different intensity values at different measurement distances or angles. Hence, a dense intensity map cannot be generated directly by merging adjacent frames.

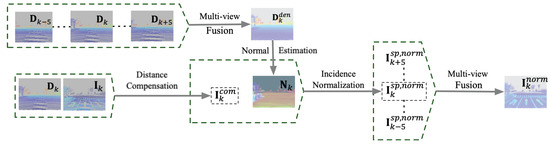

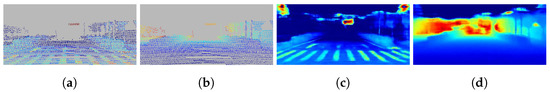

As mentioned in Section 2, the intensity values are mainly related to the distance traveled by light, the surface reflectivity, and the incident angle. Only the reflectance of the object surface is consistent across views. Therefore, the effects of distance and incident angle must be eliminated before multi-view data are fused. As shown in Figure 3 and Figure 4, the intensity fusion method obtains the intensity ground truth in four main steps: distance compensation, incidence normalization, multi-view fusion, and inverse reproduction.

Figure 3.

Distance compensation, incidence normalization, and multi-view fusion. The pixels on the image are enlarged five times for better visualization. The raw intensity measurement is compensated by the corresponding depth map and merged by poses to obtain . The incident angle is obtained from the surface normals of the fused depth map to avoid the errors caused by normal estimation in the sparse point cloud. The multiple normalized intensity maps are fused to obtain a densified one.

Figure 4.

Inverse reproduction. The densified is inverted to produce denser artificial intensity maps. This process is the inverse of the above steps, except for the multi-view fusion.

Before introducing the intensity fusion method, we define some notation. The depth and intensity maps obtained at sampling time k by projecting LiDAR sensor data are denoted by and , where .

3.1.1. Distance Compensation

The compensated intensity map is computed from the raw intensity map through

where is a map position vector, and is a distance-aware compensation term defined by

where K, , and are the intrinsic parameters of each laser beam after the official calibration [44]. The compensated intensity values are thereby nearly independent of depth.

3.1.2. Incidence Normalization

The incidence normalization step normalizes to obtain a normalized intensity map that is independent of the incident angle. First, all surfaces are assumed to follow the Lambertian cosine law [20]. Under this assumption, the compensated intensity can be normalized into a sparse normalized intensity by:

where v is the incident angle corresponding to the position , and is a constant coefficient that allows the normalized intensity to be distributed between 0 and 255. In the implementation, a data-driven parameter estimation method [32] is applied to estimate the parameters and obtain the optimal parameter value in Equation (3).
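The normalization step can be illustrated with a minimal sketch. It assumes the simplest Lambertian form, in which the normalized value is β·I_C/cos v clipped to [0, 255]; the exact form of Equation (3) and the parameters estimated with [32] are not reproduced here. The function name, the `min_cos` clamp for grazing angles, and the use of `None` for missing pixels are illustrative choices.

```python
def normalize_intensity(compensated, cos_incidence, beta, min_cos=0.1):
    """Normalize a sparse compensated intensity map for the incidence angle.

    Assumption: I_N = beta * I_C / cos(v), clipped to the 8-bit range.
    Pixels that are None in either input stay None in the output.
    """
    normalized = []
    for row_i, row_c in zip(compensated, cos_incidence):
        out_row = []
        for i_c, cos_v in zip(row_i, row_c):
            if i_c is None or cos_v is None:
                out_row.append(None)
                continue
            # Clamp grazing angles so the division does not explode.
            cos_v = max(abs(cos_v), min_cos)
            value = beta * i_c / cos_v
            # Keep the result between 0 and 255, as described in the text.
            out_row.append(min(max(value, 0.0), 255.0))
        normalized.append(out_row)
    return normalized
```

The clamp is a design choice of this sketch: near-grazing returns would otherwise be amplified without bound, although the text does not say how such pixels are treated.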

The only missing parameter is the incident angle v, which can be calculated from the depth map. However, LiDAR sensors provide only sparse depth measurements, which do not allow accurate surface normal estimation. Hence, the previous and subsequent LiDAR data frames are reprojected onto the current frame to obtain a denser depth map ,

where denotes the transformation between the k-th and the j-th frame, and is the projection model to obtain the map position and the corresponding depth value. Thus, with ground-truth poses, a densified depth map is collected by using multiple frames. In the implementation, 11 frames are projected onto the current frame according to the known transformation matrix.
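The multi-frame reprojection can be sketched as follows. The text does not specify the projection model, so this sketch assumes a spherical range-image projection; the image size, the vertical field of view, and all function names are hypothetical. Each neighboring frame is given as a 4×4 pose into the current frame plus its points, and the nearest depth is kept when several points land on the same pixel.

```python
import math


def transform(pose, point):
    """Apply a 4x4 homogeneous pose (row-major nested lists) to a 3D point."""
    x, y, z = point
    return tuple(pose[r][0] * x + pose[r][1] * y + pose[r][2] * z + pose[r][3]
                 for r in range(3))


def project_spherical(point, height, width, fov_up_deg, fov_down_deg):
    """Hypothetical range-image projection; returns (row, col, depth) or None."""
    x, y, z = point
    depth = math.sqrt(x * x + y * y + z * z)
    if depth == 0.0:
        return None
    azimuth = math.atan2(y, x)
    elevation = math.asin(z / depth)
    fov_up, fov_down = math.radians(fov_up_deg), math.radians(fov_down_deg)
    if not fov_down <= elevation <= fov_up:
        return None  # outside the vertical field of view
    col = int((azimuth + math.pi) / (2.0 * math.pi) * width)
    row = int((fov_up - elevation) / (fov_up - fov_down) * height)
    return min(row, height - 1), min(col, width - 1), depth


def densify_depth(frames, height=64, width=360, fov_up_deg=3.0, fov_down_deg=-25.0):
    """Accumulate points from several frames into one depth map of the current frame.

    frames: list of (pose_to_current, points). The nearest depth wins per pixel.
    """
    depth_map = [[None] * width for _ in range(height)]
    for pose, points in frames:
        for p in points:
            hit = project_spherical(transform(pose, p), height, width,
                                    fov_up_deg, fov_down_deg)
            if hit is None:
                continue
            row, col, depth = hit
            current = depth_map[row][col]
            if current is None or depth < current:
                depth_map[row][col] = depth
    return depth_map
```

Keeping the minimum depth (a z-buffer) is one reasonable way to resolve occlusions when 11 frames are accumulated; averaging colliding points would instead blend foreground and background surfaces.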

With the densified depth map, the surface normal will be estimated so that can be computed by

where is the incidence light direction, and is the surface normal. With , the normalization can be used to recover from .
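Equation (5) relates the incident angle to the incidence light direction and the surface normal. A minimal sketch, assuming the normal is estimated from the cross product of the vectors to a point's right and lower neighbors in the densified, organized point grid (the text does not state which normal estimator is used), and taking the light direction as the ray from the sensor origin to the point. All function names are illustrative.

```python
import math


def sub(a, b):
    return tuple(x - y for x, y in zip(a, b))


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def norm(a):
    return math.sqrt(dot(a, a))


def incidence_cosine(point, right_neighbor, lower_neighbor, sensor_origin=(0.0, 0.0, 0.0)):
    """cos(v) between the incoming ray and a normal estimated from two grid neighbors."""
    normal = cross(sub(right_neighbor, point), sub(lower_neighbor, point))
    ray = sub(point, sensor_origin)  # incidence light direction
    denom = norm(ray) * norm(normal)
    if denom == 0.0:
        return None  # degenerate neighborhood: the angle is undefined
    # The orientation of the estimated normal is ambiguous, so take |cos|.
    return abs(dot(ray, normal)) / denom
```

For a ground point 10 m ahead and 2 m below the sensor, the ray is nearly parallel to the road, so the cosine is small (about 0.196), which is exactly the case where normalization changes the intensity most.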

3.1.3. Multi-View Fusion

Since is independent of the depth and incidence angle, a denser can be acquired by reprojecting the multi-view normalized intensity maps, similar to the depth map reprojection described in the incidence normalization step. The missing values are filled by

where .
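The fill rule itself is not reproduced in the text. Purely as an illustration, the sketch below assumes that a missing pixel takes the mean of the values provided by the reprojected neighboring normalized maps, while pixels already observed in the current frame are kept unchanged; the function name and the averaging rule are hypothetical.

```python
def fuse_normalized(current, reprojected_maps):
    """Fill missing pixels (None) of the current normalized intensity map.

    Assumption: a missing pixel takes the mean of the values that the
    reprojected neighboring frames provide there; observed pixels are kept.
    """
    fused = []
    for r, row in enumerate(current):
        out_row = []
        for c, value in enumerate(row):
            if value is not None:
                out_row.append(value)
                continue
            candidates = [m[r][c] for m in reprojected_maps if m[r][c] is not None]
            out_row.append(sum(candidates) / len(candidates) if candidates else None)
        fused.append(out_row)
    return fused
```

Averaging is reasonable here only because the normalized values are meant to depend on surface reflectance alone; the same rule applied to raw intensity would mix view-dependent values.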

3.1.4. Inverse Reproduction

This operation consists of inverse normalization and inverse compensation. These two steps can be considered the inverse versions of incidence normalization and distance compensation, applied to dense data. In this operation, we invert the densified to generate the artificial raw intensity according to

where and were obtained from the previous steps. An example is shown in Figure 4.
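Inverse reproduction can be sketched under two stated assumptions: normalization has the form I_N = β·I_C/cos v, and distance compensation is multiplicative, I_C = g(d)·I_raw, with the per-pixel factor g(d) precomputed from the dense depth. Neither form is confirmed by the text, which does not reproduce Equations (1), (3), or (7), and the names below are hypothetical.

```python
def inverse_reproduce(normalized, cos_incidence, beta, compensation):
    """Turn a dense normalized map back into an artificial raw intensity map.

    Assumes I_N = beta * I_C / cos(v) and I_C = g(d) * I_raw, hence
    I_raw = I_N * cos(v) / (beta * g(d)); 'compensation' holds g(d) per pixel.
    """
    artificial = []
    for row_n, row_cos, row_g in zip(normalized, cos_incidence, compensation):
        out_row = []
        for i_n, cos_v, g in zip(row_n, row_cos, row_g):
            if i_n is None or cos_v is None or g is None or g == 0.0:
                out_row.append(None)
            else:
                # Undo incidence normalization, then undo distance compensation.
                out_row.append(i_n * abs(cos_v) / (beta * g))
        artificial.append(out_row)
    return artificial
```

Because the dense normalized map, dense depth, and dense normals are all available at this stage, the inversion can be evaluated at pixels the sensor never observed, which is what makes the artificial map denser than the raw one.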

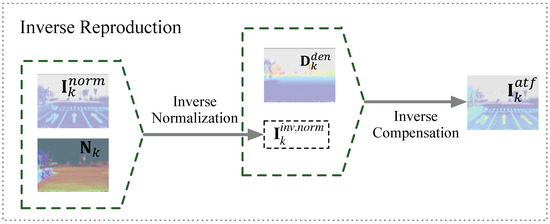

3.2. LiDAR-Net

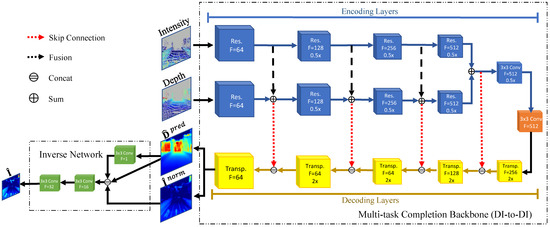

As shown in Figure 5, the LiDAR-Net, including the multi-task completion backbone and the inverse normalization parts, completes the intensity using intensity and depth measurements simultaneously.

Figure 5.

LiDAR-Net architecture. The pixels on the input are enlarged five times for better visualization. The outputs and of the backbone are fed into the inverse normalization network, as shown in the upper left corner. Because it is difficult to estimate the normal quickly using , the inverse normalization network attempts to fit the inverse normalization process shown in Equation (7) with several convolution layers and predicts the dense supervised by .

The multi-task completion backbone consists of a contracting path (encoding layers) that captures the shared context and a symmetric expanding path (decoding layers) that enables precise prediction [45]. In the contracting path, we fuse the features extracted from depth and intensity to combine geometric and reflectivity information. Meanwhile, high-resolution features from the contracting path are combined with the upsampled output, which allows the network to give a more precise prediction. Since the material-related is more consistent across views, it is used together with the depth map to train the backbone.

In the inverse network, a successive network fuses the backbone's outputs to provide a dense intensity map. This network is supervised by the artificial intensity . Finally, LiDAR-Net predicts first and then .

3.2.1. Architecture

The densified depth and are the ground truth for the multi-task completion backbone shown in Figure 5. The feature extraction (encoding) layers of the network are highlighted in blue. Both the intensity and depth encoders consist of a series of ResNet blocks [46]. At each encoder layer, the depth and intensity features are summed, and the sum is concatenated with the corresponding decoder layer. The last component of the encoding structure, a convolution layer, further downsizes the feature resolution. In the intermediate layer, a convolution shown in orange has a kernel size of 3-by-3. The decoding layers, highlighted in yellow, consist of five transposed convolutions that upsample the spatial resolution and combine the information from both the intensity and depth encoders.
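The skip-connection rule described above (sum the depth and intensity encoder features of a level, then concatenate the sum with the decoder features of the same resolution) can be illustrated without a deep learning framework. In this sketch, feature maps are plain nested lists and the channel order of the concatenation is an assumption; a framework implementation would typically use tensors and, for example, `torch.cat` along the channel dimension.

```python
def add_maps(a, b):
    """Element-wise sum of two equally sized 2D feature maps."""
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


def fuse_skip(depth_feats, intensity_feats, decoder_feats):
    """Skip connection: sum depth and intensity encoder features, then concatenate.

    Each argument is a list of channels; each channel is a 2D list with the same
    spatial size. Returns the channel list fed to the next decoder layer.
    """
    if len(depth_feats) != len(intensity_feats):
        raise ValueError("depth and intensity encoders must have equal channel counts")
    summed = [add_maps(d, i) for d, i in zip(depth_feats, intensity_feats)]
    # Channel-wise concatenation: decoder channels first, fused encoder channels after.
    return decoder_feats + summed
```

Summing before concatenating keeps the skip branch at the channel width of a single encoder, so the decoder input does not grow when the second modality is added.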

Since it is difficult to estimate the normal quickly without guidance, an inverse normalization network with several convolutional layers is used to fit the inverse process and predict the dense supervised by . Therefore, after backbone processing, and are fed into the inverse normalization network, as shown in the upper left corner of Figure 5.

In LiDAR-Net, all convolutions except those in the last layer are followed by batch normalization [47] and ReLU.

3.2.2. Training

The training is divided into two steps. First, and its corresponding dense are used to supervise the multi-task completion backbone. After convergence, the entire LiDAR-Net, including the multi-task completion backbone and the inverse network, is supervised by , , and to obtain the optimal intensity prediction. The difference between the network input and output is penalized on the set of pixels where sparse depth is available. The depth loss is defined as

Similarly, for normalized intensity maps, the loss over all available data can be defined as

In summary, the overall loss function minimized in the first training step contains two terms that supervise and , and is defined by

where is a weighting coefficient, which is set to .

In the second step, the proposed network can simultaneously complete the depth, the material-related , and the theoretical dense intensity value of the current frame . The loss for final intensity completion is defined as

In this step, the overall loss function contains three terms to supervise , , and , and is defined by

where and are weighting coefficients and are set to and in the experiments, respectively.
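The two training steps can be sketched with a masked loss and a weighted sum of task losses. The sketch assumes a squared-error penalty averaged over pixels that carry a target value; the paper's exact loss forms are not reproduced here, and the weights passed in are placeholders because their values are elided in this text. The function names are hypothetical.

```python
def masked_mse(prediction, target):
    """Mean squared error over pixels where the (sparse or densified) target exists."""
    errors = [(p - t) ** 2
              for row_p, row_t in zip(prediction, target)
              for p, t in zip(row_p, row_t)
              if t is not None]
    return sum(errors) / len(errors) if errors else 0.0


def weighted_loss(terms, weights):
    """Weighted sum of per-task losses, keyed by task name."""
    return sum(weights[name] * value for name, value in terms.items())
```

For the first step, one would combine a depth term and a normalized-intensity term, e.g. `weighted_loss({"depth": l_d, "normalized": l_n}, {"depth": 1.0, "normalized": lam})`; the second step adds an `"artificial"` term for the inverse network's output with its own weight.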

4. Experiments

In this section, the quality of the generated ground truth in the dataset is evaluated first. Then, the proposed LiDAR-Net is evaluated in detail. Since there is no open-source method designed for LiDAR intensity completion, nor one that uses depth and intensity information simultaneously for completion, we first compare against depth completion methods and then design ablation experiments to verify the effectiveness and accuracy of the proposed algorithm. To further illustrate the effectiveness of intensity–depth fusion, we design a comparison experiment with a state-of-the-art depth completion algorithm that utilizes only depth information. Finally, an off-the-shelf vision technique is applied to the dense intensity maps obtained from the proposed network to find the lanes on the road. This experiment verifies that LiDAR intensity completion can bridge the gap between vision techniques and LiDAR sensors.

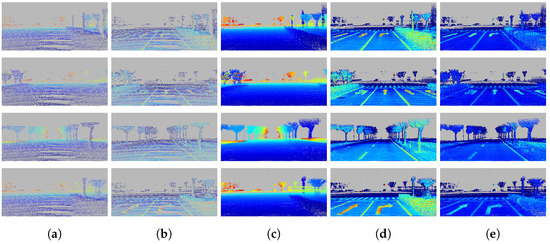

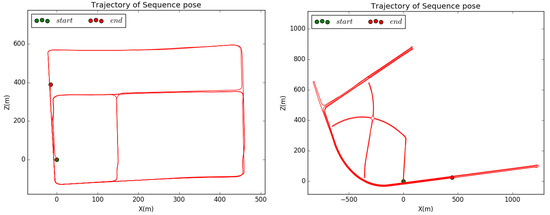

Since none of the existing datasets provide dense depth and intensity training data for completion, we use the proposed intensity fusion method to create a dataset with ground truth for training and validation. As shown in Figure 6, there are two scenes. A vehicle carrying a Velodyne HDL64 LiDAR collected data in two 500 × 500 areas, as shown in Figure 7, at a speed of about 40 km/h. A Novatel Global Navigation Satellite System (GNSS) receiver provided accurate pose information.

Figure 6.

Overview of the dataset. Four different instances are demonstrated. Each pixel in (a,b) is enlarged five times for better visualization. Colder colors in the depth map indicate greater distances, while colder colors in the intensity map indicate weaker intensities. The dataset consists of two inputs: sparse depth and sparse intensity. It has three ground-truth values: densified depth, artificial intensity , and normalized intensity . eliminates the influence of distance and the incidence angle, resulting in sharper edges between different materials and better consistency within the same material: (a) sparse input depth ; (b) sparse input intensity ; (c) densified depth ; (d) artificial intensity ; (e) normalized intensity .

Figure 7.

Trajectories of the two scenes in the dataset.

Our dataset takes the sparse depth and the measured raw intensity as input, and the densified depth maps, , , and as the ground truth. The train/validation/test sets contain 8647/1730/300 frames, and each frame is scaled from to . Moreover, to demonstrate the generality of the method, another dataset with more dynamic scenes was generated in the same way in a different city; its train/validation/test sets contain 9340/1037/697 frames scaled to . Point clouds with intensity and depth from 11 frames are accumulated, as mentioned in Section 3.1, to increase density.

In the implementation, LiDAR-Net was trained using the Adam [48] optimizer with an initial learning rate of for 40 epochs with a batch size of 8. The learning rate was reduced to every ten epochs, and the weights and were set to and . We used four Nvidia GTX 1080Ti GPUs with 11 GB of memory each, and training LiDAR-Net took roughly 16 h.

For each network, we tuned the parameters as carefully as possible and recorded the optimal values. The error metrics of the KITTI depth completion benchmark, namely the root mean square error (RMSE) and the mean absolute error (MAE), were used for evaluation.
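The two metrics can be computed as below, following the KITTI convention that only pixels with a ground-truth value are evaluated. This is a minimal sketch in which `None` marks pixels without ground truth; the function name is illustrative.

```python
import math


def completion_metrics(prediction, ground_truth):
    """RMSE and MAE over pixels that carry a ground-truth value (None = no value)."""
    diffs = [p - g
             for row_p, row_g in zip(prediction, ground_truth)
             for p, g in zip(row_p, row_g)
             if g is not None]
    if not diffs:
        raise ValueError("no valid ground-truth pixels")
    rmse = math.sqrt(sum(d * d for d in diffs) / len(diffs))
    mae = sum(abs(d) for d in diffs) / len(diffs)
    return rmse, mae
```

Because RMSE squares the errors, a few badly completed pixels raise it more than MAE; conversely, both metrics are dominated by large uniform regions such as the road surface, which is consistent with the small metric gaps discussed in Section 4.2.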

4.1. Evaluation of Intensity Ground Truth

To evaluate the quality of the obtained ground truth, we analyzed the effect of each step in the intensity fusion method, first qualitatively, to provide visual intuition, and then quantitatively, to strengthen credibility.

The essential part of the proposed intensity fusion method is to obtain consistent intensity information from different viewing angles and different distances. Therefore, the intensity distribution of the same material in the normalized intensity maps should show consistency. After the inverse reproduction, the artificial intensity maps should have the same distribution as the raw intensity maps.

4.1.1. Consistency in Normalized Intensity Maps

As mentioned in Section 3.1, we sequentially used Equations (1) and (3) to compensate and normalize the raw intensity map of each frame before fusion. Since the obtained should depend only on material reflectivity, its values should be more consistent within the same material.

As shown in Figure 8, the arrows on the road surface show that the normalized intensity of the same material is more robust to variations in the distance and incidence angle than . Moreover, the of the shrub remains consistent even when the incidence angle changes drastically. Furthermore, shows an advantage in distinguishing between different materials, such as the lane and the road.

Figure 8.

Two types of intensity ground truth (enhanced for visualization): (a) artificial intensity ; (b) normalized intensity . The boundaries of different materials of are much more distinguishable. In addition, the intensity of the same material shows consistency in .

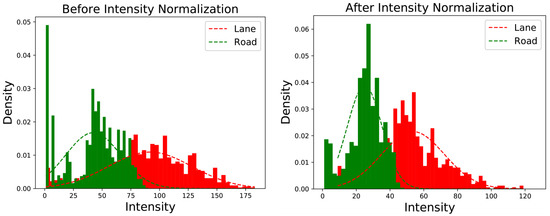

As shown in Figure 9, the histogram indicates that after normalization, the intensity distribution of the same object is more concentrated, which implies a higher consistency after incidence normalization.

Figure 9.

Histogram statistics before (left) and after (right) the incidence normalization. The red and green columns represent the statistical results of the lane and the road areas, respectively. Gaussian curves were used to fit their mathematical distributions. The smaller overlapping area and the sharper distribution indicate a better result after normalization.

4.1.2. Quality of Artificial Intensity Maps

is the artificial intensity map that would theoretically be collected for the current frame, so and should follow similar distributions and material discrimination. Since it is difficult to compare them visually, we used two quantitative measures to evaluate the similarity between the artificial intensity and the original raw intensity. In each evaluation, the road surface areas and the lane areas were manually selected for computing the distributions.

If an object's intensity distribution is assumed to be Gaussian, the indicator proposed in [32] can be employed to assess the similarity of the intensity distributions of the same object V in different intensity maps:

where and denote the mean and standard deviation of the selected map regions, and is the intensity map under evaluation. As shown in Table 1, the between and is almost equal to one, which indicates that the results preserve the same intensity distribution pattern for the same object.

Table 1.

Intensity consistency of the same object. The closer the value is to 1, the greater the similarity of the same object’s intensity distribution in two intensity maps.

To show that the artificial intensity retains the distribution differences between different objects, the overlapping coefficient [49], defined below, can be used to measure the similarity between the Gaussian distributions of different objects,

where i is the intersection between two probability density functions, and represents the cumulative distribution functions. Therefore, the smaller the value, the higher the discrimination between different materials will be. As shown in Table 2, the of and are almost equal to each other. The result indicates that, for different objects, and have similar distributions, implying that the value of is a reasonable synthetic measurement.
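For two fitted Gaussians, the overlapping coefficient is the area under the minimum of the two densities. The sketch below evaluates it in closed form by locating the points where the densities intersect and summing CDF differences between them. This generalizes the single-intersection form described above to unequal standard deviations, where two intersections exist. Function names are illustrative.

```python
import math


def normal_pdf(x, mu, sigma):
    return math.exp(-0.5 * ((x - mu) / sigma) ** 2) / (sigma * math.sqrt(2.0 * math.pi))


def normal_cdf(x, mu, sigma):
    return 0.5 * (1.0 + math.erf((x - mu) / (sigma * math.sqrt(2.0))))


def pdf_intersections(mu1, s1, mu2, s2, eps=1e-12):
    """Points where two Gaussian densities are equal (roots of a quadratic in x)."""
    a = 1.0 / (2 * s2 ** 2) - 1.0 / (2 * s1 ** 2)
    b = mu1 / s1 ** 2 - mu2 / s2 ** 2
    c = mu2 ** 2 / (2 * s2 ** 2) - mu1 ** 2 / (2 * s1 ** 2) + math.log(s2 / s1)
    if abs(a) < eps:  # equal standard deviations: at most one crossing
        return [] if abs(b) < eps else [-c / b]
    root = math.sqrt(max(b * b - 4 * a * c, 0.0))
    return sorted([(-b - root) / (2 * a), (-b + root) / (2 * a)])


def overlap_coefficient(mu1, s1, mu2, s2):
    """OVL: integral of min(f1, f2), summed with CDFs between the intersections."""
    cuts = pdf_intersections(mu1, s1, mu2, s2)
    if not cuts:
        return 1.0  # identical distributions
    bounds = [-math.inf] + cuts + [math.inf]
    total = 0.0
    for lo, hi in zip(bounds, bounds[1:]):
        # Any interior point tells which density is the lower one on this interval.
        if lo == -math.inf:
            t = hi - 1.0
        elif hi == math.inf:
            t = lo + 1.0
        else:
            t = 0.5 * (lo + hi)
        mu, s = (mu1, s1) if normal_pdf(t, mu1, s1) <= normal_pdf(t, mu2, s2) else (mu2, s2)
        total += normal_cdf(hi, mu, s) - normal_cdf(lo, mu, s)
    return total
```

For lane and road regions, the means and standard deviations would come from the Gaussian fits of the selected areas; a smaller returned value indicates better separation between the two materials.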

Table 2.

Intensity consistency of different objects. Similar values indicate that the relative intensity distributions of different objects in the two images are similar.

4.2. Comparison of Intensity Completion

Most methods focus only on LiDAR depth completion, and no LiDAR intensity completion methods with open-source code are available. Therefore, several LiDAR depth completion methods that work on a single input type are evaluated on the LiDAR intensity dataset, and their intensity completion results are used in the comparison experiment.

For comparison, results were obtained using the following state-of-the-art methods: Sparse-to-dense [15], SparseConvs [19], nConv-CNN [39], and pNCNN [50]. In addition, the reported results of IP-Basic [51] are also included in the comparison. Sparse-to-dense, SparseConvs, nConv-CNN, and pNCNN represent the most advanced methods using learning-based techniques, while IP-Basic leverages non-learning models.

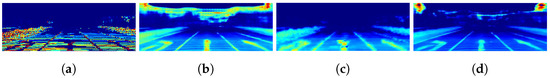

As shown in Figure 10, on the proposed dataset, LiDAR-Net can use sparse input to provide dense results. The completion results are satisfactory, except in areas where the original information is missing. A qualitative comparison is shown in Figure 11. IP-Basic almost fails in Scene 2, and the sparse-to-dense method cannot provide satisfactory results. For pNCNN, although the prediction around the corner is correct, the edges of the lanes on the ground are blurry. Hence, the proposed method outperforms the others in the completion task while preserving sharper textures.

Figure 10.

Input (a,b) and output (c,d). The proposed completion system takes the sparse depth and intensity from a LiDAR sensor as input (row 1) to obtain the dense completion result (row 2). Each pixel in (a,b) is enlarged five times to increase the visualization: (a) sparse input intensity; (b) sparse input depth; (c) intensity completion; (d) depth completion.

Figure 11.

Comparison of intensity completion. Colder colors in the intensity map indicate weaker intensities: (a) IP-Basic [51]; (b) sparse-to-dense [15]; (c) pNCNN [50]; (d) ours.

The quantitative results are shown in Table 3. If a method cannot complete training, its results are marked with an 'x'. The results indicate that the proposed method achieves the best performance. One reason is that most methods are dedicated to the KITTI completion dataset, in which the input depth maps are denser. In contrast, our dataset provides sparser data, since many regions are beyond the range of the LiDAR sensor in the spacious road environment. This sparsity makes the completion task more challenging. Moreover, the spatial characteristics of depth maps that some methods rely on do not hold for the intensity completion task. As shown in the table, the performance of the learning-based methods is close. A possible reason is that regions such as lane lines account for a small percentage of the overall data. Therefore, good completion of these areas does not affect the metrics much, even though the completion results of the proposed method are clearly better than those of the other methods in the qualitative comparison.

Table 3.

Comparison of intensity completion accuracy. The results from state-of-the-art completion algorithms are shown in the bottom part. The best results are shown in bold; ‘x’ denotes a failure.

4.3. Completion Ablation Experiments

To validate the architecture of the proposed method, we conduct two ablation experiments in this section, which evaluate the effectiveness of intensity–depth fusion and the necessity of incidence normalization. The first experiment verifies the complementarity between depth and intensity; the second confirms the benefit of the inverse network with additional supervision.

4.3.1. Effectiveness of Intensity–Depth Fusion

Unlike depth completion schemes, the proposed method jointly uses the depth and intensity data from a single LiDAR to achieve intensity completion. To verify the complementarity of depth and intensity, the model in [15], which is also a U-shaped network but uses only intensity information, serves as the single-input baseline.
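To make the difference between the single-input baseline and the fused input concrete, the sketch below builds both from sparse LiDAR images. It is not the LiDAR-Net preprocessing: it assumes that pixels without a return hold 0, that depth and intensity are scaled by hypothetical constants (`max_depth=100.0` m and `max_intensity=255.0`, a common 8-bit intensity range), and that a binary validity mask is appended as an extra channel. The function name `build_inputs` is likewise hypothetical.

```python
import numpy as np

def build_inputs(sparse_depth, sparse_intensity, max_depth=100.0, max_intensity=255.0):
    """Return (only_intensity, fused) network inputs from sparse LiDAR images.

    Pixels without a LiDAR return are assumed to hold 0 depth.
    only_intensity: (1, H, W) -- input of a single-input baseline
    fused:          (3, H, W) -- depth, intensity and a validity mask
    """
    valid = (sparse_depth > 0).astype(np.float32)
    depth = np.clip(sparse_depth / max_depth, 0.0, 1.0) * valid
    intensity = np.clip(sparse_intensity / max_intensity, 0.0, 1.0) * valid
    only_intensity = intensity[None].astype(np.float32)
    fused = np.stack([depth, intensity, valid]).astype(np.float32)
    return only_intensity, fused

# Synthetic sparse scan: roughly 5% of the pixels carry a return.
rng = np.random.default_rng(0)
H, W = 16, 64
hit = rng.random((H, W)) < 0.05
depth = np.where(hit, rng.uniform(1.0, 80.0, (H, W)), 0.0)
inten = np.where(hit, rng.uniform(0.0, 255.0, (H, W)), 0.0)
only_i, fused = build_inputs(depth, inten)
print(only_i.shape, fused.shape)  # (1, 16, 64) (3, 16, 64)
```

The only difference between the two inputs is the extra geometric channel (plus the mask), so any gain of the fused model over the single-input one can be attributed to the depth information, which is the comparison this ablation is designed to make.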

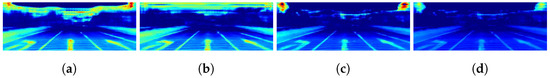

As depicted in Figure 12a and Table 4, when the only information source is the sparse intensity map, the completion result exhibits ripples, and the intensity of lane markings made of the same material is inconsistent. In contrast, fusing depth improves the consistency of intensity, as shown in Figure 12b. A possible reason is that the geometric information helps suppress the ripple effect. Therefore, the correlation between depth and intensity learned by the network can help achieve better completion, especially in scenes where the intensity values are regularly distributed, such as the road surface. However, adding depth information does not entirely eliminate the ripples, because the ripple pattern is intrinsic to the depth and intensity ground truth, as shown in Figure 8.

Figure 12.

Qualitative analysis of ablation study: (a) the intensity map completed by the single input model supervised by ; (b) the completion result from using only the proposed completion backbone network supervised by and ; (c,d) are and completed by LiDAR-Net supervised by , , and ; (a) from onlyI (); (b) from DI-to-DI (+); (c) from LiDAR-Net (++); (d) from LiDAR-Net (++).

Table 4.

Quantitative analysis of ablation study. The best results are shown in bold. The ablation study indicates that intensity–depth fusion and supervision with normalized intensity can improve the performance.

4.3.2. Effectiveness of Supervision with Normalized Intensity

The LiDAR-Net proposed in Section 3.2 is supervised by at the end of the decoder and by at the end of the inverse network. To verify the effectiveness of the inverse normalization network, we also present the experiment results on DI-to-DI, which is directly supervised by at the end of the decoder but without the inverse normalization network.
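Supervising the network at two points can be summarized as a weighted sum of masked losses. The sketch below is a hypothetical formulation, not the loss defined in Section 3.2: the array names, the use of mean squared error, the equal weights, and the convention that 0 marks pixels without ground truth are all assumptions made for illustration.

```python
import numpy as np

def masked_l2(pred, target, mask):
    """Mean squared error over the pixels that have ground truth (mask == True)."""
    if not mask.any():
        return 0.0
    return float(np.mean((pred[mask] - target[mask]) ** 2))

def two_point_loss(pred_depth, pred_norm, pred_raw, gt_depth, gt_norm, gt_raw,
                   w_depth=1.0, w_norm=1.0, w_raw=1.0):
    """Hypothetical weighted sum of three supervision terms.

    pred_depth, pred_norm: decoder outputs (dense depth, normalized intensity)
    pred_raw:              output of the inverse-normalization branch (raw intensity)
    Ground-truth maps use 0 to mark pixels without a label (assumption).
    """
    return (w_depth * masked_l2(pred_depth, gt_depth, gt_depth > 0)
            + w_norm * masked_l2(pred_norm, gt_norm, gt_norm > 0)
            + w_raw * masked_l2(pred_raw, gt_raw, gt_raw > 0))

# Tiny example: the unlabeled pixel (row 0, column 1) is ignored by every term.
gt_depth = np.array([[10.0, 0.0], [20.0, 30.0]])
gt_norm = np.array([[0.5, 0.0], [0.5, 0.5]])
gt_raw = np.array([[40.0, 0.0], [40.0, 40.0]])
pred_depth = np.array([[11.0, 99.0], [20.0, 30.0]])
pred_norm = np.array([[0.5, 7.0], [0.5, 0.5]])
pred_raw = np.array([[42.0, 5.0], [40.0, 40.0]])
print(two_point_loss(pred_depth, pred_norm, pred_raw, gt_depth, gt_norm, gt_raw))
```

Dropping the normalized-intensity term and comparing the decoder's intensity output directly with the raw-intensity ground truth would roughly correspond to the DI-to-DI variant used for comparison.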

As shown in Table 4, LiDAR-Net further improves the completion performance over the DI-to-DI network with only a few extra parameters.

Figure 12c shows that with the additional supervision from the densified normalized intensity, the ripple effect mentioned above is less evident than in the results of the DI-to-DI network. Meanwhile, as shown in Figure 12d, the predicted normalized intensity is more closely related to materials and more robust to distance and incidence angle. This suggests that the additional material information carried by the normalized intensity enables the network to learn plausible intensity distributions through the correlation between depth and reflectivity, even in ripple areas where no supervision is available.

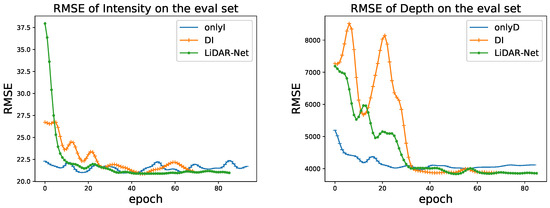

We also find that LiDAR-Net converges much faster than DI-to-DI, as shown in Figure 13. Besides improving completion performance, LiDAR-Net eases convergence and reduces oscillation during training.

Figure 13.

Convergence curves. LiDAR-Net (green) converges faster on intensity (left) than DI-to-DI (yellow) and onlyI (blue), and achieves better depth completion performance (right).

These experiments show that adding the inverse network leads to faster and smoother convergence and that the normalized intensity serves as additional supervision that improves completion performance.

4.4. Comparison of Depth Completion

Since the proposed method is also capable of depth completion, this section compares it with several state-of-the-art depth completion methods [19,39,51] to show the benefit of intensity information. Because the KITTI depth completion benchmark does not provide ground truth for depth and intensity simultaneously, all methods are tested on our dataset.

As shown in the lower part of Table 5, the proposed method, which uses both intensity and depth information, achieves the highest depth completion accuracy in terms of RMSE. This result suggests that depth alone is insufficient to train a network to predict the areas where values are missing: because the input in the proposed dataset is very sparse, the depth-only state-of-the-art methods tend to produce unsatisfactory results. This also explains why many methods rely on RGB cameras to achieve better completion.

Table 5.

Comparison of depth completion accuracy. The results from state-of-the-art depth completion algorithms are shown in the bottom part. The best results are shown in bold. We use ‘i’ and ‘d’ to represent LiDAR intensity and depth, respectively; ‘x’ denotes a failure.

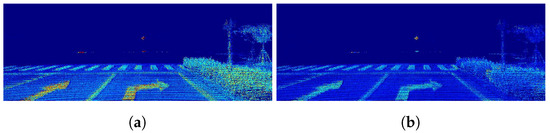

4.5. Lane Segmentation

As discussed in the Introduction, LiDAR is an active sensor that is not affected by ambient light and is therefore robust to dramatic lighting variations in autonomous driving scenarios. With the proposed method, many modern computer vision methods, such as object segmentation, can be applied to LiDAR data. To demonstrate the advantage of dense LiDAR intensity, we compare lane segmentation performance on RGB images and on completed LiDAR intensity maps under normal and low illumination conditions.

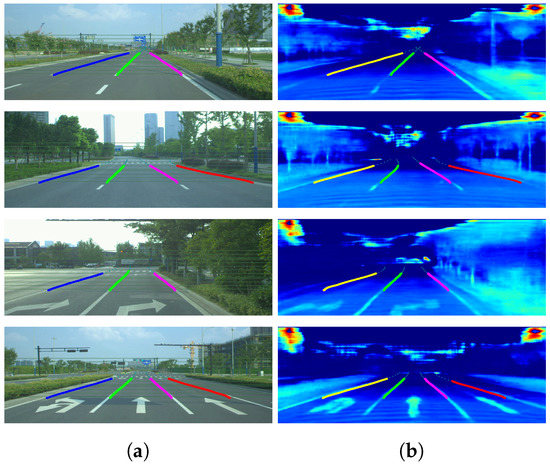

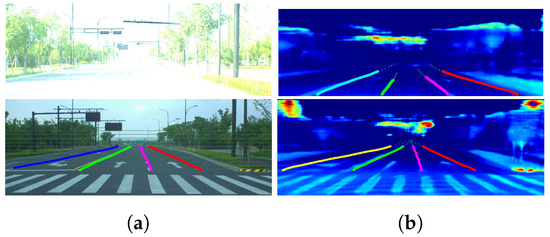

SCNN [52] is used as the segmentation model. Without any modification, it achieves lane segmentation performance on the dense LiDAR intensity comparable to that on RGB images, as shown in Table 6 and Figure 14. Furthermore, as shown in Figure 15, in complex illumination environments the lane lines remain easy to detect in the completed LiDAR intensity maps in both scenarios, whereas the RGB images are unusable under certain conditions.
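Feeding a single-channel intensity map to a detector built for RGB input "without any modification" requires it to look like an 8-bit, three-channel image. The paper does not state how this conversion was done; the sketch below shows one plausible option. The percentile-based contrast stretch and its parameters (`low_pct`, `high_pct`) are hypothetical choices, as is the function name.

```python
import numpy as np

def intensity_to_rgb_like(intensity, low_pct=1.0, high_pct=99.0):
    """Map a dense float intensity map to an (H, W, 3) uint8 image.

    Percentile clipping (an assumption, not taken from the paper) keeps a few very
    bright returns, e.g. from retro-reflective signs, from compressing the range
    occupied by the road surface and lane paint.
    """
    lo, hi = np.percentile(intensity, [low_pct, high_pct])
    scaled = np.clip((intensity - lo) / max(hi - lo, 1e-6), 0.0, 1.0)
    gray = np.round(scaled * 255.0).astype(np.uint8)
    # Replicate the gray channel so that a three-channel network accepts it unchanged.
    return np.repeat(gray[:, :, None], 3, axis=2)

# Usage with a synthetic intensity ramp standing in for a completed intensity map.
dense = np.linspace(0.0, 100.0, 10000).reshape(100, 100)
image = intensity_to_rgb_like(dense)
print(image.shape, image.dtype)
```

Replicating the channel keeps the pretrained first convolution layer usable as is; an alternative would be to retrain that layer for one input channel, which would no longer count as applying the model without alteration.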

Table 6.

Comparison of lane segmentation results. .

Figure 14.

Lane segmentation results in good illumination conditions. Under normal lighting conditions, the completed dense intensity achieves lane segmentation performance comparable to that of RGB images. This shows that traditional vision methods can be applied to LiDAR intensity maps without modification: (a) RGB; (b) intensity.

Figure 15.

Lane segmentation results in complex illumination conditions. The upper and lower rows are the data under two different illumination conditions. The left and right images are the data from a visible light camera and LiDAR, respectively. Different colored lines indicate the segmentation results of different lanes. It shows that the LiDAR intensity maps have great potential for applications under adverse illumination conditions: (a) RGB; (b) intensity.

5. Conclusions

In this paper, we proposed LiDAR-Net, which performs intensity completion jointly from the sparse depth and intensity of LiDAR sensors. LiDAR-Net achieves satisfactory performance in experiments, and intensity–depth fusion improves completion performance. Moreover, a dataset was built for the intensity completion task. In this dataset, the influence of incidence angle and range is removed to obtain more accurate intensity ground truth for training, which ultimately improves the model’s performance. The supplementary experiment demonstrates that the completed intensity maps can be used for lane detection, showing their potential for practical applications. We hope that this validation on lane detection will draw the computer vision community’s interest to LiDAR intensity measurements.

In future work, more general BRDF models, such as the Phong [53], Oren–Nayar [54], or Torrance–Sparrow [55] reflectance models, will replace the Lambertian assumption. Under the Lambertian assumption, the intensity is corrected only for the incidence angle, which may induce the so-called “over-correction effect” [56] at large incidence angles.
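The over-correction effect can be illustrated numerically. The sketch assumes a monostatic geometry (laser and receiver co-located, so the incidence and viewing angles coincide), range effects already removed, and the qualitative Oren–Nayar model as a stand-in for a rough diffuse surface; the roughness value `sigma = 0.3` rad is hypothetical. Dividing the simulated return by cos θ, as an angle-only Lambertian correction does, recovers the true reflectance of a perfectly Lambertian surface (`sigma = 0`) but inflates that of a rough one as θ grows.

```python
import math

def lambertian_correction(intensity, incidence_deg):
    """Angle-only Lambertian correction: I_corr = I / cos(theta)."""
    return intensity / math.cos(math.radians(incidence_deg))

def oren_nayar_monostatic(incidence_deg, sigma):
    """Relative return of a rough diffuse surface seen by a monostatic sensor.

    Qualitative Oren-Nayar model with theta_i = theta_r = theta and equal azimuths,
    so alpha = beta = theta:  f(theta) = cos(theta) * (A + B * sin(theta) * tan(theta)).
    The result is divided by A so that f(0) = 1. `sigma` is the roughness in radians.
    """
    s2 = sigma ** 2
    a = 1.0 - 0.5 * s2 / (s2 + 0.33)
    b = 0.45 * s2 / (s2 + 0.09)
    t = math.radians(incidence_deg)
    return math.cos(t) * (a + b * math.sin(t) * math.tan(t)) / a

# Unit-reflectance surface: smooth (Lambertian) versus rough (hypothetical sigma = 0.3 rad).
for theta in (0, 30, 60, 75, 85):
    smooth = lambertian_correction(oren_nayar_monostatic(theta, 0.0), theta)
    rough = lambertian_correction(oren_nayar_monostatic(theta, 0.3), theta)
    print(f"theta = {theta:2d} deg  corrected smooth = {smooth:.3f}  corrected rough = {rough:.3f}")
```

For the smooth surface the corrected value stays at 1, whereas for the rough surface it keeps rising with the incidence angle and ends well above its true reflectance near grazing incidence; a correction derived from a non-Lambertian BRDF would divide by the matching angular term instead of cos θ.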

On the application side, we will explore the potential of dense LiDAR intensity in other computer vision tasks, such as semantic detection, tracking, and relocalization. Beyond 2D tasks, the dense intensity completion method can also be extended to 3D vision tasks such as LiDAR-based 3D object detection and classification.

Author Contributions

Conceptualization, W.D. and S.C.; methodology, S.C. and Z.H.; software, W.D. and S.C.; validation, W.D. and S.C.; formal analysis, W.D. and S.C.; investigation, W.D. and S.C.; resources, W.D.; data curation, S.C.; writing—original draft preparation, S.C.; writing—review and editing, W.D., S.C., Z.H., Y.X. and D.K.; visualization, S.C.; supervision, W.D.; project administration, W.D.; funding acquisition, W.D. All authors have read and agreed to the published version of the manuscript.

Funding

Zhejiang Provincial Natural Science Foundation of China under Grant No. LQ22F030022, National Key Research and Development Program of China under Grant 2022ZD0208800, the Open Research Project of the State Key Laboratory of Industrial Control Technology, Zhejiang University, China (No. ICT2022B04), Key Laboratory of Brain Machine Collaborative Intelligence Foundation of Zhejiang Province.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Kashani, A.; Olsen, M.; Parrish, C.; Wilson, N. A review of LiDAR radiometric processing: From ad hoc intensity correction to rigorous radiometric calibration. Sensors 2015, 15, 28099–28128.

- Wan, G.; Yang, X.; Cai, R.; Li, H.; Zhou, Y.; Wang, H.; Song, S. Robust and Precise Vehicle Localization Based on Multi-Sensor Fusion in Diverse City Scenes. In Proceedings of the 2018 IEEE International Conference on Robotics and Automation (ICRA), Brisbane, Australia, 21–25 May 2018; pp. 4670–4677.

- Abdelaziz, N.; El-Rabbany, A. An Integrated INS/LiDAR SLAM Navigation System for GNSS-Challenging Environments. Sensors 2022, 22, 4327.

- Chen, X.; Chen, Z.; Liu, G.; Chen, K.; Wang, L.; Xiang, W.; Zhang, R. Railway Overhead Contact System Point Cloud Classification. Sensors 2021, 21, 4961.

- Li, H.; Zhao, S.; Zhao, W.; Zhang, L.; Shen, J. One-Stage Anchor-Free 3D Vehicle Detection from LiDAR Sensors. Sensors 2021, 21, 2651.

- Brkić, I.; Miler, M.; Ševrović, M.; Medak, D. Automatic roadside feature detection based on LiDAR road cross section images. Sensors 2022, 22, 5510.

- Ilg, E.; Mayer, N.; Saikia, T.; Keuper, M.; Dosovitskiy, A.; Brox, T. Flownet 2.0: Evolution of optical flow estimation with deep networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2462–2470.

- Xue, F.; Wang, X.; Yan, Z.; Wang, Q.; Wang, J.; Zha, H. Local supports global: Deep camera relocalization with sequence enhancement. In Proceedings of the IEEE International Conference on Computer Vision, Seoul, Korea, 27 October–2 November 2019; pp. 2841–2850.

- Kim, J.; Park, C. End-to-end ego lane estimation based on sequential transfer learning for self-driving cars. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Honolulu, HI, USA, 21–26 July 2017; pp. 30–38.

- Dong, H.; Anderson, S.; Barfoot, T.D. Two-axis scanning lidar geometric calibration using intensity imagery and distortion mapping. In Proceedings of the 2013 IEEE International Conference on Robotics and Automation, Karlsruhe, Germany, 6–10 May 2013; pp. 3672–3678.

- Anderson, S.; McManus, C.; Dong, H.; Beerepoot, E.; Barfoot, T.D. The gravel pit lidar-intensity imagery dataset. In Technical Report ASRL-2012-ABL001; UTIAS: North York, ON, Canada, 2012.

- Barfoot, T.D.; McManus, C.; Anderson, S.; Dong, H.; Beerepoot, E.; Tong, C.H.; Furgale, P.; Gammell, J.D.; Enright, J. Into darkness: Visual navigation based on a lidar-intensity-image pipeline. In Robotics Research; Springer: Berlin/Heidelberg, Germany, 2016; pp. 487–504.

- Brodu, N.; Lague, D. 3D terrestrial lidar data classification of complex natural scenes using a multi-scale dimensionality criterion: Applications in geomorphology. ISPRS J. Photogramm. Remote Sens. 2012, 68, 121–134.

- Ma, F.; Karaman, S. Sparse-to-dense: Depth prediction from sparse depth samples and a single image. In Proceedings of the 2018 IEEE International Conference on Robotics and Automation (ICRA), Brisbane, Australia, 21–25 May 2018; pp. 1–8.

- Ma, F.; Cavalheiro, G.V.; Karaman, S. Self-supervised sparse-to-dense: Self-supervised depth completion from lidar and monocular camera. In Proceedings of the 2019 International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 20–24 May 2019; pp. 3288–3295.

- Qiu, J.; Cui, Z.; Zhang, Y.; Zhang, X.; Liu, S.; Zeng, B.; Pollefeys, M. Deeplidar: Deep surface normal guided depth prediction for outdoor scene from sparse lidar data and single color image. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–17 June 2019; pp. 3313–3322.

- Chen, B.; Lv, X.; Liu, C.; Jiao, H. SGSNet: A Lightweight Depth Completion Network Based on Secondary Guidance and Spatial Fusion. Sensors 2022, 22, 6414.

- Chen, L.; Li, Q. An Adaptive Fusion Algorithm for Depth Completion. Sensors 2022, 22, 4603.

- Uhrig, J.; Schneider, N.; Schneider, L.; Franke, U.; Brox, T.; Geiger, A. Sparsity invariant cnns. In Proceedings of the 2017 International Conference on 3D Vision (3DV), Qingdao, China, 10–12 October 2017; pp. 11–20.

- Lambert, J.H. Photometria Sive de Mensura et Gradibus Luminis, Colorum et Umbrae; Klett: Stuttgart, Germany, 1760.

- Tatoglu, A.; Pochiraju, K. Point cloud segmentation with LIDAR reflection intensity behavior. In Proceedings of the 2012 IEEE International Conference on Robotics and Automation, St Paul, MN, USA, 14–18 May 2012; pp. 786–790.

- Yin, J.; Shen, J.; Guan, C.; Zhou, D.; Yang, R. Lidar-based online 3d video object detection with graph-based message passing and spatiotemporal transformer attention. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 11495–11504.

- Ou, J.; Huang, P.; Zhou, J.; Zhao, Y.; Lin, L. Automatic Extrinsic Calibration of 3D LIDAR and Multi-Cameras Based on Graph Optimization. Sensors 2022, 22, 2221.

- Meng, Q.; Wang, W.; Zhou, T.; Shen, J.; Jia, Y.; Van Gool, L. Towards a weakly supervised framework for 3d point cloud object detection and annotation. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 44, 4454–4468.

- Meng, Q.; Wang, W.; Zhou, T.; Shen, J.; Van Gool, L.; Dai, D. Weakly supervised 3d object detection from lidar point cloud. In Proceedings of the European Conference on Computer Vision; Springer: Berlin/Heidelberg, Germany, 2020; pp. 515–531.

- Li, F.; Jin, W.; Fan, C.; Zou, L.; Chen, Q.; Li, X.; Jiang, H.; Liu, Y. PSANet: Pyramid splitting and aggregation network for 3D object detection in point cloud. Sensors 2020, 21, 136.

- Kaasalainen, S.; Jaakkola, A.; Kaasalainen, M.; Krooks, A.; Kukko, A. Analysis of incidence angle and distance effects on terrestrial laser scanner intensity: Search for correction methods. Remote Sens. 2011, 3, 2207–2221.

- Sasidharan, S. A Normalization scheme for Terrestrial LiDAR Intensity Data by Range and Incidence Angle. In OSF Preprints; Center for Open Science: Charlottesville, VA, USA, 2018.

- Starek, M.; Luzum, B.; Kumar, R.; Slatton, K. Normalizing lidar intensities. In Geosensing Engineering and Mapping (GEM); University of Florida: Gainesville, FL, USA, 2006.

- Habib, A.F.; Kersting, A.P.; Shaker, A.; Yan, W.Y. Geometric calibration and radiometric correction of LiDAR data and their impact on the quality of derived products. Sensors 2011, 11, 9069–9097.

- Höfle, B.; Pfeifer, N. Correction of laser scanning intensity data: Data and model-driven approaches. ISPRS J. Photogramm. Remote Sens. 2007, 62, 415–433.

- Jutzi, B.; Gross, H. Normalization of LiDAR intensity data based on range and surface incidence angle. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2009, 38, 213–218.

- Masiero, A.; Guarnieri, A.; Pirotti, F.; Vettore, A. Semi-automated detection of surface degradation on bridges based on a level set method. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2015, 40, 15–21.

- Guan, H.; Yu, Y.; Li, J.; Liu, P.; Zhao, H.; Wang, C. Automated extraction of manhole covers using mobile LiDAR data. Remote Sens. Lett. 2014, 5, 1042–1050.

- Asvadi, A.; Garrote, L.; Premebida, C.; Peixoto, P.; Nunes, U.J. Real-time deep convnet-based vehicle detection using 3d-lidar reflection intensity data. In Proceedings of the Iberian Robotics Conference; Springer: Berlin/Heidelberg, Germany, 2017; pp. 475–486.

- Melotti, G.; Premebida, C.; Gonçalves, N.M.d.S.; Nunes, U.J.; Faria, D.R. Multimodal CNN pedestrian classification: A study on combining LIDAR and camera data. In Proceedings of the 2018 21st International Conference on Intelligent Transportation Systems (ITSC), Maui, HI, USA, 4–7 November 2018; pp. 3138–3143.

- Xue, H.; Zhang, S.; Cai, D. Depth image inpainting: Improving low rank matrix completion with low gradient regularization. IEEE Trans. Image Process. 2017, 26, 4311–4320.

- Xu, Y.; Zhu, X.; Shi, J.; Zhang, G.; Bao, H.; Li, H. Depth completion from sparse lidar data with depth-normal constraints. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Korea, 27–28 October 2019; pp. 2811–2820.

- Eldesokey, A.; Felsberg, M.; Khan, F.S. Propagating confidences through cnns for sparse data regression. In Proceedings of the British Machine Vision Conference (BMVC), Newcastle, UK, 3–6 September 2018.

- Huang, Z.; Fan, J.; Cheng, S.; Yi, S.; Wang, X.; Li, H. Hms-net: Hierarchical multi-scale sparsity-invariant network for sparse depth completion. IEEE Trans. Image Process. 2019, 29, 3429–3441.

- Jaritz, M.; De Charette, R.; Wirbel, E.; Perrotton, X.; Nashashibi, F. Sparse and dense data with cnns: Depth completion and semantic segmentation. In Proceedings of the IEEE 2018 International Conference on 3D Vision (3DV), Verona, Italy, 5–8 September 2018; pp. 52–60.

- Shivakumar, S.S.; Nguyen, T.; Chen, S.W.; Taylor, C.J. DFuseNet: Deep Fusion of RGB and Sparse Depth Information for Image Guided Dense Depth Completion. arXiv 2019, arXiv:1902.00761.

- Chodosh, N.; Wang, C.; Lucey, S. Deep convolutional compressed sensing for lidar depth completion. arXiv 2018, arXiv:1803.08949.

- Velodyne LiDAR. HDL-32E User Manual; Velodyne LiDAR Inc.: San Jose, CA, USA, 2015.

- Ronneberger, O.; Fischer, P.; Brox, T. U-net: Convolutional networks for biomedical image segmentation. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention; Springer: Berlin/Heidelberg, Germany, 2015; pp. 234–241.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Ioffe, S.; Szegedy, C. Batch normalization: Accelerating deep network training by reducing internal covariate shift. In Proceedings of the International Conference on Machine Learning. PMLR, Lille, France, 7–9 July 2015; pp. 448–456.

- Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. arXiv 2014, arXiv:1412.6980.

- Inman, H.F.; Bradley Jr, E.L. The overlapping coefficient as a measure of agreement between probability distributions and point estimation of the overlap of two normal densities. Commun. Stat.-Theory Methods 1989, 18, 3851–3874.

- Eldesokey, A.; Felsberg, M.; Holmquist, K.; Persson, M. Uncertainty-aware cnns for depth completion: Uncertainty from beginning to end. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 12014–12023.

- Ku, J.; Harakeh, A.; Waslander, S.L. In defense of classical image processing: Fast depth completion on the cpu. In Proceedings of the IEEE 2018 15th Conference on Computer and Robot Vision (CRV), Toronto, ON, Canada, 8–10 May 2018; pp. 16–22.

- Pan, X.; Shi, J.; Luo, P.; Wang, X.; Tang, X. Spatial as deep: Spatial cnn for traffic scene understanding. In Proceedings of the Thirty-Second AAAI Conference on Artificial Intelligence, New Orleans, LA, USA, 2–7 February 2018.

- Tan, K.; Cheng, X. Specular reflection effects elimination in terrestrial laser scanning intensity data using Phong model. Remote Sens. 2017, 9, 853.

- Carrea, D.; Abellan, A.; Humair, F.; Matasci, B.; Derron, M.H.; Jaboyedoff, M. Correction of terrestrial LiDAR intensity channel using Oren–Nayar reflectance model: An application to lithological differentiation. ISPRS J. Photogramm. Remote Sens. 2016, 113, 17–29.

- Bolkas, D. Terrestrial laser scanner intensity correction for the incidence angle effect on surfaces with different colours and sheens. Int. J. Remote Sens. 2019, 40, 7169–7189.

- Yan, W.Y.; Shaker, A. Radiometric correction and normalization of airborne LiDAR intensity data for improving land-cover classification. IEEE Trans. Geosci. Remote Sens. 2014, 52, 7658–7673.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).