1. Introduction

In recent decades, with the rapid development of remote sensing technology, many types of remote sensing satellites have been launched, including multispectral, hyperspectral and synthetic aperture radar (SAR) satellites [1]. The data from these satellites are used for different purposes, such as national defense, agriculture, land use/land cover mapping, geology and urban change detection [2]. However, owing to technical constraints and the limitations of their imaging mechanisms, the information captured by any single sensor is limited and cannot fully meet the needs of many practical applications. Optical sensors and SARs are typical examples. Optical satellite data are the most suitable for human perception and have been widely used in practice. However, because the storage space of the sensors and the bandwidth available for communicating with ground receiving stations are limited, it is difficult for an optical sensor to capture images with both a high spatial resolution and a high spectral resolution. Many sensors, such as those on Landsat and IKONOS, are therefore designed with one panchromatic band of high spatial resolution and several multispectral bands of lower spatial resolution. Optical imaging also has a serious drawback: it is easily affected by external conditions such as rain, snow, clouds and darkness, which seriously limits the use of optical satellites in practice [3]. In contrast, SARs are active sensors that emit their own microwaves, so they are not affected by weather or imaging time and can observe the earth's surface through rain, snow and clouds, and even at night. Owing to their long wavelengths, SAR signals can even penetrate some surface materials and obtain information on subsurface features [2,4]. The information contained in SAR images mainly concerns the moisture, roughness and terrain of the observed surface. In addition, some materials, such as metals, appear very clearly in the images. All these characteristics make SAR images completely different from visible-light images, and the information they contain complements visible-light data. However, SAR data lack spectral information because a single frequency is used, which makes it quite difficult to interpret SAR images correctly [5]. To make full use of the potential of SAR data and to better understand the study area, a feasible approach is to fuse SAR and optical images from different sensors into a single image that is more informative and reliable [6,7]. The specific goal is to inject as much spectral information from the multispectral images as possible into the SAR images, so as to improve their interpretability.

In general, image fusion can be defined as “the combination of two or more different images to produce a new image with a greater quality and reliability using a certain algorithm” [7,8,9]. Many algorithms have been proposed for the fusion of SAR and optical images [1,7,10]. According to the published literature, the main fusion methods can be roughly classified into four categories: component substitution (CS) methods, multi-resolution decomposition methods, hybrid methods and model-based methods [1,11].

In component substitution methods, the multispectral image is projected into another space to separate its spatial and spectral information, the spatial component is replaced by the SAR image, and an inverse transformation then projects the result back into the original space to complete the fusion. The Intensity-Hue-Saturation (IHS) transform, principal component analysis (PCA) and the Gram–Schmidt (GS) transform are the main methods in this category [12,13]. These methods are structurally simple and computationally cheap, so they have been integrated into commercial remote sensing image processing software such as ENVI and ERDAS. However, the fused images often contain serious spectral distortion owing to the large differences between SAR and optical images.

Multi-resolution analysis (MRA) methods mainly include pyramid decomposition, the discrete wavelet transform (DWT), the curvelet transform and the contourlet transform [14,15,16]. The source images are first decomposed into several scale levels, the sub-images at each level are then fused according to specific fusion rules, and finally an inverse transform produces the fused image. These methods reduce spectral distortion and increase the signal-to-noise ratio, at the cost of high computational complexity.

Hybrid methods combining the IHS transform with MRA have been proposed to overcome these shortcomings of CS and MRA methods. Chibani et al. used the à trous wavelet to extract features of SAR images and integrated them into multispectral images through the IHS transform. Hong et al. proposed a method for fusing high-resolution SAR and moderate-resolution multispectral images based on the IHS transform and the discrete wavelet transform [4]. Chu et al. presented a method to merge PALSAR-2 SAR and Sentinel-2A optical images using the IHS and shearlet transforms [15].

Recently, model-based fusion methods, such as variational models, sparse representation and deep learning, have also been used to merge SAR and optical images [17,18,19]. The main idea of variational methods is that the difference between the pixel intensities of the fused image and the source images should be as small as possible, which converts image fusion into the optimization of an objective function [17]. Shao et al. extended this idea to fuse SAR and multispectral images by minimizing the gradient and intensity differences between the fused image and the source images [20]. In methods based on sparse representation, an over-complete dictionary is first built from related images; the source images are then represented over this dictionary, and the coefficients are combined according to fusion rules; finally, the fused image is reconstructed from the dictionary. The key question for these methods is how to build the dictionary. Deep learning is currently a hot topic in image processing and has also been applied to remote sensing image fusion. In the method proposed by Shakya et al., deep learning was used to determine the weights in the fusion process by learning from specific images [18]. However, the structure of these algorithms shows that model-based methods are relatively complex and computationally demanding. Since remote sensing datasets are usually very large, hybrid methods are currently more suitable for remote sensing image fusion.
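To make the component substitution principle concrete, the following is a minimal sketch of IHS-style substitution in Python with NumPy. It is an illustration under stated assumptions, not the implementation used in any of the cited works: the function name `ihs_substitution` is hypothetical, the inputs are assumed to be co-registered and resampled to the same grid, the linear intensity I = (R + G + B) / 3 is assumed, and the mean/standard-deviation matching of the SAR band is a common preprocessing choice that the text does not specify.

```python
import numpy as np

def ihs_substitution(ms, sar):
    """Replace the intensity of a 3-band image with a SAR band (illustrative sketch).

    ms  : array of shape (H, W, 3), co-registered multispectral bands
    sar : array of shape (H, W), co-registered SAR amplitude
    """
    ms = np.asarray(ms, dtype=np.float64)
    sar = np.asarray(sar, dtype=np.float64)

    # Linear IHS intensity component (assumption): I = (R + G + B) / 3
    intensity = ms.mean(axis=2)

    # Assumed preprocessing: match the SAR mean and standard deviation to I
    sar_std = sar.std()
    if sar_std > 0:
        sar_matched = (sar - sar.mean()) / sar_std * intensity.std() + intensity.mean()
    else:
        sar_matched = np.full_like(sar, intensity.mean())

    # For the linear IHS transform, substituting I and inverting the transform
    # is equivalent to adding (sar_matched - I) to every band.
    return ms + (sar_matched - intensity)[..., None]
```

Because the same offset is added to every band, the differences between bands are preserved while the intensity becomes that of the SAR image. This also shows where the spectral distortion comes from: the larger the difference between the SAR band and the original intensity, the more every band is shifted.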

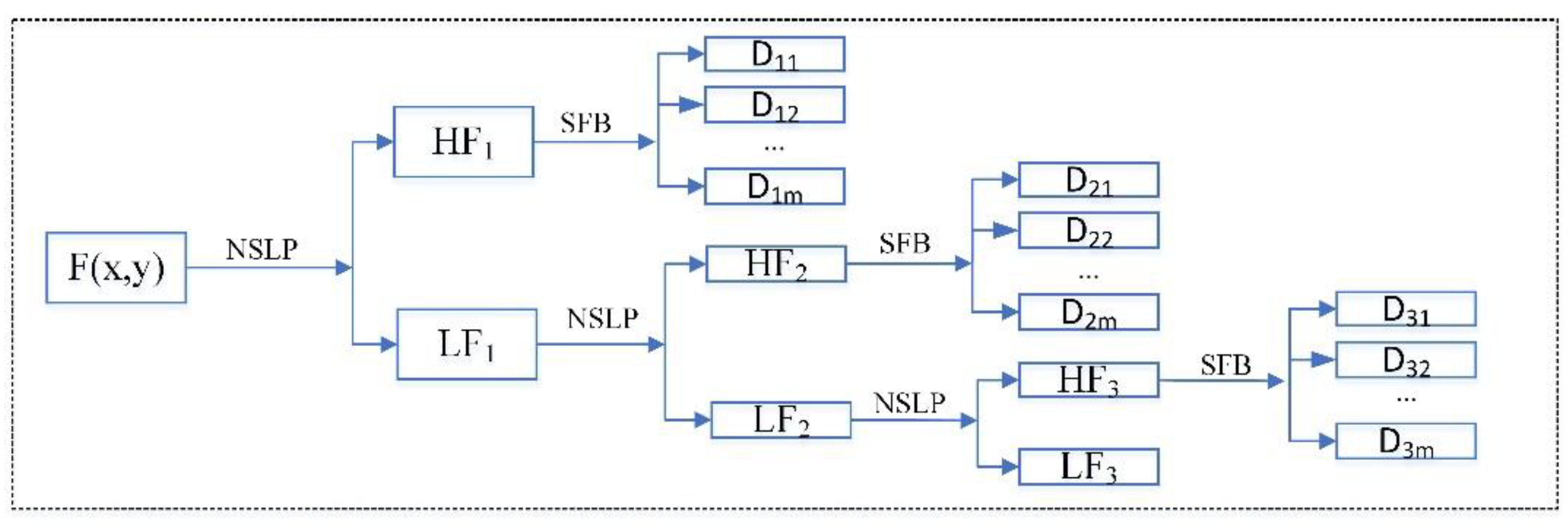

In image fusion with a hybrid model, the choice of MRA tool and the design of the fusion rules are the two main problems. MRA tools, which include pyramid decomposition and the wavelet, curvelet, contourlet and shearlet transforms, are used to decompose the images. Compared with other MRA tools, the shearlet transform has the best capability to describe detailed image information [21]. The better the subbands of an image are separated, the more easily relevant information can be injected into it. The fusion rules, in turn, determine the purpose and the evaluation criteria of the fusion. Chibani et al. injected the texture information of SAR images into multispectral images. Alparone et al. extracted the texture information of SAR images using the à trous wavelet and added it to multispectral images [22]. Youcef et al. used the à trous wavelet to decompose SAR images and then integrated their high-frequency components into the intensity components of multispectral images [14]. All these fusion rules aim to generate an improved multispectral image. In practice, however, SAR images are easier to obtain than optical images, especially in bad weather, when optical images are difficult to capture, so SAR images can reflect surface conditions more quickly. What is really needed in such conditions is to enhance the interpretability of SAR images, rather than to improve the multispectral image information.

Hong et al. proposed a method based on the IHS transform and wavelet decomposition to merge SAR images with multispectral ones. First, the multispectral images were converted into IHS space. The SAR images and the intensity components of the multispectral images were then decomposed. The new low-frequency components were obtained through a weighted combination of the SAR and optical low-frequency components, while the high-frequency components of the SAR images were adopted as the new high-frequency components. An inverse wavelet transform was performed to obtain a new intensity, and finally an inverse IHS transform completed the fusion. In this process, the SAR image information was retained completely, while the color information of the multispectral images was fused into the new images, which greatly helps to improve the interpretability of SAR images [4]. However, owing to the weak ability of the wavelet transform to depict image details, there is still room to improve the fusion effect.

Chu et al. [15] proposed a fusion method using the shearlet and IHS transforms. Its process is similar to Hong’s method, and the differences lie in the image decomposition method and the fusion rules. Compared with the wavelet transform, the shearlet transform captures more spatial details of the images. In their method, the weights of the low-frequency coefficients are determined from the difference in coefficient variation within a 3 × 3 window. The high-frequency coefficients are selected from those of the SAR and optical images according to the criterion of maximum energy in a 3 × 3 window. The advantage is that the information that differs between the SAR and optical images is integrated into the new images, so the new images are richer in information. The disadvantage is that the fusion rules may destroy the original structure of the spatial information and make the fused images harder to interpret. Sheng et al. [23] proposed a similar method, with a fusion rule based on the structural similarity and luminance difference of sparse representations in the low-frequency domain and a sum-modified-Laplacian pyramid in the high-frequency domain. The method produced good fusion results with little color distortion. However, because an over-complete dictionary must be built, it requires a lot of computation, and some SAR image information is lost, which may hinder SAR image interpretation.
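The maximum-energy criterion for high-frequency coefficients mentioned above can be sketched as follows. This is only an illustration of the selection rule under assumptions, not the code of Chu et al.: the function names are hypothetical, local energy is assumed to be the sum of squared coefficients in the window, borders are handled by reflection, ties are resolved in favor of the SAR coefficient, and the two inputs stand for any pair of same-sized high-frequency subbands (the shearlet decomposition itself is not shown).

```python
import numpy as np

def local_energy(coeffs, size=3):
    """Sum of squared coefficients in a size x size window around each pixel."""
    pad = size // 2
    squared = np.pad(np.asarray(coeffs, dtype=np.float64) ** 2, pad, mode="reflect")
    h = squared.shape[0] - 2 * pad
    w = squared.shape[1] - 2 * pad
    energy = np.zeros((h, w))
    # Accumulate the shifted copies that make up the sliding window
    for dy in range(size):
        for dx in range(size):
            energy += squared[dy:dy + h, dx:dx + w]
    return energy

def select_max_energy(high_sar, high_opt, size=3):
    """Per pixel, keep the coefficient whose neighborhood has the larger energy."""
    high_sar = np.asarray(high_sar, dtype=np.float64)
    high_opt = np.asarray(high_opt, dtype=np.float64)
    e_sar = local_energy(high_sar, size)
    e_opt = local_energy(high_opt, size)
    # Ties go to the SAR coefficient (an arbitrary choice for this sketch)
    return np.where(e_sar >= e_opt, high_sar, high_opt)
```

Since the choice is made independently at each pixel, neighboring coefficients in the output can come from different sources. This is one way to see why such a rule can break up the original spatial structure.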

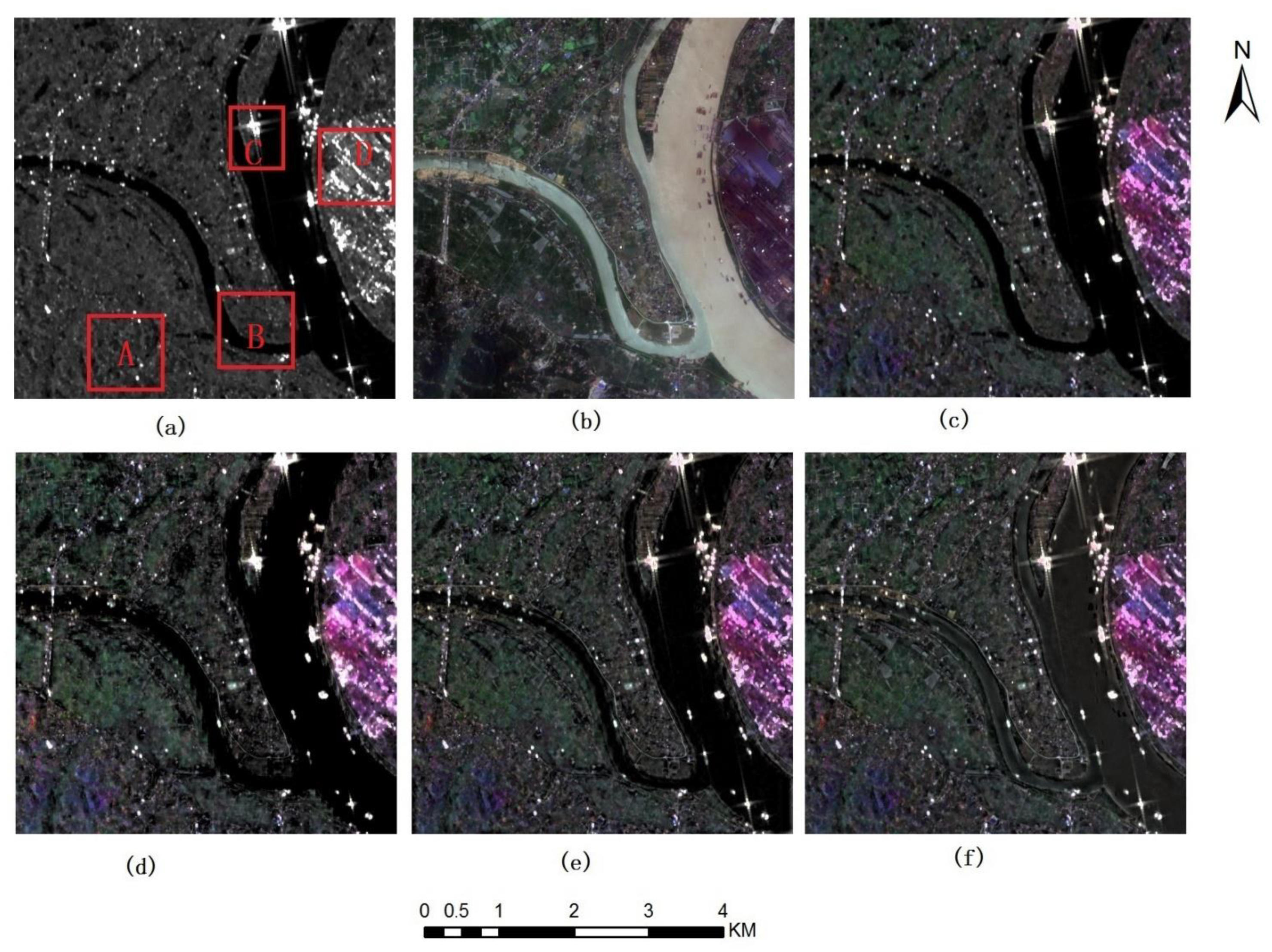

With this in view, a novel fusion method for SAR and multispectral images, based on the shearlet transform and an activity measure, is proposed in this paper. It aims to make better use of SAR images, which capture ground spatial information regardless of external conditions, and to increase their interpretability. The goal of the fusion is to inject as much of the color and spatial information contained in the multispectral images as possible into the SAR images. The main steps are similar to those of the Shearlet+IHS fusion method [15]. The difference between the two methods lies mainly in their fusion purpose and fusion rules. To verify and evaluate the proposed method, three datasets of different remote sensing images are used. One dataset contains Sentinel 1A images and Sentinel 2B images, one contains ALOS PALSAR images and Landsat 5 TM images, and the third contains GF3 images and Sentinel 2A images. In addition, because the polarization mode of a SAR has a great impact on the strength of the SAR signal, each dataset includes both co-polarization and cross-polarization SAR images. The fusion results are evaluated visually and statistically and compared with those of other methods, namely IHS, DWT+IHS and Contourlet+IHS. Fused images of different polarization modes are also compared. The visual and statistical evaluations demonstrate that the proposed method produces better fusion results than the other methods: it successfully injects the spectral and spatial information of the multispectral images into the SAR images, and it greatly improves the information content and interpretability of the SAR images.

The rest of the paper is organized as follows. The experimental data and the proposed fusion method are described in detail in Section 2. Section 3 then provides the experimental results and analyses. The results are discussed in Section 4, and the paper is concluded in Section 5.

4. Discussion

Among the various image fusion algorithms, the hybrid method that combines multi-scale decomposition with the IHS transform is one of the most popular among researchers. Theoretically, the more levels and directions an image is decomposed into, the more image details are described and the better the fusion effect. Although different polarization modes cause SAR images to show different characteristics, the effect of different fusion methods on the fusion of multispectral images with SAR images of different polarization modes is rarely mentioned in previous studies. Therefore, the method proposed in this paper combines a newer multi-scale decomposition tool, the shearlet transform, with the IHS transform to fuse SAR images, and its fusion effect is compared with that of the traditional wavelet and contourlet transforms. Sentinel 1A VV SAR data, Sentinel 1A VH SAR data and Sentinel 2A multispectral data are selected for the experiments. The experimental results indicate that, for both groups of experimental data, the visual interpretation effect and the statistical indexes of the proposed method are better than those of the other methods. This shows that the proposed method is better than the traditional methods at fusing SAR and multispectral images and at improving the interpretability of SAR images. The fusion of C-band GF3 SAR images and L-band ALOS radar data with multispectral images is also tested in this paper. The results show that the method performs well in both L-band and C-band SAR image fusion.

Although the proposed method achieves good results in fusing multispectral images with SAR images of different electromagnetic frequencies and spatial resolutions, one problem of multi-resolution decomposition and fusion algorithms remains to be studied: the determination of the optimal decomposition scale. Because image content differs, the number of decomposition levels and directions should adapt automatically during the fusion process. At present these parameters are fixed, which may cause excessive or insufficient decomposition and prevent the fusion effect from being optimal. This problem needs further study in the future.

5. Conclusions

The interpretation of SAR images is the basis of their application, but because of the SAR imaging mechanism it is very difficult to interpret SAR images directly. Fusing them with multispectral images is an effective means of improving their interpretability. On the basis of previous research, a fusion method combining the shearlet transform with an activity measure is proposed in this paper. Its aim is to improve the interpretability of SAR images, rather than simply to increase the amount of information in the fused images. To evaluate the effectiveness of the method, SAR images from three different sensors, Sentinel 1A, ALOS and GF3, are selected. These sensors operate in either the L-band or the C-band. The polarization modes of the SAR images include HH, VV, HV and VH. The multispectral images are Sentinel 2 and Landsat 5 TM images, and the bands used include the blue, green, red and near-infrared bands. The images fused by the proposed method are compared visually and statistically with the results of the IHS, DWT-IHS and contourlet-IHS methods. Visually, ground objects are depicted in visibly finer detail in the images produced by the proposed method than in those of the other methods. In the fusion of the Sentinel 1A VV image and the Sentinel 2A multispectral images, the mean high-frequency correlation coefficient between the fused images generated by the proposed method and the SAR images is 0.706, and the mean correlation coefficient with the multispectral images is 0.004, both of which are higher than those of the other two hybrid methods. The high-frequency correlation coefficient between the fused image of the IHS method and the SAR image is the largest, but its correlation coefficient with the multispectral images is the smallest. Thus, although the IHS method integrates the spatial information of the SAR image into the fused image well, it is the weakest at fusing spectral information. The results of the other groups of tests are similar. All of this shows that, with the method proposed in this paper, the spectral information and spatial detail of the multispectral images can be better integrated while the spatial information of the original SAR images is maintained, which effectively improves the interpretability of the SAR images.
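For readers who want to reproduce this kind of index, the sketch below shows one plausible way to compute a high-frequency correlation coefficient between a fused band and a reference image. It is an assumption-laden illustration rather than the evaluation code behind the figures above: the function names are hypothetical, and the high-pass filter (a 3 × 3 four-neighbor Laplacian with edge replication) is an assumed choice, since the filter is not specified in this section.

```python
import numpy as np

def high_pass(img):
    """Four-neighbor Laplacian response with edge-replicated borders (assumed filter)."""
    p = np.pad(np.asarray(img, dtype=np.float64), 1, mode="edge")
    return (4 * p[1:-1, 1:-1]
            - p[:-2, 1:-1] - p[2:, 1:-1]
            - p[1:-1, :-2] - p[1:-1, 2:])

def correlation(a, b):
    """Pearson correlation coefficient of two equally sized arrays."""
    a = np.asarray(a, dtype=np.float64).ravel()
    b = np.asarray(b, dtype=np.float64).ravel()
    a = a - a.mean()
    b = b - b.mean()
    denom = np.sqrt((a * a).sum() * (b * b).sum())
    # Return 0.0 when either input has no variation, to avoid dividing by zero
    return float((a * b).sum() / denom) if denom > 0 else 0.0

def high_frequency_correlation(fused_band, reference):
    """Correlation between the high-pass details of a fused band and a reference."""
    return correlation(high_pass(fused_band), high_pass(reference))
```

A mean value over several bands, as reported above, would be obtained by averaging `high_frequency_correlation` over the bands of the fused image. Values close to 1 indicate that the spatial detail of the reference image is well preserved in the fused band.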