Multiple Preprocessing Hybrid Level Set Model for Optic Disc Segmentation in Fundus Images

Abstract

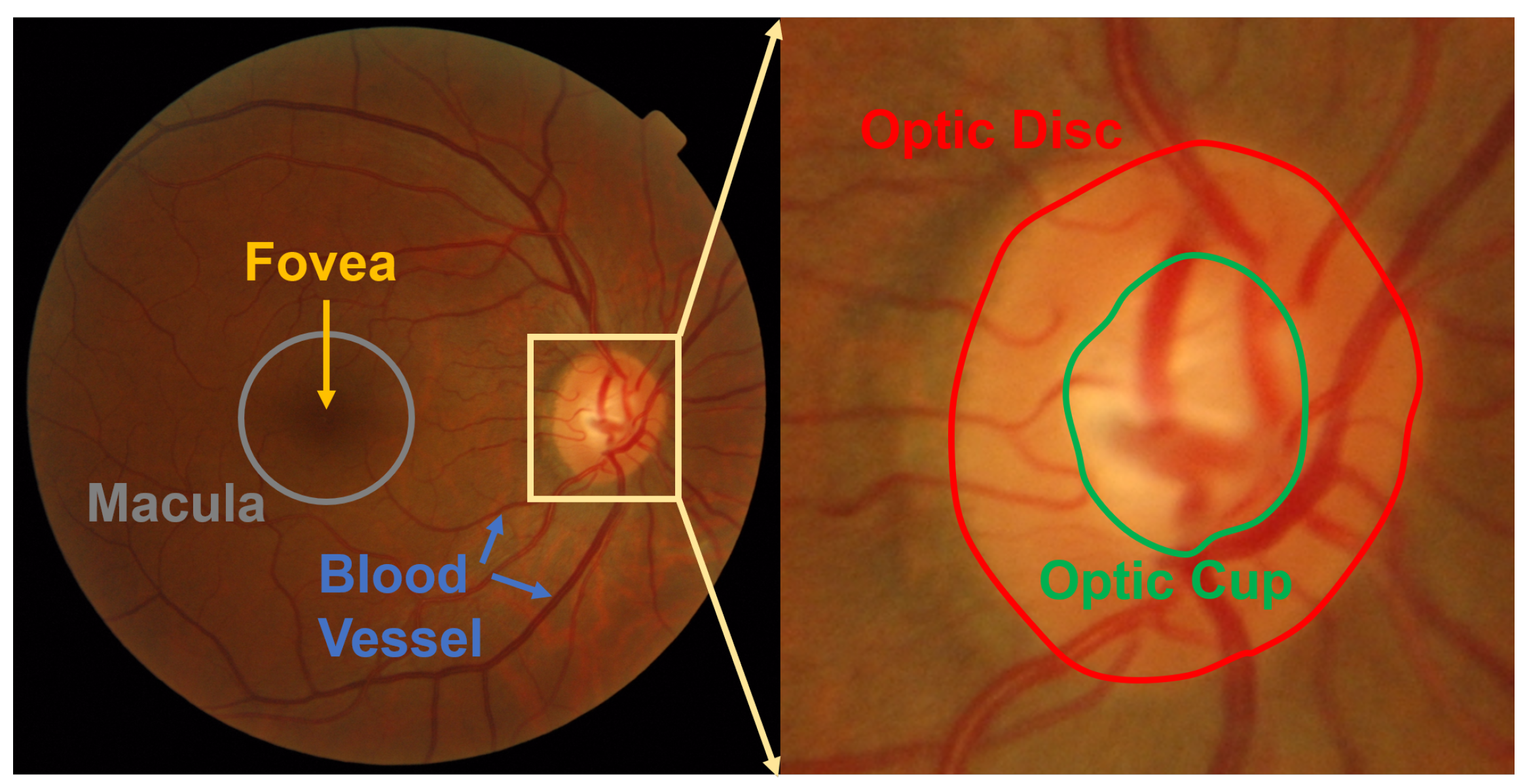

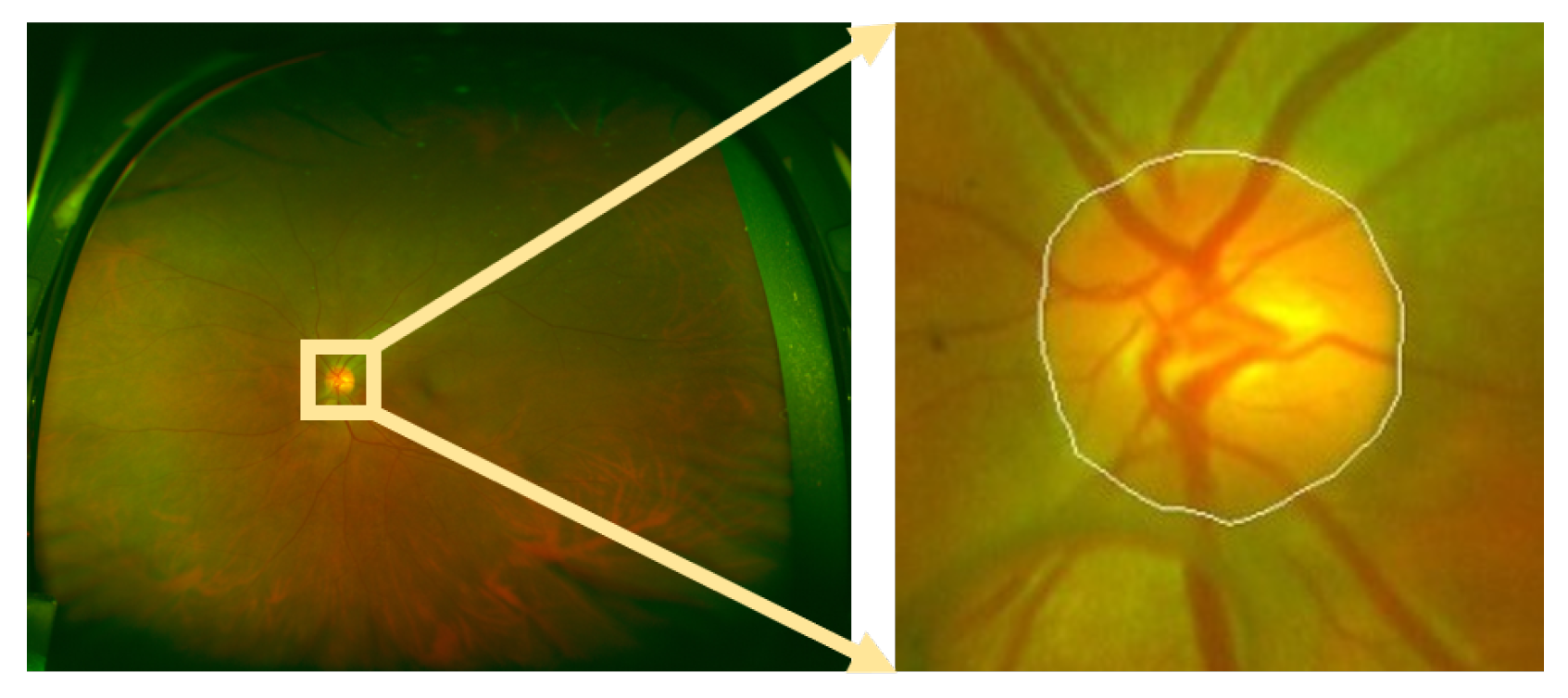

1. Introduction

- 1.

- The proposed method has higher robustness that could successfully segment the OD in both the posterior and wide-angle fundus images.

- 2.

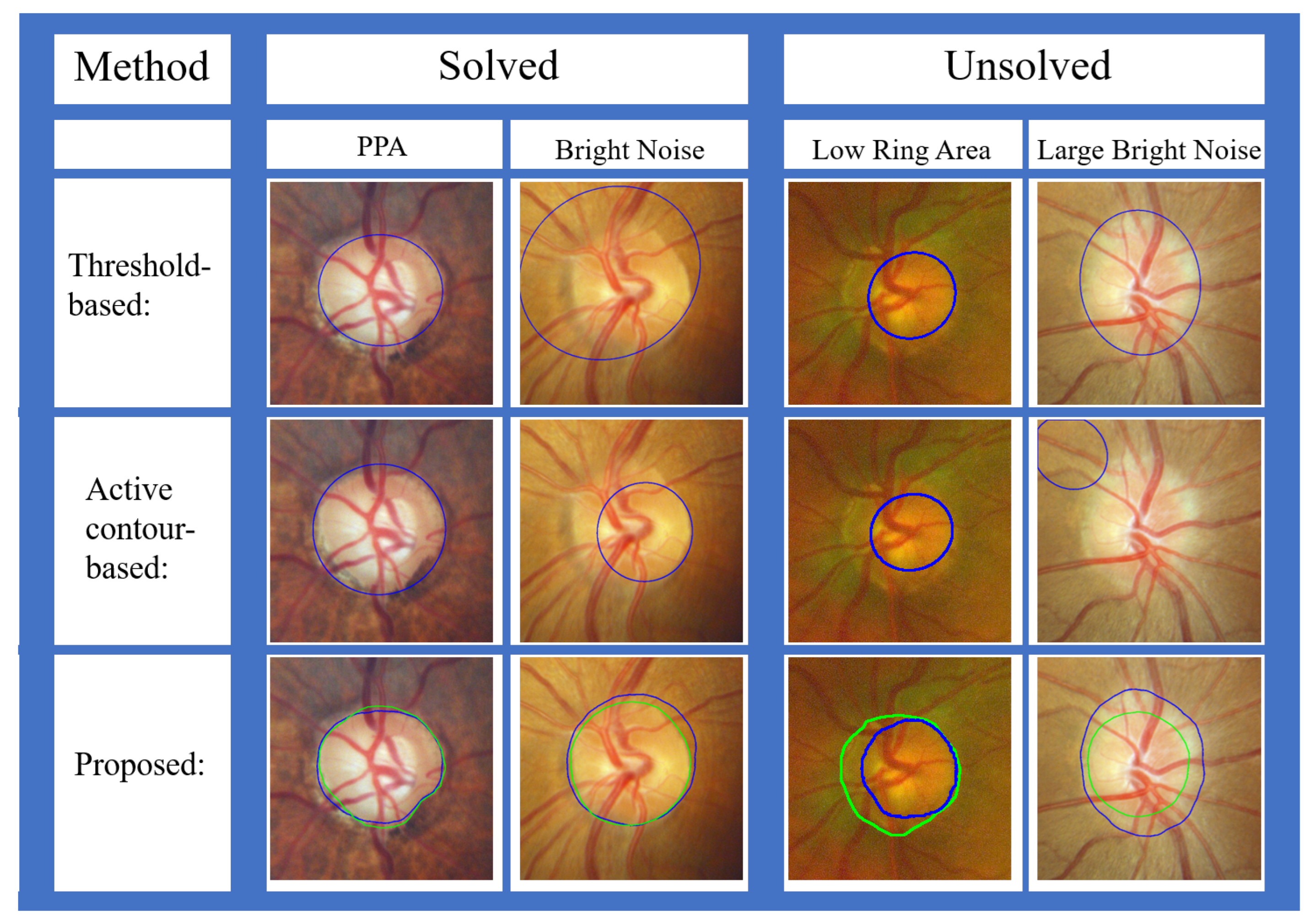

- The effect of PPA and bright noise causes undersegmentation, and the OD being occluded by blood vessels causes undersegmentation. The proposed approach achieves high accuracy in OD segmentation results by solving these problems.

- 3.

- A new evaluation method, FSE, is proposed for clinicians to subjectively evaluate OD segmentation results.

2. Related Work

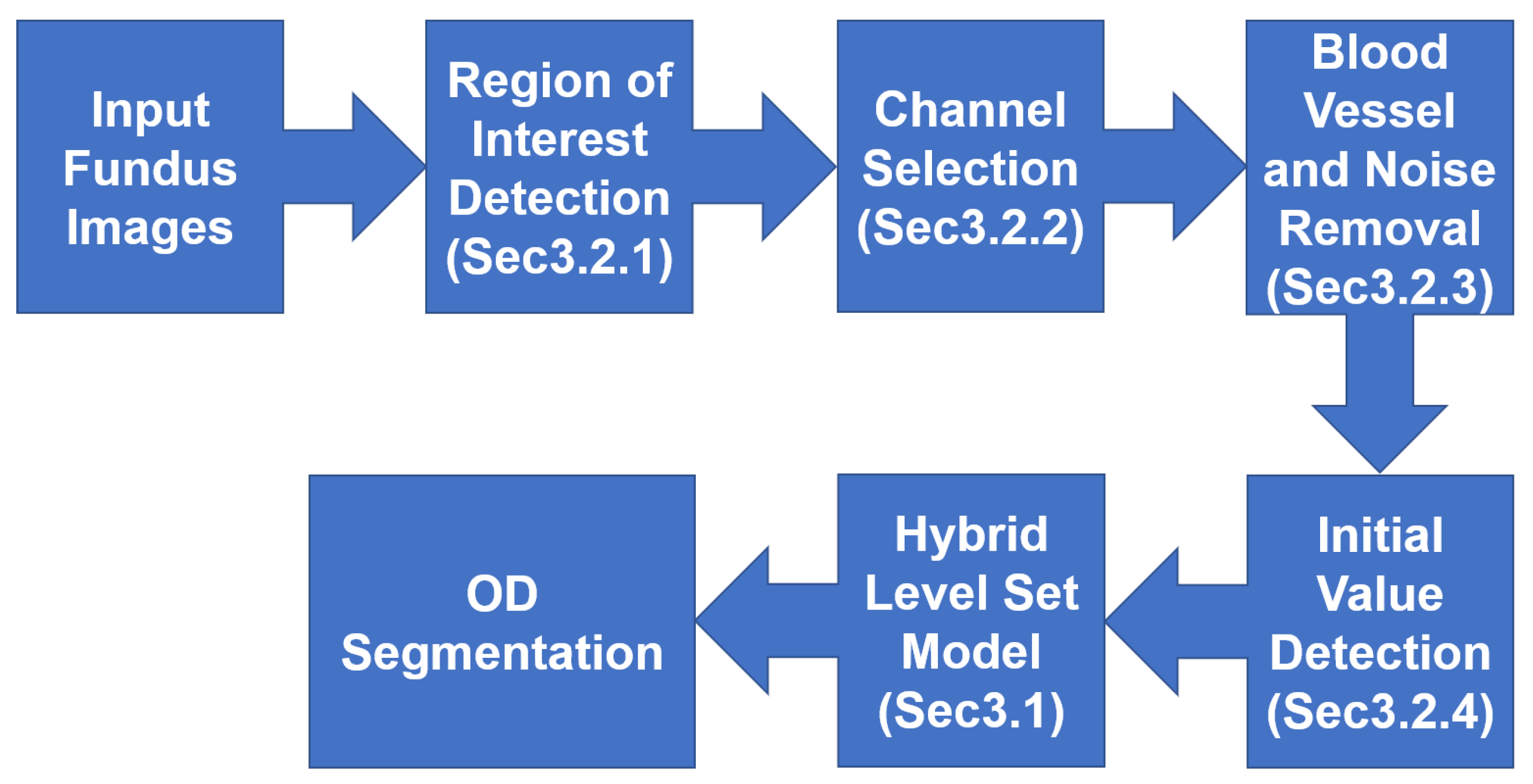

3. Multiple Preprocessing Hybrid Level Set Model

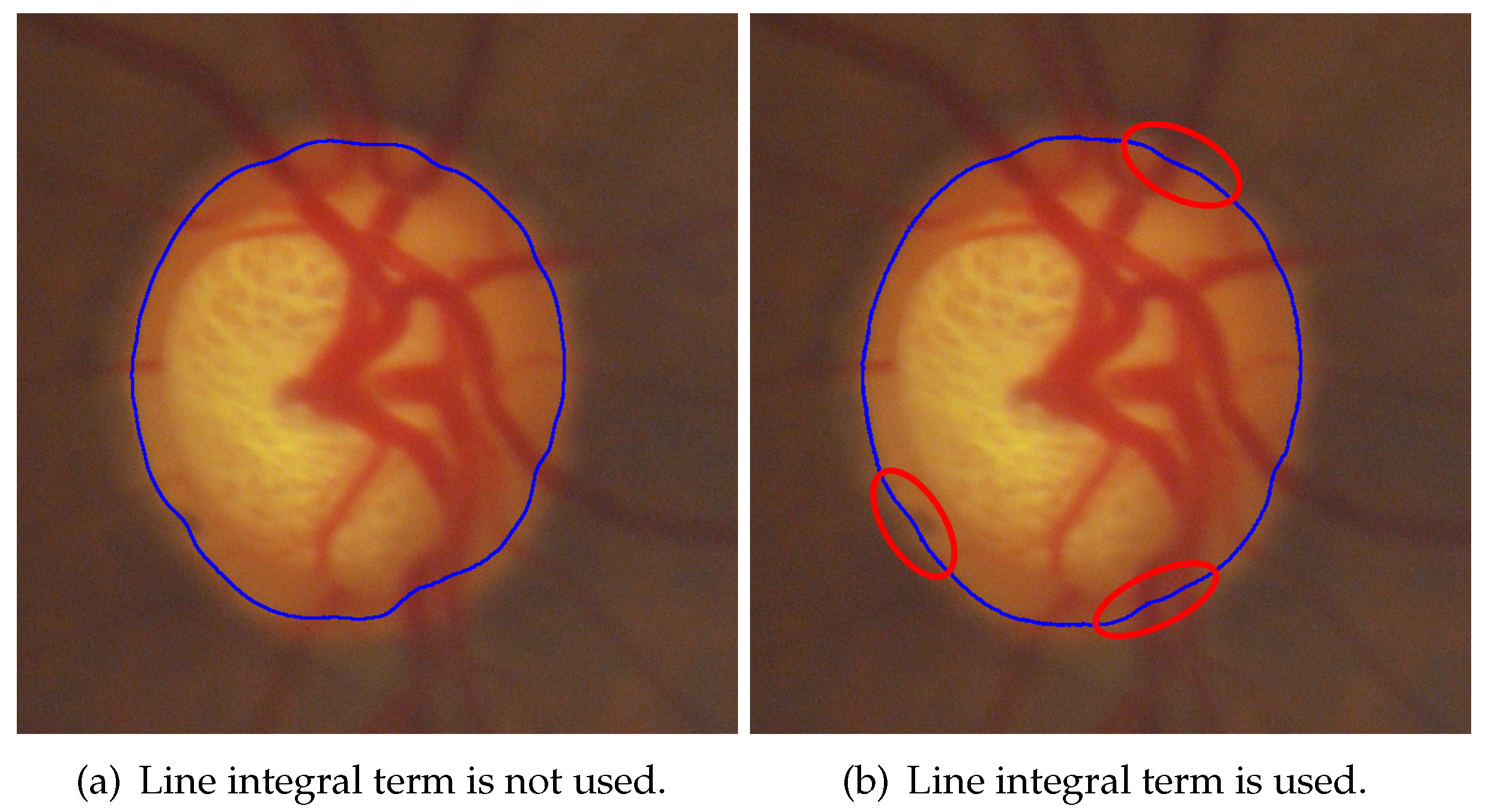

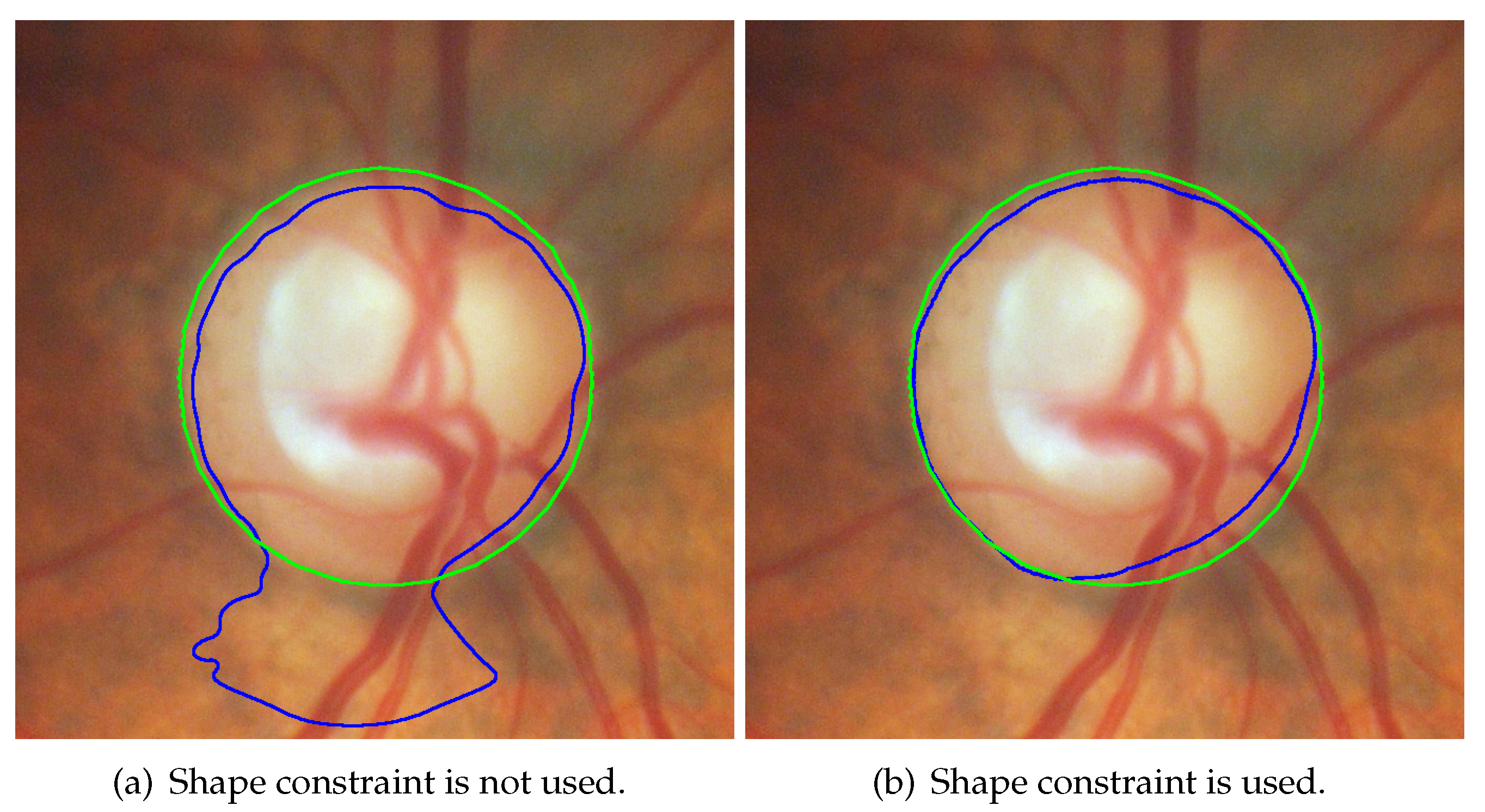

3.1. Hybrid Level Set Model

3.2. Multiple Preprocessing

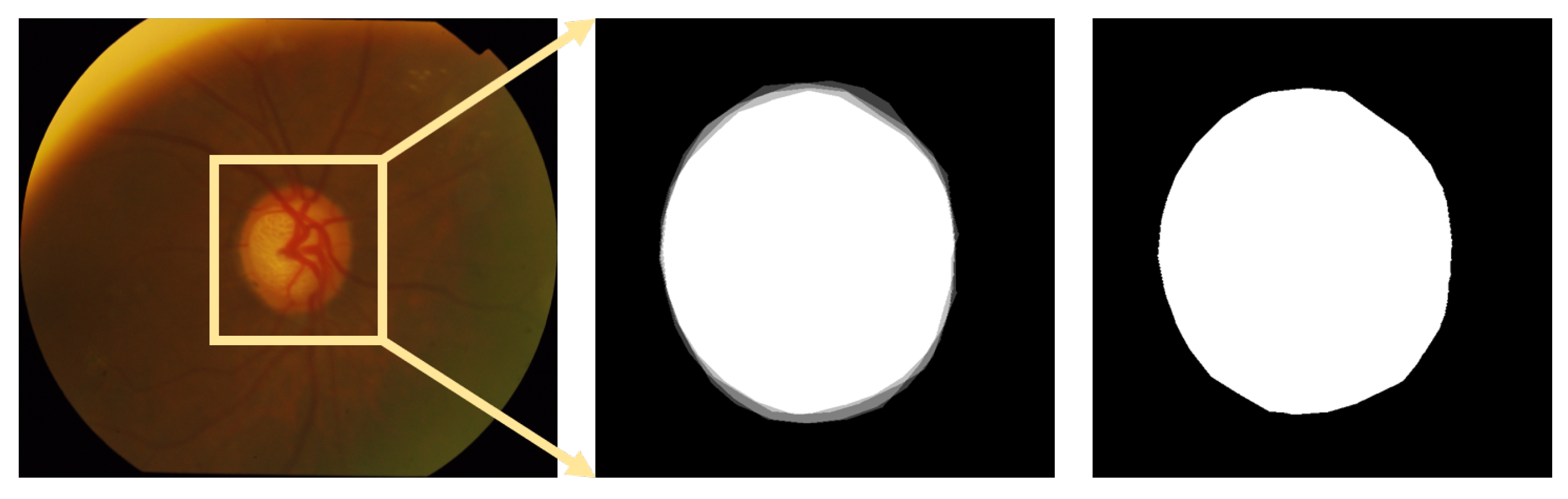

3.2.1. Region of Interest (ROI) Detection

- Step 1: Extracting the green channel (Figures 9b and 10b) from the RGB color space and inverting it (Figures 9c and 10c). To take advantage of the high vascular density, the blood vessels must first be roughly segmented. The green channel is extracted because blood vessels have high contrast in it. Since blood vessels appear darker than the surrounding areas in the green channel, the channel is inverted so that the vessels become bright and can be segmented with a morphological top-hat transformation.

- Step 3: Finding several circular areas with the highest vascular density (Figures 9e and 10e). The radius of each circular area equals the average OD radius. Because the blood-vessel map is rough and imprecise, selecting only a few areas may miss the OD; therefore, 20 circular areas were selected in this paper.

- Step 5: Extracting the rough ROI (Figures 9g and 10g) from this circular area. The side length of the rough ROI is 4 times the average OD diameter, and the center of the rough ROI is the center of the circular area. As shown in Figure 11, locating the OD only on the basis of high vascular density and high brightness still leaves an error: the OD is not necessarily located at the center of the ROI image.
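Steps 1, 3 and 5 can be sketched with NumPy and SciPy. This is a minimal illustration under stated assumptions, not the authors' implementation: the structuring-element size (`se_size=15`), the greedy suppression that spreads the 20 circles apart, and all function names are hypothetical, and Steps 2 and 4, which this section does not describe, are omitted.

```python
import numpy as np
from scipy import ndimage


def vessel_map(rgb: np.ndarray, se_size: int = 15) -> np.ndarray:
    """Step 1 (sketch): invert the green channel, then apply a white top-hat.

    se_size is a hypothetical structuring-element width; it should exceed
    the vessel width so that the grey opening removes the vessels.
    """
    green = rgb[..., 1].astype(np.float64)
    inverted = 255.0 - green  # dark vessels become bright ridges
    # White top-hat = image minus its grey opening: keeps thin bright ridges.
    return ndimage.white_tophat(inverted, size=(se_size, se_size))


def densest_circles(vessels: np.ndarray, radius: int, n_circles: int = 20):
    """Step 3 (sketch): centres of the n circles with the most vessel response."""
    yy, xx = np.mgrid[-radius:radius + 1, -radius:radius + 1]
    disk = (yy**2 + xx**2 <= radius**2).astype(np.float64)
    # Summed vessel response inside a circle centred at every pixel.
    density = ndimage.convolve(vessels, disk, mode="constant", cval=0.0)
    centres = []
    for _ in range(n_circles):
        cy, cx = np.unravel_index(np.argmax(density), density.shape)
        centres.append((int(cy), int(cx)))
        # Hypothetical greedy suppression so the next circle lands elsewhere.
        density[max(cy - radius, 0):cy + radius + 1,
                max(cx - radius, 0):cx + radius + 1] = -np.inf
    return centres


def rough_roi(image: np.ndarray, centre, radius: int) -> np.ndarray:
    """Step 5 (sketch): square crop, side = 4 x average OD diameter = 8 x radius."""
    half = 4 * radius
    cy, cx = centre
    h, w = image.shape[:2]
    return image[max(cy - half, 0):min(cy + half, h),
                 max(cx - half, 0):min(cx + half, w)]
```

With `r` the average OD radius in pixels, `densest_circles(vessel_map(rgb), r)` returns candidate centres; how one candidate is then chosen is left open here, and the example makes no claim about the paper's criterion.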

3.2.2. Channel Selection

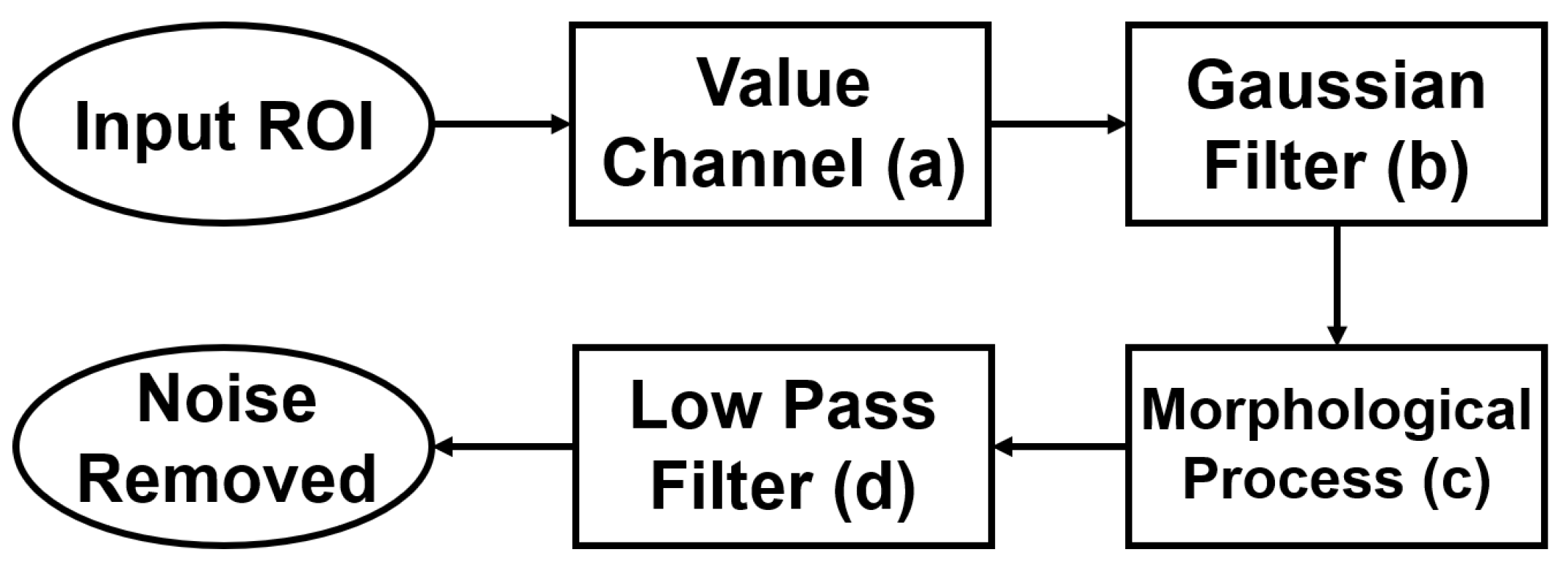

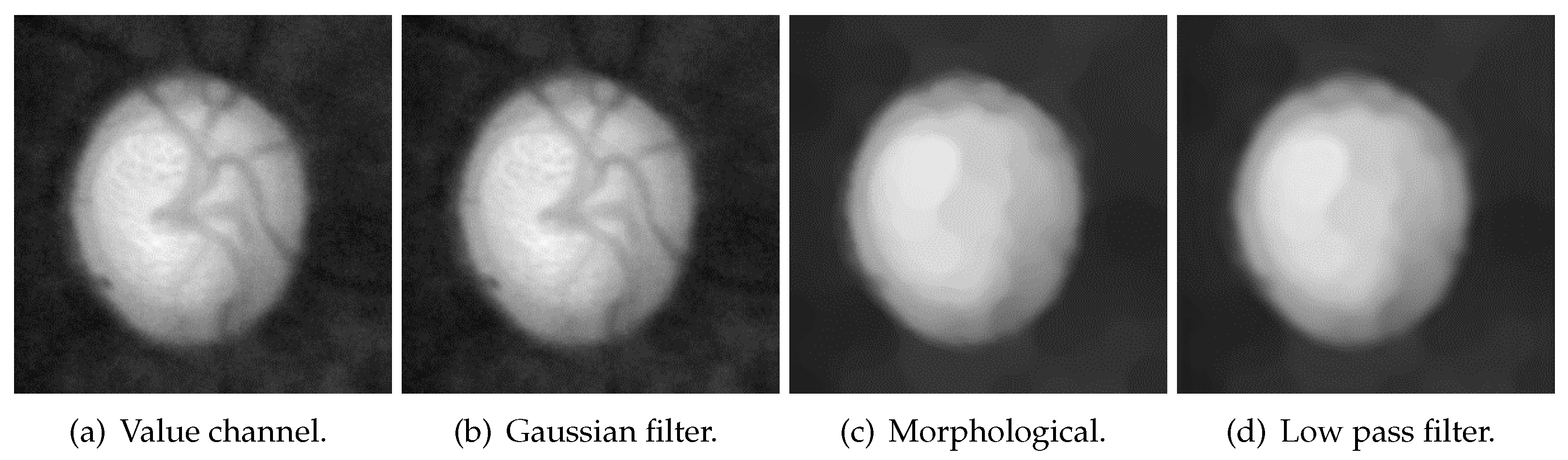

3.2.3. Blood-Vessel and Noise Removal

3.2.4. Initial-Value Detection

4. Experiment

4.1. Data Sets

4.2. Evaluation Criteria

4.3. Parameters

4.4. Experimental Results

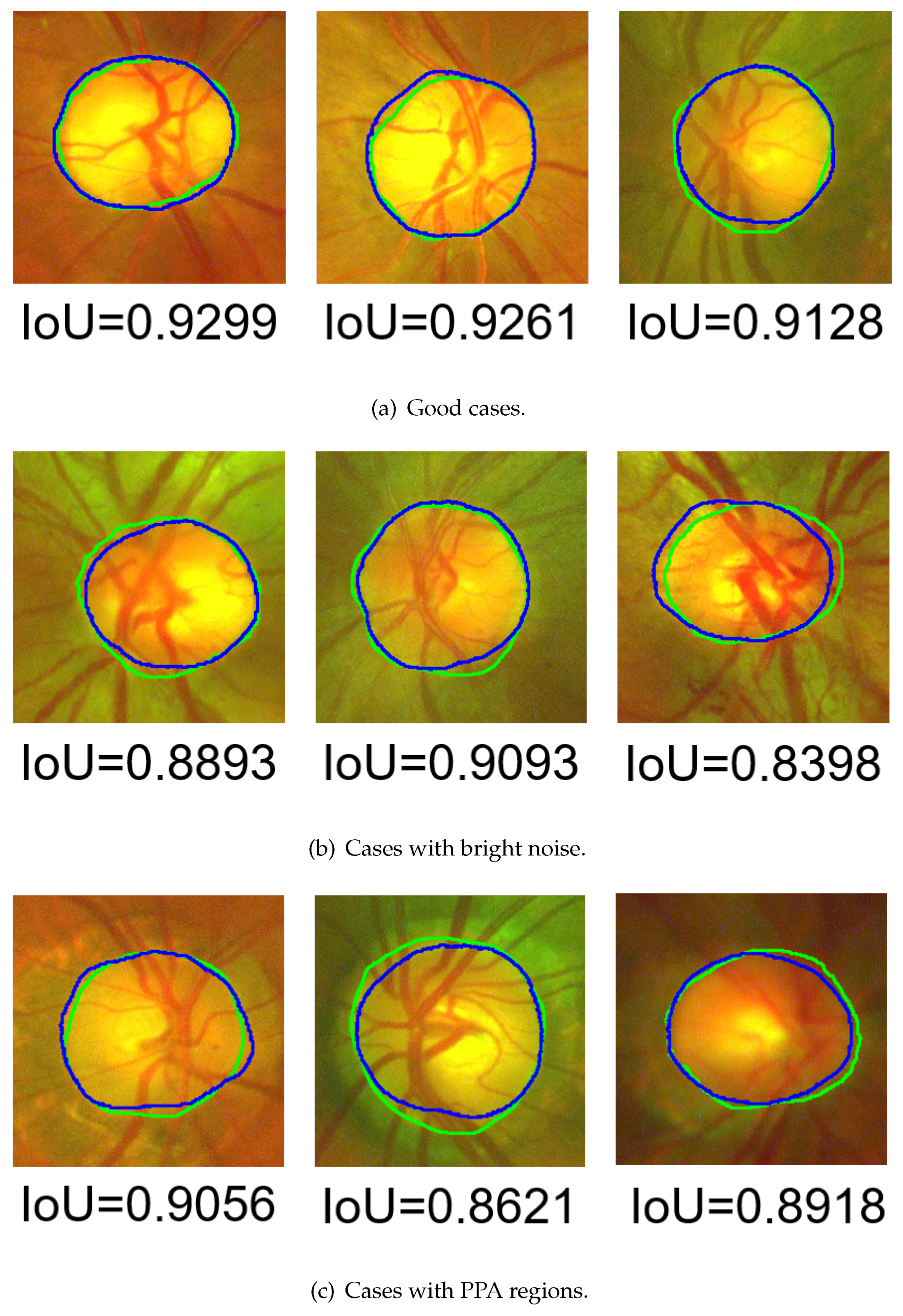

4.4.1. Segmentation Results

4.4.2. Discussion

1. Different light sources are used to capture posterior and wide-angle fundus images: posterior fundus images use white light, whereas wide-angle fundus images use red and green light sources. The proposed method segments the OD in the value channel of the HSV color space, which is the maximum of the red, green, and blue channels. Because wide-angle fundus images contain no blue-channel information, the segmentation accuracy may be reduced.

2. The resolution of the ROI on posterior fundus images is about , while the resolution of the ROI on wide-angle fundus images is about . The low resolution of wide-angle fundus images may also be one of the reasons for the lower segmentation accuracy.
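The first point follows directly from the definition of the HSV value channel. The short sketch below uses one illustrative pixel (not taken from either data set) and assumes that a wide-angle image is stored as RGB with an empty blue plane; in that case the value channel reduces to max(R, G). The helper name is hypothetical.

```python
import colorsys

import numpy as np


def value_channel(rgb: np.ndarray) -> np.ndarray:
    """HSV value channel: the per-pixel maximum of R, G and B."""
    return rgb.max(axis=-1)


# An illustrative pixel whose largest component is blue (white-light capture).
posterior = np.array([[[120, 90, 160]]], dtype=np.uint8)
# The same pixel with an empty blue plane (assumed red/green capture).
wide_angle = posterior.copy()
wide_angle[..., 2] = 0

print(value_channel(posterior)[0, 0])   # 160
print(value_channel(wide_angle)[0, 0])  # 120

# Cross-check against the standard library's RGB -> HSV conversion.
_, _, v = colorsys.rgb_to_hsv(*(posterior[0, 0] / 255.0))
print(int(round(v * 255)))  # 160
```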

1. As shown in Figure 25a, overly prominent blood vessels cause undersegmentation. When the blood vessels are too thick or several vessels are entangled, dark shadows remain after noise removal. The pixel values covered by these vessels are lower than those in other areas, resulting in undersegmentation.

2. As shown in Figure 25b, if there is a large area of bright noise around the OD, the OD is oversegmented. This is expected, since the proposed method is a region-based level set model and its initial value is obtained by thresholding.

3. As shown in Figure 25c, the brightness of the ring area (the area between the OD and OC boundaries) was too low, which caused a large error in the initial-value detection, resulting in oversegmentation.
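The vessel and bright-noise cases can be made concrete on a synthetic image, because a thresholded initial region inherits whatever the threshold lets through. The sketch below uses made-up intensities and a hypothetical fixed threshold; it only builds the initial region and does not run the hybrid level set evolution, so it illustrates the starting point of these failures rather than reproducing the paper's results.

```python
import numpy as np


def disk(shape, centre, radius):
    """Boolean mask of a filled circle."""
    yy, xx = np.ogrid[:shape[0], :shape[1]]
    return (yy - centre[0]) ** 2 + (xx - centre[1]) ** 2 <= radius ** 2


def iou(a, b):
    """Intersection over union of two boolean masks."""
    union = np.logical_or(a, b).sum()
    return np.logical_and(a, b).sum() / union if union else 1.0


SHAPE = (100, 100)
THRESH = 150.0  # hypothetical global threshold for the initial region

od = disk(SHAPE, (50, 50), 15)     # synthetic optic disc
clean = np.where(od, 200.0, 80.0)  # bright disc on a darker background

# Thick dark vessel crossing the disc: its pixels fall below the threshold.
vessel = clean.copy()
vessel[:, 47:54] = 60.0
init_vessel = vessel > THRESH

# Bright patch touching the disc: its pixels pass the threshold too.
noisy = clean.copy()
noisy[40:60, 64:80] = 190.0
init_noisy = noisy > THRESH

print(init_vessel.sum() < od.sum(), round(iou(init_vessel, od), 3))
print(init_noisy.sum() > od.sum(), round(iou(init_noisy, od), 3))
```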

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| OD | Optic disc |
| OC | Optic cup |
| CNR | Contrast-to-noise ratio |
| IoU | Intersection over union |
| FSE | Four-side evaluation |
| TMUEH | Tianjin Medical University Eye Hospital |
| DR | Diabetic retinopathy |
| FOV | Field of view |
| PPA | Parapapillary atrophy |
| ROI | Region of interest |
| GT | Ground truth |
| HLSM | Hybrid level set model |

References

1. Hayreh, S.S. Blood supply of the optic nerve head and its role in optic atrophy, glaucoma, and oedema of the optic disc. Br. J. Ophthalmol. 1969, 53, 721.

2. Garway-Heath, D.F.; Ruben, S.T.; Viswanathan, A.; Hitchings, R.A. Vertical cup/disc ratio in relation to optic disc size: Its value in the assessment of the glaucoma suspect. Br. J. Ophthalmol. 1998, 82, 1118–1124.

3. Niemeijer, M.; Abramoff, M.; Van Ginneken, B. Automated localization of the optic disc and the fovea. In Proceedings of the 2008 30th Annual International Conference of the IEEE Engineering in Medicine and Biology Society, Vancouver, BC, Canada, 20–25 August 2008; pp. 3538–3541.

4. Tranos, P.G.; Wickremasinghe, S.S.; Stangos, N.T.; Topouzis, F.; Tsinopoulos, I.; Pavesio, C.E. Macular edema. Surv. Ophthalmol. 2004, 49, 470–490.

5. Claro, M.; Santos, L.; Silva, W.; Araújo, F.; Moura, N.; Macedo, A. Automatic glaucoma detection based on optic disc segmentation and texture feature extraction. CLEI Electron. J. 2016, 19, 5.

6. Dashtbozorg, B.; Mendonça, A.M.; Campilho, A. Optic disc segmentation using the sliding band filter. Comput. Biol. Med. 2015, 56, 1–12.

7. Khan, M.A.; Mir, N.; Sarirete, A.; Nasir, M.R.; Abdelazim, M.M.; Yasin, M.Z. Optic Disc Detection and Segmentation with Vessel Convergence and Elliptical Symmetry Evidences. Procedia Comput. Sci. 2019, 163, 609–617.

8. Xu, H.; Koch, P.; Chen, M.; Lau, A.; Reid, D.M.; Forrester, J.V. A clinical grading system for retinal inflammation in the chronic model of experimental autoimmune uveoretinitis using digital fundus images. Exp. Eye Res. 2008, 87, 319–326.

9. Toslak, D.; Thapa, D.; Chen, Y.; Erol, M.K.; Chan, R.P.; Yao, X. Trans-palpebral illumination: An approach for wide-angle fundus photography without the need for pupil dilation. Opt. Lett. 2016, 41, 2688–2691.

10. Mary, V.S.; Rajsingh, E.B.; Naik, G.R. Retinal fundus image analysis for diagnosis of glaucoma: A comprehensive survey. IEEE Access 2016, 4, 4327–4354.

11. Zhang, Z.; Yin, F.S.; Liu, J.; Wong, W.K.; Tan, N.M.; Lee, B.H.; Cheng, J.; Wong, T.Y. Origa-light: An online retinal fundus image database for glaucoma analysis and research. In Proceedings of the 2010 Annual International Conference of the IEEE Engineering in Medicine and Biology, Buenos Aires, Argentina, 31 August–4 September 2010; pp. 3065–3068.

12. Kauppi, T.; Kalesnykiene, V.; Kamarainen, J.K.; Lensu, L.; Sorri, I.; Raninen, A.; Voutilainen, R.; Uusitalo, H.; Kälviäinen, H.; Pietilä, J. The diaretdb1 diabetic retinopathy database and evaluation protocol. In Proceedings of the BMVC, University of Warwick, Coventry, UK, 10–13 September 2007; Volume 1, pp. 1–10.

13. Sivaswamy, J.; Krishnadas, S.; Joshi, G.D.; Jain, M.; Tabish, A.U.S. Drishti-gs: Retinal image dataset for optic nerve head (onh) segmentation. In Proceedings of the 2014 IEEE 11th international symposium on biomedical imaging (ISBI), Beijing, China, 29 April–2 May 2014; pp. 53–56.

14. Inoue, M. Wide-angle viewing system. Microincision Vitr. Surg. 2014, 54, 87–91.

15. Trucco, E.; Ruggeri, A.; Karnowski, T.; Giancardo, L.; Chaum, E.; Hubschman, J.P.; Al-Diri, B.; Cheung, C.Y.; Wong, D.; Abramoff, M.; et al. Validating retinal fundus image analysis algorithms: Issues and a proposal. Investig. Ophthalmol. Vis. Sci. 2013, 54, 3546–3559.

16. Ren, F.; Li, W.; Yang, J.; Geng, H.; Zhao, D. Automatic optic disc localization and segmentation in retinal images by a line operator and level sets. Technol. Health Care 2016, 24, S767–S776.

17. Muramatsu, C.; Hatanaka, Y.; Sawada, A.; Yamamoto, T.; Fujita, H. Computerized detection of peripapillary chorioretinal atrophy by texture analysis. In Proceedings of the 2011 Annual International Conference of the IEEE Engineering in Medicine and Biology Society, Boston, MA, USA, 30 August–3 September 2011; pp. 5947–5950.

18. Septiarini, A.; Harjoko, A.; Pulungan, R.; Ekantini, R. Optic disc and cup segmentation by automatic thresholding with morphological operation for glaucoma evaluation. Signal Image Video Process. 2017, 11, 945–952.

19. Muramatsu, C.; Nakagawa, T.; Sawada, A.; Hatanaka, Y.; Hara, T.; Yamamoto, T.; Fujita, H. Determination of cup-to-disc ratio of optical nerve head for diagnosis of glaucoma on stereo retinal fundus image pairs. In Proceedings of the Medical Imaging 2009: Computer-Aided Diagnosis International Society for Optics and Photonics, Lake Buena Vista, FL, USA, 3 March 2009; Volume 7260, p. 72603L.

20. Cheng, J.; Liu, J.; Wong, D.W.K.; Yin, F.; Cheung, C.; Baskaran, M.; Aung, T.; Wong, T.Y. Automatic optic disc segmentation with peripapillary atrophy elimination. In Proceedings of the 2011 Annual International Conference of the IEEE Engineering in Medicine and Biology Society, Boston, MA, USA, 30 August–3 September 2011; pp. 6224–6227.

21. Li, H.; Chutatape, O. A Model-Based Approach for Automated Feature Extraction in Fundus Images. In Proceedings of the ICCV, Nice, France, 13–16 October 2003; Volume 2003, pp. 394–399.

22. Li, H.; Chutatape, O. Boundary detection of optic disk by a modified ASM method. Pattern Recognit. 2003, 36, 2093–2104.

23. Yin, F.; Liu, J.; Ong, S.H.; Sun, Y.; Wong, D.W.; Tan, N.M.; Cheung, C.; Baskaran, M.; Aung, T.; Wong, T.Y. Model-based optic nerve head segmentation on retinal fundus images. In Proceedings of the 2011 Annual International Conference of the IEEE Engineering in Medicine and Biology Society, Boston, MA, USA, 30 August–3 September 2011; pp. 2626–2629.

24. Rehman, Z.U.; Naqvi, S.S.; Khan, T.M.; Arsalan, M.; Khan, M.A.; Khalil, M. Multi-parametric optic disc segmentation using superpixel based feature classification. Expert Syst. Appl. 2019, 120, 461–473.

25. Xue, L.Y.; Lin, J.W.; Cao, X.R.; Zheng, S.H.; Yu, L. Optic disk detection and segmentation for retinal images using saliency model based on clustering. J. Comput. 2018, 29, 66–79.

26. Hamednejad, G.; Pourghassem, H. Retinal optic disk segmentation and analysis in fundus images using DBSCAN clustering algorithm. In Proceedings of the 2016 23rd Iranian Conference on Biomedical Engineering and 2016 1st International Iranian Conference on Biomedical Engineering (ICBME), Tehran, Iran, 24–25 November 2016; pp. 122–127.

27. Abdullah, A.S.; Rahebi, J.; Özok, Y.E.; Aljanabi, M. A new and effective method for human retina optic disc segmentation with fuzzy clustering method based on active contour model. Med. Biol. Eng. Comput. 2020, 58, 25–37.

28. Dai, B.; Wu, X.; Bu, W. Optic disc segmentation based on variational model with multiple energies. Pattern Recognit. 2017, 64, 226–235.

29. Yu, H.; Barriga, E.S.; Agurto, C.; Echegaray, S.; Pattichis, M.S.; Bauman, W.; Soliz, P. Fast localization and segmentation of optic disk in retinal images using directional matched filtering and level sets. IEEE Trans. Inf. Technol. Biomed. 2012, 16, 644–657.

30. Esmaeili, M.; Rabbani, H.; Dehnavi, A.M. Automatic optic disk boundary extraction by the use of curvelet transform and deformable variational level set model. Pattern Recognit. 2012, 45, 2832–2842.

31. Wong, D.; Liu, J.; Lim, J.; Jia, X.; Yin, F.; Li, H.; Wong, T. Level-set based automatic cup-to-disc ratio determination using retinal fundus images in ARGALI. In Proceedings of the 2008 30th annual international conference of the IEEE engineering in medicine and biology society, Vancouver, BC, Canada, 20–25 August 2008; pp. 2266–2269.

32. Joshi, G.D.; Sivaswamy, J.; Krishnadas, S. Optic disk and cup segmentation from monocular color retinal images for glaucoma assessment. IEEE Trans. Med. Imaging 2011, 30, 1192–1205.

33. Naqvi, S.S.; Fatima, N.; Khan, T.M.; Rehman, Z.U.; Khan, M.A. Automatic optic disk detection and segmentation by variational active contour estimation in retinal fundus images. Signal Image Video Process. 2019, 13, 1191–1198.

34. Fu, H.; Cheng, J.; Xu, Y.; Wong, D.W.K.; Liu, J.; Cao, X. Joint optic disc and cup segmentation based on multi-label deep network and polar transformation. IEEE Trans. Med. Imaging 2018, 37, 1597–1605.

35. Liu, Y.; Fu, D.; Huang, Z.; Tong, H. Optic disc segmentation in fundus images using adversarial training. IET Image Process. 2019, 13, 375–381.

36. Yu, S.; Xiao, D.; Frost, S.; Kanagasingam, Y. Robust optic disc and cup segmentation with deep learning for glaucoma detection. Comput. Med. Imaging Graph. 2019, 74, 61–71.

37. Wang, L.; Liu, H.; Lu, Y.; Chen, H.; Zhang, J.; Pu, J. A coarse-to-fine deep learning framework for optic disc segmentation in fundus images. Biomed. Signal Process. Control. 2019, 51, 82–89.

38. Kim, J.; Tran, L.; Chew, E.Y.; Antani, S. Optic disc and cup segmentation for glaucoma characterization using deep learning. In Proceedings of the 2019 IEEE 32nd International Symposium on Computer-Based Medical Systems (CBMS), Cordoba, Spain, 5–7 June 2019; pp. 489–494.

39. Gu, Z.; Cheng, J.; Fu, H.; Zhou, K.; Hao, H.; Zhao, Y.; Zhang, T.; Gao, S.; Liu, J. Ce-net: Context encoder network for 2d medical image segmentation. IEEE Trans. Med. Imaging 2019, 38, 2281–2292.

40. Al-Bander, B.; Williams, B.M.; Al-Nuaimy, W.; Al-Taee, M.A.; Pratt, H.; Zheng, Y. Dense fully convolutional segmentation of the optic disc and cup in colour fundus for glaucoma diagnosis. Symmetry 2018, 10, 87.

41. Pachade, S.; Porwal, P.; Kokare, M.; Giancardo, L.; Mériaudeau, F. NENet: Nested EfficientNet and adversarial learning for joint optic disc and cup segmentation. Med. Image Anal. 2021, 74, 102253.

42. Srivastava, R.; Cheng, J.; Wong, D.W.; Liu, J. Using deep learning for robustness to parapapillary atrophy in optic disc segmentation. In Proceedings of the 2015 IEEE 12th International Symposium on Biomedical Imaging (ISBI), Brooklyn, NY, USA, 16–19 April 2015; pp. 768–771.

43. Sreng, S.; Maneerat, N.; Hamamoto, K.; Win, K.Y. Deep learning for optic disc segmentation and glaucoma diagnosis on retinal images. Appl. Sci. 2020, 10, 4916.

44. Li, C.; Xu, C.; Gui, C.; Fox, M.D. Distance regularized level set evolution and its application to image segmentation. IEEE Trans. Image Process. 2010, 19, 3243–3254.

45. Osher, S.; Fedkiw, R.P. Level set methods: An overview and some recent results. J. Comput. Phys. 2001, 169, 463–502.

46. Chan, T.; Vese, L. An active contour model without edges. In Proceedings of the International Conference on Scale-Space Theories in Computer Vision, Corfu, Greece, 26–27 September 1999; Springer: Berlin/Heidelberg, Germany, 1999; pp. 141–151.

47. Gao, Y.; Yu, X.; Wu, C.; Zhou, W.; Wang, X.; Chu, H. Accurate and efficient segmentation of optic disc and optic cup in retinal images integrating multi-view information. IEEE Access 2019, 7, 148183–148197.

48. Fu, Y.; Chen, J.; Li, J.; Pan, D.; Yue, X.; Zhu, Y. Optic disc segmentation by U-net and probability bubble in abnormal fundus images. Pattern Recognit. 2021, 117, 107971.

49. Manju, K.; Sabeenian, R.; Surendar, A. A review on optic disc and cup segmentation. Biomed. Pharmacol. J. 2017, 10, 373–379.

50. Almazroa, A.; Burman, R.; Raahemifar, K.; Lakshminarayanan, V. Optic disc and optic cup segmentation methodologies for glaucoma image detection: A survey. J. Ophthalmol. 2015, 2015, 8092.

51. Liu, T.; Li, H.; Song, W.; Jiao, S.; Zhang, H.F. Fundus camera guided photoacoustic ophthalmoscopy. Curr. Eye Res. 2013, 38, 1229–1234.

52. Akram, M.U.; Usmani, D.; Ahmad, T.; Abbas, S.; Noor, S.F. Seamless fundus image stitching using wld to improve field of view. In Proceedings of the 2015 Fifth International Conference on Digital Information and Communication Technology and its Applications (DICTAP), Beirut, Lebanon, 29 April–1 May 2015; pp. 106–110.

53. Jackman, T.; Webster, J. Milestones, Rivalries and Controversy, Part III. Trans. Amer. Ophth Soc. 1887, 23, 568–571.

54. Decencière, E.; Zhang, X.; Cazuguel, G.; Lay, B.; Cochener, B.; Trone, C.; Gain, P.; Ordonez, R.; Massin, P.; Erginay, A.; et al. Feedback on a publicly distributed image database: The Messidor database. Image Anal. Stereol. 2014, 33, 231–234.

55. Carmona, E.J.; Rincón, M.; García-Feijoó, J.; Martínez-de-la Casa, J.M. Identification of the optic nerve head with genetic algorithms. Artif. Intell. Med. 2008, 43, 243–259.

56. Ayub, J.; Ahmad, J.; Muhammad, J.; Aziz, L.; Ayub, S.; Akram, U.; Basit, I. Glaucoma detection through optic disc and cup segmentation using K-mean clustering. In Proceedings of the 2016 international conference on computing, electronic and electrical engineering (ICE Cube), Quetta, Pakistan, 11–12 April 2016; pp. 143–147.

57. Sevastopolsky, A. Optic disc and cup segmentation methods for glaucoma detection with modification of U-Net convolutional neural network. Pattern Recognit. Image Anal. 2017, 27, 618–624.

58. Lu, Z.; Chen, D. Weakly supervised and semi-supervised semantic segmentation for optic disc of fundus image. Symmetry 2020, 12, 145.

59. Juneja, M.; Singh, S.; Agarwal, N.; Bali, S.; Gupta, S.; Thakur, N.; Jindal, P. Automated detection of Glaucoma using deep learning convolution network (G-net). Multimed. Tools Appl. 2020, 79, 15531–15553.

60. Wang, S.; Yu, L.; Li, K.; Yang, X.; Fu, C.W.; Heng, P.A. Boundary and entropy-driven adversarial learning for fundus image segmentation. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention; Springer: Cham, Switzerland, 2019; pp. 102–110.

61. Zilly, J.; Buhmann, J.M.; Mahapatra, D. Glaucoma detection using entropy sampling and ensemble learning for automatic optic cup and disc segmentation. Comput. Med. Imaging Graph. 2017, 55, 28–41.

62. Thakur, N.; Juneja, M. Optic disc and optic cup segmentation from retinal images using hybrid approach. Expert Syst. Appl. 2019, 127, 308–322.

| Channel | Average | Variance |
|---|---|---|
| Red | 4.9824 | 5.4550 |
| Blue | 4.4111 | 11.6290 |
| Green | 4.6481 | 5.9764 |
| Hue | 1.8755 | 10.1563 |
| Saturation | 2.1173 | 3.0322 |
| Value | 5.0408 | 5.4443 |
| Lightness | 4.6617 | 5.1113 |
| A (green/magenta) | 2.0801 | 2.5126 |
| B (blue/yellow) | 2.6658 | 4.4554 |

| Score | Criteria |
|---|---|
| 0 | There are obvious errors in the boundaries of all four sides. |
| 1 | There are errors in the boundaries of all four sides, but they are less severe than for a score of 0. |
| 2 | Only one side is accurate enough. * |
| 3 | Two sides are accurate enough. |
| 4 | Three sides are accurate enough. |
| 5 | All four sides are accurate enough. |
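The criteria amount to a simple mapping from the number of sides judged accurate enough to a score. A minimal sketch, assuming the clinician reports that count and, only when no side is accurate, whether the errors are obvious; the function name and arguments are hypothetical.

```python
def fse_score(accurate_sides: int, obvious_errors: bool = False) -> int:
    """Four-side evaluation score from a clinician's per-side judgement.

    accurate_sides: number of the four boundary sides judged accurate enough.
    obvious_errors: only consulted when no side is accurate; True gives 0
    (obvious errors on all four sides), False gives 1 (errors, but milder).
    """
    if not 0 <= accurate_sides <= 4:
        raise ValueError("accurate_sides must be in 0..4")
    if accurate_sides == 0:
        return 0 if obvious_errors else 1
    return accurate_sides + 1  # 1 side -> 2, ..., 4 sides -> 5


print([fse_score(k) for k in range(5)])    # [1, 2, 3, 4, 5]
print(fse_score(0, obvious_errors=True))   # 0
```

The distinction between scores 0 and 1 remains a subjective clinical judgement; the sketch only encodes it as an input flag.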

| Parameters | Value |
|---|---|
| The average radius of OD | In posterior fundus images, it is set as the of the radius of the visible circular area. In wide-angle fundus images, it is set as the of the radius of the visible circular area. |
| | 0.1 |
| | 3.0 |
| | 0.2 |
| | 4.3 |
| | 2.0 |
| | 1.1 |

| Dataset | DRISHTI-GS | TMUEH |
|---|---|---|
| Maximal IoU | 0.9767 | 0.9300 |
| Minimal IoU | 0.5933 | 0.5205 |
| Average IoU | 0.9275 | 0.8179 |
| Variance in IoU | 0.0025 | 0.0104 |
| IoU | 88/101 cases | 8/37 cases |
| IoU | 11/101 cases | 20/37 cases |
| IoU | 2/101 cases | 9/37 cases |
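The IoU statistics above are computed per image from a binary predicted OD mask and its ground truth, then aggregated over each data set. A minimal sketch of both steps; the helper names are hypothetical, the handling of two empty masks is an assumption, and `np.var` gives the population variance because the convention used in the paper is not stated here. An IoU of 0 simply means the predicted region does not overlap the ground truth at all.

```python
import numpy as np


def iou(pred, gt) -> float:
    """Intersection over union of two binary OD masks."""
    pred = np.asarray(pred, dtype=bool)
    gt = np.asarray(gt, dtype=bool)
    union = np.logical_or(pred, gt).sum()
    if union == 0:
        return 1.0  # assumption: two empty masks count as perfect agreement
    return float(np.logical_and(pred, gt).sum() / union)


def summarize(ious):
    """Maximal, minimal and average IoU, plus its variance, over a data set."""
    a = np.asarray(ious, dtype=float)
    # Population variance; the paper's convention is an open assumption here.
    return {"max": a.max(), "min": a.min(), "mean": a.mean(), "var": a.var()}


pred = [[1, 1, 0], [0, 1, 0]]
gt = [[1, 0, 0], [0, 1, 1]]
print(iou(pred, gt))              # 0.5
print(iou(np.zeros((2, 3)), gt))  # 0.0 (prediction misses the OD entirely)
print(summarize([0.5, 1.0]))
```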

| Dataset | DRISHTI-GS | TMUEH |
|---|---|---|
| Average FSE | 4.6436 | 3.5946 |
| FSE = 5 | 83/101 cases | 12/37 cases |
| FSE = 4 | 8/101 cases | 10/37 cases |
| FSE = 3 | 5/101 cases | 7/37 cases |
| FSE = 2 | 2/101 cases | 5/37 cases |
| FSE = 1 | 3/101 cases | 2/37 cases |
| FSE = 0 | 0/101 cases | 1/37 cases |
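The Average FSE row is the count-weighted mean of the per-image scores. A short check that reuses only the counts from this table reproduces both averages; the helper name is hypothetical.

```python
def average_fse(counts: dict[int, int]) -> float:
    """Mean FSE score given {score: number of images with that score}."""
    total = sum(counts.values())
    return sum(score * n for score, n in counts.items()) / total


# Score distributions taken from the FSE results table.
drishti_gs = {5: 83, 4: 8, 3: 5, 2: 2, 1: 3, 0: 0}
tmueh = {5: 12, 4: 10, 3: 7, 2: 5, 1: 2, 0: 1}

print(round(average_fse(drishti_gs), 4))  # 4.6436
print(round(average_fse(tmueh), 4))       # 3.5946
```

The same calculation applies to the per-method FSE distributions in the comparison tables.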

| Approaches | Average IoU |
|---|---|
| U-Net [57] | 0.8900 |
| BEAL-DeepLabv3+ [60] | 0.8620 |
| LARKIFCM [62] | 0.9100 |
| U-Net [58] | 0.9187 |
| EE-CNN [61] | 0.9140 |
| U-Net [59] | 0.9062 |
| Proposed | 0.9275 |

| Method | Active Contour-Based [31] | Threshold-Based [18] | Proposed |
|---|---|---|---|
| Maximal IoU | 0.9695 | 0.9720 | 0.9767 |
| Minimal IoU | 0 | 0 | 0.5933 |
| Average IoU | 0.8757 | 0.8760 | 0.9275 |
| Variance in IoU | 0.0149 | 0.0255 | 0.0025 |
| IoU | 58/101 cases | 74/101 cases | 88/101 cases |
| IoU | 29/101 cases | 17/101 cases | 11/101 cases |
| IoU | 14/101 cases | 10/101 cases | 2/101 cases |

| Method | Active Contour-Based [31] | Threshold-Based [18] | Proposed |
|---|---|---|---|
| Maximal IoU | 0.9425 | 0.9419 | 0.9300 |
| Minimal IoU | 0.1711 | 0.4671 | 0.5205 |
| Average IoU | 0.7321 | 0.7614 | 0.8179 |
| Variance in IoU | 0.0330 | 0.0141 | 0.0104 |
| IoU | 3/37 cases | 4/37 cases | 8/37 cases |
| IoU | 16/37 cases | 15/37 cases | 20/37 cases |
| IoU | 18/37 cases | 18/37 cases | 9/37 cases |

| Method | Active Contour-Based [31] | Threshold-Based [18] | Proposed |
|---|---|---|---|
| Average FSE | 4.3069 | 4.3762 | 4.6436 |
| FSE = 5 | 60/101 cases | 74/101 cases | 83/101 cases |
| FSE = 4 | 19/101 cases | 14/101 cases | 8/101 cases |
| FSE = 3 | 17/101 cases | 3/101 cases | 5/101 cases |
| FSE = 2 | 4/101 cases | 3/101 cases | 2/101 cases |
| FSE = 1 | 0/101 cases | 1/101 cases | 3/101 cases |
| FSE = 0 | 1/101 cases | 6/101 cases | 0/101 cases |

| Method | Active Contour-Based [31] | Threshold-Based [18] | Proposed |
|---|---|---|---|
| Average FSE | 3.4595 | 3.5135 | 3.5946 |
| FSE = 5 | 12/37 cases | 14/37 cases | 12/37 cases |
| FSE = 4 | 11/37 cases | 6/37 cases | 10/37 cases |
| FSE = 3 | 3/37 cases | 7/37 cases | 7/37 cases |
| FSE = 2 | 7/37 cases | 7/37 cases | 5/37 cases |
| FSE = 1 | 1/37 cases | 1/37 cases | 2/37 cases |
| FSE = 0 | 3/37 cases | 2/37 cases | 1/37 cases |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Xue, X.; Wang, L.; Du, W.; Fujiwara, Y.; Peng, Y. Multiple Preprocessing Hybrid Level Set Model for Optic Disc Segmentation in Fundus Images. Sensors 2022, 22, 6899. https://doi.org/10.3390/s22186899
