Single-Shot Object Detection via Feature Enhancement and Channel Attention

Abstract

:1. Introduction

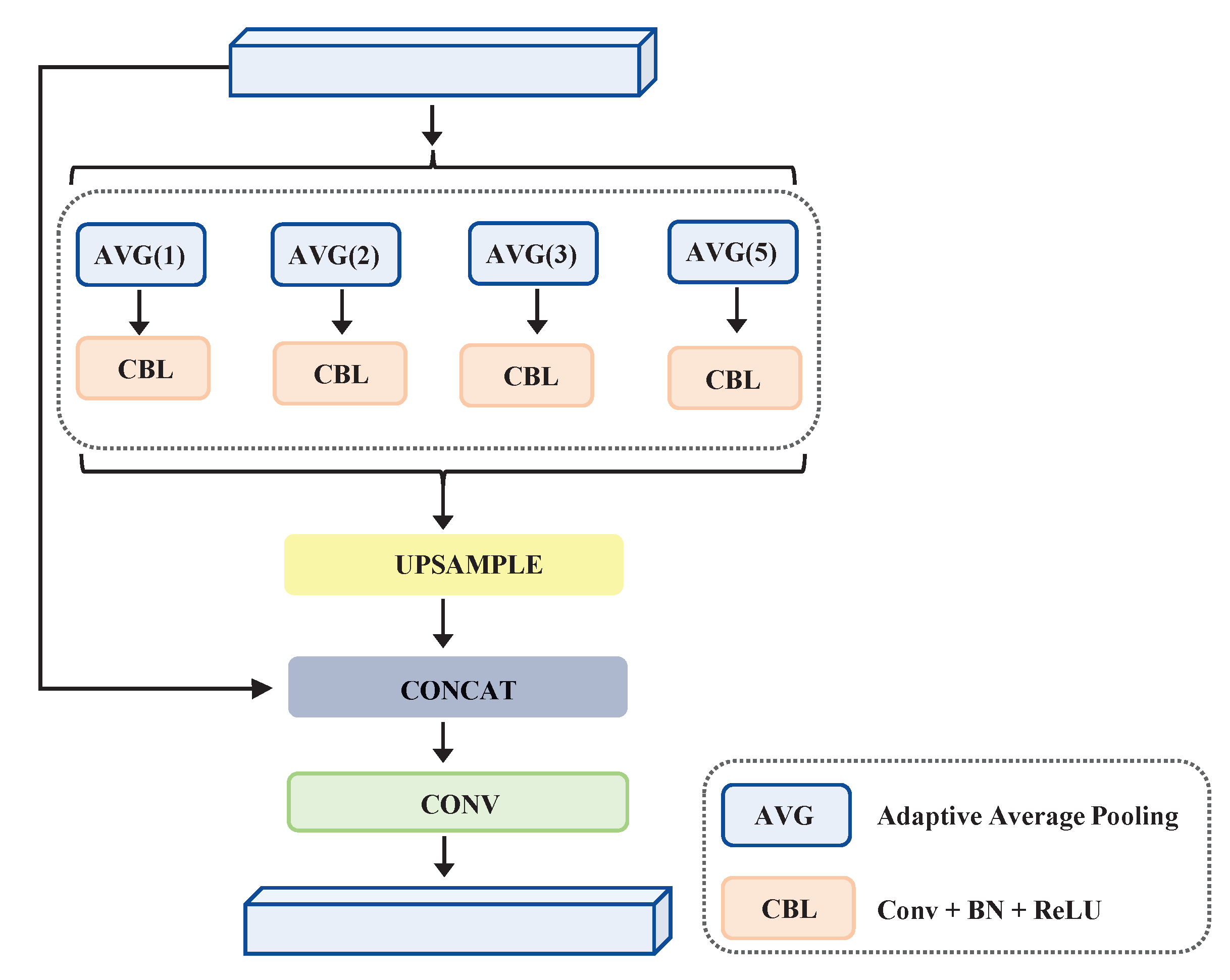

- For feature enhancement, we propose a lightweight efficient feature-extraction module (EFM) and pyramidal aggregation module (PAM). The EFM applies various dilation rates, batch normalization (BN), and ReLU to more richly explore the contextual information of the CNN. The PAM uses different adaptive average pooling sizes to exploit richer semantic information from the deep layers, and upsampling is embraced to keep the same feature size as that of the original input feature map.

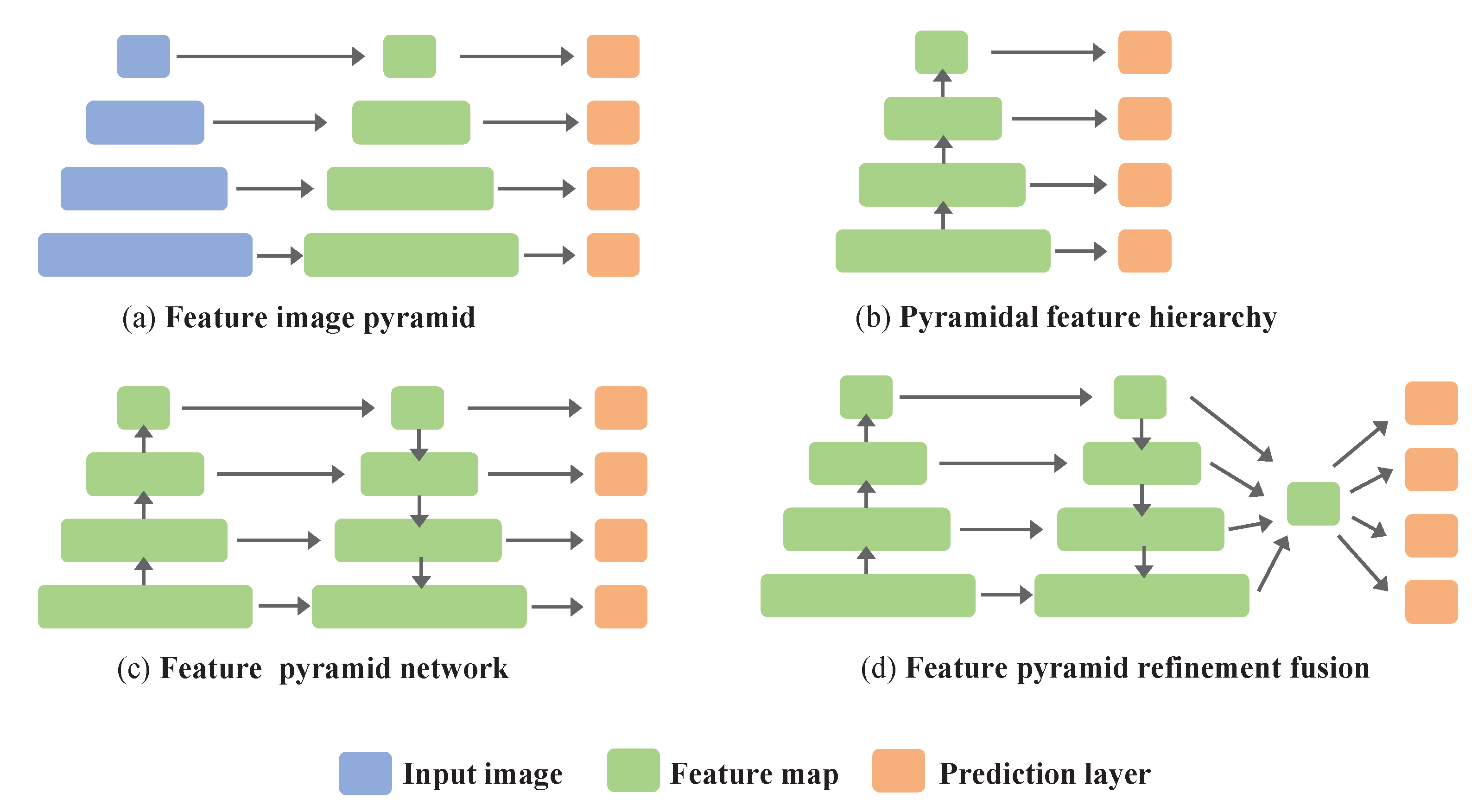

- Aiming to make full multi-scale features, we built an effective feature pyramid refinement fusion (FPRF) to calibrate the multi-scale features during the fusion process. The FPRF broadens the ways of the single lateral connections of the traditional FPN and enriches the approaches of multi-scale feature fusion, thus greatly improving the detection performance.

- To alleviate the aliasing effects of the FPN, we introduce an attention-guided module (AGM); an improved channel attention mechanism was developed to ameliorate the problem of fused features, and it is efficient and speeds up the training process.

- By applying the above four improvements, we designed a feature-enhancement and channel-attention-guided single-shot detector (FCSSD). Experiments on the PASCAL VOC2007 and MS COCO2017 datasets showed the effectiveness of our proposed FCSSD and that it can outperform mainstream object detectors.

2. Related Work

2.1. Deep-Learning-Based Object Detectors

2.2. Enhancement of Feature Representation

2.3. Attention Mechanisms

3. Methodology

3.1. FCSSD Architecture

3.2. Efficient Feature-Extraction Module

3.3. Pyramidal Aggregation Module

3.4. Feature Pyramid Refinement Fusion

3.5. Attention-Guided Module

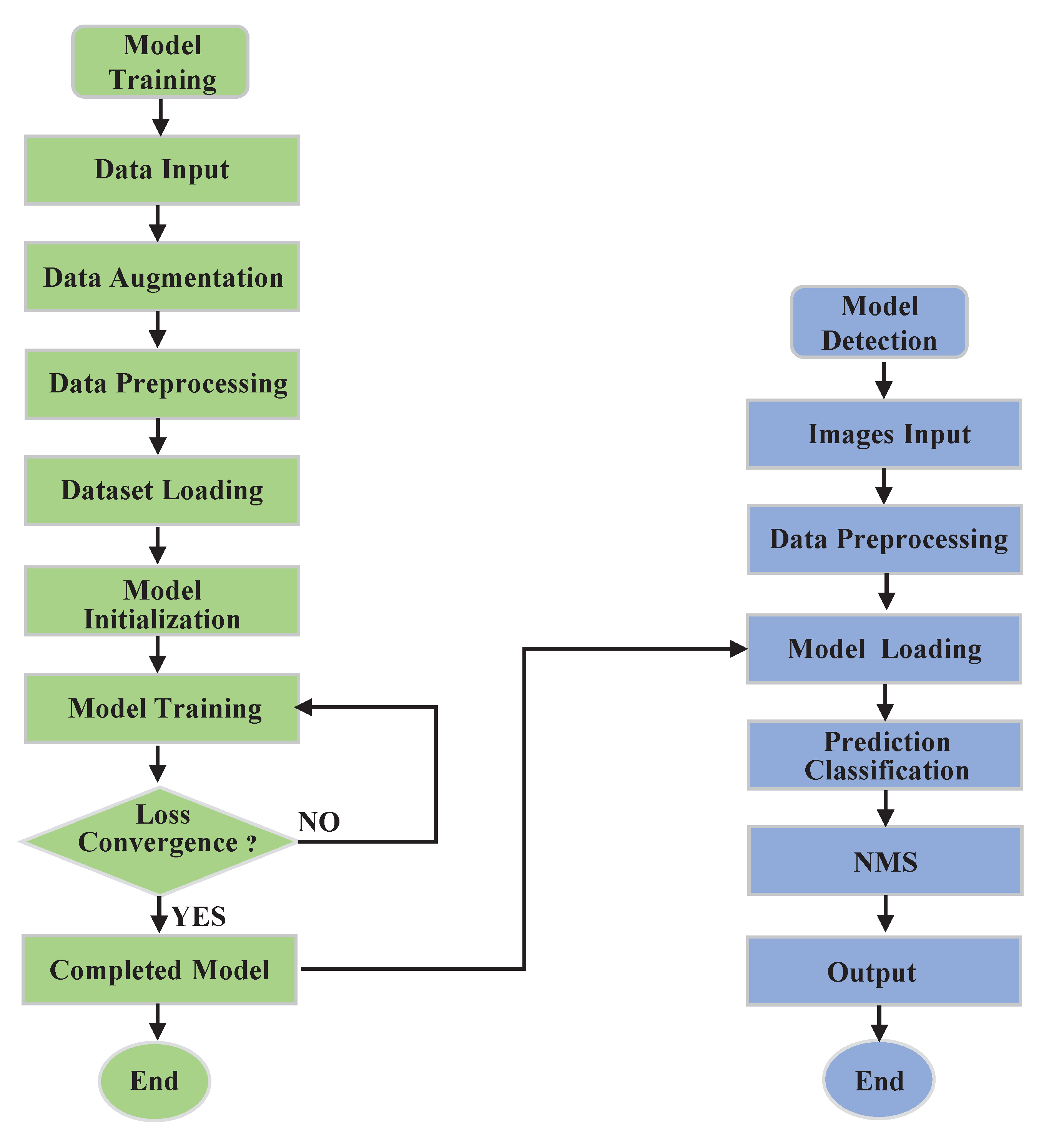

4. Results

4.1. Dataset and Experimental Details

4.2. Evaluation Metric

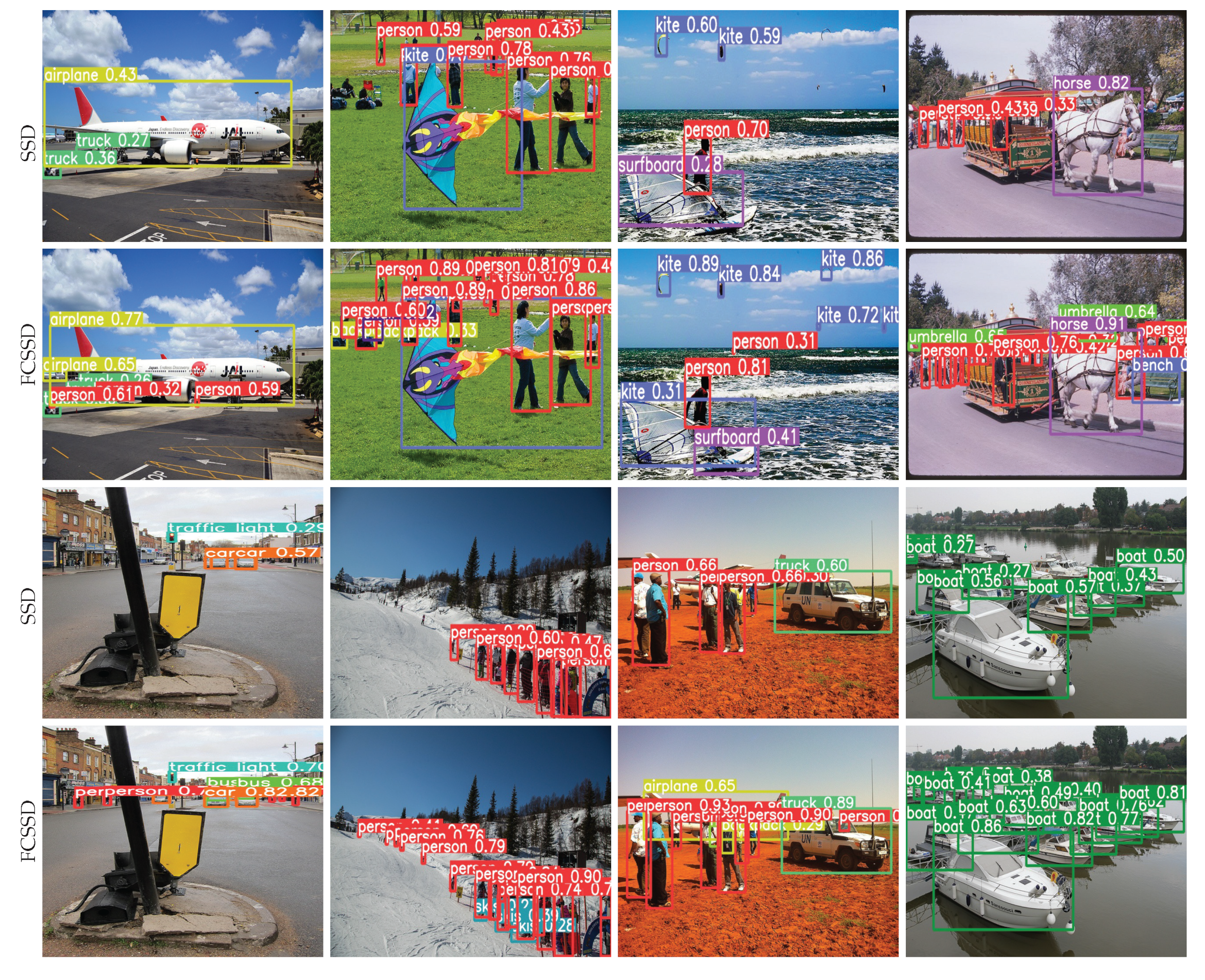

4.3. Experimental Analysis

4.3.1. PASCAL VOC 2007

4.3.2. MS COCO

4.3.3. Ablation Study

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Kang, K.; Li, H.; Yan, J.; Zeng, X.; Yang, B.; Xiao, T.; Zhang, C.; Wang, Z.; Wang, R.; Wang, X.; et al. T-cnn: Tubelets with convolutional neural networks for object detection from videos. IEEE Trans. Circuits Syst. Video Technol. 2017, 28, 2896–2907. [Google Scholar] [CrossRef]

- Wu, Q.; Shen, C.; Wang, P.; Dick, A.; Van Den Hengel, A. Image captioning and visual question answering based on attributes and external knowledge. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 40, 1367–1381. [Google Scholar] [CrossRef] [PubMed]

- Dai, J.; He, K.; Sun, J. Instance-aware semantic segmentation via multi-task network cascades. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 3150–3158. [Google Scholar]

- LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444. [Google Scholar] [CrossRef] [PubMed]

- Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich feature hierarchies for accurate object detection and semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 580–587. [Google Scholar]

- He, K.; Zhang, X.; Ren, S.; Sun, J. Spatial pyramid pooling in deep convolutional networks for visual recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 37, 1904–1916. [Google Scholar] [CrossRef] [PubMed]

- Girshick, R. Fast r-cnn. In Proceedings of the IEEE International Conference on Computer Vision; 2015; pp. 1440–1448. Available online: https://openaccess.thecvf.com/content_iccv_2015/html/Girshick_Fast_R-CNN_ICCV_2015_paper.html (accessed on 9 July 2022).

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster r-cnn: Towards real-time object detection with region proposal networks. Adv. Neural Inf. Process. Syst. 2015, 28. Available online: https://proceedings.neurips.cc/paper/2015/hash/14bfa6bb14875e45bba028a21ed38046-Abstract.html (accessed on 9 July 2022). [CrossRef] [PubMed]

- He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask r-cnn. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2961–2969. [Google Scholar]

- Dai, J.; Li, Y.; He, K.; Sun, J. R-fcn: Object detection via region-based fully convolutional networks. Adv. Neural Inf. Process. Syst. 2016, 29. [Google Scholar]

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788. [Google Scholar]

- Redmon, J.; Farhadi, A. YOLO9000: Better, faster, stronger. In Proceedings of the IEEE conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 7263–7271. [Google Scholar]

- Redmon, J.; Farhadi, A. Yolov3: An incremental improvement. arXiv 2018, arXiv:1804.02767. [Google Scholar]

- Bochkovskiy, A.; Wang, C.Y.; Liao, H.Y.M. Yolov4: Optimal speed and accuracy of object detection. arXiv 2020, arXiv:2004.10934. [Google Scholar]

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. Ssd: Single shot multibox detector. In European Conference on Computer Vision; Springer: Berlin/Heidelberg, Germany, 2016; pp. 21–37. [Google Scholar]

- Li, Z.; Zhou, F. FSSD: Feature fusion single shot multibox detector. arXiv 2017, arXiv:1712.00960. [Google Scholar]

- Fu, C.Y.; Liu, W.; Ranga, A.; Tyagi, A.; Berg, A.C. Dssd: Deconvolutional single shot detector. arXiv 2017, arXiv:1701.06659. [Google Scholar]

- Lin, T.Y.; Goyal, P.; Girshick, R.; He, K.; Dollár, P. Focal loss for dense object detection. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2980–2988. [Google Scholar]

- Lin, T.Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature pyramid networks for object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2117–2125. [Google Scholar]

- Pang, J.; Chen, K.; Shi, J.; Feng, H.; Ouyang, W.; Lin, D. Libra r-cnn: Towards balanced learning for object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 821–830. [Google Scholar]

- Tan, M.; Pang, R.; Le, Q.V. Efficientdet: Scalable and efficient object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2020; pp. 10781–10790. [Google Scholar]

- Chen, L.C.; Papandreou, G.; Kokkinos, I.; Murphy, K.; Yuille, A.L. Deeplab: Semantic image segmentation with deep convolutional nets, atrous convolution, and fully connected crfs. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 40, 834–848. [Google Scholar] [CrossRef] [PubMed]

- Everingham, M.; Van Gool, L.; Williams, C.K.; Winn, J.; Zisserman, A. The pascal visual object classes (voc) challenge. Int. J. Comput. Vis. 2010, 88, 303–338. [Google Scholar] [CrossRef]

- Cai, Z.; Vasconcelos, N. Cascade r-cnn: Delving into high quality object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 6154–6162. [Google Scholar]

- Liu, Y.; Wang, Y.; Wang, S.; Liang, T.; Zhao, Q.; Tang, Z.; Ling, H. Cbnet: A novel composite backbone network architecture for object detection. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; Volume 34, pp. 11653–11660. [Google Scholar]

- Kim, S.W.; Kook, H.K.; Sun, J.Y.; Kang, M.C.; Ko, S.J. Parallel feature pyramid network for object detection. In Proceedings of the European Conference on Computer Vision (ECCV), online, 8–14 September 2018; pp. 234–250. [Google Scholar]

- Liu, S.; Qi, L.; Qin, H.; Shi, J.; Jia, J. Path aggregation network for instance segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 8759–8768. [Google Scholar]

- Li, Y.; Chen, Y.; Wang, N.; Zhang, Z. Scale-aware trident networks for object detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Korea, 27–28 October 2019; pp. 6054–6063. [Google Scholar]

- Zhang, S.; Wen, L.; Bian, X.; Lei, Z.; Li, S.Z. Single-shot refinement neural network for object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 4203–4212. [Google Scholar]

- Wang, F.; Jiang, M.; Qian, C.; Yang, S.; Li, C.; Zhang, H.; Wang, X.; Tang, X. Residual attention network for image classification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 3156–3164. [Google Scholar]

- Ren, M.; Zemel, R.S. End-to-end instance segmentation with recurrent attention. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 6656–6664. [Google Scholar]

- Lu, J.; Xiong, C.; Parikh, D.; Socher, R. Knowing when to look: Adaptive attention via a visual sentinel for image captioning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 375–383. [Google Scholar]

- Li, H.; Liu, Y.; Ouyang, W.; Wang, X. Zoom out-and-in network with map attention decision for region proposal and object detection. Int. J. Comput. Vis. 2019, 127, 225–238. [Google Scholar] [CrossRef]

- Choi, H.T.; Lee, H.J.; Kang, H.; Yu, S.; Park, H.H. SSD-EMB: An improved SSD using enhanced feature map block for object detection. Sensors 2021, 21, 2842. [Google Scholar] [CrossRef] [PubMed]

- Hu, J.; Shen, L.; Sun, G. Squeeze-and-excitation networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 7132–7141. [Google Scholar]

- Qilong, W.; Banggu, W.; Pengfei, Z.; Peihua, L.; Wangmeng, Z.; Qinghua, H. ECA-Net: Efficient Channel Attention for Deep Convolutional Neural Networks. In CVF Conference on Computer Vision and Pattern Recognition (CVPR); Available online: https://arxiv.org/abs/1910.03151 (accessed on 9 July 2022).

- Hu, J.; Shen, L.; Albanie, S.; Sun, G.; Vedaldi, A. Gather-excite: Exploiting feature context in convolutional neural networks. Adv. Neural Inf. Process. Syst. 2018, 31. [Google Scholar]

- Li, X.; Wang, W.; Hu, X.; Yang, J. Selective kernel networks. In Proceedings of the IEEE/CVF conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 510–519. [Google Scholar]

- Woo, S.; Park, J.; Lee, J.Y.; Kweon, I.S. Cbam: Convolutional block attention module. In Proceedings of the European Conference on Computer Vision (ECCV), online, 8–14 September 2018; pp. 3–19. [Google Scholar]

- Lin, T.Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft coco: Common objects in context. In European Conference on Computer Vision; Springer: Berlin/Heidelberg, Germany, 2014; pp. 740–755. [Google Scholar]

- Jiang, D.; Sun, B.; Su, S.; Zuo, Z.; Wu, P.; Tan, X. FASSD: A feature fusion and spatial attention-based single shot detector for small object detection. Electronics 2020, 9, 1536. [Google Scholar] [CrossRef]

- Luo, Y.; Cao, X.; Zhang, J.; Guo, J.; Shen, H.; Wang, T.; Feng, Q. CE-FPN: Enhancing channel information for object detection. Multimed. Tools Appl. 2022, 81, 30685–30704. [Google Scholar] [CrossRef]

- Li, H.; Liu, L.; Du, J.; Jiang, F.; Guo, F.; Hu, Q.; Fan, L. An Improved YOLOv3 for Foreign Objects Detection of Transmission Lines. IEEE Access 2022, 10, 45620–45628. [Google Scholar] [CrossRef]

- Zhu, Y.; Zhao, C.; Wang, J.; Zhao, X.; Wu, Y.; Lu, H. Couplenet: Coupling global structure with local parts for object detection. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 4126–4134. [Google Scholar]

- Liu, S.; Huang, D.; Wang, Y. Receptive field block net for accurate and fast object detection. In Proceedings of the European Conference on Computer Vision (ECCV), Salt Lake City, UT, USA, 18–22 June 2018; pp. 385–400. [Google Scholar]

- Bell, S.; Zitnick, C.L.; Bala, K.; Girshick, R. Inside-outside net: Detecting objects in context with skip pooling and recurrent neural networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 2874–2883. [Google Scholar]

- Kong, T.; Sun, F.; Yao, A.; Liu, H.; Lu, M.; Chen, Y. Ron: Reverse connection with objectness prior networks for object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 5936–5944. [Google Scholar]

- Jeong, J.; Park, H.; Kwak, N. Enhancement of SSD by concatenating feature maps for object detection. arXiv 2017, arXiv:1705.09587. [Google Scholar]

- Woo, S.; Hwang, S.; Kweon, I.S. Stairnet: Top-down semantic aggregation for accurate one shot detection. In Proceedings of the 2018 IEEE Winter Conference on Applications of Computer Vision (WACV), Lake Tahoe, NV, USA, 12–15 March 2018; pp. 1093–1102. [Google Scholar]

- Zhang, Z.; Qiao, S.; Xie, C.; Shen, W.; Wang, B.; Yuille, A.L. Single-shot object detection with enriched semantics. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 5813–5821. [Google Scholar]

- Pang, Y.; Wang, T.; Anwer, R.M.; Khan, F.S.; Shao, L. Efficient featurized image pyramid network for single shot detector. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 7336–7344. [Google Scholar]

- Zhao, Q.; Sheng, T.; Wang, Y.; Tang, Z.; Chen, Y.; Cai, L.; Ling, H. M2det: A single-shot object detector based on multi-level feature pyramid network. In Proceedings of the AAAI Conference on Artificial Intelligence, Honolulu, HI, USA, 27 January–1 February 2019; Volume 33, pp. 9259–9266. [Google Scholar]

- Cao, J.; Pang, Y.; Li, X. Triply supervised decoder networks for joint detection and segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 7392–7401. [Google Scholar]

- Law, H.; Deng, J. Cornernet: Detecting objects as paired keypoints. In Proceedings of the European Conference on Computer Vision (ECCV), online, 8–14 September 2018; pp. 734–750. [Google Scholar]

- Wang, C.Y.; Bochkovskiy, A.; Liao, H.Y.M. Scaled-yolov4: Scaling cross stage partial network. In Proceedings of the IEEE/cvf Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 13029–13038. [Google Scholar]

- Ge, Z.; Liu, S.; Wang, F.; Li, Z.; Sun, J. Yolox: Exceeding yolo series in 2021. arXiv 2021, arXiv:2107.08430. [Google Scholar]

| Name | Train Images | Validation Images | Test Images | Category |

|---|---|---|---|---|

| VOC 2007 [23] | 2501 | 2510 | 4952 | 20 |

| MS COCO 2017 [40] | 11287 | 5000 | 40670 | 80 |

| Method | Training Data | Backbone | Input size | mAP | FPS |

|---|---|---|---|---|---|

| Two-stage detectors: | |||||

| Faster R-CNN [8] | 07 + 12 | VGG-16 | ∼ | 73.2 | 7 |

| Faster R-CNN [8] | 07 + 12 | ResNet-101 | ∼ | 76.4 | 5 |

| R-FCN [10] | 07 + 12 | ResNet-101 | ∼ | 80.5 | 9 |

| CoupleNet [44] | 07 + 12 | VGG-16 | ∼ | 82.7 | 8 |

| ION [46] | 07 + 12 | VGG-16 | ∼ | 79.2 | 1.3 |

| One-stage detectors: | |||||

| SSD [15] | 07 + 12 | VGG-16 | 77.2 | 46 | |

| RON320++ [47] | 07 + 12 | VGG-16 | 76.6 | 20 | |

| DSSD [17] | 07 + 12 | ResNet-101 | 78.6 | 10 | |

| R-SSD [48] | 07 + 12 | VGG-16 | 78.5 | 35 | |

| YOLOv2 [12] | 07 + 12 | DarkNet-19 | 78.6 | 40 | |

| StrairNet [49] | 07 + 12 | VGG-16 | 78.8 | 30 | |

| DES [50] | 07 + 12 | VGG-16 | 79.7 | 76 | |

| PFPNet [26] | 07 + 12 | VGG-16 | 80.0 | 40 | |

| RFBNet [45] | 07 + 12 | VGG-16 | 80.5 | 83 | |

| RefineDet [29] | 07 + 12 | VGG-16 | 79.7 | 76 | |

| FCSSD (Ours) | 07 + 12 | VGG-16 | 81.5 | 55 | |

| SSD [15] | 07 + 12 | VGG-16 | 77.2 | 46 | |

| FSSD [16] | 07 + 12 | VGG-16 | 80.9 | 35.7 | |

| DES [50] | 07 + 12 | VGG-16 | 81.7 | 31 | |

| RefineDet [29] | 07 + 12 | VGG-16 | 81.8 | 21 | |

| RFBNet [45] | 07 + 12 | VGG-16 | 82.2 | 38 | |

| PFPNet [26] | 07 + 12 | VGG-16 | 82.3 | 26 | |

| FCSSD (Ours) | 07 + 12 | VGG-16 | 83.2 | 35 | |

| Method | Backbone | Input Size | Time (ms) | ||||||

|---|---|---|---|---|---|---|---|---|---|

| Two-stage detectors: | |||||||||

| Faster R-CNN [8] | VGG-16 | 147 | 24.2 | 45.3 | 23.5 | 7.7 | 26.4 | 37.1 | |

| Faster FPN [19] | ResNet-101-FPN | 240 | 36.2 | 59.1 | 39.0 | 18.2 | 39.0 | 48.2 | |

| CoupleNet [44] | ResNet-101 | 121 | 34.4 | 54.8 | 37.2 | 13.4 | 38.1 | 50.8 | |

| Mask R-CNN [9] | ResNetXt-101-FPN | 210 | 39.8 | 62.3 | 43.4 | 22.1 | 43.2 | 51.2 | |

| Cascade R-CNN [24] | ResNet-101-FPN | 141 | 42.8 | 62.1 | 46.3 | 23.7 | 45.5 | 55.2 | |

| One-stage detectors: | |||||||||

| SSD [15] | VGG-16 | 20 | 25.1 | 43.1 | 25.8 | 6.6 | 25.9 | 41.4 | |

| DSSD [17] | ResNet-101 | - | 28.0 | 46.1 | 29.2 | 7.4 | 28.1 | 47.6 | |

| RetinaNet [18] | ResNet-101 | 90 | 34.4 | 53.1 | 36.8 | 14.7 | 38.5 | 49.1 | |

| DES [50] | VGG-16 | - | 28.3 | 47.3 | 29.4 | 8.5 | 29.9 | 45.2 | |

| RFBNet [45] | VGG-16 | 15 | 30.3 | 49.3 | 31.8 | 11.8 | 31.9 | 45.9 | |

| EFIPNet [51] | VGG-16 | 14 | 30.0 | 48.8 | 31.7 | 10.9 | 32.8 | 46.3 | |

| RefineDet320+ [29] | VGG-16 | - | 35.2 | 56.1 | 37.7 | 19.5 | 37.2 | 47.0 | |

| M2det [52] | VGG-16 | - | 38.9 | 59.1 | 42.4 | 24.4 | 41.5 | 47.6 | |

| FCSSD(ours) | VGG-16 | 35 | 38.2 | 59.4 | 41.5 | 15.3 | 40.3 | 58.6 | |

| YOLOv2 [12] | DarkNet | 25 | 21.6 | 44.0 | 19.2 | 5.0 | 22.4 | 35.5 | |

| YOLOv3 [13] | DarkNet-53 | 35 | 31.0 | 55.3 | 32.3 | 15.3 | 33.2 | 42.8 | |

| SSD [15] | VGG-16 | 28 | 28.8 | 48.5 | 30.3 | 10.9 | 31.8 | 43.5 | |

| DSSD [17] | ResNet-101 | 156 | 33.2 | 53.3 | 35.2 | 13.0 | 35.4 | 51.1 | |

| RefineDet [29] | VGG-16 | 45 | 33.0 | 54.5 | 35.5 | 16.3 | 36.3 | 44.3 | |

| RefineDet [29] | ResNet-101 | - | 36.4 | 57.5 | 39.5 | 16.6 | 39.9 | 51.4 | |

| RFBNet-E [45] | VGG-16 | 30 | 34.4 | 55.7 | 36.4 | 17.6 | 37.0 | 47.6 | |

| TripleNet [53] | ResNet-101 | - | 37.4 | 59.3 | 39.6 | 18.5 | 39.0 | 52.7 | |

| EfficientDet-D1 [21] | EfficientNet-B1 | 50 | 39.6 | 58.6 | 42.3 | 17.9 | 44.3 | 56.0 | |

| CornerNet [54] | Hourglass-104 | 227 | 40.5 | 56.5 | 43.1 | 19.4 | 42.7 | 53.9 | |

| YOLOv4 [14] | CSPDarkNet53 | 38 | 41.2 | 62.8 | 44.3 | 20.4 | 44.4 | 56.0 | |

| M2det [52] | VGG-16 | - | 42.9 | 62.5 | 47.2 | 28.0 | 47.4 | 52.8 | |

| Scaled-YOLOv4 [55] | CD53 | - | 45.5 | 64.1 | 49.5 | 27.0 | 49.0 | 56.7 | |

| YOLOX-M [56] | Modified CSP v5 | - | 46.4 | 65.4 | 50.6 | 26.3 | 51.0 | 59.9 | |

| FCSSD(ours) | VGG-16 | 65 | 41.5 | 63.1 | 46.9 | 21.8 | 46.2 | 56.5 | |

| Method | EFM | PAM | FPRF | AGM | mAP |

|---|---|---|---|---|---|

| Baseline SSD | 77.2 | ||||

| (a) | ✓ | 78.6 | |||

| (b) | ✓ | 78.4 | |||

| (c) | ✓ | 79.2 | |||

| (d) | ✓ | ✓ | 79.5 | ||

| (e) | ✓ | ✓ | 80.2 | ||

| FCSSD (ours) | ✓ | ✓ | ✓ | ✓ | 81.5 |

| Scheme | Methods | ||||||

|---|---|---|---|---|---|---|---|

| A | Baseline SSD | 25.1 | 43.1 | 25.8 | 6.6 | 25.9 | 41.4 |

| B | A + EFM | 27.2 | 45.2 | 27.2 | 7.9 | 27.3 | 44.6 |

| C | B + PAM | 29.3 | 47.9 | 30.6 | 9.1 | 30.1 | 45.2 |

| D | C + FPRF | 37.5 | 56.9 | 38.8 | 13.5 | 38.3 | 54.2 |

| E | D + AGM | 38.2 | 59.4 | 41.5 | 15.3 | 40.3 | 58.6 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations. |

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Li, Y.; Wang, L.; Wang, Z. Single-Shot Object Detection via Feature Enhancement and Channel Attention. Sensors 2022, 22, 6857. https://doi.org/10.3390/s22186857

Li Y, Wang L, Wang Z. Single-Shot Object Detection via Feature Enhancement and Channel Attention. Sensors. 2022; 22(18):6857. https://doi.org/10.3390/s22186857

Chicago/Turabian StyleLi, Yi, Lingna Wang, and Zeji Wang. 2022. "Single-Shot Object Detection via Feature Enhancement and Channel Attention" Sensors 22, no. 18: 6857. https://doi.org/10.3390/s22186857

APA StyleLi, Y., Wang, L., & Wang, Z. (2022). Single-Shot Object Detection via Feature Enhancement and Channel Attention. Sensors, 22(18), 6857. https://doi.org/10.3390/s22186857