Open and Cost-Effective Digital Ecosystem for Lake Water Quality Monitoring

Abstract

1. Introduction

- resistance to change: human resources are often reluctant to change their mindset and way of working [29];

- lack of data interoperability between systems: this is a particularly delicate topic, as the environmental sector usually relies on many proprietary solutions, each with its own standards for data collection, logging, transmission and sharing. This broad topic spans architecture, protocols and standards [30];

- lack of an easy-to-use solution: this aspect is closely tied to the previous one, since the variety of proprietary solutions highlights the absence of a simple, smooth way to import and integrate different data formats in a single solution;

- costs: it is a common belief that the benefit or value of having data in real time and with high frequency cannot outweigh the initial investment and maintenance costs [21].

- it is an expensive procedure since it requires costly external analyses and manpower/working hours;

- highly qualified personnel are involved during the campaign, at a very high hourly cost;

- results are based on a low temporal resolution time series (monthly samples);

- during the sampling process, technicians handle a potentially dangerous radioactive component.

2. Materials and Methods

- use an open source approach as much as possible for both the software and the hardware;

- use open standards for data integration and sharability;

- maximise the possibility of easily extending and customising the software;

- use cloud technologies to deploy server side components.

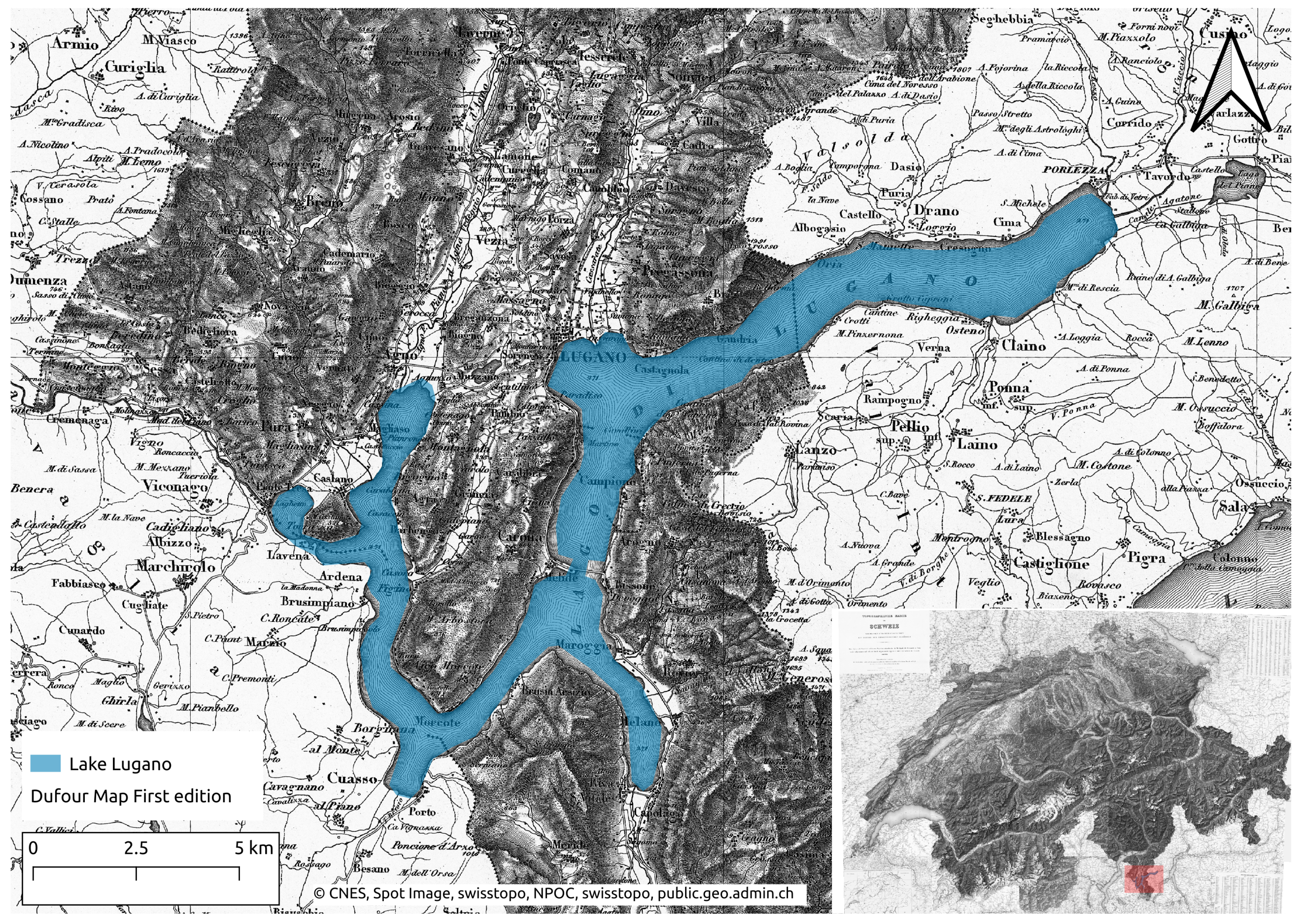

2.1. Area of Study

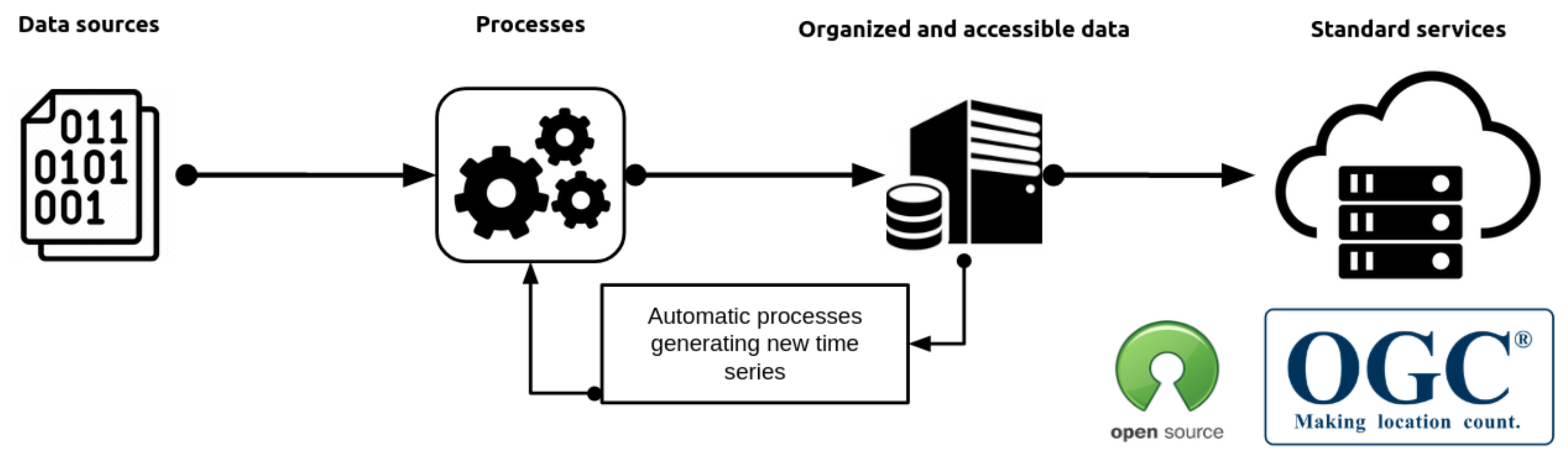

2.2. The System Architecture

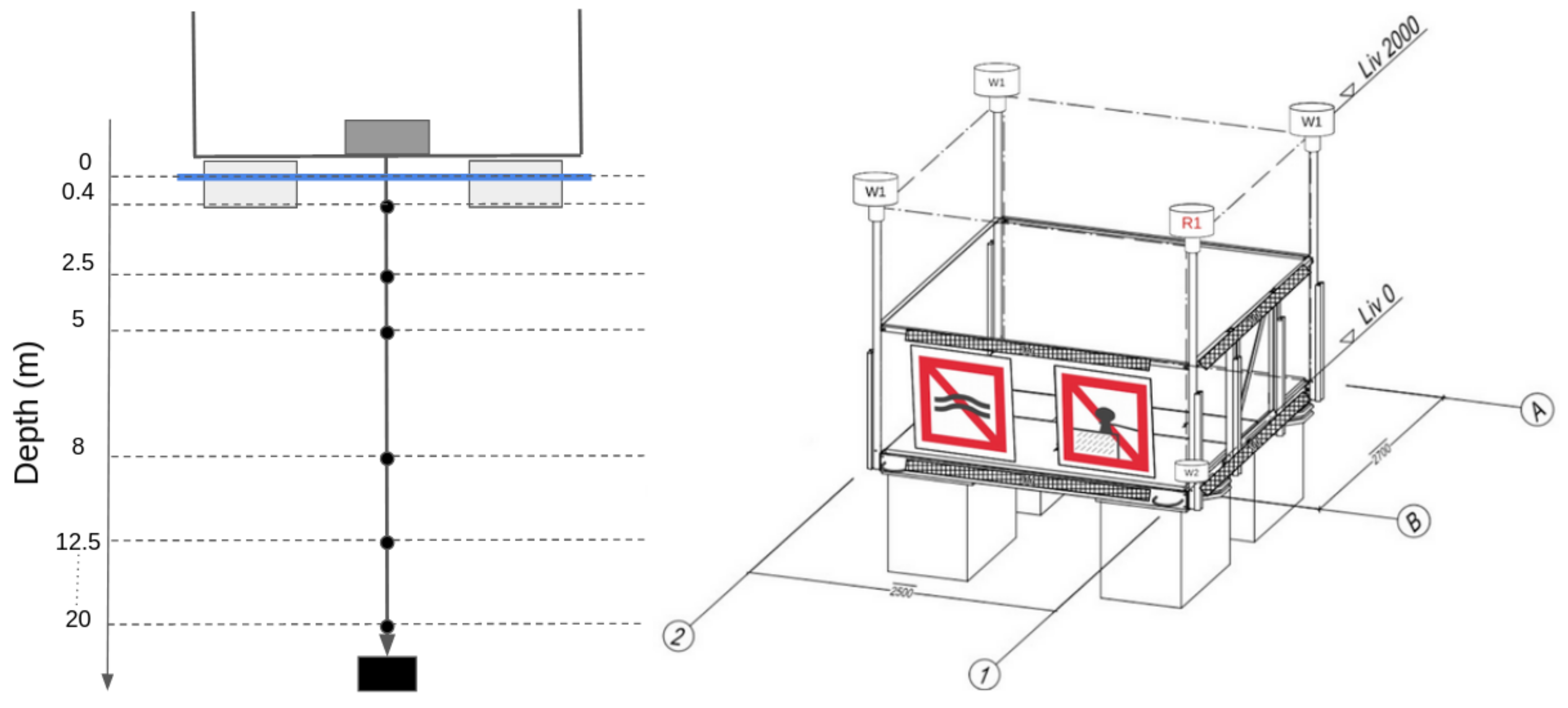

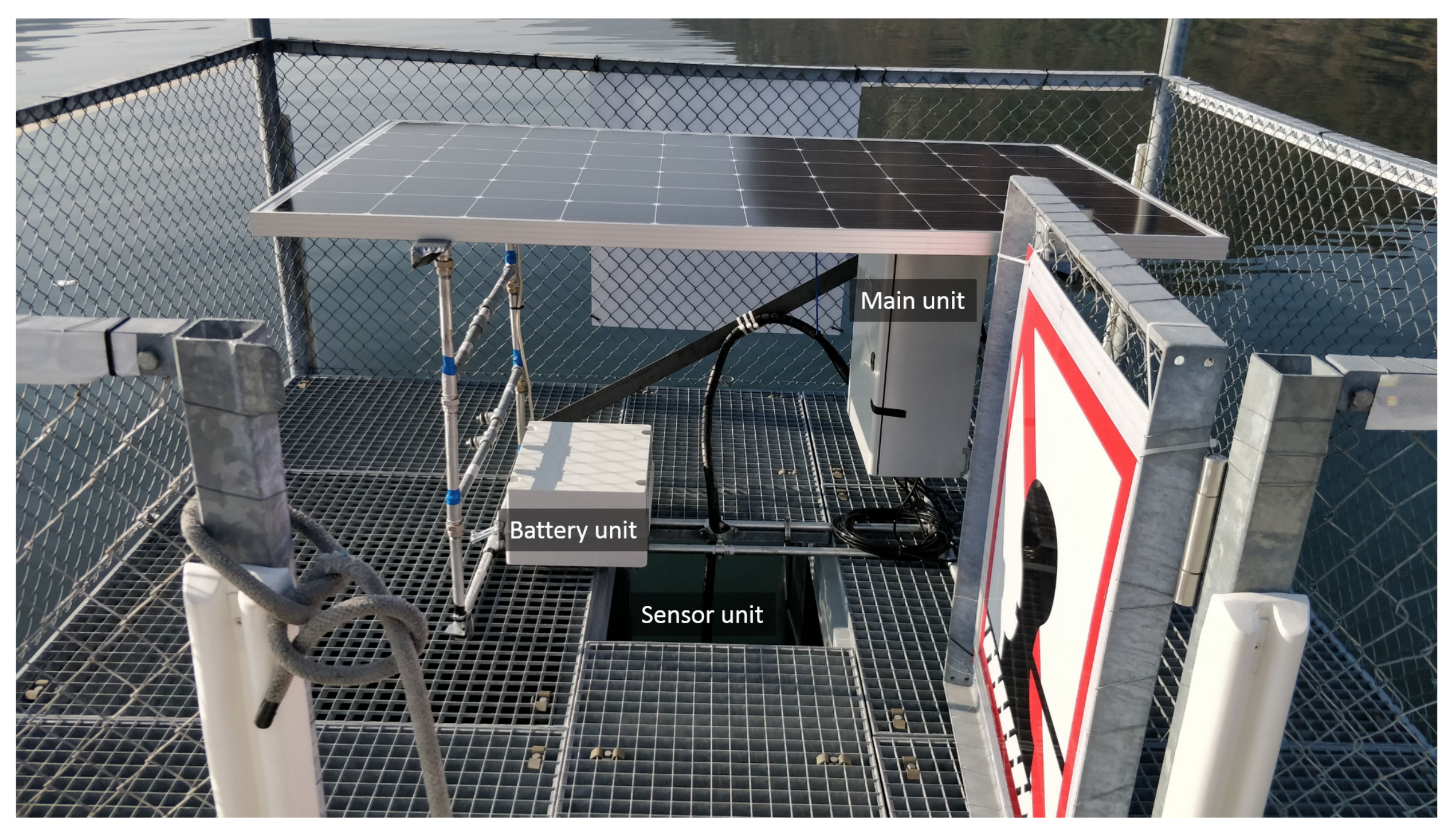

2.3. The Automatic High Frequency Monitoring (AHFM)

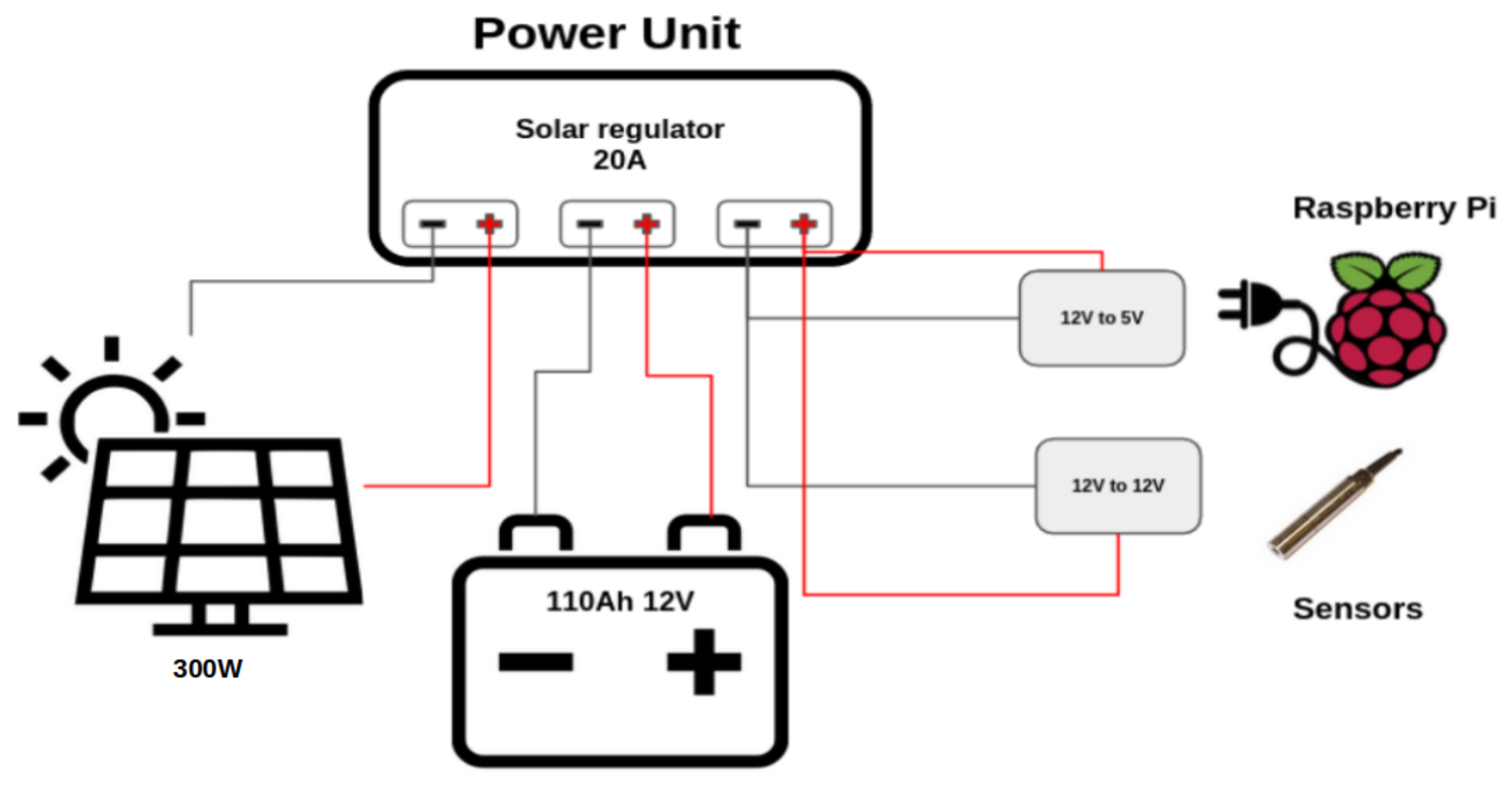

2.3.1. The Power Unit

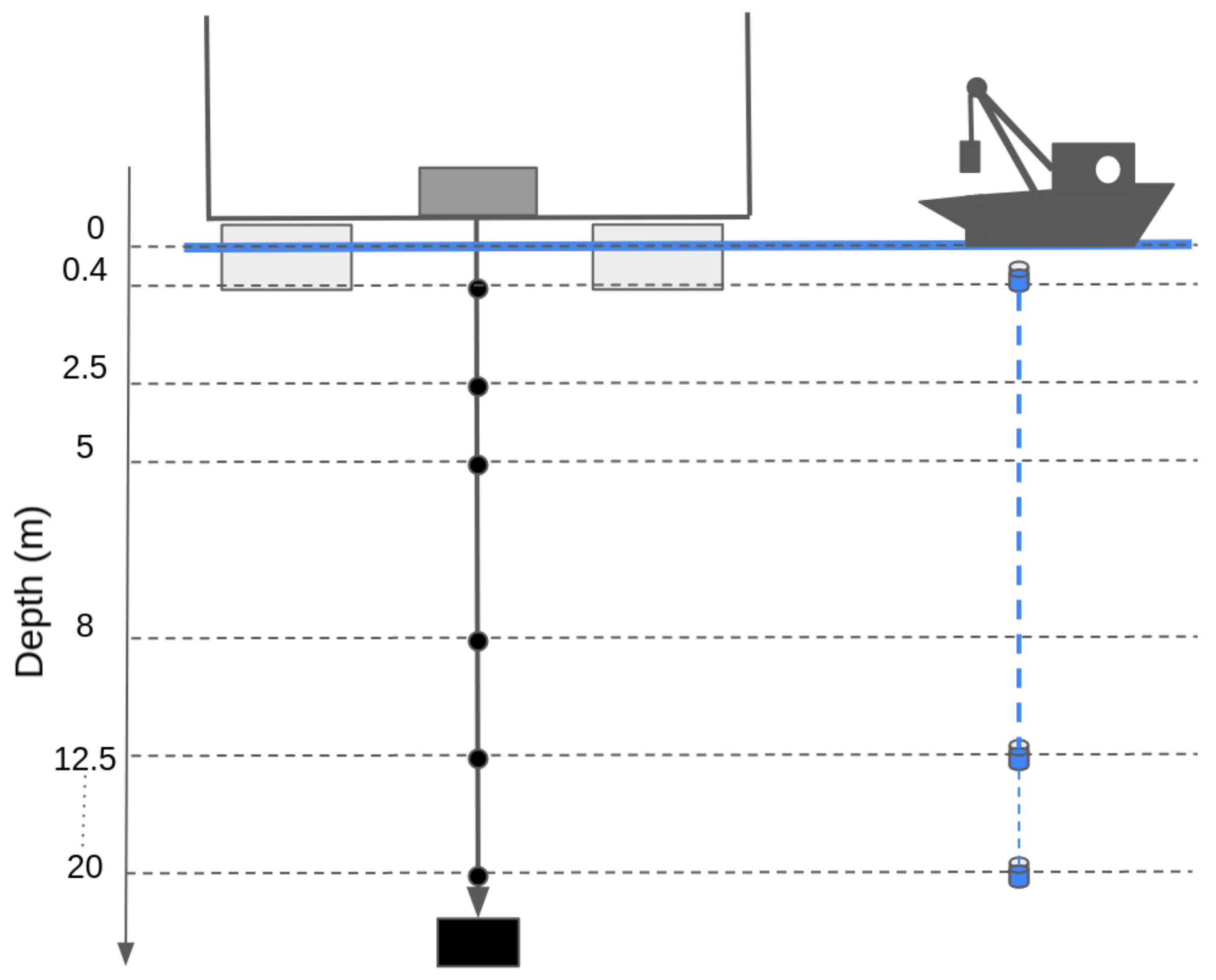

2.3.2. The Sensor Unit

2.3.3. The Main Unit

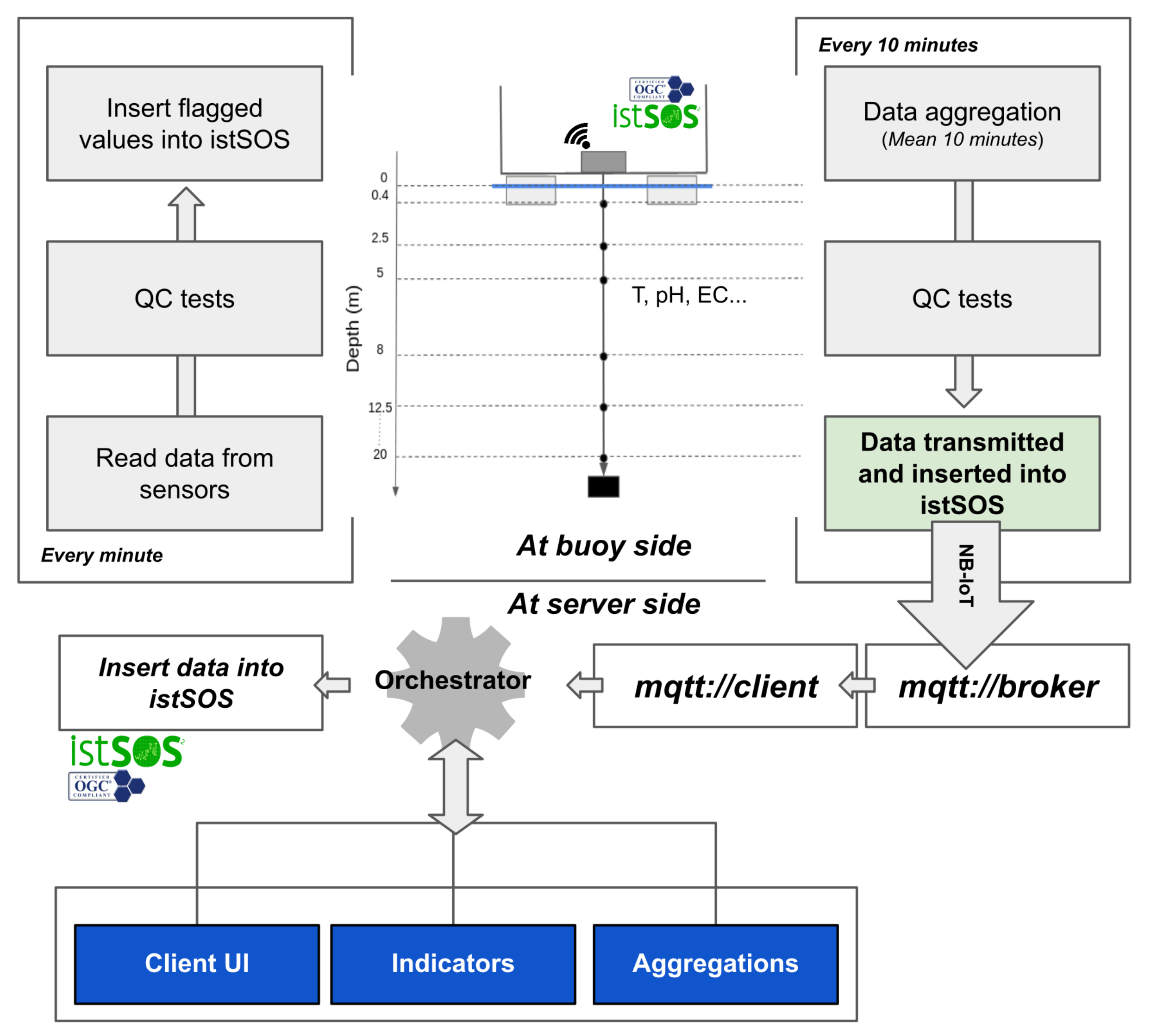

2.3.4. The Firmware Structure

- check the availability of the configured sensors;

- register the sensors in the local and remote istSOS instances;

- create the read and write script that collects raw data from the sensors at the frequency set in the configuration file;

- create the aggregation script that collects raw data from istSOS and aggregates them at the frequency set in the configuration file;

- generate the Python script which obtains the aggregated data from local istSOS, activates the NB-IoT module and transmits each single observation to the remote istSOS.
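
The first and last steps in this list can be sketched in a few lines of Python. This is only an illustrative sketch, not the actual firmware: the `Observation` type, the `probe` and `send` callables, and the retry behaviour are all assumptions introduced here, and no real istSOS or NB-IoT call is shown.

```python
from dataclasses import dataclass
from typing import Callable, Iterable


@dataclass(frozen=True)
class Observation:
    """Hypothetical container for one aggregated observation."""
    sensor: str      # sensor name as written in the configuration file
    timestamp: str   # ISO 8601 timestamp
    value: float


def available_sensors(configured: Iterable[str],
                      probe: Callable[[str], bool]) -> list[str]:
    """Return the configured sensors that respond to the probe."""
    return [name for name in configured if probe(name)]


def transmit_each(observations: Iterable[Observation],
                  send: Callable[[Observation], bool]) -> list[Observation]:
    """Send observations one at a time; return those that need a retry.

    `send` stands in for the upload to the remote server (assumption).
    A False result or an OSError keeps the observation in the pending list.
    """
    pending = []
    for obs in observations:
        try:
            delivered = send(obs)
        except OSError:
            delivered = False
        if not delivered:
            pending.append(obs)
    return pending


if __name__ == "__main__":
    sensors = available_sensors(["temp_0m", "oxygen_0m"],
                                probe=lambda name: name.startswith("temp"))
    print(sensors)
    batch = [Observation("temp_0m", "2022-01-01T00:00:00Z", 6.8),
             Observation("temp_0m", "2022-01-01T00:10:00Z", 6.9)]
    print(transmit_each(batch, send=lambda obs: obs.value < 6.9))
```

Sending each observation individually, as the list describes, means a dropped connection only leaves the unsent observations pending rather than invalidating a whole batch.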

2.4. The Data Management

2.4.1. Data Sources and Standards

- data from manual sampling campaign based on sensor probes;

- data from laboratory analysis performed on water samples;

- data from satellites;

- data coming from a real-time monitoring system.

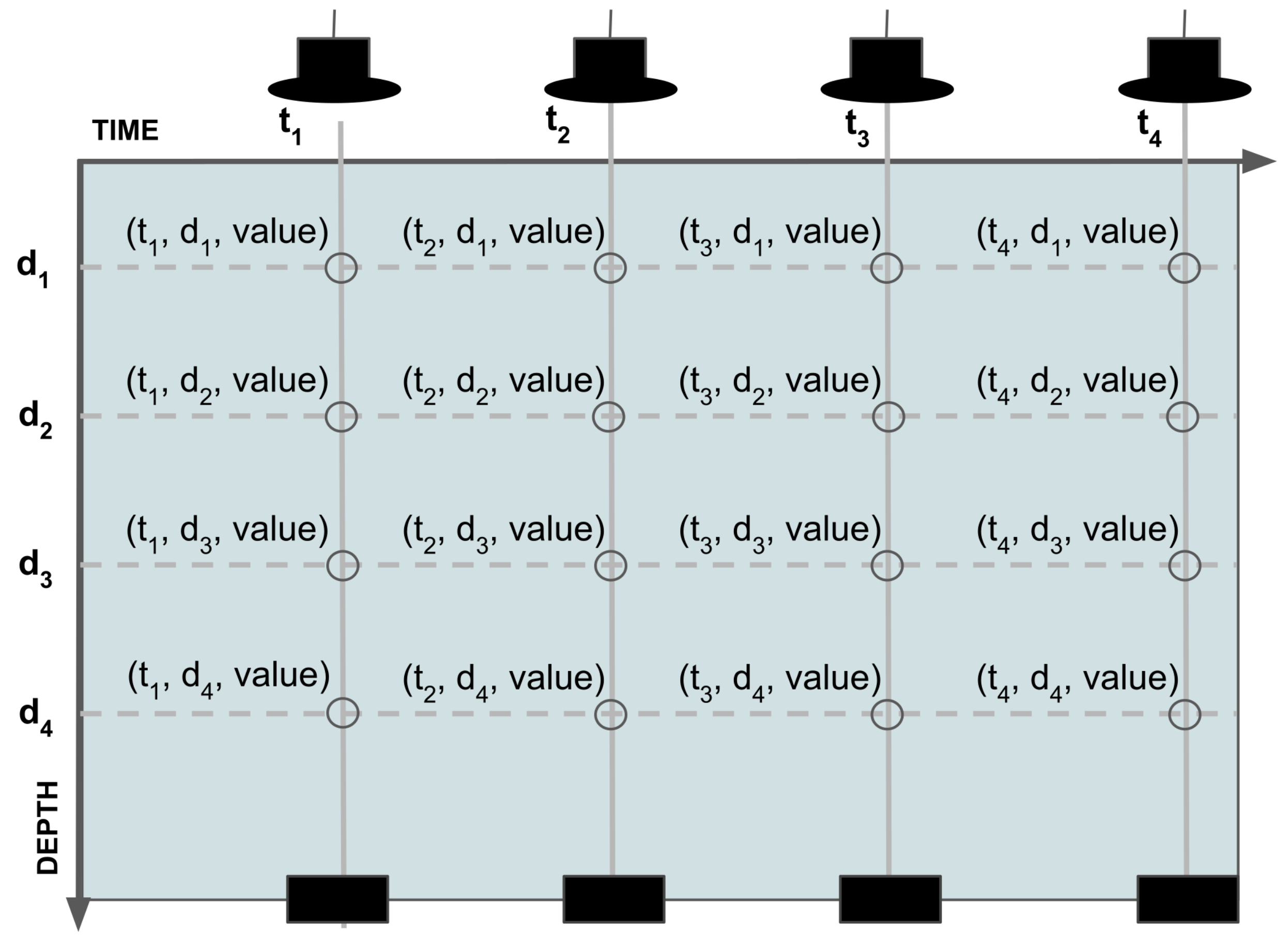

2.4.2. Support for Profile Data Type

2.5. Data Quality Control Process

QC Process at the Edge

3. Results

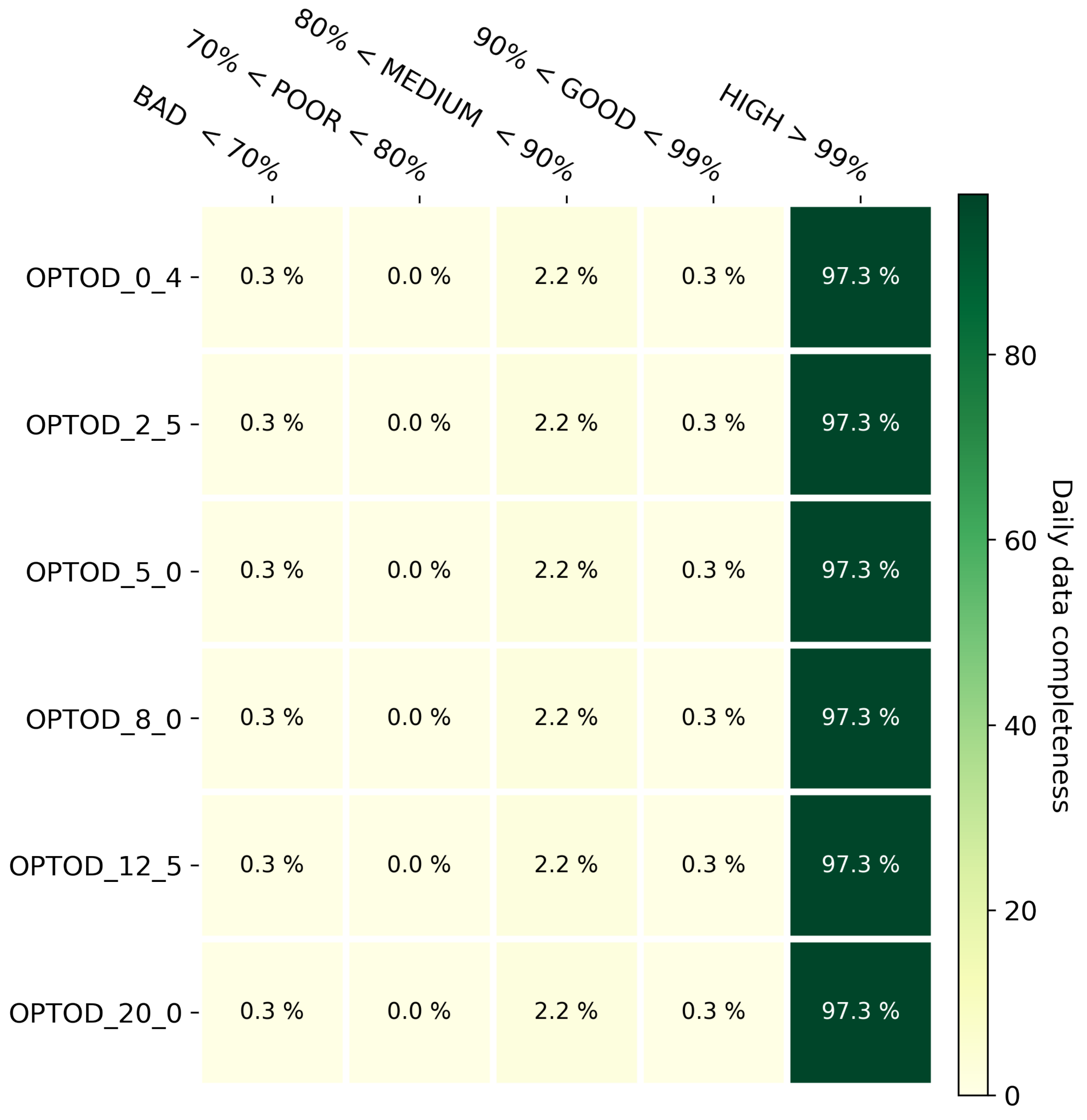

3.1. Data Completeness

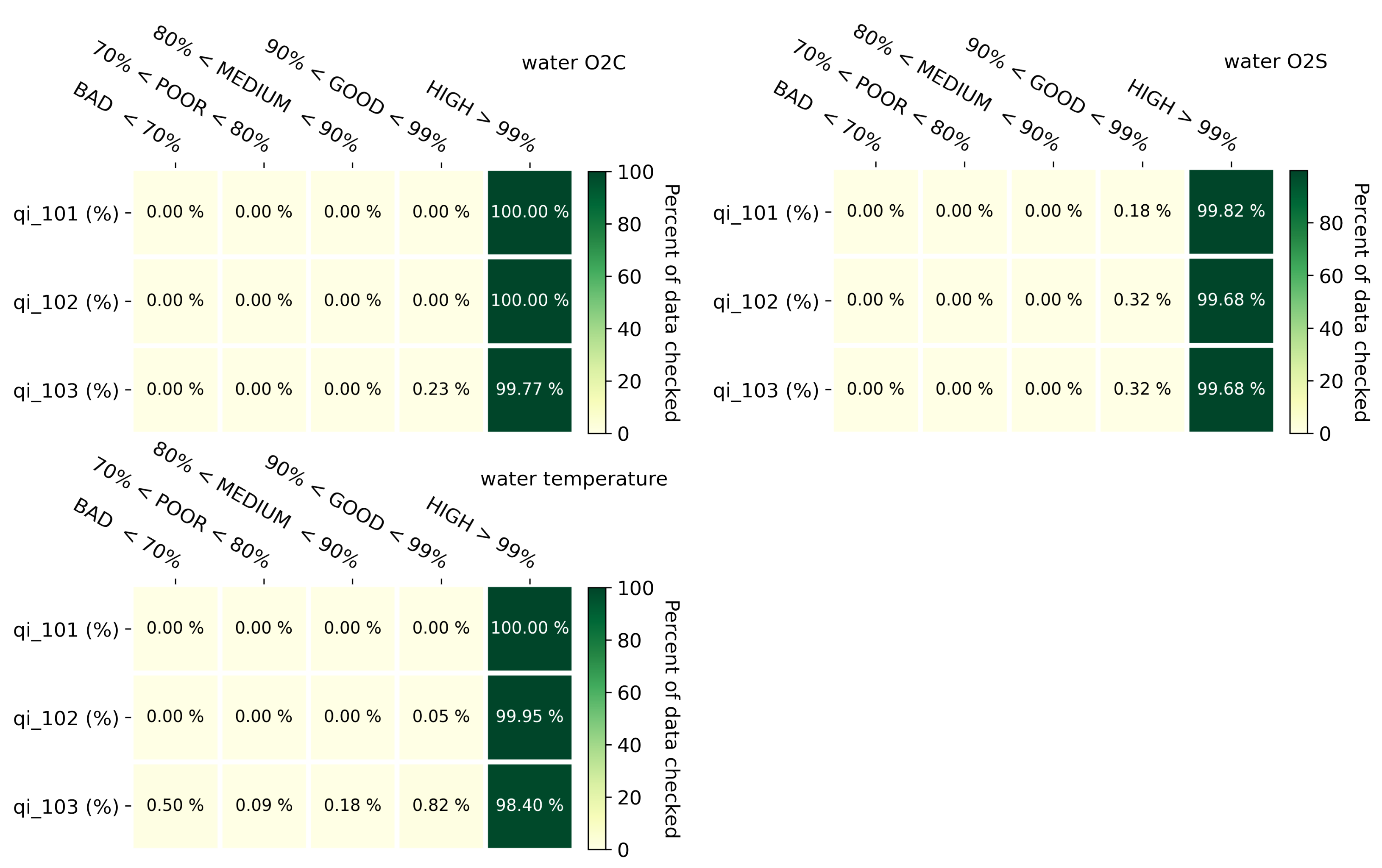

3.2. Data Quality

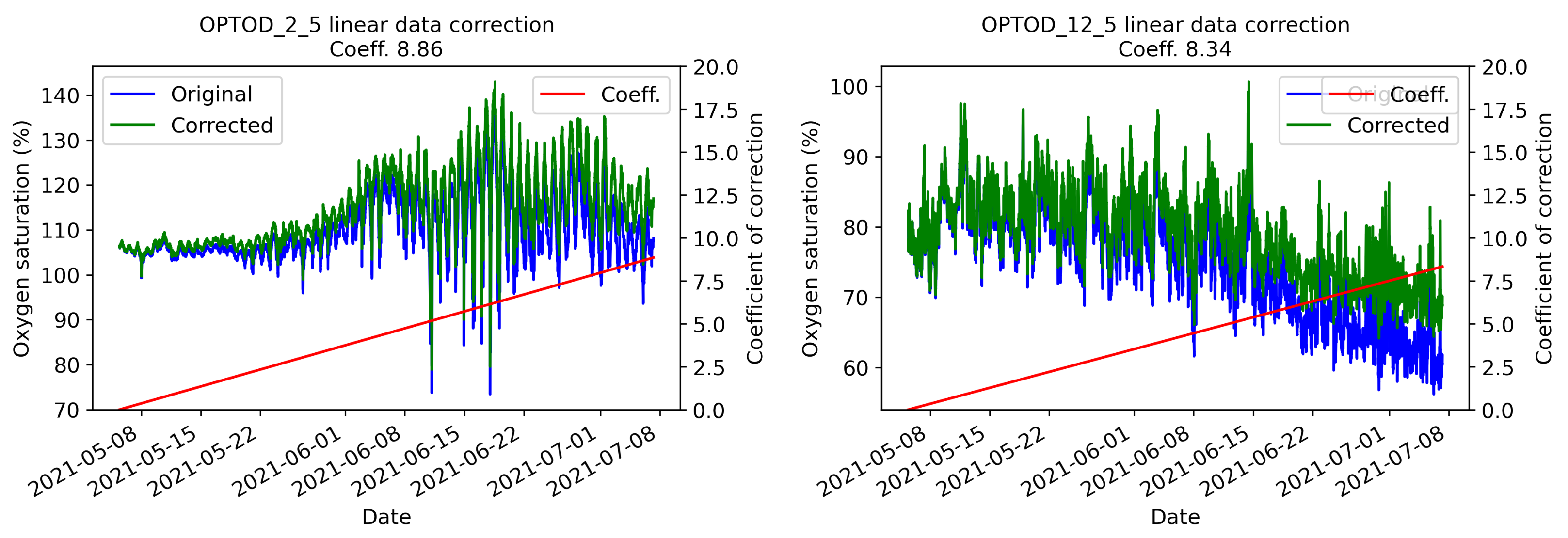

3.3. Maintenance and Calibration

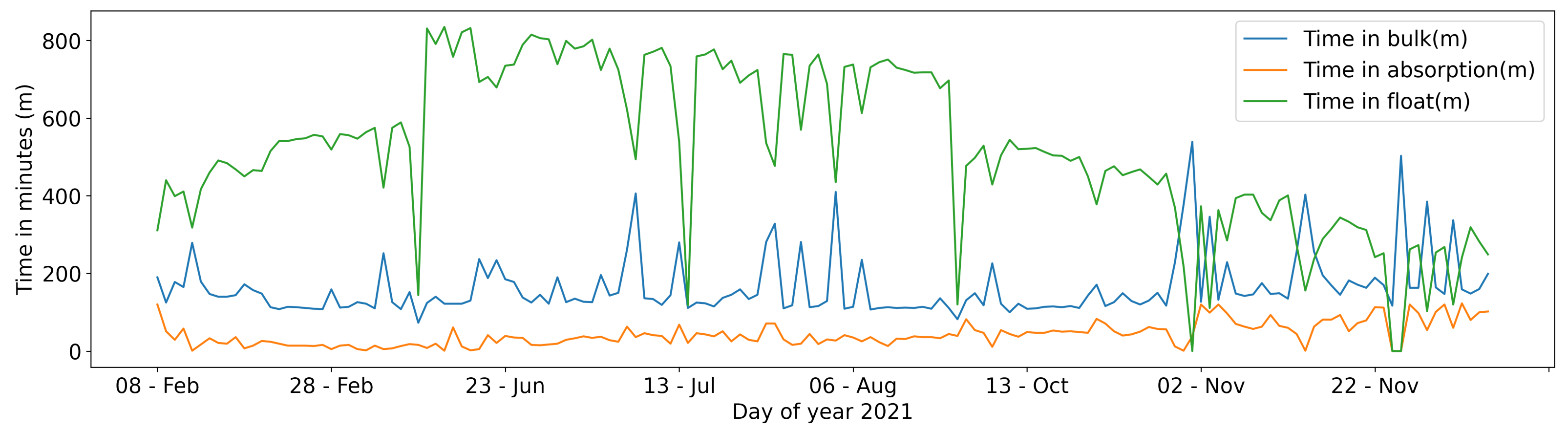

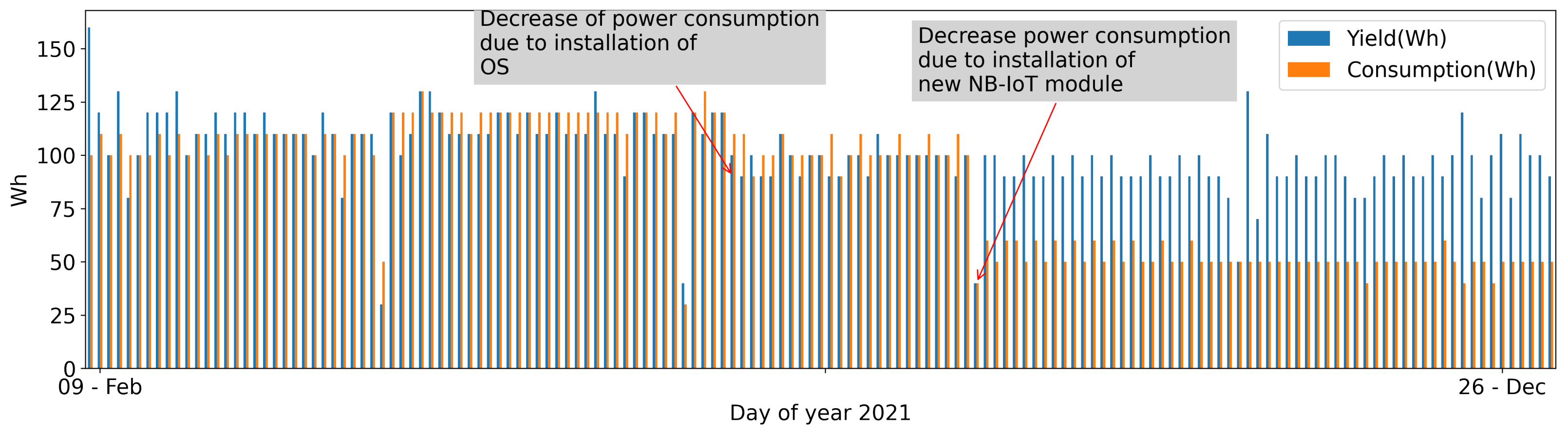

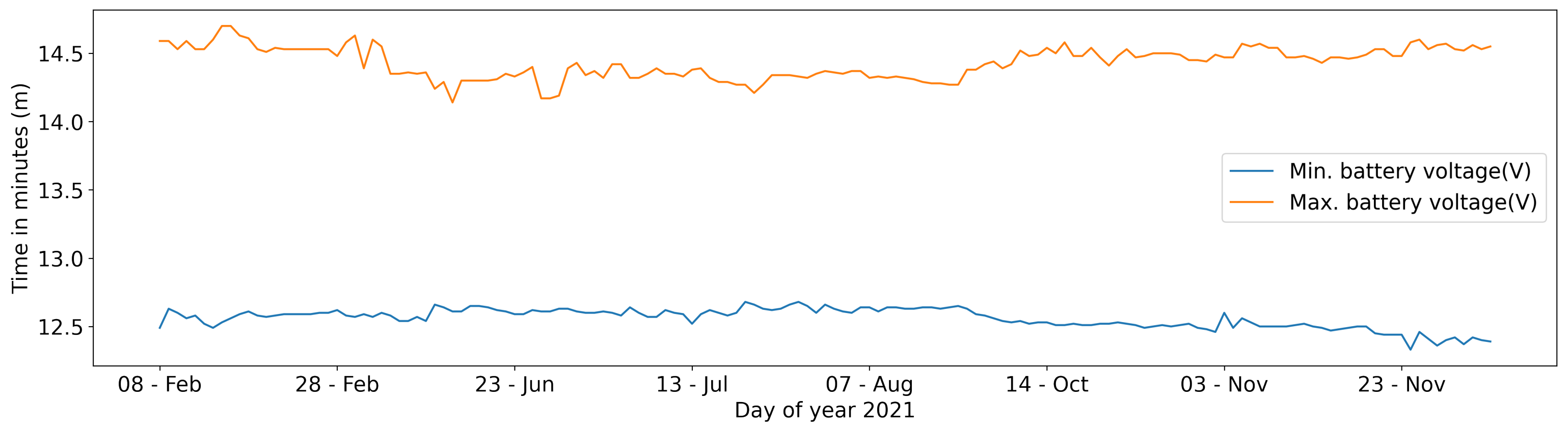

3.4. The Powering System Data

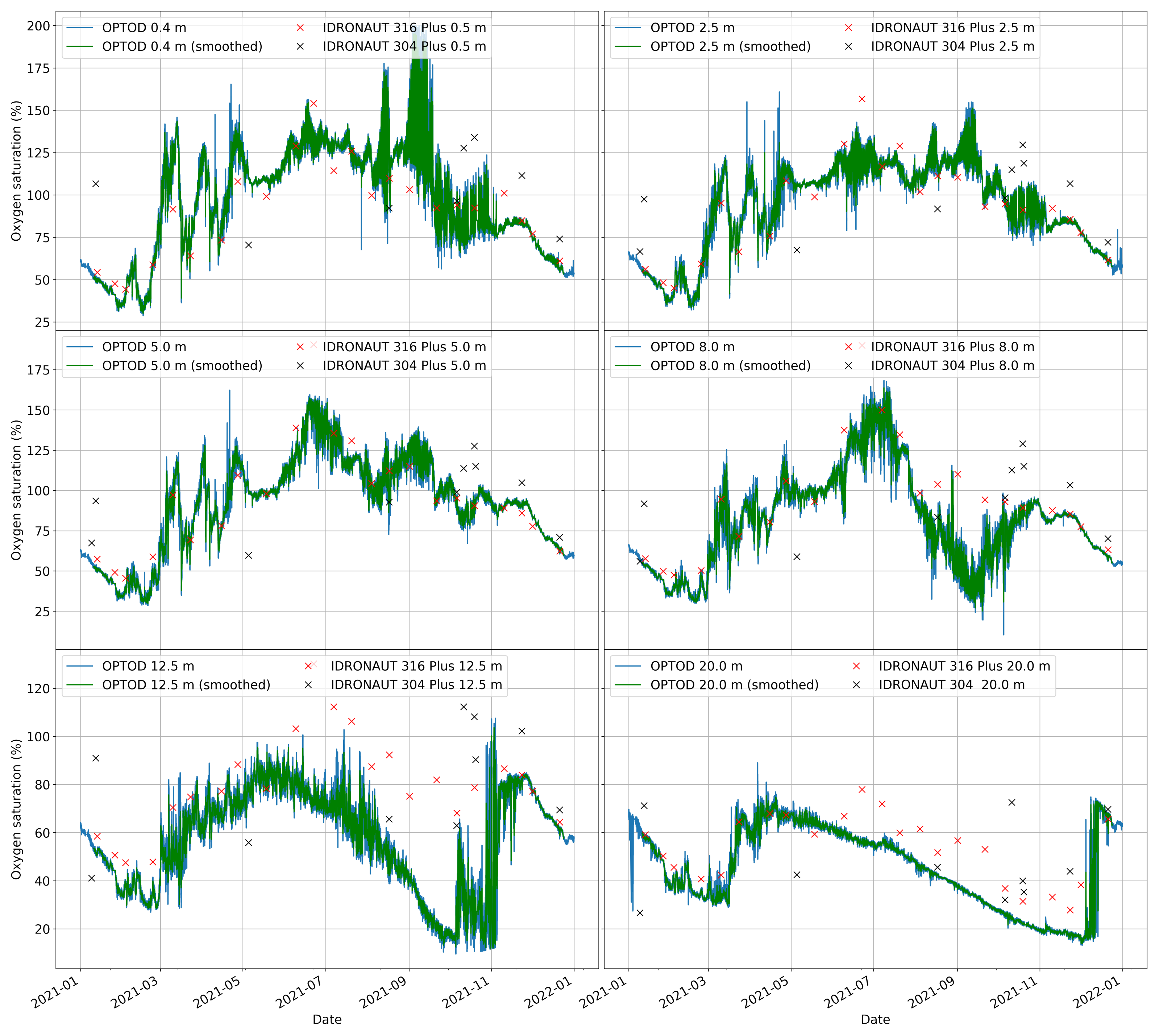

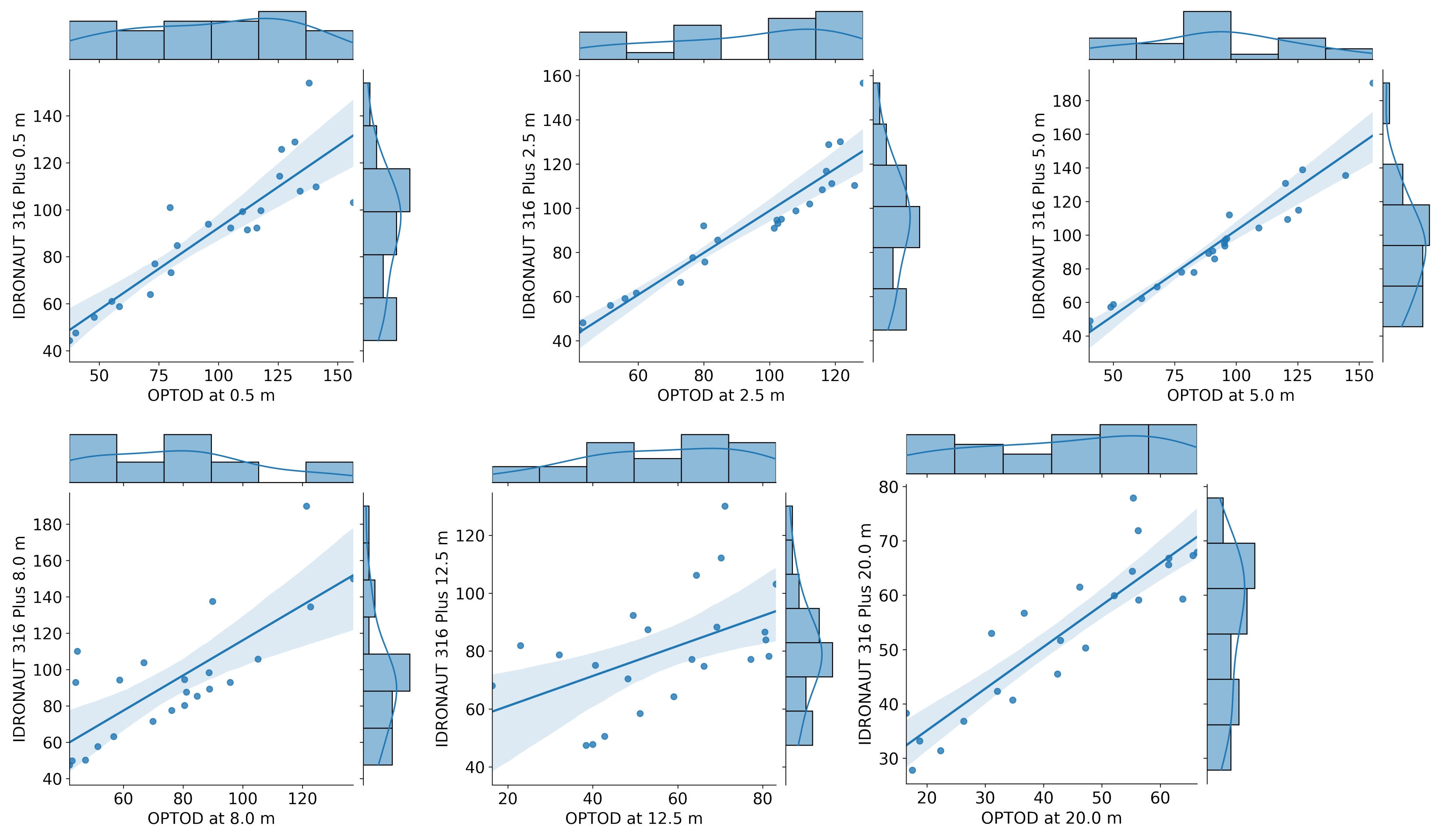

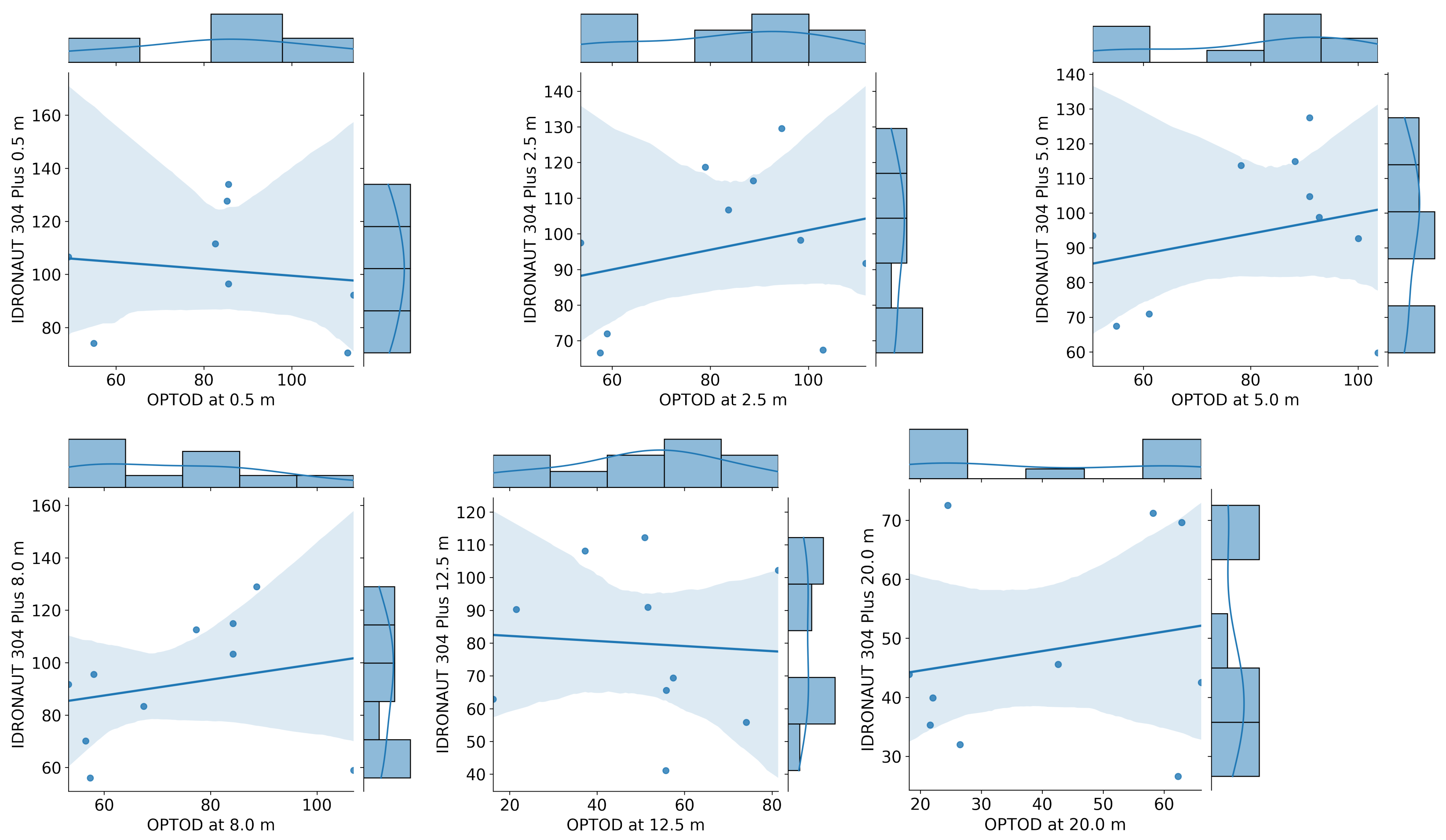

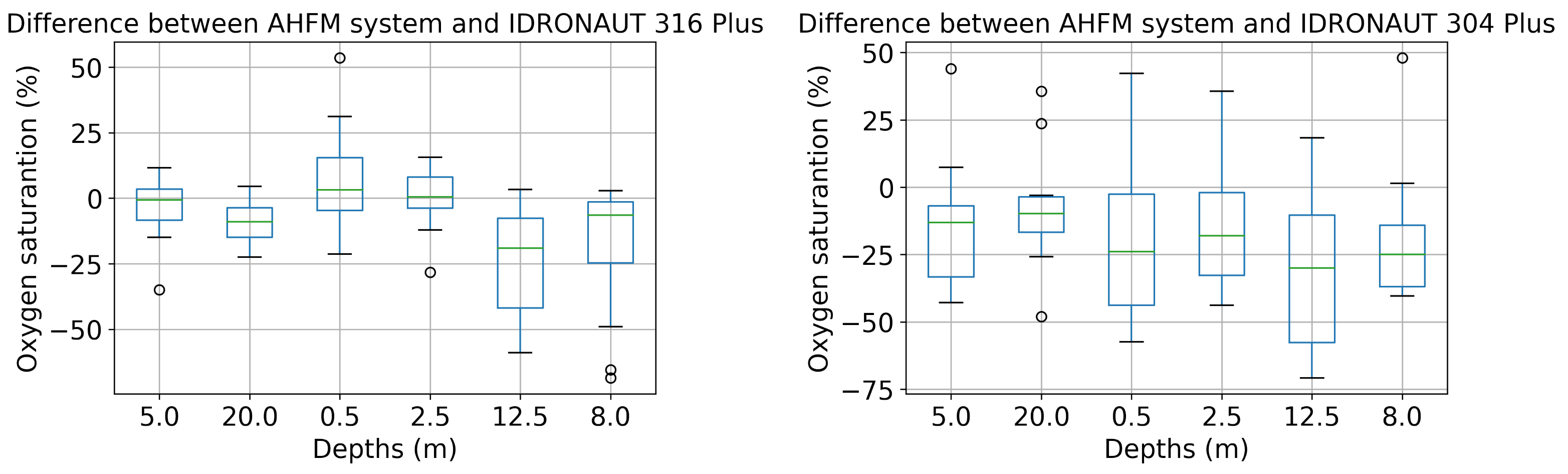

3.5. Comparison between AHFM System and IDRONAUT’s Probes

4. Discussion and Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Bidoglio, G.; Vanham, D.; Bouraoui, F.; Barchiesi, S. The Water-Energy-Food-Ecosystems (WEFE) Nexus. In Encyclopedia of Ecology, 2nd ed.; Fath, B., Ed.; Elsevier: Oxford, UK, 2019; pp. 459–466.

- Cronache, R. Siccità, sul Po la più grave crisi degli ultimi 70 anni. Terna: «Poca acqua per raffreddare le centrali». 2022. Available online: https://www.corriere.it/cronache/22_giugno_10/siccita-emergenza-bacino-po-la-piu-grave-crisi-ultimi-70-anni-0de23f50-e8b8-11ec-a288-5db7a6019886.shtml (accessed on 21 June 2022).

- Ho, L.T.; Goethals, P.L.M. Opportunities and Challenges for the Sustainability of Lakes and Reservoirs in Relation to the Sustainable Development Goals (SDGs). Water 2019, 11, 1462.

- Lepori, F.; Capelli, C. Effects of Phosphorus Control on Primary Productivity and Deep-Water Oxygenation: Insights from Lake Lugano (Switzerland and Italy). Hydrobiologia 2021, 848, 613–629.

- Schindler, D.W. Recent Advances in the Understanding and Management of Eutrophication. Limnol. Oceanogr. 2006, 51, 356–363.

- Caspers, H. OECD: Eutrophication of Waters. Monitoring, Assessment and Control.—154 Pp. Paris: Organisation for Economic Co-Operation and Development 1982. (Publié En Français Sous Le Titre »Eutrophication Des Eaux. Méthodes de Surveillance, d’Evaluation et de Lutte«). Int. Rev. Gesamten Hydrobiol. Hydrogr. 1984, 69, 200.

- Lepori, F.; Bartosiewicz, M.; Simona, M.; Veronesi, M. Effects of Winter Weather and Mixing Regime on the Restoration of a Deep Perialpine Lake (Lake Lugano, Switzerland and Italy). Hydrobiologia 2018, 824, 229–242.

- Xu, L.D.; Xu, E.L.; Li, L. Industry 4.0: State of the Art and Future Trends. Int. J. Prod. Res. 2018, 56, 2941–2962.

- Zambon, I.; Cecchini, M.; Egidi, G.; Saporito, M.G.; Colantoni, A. Revolution 4.0: Industry vs. Agriculture in a Future Development for SMEs. Processes 2019, 7, 36.

- Ghobakhloo, M. Industry 4.0, Digitization, and Opportunities for Sustainability. J. Clean. Prod. 2020, 252, 119869.

- Pozzoni, M.; Salvetti, A.; Cannata, M. Retrospective and Prospective of Hydro-Met Monitoring System in the Canton Ticino, Switzerland. Hydrol. Sci. J. 2020, 65, 1–15.

- Hill, A.P.; Prince, P.; Snaddon, J.L.; Doncaster, C.P.; Rogers, A. AudioMoth: A Low-Cost Acoustic Device for Monitoring Biodiversity and the Environment. HardwareX 2019, 6, e00073.

- Feenstra, B.; Papapostolou, V.; Hasheminassab, S.; Zhang, H.; Boghossian, B.D.; Cocker, D.; Polidori, A. Performance Evaluation of Twelve Low-Cost PM2.5 Sensors at an Ambient Air Monitoring Site. Atmos. Environ. 2019, 216, 116946.

- Ambrož, M.; Hudomalj, U.; Marinšek, A.; Kamnik, R. Raspberry Pi-Based Low-Cost Connected Device for Assessing Road Surface Friction. Electronics 2019, 8, 341.

- Alam, A.U.; Clyne, D.; Jin, H.; Hu, N.X.; Deen, M.J. Fully Integrated, Simple, and Low-Cost Electrochemical Sensor Array for in Situ Water Quality Monitoring. ACS Sens. 2020, 5, 412–422.

- Sunny, A.I.; Zhao, A.; Li, L.; Kanteh Sakiliba, S. Low-Cost IoT-Based Sensor System: A Case Study on Harsh Environmental Monitoring. Sensors 2021, 21, 214.

- Strigaro, D.; Cannata, M.; Antonovic, M. Boosting a Weather Monitoring System in Low Income Economies Using Open and Non-Conventional Systems: Data Quality Analysis. Sensors 2019, 19, 1185.

- Cannata, M.; Strigaro, D.; Lepori, F.; Capelli, C.; Rogora, M.; Manca, D. FOSS4G Based High Frequency and Interoperable Lake Water-Quality Monitoring System. In The International Archives of the Photogrammetry, Remote Sensing and Spatial Information Sciences; Copernicus GmbH: Göttingen, Germany, 2021; Volume XLVI-4-W2-2021, pp. 25–29.

- IST-SUPSI, Istituto Scienze Della Terra. Ricerche Sull’evoluzione Del Lago Di Lugano. Aspetti Limnologici. Programma Triennale 2019–2021; Campagna 2020; Commissione Internazionale per la Protezione delle Acque Italo-Svizzere, Ed.; 2021; p. 60. Available online: https://www.cipais.org/modules.php?name=cipais&pagina=lago-lugano#rapporti (accessed on 1 August 2022).

- Laas, A.; de Eyto, E.; Pierson, D.C.; Jennings, E. NETLAKE Guidelines for Automated Monitoring System Development. 2016. Available online: https://eprints.dkit.ie/524/ (accessed on 1 August 2022).

- Seifert-Dähnn, I.; Furuseth, I.S.; Vondolia, G.K.; Gal, G.; de Eyto, E.; Jennings, E.; Pierson, D. Costs and benefits of automated high-frequency environmental monitoring—The case of lake water management. J. Environ. Manag. 2021, 285, 112108.

- Wüest, A.; Bouffard, D.; Guillard, J.; Ibelings, B.W.; Lavanchy, S.; Perga, M.E.; Pasche, N. LéXPLORE: A Floating Laboratory on Lake Geneva Offering Unique Lake Research Opportunities. WIREs Water 2021, 8, e1544.

- Sankaran, M. The Future of Digital Water Quality Monitoring. 2021. Available online: https://www.wqpmag.com/web-exclusive/future-digital-water-quality-monitoring (accessed on 1 August 2022).

- Dutta, S.; Geiger, T.; Lanvin, B. The Global Information Technology Report 2015; World Economic Forum: Cologny, Switzerland, 2015; Volume 1, pp. 80–85.

- Sabbagh, K.; Friedrich, R.; El-Darwiche, B.; Singh, M.; Ganediwalla, S.; Katz, R. Maximizing the Impact of Digitization. Glob. Inf. Technol. Rep. 2012, 2012, 121–133.

- Jones, M.D.; Hutcheson, S.; Camba, J.D. Past, Present, and Future Barriers to Digital Transformation in Manufacturing: A Review. J. Manuf. Syst. 2021, 60, 936–948.

- Gibert, K.; Horsburgh, J.S.; Athanasiadis, I.N.; Holmes, G. Environmental Data Science. Environ. Model. Softw. 2018, 106, 4–12.

- Sima, V.; Gheorghe, I.G.; Subić, J.; Nancu, D. Influences of the Industry 4.0 Revolution on the Human Capital Development and Consumer Behavior: A Systematic Review. Sustainability 2020, 12, 4035.

- Marcon, É.; Marcon, A.; Le Dain, M.A.; Ayala, N.F.; Frank, A.G.; Matthieu, J. Barriers for the Digitalization of Servitization. Procedia CIRP 2019, 83, 254–259.

- Desai, J.R. Internet of Things: Architecture, Protocols, and Interoperability as a Major Challenge. 2019. Available online: https://www.igi-global.com/chapter/internet-of-things/www.igi-global.com/chapter/internet-of-things/233262 (accessed on 1 August 2022).

- Nielsen, E.S. The Use of Radio-active Carbon (C14) for Measuring Organic Production in the Sea. ICES J. Mar. Sci. 1952, 18, 117–140.

- Schindler, D.W.; Schmidt, R.V.; Reid, R.A. Acidification and Bubbling as an Alternative to Filtration in Determining Phytoplankton Production by the 14C Method. J. Fish. Res. Board Can. 1972, 29, 1627–1631.

- Staehr, P.A.; Bade, D.; de Bogert, M.C.V.; Koch, G.R.; Williamson, C.; Hanson, P.; Cole, J.J.; Kratz, T. Lake Metabolism and the Diel Oxygen Technique: State of the Science. Limnol. Oceanogr. Methods 2010, 8, 628–644.

- OECD. Eutrophication of Waters: Monitoring, Assessment and Control; Organisation for Economic Co-operation and Development; OECD Publications and Information Center: Paris, France; Washington, DC, USA, 1982; p. 154.

- Papazoglou, M.; Van Den Heuvel, W.J. Service Oriented Architectures: Approaches, Technologies and Research Issues. VLDB J. 2007, 16, 389–415.

- Aalst, W.M.V.D.; Beisiegel, M.; Hee, K.M.V.; Konig, D.; Stahl, C. An SOA-based Architecture Framework. Int. J. Bus. Process Integr. Manag. 2007, 2, 91.

- Granell, C.; Díaz, L.; Gould, M. Service-Oriented Applications for Environmental Models: Reusable Geospatial Services. Environ. Model. Softw. 2010, 25, 182–198.

- Morabito, R.; Cozzolino, V.; Ding, A.Y.; Beijar, N.; Ott, J. Consolidate IoT Edge Computing with Lightweight Virtualization. IEEE Netw. 2018, 32, 102–111.

- Chen, B.; Wan, J.; Celesti, A.; Li, D.; Abbas, H.; Zhang, Q. Edge Computing in IoT-Based Manufacturing. IEEE Commun. Mag. 2018, 56, 103–109.

- Reynolds, C.S. The Ecology of Phytoplankton; Cambridge University Press: Cambridge, UK, 2006.

- Cannata, M.; Antonovic, M.; Molinari, M.; Pozzoni, M. istSOS, a New Sensor Observation Management System: Software Architecture and a Real-Case Application for Flood Protection. Geomat. Nat. Hazards Risk 2015, 6, 635–650.

- Bröring, A.; Stasch, C.; Echterhoff, J. OGC Sensor Observation Service Interface Standard; Version 2.0; Report; Open Geospatial Consortium: Wayland, MA, USA, 2012.

- Salvati, M.; Brambilla, E.; Grafiche Futura. Data Quality Control Procedures in Alpine Metereological Services; Università di Trento. Dipartimento di Ingegneria Civile e Ambientale: Trento, Italy, 2008.

- Zahumenskỳ, I. Guidelines on Quality Control Procedures for Data from Automatic Weather Stations; World Meteorological Organization: Geneva, Switzerland, 2004.

- Shafer, M.A.; Fiebrich, C.A.; Arndt, D.S.; Fredrickson, S.E.; Hughes, T.W. Quality Assurance Procedures in the Oklahoma Mesonetwork. J. Atmos. Ocean. Technol. 2000, 17, 474–494.

- Kunkel, K.E.; Andsager, K.; Conner, G.; Decker, W.L.; Hillaker, H.J.; Knox, P.N.; Nurnberger, F.V.; Rogers, J.C.; Scheeringa, K.; Wendland, W.M.; et al. An Expanded Digital Daily Database for Climatic Resources Applications in the Midwestern United States. Bull. Am. Meteorol. Soc. 1998, 79, 1357–1366.

- Schroeder, J.L.; Burgett, W.S.; Haynie, K.B.; Sonmez, I.; Skwira, G.D.; Doggett, A.L.; Lipe, J.W. The West Texas Mesonet: A Technical Overview. J. Atmos. Ocean. Technol. 2005, 22, 211–222.

- Meek, D.W.; Hatfield, J.L. Data Quality Checking for Single Station Meteorological Databases. Agric. For. Meteorol. 1994, 69, 85–109.

- Bergkemper, V.; Weisse, T. Do Current European Lake Monitoring Programmes Reliably Estimate Phytoplankton Community Changes? Hydrobiologia 2018, 824, 143–162.

- WMO. State of the Global Climate 2021: WMO Provisional Report; WMO: Geneva, Switzerland, 2021.

- Spinoni, J.; Vogt, J.V.; Naumann, G.; Barbosa, P.; Dosio, A. Will Drought Events Become More Frequent and Severe in Europe? Int. J. Climatol. 2018, 38, 1718–1736.

- Guo, H.; Bao, A.; Liu, T.; Jiapaer, G.; Ndayisaba, F.; Jiang, L.; Kurban, A.; De Maeyer, P. Spatial and Temporal Characteristics of Droughts in Central Asia during 1966–2015. Sci. Total Environ. 2018, 624, 1523–1538.

- Brunner, M.I.; Björnsen Gurung, A.; Zappa, M.; Zekollari, H.; Farinotti, D.; Stähli, M. Present and Future Water Scarcity in Switzerland: Potential for Alleviation through Reservoirs and Lakes. Sci. Total Environ. 2019, 666, 1033–1047.

- Marcé, R.; George, G.; Buscarinu, P.; Deidda, M.; Dunalska, J.; de Eyto, E.; Flaim, G.; Grossart, H.P.; Istvanovics, V.; Lenhardt, M.; et al. Automatic High Frequency Monitoring for Improved Lake and Reservoir Management. Environ. Sci. Technol. 2016, 50, 10780–10794.

| | Total | North Basin | South Basin |
|---|---|---|---|
| Area of the basin (km²) | 565.6 | 269.7 | 295.9 |
| Area (km²) | 48.9 | 27.5 | 21.4 |
| Volume (km³) | 6.5 | 4.7 | 1.8 |
| Mean depth (m) | 134 | 171 | 85 |
| Max depth (m) | 288 | 288 | 89 |

| QI | Description |
|---|---|
| 0 | Raw value erroneous |
| 100 | Raw value |
| 101 | The value is consistent with the sensor’s range of measurement |
| 102 | The value is consistent with the previous one |
| 103 | The value is temporally consistent |
| 200 | The value has been aggregated with less than 60% of valid values |
| 201 | The value has been correctly aggregated |
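
To make the quality index (QI) codes more concrete, the following Python sketch assigns codes 100 to 102 to single values and codes 200 and 201 to aggregated values. It is an illustration under stated assumptions, not the authors' implementation. The checks are assumed to run in sequence, so a value keeps the highest code it passes. The range limits, the `max_step` threshold and all function names are hypothetical. Codes 0 and 103 are left out because their exact rules are not described here.

```python
from statistics import mean
from typing import Optional, Sequence, Tuple

QI_RAW = 100             # raw value, no check passed
QI_RANGE_OK = 101        # within the sensor's measurement range
QI_STEP_OK = 102         # consistent with the previous value
QI_AGG_INCOMPLETE = 200  # aggregated with less than 60% of valid values
QI_AGG_OK = 201          # correctly aggregated


def point_qi(value: float, previous: Optional[float],
             low: float, high: float, max_step: float) -> int:
    """Return the highest QI reached by a single value (assumed ordering)."""
    if not (low <= value <= high):
        return QI_RAW
    # Without a previous value the step check cannot be confirmed.
    if previous is None or abs(value - previous) > max_step:
        return QI_RANGE_OK
    return QI_STEP_OK


def aggregate(values: Sequence[Optional[float]], expected: int,
              min_valid_ratio: float = 0.6) -> Tuple[Optional[float], int]:
    """Average the valid values of one interval and flag its completeness.

    `None` marks a missing or rejected value; `expected` is the number of
    raw values the interval should contain at the configured frequency.
    """
    if expected <= 0:
        raise ValueError("expected must be positive")
    valid = [v for v in values if v is not None]
    if not valid:
        return None, QI_AGG_INCOMPLETE
    complete = len(valid) / expected >= min_valid_ratio
    return mean(valid), QI_AGG_OK if complete else QI_AGG_INCOMPLETE


if __name__ == "__main__":
    # Hypothetical limits for a water temperature sensor.
    print(point_qi(20.0, 19.5, low=0.0, high=40.0, max_step=2.0))
    print(aggregate([6.8, 6.9, None, 7.0, 6.9], expected=5))
```

Keeping the 60% threshold as a parameter makes it easy to test how sensitive the aggregated series is to that choice.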

| Observed Property | Range |
|---|---|
| Water temperature | (°C) |
| Oxygen saturation | (%) |
| Oxygen concentration | (mg/L or ppm) |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Strigaro, D.; Cannata, M.; Lepori, F.; Capelli, C.; Lami, A.; Manca, D.; Seno, S. Open and Cost-Effective Digital Ecosystem for Lake Water Quality Monitoring. Sensors 2022, 22, 6684. https://doi.org/10.3390/s22176684
