Abstract

Traditional grey wolf optimizers (GWOs) have difficulty balancing convergence and diversity when used for multimodal optimization problems (MMOPs), resulting in low-quality solutions and slow convergence. To address these drawbacks of GWOs, a fuzzy strategy grey wolf optimizer (FSGWO) is proposed in this paper. A bivariate joint normal distribution is used as a fuzzy method to realize the adaptive adjustment of the control parameters of the FSGWO. Next, the fuzzy mutation operator and the fuzzy crossover operator are designed to generate new individuals based on the fuzzy control parameters. Moreover, a noninferior selection strategy is employed to update the grey wolf population, which makes the entire population available for estimating the location of the optimal solution. Finally, the FSGWO is verified on 30 test functions of IEEE CEC2014 and five engineering application problems. Compared with state-of-the-art competitive algorithms, FSGWO performs better. Specifically, for the 50D test functions of CEC2014, the average calculation accuracy of FSGWO is 33.63%, 46.45%, 62.94%, 64.99%, and 59.82% higher than those of the equilibrium optimizer algorithm, modified particle swarm optimization, original GWO, hybrid particle swarm optimization and GWO, and selective opposition-based GWO, respectively. For the 30D and 50D test functions of CEC2014, the results of the Wilcoxon signed-rank test show that FSGWO is better than the competitive algorithms.

1. Introduction

Many complex optimization problems in industrial applications have multiple global optimal solutions or near-optimal solutions that provide decision-makers with different decision preferences, and such problems are often referred to as multimodal optimization problems (MMOPs) [1]. Air service network design is an example of an MMOP, which requires all feasible routes to transport goods to the destination; if the current route cannot be executed due to weather conditions, an alternative route with a similar cost can be selected from the feasible routes to transport goods [2]. Other examples of MMOPs include structural damage detection [3], image segmentation [4], flight control systems [5], job shop scheduling [6], truss structure optimization [7], protein structure prediction [8], and electromagnetic design [9]. Most MMOPs are nonconvex and nonlinear; classical numerical optimization methods are sensitive to nonconvexity and nonlinearity, so they encounter difficulties in solving MMOPs. In contrast, evolutionary algorithms are not sensitive to the nonconvexity and nonlinearity of optimization problems and have been widely used to solve MMOPs, such as genetic algorithms [10], evolutionary algorithms [11], particle swarm optimization [12], ant colony optimization [13], cuckoo search algorithms [14], memetic algorithms [15], niching chaos optimization [16], grey wolf optimization [17], harmony search algorithms [18], fireworks algorithms [19], and gravitational search algorithms [20]. Among these evolutionary algorithms for solving MMOPs, grey wolf optimizers (GWOs) have the advantages of easy implementation and requiring few parameters [21]. Moreover, GWOs use three leading wolves to guide the search and more easily escape local optima, making GWOs competitive for solving MMOPs [22,23,24].
Because the solution space of MMOPs is very complex, GWOs have difficulty balancing convergence and diversity [25,26], so GWOs cannot easily estimate the position of the optimal solution, and the obtained solutions are relatively poor [27]. To address these drawbacks of GWOs, many improved variants of GWOs have been developed, which can be divided into two categories. The first category improves the control parameters of GWOs. GWOs have two control parameters, a and C; the former is a linearly attenuated search step, and the latter is the neighborhood radius coefficient, both of which can be used to control the diversity and convergence of GWOs. In [28,29,30,31], methods such as nonlinear functions and chaotic sequences are proposed to construct parameter a, which further enhances the diversity of individuals and improves the ability of GWOs to escape from local optima. In most GWOs, a and C are treated as independent parameters, while the opinion presented in [32] is that they are related, and a method to calculate C with a is proposed, which further enhances the diversity of GWOs. The second category designs new individual update strategies. Individual update strategies, such as Levy flight [33,34], Cauchy operator [35], opposition-based learning [36], refraction learning [37], and chaotic opposition-based approaches [38], can make some individuals in the population change greatly, thereby increasing the diversity of GWOs. Update strategies of other evolutionary algorithms, such as the whale optimization algorithm (WOA) [39], covariance matrix adaptation-evolution strategy (CMA-ES) [40], minimum conflict algorithm (MCA) [41], and grasshopper optimization algorithm (GOA) [42], are applied to improve the individual update strategies of GWOs and can also effectively improve the diversity and convergence of GWOs.

When GWOs are used to solve MMOPs, the convergence speed and the quality of solutions must be further improved, and there are three main reasons for these defects. (i) The absolute value operation in the search direction leads to the loss of negative signs in some dimensions, resulting in an incorrect search and affecting the speed of convergence. (ii) The new individuals of GWOs are mutated in all dimensions, which causes search divergence and affects the convergence and quality of solutions. (iii) GWOs allow worse new individuals to be updated into the population, resulting in the population being unable to effectively estimate the region where the optimal solution is located and reducing the convergence speed of the algorithm. To address these drawbacks of GWOs, a fuzzy strategy grey wolf optimizer (FSGWO) for MMOPs is proposed in this paper. The role of the fuzzy strategy is to automatically adjust the control parameters of the algorithm to realize the adaptive balance of diversity and convergence. By using fuzzy control parameters, new evolutionary operations are designed to increase the algorithm’s abilities to explore new regions and search locally, and to improve the quality of the solutions to MMOPs. The main contributions of this paper are as follows:

(i) A new grey wolf individual update strategy is proposed. First, both global and local search information is added to the fuzzy search direction, which is used to guide individual mutation and enhance the individual’s ability to detect the optimal solution. Second, the fuzzy crossover operator is applied to generate new individuals and avoid the mutation of new individuals in all dimensions, which controls the divergence of the individual search. Finally, a noninferior selection strategy is employed to update the population, allowing only better new individuals to be updated into the population, which improves the ability of the grey wolf population to estimate the location of the optimal solution and helps accelerate the convergence.

(ii) A bivariate joint normal distribution is used as a fuzzy method to realize the adaptive adjustment of the control parameters of FSGWO. The two control parameters of the FSGWO are considered to have an intrinsic correlation, which is modeled by a bivariate joint normal distribution. In the iterative process, the parameters of the bivariate joint normal distribution are adaptively updated with information about the current optimal solutions to automatically control the convergence speed.

(iii) The FSGWO is verified on the 30 test functions of IEEE CEC2014, in both 30 and 50 dimensions, and on five engineering application problems, and it is compared with state-of-the-art competitive algorithms. The results show that the proposed algorithm has advantages over competitive algorithms in solving MMOPs, and the proposed improvement ideas are feasible for balancing the diversity and convergence of traditional GWOs. Specifically, for the 50D test functions of CEC2014, the average calculation accuracy of FSGWO is 33.63%, 46.45%, 62.94%, 64.99%, and 59.82% higher than those of the equilibrium optimizer algorithm (EO), modified particle swarm optimization (MPSO), original GWO, hybrid particle swarm optimization and grey wolf optimizer (HPSOGWO), and selective opposition-based GWO (SOGWO), respectively, which indicates that the FSGWO can significantly improve the calculation accuracy when solving high-dimensional MMOPs. For the 30D and 50D test functions of CEC2014, the results of the Wilcoxon signed-rank test show that the proposed algorithm is better than the competitive algorithms.

2. Related Work

2.1. Algorithm Flow of the Original Grey Wolf Optimizer

The grey wolf population is denoted by X = {X1, X2, …, XM}, where M is the number of grey wolves, and Xp is an individual in X. In the original GWO [21], the three best solutions appearing in the iterative process are called the three leading wolves and are denoted by Xα, Xβ, and Xδ. The three leading wolves are used to guide grey wolf individuals to round up prey, which is also known as updating individuals.

The search direction from Xp to Xα is calculated as

$$D_\alpha = \left| C_1 \odot X_\alpha - X_p \right| \tag{1}$$

where C1 is the neighborhood radius, which is a random vector between (0, 2). The operator ⨀ represents the element-wise (Hadamard) product of two vectors. C1 ⨀ Xα is a neighborhood point of Xα. The operator |∙| is an absolute value operator.

A mutant individual of Xp generated by Xα is written as

$$X_1 = X_\alpha - A_1 \odot D_\alpha \tag{2}$$

where A1 is the search step, which is a random vector between (−2, 2).

Similarly, a mutant individual of Xp generated by Xβ can be presented as

$$X_2 = X_\beta - A_2 \odot D_\beta \tag{3}$$

where Dβ = |C2 ⨀ Xβ − Xp| is the search direction. C2 is a random vector between (0, 2), and A2 is a random vector between (−2, 2).

In the same way, a mutant individual of Xp generated by Xδ is

$$X_3 = X_\delta - A_3 \odot D_\delta \tag{4}$$

where Dδ = |C3 ⨀ Xδ − Xp| is the search direction. C3 is a random vector between (0, 2), and A3 is a random vector between (−2, 2).

The new individual Xu, generated from the above three mutant individuals, can be expressed as

$$X_u = \frac{X_1 + X_2 + X_3}{3} \tag{5}$$

Finally, Xp in X is replaced with Xu, completing the update of the individual Xp.

This update strategy of GWO has two drawbacks. (i) Compared with Xp, all dimensions of Xu are mutated, which leads to divergence when solving high-dimensional MMOPs and reduces the quality of solutions. (ii) Moreover, GWO uses only three leading wolves as heuristic information to guide individuals to search for the optimal solution. The distribution of the optimal solutions of MMOPs is more complex, and the three leading wolves cannot quickly estimate the region of the optimal solution; thus, the algorithm slowly converges.

The pseudocode of the original GWO algorithm is shown in Algorithm 1.

| Algorithm 1. Pseudocode of the original GWO algorithm [21]. |

Initialize the grey wolf population Xi (i = 1, 2, …, M)
Initialize a, A, and C
Calculate the fitness of each search agent
Xα = the best search agent
Xβ = the second-best search agent
Xδ = the third-best search agent
while (t < Max number of iterations)
    for each search agent
        Update the position of Xi
    end for
    Update a, A, and C
    Calculate the fitness of all search agents
    Update Xα, Xβ, and Xδ
    t = t + 1
end while
return Xα
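As a rough illustration of Algorithm 1, the following is a minimal Python sketch of the original GWO for minimization. The function name `gwo`, its arguments, the scalar bounds `lb` and `ub`, and the clipping of new positions to those bounds are assumptions made for this sketch; they are not taken from [21].

```python
import random


def gwo(fitness, dim, lb, ub, pop_size=20, max_iter=100, seed=0):
    """Minimise `fitness` over [lb, ub]^dim with the original GWO update."""
    rng = random.Random(seed)
    wolves = [[rng.uniform(lb, ub) for _ in range(dim)] for _ in range(pop_size)]
    # The three best wolves found so far act as X_alpha, X_beta, X_delta.
    leaders = sorted(wolves, key=fitness)[:3]
    for t in range(max_iter):
        a = 2.0 * (1.0 - t / max_iter)  # decreases linearly from 2 towards 0
        new_wolves = []
        for xp in wolves:
            xu = []
            for j in range(dim):
                total = 0.0
                for leader in leaders:
                    A = a * (2.0 * rng.random() - 1.0)  # search step in (-a, a)
                    C = 2.0 * rng.random()              # neighbourhood radius in (0, 2)
                    D = abs(C * leader[j] - xp[j])      # search direction, Eq. (1)
                    total += leader[j] - A * D          # mutant value, Eqs. (2)-(4)
                # Eq. (5): average of the three mutants (clipping is an assumption).
                xu.append(min(max(total / 3.0, lb), ub))
            new_wolves.append(xu)
        wolves = new_wolves
        # Keep the three best solutions seen during the whole run.
        leaders = sorted(leaders + wolves, key=fitness)[:3]
    return leaders[0]
```

Every dimension of every wolf is rewritten in each iteration, and the new position always replaces the old one, which are the two behaviours that the drawbacks discussed above refer to.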

2.2. Fuzzy Adaptive Control Parameters

For GWOs, the design of adaptive control parameters is a challenge. In [28,29,30,31], nonlinear functions are used to design adaptive control parameters for GWOs, but such adaptive parameters are not effective in solving MMOPs, and the quality of the obtained solutions is not high. The main reason for this result is that the nonlinear function cannot know the complexity of the solution space of MMOPs and cannot use the current iteration information to estimate the position of the optimal solution.

In recent studies, fuzzy methods have been used to address the issues of adaptive control parameters of evolutionary algorithms. To achieve an optimal balance of exploitation and exploration in the chicken swarm optimization algorithm [43], the fuzzy system is applied to adaptively adjust the number of chickens and random factors. In [44], the fuzzy system is used for the design of the crossover rate control parameter of the differential evolution algorithm, which improves the diversity of the population. In [45], the fuzzy inference system is employed to automatically tune the control parameters of the whale optimization algorithm, which improves the convergence of the algorithm. The common feature of these fuzzy methods is updating the control parameters with the information of the optimal solution in the current iteration.

Fuzzy methods provide new ideas for the design of adaptive control parameters for GWOs. Inspired by this, in this study, bivariate joint normal distribution is used as a fuzzy method to design adaptive control parameters of GWO and new evolutionary operators are designed based on these fuzzy control parameters. Finally, the improved GWO is employed to solve MMOPs.

3. The Proposed Algorithm

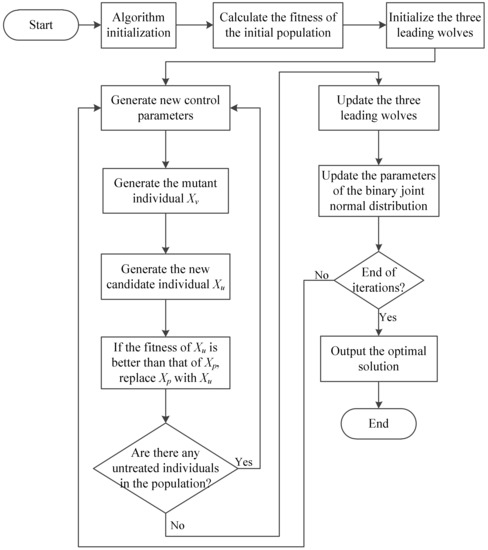

The flowchart of the FSGWO is shown in Figure 1. The algorithm parameters and population are initialized first; the fitness of each individual in the initial population is calculated, and the three individuals with the best fitness values are selected as the three initial leading wolves. In the iterative part of the algorithm, new control parameters are obtained by sampling the bivariate joint normal distribution, and then a new individual Xu is generated through mutation and crossover operations. If Xu is better than Xp, Xp is replaced with Xu; otherwise, it is not replaced. Finally, the parameters of the bivariate joint normal distribution are adaptively updated and the algorithm continues to the next iteration. After the end of the iterations, the best leading wolf is taken as the optimal solution and output.

Figure 1. Flowchart of FSGWO.

The key points of the FSGWO are described below.

3.1. Mutation Strategy with a Fuzzy Search Direction

The mutation of the grey wolf is realized by adding a fuzzy search direction to the grey wolf. The term fuzzy search direction refers to the product of the fuzzy step ra and the search direction Dc, and its calculation method is described as follows.

First, three leading wolves are used to estimate the current position Xc of the prey, and Xc is given by

$$X_c = \frac{X_\alpha + X_\beta + X_\delta}{3} \tag{6}$$

The search direction Dc of Xp is defined as

$$D_c = (X_c - X_p) + (X_{r1} - X_{r2}) \tag{7}$$

where $X_{r1}$ and $X_{r2}$ are two individuals randomly selected in X, and r1 ≠ r2 ≠ p. The expression Xc − Xp represents the search information from Xp to the prey, which belongs to the global search information. The expression $X_{r1} - X_{r2}$ represents the search information between individuals and belongs to the local search information.

There are three differences between Equation (7) and Equation (1). (i) Equation (7) has no absolute value operator and retains the heuristic effect of negative signs on the search. (ii) In the early stage of iterations, the positions of the three leading wolves are generally scattered. Therefore, Equation (7) uses the average value of the three leading wolves to estimate the position of the prey, which can reduce the adverse effects caused by the dispersion of guiding positions and help accelerate the convergence. (iii) Grey wolves have the habit of hunting collectively and surround the prey by exchanging information on the location of their prey. Equation (7) uses the difference $X_{r1} - X_{r2}$ between two randomly selected individuals to realize the exchange of prey location information between grey wolves, whereas Equation (1) has no mechanism for exchanging prey location information between grey wolves.

The mutant individual of Xp is generated by the fuzzy search direction, written as

$$X_\nu = X_p + r_a \odot D_c \tag{8}$$

where ra is the fuzzy step, which is a random vector between (0, 1). ra is a control parameter of FSGWO, and its generation method is described later. The expression ra ⨀ Dc represents the fuzzy search direction, which is the product of the fuzzy step ra and the search direction Dc. The expression Xp + ra ⨀ Dc starts from Xp and searches for prey in the fuzzy search direction ra ⨀ Dc.
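The mutation step can be sketched in a few lines of Python. This is an illustrative sketch only: the function name `fuzzy_mutation` and its arguments are hypothetical, and it assumes that the global term (prey estimate minus the individual) and the local term (difference of two other randomly chosen individuals) are simply added to form the search direction.

```python
import random


def fuzzy_mutation(pop, p, leaders, ra, rng):
    """Return the mutant of pop[p] using a fuzzy step `ra` (one value per dimension)."""
    dim = len(pop[p])
    # Estimate the prey position as the mean of the three leading wolves.
    xc = [sum(leader[j] for leader in leaders) / 3.0 for j in range(dim)]
    # Choose two distinct individuals, both different from p.
    r1, r2 = rng.sample([i for i in range(len(pop)) if i != p], 2)
    mutant = []
    for j in range(dim):
        # Global information (towards the prey) plus local information
        # (difference between two individuals); no absolute value is taken.
        dc = (xc[j] - pop[p][j]) + (pop[r1][j] - pop[r2][j])
        mutant.append(pop[p][j] + ra[j] * dc)
    return mutant
```

Because no absolute value is taken, the sign of each component of the direction is preserved, so an individual can move either way along a dimension.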

3.2. Fuzzy Crossover Operator

All dimensions of Xν are mutated. To make the search stable, it is necessary to select the values on some dimensions from Xp and copy them into the corresponding dimensions of Xu. This is achieved by a fuzzy crossover operator. The term fuzzy crossover operator refers to a crossover operator that uses the fuzzy crossover factor rb.

Let j denote the jth dimension of Xp and Xν. The crossover operation on the jth dimension can be expressed by

$$X_u^j = \begin{cases} X_p^j, & r \ge r_b^j \\ X_\nu^j, & \text{otherwise} \end{cases} \tag{9}$$

where rb is the fuzzy crossover factor, which is a random vector between (0, 1); $r_b^j$ is the value in the jth dimension of rb; and r is a random number (scalar) that follows the standard uniform distribution. The condition $r \ge r_b^j$ indicates that the value of the jth dimension of Xp is copied to the jth dimension of Xu using the roulette strategy.

After completing the operation of Equation (9), a dimension w is randomly specified, and then the mutation operation $X_u^w = X_\nu^w$ is performed to generate a new individual Xu.
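A minimal Python sketch of this crossover follows. The function name `fuzzy_crossover` is hypothetical, and the sketch assumes that a dimension keeps the parent's value when r is at least the crossover factor for that dimension and takes the mutant's value otherwise.

```python
import random


def fuzzy_crossover(parent, mutant, rb, rng):
    """Mix `parent` and `mutant` dimension by dimension using crossover factors `rb`."""
    dim = len(parent)
    # Keep the parent's value when r >= rb[j]; otherwise take the mutant's value.
    child = [parent[j] if rng.random() >= rb[j] else mutant[j] for j in range(dim)]
    # Force one randomly chosen dimension w to come from the mutant,
    # so the child always differs from the parent in at least that dimension.
    w = rng.randrange(dim)
    child[w] = mutant[w]
    return child
```

A small crossover factor therefore keeps most dimensions of the parent unchanged, while a factor close to 1 lets almost every dimension take the mutated value.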

The term fuzzy control parameter means that the control parameters ra and rb are not directly related in formulas, but both affect the diversity and the convergence of FSGWO, and there is an inherent fuzzy correlation between ra and rb. Therefore, these two control parameters are treated as related in this paper, and a bivariate joint normal distribution is used to describe that fuzzy relationship. The expression $r_c^j = [r_a^j, r_b^j]$ is a bivariate variable, where $r_a^j$ and $r_b^j$ are the values on the jth dimension of ra and rb, respectively. $r_c^j$ follows a bivariate joint normal distribution with a mean of μ and a covariance of ∑, denoted as

$$r_c^j \sim N(\mu, \Sigma) \tag{10}$$

where μ = [$u_{ra}$, $u_{rb}$], and $u_{ra}$ and $u_{rb}$ are both scalars. The covariance matrix ∑ is defined as

where s1 is a random number (scalar) following the standard uniform distribution. The terms s2 and s3 are random numbers (scalars) following the standard normal distribution. The values of s2 and s3 obtained by sampling the standard normal distribution may be greater than 1, so the diagonal elements of ∑ have diversity, which makes rc also have diversity.

By sampling Equation (10), a matrix rc with d rows and two columns can be obtained as follows:

$$r_c = \begin{bmatrix} r_a^1 & r_b^1 \\ r_a^2 & r_b^2 \\ \vdots & \vdots \\ r_a^d & r_b^d \end{bmatrix} \tag{12}$$

where d is the dimensionality of the MMOPs. The terms ra and rb are fuzzily related by Equation (10), so the crossover operation of Equation (9) is referred to as the fuzzy crossover operation. The control parameters in rc can be used by a d-dimensional individual in a complete mutation and crossover operation.
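To make the sampling step concrete, the sketch below draws the d × 2 parameter matrix from a bivariate normal distribution using only the Python standard library. The function name `sample_control_params` is hypothetical; the covariance matrix is taken as a given 2 × 2 input rather than built from s1, s2, and s3, and the clipping to [0.001, 0.999] follows the correction described for step 4.1 of the algorithm.

```python
import math
import random


def sample_control_params(mu, sigma, dim, rng=None):
    """Draw `dim` rows [ra_j, rb_j] from a bivariate normal N(mu, sigma)."""
    rng = rng or random.Random()
    # Cholesky factor of the symmetric 2x2 covariance [[s11, s12], [s12, s22]];
    # s11 must be positive.
    s11, s12, s22 = sigma[0][0], sigma[0][1], sigma[1][1]
    l11 = math.sqrt(s11)
    l21 = s12 / l11
    l22 = math.sqrt(max(s22 - l21 * l21, 0.0))
    rows = []
    for _ in range(dim):
        z1 = rng.gauss(0.0, 1.0)
        z2 = rng.gauss(0.0, 1.0)
        ra = mu[0] + l11 * z1
        rb = mu[1] + l21 * z1 + l22 * z2
        # Correct values that leave (0, 1) to 0.001 or 0.999.
        rows.append([min(max(ra, 0.001), 0.999), min(max(rb, 0.001), 0.999)])
    return rows
```

For example, `sample_control_params([0.5, 0.5], [[0.1, 0.0], [0.0, 0.1]], 30)` uses the initial values of μ and ∑ given for step 1 and returns one [ra, rb] pair per dimension of a 30-dimensional individual.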

3.3. Updated Parameters of the Bivariate Joint Normal Distribution

To improve the diversity of ra and rb, it is necessary to update μ and ∑ before each iteration of FSGWO.

3.3.1. Update of μ

Updating μ with a fuzzy perturbation is described as follows.

Xp before and after the update is denoted by $X_p^{t}$ and $X_p^{t+1}$, respectively. The fitness values of $X_p^{t}$ and $X_p^{t+1}$ are denoted by $f(X_p^{t})$ and $f(X_p^{t+1})$, respectively. The absolute value $|f(X_p^{t+1}) - f(X_p^{t})|$ represents the magnitude of the change in the fitness value before and after the update of Xp. In population X, the individual with the largest $|f(X_p^{t+1}) - f(X_p^{t})|$ is denoted by Xm, where m is the ID of Xm. Xm can be written as

$$X_m = \arg\max_{X_p \in X} \left| f(X_p^{t+1}) - f(X_p^{t}) \right| \tag{13}$$

The control parameters of Xm are stored in the mth row of rc, denoted by $r_c^m = [r_a^m, r_b^m]$. $r_c^m$ can be regarded as heuristic information for updating μ, namely, fuzzy perturbation. Updating μ with $r_c^m$ can be written as

where c is a conversion factor, which is a constant between (0, 1). The expression c × $r_c^m$ takes part of $r_c^m$ as heuristic information to update μ. To avoid excessive perturbation, which would cause the algorithm to diverge, c is usually 0.1 or 0.2.
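Selecting the individual whose fitness changed the most is a simple arg-max over the population. The helper below is a hypothetical sketch of that selection only; it does not implement the update of μ itself.

```python
def most_changed_index(fitness_before, fitness_after):
    """Return the index m of the individual with the largest absolute fitness change."""
    changes = [abs(after - before)
               for before, after in zip(fitness_before, fitness_after)]
    # max over indices, keyed by the size of the change (first index wins ties).
    return max(range(len(changes)), key=changes.__getitem__)
```

The control parameters that produced individual m would then supply the fuzzy perturbation used to update μ.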

3.3.2. Update of ∑

The update method of ∑ is relatively simple. First, s1 is obtained by sampling the standard uniform distribution; s2 and s3 are sampled from the standard normal distribution. Finally, a new ∑ can be obtained by substituting s1, s2, and s3 into Equation (11).

3.4. Steps of FSGWO

The steps of FSGWO are shown in Algorithm 2.

| Algorithm 2. Steps of the FSGWO algorithm. |


Some details of the algorithm steps in Algorithm 2 are described below.

- (i) In step 1, the initial value of μ is [0.5, 0.5], and the initial value of ∑ is [0.1, 0; 0, 0.1]. The value of c is 0.1 or 0.2, which means taking 10% or 20% of $r_c^m$ as a fuzzy perturbation.

- (ii) In step 4.1, the values of the elements in rc, which are sampled from the bivariate joint normal distribution N(μ, ∑), may fall outside (0, 1); an element’s value is corrected to 0.999 if it crosses the upper bound or to 0.001 if it crosses the lower bound.

- (iii) In steps 4.2.1 and 4.2.2, the value of each element in Xν and Xu must lie in [lb, ub]; if the value of an element exceeds the upper bound, it is corrected to rand × ub, and if it falls below the lower bound, it is corrected to rand × lb, where rand is a random number following a standard uniform distribution.

- (iv) In step 4.4, the value of each element in μ must lie in (0, 1); if the value of an element in μ exceeds the upper bound, it is corrected to 0.99, and if it falls below the lower bound, it is corrected to 0.01.

- (v) Comparing the FSGWO algorithm flow in Algorithm 2 with the original GWO algorithm flow in Algorithm 1 shows that the operations lacking in Algorithm 1 mainly include fuzzy control parameters in step 4.1, the fuzzy crossover operation in step 3, noninferior selection in step 4.2.4, and fuzzy perturbation in step 4.4.
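The boundary correction in item (iii) and the noninferior selection can be sketched as follows. The function names are hypothetical, scalar bounds are assumed, and selection is written for minimization with a strict comparison, following the rule that an individual is replaced only if the new one is better.

```python
import random


def repair_bounds(x, lb, ub, rng):
    """Correct out-of-range values to rand * ub (too large) or rand * lb (too small)."""
    repaired = []
    for value in x:
        if value > ub:
            value = rng.random() * ub
        elif value < lb:
            value = rng.random() * lb
        repaired.append(value)
    return repaired


def noninferior_select(current, candidate, fitness):
    """Keep `candidate` only if its fitness is strictly better (lower) than `current`."""
    return candidate if fitness(candidate) < fitness(current) else current
```

Note that rand × ub and rand × lb are guaranteed to fall inside [lb, ub] only when lb ≤ 0 ≤ ub, as is the case for the symmetric range [−100, 100] used in the CEC2014 experiments.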

3.5. Analysis of Computational Complexity

M is the population size, d is the dimension of the problem, and T is the number of iterations.

According to the algorithm steps shown in Algorithm 2, the computational cost of FSGWO is concentrated in the iterative part. The computational cost of an iteration mainly includes population mutation at O(M × d), population crossover at O(M × d), the calculation of the population fitness at O(M), an update of the leading wolves at O(M), and an update of the parameters at O(1). The computational cost of T iterations is O(T × (M × d + M × d + M + M + 1)). Therefore, the computational complexity of FSGWO is O(T × M × d).

According to the algorithm steps shown in Algorithm 1, the computational cost of the original GWO is concentrated in the iterative part. The computational cost of an iteration mainly includes population mutation guided by the three leading wolves at O(M × d + M × d + M × d), the calculation of the population fitness at O(M), an update of the leading wolves at O(M), and an update of the parameters at O(1). The computational cost of T iterations is O(T × (3 × M × d + 2 × M + 1)). Therefore, the computational complexity of the original GWO is O(T × M × d).

According to the above analysis, the computational complexity of FSGWO is the same as that of GWO.

4. Results

In this section, the FSGWO algorithm is verified on 30 test functions of IEEE CEC2014 [46] and 5 engineering application problems.

The compared algorithms include GWO [21], HPSOGWO [47], SOGWO [36], EO [48], and MPSO [49]. GWO is the original GWO. HPSOGWO is an improved GWO with a particle swarm individual update strategy. SOGWO uses selective opposition to enhance the diversity of GWO, and the convergence speed is faster. EO is inspired by control volume mass balance models used to estimate both dynamic and equilibrium states. MPSO is a particle swarm optimization algorithm using chaotic nonlinear inertia weights and has a good balance of diversity and convergence.

The key parameters of the competitive algorithms are shown in Table 1, and the source code of those algorithms was provided by the original papers. The parameter values of the competitive algorithms were taken from the original papers and the default settings of the source code. The setting method of the control parameter values of FSGWO is described in Algorithm 2. In Table 1, N is the population size, which is uniformly taken as 50 in this paper. In EO, a1 is a constant value that controls exploration ability and a2 is a constant value used to manage exploitation ability; GP is a parameter used to balance exploration and exploitation. In MPSO, c1 and c2 are referred to as the acceleration factors; ω1 and ω2 are inertia weights used to balance exploration and exploitation. In GWO, parameter a is the neighborhood radius. In HPSOGWO, rand is a random number between (0, 1) and ω is an inertia weight. In SOGWO, parameter a is the neighborhood radius. In FSGWO, c is a conversion factor between (0, 1); ra and rb are adaptive control parameters.

Table 1. Key parameters of the competitive algorithms.

4.1. Results of the Test Functions of CEC2014

The IEEE Congress on Evolutionary Computation 2014 (CEC2014) test suite had 30 complex optimization functions [46], where F1–F3 were unimodal functions and F4–F30 were multimodal functions. In this paper, the proposed algorithm was verified on 30 complex functions (F1–F30) of CEC2014. According to the requirements of the competition, the value range of each dimension of the decision variable was [−100, 100], and the maximum number of fitness function evaluations was d × 10^4. The experiment was repeated 51 times. The absolute value |f(x) − f(x*)| was the final result of a calculation, where f(x) was the optimal value of the function obtained by the algorithm, and f(x*) was the theoretical optimal value of the function. The smaller the value of |f(x) − f(x*)| was, the closer the optimal value obtained by the algorithm was to the theoretical optimal value. If |f(x) − f(x*)| < 10^−8, the calculation result was taken as 0.
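The error measure and the evaluation budget described above reduce to two small helpers. The function names below are hypothetical and are given only to make the rules explicit.

```python
def cec_error(f_found, f_optimum, tol=1e-8):
    """Return |f(x) - f(x*)|, reported as 0 when it is below the tolerance."""
    err = abs(f_found - f_optimum)
    return 0.0 if err < tol else err


def max_evaluations(dim):
    """Maximum number of fitness evaluations allowed for a dim-dimensional run."""
    return dim * 10**4
```

For a 30-dimensional function, `max_evaluations(30)` gives a budget of 300,000 fitness evaluations; with a population of 50 and one evaluation per individual per iteration, that corresponds to roughly 6000 iterations.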

4.1.1. Results of 30-Dimensional Test Functions

Table 2 shows the calculation results of the related algorithms, in which the mean (Mean) and standard deviation (STD) of the index were calculated using the results of 51 runs of each algorithm. In Table 2, the optimal mean and standard deviation for each function are highlighted with a bold font and gray background.

Table 2. Results of the related algorithms for 30-dimensional test functions.

From the mean results in Table 2, the calculated results of FSGWO on unimodal functions F1–F3 were at least four orders of magnitude better than those of GWO, HPSOGWO, and SOGWO. For the F1 function, the exponent of the result of FSGWO was e+03, while the exponents of the results of GWO, HPSOGWO, and SOGWO were all e+07. For the F2 function, the exponent of the result of FSGWO was e+00, while the exponents of the results of GWO, HPSOGWO, and SOGWO were e+09, e+09, and e+08, respectively. For the F3 function, the exponent of the result of FSGWO was e+00, while the exponents of the results of GWO, HPSOGWO, and SOGWO were all e+04. The results for the unimodal functions show that FSGWO had excellent local optimization ability.

From the mean in Table 2, it can be seen that the calculation results of the proposed algorithm for 24 functions (F1–F5, F8–F12, F14, F16–F23, and F26–F30) were better than those of the competitive algorithms, accounting for 80.0%, which indicates that FSGWO could obtain high-quality solutions for MMOPs.

From the STD in Table 2, it can be seen that the results of the proposed algorithm for 23 functions (F1–F5, F8–F12, F14, F16–F23, F26, and F28–F30) were better than those of the competitive algorithms, accounting for 76.7%, which indicates that the FSGWO algorithm had good stability and convergence.

The mean results in Table 2 were analyzed by the Wilcoxon signed-rank test, and the significance level was 0.05. The results of the Wilcoxon test are shown in Table 3. In Table 3, all p values were less than 0.05, which meant that the mean values of FSGWO were significantly different from those of the other algorithms. The results of Table 2 and Table 3 show that the quality of solutions obtained by FSGWO was better than that of the competitive algorithms for the 30 test functions in 30 dimensions.
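For readers unfamiliar with the test, the sketch below shows how a Wilcoxon signed-rank comparison of two paired lists of mean errors could be computed. It is an illustrative standard-library implementation using the normal approximation without tie or continuity corrections, not the procedure used to produce Table 3; the function names are hypothetical.

```python
import math


def average_ranks(values):
    """Rank values from 1 to n, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=values.__getitem__)
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2.0 + 1.0
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def wilcoxon_signed_rank(x, y):
    """Return (W_plus, W_minus, two-sided p value) for paired samples x and y."""
    diffs = [a - b for a, b in zip(x, y) if a != b]  # zero differences are dropped
    n = len(diffs)
    if n == 0:
        return 0.0, 0.0, 1.0
    ranks = average_ranks([abs(d) for d in diffs])
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    w_minus = sum(r for r, d in zip(ranks, diffs) if d < 0)
    # Normal approximation of the null distribution of the rank sum.
    mean = n * (n + 1) / 4.0
    sd = math.sqrt(n * (n + 1) * (2 * n + 1) / 24.0)
    z = (min(w_plus, w_minus) - mean) / sd
    p_value = math.erfc(abs(z) / math.sqrt(2.0))
    return w_plus, w_minus, p_value
```

With the per-function mean errors of two algorithms as `x` and `y`, a p value below 0.05 indicates a significant difference at the level used here, and the larger of the two rank sums shows which algorithm tends to have the larger errors. For small samples an exact test, such as the one provided by `scipy.stats.wilcoxon`, is preferable to the normal approximation.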

Table 3. Results of the Wilcoxon test for the mean values in Table 2.

According to the data in Table 2, the percentage of improvement in calculation accuracy of FSGWO over each competing algorithm can be calculated, and the results are shown in Table 4. In Table 4, a negative number means that the calculation accuracy of FSGWO for this test function was not as good as that of the competitive algorithm, and the term Average represents the average percentage of improvement in the calculation accuracy of FSGWO over the competitive algorithm for the 30 test functions. Table 4 shows that for the 30 test functions in 30 dimensions, the average calculation accuracy of FSGWO was 46.98%, 54.35%, 64.84%, 69.02%, and 62.27% higher than those of EO, MPSO, GWO, HPSOGWO, and SOGWO, respectively. The calculation accuracy of FSGWO was significantly higher than those of the competitive algorithms.

Table 4. Comparison of the calculation accuracy of related algorithms for the 30 test functions in 30 dimensions.

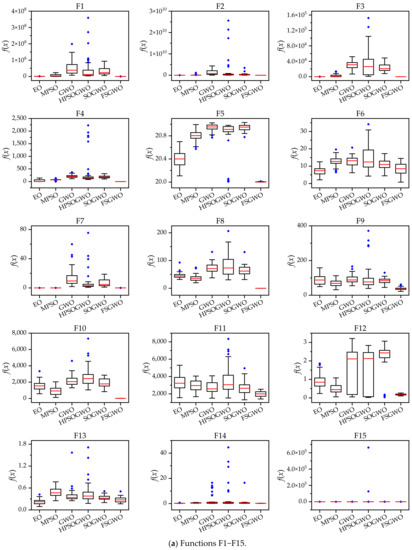

Figure 2 shows box plots of the related algorithms for the 30 test functions which were drawn with 51 calculation results of related algorithms. In Figure 2, the short red line represents the median; the black box represents the upper quartile (Q3) and the lower quartile (Q1); and the blue solid prism represents an outlier.

Figure 2. The box plots of the related algorithms for the 30-dimensional functions.

For 27 functions (F1–F5, F7–F23, and F26–F30), the box length of FSGWO was shorter than those of the competitive algorithms or comparable to them, which meant that the proposed algorithm had good convergence; therefore, the results of 51 runs were relatively concentrated, and the box length was shorter.

For 28 functions (F1–F23 and F26–F30), the median of the proposed algorithm was smaller than those of the competitive algorithms or comparable to them, which indicated that the proposed algorithm had good diversity and local optimization ability and could find high-quality solutions.

For 28 functions (F1–F25 and F28–F30), the number of outliers of FSGWO was less than those of the compared algorithms, or the outliers were mainly distributed around the median, which indicated that the calculation results of FSGWO were close to the normal distribution, and the robustness of FSGWO was better than those of the compared algorithms.

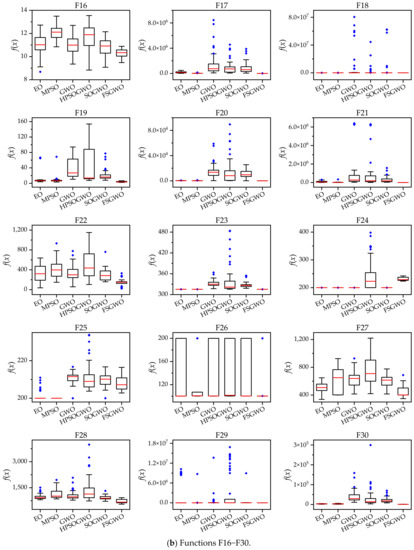

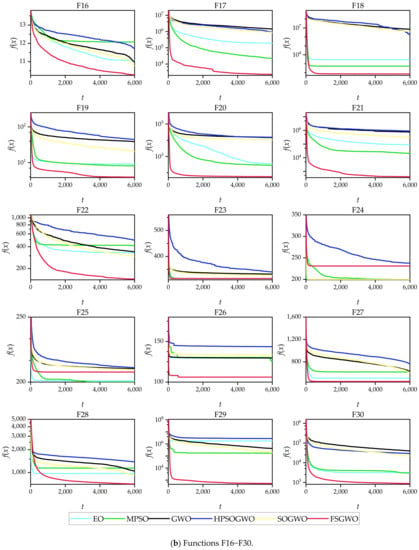

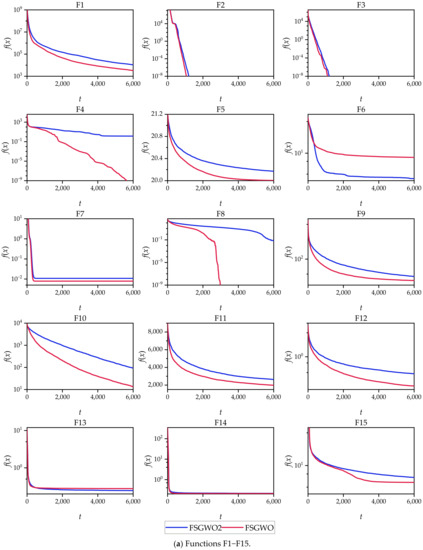

Figure 3 shows the convergence curves of the related algorithms for the 30 test functions in 30 dimensions, where the abscissa t is the number of iterations, and the ordinate f(x) is the average fitness of 51 independent experiments of each algorithm.

Figure 3. Convergence curves of the related algorithms for the 30-dimensional functions.

From the perspective of the changing tendencies of the convergence curves in the early stage of the iterations, the fitness values of the proposed algorithm decreased faster than those of the competitive algorithms for 29 functions (F1–F5 and F7–F30), which indicated that FSGWO had good diversity and convergence and could quickly locate the optimal solution in the early stage of the iterations.

From the overall change tendencies of the curves and the final convergence positions, for the 25 functions (F1–F5, F8–F12, F14–F23, and F26–F30), the convergence curves of FSGWO were better than those of the competitive algorithms.

4.1.2. Results of 50-Dimensional Test Functions

The IEEE CEC2014 test functions were set to 50 dimensions. Table 5 shows the calculation results of the related algorithms for the 50-dimensional functions. In Table 5, the optimal mean and standard deviation for each function are highlighted with a bold font and gray background. As the dimensions increased, so did the complexity of the MMOPs. For 23 functions (F1–F5, F7–F12, F14, F16–F18, F20–F23, F26, and F28–F30), the mean values of FSGWO were better than those of the competitive algorithms, accounting for 76.7%.

Table 5. Results of the related algorithms for 50-dimensional functions.

The Wilcoxon signed-rank test was used to analyze the mean values in Table 5, with a significance level of 0.05. The results of the test are shown in Table 6. Table 6 shows that all p values were less than 0.05, which meant that the calculation results of FSGWO for the 50-dimensional functions were significantly different from those of the competitive algorithms.

Table 6.

Results of the Wilcoxon test for the mean values of Table 5.

According to the data in Table 5, the percentage of improvement in calculation accuracy between FSGWO and each competing algorithm can be calculated, and the results are shown in Table 7. In Table 7, a negative number means that the calculation accuracy of FSGWO for this test function was not as good as that of the competitive algorithm and the term Average represents the average percentage of improvement in the calculation accuracy of FSGWO over the competitive algorithm for 30 test functions. Table 7 shows that for the 30 50-dimensional test functions, the average calculation accuracy of FSGWO was 33.63%, 46.45%, 62.94%, 64.99%, and 59.82% higher than those of EO, MPSO, GWO, HPSOGWO, and SOGWO, respectively. The calculation accuracy of FSGWO was significantly higher than those of the competitive algorithms for the 50D test functions.
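The text does not give the formula behind Table 7. One plausible reading, assumed here, is the relative reduction of the mean error with respect to the competing algorithm, averaged over the test functions. The function names and numbers below are hypothetical and are not taken from Table 5.

```python
def improvement_percent(fsgwo_mean, rival_mean):
    """Relative improvement of FSGWO over a rival in percent (assumed definition)."""
    return (rival_mean - fsgwo_mean) / abs(rival_mean) * 100.0

def average_improvement(fsgwo_means, rival_means):
    """Average of the per-function improvement percentages."""
    values = [improvement_percent(f, r) for f, r in zip(fsgwo_means, rival_means)]
    return sum(values) / len(values)

# Hypothetical mean errors on three functions (lower is better).
fsgwo_means = [50.0, 2.0, 12.0]
rival_means = [100.0, 4.0, 10.0]
per_function = [improvement_percent(f, r) for f, r in zip(fsgwo_means, rival_means)]
print(per_function)  # the third entry is negative: FSGWO was worse on that function
print(average_improvement(fsgwo_means, rival_means))
```

Under this definition a negative entry has the same meaning as in Table 7: the competing algorithm was more accurate on that function.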

Table 7.

Comparison of the calculation accuracy of related algorithms for 30 50-D test functions.

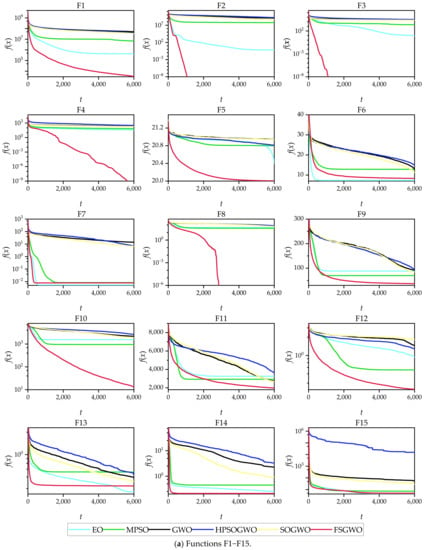

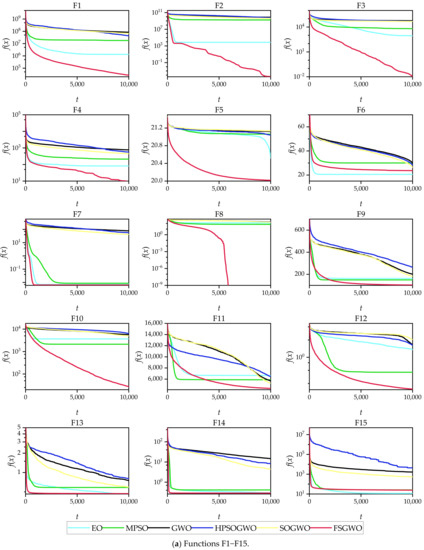

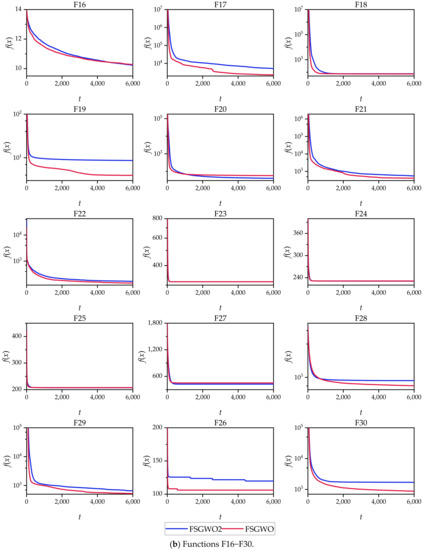

Figure 4 shows the convergence curves of the related algorithms for the 50-dimensional functions, which were plotted with an average of 51 calculations of each algorithm. From the changing tendencies of the convergence curves and the final convergence positions, the convergence tendencies of the proposed algorithm for 25 test functions (F1–F5, F7–F14, F16–F18, F20–F23, and F26–F30) were better than those of the competitive algorithms.

Figure 4.

Convergence curves of the related algorithms for 50-dimensional functions.

4.1.3. Verification of the Validity of the Fuzzy Control Parameters

In this paper, the correlation between control parameters and rb is modeled by the bivariate joint normal distribution N(μ, ∑). A comparative experiment was conducted to verify the effectiveness of that modeling idea. The control parameters and rb were assumed to be independent random variables. In addition, both parameters followed the standard uniform distribution; the other parts of the FSGWO algorithm remained unchanged, and the new FSGWO at this time was denoted as FSGWO1.
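The difference between the two variants can be sketched as two sampling schemes. The sketch below draws one pair of control parameters either from a bivariate normal distribution (as in FSGWO) or from two independent standard uniform distributions (as in FSGWO1). The mean, the covariance values, and the clipping to [0, 1] are illustrative assumptions, not the settings of the paper.

```python
import math
import random

def sample_bivariate_normal(mu, cov, rng):
    """Draw one sample from N(mu, cov) using the Cholesky factor of a 2x2 covariance."""
    (s11, s12), (_, s22) = cov
    l11 = math.sqrt(s11)
    l21 = s12 / l11
    l22 = math.sqrt(s22 - l21 * l21)
    z1, z2 = rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)
    return mu[0] + l11 * z1, mu[1] + l21 * z1 + l22 * z2

def clip01(v):
    """Keep a parameter inside [0, 1]; this clipping rule is an assumption."""
    return min(1.0, max(0.0, v))

rng = random.Random(0)
mu = (0.5, 0.5)                       # hypothetical mean
cov = ((0.01, 0.006), (0.006, 0.01))  # hypothetical covariance, correlation 0.6

# FSGWO-style: correlated control parameters from the joint normal distribution.
correlated = [tuple(map(clip01, sample_bivariate_normal(mu, cov, rng))) for _ in range(5)]
# FSGWO1-style: two independent standard uniform parameters.
independent = [(rng.random(), rng.random()) for _ in range(5)]
print(correlated)
print(independent)
```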

The 30-dimensional functions (F1–F30) were solved by FSGWO and FSGWO1, and the calculation results are shown in Table 8. In Table 8, the optimal mean and standard deviation for each function are highlighted with a bold font and gray background. For 24 complex multimodal functions (F1–F5, F7–F12, F14–F16, F18, F19, F21–F23, F25, F26, and F28–F30), the mean values of FSGWO were better than those of FSGWO1, accounting for 80.0%. The Wilcoxon signed-rank test was used to analyze the mean values in Table 8 at a significance level of 0.05. The results of the test are shown in Table 9. The p value (2.7610e-03) was less than the significance level, indicating that there was a significant difference in the mean values between FSGWO and FSGWO1.

Table 8.

Results of FSGWO and FSGWO1 for 30-dimensional functions.

Table 9.

Results of the Wilcoxon test for the mean values of Table 8.

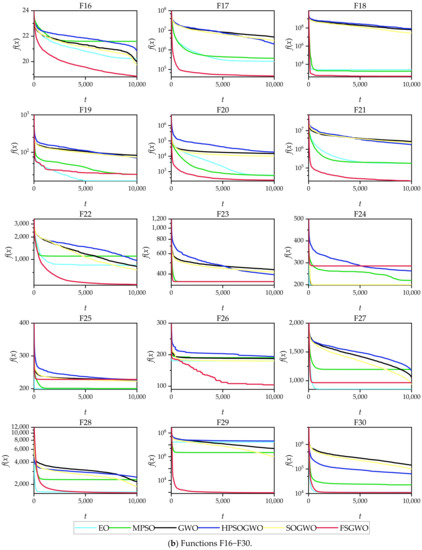

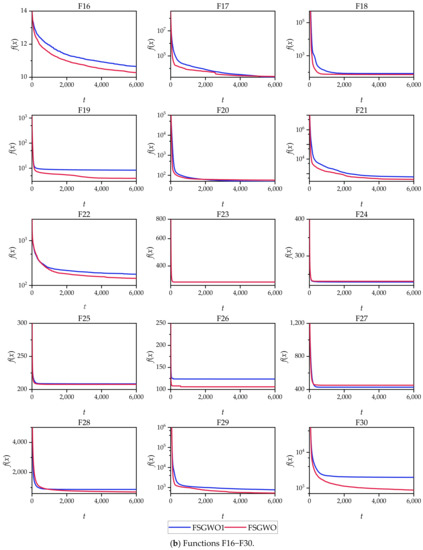

Figure 5 demonstrates the convergence curves of FSGWO and FSGWO1 for the 30 30D test functions, which were plotted with the averages of 51 runs of the 2 algorithms. Figure 5 shows that the change tendencies and the final convergence positions of FSGWO were better than or comparable to those of FSGWO1 for 29 functions (F1–F5 and F7–F30).

Figure 5.

Convergence curves of FSGWO and FSGWO1.

According to the results in Table 8, Table 9, and Figure 5, the optimization ability of FSGWO was better than that of FSGWO1. Therefore, using the bivariate joint normal distribution as a fuzzy method to adaptively adjust the control parameters and rb is valid, and this fuzzy method helps improve the convergence speed of FSGWO and the quality of the solution.

4.1.4. Verification of the Effectiveness of the Fuzzy Perturbation Strategy

In Equation (14), the fuzzy perturbation is used to update the mean μ of the bivariate joint normal distribution. A comparative experiment was used to verify that was an effective design. was extracted from by m of Equation (13), and m was randomly generated instead of using Equation (13); the other parts of FSGWO remained unchanged, and the new FSGWO was denoted as FSGWO2.

The 30-dimensional functions were solved with FSGWO and FSGWO2, and the results are shown in Table 10. In Table 10, the optimal mean and standard deviation for each function are highlighted with a bold font and gray background. The mean values of FSGWO were better than those of FSGWO2 for 24 functions (F1–F5, F7–F12, F14, F15, F17–F19, F21–F24, F26, and F28–F30), accounting for 80.0%. Table 11 shows the results of the Wilcoxon test for the mean values of Table 10 at a significance level of 0.05. The p value was 2.9719e-03, which was less than the significance level, indicating that there was a significant difference in the mean values between FSGWO and FSGWO2.

Table 10.

Results of FSGWO and FSGWO2 for 30-dimensional functions.

Table 11.

Results of the Wilcoxon test for the mean values of Table 10.

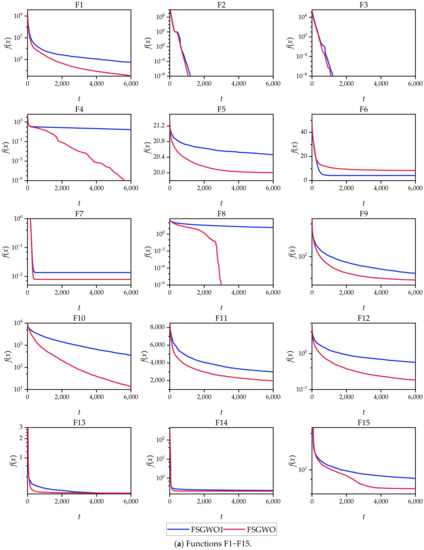

Figure 6 shows the convergence curves of FSGWO and FSGWO2, which were plotted with the averages of 51 runs of the 2 algorithms. Figure 6 shows that FSGWO converged faster than FSGWO2 or was comparable to FSGWO2 for 29 functions (F1–F5 and F7–F30), accounting for 96.7%.

Figure 6.

Convergence curves for FSGWO and FSGWO2.

4.2. Results for Economic Load Dispatch Problems of Power Systems

Economic load dispatch (ELD) is a complex optimization problem with constraints in power systems [50,51]. The task of ELD is to reasonably dispatch the load of the system to each generator to minimize the fuel cost of the system and satisfy the relevant constraints.

4.2.1. The Basic Model of the ELD Problem

The economic load dispatch of thermal power units is discussed in this paper. The fuel cost of an ELD can be approximately expressed as

where i is the index of a generator unit and NG is the total number of generators, which is the dimension of the ELD problem. For the ith unit, Pi is the output power, Pi^min is the minimum output power, Fi(Pi) is the generation cost function, and ai, bi, ci, ei, and fi are the power generation cost coefficients. The absolute value operator |·| maps the negative half-waves of the sine function to positive values, which generates multimodality; thus, the ELD is a constrained high-dimensional multimodal optimization problem.
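The role of the absolute sine term can be illustrated with a short sketch. It assumes the widely used valve-point cost form F_i(P_i) = a_i P_i^2 + b_i P_i + c_i + |e_i sin(f_i (P_i^min − P_i))|, which is consistent with the symbols described above; the coefficient names a, c, and f and all numerical values are assumptions and are not taken from the CEC2011 data set.

```python
import math

def unit_cost(p, p_min, a, b, c, e, f):
    """Fuel cost of one unit: quadratic part plus valve-point term |e*sin(f*(p_min - p))|."""
    return a * p * p + b * p + c + abs(e * math.sin(f * (p_min - p)))

def total_cost(powers, units):
    """Sum of unit costs; `units` holds (p_min, a, b, c, e, f) for each generator."""
    return sum(unit_cost(p, *u) for p, u in zip(powers, units))

# Hypothetical coefficients for two units.
units = [(100.0, 0.002, 8.0, 500.0, 300.0, 0.03),
         (50.0, 0.004, 7.0, 300.0, 200.0, 0.04)]

# At p = p_min the sine term vanishes, so only the quadratic part remains.
print(total_cost([100.0, 50.0], units))
# Away from p_min the rectified sine adds ripples, i.e., many local minima.
print([round(unit_cost(p, *units[0]), 2) for p in (100.0, 150.0, 200.0, 250.0)])
```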

The main constraints of ELDs are as follows.

- (i) Power balance constraints. These constraints require that the total power generated by all units be equal to the sum of the system load PD and the transmission loss.

- (ii) Generating capacity constraints.

- (iii) Ramp rate limits.

- (iv) Prohibited operating zones.

4.2.2. Results of ELD Cases

The data of the static ELD cases were taken from the IEEE CEC2011 competition dataset [51]; the numbers of units were 40 and 140, respectively. According to the requirements of the competition, the maximum number of fitness function evaluations was 15,000. The best result (best), mean (mean), median (median), worst result (worst), and standard deviation (STD) were computed from 25 independent runs of each algorithm.

In addition to the six algorithms shown in Table 1, the compared algorithms included the island-based harmony search (iHS) [52], intellects-masses optimizer (IMO) [53], modified intellects-masses optimizer (MIMO) [53], adaptive population-based simplex (APS 9) [54], enhanced salp swarm algorithm (ESSA) [55], and the genetic algorithm with a new multiparent crossover (GA-MPC) [56]. iHS is a multipopulation evolutionary algorithm with an island-based harmony search. IMO is a dual-population culture algorithm, and its parameters hardly need to be adjusted. MIMO is an IMO algorithm with a trust domain reflection strategy and strong local search abilities. APS 9 is an improved adaptive population-based simplex method. ESSA is a multistrategy enhanced salp swarm algorithm. GA-MPC is a genetic algorithm with three consecutive parents.

Table 12 shows the results of the related algorithms for the 40-unit case. The results of the 1st–6th algorithms were computed in this study, and the results of the 7th–12th algorithms were taken from the original papers. In Table 12, the best values of the indicators are highlighted with a bold font and gray background; a dash indicates that the data are not provided in the original paper.

Table 12.

Results of the related algorithms for the 40-unit ELD case.

In Table 12, the mean values of all related algorithms were of the order of 1e+05, and their mantissas were also very close, which meant that all algorithms could approximate the optimal solution; the small differences among the results were mainly caused by the different local optimization abilities of the algorithms. The best, mean, and median of FSGWO were 1.2260e+05, 1.2514e+05, and 1.2519e+05, respectively, which were better than those of the competitive algorithms, indicating that FSGWO had relatively strong local optimization ability and stability.

Table 13 shows the results of the related algorithms for the 140-unit case. In Table 13, the best values of the indicators are highlighted with a bold font and gray background. The best, mean, and median of FSGWO were 1.7551e+06, 1.8119e+06, and 1.8107e+06, respectively, which were still better than those of the competitive algorithms, indicating that the proposed algorithm still had excellent optimization performance for the high-dimensional ELD problem. The simplex of ESSA and trust region of MIMO were both classical numerical optimization strategies; Table 13 shows that the fuzzy search strategy of FSGWO was competitive with those numerical optimization strategies when used to solve high-dimensional ELD problems.

Table 13.

Results of the related algorithms for the 140-unit ELD case.

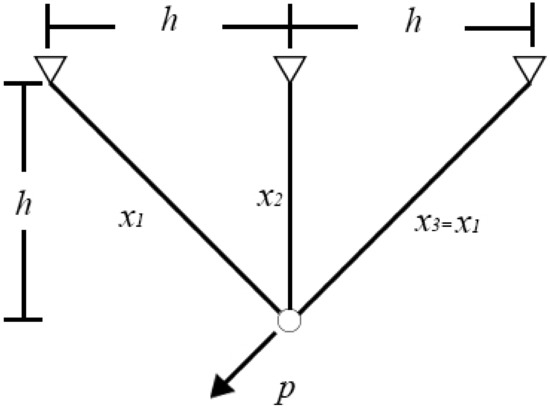

4.3. Design of Three-Bar Truss

In structural engineering, a truss is a triangulated system that provides an efficient way to span long distances. Because the members of a truss carry only axial forces, the purpose of truss design is to use less material while maintaining the effectiveness of the entire system. A reduction in the amount of material used is usually expressed as a reduction in the diameter of a member. A three-bar planar truss structure is shown in Figure 7 [57]. In this problem, x1, x2, and x3 are the normalized diameters of the three members, and x3 is equal to x1. The aim of this problem is to achieve the minimum volume of the three-bar truss by optimizing the values of x1 and x2.

Figure 7.

Three-bar truss design.

The problem shown in Figure 7 can be expressed as an optimization problem:

where x1 and x2 lie in [0, 1].
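As an illustration, the sketch below evaluates the three-bar truss problem in the formulation commonly used in the literature: the volume (2√2 x1 + x2)·l is minimized under three stress constraints with l = 100 cm and P = σ = 2 kN/cm². This formulation and the evaluation point are assumptions drawn from the standard benchmark, not a transcription of Equation (20) or of Table 14.

```python
import math

L_CM, P, SIGMA = 100.0, 2.0, 2.0  # bar length (cm), load and stress limit (kN/cm^2)

def truss_volume(x1, x2):
    """Objective: volume of the three-bar truss."""
    return (2.0 * math.sqrt(2.0) * x1 + x2) * L_CM

def truss_constraints(x1, x2):
    """Return (g1, g2, g3); a design is feasible when every g <= 0."""
    r2 = math.sqrt(2.0)
    denom = r2 * x1 * x1 + 2.0 * x1 * x2
    g1 = (r2 * x1 + x2) / denom * P - SIGMA
    g2 = x2 / denom * P - SIGMA
    g3 = 1.0 / (r2 * x2 + x1) * P - SIGMA
    return g1, g2, g3

# Commonly reported near-optimal point (assumed, rounded to six decimals).
x1, x2 = 0.788675, 0.408248
print(truss_volume(x1, x2))
print(truss_constraints(x1, x2))  # g1 is active up to rounding; g2 and g3 are slack
```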

FSGWO was applied to solve the optimization problem of Equation (20). The competitive algorithms included the memory-based grey wolf optimizer (m-GWO) [58], modified sine cosine algorithm (m-SCA) [59], moth-flame optimization (MFO) [60], and cuckoo search (CS) [57]. Table 14 shows the results of the related algorithms for the three-bar truss design problem. In Table 14, the best objective function value is highlighted with a bold font and gray background. The data for the competitive algorithms are taken from the original papers. From Table 14, the objective function value of FSGWO is 263.8958, which is better than those of the competitive algorithms.

Table 14.

Results of related algorithms for the three-bar truss design problem.

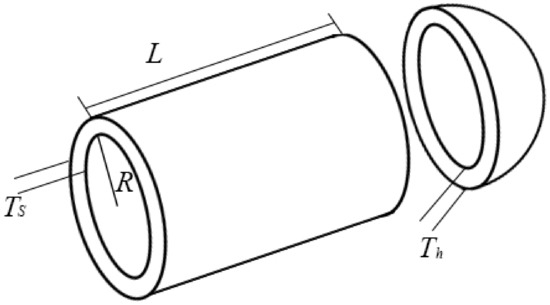

4.4. Design of Pressure Vessel

Figure 8 shows a cylindrical pressure vessel that has a hemispherical head at the end and is designed according to the ASME boiler and pressure vessel code [57]. This problem has four decision variables: the thickness of the shell (Ts), the thickness of the head (Th), the inner radius (R), and the length of the cylindrical section without the head (L). The goal of this problem is to minimize the cost of producing the vessel while satisfying the relevant constraints.

Figure 8.

Pressure vessel design.

Four decision variables of the pressure vessel are represented by x1, x2, x3, and x4. The problem shown in Figure 8 can be expressed as an optimization problem:

where x1 and x2 lie in [0, 99] and x3 and x4 lie in [10, 200].
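For illustration, the sketch below evaluates the pressure vessel problem in the formulation that is standard in the benchmark literature (cost of material, forming, and welding under four constraints). The coefficients and both evaluation points are assumptions taken from that standard formulation; they are not transcribed from Equation (21) or Table 15.

```python
import math

def vessel_cost(x1, x2, x3, x4):
    """Objective: total cost for shell thickness x1, head thickness x2, radius x3, length x4."""
    return (0.6224 * x1 * x3 * x4 + 1.7781 * x2 * x3 ** 2
            + 3.1661 * x1 ** 2 * x4 + 19.84 * x1 ** 2 * x3)

def vessel_constraints(x1, x2, x3, x4):
    """Return (g1, g2, g3, g4); a design is feasible when every g <= 0."""
    g1 = -x1 + 0.0193 * x3
    g2 = -x2 + 0.00954 * x3
    g3 = -math.pi * x3 ** 2 * x4 - 4.0 / 3.0 * math.pi * x3 ** 3 + 1296000.0
    g4 = x4 - 240.0
    return g1, g2, g3, g4

# A clearly feasible (hypothetical) design and a commonly reported near-optimal point.
feasible = (0.8125, 0.4375, 42.0, 180.0)
near_optimal = (0.7781686, 0.3846492, 40.3196187, 200.0)
print(vessel_cost(*feasible), vessel_constraints(*feasible))
print(vessel_cost(*near_optimal))
```

At the near-optimal point the first three constraints are essentially active, so their signs depend on the rounding of the coordinates.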

FSGWO was applied to solve the optimization problem of Equation (21). The competitive algorithms included the grey wolf optimization method based on a beetle antenna strategy (BGWO) [61], the improved grey wolf optimizer (I-GWO) [62], moth-flame optimization with orthogonal learning and Broyden-Fletcher-Goldfarb-Shanno (BFGSOLMFO) [63], and the slime mould algorithm (SMA) [64]. Table 15 shows the results of the related algorithms for the pressure vessel problem. In Table 15, the best objective function value is highlighted with a bold font and gray background. The data for the competitive algorithms are taken from the original papers. From Table 15, the objective function value of FSGWO is 5885.3328, which is better than those of the competitive algorithms.

Table 15.

Results of related algorithms for the pressure vessel design problem.

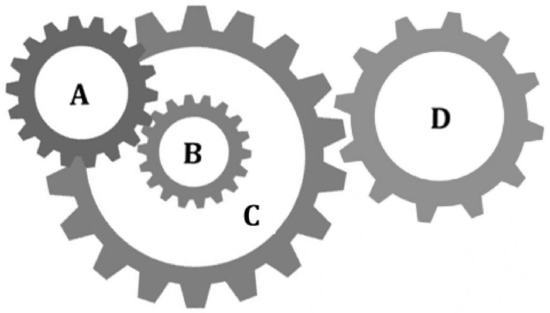

4.5. Design of Gear Train

Figure 9 shows a gear train design problem, in which there are four gears A, B, C, and D [59]. The numbers of teeth of the four gears are represented by the variables x1, x2, x3, and x4. The number of teeth of each gear is an integer in [12, 60]. The goal of this problem is to make the gear ratio as close as possible to the required value of 1/6.931.

Figure 9.

Gear train design.

The problem of Figure 9 can be expressed as an optimization problem:

FSGWO was applied to solve the optimization problem of Equation (22). The competitive algorithms included m-SCA [59], CS [57], the linear prediction evolution algorithm (LPE) [65], and the hybrid grey wolf optimizer and sine cosine algorithm (GWOSCA) [66]. Table 16 shows the results of the related algorithms for the gear train design problem. In Table 16, the best objective function values are highlighted with a bold font and gray background. The data for the competitive algorithms are taken from the original papers. From Table 16, the objective function value of FSGWO is 2.7009e−12, which is as good as those of m-SCA, CS, and LPE. Moreover, Table 16 shows that gear train design is a typical multimodal optimization problem. For the objective function value of 2.7009e−12, there are three different nearly optimal solutions that correspond to different gear train designs. According to the cost, volume, weight, and reliability of the gear train, decision makers can choose the design that meets their requirements from among these solutions.
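The multimodality of this problem can be seen in a few lines of code. The sketch assumes the usual benchmark objective, the squared deviation of the ratio x3·x2/(x1·x4) from 1/6.931; the assignment of variables to gears and the listed tooth counts are illustrative assumptions, not the rows of Table 16.

```python
def gear_error(x1, x2, x3, x4):
    """Squared deviation of the gear ratio x3*x2/(x1*x4) from the target 1/6.931."""
    return (1.0 / 6.931 - (x3 * x2) / (x1 * x4)) ** 2

# Swapping the two driving gears or the two driven gears leaves the ratio unchanged,
# so several distinct designs share the same objective value.
designs = [(43, 16, 19, 49), (43, 19, 16, 49), (49, 16, 19, 43), (49, 19, 16, 43)]
values = [gear_error(*d) for d in designs]
for d, v in zip(designs, values):
    print(d, f"{v:.4e}")
```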

Table 16.

Results of related algorithms for the gear train design problem.

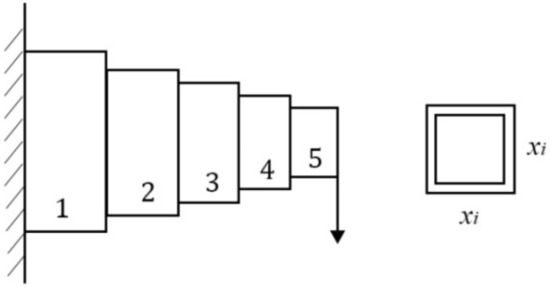

4.6. Design of Cantilever Beam

Figure 10 shows a cantilever beam design problem in which there are five nodes [59]. A node in Figure 10 is regarded as a square hollow cross-section with constant thickness. The first node is fixedly supported, and an external vertical force acts at the end of the fifth node. The variable xi represents the width of the cross-section of the ith node, and its value lies in [0.01, 100]. The goal of this problem is to minimize the weight of the cantilever beam.

Figure 10.

Cantilever beam design.

The problem of Figure 10 can be expressed as an optimization problem:

FSGWO was applied to solve the optimization problem of Equation (23). The competitive algorithms included CS [57], BGWO [61], m-SCA [59], and MFO [60]. Table 17 shows the results of the related algorithms for the cantilever beam design problem. In Table 17, the best objective function values are highlighted with a bold font and gray background. The data for the competitive algorithms are taken from the original papers. From Table 17, the objective function value of FSGWO is 1.33996, which is as good as that of BGWO.
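A short sketch of the cantilever beam problem is given below. It assumes the standard benchmark formulation, in which the weight 0.0624·(x1 + … + x5) is minimized subject to 61/x1³ + 37/x2³ + 19/x3³ + 7/x4³ + 1/x5³ ≤ 1. The formulation and the evaluation point are assumptions based on the common literature, not a transcription of Equation (23) or Table 17.

```python
def beam_weight(x):
    """Objective: weight of the five-segment cantilever beam."""
    return 0.0624 * sum(x)

def beam_constraint(x):
    """Deflection constraint g(x); a design is feasible when g(x) <= 0."""
    coeffs = (61.0, 37.0, 19.0, 7.0, 1.0)
    return sum(c / xi ** 3 for c, xi in zip(coeffs, x)) - 1.0

# Commonly reported near-optimal widths (assumed, rounded to four decimals).
x = (6.0160, 5.3092, 4.4943, 3.5015, 2.1527)
print(beam_weight(x))      # close to the value 1.33996 quoted in the text
print(beam_constraint(x))  # close to zero: the constraint is active at the optimum
```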

Table 17.

Results of related algorithms for the cantilever beam design problem.

5. Discussion

The convergence curves of Figure 3 and Figure 4 show that the fitness values of the FSGWO algorithm decreased faster than those of the competitive algorithms in the early stage of iterations. This advantage is related to the improvement of FSGWO in population updates. Step 4.2.4 in Algorithm 2 utilizes the noninferior selection strategy for population updating, which allows only better new individuals to be updated into the population. As the entire grey wolf population can be used to estimate the position of the optimal solution, the probability of detecting the region where the optimal solution is located is also increased, and FSGWO has a faster convergence speed in the early stage of iterations. In contrast, the traditional GWO uses only three leading wolves to estimate the region of the optimal solution, and the probability of finding the optimal solution is relatively low; thus, the convergence curve slowly decreases.
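The population update described above can be sketched as a greedy one-to-one replacement. The sketch assumes minimization and assumes that each new individual competes with the individual it was generated from; the function names and the sphere test function are hypothetical and do not reproduce Algorithm 2.

```python
def noninferior_selection(population, candidates, fitness):
    """Keep a new individual only if it is better than the one it would replace."""
    return [c if fitness(c) < fitness(p) else p for p, c in zip(population, candidates)]

def sphere(x):
    """Simple test function with its minimum at the origin."""
    return sum(v * v for v in x)

population = [[1.0, 1.0], [0.5, -0.5], [2.0, 0.0]]
candidates = [[0.5, 0.5], [1.0, -1.0], [1.0, 0.0]]
updated = noninferior_selection(population, candidates, sphere)
print(updated)  # [[0.5, 0.5], [0.5, -0.5], [1.0, 0.0]]
```

Because no individual ever gets worse, every member of the population carries useful information about where good solutions lie, which is the property the discussion above relies on.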

The results in Tables 2–17 and Figure 2 show that the FSGWO algorithm can obtain higher-quality solutions, which indicates that the proposed algorithm has strong optimization ability and stability. These advantages of FSGWO come from the following improvements. (i) The fuzzy direction Dc incorporates both global and local search information, which enhances the ability of FSGWO to approximate the optimal solution, thereby producing a high-quality solution. (ii) The new individual Xu generated by the fuzzy crossover operator does not mutate in all dimensions, which effectively controls the divergence of the algorithm and improves the stability and robustness of FSGWO. (iii) The bivariate joint normal distribution and fuzzy perturbation can adaptively adjust the control parameters and rb of FSGWO, which not only reduces the blindness of the selection of control parameters but also helps improve the local search ability and stability of FSGWO.

Other evolutionary algorithms also have control parameters, and the proposed modeling idea is also suitable for them. For example, suppose that an evolutionary algorithm has four control parameters, denoted as r1, r2, r3, and r4. The internal relation of these four control parameters can be modeled by a quaternary joint normal distribution N(μ, ∑), and μ is written as

where μ1, μ2, μ3, and μ4 are scalars in (0, 1), and their initial values are 0.5. The covariance matrix ∑ can be expressed as

where s1 is a random number following the standard uniform distribution. The four terms s2–s5 are random numbers following the standard normal distribution. The initial values of s1–s5 are all 0.1. A set of control parameters (r1, r2, r3, r4) can be obtained by sampling the quaternary joint normal distribution N(μ, ∑). In the iterative process, μ and ∑ are updated in the same way as in this study.
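Sampling a set of four correlated control parameters can be sketched with a Cholesky factorization. The mean of 0.5 follows the text; the covariance matrix below is a hypothetical positive definite example and does not reproduce the structure of ∑ defined above.

```python
import math
import random

def cholesky(a):
    """Lower-triangular factor of a symmetric positive definite matrix."""
    n = len(a)
    low = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = sum(low[i][k] * low[j][k] for k in range(j))
            if i == j:
                low[i][j] = math.sqrt(a[i][i] - s)
            else:
                low[i][j] = (a[i][j] - s) / low[j][j]
    return low

def sample_mvn(mu, cov, rng):
    """Draw one sample from N(mu, cov) as mu + L z, with z standard normal."""
    low = cholesky(cov)
    z = [rng.gauss(0.0, 1.0) for _ in mu]
    return [m + sum(low[i][k] * z[k] for k in range(i + 1)) for i, m in enumerate(mu)]

mu = [0.5, 0.5, 0.5, 0.5]  # initial means as stated in the text
# Hypothetical covariance: variance 0.01 and covariance 0.005 between all pairs.
cov = [[0.01 if i == j else 0.005 for j in range(4)] for i in range(4)]
rng = random.Random(0)
r1, r2, r3, r4 = sample_mvn(mu, cov, rng)
print(r1, r2, r3, r4)
```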

In summary, the experimental results show that the improvements of FSGWO in balancing diversity and convergence are feasible and effective. The proposed algorithm can produce high-quality solutions when used to solve high-dimensional complex MMOPs and has good convergence and stability.

6. Conclusions

To address the issue that the traditional GWO converges slowly and yields low-quality solutions on high-dimensional MMOPs, a fuzzy strategy grey wolf optimizer (FSGWO) is proposed in this paper, the key improvements of which are as follows. (i) A new individual mutation strategy is proposed, which utilizes both global and local search information in the fuzzy search direction of mutation and enhances the ability of grey wolf individuals to find the optimal solutions. (ii) A fuzzy crossover operator is used to prevent new individuals from mutating in all dimensions, which effectively improves the local search ability of FSGWO and the quality of solutions. (iii) The noninferior selection strategy is applied to update the population, and only better new individuals are allowed to enter the population. Therefore, the entire grey wolf population can be used to estimate the region where the optimal solution is located, which speeds up the convergence of FSGWO. (iv) The two control parameters of FSGWO are modeled by a bivariate joint normal distribution whose parameters are adaptively updated by a fuzzy perturbation, which effectively reduces the blindness of control parameter selection and improves the stability of the proposed algorithm. Finally, FSGWO is verified on 30 complex test functions of IEEE CEC2014 and 5 engineering application problems; the results show that the convergence of the proposed algorithm and the quality of its solutions are better than those of the competitive algorithms, which means that the improvements of FSGWO are feasible and effective.

Recent studies have shown that multiple populations have advantages over a single population in maintaining diversity and convergence [67,68]. In future work, we are interested in novel topics on GWOs with multiple populations, such as methods for exchanging optimal solution information between different populations and the design of individual search directions in multiple populations. In addition, state-of-the-art evolutionary algorithms, such as self-adaptive quasi-oppositional stochastic fractal search [69] and combined social engineering particle swarm optimization [70], have many creative update strategies for populations and can serve as references for the future improvement of FSGWO.

Author Contributions

Conceptualization, H.Q.; methodology, H.Q.; software, T.M.; validation, T.M.; resources, Y.C.; data curation, Y.C.; writing—original draft preparation, T.M.; writing—review and editing, H.Q. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China (ID: 61762009).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Acknowledgments

We are grateful to the anonymous reviewers for their valuable suggestions and comments.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Nekouie, N.; Yaghoobi, M. A new method in multimodal optimization based on firefly algorithm. Artif. Intell. Rev. 2016, 46, 267–287. [Google Scholar] [CrossRef]

- De Oliveira, R.P.; Lohmann, G.; Oliveira, A.V.M. A systematic review of the literature on air transport networks (1973–2021). J. Air Transp. Manag. 2022, 103, 102248. [Google Scholar] [CrossRef]

- Chen, D.C.; Li, Y.Y. A development on multimodal optimization technique and its application in structural damage detection. Appl. Soft. Comput. 2020, 91, 106264. [Google Scholar] [CrossRef]

- Farshi, T.R.; Drake, J.H.; Ozcan, E. A multimodal particle swarm optimization-based approach for image segmentation. Expert Syst. Appl. 2020, 149, 113233. [Google Scholar] [CrossRef]

- Bian, Q.; Nener, B.; Wang, X.M. A quantum inspired genetic algorithm for multimodal optimization of wind disturbance alleviation flight control system. Chin. J. Aeronaut. 2019, 32, 2480–2488. [Google Scholar] [CrossRef]

- Perez, E.; Posada, M.; Herrera, F. Analysis of new niching genetic algorithms for finding multiple solutions in the job shop scheduling. J. Intell. Manuf. 2012, 23, 341–356. [Google Scholar] [CrossRef]

- Mashayekhi, M.; Yousefi, R. Topology and size optimization of truss structures using an improved crow search algorithm. Struct. Eng. Mech. 2021, 77, 779–795. [Google Scholar]

- Nazmul, R.; Chetty, M.; Chowdhury, A.R. Multimodal Memetic framework for low-resolution protein structure prediction. Swarm Evol. Comput. 2020, 52, 100608. [Google Scholar] [CrossRef]

- Fahad, S.; Yang, S.Y.; Khan, R.A.; Khan, S.; Khan, S.A. A multimodal smart quantum particle swarm optimization for electromagnetic design optimization problems. Energies 2021, 14, 4613. [Google Scholar] [CrossRef]

- Tutkun, N. Optimization of multimodal continuous functions using a new crossover for the real-coded genetic algorithms. Expert Syst. Appl. 2009, 36, 8172–8177. [Google Scholar] [CrossRef]

- Rajabi, A.; Witt, C. Self-Adjusting evolutionary algorithms for multimodal optimization. Algorithmica 2022, 84, 1694–1723. [Google Scholar] [CrossRef]

- Seo, J.H.; Im, C.H.; Heo, C.G.; Kim, J.K.; Jung, H.K.; Lee, C.G. Multimodal function optimization based on particle swarm optimization. IEEE Trans. Magn. 2006, 42, 1095–1098. [Google Scholar] [CrossRef]

- Yang, Q.; Chen, W.N.; Yu, Z.T.; Gu, T.L.; Li, Y.; Zhang, H.X.; Zhang, J. Adaptive multimodal continuous ant colony optimization. IEEE Trans. Evol. Comput. 2017, 21, 191–205. [Google Scholar] [CrossRef]

- Cuevas, E.; Reyna-Orta, A. A Cuckoo search algorithm for multimodal optimization. Sci. World J. 2014, 1, 497514. [Google Scholar] [CrossRef] [PubMed]

- Nguyen, P.T.H.; Sudholt, D. Memetic algorithms outperform evolutionary algorithms in multimodal optimisation. Artif. Intell. 2020, 287, 103345. [Google Scholar] [CrossRef]

- Rim, C.; Piao, S.; Li, G.; Pak, U. A niching chaos optimization algorithm for multimodal optimization. Soft Comput. 2018, 22, 621–633. [Google Scholar] [CrossRef]

- Arora, A.; Miri, R. Cryptography and tay-grey wolf optimization based multimodal biometrics for effective security. Multimed. Tools Appl. 2022, 5, in press. [Google Scholar] [CrossRef]

- Kumar, V.; Chhabra, J.K.; Kumar, D. Variance-Based harmony search algorithm for unimodal and multimodal optimization problems with application to clustering. Cybern. Syst. 2014, 45, 486–511. [Google Scholar] [CrossRef]

- Li, J.Z.; Tan, Y. Loser-Out tournament-based fireworks algorithm for multimodal function optimization. IEEE Trans. Evol. Comput. 2018, 22, 679–691. [Google Scholar] [CrossRef]

- Bala, I.; Yadav, A. Comprehensive learning gravitational search algorithm for global optimization of multimodal functions. Neural Comput. Appl. 2020, 32, 7347–7382. [Google Scholar] [CrossRef]

- Mirjalili, S.; Mirjalili, S.M.; Lewis, A. Grey wolf optimizer. Adv. Eng. Software 2014, 69, 46–61. [Google Scholar] [CrossRef] [Green Version]

- Ahmed, R.; Nazir, A.; Mahadzir, S.; Shorfuzzaman, M.; Islam, J. Niching grey wolf optimizer for multimodal optimization problems. Appl. Sci. 2021, 11, 4975. [Google Scholar] [CrossRef]

- Rajakumar, R.; Sekaran, K.; Hsu, C.H.; Kadry, S. Accelerated grey wolf optimization for global optimization problems. Technol. Forecast. Soc. Chang. 2021, 169, 120824. [Google Scholar] [CrossRef]

- Yu, X.B.; Xu, W.Y.; Li, C.L. Opposition-based learning grey wolf optimizer for global optimization. Knowl.-Based Syst. 2021, 226, 107139. [Google Scholar] [CrossRef]

- Rodriguez, A.; Camarena, O.; Cuevas, E.; Aranguren, I.; Valdivia-G, A.; Morales-Castaneda, B.; Zaldivar, D.; Perez-Cisneros, M. Group-based synchronous-asynchronous grey wolf optimizer. Appl. Math. Modell. 2021, 93, 226–243. [Google Scholar] [CrossRef]

- Deshmukh, N.; Vaze, R.; Kumar, R.; Saxena, A. Quantum entanglement inspired grey wolf optimization algorithm and its application. Evol. Intell. 2022, in press. [CrossRef]

- Hu, J.; Chen, H.L.; Heidari, A.A.; Wang, M.J.; Zhang, X.Q.; Chen, Y.; Pan, Z.F. Orthogonal learning covariance matrix for defects of grey wolf optimizer: Insights, balance, diversity, and feature selection. Knowl.-Based Syst. 2021, 213, 106684. [Google Scholar] [CrossRef]

- Mittal, N.; Singh, U.; Sohi, B.S. Modified grey wolf optimizer for global engineering optimization. Appl. Comput. Intell. Soft Comput. 2016, 2016, 7950348. [Google Scholar] [CrossRef]

- Saxena, A.; Kumar, R.; Mirjalili, S. A harmonic estimator design with evolutionary operators equipped grey wolf optimizer. Expert Syst. Appl. 2020, 145, 113125. [Google Scholar] [CrossRef]

- Hu, P.; Chen, S.Y.; Huang, H.X.; Zhang, G.Y.; Liu, L. Improved alpha-guided grey wolf optimizer. IEEE Access 2019, 7, 5421–5437. [Google Scholar] [CrossRef]

- Saxena, A.; Kumar, R.; Das, S. Beta-Chaotic map enabled grey wolf optimizer. Appl. Soft Comput. 2019, 75, 84–105. [Google Scholar] [CrossRef]

- Long, W.; Jiao, J.J.; Liang, X.M.; Cai, S.H.; Xu, M. A random opposition-based learning grey wolf optimizer. IEEE Access 2019, 7, 113810–113825. [Google Scholar] [CrossRef]

- Heidari, A.A.; Pahlavani, P. An efficient modified grey wolf optimizer with lévy flight for optimization tasks. Appl. Soft Comput. 2017, 60, 115–134. [Google Scholar] [CrossRef]

- Gupta, S.; Deep, K. Enhanced leadership-inspired grey wolf optimizer for global optimization problems. Eng. Comput. 2020, 36, 1777–1800. [Google Scholar] [CrossRef]

- Gupta, S.; Deep, K. Cauchy grey wolf optimiser for continuous optimisation problems. J. Exp. Theor. Artif. Intell. 2018, 30, 1051–1075. [Google Scholar] [CrossRef]

- Dhargupta, S.; Ghosh, M.; Mirjalili, S.; Sarkar, R. Selective opposition based grey wolf optimization. Expert Syst. Appl. 2020, 151, 113389. [Google Scholar] [CrossRef]

- Long, W.; Wu, T.B.; Cai, S.H.; Liang, X.M.; Jiao, J.J.; Xu, M. A novel grey wolf optimizer algorithm with refraction learning. IEEE Access 2019, 7, 57805–57819. [Google Scholar] [CrossRef]

- Ibrahim, R.A.; Abd Elaziz, M.; Lu, S.F. Chaotic opposition-based grey-wolf optimization algorithm based on differential evolution and disruption operator for global optimization. Expert Syst. Appl. 2018, 108, 1–27. [Google Scholar] [CrossRef]

- Mohammed, H.; Rashid, T. A novel hybrid GWO with WOA for global numerical optimization and solving pressure vessel design. Neural Comput. Appl. 2020, 32, 14701–14718. [Google Scholar] [CrossRef]

- Zhao, Y.T.; Li, W.G.; Liu, A. Improved grey wolf optimization based on the two-stage search of hybrid CMA-ES. Soft Comput. 2020, 24, 1097–1115. [Google Scholar] [CrossRef]

- Makhadmeh, S.N.; Khader, A.T.; Al-Betar, M.A.; Naim, S.; Abasi, A.K.; Alyasseri, Z.A.A. A novel hybrid grey wolf optimizer with min-conflict algorithm for power scheduling problem in a smart home. Swarm Evol. Comput. 2021, 60, 100793. [Google Scholar] [CrossRef]

- Purushothaman, R.; Rajagopalan, S.P.; Dhandapani, G. Hybridizing gray wolf optimization (GWO) with grasshopper optimization algorithm (GOA) for text feature selection and clustering. Appl. Soft Comput. 2020, 96, 106651. [Google Scholar] [CrossRef]

- Wang, Z.W.; Qin, C.; Wan, B.T.; Song, W.W.; Yang, G.Q. An adaptive fuzzy chicken swarm optimization algorithm. Math. Probl. Eng. 2021, 2021, 8896794. [Google Scholar] [CrossRef]

- Brindha, S.; Amali, S.M.J. A robust and adaptive fuzzy logic based differential evolution algorithm using population diversity tuning for multi-objective optimization. Eng. Appl. Artif. Intell. 2021, 102, 104240. [Google Scholar]

- Ferrari, A.C.K.; da Silva, C.A.G.; Osinski, C.; Pelacini, D.A.F.; Leandro, G.V.; Coelho, L.D. Tuning of control parameters of the whale optimization algorithm using fuzzy inference system. J. Intell. Fuzzy Syst. 2022, 42, 3051–3066. [Google Scholar] [CrossRef]

- Liang, J.; Suganthan, P.N. Problem definitions and evaluation criteria for the CEC 2014 special session and competition on single objective real-parameter numerical optimization. In Technical Report 201311; Computational Intelligence Laboratory, Zhengzhou University: Zhengzhou, China, 2013. [Google Scholar]

- Dahmani, S.; Yebdri, D. Hybrid algorithm of particle swarm optimization and grey wolf optimizer for reservoir operation management. Water Resour. Manag. 2020, 34, 4545–4560. [Google Scholar] [CrossRef]

- Faramarzi, A.; Heidarinejad, M.; Stephens, B.; Mirjalili, S. Equilibrium optimizer: A novel optimization algorithm. Knowl.-Based Syst. 2020, 191, 105190. [Google Scholar] [CrossRef]

- Liu, H.; Zhang, X.; Tu, L. A modified particle swarm optimization using adaptive strategy. Expert Syst. Appl. 2020, 152, 113353. [Google Scholar] [CrossRef]

- Sinha, N.; Chakrabarti, R.; Chattopadhyay, P.K. Evolutionary programming techniques for economic load dispatch. IEEE Trans. Evol. Comput. 2003, 7, 83–94.

- Das, S.; Suganthan, P.N. Problem Definitions and Evaluation Criteria for CEC 2011 Competition on Testing Evolutionary Algorithms on Real World Optimization Problems; Technical Report; Jadavpur University: Kolkata, India, 2011.

- Al-Betar, M.A. Island-based harmony search algorithm for non-convex economic load dispatch problems. J. Electr. Eng. Technol. 2021, 16, 1985–2015.

- Omran, M.G.H.; Alsharhan, S.; Clerc, M. A modified intellects-masses optimizer for solving real-world optimization problems. Swarm Evol. Comput. 2018, 41, 159–166.

- Omran, M.G.H.; Clerc, M. APS 9: An improved adaptive population-based simplex method for real-world engineering optimization problems. Appl. Intell. 2018, 48, 1596–1608.

- Zhang, H.; Cai, Z.; Ye, X.; Wang, M.; Kuang, F.; Chen, H.; Li, C.; Li, Y. A multi-strategy enhanced salp swarm algorithm for global optimization. Eng. Comput. 2022, 38, 1177–1203.

- Elsayed, S.M.; Sarker, R.A.; Essam, D.L. GA with a new multi-parent crossover for solving IEEE-CEC2011 competition problems. In Proceedings of the 2011 IEEE Congress of Evolutionary Computation (CEC), New Orleans, LA, USA, 5–8 June 2011; pp. 1034–1040.

- Gandomi, A.H.; Yang, X.S.; Alavi, A.H. Cuckoo search algorithm: A metaheuristic approach to solve structural optimization problems. Eng. Comput. 2013, 29, 17–35.

- Gupta, S.; Deep, K. A memory-based grey wolf optimizer for global optimization tasks. Appl. Soft Comput. 2020, 93, 106367.

- Gupta, S.; Deep, K. A hybrid self-adaptive sine cosine algorithm with opposition based learning. Expert Syst. Appl. 2019, 119, 210–230.

- Mirjalili, S. Moth-flame optimization algorithm: A novel nature-inspired heuristic paradigm. Knowl.-Based Syst. 2015, 89, 228–249.

- Fan, Q.S.; Huang, H.S.; Li, Y.T.; Han, Z.G.; Hu, Y.; Huang, D. Beetle antenna strategy based grey wolf optimization. Expert Syst. Appl. 2021, 165, 113882.

- Nadimi-Shahraki, M.H.; Taghian, S.; Mirjalili, S. An improved grey wolf optimizer for solving engineering problems. Expert Syst. Appl. 2021, 166, 113917.

- Zhang, H.L.; Li, R.; Cai, Z.N.; Gu, Z.Y.; Heidari, A.A.; Wang, M.J.; Chen, H.L.; Chen, M.Y. Advanced orthogonal moth flame optimization with Broyden-Fletcher-Goldfarb-Shanno algorithm: Framework and real-world problems. Expert Syst. Appl. 2020, 159, 113617.

- Li, S.M.; Chen, H.L.; Wang, M.J.; Heidari, A.A.; Mirjalili, S. Slime mould algorithm: A new method for stochastic optimization. Future Gener. Comp. Syst. 2020, 111, 300–323.

- Gao, C.; Hu, Z.B.; Tong, W.Y. Linear prediction evolution algorithm: A simplest evolutionary optimizer. Memet. Comput. 2021, 13, 319–339.

- Singh, N.; Singh, S.B. A novel hybrid GWO-SCA approach for optimization problems. Eng. Sci. Technol. 2017, 20, 1586–1601.

- Tian, Y.; Liu, R.C.; Zhang, X.Y.; Ma, H.P.; Tan, K.C.; Jin, Y.C. A multipopulation evolutionary algorithm for solving large-scale multimodal multiobjective optimization problems. IEEE Trans. Evol. Comput. 2021, 25, 405–418.

- Ma, H.P.; Fei, M.R.; Jiang, Z.H.; Li, L.; Zhou, H.Y.; Crookes, D. A multipopulation-based multiobjective evolutionary algorithm. IEEE Trans. Cybern. 2020, 50, 689–702.

- Alkayem, N.F.; Shen, L.; Asteris, P.G.; Sokol, M.; Xin, Z.Q.; Cao, M.S. A new self-adaptive quasi-oppositional stochastic fractal search for the inverse problem of structural damage assessment. Alex. Eng. J. 2022, 61, 1922–1936.

- Alkayem, N.F.; Cao, M.S.; Shen, L.; Fu, R.H.; Sumarac, D. The combined social engineering particle swarm optimization for real-world engineering problems: A case study of model-based structural health monitoring. Appl. Soft Comput. 2022, 123, 108919.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).