A Combined Approach to Infrared Small-Target Detection with the Alternating Direction Method of Multipliers and an Improved Top-Hat Transformation

Abstract

1. Introduction

2. Related Work

2.1. Robust Principal Component Analysis (RPCA)
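For reference, the standard convex RPCA program (principal component pursuit, as posed by Wright et al.) splits an observed matrix $\mathbf{D} \in \mathbb{R}^{m \times n}$ into a low-rank part $\mathbf{L}$ and a sparse part $\mathbf{S}$. In small-target detection the low-rank part is usually taken to model the background and the sparse part the target. The symbols and the weight $\lambda$ below are the conventional ones. They are assumed here and are not necessarily the paper's own notation or settings:

$$
\min_{\mathbf{L},\,\mathbf{S}} \; \|\mathbf{L}\|_{*} + \lambda \|\mathbf{S}\|_{1}
\quad \text{s.t.} \quad \mathbf{D} = \mathbf{L} + \mathbf{S},
\qquad \lambda = \frac{1}{\sqrt{\max(m, n)}}
$$

Here $\|\cdot\|_{*}$ is the nuclear norm (the sum of singular values), which is a convex surrogate for rank. $\|\cdot\|_{1}$ is the entry-wise $\ell_1$ norm, which is a convex surrogate for the number of non-zero entries.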

2.2. Alternating Direction Method of Multipliers (ADMM)
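Applied to the RPCA program $\min \|\mathbf{L}\|_{*} + \lambda\|\mathbf{S}\|_{1}$ s.t. $\mathbf{D} = \mathbf{L} + \mathbf{S}$, ADMM minimises the augmented Lagrangian over one block at a time and then takes a dual ascent step on the multiplier $\mathbf{Y}$. The textbook form is shown below, with an increasing penalty $\mu$ and growth factor $\rho > 1$ (the "inexact augmented Lagrange multiplier" variant). The notation is assumed, and the paper's exact schedule may differ:

$$
\mathcal{L}_{\mu}(\mathbf{L},\mathbf{S},\mathbf{Y}) = \|\mathbf{L}\|_{*} + \lambda\|\mathbf{S}\|_{1} + \langle \mathbf{Y}, \mathbf{D}-\mathbf{L}-\mathbf{S} \rangle + \frac{\mu}{2}\|\mathbf{D}-\mathbf{L}-\mathbf{S}\|_{F}^{2}
$$

$$
\begin{aligned}
\mathbf{L}_{k+1} &= \mathcal{D}_{1/\mu_k}\!\left(\mathbf{D} - \mathbf{S}_k + \mu_k^{-1}\mathbf{Y}_k\right) \\
\mathbf{S}_{k+1} &= \mathcal{S}_{\lambda/\mu_k}\!\left(\mathbf{D} - \mathbf{L}_{k+1} + \mu_k^{-1}\mathbf{Y}_k\right) \\
\mathbf{Y}_{k+1} &= \mathbf{Y}_k + \mu_k\left(\mathbf{D} - \mathbf{L}_{k+1} - \mathbf{S}_{k+1}\right) \\
\mu_{k+1} &= \min(\rho\,\mu_k,\ \mu_{\max})
\end{aligned}
$$

Here $\mathcal{S}_{\tau}(x) = \operatorname{sign}(x)\max(|x|-\tau, 0)$ is element-wise soft thresholding. $\mathcal{D}_{\tau}(\mathbf{X}) = \mathbf{U}\,\mathcal{S}_{\tau}(\boldsymbol{\Sigma})\,\mathbf{V}^{\top}$ is singular value thresholding of $\mathbf{X} = \mathbf{U}\boldsymbol{\Sigma}\mathbf{V}^{\top}$. Each of these is the closed-form minimiser of $\mathcal{L}_{\mu}$ over its own block.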

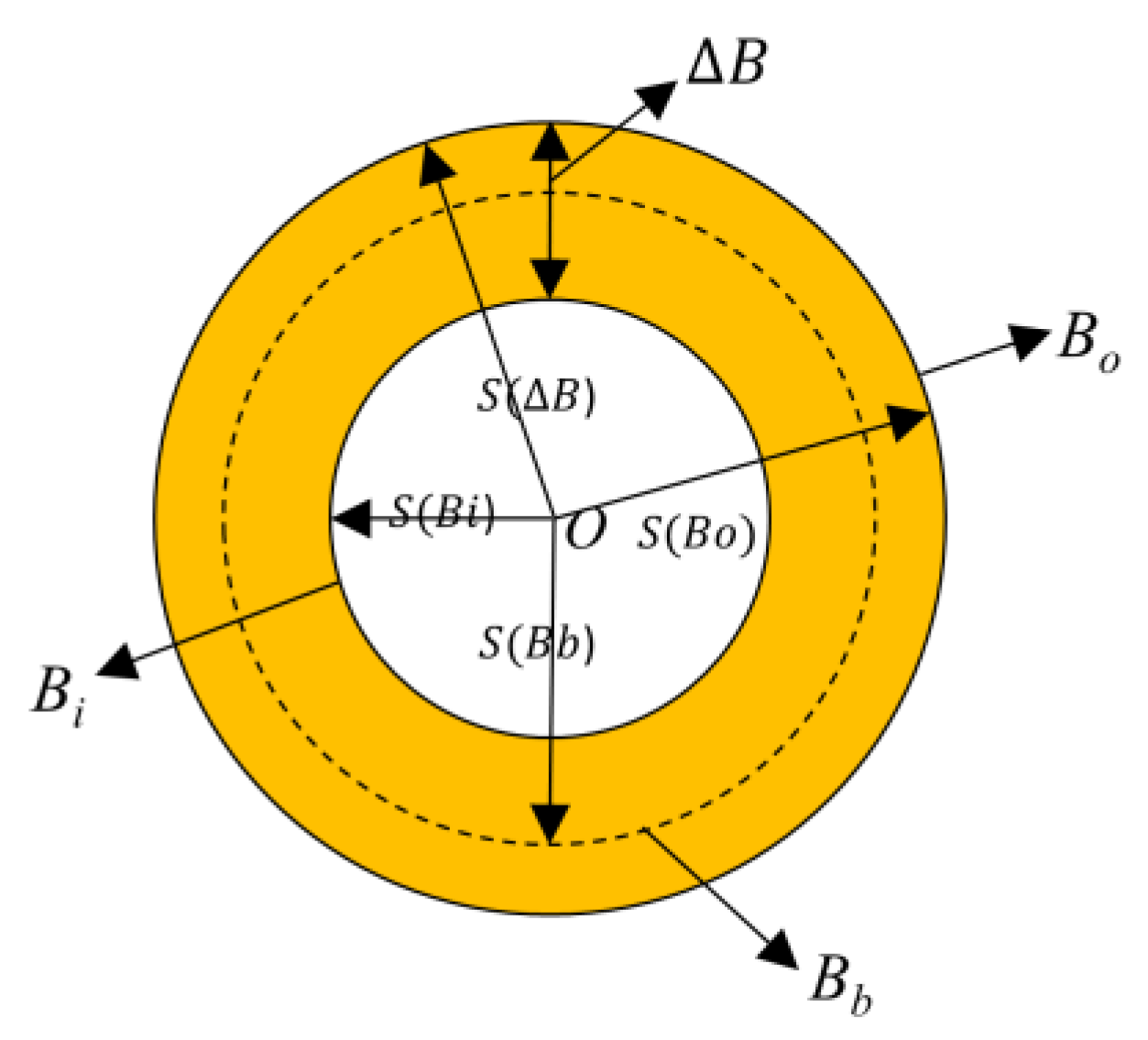

2.3. Top-Hat and NWTH
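The sketch below makes the two operators named in this subsection concrete, using only NumPy. It implements the classical white top-hat and the new white top-hat (NWTH). It assumes the NWTH definition of Bai and Zhou, NWTH(f) = f − min((f ⊕ ΔB) ⊖ B_b, f). Here ΔB = B_o − B_i is a ring between an outer and an inner structuring element, and B_b is a small element. The square shapes, the helper names and the default sizes are illustrative assumptions. This is not the improved structuring element of Section 3.3.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def grey_morph(img, footprint, reduce):
    """Flat grey-scale dilation (reduce=np.max) or erosion (reduce=np.min).

    `footprint` is a boolean array with odd side lengths. It is assumed to be
    symmetric, so no reflection is needed for dilation. Borders use edge
    replication.
    """
    fh, fw = footprint.shape
    padded = np.pad(img, ((fh // 2, fh // 2), (fw // 2, fw // 2)), mode="edge")
    windows = sliding_window_view(padded, (fh, fw))   # shape (H, W, fh, fw)
    return reduce(windows[..., footprint], axis=-1)   # shape (H, W)


def square(size):
    return np.ones((size, size), dtype=bool)


def ring(outer, inner):
    """Square ring dB: an outer x outer square with its inner x inner centre removed."""
    se = np.ones((outer, outer), dtype=bool)
    m = (outer - inner) // 2
    se[m:m + inner, m:m + inner] = False
    return se


def white_top_hat(img, size=5):
    """Classical white top-hat: f minus its opening by a size x size square."""
    f = np.asarray(img, dtype=float)
    opened = grey_morph(grey_morph(f, square(size), np.min), square(size), np.max)
    return f - opened


def nwth(img, outer=9, inner=5, b_size=3):
    """New white top-hat: f - min((f dilated by dB) eroded by B_b, f)."""
    f = np.asarray(img, dtype=float)
    dilated = grey_morph(f, ring(outer, inner), np.max)    # f (+) dB
    eroded = grey_morph(dilated, square(b_size), np.min)   # (f (+) dB) (-) B_b
    return f - np.minimum(eroded, f)
```

The ring ΔB excludes the pixels immediately around its centre. The dilation therefore estimates the local background from a surrounding annulus rather than from the target itself, and the outer min(·, f) keeps the output non-negative.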

3. The Proposed Method

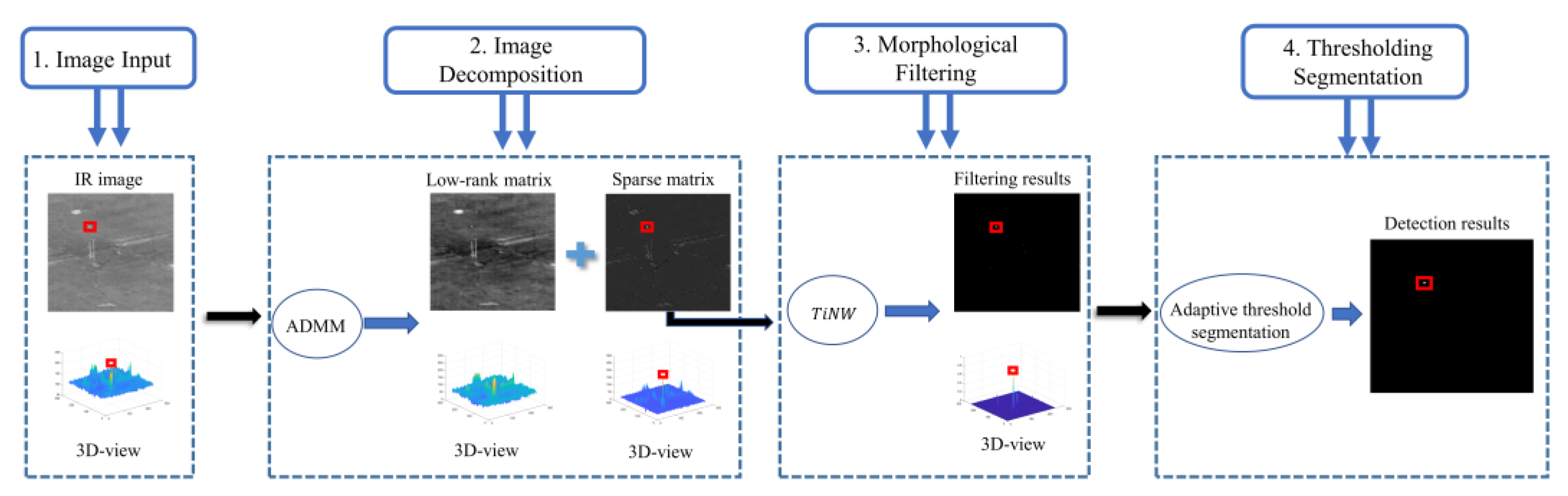

3.1. Overview

3.2. Image Decomposition—ADMM

| Algorithm 1 The pseudocode of ADMM. |
|---|
| Output: Sparse matrix and low-rank matrix |
| let; while (not converged) |
| ; |
| ; |
| ; |
| ; |
| ; ; end |
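The following is a runnable NumPy sketch of ADMM for the RPCA decomposition. It assumes the standard update order: singular value thresholding for the low-rank part, soft thresholding for the sparse part, then a dual ascent step, with the penalty μ growing by a factor ρ up to a cap. The names `rpca_admm`, `svt` and `soft` are hypothetical. The defaults (λ = 1/√max(m, n), μ₀ = 1.25/‖D‖₂, ρ = 1.5, tolerance 1e-7) are common illustrative choices and are not the authors' settings.

```python
import numpy as np


def svt(X, tau):
    """Singular value thresholding: proximal operator of tau * nuclear norm."""
    U, s, Vt = np.linalg.svd(X, full_matrices=False)
    return (U * np.maximum(s - tau, 0.0)) @ Vt


def soft(X, tau):
    """Element-wise soft thresholding: proximal operator of tau * l1 norm."""
    return np.sign(X) * np.maximum(np.abs(X) - tau, 0.0)


def rpca_admm(D, lam=None, rho=1.5, tol=1e-7, max_iter=500):
    """Split a non-zero matrix D into low-rank L and sparse S with D ~ L + S.

    Solves min ||L||_* + lam * ||S||_1 s.t. D = L + S by ADMM with an
    increasing penalty mu. Returns (L, S, number_of_iterations).
    """
    D = np.asarray(D, dtype=float)
    m, n = D.shape
    if lam is None:
        lam = 1.0 / np.sqrt(max(m, n))      # common default weight
    mu = 1.25 / np.linalg.norm(D, 2)        # initial penalty from spectral norm
    mu_max = mu * 1e7
    norm_D = np.linalg.norm(D, "fro")
    L = np.zeros_like(D)
    S = np.zeros_like(D)
    Y = np.zeros_like(D)                    # Lagrange multiplier
    for k in range(1, max_iter + 1):
        L = svt(D - S + Y / mu, 1.0 / mu)   # low-rank (background) step
        S = soft(D - L + Y / mu, lam / mu)  # sparse (target) step
        R = D - L - S                       # constraint residual
        Y = Y + mu * R                      # dual ascent
        mu = min(rho * mu, mu_max)
        if np.linalg.norm(R, "fro") <= tol * norm_D:
            break
    return L, S, k
```

In a detection setting, `D` would be the matrix being decomposed, for example a grey-level frame. Target candidates would then be read from `S` and the background from `L`. How the paper builds `D` from the image is not assumed here.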

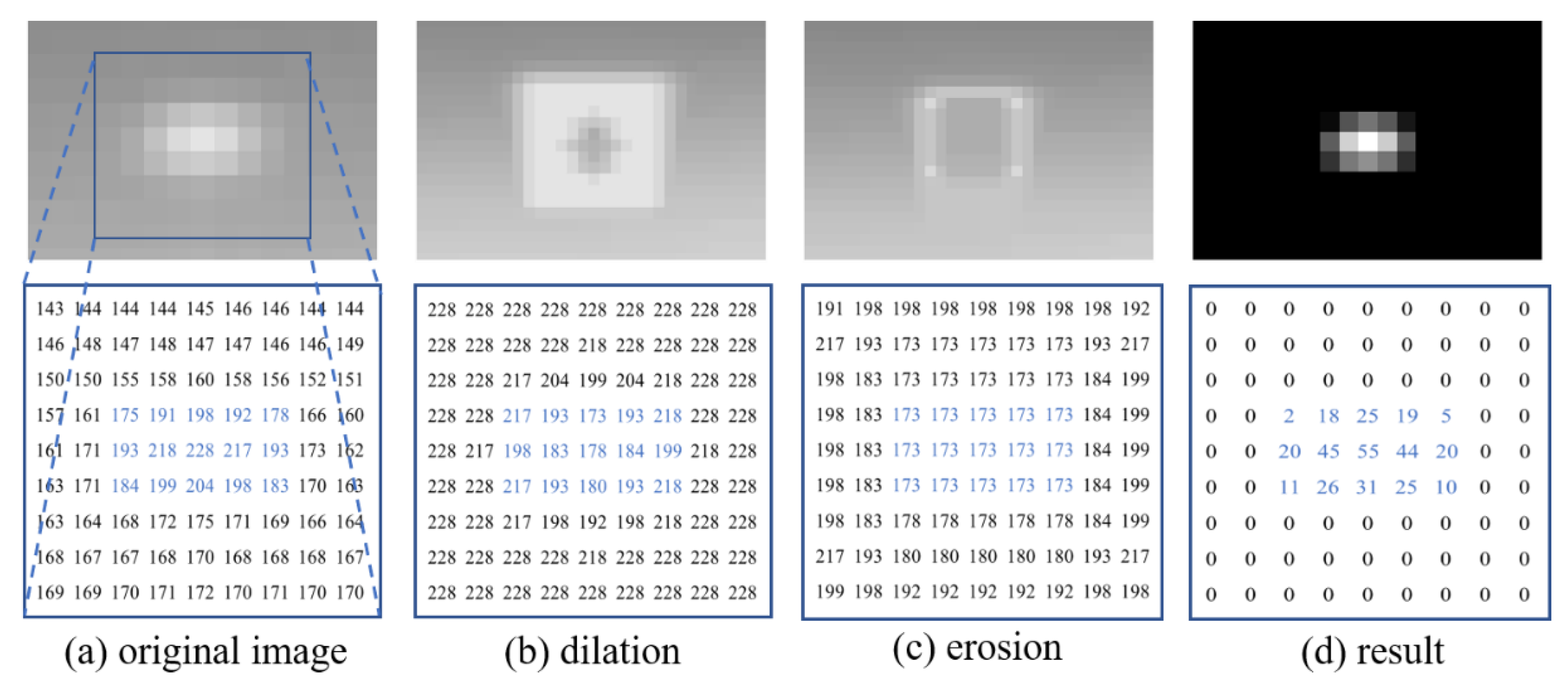

3.3. Morphological Filtering—An Improved NWTH Transformation

3.4. Adaptive Thresholding Segmentation
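A widely used adaptive threshold for small-target segmentation is T = μ + kσ. Here μ and σ are the mean and standard deviation of the filtered image, and pixels above T are kept as target. The sketch below assumes that rule with a hypothetical default k = 3. The paper's actual rule and constant may differ.

```python
import numpy as np


def adaptive_threshold(filtered, k=3.0):
    """Binarise a filtered image with T = mean + k * std.

    Returns the boolean target mask and the threshold T that was used.
    """
    filtered = np.asarray(filtered, dtype=float)
    t = filtered.mean() + k * filtered.std()
    return filtered > t, t
```

Because T adapts to the global statistics of each frame, a frame with stronger residual clutter gets a higher threshold automatically. The trade-off is that a very bright target inflates σ and therefore T as well.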

4. Experimental Results and Analysis

4.1. Experimental Setup

4.1.1. Hardware and Software

4.1.2. Datasets

4.1.3. Baseline Methods

4.1.4. Evaluation Metrics
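The sketch below covers two metrics that are widely used for single-frame small-target detection and that relate to the "Avg SCR" column of the dataset table: the signal-to-clutter ratio gain (SCRG) and the background suppression factor (BSF). It assumes the common definitions SCR = |μ_t − μ_b| / σ_b, SCRG = SCR_out / SCR_in and BSF = σ_in / σ_out. Here μ_t is the target mean, and μ_b and σ_b are the background mean and standard deviation. Whether these match the comparison tables exactly is an assumption. The choice of background region (here, any boolean mask) also varies between papers.

```python
import numpy as np


def scr(img, target_mask, background_mask, eps=1e-12):
    """Signal-to-clutter ratio |mu_t - mu_b| / sigma_b over the given masks."""
    mu_t = img[target_mask].mean()
    mu_b = img[background_mask].mean()
    sigma_b = img[background_mask].std()
    return abs(mu_t - mu_b) / (sigma_b + eps)   # eps guards a flat background


def scr_gain(img_in, img_out, target_mask, background_mask):
    """SCRG = SCR after processing / SCR before processing."""
    return (scr(img_out, target_mask, background_mask)
            / scr(img_in, target_mask, background_mask))


def bsf(img_in, img_out, background_mask, eps=1e-12):
    """BSF = background std before processing / background std after."""
    return img_in[background_mask].std() / (img_out[background_mask].std() + eps)
```

Both metrics are ratios, so a positive affine rescaling of the output (for example, doubling the image) leaves SCRG unchanged but halves BSF. This is one reason the two metrics are usually reported together.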

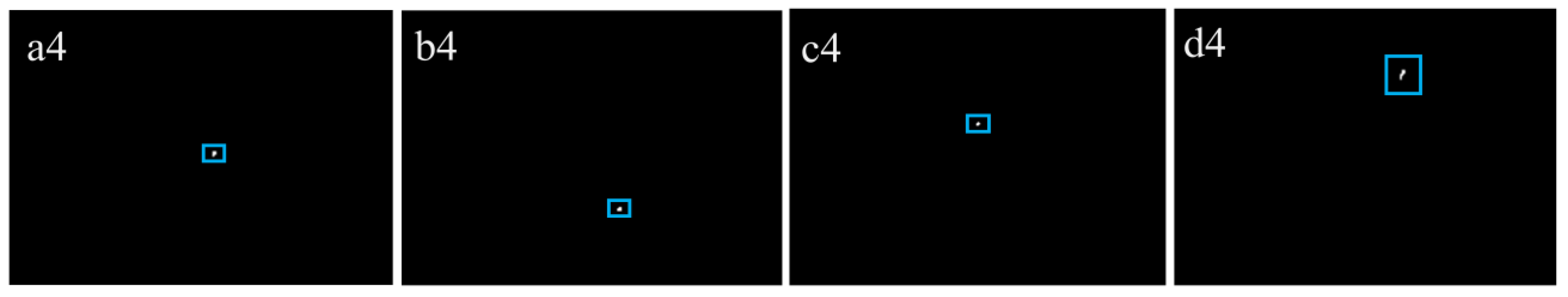

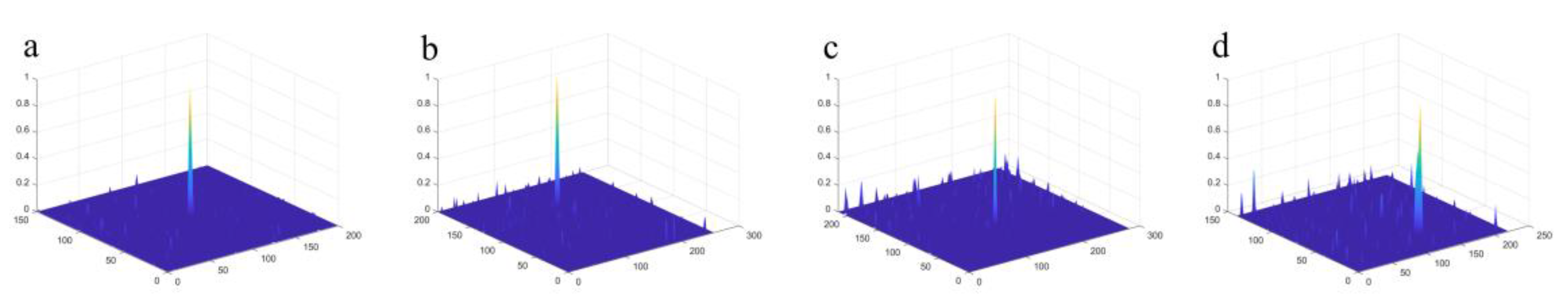

4.2. Experimental Results: Results at Each Stage in Four Typical Backgrounds

4.3. Experimental Results: Comparison to the State-of-the-Art Algorithms

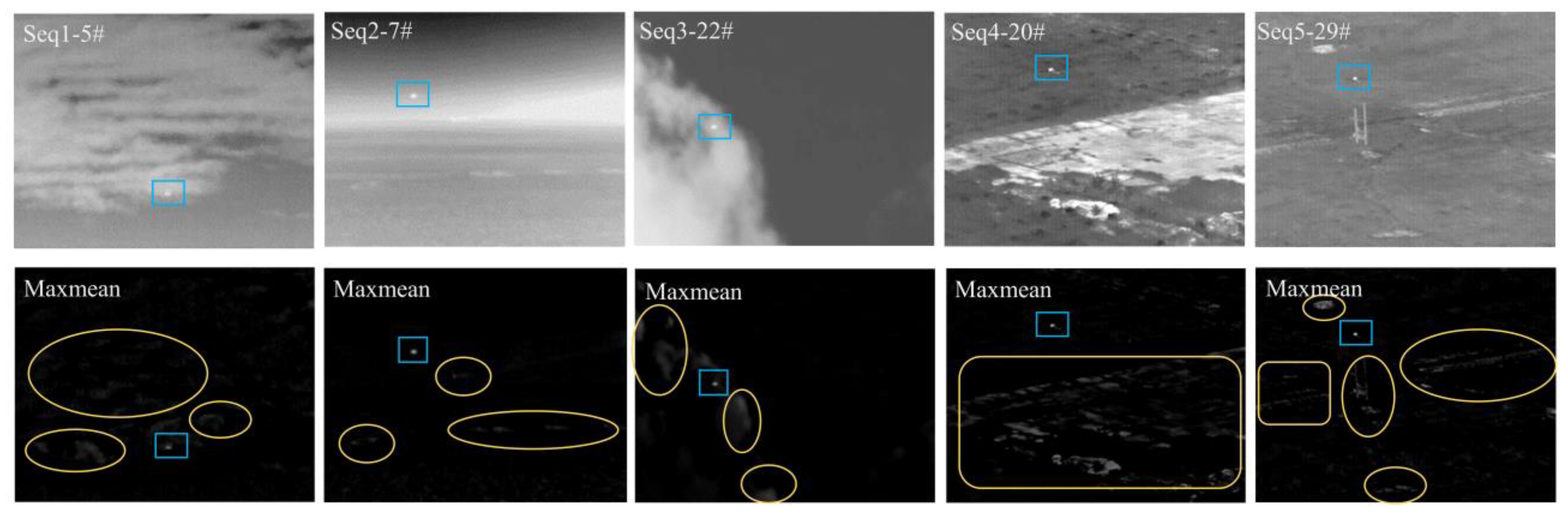

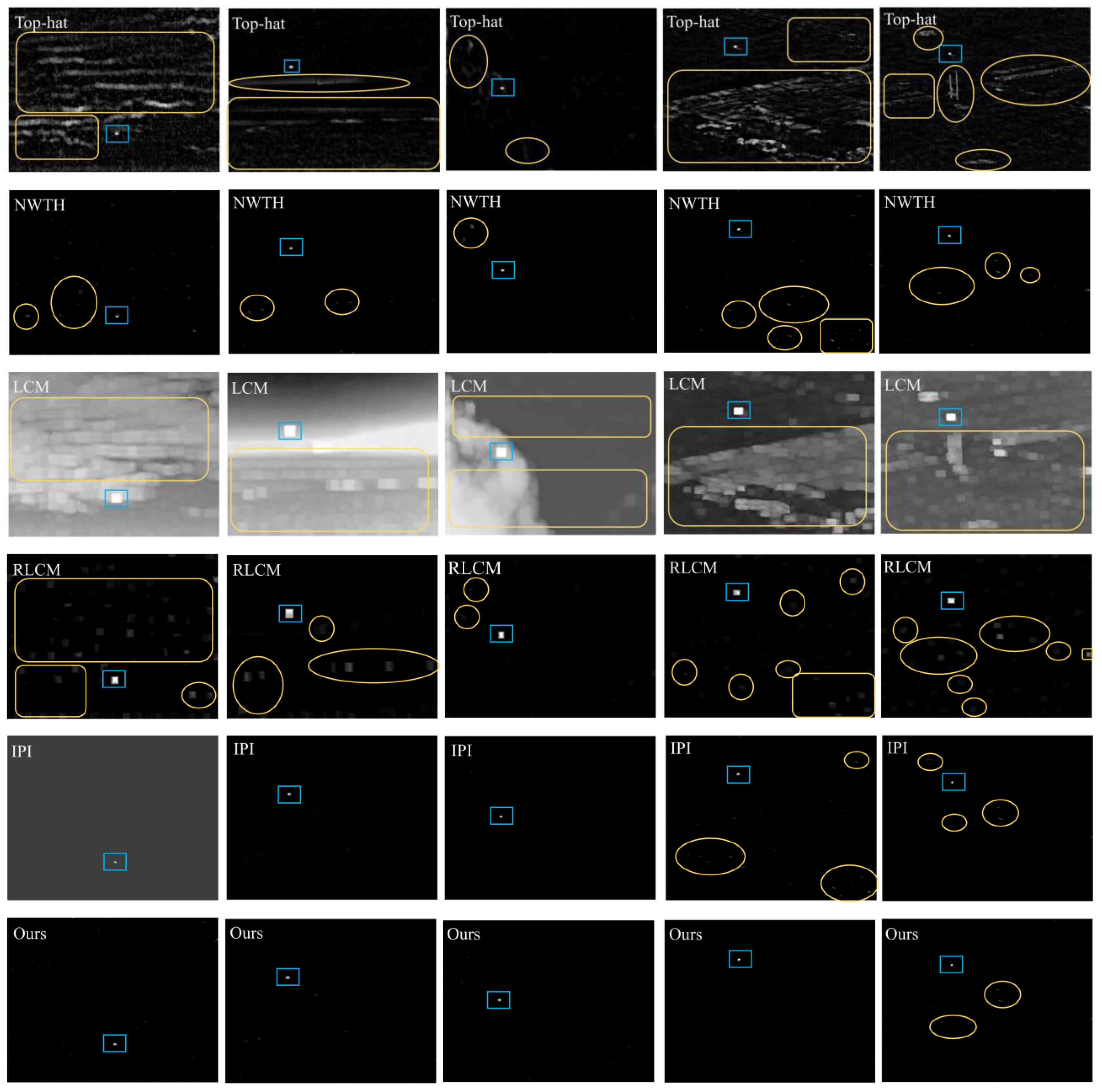

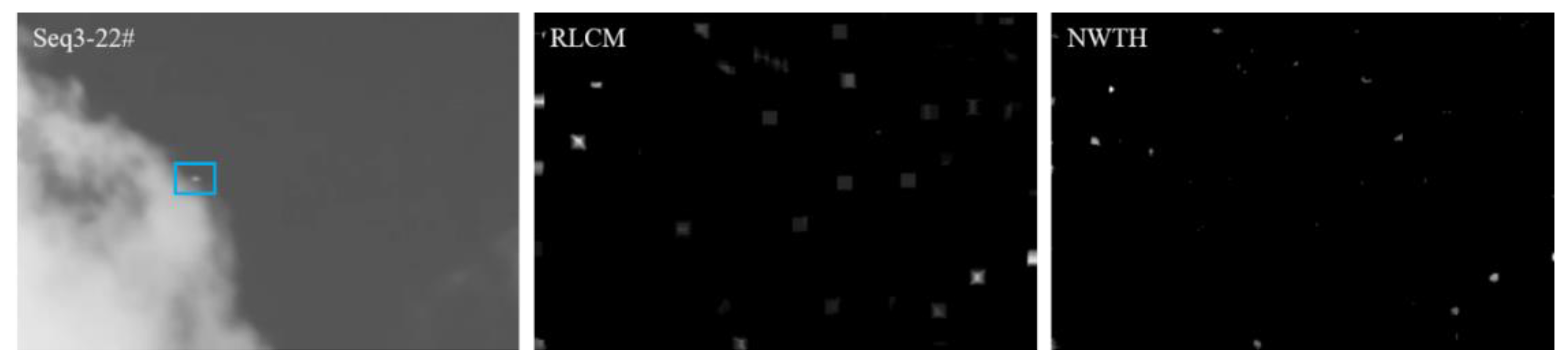

4.3.1. Visual Observation

4.3.2. Quantitative Comparison

4.3.3. Overall Comparison

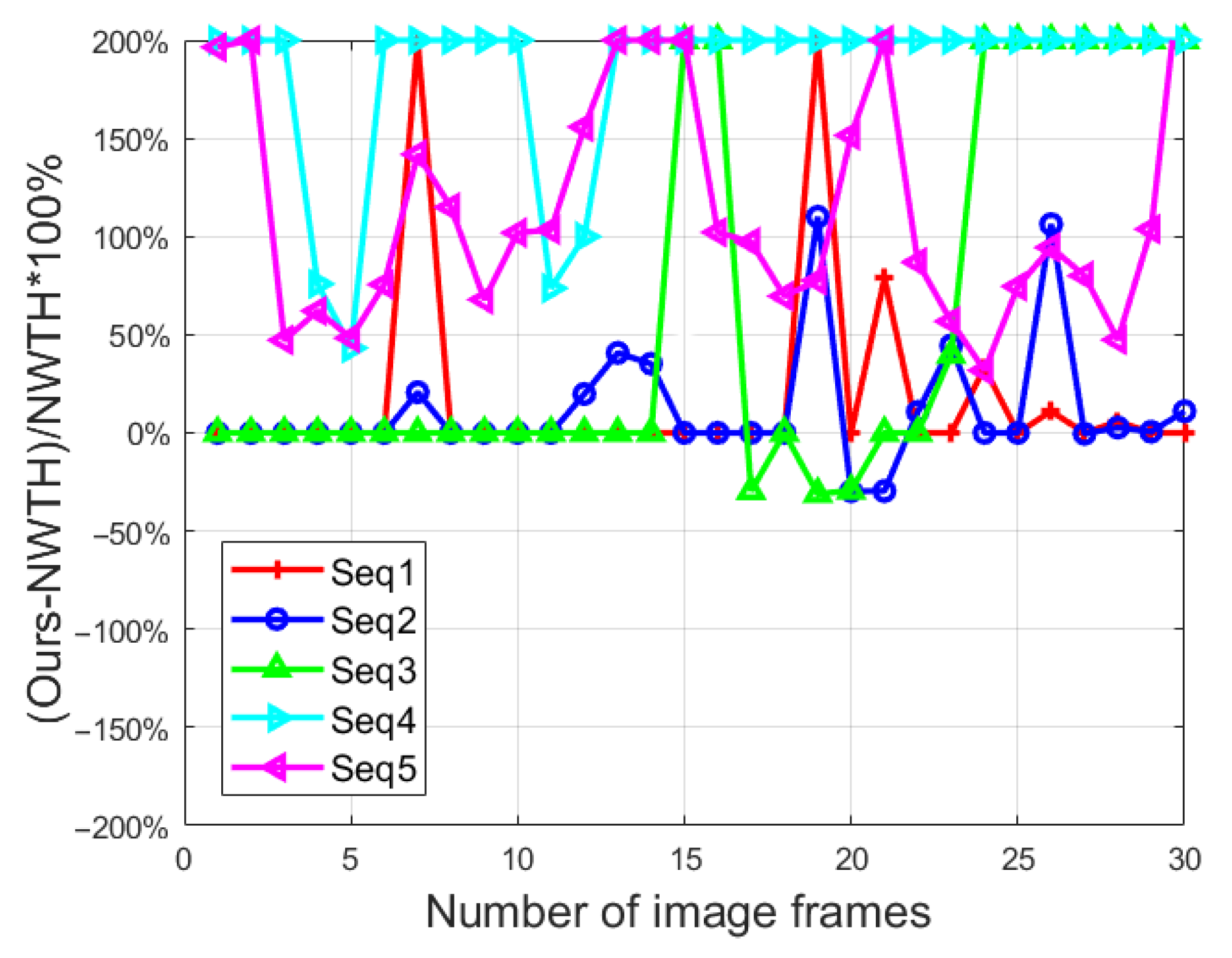

4.4. Additional Experimental Results: Effectiveness of Our New Top-Hat Structuring Element

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Sequence | Target Region | Frames | Frame Size | Avg SCR | Image Description |
|---|---|---|---|---|---|
| Seq 1 | | 30 | | 0.62 | Sky background, mostly covered by scattered clouds; fixed camera position; the target moves from left to right. |
| Seq 2 | | 30 | | 0.65 | Sky and sea background with a clear horizontal boundary; fixed camera position; the target moves from top to bottom. |
| Seq 3 | | 30 | | 0.51 | Sky background, partially covered by thick cloud; fixed camera position; the target moves from right to left. |
| Seq 4 * | | 30 | | 3.81 | Land background (rapidly changing); tracking camera position; small target (16 pixels). |
| Seq 5 * | | 30 | | 1.63 | Land background; tracking camera position; ultra-small target (9 pixels). |

| Methods | Parameter Settings |
|---|---|
| Max-mean | |
| Top-hat | |
| NWTH | for sequence 5 |
| LCM | , h = 3, 5, 7, 9 |
| RLCM | |
| IPI | |
| Ours | for sequence 5; other parameters are shown in Algorithm 1. |

| Method | Sequence 1 | Sequence 2 | Sequence 3 | Sequence 4 | Sequence 5 |
|---|---|---|---|---|---|
| Max-mean | 10.48 | 26.91 | 4.10 | 9.67 | 14.87 |
| Top-hat | 6.77 | 6.89 | 24.96 | 7.28 | 10.27 |
| NWTH | 79.46 | 137.34 | 66.40 | 22.52 | 13.63 |
| LCM | 3.17 | 1.23 | 3.36 | 5.50 | 4.92 |
| RLCM | 65.23 | 136.16 | 886.35 | 14.49 | 12.72 |
| IPI | 21.24 | 146.91 | 142.91 | 9.95 | 72.37 |
| Ours | 265.84 | 240.54 | 490.00 | 35.26 | 20.68 |

| Method | Sequence 1 | Sequence 2 | Sequence 3 | Sequence 4 | Sequence 5 |
|---|---|---|---|---|---|
| Max-mean | 3.96 | 12.75 | 6.69 | 4.26 | 1.48 |
| Top-hat | 1.05 | 3.43 | 8.39 | 2.05 | 0.81 |
| NWTH | 5.65 | 13.20 | 20.07 | 11.92 | 3.29 |
| LCM | 0.92 | 0.93 | 0.95 | 1.54 | 0.54 |
| RLCM | 2.02 | 3.98 | 6.87 | 6.97 | 1.27 |
| IPI | 10.96 | 14.41 | 22.92 | 17.76 | 3.99 |
| Ours | 7.17 | 14.15 | 23.69 | 14.09 | 3.98 |

| Method | Sequence 1 | Sequence 2 | Sequence 3 | Sequence 4 | Sequence 5 | Average |
|---|---|---|---|---|---|---|
| NWTH | 0.015 | 0.014 | 0.016 | 0.017 | 0.016 | 0.016 |
| IPI | 17.39 | 13.03 | 60.62 | 31.93 | 23.45 | 29.28 |
| Ours | 1.32 | 0.77 | 2.60 | 3.70 | 2.86 | 2.25 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Xi, T.; Yuan, L.; Sun, Q. A Combined Approach to Infrared Small-Target Detection with the Alternating Direction Method of Multipliers and an Improved Top-Hat Transformation. Sensors 2022, 22, 7327. https://doi.org/10.3390/s22197327
