Dual-Scale Doppler Attention for Human Identification

Abstract

1. Introduction

2. Related Works

2.1. Doppler Radar Systems for Human Identification

2.2. Deep Learning for Micro-Doppler Signatures

2.3. Recent Radar-Based Human Identification Analysis

3. Method

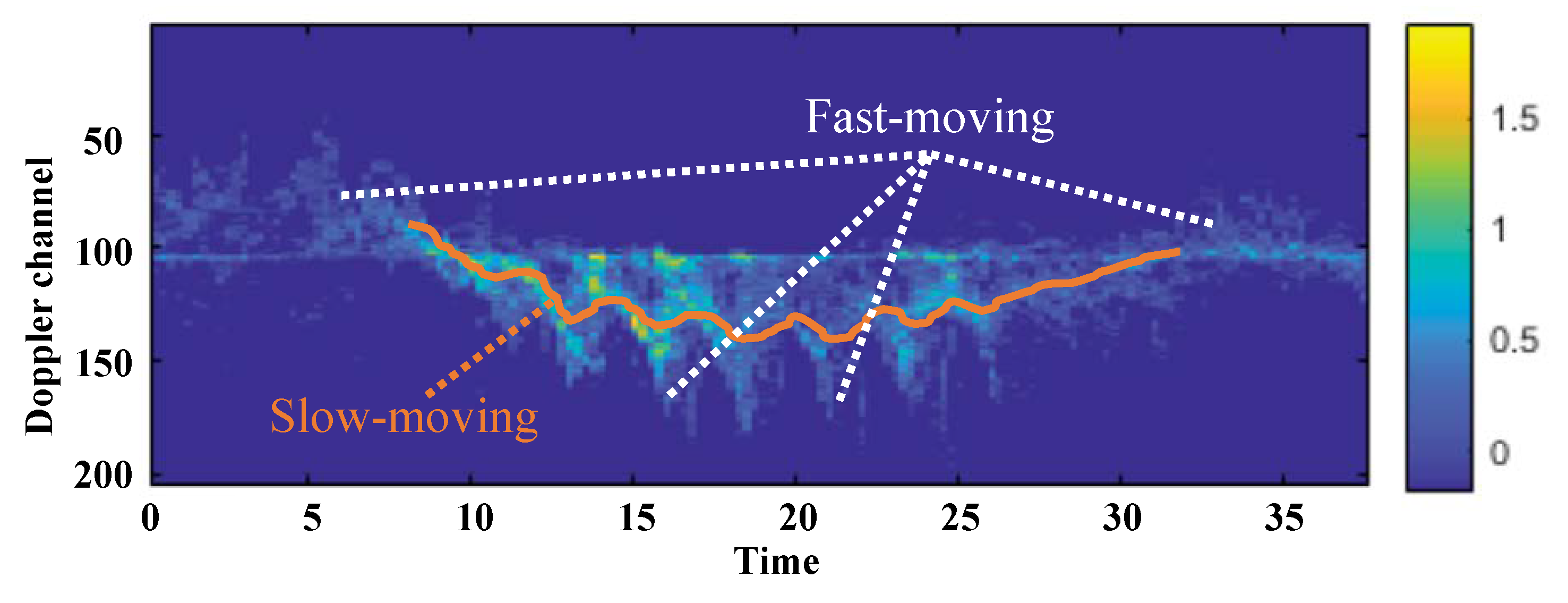

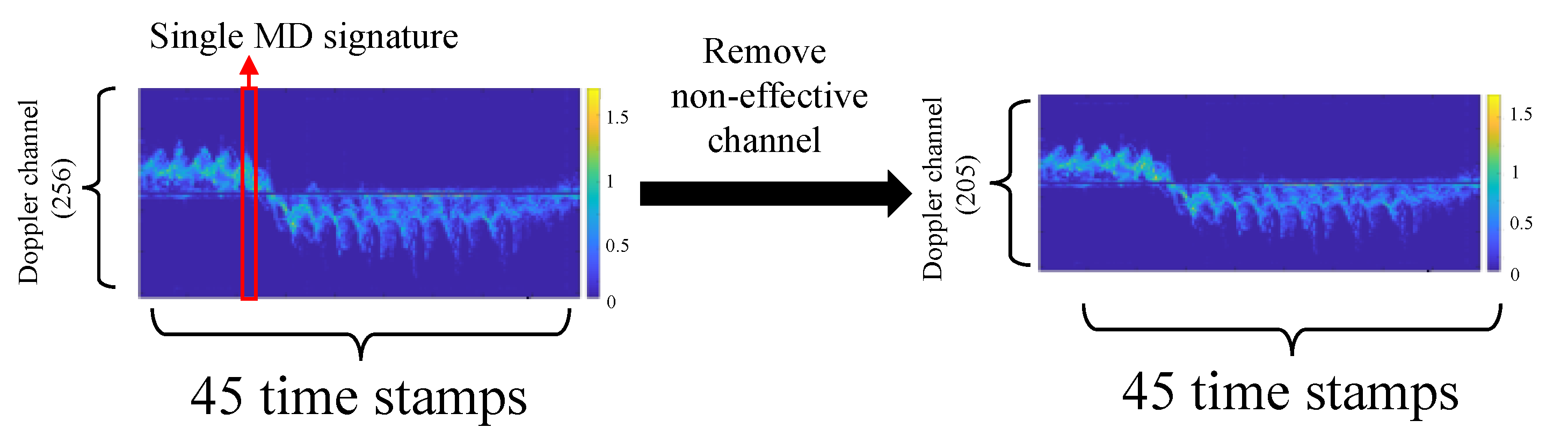

3.1. Generating Micro-Doppler Signatures

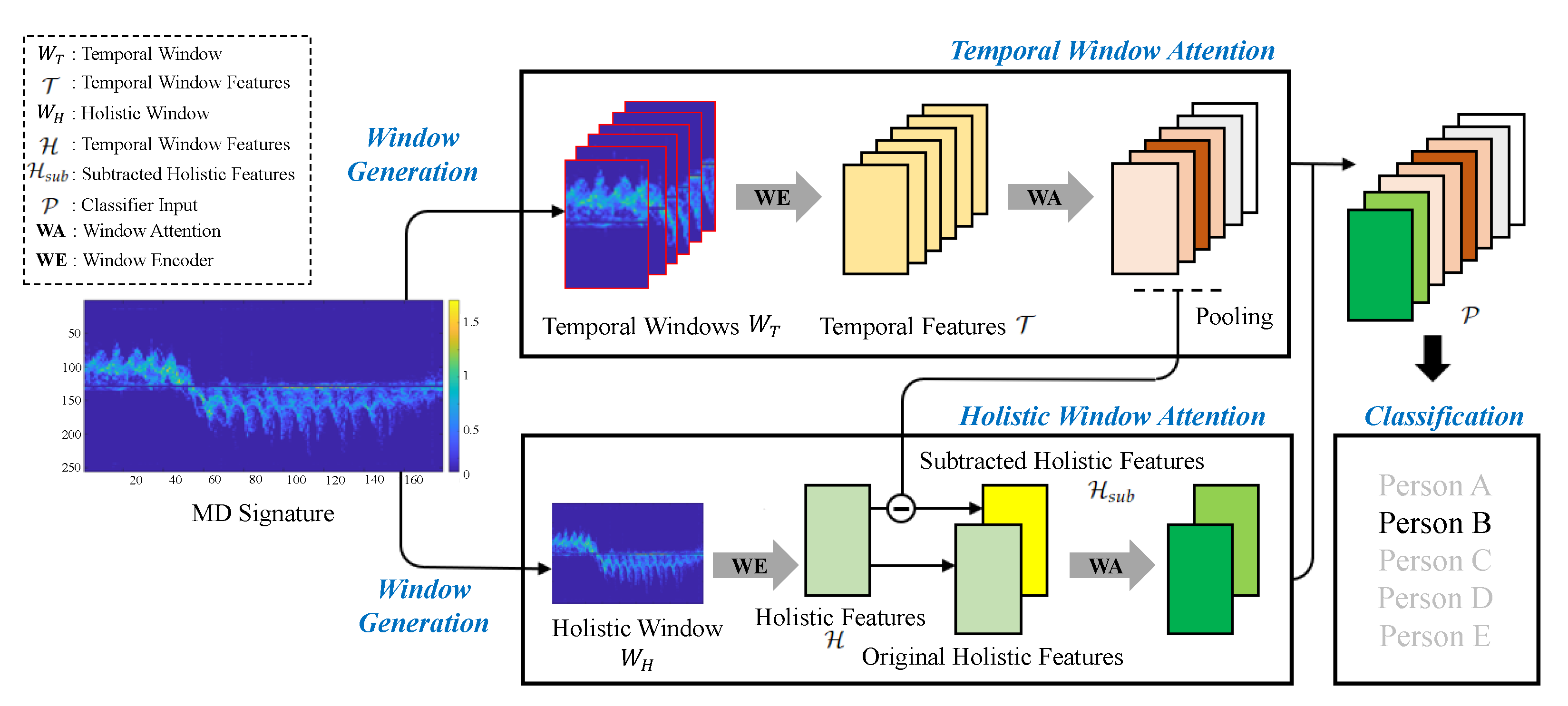

3.2. Model Overview

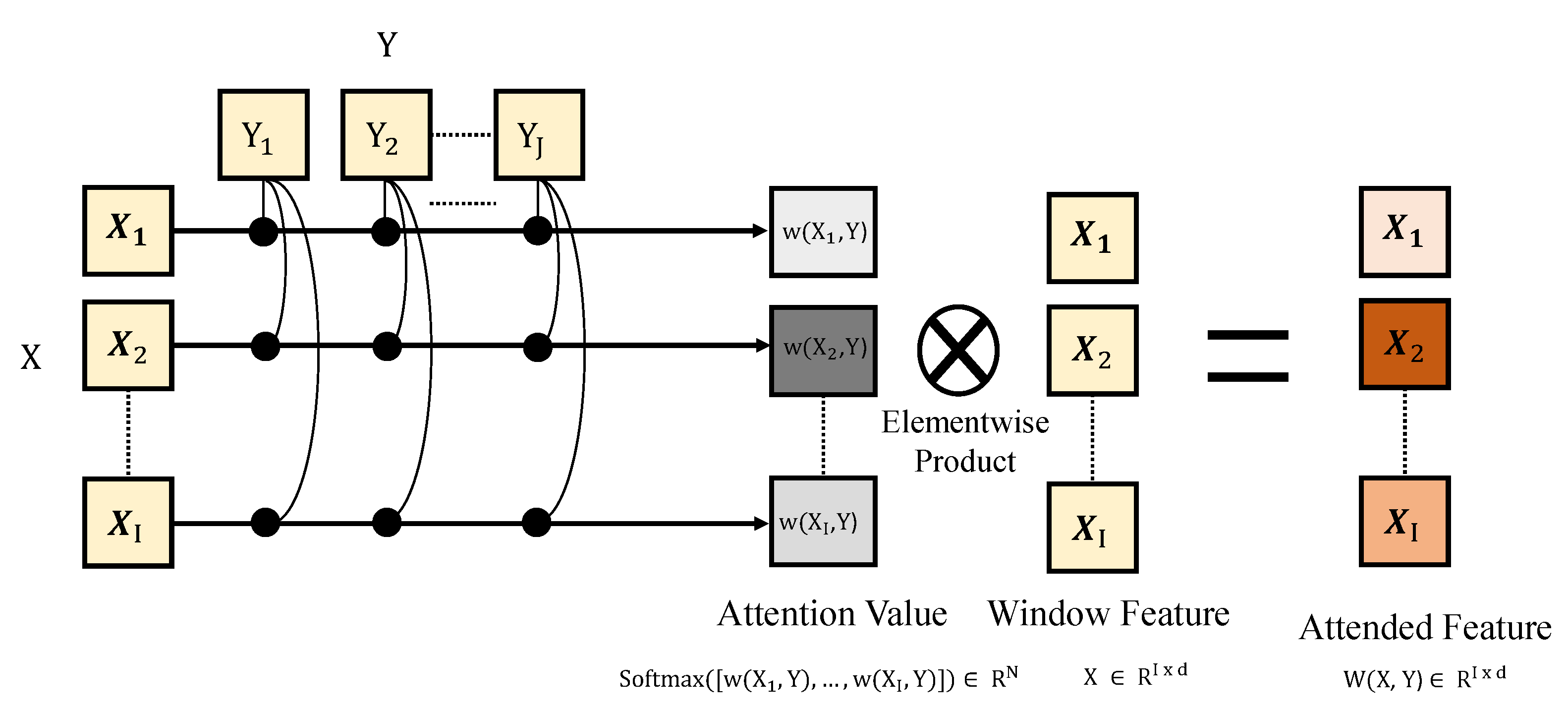

3.3. Window Attention

3.4. Temporal Window Attention

3.5. Holistic Window Attention

3.6. Classification

3.7. Training Loss

4. Experiments

4.1. IDRad Dataset

4.2. Experimental Details

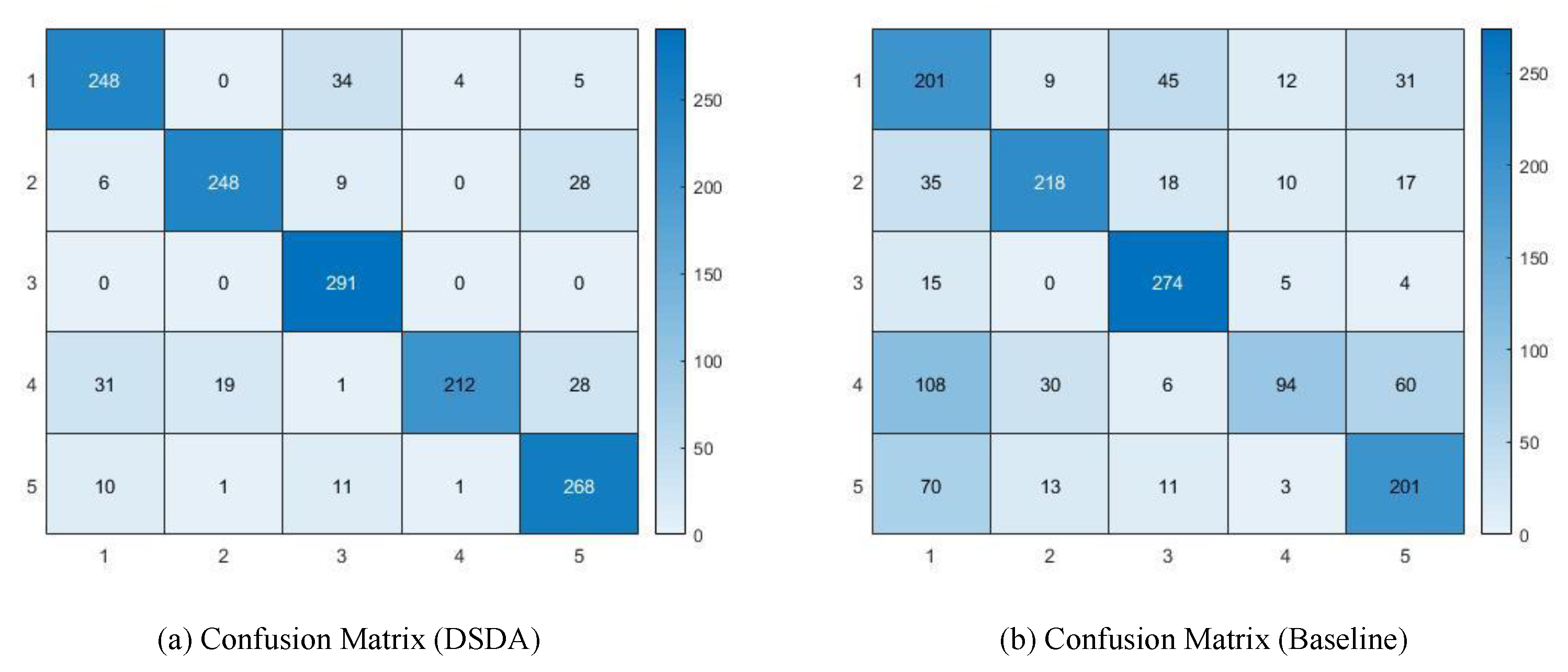

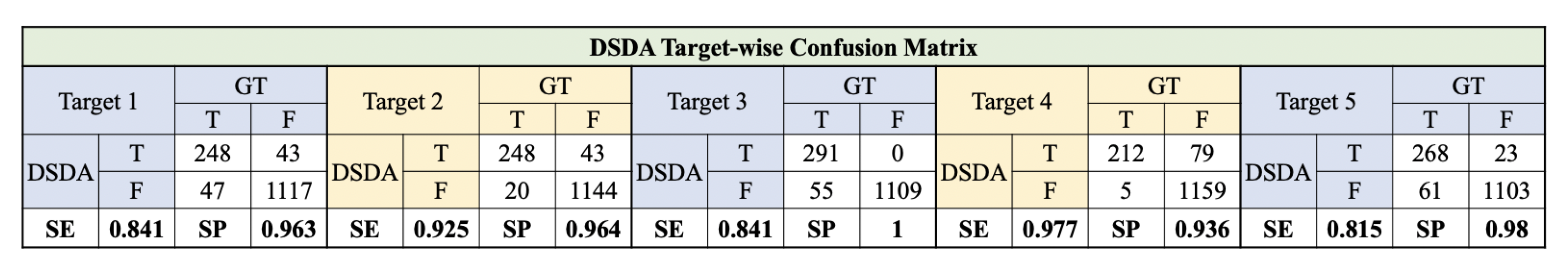

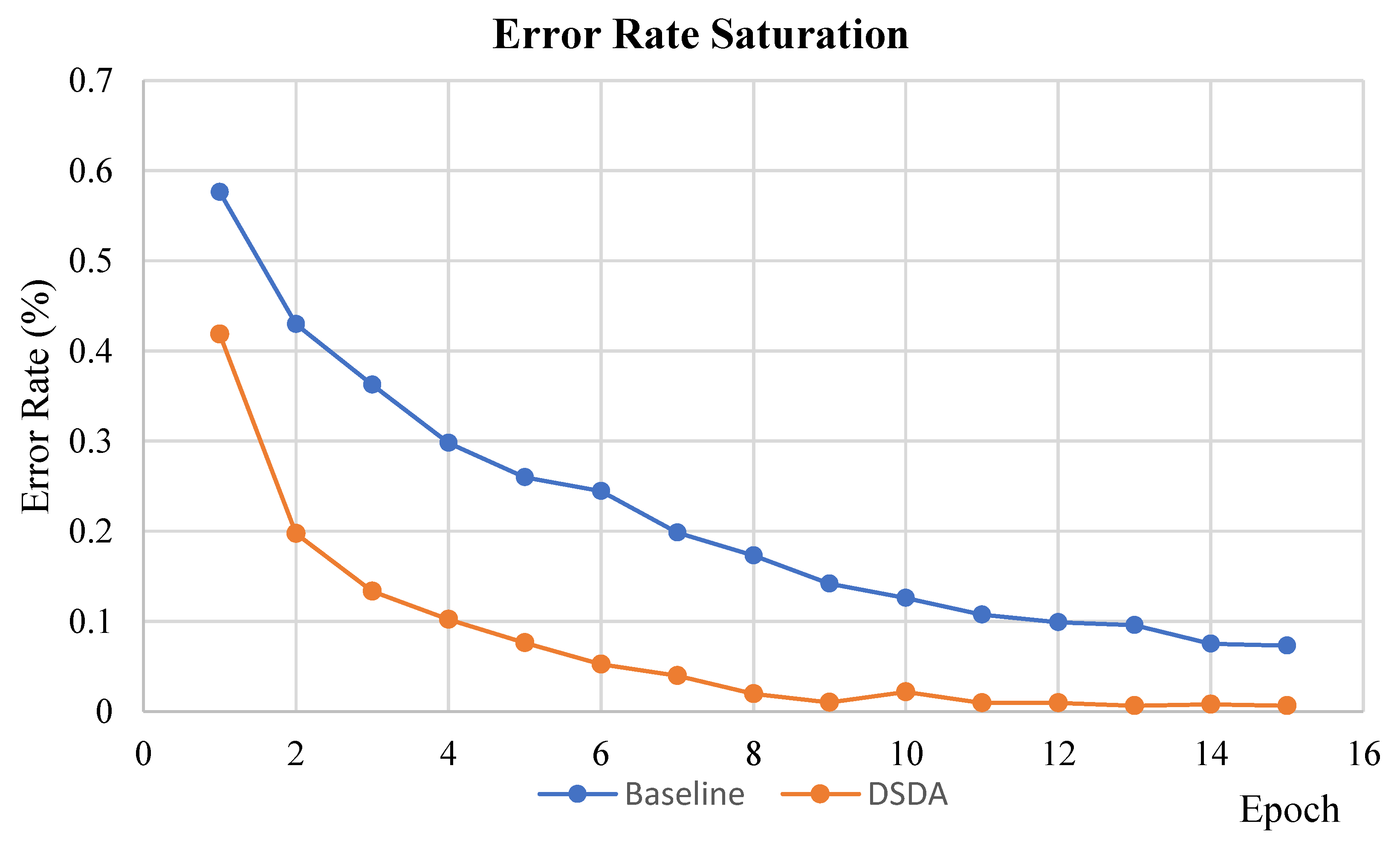

4.3. Experimental Results

4.4. Ablation Study

4.5. Qualitative Results

5. Limitation

6. Future Work

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Sample Availability

Abbreviations

| Abbreviation | Definition |
|---|---|
| DCNN | Deep Convolutional Neural Network |
| DSDA | Dual-Scale Doppler Attention |
| HWA | Holistic Window Attention |
| IDRad | IDentification with Radar |
| MD | Micro-Doppler |
| ML | Machine Learning |
| TWA | Temporal Window Attention |
| WE | Window Encoder |
| WA | Window Attention |

References

- Cunningham, S.J.; Masoodian, M.; Adams, A. Privacy issues for online personal photograph collections. J. Theor. Appl. Electron. Commer. Res. 2010, 5, 26–40.

- Kang, D.; Kum, D. Camera and radar sensor fusion for robust vehicle localization via vehicle part localization. IEEE Access 2020, 8, 75223–75236.

- Bai, J.; Li, S.; Huang, L.; Chen, H. Robust detection and tracking method for moving object based on radar and camera data fusion. IEEE Sens. J. 2021, 21, 10761–10774.

- Yang, B.; Guo, R.; Liang, M.; Casas, S.; Urtasun, R. Radarnet: Exploiting radar for robust perception of dynamic objects. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; Springer: Cham, Switzerland, 2020.

- Chen, V.C.; Li, F.; Ho, S.S.; Wechsler, H. Micro-Doppler effect in radar: Phenomenon, model, and simulation study. IEEE Trans. Aerosp. Electron. Syst. 2006, 42, 2–21.

- Kim, Y.; Ling, H. Human activity classification based on micro-Doppler signatures using a support vector machine. IEEE Trans. Geosci. Remote Sens. 2009, 47, 1328–1337.

- Zhou, Z.; Cao, Z.; Pi, Y. Dynamic gesture recognition with a terahertz radar based on range profile sequences and Doppler signatures. Sensors 2017, 18, 10.

- Fioranelli, F.; Ritchie, M.; Griffiths, H. Classification of unarmed/armed personnel using the NetRAD multistatic radar for micro-Doppler and singular value decomposition features. IEEE Geosci. Remote Sens. Lett. 2015, 12, 1933–1937.

- Kim, Y.; Ha, S.; Kwon, J. Human detection using Doppler radar based on physical characteristics of targets. IEEE Geosci. Remote Sens. Lett. 2014, 12, 289–293.

- Vandersmissen, B.; Knudde, N.; Jalalvand, A.; Couckuyt, I.; Bourdoux, A.; De Neve, W.; Dhaene, T. Indoor person identification using a low-power FMCW radar. IEEE Trans. Geosci. Remote Sens. 2018, 56, 3941–3952.

- Cao, P.; Xia, W.; Li, Y. Heart ID: Human identification based on radar micro-Doppler signatures of the heart using deep learning. Remote Sens. 2019, 11, 1220.

- Kim, Y.; Moon, T. Human detection and activity classification based on micro-Doppler signatures using deep convolutional neural networks. IEEE Geosci. Remote Sens. Lett. 2015, 13, 8–12.

- Le, H.T.; Phung, S.L.; Bouzerdoum, A.; Tivive, F.H. Human motion classification with micro-Doppler radar and Bayesian-optimized convolutional neural networks. In Proceedings of the 2018 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Calgary, AB, Canada, 15–20 April 2018; pp. 2961–2965.

- Gong, T.; Cheng, Y.; Li, X.; Chen, D. Micromotion detection of moving and spinning object based on rotational Doppler shift. IEEE Microw. Wirel. Compon. Lett. 2018, 28, 843–845.

- Seddon, N.; Bearpark, T. Observation of the inverse Doppler effect. Science 2003, 302, 1537–1540.

- Winkler, V. Range Doppler detection for automotive FMCW radars. In Proceedings of the 2007 European Radar Conference, Waltham, MA, USA, 17–20 April 2007; pp. 166–169.

- Lin, J., Jr.; Li, Y.P.; Hsu, W.C.; Lee, T.S. Design of an FMCW radar baseband signal processing system for automotive application. SpringerPlus 2016, 5, 42.

- Li, X.; He, Y.; Jing, X. A survey of deep learning-based human activity recognition in radar. Remote Sens. 2019, 11, 1068.

- Lee, D.; Park, H.; Moon, T.; Kim, Y. Continual learning of micro-Doppler signature-based human activity classification. IEEE Geosci. Remote Sens. Lett. 2021, 19, 1–5.

- Martinez, J.; Vossiek, M. Deep learning-based segmentation for the extraction of micro-Doppler signatures. In Proceedings of the 2018 15th European Radar Conference (EuRAD), Madrid, Spain, 26–28 September 2018; pp. 190–193.

- Abdulatif, S.; Wei, Q.; Aziz, F.; Kleiner, B.; Schneider, U. Micro-Doppler based human-robot classification using ensemble and deep learning approaches. In Proceedings of the 2018 IEEE Radar Conference (RadarConf18), Oklahoma City, OK, USA, 23–27 April 2018.

- Kim, Y.; Ling, H. Human activity classification based on micro-Doppler signatures using an artificial neural network. In Proceedings of the 2008 IEEE Antennas and Propagation Society International Symposium, San Diego, CA, USA, 5–11 July 2008; pp. 1–4.

- Park, J.; Javier, R.J.; Moon, T.; Kim, Y. Micro-Doppler based classification of human aquatic activities via transfer learning of convolutional neural networks. Sensors 2016, 16, 1990.

- Wang, S.; Song, J.; Lien, J.; Poupyrev, I.; Hilliges, O. Interacting with Soli: Exploring fine-grained dynamic gesture recognition in the radio-frequency spectrum. In Proceedings of the 29th Annual Symposium on User Interface Software and Technology, Tokyo, Japan, 16–19 October 2016; pp. 851–860.

- Lin, Y.; Le Kernec, J.; Yang, S.; Fioranelli, F.; Romain, O.; Zhao, Z. Human activity classification with radar: Optimization and noise robustness with iterative convolutional neural networks followed with random forests. IEEE Sens. J. 2018, 18, 9669–9681.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. Imagenet classification with deep convolutional neural networks. Adv. Neural Inf. Process. Syst. 2012, 25, 1097–1105.

- Cao, P.; Xia, W.; Ye, M.; Zhang, J.; Zhou, J. Radar-ID: Human identification based on radar micro-Doppler signatures using deep convolutional neural networks. IET Radar Sonar Navig. 2018, 12, 729–734.

- Narayanan, R.M.; Smith, S.; Gallagher, K.A. A multifrequency radar system for detecting humans and characterizing human activities for short-range through-wall and long-range foliage penetration applications. Int. J. Microw. Sci. Technol. 2014, 2014, 958905.

- Ni, Z.; Huang, B. Open-set human identification based on gait radar micro-Doppler signatures. IEEE Sens. J. 2021, 21, 8226–8233.

- Kwon, J.; Kwak, N. Human detection by neural networks using a low-cost short-range Doppler radar sensor. In Proceedings of the 2017 IEEE Radar Conference (RadarConf), Seattle, WA, USA, 8–12 May 2017.

- Qiao, X.; Shan, T.; Tao, R. Human identification based on radar micro-Doppler signatures separation. Electron. Lett. 2020, 56, 195–196.

- Ba, J.L.; Kiros, J.R.; Hinton, G.E. Layer normalization. arXiv 2016, arXiv:1607.06450.

- Clevert, D.A.; Unterthiner, T.; Hochreiter, S. Fast and accurate deep network learning by exponential linear units (ELUs). arXiv 2015, arXiv:1511.07289.

- Srivastava, N.; Hinton, G.; Krizhevsky, A.; Sutskever, I.; Salakhutdinov, R. Dropout: A simple way to prevent neural networks from overfitting. J. Mach. Learn. Res. 2014, 15, 1929–1958.

- Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. arXiv 2014, arXiv:1412.6980.

- Zhang, Z.; Tian, Z.; Zhou, M. Latern: Dynamic continuous hand gesture recognition using FMCW radar sensor. IEEE Sens. J. 2018, 18, 3278–3289.

- Hochreiter, S.; Schmidhuber, J. Long short-term memory. Neural Comput. 1997, 9, 1735–1780.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. Adv. Neural Inf. Process. Syst. 2017, 30. Available online: https://proceedings.neurips.cc/paper/2017/file/3f5ee243547dee91fbd053c1c4a845aa-Paper.pdf (accessed on 10 August 2022).

- Ma, M.; Yoon, S.; Kim, J.; Lee, Y.; Kang, S.; Yoo, C.D. Vlanet: Video-language alignment network for weakly-supervised video moment retrieval. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; Springer: Cham, Switzerland, 2020.

- Kim, J.; Ma, M.; Pham, T.; Kim, K.; Yoo, C.D. Modality shifting attention network for multi-modal video question answering. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020.

| Layer | Kernel Size | Stride | # of Filters | Data Shape |
|---|---|---|---|---|
| INPUT | | | | |
| Temporal Window | | | | |
| Conv 1 | (3,3) | (1,1) | 8 | |
| ELU 1 | | | | |
| MaxPool 1 | (2,3) | (2,3) | | |
| Conv 2 | (3,3) | (1,1) | 16 | |
| ELU 2 | | | | |
| MaxPool 2 | (2,3) | (2,3) | | |
| Conv 3 | (3,3) | (1,1) | 32 | |
| ELU 3 | | | | |
| MaxPool 3 | (3,1) | (3,1) | | |
| Conv 4 | (3,3) | (1,1) | 64 | |
| ELU 4 | | | | |
| MaxPool 4 | (1,5) | (1,5) | | |
| Pooling | | | | |

| Layer | Kernel Size | Stride | # of Filters | Data Shape |
|---|---|---|---|---|
| INPUT | | | | |
| Holistic Window | | | | |
| Conv 1 | (3,3) | (1,1) | 8 | |
| ELU 1 | | | | |
| MaxPool 1 | (6,3) | (6,3) | | |
| Conv 2 | (3,3) | (1,1) | 16 | |
| ELU 2 | | | | |
| MaxPool 2 | (2,3) | (2,3) | | |
| Conv 3 | (3,3) | (1,1) | 32 | |
| ELU 3 | | | | |
| MaxPool 3 | (3,1) | (3,1) | | |
| Conv 4 | (3,3) | (1,1) | 64 | |
| ELU 4 | | | | |
| MaxPool 4 | (1,5) | (1,5) | | |
| Pooling | | | | |
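
The two encoder tables above differ only in their first max-pooling layer, (2,3) for the temporal window and (6,3) for the holistic window. The sketch below multiplies out the pooling kernels to show the cumulative downsampling of each encoder. It is an illustration only and rests on assumptions not stated in the tables: convolutions are taken to preserve spatial size, pooling is non-overlapping with floor rounding (stride equals kernel size, as listed), and the input shape `(72, 90)` is hypothetical because the Data Shape column is empty. The names `pooled_shape` and `total_factor` are introduced here for illustration.

```python
# Pooling kernels (equal to strides) taken from the two encoder tables.
TEMPORAL_POOLS = [(2, 3), (2, 3), (3, 1), (1, 5)]
HOLISTIC_POOLS = [(6, 3), (2, 3), (3, 1), (1, 5)]


def total_factor(pools):
    """Cumulative downsampling factor along each of the two axes."""
    factor_a, factor_b = 1, 1
    for k_a, k_b in pools:
        factor_a *= k_a
        factor_b *= k_b
    return factor_a, factor_b


def pooled_shape(shape, pools):
    """Spatial shape after all pooling layers.

    Assumption: convolutions keep the spatial size, and each pooling
    layer is non-overlapping with floor rounding.
    """
    size_a, size_b = shape
    for k_a, k_b in pools:
        size_a, size_b = size_a // k_a, size_b // k_b
    return size_a, size_b


# Hypothetical input shape, chosen only so the divisions are exact on axis 0.
HYPOTHETICAL_INPUT = (72, 90)

temporal_factor = total_factor(TEMPORAL_POOLS)
holistic_factor = total_factor(HOLISTIC_POOLS)
temporal_out = pooled_shape(HYPOTHETICAL_INPUT, TEMPORAL_POOLS)
holistic_out = pooled_shape(HYPOTHETICAL_INPUT, HOLISTIC_POOLS)

print("temporal:", temporal_factor, temporal_out)
print("holistic:", holistic_factor, holistic_out)
```

Under these assumptions the holistic encoder downsamples the first axis three times more aggressively than the temporal encoder, while both treat the second axis identically.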

| Layer | Kernel Size | Stride | # of Filters | Data Shape |
|---|---|---|---|---|
| INPUT | | | | |
| Pooling | (3,3) | (1,1) | 8 | |
| Linear | | | | |
| ELU | (3,3) | (1,1) | 16 | |
| Dropout 4 | (1,5) | (1,5) | | |
| Linear | 5 | | | |

| Methods | Validation Error (%) | Test Error (%) |
|---|---|---|
| Baseline [10] | 24.70 | 21.54 |
| Baseline [10] (reproduced on 150 time stamps) | 22.42 | 20.37 |
| PCA + SVM | 37.67 | 32.91 |
| LSTM-based model | 22.83 | 18.42 |
| DSDA | 10.87 | 8.65 |

| Model Variants | Error Rate (%) |
|---|---|
| Full DSDA | 10.87 |
| w/o TWA | 24.32 |
| w/o HWA | 23.94 |
| w/ stride , window | 12.99 |
| w/ stride , window | 13.71 |
| w/ stride , window | 14.73 |
| w/ stride , window | 14.80 |
| w/ stride , window | 11.93 |
| w/ stride , window | 13.45 |
| Multi-Scale (M = 3) | 10.87 |
| Multi-Scale (M = 4) | 12.79 |

| Attention Method for Calculating Similarity | Error Rate (%) |
|---|---|
| | 10.87 |
| | 11.28 |
| | 11.02 |

| Human Identification | | Baseline [10]: True | Baseline [10]: False | Total |
|---|---|---|---|---|
| DSDA | True | 1082 | 246 | 1328 |
| DSDA | False | 40 | 122 | 162 |
| Total | | 1122 | 368 | 1490 |
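
The contingency counts above can be cross-checked against the reported error rates. The sketch below assumes that "True" means a sample was identified correctly and "False" means it was misidentified; the table itself does not state this, but under that reading the marginal totals reproduce the 10.87 and 24.70 figures reported for DSDA and the baseline on the validation set. Variable names are introduced here for illustration.

```python
# Cell counts from the DSDA vs. Baseline [10] contingency table.
# Assumption: "True" = correctly identified, "False" = misidentified.
both_correct = 1082    # DSDA True,  Baseline True
dsda_only = 246        # DSDA True,  Baseline False
baseline_only = 40     # DSDA False, Baseline True
both_wrong = 122       # DSDA False, Baseline False

total = both_correct + dsda_only + baseline_only + both_wrong

# A model's error count is every sample in its "False" row or column.
dsda_errors = baseline_only + both_wrong
baseline_errors = dsda_only + both_wrong

dsda_error_rate = 100.0 * dsda_errors / total
baseline_error_rate = 100.0 * baseline_errors / total

print(f"total samples:       {total}")
print(f"DSDA error rate:     {dsda_error_rate:.2f}%")
print(f"Baseline error rate: {baseline_error_rate:.2f}%")
```

The off-diagonal cells are the informative ones: 246 samples are handled correctly by DSDA but not by the baseline, against 40 in the opposite direction.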

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Yoon, S.; Kim, D.; Hong, J.W.; Kim, J.; Yoo, C.D. Dual-Scale Doppler Attention for Human Identification. Sensors 2022, 22, 6363. https://doi.org/10.3390/s22176363
