Biometrics: Going 3D

Abstract

1. Introduction

2. Related Work

2.1. Face

2.2. Fingerprint

3. Literature Search

3.1. Search Protocol

| 3D* W/2 reconstruction* AND biometric* AND Publication Year > 2011 |
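The query above can be read as a filter over bibliographic records. The following is a minimal sketch of that reading, not the search engine's actual implementation. It assumes Scopus-style semantics that the source does not spell out: `*` is a trailing wildcard, and `W/2` means the two terms are separated by at most two intervening words, in either order. The record layout (`text`, `year`) and the function names are hypothetical.

```python
import re


def tokens(text):
    # Lowercase alphanumeric tokens; digits are kept so "3D" becomes "3d".
    return re.findall(r"[a-z0-9]+", text.lower())


def within(words, prefix_a, prefix_b, max_gap):
    # Assumed W/n reading: a word starting with prefix_a and a word starting
    # with prefix_b have at most max_gap words between them, in either order.
    pos_a = [i for i, w in enumerate(words) if w.startswith(prefix_a)]
    pos_b = [i for i, w in enumerate(words) if w.startswith(prefix_b)]
    return any(a != b and abs(a - b) - 1 <= max_gap for a in pos_a for b in pos_b)


def matches(record):
    # 3D* W/2 reconstruction*  AND  biometric*  AND  Publication Year > 2011
    words = tokens(record["text"])
    return (
        within(words, "3d", "reconstruction", 2)
        and any(w.startswith("biometric") for w in words)
        and record["year"] > 2011
    )


# Hypothetical records, for illustration only.
records = [
    {"text": "3D face reconstruction for biometric recognition", "year": 2015},
    {"text": "3D face reconstruction for biometric recognition", "year": 2011},
    {"text": "Reconstruction of 3D ears in biometrics", "year": 2013},
    {"text": "3D models of the human face and their reconstruction biometrics", "year": 2018},
]
selected = [matches(r) for r in records]
```

Under these assumptions the second record is excluded by the year filter and the fourth by the proximity constraint, since too many words separate the two terms.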

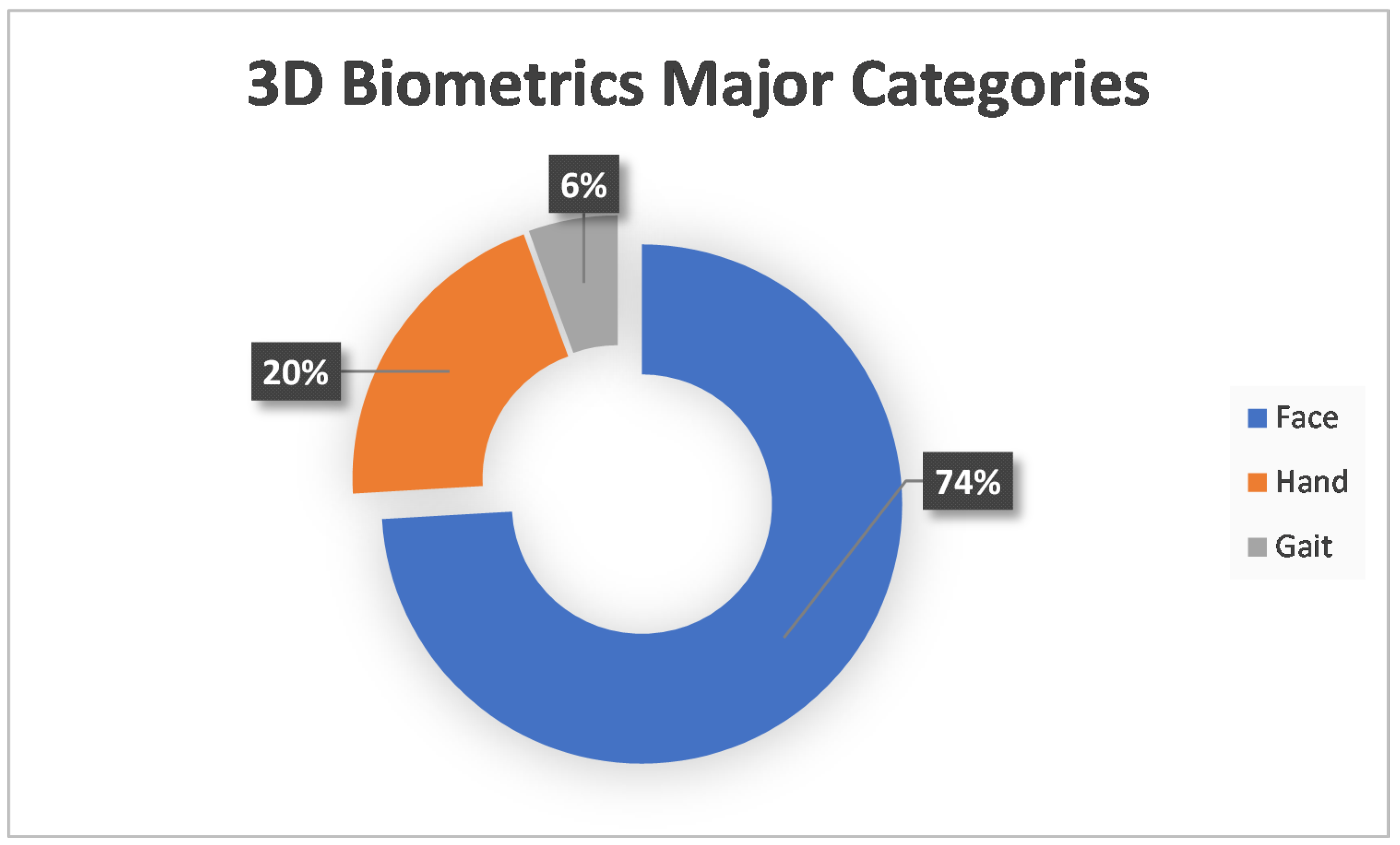

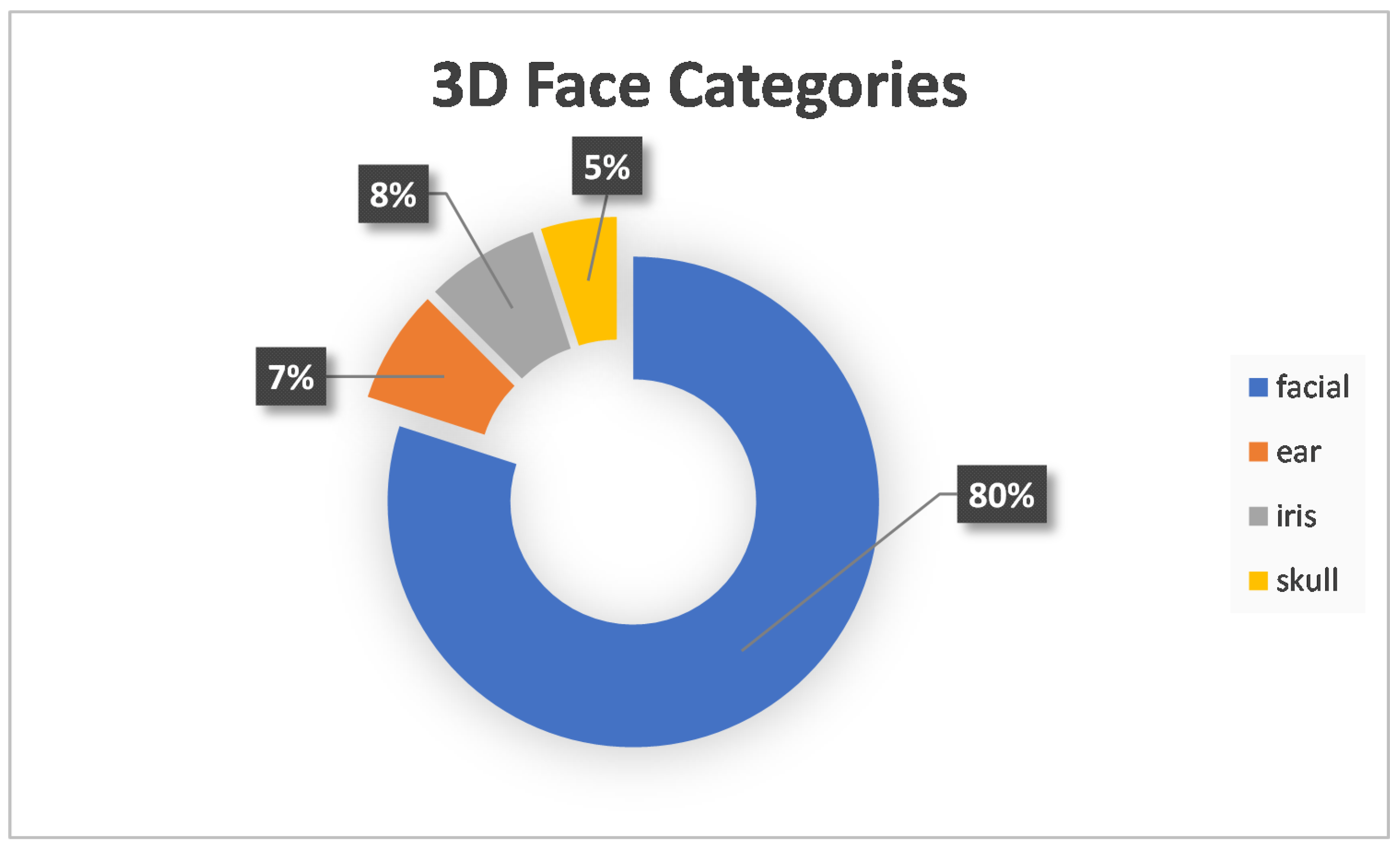

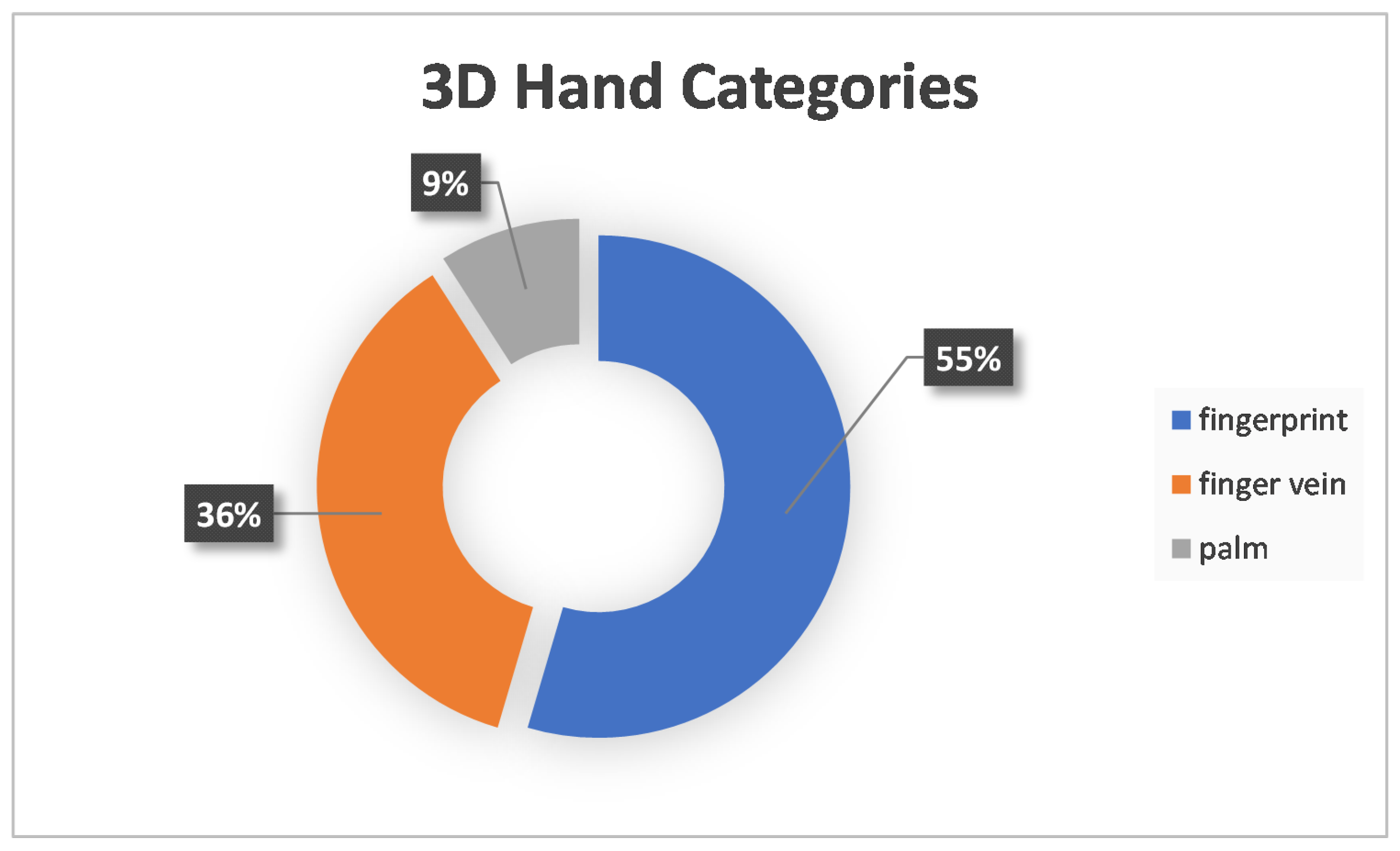

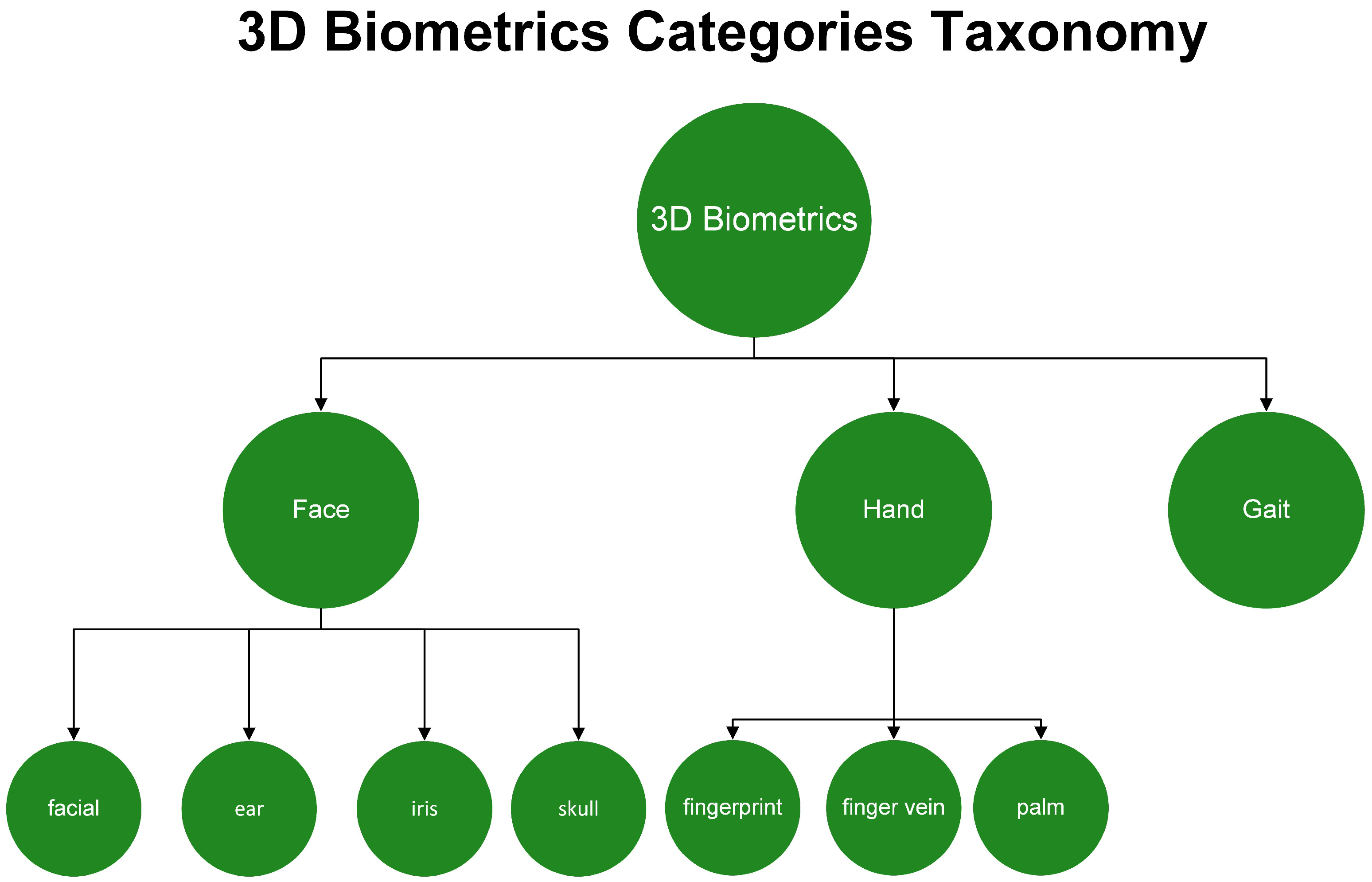

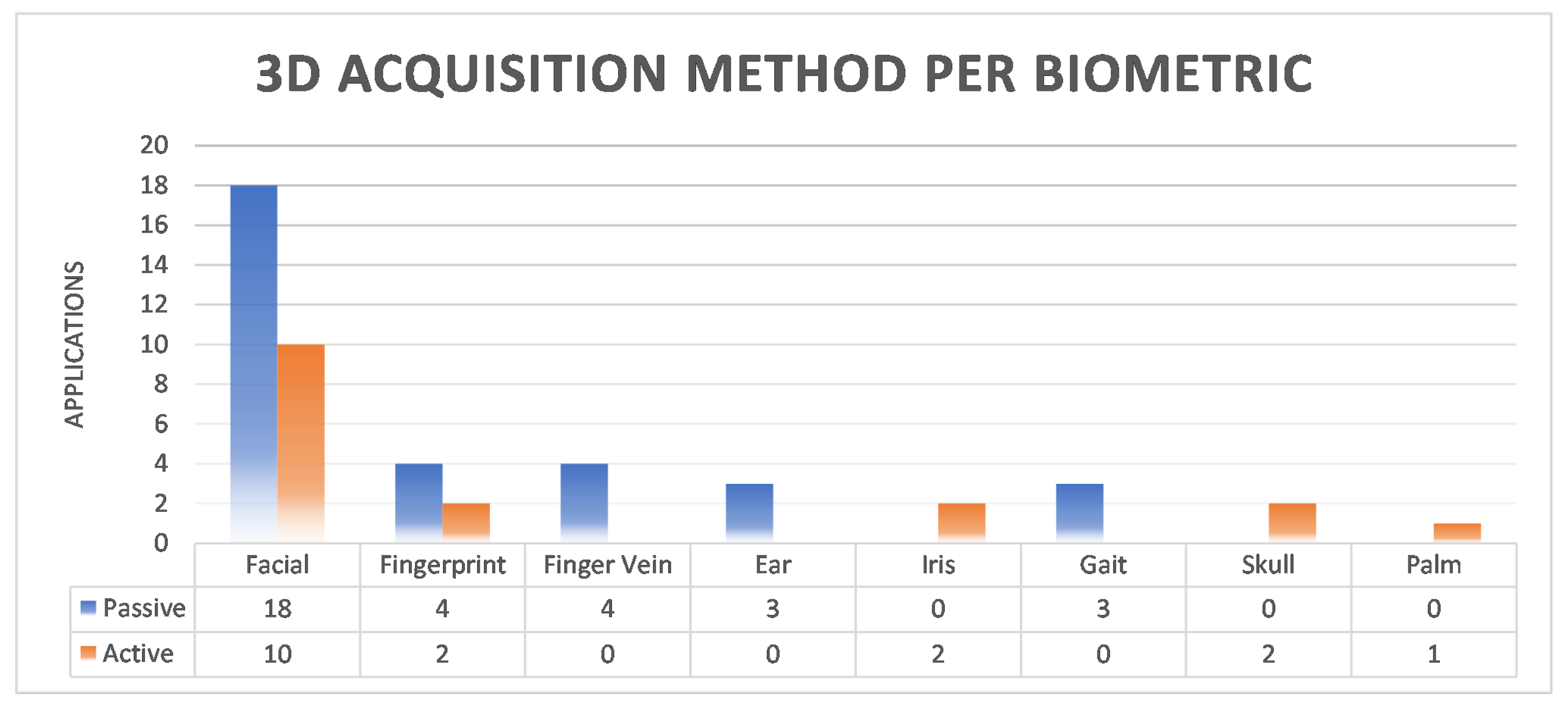

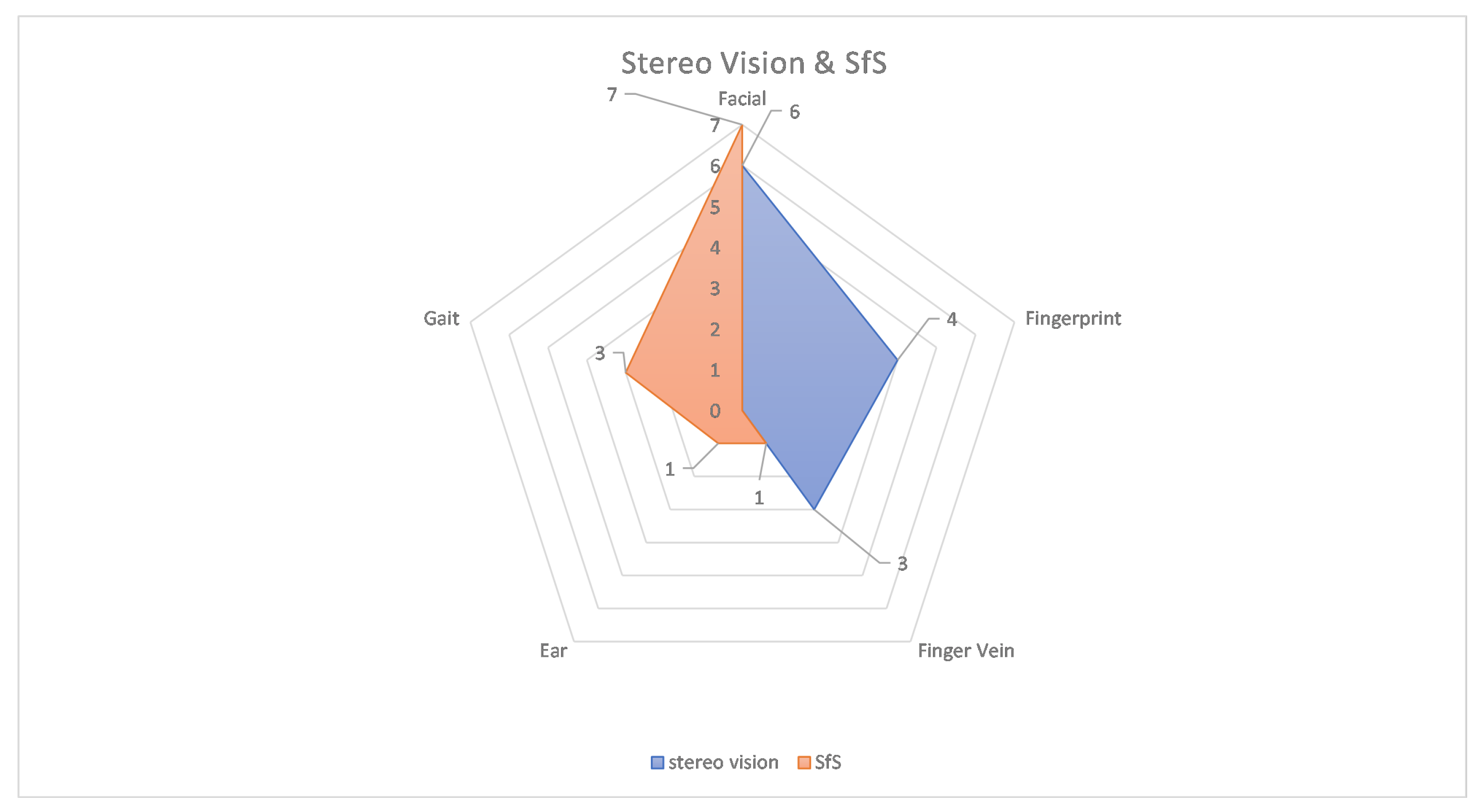

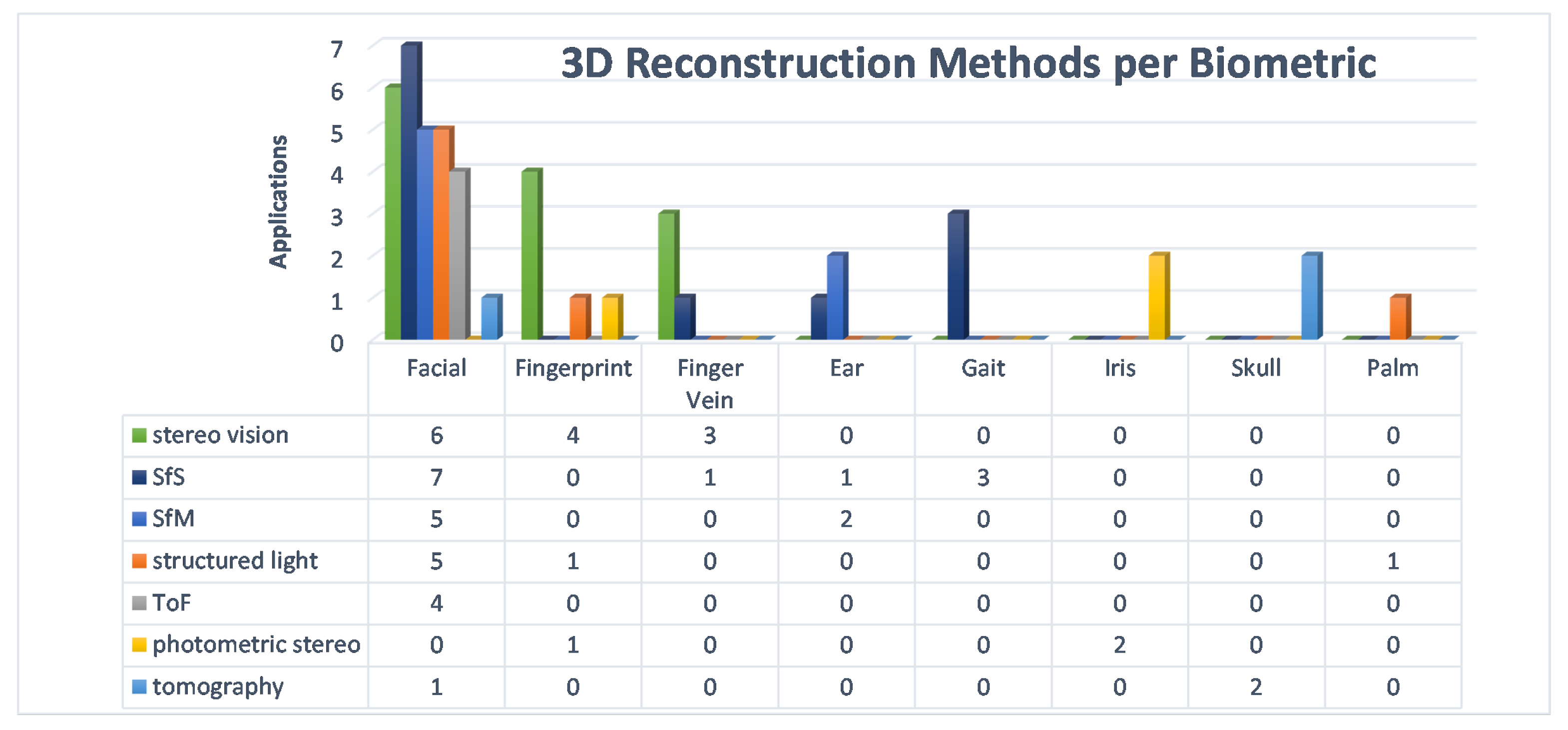

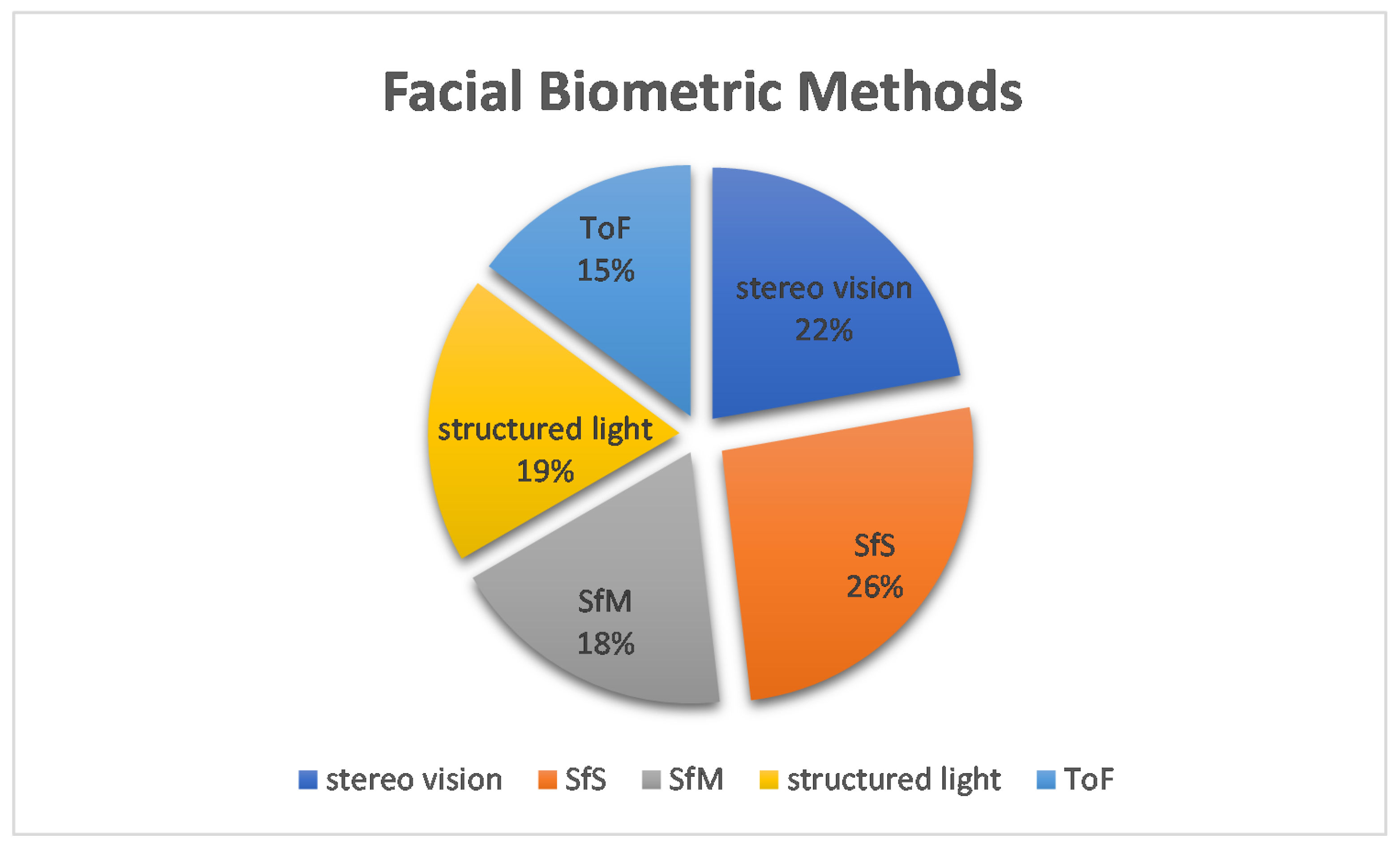

3.2. Statistical Results

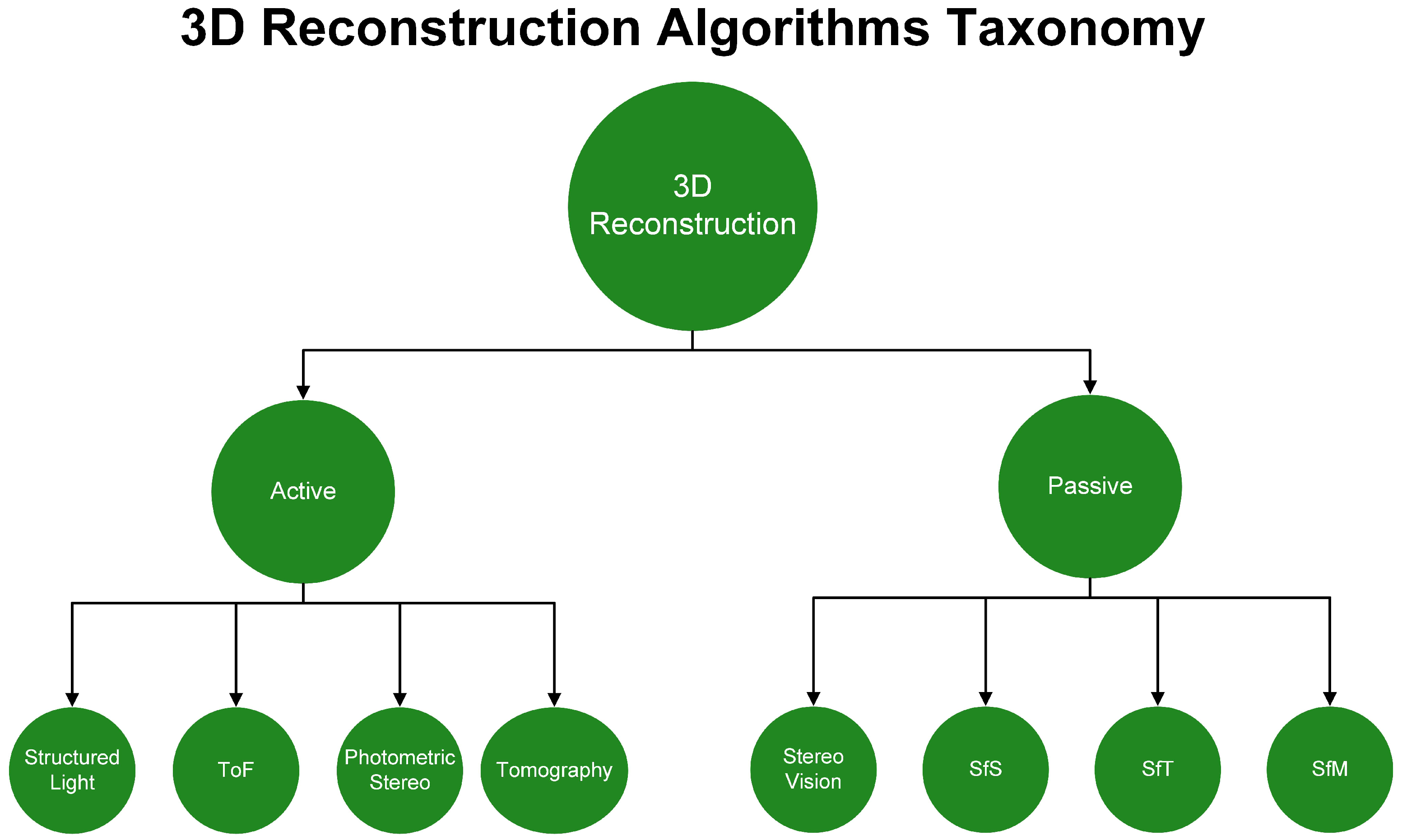

4. Three-Dimensional (3D) Reconstruction

4.1. Face

4.1.1. Facial

4.1.2. Ear

4.1.3. Iris

4.1.4. Skull

4.2. Hand

4.2.1. Fingerprint

4.2.2. Finger Vein

4.2.3. Palm

4.3. Gait

5. Results

6. Discussion

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Jain, A.K.; Ross, A.; Prabhakar, S. An introduction to biometric recognition. IEEE Trans. Circuits Syst. Video Technol. 2004, 14, 4–20.

- Zhang, D.; Lu, G. 3D Biometrics; Springer: New York, NY, USA, 2013.

- Moons, T.; Van Gool, L.; Vergauwen, M. 3D reconstruction from multiple images part 1: Principles. Found. Trends® Comput. Graph. Vis. 2010, 4, 287–404.

- Ulrich, L.; Vezzetti, E.; Moos, S.; Marcolin, F. Analysis of RGB-D camera technologies for supporting different facial usage scenarios. Multimed. Tools Appl. 2020, 79, 29375–29398.

- Zollhöfer, M.; Stotko, P.; Görlitz, A.; Theobalt, C.; Nießner, M.; Klein, R.; Kolb, A. State of the art on 3D reconstruction with RGB-D cameras. Comput. Graph. Forum 2018, 37, 625–652.

- Martín-Martín, A.; Orduna-Malea, E.; Thelwall, M.; López-Cózar, E.D. Google Scholar, Web of Science, and Scopus: A Systematic Comparison of Citations in 252 Subject Categories. J. Inf. 2018, 12, 1160–1177.

- Yuan, L.; Mu, Z.C.; Yang, F. A review of recent advances in ear recognition. In Chinese Conference on Biometric Recognition; Springer: Berlin/Heidelberg, Germany, 2011; pp. 252–259.

- Islam, S.M.; Bennamoun, M.; Owens, R.A.; Davies, R. A review of recent advances in 3D ear- and expression-invariant face biometrics. ACM Comput. Surv. (CSUR) 2012, 44, 1–34.

- Osuna, E.; Freund, R.; Girosi, F. Training support vector machines: An application to face detection. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, San Juan, PR, USA, 17–19 June 1997; pp. 130–136.

- Nair, P.; Cavallaro, A. 3-D face detection, landmark localization, and registration using a point distribution model. IEEE Trans. Multimed. 2009, 11, 611–623.

- Pears, N. RBF shape histograms and their application to 3D face processing. In Proceedings of the 2008 8th IEEE International Conference on Automatic Face & Gesture Recognition, Amsterdam, The Netherlands, 17–19 September 2008; pp. 1–8.

- Mpiperis, I.; Malassiotis, S.; Strintzis, M.G. 3D face recognition by point signatures and iso-contours. In Proceedings of the Fourth IASTED International Conference on Signal Processing, Pattern Recognition, and Applications, Anaheim, CA, USA, 14–16 February 2007.

- Chen, Y.; Medioni, G. Object modelling by registration of multiple range images. Image Vis. Comput. 1992, 10, 145–155.

- Chen, H.; Bhanu, B. Shape model-based 3D ear detection from side face range images. In Proceedings of the 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’05)-Workshops, San Diego, CA, USA, 21–23 September 2005; p. 122.

- Subban, R.; Mankame, D.P. Human face recognition biometric techniques: Analysis and review. In Recent Advances in Intelligent Informatics; Springer: Cham, Switzerland, 2014; pp. 455–463.

- Huang, D.; Ardabilian, M.; Wang, Y.; Chen, L. 3-D face recognition using eLBP-based facial description and local feature hybrid matching. IEEE Trans. Inf. Forensics Secur. 2012, 7, 1551–1565.

- Inan, T.; Halici, U. 3-D face recognition with local shape descriptors. IEEE Trans. Inf. Forensics Secur. 2012, 7, 577–587.

- Zhang, C.; Cohen, F.S. 3-D face structure extraction and recognition from images using 3-D morphing and distance mapping. IEEE Trans. Image Process. 2002, 11, 1249–1259.

- Chang, K.; Bowyer, K.W.; Sarkar, S.; Victor, B. Comparison and combination of ear and face images in appearance-based biometrics. IEEE Trans. Pattern Anal. Mach. Intell. 2003, 25, 1160–1165.

- Alyuz, N.; Gokberk, B.; Akarun, L. Robust 3D Face Identification in the Presence of Occlusions. In Face Recognition in Adverse Conditions; IGI Global: Hershey, PA, USA, 2014; pp. 124–146.

- Balaban, S. Deep learning and face recognition: The state of the art. In Proceedings of the Biometric and Surveillance Technology for Human and Activity Identification XII, Baltimore, MD, USA, 20–24 April 2015.

- Schroff, F.; Kalenichenko, D.; Philbin, J. Facenet: A unified embedding for face recognition and clustering. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 815–823.

- Huang, G.B.; Mattar, M.; Berg, T.; Learned-Miller, E. Labeled faces in the wild: A database for studying face recognition in unconstrained environments. In Proceedings of the Workshop on Faces in ‘Real-Life’ Images: Detection, Alignment, and Recognition, Marseille, France, 12–18 October 2008.

- Liu, X.; Sun, X.; He, R.; Tan, T. Recent advances on cross-domain face recognition. In Chinese Conference on Biometric Recognition; Springer: Cham, Switzerland, 2016; pp. 147–157.

- Bagga, M.; Singh, B. Spoofing detection in face recognition: A review. In Proceedings of the 2016 3rd International Conference on Computing for Sustainable Global Development (INDIACom), New Delhi, India, 16–18 March 2016; pp. 2037–2042.

- Kollreider, K.; Fronthaler, H.; Faraj, M.I.; Bigun, J. Real-time face detection and motion analysis with application in “liveness” assessment. IEEE Trans. Inf. Forensics Secur. 2007, 2, 548–558.

- Bao, W.; Li, H.; Li, N.; Jiang, W. A liveness detection method for face recognition based on optical flow field. In Proceedings of the 2009 International Conference on Image Analysis and Signal Processing, Linhai, China, 11–12 April 2009; pp. 233–236.

- Mahmood, Z.; Muhammad, N.; Bibi, N.; Ali, T. A review on state-of-the-art face recognition approaches. Fractals 2017, 25, 1750025.

- Jin, Y.; Wang, Y.; Ruan, Q.; Wang, X. A new scheme for 3D face recognition based on 2D Gabor Wavelet Transform plus LBP. In Proceedings of the 2011 6th International Conference on Computer Science & Education (ICCSE), Singapore, 3–5 August 2011; pp. 860–865.

- Alyuz, N.; Gokberk, B.; Akarun, L. 3-D face recognition under occlusion using masked projection. IEEE Trans. Inf. Forensics Secur. 2013, 8, 789–802.

- Mohammadzade, H.; Hatzinakos, D. Iterative closest normal point for 3D face recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2012, 35, 381–397.

- Jahanbin, S.; Choi, H.; Bovik, A.C. Passive multimodal 2-D+3-D face recognition using Gabor features and landmark distances. IEEE Trans. Inf. Forensics Secur. 2011, 6, 1287–1304.

- Huang, D.; Soltana, W.B.; Ardabilian, M.; Wang, Y.; Chen, L. Textured 3D face recognition using biological vision-based facial representation and optimized weighted sum fusion. In Proceedings of the CVPR Workshops, Colorado Springs, CO, USA, 20–25 June 2011; pp. 1–8.

- Albakri, G.; Alghowinem, S. The effectiveness of depth data in liveness face authentication using 3D sensor cameras. Sensors 2019, 19, 1928.

- Wu, B.; Pan, M.; Zhang, Y. A review of face anti-spoofing and its applications in china. In International Conference on Harmony Search Algorithm; Springer: Cham, Switzerland, 2019; pp. 35–43.

- Jourabloo, A.; Liu, Y.; Liu, X. Face de-spoofing: Anti-spoofing via noise modeling. In European Conference on Computer Vision (ECCV); Springer: Cham, Switzerland, 2018; pp. 290–306.

- Liu, A.; Li, X.; Wan, J.; Liang, Y.; Escalera, S.; Escalante, H.J.; Madadi, M.; Jin, Y.; Wu, Z.; Yu, X.; et al. Cross-ethnicity face anti-spoofing recognition challenge: A review. IET Biom. 2021, 10, 24–43.

- Labati, R.D.; Genovese, A.; Piuri, V.; Scotti, F. Touchless fingerprint biometrics: A survey on 2D and 3D technologies. J. Internet Technol. 2014, 15, 325–332.

- Jung, J.; Lee, W.; Kang, W.; Shin, E.; Ryu, J.; Choi, H. Review of piezoelectric micromachined ultrasonic transducers and their applications. J. Micromech. Microeng. 2017, 27, 113001.

- Iula, A. Ultrasound systems for biometric recognition. Sensors 2019, 19, 2317.

- Yu, Y.; Wang, H.; Sun, H.; Zhang, Y.; Chen, P.; Liang, R. Optical Coherence Tomography in Fingertip Biometrics. Opt. Lasers Eng. 2022, 151, 106868.

- Erdogmus, N.; Marcel, S. Spoofing face recognition with 3D masks. IEEE Trans. Inf. Forensics Secur. 2014, 9, 1084–1097.

- Lee, W.J.; Wilkinson, C.M.; Hwang, H.S. An accuracy assessment of forensic computerized facial reconstruction employing cone-beam computed tomography from live subjects. J. Forensic Sci. 2012, 57, 318–327.

- Sun, Z.L.; Lam, K.M.; Gao, Q.W. Depth estimation of face images using the nonlinear least-squares model. IEEE Trans. Image Process. 2012, 22, 17–30.

- Raghavendra, R.; Raja, K.B.; Pflug, A.; Yang, B.; Busch, C. 3D face reconstruction and multimodal person identification from video captured using smartphone camera. In Proceedings of the 2013 IEEE International Conference on Technologies for Homeland Security (HST), Waltham, MA, USA, 12–14 November 2013; pp. 552–557.

- Dou, P.; Zhang, L.; Wu, Y.; Shah, S.K.; Kakadiaris, I.A. Pose-robust face signature for multi-view face recognition. In Proceedings of the 2015 IEEE 7th International Conference on Biometrics Theory, Applications and Systems (BTAS), Arlington, VA, USA, 8–11 September 2015; pp. 1–8.

- Wang, K.; Wang, X.; Pan, Z.; Liu, K. A two-stage framework for 3D face reconstruction from RGBD images. IEEE Trans. Pattern Anal. Mach. Intell. 2013, 36, 1493–1504.

- Hu, G.; Mortazavian, P.; Kittler, J.; Christmas, W. A facial symmetry prior for improved illumination fitting of 3D morphable model. In Proceedings of the 2013 International Conference on Biometrics (ICB), Madrid, Spain, 4–7 June 2013; pp. 1–6.

- Fooprateepsiri, R.; Kurutach, W. A general framework for face reconstruction using single still image based on 2D-to-3D transformation kernel. Forensic Sci. Int. 2014, 236, 117–126.

- Wang, Y.; Chen, S.; Li, W.; Huang, D.; Wang, Y. Face anti-spoofing to 3D masks by combining texture and geometry features. In Chinese Conference on Biometric Recognition; Springer: Cham, Switzerland, 2018; pp. 399–408.

- Segundo, M.P.; Silva, L.; Bellon, O.R.P. Improving 3d face reconstruction from a single image using half-frontal face poses. In Proceedings of the 2012 19th IEEE International Conference on Image Processing, Orlando, FL, USA, 30 September–3 October 2012; pp. 1797–1800.

- Dou, P.; Kakadiaris, I.A. Multi-view 3D face reconstruction with deep recurrent neural networks. Image Vis. Comput. 2018, 80, 80–91.

- Li, J.; Long, S.; Zeng, D.; Zhao, Q. Example-based 3D face reconstruction from uncalibrated frontal and profile images. In Proceedings of the 2015 International Conference on Biometrics (ICB), Phuket, Thailand, 19–22 May 2015; pp. 193–200.

- Abate, A.F.; Narducci, F.; Ricciardi, S. Biometrics empowered ambient intelligence environment. Atti Accad. Peloritana Pericolanti-Cl. Sci. Fis. Mat. Nat. 2015, 93, 4.

- van Dam, C.; Veldhuis, R.; Spreeuwers, L. Landmark-based model-free 3d face shape reconstruction from video sequences. In Proceedings of the 2013 International Conference of the BIOSIG Special Interest Group (BIOSIG), Darmstadt, Germany, 5–6 September 2013; pp. 1–5.

- Betta, G.; Capriglione, D.; Corvino, M.; Lavatelli, A.; Liguori, C.; Sommella, P.; Zappa, E. Metrological characterization of 3D biometric face recognition systems in actual operating conditions. Acta IMEKO 2017, 6, 33–42.

- Yin, J.; Yang, X. 3D facial reconstruction of based on OpenCV and DirectX. In Proceedings of the 2016 International Conference on Audio, Language and Image Processing (ICALIP), Shanghai, China, 11–12 July 2016; pp. 341–344.

- Abate, A.F.; Nappi, M.; Ricciardi, S. A biometric interface to ambient intelligence environments. In Information Systems: Crossroads for Organization, Management, Accounting and Engineering; Springer: Berlin/Heidelberg, Germany, 2012; pp. 155–163.

- Crispim, F.; Vieira, T.; Lima, B. Verifying kinship from rgb-d face data. In International Conference on Advanced Concepts for Intelligent Vision Systems; Springer: Cham, Switzerland, 2020; pp. 215–226.

- Naveen, S.; Rugmini, K.; Moni, R. 3D face reconstruction by pose correction, patch cloning and texture wrapping. In Proceedings of the 2016 International Conference on Communication Systems and Networks (ComNet), Thiruvananthapuram, India, 21–23 July 2016; pp. 112–116.

- Zhang, Z.; Zhang, M.; Chang, Y.; Esche, S.K.; Chassapis, C. A virtual laboratory combined with biometric authentication and 3D reconstruction. In Proceedings of the ASME International Mechanical Engineering Congress and Exposition, Phoenix, AZ, USA, 11–17 November 2016; Volume 50571, p. V005T06A049.

- Rychlik, M.; Stankiewicz, W.; Morzynski, M. 3D facial biometric database–search and reconstruction of objects based on PCA modes. In International Conference on Universal Access in Human-Computer Interaction; Springer: Cham, Switzerland, 2014; pp. 125–136.

- Tahiri, M.A.; Karmouni, H.; Tahiri, A.; Sayyouri, M.; Qjidaa, H. Partial 3D Image Reconstruction by Cuboids Using Stable Computation of Hahn Polynomials. In WITS 2020; Springer: Singapore, 2022; pp. 831–842.

- Xiong, W.; Yang, H.; Zhou, P.; Fu, K.; Zhu, J. Spatiotemporal Correlation-Based Accurate 3D Face Imaging Using Speckle Projection and Real-Time Improvement. Appl. Sci. 2021, 11, 8588.

- Abate, A.F.; De Maio, L.; Distasi, R.; Narducci, F. Remote 3D face reconstruction by means of autonomous unmanned aerial vehicles. Pattern Recognit. Lett. 2021, 147, 48–54.

- Li, X.; Wu, S. Multi-attribute regression network for face reconstruction. In Proceedings of the 2020 25th International Conference on Pattern Recognition (ICPR), Milan, Italy, 10–15 January 2021; pp. 7226–7233.

- Kneis, B.; Zhang, W. 3D Face Recognition using Photometric Stereo and Deep Learning. In Proceedings of the 10th International Conference on Web Intelligence, Mining and Semantics, Biarritz, France, 30 June–3 July 2020; pp. 255–261.

- Li, J.; Liu, Z.; Zhao, Q. Exploring shape deformation in 2D images for facial expression recognition. In Chinese Conference on Biometric Recognition; Springer: Cham, Switzerland, 2019; pp. 190–197.

- Sopiak, D.; Oravec, M.; Pavlovičová, J.; Bukovčíková, Z.; Dittingerová, M.; Bil’anská, A.; Novotná, M.; Gontkovič, J. Generating face images based on 3D morphable model. In Proceedings of the 2016 12th International Conference on Natural Computation, Fuzzy Systems and Knowledge Discovery (ICNC-FSKD), Changsha, China, 13–15 August 2016; pp. 58–62.

- Chuchvara, A.; Georgiev, M.; Gotchev, A. A framework for fast low-power multi-sensor 3D scene capture and reconstruction. In International Workshop on Biometric Authentication; Springer: Cham, Switzerland, 2014; pp. 40–53.

- Narayana, S.; Rohit; Rajagopal; Rakshith; Antony, J. 3D face reconstruction using frontal and profile views. In Proceedings of the 2013 7th Asia Modelling Symposium, Hong Kong, China, 23–25 July 2013; pp. 132–136.

- Kumar, A.; Kwong, C. Towards Contactless, Low-Cost and Accurate 3D Fingerprint Identification. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 37, 681–696.

- Labati, R.D.; Genovese, A.; Piuri, V.; Scotti, F. Toward unconstrained fingerprint recognition: A fully touchless 3-D system based on two views on the move. IEEE Trans. Syst. Man Cybern. Syst. 2015, 46, 202–219.

- Liu, F.; Zhang, D. 3D fingerprint reconstruction system using feature correspondences and prior estimated finger model. Pattern Recognit. 2014, 47, 178–193.

- Xu, J.; Hu, J. Direct Feature Point Correspondence Discovery for Multiview Images: An Alternative Solution When SIFT-Based Matching Fails. In International Conference on Testbeds and Research Infrastructures; Springer: Cham, Switzerland, 2016; pp. 137–147.

- Chatterjee, A.; Bhatia, V.; Prakash, S. Anti-spoof touchless 3D fingerprint recognition system using single shot fringe projection and biospeckle analysis. Opt. Lasers Eng. 2017, 95, 1–7.

- Yin, X.; Zhu, Y.; Hu, J. 3D fingerprint recognition based on ridge-valley-guided 3D reconstruction and 3D topology polymer feature extraction. IEEE Trans. Pattern Anal. Mach. Intell. 2019, 43, 1085–1091.

- Kang, W.; Liu, H.; Luo, W.; Deng, F. Study of a full-view 3D finger vein verification technique. IEEE Trans. Inf. Forensics Secur. 2019, 15, 1175–1189.

- Ma, Z.; Fang, L.; Duan, J.; Xie, S.; Wang, Z. Personal identification based on finger vein and contour point clouds matching. In Proceedings of the 2016 IEEE International Conference on Mechatronics and Automation, Harbin, China, 7–10 August 2016; pp. 1983–1988.

- Veldhuis, R.; Spreeuwers, L.; Ton, B.; Rozendal, S. A high-quality finger vein dataset collected using a custom-designed capture device. In Handbook of Vascular Biometrics; Springer: Cham, Switzerland, 2020; pp. 63–75.

- Xu, H.; Yang, W.; Wu, Q.; Kang, W. Endowing rotation invariance for 3d finger shape and vein verification. Front. Comput. Sci. 2022, 16, 1–16.

- Cho, S.Y. 3D ear shape reconstruction and recognition for biometric applications. Signal Image Video Process. 2013, 7, 609–618.

- Liu, C.; Mu, Z.; Wang, K.; Zeng, H. 3D ear modeling based on SFS. In Proceedings of the 10th World Congress on Intelligent Control and Automation, Beijing, China, 6–8 July 2012; pp. 4837–4841.

- Bastias, D.; Perez, C.A.; Benalcazar, D.P.; Bowyer, K.W. A method for 3D iris reconstruction from multiple 2D near-infrared images. In Proceedings of the 2017 IEEE International Joint Conference on Biometrics (IJCB), Denver, CO, USA, 1–4 October 2017; pp. 503–509.

- Benalcazar, D.P.; Zambrano, J.E.; Bastias, D.; Perez, C.A.; Bowyer, K.W. A 3D iris scanner from a single image using convolutional neural networks. IEEE Access 2020, 8, 98584–98599.

- Benalcazar, D.P.; Bastias, D.; Perez, C.A.; Bowyer, K.W. A 3D iris scanner from multiple 2D visible light images. IEEE Access 2019, 7, 61461–61472.

- López-Fernández, D.; Madrid-Cuevas, F.J.; Carmona-Poyato, A.; Muñoz-Salinas, R.; Medina-Carnicer, R. Entropy volumes for viewpoint-independent gait recognition. Mach. Vis. Appl. 2015, 26, 1079–1094.

- López-Fernández, D.; Madrid-Cuevas, F.J.; Carmona-Poyato, A.; Marín-Jiménez, M.J.; Muñoz-Salinas, R.; Medina-Carnicer, R. Independent gait recognition through morphological descriptions of 3D human reconstructions. Image Vis. Comput. 2016, 48, 1–13.

- Imoto, D.; Kurosawa, K.; Honma, M.; Yokota, R.; Hirabayashi, M.; Hawai, Y. Model-Based Interpolation for Continuous Human Silhouette Images by Height-Constraint Assumption. In Proceedings of the 2020 4th International Conference on Vision, Image and Signal Processing, Bangkok, Thailand, 9–11 December 2020; pp. 1–11.

- Lorkiewicz-Muszyńska, D.; Kociemba, W.; Żaba, C.; Łabęcka, M.; Koralewska-Kordel, M.; Abreu-Głowacka, M.; Przystańska, A. The conclusive role of postmortem computed tomography (CT) of the skull and computer-assisted superimposition in identification of an unknown body. Int. J. Leg. Med. 2013, 127, 653–660.

- Svoboda, J.; Klubal, O.; Drahanský, M. Biometric recognition of people by 3D hand geometry. In Proceedings of the International Conference on Digital Technologies 2013, Zilina, Slovakia, 29–31 May 2013; pp. 137–141.

- Chu, B.; Romdhani, S.; Chen, L. 3D-aided face recognition robust to expression and pose variations. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 1899–1906.

- Parkhi, O.M.; Vedaldi, A.; Zisserman, A. Deep Face Recognition. In Proceedings of the British Machine Vision Conference, Swansea, UK, 7–10 September 2015; pp. 41.1–41.12.

- Victor, B.; Bowyer, K.; Sarkar, S. An evaluation of face and ear biometrics. In Proceedings of the 2002 International Conference on Pattern Recognition, Quebec City, QC, Canada, 11–15 August 2002; Volume 1, pp. 429–432.

- Abdi, H.; Williams, L.J. Principal component analysis. Wiley Interdiscip. Rev. Comput. Stat. 2010, 2, 433–459.

- Fisher, R.A. The use of multiple measurements in taxonomic problems. Ann. Eugen. 1936, 7, 179–188.

- Ghojogh, B.; Karray, F.; Crowley, M. Fisher and kernel Fisher discriminant analysis: Tutorial. arXiv 2019, arXiv:1906.09436.

- Li, C.; Mu, Z.; Zhang, F.; Wang, S. A novel 3D ear reconstruction method using a single image. In Proceedings of the 10th World Congress on Intelligent Control and Automation, Beijing, China, 6–8 July 2012; pp. 4891–4896.

- Blanz, V.; Vetter, T. A morphable model for the synthesis of 3D faces. In Proceedings of the 26th Annual Conference on Computer Graphics and Interactive Techniques, Los Angeles, CA, USA, 8–13 August 1999; pp. 187–194.

- Daugman, J. How iris recognition works. In The Essential Guide to Image Processing; Elsevier: Amsterdam, The Netherlands, 2009; pp. 715–739.

- Moulon, P.; Bezzi, A. Python Photogrammetry Toolbox: A Free Solution for Three-Dimensional Documentation; ArcheoFoss: Napoli, Italy, 2011; pp. 1–12.

- Zheng, C.; Cham, T.J.; Cai, J. T2net: Synthetic-to-realistic translation for solving single-image depth estimation tasks. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 767–783.

- Alhashim, I.; Wonka, P. High quality monocular depth estimation via transfer learning. arXiv 2018, arXiv:1812.11941.

- Whitaker, L.A. Anthropometry of the Head and Face in Medicine. Plast. Reconstr. Surg. 1983, 71, 144–145.

- Vanezis, P.; Blowes, R.; Linney, A.; Tan, A.; Richards, R.; Neave, R. Application of 3-D computer graphics for facial reconstruction and comparison with sculpting techniques. Forensic Sci. Int. 1989, 42, 69–84.

- Vandermeulen, D.; Claes, P.; De Greef, S.; Willems, G.; Clement, J.; Suetens, P. Automated facial reconstruction. In Craniofacial Identification; Cambridge University Press: Cambridge, UK, 2012; Volume 203.

- Vezzetti, E.; Marcolin, F.; Tornincasa, S.; Moos, S.; Violante, M.G.; Dagnes, N.; Monno, G.; Uva, A.E.; Fiorentino, M. Facial landmarks for forensic skull-based 3D face reconstruction: A literature review. In International Conference on Augmented Reality, Virtual Reality and Computer Graphics; Springer: Cham, Switzerland, 2016; pp. 172–180.

- Yin, X.; Zhu, Y.; Hu, J. A Survey on 2D and 3D Contactless Fingerprint Biometrics: A Taxonomy, Review, and Future Directions. IEEE Open J. Comput. Soc. 2021, 2, 370–381.

- Gorthi, S.S.; Rastogi, P. Fringe projection techniques: Whither we are? Opt. Lasers Eng. 2010, 48, 133–140.

- Stoykova, E.; Nazarova, D.; Berberova, N.; Gotchev, A. Performance of intensity-based non-normalized pointwise algorithms in dynamic speckle analysis. Opt. Express 2015, 23, 25128–25142.

- Lowe, D.G. Distinctive image features from scale-invariant keypoints. Int. J. Comput. Vis. 2004, 60, 91–110.

- Fischler, M.A.; Bolles, R.C. Random sample consensus: A paradigm for model fitting with applications to image analysis and automated cartography. Commun. ACM 1981, 24, 381–395.

- Storn, R.; Price, K. Differential evolution–a simple and efficient heuristic for global optimization over continuous spaces. J. Glob. Optim. 1997, 11, 341–359.

- Qin, A.K.; Huang, V.L.; Suganthan, P.N. Differential evolution algorithm with strategy adaptation for global numerical optimization. IEEE Trans. Evol. Comput. 2008, 13, 398–417.

- Zhu, Y.; Yin, X.; Jia, X.; Hu, J. Latent fingerprint segmentation based on convolutional neural networks. In Proceedings of the 2017 IEEE Workshop on Information Forensics and Security (WIFS), Rennes, France, 4–7 December 2017; pp. 1–6.

- Chikkerur, S.; Cartwright, A.N.; Govindaraju, V. Fingerprint enhancement using STFT analysis. Pattern Recognit. 2007, 40, 198–211.

- Sidiropoulos, G.K.; Kiratsa, P.; Chatzipetrou, P.; Papakostas, G.A. Feature Extraction for Finger-Vein-Based Identity Recognition. J. Imaging 2021, 7, 89.

- Zhang, Z.; Wang, M. A Simple and Efficient Method for Finger Vein Recognition. Sensors 2022, 22, 2234.

- Asaari, M.S.M.; Suandi, S.A.; Rosdi, B.A. Fusion of band limited phase only correlation and width centroid contour distance for finger based biometrics. Expert Syst. Appl. 2014, 41, 3367–3382.

- Zuiderveld, K. Contrast limited adaptive histogram equalization. In Graphics Gems; Academic Press Professional, Inc.: San Diego, CA, USA, 1994; pp. 474–485.

- Cutting, J.E.; Kozlowski, L.T. Recognizing friends by their walk: Gait perception without familiarity cues. Bull. Psychon. Soc. 1977, 9, 353–356.

- Horprasert, T.; Harwood, D.; Davis, L.S. A statistical approach for real-time robust background subtraction and shadow detection. In Proceedings of the IEEE ICCV’99 FRAME-RATE Workshop, Corfu, Greece, 20–25 September 1999; pp. 1–19.

- Koestinger, M.; Wohlhart, P.; Roth, P.M.; Bischof, H. Annotated facial landmarks in the wild: A large-scale, real-world database for facial landmark localization. In Proceedings of the 2011 IEEE International Conference on Computer Vision Workshops (ICCV Workshops), Barcelona, Spain, 6–13 November 2011; pp. 2144–2151.

- Erdogmus, N.; Marcel, S. Spoofing 2D face recognition systems with 3D masks. In Proceedings of the 2013 International Conference of the BIOSIG Special Interest Group (BIOSIG), Darmstadt, Germany, 5–6 September 2013; pp. 1–8.

- Savran, A.; Alyüz, N.; Dibeklioğlu, H.; Çeliktutan, O.; Gökberk, B.; Sankur, B.; Akarun, L. Bosphorus database for 3D face analysis. In European Workshop on Biometrics and Identity Management; Springer: Berlin/Heidelberg, Germany, 2008; pp. 47–56.

- Yin, L.; Wei, X.; Sun, Y.; Wang, J.; Rosato, M.J. A 3D facial expression database for facial behavior research. In Proceedings of the 7th International Conference on Automatic Face and Gesture Recognition (FGR06), Southampton, UK, 10–12 April 2006; pp. 211–216.

- Zhang, X.; Yin, L.; Cohn, J.F.; Canavan, S.; Reale, M.; Horowitz, A.; Liu, P. A high-resolution spontaneous 3D dynamic facial expression database. In Proceedings of the 2013 10th IEEE International Conference and Workshops on Automatic Face and Gesture Recognition (FG), Shanghai, China, 22–26 April 2013; pp. 1–6.

- Phillips, P.J.; Moon, H.; Rizvi, S.A.; Rauss, P.J. The FERET evaluation methodology for face-recognition algorithms. IEEE Trans. Pattern Anal. Mach. Intell. 2000, 22, 1090–1104.

- Phillips, P.J.; Scruggs, W.T.; O’Toole, A.J.; Flynn, P.J.; Bowyer, K.W.; Schott, C.L.; Sharpe, M. FRVT 2006 and ICE 2006 large-scale experimental results. IEEE Trans. Pattern Anal. Mach. Intell. 2009, 32, 831–846.

- Tabula Rasa (Trusted Biometrics under Spoofing Attacks). Available online: http://www.tabularasa-euproject.org/contact-info (accessed on 16 February 2022).

- Zafeiriou, S.; Hansen, M.; Atkinson, G.; Argyriou, V.; Petrou, M.; Smith, M.; Smith, L. The photoface database. In Proceedings of the CVPR 2011 WORKSHOPS, Colorado Springs, CO, USA, 20–25 June 2011; pp. 132–139.

- Labeled Faces in the Wild. Available online: http://vis-www.cs.umass.edu/lfw/ (accessed on 16 February 2022).

- Wolf, L.; Hassner, T.; Maoz, I. Face recognition in unconstrained videos with matched background similarity. In Proceedings of the CVPR 2011, Colorado Springs, CO, USA, 20–25 June 2011; pp. 529–534.

- Sim, T.; Baker, S.; Bsat, M. The CMU pose, illumination, and expression (PIE) database. In Proceedings of the Fifth IEEE International Conference on Automatic Face Gesture Recognition, Washington, DC, USA, 21 May 2002; pp. 53–58.

- Toderici, G.; Evangelopoulos, G.; Fang, T.; Theoharis, T.; Kakadiaris, I.A. UHDB11 database for 3D-2D face recognition. In Pacific-Rim Symposium on Image and Video Technology; Springer: Berlin/Heidelberg, Germany, 2013; pp. 73–86.

- Kumar, A.; Wu, C. Automated human identification using ear imaging. Pattern Recognit. 2012, 45, 956–968.

- AMI Ear Database. Available online: https://ctim.ulpgc.es/research_works/ami_ear_database/ (accessed on 19 February 2022).

- Chen, H.; Bhanu, B. Human ear recognition in 3D. IEEE Trans. Pattern Anal. Mach. Intell. 2007, 29, 718–737.

- Yan, P.; Bowyer, K.W. Biometric recognition using 3D ear shape. IEEE Trans. Pattern Anal. Mach. Intell. 2007, 29, 1297–1308.

- Messer, K.; Matas, J.; Kittler, J.; Luettin, J.; Maitre, G. XM2VTSDB: The extended M2VTS database. In Proceedings of the Second International Conference on Audio and Video-Based Biometric Person Authentication, Washington, DC, USA, 22–24 March 1999; Volume 964, pp. 965–966.

- López-Fernández, D.; Madrid-Cuevas, F.J.; Carmona-Poyato, Á.; Marín-Jiménez, M.J.; Muñoz-Salinas, R. The AVA multi-view dataset for gait recognition. In International Workshop on Activity Monitoring by Multiple Distributed Sensing; Springer: Cham, Switzerland, 2014; pp. 26–39.

- Iwashita, Y.; Ogawara, K.; Kurazume, R. Identification of people walking along curved trajectories. Pattern Recognit. Lett. 2014, 48, 60–69.

- Gkalelis, N.; Kim, H.; Hilton, A.; Nikolaidis, N.; Pitas, I. The i3dpost multi-view and 3d human action/interaction database. In Proceedings of the 2009 Conference for Visual Media Production, London, UK, 12–13 November 2009; pp. 159–168.

- Singh, S.; Velastin, S.A.; Ragheb, H. Muhavi: A multicamera human action video dataset for the evaluation of action recognition methods. In Proceedings of the 2010 7th IEEE International Conference on Advanced Video and Signal Based Surveillance, Boston, MA, USA, 29 August–1 September 2010; pp. 48–55.

- Weinland, D.; Ronfard, R.; Boyer, E. Free viewpoint action recognition using motion history volumes. Comput. Vis. Image Underst. 2006, 104, 249–257.

- IIT Delhi Iris Database. Available online: https://www4.comp.polyu.edu.hk/~csajaykr/IITD/Database_Iris.htm (accessed on 20 February 2022).

- The Hong Kong Polytechnic University 3D Fingerprint Images Database. Available online: https://tinyurl.com/4nmb2keb (accessed on 19 February 2022).

- Tharewal, S.; Malche, T.; Tiwari, P.K.; Jabarulla, M.Y.; Alnuaim, A.A.; Mostafa, A.M.; Ullah, M.A. Score-Level Fusion of 3D Face and 3D Ear for Multimodal Biometric Human Recognition. Comput. Intell. Neurosci. 2022, 2022, 3019194.

| Biometric | Major Category | Percentage (%) | References |
|---|---|---|---|
| Facial | Face | 59.26 | [42,43,44,45,46,47,48,49,50,51,52,53,54,55,56,57,58,59,60,61,62,63,64,65,66,67,68,69,70,71,58,71] |
| Fingerprint | Hand | 11.11 | [72,73,74,75,76,77] |
| Finger Vein | Hand | 7.41 | [78,79,80,81] |
| Ear | Face | 5.56 | [45,82,83] |
| Iris | Face | 5.56 | [84,85,86] |
| Gait | Gait | 5.56 | [87,88,89] |
| Skull | Face | 3.70 | [43,90] |
| Palm | Hand | 1.85 | [91] |

| Dataset | Biometric | Number of Images | Classes | Year | Reference |
|---|---|---|---|---|---|
| AFLW | Facial | 21,997 | 25,993 | 2011 | [123] |
| 3D-MAD | Facial | 76,500 | 17 | 2013 | [124] |
| Bosphorus | Facial | 4666 | 105 | 2008 | [125] |
| BU-3DFE | Facial | 2500 | 100 | 2006 | [126] |
| BU-4DFE | Facial | 60,600 | 101 | 2013 | [127] |
| FERET | Facial | 14,126 | 1199 | 2000 | [128] |
| FRGC | Facial | 50,000 | 12,500 | 2004 | [129] |
| Morpho | Facial | 200 | 20 | 2013 | [130] |
| The Photoface Database | Facial | 7356 | 261 | 2011 | [131] |
| LFW | Facial | 13,233 | 5749 | 2019 | [132] |
| YouTube Faces | Facial | 3245 (videos) | 1595 | 2011 | [133] |
| PIE | Facial | 75,000 | 337 | 2002 | [134] |
| UHDB11 | Facial | 1656 | 23 | 2013 | [135] |
| IIT-Kanpur | Ear | 465 | 125 | 2012 | [136] |
| AMI | Ear | 700 | 100 | 2008 | [137] |
| UCR | Ear | 902 | 155 | 2007 | [138] |
| UND | Ear | 1686 | 415 | 2007 | [139] |
| XM2VTS | Ear | 1180 (videos) | 295 | 2013 | [140] |
| AVAMVG | Gait | 200 (videos) | 20 | 2014 | [141] |
| KY4D | Gait | 168 (videos) | 42 | 2014 | [142] |
| i3DPost | Gait | 768 (videos) | 8 | 2009 | [143] |
| MuHAVi | Gait | 136 (videos) | 14 | 2010 | [144] |
| IXMAS | Gait | 550 (videos) | 10 | 2006 | [145] |
| SCUT LFMB-3DPVFV | Finger Vein | 16,848 | 702 | 2022 | [81] |
| IIT Iris Database | Iris | 1120 | 224 | 2007 | [146] |
| Hong Kong Polytechnic 3D | Fingerprint | 1560 | 260 | 2016 | [147] |

| Title | Biometric | Score | Dataset | Year | Reference |
|---|---|---|---|---|---|
| Verifying kinship from RGB-D face data | Facial | 95% (accuracy) | Kin3D | 2020 | [59] |
| A novel 3D ear reconstruction method using a single image | Ear | manual | UND | 2012 | [98] |
| A 3D iris scanner from a single image using convolutional neural networks | Iris | 99.8% (accuracy) | 98,520 iris | 2020 | [85] |
| An accuracy assessment of forensic computerized facial reconstruction employing cone-beam computed tomography from live subjects | Skull | 0.31 mm (error) | 3 humans | 2012 | [43] |
| 3D fingerprint recognition based on ridge–valley guided 3D reconstruction and 3D topology polymer feature extraction | Fingerprint | 98% (accuracy) | DB1, DB2 | 2019 | [77] |
| Endowing rotation invariance for 3D finger shape and vein verification | Finger Vein | 2.61 (ER%) | 3DPVFV | 2022 | [81] |
| Biometric recognition of people by 3D hand geometry | Palm | 0.0018 (RMSE) | 3 palms | 2013 | [91] |
| Model-based interpolation for continuous human silhouette images by height-constraint assumption | Gait | 95% | KY4D | 2020 | [89] |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Samatas, G.G.; Papakostas, G.A. Biometrics: Going 3D. Sensors 2022, 22, 6364. https://doi.org/10.3390/s22176364