Sensor Verification and Analytical Validation of Algorithms to Measure Gait and Balance and Pronation/Supination in Healthy Volunteers

Abstract

1. Introduction

2. Materials and Methods

2.1. Study Design

2.1.1. Study Setup

2.1.2. Sample Size Estimation

- Anticipated ICC > 0.75 (good agreement);

- Anticipated ICC plus the half-width of the 95% CI ≤ 1;

- Anticipated ICC minus the half-width of the 95% CI ≥ 0.75.

- Three or four repeats are required for the ICC upper bound to be less than one;

- To retain the lower bound, more than 4 repeats are required if the ICC is less than 0.9.
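The criteria above can be checked numerically. The following is a minimal sketch, not the authors' calculation: it assumes the large-sample variance approximation for a one-way ICC estimate, Var ≈ 2(1−ρ)²[1+(k−1)ρ]² / [k(k−1)(n−1)], together with a normal-approximation 95% CI. The function names and the subject count of 10 are hypothetical and are not taken from the study.

```python
import math


def icc_ci_half_width(icc, n_subjects, n_repeats, z=1.96):
    """Approximate half-width of the 95% CI around an anticipated ICC.

    Assumption: large-sample variance of a one-way ICC estimate,
    2(1-rho)^2 [1+(k-1)rho]^2 / (k (k-1) (n-1)), with a normal approximation.
    """
    k = n_repeats
    variance = (2 * (1 - icc) ** 2 * (1 + (k - 1) * icc) ** 2
                / (k * (k - 1) * (n_subjects - 1)))
    return z * math.sqrt(variance)


def meets_criteria(icc, n_subjects, n_repeats, threshold=0.75):
    """Check the three listed criteria for one design."""
    half_width = icc_ci_half_width(icc, n_subjects, n_repeats)
    return (icc > threshold
            and icc + half_width <= 1.0
            and icc - half_width >= threshold)


if __name__ == "__main__":
    n_subjects = 10  # hypothetical value, chosen only for illustration
    for icc in (0.80, 0.90, 0.95):
        for repeats in range(2, 7):
            ok = meets_criteria(icc, n_subjects, repeats)
            print(f"ICC={icc:.2f} repeats={repeats} criteria met: {ok}")
```

Under this approximation the half-width shrinks as repeats are added, so both the upper-bound and lower-bound criteria become easier to satisfy with more repeats per subject.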

2.2. Materials

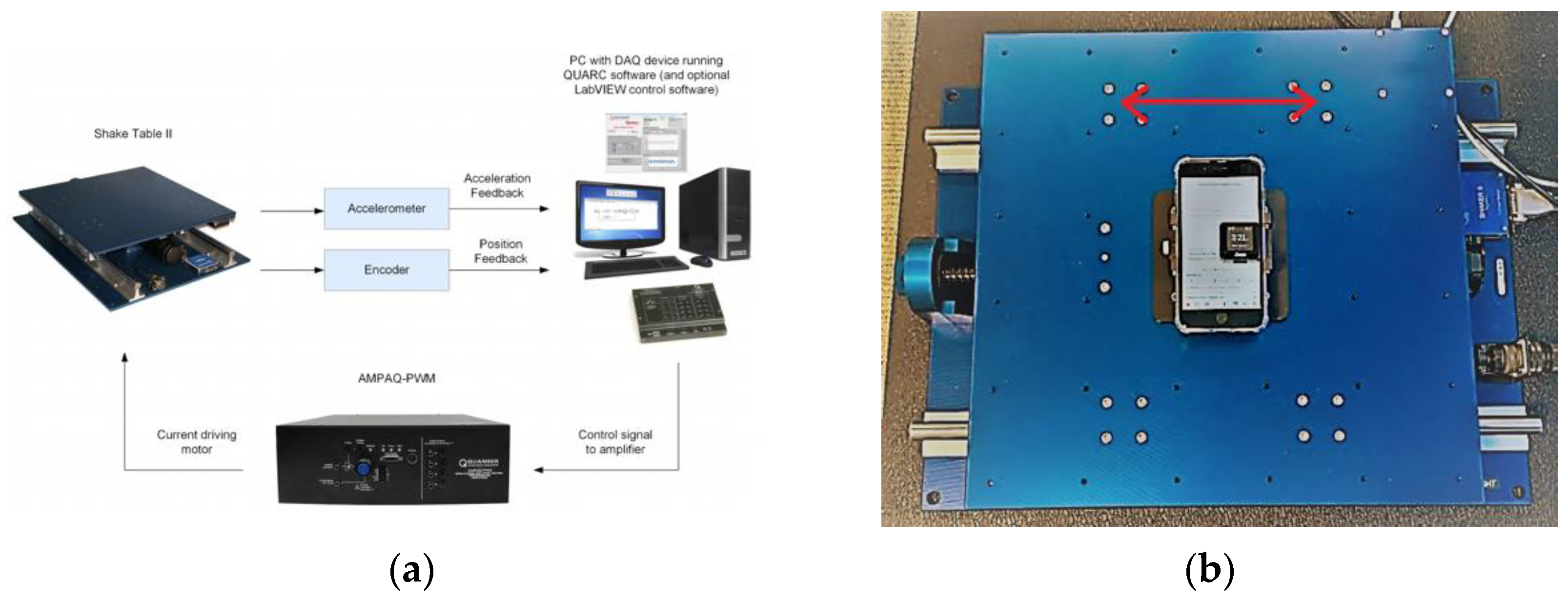

2.2.1. Hardware

2.2.2. Software

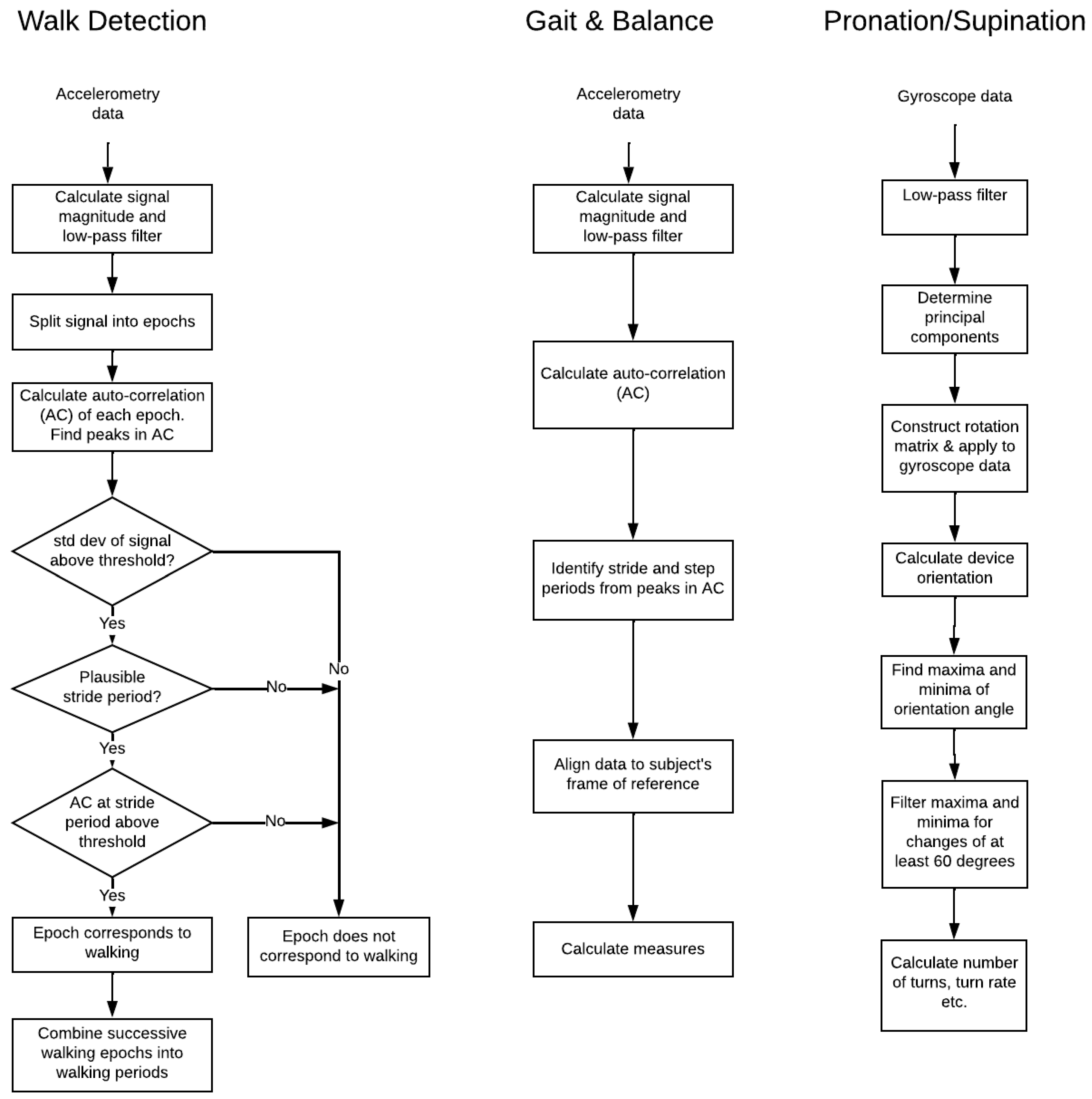

2.2.3. Algorithms

2.2.4. Statistical Analysis

2.3. Technical Verification

2.4. Analytical Validation

2.4.1. iPhone Walking Task

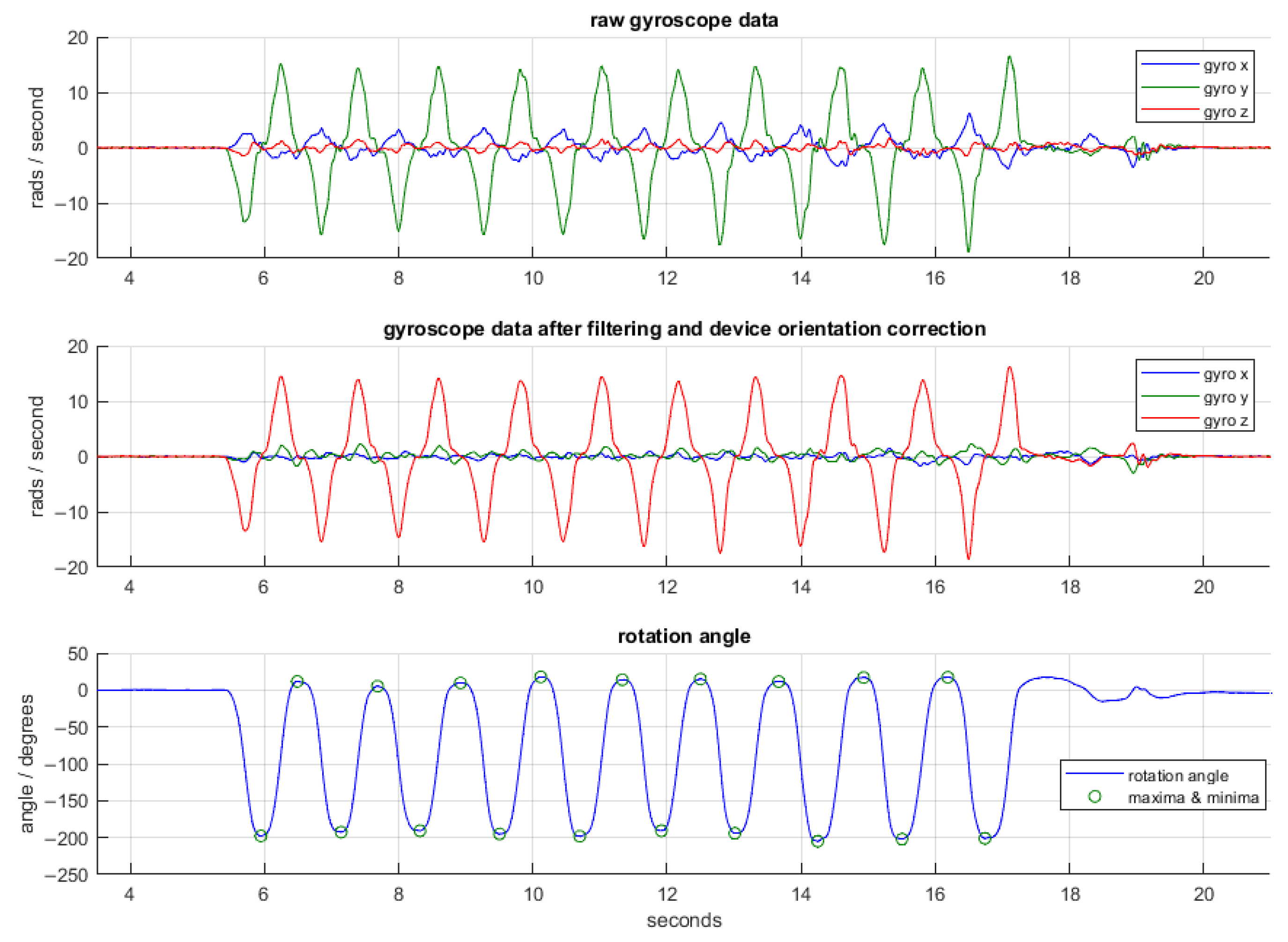

2.4.2. iPhone Pronation/Supination Task

2.4.3. ActiGraph Passive Walking Detection and Gait

2.5. Data Processing

3. Results

3.1. Technical Verification

3.2. Analytical Validation

3.2.1. iPhone Walking Task

3.2.2. iPhone Pronation/Supination Task

3.2.3. ActiGraph Passive Walk Detection and Gait Measures

4. Discussion

5. Conclusions

6. Patents

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Jankovic, J. Parkinson’s disease: Clinical features and diagnosis. J. Neurol. Neurosurg. Psychiatry 2008, 79, 368–376.

- Goetz, C.G.; Tilley, B.C.; Shaftman, S.R.; Stebbins, G.T.; Fahn, S.; Martinez-Martin, P.; Poewe, W.; Sampaio, C.; Stern, M.B.; Dodel, R.; et al. Movement Disorder Society-sponsored revision of the Unified Parkinson’s Disease Rating Scale (MDS-UPDRS): Scale presentation and clinimetric testing results. Mov. Disord. 2008, 23, 2129–2170.

- Bot, B.M.; Suver, C.; Neto, E.C.; Kellen, M.; Klein, A.; Bare, C.; Doerr, M.; Pratap, A.; Wilbanks, J.; Dorsey, E.R.; et al. The mPower study, Parkinson disease mobile data collected using ResearchKit. Sci. Data 2016, 3, 160011.

- Lipsmeier, F.; Taylor, K.I.; Kilchenmann, T.; Wolf, D.; Scotland, A.; Schjodt-Eriksen, J.; Cheng, W.Y.; Fernandez-Garcia, I.; Siebourg-Polster, J.; Jin, L.; et al. Evaluation of smartphone-based testing to generate exploratory outcome measures in a phase 1 Parkinson’s disease clinical trial. Mov. Disord. 2018, 33, 1287–1297.

- Burq, M.; Rainaldi, E.; Ho, K.C.; Chen, C.; Bloem, B.R.; Evers, L.J.W.; Helmich, R.C.; Myers, L.; Marks, W.J., Jr.; Kapur, R.; et al. Virtual exam for Parkinson’s disease enables frequent and reliable remote measurements of motor function. NPJ Digit. Med. 2022, 5, 65.

- Barrachina-Fernández, M.; Maitín, A.M.; Sánchez-Ávila, C.; Romero, J.P. Wearable Technology to Detect Motor Fluctuations in Parkinson’s Disease Patients: Current State and Challenges. Sensors 2021, 21, 4188.

- Goldsack, J.C.; Coravos, A.; Bakker, J.P.; Bent, B.; Dowling, A.V.; Fitzer-Attas, C.; Godfrey, A.; Godino, J.G.; Gujar, N.; Izmailova, E.; et al. Verification, analytical validation, and clinical validation (V3): The foundation of determining fit-for-purpose for Biometric Monitoring Technologies (BioMeTs). NPJ Digit. Med. 2020, 3, 55.

- Food and Drug Administration. Software as a Medical Device (SAMD): Clinical Evaluation—Guidance for Industry and Food and Drug Administration Staff. 2017. Available online: https://www.fda.gov/media/100714/download (accessed on 8 June 2022).

- Food and Drug Administration. Guidance for Industry Patient-Reported Outcome Measures: Use in Medical Product Development to Support Labeling Claims. 2009. Available online: https://www.fda.gov/media/77832/download (accessed on 9 June 2022).

- Food and Drug Administration. Digital Health Technologies for Remote Data Acquisition in Clinical Investigations—Guidance for Industry, Investigators, and Other Stakeholders. 2022. Available online: https://www.fda.gov/media/155022/download (accessed on 8 June 2022).

- Pearlmutter, A.; Nantes, J.; Giladi, N.; Horak, F.; Alcalay, R.; Hausdorff, J.; Simuni, T.; Little, A.; Jha, A.; Bozzi, S.; et al. Clinical Trial Digital Endpoint Development: Patient and Provider Perspectives on the Most Impactful Functional Aspects of Parkinson’s Disease. In Proceedings of the MDS Virtual Congress, Virtual, 17–22 September 2021.

- Pearlmutter, A.; Wong, N.; Levin, M.; Zheng, R.; Ellis, R.; Kelly, P.; Peterschmitt, M. Parkinson’s Disease Functional Impacts Digital Instrument (PD-FIDI): Studies Preceding Clinical Validation. In Proceedings of the MDS Virtual Congress, Virtual, 17–22 September 2021.

- Shoukri, M.M.; Asyali, M.H.; Donner, A. Sample size requirements for the design of reliability study: Review and new results. Stat. Methods Med. Res. 2004, 4, 251–271.

- Siderowf, A.; McDermott, M.; Kieburtz, K.; Blindauer, K.; Plumb, S.; Shoulson, I. Test–retest reliability of the unified Parkinson’s disease rating scale in patients with early Parkinson’s disease: Results from a multicenter clinical trial. Mov. Disord. 2002, 17, 758–763.

- Apple iPhone 8 Plus Technical Specification. Available online: https://support.apple.com/kb/sp768?locale=en_US (accessed on 2 June 2022).

- ActiGraph GT9X Link. Available online: https://actigraphcorp.com/actigraph-link/ (accessed on 2 June 2022).

- Quanser Shake Table II. Available online: https://www.quanser.com/products/shake-table-ii/ (accessed on 2 June 2022).

- Quanser QUARC Software. Available online: https://www.quanser.com/products/quarc-real-time-control-software/ (accessed on 2 June 2022).

- Umek, A.; Kos, A. Validation of smartphone gyroscopes for mobile biofeedback applications. Pers. Ubiquitous Comput. 2016, 20, 657–666.

- Matlab R2019b Release. Available online: https://www.mathworks.com/products/new_products/release2019b.html (accessed on 2 June 2022).

- SensorLog Application. Apple App Store. Available online: https://apps.apple.com/us/app/sensorlog/id388014573 (accessed on 2 June 2022).

- ActiGraph CenterPoint Platform. ActiGraph Corporation. Available online: https://actigraphcorp.com/centrepoint/ (accessed on 2 June 2022).

- ActiGraph ActiLife Software. ActiGraph Corporation. Available online: https://actigraphcorp.com/actilife/ (accessed on 2 June 2022).

- Python 3.6.5 Release Specification. Available online: https://www.python.org/downloads/release/python-365/ (accessed on 2 June 2022).

- Watson, P.F.; Petrie, A. Method agreement analysis: A review of correct methodology. Theriogenology 2010, 73, 1167–1179.

- Koo, T.K.; Li, M.Y. A Guideline of Selecting and Reporting Intraclass Correlation Coefficients for Reliability Research. J. Chiropr. Med. 2016, 15, 155–163, Erratum in J. Chiropr. Med. 2017, 16, 346.

- Quanser Installation Qualification Manual. Available online: https://quanserinc.app.box.com/s/35ek73astl83h4z42q5r2dp69kk8r74w/file/168369015496 (accessed on 2 June 2022).

- Calzetti, S.; Baratti, M.; Gresty, M.; Findley, L. Frequency/amplitude characteristics of postural tremor of the hands in a population of patients with bilateral essential tremor: Implications for the classification and mechanism of essential tremor. J. Neurol. Neurosurg. Psychiatry 1987, 50, 561–567.

- Jankovic, J.; Schwartz, K.S.; Ondo, W. Re-emergent tremor of Parkinson’s disease. J. Neurol. Neurosurg. Psychiatry 1999, 67, 646–650.

- Sigcha, L.; Pavon, I.; Costa, N.; Costa, S.; Gago, M.; Arezes, P.; Lopez, J.M.; Arcas, G. Automatic Resting Tremor Assessment in Parkinson’s Disease Using Smartwatches and Multitask Convolutional Neural Networks. Sensors 2021, 21, 291.

- Kiprijanovska, I.; Gjoreski, H.; Gams, M. Detection of Gait Abnormalities for Fall Risk Assessment Using Wrist-Worn Inertial Sensors and Deep Learning. Sensors 2020, 20, 5373.

- Evenson, K.R.; Goto, M.M.; Furberg, R.D. Systematic review of the validity and reliability of consumer-wearable activity trackers. Int. J. Behav. Nutr. Phys. Act. 2015, 12, 159.

- Rodriguez-Molinero, A.; Perez-Lopez, C.; Sama, A.; Rodriguez-Martin, D.; Alcaine, S.; Mestre, B.; Quispe, P.; Giuliani, B.; Vainstein, G.; Browne, P.; et al. Estimating dyskinesia severity in Parkinson’s disease by using a waist-worn sensor: Concurrent validity study. Sci. Rep. 2019, 9, 13434.

- Hssayeni, M.D.; Jimenez-Shahed, J.; Burack, M.A.; Ghoraani, B. Wearable Sensors for Estimation of Parkinsonian Tremor Severity during Free Body Movements. Sensors 2019, 19, 4215.

- Tedesco, S.; Sica, M.; Ancillao, A.; Timmons, S.; Barton, J.; O’Flynn, B. Accuracy of consumer-level and research-grade activity trackers in ambulatory settings in older adults. PLOS ONE 2019, 14, e0216891.

- Qualification Opinion on Stride Velocity 95th Centile as a Secondary Endpoint in Duchenne Muscular Dystrophy Measured by a valid and Suitable Wearable Device. Available online: https://www.ema.europa.eu/en/documents/scientific-guideline/qualification-opinion-stride-velocity-95th-centile-secondary-endpoint-duchenne-muscular-dystrophy_en.pdf (accessed on 9 August 2022).

| Study | Study Objectives | Key Findings | Development Framework Alignment |
|---|---|---|---|
| Bot et al., 2016. The mPower study, Parkinson disease mobile data collected using ResearchKit [3]. | An observational smartphone-based study to evaluate the feasibility of remotely collecting frequent information about the daily changes in symptom severity and their sensitivity to medication in PD. | Established a database of sensor data collected in PD patients plus candidate disease features for several tasks including memory, finger tap, voice and walking. Data subsequently hosted for other approved researchers to access. | The data were derived from Apple iPhone devices with proprietary technical validation. Frameworks available at the time were not leveraged in the research. |
| Lipsmeier et al., 2018. Evaluation of smartphone-based testing to generate exploratory outcome measures in a phase 1 Parkinson’s disease clinical trial [4]. | The study assessed the feasibility, reliability and clinical validity of smartphone-based digital biomarkers of PD in a clinical trial setting. | Acceptable adherence among study participants. Sensor-based features showed moderate-to-excellent test–retest reliability (average ICC 0.84). All active test (sustained phonation, rest tremor, postural tremor, finger-tapping, balance and gait) features, except sustained phonation, were significantly related to corresponding MDS-UPDRS clinical severity ratings. | Sensor verification was not published. Analytical validation of accuracy of data processing algorithms was not established. The study’s main focus was clinical validation to compare sensor-based features with MDS-UPDRS in subjects with PD and healthy controls. |
| Barrachina-Fernández et al., 2021. Wearable technology to detect motor fluctuations in Parkinson’s disease patients: current state and challenges [6]. | A systematic review of the utilization of sensors for identifying motor fluctuations in PD patients (on and off states) and the application of machine learning techniques. | The study highlighted that the two most influential factors in the good performance of the classification problem are the type of features utilized and the type of model. | The studies selected for review did not follow technology evaluation according to frameworks required for assessing technology use in clinical trials. The authors do not consider technology evaluation or analytical validation of measures as a condition of inclusion in the analysis. |
| Burq et al., 2022. Virtual exam for Parkinson’s disease enables frequent and reliable remote measurements of motor function [5]. | Clinical evaluation of smartwatch-based active assessment that enables unsupervised measurement of motor signs of PD. | The study established patient engagement and usability, in addition to comparing the smartwatch-based modern features with MDS-UPDRS scale items. | Sensor verification and analytical validation of data processing algorithms were not established. |
| Sensor verification and analytical validation of algorithms to measure gait and balance and pronation/supination in healthy volunteers [current manuscript]. | Technical verification of accelerometers in an Apple iPhone 8 Plus and ActiGraph GT9X versus an oscillating table; analytical validation of software tasks for walking and pronation/supination in healthy volunteers versus human raters. | The study followed the V3 framework and ascertained that selected sensors and algorithms processing accelerometry data are accurate and appropriate to use in clinical validation studies in patients with Parkinson’s disease. | This study followed the framework and FDA guidance on DHT use for remote data collection in clinical investigations. This is a preliminary step to ascertain technology performance prior to testing in patients. |

| Algorithm | Measure |
|---|---|
| Walk Detection | |
| Gait and Balance | |
| Pronation/Supination | |

| Test Type | Test Configuration |
|---|---|
| Analytical Validity | |
| Operational Tolerance | |

| Test Type | Test Configuration |
|---|---|
| Analytical Validity | This test configuration was completed for both unsupervised and supervised completion of the task as instructed. |
| Operational Tolerance | The test was completed for each of the following configurations: |

| Test | Test Configuration |
|---|---|
| Analytical Validity 10 s walk | |
| Analytical Validity 20 s walk | |

| Device | Nominal Peak Acceleration | Percent of ICC > 0.75 |
|---|---|---|
| iPhone | 0.005 g to 3.261 g | 99.4% |
| ActiGraph | ≥ 0.1 g | 91.9% |
| ActiGraph | < 0.1 g | 2.3% |
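For readers relating the nominal peak accelerations above to table settings: if the oscillating table is driven as a pure sinusoid, peak acceleration follows from displacement amplitude A and frequency f as A(2πf)². The sketch below illustrates that relation only. The sinusoidal-motion assumption, the function name, and the example amplitude and frequency are all hypothetical and are not the study's test settings.

```python
import math

STANDARD_GRAVITY = 9.80665  # m/s^2, conventional value of 1 g


def peak_acceleration_g(amplitude_m, frequency_hz):
    """Peak acceleration (in g) of sinusoidal motion x(t) = A sin(2*pi*f*t).

    Assumption: ideal sinusoidal displacement with no harmonics or noise.
    """
    omega = 2 * math.pi * frequency_hz  # angular frequency in rad/s
    return amplitude_m * omega ** 2 / STANDARD_GRAVITY


if __name__ == "__main__":
    # Hypothetical setting: 10 mm amplitude at 5 Hz.
    print(f"{peak_acceleration_g(0.010, 5.0):.3f} g")
```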

| Type | Test | Duration (s) | Distance (m) | Steps (Count) | Speed (m/s) | Stride Period (s) |
|---|---|---|---|---|---|---|
| AV | 5 s Walk | 0.496 * | 0.856 | 0.838 | 0.730 | 0.334 |
| AV | 10 s Walk | −0.112 * | 0.948 | 0.873 | 0.942 | 0.893 |
| AV | 15 s Walk | 0.299 * | 0.933 | 0.932 | 0.950 | 0.892 |
| AV | 20 s Walk | −0.206 * | 0.944 | 0.976 | 0.944 | 0.955 |
| AV | 5–20 s Combined | 0.989 | 0.987 | 0.992 | 0.754 | 0.593 |
| OT | Loose Pocket | 0.133 * | 0.926 | 0.874 | 0.840 | 0.889 |
| OT | Shoulder Bag | 0.041 * | 0.914 | 0.889 | 0.892 | 0.844 |
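The values tabulated above are intraclass correlation coefficients. The surviving text does not state which ICC form was used, so the sketch below assumes ICC(2,1) (two-way random effects, absolute agreement, single measurement), one of the forms described in the Koo and Li guideline listed in the references. The function name and the input layout (one row per trial, one column per measurement method) are hypothetical.

```python
def icc_2_1(ratings):
    """ICC(2,1) from a table with one row per trial and one column per method.

    Assumption: two-way random effects, absolute agreement, single measurement,
    computed from the standard two-way ANOVA mean squares.
    """
    n = len(ratings)        # number of trials (rows)
    k = len(ratings[0])     # number of methods or raters (columns)
    if n < 2 or k < 2:
        raise ValueError("need at least 2 rows and 2 columns")

    grand_mean = sum(sum(row) for row in ratings) / (n * k)
    row_means = [sum(row) / k for row in ratings]
    col_means = [sum(row[j] for row in ratings) / n for j in range(k)]

    ss_rows = k * sum((m - grand_mean) ** 2 for m in row_means)
    ss_cols = n * sum((m - grand_mean) ** 2 for m in col_means)
    ss_total = sum((x - grand_mean) ** 2 for row in ratings for x in row)
    ss_error = ss_total - ss_rows - ss_cols

    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_error = ss_error / ((n - 1) * (k - 1))

    return (ms_rows - ms_error) / (
        ms_rows + (k - 1) * ms_error + k * (ms_cols - ms_error) / n
    )


if __name__ == "__main__":
    # Hypothetical step counts: algorithm output vs. human rater, per trial.
    example = [[10, 10], [18, 19], [27, 26], [35, 36]]
    print(round(icc_2_1(example), 3))
```

Because this form measures absolute agreement, a constant offset between the two methods lowers the coefficient even when the two series are perfectly correlated.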

| Type | Test | Turns (Count) | Rotation Rate (Turns/s) |
|---|---|---|---|
| AV | Complete as instructed | 0.642 | 0.935 |
| OT | Raise and lower arm | 0.971 | 0.975 |
| OT | Stop and start turn every 5 s | 0.995 | 0.990 |
| OT | Turn 2 times then stop | 1.000 | 0.732 |

| Test | Statistic | Start Time (s) | End Time (s) | Duration (s) | Steps (Count) | Stride Period (s) |
|---|---|---|---|---|---|---|
| Start and stop walking every 10 s | MAE | 0.874 | 0.521 | 0.494 | 0.628 | 0.039 |
| Start and stop walking every 10 s | RMSE | 0.999 | 0.626 | 0.629 | 0.816 | 0.051 |
| Start and stop walking every 10 s | MAPE | -* | 5.20% | 5.00% | 3.40% | 3.60% |
| Start and stop walking every 20 s | MAE | 1.048 | 1.158 | 1.121 | 2.036 | 0.028 |
| Start and stop walking every 20 s | RMSE | 1.545 | 1.492 | 1.645 | 3.166 | 0.036 |
| Start and stop walking every 20 s | MAPE | -* | 5.80% | 5.60% | 5.40% | 2.60% |
| Combined results for start and stop every 10 s and 20 s | MAE | 0.943 | 0.776 | 0.745 | 1.191 | 0.033 |
| Combined results for start and stop every 10 s and 20 s | RMSE | 1.246 | 1.061 | 1.149 | 2.100 | 0.042 |
| Combined results for start and stop every 10 s and 20 s | MAPE | -* | 5.50% | 5.20% | 4.20% | 3.10% |
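The three statistics reported above (MAE, RMSE, MAPE) can be computed from paired algorithm and reference values. The following is a minimal sketch using the standard textbook definitions; the function name and sample numbers are hypothetical. Returning no MAPE when any reference value is zero is a design choice of this sketch, since a percentage error is undefined there; the source does not say how such cases were handled.

```python
import math


def agreement_errors(estimates, references):
    """Return MAE, RMSE and MAPE (in percent) for paired measurements."""
    if len(estimates) != len(references) or not estimates:
        raise ValueError("inputs must be non-empty and of equal length")

    errors = [e - r for e, r in zip(estimates, references)]
    count = len(errors)

    mae = sum(abs(err) for err in errors) / count
    rmse = math.sqrt(sum(err * err for err in errors) / count)

    # MAPE divides by the reference value, so it is undefined at zero.
    if any(r == 0 for r in references):
        mape = None
    else:
        mape = 100 * sum(abs(err) / abs(r)
                         for err, r in zip(errors, references)) / count

    return {"mae": mae, "rmse": rmse, "mape": mape}


if __name__ == "__main__":
    # Hypothetical detected vs. rater-annotated walking-bout durations (s).
    detected = [9.6, 10.4, 19.1, 20.8]
    annotated = [10.0, 10.0, 20.0, 20.0]
    print(agreement_errors(detected, annotated))
```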

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Ellis, R.; Kelly, P.; Huang, C.; Pearlmutter, A.; Izmailova, E.S. Sensor Verification and Analytical Validation of Algorithms to Measure Gait and Balance and Pronation/Supination in Healthy Volunteers. Sensors 2022, 22, 6275. https://doi.org/10.3390/s22166275
