Collision Detection of a HEXA Parallel Robot Based on Dynamic Model and a Multi-Dual Depth Camera System

Abstract

1. Introduction

- A dynamic model of the moving Hexa parallel robot is implemented and analyzed for the collision detection problem.

- The obstacles and the Hexa robot are isolated by the proposed geometrical algorithm, and a set of real-time images taken from the multi-camera system is used to locate the contact point.

- Finally, the Cartesian external force at the contact point is estimated by the recursive Newton-Euler algorithm for the Hexa parallel robot and compared with force sensor measurements for different collision point cases.
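The detection and force-estimation steps listed above can be illustrated with a minimal sketch. It is not the authors' recursive Newton-Euler implementation: it assumes that a model torque `tau_model` is already available from some inverse dynamics routine, that a 3x6 contact Jacobian `contact_jacobian` (mapping the six active joint rates to the velocity of the contact point) has been computed once the contact point is located, and that a fixed `threshold` is adequate. All function and parameter names here are hypothetical.

```python
import numpy as np


def detect_collision(tau_measured, tau_model, threshold):
    """Flag a collision when any joint torque residual exceeds the threshold.

    tau_measured: measured actuator torques (assumed available), shape (6,)
    tau_model:    torques predicted by a dynamic model (assumed), shape (6,)
    threshold:    scalar detection threshold (assumed, tuned by hand)
    """
    residual = np.asarray(tau_measured, dtype=float) - np.asarray(tau_model, dtype=float)
    collided = bool(np.any(np.abs(residual) > threshold))
    return collided, residual


def estimate_contact_force(residual, contact_jacobian):
    """Least-squares estimate of the Cartesian contact force.

    Uses the generic relation residual = J_c^T @ force, where J_c is the
    assumed 3x6 contact Jacobian. This is a stand-in for the paper's
    recursive Newton-Euler estimate, not a reproduction of it.
    """
    jac = np.asarray(contact_jacobian, dtype=float)
    force, *_ = np.linalg.lstsq(jac.T, np.asarray(residual, dtype=float), rcond=None)
    return force
```

In use, `detect_collision` would run every control cycle, and `estimate_contact_force` would be called only after a collision has been flagged and the contact point (and hence the contact Jacobian) is known.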

2. Modeling of the Hexa Parallel Robot

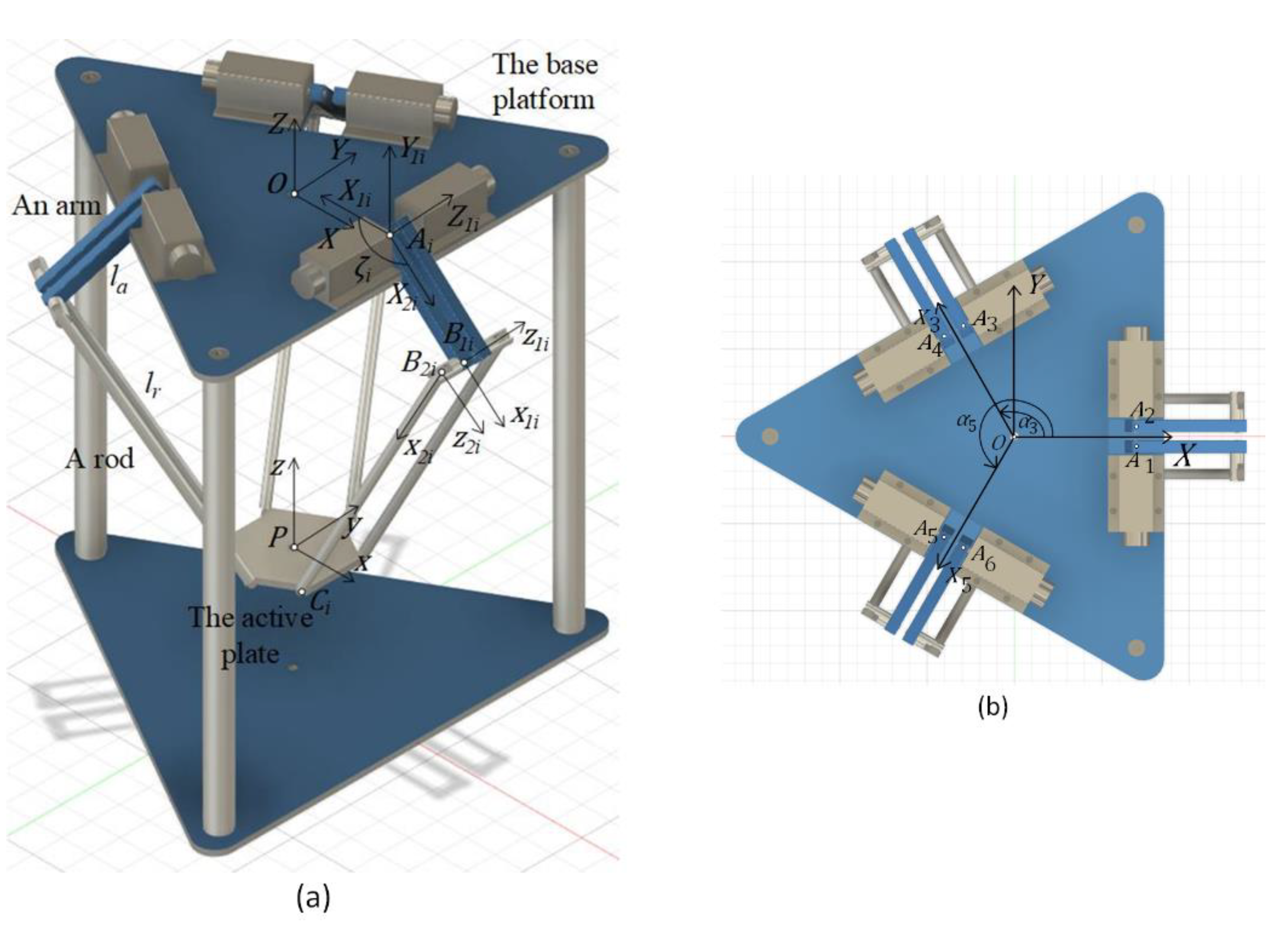

2.1. Configurations of the Hexa Robot

2.2. Kinematics

2.2.1. Inverse Kinematics

2.2.2. Forward Kinematics

2.3. Dynamics

2.3.1. Inverse Dynamics

2.3.2. Forward Dynamics

3. Collision Detection, Isolation and Identification of the Hexa Robot

3.1. Collision Detection

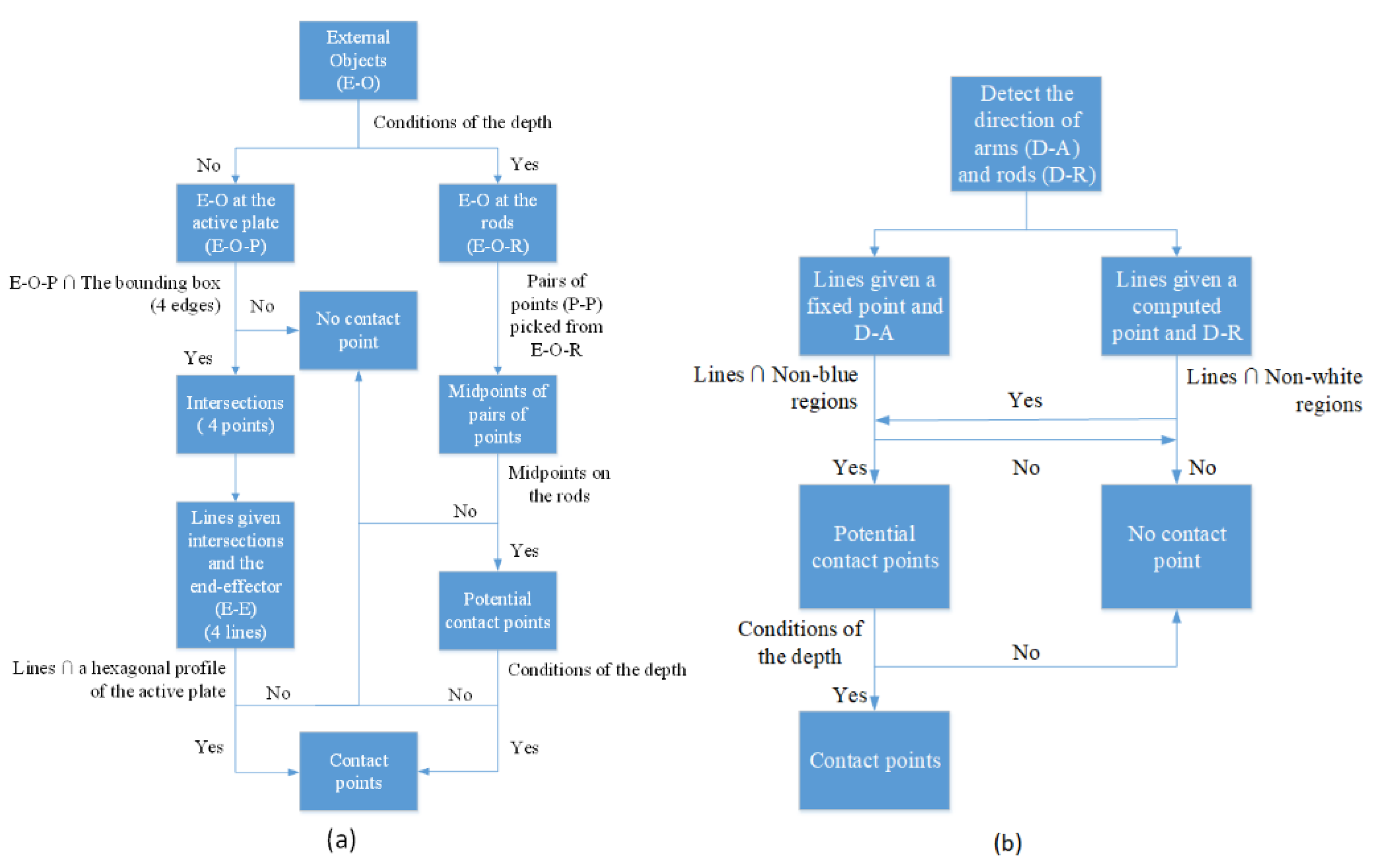

3.2. Collision Isolation

- Case 1: Contact points are determined by the first camera.

- Case 2: Contact points are determined by the second camera.

3.3. Collision Identification

- Case 1: A collision occurs on the active plate.

- Case 2: A collision occurs at an arm.

- Case 3: A collision occurs at a rod.

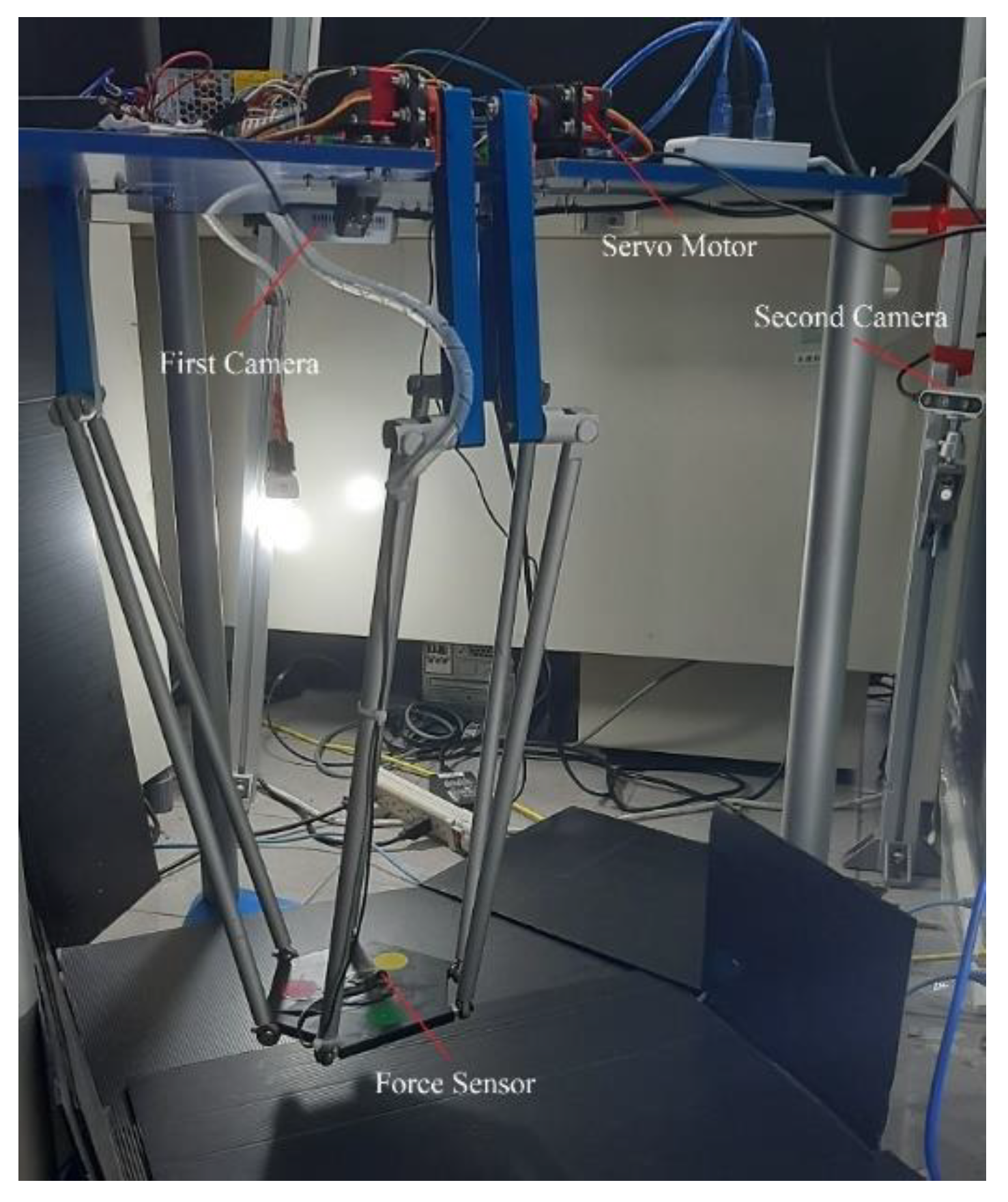

4. Experimental Setup

5. Experimental Results

5.1. Case 1: Collision at the Active Plate

5.1.1. Collision Detection at the Active Plate

5.1.2. Collision Isolation at the Active Plate

5.1.3. Collision Identification at the Active Plate

5.2. Case 2: Collision at an Arm

5.2.1. Collision Detection at an Arm

5.2.2. Collision Isolation at an Arm

5.2.3. Collision Identification at an Arm

5.3. Case 3: Collision at a Rod

5.3.1. Collision Detection at a Rod

5.3.2. Collision Isolation at a Rod

5.3.3. Collision Identification at a Rod

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Hoang, X.-B.; Pham, P.-C.; Kuo, Y.-L. Collision Detection of a HEXA Parallel Robot Based on Dynamic Model and a Multi-Dual Depth Camera System. Sensors 2022, 22, 5923. https://doi.org/10.3390/s22155923
