Abstract

While in most industries, most processes are automated and human workers have either been replaced by robots or work alongside them, fewer changes have occurred in industries that use limp materials, like fabrics, clothes, and garments, than might be expected with today’s technological evolution. Integration of robots in these industries is a relatively demanding and challenging task, mostly because of the natural and mechanical properties of limp materials. In this review, information on sensors that have been used in fabric-handling applications is gathered, analyzed, and organized based on criteria such as their working principle and the task they are designed to support. Categorization and related works are presented in tables and figures so that anyone interested in developing automated fabric-handling applications can easily find useful information and ideas, at least regarding the sensors needed for the most common handling tasks. Finally, we hope this work will inspire researchers to design new sensor concepts that could promote automation in the industry and boost the robotization of domestic chores involving flexible materials.

1. Introduction

Since ancient times, people have been trying to develop tools and machines to increase productivity and facilitate everyday work. Nowadays, industries are implementing highly automated machines (robots) to significantly decrease production costs while considerably improving the rate of production. The development of robots and their integration in production processes can relieve industries of high labor costs and relieve human workers of difficult, dangerous, repetitive, and boring tasks. This allows humans to concentrate on fields where they can outperform robots, while industries can provide products at lower cost.

However, textile industries have a relatively low level of automation in their production lines, which constantly transport, handle, and process limp materials. In 1990, the challenges of developing a fully automated garment manufacturing process were stated in [1]. According to that article, one of the major mistakes usually made is approaching the problem without an engineering perspective, characterizing materials as soft, harsh, slippery, etc., without using quantitative values. The author suggested that fabrics must be carefully studied and, if needed, redesigned to fulfil the desired specifications. When it comes to the machinery used for manipulation of fabrics, the soft and sensitive surface of such materials, combined with their lack of rigidity, demands the development of sophisticated, specially designed grippers and robots that can handle limp materials like fabrics accurately, dexterously, and quickly. Integration of robots in production aims to improve the quality of the final product, so technologies that might harm the material surface, like the bulk machines used in other industries, cannot be implemented.

Picking up fabric either from a surface or from a pile of cloth is probably the most important and challenging task in fabric manipulation. Various methods to pick up fabric from a solid surface have been developed, such as intrusion, surface attraction, and pinching [2]. Picking up fabric from a pile presents different challenges, such as detecting the fabric and dexterously grasping it.

Fabric-handling robots could, and probably will, also be used by individuals as home-assistive robots. The modern way of working and living, combined with the increase in life expectancy, has resulted in a high percentage of people with limited physical abilities who cannot easily perform many daily tasks, such as laundry and bed making. Advanced technologies could offer a solution to these people through home-assistive robots capable of dealing with garments to help with these kinds of tasks. In addition to the difficulties caused by the mechanical properties of garments, tasks involving human–robot interaction must be flawlessly safe, and the robots used must be precisely controllable. For this reason, sensors are necessary in such applications to ensure the safety of the humans involved. Such home-assistive applications are already being investigated, and many research projects have developed robots that could help with housework [3,4,5,6,7,8,9,10,11,12] or assist humans with mobility problems [13,14,15,16,17,18,19].

A recent approach to manipulation of clothes is CloPeMa (clothes perception and manipulation), which aims to advance the state of the art in autonomous perception and manipulation of all kinds of fabrics, textiles, and garments [20]. The project consisted of experiments with several types of fabrics and different solutions for their manipulation. Various sensors were integrated for haptic and visual sensing and recognition using a pair of robotic arms.

The present paper, according to current technological needs, constitutes an exhaustive review of sensors used in robotic applications involving limp materials. More attention has been given to recent applications in order to provide more useful information to someone interested in developing applications of their own. However, references to former applications are also analyzed, as they might be simpler and cheaper and could provide inspiration and include information important to an engineer. The presented sensing systems are categorized according to type and working principle and are analyzed according to the fabric-handling tasks of the applications.

2. Materials and Methods

All accessible applications involving sensors in the field of fabrics and, more generally, limp-material handling were examined. Research was carried out on papers, magazine articles, and books published from the 1980s until today. We processed information from more than 60 papers and classified the sensors used according to their working principle (visual sensors, tactile sensors, etc.), as well as according to how their data are processed, i.e., the task they serve. Classification of the included applications is presented in Table 1.

The purpose of this review is to facilitate the design and development of applications for limp materials. Koustoumpardis et al. [21] developed a decision-making system that helps in the design of grippers used in fabric-handling operations. Its inputs include the task to be achieved and the operating principle of the gripper, and it outputs a suggested sensor device. Similarly, the classification of references in this paper is intended to help readers understand the context and ultimately decide which sensors to use in their own applications.

3. Sensor Analysis

3.1. Working Principle

3.1.1. Visual Sensors

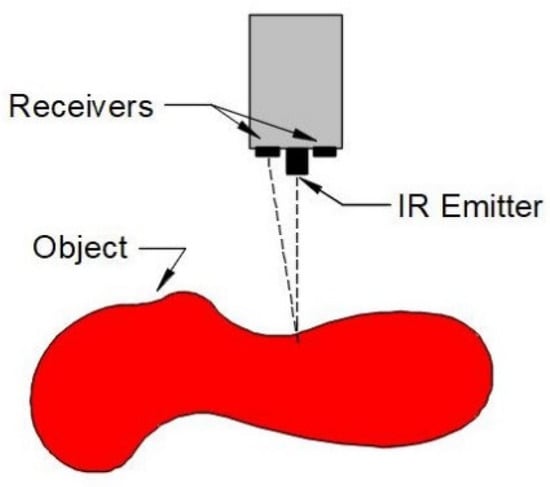

Most fabric-handling robot applications developed so far include visual sensors. Visual sensors can be separated into various categories based on their working principle and their goal. The most fundamental classification is binary vs. non-binary sensors. Binary sensors produce only two output values, logic “0” and logic “1”, and are only used to detect the presence of an object. Infrared sensors are a popular type of binary visual sensor [22,23,24] (Figure 1), although other types are available, such as fiber-optic [25,26,27] or optoelectronic sensors [28]. In [29], two sensors working as 1D cameras were used as edge sensors, measuring the light intensity at every pixel.

Figure 1.

Depiction of a 1D infrared sensor.
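As a minimal sketch of how an analog reflectance reading can be reduced to the binary output described above, the Python below applies two thresholds (hysteresis) so that the logic value does not chatter when the reading hovers near a single cut-off. It assumes a normalized reading that rises when fabric is present; the threshold values are hypothetical. The second function illustrates the 1D case by reporting the first pixel of a line scan where the intensity crosses a threshold; it illustrates the idea and is not the edge-sensing method of [29].

```python
from typing import List, Optional


class HysteresisPresenceDetector:
    """Converts an analog reflectance reading into a binary 'fabric present' flag.

    Assumption: the normalized reading rises when fabric is in front of the
    sensor. Two thresholds keep the output from toggling rapidly when the
    reading hovers near a single cut-off value.
    """

    def __init__(self, on_level: float, off_level: float) -> None:
        if off_level >= on_level:
            raise ValueError("off_level must be lower than on_level")
        self.on_level = on_level
        self.off_level = off_level
        self.present = False  # the binary output: logic 0 / logic 1

    def update(self, reading: float) -> bool:
        if not self.present and reading >= self.on_level:
            self.present = True
        elif self.present and reading <= self.off_level:
            self.present = False
        return self.present


def find_edge(pixels: List[float], threshold: float) -> Optional[int]:
    """Index of the first pixel in a 1D line scan where the intensity crosses
    `threshold` relative to its left neighbour, or None if there is no edge."""
    for i in range(1, len(pixels)):
        if (pixels[i - 1] < threshold) != (pixels[i] < threshold):
            return i
    return None


detector = HysteresisPresenceDetector(on_level=0.6, off_level=0.4)
print([detector.update(r) for r in [0.1, 0.65, 0.5, 0.45, 0.3]])
# → [False, True, True, True, False]
print(find_edge([0.9, 0.9, 0.8, 0.2, 0.1], threshold=0.5))  # → 3
```

The readings 0.5 and 0.45 keep the output at logic 1 because they lie between the two thresholds; a single-threshold detector set at 0.5 would have switched off there.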

On the other hand, non-binary sensors provide more information than just the presence of an object. These sensors are usually cameras, either 2D or 3D (Figure 2). According to Kelley [30], 2D vision can usually answer questions such as the location of a part, its orientation, its suitability, etc. These are the most common issues in fabric-manipulation applications, and 2D vision can solve them effectively while avoiding the significant data processing required by 3D vision. 2D cameras are very popular in applications that require object or edge detection, as image processing offers a solution that does not require interaction between the manipulator and the object. In [24], a camera was used in different schemes to detect the location of a fabric, its centroid, or an edge, while in [9], a camera mounted above the manipulation surface inspected the folding process of clothes by detecting their edges and comparing images to an ideal model. Similarly, in [31], a 2D camera observed the contour of a fabric while it was being folded and made predictions, with the aim of acquiring knowledge about the behavior of flexible objects. Other applications implementing 2D visual sensors are described in [7,23,27,28,32,33,34,35,36,37].

Figure 2.

Depiction of a 2D camera.
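Locating a fabric and its centroid, as done with a camera in [24], can be sketched with a global threshold followed by image moments. The Python below assumes a grayscale image stored as nested lists, a dark fabric on a bright surface, and a hypothetical threshold of 128; it is not the processing pipeline of [24]. Because the threshold is fixed, this is exactly the kind of step that is sensitive to the lighting changes discussed below.

```python
from typing import List, Optional, Tuple


def segment(gray: List[List[int]], threshold: int) -> List[List[bool]]:
    """Binary mask: True where a pixel is darker than `threshold`.
    Assumes a dark fabric lying on a bright work surface."""
    return [[value < threshold for value in row] for row in gray]


def centroid(mask: List[List[bool]]) -> Optional[Tuple[float, float]]:
    """(row, col) centroid of the True pixels: the first-order image moments
    divided by the zeroth-order moment (the area in pixels)."""
    area = 0
    sum_row = 0
    sum_col = 0
    for r, row in enumerate(mask):
        for c, is_fabric in enumerate(row):
            if is_fabric:
                area += 1
                sum_row += r
                sum_col += c
    if area == 0:
        return None  # no fabric in view
    return (sum_row / area, sum_col / area)


image = [
    [255, 255, 255, 255],
    [255, 10, 10, 255],
    [255, 10, 10, 255],
    [255, 255, 255, 255],
]
print(centroid(segment(image, threshold=128)))  # → (1.5, 1.5)
```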

One major drawback of 2D visual sensors is that the quality of the information they can provide depends heavily on experimental conditions, especially lighting. In [32], a two-arm industrial robot was used to grasp a piece of clothing from a pile, recognize it, move it to a defined position, and eventually fold it. The authors state that besides the challenges related to the folding of the piece, the biggest challenge was the recognition of the piece by the vision system. The decision is made by the robot, using the shape information provided by the 2D visual sensing system. However, the shapes of hanging clothes are dependent on the random choice of hanging points. To solve this problem, the authors added tactile sensors on the grippers in order to collect roughness data.

Technological progress in hardware, along with developments in artificial intelligence (AI) and machine learning (ML), has made it feasible to process large amounts of data, the computational cost of which would have been prohibitive in the past [38]. Without these advances, 3D cameras would not be as widespread, especially in applications for which a 2D camera could achieve satisfactory results. According to [39], although 3D vision requires greater data-processing capabilities than 2D systems, its many advantages include independence from experimental conditions, such as light intensity. Furthermore, the data-processing requirements can be offset by the value of the acquired data; e.g., a large difference in the light intensity of neighboring pixels can quickly indicate an edge. As stated in the same article, even without a 3D camera, it is possible to acquire 3D data using the following configurations:

- Binocular stereo, where images from two cameras (or one moving camera) are combined;

- Trinocular stereo, where three cameras are used, and more information can be provided, as all three dimensions can be projected;

- Longitudinal stereo, where consecutive views are captured along the optical axis, a method used in vehicle guidance.
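For the binocular configuration, depth follows from triangulation. For a rectified camera pair with focal length f (in pixels), baseline B, and disparity d (the horizontal offset, in pixels, of the same point in the two images), Z = f·B/d, and a one-pixel disparity error changes the depth by roughly Z²/(f·B). The Python sketch below encodes these standard pinhole-model relations; the camera parameters in the example are hypothetical.

```python
def depth_from_disparity(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Depth Z = f * B / d for a rectified binocular stereo pair."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a point seen by both cameras")
    return focal_px * baseline_m / disparity_px


def depth_step(focal_px: float, baseline_m: float, depth_m: float,
               disparity_step_px: float = 1.0) -> float:
    """Approximate depth change caused by a disparity change of `disparity_step_px`
    at depth Z, from |dZ/dd| = Z**2 / (f * B)."""
    return depth_m ** 2 / (focal_px * baseline_m) * disparity_step_px


# Hypothetical camera: 600 px focal length, 10 cm baseline.
z = depth_from_disparity(600.0, 0.10, 30.0)   # about 2.0 m
print(z, depth_step(600.0, 0.10, z))          # about 2.0 m and 0.067 m per pixel
```

The second value illustrates the design trade-off: a wider baseline or a shorter working distance gives finer depth steps, which matters when the features of interest are small, such as fabric wrinkles.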

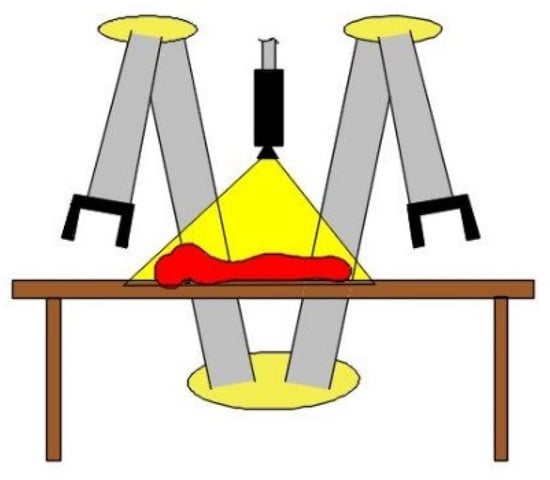

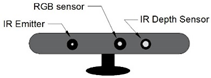

The advantage of 3D vision over 2D is the sense of depth that 3D can offer. In fabric-manipulation applications, measured depth differences are usually caused by wrinkles or edges. An RGB-D sensor was used in [40], wherein a fabric or some clothes were left lying folded on a surface, and the sensor identified the folded part and determined the point from which to grasp the fabric. Using the same principle, the contour and the formation of the fabric, or even its presence, as in [10], can be detected. A humanoid robot used to assist with the bottom dressing of a human [15] implemented another 3D camera (Xtion PRO LIVE) to locate the position of the legs and the state of the pants, as well as possible failures throughout the process. Another human-assisting robot is presented in [3], where an RGB-D camera located the edges of the bed sheets in a bed-making process. A typical RGB-D sensor is sketched in Figure 3.

Figure 3.

Depiction of an RGB-D sensor.
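The idea that edges and folds appear as depth differences can be sketched by flagging neighboring pixels of a depth map whose values differ by more than a threshold. The Python below assumes a top-down depth map in metres and a hypothetical 2 cm threshold, which in practice must be chosen above the sensor's noise level; it is not the algorithm of [40] or [3].

```python
from typing import List, Set, Tuple


def depth_edges(depth_m: List[List[float]], jump_m: float) -> Set[Tuple[int, int]]:
    """Pixels whose depth differs from their right or lower neighbour by more
    than `jump_m`, i.e. depth discontinuities such as the edge of a folded layer."""
    rows = len(depth_m)
    cols = len(depth_m[0]) if rows else 0
    edges: Set[Tuple[int, int]] = set()
    for r in range(rows):
        for c in range(cols):
            for dr, dc in ((0, 1), (1, 0)):
                rr, cc = r + dr, c + dc
                if rr < rows and cc < cols and abs(depth_m[r][c] - depth_m[rr][cc]) > jump_m:
                    edges.add((r, c))
    return edges


# Hypothetical top-down depth map: table at 1.00 m, folded part 3 cm closer.
depth = [
    [1.00, 1.00, 0.97, 0.97],
    [1.00, 1.00, 0.97, 0.97],
]
print(sorted(depth_edges(depth, jump_m=0.02)))  # → [(0, 1), (1, 1)]
```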

Besides detecting edges and formations, 3D sensors are also widely used for classification purposes. A depth sensor was used in [41] to pick up clothes from a pile. The system then classified them into one of four main clothing categories, and an algorithm identified the correct point from which each garment should be picked up for optimal unfolding. In [42], two RGB-D sensors continuously captured a garment lying on a surface, first to locate the grasping point via a heuristic algorithm; recording then continued until the garment could be classified into a predefined category. A contrasting approach was used in [43], wherein after a garment was picked up from a pile, a time-of-flight (ToF) sensor (a newer generation of depth sensor) captured a single partial image of the garment (partial because depth sensors are usually used at close distances), and a convolutional neural network (CNN) then classified the garment into one of four categories: “shirt”, “trouser”, “towel”, or “polo”.
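The ToF principle mentioned above rests on two standard relations: a pulsed sensor measures the round-trip time t, so d = c·t/2, while a continuous-wave sensor measures the phase shift φ of light modulated at frequency f, so d = c·φ/(4π·f), which is unambiguous only up to c/(2·f). Which scheme a given sensor uses varies by product; the 20 MHz modulation frequency in this Python sketch is hypothetical.

```python
import math

SPEED_OF_LIGHT_M_S = 299_792_458.0


def distance_from_round_trip(round_trip_s: float) -> float:
    """Pulsed ToF: light travels to the object and back, so d = c * t / 2."""
    return SPEED_OF_LIGHT_M_S * round_trip_s / 2.0


def distance_from_phase(phase_rad: float, modulation_hz: float) -> float:
    """Continuous-wave ToF: d = c * phi / (4 * pi * f_mod)."""
    return SPEED_OF_LIGHT_M_S * phase_rad / (4.0 * math.pi * modulation_hz)


def unambiguous_range(modulation_hz: float) -> float:
    """Beyond c / (2 * f_mod) the phase wraps around and distances alias."""
    return SPEED_OF_LIGHT_M_S / (2.0 * modulation_hz)


print(distance_from_round_trip(10e-9))      # about 1.5 m
print(unambiguous_range(20e6))              # about 7.5 m at a hypothetical 20 MHz
print(distance_from_phase(math.pi, 20e6))   # about 3.75 m, half of that range
```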

3.1.2. Tactile Sensors

In robots, similarly to humans, vision is the most useful sense, as it provides a lot of information quickly. One disadvantage of vision sensing is that it is prone to miscalculations and mistakes when environmental conditions vary. Furthermore, there are many mechanical properties of objects that cannot be identified by vision alone, such as hardness. A popular alternative to vision, especially in applications with fabrics, is tactile sense.

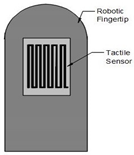

Tactile sensors range from cheap, custom-made devices to high-cost industrial ones. Depending on the available hardware and the task to be achieved, sensors can be custom-fabricated or bought off the shelf. In 1990 [44], Paul et al. addressed the issue of using tactile sensors in robots handling limp materials. Until then, most tactile sensors were used to compute gripping forces and to detect contact with objects. They recommended developing tactile-sensing arrays, as opposed to single sensors, together with sensors that measure both the dynamic and static parts of contact forces, similarly to how humans recognize objects by touch. Nowadays, these principles are widely followed, as researchers are developing humanoid fingers equipped with tactile sensors [45].

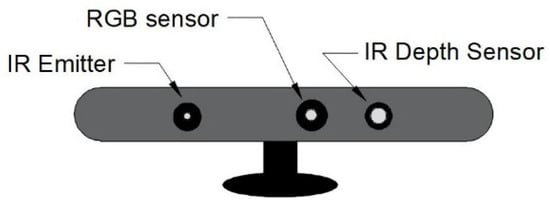

The basic working principle is similar for most tactile sensors. Conductive components whose electrical properties change when they deform are used, so that when the sensor comes into contact with an object, these components deform and a change in their resistivity is measured (Figure 4). If the mechanical properties of the deformed part are known, the electrical changes can be translated into the forces developed.

Figure 4.

Depiction of the basic working principle of a tactile sensor. (a) A magnetic sensor using a Hall effect sensing element inside a deformable diaphragm embedded with small magnetic parts. (b) When contact is made, the diaphragm deforms, and the magnetic field changes.

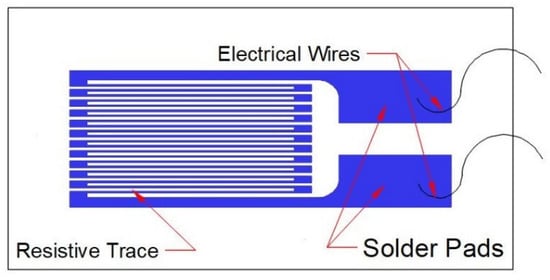

A wide variety of materials can be used for the conductive component. Strain gauges (Figure 5) fit the description above precisely: they are sensors whose electrical resistance varies with strain. Many commercial strain gauges are available, but they can also be easily fabricated. In [46], the fabrication of a strain gauge from an elastomer cast and conductive traces (cPDMS) for a sensor skin is presented. A strain gauge was used in [34] in an automated sewing machine to control the tension developed on the fabric. Meanwhile, in [47], strain gauges were used to sense whether a fabric ply had been grasped by the gripper, and even to calculate the number of plies caught. Other uses of strain gauges in fabric handling are presented in [23,48,49].

Figure 5.

Strain gauge.
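The translation from resistance change to force described above can be made concrete for a metal-foil strain gauge. The relative resistance change is ΔR/R = GF·ε, where GF is the gauge factor and ε the strain; for a linear-elastic member under axial load, the force is F = E·ε·A. The Python below encodes these relations together with the small-strain quarter-bridge approximation; the gauge, material, and geometry values are hypothetical and are not taken from [34] or [47].

```python
def strain_from_resistance(r_measured_ohm: float, r_nominal_ohm: float, gauge_factor: float) -> float:
    """Strain from the relative resistance change: dR / R = GF * strain."""
    return (r_measured_ohm - r_nominal_ohm) / (r_nominal_ohm * gauge_factor)


def strain_from_quarter_bridge(v_out: float, v_excitation: float, gauge_factor: float) -> float:
    """Small-strain approximation for one active gauge in a Wheatstone bridge:
    v_out / v_excitation ~= GF * strain / 4."""
    return 4.0 * v_out / (v_excitation * gauge_factor)


def axial_force(strain: float, youngs_modulus_pa: float, cross_section_m2: float) -> float:
    """Linear-elastic member under axial load: F = E * strain * A."""
    return youngs_modulus_pa * strain * cross_section_m2


# Hypothetical 120-ohm gauge (GF = 2.0) bonded to an aluminium link
# (E ~ 70 GPa) with a 10 mm^2 cross-section.
eps = strain_from_resistance(120.024, 120.0, 2.0)   # about 1e-4
print(eps, axial_force(eps, 70e9, 10e-6))           # about 1e-4 and 70 N
```

The tiny resistance change (24 mΩ here) is why gauges are normally read through a bridge circuit rather than by measuring resistance directly.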

Capacitive sensors differ from resistive sensors in that they measure variations in capacitance. A thin dielectric layer sandwiched between a pair of conductors can be used in a capacitive sensor. The thickness of the dielectric layer varies depending on sensor deformation, and as a result, its capacitance also changes [50]. A simple implementation of a capacitive sensor is presented in [28], wherein a capacitive sensor was mounted on a gripper to detect contact with the manipulated object and stop the movement. The advantages of capacitive sensors were further exploited in [51,52,53], wherein capacitive sensors were used either alone or together with other sensors to explore the texture properties of fabric materials.
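Under a parallel-plate model, C = ε0·εr·A/d, so a thinner dielectric gap gives a higher capacitance. The Python below inverts this relation to estimate the gap and, assuming a linear-elastic dielectric with Young's modulus E, the applied pressure. The electrode size, permittivity, and stiffness values are hypothetical, and real elastomer dielectrics are usually nonlinear, so a calibration curve would normally replace the last step.

```python
EPSILON_0_F_PER_M = 8.8541878128e-12  # vacuum permittivity


def capacitance(eps_r: float, area_m2: float, gap_m: float) -> float:
    """Parallel-plate model: C = eps0 * eps_r * A / d."""
    return EPSILON_0_F_PER_M * eps_r * area_m2 / gap_m


def gap_from_capacitance(c_farad: float, eps_r: float, area_m2: float) -> float:
    """Invert the parallel-plate model to recover the dielectric thickness."""
    return EPSILON_0_F_PER_M * eps_r * area_m2 / c_farad


def pressure_from_gap(gap_m: float, rest_gap_m: float, youngs_modulus_pa: float) -> float:
    """Assumes a linear-elastic dielectric: p = E * (d0 - d) / d0."""
    return youngs_modulus_pa * (rest_gap_m - gap_m) / rest_gap_m


# Hypothetical taxel: 1 cm^2 electrodes, 100 um elastomer layer (eps_r ~ 3, E ~ 1 MPa).
c_rest = capacitance(3.0, 1e-4, 100e-6)
c_pressed = c_rest / 0.9                           # capacitance rises as the gap shrinks
gap = gap_from_capacitance(c_pressed, 3.0, 1e-4)   # about 90 um
print(pressure_from_gap(gap, 100e-6, 1e6))         # about 1e5 Pa
```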

Piezoresistive and piezoelectric materials are also cheap and very effective options. In [54], piezoresistive gauges were positioned in a thin, flexible diaphragm with a small mesa mounted on its center. When force was applied on the mesa, the diaphragm deflected, and the resistivity of the piezoresistive gauges changed as they were displaced. By measuring the voltages on the diaphragm, forces can be calculated, and through a neural network, different textures can be recognized based on differences in the measurements. Polyvinylidene fluoride (PVDF) is a polymer with strong piezoelectric and pyroelectric activity. Compared with other piezoelectric materials, PVDF is flexible, light, tough, and inexpensive [55]. It is highly recommended for applications requiring object classification based on surface texture, such as in [49,55,56]. In [49], both strain gauges and PVDF sensors were used to classify materials with a biomimetic finger. As stated in that article, human tactile receptors can be divided into two broad categories, namely slow-adapting and fast-adapting receptors. Slow-adapting receptors respond to low-frequency stimuli and sense the static properties of the stimuli, while fast-adapting receptors respond to higher frequencies and provide information on the dynamic properties of the stimuli. Strain gauges act as slow-adapting sensors, while PVDF acts as a fast-adapting sensor. As mentioned above, for investigating texture properties, the dynamic part of the load contains more information; therefore, PVDF sensors can prove useful in applications of this kind. Accelerometers have also been used for this purpose [45,57,58].
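The distinction between slow- and fast-adapting sensing can be illustrated in software by splitting one force signal into a slowly varying part and a fast residual. The Python below uses a first-order low-pass filter (exponential smoothing) for the static part; this is an analogy to the hardware approach of [49], which uses two different sensor types, not a reproduction of it, and the smoothing factor and signal are hypothetical.

```python
from typing import List, Tuple


def split_static_dynamic(signal: List[float], alpha: float) -> Tuple[List[float], List[float]]:
    """Split a sampled force signal into a slow (static) and a fast (dynamic) part.

    A first-order low-pass filter (exponential smoothing with factor `alpha`)
    tracks the slowly varying load, loosely analogous to a slow-adapting
    receptor or a strain gauge; the residual keeps the fast fluctuations,
    loosely analogous to a fast-adapting receptor or a PVDF film.
    """
    if not 0.0 < alpha <= 1.0:
        raise ValueError("alpha must be in (0, 1]")
    static: List[float] = []
    dynamic: List[float] = []
    level = signal[0] if signal else 0.0
    for sample in signal:
        level += alpha * (sample - level)
        static.append(level)
        dynamic.append(sample - level)
    return static, dynamic


# Hypothetical contact: a 1 N press with a 0.5 N vibration from sliding over a texture.
samples = [1.5, 0.5] * 50
static, dynamic = split_static_dynamic(samples, alpha=0.05)
print(round(static[-1], 2), round(dynamic[-1], 2))  # static near 1.0, dynamic near -0.5
```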

An approach similar to the piezoresistive sensor of [54] is presented in [59], using magnetic materials. Instead of a soft mesa, a soft cylindrical silicone body with a cylindrical neodymium magnet at its center was used, and instead of piezoresistive gauges, three ratiometric linear sensors (RLS) were mounted perpendicular to each other. When an external force is applied to the soft silicone, it deforms, displacing the magnet inside it and changing the magnetic flux near the RLS. This approach was used to distinguish between eight different objects, two of them fabrics, by rubbing their surfaces.

3.1.3. Body Sensors

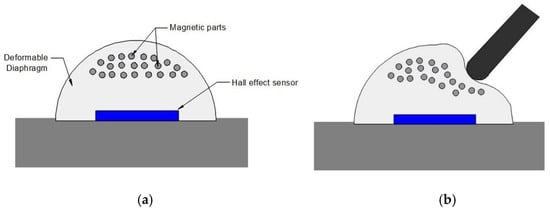

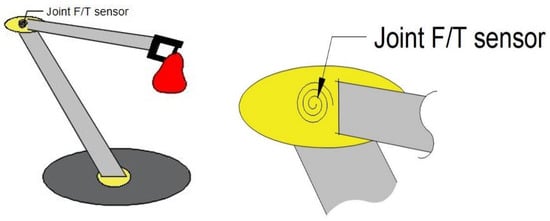

Tactile and visual sensing give a robotic system exteroception by enabling it to gather information from its environment. Proprioception, on the other hand, is the ability of a robotic system to sense its own movement and position in space. To make a robot proprioceptive, sensors are usually mounted on its body, mostly on its joints or at critical points (Figure 6). In [48], the positions of the finger joints of a humanoid robot were measured using Hall sensors on the output of each joint, and arm-joint positions were measured using optical sensors. The robot explored the surface of clothes, and when it encountered features such as buttons or snaps, the exact position of the finger that touched the feature was already known and could be marked as the location of the feature.

Figure 6.

A force/torque sensor mounted on the joint of a robotic arm.
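Knowing the fingertip position from joint measurements, as in [48], is a forward-kinematics calculation. The Python below sketches it for a planar serial chain, with each joint angle measured relative to the previous link; a real humanoid hand is three-dimensional and needs calibration, and the link lengths used here are hypothetical.

```python
import math
from typing import Sequence, Tuple


def planar_fingertip(link_lengths_m: Sequence[float],
                     joint_angles_rad: Sequence[float]) -> Tuple[float, float]:
    """Forward kinematics of a planar serial chain (e.g. one finger).

    Each joint angle is measured relative to the previous link, so the
    absolute heading of link i is the sum of joint angles 0..i.
    """
    if len(link_lengths_m) != len(joint_angles_rad):
        raise ValueError("need one joint angle per link")
    x = y = 0.0
    heading = 0.0
    for length, angle in zip(link_lengths_m, joint_angles_rad):
        heading += angle
        x += length * math.cos(heading)
        y += length * math.sin(heading)
    return x, y


# Hypothetical two-link finger (5 cm and 3 cm) with the distal joint bent 90 degrees.
print(planar_fingertip([0.05, 0.03], [0.0, math.pi / 2]))  # about (0.05, 0.03)
```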

Similarly, force/torque sensors are located either in arm joints or on the gripper to perceive when contact has been made with an object. In this way, serious collisions can be avoided, and the force exerted on the manipulated object can be controlled. In [60], a bio-inspired gripper mimicking a fish called the lamprey was specially designed for fabrics. The gripper works by combining microneedles with negative pressure (vacuum). A sensor mounted inside its body constantly measures the pressure to control the vacuum process. In a robot-assisted dressing system [13], force/torque sensors were used to constantly measure and control the force applied to the human arm while putting on a shirt. Other applications utilizing sensors in the bodies of operating robots can be found in [14,15,27,29,35,61].
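Detecting contact with a force/torque sensor is often done by removing the no-contact offset and comparing the force magnitude with a threshold. The Python below sketches this for the force part of a six-axis reading; the threshold and readings are hypothetical, and the sketch is not the scheme of [13] or [60].

```python
import math
from typing import List, Optional, Sequence


def force_bias(samples: Sequence[Sequence[float]]) -> List[float]:
    """Average (Fx, Fy, Fz) over readings taken before any contact, to remove
    sensor offset and the weight of the gripper."""
    if not samples:
        raise ValueError("need at least one no-contact sample")
    return [sum(s[axis] for s in samples) / len(samples) for axis in range(3)]


def first_contact(readings: Sequence[Sequence[float]], bias: Sequence[float],
                  threshold_n: float) -> Optional[int]:
    """Index of the first reading whose bias-compensated force magnitude exceeds
    `threshold_n`, or None if no contact is detected."""
    for k, f in enumerate(readings):
        magnitude = math.sqrt(sum((f[axis] - bias[axis]) ** 2 for axis in range(3)))
        if magnitude > threshold_n:
            return k
    return None


bias = force_bias([(0.1, -0.1, 2.0), (0.1, 0.1, 2.0)])
readings = [(0.1, 0.0, 2.05), (0.2, 0.0, 2.1), (0.1, 0.0, 3.5)]
print(first_contact(readings, bias, threshold_n=1.0))  # → 2
```

In a guarded move, the robot would stop or switch to force control at the returned index.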

3.1.4. Wearable Sensors

A different kind of sensor implementation in fabric applications, which could be included in the body-sensor category, is stretch sensors in clothes or even on the human body. These sensors are called wearable sensors, as they can be worn with clothes or like stickers. They might belong to a separate category, since they do not involve fabric-manipulating robots, but this kind of sensor could play a decisive role in the future and be used on both humans and robots.

In [62], stretch sensors were developed from textile materials and compared to commercial sensors in applications such as measuring the expansion of the chest during inhalation and determining the position of the ankle. In these applications, the stretch sensors are mounted directly on the human skin. Their implementation in clothes, together with the use of AI, could lead to so-called “smart clothes”, which could track important biometrics, such as a person’s heart rate or their performance during sports [63]. According to [63], biometric monitoring is already feasible using gadgets such as smartwatches; however, smart clothes could provide more types of physiological measurements since they cover a much larger area of the human body.

In addition to the quantity of information that such sensors could provide, textile-based sensors can be implemented in clothes without altering their mechanical properties, offering an alternative to current wearable sensors, which can be bulky, rigid, and uncomfortable. An interesting example is presented in [64], where an obstacle-detection system for vision-impaired people was built into a shirt. The shirt includes an integrated circuit alongside its fabric, as well as a microcontroller, sensors, and motors. It was designed to be worn comfortably, but the necessary rigid parts, such as the microcontroller, may make the shirt heterogeneous.

The capabilities of sensors that can be perfectly integrated into fabrics without altering their mechanical properties were examined in [65] by using wearable textile-based sensors to recognize human motions. A review of the use of textile materials in robotics in general is presented in [66]. In [67], Xiong et al. present an extensive review of fibers and fabrics used to produce sensors for all kinds of measurements (mechanical sensors, thermal sensors, humidity sensors, etc.), as well as other useful components, such as grippers and actuators.

3.2. Data Processing and a Reference to the Type of Manipulated Object

Sensors are used in robotic applications to give robots perception. Depending on the sensor used, different types of perception can be achieved, and depending on the type of perception required, different types of sensors can be used. Below, the most common sensing tasks are presented, with references to the corresponding applications that have been developed.

3.2.1. Object Detection

The first step in almost every fabric-handling application is to locate the fabric and detect the points from which it will be grasped. In production lines, where conveyor belts are usually used and processes are generally more structured, detecting the object is easier, as its possible location is known most of the time and only its presence needs to be confirmed. In such cases, simple sensor setups, such as binary sensors, can be used. As previously mentioned, binary sensors can be visual [22,23,24,25,26,27,28,29], tactile [6,28,68], or even placed on the body of the robot, as in [61], where sensors were placed on a robot that picks up flexible materials from a stack, and a signal is sent to a microprocessor when the gripper contacts the stack. In [25,26,27], six proximity sensors on the bottom surface of a suction gripper were used to detect whether the fabric was in a position from which it could be grasped by checking the sensor values. In [68], an underactuated gripper specially designed to pick up fabrics from a surface used tactile sensors on its fingers to determine whether the fabric ply had been caught.
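The grasp-readiness check with several proximity sensors, as in [25,26,27], can be sketched as a k-of-n rule over the readings. The exact decision rule used there is not described in this review, so the rule, the 5 mm gap, and the readings in this Python sketch are hypothetical.

```python
from typing import Sequence


def ready_to_grasp(distances_mm: Sequence[float], max_gap_mm: float, required: int) -> bool:
    """True when at least `required` proximity sensors on the gripper face
    report the fabric within `max_gap_mm` (hypothetical criterion)."""
    covered = sum(1 for d in distances_mm if d <= max_gap_mm)
    return covered >= required


# Six sensors; one of them sees nothing within range (e.g. it is past the fabric edge).
readings = [2.0, 3.0, 2.0, 40.0, 3.0, 2.0]
print(ready_to_grasp(readings, max_gap_mm=5.0, required=6))  # → False
print(ready_to_grasp(readings, max_gap_mm=5.0, required=5))  # → True
```

Requiring all six sensors is the strictest choice; a lower `required` value tolerates a noisy sensor at the cost of occasionally grasping a ply that is partly off the suction area.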

Non-binary sensors are also used to detect objects. In contrast to binary sensors, non-binary sensors extract more information, which is then processed before it is turned into actual knowledge. Tactile sensors were used in [47] to measure strain in a gripper and discriminate the number of plies grasped. As mentioned earlier, both tactile and body sensors were used in [48] to detect contact with clothing features, such as buttons. However, most sensors used in object-detection applications are visual.

Cameras and depth sensors are usually integrated into robotic setups to sense the presence of a fabric or to identify the optimal grasping point. In a setup of two robotic arms grasping fabrics from two conveyor belts, Hebert et al. [26,27] placed cameras beside the conveyor belts to detect incoming fabrics and their orientations so that the gripper could adapt its orientation. Doulgeri and Fahantidis [28] also used a camera to find the grasping point of a piece of fur. A depth sensor (Kinect) was used in [69,70]; in the latter, the image captured by the depth sensor served as the input to a CNN that located the optimal grasping point of clothes.

Many home-assistive robots implement visual sensors to find fabrics, as they operate in unstructured environments. Laundry-handling robots use 2D [7] or 3D vision setups [4,6,71] to locate clothes. RGB and depth sensors are used in a mobile bed-making robot to detect the edges of the bedsheet [3]. Visual sensors were also used in [8,22,23,72] for robotic towel-folding applications, where their task is to detect the grasping points on the towels, usually the edges.

In addition to applications that detect an object in order to manipulate it optimally, applications that detect objects in order to avoid them should also be mentioned. Obstacle detection is a very important task in industrial applications, especially in dynamic environments in which obstacles constantly change position. A material-handling robot was designed in [73], consisting of a locomotive with IR sensors and cameras to locate the path to be followed, as well as any obstructions in it. This locomotive can carry anything needed in a production line, including fabrics. Another typical application of obstacle detection is the smart shirt for vision-impaired people [64].

3.2.2. Control

A key purpose of using sensors in robots is to enable them to operate more precisely in a closed loop. Force/torque sensors give robots feedback on the exerted force so they can make the necessary adjustments, as in [27]. In [35], force/torque sensors were incorporated to constantly measure the forces applied by the gripper to the fabric and to control processes such as laying and folding. In the same system, a camera was also used to track the manipulated object. A typical use of force/torque sensors in grippers designed for limp materials is to ensure a firm grip without damaging the product [14,23,32,74]. In [14], tactile sensors were placed on the gripper of a robot that collaborates with a human to keep a garment under steady tension without slack. A Kinect camera was also used because the two sensing modes are complementary: the tactile sensors cannot detect slack, and the camera that tracks the garment cannot perceive when the human moves away. Collaborative tension control of garments was also attempted in [75], but with two-wheeled mobile robots (WMR) instead of robot–human collaboration. A low-cost force-sensing system was mounted on the follower WMR of a leader–follower formation to control the applied forces via two independent PID controllers with force feedback.
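Force-feedback control of the kind used in [75] can be sketched with a discrete PID controller that holds a tension setpoint. The Python below adds output limits and conditional-integration anti-windup; the gains, limits, and the spring model of the garment are hypothetical, and this is not the controller of [75].

```python
from typing import Optional


class PID:
    """Discrete PID controller with output limits and conditional-integration
    anti-windup (the integral is frozen while the output is saturated)."""

    def __init__(self, kp: float, ki: float, kd: float, dt: float,
                 out_min: float, out_max: float) -> None:
        self.kp, self.ki, self.kd, self.dt = kp, ki, kd, dt
        self.out_min, self.out_max = out_min, out_max
        self._integral = 0.0
        self._prev_error: Optional[float] = None

    def update(self, setpoint: float, measured: float) -> float:
        error = setpoint - measured
        derivative = 0.0 if self._prev_error is None else (error - self._prev_error) / self.dt
        self._prev_error = error
        candidate = self._integral + error * self.dt
        output = self.kp * error + self.ki * candidate + self.kd * derivative
        if self.out_min < output < self.out_max:
            self._integral = candidate  # accept the integral step only when unsaturated
            return output
        output = self.kp * error + self.ki * self._integral + self.kd * derivative
        return min(max(output, self.out_min), self.out_max)


# Hypothetical plant: the garment behaves like a spring (100 N/m) and the
# controller commands the follower's velocity (m/s) to hold a 2 N tension.
stiffness, dt = 100.0, 0.01
pid = PID(kp=0.05, ki=0.06, kd=0.0, dt=dt, out_min=-0.05, out_max=0.05)
stretch = 0.0
for _ in range(1000):
    tension = stiffness * stretch
    stretch += pid.update(2.0, tension) * dt
print(round(stiffness * stretch, 3))  # settles at about 2.0 N
```

Freezing the integral while the velocity command is saturated prevents the large initial error from accumulating and causing a long overshoot once the follower catches up.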

In sewing processes, high tension forces develop, and control is necessary because high precision is required. In [29,34], automated sewing systems comprising both tension sensors and cameras were presented; the tension sensors measured the forces developing on the fabric, while the cameras were used to control the seam width.

Human–robot interaction applications implement very high safety standards [76] that require that the robot never exert a force that could harm the human. In [15], a humanoid robot was used to assist a person with limited mobility to dress the bottom half of their body. Force sensors were mounted on the robot’s grippers, and when inadequate forces were measured, a signal was sent to the system, activating a camera to identify the malfunction. Another bottom-dressing assistive robot uses a camera to supervise the process by identifying the region of the image where the clothes are, maintaining awareness of any complications [33]. In [17], a mannequin was dressed by a dual-arm robot. In subsequent research [16], where a real person replaced the mannequin, Kinect cameras were used to track the fingertips and the elbow of the person.

3.2.3. Object Classification

Current technological evolution and the growth of AI have made projects feasible that would have sounded unrealistic 20 years ago. One of the basic assets of intelligent machines is that they can make decisions based on data. This asset has been exploited in many current fabric-handling applications in order to recognize clothes and classify them into categories.

Fabric recognition and classification is not a simple task, and in many cases, multiple sensors and complex data processing are necessary. Tactile and vision sensors are the most common in applications of this kind, similar to how humans recognize a fabric by looking at it, rubbing it, or both. In [7], a 2D camera on a humanoid robot captured images of a fabric, identified its contour, and classified the fabric into a clothing category; then, depending on the category, an automated folding algorithm was implemented. In [36], wrinkles and other features were derived from images of fabrics, and a set of Gabor filters was applied to classify fabrics into clothing categories, with almost perfect results. In [37], fabrics were not classified into categories; instead, their material properties, like stiffness and density, were estimated.
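Gabor filters, as used in [36], respond strongly to texture at a particular orientation and spatial frequency. The Python below builds a real Gabor kernel and computes the mean squared filter response for two orientations, a common way of turning a filter bank into a feature vector; the kernel parameters and the synthetic stripe image are hypothetical, and the exact features of [36] may differ.

```python
import math
from typing import List

Matrix = List[List[float]]


def gabor_kernel(size: int, sigma: float, theta: float, wavelength: float,
                 gamma: float = 0.5, psi: float = 0.0) -> Matrix:
    """Real Gabor kernel: a Gaussian envelope times a cosine carrier.

    g(x, y) = exp(-(x'^2 + gamma^2 * y'^2) / (2 * sigma^2)) * cos(2*pi*x'/wavelength + psi),
    with x' = x*cos(theta) + y*sin(theta) and y' = -x*sin(theta) + y*cos(theta).
    """
    if size % 2 == 0:
        raise ValueError("size must be odd so the kernel has a centre pixel")
    half = size // 2
    cos_t, sin_t = math.cos(theta), math.sin(theta)
    kernel: Matrix = []
    for y in range(-half, half + 1):          # kernel rows correspond to y
        row = []
        for x in range(-half, half + 1):      # kernel columns correspond to x
            xr = x * cos_t + y * sin_t
            yr = -x * sin_t + y * cos_t
            envelope = math.exp(-(xr * xr + gamma * gamma * yr * yr) / (2.0 * sigma * sigma))
            row.append(envelope * math.cos(2.0 * math.pi * xr / wavelength + psi))
        kernel.append(row)
    return kernel


def response_energy(image: Matrix, kernel: Matrix) -> float:
    """Mean squared filter response over all positions where the kernel fits
    entirely inside the image (no padding)."""
    k = len(kernel)
    rows, cols = len(image) - k + 1, len(image[0]) - k + 1
    if rows <= 0 or cols <= 0:
        raise ValueError("image is smaller than the kernel")
    total = 0.0
    for r in range(rows):
        for c in range(cols):
            acc = sum(image[r + i][c + j] * kernel[i][j] for i in range(k) for j in range(k))
            total += acc * acc
    return total / (rows * cols)


# Synthetic texture with stripes that vary along x (a 4-pixel period).
stripes = [[math.cos(math.pi * c / 2) for c in range(16)] for _ in range(16)]
features = [response_energy(stripes, gabor_kernel(7, 2.0, t, 4.0)) for t in (0.0, math.pi / 2)]
print(features)  # the theta = 0 energy is far larger than the theta = pi/2 energy
```

A filter bank with several orientations and wavelengths yields one energy per filter, and that vector can be passed to any classifier.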

Depth sensors were used in [77,78] to estimate the category of grasped clothes and their hanging pose, depending on the grasping point. Greyscale images from an RGB-D sensor and a graph-based method were used in [79] for clothing classification. In [69,80], 2D and 3D images of clothes were used for grasping-point detection and clothing classification, both separately and combined, while in [81], a clothes-folding robot used an Xtion camera to categorize clothes. In [82], a 3D laser scanner scanned not fabrics but the molds in which fabrics were to be placed, and a handling method corresponding to the mold was applied.

Capacitive tactile sensors were used in [51,53] to discriminate between different textures. Khan et al. [53] used an array of 16 capacitive tactile sensors, and by using the data from each sensor as inputs to a support-vector machine (SVM), they obtained close to perfect results. In [58], force sensors combined with accelerometers were implemented in a biomimetic fingertip used for surface discrimination with a neural network. PVDF tactile sensors were used in another finger-shaped application serving the same purpose [55], and in [56], three different classification methods were applied, namely SVM, CNN, and k-nearest neighbors (kNN).
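Of the classifiers mentioned above, kNN is the simplest to sketch: a new sample receives the majority label of its k nearest training samples in feature space. The Python below assumes hypothetical, already-normalized two-dimensional tactile features and labels; it does not reproduce the features or data of [56].

```python
import math
from collections import Counter
from typing import Sequence, Tuple

Sample = Tuple[Sequence[float], str]


def knn_predict(train: Sequence[Sample], query: Sequence[float], k: int) -> str:
    """Label the query with the majority label of its k nearest training
    samples (Euclidean distance in feature space)."""
    if not 1 <= k <= len(train):
        raise ValueError("k must be between 1 and the number of training samples")
    nearest = sorted(train, key=lambda sample: math.dist(sample[0], query))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


# Hypothetical 2D texture features, e.g. (mean pressure, vibration energy), normalized.
train = [
    ((0.10, 0.20), "cotton"), ((0.15, 0.25), "cotton"),
    ((0.90, 0.80), "denim"), ((0.85, 0.90), "denim"),
    ((0.50, 0.10), "silk"),
]
print(knn_predict(train, (0.12, 0.22), k=3))  # → cotton
print(knn_predict(train, (0.88, 0.85), k=3))  # → denim
```

Because kNN relies directly on distances, features from different sensors should be scaled to comparable ranges before classification.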

4. Discussion

In this section, the case studies reviewed above are further analyzed and discussed, and statistical information is presented based on Table 1. In Table 1, the aforementioned applications, along with some additional ones, are classified into categories similar to those of Section 3 (Sensor Analysis). The first major classification was made according to the working principle of the sensors used. Visual sensors [83,84,85], tactile sensors [86], and body sensors [87] were the three major categories. Visual sensors were further divided into 1D [88], 2D [89,90,91,92], and 3D [93,94]. Besides the working principle of the sensors used, applications were also classified according to the data-processing task. Most applications used the data collected from the sensors to detect an object, an edge, or an obstacle [95,96,97]. Control of the process is also common [98,99,100], while object classification is becoming an increasingly popular task [94,101]. References describing applications that fit into more than one group are listed multiple times.

Table 1.

Classification of fabric-manipulation applications that use sensors.


| Sensor Type | | Object Detection | Control | Classifying |
|---|---|---|---|---|
| Visual Sensors | 1D | [22,23,25,26,27,28,29,87,88,95] | | |
| | 2D | [7,9,23,24,26,27,28,31,33,35,36,86,89,92,96,100] | [33,34,90,98] | [7,32,36,37,52,89,91,92] |
| | 3D | [3,5,6,8,10,11,12,15,40,41,42,69,70,71,78,80,81,83,84,85,92,93,94,97,99,101] | [5,6,14,15,16,17,74,99] | [4,41,42,43,52,69,77,78,79,80,81,82,92,94,97,101] |
| Tactile Sensors | | [6,28,47,48,68,86] | [14,18,23,32,34,35,74,86] | [32,49,51,52,53,54,55,56,57,58,59,70] |
| Body Sensors | | [48,61] | [13,15,27,29,35,60,61,75,87,100] | |
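Because some references appear in more than one cell, per-task and per-sensor totals must count distinct references rather than sum the cells. The snippet below transcribes Table 1 into a dictionary and computes those distinct counts; the transcription and the helper names are ours, offered only as a convenient way to check the statistics.

```python
from typing import Dict, List, Set

# Table 1 transcribed: sensor type -> task -> cited references.
TABLE_1: Dict[str, Dict[str, List[int]]] = {
    "1D": {
        "detection": [22, 23, 25, 26, 27, 28, 29, 87, 88, 95],
        "control": [],
        "classifying": [],
    },
    "2D": {
        "detection": [7, 9, 23, 24, 26, 27, 28, 31, 33, 35, 36, 86, 89, 92, 96, 100],
        "control": [33, 34, 90, 98],
        "classifying": [7, 32, 36, 37, 52, 89, 91, 92],
    },
    "3D": {
        "detection": [3, 5, 6, 8, 10, 11, 12, 15, 40, 41, 42, 69, 70, 71, 78, 80,
                      81, 83, 84, 85, 92, 93, 94, 97, 99, 101],
        "control": [5, 6, 14, 15, 16, 17, 74, 99],
        "classifying": [4, 41, 42, 43, 52, 69, 77, 78, 79, 80, 81, 82, 92, 94, 97, 101],
    },
    "tactile": {
        "detection": [6, 28, 47, 48, 68, 86],
        "control": [14, 18, 23, 32, 34, 35, 74, 86],
        "classifying": [32, 49, 51, 52, 53, 54, 55, 56, 57, 58, 59, 70],
    },
    "body": {
        "detection": [48, 61],
        "control": [13, 15, 27, 29, 35, 60, 61, 75, 87, 100],
        "classifying": [],
    },
}


def refs_for_task(task: str) -> Set[int]:
    """Distinct references that use any sensor type for `task`."""
    return set().union(*(row[task] for row in TABLE_1.values()))


def refs_for_sensor(sensor: str) -> Set[int]:
    """Distinct references that use `sensor` for any task."""
    return set().union(*TABLE_1[sensor].values())


print(len(refs_for_task("control")), len(refs_for_task("classifying")))  # 25 32
```

Per sensor type, the same helpers give 23 distinct references for 2D, 24 for tactile, and 11 for body sensors.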

Many types of sensors are described in the present review and are available to manufacturers and researchers. Knowledge of the application type and its basic operating requirements can lead to more informed and accurate sensor selection for factory automation and machine control. Identifying appropriate sensors for a specific application requires narrowing the search to a short list of candidates and testing them with the part, the actuator, or the machine under conditions representative of where the sensor will be installed. Once a short list has been identified, and before the final selection, it must be confirmed from the manufacturer's data sheets that the candidate sensors are compatible with the basic operating conditions of the application, such as temperature range, dimensions, supply-voltage range, and discrete or analog output.
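The data-sheet check described above is mechanical enough to script. The sketch below encodes a few of the listed conditions (temperature range, supply voltage, output type, size) and filters a short list of candidates. The class names, fields, and example numbers are hypothetical; a real check would cover whatever fields the application's data sheets actually specify.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class SensorSpec:
    """Hypothetical subset of data-sheet fields for one candidate sensor."""
    name: str
    temp_range_c: Tuple[float, float]    # rated operating temperature (deg C)
    supply_range_v: Tuple[float, float]  # accepted supply voltage (V)
    output: str                          # "analog" or "discrete"
    size_mm: float                       # largest housing dimension (mm)


@dataclass
class Requirements:
    """Hypothetical operating conditions of the application."""
    temp_range_c: Tuple[float, float]
    supply_v: float
    output: str
    max_size_mm: float


def violations(spec: SensorSpec, req: Requirements) -> List[str]:
    """List the requirements a sensor fails; an empty list means compatible."""
    problems = []
    # The sensor's rated range must cover the whole application range.
    if (req.temp_range_c[0] < spec.temp_range_c[0]
            or req.temp_range_c[1] > spec.temp_range_c[1]):
        problems.append("temperature")
    if not spec.supply_range_v[0] <= req.supply_v <= spec.supply_range_v[1]:
        problems.append("voltage")
    if spec.output != req.output:
        problems.append("output")
    if spec.size_mm > req.max_size_mm:
        problems.append("dimensions")
    return problems


def shortlist(candidates: List[SensorSpec], req: Requirements) -> List[str]:
    """Names of the candidates that pass every data-sheet check."""
    return [s.name for s in candidates if not violations(s, req)]


req = Requirements(temp_range_c=(0, 40), supply_v=24.0, output="analog", max_size_mm=40)
candidates = [
    SensorSpec("ir_a", (-10, 60), (10, 30), "analog", 30),
    SensorSpec("ir_b", (-10, 60), (3.0, 5.5), "analog", 30),
]
print(shortlist(candidates, req))  # ['ir_a']
```

Returning the list of violated conditions, rather than a plain yes/no, makes it easier to see why a candidate dropped off the short list.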

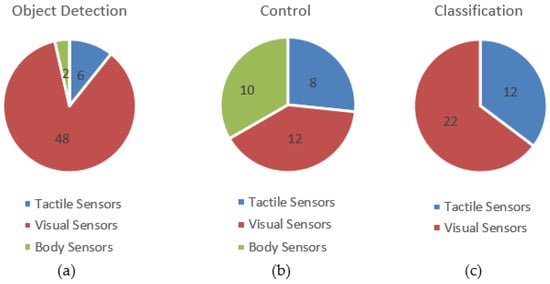

The first step in almost every fabric-handling process is the detection of the fabric. Visual sensors are mainly used for object detection, but tactile or body sensors can also be used (Figure 7a). Once the object is detected, determining the grasping point usually requires a camera and an algorithm that takes camera images as input and returns the optimal grasping point as output. This operation is very important because picking up the fabric at the correct point makes subsequent processes, such as folding or transportation, easier. In fact, the most common task among the reviewed applications is object detection, including detection of a specific part or point of the object, such as the grasping point. Object detection was performed in 53 out of 80 (66%) applications included in this review. Tactile and body sensors are typically used to recognize contact and to notice abnormal values in the applied forces; these sensors therefore reveal when contact is made with the object, not where the object is located. Visual sensors offer wider capabilities, as they can find an object in the workspace and provide its exact position and orientation. Of course, simplicity is sometimes more important, and smart design can extract a considerable amount of information without complex sensors, as in [27], where carefully positioned infrared sensors detected both the presence and the orientation of the fabric in a system that used a conveyor belt to feed fabrics.
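As a rough illustration of how two simple infrared sensors can yield both presence and orientation, consider two beam sensors mounted across a conveyor a known distance apart. If the fabric's leading edge interrupts the beams at different times, the belt travel between the two events gives the skew angle of the edge. This geometry and the function and parameter names below are assumptions for illustration; the arrangement used in [27] may differ.

```python
import math
from typing import Optional


def fabric_skew_deg(t_left: Optional[float], t_right: Optional[float],
                    belt_speed: float, sensor_spacing: float) -> Optional[float]:
    """Angle (degrees) between the fabric's leading edge and the sensor line.

    Assumed layout: two infrared beam sensors across the belt, `sensor_spacing`
    metres apart; t_left/t_right are the times (s) each beam was first
    interrupted, or None if it never was. Returns None when the fabric was not
    seen by both sensors, so the call doubles as a simple presence check.
    """
    if t_left is None or t_right is None:
        return None
    # Belt travel between the two detections (m); positive if the right side lags.
    lag = belt_speed * (t_right - t_left)
    return math.degrees(math.atan2(lag, sensor_spacing))


print(fabric_skew_deg(1.0, 1.5, belt_speed=0.1, sensor_spacing=0.05))
```

With a belt speed of 0.1 m/s, a 0.5 s lag, and sensors 5 cm apart, the edge is skewed by about 45 degrees; equal trigger times mean the edge is square to the belt.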

Figure 7.

Distribution of sensor types for different purposes. (a) Object detection, (b) control, (c) classification.

When it comes to controlling the handling process, where excessive forces must be avoided and grasping and handling stability are crucial, all kinds of sensors can be used (Figure 7b). Visual sensors can detect misplacement, tactile sensors can detect slippage or excessive forces, and body sensors can detect trajectory-planning failures and/or collisions. Control systems were implemented in 25 out of 80 (31%) applications included in this review.
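A minimal sketch of the tactile side of process control: monitor the grip-force signal during a hold phase, flag any sample above a force limit, and treat a sudden drop between consecutive samples as a possible slip. The thresholds and the drop-based slip rule are simplifying assumptions; practical slip detection often also relies on vibration or shear measurements.

```python
from typing import List, Sequence


def check_grip(forces: Sequence[float], max_force: float,
               slip_drop: float) -> List[str]:
    """Flag events in a grip-force series sampled while holding a fabric.

    'excessive_force': some sample exceeds max_force (N), risking damage.
    'slip': force fell by more than slip_drop (N) between consecutive samples,
    a crude hint that the fabric is sliding out of the grasp.
    """
    events = []
    if any(f > max_force for f in forces):
        events.append("excessive_force")
    if any(prev - cur > slip_drop for prev, cur in zip(forces, forces[1:])):
        events.append("slip")
    return events


print(check_grip([1.0, 1.2, 1.2, 0.5], max_force=2.0, slip_drop=0.3))  # ['slip']
```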

When high levels of automation are needed, a combination of different sensors together with AI and/or ML approaches is usually applied. Recognizing a fabric and discriminating between different fabric types is a key capability for an automated fabric-manipulation system, and it was present in 32 (40%) of the applications included in this review. Fabrics can be distinguished according to their feel, their structure, their composition, or their texture. Consequently, depending on what must be distinguished, two alternatives are available (Figure 7c). Visual sensors often obtain better results than tactile sensors in applications where the type of apparel needs to be identified, e.g., towel, t-shirt, or jeans. In cases where the material of the fabric must be identified, tactile sensors can prove more effective, as the surface texture provides more information.

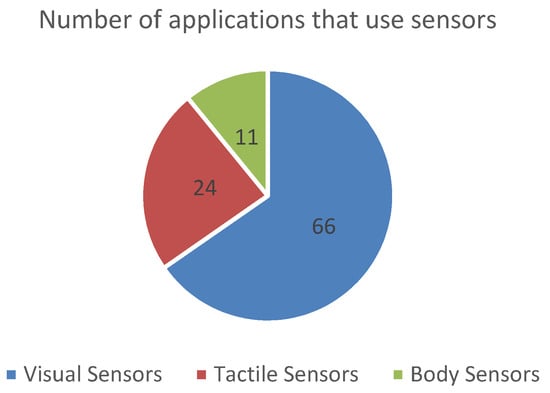

Most applications use visual sensors (Figure 8). Less than 20% of the applications included in this review (14 out of 80) implemented tactile or body sensors alone. Among applications requiring object detection, only 5 of 53 (9.4%) did not use visual sensors. Of the 66 applications that used visual sensors for any purpose, only 10 (15%) used 1D sensors, all of them exclusively for object detection; 23 (34.8%) used 2D sensors, and more than half (39, 59.1%) used 3D sensors. Tactile sensors are used for all three purposes: out of a total of 24 applications, seven use tactile sensors for object detection and seven for process control, while 12 use them for classification. Body sensors, on the other hand, are not suited to classification and are mostly used for process control (10 out of 11), usually to avoid harsh collisions.

Figure 8.

Quantitative distribution of each type of sensor.

Due to the rapid development of personal information platforms and the Internet of Things, more flexible and multifunctional sensors are being applied in daily life, for example in smart textiles, human health monitoring, and soft robotics. The fabric sensors presented in [66] show good and stable sensing performance, even after thousands of testing cycles. Moreover, fabric sensors have proven to offer good wearability and to be effective at detecting various human motions. One potential problem with stretch sensors, including fabric sensors, is hysteresis, which can be compensated for using ML algorithms [62,65].

There is no doubt that integrating sensing systems into fabric-handling applications that require dexterous manipulation is crucial for system performance and efficiency. Fabrics are sensitive to damage, and common robotic grippers are inadequate for handling them. One way to ensure final product quality is to use soft, compliant grippers that, owing to their flexibility, do not harm the fabric surface. Another option is to design a sophisticated automated system that can operate precisely and dexterously according to the needs of a fabric-manipulation process. To achieve this level of competence in an automated system, different types of existing sensors must be used, or new sensors must be designed. Visual sensors seem to be indispensable, since the quality of the information they provide can facilitate much of the process, mainly through object detection. Tactile sensors can complement visual sensors to enrich the feedback system. Body sensors are also important, although their use is more limited; they are useful when internal measurements of the robot state, such as applied torque or joint angles, are needed. For a fully automated system, integration of multiple types of sensors is recommended. However, adding sensors also increases the cost and complexity of an application, so a compromise must be struck between cost and level of autonomy.

5. Conclusions

In this review, a systematic analysis of different types of sensors was carried out, focusing on those that have been used in fabric-handling applications. An extensive review of published scientific papers was conducted to gather information on as many fabric-handling applications as possible, and this information was organized according to predefined criteria. The goal was to group information about sensing systems in a way that facilitates the future selection of appropriate hardware for fabric-handling applications. The use of automated systems is constantly increasing, and implementing appropriate sensing systems is crucial when developing such applications. Fabric handling has not been automated to the same extent as other fields, such as the automotive industry, which can be explained by the challenges encountered in applications such as those included in this review. We hope that researchers will maintain an active interest in this field and continue to advance it. In terms of future work, researchers could focus on grippers, in-hand manipulation strategies, or the textile types that pose greater or lesser challenges in automated applications.

Author Contributions

Conceptualization, P.N.K.; writing—original draft preparation, P.I.K.; writing—review and editing, P.N.K. and P.G.N. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Seesselberg, H.A. A Challenge to Develop Fully Automated Garment Manufacturing. In Sensory Robotics for the Handling of Limp Materials; Springer: Berlin/Heidelberg, Germany, 1990; pp. 53–67.

- Koustoumpardis, P.N.; Aspragathos, N.A. A Review of Gripping Devices for Fabric Handling. In Proceedings of the International Conference on Intelligent Manipulation and Grasping IMG04, Genova, Italy, 1–2 July 2004; pp. 229–234.

- Seita, D.; Jamali, N.; Laskey, M.; Kumar Tanwani, A.; Berenstein, R.; Baskaran, P.; Iba, S.; Canny, J.; Goldberg, K. Deep Transfer Learning of Pick Points on Fabric for Robot Bed-Making. arXiv 2018, arXiv:1809.09810.

- Osawa, F.; Seki, H.; Kamiya, Y. Unfolding of Massive Laundry and Classification Types by Dual Manipulator. J. Adv. Comput. Intell. Intell. Inform. 2007, 11, 457–463.

- Kita, Y.; Kanehiro, F.; Ueshiba, T.; Kita, N. Clothes handling based on recognition by strategic observation. In Proceedings of the 2011 11th IEEE-RAS International Conference on Humanoid Robots, Bled, Slovenia, 26–28 October 2011; pp. 53–58.

- Bersch, C.; Pitzer, B.; Kammel, S. Bimanual Robotic Cloth Manipulation for Laundry Folding. In Proceedings of the 2011 IEEE/RSJ International Conference on Intelligent Robots and Systems, San Francisco, CA, USA, 25–30 September 2011; pp. 1413–1419.

- Miller, S.; Berg, J.V.D.; Fritz, M.; Darrell, T.; Goldberg, K.; Abbeel, P. A geometric approach to robotic laundry folding. Int. J. Robot. Res. 2011, 31, 249–267.

- Maitin-Shepard, J.; Cusumano-Towner, M.; Lei, J.; Abbeel, P. Cloth grasp point detection based on multiple-view geometric cues with application to robotic towel folding. In Proceedings of the 2010 IEEE International Conference on Robotics and Automation, Anchorage, AK, USA, 3–7 May 2010; pp. 2308–2315.

- Osawa, F.; Seki, H.; Kamiya, Y. Clothes Folding Task by Tool-Using Robot. J. Robot. Mechatron. 2006, 18, 618–625.

- Yamazaki, K.; Inaba, M. A Cloth Detection Method Based on Image Wrinkle Feature for Daily Assistive Robots. In Proceedings of the MVA2009 IAPR International Conference on Machine Vision Applications, Yokohama, Japan, 20–22 May 2009; pp. 366–369. Available online: http://www.mva-org.jp/Proceedings/2009CD/papers/11-03.pdf (accessed on 19 April 2021).

- Twardon, L.; Ritter, H. Interaction skills for a coat-check robot: Identifying and handling the boundary components of clothes. In Proceedings of the 2015 IEEE International Conference on Robotics and Automation (ICRA), Seattle, WA, USA, 26–30 May 2015; pp. 3682–3688.

- Li, Y.; Hu, X.; Xu, D.; Yue, Y.; Grinspun, E.; Allen, P.K. Multi-Sensor Surface Analysis for Robotic Ironing. In Proceedings of the 2016 IEEE International Conference on Robotics and Automation (ICRA), Stockholm, Sweden, 16–21 May 2016; pp. 5670–5676.

- Yu, W.; Kapusta, A.; Tan, J.; Kemp, C.C.; Turk, G.; Liu, C.K. Haptic Simulation for Robot-Assisted Dressing. In Proceedings of the IEEE International Conference on Robotics and Automation, Singapore, 29 May–3 June 2017; pp. 6044–6051.

- Kruse, D.; Radke, R.J.; Wen, J.T. Collaborative Human-Robot Manipulation of Highly Deformable Materials. In Proceedings of the IEEE International Conference on Robotics and Automation, Seattle, WA, USA, 26–30 May 2015; pp. 3782–3787.

- Yamazaki, K.; Oya, R.; Nagahama, K.; Okada, K.; Inaba, M. Bottom Dressing by a Life-Sized Humanoid Robot Provided Failure Detection and Recovery Functions. In Proceedings of the 2014 IEEE/SICE International Symposium on System Integration, Tokyo, Japan, 13–15 December 2014; pp. 564–570.

- Joshi, R.P.; Koganti, N.; Shibata, T. A framework for robotic clothing assistance by imitation learning. Adv. Robot. 2019, 33, 1156–1174.

- Tamei, T.; Matsubara, T.; Rai, A.; Shibata, T. Reinforcement learning of clothing assistance with a dual-arm robot. In Proceedings of the 2011 11th IEEE-RAS International Conference on Humanoid Robots, Bled, Slovenia, 26–28 October 2011; pp. 733–738.

- Erickson, Z.; Clever, H.M.; Turk, G.; Liu, C.K.; Kemp, C.C. Deep Haptic Model Predictive Control for Robot-Assisted Dressing. In Proceedings of the 2018 IEEE International Conference on Robotics and Automation (ICRA), Brisbane, QLD, Australia, 21–25 May 2018; pp. 1–8.

- King, C.H.; Chen, T.L.; Jain, A.; Kemp, C.C. Towards an Assistive Robot That Autonomously Performs Bed Baths for Patient Hygiene. In Proceedings of the IEEE/RSJ 2010 International Conference on Intelligent Robots and Systems, Taipei, Taiwan, 18–22 October 2010; pp. 319–324.

- Clothes Perception and Manipulation (CloPeMa). Available online: http://clopemaweb.felk.cvut.cz/clothes-perception-and-manipulation-clopema-home/ (accessed on 17 May 2021).

- Koustoumpardis, P.N.; Aspragathos, N. Control and Sensor Integration in Designing Grippers for Handling Fabrics. In Proceedings of the 8th Internat. Workshop on RAAD’99, Munich, Germany, 17–19 June 1999; pp. 291–296.

- Balaguer, B.; Carpin, S. Combining Imitation and Reinforcement Learning to Fold Deformable Planar Objects. In Proceedings of the 2011 IEEE/RSJ International Conference on Intelligent Robots and Systems, San Francisco, CA, USA, 25–30 September 2011; pp. 1405–1412.

- Sahari, K.S.M.; Seki, H.; Kamiya, Y.; Hikizu, M. Clothes Manipulation by Robot Grippers with Roller Fingertips. Adv. Robot. 2010, 24, 139–158.

- Taylor, P.M.; Taylor, G.E. Sensory robotic assembly of apparel at Hull University. J. Intell. Robot. Syst. 1992, 6, 81–94.

- Kolluru, R.; Valavanis, K.P.; Steward, A.; Sonnier, M.J. A flat surface robotic gripper for handling limp material. IEEE Robot. Autom. Mag. 1995, 2, 19–26.

- Kolluru, R.; Valavanis, K.P.; Hebert, T.M. A robotic gripper system for limp material manipulation: Modeling, analysis and performance evaluation. In Proceedings of the 1997 IEEE International Conference on Robotics and Automation, Albuquerque, NM, USA, 25 April 1997; Volume 1, pp. 310–316.

- Hebert, T.; Valavanis, K.; Kolluru, R. A robotic gripper system for limp material manipulation: Hardware and software development and integration. In Proceedings of the 1997 IEEE International Conference on Robotics and Automation, Albuquerque, NM, USA, 25 April 1997; Volume 1, pp. 15–21.

- Doulgeri, Z.; Fahantidis, N. Picking up flexible pieces out of a bundle. IEEE Robot. Autom. Mag. 2002, 9, 9–19.

- Schrimpf, J.; Wetterwald, L.E. Experiments towards automated sewing with a multi-robot system. In Proceedings of the 2012 IEEE International Conference on Robotics and Automation, Saint Paul, MN, USA, 14–18 May 2012; pp. 5258–5263.

- Kelley, R.B. 2D Vision Techniques for the Handling of Limp Materials. In Sensory Robotics for the Handling of Limp Materials; Springer: Berlin/Heidelberg, Germany, 1990; pp. 141–157.

- Bergstrom, N.; Ek, C.H.; Kragic, D.; Yamakawa, Y.; Senoo, T.; Ishikawa, M. On-line learning of temporal state models for flexible objects. In Proceedings of the 2012 12th IEEE-RAS International Conference on Humanoid Robots (Humanoids 2012), Osaka, Japan, 29 November–1 December 2012; pp. 712–718.

- Le, T.-H.-L.; Jilich, M.; Landini, A.; Zoppi, M.; Zlatanov, D.; Molfino, R. On the Development of a Specialized Flexible Gripper for Garment Handling. J. Autom. Control Eng. 2013, 1, 255–259.

- Yamazaki, K.; Oya, R.; Nagahama, K.; Inaba, M. A Method of State Recognition of Dressing Clothes Based on Dynamic State Matching. In Proceedings of the 2013 IEEE/SICE International Symposium on System Integration, Kobe, Japan, 15–17 December 2013; pp. 406–411.

- Gershon, D.; Porat, I. Vision servo control of a robotic sewing system. In Proceedings of the 1988 IEEE International Conference on Robotics and Automation, Philadelphia, PA, USA, 24–29 April 1988; pp. 1830–1835.

- Paraschidis, K.; Fahantidis, N.; Vassiliadis, V.; Petridis, V.; Doulgeri, Z.; Petrou, L.; Hasapis, G. A robotic system for handling textile materials. In Proceedings of the 1995 IEEE International Conference on Robotics and Automation, Nagoya, Japan, 21–27 May 1995; Volume 2, pp. 1769–1774.

- Yamazaki, K.; Inaba, M. Clothing Classification Using Image Features Derived from Clothing Fabrics, Wrinkles and Cloth Overlaps. In Proceedings of the IEEE International Conference on Intelligent Robots and Systems, Tokyo, Japan, 3–7 November 2013; pp. 2710–2717.

- Bouman, K.L.; Xiao, B.; Battaglia, P.; Freeman, W.T. Estimating the Material Properties of Fabric from Video. In Proceedings of the IEEE International Conference on Computer Vision, Sydney, Australia, 1–8 December 2013.

- Shih, B.; Shah, D.; Li, J.; Thuruthel, T.G.; Park, Y.-L.; Iida, F.; Bao, Z.; Kramer-Bottiglio, R.; Tolley, M.T. Electronic skins and machine learning for intelligent soft robots. Sci. Robot. 2020, 5, eaaz9239.

- Domey, J.; Rioux, M.; Blais, F. 3-D Sensing for Robot Vision. In Sensory Robotics for the Handling of Limp Materials; Taylor, P.M., Ed.; Springer: Berlin/Heidelberg, Germany, 1990; pp. 159–192.

- Triantafyllou, D.; Koustoumpardis, P.; Aspragathos, N. Type independent hierarchical analysis for the recognition of folded garments’ configuration. Intell. Serv. Robot. 2021, 14, 427–444.

- Doumanoglou, A.; Kargakos, A.; Kim, T.-K.; Malassiotis, S. Autonomous active recognition and unfolding of clothes using random decision forests and probabilistic planning. In Proceedings of the 2014 IEEE International Conference on Robotics and Automation (ICRA), Hong Kong, China, 31 May–7 June 2014; pp. 987–993.

- Martínez, L.; Ruiz-Del-Solar, J.; Sun, L.; Siebert, J.P.; Aragon-Camarasa, G. Continuous perception for deformable objects understanding. Robot. Auton. Syst. 2019, 118, 220–230.

- Gabas, A.; Corona, E.; Alenyà, G.; Torras, C. Robot-Aided Cloth Classification Using Depth Information and CNNs. In Proceedings of the International Conference on Articulated Motion and Deformable Objects, Palma de Mallorca, Spain, 13–15 July 2016; Springer: Cham, Switzerland, 2016; pp. 16–23.

- Paul, F.W.; Torgerson, E. Tactile Sensors: Application Assessment for Robotic Handling of Limp Materials. In Sensory Robotics for the Handling of Limp Materials; Springer: Berlin/Heidelberg, Germany, 1990; pp. 227–237.

- Chathuranga, K.V.D.S.; Ho, V.A.; Hirai, S. A bio-mimetic fingertip that detects force and vibration modalities and its application to surface identification. In Proceedings of the 2012 IEEE International Conference on Robotics and Biomimetics (ROBIO), Guangzhou, China, 11–14 December 2012; pp. 575–581.

- Shih, B.; Drotman, D.; Christianson, C.; Huo, Z.; White, R.; Christensen, H.I.; Tolley, M.T. Custom soft robotic gripper sensor skins for haptic object visualization. In Proceedings of the 2017 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Vancouver, BC, Canada, 24–28 September 2017; pp. 494–501.

- Ono, E.; Okabe, H.; Akami, H.; Aisaka, N. Robot Hand with a Sensor for Cloth Handling. J. Text. Mach. Soc. Jpn. 1991, 37, 14–24.

- Platt, R.; Permenter, F.; Pfeiffer, J. Using Bayesian Filtering to Localize Flexible Materials during Manipulation. IEEE Trans. Robot. 2011, 27, 586–598.

- Jamali, N.; Sammut, C. Majority Voting: Material Classification by Tactile Sensing Using Surface Texture. IEEE Trans. Robot. 2011, 27, 508–521.

- Li, S.; Zhao, H. Flexible and Stretchable Sensors for Fluidic Elastomer Actuated Soft Robots. MRS Bull. 2017, 42, 138–142.

- Muhammad, H.B.; Recchiuto, C.; Oddo, C.M.; Beccai, L.; Anthony, C.J.; Adams, M.J.; Carrozza, M.C.; Ward, M.C.L. A Capacitive Tactile Sensor Array for Surface Texture Discrimination. Microelectron. Eng. 2011, 88, 1811–1813.

- Kampouris, C.; Mariolis, I.; Peleka, G.; Skartados, E.; Kargakos, A.; Triantafyllou, D.; Malassiotis, S. Multi-sensorial and explorative recognition of garments and their material properties in unconstrained environment. In Proceedings of the 2016 IEEE International Conference on Robotics and Automation (ICRA), Stockholm, Sweden, 16–21 May 2016; pp. 1656–1663.

- Khan, A.A.; Khosravi, M.; Denei, S.; Maiolino, P.; Kasprzak, W.; Mastrogiovanni, F.; Cannata, G. A Tactile-Based Fabric Learning and Classification Architecture. In Proceedings of the 2016 IEEE International Conference on Information and Automation for Sustainability (ICIAfS), Galle, Sri Lanka, 16–19 December 2016.

- De Boissieu, F.; Godin, C.; Guilhamat, B.; David, D.; Serviere, C.; Baudois, D. Tactile Texture Recognition with a 3-Axial Force MEMS Integrated Artificial Finger. In Robotics: Science and Systems; Trinkle, J., Matsuoka, Y., Castellanos, J.A., Eds.; The MIT Press: Cambridge, MA, USA, 2009; pp. 49–56.

- Hu, H.; Han, Y.; Song, A.; Chen, S.; Wang, C.; Wang, Z. A Finger-Shaped Tactile Sensor for Fabric Surfaces Evaluation by 2-Dimensional Active Sliding Touch. Sensors 2014, 14, 4899–4913.

- Drimus, A.; Borlum Petersen, M.; Bilberg, A. Object Texture Recognition by Dynamic Tactile Sensing Using Active Exploration. In Proceedings of the IEEE International Workshop on Robot and Human Interactive Communication, Paris, France, 9–13 September 2012; pp. 277–283.

- Sinapov, J.; Sukhoy, V.; Sahai, R.; Stoytchev, A. Vibrotactile Recognition and Categorization of Surfaces by a Humanoid Robot. IEEE Trans. Robot. 2011, 27, 488–497.

- Chathuranga, D.S.; Hirai, S. Investigation of a Biomimetic Fingertip’s Ability to Discriminate Based on Surface Textures. In Proceedings of the 2013 IEEE/ASME International Conference on Advanced Intelligent Mechatronics, Wollongong, NSW, Australia, 9–12 July 2013.

- Chathuranga, D.S.; Wang, Z.; Noh, Y.; Nanayakkara, T.; Hirai, S. Robust real time material classification algorithm using soft three axis tactile sensor: Evaluation of the algorithm. In Proceedings of the 2015 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Hamburg, Germany, 28 September–2 October 2015; pp. 2093–2098.

- Ku, S.; Myeong, J.; Kim, H.-Y.; Park, Y.-L. Delicate Fabric Handling Using a Soft Robotic Gripper with Embedded Microneedles. IEEE Robot. Autom. Lett. 2020, 5, 4852–4858.

- Kondratas, A. Robotic Gripping Device for Garment Handling Operations and Its Adaptive Control. Fibres Text. East. Eur. 2005, 13, 84–89.

- Ejupi, A.; Ferrone, A.; Menon, C. Quantification of Textile-Based Stretch Sensors Using Machine Learning: An Exploratory Study. In Proceedings of the IEEE RAS and EMBS International Conference on Biomedical Robotics and Biomechatronics, Enschede, The Netherlands, 26–29 August 2018; pp. 254–259.

- Cermak, D. How Artificial Intelligence Is Transforming the Textile Industry. Available online: https://www.iotforall.com/how-artificial-intelligence-is-transforming-the-textile-industry (accessed on 3 September 2021).

- Bahadir, S.K.; Koncar, V.; Kalaoglu, F. Wearable obstacle detection system fully integrated to textile structures for visually impaired people. Sens. Actuators A Phys. 2012, 179, 297–311.

- Vu, C.C.; Kim, J. Human Motion Recognition by Textile Sensors Based on Machine Learning Algorithms. Sensors 2018, 18, 3109.

- Pyka, W.; Jedrzejowski, M.; Chudy, M.; Krafczyk, W.; Tokarczyk, O.; Dziezok, M.; Bzymek, A.; Bysko, S.; Blachowicz, T.; Ehrmann, A. On the Use of Textile Materials in Robotics. J. Eng. Fibers Fabr. 2020, 15.

- Xiong, J.; Chen, J.; Lee, P.S. Functional Fibers and Fabrics for Soft Robotics, Wearables, and Human–Robot Interface. Adv. Mater. 2021, 33, e2002640.

- Koustoumpardis, P.N.; Nastos, K.X.; Aspragathos, N.A. Underactuated 3-Finger Robotic Gripper for Grasping Fabrics. In Proceedings of the 2014 23rd International Conference on Robotics in Alpe-Adria-Danube Region (RAAD), Smolenice, Slovakia, 3–5 September 2014.

- Ramisa, A.; Alenya, G.; Moreno-Noguer, F.; Torras, C. Learning RGB-D descriptors of garment parts for informed robot grasping. Eng. Appl. Artif. Intell. 2014, 35, 246–258.

- Yuan, W.; Mo, Y.; Wang, S.; Adelson, E.H. Active Clothing Material Perception Using Tactile Sensing and Deep Learning. In Proceedings of the 2018 IEEE International Conference on Robotics and Automation (ICRA), Brisbane, QLD, Australia, 21–25 May 2018; pp. 1–8.

- Monso, P.; Alenya, G.; Torras, C. POMDP approach to robotized clothes separation. In Proceedings of the 2012 IEEE/RSJ International Conference on Intelligent Robots and Systems, Vilamoura-Algarve, Portugal, 7–12 October 2012; pp. 1324–1329. [Google Scholar]

- Huang, S.H.; Pan, J.; Mulcaire, G.; Abbeel, P. Leveraging appearance priors in non-rigid registration, with application to manipulation of deformable objects. In Proceedings of the 2015 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Hamburg, Germany, 28 September–2 October 2015; pp. 878–885. [Google Scholar]

- A Katkar, S.; Karikatti, G.; Ladawa, S. Bvbcet Material Handling Robot with Obstacle Detection. Int. J. Eng. Res. 2015, V4, 560–566. [Google Scholar] [CrossRef]

- Li, Y.; Yue, Y.; Xu, D.; Grinspun, E.; Allen, P.K. Folding deformable objects using predictive simulation and trajectory optimization. In Proceedings of the 2015 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Hamburg, Germany, 28 September–2 October 2015; pp. 6000–6006. [Google Scholar]

- Dadiotis, I.D.; Sakellariou, J.S.; Koustoumpardis, P.N. Development of a Low-Cost Force Sensory System for Force Control via Small Grippers of Cooperative Mobile Robots Used for Fabric Manipulation. Adv. Mech. Mach. Sci. 2021, 102, 47–58. [Google Scholar] [CrossRef]

- ISO/TS 15066:2016; Robots and Robotic Devices-Collaborative Robots. International Organization for Standardization: Geneva, Switzerland, 2016.

- Li, Y.; Chen, C.-F.; Allen, P.K. Recognition of deformable object category and pose. In Proceedings of the 2014 IEEE International Conference on Robotics and Automation (ICRA), Hong Kong, China, 31 May–7 June 2014; pp. 5558–5564. [Google Scholar]

- Li, Y.; Wang, Y.; Yue, Y.; Xu, D.; Case, M.; Chang, S.-F.; Grinspun, E.; Allen, P.K. Model-Driven Feedforward Prediction for Manipulation of Deformable Objects. IEEE Trans. Autom. Sci. Eng. 2018, 15, 1621–1638. [Google Scholar] [CrossRef]

- Thewsuwan, S.; Horio, K. Texture-Based Features for Clothing Classification via Graph-Based Representation. J. Signal Process. 2018, 22, 299–305. [Google Scholar] [CrossRef]

- Corona, E.; Alenya, G.; Gabas, A.; Torras, C. Active garment recognition and target grasping point detection using deep learing. Pattern Recognit. 2018, 74, 629–641. [Google Scholar] [CrossRef]

- Stria, J.; Prusa, D.; Hlavac, V.; Wagner, L.; Petrik, V.; Krsek, P.; Smutny, V. Garment perception and its folding using a dual-arm robot. In Proceedings of the 2014 IEEE/RSJ International Conference on Intelligent Robots and Systems, Chicago, IL, USA, 14–18 September 2014; pp. 61–67. [Google Scholar]

- Molfino, R.; Zoppi, M.; Cepolina, F.; Yousef, J.; Cepolina, E.E. Design of a Hyper-Flexible Cell for Handling 3D Carbon Fiber Fabric. Recent Adv. Mech. Eng. Mech. 2014, 165, 165–170. [Google Scholar]

- Willimon, B.; Birchfield, S.; Walker, I. Model for Unfolding Laundry Using Interactive Perception. In Proceedings of the 2011 IEEE/RSJ International Conference on Intelligent Robots and Systems, San Francisco, CA, USA, 25–30 September 2011; pp. 4871–4876. [Google Scholar] [CrossRef]

- Shibata, M.; Ota, T.; Hirai, S. Wiping Motion for Deformable Object Handling. In Proceedings of the IEEE International Conference on Robotics and Automation, Kobe, Japan, 12–17 May 2009; pp. 134–139. [Google Scholar]

- Ramisa, A.; Alenyà, G.; Moreno-Noguer, F.; Torras, C. Using Depth and Appearance Features for Informed Robot Grasping of Highly Wrinkled Clothes. In Proceedings of the 2012 IEEE International Conference on Robotics and Automation, Saint Paul, MN, USA, 14–18 May 2012; pp. 1703–1708. [Google Scholar]

- Ono, E.; Ichijo, H.; Aisaka, N. Flexible Robotic Hand for Handling Fabric Pieces in Garment Manufacture. Int. J. Cloth. Sci. Technol. 1992, 4, 16–23. [Google Scholar] [CrossRef]

- Potluri, P.; Atkinson, J.; Porat, I. A robotic flexible test system (FTS) for fabrics. Mechatronics 1995, 5, 245–278.

- Seliger, G.; Gutsche, C.; Hsieh, L.-H. Process Planning and Robotic Assembly System Design for Technical Textile Fabrics. CIRP Ann. 1992, 41, 33–36.

- Hamajima, K.; Kakikura, M. Planning strategy for task of unfolding clothes. Robot. Auton. Syst. 2000, 32, 145–152.

- Buckingham, R.; Newell, G. Automating the manufacture of composite broadgoods. Compos. Part A Appl. Sci. Manuf. 1996, 27, 191–200.

- Cusumano-Towner, M.; Singh, A.; Miller, S.; O’Brien, J.F.; Abbeel, P. Bringing clothing into desired configurations with limited perception. In Proceedings of the 2011 IEEE International Conference on Robotics and Automation, Shanghai, China, 9–13 May 2011.

- Willimon, B.; Walker, I.; Birchfield, S. Classification of Clothing Using Midlevel Layers. ISRN Robot. 2013, 2013, 1–17.

- Kita, Y.; Saito, F.; Kita, N. A deformable model driven visual method for handling clothes. In Proceedings of the IEEE International Conference on Robotics and Automation, New Orleans, LA, USA, 26 April–1 May 2004; Volume 4, pp. 3889–3895.

- Willimon, B.; Birchfield, S.; Walker, I. Classification of Clothing Using Interactive Perception. In Proceedings of the 2011 IEEE International Conference on Robotics and Automation, Shanghai, China, 9–13 May 2011; pp. 1862–1868.

- Potluri, P.; Atkinson, J. Automated manufacture of composites: Handling, measurement of properties and lay-up simulations. Compos. Part A Appl. Sci. Manuf. 2003, 34, 493–501.

- Triantafyllou, D.; Aspragathos, N.A. A Vision System for the Unfolding of Highly Non-Rigid Objects on a Table by One Manipulator. In Lecture Notes in Computer Science (Including Subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics); Springer: Berlin/Heidelberg, Germany, 2011; pp. 509–519.

- Attayyab Khan, A.; Iqbal, J.; Rasheed, T. Feature Extraction of Garments Based on Gaussian Mixture for Autonomous Robotic Manipulation. In Proceedings of the 2017 First International Conference on Latest trends in Electrical Engineering and Computing Technologies (INTELLECT), Karachi, Pakistan, 15–16 November 2017.

- Brown, P.R.; Buchanan, D.R.; Clapp, T.G. Large-deflexion Bending of Woven Fabric for Automated Material-handling. J. Text. Inst. 1990, 81, 1–14.

- Sun, L.; Aragon-Camarasa, G.; Rogers, S.; Siebert, J.P. Accurate garment surface analysis using an active stereo robot head with application to dual-arm flattening. In Proceedings of the 2015 IEEE International Conference on Robotics and Automation (ICRA), Seattle, WA, USA, 26–30 May 2015; pp. 185–192.

- Karakerezis, A.; Ippolito, M.; Doulgeri, Z.; Rizzi, C.; Cugini, C.; Petridis, V. Robotic Handling for Flat Non-Rigid Materials. In Proceedings of the IEEE International Conference on Systems, Man and Cybernetics, San Antonio, TX, USA, 2–5 October 1994; pp. 937–946.

- Li, Y.; Xu, D.; Yue, Y.; Wang, Y.; Chang, S.-F.; Grinspun, E.; Allen, P.K. Regrasping and unfolding of garments using predictive thin shell modeling. In Proceedings of the 2015 IEEE International Conference on Robotics and Automation (ICRA), Seattle, WA, USA, 26–30 May 2015; pp. 1382–1388.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).