Evaluating the Forest Ecosystem through a Semi-Autonomous Quadruped Robot and a Hexacopter UAV

Abstract

1. Introduction

- We, for the first time, deploy a quadruped robot in a forest landscape and report several vital observations around the robot’s movement dynamics and ability to navigate and survey various forest sites.

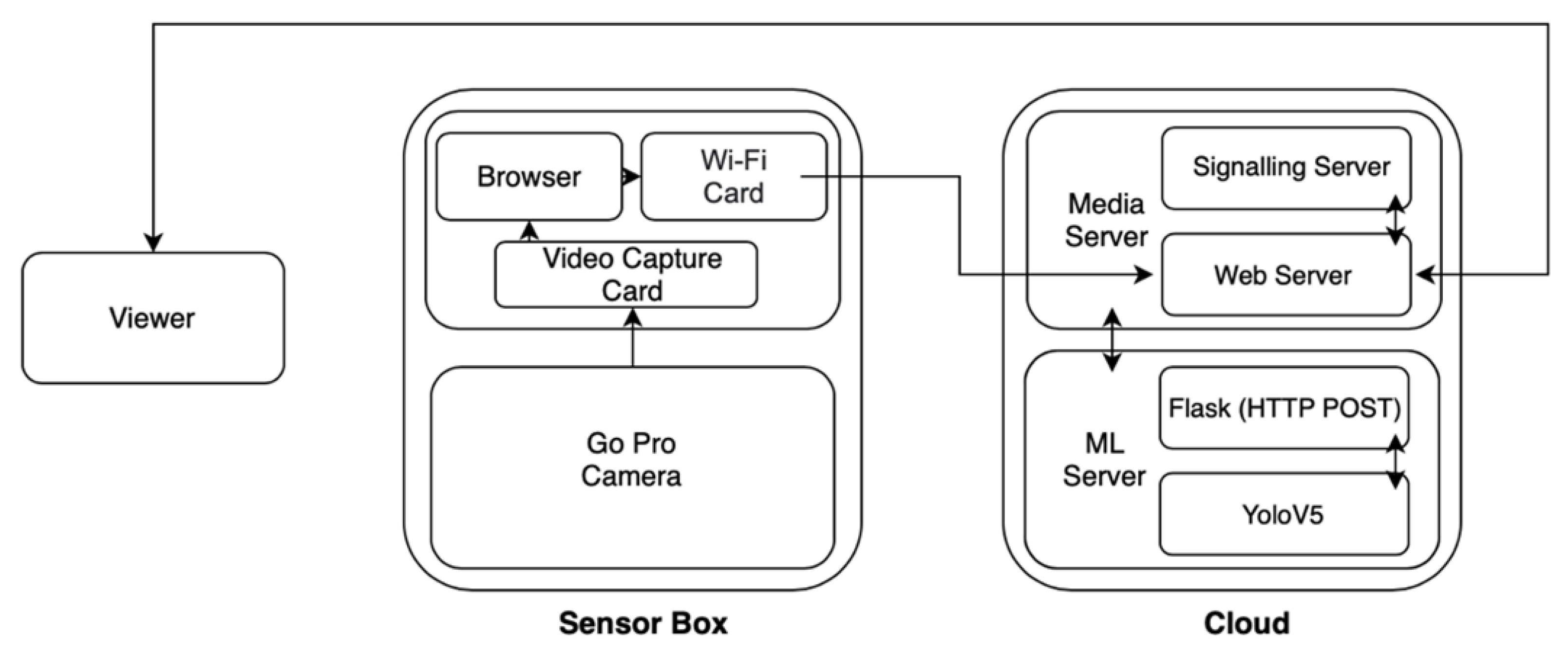

- We define new methods to integrate evolving technologies such as AI, cloud computing, streaming protocols and wireless communication systems to realise end-to-end low-latency detection.

- We propose a method to maximise the uptime of the monitoring system through load balancing and adaptive offloading mechanisms.

- We analyse several state-of-the-art object-detection systems for their ability to accurately detect forest health indicators (e.g., tree species, burrows, deadwood, persons and fire) in real time.
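
The adaptive offloading idea named in the contributions above can be illustrated with a minimal sketch. It is not the authors' implementation: the `LinkStatus` type, the `choose_backend` function and both thresholds are hypothetical and chosen only for illustration. The sketch assumes the robot periodically measures its link to the cloud and runs detection on-board whenever the link looks too weak to carry the video stream.

```python
from dataclasses import dataclass


@dataclass
class LinkStatus:
    """Hypothetical snapshot of the wireless link, as measured by the robot."""
    rtt_ms: float        # round-trip time to the cloud endpoint, in milliseconds
    uplink_mbps: float   # available uplink throughput, in Mbit/s


def choose_backend(status: LinkStatus,
                   max_rtt_ms: float = 150.0,
                   min_uplink_mbps: float = 4.0) -> str:
    """Return "cloud" when the link can carry the stream, otherwise "edge".

    Both thresholds are illustrative assumptions, not values from the paper.
    """
    link_ok = (status.rtt_ms <= max_rtt_ms
               and status.uplink_mbps >= min_uplink_mbps)
    return "cloud" if link_ok else "edge"


if __name__ == "__main__":
    samples = (LinkStatus(40.0, 12.0), LinkStatus(400.0, 12.0), LinkStatus(40.0, 0.5))
    for sample in samples:
        print(sample, "->", choose_backend(sample))
```

In practice such a rule would probably need hysteresis so that the system does not flip between backends on every measurement; that refinement is left out here to keep the sketch short.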

2. Literature Review

2.1. Robotics in Forestry

2.2. AI and Machine Learning in Forestry

3. Materials and Methods

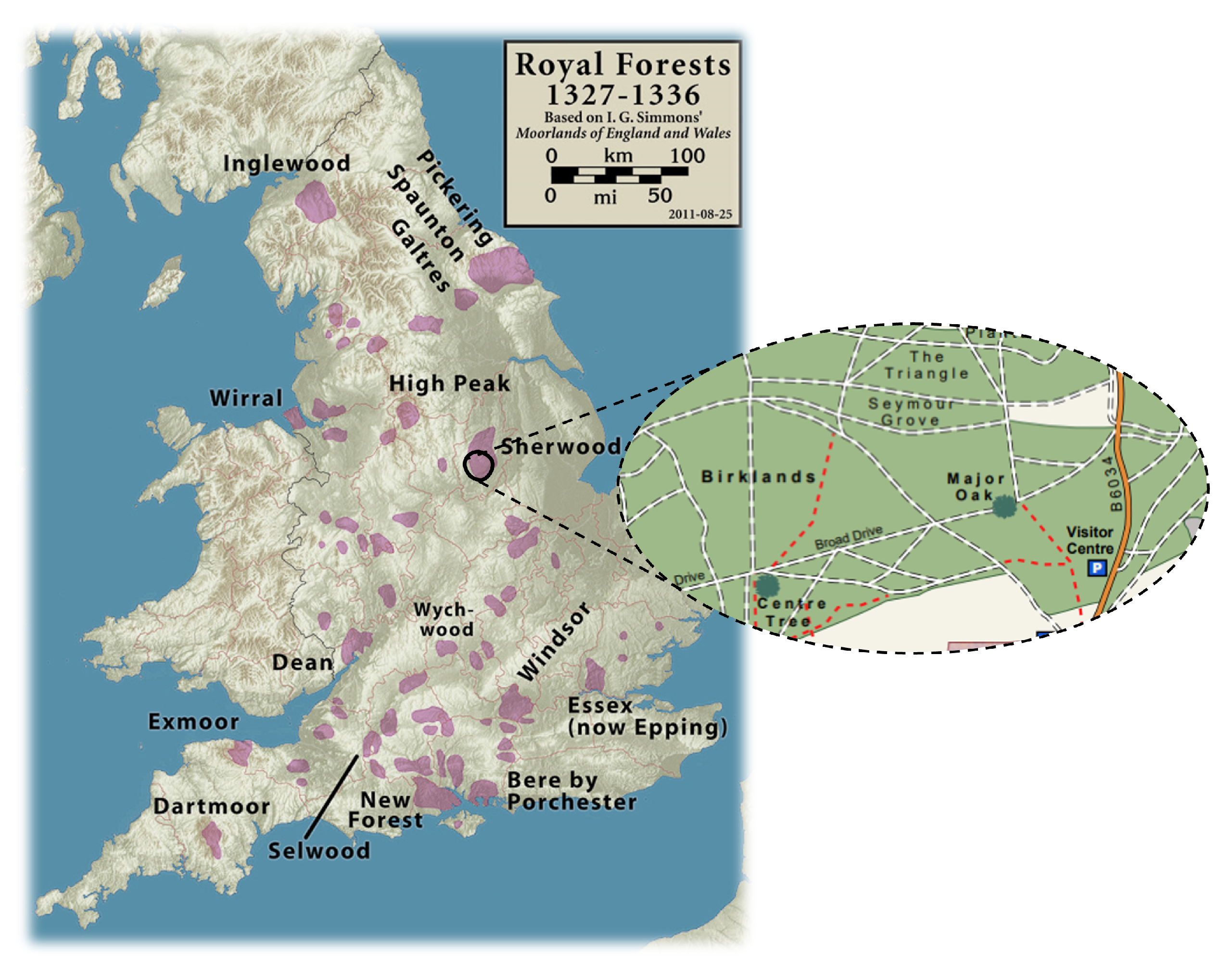

3.1. Study Area

3.2. Robotic System

3.2.1. Aliengo

- Reasonable battery life: ranging between 120 and 270 min depending on endurance;

- Payload capacity of up to 12 kg;

- Locomotion speeds of up to 6 km/h;

- Jetson TX2 for fast computational processing;

- Mini-PC for user logic control;

- Embedded power management system;

- External manual remote controller;

- Option to integrate additional tracking, positioning, imaging and communication devices.
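
As a rough worked example, the speed and battery figures listed above give an optimistic upper bound on the distance the robot could cover on one charge. The sketch assumes the robot moves at its top speed for the whole battery life, which is unlikely in a forest; the function name `max_distance_km` is introduced here purely for illustration.

```python
# Figures taken from the specification list above.
MAX_SPEED_KMH = 6.0            # top locomotion speed
BATTERY_LIFE_MIN = (120, 270)  # shortest and longest quoted battery life


def max_distance_km(speed_kmh: float, minutes: float) -> float:
    """Distance covered at constant speed for the given number of minutes."""
    return speed_kmh * minutes / 60.0


if __name__ == "__main__":
    shortest, longest = (max_distance_km(MAX_SPEED_KMH, m) for m in BATTERY_LIFE_MIN)
    print(f"Upper-bound range per charge: {shortest:.0f} to {longest:.0f} km")
```

The longer battery life is presumably reached at lower speeds and lighter loads, so the real survey range is likely well below the upper end of this estimate.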

3.2.2. Air6 System

3.2.3. Multi-Modal Sensing

3.2.4. Robot Perception Sensors

3.2.5. External Sensing Platform (ESP)

3.2.6. UAV Sensors

3.3. Data Acquisition

3.4. Key Performance Metrics

4. Results and Discussion

4.1. Locomotion

4.2. 4G/Wi-Fi Connectivity for Data Transfer

4.3. Object Detection in Forest

4.4. Challenges

4.4.1. 5G Network Operational Limitations

4.4.2. UAV and UGV Constraints

4.4.3. Data Streaming and Object Detection

4.4.4. Data Limitations

4.5. Future Works

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Components | Features | Remarks |
|---|---|---|
| Motor power | 6 × 750 W | Brushless |
| Weight | 4 kg | Without payload |
| Maximum take-off weight | 6.5 kg | - |
| Flight controller | PixHawk | Redundant controller |
| LiPo battery pack | 2 × LiPo 3700 mAh 6S | 1 flight pack = 2 batteries |
| RC remote control | 2.4 GHz | Range approx. 1500 m (no obstacles) |
| FPV video uplink | Analog 5.8 GHz | Digital 5.8 GHz |
| Gimbal (camera mount) | 2–3 axis, brushless | Remotely operated via remote control |
| Maximum wind speed | 10 m/s | - |
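
Two quantities follow directly from the hexacopter table above: the payload margin and the nominal energy of one flight pack. The sketch below works them out. It assumes a nominal 3.7 V per LiPo cell, a common convention that the table does not state, and the function names are introduced here for illustration only.

```python
# Values from the hexacopter specification table.
EMPTY_WEIGHT_KG = 4.0        # weight without payload
MAX_TAKEOFF_KG = 6.5         # maximum take-off weight
CAPACITY_MAH = 3700          # capacity of one battery
CELLS_IN_SERIES = 6          # "6S"
BATTERIES_PER_PACK = 2       # one flight pack = 2 batteries

# Assumption: nominal LiPo cell voltage, not given in the table.
NOMINAL_CELL_VOLTAGE = 3.7


def payload_margin_kg() -> float:
    """Mass available for sensors and other payload."""
    return MAX_TAKEOFF_KG - EMPTY_WEIGHT_KG


def flight_pack_energy_wh() -> float:
    """Nominal energy of one flight pack in watt-hours."""
    pack_voltage = CELLS_IN_SERIES * NOMINAL_CELL_VOLTAGE
    energy_per_battery = (CAPACITY_MAH / 1000.0) * pack_voltage
    return BATTERIES_PER_PACK * energy_per_battery


if __name__ == "__main__":
    print(f"Payload margin: {payload_margin_kg():.1f} kg")
    print(f"Flight pack energy: {flight_pack_energy_wh():.0f} Wh (nominal)")
```

Under these assumptions the payload margin is 2.5 kg and the flight pack stores roughly 164 Wh. The energy figure is the same whether the two batteries are wired in series or in parallel.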

Model size: YOLOv5x, 173 MB; YOLOv5s, 14.5 MB.

| Class | YOLOv5x Precision | YOLOv5x Recall | YOLOv5x mAP@50 | YOLOv5s Precision | YOLOv5s Recall | YOLOv5s mAP@50 |
|---|---|---|---|---|---|---|
| Burrow | 70 | 66 | 64 | 79 | 64 | 67 |
| Deadwood | 68 | 60 | 62 | 67 | 56 | 58 |
| Pine | 63 | 52 | 53 | 63 | 62 | 55 |
| Grass | 88 | 30 | 40 | 64 | 30 | 35 |
| Oak | 39 | 0.09 | 10 | 28 | 17 | 13 |
| Wood | 39 | 30 | 23 | 36 | 38 | 27 |
| Fire | 85 | 65 | 75 | 81 | 74 | 71 |
| Pedestrian | 65 | 35 | 40 | 58 | 41 | 43 |
| Person (Thermal) | 30 | 20 | 28 | 25 | 20 | 30 |
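
The mAP@50 columns above count a detection as correct when its Intersection over Union (IoU) with a ground-truth box is at least 0.5. The sketch below shows that overlap measure, plus an F1 score that combines a precision and recall pair into one number. F1 is not reported in the table; it is derived here only as an illustration. The function names and the `(x1, y1, x2, y2)` box layout are assumptions of this sketch.

```python
def iou(box_a, box_b):
    """Intersection over Union for two boxes given as (x1, y1, x2, y2)."""
    # Corners of the overlapping rectangle, if any.
    ix1 = max(box_a[0], box_b[0])
    iy1 = max(box_a[1], box_b[1])
    ix2 = min(box_a[2], box_b[2])
    iy2 = min(box_a[3], box_b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)

    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def is_match_at_50(pred_box, truth_box):
    """True when a prediction would count as a hit under the mAP@50 criterion."""
    return iou(pred_box, truth_box) >= 0.5


def f1_score(precision, recall):
    """Harmonic mean of precision and recall, on the same scale as the inputs."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


if __name__ == "__main__":
    # Fire class from the table: YOLOv5x (85, 65) and YOLOv5s (81, 74).
    print(f"Fire F1, YOLOv5x: {f1_score(85, 65):.1f}")
    print(f"Fire F1, YOLOv5s: {f1_score(81, 74):.1f}")
```

For the Fire class this gives an F1 of about 73.7 for YOLOv5x and 77.3 for YOLOv5s, so the smaller model balances precision and recall slightly better on that class even though its mAP@50 is lower.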

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Idrissi, M.; Hussain, A.; Barua, B.; Osman, A.; Abozariba, R.; Aneiba, A.; Asyhari, T. Evaluating the Forest Ecosystem through a Semi-Autonomous Quadruped Robot and a Hexacopter UAV. Sensors 2022, 22, 5497. https://doi.org/10.3390/s22155497