Gaze Estimation Approach Using Deep Differential Residual Network

Abstract

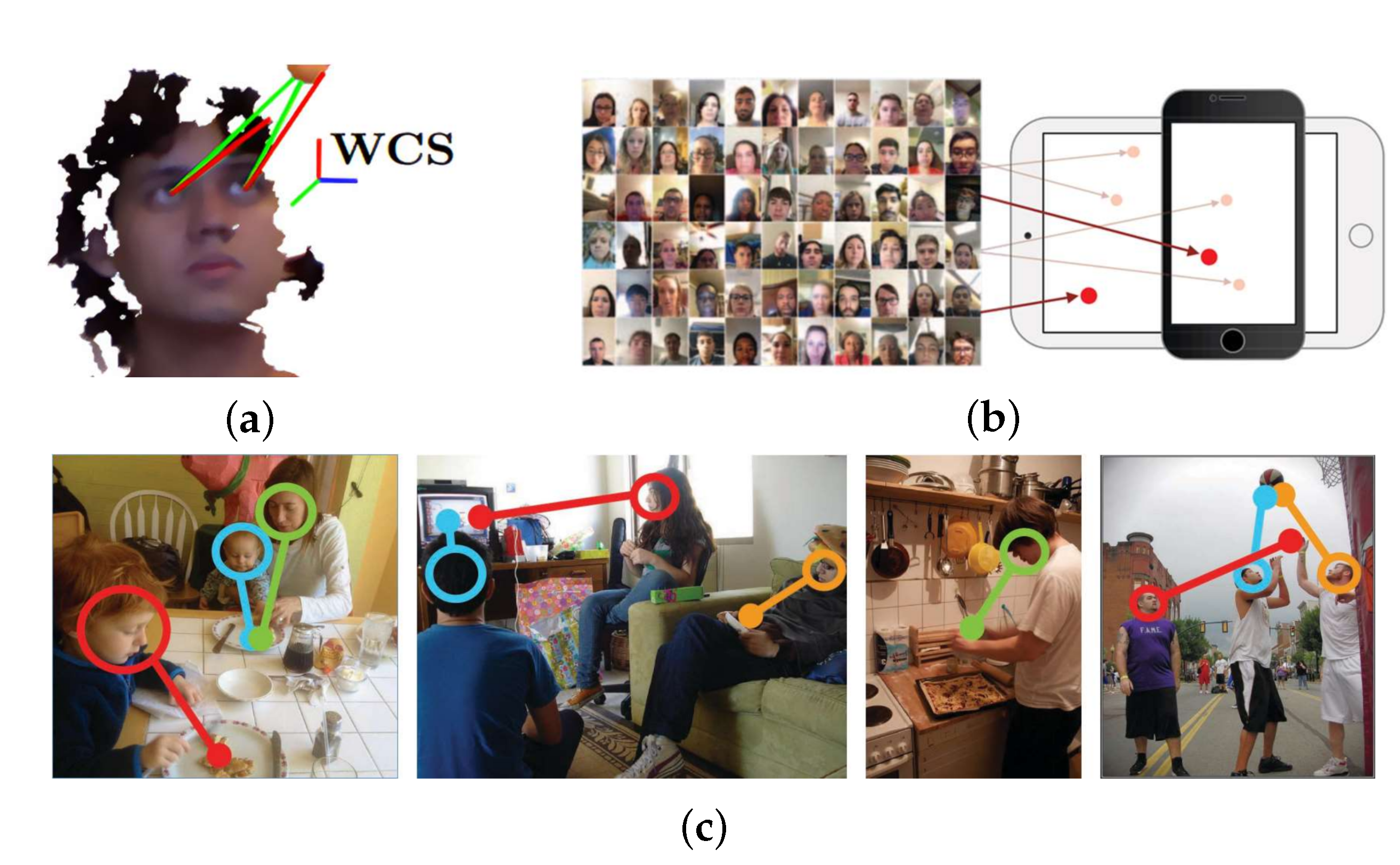

1. Introduction

- We propose the DRNet model, which uses shortcut connections to address the gaze calibration problem and thereby improve robustness to noise in the eye images. DRNet outperforms state-of-the-art gaze estimation methods that use only eye features, and it is highly competitive with methods that also use facial features.

- We propose a new loss function for gaze estimation, which also improves existing appearance-based methods when applied to them.
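The shortcut connection mentioned in the first contribution can be illustrated with the generic residual form y = x + F(x). The sketch below is not DRNet's actual architecture: the function `shortcut`, the two branch functions, and the two-element `base` vector are hypothetical names chosen for illustration. It only shows that the input bypasses the branch and is added back, so a branch that contributes nothing leaves the input unchanged.

```python
from typing import Callable, List

Vector = List[float]


def shortcut(x: Vector, branch: Callable[[Vector], Vector]) -> Vector:
    """Return x + branch(x): the input skips the branch and is added back."""
    fx = branch(x)
    if len(fx) != len(x):
        raise ValueError("branch must preserve the vector length")
    return [xi + fi for xi, fi in zip(x, fx)]


# Hypothetical branches, for illustration only (not taken from the paper).
def zero_branch(x: Vector) -> Vector:
    """A branch that contributes nothing."""
    return [0.0 for _ in x]


def small_correction(x: Vector) -> Vector:
    """A branch that adds a correction proportional to its input."""
    return [0.5 * xi for xi in x]


base = [0.10, -0.20]  # hypothetical (yaw, pitch) estimate

print(shortcut(base, zero_branch))       # identity: same values as base
print(shortcut(base, small_correction))  # base plus the branch's correction
```

With a zero branch the output equals the input, which is the property that makes the added branch a correction on top of an existing estimate rather than a replacement for it.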

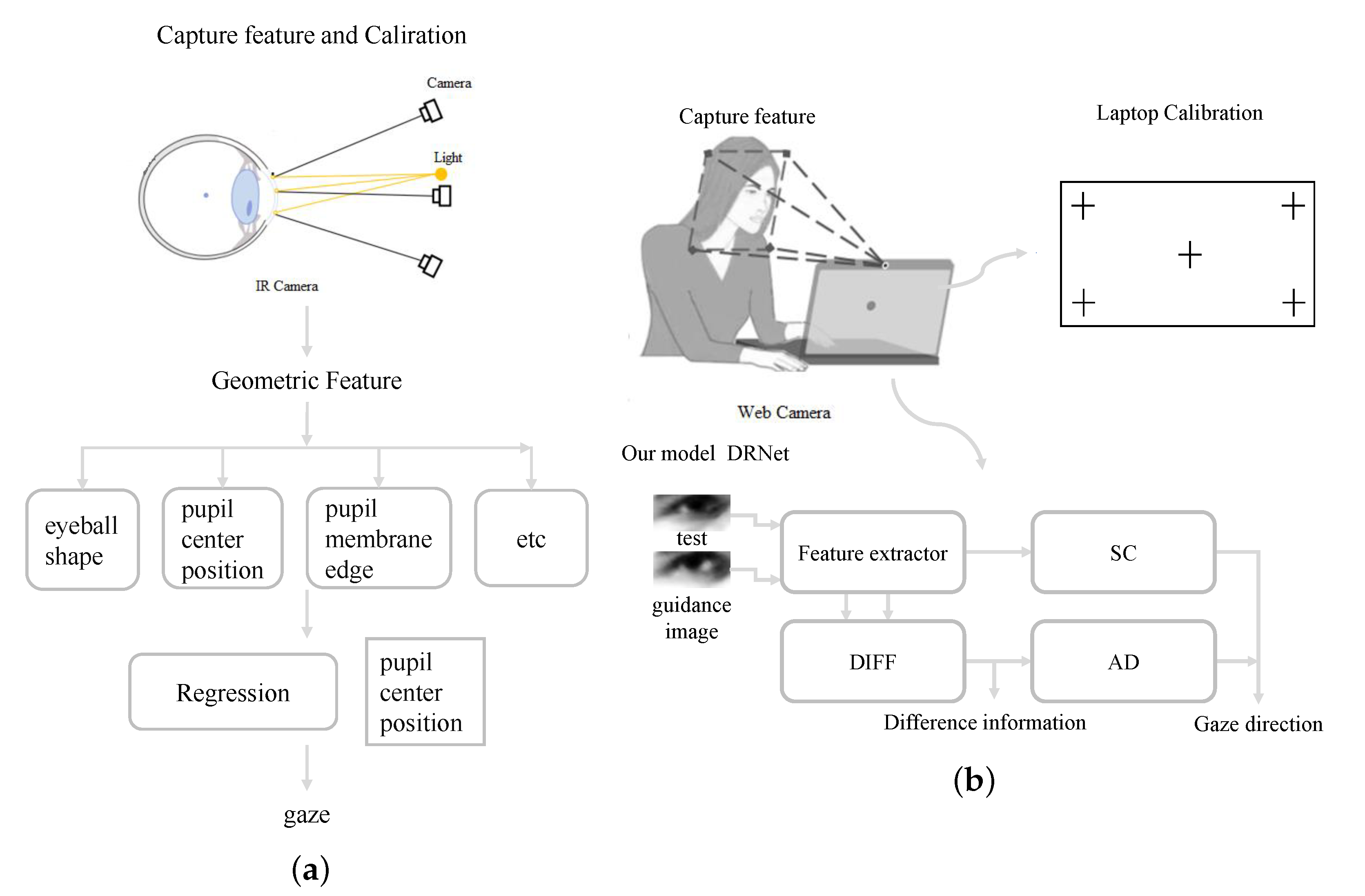

2. Related Work

3. Methodology

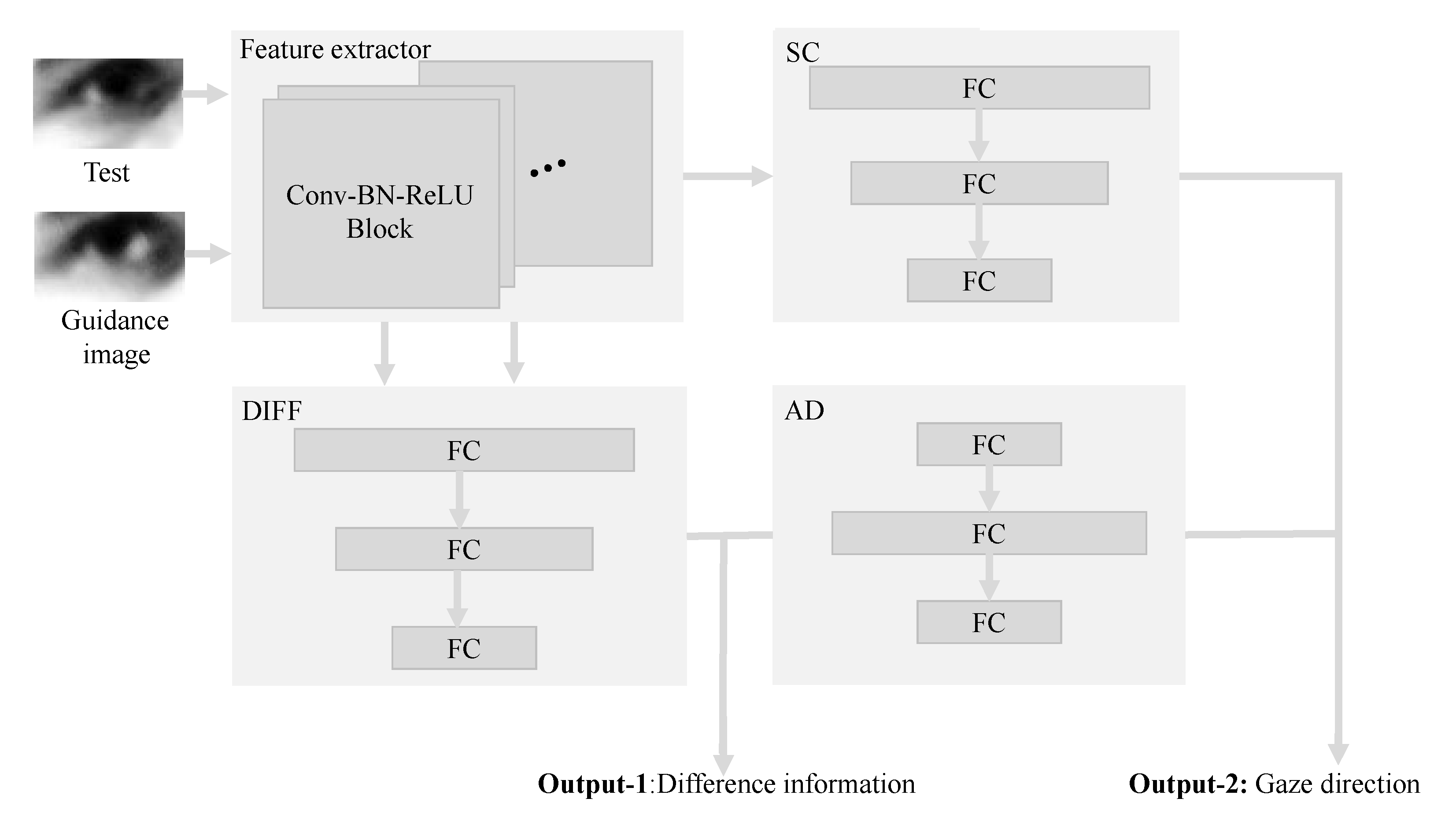

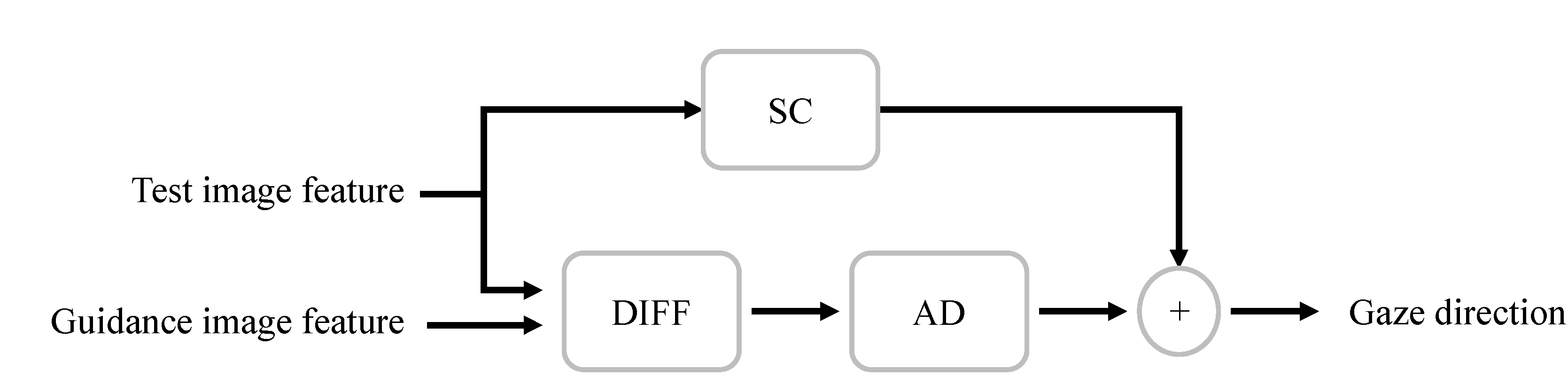

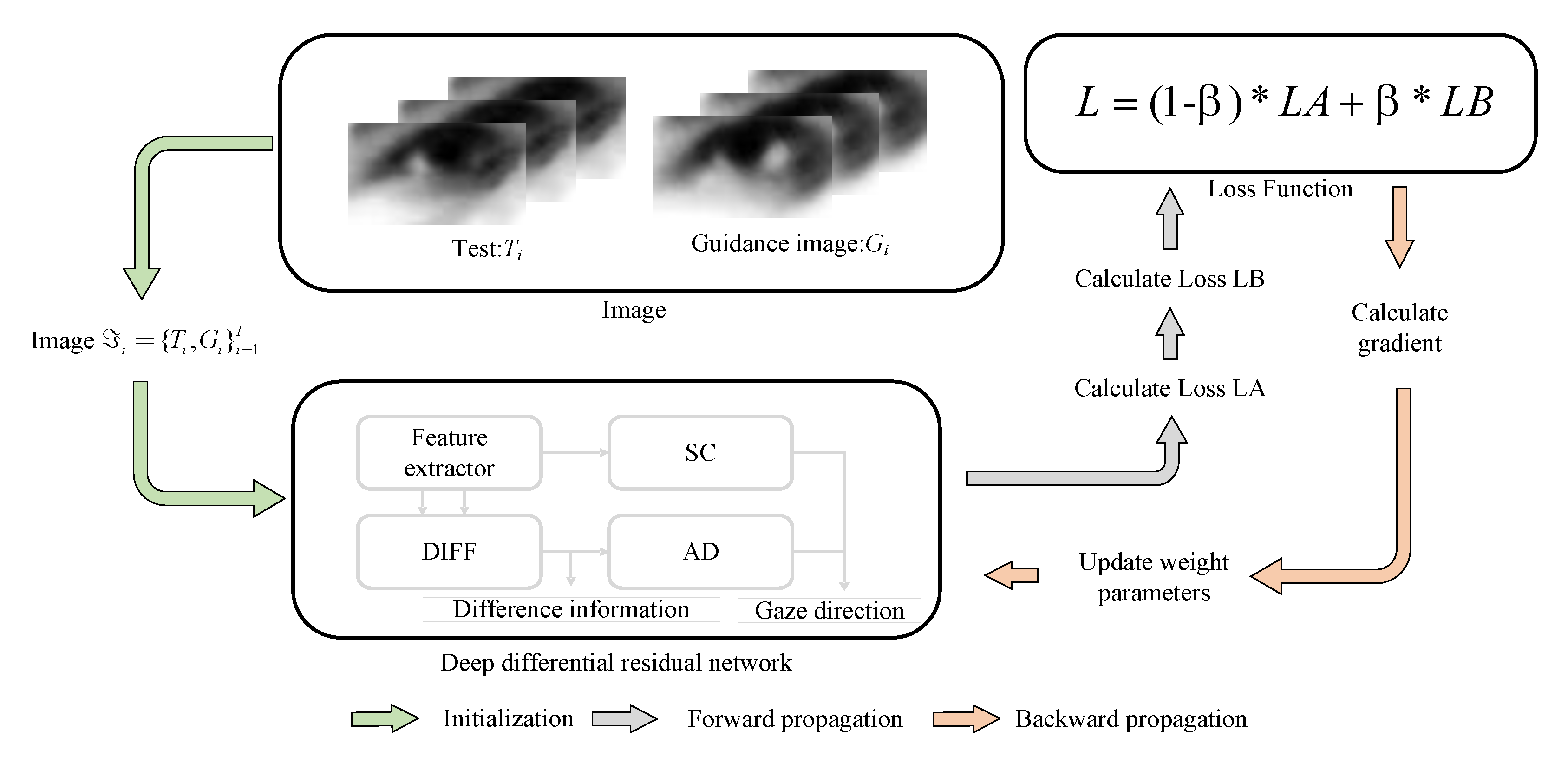

3.1. Proposed DRNet

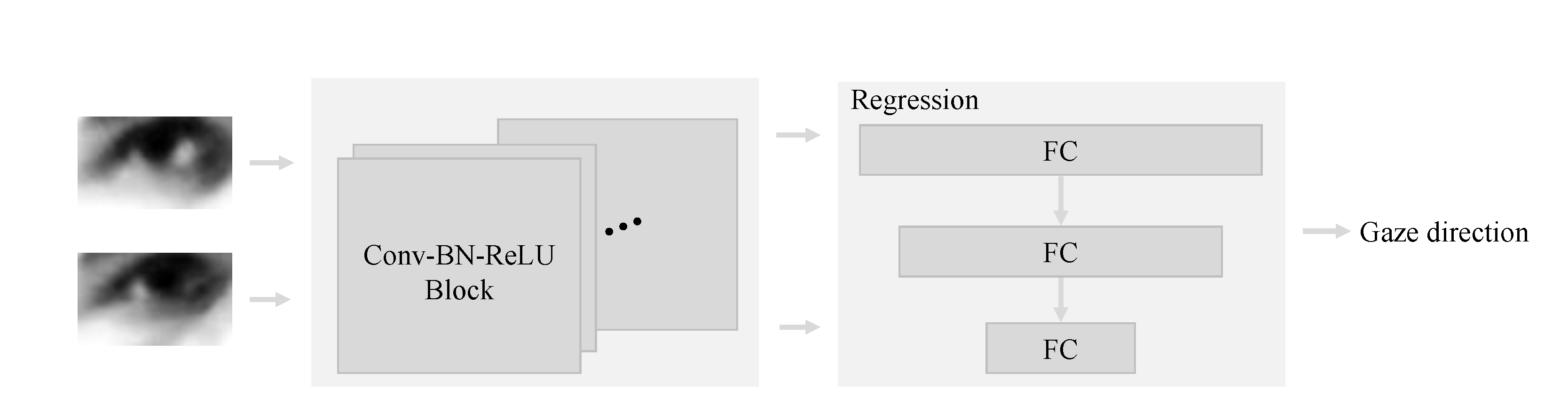

3.1.1. Feature Extractor

3.1.2. Residual Branch

3.2. The Residual Structure in DRNet

3.3. Loss Function

3.4. Training Model

4. Experiments

4.1. Appearance-Based Methods

4.2. Noise Impact on DRNet Model

4.3. Assessing the Impact of the Loss Functions

4.4. Ablation Study of DRNet

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Eckstein, M.K.; Guerra-Carrillo, B.; Singley, A.T.M.; Bunge, S.A. Beyond eye gaze: What else can eyetracking reveal about cognition and cognitive development? Dev. Cogn. Neurosci. 2017, 25, 69–91.

- Li, Y.; Kanemura, A.; Asoh, H.; Miyanishi, T.; Kawanabe, M. A sparse coding framework for gaze prediction in egocentric video. In Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Calgary, AB, Canada, 15–20 April 2018; pp. 1313–1317.

- Meißner, M.; Oll, J. The promise of eye-tracking methodology in organizational research: A taxonomy, review, and future avenues. Organ. Res. Methods 2019, 22, 590–617.

- El-Hussieny, H.; Ryu, J.-H. Inverse discounted-based LQR algorithm for learning human movement behaviors. Appl. Intell. 2019, 49, 1489–1501.

- Li, P.; Hou, X.; Duan, X.; Yip, H.; Song, G.; Liu, Y. Appearance-based gaze estimator for natural interaction control of surgical robots. IEEE Access 2019, 7, 25095–25110.

- Li, Z.; Li, Y.; Tan, B.; Ding, S.; Xie, S. Structured Sparse Coding With the Group Log-regularizer for Key Frame Extraction. IEEE/CAA J. Autom. Sin. 2022, 9, 1–13.

- Mohammad, Y.; Nishida, T. Controlling gaze with an embodied interactive control architecture. Appl. Intell. 2010, 32, 148–163.

- Shah, S.M.; Sun, Z.; Zaman, K.; Hussain, A.; Shoaib, M.; Pei, L. A Driver Gaze Estimation Method Based on Deep Learning. Sensors 2022, 22, 3959.

- Funes-Mora, K.A.; Odobez, J.M. Gaze estimation in the 3D space using RGB-D sensors. Int. J. Comput. Vis. 2016, 118, 194–216.

- Li, Y.; Tan, B.; Akaho, S.; Asoh, H.; Ding, S. Gaze prediction for first-person videos based on inverse non-negative sparse coding with determinant sparse measure. J. Vis. Commun. Image Represent. 2021, 81, 103367.

- Krafka, K.; Khosla, A.; Kellnhofer, P.; Kannan, H.; Bhandarkar, S.; Matusik, W.; Torralba, A. Eye tracking for everyone. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 2176–2184.

- Recasens, A.; Khosla, A.; Vondrick, C.; Torralba, A. Where are they looking? In Proceedings of the Advances in Neural Information Processing Systems 28 (NIPS 2015), Montreal, QC, Canada, 7–12 December 2015.

- Guestrin, E.D.; Eizenman, M. General theory of remote gaze estimation using the pupil center and corneal reflections. IEEE Trans. Biomed. Eng. 2006, 53, 1124–1133.

- Zhu, Z.; Ji, Q. Novel eye gaze tracking techniques under natural head movement. IEEE Trans. Biomed. Eng. 2007, 54, 2246–2260.

- Valenti, R.; Sebe, N.; Gevers, T. Combining head pose and eye location information for gaze estimation. IEEE Trans. Image Process. 2011, 21, 802–815.

- Alberto Funes Mora, K.; Odobez, J.M. Geometric generative gaze estimation (G3E) for remote RGB-D cameras. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 1773–1780.

- Zhang, X.; Sugano, Y.; Fritz, M.; Bulling, A. MPIIGaze: Real-world dataset and deep appearance-based gaze estimation. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 41, 162–175.

- Zhang, X.; Sugano, Y.; Fritz, M.; Bulling, A. Appearance-based gaze estimation in the wild. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 4511–4520.

- LeCun, Y.; Bottou, L.; Bengio, Y.; Haffner, P. Gradient-based learning applied to document recognition. Proc. IEEE 1998, 86, 2278–2324.

- Funes Mora, K.A.; Monay, F.; Odobez, J.M. EYEDIAP: A database for the development and evaluation of gaze estimation algorithms from RGB and RGB-D cameras. In Proceedings of the Symposium on Eye Tracking Research and Applications, Safety Harbor, FL, USA, 26–28 March 2014; pp. 255–258.

- Zhang, X.; Huang, M.X.; Sugano, Y.; Bulling, A. Training person-specific gaze estimators from user interactions with multiple devices. In Proceedings of the 2018 CHI Conference on Human Factors in Computing Systems, Montreal, QC, Canada, 21–26 April 2018; pp. 1–12.

- Li, Y.; Zhan, Y.; Yang, Z. Evaluation of appearance-based eye tracking calibration data selection. In Proceedings of the 2020 IEEE International Conference on Artificial Intelligence and Computer Applications (ICAICA), Dalian, China, 27–29 June 2020; pp. 222–224.

- Lindén, E.; Sjostrand, J.; Proutiere, A. Learning to personalize in appearance-based gaze tracking. In Proceedings of the IEEE/CVF International Conference on Computer Vision Workshops, Seoul, Korea, 27–28 October 2019.

- Wang, Q.; Wang, H.; Dang, R.C.; Zhu, G.P.; Pi, H.F.; Shic, F.; Hu, B.L. Style transformed synthetic images for real world gaze estimation by using residual neural network with embedded personal identities. Appl. Intell. 2022.

- Liu, G.; Yu, Y.; Mora, K.A.F.; Odobez, J.M. A differential approach for gaze estimation. IEEE Trans. Pattern Anal. Mach. Intell. 2019, 43, 1092–1099.

- Gu, S.; Wang, L.; He, L.; He, X.; Wang, J. Gaze Estimation via a Differential Eyes’ Appearances Network with a Reference Grid. Engineering 2021, 7, 777–786.

- Fischer, T.; Chang, H.J.; Demiris, Y. RT-GENE: Real-time eye gaze estimation in natural environments. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 334–352.

- Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.

- Zhang, X.; Sugano, Y.; Fritz, M.; Bulling, A. It’s written all over your face: Full-face appearance-based gaze estimation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Honolulu, HI, USA, 21–26 July 2017; pp. 51–60.

- Cheng, Y.; Huang, S.; Wang, F.; Qian, C.; Lu, F. A coarse-to-fine adaptive network for appearance-based gaze estimation. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; Volume 34, pp. 10623–10630.

- Chen, Z.; Shi, B.E. Appearance-based gaze estimation using dilated-convolutions. In Proceedings of the Asian Conference on Computer Vision, Perth, Australia, 2–6 December 2018; Springer: Cham, Switzerland, 2018; pp. 309–324.

- Naqvi, R.A.; Arsalan, M.; Batchuluun, G.; Yoon, H.; Park, K.R. Deep Learning-Based Gaze Detection System for Automobile Drivers Using a NIR Camera Sensor. Sensors 2018, 18, 456.

- Naqvi, R.A.; Arsalan, M.; Rehman, A.; Rehman, A.U.; Paul, A. Deep Learning-Based Drivers Emotion Classification System in Time Series Data for Remote Applications. Remote Sens. 2020, 12, 587.

- Bao, Y.; Cheng, Y.; Liu, Y.; Lu, F. Adaptive feature fusion network for gaze tracking in mobile tablets. In Proceedings of the 2020 25th International Conference on Pattern Recognition (ICPR), Milan, Italy, 10–15 January 2021; pp. 9936–9943.

- Hwang, B.J.; Chen, H.H.; Hsieh, C.H.; Huang, D.Y. Gaze Tracking Based on Concatenating Spatial-Temporal Features. Sensors 2022, 22, 545.

- Kim, J.H.; Jeong, J.W. Gaze in the Dark: Gaze Estimation in a Low-Light Environment with Generative Adversarial Networks. Sensors 2020, 20, 4935.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Identity mappings in deep residual networks. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 11–14 October 2016; Springer: Cham, Switzerland, 2016; pp. 630–645.

- Cheng, Y.; Wang, H.; Bao, Y.; Lu, F. Appearance-based gaze estimation with deep learning: A review and benchmark. arXiv 2021, arXiv:2104.12668.

- Kellnhofer, P.; Recasens, A.; Stent, S.; Matusik, W.; Torralba, A. Gaze360: Physically unconstrained gaze estimation in the wild. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Korea, 27 October–2 November 2019; pp. 6912–6921.

- Smith, B.A.; Qi, Y.; Feiner, S.K.; Nayar, S.K. Gaze locking: Passive eye contact detection for human-object interaction. In Proceedings of the 26th Annual ACM Symposium on User Interface Software and Technology, St. Andrews, UK, 8–11 October 2013.

| Method | MpiiGaze | Eyediap |
|---|---|---|
| Mnist [18] | 6.27 | 7.6 |
| GazeNet [17] | 5.7 | 7.13 |
| RT-Gene [27] | 4.61 | 6.3 |
| DenseNet101-Diff-Nn [25] | 6.33 | 7.96 |
| DenseNet101-DRNet (ours) | 4.57 | 6.14 |

| Method | MpiiGaze | Eyediap |
|---|---|---|
| Dilated-Net [31] | 4.39 | 6.57 |
| Gaze360 [39] | 4.07 | 5.58 |
| FullFace [29] | 4.96 | 6.76 |
| AFF-Net [34] | 3.69 | 6.75 |
| CA-Net [30] | 4.27 | 5.63 |
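The two comparison tables above do not state their unit. Assuming, as is customary for MpiiGaze and Eyediap benchmarks, that the values are mean angular errors in degrees between predicted and ground-truth 3D gaze directions, the per-sample metric could be computed as in the following sketch. The function name `angular_error_deg` and the example vectors are hypothetical and do not come from the paper.

```python
import math
from typing import Sequence


def angular_error_deg(pred: Sequence[float], target: Sequence[float]) -> float:
    """Angle in degrees between two 3D gaze direction vectors."""
    dot = sum(p * t for p, t in zip(pred, target))
    norm = math.sqrt(sum(p * p for p in pred)) * math.sqrt(sum(t * t for t in target))
    if norm == 0.0:
        raise ValueError("gaze vectors must be non-zero")
    # Clamp to [-1, 1] so rounding error cannot push acos out of its domain.
    cos_angle = max(-1.0, min(1.0, dot / norm))
    return math.degrees(math.acos(cos_angle))


# Hypothetical gaze directions, for illustration only.
looking_forward = (0.0, 0.0, -1.0)
looking_sideways = (1.0, 0.0, 0.0)

print(angular_error_deg(looking_forward, looking_forward))   # identical directions
print(angular_error_deg(looking_forward, looking_sideways))  # orthogonal directions
```

Averaging this quantity over all test samples would give one number per method and dataset, which is the form the tables take under the stated assumption.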

| Two Stream Model versus DRNet | | | |
|---|---|---|---|
| MpiiGaze | No_Invalid_Image | Fixed_Invalid_Image | Distance |
| | 4.72-4.46 | 5.09-4.48 | 0.37-0.02 |
| | 5.98-5.99 | 6.11-6.02 | 0.13-0.03 |
| | 5.29-5.02 | 5.41-4.85 | 0.12-0.17 |
| | 6.65-6.61 | 6.43-6.41 | 0.22-0.20 |
| | 6.78-6.70 | 6.79-6.18 | 0.01-0.52 |
| | 6.20-6.27 | 7.32-6.39 | 1.12-0.12 |
| | 6.15-5.94 | 6.61-5.94 | 0.46-0.00 |
| | 7.44-7.19 | 7.11-7.07 | 0.33-0.12 |
| | 6.51-6.46 | 6.84-6.41 | 0.33-0.05 |
| | 7.98-7.07 | 7.91-7.09 | 0.07-0.02 |
| | 5.41-5.38 | 5.88-5.33 | 0.47-0.05 |
| | 5.26-4.88 | 5.26-5.36 | 0.00-0.48 |
| | 5.87-5.33 | 6.70-5.78 | 0.83-0.45 |
| | 6.39-6.11 | 6.68-6.20 | 0.29-0.09 |
| | 6.01-6.22 | 5.65-6.34 | 0.36-0.12 |
| Average | 6.18-5.98 | 6.39-5.99 | 0.34-0.16 |
| Eyediap | No_Invalid_Image | Fixed_Invalid_Image | Distance |
| | 7.35-6.86 | 7.27-7.14 | 0.08-0.28 |
| | 7.43-7.33 | 6.87-7.66 | 0.56-0.33 |
| | 5.78-6.01 | 6.41-6.05 | 0.63-0.04 |
| | 7.66-5.29 | 7.92-5.58 | 0.26-0.29 |
| | 8.08-6.06 | 8.67-7.07 | 0.59-1.01 |
| | 7.14-5.84 | 7.40-6.21 | 0.26-0.37 |
| | 7.64-6.96 | 9.63-7.58 | 1.99-0.62 |
| | 8.23-5.44 | 9.17-5.61 | 0.94-0.17 |
| | 8.10-7.37 | 7.56-7.77 | 0.54-0.40 |
| | 7.24-7.87 | 8.86-8.32 | 1.62-0.45 |
| | 6.46-6.93 | 6.12-7.54 | 0.34-0.61 |
| | 5.35-7.78 | 5.30-7.56 | 0.05-0.22 |
| | 6.11-7.26 | 7.84-6.78 | 1.73-0.48 |
| | 6.46-7.00 | 7.06-6.58 | 0.60-0.42 |
| Average | 7.07-6.71 | 7.58-6.96 | 0.73-0.41 |
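The Distance column in the table above appears to be the absolute difference between the Fixed_Invalid_Image and No_Invalid_Image values, and its Average row the mean of those per-row distances. The sketch below checks this reading against the MpiiGaze rows. It assumes that each cell of the form `a-b` lists the Two Stream Model value first and the DRNet value second, which is inferred from the table title rather than stated; the names `rows` and `distance` are hypothetical.

```python
# MpiiGaze rows copied from the table above.
# Assumed cell layout "a-b": a = Two Stream Model, b = DRNet.
# Each entry: ((no_invalid_two_stream, no_invalid_drnet),
#              (fixed_invalid_two_stream, fixed_invalid_drnet))
rows = [
    ((4.72, 4.46), (5.09, 4.48)),
    ((5.98, 5.99), (6.11, 6.02)),
    ((5.29, 5.02), (5.41, 4.85)),
    ((6.65, 6.61), (6.43, 6.41)),
    ((6.78, 6.70), (6.79, 6.18)),
    ((6.20, 6.27), (7.32, 6.39)),
    ((6.15, 5.94), (6.61, 5.94)),
    ((7.44, 7.19), (7.11, 7.07)),
    ((6.51, 6.46), (6.84, 6.41)),
    ((7.98, 7.07), (7.91, 7.09)),
    ((5.41, 5.38), (5.88, 5.33)),
    ((5.26, 4.88), (5.26, 5.36)),
    ((5.87, 5.33), (6.70, 5.78)),
    ((6.39, 6.11), (6.68, 6.20)),
    ((6.01, 6.22), (5.65, 6.34)),
]


def distance(no_invalid, fixed_invalid):
    """Per-model absolute change between the two conditions, to two decimals."""
    return tuple(round(abs(f - n), 2) for n, f in zip(no_invalid, fixed_invalid))


distances = [distance(n, f) for n, f in rows]
mean_two_stream = round(sum(d[0] for d in distances) / len(distances), 2)
mean_drnet = round(sum(d[1] for d in distances) / len(distances), 2)

print(distances[0])                 # (0.37, 0.02), matching the first row
print(mean_two_stream, mean_drnet)  # 0.34 0.16, matching the Average row
```

Under this reading, a smaller Distance means a model's error changes less between the two conditions.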

| | Eyediap | MpiiGaze |
|---|---|---|
| 1 | 7.31 | 6.07 |
| 0.75 | 7.27 | 6.12 |
| 0.5 | 7.38 | 6.25 |
| 0.25 | 7.59 | 6.53 |
| 0 | 7.6 | 6.3 |

| | 0.25 | 0.5 | 0.75 | 1 |
|---|---|---|---|---|
| 0 | 7.17/8.4 | 7.09/8.5 | 7.01/8.14 | 6.81/8.07 |
| 0.25 | 6.35/7.13 | 6.43/7.13 | 6.36/6.93 | 6.28/6.94 |
| 0.5 | 6.28/6.87 | 6.17/6.87 | 6.15/7.01 | 6.17/6.88 |
| 0.75 | 6.08/7.13 | 5.96/7.06 | 5.97/6.71 | 5.88/6.77 |
| 1 | 6.05/7.33 | 6.07/7.26 | 6.02/7.18 | 6.06/7.04 |

| Dataset | | |
|---|---|---|
| MpiiGaze | 6.05 | 0.89 |
| Eyediap | 7.16 | 0.88 |

| Method | MpiiGaze (L/R/All) | Eyediap (L/R/All) |
|---|---|---|
| DRNet | 6.15/6.29/5.98 | 6.71/-/- |
| Diff-Nn [25] | 10.73/10.92/10.83 | 11.82/-/- |
| DRNet_NoSC | 6.59/6.32/6.97 | 7.72/-/- |
| DRNet_NoAD | 6.11/6.25/6.05 | 7.16/-/- |
| DRNet_NoDIFF | 6.22/6.32/6.07 | 6.97/-/- |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Huang, L.; Li, Y.; Wang, X.; Wang, H.; Bouridane, A.; Chaddad, A. Gaze Estimation Approach Using Deep Differential Residual Network. Sensors 2022, 22, 5462. https://doi.org/10.3390/s22145462
