Retina-like Computational Ghost Imaging for an Axially Moving Target

Abstract

1. Introduction

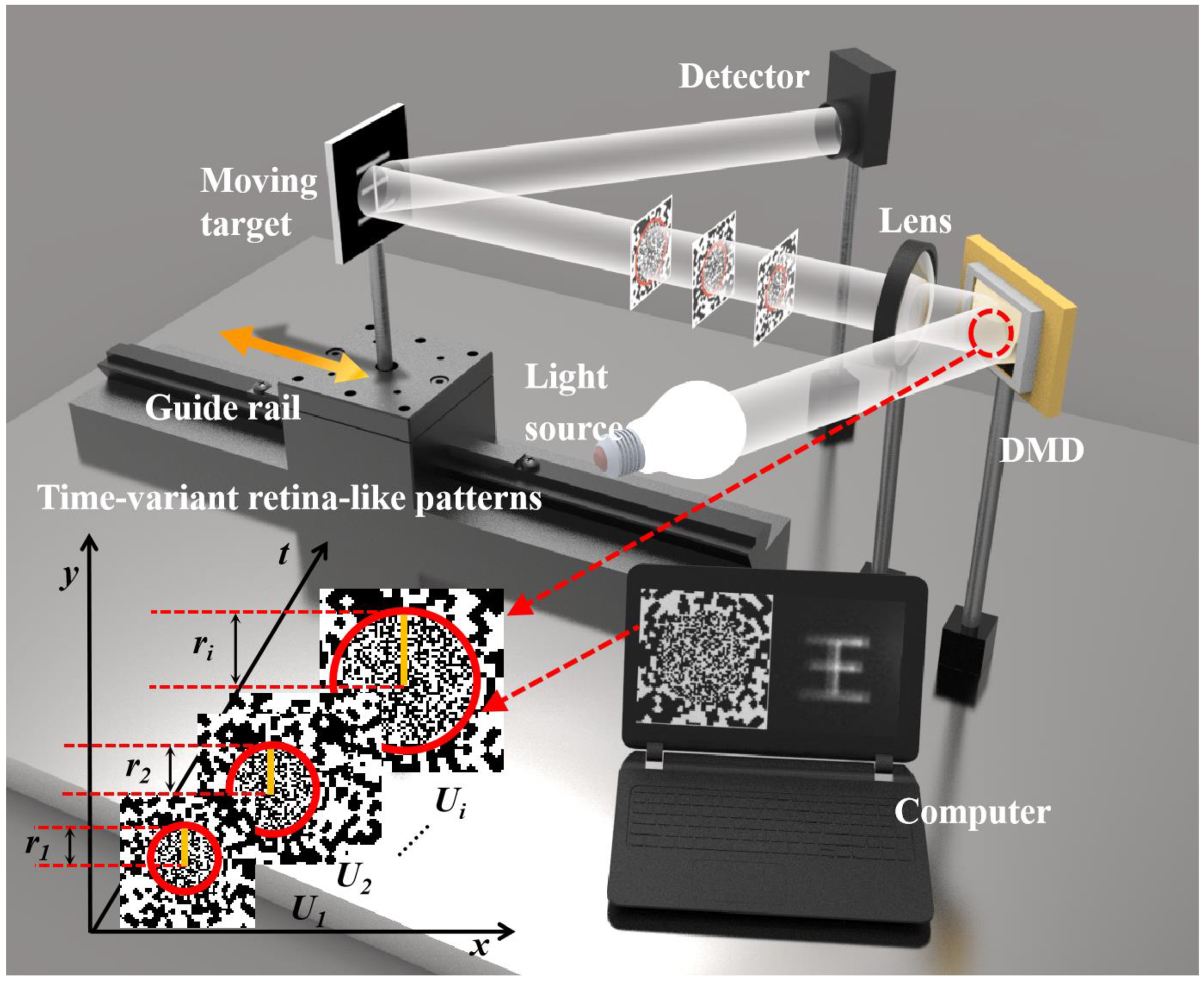

2. Methods

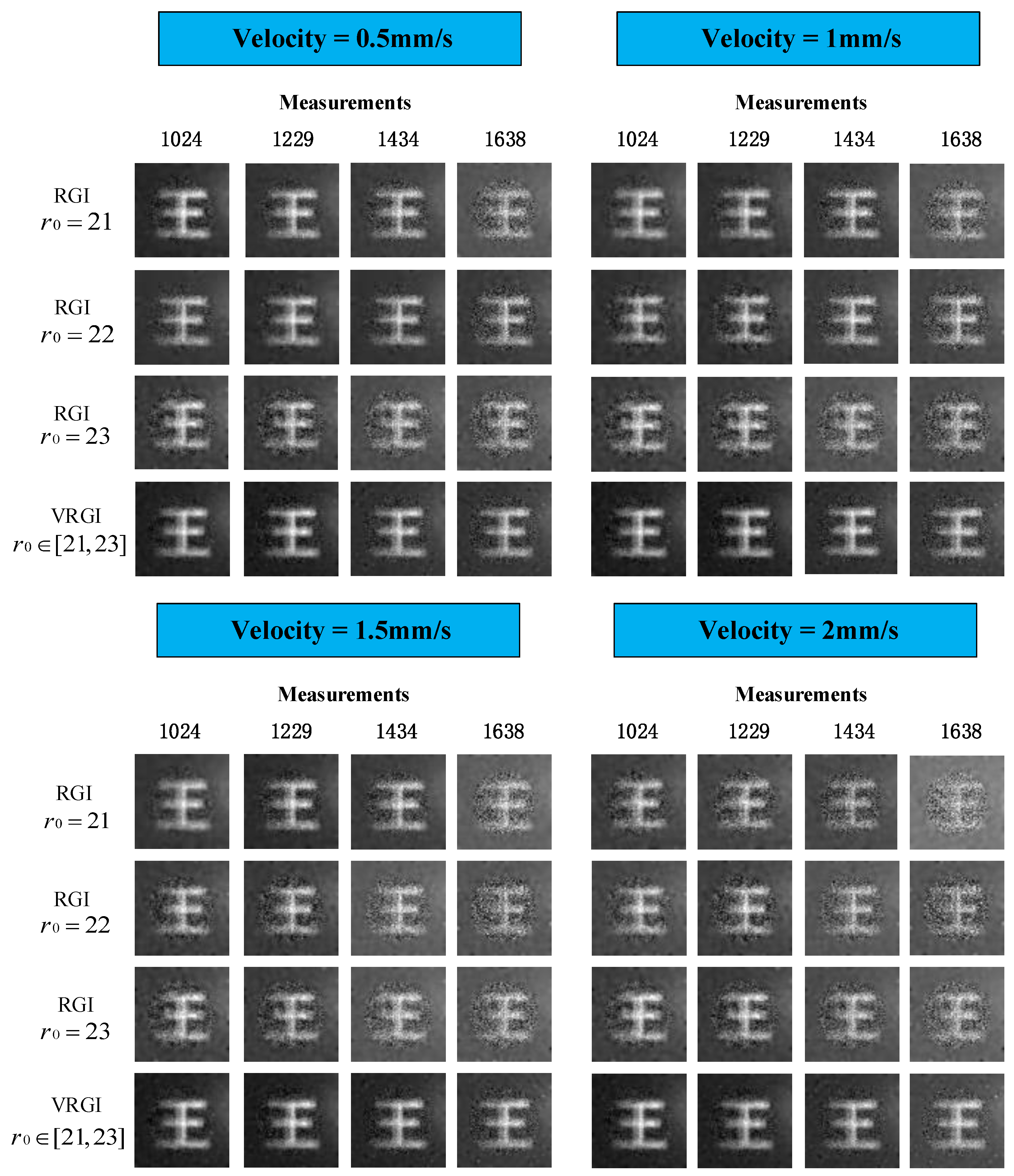

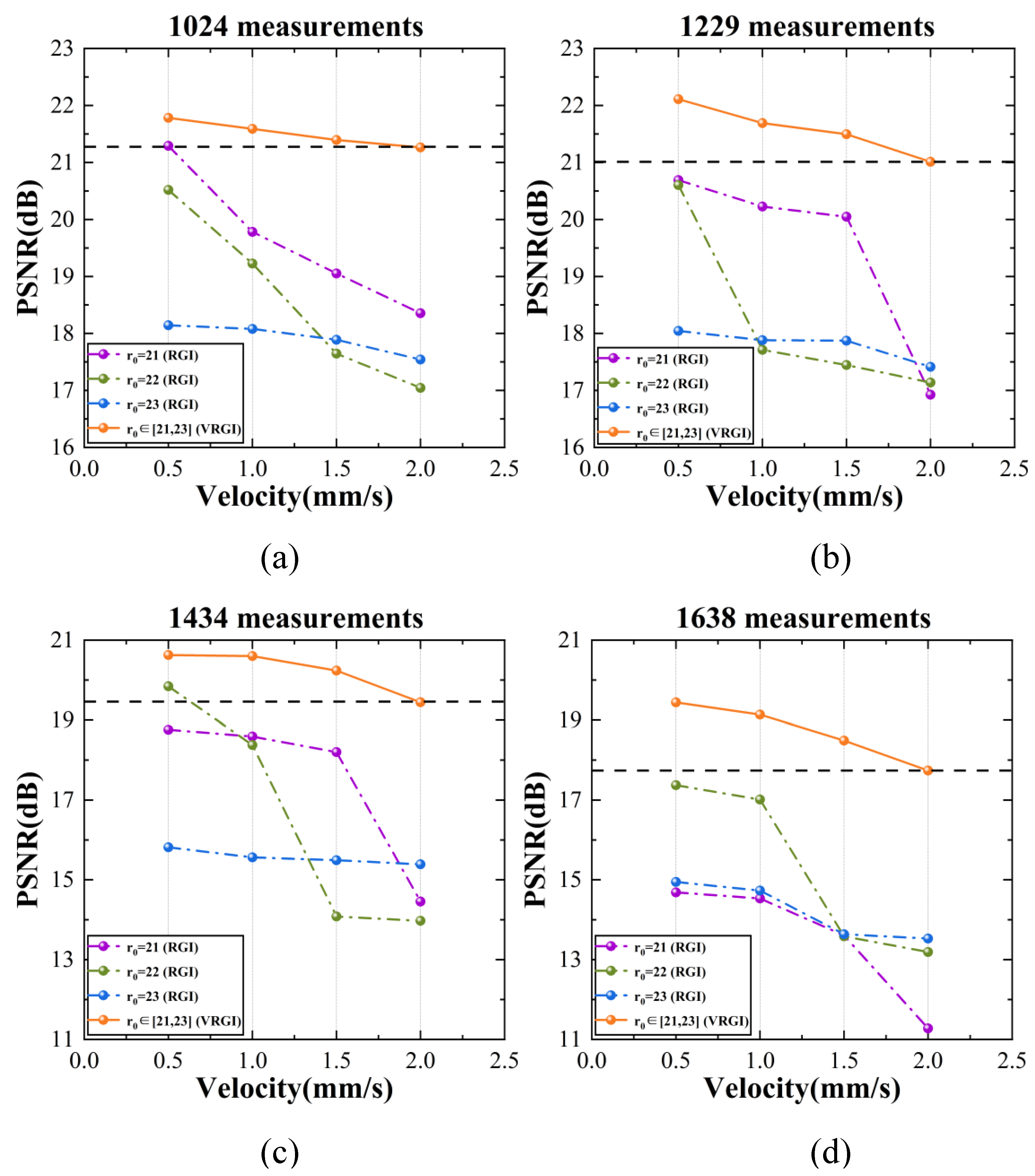

3. Experimental Results

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest



© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhang, Y.; Cao, J.; Cui, H.; Zhou, D.; Han, B.; Hao, Q. Retina-like Computational Ghost Imaging for an Axially Moving Target. Sensors 2022, 22, 4290. https://doi.org/10.3390/s22114290
