A Review of Mobile Mapping Systems: From Sensors to Applications

Abstract

1. Introduction

2. Paper Scope and Organization

3. An Overview of Sensors in Mobile Mapping Systems

3.1. Positioning Sensors

3.1.1. Global Navigation Satellite System Receiver

3.1.2. Inertial Measurement Unit

3.1.3. Distance Measuring Instrument

3.2. Sensors for Data Collection

3.2.1. Light Detection and Ranging (LiDAR)

3.2.2. Imaging Systems and Cameras

4. Mobile Mapping Systems and Platforms

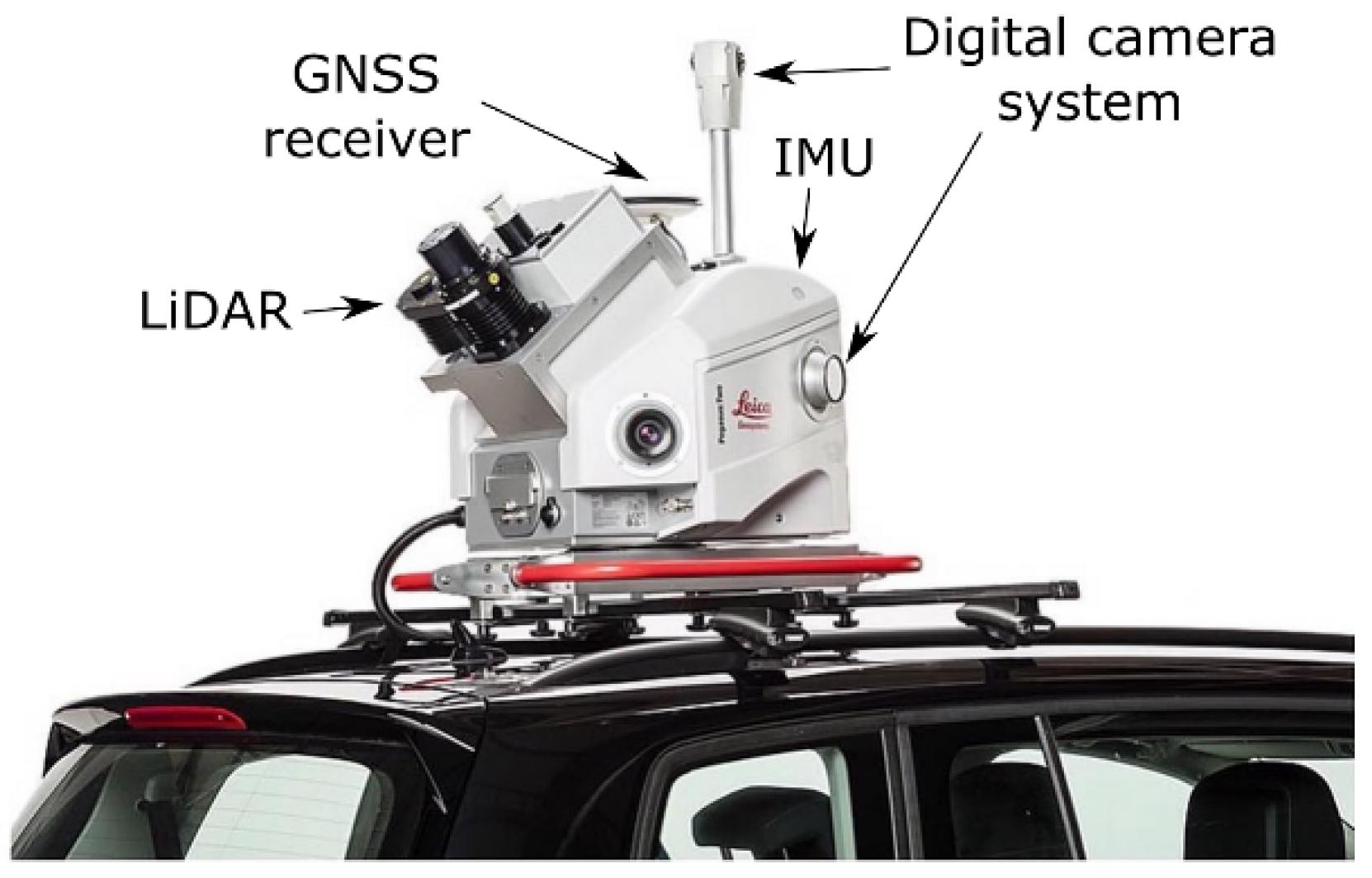

4.1. Vehicle-Mounted Systems

4.2. Handheld and Wearable Systems

4.3. Trolley-Based Systems

5. MMS Workflow and Processing Pipeline

5.1. Data Acquisition

5.2. Sensor Calibration and Fusion

5.2.1. Positioning Sensor Calibration and Fusion

5.2.2. Camera Calibration

5.2.3. LiDAR and Camera Calibration

5.3. Georeferencing LiDAR Scans and Camera Images Using Navigation Data

5.4. Data Processing in Preparation for Scene Understanding

6. Applications

Summary

7. Conclusions

7.1. Summary

7.2. Future Trends

- (1) Reduced cost for high-resolution sensors, primarily LiDAR systems that match the accuracy and resolution of those currently in use at a much lower cost.

- (2) Crowdsourced and collaborative MMS using smartphone data; for example, some recent iPhone models are equipped with a low-cost LiDAR sensor.

- (3) Incorporation of new sensors and techniques, such as ultra-wide-band tracking systems and WiFi-based localization, for use in MMS.

- (4) More robust use of cameras as visual sensors for navigation.

- (5) Higher flexibility in sensor integration and customization, together with more mature software ecosystems (e.g., self-calibration algorithms among multiple sensors), so that users can easily plug and play different sensors to meet the demands of mapping different environments.

- (6) Advanced post-processing algorithms for pose estimation, data registration in close-range scenarios, dynamic object removal for data cleaning, and refinement of collections in cluttered environments.

- (7) Integration of novel deep learning solutions at all levels of processing, from navigation and device calibration to 3D scene reconstruction and interpretation.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Al-Bayari, O. Mobile mapping systems in civil engineering projects (case studies). Appl. Geomat. 2019, 11, 1–13. [Google Scholar] [CrossRef]

- Schwarz, K.P.; El-Sheimy, N. Mobile mapping systems—State of the art and future trends. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2004, 35, 10. [Google Scholar]

- Chiang, K.W.; Tsai, G.-J.; Zeng, J.C. Mobile Mapping Technologies. In Urban Informatics; Shi, W., Goodchild, M.F., Batty, M., Kwan, M.-P., Zhang, A., Eds.; The Urban Book Series; Springer: Singapore, 2021; pp. 439–465. [Google Scholar]

- Balado, J.; González, E.; Arias, P.; Castro, D. Novel Approach to Automatic Traffic Sign Inventory Based on Mobile Mapping System Data and Deep Learning. Remote Sens. 2020, 12, 442. [Google Scholar] [CrossRef] [Green Version]

- Soilán, M.; Riveiro, B.; Martínez-Sánchez, J.; Arias, P. Automatic road sign inventory using mobile mapping systems. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2016, 41, 717–723. [Google Scholar] [CrossRef] [Green Version]

- Hirabayashi, M.; Sujiwo, A.; Monrroy, A.; Kato, S.; Edahiro, M. Traffic light recognition using high-definition map features. Robot. Auton. Syst. 2019, 111, 62–72. [Google Scholar] [CrossRef]

- Wang, Y.; Chen, Q.; Zhu, Q.; Liu, L.; Li, C.; Zheng, D. A survey of mobile laser scanning applications and key techniques over urban areas. Remote Sens. 2019, 11, 1540. [Google Scholar] [CrossRef] [Green Version]

- Vallet, B.; Mallet, C. Urban Scene Analysis with Mobile Mapping Technology. In Land Surface Remote Sensing in Urban and Coastal Areas; Baghdadi, N., Zribi, M., Eds.; Elsevier: Amsterdam, The Netherlands, 2016; pp. 63–100. [Google Scholar]

- Raper, J. GIS, Mobile and Locational Based Services. In International Encyclopedia of Human Geography; Kitchin, R., Thrift, N., Eds.; Elsevier: Oxford, UK, 2009; pp. 513–519. [Google Scholar]

- Mahabir, R.; Schuchard, R.; Crooks, A.; Croitoru, A.; Stefanidis, A. Crowdsourcing Street View Imagery: A Comparison of Mapillary and OpenStreetCam. ISPRS Int. J. Geo-Inf. 2020, 9, 341. [Google Scholar] [CrossRef]

- Anguelov, D.; Dulong, C.; Filip, D.; Frueh, C.; Lafon, S.; Lyon, R.; Ogale, A.; Vincent, L.; Weaver, J. Google street view: Capturing the world at street level. Computer 2010, 43, 32–38. [Google Scholar] [CrossRef]

- Werner, P.A. Review of Implementation of Augmented Reality into the Georeferenced Analogue and Digital Maps and Images. Information 2019, 10, 12. [Google Scholar] [CrossRef] [Green Version]

- Leica Geosystems. Available online: http://www.leica-geosystems.com/ (accessed on 15 February 2022).

- Laguela, S.; Dorado, I.; Gesto, M.; Arias, P.; Gonzalez-Aguilera, D.; Lorenzo, H. Behavior Analysis of Novel Wearable Indoor Mapping System Based on 3D-SLAM. Sensors 2018, 18, 766. [Google Scholar] [CrossRef] [Green Version]

- Nocerino, E.; Menna, F.; Remondino, F.; Toschi, I.; Rodríguez-Gonzálvez, P. Investigation of indoor and outdoor performance of two portable mobile mapping systems. In Proceedings of the Videometrics, Range Imaging, and Applications, Munich, Germany, 26 June 2017. [Google Scholar]

- Tucci, G.; Visintini, D.; Bonora, V.; Parisi, E.I. Examination of Indoor Mobile Mapping Systems in a Diversified Internal/External Test Field. Appl. Sci. 2018, 8, 401. [Google Scholar] [CrossRef] [Green Version]

- Lague, D.; Brodu, N.; Leroux, J. Accurate 3D comparison of complex topography with terrestrial laser scanner: Application to the Rangitikei canyon (N-Z). ISPRS J. Photogramm. Remote Sens. 2013, 82, 10–26. [Google Scholar] [CrossRef] [Green Version]

- Maboudi, M.; Bánhidi, D.; Gerke, M. Evaluation of indoor mobile mapping systems. In Proceedings of the GFaI Workshop 3D North East, Berlin, Germany, 1–2 December 2017; pp. 125–134. [Google Scholar]

- Puente, I.; Gonzalez-Jorge, H.; Martinez-Sanchez, J.; Arias, P. Review of mobile mapping and surveying technologies. Measurement 2013, 46, 2127–2145. [Google Scholar] [CrossRef]

- Otero, R.; Laguela, S.; Garrido, I.; Arias, P. Mobile indoor mapping technologies: A review. Autom. Constr. 2020, 120, 103399. [Google Scholar] [CrossRef]

- Karimi, H.A.; Khattak, A.J.; Hummer, J.E. Evaluation of mobile mapping systems for roadway data collection. J. Comput. Civ. Eng. 2000, 14, 168–173. [Google Scholar] [CrossRef]

- Lovas, T.; Hadzijanisz, K.; Papp, V.; Somogyi, A. Indoor Building Survey Assessment. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2020, 43, 251–257. [Google Scholar] [CrossRef]

- Han, Y.; Liu, W.; Huang, X.; Wang, S.; Qin, R. Stereo Dense Image Matching by Adaptive Fusion of Multiple-Window Matching Results. Remote Sens. 2020, 12, 3138. [Google Scholar] [CrossRef]

- Hirschmüller, H. Stereo processing by semiglobal matching and mutual information. IEEE Trans. Pattern Anal. Mach. Intell. 2008, 30, 328–341. [Google Scholar] [CrossRef]

- Li, Y.; Hu, Y.; Song, R.; Rao, P.; Wang, Y. Coarse-to-Fine PatchMatch for Dense Correspondence. IEEE Trans. Circuits Syst. Video Technol. 2018, 28, 2233–2245. [Google Scholar] [CrossRef]

- Shen, S. Accurate multiple view 3D reconstruction using patch-based stereo for large-scale scenes. IEEE Trans. Image Process. 2013, 22, 1901–1914. [Google Scholar] [CrossRef]

- Barnes, C.; Shechtman, E.; Goldman, D.; Finkelstein, A. The Generalized PatchMatch Correspondence Algorithm. In Proceedings of the European Conference on Computer Vision, Crete, Greece, 5–11 September 2010; pp. 29–43. [Google Scholar]

- Li, T.; Zhang, H.; Gao, Z.; Niu, X.; El-sheimy, N. Tight Fusion of a Monocular Camera, MEMS-IMU, and Single-Frequency Multi-GNSS RTK for Precise Navigation in GNSS-Challenged Environments. Remote Sens. 2019, 11, 610. [Google Scholar] [CrossRef] [Green Version]

- Grewal, M.S.; Andrews, A.P.; Bartone, C.G. Global Navigation Satellite Systems, Inertial Navigation, and Integration; John Wiley & Sons: Hoboken, NJ, USA, 2020. [Google Scholar]

- Hofmann-Wellenhof, B.; Lichtenegger, H.; Wasle, E. GNSS—Global Navigation Satellite Systems: GPS, GLONASS, Galileo, and More; Springer Science & Business Media: Berlin/Heidelberg, Germany, 2007. [Google Scholar]

- Gan-Mor, S.; Clark, R.L.; Upchurch, B.L. Implement lateral position accuracy under RTK-GPS tractor guidance. Comput. Electron. Agric. 2007, 59, 31–38. [Google Scholar] [CrossRef]

- Shi, B.; Wang, M.; Wang, Y.; Bai, Y.; Lin, K.; Yang, F. Effect Analysis of GNSS/INS Processing Strategy for Sufficient Utilization of Urban Environment Observations. Sensors 2021, 21, 620. [Google Scholar] [CrossRef] [PubMed]

- Wei-Wen, K. Integration of GPS and dead-reckoning navigation systems. In Proceedings of the Vehicle Navigation and Information Systems Conference, Troy, MI, USA, 20–23 October 1991; pp. 635–643. [Google Scholar]

- Noureldin, A.; Karamat, T.B.; Georgy, J. Fundamentals of Inertial Navigation, Satellite-Based Positioning and Their Integration; Springer: Berlin/Heidelberg, Germany, 2013. [Google Scholar]

- Ahmed, H.; Tahir, M. Accurate attitude estimation of a moving land vehicle using low-cost MEMS IMU sensors. IEEE Trans. Intell. Transp. Syst. 2016, 18, 1723–1739. [Google Scholar] [CrossRef]

- Petrie, G. An introduction to the technology: Mobile mapping systems. Geoinformatics 2010, 13, 32. [Google Scholar]

- Falco, G.; Pini, M.; Marucco, G. Loose and Tight GNSS/INS Integrations: Comparison of Performance Assessed in Real Urban Scenarios. Sensors 2017, 17, 255. [Google Scholar] [CrossRef]

- Tao, V.; Li, J. Advances in Mobile Mapping Technology; ISPRS Series; Taylor & Francis, Inc.: London, UK, 2007; Volume 4. [Google Scholar]

- Mehendale, N.; Neoge, S. Review on Lidar Technology. SSRN Electron. J. 2020. [Google Scholar] [CrossRef]

- Wandinger, U. Introduction to lidar. In Lidar; Springer: Berlin/Heidelberg, Germany, 2005; pp. 1–18. [Google Scholar]

- Royo, S.; Ballesta-Garcia, M. An Overview of Lidar Imaging Systems for Autonomous Vehicles. Appl. Sci. 2019, 9, 4093. [Google Scholar] [CrossRef] [Green Version]

- RIEGL. Available online: http://www.riegl.com/ (accessed on 11 February 2022).

- Trimble. Available online: https://www.trimble.com/ (accessed on 11 February 2022).

- Velodyne. Available online: https://velodynelidar.com/ (accessed on 8 February 2022).

- Ouster. Available online: http://ouster.com/ (accessed on 8 February 2022).

- Luminar Technologies. Available online: https://www.luminartech.com/ (accessed on 8 February 2022).

- Innoviz Technologies. Available online: http://www.innoviz.tech/ (accessed on 8 February 2022).

- Li, Y.; Ibanez-Guzman, J. Lidar for autonomous driving: The principles, challenges, and trends for automotive lidar and perception systems. IEEE Signal Process. Mag. 2020, 37, 50–61. [Google Scholar] [CrossRef]

- Yoo, H.W.; Druml, N.; Brunner, D.; Schwarzl, C.; Thurner, T.; Hennecke, M.; Schitter, G. MEMS-based lidar for autonomous driving. Elektrotechnik Und Inf. 2018, 135, 408–415. [Google Scholar] [CrossRef] [Green Version]

- Poulton, C.V.; Byrd, M.J.; Timurdogan, E.; Russo, P.; Vermeulen, D.; Watts, M.R. Optical Phased Arrays for Integrated Beam Steering. In Proceedings of the IEEE International Conference on Group IV Photonics, Cancun, Mexico, 29–31 August 2018; pp. 1–2. [Google Scholar]

- Amzajerdian, F.; Roback, V.E.; Bulyshev, A.; Brewster, P.F.; Hines, G.D. Imaging flash lidar for autonomous safe landing and spacecraft proximity operation. In Proceedings of the AIAA SPACE, Long Beach, CA, USA, 13–16 September 2016; p. 5591. [Google Scholar]

- Zhou, G.; Zhou, X.; Yang, J.; Tao, Y.; Nong, X.; Baysal, O. Flash Lidar Sensor Using Fiber-Coupled APDs. IEEE Sens. J. 2015, 15, 4758–4768. [Google Scholar] [CrossRef]

- Yokozuka, M.; Koide, K.; Oishi, S.; Banno, A. LiTAMIN: LiDAR-based Tracking and MappINg by Stabilized ICP for Geometry Approximation with Normal Distributions. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems, Las Vegas, NV, USA, 24 October 2020–24 January 2021; pp. 5143–5150. [Google Scholar]

- Yokozuka, M.; Koide, K.; Oishi, S.; Banno, A. LiTAMIN2: Ultra Light LiDAR-based SLAM using Geometric Approximation applied with KL-Divergence. In Proceedings of the IEEE International Conference on Robotics and Automation, Philadelphia, PA, USA, 30 May–5 June 2021; pp. 11619–11625. [Google Scholar]

- Droeschel, D.; Behnke, S. Efficient continuous-time SLAM for 3D lidar-based online mapping. In Proceedings of the IEEE International Conference on Robotics and Automation, Brisbane, Australia, 21–26 May 2018; pp. 5000–5007. [Google Scholar]

- Milioto, A.; Vizzo, I.; Behley, J.; Stachniss, C. RangeNet++: Fast and Accurate LiDAR Semantic Segmentation. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems, The Venetian Macao, Macau, 3–8 November 2019; pp. 4213–4220. [Google Scholar]

- Chen, X.; Milioto, A.; Palazzolo, E.; Giguère, P.; Behley, J.; Stachniss, C. SuMa++: Efficient LiDAR-based Semantic SLAM. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems, The Venetian Macao, Macau, 3–8 November 2019; pp. 4530–4537. [Google Scholar]

- Zhang, J.; Xiao, W.; Coifman, B.; Mills, J.P. Vehicle Tracking and Speed Estimation from Roadside Lidar. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2020, 13, 5597–5608. [Google Scholar] [CrossRef]

- Arcos-García, Á.; Soilán, M.; Álvarez-García, J.A.; Riveiro, B. Exploiting synergies of mobile mapping sensors and deep learning for traffic sign recognition systems. Expert Syst. Appl. 2017, 89, 286–295. [Google Scholar] [CrossRef]

- Wan, R.; Huang, Y.; Xie, R.; Ma, P. Combined Lane Mapping Using a Mobile Mapping System. Remote Sens. 2019, 11, 305. [Google Scholar] [CrossRef] [Green Version]

- Microsoft Azure. Available online: https://azure.microsoft.com/en-us/services/kinect-dk/ (accessed on 11 February 2022).

- Intel RealSense. Available online: https://www.intelrealsense.com/ (accessed on 11 February 2022).

- Teledyne FLIR LLC. Available online: http://www.flir.com/ (accessed on 11 February 2022).

- El-Hashash, M.M.; Aly, H.A. High-speed video haze removal algorithm for embedded systems. J. Real-Time Image Process. 2019, 16, 1117–1128. [Google Scholar] [CrossRef]

- Li, B.; Ren, W.; Fu, D.; Tao, D.; Feng, D.; Zeng, W.; Wang, Z. Benchmarking Single-Image Dehazing and Beyond. IEEE Trans. Image Process. 2019, 28, 492–505. [Google Scholar] [CrossRef] [Green Version]

- Remondino, F.; Fraser, C. Digital camera calibration methods: Considerations and comparisons. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2006, 36, 266–272. [Google Scholar]

- Lee, H.S.; Lee, K.M. Dense 3D Reconstruction from Severely Blurred Images Using a Single Moving Camera. In Proceedings of the 2013 IEEE Conference on Computer Vision and Pattern Recognition, Portland, OR, USA, 23–28 June 2013; pp. 273–280. [Google Scholar]

- Blaser, S.; Meyer, J.; Nebiker, S.; Fricker, L.; Weber, D. Centimetre-accuracy in forests and urban canyons—Combining a high-performance image-based mobile mapping backpack with new georeferencing methods. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2020, V-1-2020, 333–341. [Google Scholar] [CrossRef]

- Kukko, A.; Kaartinen, H.; Hyyppä, J.; Chen, Y. Multiplatform Mobile Laser Scanning: Usability and Performance. Sensors 2012, 12, 11712–11733. [Google Scholar] [CrossRef] [Green Version]

- Karam, S.; Vosselman, G.; Peter, M.; Hosseinyalamdary, S.; Lehtola, V. Design, Calibration, and Evaluation of a Backpack Indoor Mobile Mapping System. Remote Sens. 2019, 11, 905. [Google Scholar] [CrossRef] [Green Version]

- Wen, C.; Dai, Y.; Xia, Y.; Lian, Y.; Tan, J.; Wang, C.; Li, J. Toward Efficient 3-D Colored Mapping in GPS-/GNSS-Denied Environments. IEEE Geosci. Remote Sens. Lett. 2020, 17, 147–151. [Google Scholar] [CrossRef]

- Fassi, F.; Perfetti, L. Backpack mobile mapping solution for dtm extraction of large inaccessible spaces. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2019, XLII-2/W15, 473–480. [Google Scholar] [CrossRef] [Green Version]

- Ilci, V.; Toth, C. High Definition 3D Map Creation Using GNSS/IMU/LiDAR Sensor Integration to Support Autonomous Vehicle Navigation. Sensors 2020, 20, 899. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Optech. Available online: http://www.teledyneoptech.com/ (accessed on 15 February 2022).

- Topcon Positioning Systems, Inc. Available online: http://topconpositioning.com/ (accessed on 15 February 2022).

- Hi-Target Navigation Technology Corporation. Available online: https://en.hi-target.com.cn/ (accessed on 15 February 2022).

- VIAMETRIS. Available online: https://viametris.com/ (accessed on 15 February 2022).

- Wu, H.; Yao, L.; Xu, Z.; Li, Y.; Ao, X.; Chen, Q.; Li, Z.; Meng, B. Road pothole extraction and safety evaluation by integration of point cloud and images derived from mobile mapping sensors. Adv. Eng. Inform. 2019, 42, 100936. [Google Scholar] [CrossRef]

- Sairam, N.; Nagarajan, S.; Ornitz, S. Development of Mobile Mapping System for 3D Road Asset Inventory. Sensors 2016, 16, 367. [Google Scholar] [CrossRef] [Green Version]

- Voelsen, M.; Schachtschneider, J.; Brenner, C. Classification and Change Detection in Mobile Mapping LiDAR Point Clouds. J. Photogramm. Remote Sens. Geoinf. Sci. 2021, 89, 195–207. [Google Scholar] [CrossRef]

- Qin, R.; Tian, J.; Reinartz, P. 3D change detection—Approaches and applications. ISPRS J. Photogramm. Remote Sens. 2016, 122, 41–56. [Google Scholar] [CrossRef] [Green Version]

- Kremer, J.; Grimm, A. The Railmapper—A Dedicated Mobile Lidar Mapping System for Railway Networks. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2012, 39-B5, 477–482. [Google Scholar] [CrossRef] [Green Version]

- Cahalane, C.; McElhinney, C.P.; McCarthy, T. Mobile mapping system performance-an analysis of the effect of laser scanner configuration and vehicle velocity on scan profiles. In Proceedings of the European Laser Mapping Forum, The Hague, The Netherlands, 10 December 2010. [Google Scholar]

- Scotti, F.; Onori, D.; Scaffardi, M.; Lazzeri, E.; Bogoni, A.; Laghezza, F. Multi-Frequency Lidar/Radar Integrated System for Robust and Flexible Doppler Measurements. IEEE Photonics Technol. Lett. 2015, 27, 2268–2271. [Google Scholar] [CrossRef]

- Lauterbach, H.A.; Borrmann, D.; Hess, R.; Eck, D.; Schilling, K.; Nuchter, A. Evaluation of a Backpack-Mounted 3D Mobile Scanning System. Remote Sens. 2015, 7, 13753–13781. [Google Scholar] [CrossRef] [Green Version]

- Debeunne, C.; Vivet, D. A Review of Visual-LiDAR Fusion based Simultaneous Localization and Mapping. Sensors 2020, 20, 2068. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- GEXCEL. Available online: https://gexcel.it/ (accessed on 15 February 2022).

- GeoSLAM Limited. Available online: http://www.geoslam.com/ (accessed on 15 February 2022).

- NavVis. Available online: https://www.navvis.com/ (accessed on 15 February 2022).

- Wen, C.L.; Pan, S.Y.; Wang, C.; Li, J. An Indoor Backpack System for 2-D and 3-D Mapping of Building Interiors. IEEE Geosci. Remote Sens. Lett. 2016, 13, 992–996. [Google Scholar] [CrossRef]

- Raval, S.; Banerjee, B.P.; Singh, S.K.; Canbulat, I. A Preliminary Investigation of Mobile Mapping Technology for Underground Mining. In Proceedings of the IEEE International Geoscience and Remote Sensing Symposium, Yokohama, Japan, 28 July–2 August 2019; pp. 6071–6074. [Google Scholar]

- Zlot, R.; Bosse, M.; Greenop, K.; Jarzab, Z.; Juckes, E.; Roberts, J. Efficiently capturing large, complex cultural heritage sites with a handheld mobile 3D laser mapping system. J. Cult. Herit. 2014, 15, 670–678. [Google Scholar] [CrossRef]

- Puche, J.M.; Macias Solé, J.; Sola-Morales, P.; Toldrà, J.; Fernandez, I. Mobile mapping and laser scanner to interrelate the city and its heritage of Roman Circus of Tarragona. In Proceedings of the 3rd International Conference on Preservation, Maintenance and Rehabilitation of Historical Buildings and Structures, Braga, Portugal, 14–16 June 2017; pp. 21–28. [Google Scholar]

- Nespeca, R. Towards a 3D digital model for management and fruition of Ducal Palace at Urbino. An integrated survey with mobile mapping. SCIRES-IT-SCIentific RESearch Inf. Technol. 2019, 8, 1–14. [Google Scholar] [CrossRef]

- Vatandaşlar, C.; Zeybek, M. Extraction of forest inventory parameters using handheld mobile laser scanning: A case study from Trabzon, Turkey. Measurement 2021, 177, 109328. [Google Scholar] [CrossRef]

- Ryding, J.; Williams, E.; Smith, M.J.; Eichhorn, M.P. Assessing Handheld Mobile Laser Scanners for Forest Surveys. Remote Sens. 2015, 7, 1095–1111. [Google Scholar] [CrossRef] [Green Version]

- Previtali, M.; Banfi, F.; Brumana, R. Handheld 3D Mobile Scanner (SLAM): Data Simulation and Acquisition for BIM Modelling. In R3 in Geomatics: Research, Results and Review; Springer: Cham, Switzerland, 2020; pp. 256–266. [Google Scholar]

- Maset, E.; Cucchiaro, S.; Cazorzi, F.; Crosilla, F.; Fusiello, A.; Beinat, A. Investigating the performance of a handheld mobile mapping system in different outdoor scenarios. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2021, 43, 103–109. [Google Scholar] [CrossRef]

- Karam, S.; Peter, M.; Hosseinyalamdary, S.; Vosselman, G. An evaluation pipeline for indoor laser scanning point clouds. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2018, IV-1, 85–92. [Google Scholar] [CrossRef] [Green Version]

- Faro Technologies. Available online: https://www.faro.com/ (accessed on 15 February 2022).

- Kubelka, V.; Oswald, L.; Pomerleau, F.; Colas, F.; Svoboda, T.; Reinstein, M. Robust Data Fusion of Multimodal Sensory Information for Mobile Robots. J. Field Robot. 2015, 32, 447–473. [Google Scholar] [CrossRef] [Green Version]

- Simanek, J.; Kubelka, V.; Reinstein, M. Improving multi-modal data fusion by anomaly detection. Auton. Robot. 2015, 39, 139–154. [Google Scholar] [CrossRef]

- Ravi, R.; Lin, Y.-J.; Elbahnasawy, M.; Shamseldin, T.; Habib, A. Simultaneous system calibration of a multi-lidar multicamera mobile mapping platform. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2018, 11, 1694–1714. [Google Scholar] [CrossRef]

- Simon, D. Optimal State Estimation: Kalman, H Infinity, and Nonlinear Approaches; John Wiley & Sons: Hoboken, NJ, USA, 2006. [Google Scholar]

- Kalman, R.E. A New Approach to Linear Filtering and Prediction Problems. J. Basic Eng. 1960, 82, 35–45. [Google Scholar] [CrossRef] [Green Version]

- Farrell, J.; Barth, M. The Global Positioning System and Inertial Navigation; Mcgraw-Hill: New York, NY, USA, 1999; Volume 61. [Google Scholar]

- Vanicek, P.; Omerbašic, M. Does a navigation algorithm have to use a Kalman filter? Can. Aeronaut. Space J. 1999, 45, 292–296. [Google Scholar]

- Zarchan, P.; Musoff, H. Fundamentals of Kalman Filtering—A Practical Approach, 4th ed.; ARC: Reston, VA, USA, 2015; Volume 246. [Google Scholar]

- Ristic, B.; Arulampalam, S.; Gordon, N. Beyond the Kalman Filter: Particle Filters for Tracking Applications; Artech House: London, UK, 2003. [Google Scholar]

- Durrant-Whyte, H.; Bailey, T. Simultaneous localization and mapping: Part I. IEEE Robot. Autom. Mag. 2006, 13, 99–108. [Google Scholar] [CrossRef] [Green Version]

- Del Moral, P.; Doucet, A. Particle methods: An introduction with applications. ESAIM Proc. 2014, 44, 1–46. [Google Scholar] [CrossRef]

- Georgy, J.; Karamat, T.; Iqbal, U.; Noureldin, A. Enhanced MEMS-IMU/odometer/GPS integration using mixture particle filter. GPS Solut. 2011, 15, 239–252. [Google Scholar] [CrossRef]

- Brown, D.C. Decentering distortion of lenses. Photogramm. Eng. Remote Sens. 1966, 32, 444–462. [Google Scholar]

- Gruen, A.; Beyer, H.A. System calibration through self-calibration. In Calibration and Orientation of Cameras in Computer Vision; Armin Gruen, T.S.H., Ed.; Springer: Berlin/Heidelberg, Germany, 2001; Volume 34, pp. 163–193. [Google Scholar]

- Brown, D.C. Close-range camera calibration. Photogramm. Eng. 1971, 37, 855–866. [Google Scholar]

- Fraser, C.S. Automatic Camera Calibration in Close Range Photogrammetry. Photogramm. Eng. Remote Sens. 2013, 79, 381–388. [Google Scholar] [CrossRef] [Green Version]

- Zhang, Z. A flexible new technique for camera calibration. IEEE Trans. Pattern Anal. Mach. Intell. 2000, 22, 1330–1334. [Google Scholar] [CrossRef] [Green Version]

- Tungadi, F.; Kleeman, L. Time synchronisation and calibration of odometry and range sensors for high-speed mobile robot mapping. In Proceedings of the Australasian Conference on Robotics and Automation, Canberra, Australia, 3–5 December 2008. [Google Scholar]

- Madeira, S.; Gonçalves, J.A.; Bastos, L. Sensor integration in a low cost land mobile mapping system. Sensors 2012, 12, 2935–2953. [Google Scholar] [CrossRef]

- Shim, I.; Shin, S.; Bok, Y.; Joo, K.; Choi, D.-G.; Lee, J.-Y.; Park, J.; Oh, J.-H.; Kweon, I.S. Vision system and depth processing for DRC-HUBO+. In Proceedings of the 2016 IEEE International Conference on Robotics and Automation (ICRA), Stockholm, Sweden, 16–21 May 2016; pp. 2456–2463. [Google Scholar]

- Liu, X.; Neuyen, M.; Yan, W.Q. Vehicle-Related Scene Understanding Using Deep Learning. In Pattern Recognition; Springer: Singapore, 2020; pp. 61–73. [Google Scholar]

- Hofmarcher, M.; Unterthiner, T.; Arjona-Medina, J.; Klambauer, G.; Hochreiter, S.; Nessler, B. Visual Scene Understanding for Autonomous Driving Using Semantic Segmentation. In Explainable AI: Interpreting, Explaining and Visualizing Deep Learning; Samek, W., Montavon, G., Vedaldi, A., Hansen, L.K., Müller, K.-R., Eds.; Springer International Publishing: Cham, Switzerland, 2019; pp. 285–296. [Google Scholar]

- Pintore, G.; Ganovelli, F.; Gobbetti, E.; Scopigno, R. Mobile Mapping and Visualization of Indoor Structures to Simplify Scene Understanding and Location Awareness. In Proceedings of the Computer Vision—ECCV 2016 Workshops, Amsterdam, The Netherlands, 8–10, 15–16 October 2016; pp. 130–145. [Google Scholar]

- Wald, J.; Tateno, K.; Sturm, J.; Navab, N.; Tombari, F. Real-Time Fully Incremental Scene Understanding on Mobile Platforms. IEEE Robot. Autom. Lett. 2018, 3, 3402–3409. [Google Scholar] [CrossRef]

- Wu, Z.; Deng, X.; Li, S.; Li, Y. OC-SLAM: Steadily Tracking and Mapping in Dynamic Environments. Front. Energy Res. 2021, 9, 803631. [Google Scholar] [CrossRef]

- Csurka, G. A Comprehensive Survey on Domain Adaptation for Visual Applications. In Domain Adaptation in Computer Vision Applications; Csurka, G., Ed.; Springer International Publishing: Cham, Switzerland, 2017; pp. 1–35. [Google Scholar]

- Dvornik, N.; Shmelkov, K.; Mairal, J.; Schmid, C. BlitzNet: A Real-Time Deep Network for Scene Understanding. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 4174–4182. [Google Scholar]

- Howard, A.G.; Zhu, M.; Chen, B.; Kalenichenko, D.; Wang, W.; Weyand, T.; Andreetto, M.; Adam, H. MobileNets: Efficient convolutional neural networks for mobile vision applications. arXiv 2017, arXiv:1704.04861. [Google Scholar]

- Schön, M.; Buchholz, M.; Dietmayer, K. MGNet: Monocular Geometric Scene Understanding for Autonomous Driving. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 10–17 October 2021; pp. 15784–15795. [Google Scholar]

- Chen, K.; Oldja, R.; Smolyanskiy, N.; Birchfield, S.; Popov, A.; Wehr, D.; Eden, I.; Pehserl, J. MVLidarNet: Real-Time Multi-Class Scene Understanding for Autonomous Driving Using Multiple Views. In Proceedings of the 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Las Vegas, NV, USA, 24 October 2020–24 January 2021; pp. 2288–2294. [Google Scholar]

- Sánchez-Rodríguez, A.; Soilán, M.; Cabaleiro, M.; Arias, P. Automated Inspection of Railway Tunnels’ Power Line Using LiDAR Point Clouds. Remote Sens. 2019, 11, 2567. [Google Scholar] [CrossRef] [Green Version]

- Zhang, S.; Wang, C.; Yang, Z.; Chen, Y.; Li, J. Automatic railway power line extraction using mobile laser scanning data. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2016, XLI-B5, 615–619. [Google Scholar] [CrossRef] [Green Version]

- Stricker, R.; Eisenbach, M.; Sesselmann, M.; Debes, K.; Gross, H.M. Improving Visual Road Condition Assessment by Extensive Experiments on the Extended GAPs Dataset. In Proceedings of the International Joint Conference on Neural Networks, Budapest, Hungary, 14–19 July 2019; pp. 1–8. [Google Scholar]

- Eisenbach, M.; Stricker, R.; Sesselmann, M.; Seichter, D.; Gross, H. Enhancing the quality of visual road condition assessment by deep learning. In Proceedings of the World Road Congress, Abu Dhabi, United Arab Emirates, 6–10 October 2019. [Google Scholar]

- Aoki, K.; Yamamoto, K.; Shimamura, H. Evaluation model for pavement surface distress on 3D point clouds from mobile mapping system. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2012, XXXIX-B3, 87–90. [Google Scholar] [CrossRef] [Green Version]

- Ortiz-Coder, P.; Sánchez-Ríos, A. A Self-Assembly Portable Mobile Mapping System for Archeological Reconstruction Based on VSLAM-Photogrammetric Algorithm. Sensors 2019, 19, 3952. [Google Scholar] [CrossRef] [Green Version]

- Costin, A.; Adibfar, A.; Hu, H.; Chen, S.S. Building Information Modeling (BIM) for transportation infrastructure—Literature review, applications, challenges, and recommendations. Autom. Constr. 2018, 94, 257–281. [Google Scholar] [CrossRef]

- Laituri, M.; Kodrich, K. On Line Disaster Response Community: People as Sensors of High Magnitude Disasters Using Internet GIS. Sensors 2008, 8, 3037–3055. [Google Scholar] [CrossRef]

- Gusella, L.; Adams, B.; Bitelli, G. Use of mobile mapping technology for post-disaster damage information collection and integration with remote sensing imagery. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2007, 34, 1–8. [Google Scholar]

- Saarinen, N.; Vastaranta, M.; Vaaja, M.; Lotsari, E.; Jaakkola, A.; Kukko, A.; Kaartinen, H.; Holopainen, M.; Hyyppä, H.; Alho, P. Area-Based Approach for Mapping and Monitoring Riverine Vegetation Using Mobile Laser Scanning. Remote Sens. 2013, 5, 5285–5303. [Google Scholar] [CrossRef] [Green Version]

- Monnier, F.; Vallet, B.; Soheilian, B. Trees detection from laser point clouds acquired in dense urban areas by a mobile mapping system. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2012, I-3, 245–250. [Google Scholar] [CrossRef] [Green Version]

- Holopainen, M.; Vastaranta, M.; Kankare, V.; Hyyppä, H.; Vaaja, M.; Hyyppä, J.; Liang, X.; Litkey, P.; Yu, X.; Kaartinen, H.; et al. The use of ALS, TLS and VLS measurements in mapping and monitoring urban trees. In Proceedings of the Joint Urban Remote Sensing Event, Munich, Germany, 11–13 April 2011; pp. 29–32. [Google Scholar]

- Jaakkola, A.; Hyyppä, J.; Kukko, A.; Yu, X.; Kaartinen, H.; Lehtomäki, M.; Lin, Y. A low-cost multi-sensoral mobile mapping system and its feasibility for tree measurements. ISPRS J. Photogramm. Remote Sens. 2010, 65, 514–522. [Google Scholar] [CrossRef]

- Sánchez-Aparicio, L.J.; Mora, R.; Conde, B.; Maté-González, M.Á.; Sánchez-Aparicio, M.; González-Aguilera, D. Integration of a Wearable Mobile Mapping Solution and Advance Numerical Simulations for the Structural Analysis of Historical Constructions: A Case of Study in San Pedro Church (Palencia, Spain). Remote Sens. 2021, 13, 1252. [Google Scholar] [CrossRef]

- Barba, S.; Ferreyra, C.; Cotella, V.A.; Filippo, A.d.; Amalfitano, S. A SLAM Integrated Approach for Digital Heritage Documentation. In Proceedings of the International Conference on Human-Computer Interaction, Washington, DC, USA, 24–29 July 2021; pp. 27–39. [Google Scholar]

- Malinverni, E.S.; Pierdicca, R.; Bozzi, C.A.; Bartolucci, D. Evaluating a SLAM-Based Mobile Mapping System: A Methodological Comparison for 3D Heritage Scene Real-Time Reconstruction. In Proceedings of the Metrology for Archaeology and Cultural Heritage, Cassino, Italy, 22–24 October 2018; pp. 265–270.

- Jan, J.-F. Digital heritage inventory using open source geospatial software. In Proceedings of the 22nd International Conference on Virtual System & Multimedia, Kuala Lumpur, Malaysia, 17–21 October 2016; pp. 1–8.

- Radopoulou, S.C.; Brilakis, I. Improving Road Asset Condition Monitoring. Transp. Res. Procedia 2016, 14, 3004–3012.

- Douangphachanh, V.; Oneyama, H. Using smartphones to estimate road pavement condition. In Proceedings of the International Symposium for Next Generation Infrastructure, Wollongong, Australia, 1–4 October 2013.

- Koloushani, M.; Ozguven, E.E.; Fatemi, A.; Tabibi, M. Mobile Mapping System-based Methodology to Perform Automated Road Safety Audits to Improve Horizontal Curve Safety on Rural Roadways. Comput. Res. Prog. Appl. Sci. Eng. (CRPASE) 2020, 6, 263–275.

- Agina, S.; Shalkamy, A.; Gouda, M.; El-Basyouny, K. Automated Assessment of Passing Sight Distance on Rural Highways using Mobile LiDAR Data. Transp. Res. Rec. 2021, 2675, 676–688.

- Nikoohemat, S.; Diakité, A.A.; Zlatanova, S.; Vosselman, G. Indoor 3D reconstruction from point clouds for optimal routing in complex buildings to support disaster management. Autom. Constr. 2020, 113, 103109.

- Bienert, A.; Georgi, L.; Kunz, M.; Von Oheimb, G.; Maas, H.-G. Automatic extraction and measurement of individual trees from mobile laser scanning point clouds of forests. Ann. Bot. 2021, 128, 787–804.

- Holopainen, M.; Kankare, V.; Vastaranta, M.; Liang, X.; Lin, Y.; Vaaja, M.; Yu, X.; Hyyppä, J.; Hyyppä, H.; Kaartinen, H.; et al. Tree mapping using airborne, terrestrial and mobile laser scanning: A case study in a heterogeneous urban forest. Urban For. Urban Green. 2013, 12, 546–553.

- Rutzinger, M.; Pratihast, A.K.; Oude Elberink, S.; Vosselman, G. Detection and modelling of 3D trees from mobile laser scanning data. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2010, 38, 520–525.

- Pratihast, A.K.; Thakur, J.K. Urban Tree Detection Using Mobile Laser Scanning Data. In Geospatial Techniques for Managing Environmental Resources; Thakur, J.K., Singh, S.K., Ramanathan, A.L., Prasad, M.B.K., Gossel, W., Eds.; Springer: Dordrecht, The Netherlands, 2011; pp. 188–200.

- Herrero-Huerta, M.; Lindenbergh, R.; Rodríguez-Gonzálvez, P. Automatic tree parameter extraction by a Mobile LiDAR System in an urban context. PLoS ONE 2018, 13, e0196004.

- Hassani, F. Documentation of cultural heritage; techniques, potentials, and constraints. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2015, XL-5/W7, 207–214.

- Di Stefano, F.; Chiappini, S.; Gorreja, A.; Balestra, M.; Pierdicca, R. Mobile 3D scan LiDAR: A literature review. Geomat. Nat. Hazards Risk 2021, 12, 2387–2429.

- Parsizadeh, F.; Ibrion, M.; Mokhtari, M.; Lein, H.; Nadim, F. Bam 2003 earthquake disaster: On the earthquake risk perception, resilience and earthquake culture: Cultural beliefs and cultural landscape of Qanats, gardens of Khorma trees and Argh-e Bam. Int. J. Disaster Risk Reduct. 2015, 14, 457–469.

- Remondino, F. Heritage Recording and 3D Modeling with Photogrammetry and 3D Scanning. Remote Sens. 2011, 3, 1104–1138.

- Rodríguez-Gonzálvez, P.; Jiménez Fernández-Palacios, B.; Muñoz-Nieto, Á.L.; Arias-Sanchez, P.; Gonzalez-Aguilera, D. Mobile LiDAR System: New Possibilities for the Documentation and Dissemination of Large Cultural Heritage Sites. Remote Sens. 2017, 9, 189.

- Moretti, N.; Ellul, C.; Re Cecconi, F.; Papapesios, N.; Dejaco, M.C. GeoBIM for built environment condition assessment supporting asset management decision making. Autom. Constr. 2021, 130, 103859.

- Mora, R.; Sánchez-Aparicio, L.J.; Maté-González, M.Á.; García-Álvarez, J.; Sánchez-Aparicio, M.; González-Aguilera, D. An historical building information modelling approach for the preventive conservation of historical constructions: Application to the Historical Library of Salamanca. Autom. Constr. 2021, 121, 103449.

- Chen, Y.; Chen, Y. Reliability Evaluation of Sight Distance on Mountainous Expressway Using 3D Mobile Mapping. In Proceedings of the 2019 5th International Conference on Transportation Information and Safety (ICTIS), Liverpool, UK, 14–17 July 2019; pp. 1–7.

| Sensor | Description | Benefits | Limitations |
|---|---|---|---|
| GNSS receiver | The GNSS receiver uses signals from orbiting satellites to compute position, velocity, and elevation. Examples of GNSS include GPS, GLONASS, Galileo, and BeiDou. | | |
| IMU | An egocentric sensor that records the relative orientation and directional acceleration of the host platform. | | |
| DMI | A supplementary positioning sensor that measures the distance traveled by the platform, i.e., information derived from a speedometer. | | |

| Type | Company | Model | Range (m) | Range Accuracy (cm) | Number of Beams | Horizontal FoV (°) | Vertical FoV (°) | Horizontal Resolution (°) | Vertical Resolution (°) | Points Per Second | Refresh Rate (Hz) |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Rotating | RIEGL | VQ-250 | 1.5–500 | 0.1 | — | 360 | — | — | — | 300,000 | — |
| Rotating | RIEGL | VQ-450 | 1.5–800 | 0.8 | — | 360 | — | — | — | 550,000 | — |
| Rotating | Trimble | MX50 laser scanner | 0.6–80 | 0.2 | — | 360 | — | — | — | 960,000 | — |
| Rotating | Trimble | MX9 laser scanner | 1.2–420 | 0.5 | — | 360 | — | — | — | 1,000,000 | — |
| Rotating | Velodyne | HDL-64E | 120 | ±2 | 64 | 360 | 26.9 | 0.08 to 0.35 | 0.4 | 1,300,000 | 5 to 20 |
| Rotating | Velodyne | HDL-32E | 100 | ±2 | 32 | 360 | 41.33 | 0.08 to 0.33 | 1.33 | 695,000 | 5 to 20 |
| Rotating | Velodyne | Puck | 100 | ±3 | 16 | 360 | 30 | 0.1 to 0.4 | 2.0 | 300,000 | 5 to 20 |
| Rotating | Velodyne | Puck LITE | 100 | ±3 | 16 | 360 | 30 | 0.1 to 0.4 | 2.0 | 300,000 | 5 to 20 |
| Rotating | Velodyne | Puck Hi-Res | 100 | ±3 | 16 | 360 | 20 | 0.1 to 0.4 | 1.33 | 300,000 | 5 to 20 |
| Rotating | Velodyne | Puck 32MR | 120 | ±3 | 32 | 360 | 40 | 0.1 to 0.4 | 0.33 (min) | 600,000 | 5 to 20 |
| Rotating | Velodyne | Ultra Puck | 200 | ±3 | 32 | 360 | 40 | 0.1 to 0.4 | 0.33 (min) | 600,000 | 5 to 20 |
| Rotating | Velodyne | Alpha Prime | 245 | ±3 | 128 | 360 | 40 | 0.1 to 0.4 | 0.11 (min) | 2,400,000 | 5 to 20 |
| Rotating | Ouster | OS2-32 | 1 to 240 | ±2.5 to ±8 | 32 | 360 | 22.5 | 0.18 | 0.7 | 655,000 | 10, 20 |
| Rotating | Ouster | OS2-64 | 1 to 240 | ±2.5 to ±8 | 64 | 360 | 22.5 | 0.18 | 0.36 | 1,311,000 | 10, 20 |
| Rotating | Ouster | OS2-128 | 1 to 240 | ±2.5 to ±8 | 128 | 360 | 22.5 | 0.18 | 0.18 | 2,621,000 | 10, 20 |
| Rotating | Hesai | PandarQT | 0.1 to 60 | ±3 | 64 | 360 | 104.2 | 0.6 | 1.45 | 384,000 | 10 |
| Rotating | Hesai | PandarXT | 0.05 to 120 | ±1 | 32 | 360 | 31 | 0.09, 0.18, 0.36 | 1 | 640,000 | 5, 10, 20 |
| Rotating | Hesai | Pandar40M | 0.3 to 120 | ±5 to ±2 | 40 | 360 | 40 | 0.2, 0.4 | 1, 2, 3, 4, 5, 6 | 720,000 | 10, 20 |
| Rotating | Hesai | Pandar64 | 0.3 to 200 | ±5 to ±2 | 64 | 360 | 40 | 0.2, 0.4 | 1, 2, 3, 4, 5, 6 | 1,152,000 | 10, 20 |
| Rotating | Hesai | Pandar128E3X | 0.3 to 200 | ±8 to ±2 | 128 | 360 | 40 | 0.1, 0.2, 0.4 | 0.125, 0.5, 1 | 3,456,000 | 10, 20 |
| Solid-state | Luminar | IRIS | Up to 600 | — | 640 lines/s | 120 | 0–26 | 0.05 | 0.05 | 300 points/square degree | 1 to 30 |
| Solid-state | Innoviz | InnovizOne | 250 | — | — | 115 | 25 | 0.1 | 0.1 | — | 5 to 20 |
| Solid-state | Innoviz | InnovizTwo | 300 | — | 8000 lines/s | 125 | 40 | 0.07 | 0.05 | — | 10 to 20 |
| Flash | LeddarTech | Pixell | Up to 56 | ±3 | — | 117.5 ± 2.5 | 16.0 ± 0.5 | — | — | — | 20 |
| Flash | Continental | HFL110 | 50 | — | — | 120 | 30 | — | — | — | 25 |

| Type | Description | Benefits | Limitations |
|---|---|---|---|
| Monocular | Single-lens camera. | | |
| Binocular | Two collocated cameras with known relative orientation capturing overlapping and synchronized images. | | |
| RGB-D | Cameras that capture RGB and depth images at the same time. | | |
| Multi-camera system | A spherical camera system with multiple cameras that can provide a 360° field of view. | | |
| Fisheye | Spherical lens camera that has more than 180° field of view. | | |

| Platform | System | Release Year | Indoor | Outdoor | Camera | LiDAR/Max. Range | IMU | GPS | Accuracy * | Applications |
|---|---|---|---|---|---|---|---|---|---|---|
| Vehicle-mounted | Leica Pegasus: Two Ultimate | 2018 | 🗶 | 🗸 | 360° FoV | ZF9012 profiler 360° × 41.33°/100 m | 🗸 | 🗸 | 2 cm horizontal accuracy; 1.5 cm vertical accuracy | |
| Vehicle-mounted | Teledyne Optech Lynx HS600-D | 2017 | 🗶 | 🗸 | 360° FoV | 2 Optech sensors/130 m | 🗸 | 🗸 | ±5 cm absolute accuracy | |
| Vehicle-mounted | Topcon IP-S3 HD1 | 2015 | 🗶 | 🗸 | 360° FoV | Velodyne HDL-32E LiDAR/100 m | 🗸 | 🗸 | 0.1 cm road surface accuracy (1 sigma) | |
| Vehicle-mounted | Hi-Target HiScan-C | 2017 | 🗶 | 🗸 | 360° FoV | 650 m | 🗸 | 🗸 | 5 cm at 40 m range | |
| Vehicle-mounted | Trimble MX7 | | 🗶 | 🗸 | 360° FoV | 🗶 | 🗸 | 🗸 | — | |
| Vehicle-mounted | Trimble MX50 | 2021 | 🗶 | 🗸 | 90% of a full sphere | 2 MX50 laser scanners/80 m | 🗸 | 🗸 | 0.2 cm (laser scanner) | |
| Vehicle-mounted | Trimble MX9 | 2018 | 🗶 | 🗸 | 1 spherical + 2 side-looking + 1 backward/downward camera | MX9 laser scanner/up to 420 m | 🗸 | 🗸 | 0.5 cm (laser scanner) | |
| Vehicle-mounted | Viametris vMS3D | 2016 | 🗶 | 🗸 | FLIR Ladybug5+ | Velodyne VLP-16 + Velodyne HDL-32E | 🗸 | 🗸 | 2–3 cm relative accuracy | |
| Handheld | HERON LITE Color | 2018 | 🗸 | 🗸 | 360° × 360° FoV | 1 Velodyne Puck/100 m | 🗸 | 🗶 | 3 cm relative accuracy | |
| Handheld | GeoSLAM Zeb Go | 2020 | 🗸 | 🗶 | Can be added as an accessory | Hokuyo UTM-30LX laser scanner/30 m | 🗶 | 🗶 | 1 to 3 cm relative accuracy | |
| Handheld | GeoSLAM Zeb Revo RT | 2015 | 🗸 | 🗶 | Can be added as an accessory | Hokuyo UTM-30LX laser scanner/30 m | 🗶 | 🗶 | 0.6 cm relative accuracy | |
| Handheld | GeoSLAM Zeb Horizon | 2018 | 🗸 | 🗸 | Can be added as an accessory | Velodyne Puck VLP-16/100 m | 🗶 | 🗶 | 0.6 cm relative accuracy | |
| Handheld | Leica BLK2GO | 2018 | 🗸 | 🗸 | 3-camera system, 300° × 150° FoV | 360° × 270°/up to 25 m | 🗶 | 🗶 | ±1 cm in an indoor environment with a scan duration of 2 min | |
| Wearable | Leica Pegasus: Backpack | 2017 | 🗸 | 🗸 | 360° × 200° FoV | Dual Velodyne VLP-16/100 m | 🗸 | 🗸 | 2 to 3 cm relative accuracy; 5 cm absolute accuracy | |
| Wearable | HERON MS Twin | 2020 | 🗸 | 🗸 | 360° × 360° FoV | Dual Velodyne Puck/100 m | 🗸 | 🗶 | 3 cm relative accuracy | |
| Wearable | NavVis VLX | 2021 | 🗸 | 🗸 | 360° FoV | Dual Velodyne Puck LITE/100 m | 🗸 | 🗶 | 0.6 cm absolute accuracy at 68% confidence; 1.5 cm absolute accuracy at 95% confidence | |
| Wearable | Viametris BMS3D-HD | 2019 | 🗸 | 🗸 | FLIR Ladybug5+ | 16-beam LiDAR + 32-beam LiDAR | 🗸 | 🗸 | 2 cm relative accuracy | |
| Trolley | NavVis M6 | 2018 | 🗸 | 🗶 | 360° FoV | 6 Velodyne Puck LITE/100 m | 🗸 | 🗶 | 0.57 cm absolute accuracy at 68% confidence; 1.38 cm absolute accuracy at 95% confidence | |
| Trolley | Leica ProScan | 2017 | 🗸 | 🗸 | 🗶 | Leica ScanStation P40, P30 or P16 | 🗸 | 🗸 | 0.12 cm (range accuracy for Leica ScanStation P40) | |
| Trolley | Trimble Indoor | 2015 | 🗸 | 🗶 | 360° FoV | Trimble TX-5, FARO Focus X-130, X-330, S-70-A, S-150-A, S-350-A | 🗸 | 🗶 | 1 cm relative accuracy when combined with FARO Focus X-130 | |
| Trolley | FARO Focus Swift | 2020 | 🗸 | 🗶 | HDR camera | FARO Focus Laser Scanner with a FARO ScanPlan 2D mapper | 🗸 | 🗶 | 0.2 cm relative accuracy at 10 m range; 0.1 cm absolute accuracy | |

| Selected Applications | Highlights |
|---|---|
| Road asset management and condition assessment | Extraction of road assets [79]; road condition assessment [133]; detection of pavement distress using deep learning [134]; evaluation of pavement surface distress for maintenance planning [135]. |
| BIM | Low-cost MMS for BIM of archeological reconstruction [136]; analysis of BIM for transportation infrastructure [137]. |
| Emergency and disaster response | Network-based GIS for disaster response [138]; analyzing post-disaster damage [139]. |
| Vegetation mapping and detection | Mapping and monitoring riverine vegetation [140]; tree detection and measurement [141,142,143]. |
| Digital Heritage Conservation | Mapping a complex heritage site using handheld MMS [92]; mapping a museum in a complex building [94]; numerical simulations for structural analysis of historical constructions [144]; digital heritage documentation [145]; mapping archaeological sites [146]; development of a digital heritage inventory system [147]. |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Elhashash, M.; Albanwan, H.; Qin, R. A Review of Mobile Mapping Systems: From Sensors to Applications. Sensors 2022, 22, 4262. https://doi.org/10.3390/s22114262
