A Novel Driving Noise Analysis Method for On-Road Traffic Detection

Abstract

1. Introduction

2. Materials and Methods

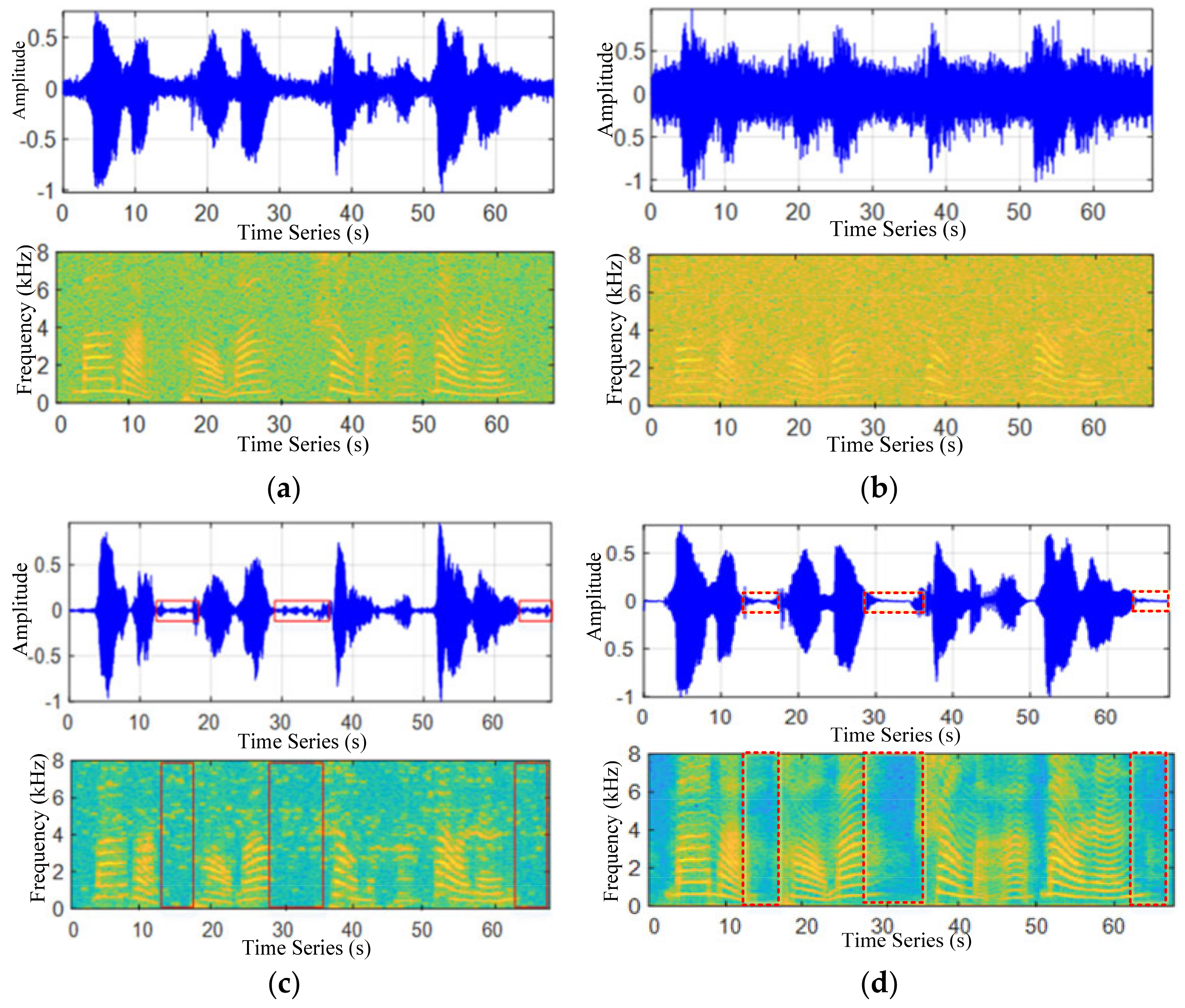

2.1. Pretreatment and Characteristic Analysis of Driving Noise

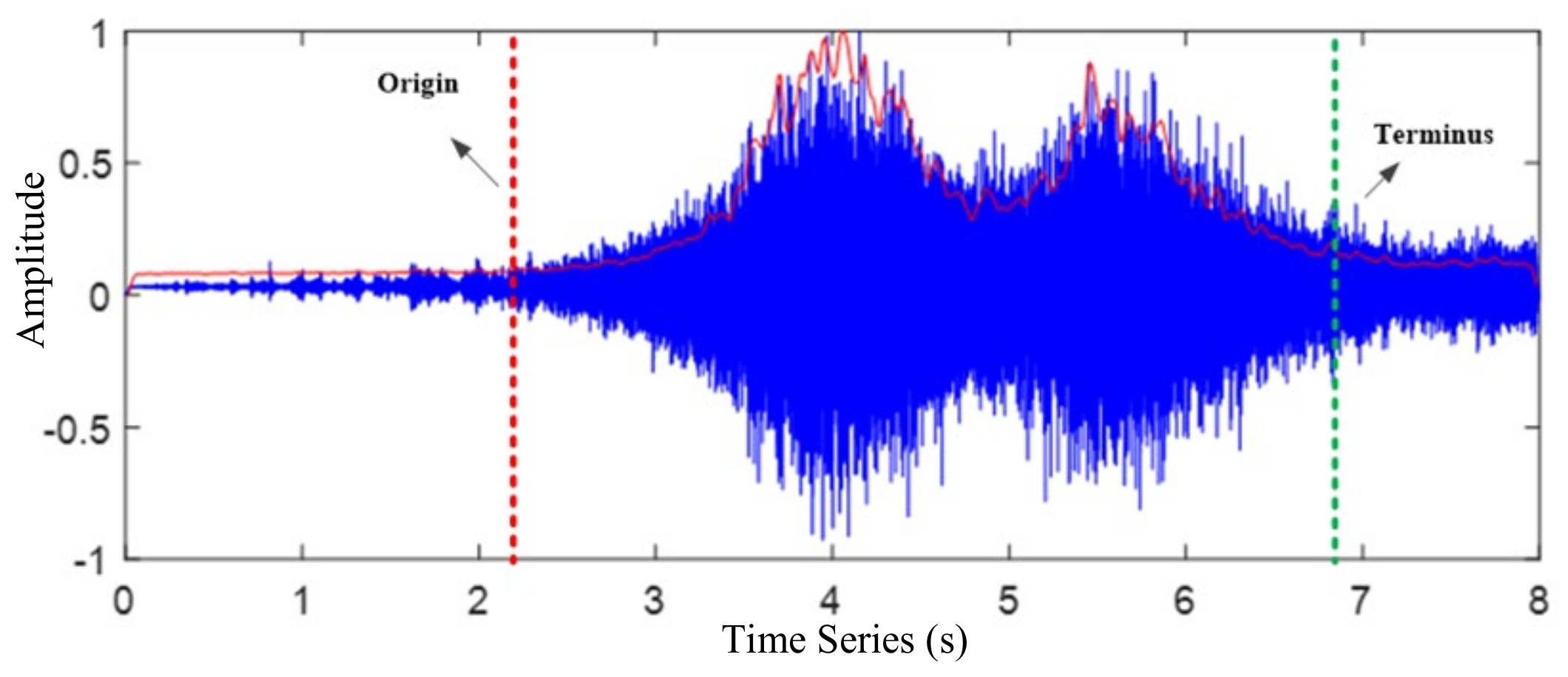

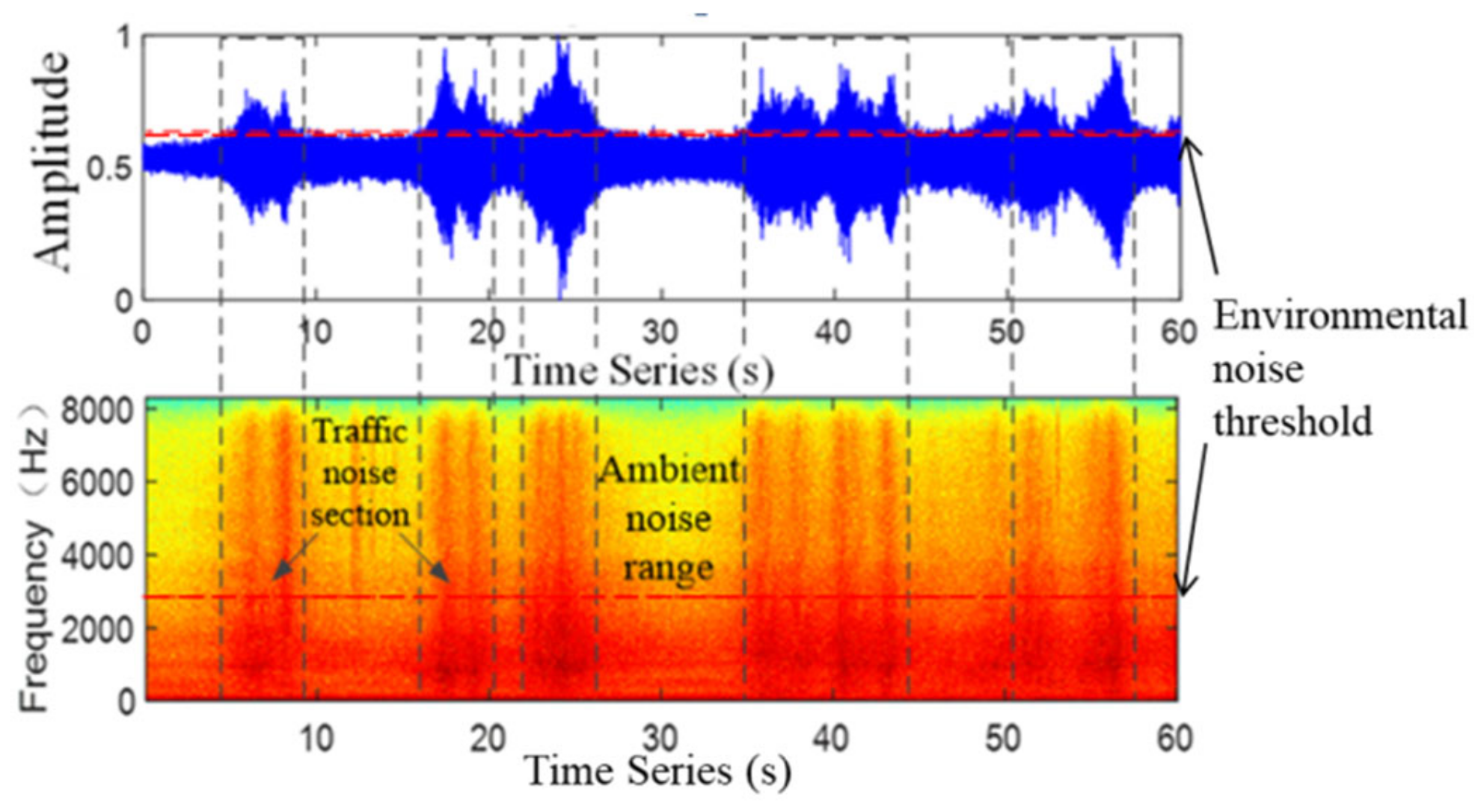

2.1.1. Pretreatment

2.1.2. Framing and Windowing

2.1.3. Normalized Processing

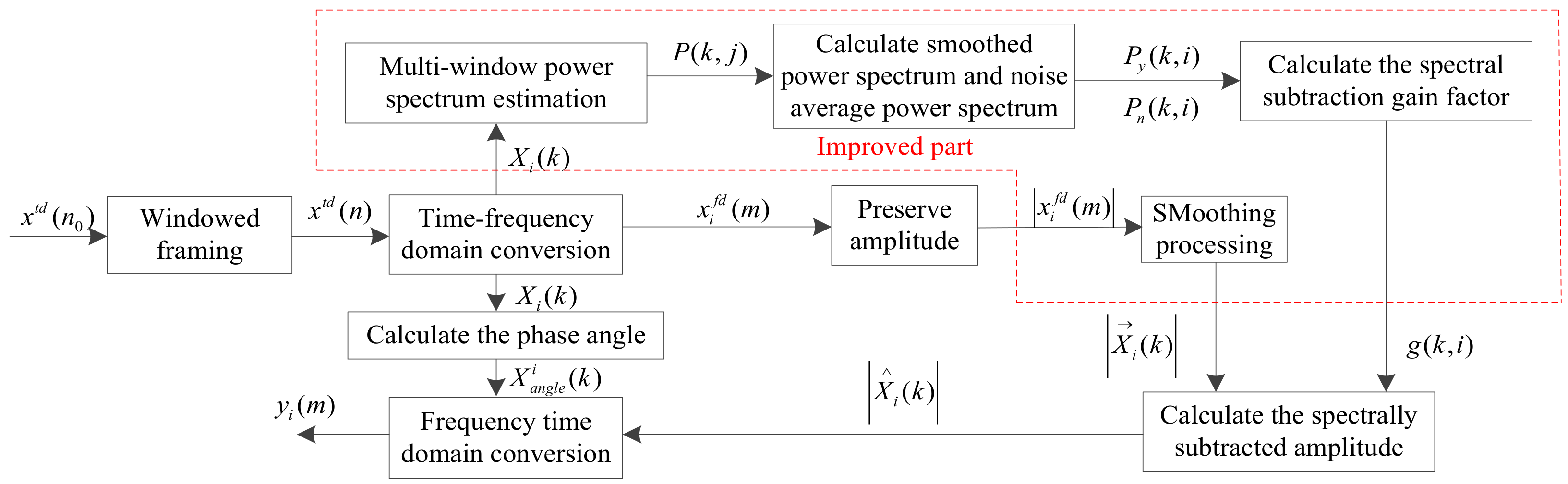

2.1.4. Improved Spectral Subtraction Noise Reduction

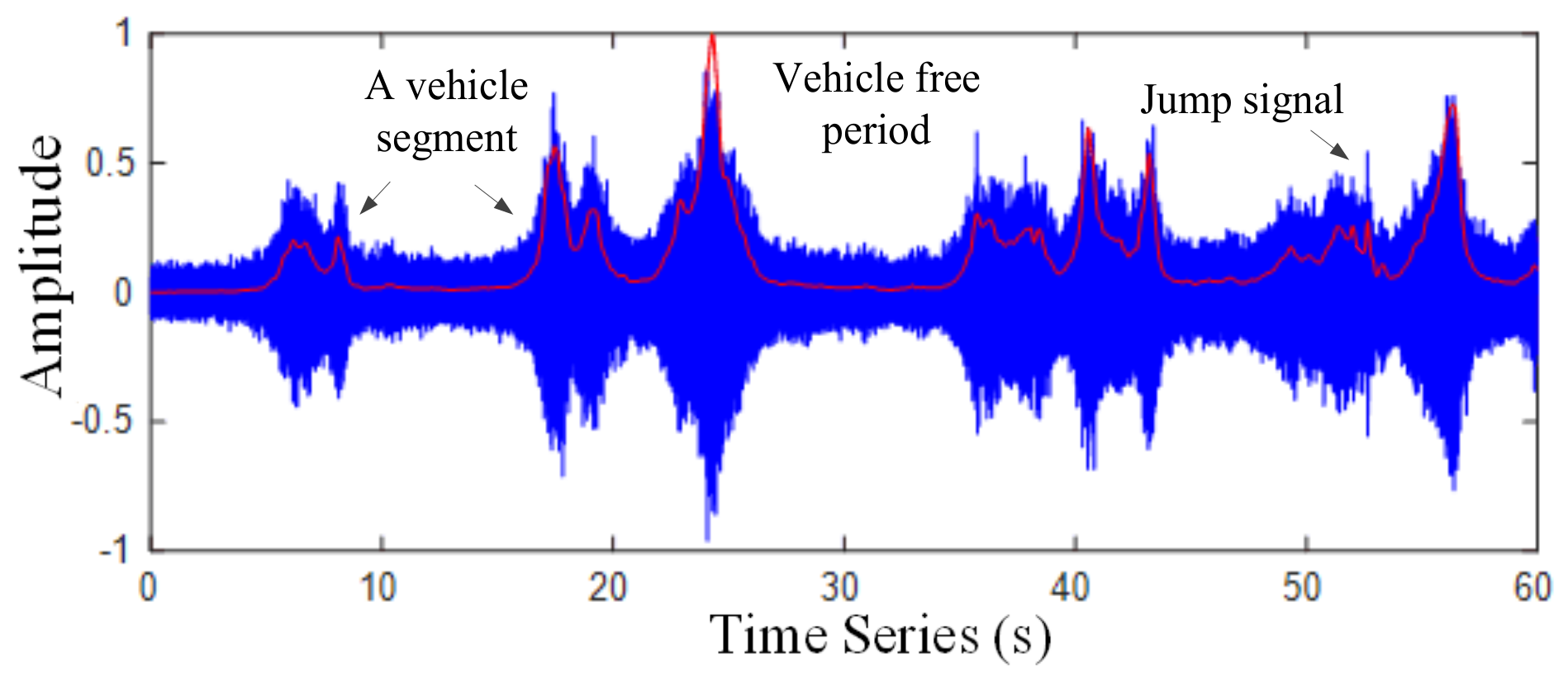

2.2. Traffic Detection Based on Triangle Wave Analysis of Traffic Noise

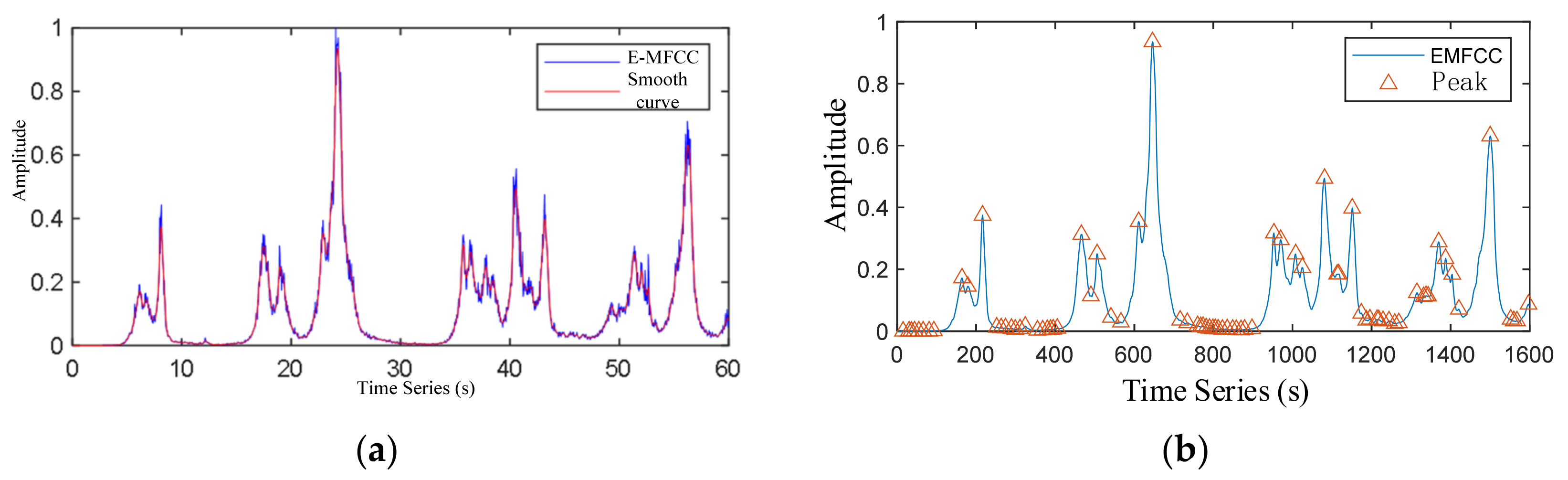

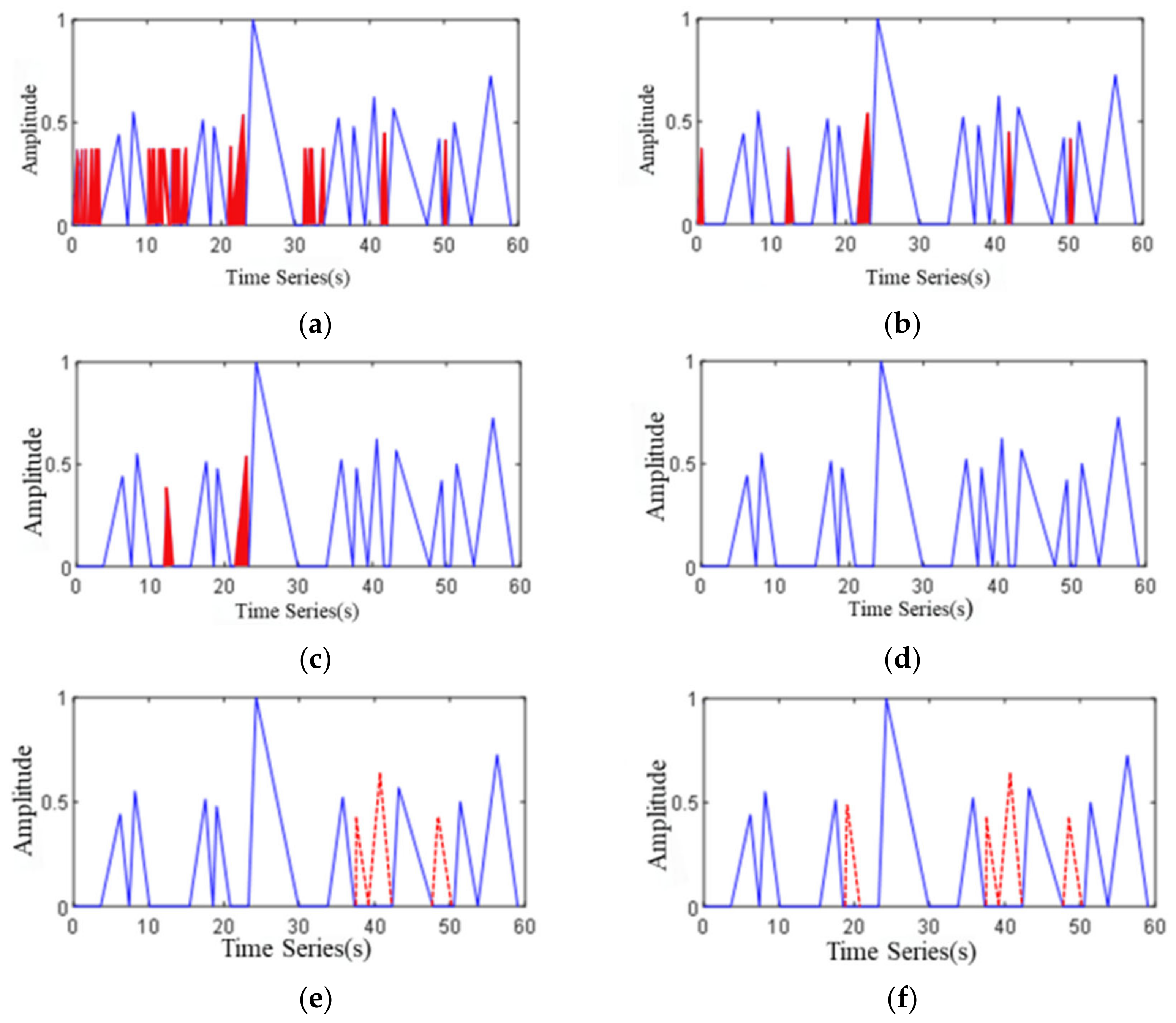

2.2.1. Feature Extraction and Fusion

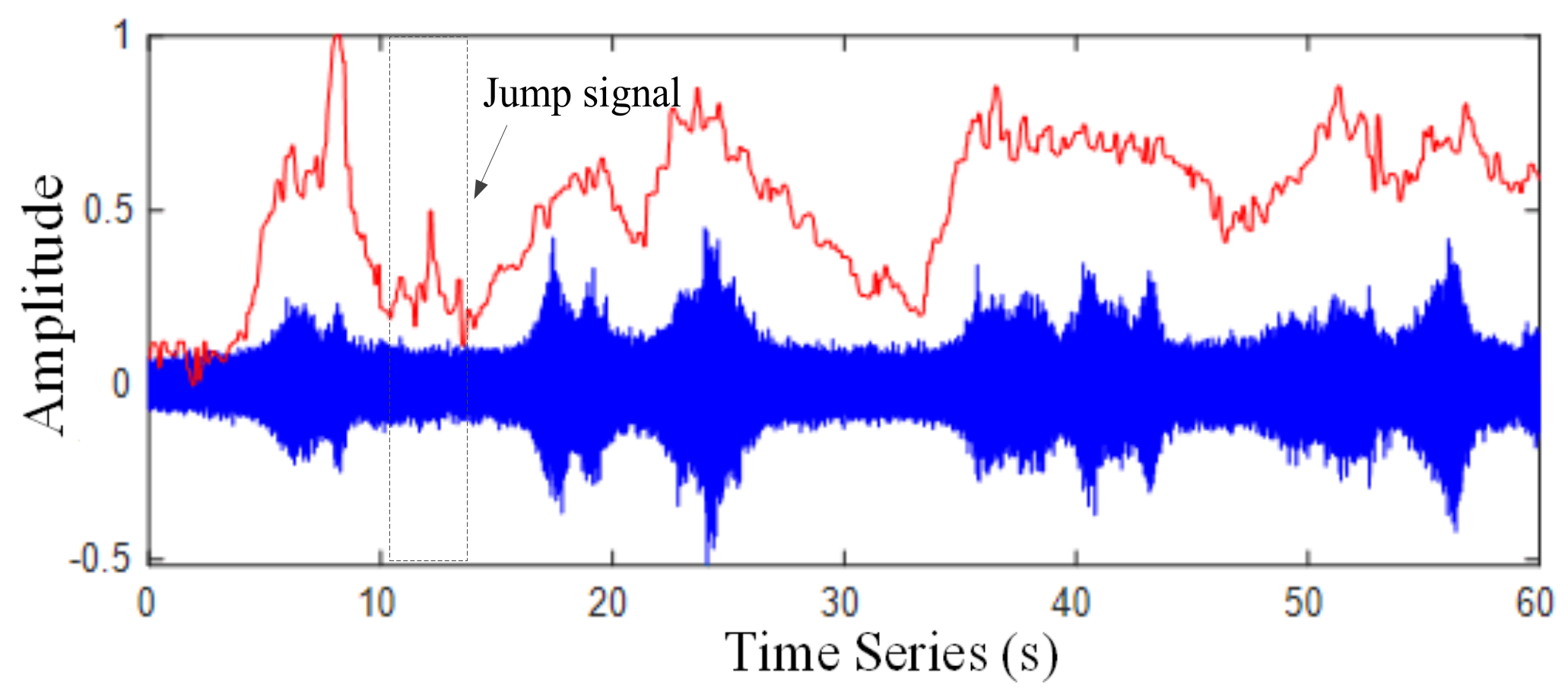

2.2.2. Extremum Extraction

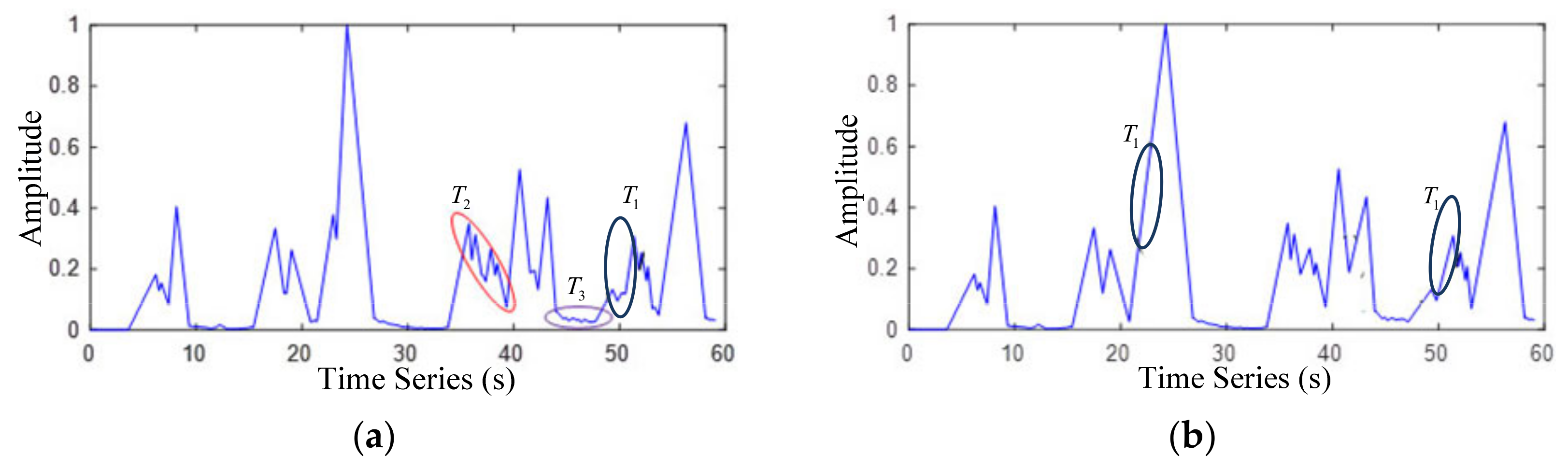

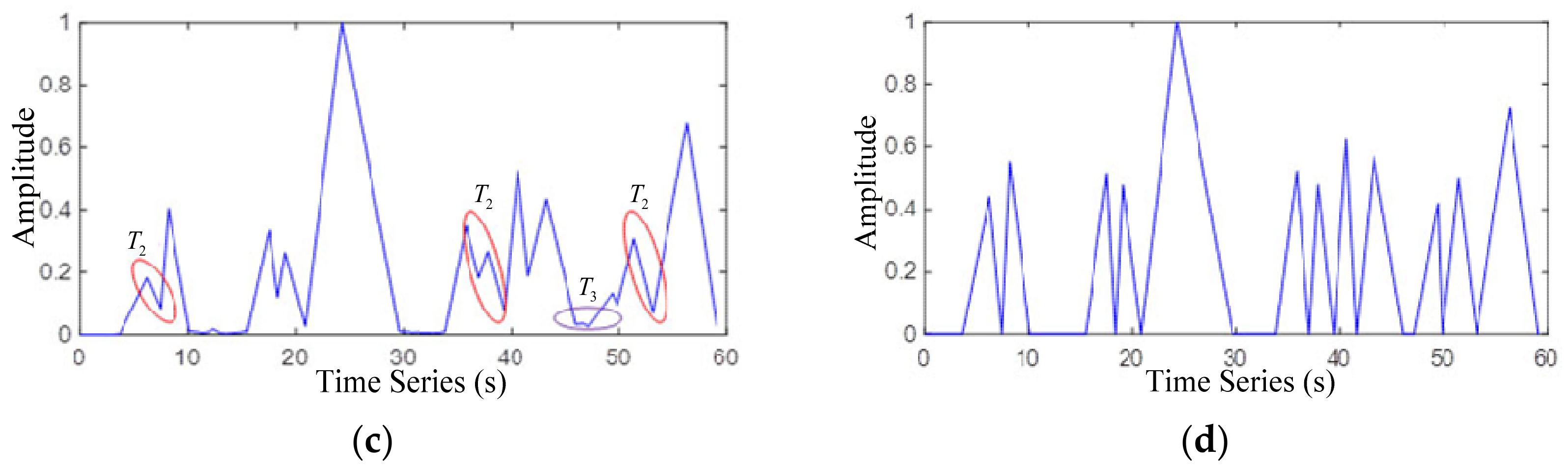

2.2.3. Formation and Combination of Triangular Waves

2.3. Experiment and Empirical Cases

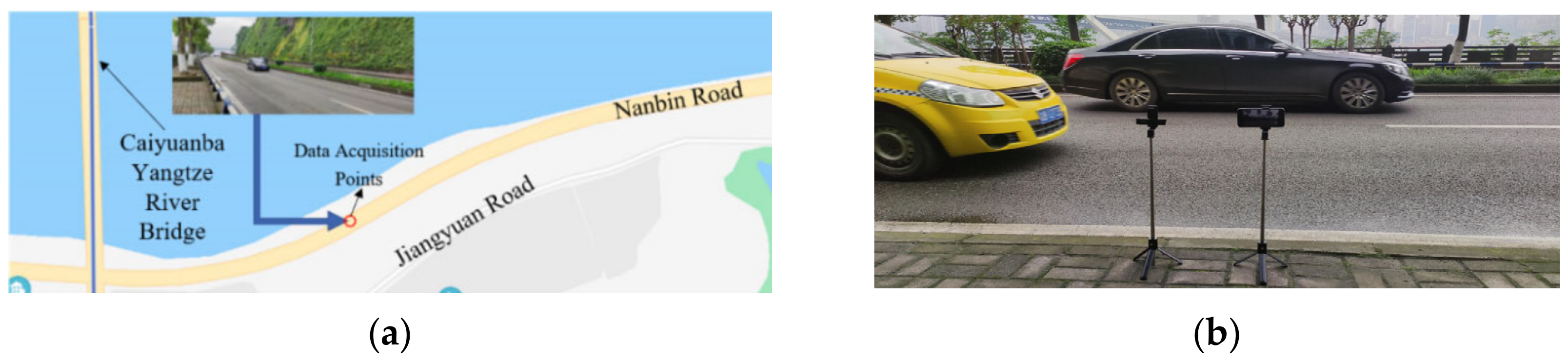

2.3.1. Experimental Data Acquisition

2.3.2. Evaluating Indicator

2.3.3. Noise Reduction Performance Comparison

2.3.4. Establishment of Simulation Platform

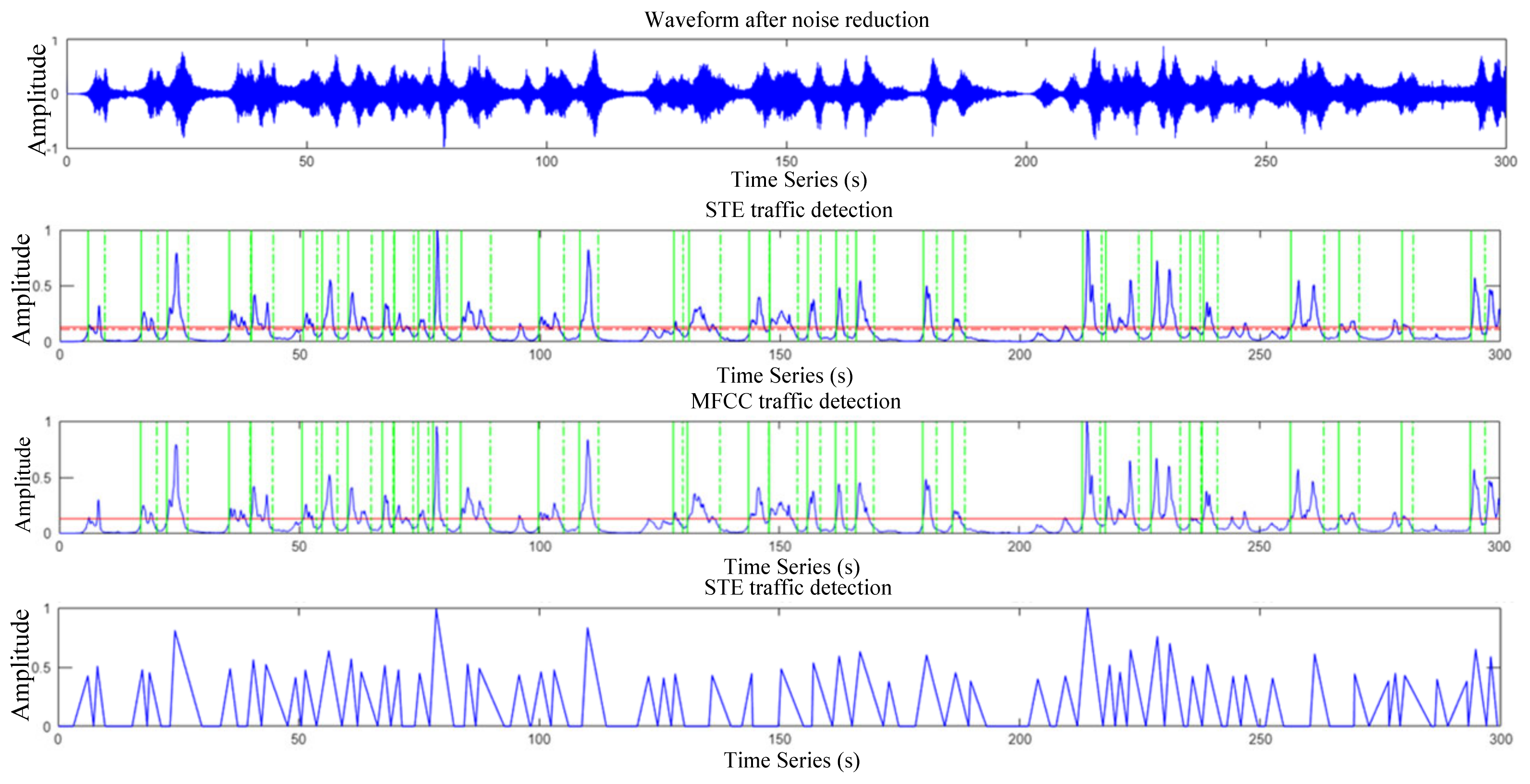

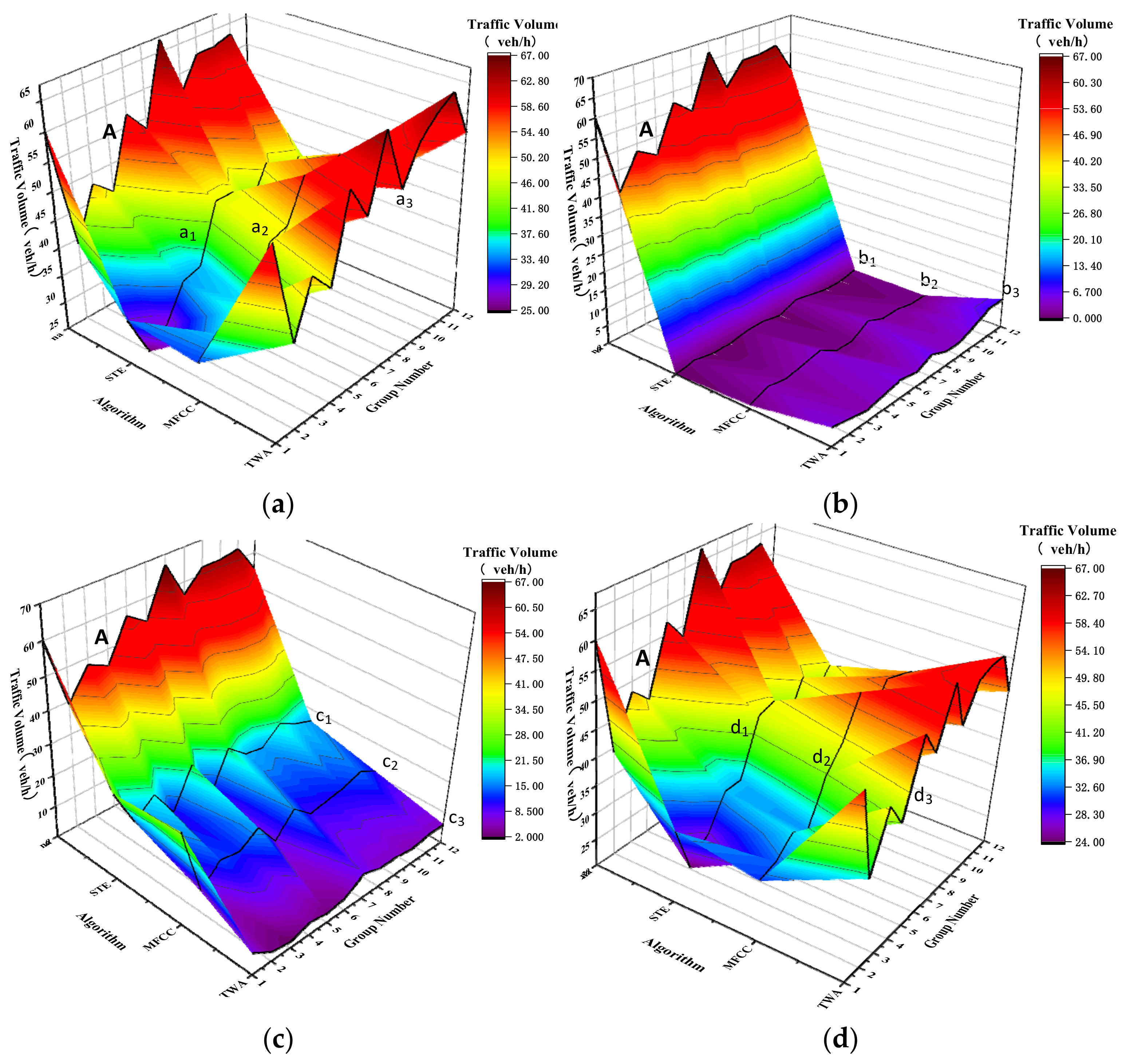

2.3.5. Test Results and Analysis

3. Conclusions and Future Research Directions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Group | Actual count | STE detected | STE false | STE missed | MFCC detected | MFCC false | MFCC missed | TWA detected | TWA false | TWA missed |
|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 60 | 33 | 0 | 27 | 32 | 1 | 29 | 58 | 5 | 7 |
| 2 | 39 | 25 | 1 | 15 | 35 | 3 | 7 | 40 | 4 | 3 |
| 3 | 48 | 28 | 0 | 20 | 37 | 2 | 13 | 49 | 3 | 2 |
| 4 | 45 | 34 | 0 | 11 | 36 | 3 | 12 | 45 | 4 | 4 |
| 5 | 57 | 35 | 1 | 23 | 45 | 4 | 16 | 59 | 5 | 3 |
| 6 | 53 | 45 | 1 | 9 | 46 | 1 | 8 | 53 | 4 | 4 |
| 7 | 67 | 45 | 0 | 22 | 53 | 1 | 15 | 65 | 6 | 8 |
| 8 | 56 | 41 | 2 | 17 | 50 | 4 | 10 | 54 | 4 | 6 |
| 9 | 62 | 47 | 1 | 16 | 53 | 2 | 11 | 60 | 4 | 6 |
| 10 | 62 | 41 | 0 | 21 | 52 | 2 | 12 | 63 | 6 | 5 |
| 11 | 63 | 45 | 0 | 18 | 50 | 1 | 14 | 65 | 8 | 6 |
| 12 | 55 | 40 | 1 | 16 | 45 | 1 | 11 | 57 | 8 | 6 |

| Methods | Missed Detection Rate | False Detection Rate | Accuracy |
|---|---|---|---|
| STE | 32.23% | 1.05% | 67.77% |
| MFCC | 23.69% | 3.75% | 76.01% |
| TWA | 9% | 9.15% | 91% |
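
The missed and false detection rates above can be checked against the per-group counts. The sketch below is a minimal check, not the authors' evaluation code. It assumes that each row of the 12-group table lists the actual vehicle count followed by (detected, false, missed) counts for STE, MFCC, and TWA in that order, and that each rate is the column total divided by the total actual count. Both are assumptions, since the original header row and the indicator definitions are not present here. The names `ROWS`, `METHODS`, and `detection_rates` are hypothetical. Under that reading, the totals reproduce the missed and false detection rates in the summary table.

```python
# Per-group counts transcribed from the 12-row results table.
# ASSUMED column order (the header row did not survive extraction):
# actual, then (detected, false, missed) for STE, MFCC and TWA.
ROWS = [
    (60, 33, 0, 27, 32, 1, 29, 58, 5, 7),
    (39, 25, 1, 15, 35, 3, 7, 40, 4, 3),
    (48, 28, 0, 20, 37, 2, 13, 49, 3, 2),
    (45, 34, 0, 11, 36, 3, 12, 45, 4, 4),
    (57, 35, 1, 23, 45, 4, 16, 59, 5, 3),
    (53, 45, 1, 9, 46, 1, 8, 53, 4, 4),
    (67, 45, 0, 22, 53, 1, 15, 65, 6, 8),
    (56, 41, 2, 17, 50, 4, 10, 54, 4, 6),
    (62, 47, 1, 16, 53, 2, 11, 60, 4, 6),
    (62, 41, 0, 21, 52, 2, 12, 63, 6, 5),
    (63, 45, 0, 18, 50, 1, 14, 65, 8, 6),
    (55, 40, 1, 16, 45, 1, 11, 57, 8, 6),
]
METHODS = ("STE", "MFCC", "TWA")


def detection_rates(rows):
    """Return {method: (missed %, false %)} pooled over all groups."""
    total_actual = sum(row[0] for row in rows)
    rates = {}
    for i, name in enumerate(METHODS):
        false_col = 2 + 3 * i   # ASSUMED position of the false-detection count
        missed_col = 3 + 3 * i  # ASSUMED position of the missed-detection count
        missed = sum(row[missed_col] for row in rows)
        false = sum(row[false_col] for row in rows)
        rates[name] = (
            round(100 * missed / total_actual, 2),
            round(100 * false / total_actual, 2),
        )
    return rates


RATES = detection_rates(ROWS)
for name, (missed_pct, false_pct) in RATES.items():
    print(f"{name}: missed {missed_pct:.2f}%, false {false_pct:.2f}%")
```

Pooling the counts over all groups, rather than averaging per-group percentages, is what matches the reported figures, so groups with more vehicles carry more weight in each rate.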


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Ma, Q.; Ma, L.; Liu, F.; Sun, D. A Novel Driving Noise Analysis Method for On-Road Traffic Detection. Sensors 2022, 22, 4230. https://doi.org/10.3390/s22114230
