Blind Source Separation Based on Double-Mutant Butterfly Optimization Algorithm

Abstract

1. Introduction

- (1)

- An ICA method based on DMBOA is designed to address the low-separation performance of conventional ICA. DMBOA is used to optimize the separation matrix W, maximize the kurtosis, and finally, complete the separation of observation signals.

- (2)

- Three improved strategies are designed for the insufficient search capability of the basic BOA, which coordinate the global search and local search of the algorithm while improving BOA searching ability.

- (3)

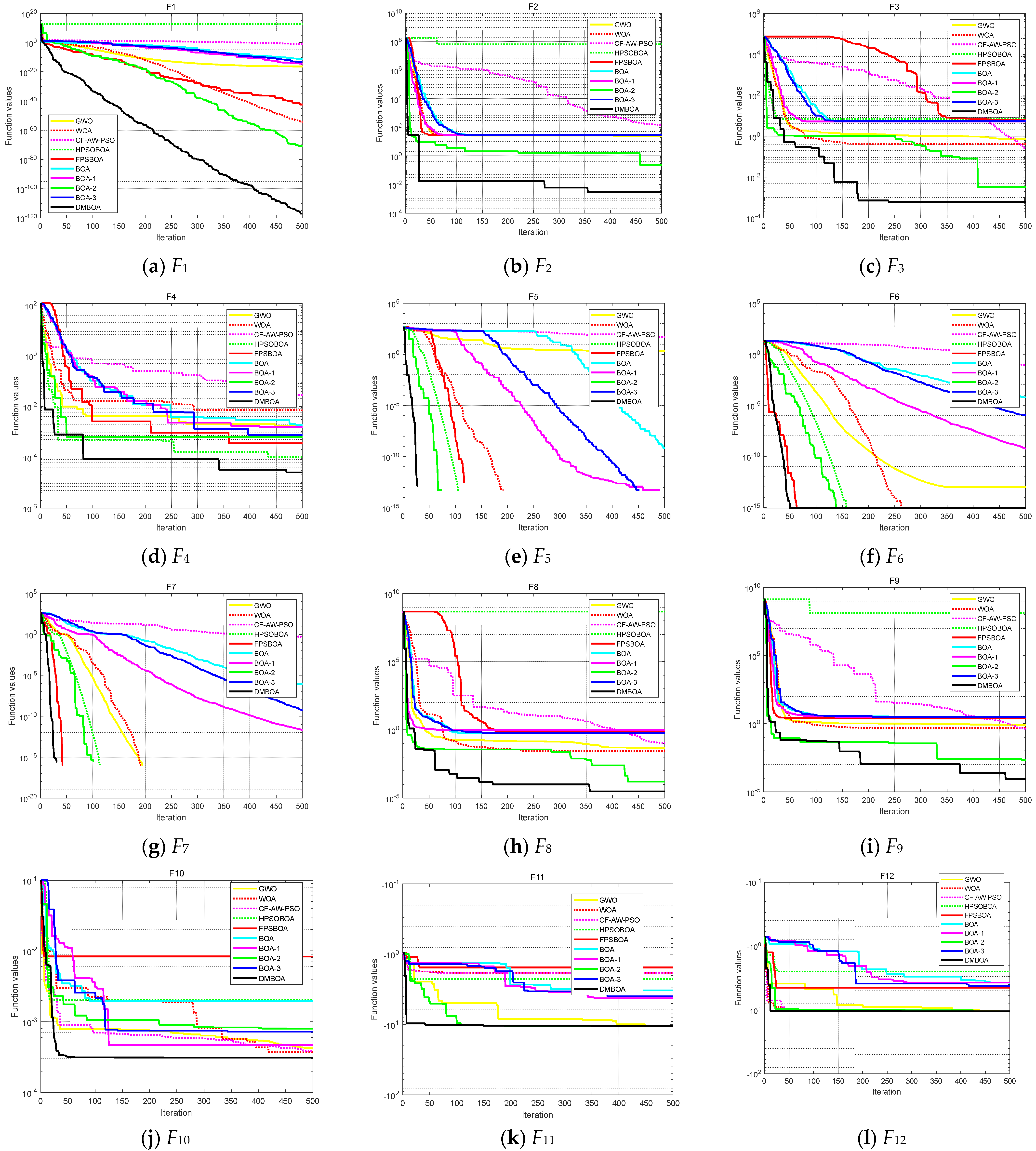

- Simulation results show that DMBOA outperforms the other nine algorithms when optimizing 12 benchmark functions. In the BSS problem, DMBOA is capable of successfully separating mixed signals and achieving higher separation performance than the compared algorithms.

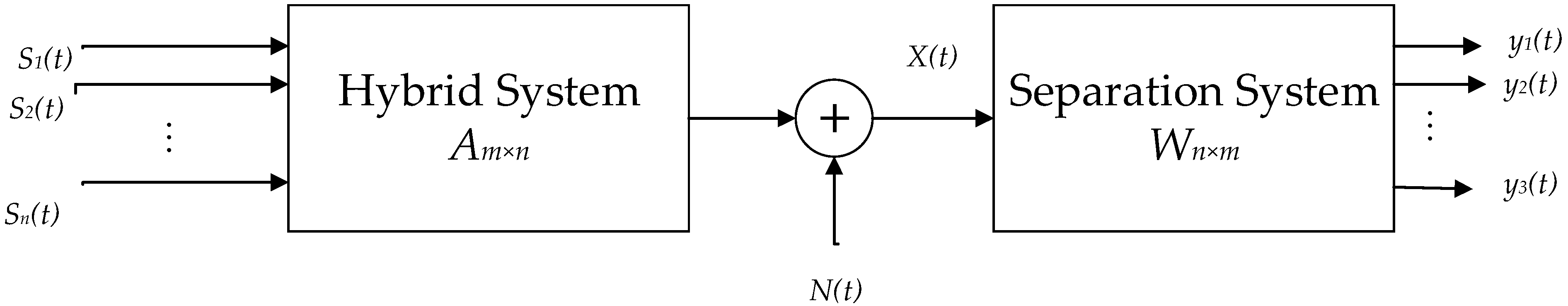

2. Basic Theory of Blind Source Separation

2.1. Linear Mixed Blind Source Separation Model

- (1) The mixing matrix A should be invertible (or, if it is not square, of full column rank), and the number of observed signals should be greater than or equal to the number of source signals.

- (2) From a statistical standpoint, the source signals are mutually independent, and at most one of them follows a Gaussian distribution, because a linear mixture of Gaussian signals is still Gaussian and therefore cannot be separated.
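The two conditions above can be illustrated with a small numerical sketch of the linear instantaneous model X = AS. The sources, the mixing matrix `A`, and the helper `mix_sources` are hypothetical choices made for illustration. The check only shows that an invertible `A` makes the mixture recoverable in principle; a BSS method has to achieve this without knowing `A`.

```python
import numpy as np

def mix_sources(S, A):
    """Linear instantaneous mixing X = A @ S.

    S: (n, T) array, one source signal per row.
    A: (m, n) mixing matrix; recovery requires m >= n and rank(A) == n.
    """
    m, n = A.shape
    if S.shape[0] != n:
        raise ValueError("A needs one column per source")
    if m < n or np.linalg.matrix_rank(A) < n:
        raise ValueError("need m >= n and a full-column-rank mixing matrix")
    return A @ S

# Two non-Gaussian sources sampled at 1000 points: a sinusoid and a square wave.
t = np.linspace(0.0, 1.0, 1000, endpoint=False)
S = np.vstack([np.sin(2 * np.pi * 2 * t), np.sign(np.sin(2 * np.pi * 3 * t))])

A = np.array([[1.0, 0.6],
              [0.4, 1.0]])        # hypothetical invertible mixing matrix
X = mix_sources(S, A)

# If A were known, W = A^{-1} would undo the mixing exactly;
# BSS has to estimate such a W from X alone.
S_hat = np.linalg.inv(A) @ X
print(np.allclose(S_hat, S))      # True
```

Passing a rank-deficient matrix such as `[[1, 2], [2, 4]]`, or a single-row matrix for two sources, raises `ValueError`, mirroring condition (1).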

2.2. Signal Preprocessing
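The preprocessing steps are not spelled out in this extract. A common choice in kurtosis-based ICA is centering followed by whitening through an eigendecomposition of the covariance matrix. The sketch below assumes that standard approach; `center_and_whiten` is an illustrative name, not the paper's code.

```python
import numpy as np

def center_and_whiten(X):
    """Center each observation and whiten via eigendecomposition of its covariance.

    X: (m, T) observed signals. Returns (Z, V) with Z = V @ (X - mean),
    where the sample covariance of Z is the identity matrix.
    """
    Xc = X - X.mean(axis=1, keepdims=True)          # remove the mean of each row
    C = Xc @ Xc.T / Xc.shape[1]                     # sample covariance matrix
    eigvals, E = np.linalg.eigh(C)                  # C = E diag(eigvals) E^T
    V = np.diag(1.0 / np.sqrt(eigvals)) @ E.T       # whitening matrix D^{-1/2} E^T
    return V @ Xc, V

# Usage on a hypothetical two-channel mixture with a DC offset.
t = np.linspace(0.0, 1.0, 1000, endpoint=False)
S = np.vstack([np.sin(2 * np.pi * 2 * t), np.sign(np.sin(2 * np.pi * 3 * t))])
X = np.array([[1.0, 0.6], [0.4, 1.0]]) @ S + 0.5
Z, V = center_and_whiten(X)
print(np.round(Z @ Z.T / Z.shape[1], 6))            # approximately the 2x2 identity
```

After whitening, any remaining separation matrix can be restricted to an orthogonal one, which reduces the number of parameters a search algorithm has to optimize.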

2.3. Separation Principle
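The contributions above state that the separation matrix W is chosen to maximize kurtosis. The sketch below assumes a common setup for two whitened mixtures, in which W can be restricted to a rotation by an angle θ and the objective is the sum of the absolute excess kurtosis of the outputs. A plain grid search over θ stands in for DMBOA, so the example illustrates the objective, not the paper's optimizer; all names and signals are hypothetical.

```python
import numpy as np

def excess_kurtosis(y):
    """kurt(y) = E[y^4] - 3 (E[y^2])^2 for a zero-mean signal y."""
    return np.mean(y ** 4) - 3.0 * np.mean(y ** 2) ** 2

def rotation(theta):
    """2x2 orthogonal separation matrix parameterized by one angle."""
    c, s = np.cos(theta), np.sin(theta)
    return np.array([[c, -s], [s, c]])

def objective(theta, Z):
    """Sum of |kurtosis| of the outputs Y = W(theta) @ Z (to be maximized)."""
    Y = rotation(theta) @ Z
    return sum(abs(excess_kurtosis(y)) for y in Y)

def whiten(X):
    """Center and whiten the observations (identity sample covariance)."""
    Xc = X - X.mean(axis=1, keepdims=True)
    d, E = np.linalg.eigh(Xc @ Xc.T / Xc.shape[1])
    return np.diag(d ** -0.5) @ E.T @ Xc

t = np.linspace(0.0, 1.0, 2000, endpoint=False)
S = np.vstack([np.sin(2 * np.pi * 2 * t), np.sign(np.sin(2 * np.pi * 3 * t))])
A = np.array([[1.0, 0.6], [0.4, 1.0]])             # hypothetical mixing matrix
Z = whiten(A @ S)

# Grid search over theta stands in for the metaheuristic optimizer.
thetas = np.linspace(0.0, np.pi / 2, 1801)
best_theta = max(thetas, key=lambda th: objective(th, Z))
Y = rotation(best_theta) @ Z

# |correlation| between each source (rows) and each output (columns);
# a good separation gives one entry near 1 per row, in different columns.
corr = np.abs(np.corrcoef(np.vstack([S, Y]))[:2, 2:])
print(np.round(corr, 3))
```

The recovered signals match the sources only up to order, sign and scale, which is the usual ambiguity of BSS.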

3. Butterfly Optimization Algorithm (BOA)

| Algorithm 1: BOA |
|---|
| Input: Objective function f(x), butterfly population size N, stimulus intensity I, sensory modality c, power exponent a, conversion probability p, maximum number of iterations T. |
| 1. Initialize population |
| 2. while t < T |
| 3. for i = 1: N |
| 4. Calculate fragrance using Equation (8) |
| 5. Generate a random number rand in [0, 1] |
| 6. if rand < p |
| 7. Update position using Equation (10) |
| 8. else |
| 9. Update position using Equation (11) |
| 10. end if |
| 11. if |
| 12. , |
| 13. end if |
| 14. Update the value of c using Equation (9) |
| 15. end for |
| 16. end while |
| 17. Output the global optimal solution |
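The loop in Algorithm 1 can be sketched in Python as follows. Equations (8) to (11) are not reproduced in this extract, so the sketch assumes the forms of the original BOA [18] and its common implementations: fragrance f_i = c·I_i^a with the stimulus intensity I_i taken as the objective value, the global step x_i + (r²g* − x_i)f_i, the local step x_i + (r²x_j − x_k)f_i, and the sensory-modality update c ← c + 0.025/(c·T), applied here once per iteration. The function name `boa`, its default parameters, the use of |I_i|, and the clipping to the search bounds are illustrative assumptions.

```python
import numpy as np

def boa(f, dim, lb, ub, n=20, iters=300, c=0.01, a=0.1, p=0.8, seed=0):
    """Minimal Butterfly Optimization Algorithm sketch (minimization)."""
    rng = np.random.default_rng(seed)
    X = rng.uniform(lb, ub, size=(n, dim))          # initial population
    fit = np.array([f(x) for x in X])
    g, g_fit = X[fit.argmin()].copy(), fit.min()    # global best g*
    for _ in range(iters):
        frag = c * np.abs(fit) ** a                 # fragrance f_i = c * I_i^a (assumed Eq. 8)
        for i in range(n):
            r = rng.random()
            if rng.random() < p:                    # global search toward g*
                step = (r ** 2 * g - X[i]) * frag[i]
            else:                                   # local search between two random butterflies
                j, k = rng.integers(0, n, size=2)
                step = (r ** 2 * X[j] - X[k]) * frag[i]
            cand = np.clip(X[i] + step, lb, ub)
            cand_fit = f(cand)
            if cand_fit < fit[i]:                   # keep the move only if it improves
                X[i], fit[i] = cand, cand_fit
                if cand_fit < g_fit:                # update the global best
                    g, g_fit = cand.copy(), cand_fit
        c = c + 0.025 / (c * iters)                 # sensory modality update (assumed Eq. 9)
    return g, g_fit

sphere = lambda x: float(np.sum(x ** 2))
best_x, best_f = boa(sphere, dim=10, lb=-10.0, ub=10.0)
print(best_f)
```

The greedy acceptance keeps each butterfly's fitness non-increasing, while the switch probability p controls how often the global step is taken instead of the local one.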

4. Double-Mutant Butterfly Optimization Algorithm (DMBOA)

4.1. Dynamic Transition Probability

4.2. Improvement in Update Function

4.3. Population Reconstruction Mechanism

| Algorithm 2: DMBOA |
|---|
| Input: Objective function f(x), butterfly population size N, stimulus intensity I, sensory modality c, power exponent a, maximum number of iterations T, counter. |
| 1. Initialize population |
| 2. while t < T |
| 3. for i = 1: N |
| 4. Calculate fragrance using Equation (8) |
| 5. Calculate conversion probability p using Equation (8) |
| 6. Generate a random number rand in [0, 1] |
| 7. if rand < p |
| 8. Update position using Equation (13) |
| 9. else |
| 10. Update position using Equation (14) |
| 11. end if |
| 12. if |
| 13. , , |
| 14. else |
| 15. |
| 16. end if |
| 17. if |
| 18. Execute population reconstruction strategy |
| 19. end if |
| 20. Update the value of c using Equation (9) |
| 21. end for |
| 22. end while |
| 23. Output the global optimal solution |
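Steps 12 to 19 of Algorithm 2 combine a greedy update with a counter that triggers population reconstruction. The exact conditions are not recoverable from this extract, so the sketch below only illustrates one plausible reading of that control flow: a per-butterfly stagnation counter that resets on improvement, and a hypothetical reconstruction step that re-samples butterflies whose counter exceeds a threshold `limit`. The names `greedy_update` and `reconstruct`, the threshold, and uniform re-sampling are all assumptions, not the paper's strategy. The global best should be stored separately (as `g` in a BOA loop) so that re-sampling cannot lose it.

```python
import numpy as np

def greedy_update(X, fit, i, cand, cand_fit, counter):
    """Accept an improving move and reset the counter; otherwise count a stagnant step."""
    if cand_fit < fit[i]:
        X[i], fit[i] = cand, cand_fit
        counter[i] = 0
    else:
        counter[i] += 1

def reconstruct(X, fit, f, counter, limit, lb, ub, rng):
    """Hypothetical reconstruction: re-sample butterflies stagnant for more than `limit` steps."""
    stale = np.flatnonzero(counter > limit)
    for i in stale:
        X[i] = rng.uniform(lb, ub, size=X.shape[1])  # fresh random position
        fit[i] = f(X[i])
        counter[i] = 0
    return stale

# Usage with a toy population of three butterflies on the sphere function.
rng = np.random.default_rng(1)
f = lambda x: float(np.sum(x ** 2))
X = np.array([[1.0, 1.0], [2.0, 2.0], [3.0, 3.0]])
fit = np.array([f(x) for x in X])
counter = np.array([0, 5, 7])

greedy_update(X, fit, 0, np.array([0.5, 0.5]), 0.5, counter)   # improvement: counter[0] stays 0
greedy_update(X, fit, 1, np.array([4.0, 4.0]), 32.0, counter)  # no improvement: counter[1] becomes 6
print(reconstruct(X, fit, f, counter, limit=5, lb=-5.0, ub=5.0, rng=rng))  # [1 2]
print(counter)  # [0 0 0]
```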

5. Simulation and Result Analysis

5.1. Evaluation of DMBOA on Benchmark Functions

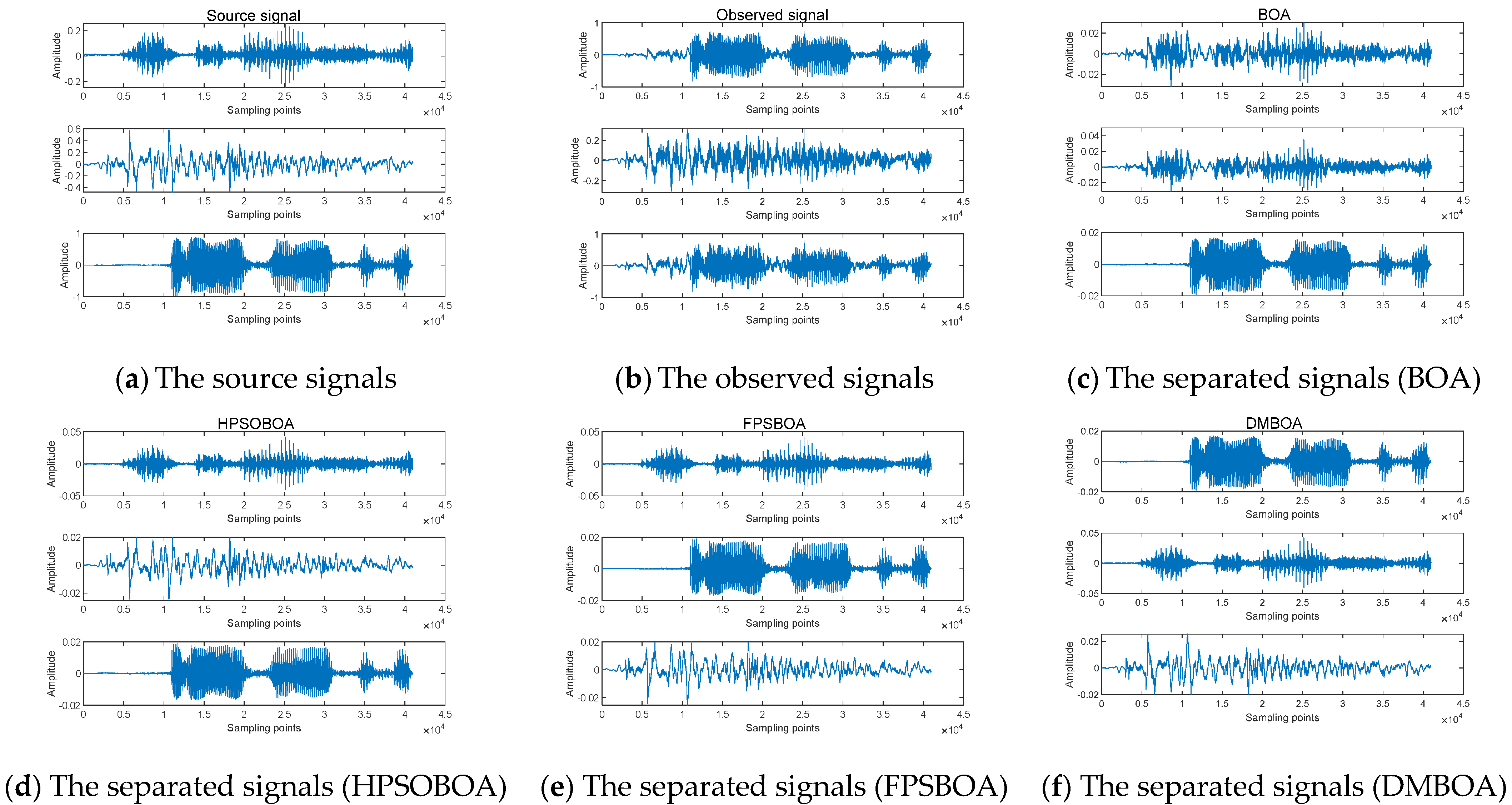

5.2. Speech Signal Separation

5.3. Image Signal Separation

6. Conclusions

- (1) When optimizing 12 benchmark functions (four unimodal and eight multimodal), DMBOA outperforms the other nine algorithms. In the ablation experiment, each of the three improvement strategies proposed in this study improved the performance of BOA to a varying degree. Together, these results indicate that DMBOA has strong search performance and robustness.

- (2) In the BSS experiments, DMBOA outperforms the compared algorithms and successfully separates the mixed speech and image signals.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Gao, J.; Zhu, X.; Nandi, A. Independent Component Analysis for Multiple-Input Multiple-Output Wireless Communication Systems. Signal Processing 2011, 91, 607–623.

2. Cheng, Y.; Zhu, D.; Zhang, J. High Precision Sparse Reconstruction Scheme for Multiple Radar Mainlobe Jammings. Electronics 2020, 9, 1224.

3. Zi, J.; Lv, D.; Liu, J.; Huang, X.; Yao, W.; Gao, M.; Xi, R.; Zhang, Y. Improved Swarm Intelligent Blind Source Separation Based on Signal Cross-Correlation. Sensors 2022, 22, 118.

4. Ali, K.; Nourredine, A.; Elhadi, K. Blind Image Separation Using the JADE Method. Eng. Proc. 2022, 14, 20.

5. Taha, L.; Abdel-Raheem, E. A Null Space-Based Blind Source Separation for Fetal Electrocardiogram Signals. Sensors 2020, 20, 3536.

6. Xu, H.; Ebrahim, M.P.; Hasan, K.; Heydari, F.; Howley, P.; Yuce, M.R. Accurate Heart Rate and Respiration Rate Detection Based on a Higher-Order Harmonics Peak Selection Method Using Radar Non-Contact Sensors. Sensors 2022, 22, 83.

7. Guo, S.; Shi, M.; Zhou, Y.; Yu, J.; Wang, E. An Efficient Convolutional Blind Source Separation Algorithm for Speech Signals under Chaotic Masking. Algorithms 2021, 14, 165.

8. Ding, H.; Wang, Y.; Yang, Z.; Pfeiffer, O. Nonlinear Blind Source Separation and Fault Feature Extraction Method for Mining Machine Diagnosis. Appl. Sci. 2019, 9, 1852.

9. Comon, P. Independent Component Analysis, A New Concept? Signal Processing 1994, 36, 287–314.

10. Amari, S. Natural Gradient Works Efficiently in Learning. Neural Comput. 1998, 10, 251–276.

11. Barros, A.; Cichocki, A. A Fixed-Point Algorithm for Independent Component Analysis which Uses A Priori Information. In Proceedings of the 5th Brazilian Symposium on Neural Networks, Belo Horizonte, Brazil, 9–11 December 1998.

12. Lee, S.; Yang, C. GPSO-ICA: Independent Component Analysis Based on Gravitational Particle Swarm Optimization for Blind Source Separation. J. Intell. Fuzzy Syst. 2018, 35, 1943–1957.

13. Li, C.; Jiang, Y.; Liu, F.; Xiang, Y. Blind Source Separation Algorithm Based on Improved Particle Swarm Optimization under Noisy Condition. In Proceedings of the 2018 2nd IEEE Advanced Information Management, Communicates, Electronic and Automation Control Conference, Xi’an, China, 25–27 May 2018.

14. Wang, R. Blind Source Separation Based on Adaptive Artificial Bee Colony Optimization and Kurtosis. Circuits Syst. Signal Processing 2021, 40, 3338–3354.

15. Luo, W.; Jin, H.; Li, H.; Fang, X.; Zhou, R. Optimal Performance and Application for Firework Algorithm Using a Novel Chaotic Approach. IEEE Access 2020, 8, 120798–120817.

16. Luo, W.; Jin, H.; Li, H.; Duan, K. Radar Main-Lobe Jamming Suppression Based on Adaptive Opposite Fireworks Algorithm. IEEE Open J. Antennas Propag. 2021, 2, 138–150.

17. Wen, G.; Zhang, C.; Lin, Z.; Shang, Z.; Wang, H.; Zhang, Q. Independent Component Analysis Based on Genetic Algorithms. In Proceedings of the 2014 10th International Conference on Natural Computation, Xiamen, China, 19–21 August 2014.

18. Arora, S.; Singh, S. Butterfly Optimization Algorithm: A Novel Approach for Global Optimization. Soft Comput. 2019, 23, 715–734.

19. Long, W.; Wu, T.; Xu, M.; Tang, M.; Cai, S. Parameters Identification of Photovoltaic Models by Using an Enhanced Adaptive Butterfly Optimization Algorithm. Energy 2021, 103, 120750.

20. Arora, S.; Singh, S. An Effective Hybrid Butterfly Optimization Algorithm with Artificial Bee Colony for Numerical Optimization. Int. J. Interact. Multimed. Artif. Intell. 2017, 4, 14–21.

21. Long, W.; Jiao, J.; Liang, X.; Wu, T.; Xu, M.; Cai, S. Pinhole-Imaging-Based Learning Butterfly Optimization Algorithm for Global Optimization and Feature Selection. Appl. Soft Comput. 2021, 103, 107146.

22. Fan, Y.; Shao, J.; Sun, G.; Shao, X. A Self-Adaption Butterfly Optimization Algorithm for Numerical Optimization Problems. IEEE Access 2020, 8, 88026–88041.

23. Mortazavi, A.; Moloodpoor, M. Enhanced Butterfly Optimization Algorithm with A New Fuzzy Regulator Strategy and Virtual Butterfly Concept. Knowl.-Based Syst. 2021, 228, 107291.

24. Zhang, B.; Yang, X.; Hu, B.; Liu, Z.; Li, Z. OEbBOA: A Novel Improved Binary Butterfly Optimization Approaches With Various Strategies for Feature Selection. IEEE Access 2020, 8, 67799–67812.

25. Li, G.; Chang, W.; Yang, H. A Novel Combined Prediction Model for Monthly Mean Precipitation With Error Correction Strategy. IEEE Access 2020, 8, 141432–141445.

26. Watkins, D. Fundamentals of Matrix Computations, 2nd ed.; Wiley: New York, NY, USA, 2002; pp. 192–194.

27. Storn, R.; Price, K. Differential Evolution–A Simple and Efficient Heuristic for Global Optimization over Continuous Spaces. J. Glob. Optim. 1997, 11, 341–359.

28. Price, K. Differential Evolution: A Fast and Simple Numerical Optimizer. In Proceedings of the North American Fuzzy Information Processing, Berkeley, CA, USA, 19–22 June 1996; pp. 524–527.

29. Mirjalili, S. SCA: A Sine Cosine Algorithm for Solving Optimization Problems. Knowl.-Based Syst. 2016, 96, 120–133.

30. Li, Y.; Ni, Z.; Jin, Z.; Li, J.; Li, F. Research on Clustering Method of Improved Glowworm Algorithm Based on Good-Point Set. Math. Probl. Eng. 2018, 2018, 8274084.

31. Mirjalili, S.; Mirjalili, S.M.; Lewis, A. Grey Wolf Optimizer. Adv. Eng. Softw. 2014, 69, 46–61.

32. Mirjalili, S.; Lewis, A. The Whale Optimization Algorithm. Adv. Eng. Softw. 2016, 95, 51–67.

33. You, Z.; Chen, W.; He, G.; Nan, X. Adaptive Weight Particle Swarm Optimization Algorithm with Constriction Factor. In Proceedings of the 2010 International Conference of Information Science and Management Engineering, Xi’an, China, 7–8 August 2010.

34. Zhang, M.; Long, D.; Qin, T.; Yang, J. A Chaotic Hybrid Butterfly Optimization Algorithm with Particle Swarm Optimization for High-Dimensional Optimization Problems. Symmetry 2020, 12, 1800.

35. Li, Y.; Yu, X.; Liu, J. Enhanced Butterfly Optimization Algorithm for Large-Scale Optimization Problems. J. Bionic Eng. 2022, 19, 554–570.

36. Ali, M.N.; Falavigna, D.; Brutti, A. Time-Domain Joint Training Strategies of Speech Enhancement and Intent Classification Neural Models. Sensors 2022, 22, 374.

37. Fu, S.; Liao, C.; Tsao, Y. Learning with Learned Loss Function: Speech Enhancement with Quality-Net to Improve Perceptual Evaluation of Speech Quality. IEEE Signal Process. Lett. 2019, 27, 26–30.

38. Mahdaoui, A.E.; Ouahabi, A.; Moulay, M.S. Image Denoising Using a Compressive Sensing Approach Based on Regularization Constraints. Sensors 2022, 22, 2199.

| Algorithm Type | Name | Method | Conclusion | Reference |
|---|---|---|---|---|
| Conventional ICA | NGA | Based on gradient information | The separation performance of conventional algorithms is low and needs to be improved. | Amari [10] |
| | FastICA | Based on fixed-point iteration | | Barros et al. [11] |
| Intelligent optimization ICA | PSO-ICA | Introduces PSO into ICA | Introducing swarm intelligence algorithms into ICA improves separation performance compared with conventional ICA, but these swarm intelligence algorithms still have shortcomings. | Li et al. [13] |
| | ABC-ICA | Introduces ABC into ICA | | Wang [14] |
| | FA-ICA | Introduces FA into ICA | | Luo et al. [15,16] |
| | GA-ICA | Introduces GA into ICA | | Wen et al. [17] |
| Improved algorithms of BOA | BOA/ABC | Combines BOA and ABC | Most improved algorithms enhance only one aspect of BOA's search performance and ignore the balance between global and local search ability. | Arora et al. [20] |
| | PIL-BOA | Provides a pinhole-imaging-based learning strategy derived from optical principles | | Long et al. [21] |
| | SABOA | Introduces a new fragrance coefficient and a different iteration strategy | | Fan et al. [22] |
| | FBOA | Proposes a novel fuzzy decision strategy and introduces the notion of a “virtual butterfly” | | Mortazavi et al. [23] |
| | OEbBOA | Proposes a heuristic initialization strategy combined with a greedy strategy | | Zhang et al. [24] |
| | IBOA | Introduces a weight factor and Cauchy mutation | | Li et al. [25] |

| Function | Dim | Scope | f_min |
|---|---|---|---|
| F1 | 30 | [−10, 10] | 0 |
| F2 | 30 | [−30, 30] | 0 |
| F3 | 30 | [−100, 100] | 0 |
| F4 | 30 | [−1.28, 1.28] | 0 |
| F5 | 30 | [−5.12, 5.12] | 0 |
| F6 | 30 | [−32, 32] | 0 |
| F7 | 30 | [−600, 600] | 0 |
| F8 | 30 | [−50, 50] | 0 |
| F9 | 30 | [−50, 50] | 0 |
| F10 | 4 | [−5, 5] | 0.00030 |
| F11 | 4 | [0, 10] | −10.4028 |
| F12 | 4 | [0, 10] | −10.5363 |

| Function | Index | DMBOA | BOA | BOA_1 | BOA_2 | BOA_3 | HPSOBOA | FPSBOA | GWO | WOA | CF_AW_PSO |
|---|---|---|---|---|---|---|---|---|---|---|---|
| F1 | BEST | 1.28 × 10⁻¹¹⁹ | 2.75 × 10⁻¹¹ | 2.42 × 10⁻¹⁴ | 1.13 × 10⁻⁶² | 3.01 × 10⁻¹³ | 3.19 × 10¹¹ | 9.73 × 10⁻⁵¹ | 1.20 × 10⁻¹⁶ | 9.40 × 10⁻⁵³ | 0.12256 |
| | MEAN | 2.04 × 10⁵ | 9.01 × 10⁷ | 3.51 × 10⁷ | 8.52 × 10⁵ | 4.71 × 10⁷ | 4.58 × 10¹³ | 2.43 × 10⁸ | 7.29 × 10⁸ | 8.05 × 10⁹ | 1.40 × 10¹² |
| | STD | 2.47 × 10⁵ | 2.01 × 10⁹ | 7.47 × 10⁷ | 7.85 × 10⁷ | 7.85 × 10⁸ | 1.61 × 10¹⁴ | 1.68 × 10⁹ | 1.63 × 10⁹ | 8.84 × 10⁹ | 2.04 × 10¹² |
| | TIME | 0.1610 | 0.1527 | 0.1543 | 0.1771 | 0.1732 | 0.1757 | 0.1375 | 0.1927 | 0.0901 | 1.0270 |
| F2 | BEST | 1.06 × 10⁻² | 28.9471 | 28.8818 | 0.1786 | 28.0715 | 2.41 × 10⁸ | 2.89 × 10¹ | 26.8769 | 27.6766 | 2.03 × 10² |
| | MEAN | 1.24 × 10⁵ | 2.91 × 10⁶ | 2.51 × 10⁶ | 1.39 × 10⁶ | 2.47 × 10⁶ | 2.44 × 10⁸ | 1.32 × 10⁶ | 1.89 × 10⁶ | 1.97 × 10⁶ | 1.62 × 10⁶ |
| | STD | 1.63 × 10⁶ | 2.67 × 10⁷ | 1.68 × 10⁷ | 1.68 × 10⁷ | 2.04 × 10⁷ | 9.84 × 10⁶ | 1.58 × 10⁷ | 1.80 × 10⁷ | 1.93 × 10⁷ | 1.32 × 10⁷ |
| | TIME | 0.1941 | 0.2077 | 0.1844 | 0.1844 | 0.2290 | 0.1947 | 0.1884 | 0.2050 | 0.0794 | 0.9862 |
| F3 | BEST | 1.28 × 10⁻³ | 5.1259 | 4.738 | 0.0098 | 4.9992 | 6.3584 | 4.8811 | 0.6259 | 0.4128 | 0.3316 |
| | MEAN | 2.87 × 10² | 2.32 × 10³ | 2.01 × 10³ | 3.30 × 10² | 2.04 × 10³ | 2.66 × 10² | 2.91 × 10³ | 6.50 × 10² | 6.24 × 10² | 1.94 × 10³ |
| | STD | 4.02 × 10³ | 8.84 × 10³ | 7.43 × 10³ | 4.48 × 10³ | 9.00 × 10³ | 3.46 × 10³ | 7.78 × 10³ | 4.68 × 10³ | 5.10 × 10³ | 4.42 × 10³ |
| | TIME | 0.1356 | 0.1241 | 0.1316 | 0.1478 | 0.1524 | 0.1369 | 0.1279 | 0.1774 | 0.0631 | 0.9525 |
| F4 | BEST | 7.93 × 10⁻⁵ | 0.0020 | 8.33 × 10⁻⁴ | 6.09 × 10⁻⁴ | 1.30 × 10⁻³ | 1.09 × 10⁻⁴ | 5.31 × 10⁻⁴ | 1.44 × 10⁻³ | 0.0049 | 0.0485 |
| | MEAN | 0.4327 | 3.4488 | 2.3577 | 1.2125 | 1.4712 | 0.5487 | 3.6951 | 0.7935 | 1.0180 | 0.9897 |
| | STD | 5.1646 | 14.7580 | 11.7394 | 10.0441 | 14.9266 | 5.9768 | 15.277 | 7.2482 | 8.1546 | 7.1023 |
| | TIME | 0.3257 | 0.3312 | 0.3017 | 0.3247 | 0.3436 | 0.3058 | 0.3163 | 0.2940 | 0.1530 | 1.0780 |
| F5 | BEST | 0 | 2.85 × 10⁻¹⁰ | 0 | 0 | 0 | 0 | 0 | 0.7624 | 0 | 47.5728 |
| | MEAN | 2.3163 | 1.04 × 10² | 33.2498 | 4.5992 | 90.2747 | 10.9330 | 1.87 × 10² | 26.5955 | 27.2819 | 1.59 × 10² |
| | STD | 27.8726 | 1.20 × 10² | 88.8153 | 38.8205 | 1.21 × 10² | 52.0126 | 8.82 × 10¹ | 67.5291 | 72.5040 | 75.2091 |
| | TIME | 0.1925 | 0.1992 | 0.1797 | 0.1795 | 0.2186 | 0.1676 | 0.1645 | 0.1920 | 0.0734 | 0.9903 |
| F6 | BEST | 8.88 × 10⁻¹⁶ | 4.74 × 10⁻⁵ | 3.24 × 10⁻⁷ | 8.88 × 10⁻¹⁶ | 1.21 × 10⁻⁶ | 8.88 × 10⁻¹⁶ | 8.88 × 10⁻¹⁶ | 1.22 × 10⁻¹³ | 6.57 × 10⁻¹⁵ | 0.8873 |
| | MEAN | 0.1272 | 3.4204 | 2.2722 | 0.2123 | 3.4058 | 0.6122 | 0.1782 | 0.7996 | 0.6367 | 7.2342 |
| | STD | 1.4421 | 6.1540 | 5.1366 | 1.6815 | 6.2388 | 2.8252 | 2.1388 | 3.1655 | 2.7333 | 4.3789 |
| | TIME | 0.1650 | 0.1561 | 0.1485 | 0.2010 | 0.1724 | 0.1481 | 0.1467 | 0.1926 | 0.0730 | 1.0238 |
| F7 | BEST | 0 | 3.70 × 10⁻⁷ | 8.08 × 10⁻¹¹ | 0 | 6.84 × 10⁻⁹ | 0 | 0.3697 | 0.0033 | 0 | 0.5772 |
| | MEAN | 2.9803 | 27.5437 | 17.7892 | 4.9745 | 22.8844 | 9.8251 | 3.2284 | 6.1030 | 6.1503 | 19.5304 |
| | STD | 39.4556 | 97.3967 | 78.3987 | 45.4127 | 95.8473 | 51.8421 | 40.1433 | 44.5673 | 47.6451 | 40.2275 |
| | TIME | 0.1864 | 0.1834 | 0.1744 | 0.1475 | 0.1956 | 0.1674 | 0.1836 | 0.2231 | 0.0904 | 0.9302 |
| F8 | BEST | 6.45 × 10⁻⁵ | 0.5278 | 0.6101 | 3.39 × 10⁻⁴ | 0.5155 | 1.42 × 10⁸ | 5.57 × 10⁵ | 0.0438 | 0.0262 | 0.1533 |
| | MEAN | 7.93 × 10⁵ | 4.05 × 10⁶ | 1.86 × 10⁶ | 1.81 × 10⁶ | 3.66 × 10⁶ | 2.22 × 10⁸ | 9.69 × 10⁷ | 3.38 × 10⁶ | 3.84 × 10⁶ | 1.65 × 10⁶ |
| | STD | 2.26 × 10⁷ | 3.96 × 10⁷ | 2.83 × 10⁷ | 2.96 × 10⁷ | 2.83 × 10⁷ | 1.32 × 10⁸ | 1.24 × 10⁸ | 3.55 × 10⁷ | 3.99 × 10⁷ | 2.58 × 10⁷ |
| | TIME | 0.6626 | 0.6407 | 0.6175 | 0.6476 | 0.6813 | 0.6804 | 0.6465 | 0.4189 | 0.3076 | 1.1649 |
| F9 | BEST | 3.00 × 10⁻⁵ | 2.8907 | 2.8577 | 6.88 × 10⁻⁴ | 2.9815 | 6.61 × 10⁸ | 2.5389 | 0.6075 | 0.3928 | 0.8492 |
| | MEAN | 4.84 × 10⁶ | 8.96 × 10⁶ | 4.97 × 10⁶ | 4.92 × 10⁶ | 8.55 × 10⁶ | 7.11 × 10⁸ | 3.09 × 10⁷ | 7.28 × 10⁶ | 8.05 × 10⁶ | 5.20 × 10⁶ |
| | STD | 4.01 × 10⁷ | 8.44 × 10⁷ | 6.45 × 10⁷ | 6.45 × 10⁷ | 7.98 × 10⁷ | 1.35 × 10⁸ | 1.40 × 10⁸ | 7.66 × 10⁷ | 8.25 × 10⁷ | 5.42 × 10⁷ |
| | TIME | 0.6237 | 0.6170 | 0.6374 | 0.6372 | 0.6380 | 0.6234 | 0.6133 | 0.4383 | 0.3051 | 1.1549 |
| F10 | BEST | 3.29 × 10⁻⁴ | 4.63 × 10⁻⁴ | 7.52 × 10⁻⁴ | 0.0024 | 6.95 × 10⁻⁴ | 8.33 × 10⁻³ | 1.21 × 10⁻² | 3.62 × 10⁻⁴ | 0.0011 | 3.31 × 10⁻⁴ |
| | MEAN | 0.0014 | 0.0108 | 0.0087 | 0.0031 | 0.0075 | 0.0250 | 0.0139 | 0.0193 | 0.0125 | 0.0132 |
| | STD | 0.0092 | 0.0440 | 0.0352 | 0.0174 | 0.0397 | 0.0266 | 0.0182 | 0.0130 | 0.0137 | 0.0149 |
| | TIME | 0.1415 | 0.1331 | 0.1256 | 0.1441 | 0.1470 | 0.1204 | 0.1351 | 0.1030 | 0.0566 | 0.8962 |
| F11 | BEST | −10.4021 | −3.7065 | −4.2248 | −10.3921 | −4.3732 | −2.7479 | −6.4141 | −10.3998 | −7.2097 | −7.8124 |
| | MEAN | −10.0248 | −3.0691 | −3.9299 | −9.8669 | −3.2063 | −2.5950 | −4.5366 | −7.6326 | −5.9612 | −6.9726 |
| | STD | 1.1633 | 1.7467 | 1.4404 | 1.3712 | 1.4478 | 1.2843 | 1.1177 | 2.3504 | 1.7862 | 1.0625 |
| | TIME | 0.2247 | 0.4710 | 0.4779 | 0.2023 | 0.5053 | 0.5345 | 0.1971 | 0.1303 | 0.0934 | 0.8702 |
| F12 | BEST | −10.5398 | −4.2295 | −4.5870 | −10.4547 | −4.4975 | −2.6101 | −5.1456 | −10.5191 | −5.2541 | −7.3815 |
| | MEAN | −9.9728 | −2.8359 | −2.8770 | −9.4217 | −3.1161 | −2.5639 | −3.8055 | −8.0916 | −5.0373 | −6.7461 |
| | STD | 0.5395 | 1.3041 | 1.1196 | 1.9452 | 1.3458 | 1.2225 | 1.0012 | 2.1849 | 0.7045 | 1.3569 |
| | TIME | 0.2381 | 0.5797 | 0.5812 | 0.2390 | 0.5975 | 0.6086 | 0.2210 | 0.1440 | 0.1157 | 0.8930 |

| Algorithm | BOA | HPSOBOA | FPSBOA | DMBOA |
|---|---|---|---|---|
| Similarity coefficient | 0.8584 | 0.9001 | 0.9741 | 0.9877 |
| | 0.7951 | 0.9274 | 0.9526 | 0.9927 |
| | 0.8560 | 0.9432 | 0.9363 | 0.9763 |
| PI | 0.3054 | 0.2041 | 0.1687 | 0.1329 |
| Time | 35.78 | 26.14 | 25.41 | 22.48 |
| PESQ | 2.06 | 2.23 | 2.30 | 2.44 |
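The similarity coefficient and PI rows in the separation tables are not defined in this extract. The sketch below assumes the definitions commonly used in BSS work: the similarity coefficient as the normalized absolute inner product between a source and an estimate (1 means identical up to scale and sign), and an Amari-type performance index computed on the global matrix C = WA, which is 0 when C is a scaled permutation. The paper's normalization may differ, and the function names are illustrative. PESQ and SSIM are standard perceptual metrics and are not reimplemented here.

```python
import numpy as np

def similarity_coefficients(S, Y):
    """xi_ij = |sum_t s_i(t) y_j(t)| / sqrt(sum_t s_i(t)^2 * sum_t y_j(t)^2)."""
    num = np.abs(S @ Y.T)
    den = np.outer(np.linalg.norm(S, axis=1), np.linalg.norm(Y, axis=1))
    return num / den

def performance_index(C):
    """Amari-type performance index of the global matrix C = W A (0 means perfect)."""
    C = np.abs(np.asarray(C, dtype=float))
    n = C.shape[0]
    rows = np.sum(C.sum(axis=1) / C.max(axis=1) - 1.0)
    cols = np.sum(C.sum(axis=0) / C.max(axis=0) - 1.0)
    return (rows + cols) / (n * (n - 1))

t = np.linspace(0.0, 1.0, 1000, endpoint=False)
S = np.vstack([np.sin(2 * np.pi * 2 * t), np.sign(np.sin(2 * np.pi * 3 * t))])
Y = np.vstack([-2.0 * S[1], 0.5 * S[0]])   # permuted, rescaled, sign-flipped estimates

print(np.round(similarity_coefficients(S, Y), 4))
print(performance_index([[0.0, 2.0], [-3.0, 0.0]]))  # 0.0, a scaled permutation
print(performance_index([[1.0, 0.2], [0.1, 1.0]]))   # > 0, residual mixing
```

Under these definitions, higher similarity coefficients and lower PI values indicate better separation, which is how the rows in the tables are read.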

| Algorithm | BOA | HPSOBOA | FPSBOA | DMBOA |
|---|---|---|---|---|
| Similarity coefficient | 0.8119 | 0.8878 | 0.9784 | 0.9982 |
| | 0.8546 | 0.9021 | 0.9552 | 0.9907 |
| | 0.8757 | 0.9074 | 0.9301 | 0.9874 |
| | 0.8378 | 0.9253 | 0.9222 | 0.9833 |
| PI | 0.2601 | 0.1986 | 0.1524 | 0.1163 |
| Time | 37.91 | 34.25 | 30.51 | 26.74 |
| SSIM | 0.8340 | 0.9015 | 0.9282 | 0.9647 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Xia, Q.; Ding, Y.; Zhang, R.; Liu, M.; Zhang, H.; Dong, X. Blind Source Separation Based on Double-Mutant Butterfly Optimization Algorithm. Sensors 2022, 22, 3979. https://doi.org/10.3390/s22113979
