1. Introduction

A 5G network is a massive IoT environment with low latency [1, 2]. When an IoT device with weak security is connected to a 5G network, a Tbps-level DDoS attack targeting the 5G mobile network can occur, resulting in a network failure (delay) [3]. This can cause major security problems for 5G core network functions and for devices connected to the network. Machine learning (ML) models learn the rules for making intrusion decisions. Building an automated intrusion detection system using ML can ease the time and cost limitations of intrusion detection. In addition, such a system can generalize to new attack patterns. Therefore, interest in automating intrusion detection using ML in 5G networks is increasing. However, DDoS attack detection using ML in 5G networks is limited by the need to process the large amounts of data generated by 5G devices. Using all available features as input can degrade ML model performance and waste training and detection time. The massive amount of 5G data therefore raises the problem of selecting the key features needed for real-time learning and detection so that ultra-low latency can be provided. To detect large amounts of traffic in 5G networks in real time, it is necessary to identify a smaller set of important features while preserving learning performance. Feature selection can be used for this purpose. In ML classification problems, feature selection is an essential preprocessing step for identifying important or highly relevant features in large datasets. It maintains or improves performance while reducing computational complexity by removing noisy features that are less relevant to learning [4, 5, 6, 7]. Feature selection thus enables ultra-low-latency 5G services through real-time DDoS attack detection using an ML algorithm. Therefore, research on features that can reduce the time complexity of real-time detection of large-capacity DDoS attacks in a 5G mobile network environment is required.

A 5G network consists of a service-based architecture (SBA) that is subdivided and virtualized into network function (NF) units. The International Telecommunication Union Telecommunication Standardization Sector (ITU-T) and the 3rd Generation Partnership Project (3GPP) are researching the internalization of security in the SBA of 5G networks: ITU-T SG17 is working on a 5G E2E security framework that includes SBA security as part of core network security [8], and 3GPP is conducting research on 5G SBA NF security [9]. In addition, as artificial intelligence (AI) technology advances, it is drawing attention as a major technology in 5G network design. To implement AI-based 5G network automation and optimize the related NFs, 3GPP introduced the network data analytics function (NWDAF) into the 5G core network, adopting a network design method oriented toward AI [10, 11]. Accordingly, prior research is necessary to implement security functions using the AI-native core architectures of 5G and 6G networks [12].

Previous studies on improving the performance of DDoS attack detection using ML and feature selection have focused on the wired network environment [13, 14, 15, 16, 17]. Therefore, to provide ultra-low-latency service in a 5G mobile network environment, it is necessary to study feature engineering for DDoS attack detection with a view to reducing time complexity. To this end, we constructed a 5G testbed environment for experiments, established hypotheses, collected GPRS Tunneling Protocol (GTP) packets, analyzed the DDoS detection results obtained using feature selection, and outlined future studies.

In this study, feature selection experiments that can reduce time complexity were performed, and the results were analyzed, with the aim of detecting large-scale DDoS attacks using ML in real time in a 5G mobile network environment. A virtual 5G environment (UERANSIM + Open5GS) was constructed to collect GTP-U packets, i.e., 5G datasets from the user plane, by reapplying the Kitsune dataset [18, 19] on a 5G network. The collected 5G dataset was classified into multiple classes using the ML algorithms decision tree (DT), random forest (RF), k-nearest neighbors (KNN), and stacking ensemble. To compare performance, models trained on all features were compared with models trained on features chosen by various feature selection methods. In addition, the difference in the required time was compared while increasing the size of the data.

The main contributions of this study can be summarized as follows. First, we noted the importance of removing extraneous features to detect IoT DDoS attacks with low latency in the 5G core network path. The experiments show that the feature selection process can lead to faster and better classification models by selecting features that have a large influence on learning and detection. Second, we constructed an experimental 5G mobile environment and collected datasets using multiple user equipment (UE). Third, we evaluated the results of feature selection for each classification algorithm and presented all performance indicators related to our experiments. Finally, we showed the usefulness of the feature selection process in terms of low-latency detection of IoT DDoS attacks on massive 5G datasets. In doing so, we confirmed the significance of the feature selection study for the real-time detection of DDoS attacks in an ultra-low-latency 5G core environment.

The remainder of this paper is organized as follows. Section 2 presents related studies on 5G network attack detection and feature selection. Section 3 describes the concepts of 5G system architecture and feature selection. Section 4 defines the problem and outlines the IoT DDoS detection methodology for 5G environments employed in this study. Section 5 presents the results of the feature selection method. Section 6 compares the results of using all extracted features with the results obtained using the feature selection process and, by increasing the size of the dataset, shows the effect of the feature selection process on the detection of a large amount of IoT DDoS traffic. Finally, Section 7 provides concluding remarks regarding this study and directions for future research.

3. Background

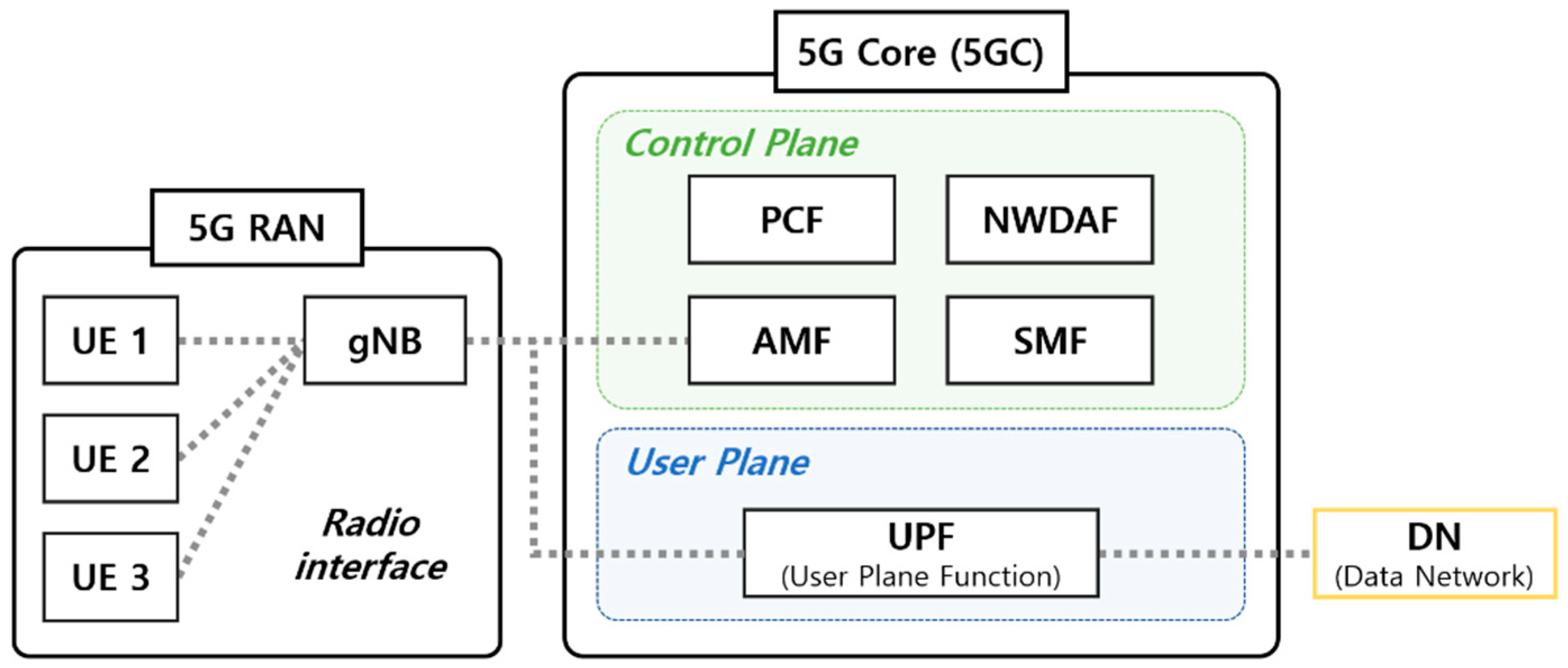

3.1. 5G System Architecture

The 5G system architecture (5GS) includes a 5G access network (AN), 5G core network (5GC), and UE [26] (Figure 1) and is defined as service-based. The 5G (R)AN provides a wireless interface for the UE. The 5G base station (gNB) of the (R)AN provides the GTP to the UE. GTP is a tunneling protocol that is defined to carry data within mobile networks. The network packet generated from the UE is transmitted to the 5GC via GPRS tunneling through the (R)AN. GTP consists of control plane (GTP-C), user plane (GTP-U), and charging (GTP', derived from GTP-C) traffic [27].

Several NFs in the 5GC provide services through the service-based interface (SBI) of the SBA. The 5GC network is responsible for functions such as session management, mobility, authentication, and security [28]. The control plane is responsible for control, and the user plane is responsible for data packet transmission [29]. In the control plane, mobility is mostly managed through the access and mobility management function (AMF) and the session management function (SMF) in the 5GC. The AMF manages the access and mobility of UEs through location service messages. The SMF allocates IP addresses to the UEs and manages user plane services [30]. The policy control function (PCF) defines policies of all types in the network and provides them to other control plane NFs (e.g., AMF, SMF) [31, 32]. Each NF of the 5GC constituting the SBA controls data transmission [33]. The 5GS was enhanced to support network data analytics services through the NWDAF [34]. The NWDAF collects and analyzes information on various network domains. It is a core functional entity that provides analysis-based statistical and predictive insights into the 5GC. To perform tasks such as mobility prediction and optimization, DDoS attack detection, and predictive QoS and data correlation, ML algorithms can utilize the network information collected through the NWDAF [35]. The user plane function (UPF) of the 5GC user plane is connected to the data network (DN) and is responsible for packet routing and forwarding.

3.2. GPRS Tunneling Protocol in 5G

This study collected GTP tunneling packets to be transmitted to a 5G core and detected DDoS attacks through them. Therefore, the GTP uplink process for a network packet in 5G is described.

Figure 2 shows the uplink flow of 5G network traffic through the user plane. The IP packet generated by the UE is forwarded to the gNB for transmission to the DN. When the gNB receives an IP packet, it prepends a GTP-U header to it. Subsequently, the result is encapsulated inside outer IP and UDP headers and is then transmitted to the UPF. The encapsulated IP packet and GTP-U header make up the G-protocol data unit (G-PDU). The GTP-U header fields relevant here are the message type, next extension header type, tunnel end-point identifier (TEID), and QoS flow identifier (QFI). The message type is fixed to decimal 255 (0xff), indicating the G-PDU, and the next extension header type is fixed to binary '1000 0101' (0x85), indicating the PDU session container. The QFI identifies the QoS flow to which the transmitted packet belongs. The TEID is an identifier uniquely assigned to each GTP user connection on each node [12].
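The header fields described above can be read from a captured N3 payload with a few lines of Python. The following is a minimal sketch rather than the parser used in this study: the function name `parse_gtpu` and the returned dictionary keys are hypothetical, and the byte offsets assume the usual GTP-U layout (8 mandatory octets, 4 optional octets when any of the E, S, or PN flags is set, then extension headers whose length is counted in 4-octet units, with the QFI in the low 6 bits of the second content octet of the PDU session container).

```python
import struct

G_PDU = 0xFF                   # message type 255: G-PDU
PDU_SESSION_CONTAINER = 0x85   # next extension header type: PDU session container


def parse_gtpu(payload: bytes) -> dict:
    """Parse the GTP-U header at the start of a UDP payload (hypothetical helper)."""
    if len(payload) < 8:
        raise ValueError("truncated GTP-U header")
    flags, msg_type, length, teid = struct.unpack_from("!BBHI", payload, 0)
    info = {"version": flags >> 5, "message_type": msg_type,
            "length": length, "teid": teid, "qfi": None}
    offset = 8
    if flags & 0x07:  # E, S, or PN set: sequence number, N-PDU number, next type
        if len(payload) < 12:
            raise ValueError("truncated optional GTP-U fields")
        next_ext = payload[11] if flags & 0x04 else 0
        offset = 12
        while next_ext:
            if offset >= len(payload):
                raise ValueError("truncated extension header")
            ext_len = payload[offset] * 4  # length octet counts 4-octet units
            if ext_len == 0 or offset + ext_len > len(payload):
                raise ValueError("malformed extension header")
            if next_ext == PDU_SESSION_CONTAINER:
                info["qfi"] = payload[offset + 2] & 0x3F  # low 6 bits carry the QFI
            next_ext = payload[offset + ext_len - 1]      # last octet: next header type
            offset += ext_len
    info["inner_offset"] = offset  # where the encapsulated IP packet starts
    return info


# Synthetic uplink G-PDU: TEID 1, QFI 9, followed by a dummy 20-byte inner packet.
inner = bytes([0x45]) + bytes(19)
packet = (struct.pack("!BBHI", 0x34, G_PDU, 8 + len(inner), 1)
          + bytes([0, 0, 0, PDU_SESSION_CONTAINER])   # sequence, N-PDU, next type
          + bytes([1, 0x10, 9, 0])                    # PDU session container
          + inner)
print(parse_gtpu(packet))
```

Fields such as the TEID and QFI extracted this way could serve as candidate inputs to feature extraction, although the 55 features used in this study are not defined by this sketch.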

3.3. Feature Selection

Feature selection is an important technique for the efficient learning and operation of ML models. It is used to reduce overfitting and learning time as well as to improve accuracy. Feature selection is classified as unsupervised or supervised according to whether the label (y) is used. Supervised feature selection selects features based on the label and is largely divided into three types of methods, i.e., filter, wrapper, and embedded methods [4].

Filter Method. A filter method uses a statistical measure to score the influence and correlation of each individual feature rather than searching for the best feature subset. Hence, each feature is treated as an independent variable. This method is the fastest in terms of time complexity. Examples include feature importance and statistical methods.

Wrapper Method. A wrapper method determines the most useful subset by comparing subset combinations of the features with a specific ML algorithm. Therefore, the more features that exist, the higher the number of computations involved; thus, the method is the slowest in terms of time complexity. However, it can improve the performance of the model because it searches for the best feature subset. When the purpose of the ML algorithm is classification, the subsets are evaluated and compared based on their accuracy, precision, recall, and F1-score. Examples include recursive feature elimination (RFE), recursive feature elimination with cross-validation (RFECV), and sequential feature selection (SFS).

Embedded Method. An embedded method learns, as part of model training, which features contribute to the accuracy of the model. In terms of time complexity, this approach lies between the wrapper and filter methods. Examples of embedded methods include LASSO, elastic net, and ridge regression.
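To make the filter idea concrete, the sketch below scores every feature independently and keeps the top k. It is an illustration only: the score used here (between-class variance of the class means divided by the within-class variance) is a simple statistical stand-in chosen for this example, not the tree-based feature importance used in this study, and the names `class_separation_score` and `rank_features` are hypothetical.

```python
from collections import defaultdict
from statistics import fmean, pvariance


def class_separation_score(values, labels):
    """Between-class variance of the class means over the mean within-class variance."""
    groups = defaultdict(list)
    for value, label in zip(values, labels):
        groups[label].append(value)
    overall = fmean(values)
    n = len(values)
    between = sum(len(g) * (fmean(g) - overall) ** 2 for g in groups.values()) / n
    within = sum(len(g) * pvariance(g) for g in groups.values()) / n
    if within > 0:
        return between / within
    return float("inf") if between > 0 else 0.0


def rank_features(X, y, k):
    """Return the indices of the k highest-scoring columns of X, best first."""
    n_features = len(X[0])
    scores = [class_separation_score([row[j] for row in X], y)
              for j in range(n_features)]
    return sorted(range(n_features), key=lambda j: scores[j], reverse=True)[:k]


# Toy data: only column 1 separates the three classes.
y = [0, 0, 0, 1, 1, 1, 2, 2, 2]
X = [[5, 0.1, 1], [1, 0.0, 2], [3, 0.2, 3],
     [4, 5.1, 2], [2, 5.0, 1], [3, 4.9, 3],
     [1, 10.0, 3], [5, 9.9, 2], [3, 10.1, 1]]
print(rank_features(X, y, k=1))
```

Because each column is scored on its own, the cost grows linearly with the number of features, which is why filter methods are the cheapest of the three families.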

In this study, feature selection was applied to find a small number of feature combinations (5 or 10) that maintained or increased the 5G malicious packet detection rate compared with a scenario in which all 55 features were used. The feature selection methods used in the experiment were the filter (feature importance) and wrapper (RFE, RFECV, SFS) methods. Feature importance is a method that uses the importance value provided by a tree-based model. RFE starts by training on all features and repeatedly removes the features with the lowest importance, retraining each time, until a user-specified number of features remains (the default is half of all features). RFECV derives the best feature subset using the same process as RFE. However, unlike RFE, the number of features need not be specified, and cross-validation is used. SFS finds the best feature subset by adding or removing features using the forward or backward method. SFS requires the number of features to select to be specified, and cross-validation is also possible.
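The forward variant of SFS described above can be sketched as a greedy loop. This is a simplified illustration under stated assumptions, not the implementation used in the experiments: the subset score is the leave-one-out accuracy of a brute-force 1-nearest-neighbor classifier, chosen only so the example has no dependencies, and the names `loo_1nn_accuracy` and `forward_sfs` are hypothetical.

```python
def loo_1nn_accuracy(X, y, cols):
    """Leave-one-out accuracy of a 1-nearest-neighbor classifier restricted to cols."""
    correct = 0
    for i, xi in enumerate(X):
        nearest = min(
            (j for j in range(len(X)) if j != i),
            key=lambda j: sum((xi[c] - X[j][c]) ** 2 for c in cols),
        )
        correct += y[nearest] == y[i]
    return correct / len(X)


def forward_sfs(X, y, k, score=loo_1nn_accuracy):
    """Greedily add the feature that gives the best score until k are selected."""
    selected, remaining = [], list(range(len(X[0])))
    while len(selected) < k and remaining:
        best = max(remaining, key=lambda c: score(X, y, selected + [c]))
        selected.append(best)
        remaining.remove(best)
    return selected


# Toy data: only column 1 separates the three classes.
y = [0, 0, 0, 1, 1, 1, 2, 2, 2]
X = [[5, 0.1, 1], [1, 0.0, 2], [3, 0.2, 3],
     [4, 5.1, 2], [2, 5.0, 1], [3, 4.9, 3],
     [1, 10.0, 3], [5, 9.9, 2], [3, 10.1, 1]]
print(forward_sfs(X, y, k=2))
```

The backward variant starts from all features and removes, at each step, the feature whose removal hurts the score least. Either way, every step retrains and rescores the model once per candidate feature, which is the source of the high cost of wrapper methods.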

6. Analysis of Experimental Results

The results obtained in Section 5 demonstrate that the selected features differ for each feature selection method and classification algorithm, and that the evaluation metrics differ according to the number of features used. In this section, we compare the base model of Section 5.1 with the best-performing result of each feature selection method for each classification algorithm. We also compare the results with related works that are similar to this experiment. In addition, by increasing the amount of data, we examine and analyze how the time complexity changes when feature selection is applied.

6.1. Comparison of Performance

6.1.1. Comparison with Base Model

Figure 6 shows the accuracy and F1-score of each classification algorithm for the base model using all features and the model applying each feature selection method. Figure 7 shows the ROC curves for the base model and each feature selection method for each classification algorithm. Table 11 shows, for each classification algorithm, the score obtained using all features and the score obtained using the best-performing feature subset for each feature selection method. The multiclass classification result using all features was approximately 70.038%, which is significant, but the performance increased with the feature selection method. In particular, the backward SFS method performed best for the tree-based classification algorithms, DT and RF. With KNN, the forward SFS method performed best according to the evaluation metrics, excluding the accuracy. The DT, RF, and KNN algorithms, each trained on the features selected as effective for that algorithm, increased the accuracy by 9.1%, 6.34%, and 17.67%, respectively, compared to the base model. However, the accuracy of the stacking algorithm with the feature selection method decreased by 0.083%, whereas the F1-score increased by 0.076% compared with the scores when using all features.

As a result, when feature selection was used, the performance was maintained or improved. In addition, the features that had a significant influence on the classification results varied for each classification algorithm. Even the best-performing feature selection result for each individual classification algorithm scored lower than the stacking algorithm using the frequency-based feature selection method. This indicates that the classification performance is highly dependent not only on the combination of features but also on the classification algorithm applied.
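For reference, the two metrics compared here can be computed for a multiclass problem as follows. This is a minimal sketch: the text does not state how the per-class F1-scores were averaged, so the unweighted (macro) average used below is an assumption, and the function names are illustrative.

```python
def accuracy(y_true, y_pred):
    """Fraction of samples whose predicted class equals the true class."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def macro_f1(y_true, y_pred):
    """Unweighted mean of the per-class F1-scores (macro averaging is an assumption)."""
    f1_scores = []
    for c in sorted(set(y_true) | set(y_pred)):
        tp = sum(t == c and p == c for t, p in zip(y_true, y_pred))
        fp = sum(t != c and p == c for t, p in zip(y_true, y_pred))
        fn = sum(t == c and p != c for t, p in zip(y_true, y_pred))
        denom = 2 * tp + fp + fn
        # F1 = 2TP / (2TP + FP + FN); defined as 0 when the class never appears.
        f1_scores.append(2 * tp / denom if denom else 0.0)
    return sum(f1_scores) / len(f1_scores)


# Toy three-class example with made-up labels.
y_true = [0, 0, 1, 1, 2, 2]
y_pred = [0, 1, 1, 1, 2, 0]
print(accuracy(y_true, y_pred), macro_f1(y_true, y_pred))
```

Accuracy and F1-score can move in opposite directions, as observed for the stacking algorithm, because accuracy counts every sample equally whereas F1 balances precision and recall within each class.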

6.1.2. Comparison with Related Work

Table 12 presents the results of related studies similar to ours. Our results (Table 11) generally show good performance. In particular, the stacking algorithm, which achieved the highest score among our results, outperformed the related results except for KNN in [23]. However, it should be noted that similar studies have used different datasets and models. Therefore, a direct comparison of the results is difficult to justify.

6.2. Comparison of Time Complexity

Figure 8 and Table 13 show how the time complexity changes with the dataset size. When all features were used, the required time increased remarkably as the dataset grew. In particular, KNN, which compares all data, and stacking, which is an ensemble technique of several models, required times on the order of hours as the data increased. However, both algorithms kept the required time on the order of seconds when using the selected features. In particular, the stacking algorithm, which showed the best performance, showed a greater rate of change (between the base model and the model using feature selection) as the dataset increased. When 364,000 packets were used for the stacking algorithm, the time required for the base model was 57.6 times that required for the model using feature selection, and with 455,000 packets, it was 84.07 times. As a result, as the dataset increases exponentially, the difference in time complexity between the base model and the model to which feature selection is applied will continue to increase.
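A comparison of this kind can be reproduced in outline with a small timing harness. The sketch below is not the experimental code of this study: it uses synthetic random data, a brute-force 1-nearest-neighbor detector, and the first 5 columns as a placeholder for the selected features, all of which are assumptions made for illustration. The measured ratios depend on the machine and will not match Table 13.

```python
import random
import time


def make_data(n, n_features, seed=0):
    """Synthetic stand-in for a packet dataset: random features, three classes."""
    rng = random.Random(seed)
    X = [[rng.random() for _ in range(n_features)] for _ in range(n)]
    y = [rng.randrange(3) for _ in range(n)]
    return X, y


def knn_predict(train_X, train_y, x, cols):
    """Brute-force 1-NN: compare x with every training row on the chosen columns."""
    nearest = min(
        range(len(train_X)),
        key=lambda i: sum((x[c] - train_X[i][c]) ** 2 for c in cols),
    )
    return train_y[nearest]


def detection_time(n, cols, n_features=55, n_queries=20):
    """Return (elapsed seconds, predictions) for classifying n_queries rows."""
    X, y = make_data(n, n_features)
    queries = X[:n_queries]
    start = time.perf_counter()
    preds = [knn_predict(X, y, q, cols) for q in queries]
    return time.perf_counter() - start, preds


if __name__ == "__main__":
    for n in (500, 1000, 2000):
        t_all, _ = detection_time(n, range(55))  # base model: all 55 features
        t_sel, _ = detection_time(n, range(5))   # placeholder 5-feature subset
        print(n, round(t_all / t_sel, 1))
```

For a brute-force neighbor search the work per query is proportional to the number of training rows times the number of features, so this harness alone shows a roughly constant ratio. The growing ratios reported above for stacking are an empirical result of the full pipeline and are not explained by this simple cost model.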

The experiment on how time complexity changes with dataset size shows the effect of detecting DDoS attacks using the selected features in a 5G network where a large amount of IoT data is generated. Preprocessing through feature selection is useful and effective in detecting IoT DDoS attacks with low latency in 5G networks.

5G should provide services to users with low latency. This requires ML-based automated intrusion detection systems that detect DDoS attacks at high speed. Through experiments, this study confirmed that the feature selection process could have a significant effect on reducing the time complexity. In addition, despite the large reduction in the time required, the performance was maintained or improved. This shows that research related to feature engineering to detect large-capacity DDoS attacks in real time in 5G mobile networks is significant.

7. Conclusions

This study detected DDoS attacks using ML-based multiclass classification in a 5G mobile network environment. Subsequently, the effect of the feature selection method on the detection performance and time complexity was studied. For this purpose, an experimental 5G environment was constructed, hypotheses were made, and the 5G dataset was collected. In the experiment, the classification results using the features selected by the filter and wrapper methods were compared with those of a base model using all extracted features.

In the base model, which used all 55 features, the stacking algorithm showed the highest accuracy at 97.264%. When feature selection was applied, the number of features decreased by at least 25.45%, and the multiclass classification performance exceeded 82%. In particular, with the SFS (backward) method, the accuracy of DT and RF increased by 9.1% and 6.34%, respectively. With the SFS (forward) method, the accuracy of KNN increased by 17.668%. The accuracy of the stacking algorithm was 97.183%, which was 0.083% lower than the accuracy when all features were used. In other words, despite the large reduction in the number of features, the performance was maintained or improved. In addition, an experiment was conducted to confirm the change in detection and training time as the size of the dataset increased. As the size of the dataset increased, training and detection times increased dramatically when all features were used. However, when feature selection was used, the training and detection time required by the algorithms remained on the order of seconds. As a result, as the size of the dataset increased, the difference in time complexity between the model using feature selection and the model using all features increased exponentially. The experiments showed that studying features through feature selection is significant for improving DDoS detection performance and reducing time complexity. We thereby confirmed the possibility of real-time DDoS attack detection via a feature selection process. Therefore, research related to feature engineering is required to detect large-capacity DDoS attacks with low latency in 5G mobile network environments.
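The percentages quoted for the stacking algorithm and for the feature reduction can be checked with simple arithmetic. The interpretation below is an inference from the reported figures, not something stated in the text: the 0.083% decrease matches a relative change with respect to the base accuracy, and a reduction of at least 25.45% from 55 features corresponds to using at most 41 features. The variable names are illustrative.

```python
TOTAL_FEATURES = 55
base_acc = 97.264       # stacking accuracy with all features (%)
selected_acc = 97.183   # stacking accuracy with feature selection (%)

abs_drop = base_acc - selected_acc      # difference in percentage points
rel_drop = abs_drop / base_acc * 100    # difference relative to the base accuracy (%)

# A reduction of at least 25.45% leaves at most this many features.
max_selected = round(TOTAL_FEATURES * (1 - 25.45 / 100))
reduction = (TOTAL_FEATURES - max_selected) / TOTAL_FEATURES * 100

print(round(abs_drop, 3), round(rel_drop, 3), max_selected, round(reduction, 2))
```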

Although this study established a 5G environment, only one gNB was built for the experiment. Therefore, in the near future, we will build two or more gNBs and establish an experimental environment by allocating gNBs by IP bandwidth. Subsequently, we plan to conduct feature-engineering-related experiments to detect DDoS attacks through multiple gNBs in real time. Simultaneously, we will conduct a feature study to detect more diverse types of DDoS attacks with low latency. In addition, the 5G traffic in this study was collected at the N3 network interface, where it enters the core from the gNB. However, because various network interfaces are used at the 5G core, a DDoS attack detection study that considers packets on other network interfaces is required.

The feature selection study for real-time detection of 5G mobile network DDoS attacks presented in this paper can be utilized in various applications. In particular, it can be utilized in a lightweight ML-based security function that can be operated in a 5G or 6G environment. Therefore, we plan to conduct feature engineering research that can be utilized in DDoS detection to achieve lower computational and time complexities. In addition, we plan to analyze the structure and procedure of the 5G core function and research a plan for the network function model that performs AI-based security detection in the 5G network standard structure.