Probabilistic Load Forecasting Optimization for Building Energy Models via Day Characterization

Abstract

1. Introduction

2. Methodology

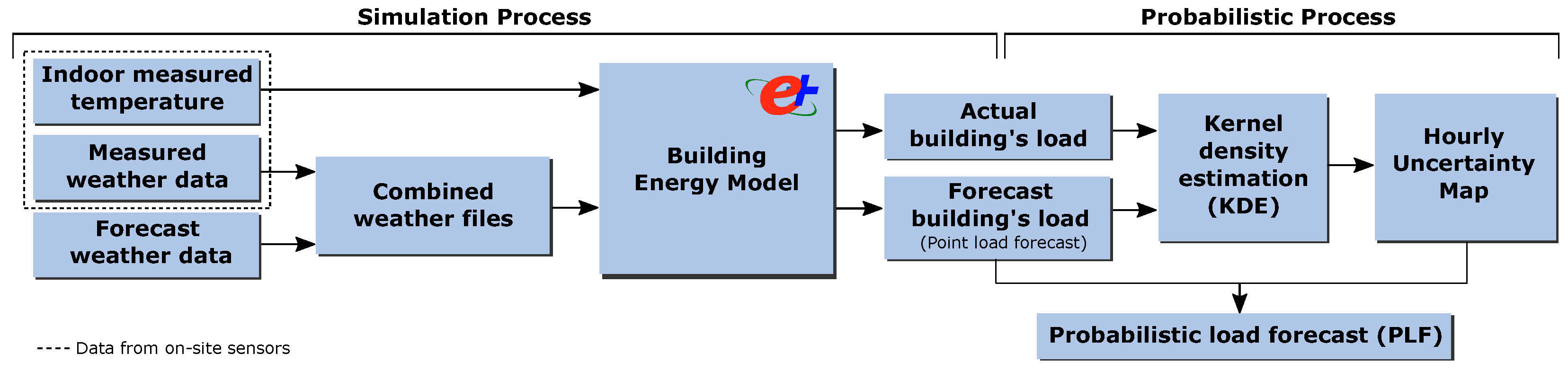

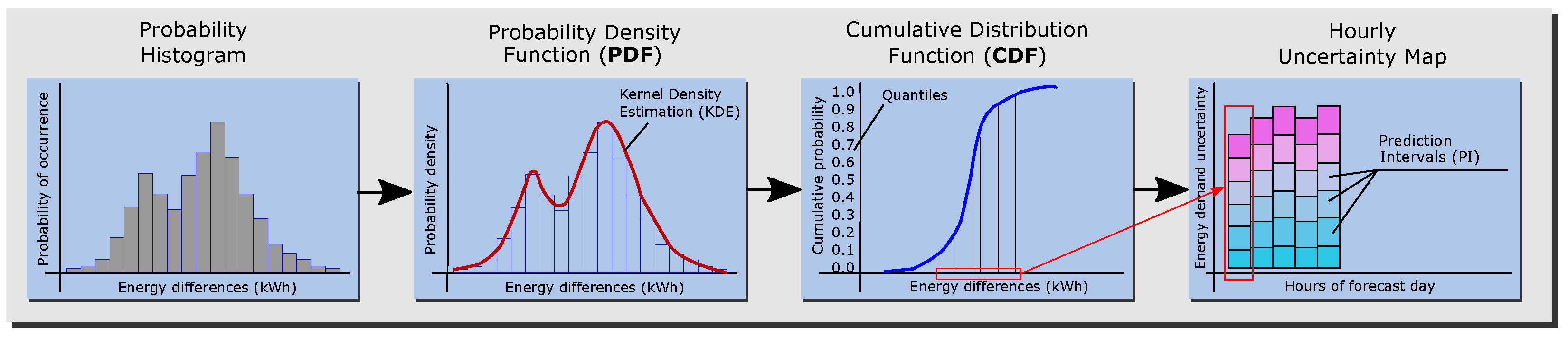

2.1. Probabilistic Load Forecast Using BEMs

2.2. Optimal Probabilistic Load Forecast

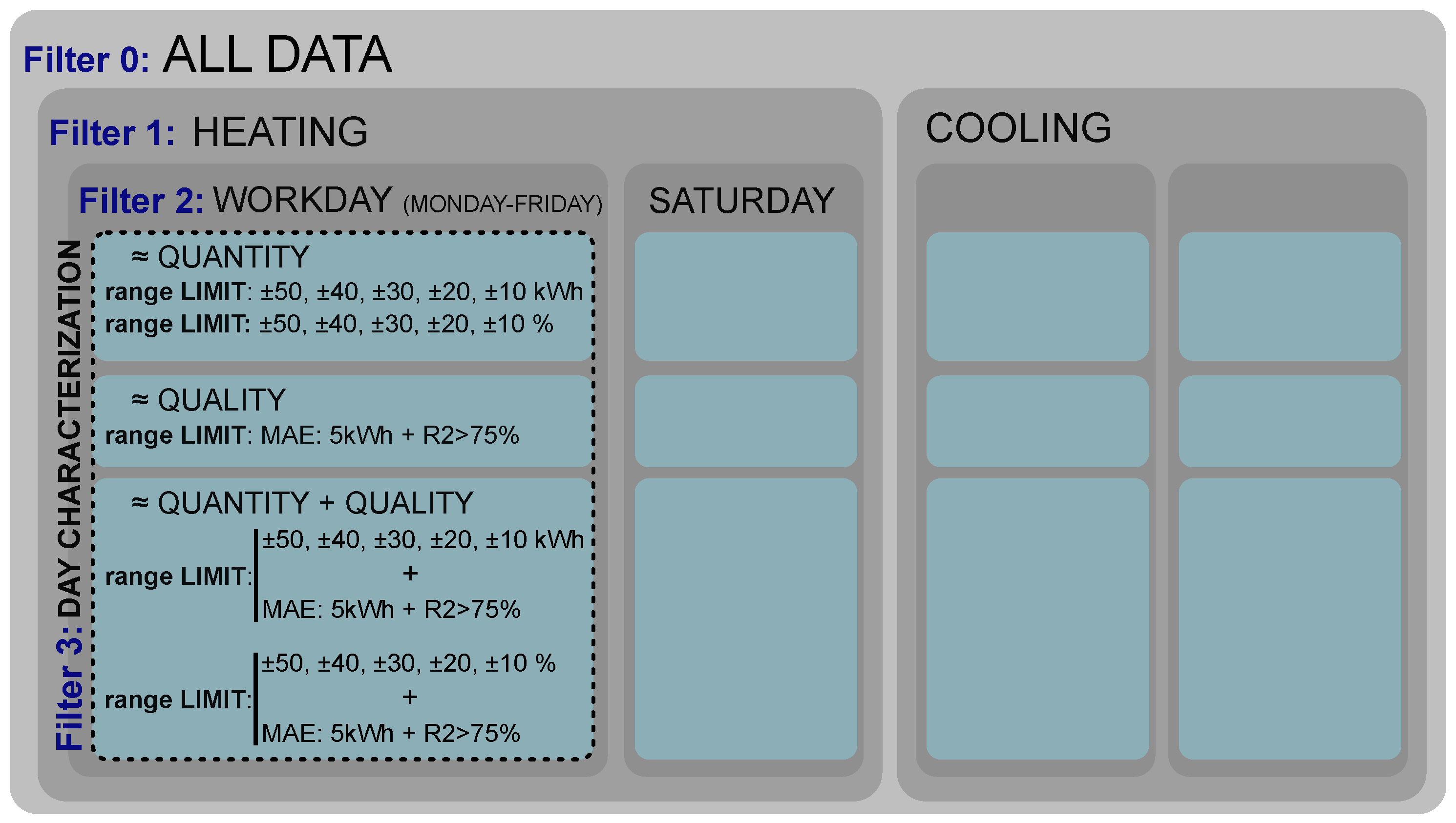

2.2.1. First Step: Definition of the Characterization Criteria

- Quantitative similarity: The days are similar in terms of the amount of energy demanded. This was analyzed using two different criteria: (1) a percentage tolerance on the energy demand, in steps of 5% (from ±5% to ±25%), and (2) an absolute tolerance on the energy demand, in steps of 10 kWh (from ±10 to ±50 kWh). A step of 10 kWh was selected because it corresponds to 10% of the mean daily energy demand of the building used in this study. For buildings with a different mean daily energy demand, the kWh step should be adjusted accordingly.

- Qualitative similarity: The days are similar in terms of the shape of the energy demand curve. Two indexes were used for this characterization: the mean absolute error (MAE) (Equation (1)), which measures the average magnitude of the error in the units of the variable of interest, and the coefficient of determination (R²) (Equation (2)), which measures the linear relationship between the two patterns [59]. A maximum MAE of 5 kWh and a minimum R² of 75% were established.

- Combination of the quantitative and qualitative criteria: The days selected as training days must fulfill both the quantitative and the qualitative criteria defined in each case.
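
Equations (1) and (2) are referenced above but are not reproduced in this text. Assuming the usual forms, with $y_i$ and $\hat{y}_i$ the hourly energy demand of the two days being compared and $n$ the number of hourly values (24 for a full day), the two indexes can be written as follows. The R² form shown here is the squared Pearson correlation, which matches the description "linear relationship between the two patterns", but it is an assumption rather than the authors' stated formula.

$$
\mathrm{MAE} = \frac{1}{n}\sum_{i=1}^{n}\left| y_i - \hat{y}_i \right|
$$

$$
R^2 = \left( \frac{ n\sum_{i=1}^{n} y_i \hat{y}_i - \sum_{i=1}^{n} y_i \sum_{i=1}^{n} \hat{y}_i }{ \sqrt{ \left[ n\sum_{i=1}^{n} y_i^2 - \left( \sum_{i=1}^{n} y_i \right)^2 \right] \left[ n\sum_{i=1}^{n} \hat{y}_i^2 - \left( \sum_{i=1}^{n} \hat{y}_i \right)^2 \right] } } \right)^2
$$

Under this form, R² is insensitive to a constant offset or scaling between the two curves, which is why it is paired with the MAE: the MAE (limit 5 kWh) captures differences in magnitude, while R² (limit 0.75) captures differences in shape.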

2.2.2. Second Step: PLF Calculation for Each Criterion

2.2.3. Third Step: Definition of the Minimum Training Days

2.2.4. Fourth Step: Ordering of the Characterization Criteria

3. Description of the Case Study

4. Results

- First Step: Definition of the characterization criteria

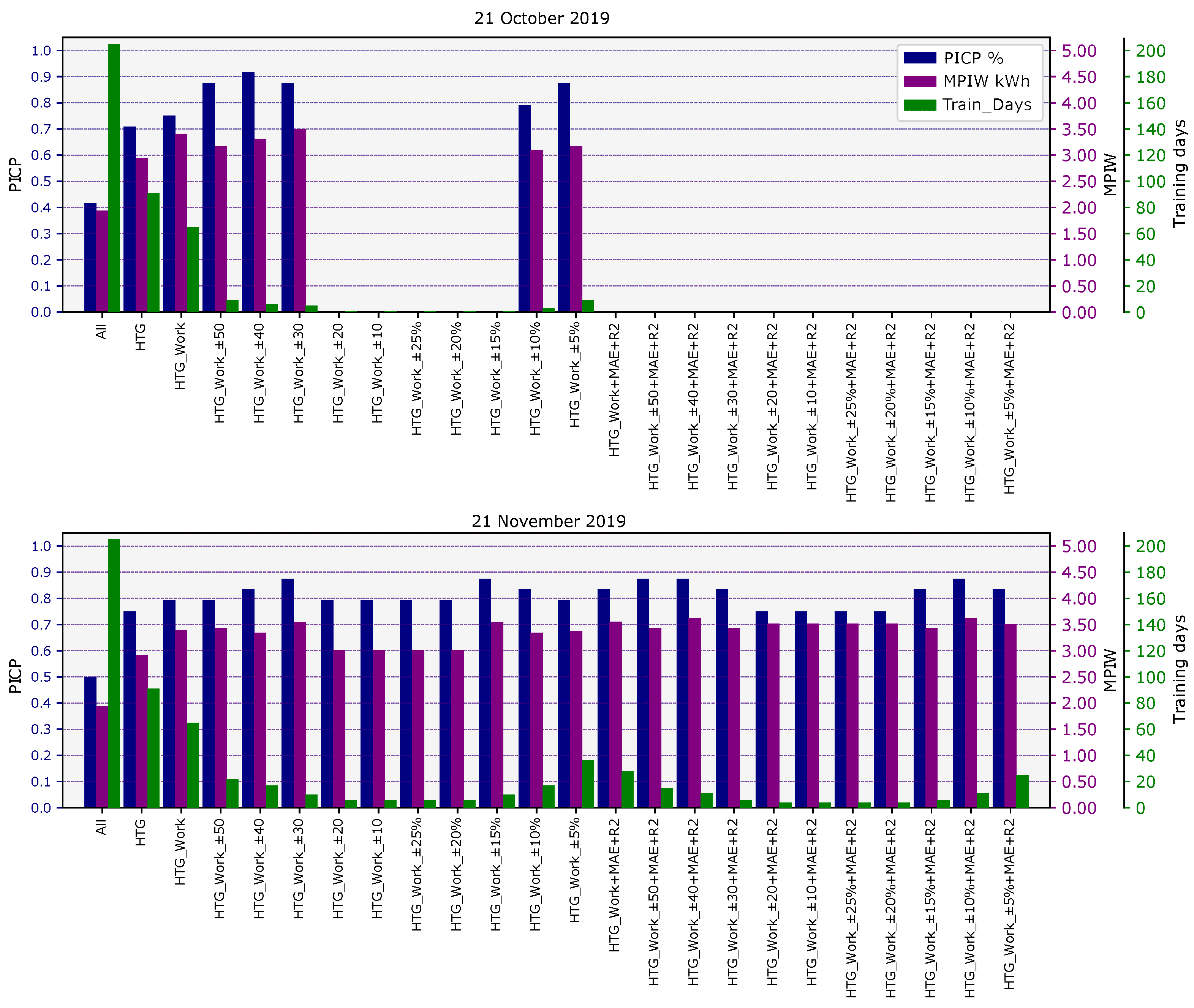

- Second Step: PLF calculation for each criterion

- Third Step: Definition of the minimum training days

- Fourth Step: Ordering of the characterization criteria

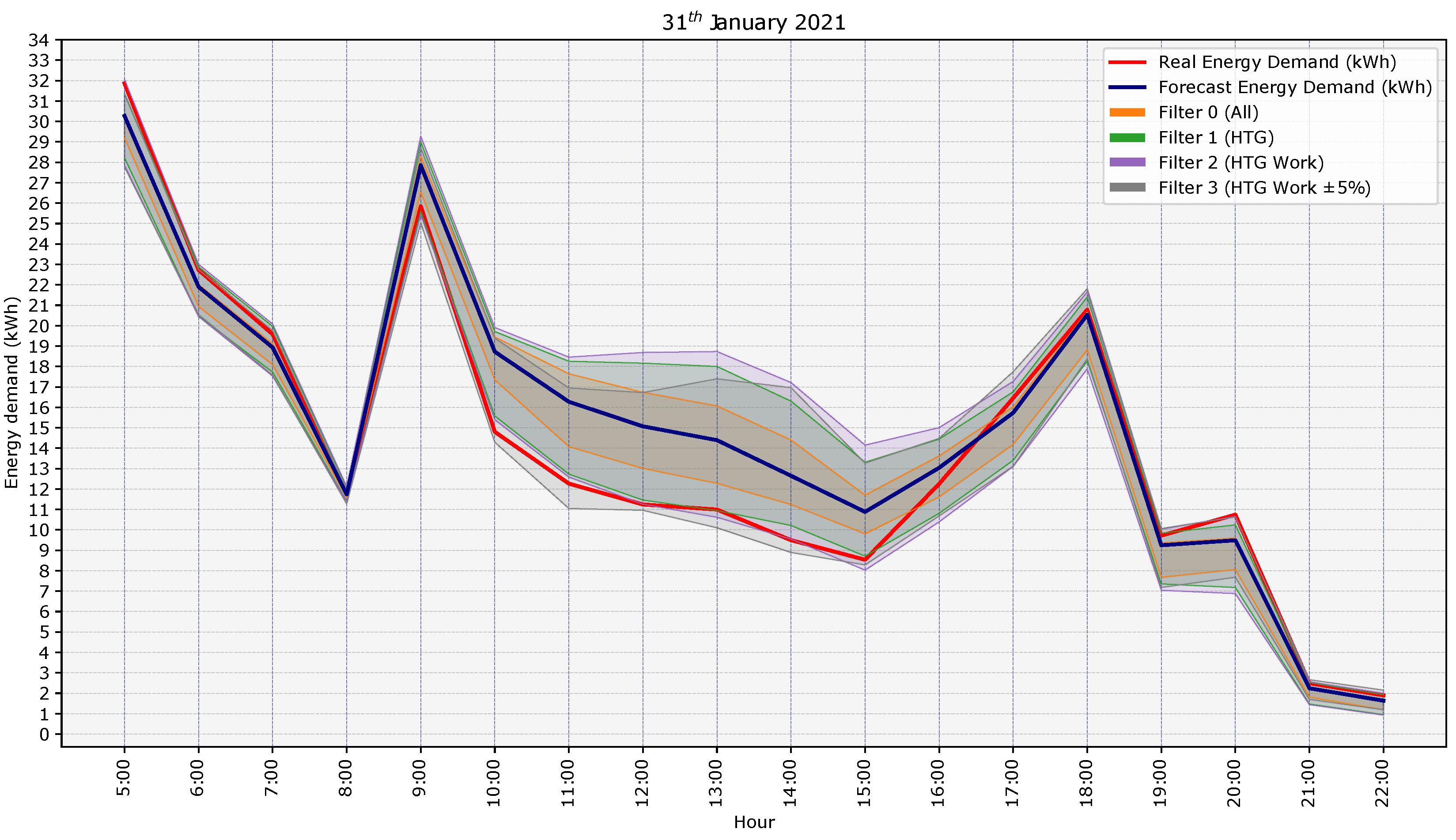

Validation of the Methodology

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| ARIMA | Autoregressive integrated moving average |
| ARMA | Autoregressive moving average |
| BEM | Building energy model |
| CDF | Cumulative distribution function |
| CLG | Cooling |
| DHW | Domestic hot water |
| EPW | EnergyPlus weather file |
| HTG | Heating |
| HVAC | Heating, ventilation, and air conditioning |
| KDE | Kernel density estimation |
| MAE | Mean absolute error |
| MPIW | Mean prediction interval width |
| PDF | Probability density function |
| PI | Prediction intervals |
| PICP | Prediction interval coverage probability |
| PINC | Prediction interval nominal confidence |
| PLF | Probabilistic load forecast |
| Sat | Saturday |
| R² | Coefficient of determination |

Appendix A

| Min Training Days | HTG_Work_±50 Mean PICP (Filter 2) | HTG_Work_±50 Mean PICP (Filter 3) | HTG_Work_±40 Mean PICP (Filter 2) | HTG_Work_±40 Mean PICP (Filter 3) | HTG_Work_±30 Mean PICP (Filter 2) | HTG_Work_±30 Mean PICP (Filter 3) | HTG_Work_±20 Mean PICP (Filter 2) | HTG_Work_±20 Mean PICP (Filter 3) | HTG_Work_±10 Mean PICP (Filter 2) | HTG_Work_±10 Mean PICP (Filter 3) | HTG_Work_±25% Mean PICP (Filter 2) | HTG_Work_±25% Mean PICP (Filter 3) | HTG_Work_±20% Mean PICP (Filter 2) | HTG_Work_±20% Mean PICP (Filter 3) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 82.97% | 80.69% | 82.97% | 80.01% | 82.71% | 79.60% | 82.58% | 76.29% | 83.37% | 70.99% | 81.86% | 75.39% | 81.62% | 76.68% |
| 2 | 82.97% | 80.69% | 82.97% | 80.01% | 82.71% | 79.60% | 82.64% | 77.10% | 83.37% | 71.60% | 81.91% | 76.24% | 81.62% | 76.68% |
| 3 | 82.57% | 81.85% | 82.29% | 81.97% | 82.14% | 81.03% | 82.11% | 78.35% | 82.84% | 76.08% | 81.84% | 79.73% | 81.99% | 79.47% |
| 4 | 82.42% | 81.90% | 82.14% | 82.03% | 82.29% | 80.99% | 81.32% | 79.56% | 82.98% | 77.53% | 81.76% | 79.13% | 81.84% | 80.34% |
| 5 | 82.14% | 82.02% | 81.54% | 81.85% | 82.06% | 81.27% | 82.22% | 80.39% | 84.72% | 82.99% | 83.06% | 79.93% | 81.51% | 80.04% |
| 6 | 81.98% | 81.79% | 81.67% | 81.04% | 81.86% | 81.04% | 82.41% | 79.89% | 85.83% | 85.42% | 83.58% | 79.91% | 83.58% | 82.06% |
| 7 | 82.22% | 82.37% | 81.86% | 81.34% | 82.12% | 81.44% | 83.65% | 80.83% | 77.78% | 75.00% | 83.83% | 79.39% | 83.39% | 82.16% |
| 8 | 81.91% | 82.30% | 81.86% | 81.34% | 82.33% | 82.65% | 82.47% | 78.86% | 83.33% | 83.33% | 82.88% | 79.09% | 83.58% | 82.81% |
| 9 | 81.81% | 82.44% | 81.45% | 80.31% | 82.96% | 82.27% | 80.16% | 78.65% | | | 82.87% | 79.54% | 84.39% | 85.56% |
| 10 | 81.75% | 82.40% | 81.52% | 80.33% | 82.96% | 82.27% | 82.58% | 79.92% | | | 89.17% | 83.17% | 84.02% | 84.85% |
| 11 | 82.16% | 82.70% | 81.82% | 80.48% | 82.72% | 82.23% | 85.42% | 81.25% | | | 91.67% | 83.33% | 82.42% | 83.33% |
| 12 | 81.93% | 82.68% | 82.68% | 81.31% | 80.33% | 79.67% | | | | | | | 75.00% | 80.56% |
| 13 | 82.01% | 82.75% | 82.66% | 81.16% | 77.92% | 76.85% | | | | | | | | |
| 14 | 81.67% | 82.16% | 82.33% | 81.00% | 75.28% | 75.69% | | | | | | | | |
| 15 | 82.33% | 82.56% | 82.59% | 80.70% | 68.75% | 64.58% | | | | | | | | |
| 16 | 82.33% | 82.56% | 82.73% | 80.81% | | | | | | | | | | |
| 17 | 83.16% | 83.08% | 82.66% | 82.46% | | | | | | | | | | |
| 18 | 83.16% | 83.08% | 85.53% | 84.39% | | | | | | | | | | |
| 19 | 83.74% | 83.52% | 84.72% | 82.64% | | | | | | | | | | |
| 20 | 82.04% | 82.04% | 87.50% | 79.17% | | | | | | | | | | |
| 21 | 83.86% | 83.86% | 87.50% | 79.17% | | | | | | | | | | |
| 22 | 86.46% | 86.46% | | | | | | | | | | | | |
| 23 | 87.50% | 86.90% | | | | | | | | | | | | |
| 24 | 91.67% | 89.58% | | | | | | | | | | | | |
| 25 | 91.67% | 91.67% | | | | | | | | | | | | |

| Min Training Days | HTG_Work_±15% Mean PICP (Filter 2) | HTG_Work_±15% Mean PICP (Filter 3) | HTG_Work_±10% Mean PICP (Filter 2) | HTG_Work_±10% Mean PICP (Filter 3) | HTG_Work_±5% Mean PICP (Filter 2) | HTG_Work_±5% Mean PICP (Filter 3) | HTG_Work+MAE+R2 Mean PICP (Filter 2) | HTG_Work+MAE+R2 Mean PICP (Filter 3) | HTG_Work_±50+MAE+R2 Mean PICP (Filter 2) | HTG_Work_±50+MAE+R2 Mean PICP (Filter 3) | HTG_Work_±40+MAE+R2 Mean PICP (Filter 2) | HTG_Work_±40+MAE+R2 Mean PICP (Filter 3) | HTG_Work_±30+MAE+R2 Mean PICP (Filter 2) | HTG_Work_±30+MAE+R2 Mean PICP (Filter 3) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 81.62% | 79.94% | 82.14% | 79.85% | 82.50% | 82.32% | 83.27% | 78.59% | 82.53% | 75.03% | 82.93% | 75.75% | 83.12% | 77.67% |
| 2 | 81.62% | 79.94% | 82.14% | 79.85% | 82.50% | 82.32% | 83.27% | 78.59% | 82.53% | 75.03% | 82.93% | 75.75% | 82.54% | 77.54% |
| 3 | 81.30% | 79.97% | 81.98% | 80.61% | 82.14% | 83.36% | 82.52% | 81.77% | 82.03% | 79.50% | 81.90% | 81.15% | 81.90% | 80.21% |
| 4 | 81.36% | 82.45% | 81.30% | 81.08% | 82.14% | 83.36% | 82.74% | 83.00% | 81.90% | 81.35% | 82.08% | 81.54% | 82.30% | 81.86% |
| 5 | 81.70% | 81.83% | 81.53% | 81.45% | 81.84% | 83.50% | 82.35% | 82.91% | 81.90% | 81.35% | 82.69% | 80.79% | 83.41% | 82.58% |
| 6 | 81.37% | 81.68% | 81.52% | 81.51% | 81.37% | 83.15% | 81.72% | 82.69% | 82.16% | 81.35% | 82.69% | 80.79% | 83.14% | 82.36% |
| 7 | 82.35% | 82.59% | 81.36% | 81.52% | 81.59% | 83.80% | 81.62% | 82.85% | 82.30% | 80.83% | 82.27% | 80.51% | 83.67% | 82.88% |
| 8 | 82.23% | 82.77% | 81.70% | 81.12% | 81.59% | 83.80% | 81.62% | 82.85% | 82.24% | 81.20% | 82.53% | 79.88% | 81.90% | 80.69% |
| 9 | 81.71% | 82.50% | 81.84% | 80.99% | 81.59% | 83.80% | 81.15% | 82.52% | 83.28% | 81.01% | 83.60% | 80.13% | 81.87% | 83.26% |
| 10 | 82.50% | 82.89% | 81.72% | 81.02% | 81.53% | 83.82% | 81.97% | 83.05% | 83.28% | 81.01% | 83.45% | 79.92% | 77.38% | 79.64% |
| 11 | 82.15% | 82.24% | 82.40% | 81.16% | 81.53% | 83.82% | 81.97% | 83.05% | 83.73% | 81.45% | 83.07% | 79.61% | 75.00% | 70.83% |
| 12 | 82.24% | 83.59% | 81.65% | 80.45% | 81.53% | 83.82% | 81.97% | 83.05% | 84.65% | 81.91% | 84.58% | 78.33% | | |
| 13 | 78.33% | 79.55% | 81.81% | 80.58% | 81.53% | 83.82% | 82.01% | 83.38% | 85.48% | 83.29% | 79.17% | 72.50% | | |
| 14 | 77.31% | 79.17% | 81.24% | 79.68% | 81.31% | 83.60% | 82.01% | 83.38% | 83.83% | 82.44% | 87.50% | 87.50% | | |
| 15 | 83.33% | 79.17% | 82.75% | 81.26% | 81.36% | 83.61% | 82.01% | 83.38% | 82.41% | 82.78% | 87.50% | 87.50% | | |
| 16 | | | 82.81% | 81.45% | 81.36% | 83.61% | 84.58% | 85.86% | 80.56% | 83.06% | | | | |
| 17 | | | 83.26% | 82.77% | 81.84% | 83.54% | 84.81% | 86.44% | 89.58% | 85.42% | | | | |
| 18 | | | 84.52% | 85.12% | 81.84% | 83.54% | 84.66% | 85.86% | | | | | | |
| 19 | | | 87.04% | 87.04% | 81.84% | 83.54% | 84.87% | 85.80% | | | | | | |
| 20 | | | 93.75% | 87.50% | 81.84% | 83.54% | 84.83% | 86.01% | | | | | | |
| 21 | | | 87.50% | 79.17% | 81.84% | 83.54% | 84.83% | 86.01% | | | | | | |
| 22 | | | | | | | 84.17% | 86.12% | | | | | | |
| 23 | | | | | | | 83.45% | 85.49% | | | | | | |
| 24 | | | | | | | 84.25% | 86.39% | | | | | | |
| 25 | | | | | | | 84.25% | 86.39% | | | | | | |
| 26 | | | | | | | 84.78% | 87.59% | | | | | | |
| 27 | | | | | | | 84.53% | 87.08% | | | | | | |
| 28 | | | | | | | 83.59% | 86.73% | | | | | | |
| 29 | | | | | | | 81.67% | 84.17% | | | | | | |
| 30 | | | | | | | 80.24% | 83.69% | | | | | | |
| 31 | | | | | | | 64.58% | 76.67% | | | | | | |

| Min Training Days | HTG_Work_±20+MAE+R2 Mean PICP (Filter 2) | HTG_Work_±20+MAE+R2 Mean PICP (Filter 3) | HTG_Work_±10+MAE+R2 Mean PICP (Filter 2) | HTG_Work_±10+MAE+R2 Mean PICP (Filter 3) | HTG_Work_±25%+MAE+R2 Mean PICP (Filter 2) | HTG_Work_±25%+MAE+R2 Mean PICP (Filter 3) | HTG_Work_±20%+MAE+R2 Mean PICP (Filter 2) | HTG_Work_±20%+MAE+R2 Mean PICP (Filter 3) | HTG_Work_±15%+MAE+R2 Mean PICP (Filter 2) | HTG_Work_±15%+MAE+R2 Mean PICP (Filter 3) | HTG_Work_±10%+MAE+R2 Mean PICP (Filter 2) | HTG_Work_±10%+MAE+R2 Mean PICP (Filter 3) | HTG_Work_±5%+MAE+R2 Mean PICP (Filter 2) | HTG_Work_±5%+MAE+R2 Mean PICP (Filter 3) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 82.15% | 71.27% | 83.27% | 65.48% | 80.74% | 67.08% | 81.43% | 73.40% | 81.81% | 78.69% | 82.03% | 77.71% | 82.04% | 78.17% |
| 2 | 82.22% | 72.37% | 83.43% | 66.85% | 80.79% | 68.19% | 81.43% | 73.40% | 81.67% | 78.58% | 82.03% | 77.71% | 82.04% | 78.17% |
| 3 | 83.01% | 78.55% | 81.85% | 72.96% | 82.83% | 73.63% | 81.75% | 77.86% | 81.78% | 81.62% | 82.12% | 80.66% | 81.24% | 81.93% |
| 4 | 82.83% | 78.48% | 81.73% | 75.90% | 83.62% | 74.97% | 83.57% | 78.66% | 81.77% | 81.21% | 81.78% | 81.50% | 82.18% | 83.21% |
| 5 | 82.83% | 77.83% | 83.33% | 83.33% | 82.06% | 73.53% | 83.43% | 78.27% | 81.77% | 81.21% | 81.78% | 81.50% | 82.15% | 83.54% |
| 6 | 82.72% | 79.06% | | | 82.00% | 71.25% | 84.39% | 80.72% | 82.58% | 82.81% | 81.26% | 80.98% | 82.15% | 83.54% |
| 7 | 75.83% | 76.20% | | | 79.00% | 71.67% | 81.17% | 79.92% | 83.08% | 85.54% | 81.77% | 80.48% | 82.25% | 83.45% |
| 8 | 77.33% | 78.17% | | | 78.89% | 73.61% | 82.62% | 83.81% | 81.03% | 82.95% | 82.34% | 80.73% | 82.25% | 83.45% |
| 9 | 78.33% | 87.50% | | | | | 87.22% | 91.67% | 82.12% | 84.39% | 81.22% | 79.58% | 82.25% | 83.45% |
| 10 | | | | | | | | | | | 81.85% | 79.71% | 82.25% | 83.45% |
| 11 | | | | | | | | | | | 82.04% | 80.21% | 82.25% | 83.45% |
| 12 | | | | | | | | | | | 83.27% | 79.76% | 81.86% | 83.33% |
| 13 | | | | | | | | | | | 89.58% | 84.03% | 81.89% | 83.71% |
| 14 | | | | | | | | | | | 93.06% | 94.44% | 81.33% | 83.20% |
| 15 | | | | | | | | | | | 87.50% | 87.50% | 82.84% | 84.11% |
| 16 | | | | | | | | | | | | | 84.04% | 85.09% |
| 17 | | | | | | | | | | | | | 83.96% | 85.49% |
| 18 | | | | | | | | | | | | | 83.08% | 85.08% |
| 19 | | | | | | | | | | | | | 83.53% | 85.88% |
| 20 | | | | | | | | | | | | | 83.53% | 85.88% |
| 21 | | | | | | | | | | | | | 84.84% | 86.35% |
| 22 | | | | | | | | | | | | | 84.84% | 86.35% |
| 23 | | | | | | | | | | | | | 86.06% | 86.89% |
| 24 | | | | | | | | | | | | | 86.06% | 86.89% |
| 25 | | | | | | | | | | | | | 87.85% | 88.54% |
| 26 | | | | | | | | | | | | | 83.33% | 84.38% |

References

1. Marinakis, V.; Doukas, H. An advanced IoT-based system for intelligent energy management in buildings. Sensors 2018, 18, 610.
2. Jia, M.; Komeily, A.; Wang, Y.; Srinivasan, R.S. Adopting Internet of Things for the development of smart buildings: A review of enabling technologies and applications. Autom. Constr. 2019, 101, 111–126.
3. Li, Z.; Hurn, A.; Clements, A. Forecasting quantiles of day-ahead electricity load. Energy Econ. 2017, 67, 60–71.
4. Yildiz, B.; Bilbao, J.I.; Dore, J.; Sproul, A.B. Recent advances in the analysis of residential electricity consumption and applications of smart meter data. Appl. Energy 2017, 208, 402–427.
5. Guelpa, E.; Verda, V. Demand Response and other Demand Side Management techniques for District Heating: A review. Energy 2020, 119440.
6. Lee, S.; Choi, D.H. Reinforcement learning-based energy management of smart home with rooftop solar photovoltaic system, energy storage system, and home appliances. Sensors 2019, 19, 3937.
7. Aste, N.; Adhikari, R.; Buzzetti, M.; Del Pero, C.; Huerto-Cardenas, H.; Leonforte, F.; Miglioli, A. nZEB: Bridging the gap between design forecast and actual performance data. Energy Built Environ. 2020.
8. Seal, S.; Boulet, B.; Dehkordi, V.R. Centralized model predictive control strategy for thermal comfort and residential energy management. Energy 2020, 212, 118456.
9. Buzna, L.; De Falco, P.; Ferruzzi, G.; Khormali, S.; Proto, D.; Refa, N.; Straka, M.; van der Poel, G. An ensemble methodology for hierarchical probabilistic electric vehicle load forecasting at regular charging stations. Appl. Energy 2021, 283, 116337.
10. Abergel, T.; Dulac, J.; Hamilton, I.; Jordan, M.; Pradeep, A. Global Status Report for Buildings and Construction-Towards a Zero-Emissions, Efficient and Resilient Buildings and Construction Sector. 2019. Available online: http://wedocs.unep.org/bitstream/handle/20.500.11822/30950/2019GSR.pdf?sequence=1&isAllowed=y (accessed on 20 April 2020).
11. Hestnes, A.G.; Kofoed, N.U. Effective retrofitting scenarios for energy efficiency and comfort: Results of the design and evaluation activities within the OFFICE project. Build. Environ. 2002, 37, 569–574.
12. Shirazi, A.; Ashuri, B. Embodied Life Cycle Assessment (LCA) comparison of residential building retrofit measures in Atlanta. Build. Environ. 2020, 171, 106644.
13. Zhang, Z.; Chong, A.; Pan, Y.; Zhang, C.; Lam, K.P. Whole building energy model for HVAC optimal control: A practical framework based on deep reinforcement learning. Energy Build. 2019, 199, 472–490.
14. Ramos Ruiz, G.; Lucas Segarra, E.; Fernández Bandera, C. Model predictive control optimization via genetic algorithm using a detailed building energy model. Energies 2019, 12, 34.
15. ASHRAE. Guideline 14-2002, Measurement of Energy and Demand Savings; American Society of Heating, Refrigerating and Air-Conditioning Engineers: Atlanta, GA, USA, 2002.
16. Foucquier, A.; Robert, S.; Suard, F.; Stéphan, L.; Jay, A. State of the art in building modelling and energy performances prediction: A review. Renew. Sustain. Energy Rev. 2013, 23, 272–288.
17. Amasyali, K.; El-Gohary, N.M. A review of data-driven building energy consumption prediction studies. Renew. Sustain. Energy Rev. 2018, 81, 1192–1205.
18. Bourdeau, M.; Zhai, X.; Nefzaoui, E.; Guo, X.; Chatellier, P. Modeling and forecasting building energy consumption: A review of data-driven techniques. Sustain. Cities Soc. 2019, 48, 101533.
19. Sun, Y.; Haghighat, F.; Fung, B.C. A Review of the-State-of-the-Art in Data-driven Approaches for Building Energy Prediction. Energy Build. 2020, 110022.
20. Chou, J.S.; Ngo, N.T. Time series analytics using sliding window metaheuristic optimization-based machine learning system for identifying building energy consumption patterns. Appl. Energy 2016, 177, 751–770.
21. Nepal, B.; Yamaha, M.; Yokoe, A.; Yamaji, T. Electricity load forecasting using clustering and ARIMA model for energy management in buildings. Jpn. Archit. Rev. 2020, 3, 62–76.
22. Moradzadeh, A.; Mansour-Saatloo, A.; Mohammadi-Ivatloo, B.; Anvari-Moghaddam, A. Performance Evaluation of Two Machine Learning Techniques in Heating and Cooling Loads Forecasting of Residential Buildings. Appl. Sci. 2020, 10, 3829.
23. Khoshrou, A.; Pauwels, E.J. Short-term scenario-based probabilistic load forecasting: A data-driven approach. Appl. Energy 2019, 238, 1258–1268.
24. Khan, Z.A.; Hussain, T.; Ullah, A.; Rho, S.; Lee, M.; Baik, S.W. Towards Efficient Electricity Forecasting in Residential and Commercial Buildings: A Novel Hybrid CNN with a LSTM-AE based Framework. Sensors 2020, 20, 1399.
25. Kwak, Y.; Huh, J.H. Development of a method of real-time building energy simulation for efficient predictive control. Energy Convers. Manag. 2016, 113, 220–229.
26. Kwak, Y.; Huh, J.H.; Jang, C. Development of a model predictive control framework through real-time building energy management system data. Appl. Energy 2015, 155, 1–13.
27. Kampelis, N.; Papayiannis, G.I.; Kolokotsa, D.; Galanis, G.N.; Isidori, D.; Cristalli, C.; Yannacopoulos, A.N. An Integrated Energy Simulation Model for Buildings. Energies 2020, 13, 1170.
28. Luo, J.; Hong, T.; Fang, S.C. Benchmarking robustness of load forecasting models under data integrity attacks. Int. J. Forecast. 2018, 34, 89–104.
29. Zhang, Y.; Lin, F.; Wang, K. Robustness of Short-Term Wind Power Forecasting Against False Data Injection Attacks. Energies 2020, 13, 3780.
30. Henze, G. Model predictive control for buildings: A quantum leap? J. Build. Perform. Simul. 2013.
31. Nguyen, A.T.; Reiter, S.; Rigo, P. A review on simulation-based optimization methods applied to building performance analysis. Appl. Energy 2014, 113, 1043–1058.
32. Ghosh, S.; Reece, S.; Rogers, A.; Roberts, S.; Malibari, A.; Jennings, N.R. Modeling the thermal dynamics of buildings: A latent-force-model-based approach. ACM Trans. Intell. Syst. Technol. TIST 2015, 6, 1–27.
33. Gray, F.M.; Schmidt, M. A hybrid approach to thermal building modelling using a combination of Gaussian processes and grey-box models. Energy Build. 2018, 165, 56–63.
34. Mariano-Hernández, D.; Hernández-Callejo, L.; Zorita-Lamadrid, A.; Duque-Pérez, O.; García, F.S. A review of strategies for building energy management system: Model predictive control, demand side management, optimization, and fault detect & diagnosis. J. Build. Eng. 2020, 101692.
35. Petersen, S.; Bundgaard, K.W. The effect of weather forecast uncertainty on a predictive control concept for building systems operation. Appl. Energy 2014, 116, 311–321.
36. Xu, L.; Wang, S.; Tang, R. Probabilistic load forecasting for buildings considering weather forecasting uncertainty and uncertain peak load. Appl. Energy 2019, 237, 180–195.
37. Sandels, C.; Widén, J.; Nordström, L.; Andersson, E. Day-ahead predictions of electricity consumption in a Swedish office building from weather, occupancy, and temporal data. Energy Build. 2015, 108, 279–290.
38. Thieblemont, H.; Haghighat, F.; Ooka, R.; Moreau, A. Predictive control strategies based on weather forecast in buildings with energy storage system: A review of the state-of-the art. Energy Build. 2017, 153, 485–500.
39. Zhao, J.; Duan, Y.; Liu, X. Uncertainty analysis of weather forecast data for cooling load forecasting based on the Monte Carlo method. Energies 2018, 11, 1900.
40. Agüera-Pérez, A.; Palomares-Salas, J.C.; González de la Rosa, J.J.; Florencias-Oliveros, O. Weather forecasts for microgrid energy management: Review, discussion and recommendations. Appl. Energy 2018, 228, 265–278.
41. Wang, Z.; Hong, T.; Piette, M.A. Building thermal load prediction through shallow machine learning and deep learning. Appl. Energy 2020, 263, 114683.
42. Henze, G.P.; Kalz, D.E.; Felsmann, C.; Knabe, G. Impact of forecasting accuracy on predictive optimal control of active and passive building thermal storage inventory. HVAC R Res. 2004, 10, 153–178.
43. Oldewurtel, F.; Parisio, A.; Jones, C.N.; Gyalistras, D.; Gwerder, M.; Stauch, V.; Lehmann, B.; Morari, M. Use of model predictive control and weather forecasts for energy efficient building climate control. Energy Build. 2012, 45, 15–27.
44. Kong, Z.; Xia, Z.; Cui, Y.; Lv, H. Probabilistic forecasting of short-term electric load demand: An integration scheme based on correlation analysis and improved weighted extreme learning machine. Appl. Sci. 2019, 9, 4215.
45. Hong, T.; Fan, S. Probabilistic electric load forecasting: A tutorial review. Int. J. Forecast. 2016, 32, 914–938.
46. Van der Meer, D.W.; Widén, J.; Munkhammar, J. Review on probabilistic forecasting of photovoltaic power production and electricity consumption. Renew. Sustain. Energy Rev. 2018, 81, 1484–1512.
47. Yang, Y.; Li, S.; Li, W.; Qu, M. Power load probability density forecasting using Gaussian process quantile regression. Appl. Energy 2018, 213, 499–509.
48. van der Meer, D.W.; Shepero, M.; Svensson, A.; Widén, J.; Munkhammar, J. Probabilistic forecasting of electricity consumption, photovoltaic power generation and net demand of an individual building using Gaussian Processes. Appl. Energy 2018, 213, 195–207.
49. Shepero, M.; Van Der Meer, D.; Munkhammar, J.; Widén, J. Residential probabilistic load forecasting: A method using Gaussian process designed for electric load data. Appl. Energy 2018, 218, 159–172.
50. Sun, M.; Feng, C.; Zhang, J. Conditional aggregated probabilistic wind power forecasting based on spatio-temporal correlation. Appl. Energy 2019, 256, 113842.
51. Zhang, S.; Wang, Y.; Zhang, Y.; Wang, D.; Zhang, N. Load probability density forecasting by transforming and combining quantile forecasts. Appl. Energy 2020, 277, 115600.
52. Zucchini, W.; Berzel, A.; Nenadic, O. Applied smoothing techniques. Part I Kernel Density Estim. 2003, 15, 1–20.
53. Lucas Segarra, E.; Ramos Ruiz, G.; Fernández Bandera, C. Probabilistic Load Forecasting for Building Energy Models. Sensors 2020, 20, 6525.
54. Gonzales-Fuentes, L.; Barbé, K.; Barford, L.; Lauwers, L.; Philips, L. A qualitative study of probability density visualization techniques in measurements. Measurement 2015, 65, 94–111.
55. Crawley, D.B.; Lawrie, L.K.; Winkelmann, F.C.; Buhl, W.F.; Huang, Y.J.; Pedersen, C.O.; Strand, R.K.; Liesen, R.J.; Fisher, D.E.; Witte, M.J.; et al. EnergyPlus: Creating a new-generation building energy simulation program. Energy Build. 2001, 33, 319–331.
56. Sun, M.; Feng, C.; Chartan, E.K.; Hodge, B.M.; Zhang, J. A two-step short-term probabilistic wind forecasting methodology based on predictive distribution optimization. Appl. Energy 2019, 238, 1497–1505.
57. Liu, N.; Tang, Q.; Zhang, J.; Fan, W.; Liu, J. A hybrid forecasting model with parameter optimization for short-term load forecasting of micro-grids. Appl. Energy 2014, 129, 336–345.
58. Lu, X.; O’Neill, Z.; Li, Y.; Niu, F. A novel simulation-based framework for sensor error impact analysis in smart building systems: A case study for a demand-controlled ventilation system. Appl. Energy 2020, 263, 114638.
59. González, V.G.; Colmenares, L.Á.; Fidalgo, J.F.L.; Ruiz, G.R.; Bandera, C.F. Uncertainy’s Indices Assessment for Calibrated Energy Models. Energies 2019, 12, 2096.
60. Guglielmetti, R.; Macumber, D.; Long, N. OpenStudio: An Open Source Integrated Analysis Platform; Technical Report; National Renewable Energy Laboratory (NREL): Golden, CO, USA, 2011.
61. Ruiz, G.R.; Bandera, C.F.; Temes, T.G.A.; Gutierrez, A.S.O. Genetic algorithm for building envelope calibration. Appl. Energy 2016, 168, 691–705.
62. Ruiz, G.R.; Bandera, C.F. Analysis of uncertainty indices used for building envelope calibration. Appl. Energy 2017, 185, 82–94.
63. Fernández Bandera, C.; Ramos Ruiz, G. Towards a new generation of building envelope calibration. Energies 2017, 10, 2102.
64. Gutiérrez González, V.; Ramos Ruiz, G.; Fernández Bandera, C. Empirical and Comparative Validation for a Building Energy Model Calibration Methodology. Sensors 2020, 20, 5003.
65. Meteoblue. Available online: https://meteoblue.com/ (accessed on 20 April 2020).

| Filter | Criterion | Day Groups | | | |
|---|---|---|---|---|---|
| Filter 0 | Baseline | All | | | |
| Filter 1 | Type of energy demand | HTG | CLG | | |
| Filter 2 | Type of building use | HTG_Work | HTG_Sat | CLG_Work | CLG_Sat |
| Filter 3 | Similar energy demand: quantitative similarity | HTG_Work_±50 | HTG_Sat_±50 | CLG_Work_±50 | CLG_Sat_±50 |
| | | HTG_Work_±40 | HTG_Sat_±40 | CLG_Work_±40 | CLG_Sat_±40 |
| | | HTG_Work_±30 | HTG_Sat_±30 | CLG_Work_±30 | CLG_Sat_±30 |
| | | HTG_Work_±20 | HTG_Sat_±20 | CLG_Work_±20 | CLG_Sat_±20 |
| | | HTG_Work_±10 | HTG_Sat_±10 | CLG_Work_±10 | CLG_Sat_±10 |
| | | HTG_Work_±25% | HTG_Sat_±25% | CLG_Work_±25% | CLG_Sat_±25% |
| | | HTG_Work_±20% | HTG_Sat_±20% | CLG_Work_±20% | CLG_Sat_±20% |
| | | HTG_Work_±15% | HTG_Sat_±15% | CLG_Work_±15% | CLG_Sat_±15% |
| | | HTG_Work_±10% | HTG_Sat_±10% | CLG_Work_±10% | CLG_Sat_±10% |
| | | HTG_Work_±5% | HTG_Sat_±5% | CLG_Work_±5% | CLG_Sat_±5% |
| | Similar energy demand: qualitative similarity | HTG_Work+MAE+R2 | HTG_Sat+MAE+R2 | CLG_Work+MAE+R2 | CLG_Sat+MAE+R2 |
| | Similar energy demand: combination | HTG_Work_±50+MAE+R2 | HTG_Sat_±50+MAE+R2 | CLG_Work_±50+MAE+R2 | CLG_Sat_±50+MAE+R2 |
| | | HTG_Work_±40+MAE+R2 | HTG_Sat_±40+MAE+R2 | CLG_Work_±40+MAE+R2 | CLG_Sat_±40+MAE+R2 |
| | | HTG_Work_±30+MAE+R2 | HTG_Sat_±30+MAE+R2 | CLG_Work_±30+MAE+R2 | CLG_Sat_±30+MAE+R2 |
| | | HTG_Work_±20+MAE+R2 | HTG_Sat_±20+MAE+R2 | CLG_Work_±20+MAE+R2 | CLG_Sat_±20+MAE+R2 |
| | | HTG_Work_±10+MAE+R2 | HTG_Sat_±10+MAE+R2 | CLG_Work_±10+MAE+R2 | CLG_Sat_±10+MAE+R2 |
| | | HTG_Work_±25%+MAE+R2 | HTG_Sat_±25%+MAE+R2 | CLG_Work_±25%+MAE+R2 | CLG_Sat_±25%+MAE+R2 |
| | | HTG_Work_±20%+MAE+R2 | HTG_Sat_±20%+MAE+R2 | CLG_Work_±20%+MAE+R2 | CLG_Sat_±20%+MAE+R2 |
| | | HTG_Work_±15%+MAE+R2 | HTG_Sat_±15%+MAE+R2 | CLG_Work_±15%+MAE+R2 | CLG_Sat_±15%+MAE+R2 |
| | | HTG_Work_±10%+MAE+R2 | HTG_Sat_±10%+MAE+R2 | CLG_Work_±10%+MAE+R2 | CLG_Sat_±10%+MAE+R2 |
| | | HTG_Work_±5%+MAE+R2 | HTG_Sat_±5%+MAE+R2 | CLG_Work_±5%+MAE+R2 | CLG_Sat_±5%+MAE+R2 |
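
The Filter 3 criteria in the table above can be read as filters over candidate training days. The sketch below is a hypothetical illustration, not the authors' implementation. It assumes that each day is represented by 24 hourly demand values in kWh, that candidates already belong to the same Filter 2 group (for example HTG_Work), that the quantitative tolerance applies to the daily total and that percentage tolerances are relative to the day being forecast, and that R² is the squared Pearson correlation. The function names (`select_training_days` and helpers) are illustrative only.

```python
import numpy as np

# Qualitative thresholds stated in the text (MAE at most 5 kWh, R2 at least 75%).
MAE_MAX_KWH = 5.0
R2_MIN = 0.75


def mae(a: np.ndarray, b: np.ndarray) -> float:
    """Mean absolute error between two hourly profiles (kWh)."""
    return float(np.mean(np.abs(a - b)))


def r2(a: np.ndarray, b: np.ndarray) -> float:
    """Squared Pearson correlation (assumed form of the coefficient of determination)."""
    r = np.corrcoef(a, b)[0, 1]
    return float(r ** 2)


def quantitative_ok(target, cand, tol_kwh=None, tol_pct=None) -> bool:
    """Daily energy within an absolute (kWh) or relative (%) tolerance of the target day."""
    diff = abs(float(cand.sum()) - float(target.sum()))
    if tol_kwh is not None:
        return diff <= tol_kwh
    return diff <= tol_pct / 100.0 * float(target.sum())


def qualitative_ok(target, cand) -> bool:
    """Similar curve shape: MAE not above the maximum and R2 not below the minimum."""
    return mae(target, cand) <= MAE_MAX_KWH and r2(target, cand) >= R2_MIN


def select_training_days(target, candidates, tol_kwh=None, tol_pct=None, shape=False):
    """Return the labels of candidate days that satisfy the requested criterion.

    tol_kwh or tol_pct -> quantitative similarity (e.g. HTG_Work_±20, HTG_Work_±10%)
    shape=True         -> qualitative similarity (+MAE+R2)
    both               -> combination (e.g. HTG_Work_±20+MAE+R2)
    """
    selected = []
    for day, cand in candidates.items():
        ok = True
        if tol_kwh is not None or tol_pct is not None:
            ok = quantitative_ok(target, cand, tol_kwh, tol_pct)
        if ok and shape:
            ok = qualitative_ok(target, cand)
        if ok:
            selected.append(day)
    return selected


# Hypothetical profiles: the day being forecast and three candidate days.
target = np.linspace(2.0, 10.0, 24)          # 144 kWh/day
candidates = {
    "d1": target + 0.5,                      # +12 kWh/day, same shape
    "d2": target * 1.3,                      # +43.2 kWh/day, same shape
    "d3": np.roll(target, 12),               # same energy, shifted shape
}
print(select_training_days(target, candidates, tol_kwh=10))              # ['d3']
print(select_training_days(target, candidates, shape=True))              # ['d1', 'd2']
print(select_training_days(target, candidates, tol_kwh=20, shape=True))  # ['d1']
```

The example shows why the two kinds of similarity are complementary: the shifted day `d3` passes the energy tolerance but fails the shape test, while the scaled day `d2` has the right shape but too much energy.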

| # | Filter | No. of Days Meeting Filter | Training Days PLF | Mean PICP | Mean MPIW |
|---|---|---|---|---|---|
| 0 | All | 66 | 205 | 55.9% | 1.94 |
| 1 | HTG | 66 | 91 | 79.1% | 2.93 |
| 2 | HTG_Work | 66 | 65 | 83.0% | 3.40 |
| 3 | HTG_Work_±50 | 66 | 2–25 | 80.7% | 3.44 |
| | HTG_Work_±40 | 66 | 2–21 | 80.0% | 3.47 |
| | HTG_Work_±30 | 65 | 2–15 | 79.6% | 3.51 |
| | HTG_Work_±20 | 60 | 1–11 | 76.3% | 3.26 |
| | HTG_Work_±10 | 52 | 1–8 | 71.0% | 2.75 |
| | HTG_Work_±25% | 56 | 1–11 | 75.4% | 3.25 |
| | HTG_Work_±20% | 58 | 1–12 | 76.7% | 3.35 |
| | HTG_Work_±15% | 58 | 1–15 | 79.9% | 3.36 |
| | HTG_Work_±10% | 62 | 1–21 | 79.9% | 3.42 |
| | HTG_Work_±5% | 64 | 1–36 | 82.3% | 3.34 |
| | HTG_Work+MAE+R2 | 56 | 1–31 | 78.6% | 3.28 |
| | HTG_Work_±50+MAE+R2 | 51 | 1–17 | 75.0% | 2.88 |
| | HTG_Work_±40+MAE+R2 | 48 | 1–15 | 75.7% | 2.90 |
| | HTG_Work_±30+MAE+R2 | 45 | 1–11 | 77.7% | 3.02 |
| | HTG_Work_±20+MAE+R2 | 40 | 1–9 | 71.3% | 2.71 |
| | HTG_Work_±10+MAE+R2 | 28 | 1–5 | 65.5% | 2.39 |
| | HTG_Work_±25%+MAE+R2 | 36 | 1–8 | 67.1% | 2.83 |
| | HTG_Work_±20%+MAE+R2 | 38 | 1–9 | 73.4% | 2.99 |
| | HTG_Work_±15%+MAE+R2 | 42 | 1–11 | 78.7% | 2.96 |
| | HTG_Work_±10%+MAE+R2 | 43 | 1–15 | 77.7% | 3.02 |
| | HTG_Work_±5%+MAE+R2 | 51 | 1–26 | 78.2% | 3.04 |
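
The two metrics in the table are the prediction interval coverage probability (PICP), the share of observations that fall inside their prediction interval, and the mean prediction interval width (MPIW). The sketch below uses the common unnormalized definitions and assumes one interval per hourly observation; this excerpt does not state whether MPIW is normalized, so treat that as an assumption. With 24 hourly intervals per day, a daily PICP moves in steps of 1/24, which is consistent with the validation results (for example, 87.5% = 21/24 and 54.2% ≈ 13/24).

```python
import numpy as np


def picp(y, lower, upper) -> float:
    """Fraction of observations y lying inside [lower, upper] (bounds inclusive)."""
    y, lower, upper = (np.asarray(v, dtype=float) for v in (y, lower, upper))
    inside = (y >= lower) & (y <= upper)
    return float(inside.mean())


def mpiw(lower, upper) -> float:
    """Mean width of the prediction intervals, in the units of the forecast variable."""
    return float(np.mean(np.asarray(upper, dtype=float) - np.asarray(lower, dtype=float)))


# Hypothetical day: 24 hourly intervals of width 3, with 21 of 24 observations covered.
y = np.full(24, 5.0)
lower = np.full(24, 4.0)
upper = np.full(24, 7.0)
y[:3] = 8.0                     # three hours fall above the upper bound
print(picp(y, lower, upper))    # 0.875
print(mpiw(lower, upper))       # 3.0
```

PICP and MPIW pull in opposite directions (wider intervals raise coverage), which is why the tables report both.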

| Filter | Minimum Training Days | Mean PICP Filter 2 (HTG_Work) | Mean PICP Filter 3 |
|---|---|---|---|
| HTG_Work_±50 | 7 | 82.2% | 82.4% |
| HTG_Work_±40 | 5 | 81.5% | 81.8% |
| HTG_Work_±30 | 8 | 82.3% | 82.7% |
| HTG_Work_±20 | - | - | - |
| HTG_Work_±10 | - | - | - |
| HTG_Work_±25% | - | - | - |
| HTG_Work_±20% | 9 | 84.4% | 85.6% |
| HTG_Work_±15% | 4 | 81.4% | 82.5% |
| HTG_Work_±10% | 7 | 81.4% | 81.5% |
| HTG_Work_±5% | 3 | 82.1% | 83.4% |
| HTG_Work+MAE+R2 | 4 | 82.7% | 83.0% |
| HTG_Work_±50+MAE+R2 | 15 | 82.4% | 82.8% |
| HTG_Work_±40+MAE+R2 | - | - | - |
| HTG_Work_±30+MAE+R2 | 9 | 81.9% | 83.3% |
| HTG_Work_±20+MAE+R2 | 7 | 75.8% | 76.2% |
| HTG_Work_±10+MAE+R2 | - | - | - |
| HTG_Work_±25%+MAE+R2 | - | - | - |
| HTG_Work_±20%+MAE+R2 | 8 | 82.6% | 83.8% |
| HTG_Work_±15%+MAE+R2 | 6 | 82.6% | 82.8% |
| HTG_Work_±10%+MAE+R2 | 14 | 93.1% | 94.4% |
| HTG_Work_±5%+MAE+R2 | 3 | 81.2% | 81.9% |
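
The procedure of Section 2.2.3 is not reproduced in this text, but every entry of the table above is consistent with one simple rule applied to the Appendix A results: the minimum number of training days is the smallest threshold at which the mean PICP of the Filter 3 criterion strictly exceeds that of Filter 2 (HTG_Work) on the same days, and "-" means that no threshold satisfies it. Ties do not count: HTG_Work_±10 reaches 83.33% under both filters at 8 days and is still marked "-". The sketch below encodes this inferred rule; it is an interpretation of the tables, not the authors' stated procedure, and `min_training_days` is a hypothetical name.

```python
from typing import Optional, Sequence, Tuple


def min_training_days(rows: Sequence[Tuple[int, float, float]]) -> Optional[int]:
    """rows: (threshold, mean PICP Filter 2, mean PICP Filter 3), sorted by threshold.

    Returns the first threshold at which Filter 3 strictly beats Filter 2,
    or None (shown as "-" in the table) if no threshold does.
    """
    for threshold, picp_f2, picp_f3 in rows:
        if picp_f3 > picp_f2:
            return threshold
    return None


# Appendix A values for HTG_Work_±50, thresholds 1-8 (mean PICP, %).
rows_50 = [(1, 82.97, 80.69), (2, 82.97, 80.69), (3, 82.57, 81.85), (4, 82.42, 81.90),
           (5, 82.14, 82.02), (6, 81.98, 81.79), (7, 82.22, 82.37), (8, 81.91, 82.30)]
# Appendix A values for HTG_Work_±10, thresholds 1-8 (the last threshold with data).
rows_10 = [(1, 83.37, 70.99), (2, 83.37, 71.60), (3, 82.84, 76.08), (4, 82.98, 77.53),
           (5, 84.72, 82.99), (6, 85.83, 85.42), (7, 77.78, 75.00), (8, 83.33, 83.33)]

print(min_training_days(rows_50))  # 7
print(min_training_days(rows_10))  # None
```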

| Order | Filter 3 | Mean PICP | Min Training Days |
|---|---|---|---|
| 1 | HTG_Work_±10%+MAE+R2 | 94.4% | 14 |
| 2 | HTG_Work_±20% | 85.6% | 9 |
| 3 | HTG_Work_±20%+MAE+R2 | 83.8% | 8 |
| 4 | HTG_Work_±5% | 83.4% | 3 |
| 5 | HTG_Work_±30+MAE+R2 | 83.3% | 9 |
| 6 | HTG_Work+MAE+R2 | 82.8% | 4 |
| 7 | HTG_Work_±15%+MAE+R2 | 82.8% | 6 |
| 8 | HTG_Work_±50+MAE+R2 | 82.8% | 15 |
| 9 | HTG_Work_±30 | 82.7% | 8 |
| 10 | HTG_Work_±15% | 82.5% | 4 |
| 11 | HTG_Work_±50 | 82.4% | 7 |
| 12 | HTG_Work_±5%+MAE+R2 | 81.9% | 3 |
| 13 | HTG_Work_±40 | 81.8% | 5 |
| 14 | HTG_Work_±10% | 81.5% | 7 |
| 15 | HTG_Work_±20+MAE+R2 | 76.2% | 7 |
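
The ranking above sorts the criteria that have a minimum number of training days by their Filter 3 mean PICP, highest first. Where criteria tie at 82.8%, the table lists the one needing fewer training days first, so the sketch below assumes that tie-break. It takes its values from the minimum-training-days table, which gives 83.0% for HTG_Work+MAE+R2 (the ranking shows 82.8%); under this tie-break, either value produces the same order.

```python
# (criterion, minimum training days, Filter 3 mean PICP in %), from the
# minimum-training-days table; criteria marked "-" there are excluded.
criteria = [
    ("HTG_Work_±50", 7, 82.4), ("HTG_Work_±40", 5, 81.8), ("HTG_Work_±30", 8, 82.7),
    ("HTG_Work_±20%", 9, 85.6), ("HTG_Work_±15%", 4, 82.5), ("HTG_Work_±10%", 7, 81.5),
    ("HTG_Work_±5%", 3, 83.4), ("HTG_Work+MAE+R2", 4, 83.0),
    ("HTG_Work_±50+MAE+R2", 15, 82.8), ("HTG_Work_±30+MAE+R2", 9, 83.3),
    ("HTG_Work_±20+MAE+R2", 7, 76.2), ("HTG_Work_±20%+MAE+R2", 8, 83.8),
    ("HTG_Work_±15%+MAE+R2", 6, 82.8), ("HTG_Work_±10%+MAE+R2", 14, 94.4),
    ("HTG_Work_±5%+MAE+R2", 3, 81.9),
]

# Highest mean PICP first; assumed tie-break: fewer minimum training days first.
ranking = sorted(criteria, key=lambda c: (-c[2], c[1]))
for order, (name, days, picp) in enumerate(ranking, start=1):
    print(order, name, f"{picp}%", days)
```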

| Order | Filter 3 | Min Training Days | Training Days 31 January 2020 | Meets Min Training Days? |
|---|---|---|---|---|
| 1 | HTG_Work_±10%+MAE+R2 | 14 | 1 | No |
| 2 | HTG_Work_±20% | 9 | 2 | No |
| 3 | HTG_Work_±20%+MAE+R2 | 8 | 1 | No |
| 4 | HTG_Work_±5% | 3 | 13 | Yes |
| 5 | HTG_Work_±30+MAE+R2 | 9 | 3 | No |
| 6 | HTG_Work+MAE+R2 | 4 | 18 | Yes |
| 7 | HTG_Work_±15%+MAE+R2 | 6 | 2 | No |
| 8 | HTG_Work_±50+MAE+R2 | 15 | 5 | No |
| 9 | HTG_Work_±30 | 8 | 7 | No |
| 10 | HTG_Work_±15% | 4 | 3 | No |
| 11 | HTG_Work_±50 | 7 | 11 | Yes |
| 12 | HTG_Work_±5%+MAE+R2 | 3 | 6 | Yes |
| 13 | HTG_Work_±40 | 5 | 8 | Yes |
| 14 | HTG_Work_±10% | 7 | 5 | No |
| 15 | HTG_Work_±20+MAE+R2 | 7 | 2 | No |
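
The table illustrates how a criterion is chosen for a given forecast day: the ranking is scanned from the top, and the first criterion whose available training days reach its minimum is selected. For 31 January 2020 that is HTG_Work_±5% (order 4), which is also the filter reported for that date in the validation results. A minimal sketch of this scan, using the values in the table; `select_criterion` is a hypothetical name, and because the behavior when no criterion qualifies is not described here, the sketch simply returns `None` in that case.

```python
from typing import Dict, List, Optional, Tuple

# (criterion, minimum training days) in ranking order, from the table above.
RANKING: List[Tuple[str, int]] = [
    ("HTG_Work_±10%+MAE+R2", 14), ("HTG_Work_±20%", 9), ("HTG_Work_±20%+MAE+R2", 8),
    ("HTG_Work_±5%", 3), ("HTG_Work_±30+MAE+R2", 9), ("HTG_Work+MAE+R2", 4),
    ("HTG_Work_±15%+MAE+R2", 6), ("HTG_Work_±50+MAE+R2", 15), ("HTG_Work_±30", 8),
    ("HTG_Work_±15%", 4), ("HTG_Work_±50", 7), ("HTG_Work_±5%+MAE+R2", 3),
    ("HTG_Work_±40", 5), ("HTG_Work_±10%", 7), ("HTG_Work_±20+MAE+R2", 7),
]


def select_criterion(available: Dict[str, int]) -> Optional[str]:
    """Return the highest-ranked criterion whose available training days meet its minimum."""
    for name, min_days in RANKING:
        if available.get(name, 0) >= min_days:
            return name
    return None


# Training days available on 31 January 2020 under each criterion (from the table above).
jan31 = {
    "HTG_Work_±10%+MAE+R2": 1, "HTG_Work_±20%": 2, "HTG_Work_±20%+MAE+R2": 1,
    "HTG_Work_±5%": 13, "HTG_Work_±30+MAE+R2": 3, "HTG_Work+MAE+R2": 18,
    "HTG_Work_±15%+MAE+R2": 2, "HTG_Work_±50+MAE+R2": 5, "HTG_Work_±30": 7,
    "HTG_Work_±15%": 3, "HTG_Work_±50": 11, "HTG_Work_±5%+MAE+R2": 6,
    "HTG_Work_±40": 8, "HTG_Work_±10%": 5, "HTG_Work_±20+MAE+R2": 2,
}
print(select_criterion(jan31))  # HTG_Work_±5%
```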

| Date | Filter 0 (All) PICP | Filter 0 (All) MPIW | Filter 1 (HTG) PICP | Filter 1 (HTG) MPIW | Filter 2 (HTG_Work) PICP | Filter 2 (HTG_Work) MPIW | Filter 3 PICP | Filter 3 MPIW | Filter 3 Criterion Selected |
|---|---|---|---|---|---|---|---|---|---|
| 10/01/2020 | 54.2% | 1.94 | 87.5% | 2.93 | 91.7% | 3.42 | 91.7% | 4.15 | HTG_Work_±20% |
| 14/01/2020 | 50.0% | 1.94 | 75.0% | 2.93 | 83.3% | 3.39 | 95.8% | 3.96 | HTG_Work_±5% |
| 15/01/2020 | 58.3% | 1.95 | 87.5% | 2.92 | 87.5% | 3.41 | 83.3% | 3.59 | HTG_Work_±20% |
| 16/01/2020 | 29.2% | 1.94 | 66.7% | 2.92 | 70.8% | 3.39 | 70.8% | 3.43 | HTG_Work_±5% |
| 17/01/2020 | 58.3% | 1.95 | 87.5% | 2.93 | 91.7% | 3.40 | 95.8% | 3.44 | HTG_Work_±5% |
| 20/01/2020 | 62.5% | 1.94 | 70.8% | 2.91 | 75.0% | 3.36 | 75.0% | 3.81 | HTG_Work_±5% |
| 21/01/2020 | 41.7% | 1.93 | 62.5% | 2.90 | 66.7% | 3.35 | 75.0% | 3.94 | HTG_Work_±5% |
| 22/01/2020 | 54.2% | 1.93 | 66.7% | 2.91 | 66.7% | 3.40 | 66.7% | 3.68 | HTG_Work_±5% |
| 23/01/2020 | 50.0% | 1.94 | 66.7% | 2.92 | 70.8% | 3.38 | 83.3% | 3.61 | HTG_Work_±20% |
| 24/01/2020 | 54.2% | 1.94 | 87.5% | 2.93 | 87.5% | 3.41 | 91.7% | 3.53 | HTG_Work_±5% |
| 27/01/2020 | 79.2% | 1.95 | 95.8% | 2.94 | 95.8% | 3.43 | 95.8% | 3.98 | HTG_Work_±5% |
| 28/01/2020 | 54.2% | 1.94 | 58.3% | 2.92 | 62.5% | 3.40 | 66.7% | 3.45 | HTG_Work_±5% |
| 29/01/2020 | 45.8% | 1.93 | 66.7% | 2.93 | 75.0% | 3.40 | 75.0% | 3.41 | HTG_Work_±5% |
| 30/01/2020 | 25.0% | 1.93 | 70.8% | 2.92 | 75.0% | 3.37 | 79.2% | 3.48 | HTG_Work_±5% |
| 31/01/2020 | 12.5% | 1.93 | 70.8% | 2.90 | 79.2% | 3.39 | 87.5% | 3.23 | HTG_Work_±5% |
| Mean | 48.6% | 1.94 | 74.7% | 2.92 | 78.6% | 3.39 | 82.2% | 3.65 | |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Lucas Segarra, E.; Ramos Ruiz, G.; Fernández Bandera, C. Probabilistic Load Forecasting Optimization for Building Energy Models via Day Characterization. Sensors 2021, 21, 3299. https://doi.org/10.3390/s21093299

Lucas Segarra E, Ramos Ruiz G, Fernández Bandera C. Probabilistic Load Forecasting Optimization for Building Energy Models via Day Characterization. Sensors. 2021; 21(9):3299. https://doi.org/10.3390/s21093299

Chicago/Turabian Style: Lucas Segarra, Eva, Germán Ramos Ruiz, and Carlos Fernández Bandera. 2021. "Probabilistic Load Forecasting Optimization for Building Energy Models via Day Characterization" Sensors 21, no. 9: 3299. https://doi.org/10.3390/s21093299

APA Style: Lucas Segarra, E., Ramos Ruiz, G., & Fernández Bandera, C. (2021). Probabilistic Load Forecasting Optimization for Building Energy Models via Day Characterization. Sensors, 21(9), 3299. https://doi.org/10.3390/s21093299