1. Introduction

The method in which visual information in a feedback control loop is used to precisely control the motion, position, and posture of a robot is called visual servoing. Visual servoing is well-known and commonly used in both dynamic and unstructured environments. Visual servoing tasks involving the use of a robot to catch a flying ball present a challenge.

In robotic ball-catching, there are several variations in the system configurations, methods of implementation, trajectory prediction algorithms, and control laws. In [1], a stereo camera system (two PAL cameras) was placed above the work area to catch a flying ball. In [2], two cameras were placed at the top and left of the work area for ping-pong ball catching and ball juggling. In [3], the cameras were located behind the robot for catching in-flight objects with uneven shapes. In [4], a high-speed vision system with two cameras was used for a ball juggling system.

In the above research, fixed cameras were used to predict the trajectory of the target object in the work area. The advantage is that the background image was fixed, and the image processing was relatively simple; however, it is difficult to achieve the desired results in an open space due to the field of view (FoV) constraints associated with the camera system and the limited workspace of a robotic arm.

In [5], an autonomous wheelchair mounted with a robotic arm used two vision sensors to accurately determine the location of the target object and to pick up the object. In [6], a robotic system endowed with only a single camera mounted in an eye-in-hand configuration was used for the ball-catching task. In [7], a robot with an eye-in-hand monocular camera was used for autonomous object grasping. The methods of [5,6,7] can resolve the stated problem. The eye-in-hand concept enables the camera to maneuver along with the robotic arm, which improves the trajectory prediction precision in an open space, especially when the object is near the robot. Another way to catch a ball in a wide-open space is to combine a static stereo vision system with a mobile robot, as described below.

In [8], the ball was tracked using two ceiling-mounted cameras and a camera mounted on the base of the robot. Each camera performed ball detection independently. The 3D coordinates of the ball were then triangulated from the 2D image locations. In [9], a robotic system consisted of a high-speed hand-arm, a high-speed vision system, and a real-time control system. A method was proposed to estimate the 3D position and orientation by fusing the information observed by a vision system and the contact information observed by tactile sensors.

In [10], the robot maintained a camera fixation that was centered on the image of the ball and kept the tangent of the camera angle rising at a constant rate. The performance advantage was principally due to the higher gain and effectively wider viewing angle when the camera remained centered on the ball image. In [11], a movable humanoid robot with an active stereo vision camera was designed and implemented for a ball-catching task. Two flying balls were caught by mobile humanoid robots at the same time in a wide space.

The prediction of the trajectory is an important factor for the effective capture of a flying ball. Most methods assumed that a free-flying object’s dynamic model was known. A parabola in 3D space was used to model the trajectory of a flying ball, and the least-squares method was used to estimate the model parameters.

In [10], the flying ball trajectory was predicted using a non-linear dynamic model that included air resistance with different parameter estimation algorithms. In [6], an estimate of the catching point was initially provided through a linear algorithm. Then, additional visual measurements were acquired to constantly refine the current estimate by exploiting a nonlinear optimization algorithm and a more accurate ballistic model with the influence of air resistance, visual measurement errors, and rotation caused by ground friction. One of the typical uses of the Kalman filter is in navigation and positioning technology.

In [12], an automated guided vehicle was combined with a Kalman filter with deep learning. The system was found to have good adaptability to the statistical properties of the noise system, which improved the positioning accuracy and prevented filter divergence. In our previous work [13], we provided a brief overview of an effective ball-catching system with an omni-directional wheeled mobile robot. In this paper, we extend the ball trajectory estimation method to improve the system’s ball-catching performance. Furthermore, the experiments and application are described in detail.

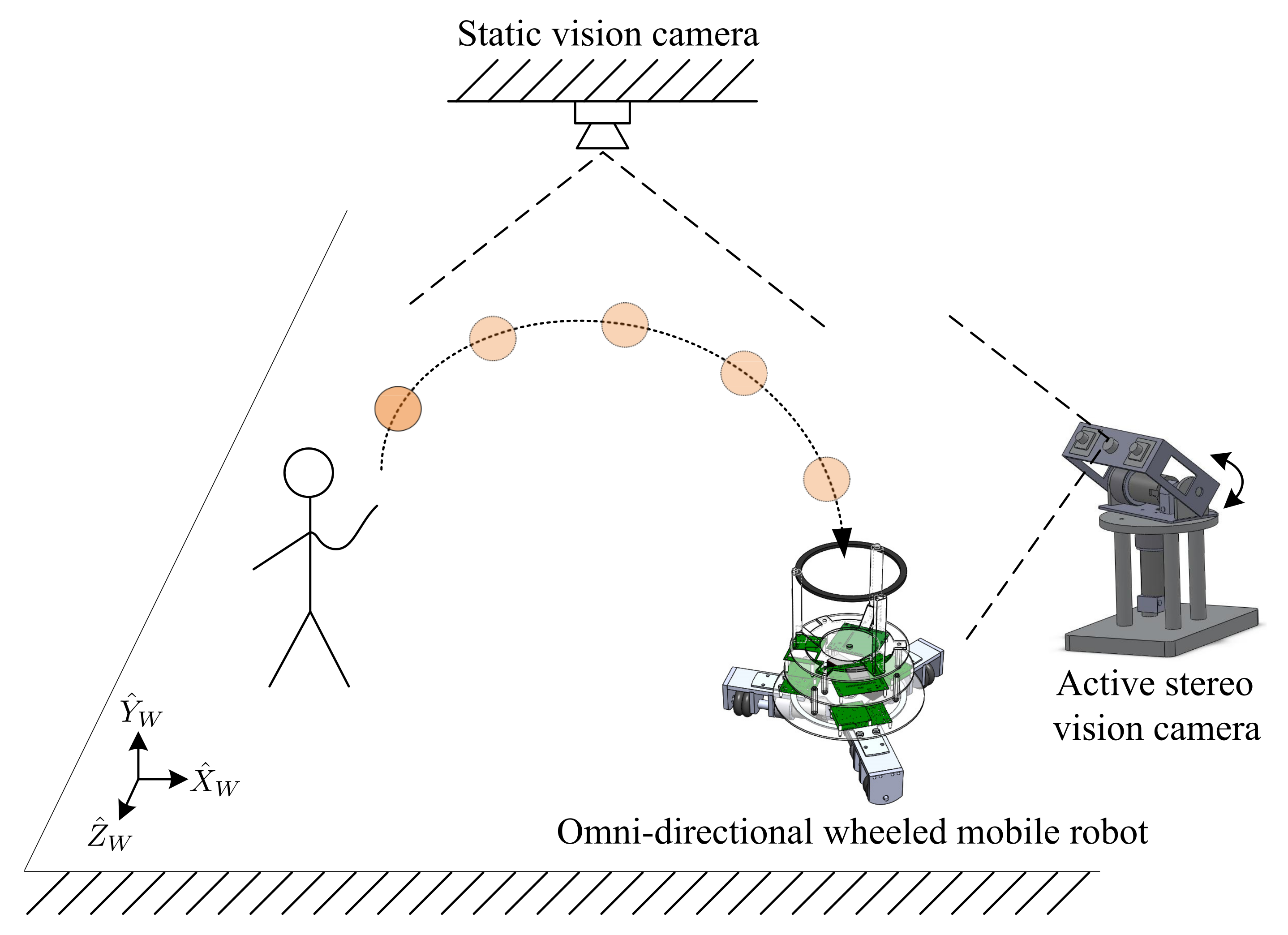

In this research, we developed a system that combines an omni-directional wheeled mobile robot with a multi-camera vision system to catch a flying ball in a large workspace. Figure 1 provides a schematic diagram of the proposed system.

To maneuver the robot, an omni-directional wheeled platform was selected for its superior mobility: it can perform translational movements, rotational movements, or any combination of the two. An active stereo vision camera with two pan-and-tilt cameras performed the visual tracking of the flying ball. A static vision camera was used to navigate the mobile robot to the ball’s touchdown point. Real-time execution of the image processing algorithms and control laws is necessary to accomplish these visual servoing tasks.

Therefore, digital signal processors (DSPs) were used to ensure real-time performance. Noise from the environment or from other sources, such as the vision-system measurements of the ball, can deteriorate the performance of the proposed visual servoing system. In this work, the position and velocity of the ball were estimated using a Kalman filter and a linear dynamic model of a flying ball. The use of deep learning in Kalman filtering improved both the accuracy and robustness of the results. In this paper, the experimental setup is presented, and the results of the simulation and experiments are provided to demonstrate the performance of the proposed system.

The remainder of this paper is organized as follows: In Section 2, the relationship between the coordinates used in this work is described (specifically, the world and image coordinates), and the image processing algorithms are introduced. Section 3 describes the active stereo vision camera. Section 4 describes the prediction and trajectory estimation method for a flying ball. Section 5 discusses the control law of the omni-directional wheeled mobile robot. Section 6 presents the implementation of the designed system. Section 7 presents the results of the simulation and the experiments. Finally, Section 8 provides our concluding remarks.

2. Image Processing and Visual Measurement

Vision systems are used to obtain the location of the ball and robot in a three-dimensional (3D) Cartesian coordinate system. The position determination of an object from an image is based on a pinhole camera model of the vision system [14,15]. The position of an object can be given in the vector form as

$$s\,\tilde{m} = K\,[\,R \;\; T\,]\,\tilde{M}, \tag{1}$$

where vector $\tilde{m} = [u, v, 1]^{\top}$ represents the 2D homogeneous coordinates of an image point in the image coordinate system. The vector $\tilde{M} = [X, Y, Z, 1]^{\top}$ represents the 3D homogeneous coordinates of a target object point in the world coordinate system. The 3 by 3 matrix $R$ and 3D vector $T$ are external camera parameters that define the rotation and translation between the world frame and camera frame, respectively. $s$ is a scaling factor, and $K$ is the internal parameter matrix of the camera. It is given by

$$K = \begin{bmatrix} \alpha & \gamma & u_0 \\ 0 & \beta & v_0 \\ 0 & 0 & 1 \end{bmatrix}. \tag{2}$$

In the matrix $K$, $(u_0, v_0)$ is the principal point in the image coordinate system in pixels; $\alpha$ and $\beta$ are the size of the unit lengths in the horizontal and vertical pixels, respectively, and $\gamma$ is the skewness factor of the pixels. The camera calibration procedure in [14] is used to calibrate the internal camera parameters beforehand.
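As a minimal sketch of the pinhole projection described above, the following Python snippet maps a 3D world point to pixel coordinates using an internal parameter matrix, a rotation, and a translation. The function name `project_point` and all numeric values (focal lengths, principal point, test point) are illustrative assumptions, not the calibration results of this work.

```python
import numpy as np

def project_point(K, R, T, point_world):
    """Project a 3D world point to pixel coordinates with a pinhole model."""
    point_world = np.asarray(point_world, dtype=float)
    point_cam = R @ point_world + T      # world frame -> camera frame
    if point_cam[2] <= 0:
        raise ValueError("point is behind the camera")
    uvw = K @ point_cam                  # homogeneous pixel coordinates
    return uvw[:2] / uvw[2]              # divide by the scaling factor

# Assumed intrinsics: 800-pixel unit lengths, zero skew,
# principal point at the center of a 640 x 480 image.
K = np.array([[800.0, 0.0, 320.0],
              [0.0, 800.0, 240.0],
              [0.0, 0.0, 1.0]])
R = np.eye(3)      # camera axes aligned with the world axes (assumption)
T = np.zeros(3)    # camera placed at the world origin (assumption)

pixel = project_point(K, R, T, [0.1, -0.05, 2.0])
print(pixel)
```

With a calibrated camera, the same function would be called with the calibrated matrix and the measured extrinsic parameters in place of the placeholder values.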

In the image plane, to obtain the precise position of a target object, a series of image processing algorithms is used to process the images captured from the camera. The source image with a complex background acquired from the camera is shown in Figure 2a. In this study, the template matching approach [16] is used to find the region of the whole image that matches a template object image. The template matching process compares each sub-image of the source image with the template object image, from left to right and from top to bottom, to obtain the correlation between the two images.

Additionally, to detect the target object in three-dimensional space, the template object image is resized during the comparison process. Figure 2b shows the normalized cross-correlation image, in which the brightest point represents the highest similarity between the two images.
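The sliding comparison can be sketched in plain NumPy as follows. This is only an illustration under stated assumptions: it uses the zero-mean form of normalized cross-correlation at a single template scale, and the names `ncc_map` and `best_match`, the synthetic image, and the blob-shaped template are all hypothetical. Handling the resized templates mentioned above would amount to repeating the search for each template size and keeping the best score.

```python
import numpy as np

def ncc_map(image, template):
    """Zero-mean normalized cross-correlation at every template offset."""
    image = np.asarray(image, dtype=float)
    template = np.asarray(template, dtype=float)
    th, tw = template.shape
    ih, iw = image.shape
    t_zero = template - template.mean()
    t_norm = np.sqrt((t_zero ** 2).sum())
    scores = np.zeros((ih - th + 1, iw - tw + 1))
    for r in range(scores.shape[0]):          # top to bottom
        for c in range(scores.shape[1]):      # left to right
            patch = image[r:r + th, c:c + tw]
            p_zero = patch - patch.mean()
            denom = t_norm * np.sqrt((p_zero ** 2).sum())
            scores[r, c] = (t_zero * p_zero).sum() / denom if denom > 0 else 0.0
    return scores

def best_match(image, template):
    """Return (row, col, score) of the brightest point in the NCC map."""
    scores = ncc_map(image, template)
    r, c = np.unravel_index(np.argmax(scores), scores.shape)
    return int(r), int(c), float(scores[r, c])

# Synthetic test scene: random background with a blob-like template pasted in.
rng = np.random.default_rng(0)
image = rng.random((20, 24))
template = np.array([[0.0, 1.0, 2.0, 1.0, 0.0],
                     [1.0, 3.0, 5.0, 3.0, 1.0],
                     [1.0, 3.0, 5.0, 3.0, 1.0],
                     [0.0, 1.0, 2.0, 1.0, 0.0]])
image[5:9, 7:12] = template
row, col, score = best_match(image, template)
print(row, col, score)
```

The double loop is written for clarity rather than speed; a real-time implementation would need a much faster formulation.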

The simulation result of the template matching method was compared to the one obtained by the color matching method. The result of ball detection using the template matching method is shown in Figure 3a, and the result based on the color matching method is shown in Figure 3b. In a complex background, comparing the object extraction step in Figure 3a with the thresholding process in Figure 3b shows that ball detection by template matching is not disturbed by other objects, which makes it suitable for the environment used in this study.

The location of the object in the processed image was taken as the centroid of the object:

$$x_c = \frac{1}{N}\sum_{i=1}^{N} x_i, \qquad y_c = \frac{1}{N}\sum_{i=1}^{N} y_i, \tag{3}$$

where $(x_c, y_c)$ are the center coordinates of the object, $(x_i, y_i)$ are the coordinates of the $i$-th white pixel, and $N$ is the number of white pixels. The actual centroid of the ball in Figure 3 obtained by the manual image segmentation is

. The centroid of the ball obtained from the template matching method is

, and the centroid of the ball obtained from the color matching method is

. The template matching method provided a more accurate result.
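The centroid computation over the white pixels of a segmented image can be sketched as below. The function name `centroid_of_mask` and the small test mask are illustrative assumptions; the mask is not taken from the figures of this work.

```python
import numpy as np

def centroid_of_mask(mask):
    """Return the (x, y) centroid of the nonzero (white) pixels, or None."""
    ys, xs = np.nonzero(mask)        # row indices are y, column indices are x
    n = xs.size                      # number of white pixels
    if n == 0:
        return None                  # no object found in the image
    return float(xs.sum() / n), float(ys.sum() / n)

# Hypothetical binary image with a 3 x 3 white block.
mask = np.zeros((5, 6), dtype=bool)
mask[1:4, 2:5] = True
center = centroid_of_mask(mask)
print(center)
```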

3. Active Stereo Vision

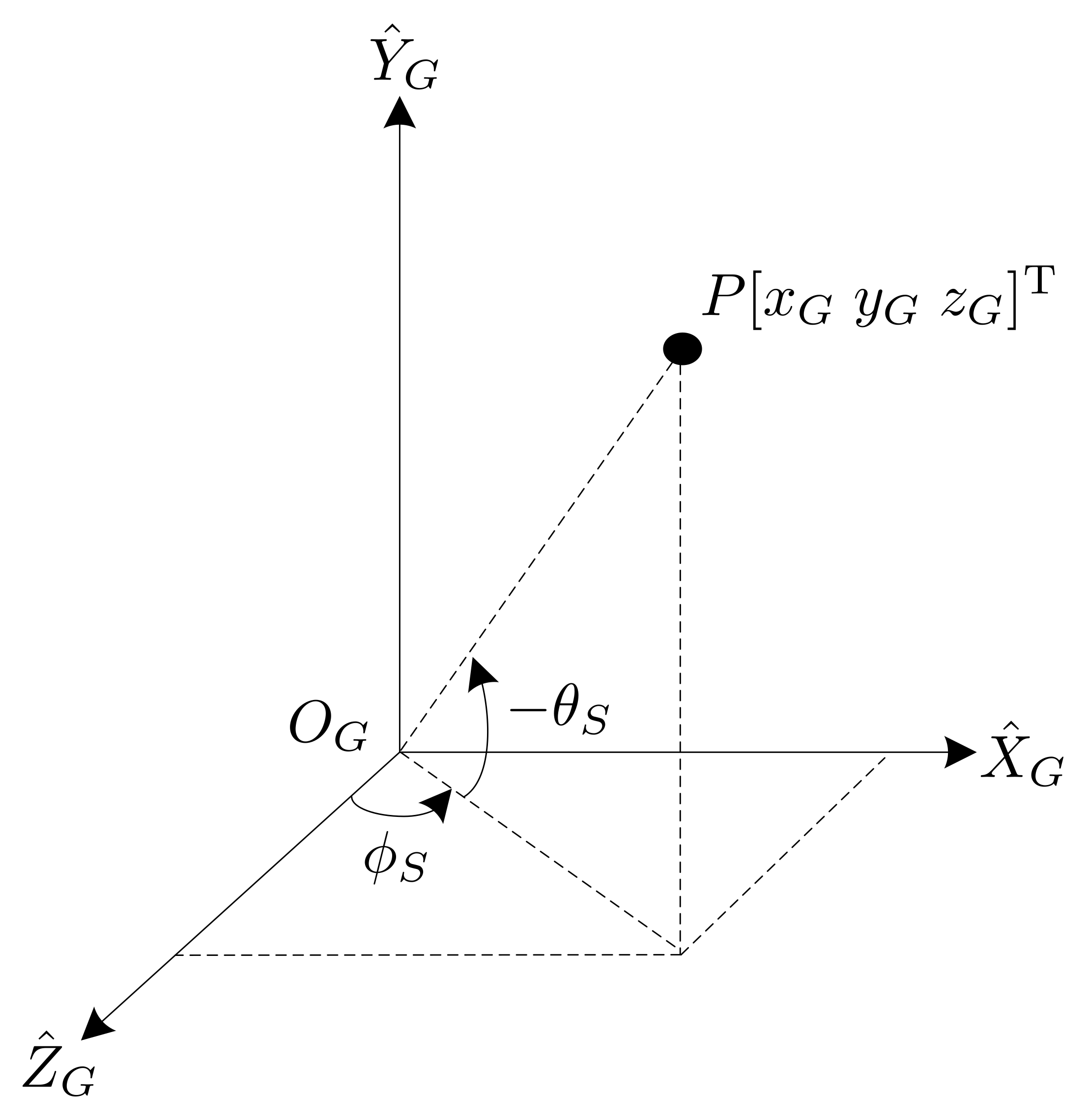

In this study, an active stereo vision camera was used to locate and track the flying ball. The active stereo vision camera included two cameras mounted parallel to each other on a pan-and-tilt platform. The coordinate systems of this active vision camera are described in Figure 4, for which the parameters are listed below.

The pan angle

rotates along the

axis, and the tilt angle

rotates along the

axis. The position vector of the target object

P relative to camera A is denoted as

. In this study, we assumed that cameras A and B were identical. Based on (1), after obtaining the image coordinates of the object in camera A and camera B, the position of the target object in the coordinate frame of camera A can be written as:

where

and

are the coordinates of the target object obtained from cameras A and B, respectively. According to Figure 4, the relationship between the stereo coordinate frame and the camera A coordinate frame can be given by:

where

is the position vector of target object

P relative to the stereo coordinate frame. The position vector of target object

P converts to the geodetic frame using a homogeneous transformation matrix

, which is given by:

where

is the position of target object

P relative to the geodetic coordinate system and

Using Equations (4)–(6), the pixel position of the target object in the image frames of the two cameras can be used to determine the position vector of target object P in the world frame. Figure 5 illustrates the position of target object P in the geodetic frame. From Figure 5, using simple geometry, the angular displacement of the pan-axis can be determined by

where the angular displacement of the tilt-axis is:

The direct current (DC) servo motors drive the pan-and-tilt platform to continuously track the commanded pan and tilt angles. The pan and tilt angular commands are sent in real time to keep the target object in the FoV of the cameras.

4. Trajectory Estimation and Prediction

Visual tracking is challenging due to image variations caused by camera noise, background changes, illumination changes, and object occlusion. These problems deteriorate the tracking performance and may even cause the loss of target objects. In this work, a Kalman filter [17,18] was applied to estimate the target object’s position and velocity and thereby enhance the robustness of the designed visual tracking system. The projectile motion trajectory of the flying ball was used to predict the touchdown point, and the mobile robot was commanded to catch the ball at that location. A brief introduction to the Kalman filter is given below.

A state-space system model is described by

$$x_{k+1} = A x_k + B u_k + w_k, \qquad z_k = C x_k + v_k, \tag{9}$$

where $x_k$ is the state of the system; $u_k$ is the input, and $z_k$ is the measurement. Matrices A, B, and C are the state transition matrix, input matrix, and output matrix, respectively. The state and measurement noises are denoted as $w_k$ and $v_k$. These two noises are assumed to be zero-mean Gaussian white noise, and the covariances are $Q$ and $R$, respectively.

The use of the Kalman filter involves two major procedures: the time updating step and the measurement updating step [17,18]. Time updating is used to predict the next state. The measurement updating step is used to update the estimated state with the measured information. This updated state is used in the time updating step of the next cycle. The Kalman filter runs recursively, and the recursive formulas are

Time updating step:

$$\hat{x}_k^- = A\hat{x}_{k-1} + B u_{k-1}, \qquad P_k^- = A P_{k-1} A^{\top} + Q \tag{10}$$

Measurement updating step:

$$K_k = P_k^- C^{\top}\left(C P_k^- C^{\top} + R\right)^{-1} \tag{11}$$

$$\hat{x}_k = \hat{x}_k^- + K_k\left(z_k - C\hat{x}_k^-\right) \tag{12}$$

$$P_k = \left(I - K_k C\right) P_k^- \tag{13}$$

In (10)–(13), $\hat{x}_k^-$ denotes the a priori predicted state, and $\hat{x}_k$ is the optimal estimated state after the measurement is updated. $P_k^-$ and $P_k$ are the a priori and a posteriori estimate error covariances, respectively. $K_k$ is known as the Kalman gain, and $z_k - C\hat{x}_k^-$ is called the measurement residual. Based on (11), the a posteriori estimate error covariance $P_k$ is minimized by $K_k$, and $\hat{x}_k$ is, hence, optimized.
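The two-step recursion can be sketched in Python as follows. This is a generic textbook form under stated assumptions, not the DSP implementation of this work: the function name `kalman_step`, the one-dimensional constant-velocity test target, and every numeric value (sampling period, noise covariances, initial covariance) are hypothetical.

```python
import numpy as np

def kalman_step(x_est, P_est, z, A, C, Q, R, B=None, u=None):
    """One Kalman filter cycle: time update followed by measurement update."""
    # Time update: propagate the state estimate and its error covariance.
    x_pred = A @ x_est
    if B is not None and u is not None:
        x_pred = x_pred + B @ u
    P_pred = A @ P_est @ A.T + Q
    # Measurement update: correct the prediction with the new measurement.
    residual = z - C @ x_pred
    S = C @ P_pred @ C.T + R
    gain = P_pred @ C.T @ np.linalg.inv(S)
    x_new = x_pred + gain @ residual
    P_new = (np.eye(len(x_est)) - gain @ C) @ P_pred
    return x_new, P_new

# Hypothetical 1D constant-velocity target: state = [position, velocity].
dt = 0.1
A = np.array([[1.0, dt], [0.0, 1.0]])
C = np.array([[1.0, 0.0]])            # only the position is measured
Q = 1e-4 * np.eye(2)                  # assumed process noise covariance
R = np.array([[0.01]])                # assumed measurement noise covariance
x_est = np.zeros(2)                   # deliberately wrong initial state
P_est = 10.0 * np.eye(2)              # large initial error covariance

for k in range(1, 51):
    z = np.array([1.0 + 2.0 * k * dt])    # noise-free position samples
    x_est, P_est = kalman_step(x_est, P_est, z, A, C, Q, R)

print(x_est)
```

In this toy run the true position and velocity at the last sample are 11 and 2, so the printed estimate shows how the filter recovers from a poor initial state when the initial covariance is chosen large.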

Now, we consider the dynamics of a flying ball. The position and velocity vector of the ball are denoted as

and

in the world coordinate system, respectively. We assumed that the flying trajectory of the ball is not interfered with by other objects. The forces considered in this work are the gravitational force, the buoyancy force, and the drag force. Other non-stated forces, such as the Magnus force [19], are ignored.

The buoyancy force vector is denoted as

, where the radius of the ball is

; the air density is

, and the gravitational acceleration at sea level is

. The drag force is assumed to be proportional to the velocity. According to Stokes’s law [19], the drag force is

, where

is the dynamic viscosity of the air, and

. Let the mass of the ball be denoted as

. The equation that governs the motion of a flying ball can be written as

where

By discretizing (

14) with the sampling period

, we obtain the dynamic model of the system as shown below:

From Equations (

17)–(

22), a discrete-time linear state-space form (

9) can be further written for the system model as

Based on the above model, the Kalman filter is applicable for visual tracking to estimate the ball’s trajectory. Therefore, for the visual tracking of the flying ball, the Kalman filter and a constant acceleration model were used in this work.

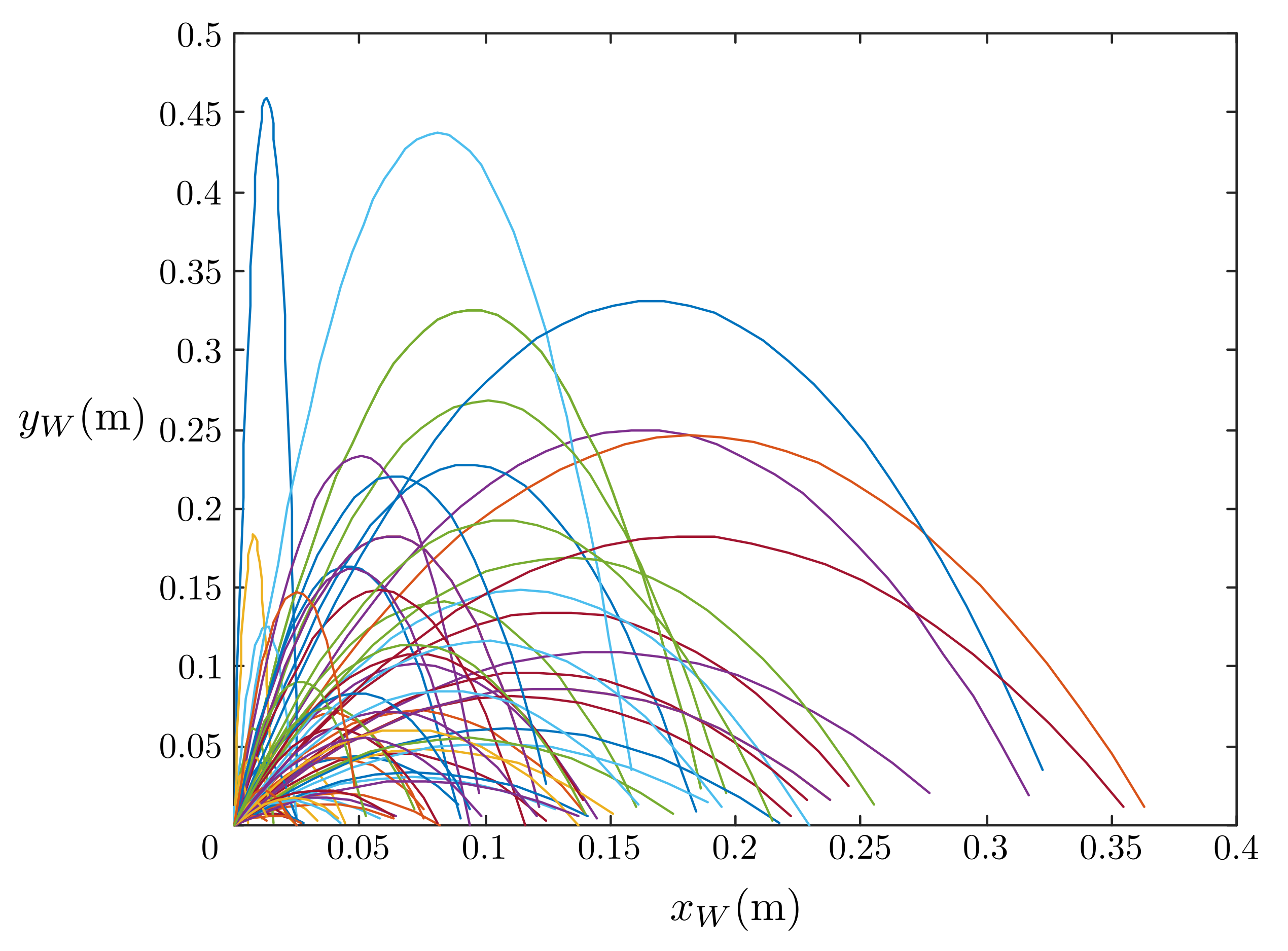

Using the estimated position, the estimated velocity, and the projectile motion Formulas (17)–(22), the future trajectory and touchdown point of the flying ball were predicted. The uncertainty of the initial value affects the estimation accuracy and convergence of the Kalman filter; a poor initial value can cause the active stereo vision camera to lose track of the ball, so that the ball leaves the FoV. The inaccuracy of the initial value is the result of an unreasonable flight trajectory, such as the ball not being thrown into the air.
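A touchdown prediction of this kind can be sketched as follows, assuming a ball subject to gravity, buoyancy, and a Stokes-type drag proportional to velocity. The sketch integrates the motion with a simple forward-Euler step, which may differ from the discretization used in Formulas (17)–(22). The function name `predict_touchdown`, the air properties, and the ball mass and radius are illustrative assumptions.

```python
import math

def predict_touchdown(pos, vel, mass, radius, dt=1e-3, rho_air=1.2,
                      mu_air=1.8e-5, g=9.81, ground_z=0.0, max_time=10.0):
    """Step the ball forward until it reaches ground_z; return (x, y, t)."""
    x, y, z = pos
    vx, vy, vz = vel
    volume = 4.0 / 3.0 * math.pi * radius ** 3
    g_eff = g * (1.0 - rho_air * volume / mass)    # gravity reduced by buoyancy
    k = 6.0 * math.pi * mu_air * radius / mass     # linear drag per unit mass
    t = 0.0
    while t < max_time:
        nx, ny, nz = x + vx * dt, y + vy * dt, z + vz * dt
        if vz < 0.0 and nz <= ground_z:
            # Interpolate inside the last step to land exactly on the ground.
            frac = (z - ground_z) / (z - nz)
            return x + frac * (nx - x), y + frac * (ny - y), t + frac * dt
        vx, vy, vz = (vx - k * vx * dt,
                      vy - k * vy * dt,
                      vz - (g_eff + k * vz) * dt)
        x, y, z = nx, ny, nz
        t += dt
    return None    # the ball did not land within max_time

# Hypothetical throw: released 1 m above the ground, 2 m/s forward, 3 m/s up.
landing = predict_touchdown(pos=(0.0, 0.0, 1.0), vel=(2.0, 0.0, 3.0),
                            mass=0.057, radius=0.033)
print(landing)
```

In practice the position and velocity passed in would be the Kalman filter estimates, and the prediction would be refreshed each time a new estimate arrives.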

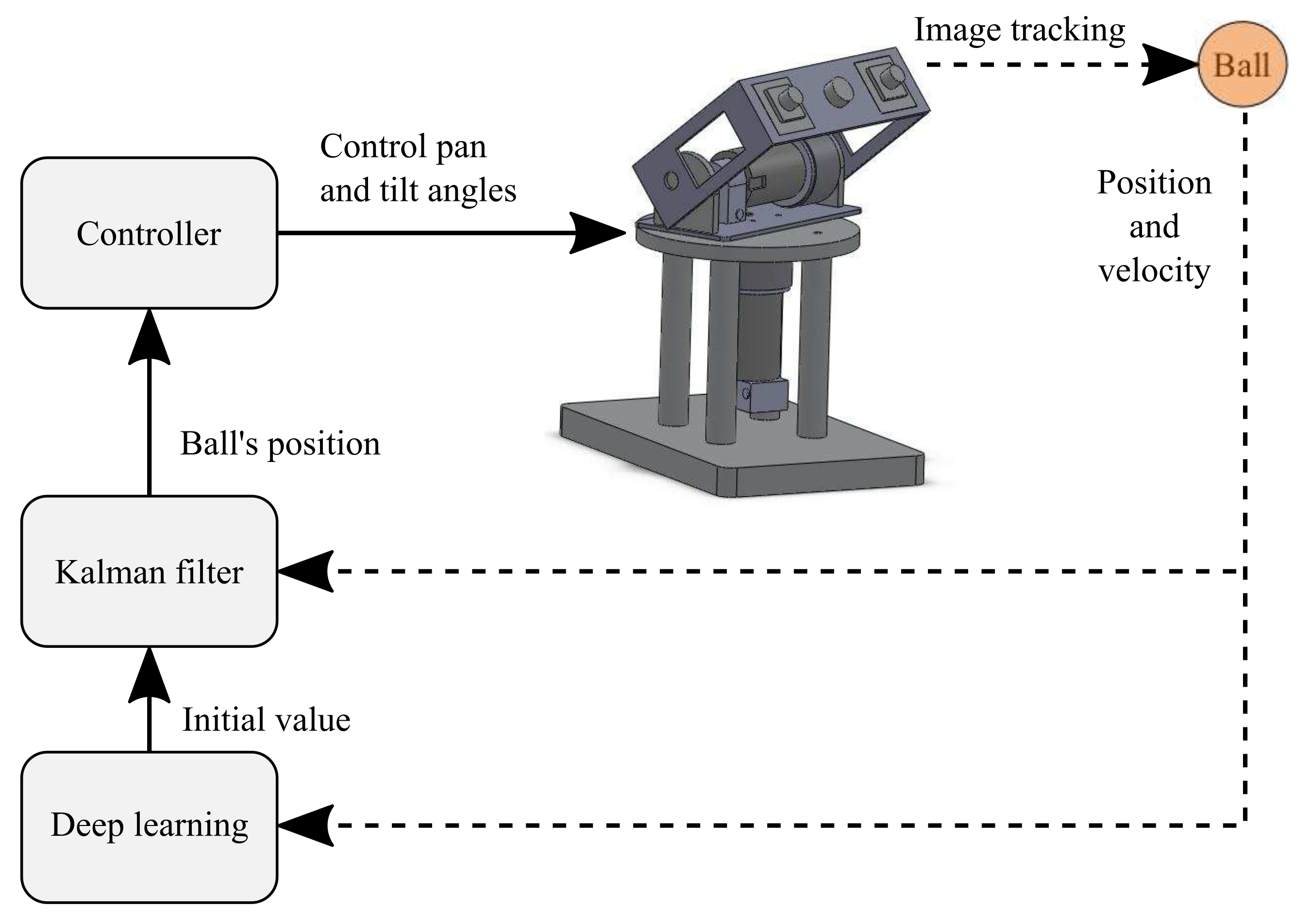

In this work, deep learning was used to obtain the initial value of the state estimation error covariance. Figure 6 describes the application using the Kalman filter combined with deep learning. After the camera obtains the position and velocity of the flying ball, the Kalman filter estimates the next position and commands the active stereo vision camera to track the ball.

Figure 7 describes the deep learning architecture. The network is based on AlexNet [20], which is a deep convolutional neural network. The input layer receives the position vector and velocity vector of the flying ball, which are the data learned by the neural network. The hidden layers lie between the input and output layers. The last layer is the output layer, which outputs the initial estimated error covariance as the neural network’s result. Deep learning helps to attenuate the initial value deviation in the filtering process. Once the ball’s predicted touchdown point is obtained, point-to-point path planning is used to command the mobile robot to move toward the touchdown point in advance to catch the ball. This ball-catching strategy is discussed in a later section.

5. Controller Design of the Omni-Directional Wheeled Mobile Robot

Figure 8 shows a schematic diagram of an omni-directional wheeled mobile robot from the top view. This mobile robot consists of a rigid body and three omni-directional wheels labeled 1, 2, and 3. The wheels were arranged at an equal distance from the center of the robot platform with a 120° interval. This setup allowed the robot to move freely in any direction. In this study, (

) is the world coordinate system. (

) is the body coordinate system with the origin attached to the center of mass of the robot. The direction of the

-axis is aligned to wheel 1, as shown in

Figure 8. We assumed that the wheels can roll without slipping.

The parameters for an omni-directional mobile robot are listed as follows:

According to [21], the dynamics and kinematics of the mobile robot can be described by:

In this study, the brushed DC servo motors drove the omni-directional wheels. We also assumed that the motor’s electrical time constant was smaller than the mechanical time constants and that the motor friction was negligible. The model of a DC motor is, thus, reduced to

In (

26),

,

u,

,

, and

represent the motor torque, control voltage, motor torque constant, angular velocity of the motor, and armature resistance, respectively. The traction force

f of the wheel is given by

where

n is the gear ratio. The three motors used in this mobile robot were assumed to be identical. Therefore, combining (

26) and (

27), the relationship between

f,

u, and

is given by

From (24), (25), and (28), the dynamics of the robot can be presented as

where

with

We assume that the predicted touchdown point of the ball in the world coordinate system is

. The position reference to the mobile robot is set to be:

where the rotation angle

is assumed to be 0. The tracking error is defined as follows:

A new control input U [22] is defined as follows:

From (35) and (34), we obtain

From (

34), the feedback control

can be written as

In the form used in (36), the system is decoupled into a linear system, and the PID control algorithm is used for tracking control. In this case, the following PID control law is used:

where

,

, and

are 3 by 3 diagonal PID gain matrices that equal

,

, and

, respectively, and

i = 1, 2, and 3. From (36) and (37), it follows that the closed-loop tracking error system is given by:

According to the Routh–Hurwitz criterion [

23], PID gain values

,

, and

must satisfy:

To obtain closed-loop stability, the PID control gain values are based on the control design method proposed in [24]. The phase margin and the gain margin were set to

and 6.0206 dB in this work.
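As a rough illustration of the Routh–Hurwitz check on the PID gains, the sketch below assumes that each decoupled axis behaves as a double integrator under PID control, so that the tracking error has the characteristic polynomial s^3 + Kd s^2 + Kp s + Ki. This polynomial form is an assumption made here for illustration; the exact closed-loop expression of this work is not reproduced. The function names and the gain values are hypothetical.

```python
import numpy as np

def pid_gains_satisfy_routh_hurwitz(kp, ki, kd):
    """Routh-Hurwitz test for s^3 + kd*s^2 + kp*s + ki (assumed error model)."""
    return kp > 0 and ki > 0 and kd > 0 and kd * kp > ki

def closed_loop_poles(kp, ki, kd):
    """Roots of the assumed characteristic polynomial, for cross-checking."""
    return np.roots([1.0, kd, kp, ki])

stable_gains = (10.0, 5.0, 4.0)      # (kp, ki, kd), illustrative values
unstable_gains = (1.0, 10.0, 2.0)    # violates kd * kp > ki

for gains in (stable_gains, unstable_gains):
    print(gains, pid_gains_satisfy_routh_hurwitz(*gains),
          closed_loop_poles(*gains))
```

The inequality test only guarantees stability; the phase and gain margins mentioned above would still have to be verified separately for the chosen gains.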

6. Implementation of the Designed System

Figure 9 shows a block diagram of the proposed ball-catching system. This system consisted of an omni-directional wheeled mobile robot and an image processing system that included an active stereo vision camera and a static vision camera.

Figure 10a shows the active stereo vision camera. The MT9P001, a complementary metal-oxide-semiconductor (CMOS) image sensor, was used. It can capture 640 × 480 pixels in the quantized RGB format at 60 frames per second (FPS). The cameras were attached to a field-programmable gate array (FPGA) board, which was used to configure the cameras and to acquire images. A DSP board (TMS320DM6437) was used for real-time processing of the acquired images.

An optical encoder with a resolution of 500 pulses/rev was used to measure the motors’ angular displacement in the pan-and-tilt platform. Another DSP board (TMS320F2812), with two quadrature encoder pulse (QEP) units and one pulse width modulation (PWM) signal generator unit, was used to acquire the angular displacement and rotational direction of the motors from the quadrature encoders and to control the pan-and-tilt platform motors. In addition, the Kalman filter was implemented to mitigate the measurement noise and to predict the motion of the ball.

The static vision camera was mounted above the work area so that the FoV of the camera covered the entire work area (length: 2.5 m, width: 2.5 m, and height: 3 m), as shown in Figure 1. This camera was used to locate and navigate the omni-directional wheeled mobile robot. As with the active stereo vision camera, the Kalman-filter-based vision tracking and image processing algorithms were implemented on the DSP board (TMS320DM6437).

The mobile robot, as stated in Section 5, used three brushed DC motors to drive the omni-directional wheels. A DSP board (TMS320F2812) was used for PID control of the motors and the touchdown point prediction for the ball. To obtain each wheel’s angular displacement, an optical encoder with a 500 pulse/rev resolution was mounted on the shaft of each wheel. These optical encoders send quadrature encoder signals to the QEP circuit on an FPGA board for decoding. The wheels’ angular velocities were obtained by differentiating the angular displacement over the sampling time, and a low-pass filter was then applied to attenuate the high-frequency noise.
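The velocity estimation step can be sketched as a finite difference of the encoder counts followed by a first-order low-pass filter. The sketch assumes x4 quadrature decoding of the 500 pulse/rev encoder (2000 counts per revolution) and an exponential smoothing filter; the decoding factor, the filter type, the smoothing factor `alpha`, and the function name `wheel_speeds` are assumptions made for illustration.

```python
import math

COUNTS_PER_REV = 500 * 4    # assumes x4 quadrature decoding

def wheel_speeds(counts, dt, alpha=0.2):
    """Filtered angular velocities (rad/s) from cumulative encoder counts."""
    speeds = []
    filtered = 0.0
    for prev, curr in zip(counts, counts[1:]):
        # Finite difference of the angular displacement over one sample.
        raw = (curr - prev) * 2.0 * math.pi / COUNTS_PER_REV / dt
        # First-order low-pass filter to attenuate high-frequency noise.
        filtered = alpha * raw + (1.0 - alpha) * filtered
        speeds.append(filtered)
    return speeds

# Hypothetical readings: 20 counts per 10 ms sample, i.e. one revolution per second.
counts = [20 * i for i in range(61)]
speeds = wheel_speeds(counts, dt=0.01)
print(speeds[-1])
```

A smaller `alpha` smooths more strongly but adds lag to the velocity feedback, so the value would have to be chosen against the bandwidth of the wheel control loop.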

The robot’s position and orientation were determined with the static vision camera and a dead reckoning algorithm based on the motor encoder measurements. The PWM signals were generated according to the designed PID control laws to drive each of the motors. Figure 10b shows a basket 0.16 m in diameter mounted on the top of the robot for the purpose of catching the ball.

All of the subsystems described above communicated through wireless communication modules, as shown in Figure 9. The active stereo vision camera obtained the position and velocity of the ball and then sent them to the mobile robot to predict the touchdown point. The static vision camera obtained the position and direction of the mobile robot and then sent them to the mobile robot for navigation and positioning through wireless communication.

8. Concluding Remarks

In this research, a robotic ball-catching system was presented. This system consisted of multi-camera vision systems, an omni-directional wheeled mobile robot, and wireless communication. In the multi-camera vision system, the ball’s motion was tracked with an active stereo vision camera while a static vision camera navigated a mobile robot. Using a Kalman filter and the motion governing equations of a flying ball, the ball’s touchdown point was predicted with reasonable accuracy. For Kalman filtering, we found that the use of deep learning improved the accuracy and robustness.

The robot was controlled to move toward the predicted point to catch the ball before it hit the ground. The performance of the sub-systems and the proposed algorithms was verified through simulations and experiments. The results of the simulation matched the experimental results well. The experimental results confirmed that the developed robotic system combined with multi-camera vision systems could catch a flying ball.

The main contribution of this paper is to present the main issues and technical challenges of the design and system integration of a vision-based ball-catching system. Compared with the existing vision-based ball-catching systems, the system proposed in this paper combines an omni-directional wheeled mobile robot with an active stereo vision system and can therefore operate in a large workspace.

In future research, the image capture system used in this paper can be improved through the use of better Kalman filtering methods to suppress noise. Deep learning will be applied to image pre-processing and tested on complex backgrounds with balls of different colors to verify the recognition accuracy. Different sensors (such as a laser range finder or RGB-D cameras) will be used for experimental comparisons. Different control laws will be applied to the omni-directional wheeled mobile robot in an attempt to increase the speed and accuracy of movement. In addition, worst-case conditions (long-distance movement or disturbance during the movement) will be applied to verify the robot’s abilities. In the simulations and experiments, different ball conditions (speed, height, etc.) and disturbances during the flight will be used to verify the system robustness.