Automatic Correction of Real-Word Errors in Spanish Clinical Texts

Abstract

1. Introduction

2. Related Works

3. Seq2Seq Neural Machine Translation-Based Approach to Texts Correction

3.1. Seq2Seq Neural Machine Translation

The Transformer Architecture

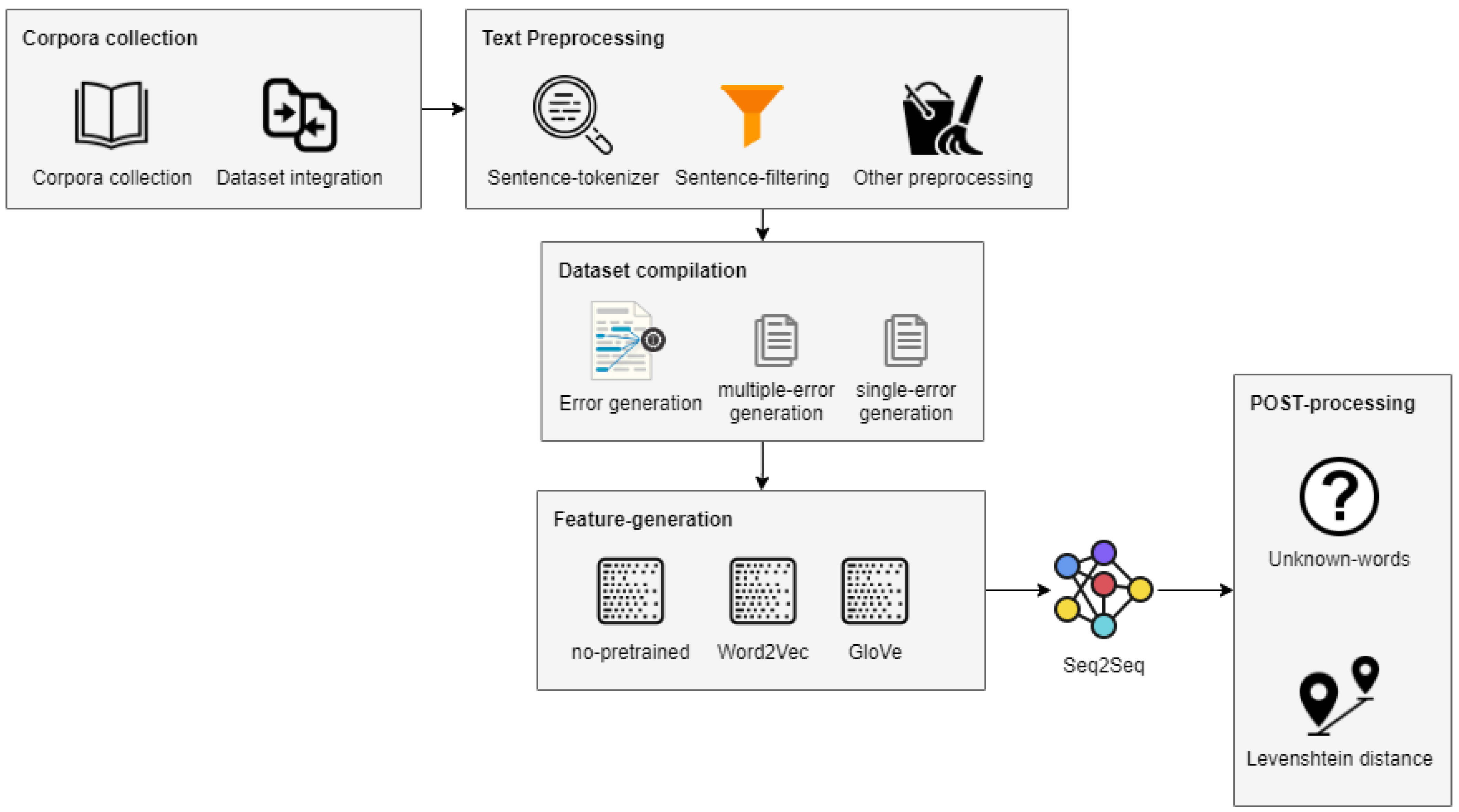

3.2. Initial Setup

3.2.1. Corpora

3.2.2. Pretrained Embeddings

3.3. Seq2Seq Model Building

3.3.1. Text Preprocessing

3.3.2. Error Generation

- Grammatical gender errors were generated by identifying the most common morphological suffixes: -ero, -ano, -ado, -ino, -ido, -ico, -ito, -oso, -ario, -ento, -olo, etc. In Spanish, unlike in English, nouns, adjectives and determiners carry grammatical gender and inflect so that they agree within the same clause. If the ending of the input word matched one of the listed suffixes, it was replaced by its opposite-gender counterpart, so that the gender of the word changed. The rules were applied to masculine and feminine words in both singular and plural forms.

- Similarly, number errors were generated by identifying and modifying the most common word endings in the singular, i.e., -o, -e, -a, -d, -n, -l, -j and -r, or in the plural, i.e., -os, -es, -as. Singular words were replaced by their plural and vice versa, regardless of their gender.

- Grammatical gender and number rules were combined to generate both kinds of error in the same word. Singular words had their gender modified first and were then pluralized, whereas plural words were first changed to the singular and then had their gender modified.

- Compiling a list of homophones by hand would have been impractical, so rules similar to those described above were defined instead. The most common homophones contain the letters b, v, ll, y and h, since the pairs b/v and ll/y are pronounced identically in Spanish, whereas the letter h is silent. The rules therefore first determined whether these characters were present in the target word and then replaced each with its counterpart. If a word started with h, or if h followed n or s, the h was removed. The inverse rules were also considered.

- In addition to the previous rules, the method Hunspell/suggest [37] was used to obtain additional real-word error types. Given an input word, the method returns a list of terms that are spelled similarly but differ in meaning, and the first suggestion in the list was selected. To avoid regenerating the previous error types, the aforementioned rules were tested before a word was picked, so that only new error types were obtained.

- The last type of real-word error concerned subject-verb concordance. Spanish verbs are divided into three conjugation groups: -ar, -er and -ir. For each group, the endings of the conjugated verbs were listed for past, present and future tenses in both the indicative and subjunctive moods. Only regular verbs were considered. Additionally, the conjugation of the verb haber was listed, since it is frequently used as the auxiliary verb in perfect tenses. An input verb was identified when its ending appeared in the list, and its person was then randomly replaced by a different one in the same tense. If the ending was not found, the whole word was compared against the conjugation list of haber. Conjugation groups were identified in the sentence by using the method Hunspell/stem [37], which returns the verb in its infinitive form.
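The suffix-based rules above can be illustrated with a minimal Python sketch. It is not the authors' implementation: the function names are hypothetical, only a handful of the listed suffixes are included, and the inverse homophone rules (inserting h) are omitted. A real pipeline would also need to check each candidate against a dictionary so that only real words are kept.

```python
import re

# Illustrative subset of masculine/feminine suffix pairs (assumption: the
# full rule set used in the paper is larger and is not reproduced here).
GENDER_SUFFIXES = [("ero", "era"), ("ano", "ana"), ("ado", "ada"),
                   ("ido", "ida"), ("oso", "osa")]


def swap_gender(word):
    """Flip the gender of a word whose ending matches a listed suffix."""
    for masc, fem in GENDER_SUFFIXES:
        for src, dst in ((masc, fem), (fem, masc)):
            for plural in ("s", ""):  # try the plural ending first
                ending = src + plural
                if word.endswith(ending):
                    return word[: len(word) - len(ending)] + dst + plural
    return None  # no rule applies


def swap_number(word):
    """Toggle singular/plural using the common endings listed in the text."""
    if word.endswith(("des", "nes", "les", "jes", "res")):
        return word[:-2]  # e.g. -des -> -d
    if word.endswith(("os", "es", "as")):
        return word[:-1]
    if word.endswith(("o", "e", "a")):
        return word + "s"
    if word.endswith(("d", "n", "l", "j", "r")):
        return word + "es"
    return None


def swap_gender_and_number(word):
    """Combine both rules: singular -> flip gender, then pluralize;
    plural -> singularize, then flip gender."""
    if word.endswith("s"):
        singular = swap_number(word)
        return swap_gender(singular) if singular else None
    flipped = swap_gender(word)
    return swap_number(flipped) if flipped else None


def swap_homophone(word):
    """Drop a silent h, or swap b/v and ll/y (first occurrence only)."""
    if word.startswith("h"):
        return word[1:]
    without_h = re.sub(r"(?<=[ns])h", "", word, count=1)
    if without_h != word:
        return without_h
    for src, dst in (("b", "v"), ("v", "b"), ("ll", "y"), ("y", "ll")):
        if src in word:
            return word.replace(src, dst, 1)
    return None


if __name__ == "__main__":
    for rule, word in [(swap_gender, "unido"), (swap_number, "virtudes"),
                       (swap_gender_and_number, "abandonado"),
                       (swap_homophone, "enhebro")]:
        print(rule.__name__, word, "->", rule(word))
```

Purely orthographic rules like these over-generate (for instance, the plural rule would strip too much from a word such as padres), which is why filtering the output against a lexicon matters for real-word error generation.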

3.3.3. Dataset Compilation Strategies

- The first strategy (hereafter, strategy 1) consisted of generating multiple erroneous sentences from one correct sentence. To that end, the whole set of rules was considered and the selected rules were applied separately to the correct sentence. In order to balance the number of error types in the dataset, rules were grouped according to the error type they generated and, for each group, only one rule was applied at random. Therefore, at most one sentence was obtained for each error type, depending on which rules were applicable to the correct sentence, since not every attempt succeeded in generating an erroneous sentence. Each erroneous sentence contained only one error. Additionally, the correct sentence was added to the erroneous dataset [11], as doing so augmented the training data.

- The second strategy (hereafter, strategy 2) generated only one erroneous sentence from the correct sentence by applying a single rule, so that only one error type was generated in the sentence. Again, rules were grouped according to the error type they produced. The groups were applied in random order to ensure that the resulting dataset had a balanced number of error types. As soon as a rule generated a real word in the sentence, the erroneous sentence was added to the dataset and the remaining rules were not tested. The correct sentence was added to the erroneous dataset only when no rule was able to generate an erroneous sentence.
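The difference between the two strategies can be sketched as follows. This is a hedged illustration rather than the authors' code: `apply_rule`, `strategy_1` and `strategy_2` are hypothetical names, a rule is assumed to be a callable that maps a word to its erroneous form (or `None` when it does not apply), and corrupting the first applicable token is an assumption, since the text does not say how the target word is chosen.

```python
import random


def apply_rule(tokens, rule):
    """Corrupt the first token the rule applies to (assumption) and
    return the resulting sentence, or None if the rule never applies."""
    for i, token in enumerate(tokens):
        replacement = rule(token)
        if replacement is not None and replacement != token:
            return " ".join(tokens[:i] + [replacement] + tokens[i + 1:])
    return None


def strategy_1(sentence, rule_groups, rng=random):
    """At most one erroneous sentence per error type, plus the correct one.

    Returns (source, target) pairs, where the target is the correct sentence.
    """
    tokens = sentence.split()
    pairs = [(sentence, sentence)]  # correct sentence is always included
    for rules in rule_groups.values():
        rule = rng.choice(rules)  # one randomly chosen rule per error type
        corrupted = apply_rule(tokens, rule)
        if corrupted is not None:
            pairs.append((corrupted, sentence))
    return pairs


def strategy_2(sentence, rule_groups, rng=random):
    """Exactly one pair per sentence: the first rule that succeeds wins."""
    tokens = sentence.split()
    groups = [list(rules) for rules in rule_groups.values()]
    rng.shuffle(groups)  # random group order keeps error types balanced
    for rules in groups:
        rng.shuffle(rules)
        for rule in rules:
            corrupted = apply_rule(tokens, rule)
            if corrupted is not None:
                return [(corrupted, sentence)]  # stop testing other rules
    return [(sentence, sentence)]  # fall back to the correct sentence


if __name__ == "__main__":
    def pluralize(word):
        return word + "s" if word.endswith("e") else None

    groups = {"number": [pluralize]}
    print(strategy_1("La aeronave aterriza", groups))
    print(strategy_2("La aeronave aterriza", groups))
```

Under these assumptions, strategy 1 yields several pairs per correct sentence (and therefore a much larger training set), whereas strategy 2 yields exactly one pair per sentence.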

3.3.4. Model Implementation

3.3.5. Correction Method

4. Evaluation

4.1. Experiments Sample Corrections

4.2. Performance on All Error Types

4.3. Performance on Each Error Type

- Evaluation of grammatical gender error correction. Table 9 shows the performance of the models on grammatical gender errors. In the Wikicorpus, the models without pretrained embeddings achieved high precision ($P = 86.35\%$ with strategy 1 and $P = 83.14\%$ with strategy 2) and corrected around 30% of the errors. Models using pretrained embeddings scored lower. The lowest results were obtained for GloVe, with $F_{0.5} = 24.47\%$ and $F_{0.5} = 43.66\%$ for strategies 1 and 2, respectively. Nevertheless, Word2Vec trained on the strategy 2 training set performed similarly to the models without pretrained embeddings, with $R = 30.57\%$ and $P = 74.60\%$. In the medicine corpus, performance improved with respect to the Wikicorpus. The model without pretrained embeddings trained on the strategy 2 training set corrected almost 50% of gender errors. However, GloVe performance decreased both on the strategy 1 training set ($F_{0.5} = 14.22\%$) and on the strategy 2 training set ($F_{0.5} = 26.21\%$). For both corpora, models with pretrained embeddings improved from strategy 1 to strategy 2.

Table 9. Recall ($R$), precision ($P$) and $F_{0.5}$ for the evaluation of gender error correction.

| Training Strategy | Embeddings | $R$ | $P$ | $F_{0.5}$ |
|---|---|---|---|---|
| Wikicorpus | | | | |
| Strategy 1 | - | 30.52 | 86.35 | 63.22 |
| | GloVe | 13.92 | 30.20 | 24.47 |
| | W2V | 21.53 | 65.93 | 46.68 |
| Strategy 2 | - | 32.16 | 83.14 | 63.13 |
| | GloVe | 25.09 | 53.56 | 43.66 |
| | W2V | 30.57 | 74.60 | 57.92 |
| Medicine corpus | | | | |
| Strategy 1 | - | 36.71 | 81.70 | 65.62 |
| | GloVe | 11.51 | 15.12 | 14.22 |
| | W2V | 30.85 | 61.19 | 51.13 |
| Strategy 2 | - | 48.10 | 84.83 | 73.59 |
| | GloVe | 21.48 | 27.74 | 26.21 |
| | W2V | 45.75 | 78.99 | 68.97 |

- Evaluation of number error correction. Table 10 shows the performance of the models on number errors. In the Wikicorpus, the model without pretrained embeddings corrected 43.01% of errors when trained on the strategy 1 training set and 49.58% when trained on the strategy 2 training set. Precision was high in both cases: $P = 87.81\%$ and $P = 84.18\%$, respectively. GloVe results were much lower on both the strategy 1 training set ($R = 27.12\%$ and $P = 45.53\%$) and the strategy 2 training set ($R = 39.45\%$ and $P = 62.66\%$), whereas Word2Vec performed similarly to the model without pretrained embeddings ($R = 48.93\%$ and $P = 81.47\%$) on the strategy 2 training set. In the medicine corpus, the models without pretrained embeddings and the models with Word2Vec improved when trained on medicine sentences. The model without pretrained embeddings reached $F_{0.5} = 77.22\%$ on the strategy 1 training set and $F_{0.5} = 82.82\%$ on the strategy 2 training set. This last model corrected more than 70% of sentences. For both corpora, better results were obtained with strategy 2 than with strategy 1.

Table 10. Recall ($R$), precision ($P$) and $F_{0.5}$ for the evaluation of number error correction.

| Training Strategy | Embeddings | $R$ | $P$ | $F_{0.5}$ |
|---|---|---|---|---|
| Wikicorpus | | | | |
| Strategy 1 | - | 43.01 | 87.81 | 72.67 |
| | GloVe | 27.12 | 45.53 | 40.09 |
| | W2V | 37.97 | 77.00 | 63.87 |
| Strategy 2 | - | 49.58 | 84.18 | 73.87 |
| | GloVe | 39.45 | 62.66 | 56.06 |
| | W2V | 48.93 | 81.47 | 71.91 |
| Medicine corpus | | | | |
| Strategy 1 | - | 60.32 | 83.03 | 77.22 |
| | GloVe | 22.35 | 27.05 | 25.96 |
| | W2V | 55.67 | 74.54 | 69.81 |
| Strategy 2 | - | 72.11 | 86.01 | 82.82 |
| | GloVe | 28.24 | 31.42 | 30.73 |
| | W2V | 66.57 | 79.15 | 76.27 |

- Evaluation of grammatical gender-number error correction. Table 11 shows the performance of the models on grammatical gender-number errors. In the medicine corpus, the model without pretrained embeddings and the model with Word2Vec corrected 53.40% and 50.74% of sentences, respectively, when trained on the strategy 1 training set, with precision of $P = 78.57\%$ and $P = 70.71\%$. GloVe again performed well below these values ($F_{0.5} = 18.13\%$). The results improved after training on the strategy 2 training set: for the model without pretrained embeddings, recall was $R = 70.57\%$ and precision $P = 84.90\%$. The models trained on Wikicorpus sentences performed worse than those trained on medicine sentences. The exception is GloVe, for which $F_{0.5}$ on the Wikicorpus was 30.49% and 45.75% with strategies 1 and 2, respectively.

Table 11. Recall ($R$), precision ($P$) and $F_{0.5}$ for the evaluation of gender-number error correction.

| Training Strategy | Embeddings | $R$ | $P$ | $F_{0.5}$ |
|---|---|---|---|---|
| Wikicorpus | | | | |
| Strategy 1 | - | 35.07 | 82.37 | 64.87 |
| | GloVe | 19.45 | 35.53 | 30.49 |
| | W2V | 30.19 | 67.61 | 54.18 |
| Strategy 2 | - | 39.61 | 78.84 | 65.81 |
| | GloVe | 29.97 | 52.69 | 45.75 |
| | W2V | 38.14 | 71.60 | 60.91 |
| Medicine corpus | | | | |
| Strategy 1 | - | 53.40 | 78.57 | 71.80 |
| | GloVe | 16.40 | 18.63 | 18.13 |
| | W2V | 50.74 | 70.71 | 65.55 |
| Strategy 2 | - | 70.57 | 84.90 | 81.59 |
| | GloVe | 27.64 | 30.48 | 29.87 |
| | W2V | 64.94 | 77.74 | 74.80 |

- Evaluation of homophone error correction. The models without pretrained embeddings performed well (see Table 12). In the medicine corpus, recall was $R = 72.24\%$ and precision $P = 88.76\%$ on the strategy 1 training set, and $R = 75.68\%$ and $P = 83.30\%$ on the strategy 2 training set. In the Wikicorpus, recall was $R = 78.41\%$ and precision was $P = 93.34\%$ when trained on the strategy 1 training set, which resulted in $F_{0.5} = 89.92\%$. Nevertheless, these values decreased sharply on the strategy 2 training set, resulting in $F_{0.5} = 42.28\%$. Word2Vec performed well for the medicine corpus on both the strategy 1 and strategy 2 training sets, with $F_{0.5} = 75.28\%$ and $F_{0.5} = 74.34\%$, respectively. GloVe performed very poorly. For the Wikicorpus, this model corrected less than 9% of sentences on the strategy 1 training set, and precision was $P = 19.63\%$. Likewise, for the medicine corpus this model corrected 26.11% of the sentences, and the precision was 26.65%.

Table 12. Recall ($R$), precision ($P$) and $F_{0.5}$ for the evaluation of homophone error correction.

| Training Strategy | Embeddings | $R$ | $P$ | $F_{0.5}$ |
|---|---|---|---|---|
| Wikicorpus | | | | |
| Strategy 1 | - | 78.41 | 93.34 | 89.92 |
| | GloVe | 8.77 | 19.63 | 15.73 |
| | W2V | 13.20 | 51.16 | 32.48 |
| Strategy 2 | - | 17.20 | 66.52 | 42.28 |
| | GloVe | 12.98 | 33.62 | 25.51 |
| | W2V | 30.57 | 74.59 | 57.92 |
| Medicine corpus | | | | |
| Strategy 1 | - | 72.24 | 88.76 | 84.88 |
| | GloVe | 26.11 | 26.65 | 26.54 |
| | W2V | 70.75 | 76.50 | 75.28 |
| Strategy 2 | - | 75.68 | 83.30 | 81.66 |
| | GloVe | 28.85 | 29.68 | 29.51 |
| | W2V | 69.66 | 75.63 | 74.34 |

- Evaluation of Hunspell-generated error correction. As Table 13 shows, for the medicine corpus the model without pretrained embeddings corrected only 36.10% of sentences when trained on the strategy 1 training set, whereas the model with Word2Vec corrected 30.08%. However, the precision of the former is $P = 75.40\%$, compared to $P = 56.13\%$ for the latter. In the Wikicorpus, the share of corrected sentences dropped to 17.42% for the model without pretrained embeddings trained on the strategy 1 training set. All three models performed very poorly on the strategy 2 training set. In fact, for this error type, results decreased for all models trained on the strategy 2 training set in both corpora.

Table 13. Recall ($R$), precision ($P$) and $F_{0.5}$ for the evaluation of Hunspell-generated error correction.

| Training Strategy | Embeddings | $R$ | $P$ | $F_{0.5}$ |
|---|---|---|---|---|
| Wikicorpus | | | | |
| Strategy 1 | - | 17.42 | 78.06 | 46.03 |
| | GloVe | 15.49 | 40.96 | 30.82 |
| | W2V | 20.27 | 58.74 | 42.58 |
| Strategy 2 | - | 10.25 | 56.60 | 29.72 |
| | GloVe | 7.17 | 28.00 | 17.71 |
| | W2V | 7.52 | 31.88 | 19.34 |
| Medicine corpus | | | | |
| Strategy 1 | - | 36.10 | 75.40 | 61.92 |
| | GloVe | 13.31 | 16.36 | 15.64 |
| | W2V | 30.08 | 56.13 | 47.85 |
| Strategy 2 | - | 20.60 | 54.57 | 41.04 |
| | GloVe | 9.69 | 12.95 | 12.13 |
| | W2V | 20.65 | 44.46 | 36.13 |

- Evaluation of subject-verb concordance error correction. Models performed better when trained on the medicine corpus than on the Wikicorpus (see Table 14). In the medicine corpus, the model without pretrained embeddings corrected 44.55% of sentences when trained on the strategy 1 training set and 47.39% when trained on the strategy 2 training set. Precision was low for both datasets ($P = 59.30\%$ and $P = 62.18\%$). The model with Word2Vec corrected 41.37% of sentences on the strategy 1 training set and 43.01% on the strategy 2 training set, whereas the model with GloVe corrected 16.65% and 19.72%, respectively. The results of the models in the Wikicorpus are poorer than in the medicine corpus. The model without pretrained embeddings corrected 24.55% of sentences on the strategy 1 training set, whereas the models using GloVe and Word2Vec corrected 7.89% and 10.96%, respectively.

Table 14. Recall ($R$), precision ($P$) and $F_{0.5}$ for the evaluation of subject-verb concordance error correction.

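| Training Strategy | Embeddings | $R$ | $P$ | $F_{0.5}$ |
|---|---|---|---|---|
| Wikicorpus | | | | |
| Strategy 1 | - | 24.55 | 50.00 | 41.41 |
| | GloVe | 7.89 | 16.55 | 13.57 |
| | W2V | 10.96 | 33.95 | 23.92 |
| Strategy 2 | - | 15.23 | 44.48 | 32.14 |
| | GloVe | 11.89 | 32.58 | 24.17 |
| | W2V | 14.24 | 38.46 | 28.70 |
| Medicine corpus | | | | |
| Strategy 1 | - | 44.55 | 59.30 | 55.61 |
| | GloVe | 16.65 | 18.69 | 18.25 |
| | W2V | 41.37 | 52.07 | 49.51 |
| Strategy 2 | - | 47.39 | 62.18 | 58.53 |
| | GloVe | 19.72 | 22.74 | 22.06 |
| | W2V | 43.01 | 55.59 | 52.52 |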
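The third value reported for each model in Tables 9 to 14 is numerically consistent with the $F_{0.5}$ score, which weights precision more heavily than recall. The short sketch below recomputes it from recall and precision for two rows of the tables; the function name `f_beta` is illustrative, and the reading of the column as $F_{0.5}$ is inferred from the reported values rather than stated in this section.

```python
def f_beta(recall, precision, beta=0.5):
    """F-beta score; beta < 1 favours precision over recall."""
    if recall == 0 and precision == 0:
        return 0.0
    b2 = beta ** 2
    return (1 + b2) * precision * recall / (b2 * precision + recall)


# Table 9, Wikicorpus, strategy 1, no pretrained embeddings.
print(round(f_beta(30.52, 86.35), 2))
# Table 10, medicine corpus, strategy 2, no pretrained embeddings.
print(round(f_beta(72.11, 86.01), 2))
```

The recomputed values agree with the 63.22 and 82.82 reported in the corresponding rows to within rounding.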

4.4. Discussion

5. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A

References

1. Hussain, F.; Qamar, U. Identification and Correction of Misspelled Drugs’ Names in Electronic Medical Records (EMR). In Proceedings of the 18th International Conference on Enterprise Information Systems (ICEIS 2016), Rome, Italy, 25–28 April 2016; Hammoudi, S., Maciaszek, L.A., Missikoff, M., Camp, O., Cordeiro, J., Eds.; SCITEPRESS - Science and Technology Publications: Setúbal, Portugal, 2016; Volume 2, pp. 333–338.

2. Workman, T.E.; Shao, Y.; Divita, G.; Zeng-Treitler, Q. An efficient prototype method to identify and correct misspellings in clinical text. BMC Res. Notes 2019, 12, 42.

3. Jurafsky, D.; Martin, J.H. Speech and Language Processing (Draft), 3rd ed. 2020. Available online: https://web.stanford.edu/~jurafsky/slp3/ (accessed on 20 April 2021).

4. López-Hernández, J.; Almela, Á.; Valencia-García, R. Automatic Spelling Detection and Correction in the Medical Domain: A Systematic Literature Review. In Technologies and Innovation, Proceedings of the 5th International Conference, CITI 2019, Guayaquil, Ecuador, 2–5 December 2019; Valencia-García, R., Alcaraz-Mármol, G., del Cioppo-Morstadt, J., Bucaram-Leverone, M., Eds.; Springer: Berlin/Heidelberg, Germany, 2019; pp. 95–108.

5. Ramshaw, L.A. Correcting real-word spelling errors using a model of the problem-solving context. Comput. Intell. 1994, 10, 185–211.

6. Sharma, S.; Gupta, S. A Correction Model for Real-word Errors. Procedia Comput. Sci. 2015, 70, 99–106.

7. Patrick, J.; Sabbagh, M.; Jain, S.; Zheng, H. Spelling correction in clinical notes with emphasis on first suggestion accuracy. In Proceedings of the 2nd Workshop on Building and Evaluating Resources for Biomedical Text Mining, Valletta, Malta, 18 May 2010; pp. 1–8.

8. Samanta, P.; Chaudhuri, B.B. A simple real-word error detection and correction using local word bigram and trigram. In Proceedings of the 25th Conference on Computational Linguistics and Speech Processing, ROCLING 2013, Kaohsiung, Taiwan, 4–5 October 2013; Association for Computational Linguistics and Chinese Language Processing (ACLCLP): Taipei, Taiwan, 2013.

9. Grundkiewicz, R.; Junczys-Dowmunt, M. Near Human-Level Performance in Grammatical Error Correction with Hybrid Machine Translation. In Proceedings of the 2018 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Volume 2 (Short Papers), New Orleans, LA, USA, 1 June 2018; Walker, M., Ji, H., Stent, A., Eds.; Association for Computational Linguistics: Stroudsburg, PA, USA, 2018; pp. 284–290.

10. Zhao, W.; Wang, L.; Shen, K.; Jia, R.; Liu, J. Improving Grammatical Error Correction via Pre-Training a Copy-Augmented Architecture with Unlabeled Data. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Volume 1 (Long and Short Papers), Minneapolis, MN, USA, 3 June 2019; Burstein, J., Doran, C., Solorio, T., Eds.; Association for Computational Linguistics: Stroudsburg, PA, USA, 2019; pp. 156–165.

11. Beloki, Z.; Saralegi, X.; Ceberio, K.; Corral, A. Grammatical Error Correction for Basque through a seq2seq neural architecture and synthetic examples. Proces. Leng. Nat. 2020, 65, 13–20.

12. Wilson, B.J.; Schakel, A.M.J. Controlled Experiments for Word Embeddings. arXiv 2015, arXiv:1510.02675.

13. Lai, K.H.; Topaz, M.; Goss, F.R.; Zhou, L. Automated misspelling detection and correction in clinical free-text records. J. Biomed. Inform. 2015, 55, 188–195.

14. Fivez, P.; Suster, S.; Daelemans, W. Unsupervised Context-Sensitive Spelling Correction of Clinical Free-Text with Word and Character N-Gram Embeddings. In Proceedings of the BioNLP 2017, Vancouver, BC, Canada, 4 August 2017; Association for Computational Linguistics: Stroudsburg, PA, USA, 2017; pp. 143–148.

15. Sutskever, I.; Vinyals, O.; Le, Q.V. Sequence to Sequence Learning with Neural Networks. In Proceedings of the Advances in Neural Information Processing Systems 27: Annual Conference on Neural Information Processing Systems 2014, Montreal, QC, Canada, 8–13 December 2014; Ghahramani, Z., Welling, M., Cortes, C., Lawrence, N.D., Weinberger, K.Q., Eds.; MIT Press: Cambridge, MA, USA, 2014; pp. 3104–3112.

16. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, L.; Polosukhin, I. Attention is All you Need. In Proceedings of the Advances in Neural Information Processing Systems 30: Annual Conference on Neural Information Processing Systems 2017, Long Beach, CA, USA, 4–9 December 2017; Guyon, I., von Luxburg, U., Bengio, S., Wallach, H.M., Fergus, R., Vishwanathan, S.V.N., Garnett, R., Eds.; MIT Press: Cambridge, MA, USA, 2017; pp. 5998–6008.

17. Peters, M.; Neumann, M.; Iyyer, M.; Gardner, M.; Clark, C.; Lee, K.; Zettlemoyer, L. Deep Contextualized Word Representations. In Proceedings of the 2018 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Volume 1 (Long Papers), New Orleans, LA, USA, 1–6 June 2018; Walker, M., Ji, H., Stent, A., Eds.; Association for Computational Linguistics: Stroudsburg, PA, USA, 2018; pp. 2227–2237.

18. Devlin, J.; Chang, M.-W.; Lee, K.; Toutanova, K. BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, NAACL-HLT 2019, Minneapolis, MN, USA, 2–7 June 2019; Burstein, J., Doran, C., Solorio, T., Eds.; Volume 1 (Long and Short Papers). Association for Computational Linguistics: Stroudsburg, PA, USA, 2019; pp. 4171–4186.

19. Liu, Y.; Ott, M.; Goyal, N.; Du, J.; Joshi, M.; Chen, D.; Levy, O.; Lewis, M.; Zettlemoyer, L.; Stoyanov, V. RoBERTa: A Robustly Optimized BERT Pretraining Approach. arXiv 2019, arXiv:1907.11692.

20. Bojanowski, P.; Grave, E.; Joulin, A.; Mikolov, T. Enriching Word Vectors with Subword Information. Trans. Assoc. Comput. Linguist. 2017, 5, 135–146.

21. Pennington, J.; Socher, R.; Manning, C. Glove: Global Vectors for Word Representation. In Proceedings of the 2014 Conference on Empirical Methods in Natural Language Processing (EMNLP), Doha, Qatar, 25 October 2014; Moschitti, A., Pang, B., Daelemans, W., Eds.; Association for Computational Linguistics: Stroudsburg, PA, USA, 2014; pp. 1532–1543.

22. Mikolov, T.; Chen, K.; Corrado, G.; Dean, J. Efficient Estimation of Word Representations in Vector Space. In Proceedings of the 1st International Conference on Learning Representations, ICLR 2013, Scottsdale, AZ, USA, 2–4 May 2013; Bengio, Y., LeCun, Y., Eds.; Workshop Track Proceedings. OpenReview: Amherst, MA, USA, 2013.

23. Yin, F.; Long, Q.; Meng, T.; Chang, K.-W. On the Robustness of Language Encoders against Grammatical Errors. In Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics, Online, 5 July 2020; Jurafsky, D., Chai, J., Schluter, N., Tetreault, J.R., Eds.; Association for Computational Linguistics: Stroudsburg, PA, USA, 2020; pp. 3386–3403.

24. Alamri, M.; Teahan, W. Automatic Correction of Arabic Dyslexic Text. Computers 2019, 8, 19.

25. Ferraro, G.; Nazar, R.; Alonso Ramos, M.; Wanner, L. Towards advanced collocation error correction in Spanish learner corpora. Lang. Resour. Eval. 2014, 48, 45–64.

26. Cho, K.; van Merrienboer, B.; Gulcehre, C.; Bahdanau, D.; Bougares, F.; Schwenk, H.; Bengio, Y. Learning Phrase Representations using RNN Encoder–Decoder for Statistical Machine Translation. In Proceedings of the 2014 Conference on Empirical Methods in Natural Language Processing (EMNLP), Doha, Qatar, 25 October 2014; Moschitti, A., Pang, B., Daelemans, W., Eds.; Association for Computational Linguistics: Stroudsburg, PA, USA, 2014; pp. 1724–1734.

27. Bahdanau, D.; Cho, K.; Bengio, Y. Neural Machine Translation by Jointly Learning to Align and Translate. arXiv 2015, arXiv:1409.0473.

28. Reese, S.; Boleda, G.; Cuadros, M.; Padró, L.; Rigau, G. Wikicorpus: A Word-Sense Disambiguated Multilingual Wikipedia Corpus. In Proceedings of the International Conference on Language Resources and Evaluation, LREC 2010, Valletta, Malta, 17–23 May 2010; Calzolari, N., Choukri, K., Maegaard, B., Mariani, J., Odijk, J., Piperidis, S., Rosner, M., Tapias, D., Eds.; European Language Resources Association: Paris, France, 2010.

29. Atserias, J.; Casas, B.; Comelles, E.; González, M.; Padró, L.; Padró, M. FreeLing 1.3: Syntactic and semantic services in an open-source NLP library. In Proceedings of the Fifth International Conference on Language Resources and Evaluation, LREC 2006, Genoa, Italy, 22–28 May 2006; Calzolari, N., Choukri, K., Gangemi, A., Maegaard, B., Mariani, J., Odijk, J., Tapias, D., Eds.; European Language Resources Association (ELRA): Paris, France, 2006; pp. 2281–2286.

30. Agirre, E.; Soroa, A. Personalizing PageRank for Word Sense Disambiguation. In Proceedings of the EACL 2009 12th Conference of the European Chapter of the Association for Computational Linguistics, Athens, Greece, 30 March–3 April 2009; Lascarides, A., Gardent, C., Nivre, J., Eds.; The Association for Computer Linguistics: Stroudsburg, PA, USA, 2009; pp. 33–41.

31. Miranda-Escalada, A.; Gonzalez-Agirre, A.; Armengol-Estapé, J.; Krallinger, M. Overview of Automatic Clinical Coding: Annotations, Guidelines, and Solutions for non-English Clinical Cases at CodiEsp Track of CLEF eHealth 2020. In Proceedings of the Working Notes of CLEF 2020 - Conference and Labs of the Evaluation Forum, Thessaloniki, Greece, 22–25 September 2020; Cappellato, L., Eickhoff, C., Ferro, N., Névéol, A., Eds.; CEUR-WS: Aachen, Germany, 2020; Volume 2696.

32. Intxaurrondo, A.; Marimon, M.; Gonzalez-Agirre, A.; López-Martin, J.A.; Rodriguez, H.; Santamaria, J.; Villegas, M.; Krallinger, M. Finding Mentions of Abbreviations and Their Definitions in Spanish Clinical Cases: The BARR2 Shared Task Evaluation Results. In Proceedings of the Third Workshop on Evaluation of Human Language Technologies for Iberian Languages (IberEval 2018) Co-Located with 34th Conference of the Spanish Society for Natural Language Processing (SEPLN 2018), Sevilla, Spain, 18 September 2018; Rosso, P., Gonzalo, J., Martinez, R., Montalvo, S., de Albornoz, J.C., Eds.; SEPLN: Jaén, Spain, 2018; Volume 2150, pp. 280–289.

33. Řehůřek, R.; Sojka, P. Software Framework for Topic Modelling with Large Corpora. In Proceedings of the LREC 2010 Workshop New Challenges for NLP Frameworks, Valletta, Malta, 22 May 2010; University of Malta: Valletta, Malta, 2010; pp. 46–50.

34. Bird, S. NLTK: The Natural Language Toolkit. In Proceedings of the ACL 2006 21st International Conference on Computational Linguistics and 44th Annual Meeting of the Association for Computational Linguistics, Sydney, Australia, 17–21 July 2006; Calzolari, N., Cardie, C., Isabelle, P., Eds.; Association for Computational Linguistics: Morristown, NJ, USA, 2006; pp. 69–72.

35. Klein, G.; Kim, Y.; Deng, Y.; Senellart, J.; Rush, A. OpenNMT: Open-Source Toolkit for Neural Machine Translation. In Proceedings of the 55th Annual Meeting of the Association for Computational Linguistics, ACL 2017, Vancouver, BC, Canada, 30 July–4 August 2017; Bansal, M., Ji, H., Eds.; System Demonstrations. Association for Computational Linguistics: Stroudsburg, PA, USA, 2017; pp. 67–72.

36. Yuan, Z.; Felice, M. Constrained Grammatical Error Correction using Statistical Machine Translation. In Proceedings of the Seventeenth Conference on Computational Natural Language Learning: Shared Task, CoNLL 2013, Sofia, Bulgaria, 8–9 August 2013; Ng, H.T., Tetreault, J.R., Wu, S.M., Wu, Y., Hadiwinoto, C., Eds.; Association for Computational Linguistics: Stroudsburg, PA, USA, 2013; pp. 52–61.

37. Ooms, J. The Hunspell Package: High-Performance Stemmer, Tokenizer, and Spell Checker for R. Available online: https://cran.r-project.org/web/packages/hunspell/vignettes/intro.html (accessed on 4 March 2021).

38. Wang, C.; Cho, K.; Gu, J. Neural Machine Translation with Byte-Level Subwords. In Proceedings of the Thirty-Fourth AAAI Conference on Artificial Intelligence, AAAI 2020, the Thirty-Second Innovative Applications of Artificial Intelligence Conference, IAAI 2020, the Tenth AAAI Symposium on Educational Advances in Artificial Intelligence, EAAI 2020, New York, NY, USA, 7 February 2020; pp. 9154–9160.

39. Cardellino, C. Spanish Billion Words Corpus and Embeddings. Available online: https://crscardellino.github.io/SBWCE/ (accessed on 7 March 2021).

40. Cañete, J.; Chaperon, G.; Fuentes, R.; Ho, J.-H.; Kang, H.; Pérez, J. Spanish Pre-Trained BERT Model and Evaluation Data. In Proceedings of the PML4DC at ICLR 2020, Addis Ababa, Ethiopia, 26 April 2020.

41. García-Díaz, J.A.; Cánovas-García, M.; Valencia-García, R. Ontology-driven aspect-based sentiment analysis classification: An infodemiological case study regarding infectious diseases in Latin America. Future Gener. Comput. Syst. 2020, 112, 641–657.

42. García-Díaz, J.A.; Cánovas-García, M.; Colomo-Palacios, R.; Valencia-García, R. Detecting misogyny in Spanish tweets. An approach based on linguistics features and word embeddings. Future Gener. Comput. Syst. 2021, 114, 506–518.

43. Apolinario-Arzube, Ó.; García-Díaz, J.A.; Medina-Moreira, J.; Luna-Aveiga, H.; Valencia-García, R. Comparing Deep-Learning Architectures and Traditional Machine-Learning Approaches for Satire Identification in Spanish Tweets. Mathematics 2020, 8, 2075.

44. Lee, J.; Yoon, W.; Kim, S.; Kim, D.; Kim, S.; So, C.H.; Kang, J. BioBERT: A pre-trained biomedical language representation model for biomedical text mining. Bioinformatics 2020, 36, 1234–1240.

45. García-Sánchez, F.; Colomo-Palacios, R.; Valencia-García, R. A social-semantic recommender system for advertisements. Inf. Process. Manag. 2020, 57, 102153.

| Study | Corpora | Methods | Language |
|---|---|---|---|
| [7] | Corpus of clinical records of the Emergency Department at the Concord Hospital, Sydney. | Rule-based system and frequency-based method for detection and correction, respectively. | English |
| [8] | Brigham Young University bigram and trigram corpus. | Correction frequency-based method: bigram and trigram counting. | English |
| [13] | Free-text allergy entries from Allergy Repository (PEAR). | Dictionary look-up detection method and noisy channel correction method. | English |
| [14] | Clinical free-text from the Medical Information Mart for Intensive Care III (MIMIC-III) database. | No detection method. Word embedding cosine similarity for correction. | English |
| [11] | Sentences extracted from Basque news websites. | Correction with seq2seq Neural Machine Translation model. | Basque |
| [23] | Sentence pairs from 1B Word Benchmark. | BERT, RoBERTa and ELMo encoders and feature/fine-tuning based training for error location. | English |
| [24] | Bangor Dyslexic Arabic Corpus (BDAC). | Dictionary look-up detection method. PPM language model, edit operations and compression codelength for correction. | Arabic |
| [25] | Corpus Escrito del Español L2 (CEDEL2). | Error detection based on the frequency of n-grams. Affinity, lexical context and context feature metrics to select candidate correction. | Spanish |

| Dataset | Cases | Sentences | Tokens |
|---|---|---|---|
| CodiEsp | 3751 | 50,052 | 1,233,201 |
| MEDDOCAN | 1000 | 33,000 | 495,000 |
| SPACCC | 20,900 | 20,900 | 436,000 |

| Error Type | Substring Replacement | Erroneous Sentence |
|---|---|---|
| Grammatical gender | -ido → -ida | Dicho traspaso se realiza unida al de su compañero. |
| | -eras → -eros | Las aguas del oeste son traicioneros. |
| Number | -e → -es | La aeronaves terminó contra un muro de contención. |
| | -des → -d | Consciente de sus numerosas virtud. |
| Grammatical gender-number | -osos → -osa | Se caracterizan por tener diseños agresivos y sinuosa. |
| | -ado → -adas | El proyecto fue abandonadas hace años. |
| Homophones | a → ha | Es una de las compañías más importantes ha nivel mundial. |
| | -n- → -nh- | Receta de pollo con lima y enhebro. |
| Hunspell | calle → callé | Más de la mitad de la gente vive ahora en la callé. |
| | agravio → agrado | El agrado costó el reemplazo de los muebles. |
| Subject-verb concordance | -an → -as | Tanto las mujeres como los hombres las llevas. |
| | -aba → -ábamos | Peleábamos con los invitados para divertirse. |

| Wikicorpus | E1 | E2 | E3 | E4 | E5 | E6 |
|---|---|---|---|---|---|---|
| Strategy 1 | 1,952,506 | 3,196,193 | 2,027,014 | 1,712,850 | 2,753,397 | 1,786,375 |
| Strategy 2 | 472,376 | 788,092 | 492,374 | 415,709 | 671,898 | 430,993 |

| Medicine | E1 | E2 | E3 | E4 | E5 | E6 |
|---|---|---|---|---|---|---|
| Strategy 1 | 64,439 | 100,871 | 71,027 | 27,609 | 90,917 | 67,849 |
| Strategy 2 | 18,537 | 24,261 | 19,704 | 6000 | 20,004 | 18,852 |

| | Training | Validation * | Test | Total |
|---|---|---|---|---|
| Wikicorpus | | | | |
| Strategy 1 | 14,181,497 | 5000 | 10,000 | 14,196,497 |
| Strategy 2 | 3,512,147 | 5000 | 10,000 | 3,527,147 |
| Medicine corpus | | | | |
| Strategy 1 | 276,835 | 5000 | 9533 | 291,368 |
| Strategy 2 | 75,062 | 5000 | 9533 | 89,595 |
| Medicine-Wikicorpus | | | | |
| Strategy 1 | 553,670 | 5000 | 10,000 | 568,670 |
| Strategy 2 | 150,124 | 5000 | 10,000 | 165,124 |

| Error Type | Result | Sentence (Source/Target/seq2seq Correction) |
|---|---|---|
| Grammatical genre | Success | Source: “Se procede a la electrocoagulación de varias áreas con sangrado activa” Target: “Se procede a la electrocoagulación de varias áreas con sangrado activo” Seq2seq correction: “Se procede a la electrocoagulación de varias áreas con sangrado activo” |
| | Failure | Source: “Estabilización y traslada a planta de medicina interna” Target: “Estabilización y traslado a planta de medicina interna” Seq2seq correction: “Estabilización y traslada a planta de medicina interna” |
| Number | Success | Source: “No obstante el pacientes se encuentra tranquilo en actitud plácida” Target: “No obstante el paciente se encuentra tranquilo en actitud plácida” Seq2seq correction: “No obstante el paciente se encuentra tranquilo en actitud plácida” |
| | Failure | Source: “Sin antecedente de enfermedades cronicodegenerativas” Target: “Sin antecedentes de enfermedades cronicodegenerativas” Seq2seq correction: “Sin antecedente de enfermedades cronicodegenerativas” |
| Grammatical genre-number | Success | Source: “Los cultiva del LCR fueron negativos” Target: “Los cultivos del LCR fueron negativos” Seq2seq correction: “Los cultivos del LCR fueron negativos” |
| | Failure | Source: “La movilidad facial estaba conservados” Target: “La movilidad facial estaba conservada” Seq2seq correction: “La movilidad facial estaba conservados” |
| Homophones | Success | Source: “Durante un año de seguimiento a presentado esputos hemoptoicos ocasionales” Target: “Durante un año de seguimiento ha presentado esputos hemoptoicos ocasionales” Seq2seq correction: “Durante un año de seguimiento ha presentado esputos hemoptoicos ocasionales” |
| | Failure | Source: “Tampoco refiere el huso de algún fármaco de forma usual ni alergias” Target: “Tampoco refiere el uso de algún fármaco de forma usual ni alergias” Seq2seq correction: “Tampoco refiere el uso de algún fármaco en forma usual ni alergias” |
| Hunspell | Success | Source: “En la observación genera, el abdomen se presentaba blando e indoloro” Target: “En la observación general, el abdomen se presentaba blando e indoloro” Seq2seq correction: “En la observación general, el abdomen se presentaba blando e indoloro” |
| | Failure | Source: “Tras la administración de contraste la amas realzaba su densidad” Target: “Tras la administración de contraste la masa realzaba su densidad” Seq2seq correction: “Tras la administración de contraste la amas realzaba su densidad” |
| Subject-verb concordance | Success | Source: “Se realizo ECO doppler y angioma TAC” Target: “Se realiza ECO doppler y angioma TAC” Seq2seq correction: “Se realiza ECO doppler y angioma TAC” |
| | Failure | Source: “Se realizaron una artrocentesis bajo control de radioscopia con anestesia general” Target: “Se realizó una artrocentesis bajo control de radioscopia con anestesia general” Seq2seq correction: “Se realizaron una artrocentesis bajo control de radioscopia con anestesia general” |

| Training Strategy | | | | | |
|---|---|---|---|---|---|
| Wikicorpus | Strategy 1 | - | 37.61 | 70.19 | 59.77 |
| | | GloVe | 12.79 | 21.71 | 19.05 |
| | | W2V | 18.88 | 39.72 | 32.54 |
| | Strategy 2 | - | 23.58 | 46.04 | 38.67 |
| | | GloVe | 21.16 | 41.65 | 34.89 |
| | | W2V | 23.84 | 48.42 | 40.15 |
| Medicine corpus | Strategy 1 | - | 46.06 | 65.68 | 60.53 |
| | | GloVe | 15.79 | 17.95 | 17.48 |
| | | W2V | 40.07 | 54.31 | 50.71 |
| | Strategy 2 | - | 50.92 | 69.79 | 64.98 |
| | | GloVe | 21.58 | 24.71 | 24.01 |
| | | W2V | 46.69 | 61.82 | 58.08 |
| Medicine-Wikicorpus | Strategy 1 | - | 20.61 | 45.83 | 36.82 |
| | | GloVe | 3.76 | 4.61 | 4.41 |
| | | W2V | 14.88 | 24.79 | 21.88 |
| | Strategy 2 | - | 27.92 | 56.33 | 46.81 |
| | | GloVe | 10.63 | 13.36 | 12.70 |
| | | W2V | 23.36 | 40.92 | 35.57 |
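The three numeric columns in the table above carry no header labels here. A quick arithmetic check suggests they are consistent with recall, precision, and an F0.5 score, in that order. This reading is an inference from the numbers, not something the table states, and the sketch below only tests it on three sample rows. The function name `f_beta` and the choice of rows are illustrative.

```python
def f_beta(precision, recall, beta=0.5):
    """F-beta score; beta < 1 weights precision more heavily than recall."""
    if precision == 0 and recall == 0:
        return 0.0
    b2 = beta ** 2
    return (1 + b2) * precision * recall / (b2 * precision + recall)


# (first, second, third) numeric columns of three Wikicorpus rows from the table.
rows = [
    (12.79, 21.71, 19.05),  # Strategy 1, GloVe
    (18.88, 39.72, 32.54),  # Strategy 1, W2V
    (23.58, 46.04, 38.67),  # Strategy 2, "-" embeddings row
]

for first, second, third in rows:
    # Assumption: the first column is recall and the second is precision.
    computed = f_beta(precision=second, recall=first)
    print(f"computed={computed:.2f} reported={third:.2f}")
```

Under that assumption, the computed values for these rows agree with the third column to within rounding. Swapping the roles of the first two columns does not reproduce the reported values.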

| Training Strategy | | | E1 | E2 | E3 | E4 | E5 |
|---|---|---|---|---|---|---|---|
| Wikicorpus | Strategy 1 | Before | 63.22 | 72.67 | 64.87 | 89.92 | 46.03 |
| | | After | 54.41 | 65.30 | 50.81 | 86.91 | 78.92 |
| | Strategy 2 | Before | 63.13 | 73.83 | 65.81 | 42.28 | 29.72 |
| | | After | 51.29 | 67.36 | 49.68 | 73.74 | 70.99 |
| Medicine corpus | Strategy 1 | Before | 65.62 | 77.22 | 71.80 | 84.88 | 61.92 |
| | | After | 50.02 | 71.96 | 62.14 | 77.09 | 77.53 |
| | Strategy 2 | Before | 73.59 | 82.82 | 81.59 | 81.66 | 41.04 |
| | | After | 56.52 | 74.78 | 67.16 | 72.44 | 74.42 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Bravo-Candel, D.; López-Hernández, J.; García-Díaz, J.A.; Molina-Molina, F.; García-Sánchez, F. Automatic Correction of Real-Word Errors in Spanish Clinical Texts. Sensors 2021, 21, 2893. https://doi.org/10.3390/s21092893
