Abstract

The online system state initialization and simultaneous spatial-temporal calibration are critical for monocular Visual-Inertial Odometry (VIO) since these parameters are either not well provided or even unknown. Although impressive performance has been achieved, most of the existing methods are designed for filter-based VIOs. For the optimization-based VIOs, there is not much online spatial-temporal calibration method in the literature. In this paper, we propose an optimization-based online initialization and spatial-temporal calibration method for VIO. The method does not need any prior knowledge about spatial and temporal configurations. It estimates the initial states of metric-scale, velocity, gravity, Inertial Measurement Unit (IMU) biases, and calibrates the coordinate transformation and time offsets between the camera and IMU sensors. The work routine of the method is as follows. First, it uses a time offset model and two short-term motion interpolation algorithms to align and interpolate the camera and IMU measurement data. Then, the aligned and interpolated results are sent to an incremental estimator to estimate the initial states and the spatial–temporal parameters. After that, a bundle adjustment is additionally included to improve the accuracy of the estimated results. Experiments using both synthetic and public datasets are performed to examine the performance of the proposed method. The results show that both the initial states and the spatial-temporal parameters can be well estimated. The method outperforms other contemporary methods used for comparison.

1. Introduction

Monocular Visual-Inertial Odometry (VIO) is an important topic in the robotics and the computer vision community. Its goal is to estimate the incremental motion and reconstruct scene structure by fusing measurements collected from a camera and an Inertial Measurement Unit (IMU). Previously, VIO has been successfully used in applications like unmanned aerial robots [1,2], autonomous or semi-autonomous driving [3,4], 3D reconstruction [5,6], and augmented reality [7,8]. The performance of monocular VIOs heavily relies on the accuracy of the initial states (including metric-scale, velocity, gravity, gyroscope bias, and accelerometer bias) and the spatial-temporal parameters between the camera and IMU. Thus, developing convenient and efficient methods to exactly acquire the values of these parameters is highly demanded. First, an online initialization process is needed to estimate the initial states for bootstrapping the fusion process, since otherwise, the metric-scale of camera measurements is ambiguous, which may cause the optimization sink into a local minimal solution. Second, the spatial-temporal parameters must be both calibrated. In particular, the temporal parameters are used to align different sensor data. The timestamps of the sensor data are taken either from an internal clock in the sensor or from the Operating System (OS) that receives the sensor data. Due to the unsynchronized clocks, transmission delays, sensor response, and OS overhead, latency exists between the actual sampling instant of the sensor data and the timestamp of the captured data; thus, the captured data (i.e., the measurement data) from the camera and IMU are usually misaligned. An example of temporal misalignment in the sensor data is shown in Figure 1. If the spatial-temporal parameters are not considered or incorrectly calibrated, the performance of mapping and navigation would be significantly impaired.

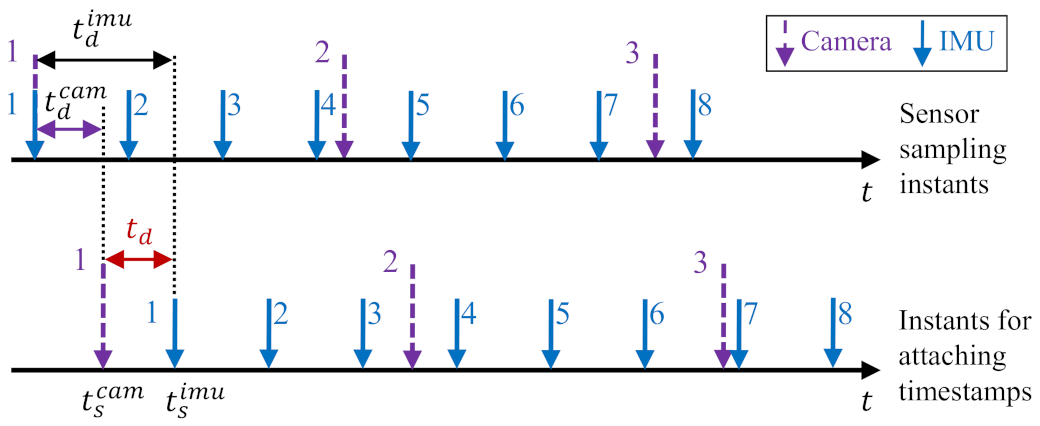

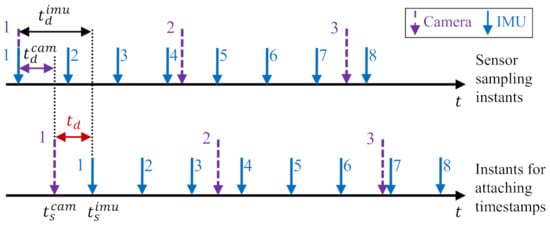

Figure 1.

An example of temporal misalignment in the sensor data. The upper plot represents the sensor sampling instants. The lower plot shows instants for attaching timestamps. The timestamped data are essentially the sensor measurements obtained by users. The meanings of the notations are as follows. and are, respectively, the latency of Inertial Measurement Unit (IMU) and camera. is the time offset between the stamped IMU and camera data. These two timestamped data can be aligned by shifting the camera data with offset or shifting the IMU data with offset.

There have been several publications studying the initialization and calibration problems. The early ones [9,10,11,12,13] used offline methods to obtain the spatial-temporal parameters. They required a professional user to carefully move the sensor suite in front of a stationary visual calibration target, which is troublesome in usual deployment and dangerous in cases of emergency. To overcome the disadvantages, several online methods were developed. The online methods assumed that the measurements from a camera and an IMU were well synchronized (e.g., [14,15,16,17,18,19]), or the extrinsic spatial parameter was known in advance (e.g., [20,21,22]), or both conditions were satisfied (e.g., [23,24,25,26,27,28]). In the case where both the measurements from different sensors are asynchronous and the extrinsic spatial parameter between different sensors is unknown, most of the existing methods [29,30,31,32,33,34] are designed for filter-based VIOs since they are usually built on the Multi-State Constraint Kalman Filter (MSCKF [35]) framework. They perform the state propagation/prediction by integrating IMU measurements and perform the state update/correction by using visual measurements. However, for the optimization-based VIOs, which use Bundle Adjustment (BA) to minimize the IMU preintegration errors and feature/photometric reprojection errors and thus have higher accuracy than filter-based ones, there is not much online simultaneous spatial-temporal calibration work in the literature.

Motivated by the situation, we in this paper propose an online initialization and calibration method for optimization-based VIO considering spatial-temporal constraints. The method simultaneously estimates the initial states and calibrates the spatial-temporal parameters during the system bootstrapping. It does not need professional knowledge and tedious offline preparations, thus enabling us to build “power-on-and-go” robots that can operate autonomously and instantaneously. The proposed method is carried out sequentially in three steps. First, our method introduces a time offset model and proposes two short-term sensor motion interpolation algorithms for aligning the asynchronous camera and IMU measurements, based on an assumption that the sensor suite moves in constant angular and linear velocities between consecutive frames with short-term intervals. The two sensor motion interpolation algorithms, i.e., the camera motion interpolation and the IMU motion interpolation, respectively, interpolate the camera pose and IMU pose at arbitrary intermediate times inside each short-term interval. By further considering the unknown metric-scale of camera pose in the camera motion interpolation algorithm, the transformation relationship between the camera and IMU at any timestamp is formulated as a function of the temporal parameter. Second, an incremental estimator considering the temporal misalignment between different sensor data is introduced to estimate the initial states and the spatial-temporal parameters. The incremental estimator is performed repeatedly each time a new keyframe is detected. It first estimates the extrinsic rotation, time offset, and gyroscope bias by minimizing the rotation difference between the camera and IMU. Then, it estimates metric-scale, gravity, and spatial translation by ignoring accelerometer bias. Finally, it refines these values and further estimates the accelerometer bias by taking the gravitational magnitude into account. Third, a bundle adjustment is used to improve the accuracy of the initialization and fuse the visual and inertial measurements. By minimizing both the IMU preintegration error and the feature reprojection error, it can globally or locally optimize the IMU states (including position, rotation, velocity, and biases), the spatial-temporal parameters, and the reconstructed map.

The main contributions of this article are in algorithmic implements:

- We propose an online method for bootstrapping the optimization-based monocular VIO system, which can simultaneously estimate the initial states and calibrate the spatial and temporal parameters between the camera and IMU sensors.

- The time offset is modeled, and two short-term motion interpolation algorithms considering both the temporal parameter and the unknown metric-scale are proposed to interpolate the camera and IMU pose at an arbitrary intermediate time, which enable us to align the camera poses and IMU poses.

- A three-step incremental estimator is introduced to estimate the initial states and the spatial-temporal parameters using the aligned poses.

- A tightly-coupled nonlinear optimization algorithm is additionally included to improve the accuracy of the estimated results.

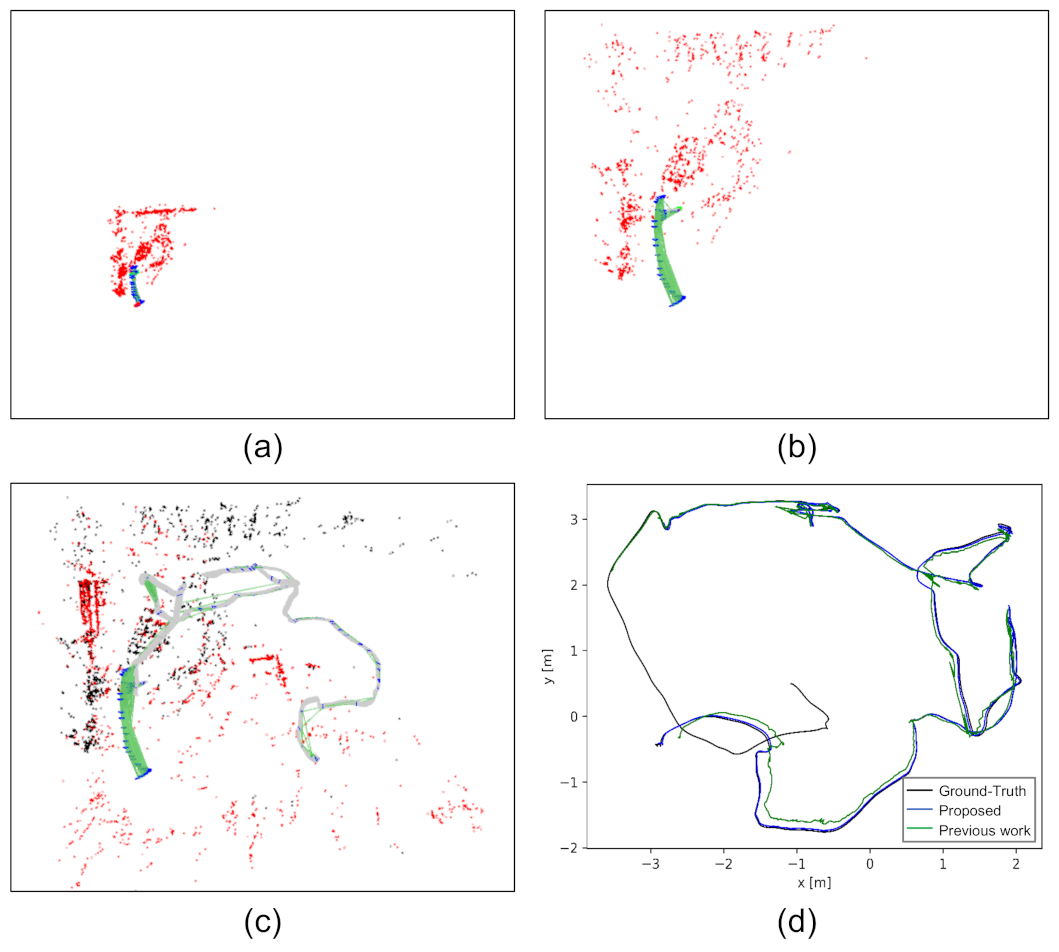

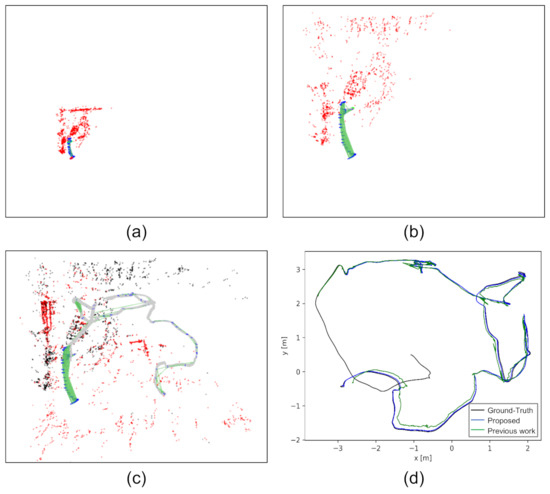

In the experimental section, quantitative analysis and comparison using synthetic sequences, public datasets, and real visual-inertial sensors are performed to examine the proposed method. The results show the efficacy and efficiency in estimating both the initial states and extrinsic spatial-temporal parameters. For intuitive understanding, an example of the estimated maps before and after applying the proposed method is shown in Figure 2. The map estimated by a pure monocular visual odometry (VO) front-end during initialization is shown in Figure 2a. It is subject to a problem of ambiguous scale. The metric-scale can be recovered by applying the proposed incremental estimator, and a real-sized map is found, as shown in Figure 2b. After initialization, the subsequent measurements are processed by the visual-inertial bundle adjustment. The result is a stitched map shown in Figure 2c. The top-view of the estimated trajectory compared with the ground-truth and our previous work [36] that considered spatial constraint only is shown in Figure 2d, which indicates that the trajectory estimated by simultaneously considering spatial-temporal constraints is more accurate.

Figure 2.

An example of the estimated maps before and after applying the proposed method. The data are from the V2_01 sequence of the EuRoC dataset [37] with a time offset of 45 ms. (a) The map estimated by monocular VO. It is subjected to ambiguous scales. (b) The map after initialization. The metric-scale was recovered. (c) The map constructed by the proposed method on the whole sequence. (d) A top-view of the estimated trajectory (blue curve) compared with the ground-truth (black curve) and our previous work [36] (green curve, which calibrates the extrinsic spatial parameter only). Best viewed in color.

The remaining part of this paper is organized as follows: Section 2 reviews the related work. Section 3 presents the time offset model, the short-term sensor motion interpolation algorithms, and the transformation equations developed based on the model and interpolations. Section 4 introduces the preliminary knowledge about the IMU measurement model and the IMU preintegration and presents the incremental estimation of the initial states and the extrinsic spatial-temporal parameters. Section 5 presents the bundle adjustment. Experiments and analyses are performed in Section 6. Conclusions and future work are described in Section 7.

2. Related Work

According to the working routine, previous VIO calibration methods can be divided into offline and online methods. Offline methods have a long history. For example, Mirzaei et al. [38] performed an observability analysis for rigid transformation between the camera and IMU. They showed that under certain motion, the rigid transformation is not fully observable. Furgale et al. [9,10] developed an open-source camera/IMU calibration toolbox named Kalibr (https://github.com/ethz-asl/kalibr, accessed on 14 February 2021), to perform the temporal and spatial calibration for a visual-inertial sensor in an offline manner. By using a continuous-time basis function representation [39] of the sensor trajectory, Rehder et al. [12,13] extended the toolbox to calibrate both the extrinsic and intrinsic parameters of multiple IMUs.The drawback of offline calibration is the need for additional calibration steps and assistive devices. In addition, a recalibration must be performed every time the configuration of a sensor suite is changed. To avoid inconvenience, researchers switched to online methods.

Online methods estimate the extrinsic parameters at the arrival of each new sensor measurements. It invalidates the need for assistive devices and, at the same time, maintains high accuracy, thereby making the odometry more robust and easier to use. According to the unknown parameters, the online method for monocular VIOs can be divided into online spatial calibration, online temporal calibration, and simultaneous online spatial-temporal calibration.

For online spatial calibration, Kelly et al. [15] proposed a self-calibration method based on the unscented Kalman filter. The method showed that the full observability of spatial parameters required the sensor suite to undergo both the rotation and acceleration on at least two IMU axes. Li et al. [16,17] estimated the spatial parameters along with a sliding window of sensor poses using an extended Kalman filter algorithm. It achieved consistent estimation by ensuring the correct observability properties of its linearized system model. Yang and Shen [18] calibrated the spatial parameters and the initial states (except for IMU biases) with an optimization-based linear estimator. In their extended batch-based monocular VINS (termed VINS-Mono) [40], the IMU biases are included in a sliding window nonlinear estimator. Schneider et al. [41] introduced information-theoretic metrics to assess the information content of trajectory segments, thus allowing them to select the most informative parts from a dataset for extrinsic spatial calibration purposes. Huang et al. [19] proposed an estimator to incrementally solve several linear equations to estimate the spatial parameters between an IMU and two cameras.

For online temporal calibration, Kelly et al. [20] formulated the calibration problem as a registration task. Their method leveraged a variant of iterative closet point algorithm, termed TD-ICP, to gradually match the three-dimensional orientation curves of camera and IMU. Mair et al. [42] presented and compared two approaches, i.e., cross-correlation and phase congruence, to temporally align the camera and the gyroscope, which showed that the cross-correlation based approach was more suitable for short delay. Ling et al. [21] presented a time-varying model for temporal calibration. This approach can handle the rolling-shutter effects and imperfect sensor synchronization in a nonlinear optimization algorithm. Qin et al. [22] extended [40] to include estimating the time offset between the camera and IMU by interpolating the location of image features. The method treated the time offset as a vision factor and calibrated it online along with features and sensor poses in an optimization-based VIO framework. Liu et al. [34] proposed an online temporal calibration method that was integrated into the filter-based VIO framework (S-MSCKF [43]). To ensure the consistency between the IMU measurement constraint and the visual measurement constraint, the authors established a modified feature projection model considering time offset and introduced an interpolation-based algorithm for inferring the 2D observations of features on a virtual image.

Although various sophisticated online approaches for either spatial calibration or temporal calibration have been proposed, drawbacks remain. Online spatial calibration methods [15,16,17,18,19] require the measurements from different sensors to be strictly synchronized, while online temporal calibration methods [20,21,22] assume the relative transformations between different sensors are prerequisites. In cases of low-cost and self-assembled devices, accurate factory calibration and hardware synchronization are not available. Such devices inspire the study of simultaneous online spatial-temporal calibration. For example, Li et al. [29,30] treated the time offset as an additional state variable to be estimated along with IMU pose, velocity, biases, feature positions, and extrinsic spatial parameters. To compute the feature residual, the authors performed the propagation using the IMU measurements up to the estimated time offset. Li’s works also showed that the time offset was locally identifiable, except in a small number of degenerate cases like constant-velocity motion. Zuo et al. [31] developed a Lidar-inertial-camera odometry that could refine the spatial-temporal parameters online along with sensor pose estimation. Yang et al. [32] analyzed the observability of spatial-temporal parameters and showed that they were both observable if the sensor platform underwent fully random motion. The authors also identified four degenerate motions that were harmful to the calibration accuracy. Eckenhoff et al. [33] used interpolation on the manifold to represent anytime IMU poses and successfully performed the spatial-temporal calibration for a multi-camera system. The short-term motion interpolation used in our paper is inspired by [33]. We extend it to interpolate the to-scale camera poses and thus can represent anytime camera poses and express the IMU pose by transforming the camera pose at the true time. We estimate the initial states and extrinsic parameters using the true time IMU-camera pose relations.

Although impressive performance has been achieved in the above-mentioned simultaneous online spatial-temporal calibration methods, most of them are designed for filter-based VIOs. For optimization-based VIOs, there is not much online spatial-temporal calibration method in the literature. Motivated by this situation, we, in this paper, propose an incremental estimator that simultaneously considers the spatial-temporal constraints and develops optimization-based algorithms. Our method can solve the calibration problem when both the spatial and temporal parameters are unknown. To the best of our knowledge, similar methods are less seen in modern literature. The only publication that has a similar conception is Feng’s method [44], which estimated the extrinsic rotation and time offset by minimizing the quaternion rotation difference between the camera and IMU. The method used a loosely coupled approach described in [45] to recover the initial states and used a nonlinear optimization algorithm to minimize sensor noises. Different from Feng’s method, our focus is the time offset among the sensors. We model the time offset and use the temporal constraints to interpolate the poses of the camera and IMU. The systematic accuracy and robustness are improved by taking the time offset into account. Compared with [22,30,34], our method directly performs the motion interpolation on the camera poses and IMU poses for compensating the time offset. By considering the time offset and the unknown metric-scale factor in the camera motion interpolation algorithm, it benefits us to inherit the incremental estimation routine introduced in our previous work [36]. Owing to the interpolation on camera poses, the proposed incremental estimator can be easily applied to the popular pure visual SLAM/Odometry frameworks (e.g., LSD-SLAM [46], ORB-SLAM [47,48,49], DSO [50], SVO [51,52]) to conduct visual-inertial systems since the input of our estimator is merely the IMU measurements and the keyframe poses. These frameworks can provide accurate up-to-scale camera pose and serve as the VO front-end for our incremental estimator.

3. Short-Term Sensor Motion Interpolation

This section introduces the short-term sensor motion interpolation algorithm. First, the time offset model used to align the camera and IMU data are presented. Then, two motion interpolation algorithms are introduced to, respectively, interpolate the camera and IMU poses. After that, the transformation relationship between the camera and IMU is derived by using the interpolated poses.

The meanings of the various symbols used in this section are as follows. denotes the states expressed in the world reference frame . It coincides with the camera coordinate system of the first keyframe. is the pose of the camera frame expressed in at time , where and are, respectively, the camera rotation and position. s is the metric-scale used to present the scale ambiguity in the camera position. is the pose of the IMU body frame expressed in at time . is the relative transformation between the camera and IMU, i.e., the extrinsic spatial parameter that should be calibrated. For convenience, the inverse representations, e.g., , are also used in some equations. The time offset between the camera and IMU measurements is denoted as . The relative transformation and time offset are fixed but unknown.

3.1. Time Offset Model

As previously shown in Figure 1, the camera and IMU in a monocular VIO sensor suite, respectively, provide discrete samplings in a constant frequency. Due to the unsynchronized clocks, transmission delays, sensor response, and operating system overhead, there exists a latency that makes the measured (i.e., timestamped) data misalign with the sampling instants. Consider the camera and the IMU measurements sampled at the same instant t, their timestamps and can be modeled as:

where and are, respectively, the latencies of camera and IMU. The time offset thus equals to:

Equations (1) and (2) indicate that the camera and IMU data can be aligned by shifting the camera data with offset or shifting the IMU data with offset, namely:

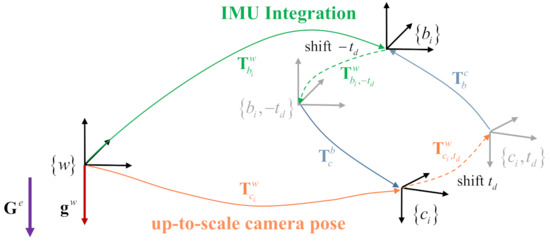

Here, is the camera pose at time . is the IMU pose at time . Equation (3) indicates that an IMU pose at time is corresponded to the camera pose at time . Equation (4) indicates that a camera pose at time corresponds to the IMU pose at time . The transformation relationships between the camera and IMU coordinate frames when considering the time offset are illustrated in Figure 3.

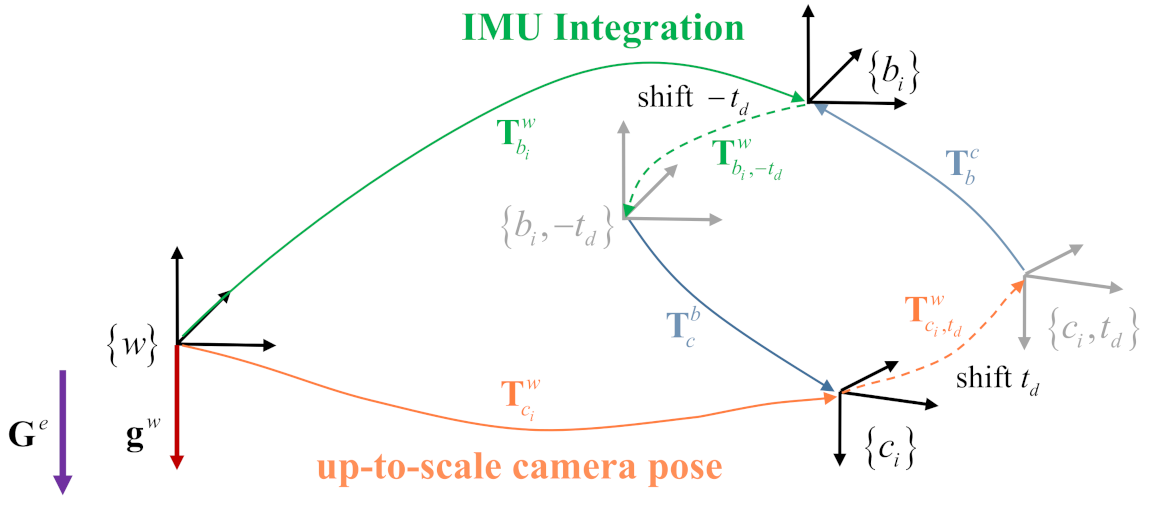

Figure 3.

Transformation relationships between the camera and IMU coordinate frames when considering the time offset. The camera and IMU poses can be aligned by shifting the camera pose with offset or shifting the IMU pose with offset. The world reference frame coincides with the first keyframe’s coordinate system of the monocular visual odometry front-end. is the gravity expressed in the earth inertial reference frame .

3.2. Sensor Motion Interpolation

3.2.1. Camera Motion Interpolation

In this work, the camera is assumed to move in constant angular and linear velocities in a short period; therefore, the camera pose at an arbitrary time can be interpolated using its nearest camera poses. Consider two up-to-scale camera poses and estimated at timestamp and ; the camera angular velocity and linear velocity at are approximately

where is the “vectorized” version of logarithm map that transforms a rotation matrix to a vector , with and is the vee operator that maps a skew-symmetric matrix in to a vector in . Its reverse process is called a hat operator that maps a vector in to a skew-symmetric matrix. A property of skew-symmetric matrices that will be used is as follows: given two vectors , the cross-product can be expressed as . Note that the velocity term in the equation is computed using the up-to-scale camera positions. The metric-scale factor is taken into account in the derivation of spatial relationships between camera and IMU (see Equation (9)).

Using Equation (5), the camera rotation and position at time can be interpolated as follows:

3.2.2. IMU Motion Interpolation

Similarly, assuming the IMU moves in constant angular and linear velocities in a short period, the rotation and position at time can be interpolated as:

where and are, respectively, the angular and linear velocities of the IMU at .

3.3. Spatial Relationships between Camera and IMU

By considering the time offset and the metric-scale s, the rotation and position of the IMU at timestamp can be derived from a camera pose according to Equation (3) as follows:

Similarly, the camera pose at timestamp can be derived from an IMU pose according to Equation (4), as follows:

In the following sections, Equations (8) and (9) are leveraged in the incremental estimator. Equations (10) and (11) are used for the bundle adjustment. Note that since the metric-scale can be recovered by performing the incremental estimator and the camera position term in (11) is transformed from the IMU pose, the metric-scale term is removed.

4. Online Initialization and Extrinsic Spatial-Temporal Calibration

This section details how to combine the motion interpolation algorithms introduced in Section 3 and the estimator introduced in our previous work [36] to estimate the initial states and the spatial-temporal parameters.

For the reader’s convenience, we first briefly introduce the IMU preintegration theory in Section 4.1. Then, the incremental estimator for online initialization is derived in Section 4.2. The incremental update of time offset, velocity estimation, termination condition, and implementation note are discussed in Section 4.3.

4.1. IMU Preintegration

The idea of preintegrated IMU measurements was first proposed by Lupton et al. [53]. Its essence is to combine many inertial measurements between two keyframes into a single relative motion constraint. Lupton et al. used Euler angles to represent the IMU rotation. The representation was later extended by Forster et al. [54] using a manifold in to avoid singularity. In this paper, we follow the IMU measurement model and the kinematic model of the system motion given in [54]. In the following, we denote the IMU body frame as and the world reference frame as , and the gravitational acceleration in as . The gyroscope outputs and accelerometer outputs are, respectively, subject to white sensor noises and (normally assumed as Gaussian noise), and slow time-varying gyroscope bias and accelerometer bias .

Given a series of IMU measurements from time i to time j () and ignoring the sensor noises, the changes of IMU rotation , velocity , and position between the two time instants can be computed by:

where is the IMU sampling interval, and . is the “vectorized” version of exponential map that transforms a vector to a rotation matrix , with . In the equation, the Jacobians and indicate how the measurements change with respect to the bias estimation. , , and are the preintegrated terms during the time period . They can be computed directly using the IMU measurements as follows:

Here, the biases are assumed to remain the same during the time period . They can be represented as , and . and are the biases at time i. and are compensation terms used to compensate for the time-varying parts. In the following, the preintegrated terms will be used for incremental estimation and constructing the IMU preintegration error term of nonlinear functions.

4.2. Incremental Estimation

In the incremental estimator, both the spatial and temporal parameters between the camera and IMU, as well as the initial states of metric-scale, gravity, and IMU biases, are simultaneously estimated. The basic idea is to solve the equality constraints between the up-to-scale camera poses with the integrated IMU poses. First, we run the sensor suite for a few seconds with at least two-axis rotation plus some random translation. Several keyframes are collected in the process. After that, we perform the incremental estimator repeatedly across consecutive keyframes until the values to be estimated converge.

The incremental estimator is indeed developed from the three-step process proposed in our previous work [36]. In [36], the extrinsic spatial parameters and initial values were online-estimated under the assumption that the camera and IMU measurements were well aligned. Therefore, in the first step, it used an iterative strategy to individually estimate the gyroscope bias and the extrinsic rotation matrix. In each iteration, the last estimated extrinsic rotation matrix was utilized to estimate a gyroscope bias. Then the preintegrated rotations were rectified by applying the estimated gyroscope bias. After that, a linear over-determined equation was developed to calculate a new extrinsic rotation matrix. The iterative estimation was performed until the extrinsic parameters converged. In the other two steps, the up-to-scale camera poses were directly used to conduct the position relationship. Unlike the previous work, the measurement misalignment case is taken into account in the current work. In comparison, the incorporation of time offset is not simple addition. It requires changes in all aspects, which makes the steps very different from our published work. IN particular, when asynchrony appears between sensors, it is difficult to distinguish the temporal parameter and the extrinsic rotation matrix, and one cannot individually estimate the gyroscope bias, the temporal parameter, and the extrinsic rotation matrix through an iterative manner. To overcome the difficulty, the camera motion interpolation algorithm is utilized to compensate for the time offset. In the first step of the incremental estimator, the interpolated camera rotations (i.e., the Equation (8)) are adopted; thus the temporal parameter, the extrinsic rotation matrix, and the gyroscope bias can be estimated together by solving an optimization function that minimizes the relative rotation difference between the IMU rotation and the interpolated camera rotation. In the other two steps, we further apply the interpolated camera positions considering the unknown metric-scale factor (i.e., the Equation (9)) to calibrate the extrinsic translation parameters and other initial states from coarse to fine.

4.2.1. Estimating Gyroscope Bias, and Calibrating Extrinsic Rotation and Time Offset

In the incremental estimation, the gyroscope bias is assumed to have a constant value as it changes slowly over time. The rotation relationships of two consecutive keyframes at timestamp i and can be described as:

where and are the IMU rotations that are derived by transforming the interpolated camera rotations. By substituting the first equation of (12) into (14) and using the notation to replace the notation j, the difference between the preintegrated rotation and the transformed value is:

where and are, respectively, the inverse of and .

With N collected keyframes, the gyroscope bias compensation , extrinsic rotation and time offset can be estimated by minimizing the rotation difference for all keyframes as follows:

where is the information matrices associated with the preintegrated rotation. The Equation (16) is a nonlinear least-squares problem on Lie algebra. It can be solved by using the iterative methods, like the Gauss–Newton method or the Levenberg–Marquardt method [55]. In the first estimation, since we do not have any prior knowledge about the time offset and extrinsic rotation, the initial seeds for and are set to zero, and is set to an identity matrix. After that, the results obtained in the last estimation are used as initial seeds. Note that when Equation (16) is solved, the preintegrated terms described in (13) are re-computed by using the new estimated gyroscope bias, which facilitates the following steps. The Jacobians of needed by the iterative method are derived in Appendix A.4.

4.2.2. Approximating Scale, Gravity, and Extrinsic Translation

Once the extrinsic rotation and time offset have been found by Equation (16), the metric-scale s, gravity vector , and extrinsic translation can be approximately estimated by combining Equations (8), (9), and (12).

First, by substituting (8) and (9) into the third equation of (12), the position relationship between two consecutive keyframes i and can be obtained:

where is the result of (16). The items and are temporarily set to zero since the accelerometer bias is not considered for approximation. The accelerometer bias will be estimated in the refinement process. Furthermore, by re-computing the preintegration terms after the gyroscope bias estimation and assuming the gyroscope bias is constant, and can be set to zero. Note that there is a velocity term in the Equation (17). This term is undetermined and will increase the complexity of the incremental estimation if it is reserved. In the following, we introduce a trick to eliminate the velocity term.

Second, by considering three consecutive keyframes, we can obtain two relations in the form of (17). Using these two relations and substituting the second equation of (12), the velocity terms can be eliminated, as follows:

When writing keyframes i, , as 1, 2, 3, , , , and can be expressed as:

With N keyframes, we can obtain relations in the form of Equation (18). These relations can be stacked into a linear over-determined system equation with weights for outlier handling [36]. This system equation can be solved using Singular Value Decomposition (SVD) to get the approximate metric-scale , gravity vector , and extrinsic translation . Note that since there are sub-equations and a seven-dimension unknown vector in the system equation, at least five keyframes are required to solve it.

Since the accelerometer bias is temporally set to zero in carrying out the computations, the estimated results in this step are approximated. They will be refined in the following part by considering the magnitude of gravitational acceleration and taking the accelerometer bias into account.

4.2.3. Estimating Accelerometer Bias, and Refining Scale, Gravity, and Extrinsic Translation

Next, we estimate the accelerometer bias and refine the scale, gravity, and translation. We denote the earth inertial reference frame as and compare it to the world reference frame . Using the already estimated in the approximation process, the rotation between and can be obtained as:

where and are, respectively, the rotation axis and the rotation angle of . is the gravity vector expressed in . is the magnitude of the gravitational acceleration (normally m/s). This rotation can be optimized by appending a perturbation , as follows:

where the first-order approximation of exponential map (see Equation (A2) in Appendix A.1) is applied. By substituting (21) into (17) and further considering the accelerometer bias, we have:

Similar to (18), the velocity term can be eliminated by considering three consecutive keyframes and applying the second equation of (12), which results in:

Here, remains the same as in (19), and , , , and are computed as follows:

where means the first two columns of the matrix. With N keyframes, a linear over-determined system equation with weights for outlier handling can be established to solve , , , and . Since the accelerometer bias is set to zero when integrating , , and , the final result is . The gravity is refined by appending the perturbation, i.e., . Note that since there are equations and a 9-dimension unknown vector in the system equation, at least five keyframes are required to solve it.

4.3. Updates, Termination, and Velocity Estimation

After each execution of the incremental estimator, the timestamps of subsequent visual measurements are compensated by applying the estimated time offsets, i.e., . The next estimation will be performed using newly obtained and compensated data.

The incremental estimation will be continuously performed until the estimates get converged. The convergence is judged by using the criteria proposed by [19]. The velocities of all keyframes are estimated uniformly after the parameters are converged. For the old ones, their velocities are computed using the positional relationship of two consecutive keyframes, as shown in (22). For the latest one, since its following keyframes are not determined yet, the velocity propagation equation in (12) is used to calculate its velocity. Once the velocities of all keyframes have been calculated, the scales of camera poses and map points are updated, and the preintegration terms are recomputed to correct the accelerometer bias. The incremental estimation is considered to have been completed after the update and recomputation.

Note that the proposed method does not rely on any prior knowledge about the sensor’s temporal misalignment. If the misalignment is too large, the old uncompensated keyframes will have a bad influence on following executions. We solve this problem by discarding the old keyframes. In particular, if the time offset estimated by (16) is larger than the IMU sampling interval, the time offset will be updated and the system is relaunched. The following approximation and refinement processes will not be performed in this case.

5. Visual-Inertial Bundle Adjustment

Note that in the incremental estimation, the gyroscope bias and the accelerometer bias are assumed to remain constant. This assumption simplifies the process but may slightly reduce the calibration accuracy. To overcome the shortcoming, a visual-inertial bundle adjustment is performed to further correct the spatial-temporal parameters, velocities, and IMU poses and biases. The bundle adjustment can be carried out both globally and locally. When the incremental estimation is completed, global adjustment can be used to refine the accuracy of initial states and extrinsic parameters. The local adjustment is used to combine visual and inertial measurements for visual-inertial odometry.

The bundle adjustment is formulated as a nonlinear optimization problem. We consider the following state vector for an ith keyframe as follows:

where is the kth map point in the world reference frame observed by the ith keyframe. The full state of a nonlinear optimization is defined as follows:

where n and l are, respectively, the newest and oldest keyframe indices in an optimized window with size . The setting of l depends on the type of adjustment. For global adjustment, l is set to 1. All states starting from the first keyframe are optimized, excluding the position and rotation of the first keyframe as it is regarded as the global reference frame. For local adjustment, l is set to . The states of the keyframes contained in a local window are accounted.

The nonlinear optimization minimizes both the feature reprojection errors and the IMU preintegration errors, as follows:

where is the feature reprojection error term for a given matched kth map point observed by the ith keyframe. is the IMU preintegration error term that links keyframe i and its previous keyframe .

5.1. Feature Reprojection Error

Considering a 3D map point observed by the ith keyframe and matched to a 2D image feature, the map point can be transformed into a local camera coordinate frame using Equations (10) and (11) as follows:

Here, we approximate by ignoring the white sensor noise. can thus be written as . Using Equation (28), the feature reprojection error term can be defined as follows:

where is the projection function of pinhole camera model [56]. It transforms a 3D point in the local camera coordinate frame into a 2D point on the image plane. is the pixel location of the matched feature. is the information matrix associated with the feature detection. is a Huber robust cost function. The states in Equation (29) include the extrinsic spatial and temporal parameters, as well as the IMU pose, IMU velocity, and map points. The Jacobians of reprojection error w.r.t. the states are derived in Appendix A.5.

5.2. IMU Preintegration Error

With a slight abuse of notation, we use i and j to denote two consecutive keyframes for convenience. The IMU preintegration error term is represented as:

where . , , and are, respectively, the errors of the preintegrated rotation, velocity, and position. is the bias difference between i and j time instants. and are, respectively, the information matrices of the preintegration and the bias random walk.

6. Experiments Furthermore, Discussions

We implement the proposed method based on ORB-SLAM2 [47,48]. The Tracking and Local Mapping threads of ORB-SLAM2 are used to process camera measurements and output up-to-scale camera poses. The output poses, together with IMU measurements, are sent to our incremental estimator for initialization and calibration. When the incremental estimation is converged, global adjustment is performed to adjust the results and improve accuracy. After that, local adjustment is used to fuse the subsequent camera and IMU measurements to realize the ego-motion estimation and scene reconstruction. Since our focus is on odometry, the Loop Closure thread of ORB-SLAM2 is disabled. All the compared methods are also run without loop closure for a fair comparison. The optimized window size is set to 20. The platform used in the experiments was an Intel CPU i7-4720HQ (8 cores @2.60 GHz) laptop computer with 8GB RAM (Lenovo, Beijing, China).

Performances of the proposed method were evaluated using both synthetic sequences and public real-world datasets. First, we carried out simulation experiments to analyze the robustness performance of the incremental estimator against various IMU sensor noises. The robustness was quantified using errors on the spatial-temporal parameters, gyroscope bias, and accelerometer bias. All of them are defined as scalars for better observing the difference between the estimated result and the ground-truth. The calibrated and the ground-truth rotation matrices are represented in yaw-pitch-roll Euler angles. Then, we studied the overall performance of both the incremental estimation and bundle adjustment using real-world experiments. The absolute translational root mean squared error (RMSE) [57] between the estimated trajectory and the ground-truth is used to analyze the overall odometry performance.

6.1. Robustness Performance against Various IMU Noises

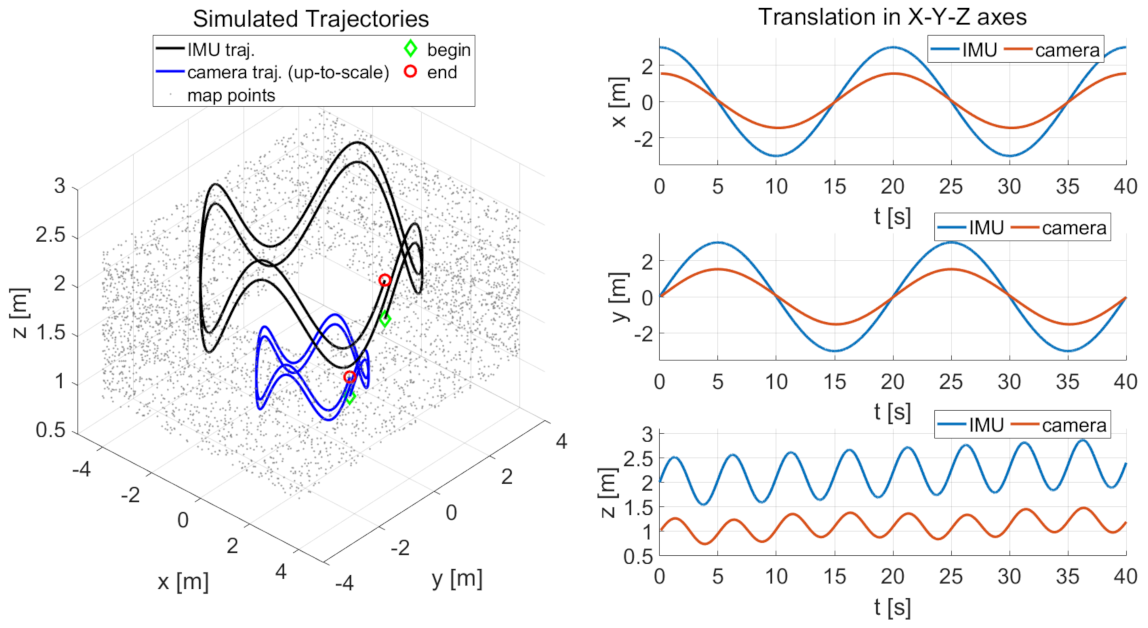

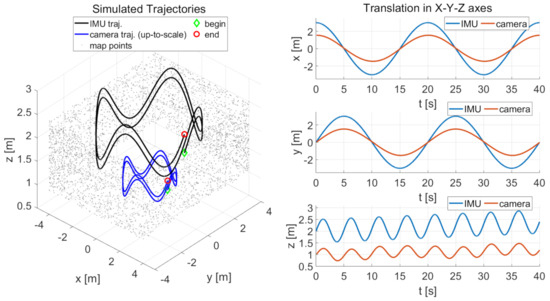

The robustness performance of the incremental estimator is analyzed using simulation with varying IMU sensor noises and time offsets. We collected data by simulating an IMU that moves along a sinusoidal circle motion. The IMU and camera trajectories are shown in the left subplot of Figure 4. The IMU trajectory is colored in black. The radius of the circular motion in the x-y plane is 3 m, and the amplitude of the vertical sinusoidal motion in the z-axis is m with a frequency of 0.2 Hz. The simulated IMU moves last for 40 s; thus the total length of the IMU trajectory is 41.59 m. The up-to-scale camera trajectory is colored in blue. Its poses are generated by transforming the IMU poses using predefined camera-IMU extrinsic spatial parameters (The predefined camera-IMU extrinsic parameters for simulation are set as: deg for rotation and m for translation), while suffering to a predefined metric-scale of 2.0. The translations of the camera and IMU poses are also shown in the right subplots of Figure 4.

Figure 4.

The left subplot shows the parametric IMU trajectory (black curve), up-to-scale camera trajectory (blue curve), and map points (gray dots). The three right subplots are the translation of the camera and IMU poses in x, y, z-axis, with a time offset of 0.1 s. The radius of the circular motion in the x-y plane is 3 m. The amplitude of the vertical sinusoidal motion in the z-axis is m. The frequency is 0.2 Hz. The metric-scale of camera poses is customized to 2.0. The green diamond and red circle, respectively, represent the beginning and the end of the trajectory. The simulated sensor moves for 40 s; thus, the total length of the trajectory is 41.59 m.

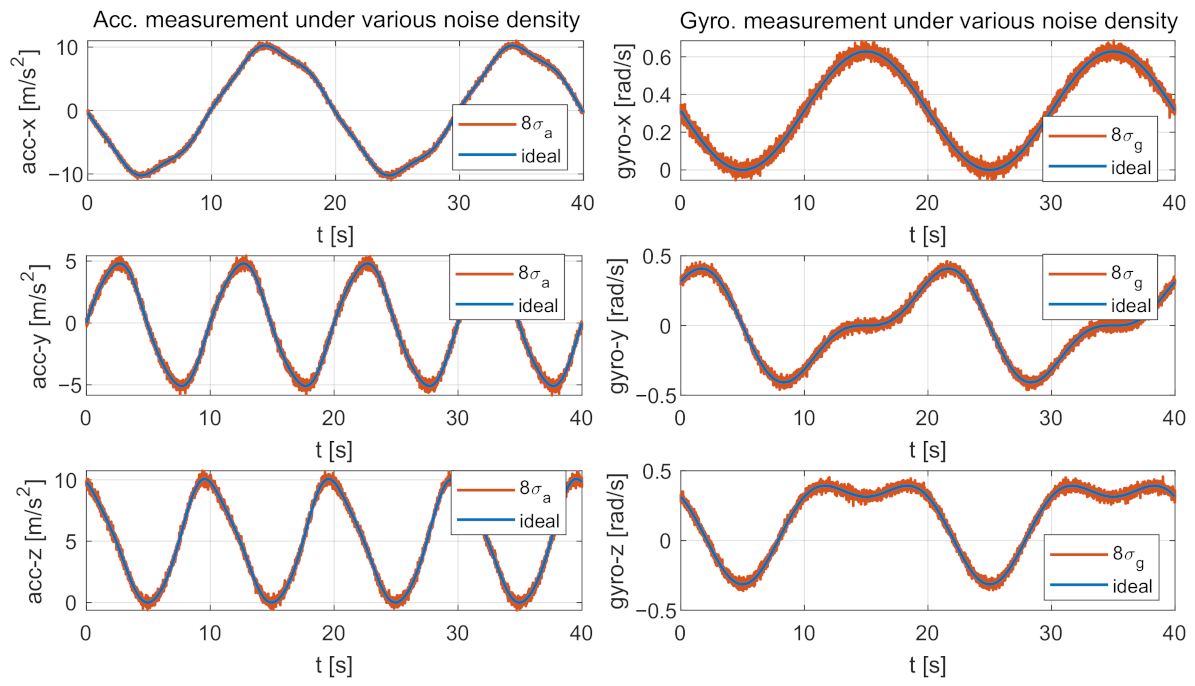

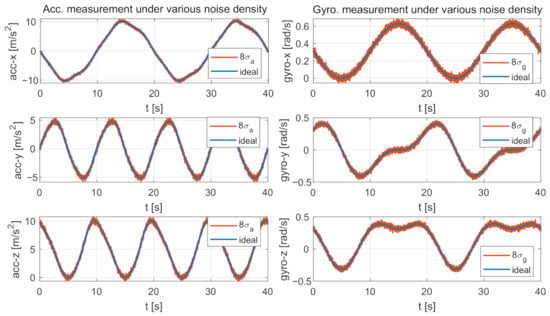

The IMU measurements were generated as follows. First, we prepared noise-free IMU measurements by computing the analytical derivatives of the parametric trajectory. Then, several synthetic noise-affected IMU measurements were generated by adding different intensities of noises to the noise-free measurements. We particularly considered six types of gyroscope-related and accelerometer-related noises, including the measurement noises (, ), the biases (, ), and the bias “diffusion” random walk noises (, ). For each type of noise, we designed eight different intensities by starting from a basic zero-mean Gaussian noise model (The basic IMU sensor noises are Gaussian-based noises: gyroscope and accelerometer continuous-time noise densities: , . Constant biases: , . Bias “diffusion” random walk noise densities: , . We highlight that these basic noises are similar to the ground-truth values provided by EuRoC dataset [37], therefore they are meaningful in practical application.) As a result, we get 48 (6 × 8) noise-affected IMU data sequences. Each sequence is affected by one type of noise with particular intensity. Figure 5 exemplifies two synthetic noisy IMU measurements compared with their corresponding noise-free values. The simulated sampling rates of the camera and IMU are 20 Hz and 200 Hz, respectively.

Figure 5.

Two examples of the comparison between the noise-free and the noise-affected IMU measurements. The noise-free measurements are generated by computing the analytical derivatives of the parametric trajectory shown in Figure 4. The left subplots compare the noise-free accelerometer measurements with the noise-affected ones. The noise-affected measurements are generated by adding Zero-mean Gaussian noises with densities of to the three axes of the noise-free accelerometer measurements. The right subplots show a similar comparison for gyroscope measurements.

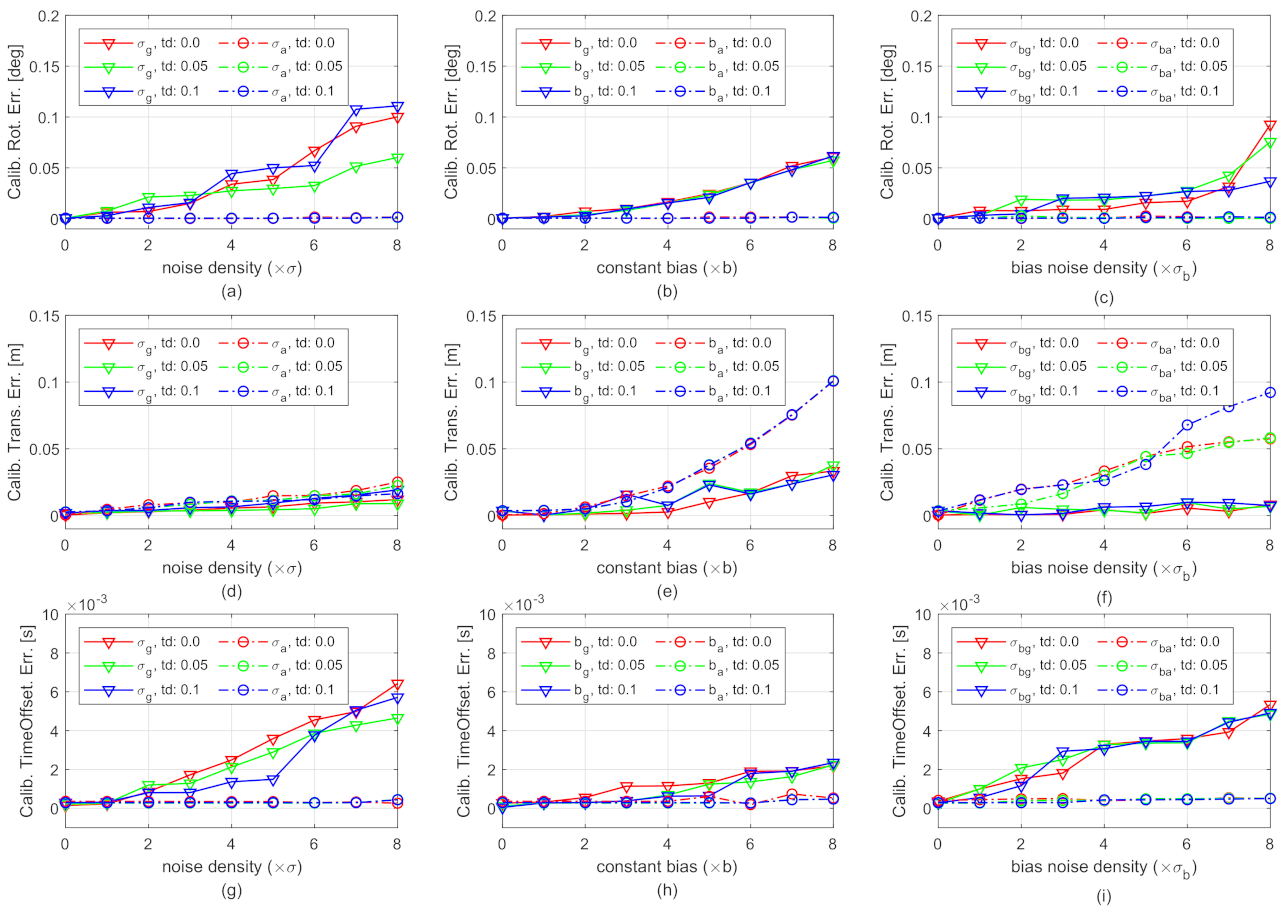

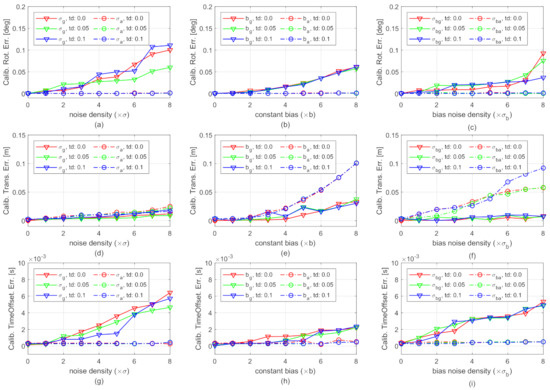

Figure 6 shows the camera-IMU spatial-temporal calibration errors in the presence of various gyroscope and accelerometer noises. The horizontal axes of the subplots are the intensities of sensor noises. The vertical axes are calibration errors. The solid curves and dash-dot curves are, respectively, the results of the gyroscope-related and accelerometer-related noises. The different colors show the results of different time offsets: red-0 ms, green-50 ms, blue-100 ms. The results in Figure 6 are the median over 25 tests. Since the proposed method does not rely on any prior knowledge, the spatial-temporal parameters are initially set to 0 in the tests.

Figure 6.

Spatial-temporal calibration errors in the presence of various gyroscope and accelerometer noises. The vertical axes of (a–c), (d–f), and (g–i) subplots are, respectively, the calibration error of extrinsic camera-IMU rotation, translation, and time offset. The horizontal axes of the subplots in the first column, the second column, and the third column are, respectively, the intensities of measurement noise density, constant bias, and bias “diffusion” random walk noise density. For instance, the labels “0” in all the horizontal axes represent the ideal noise-free measurements. The label “4” in the horizontal axis of (a) means the measurement noise density is customized to (for evaluating the robustness on gyroscope measurement noise, of which results are shown in solid curves) or (for evaluating the robustness on accelerometer measurement noise, of which results are shown in dash-dot curves), while the other noises are customized to zeros. Best viewed in color.

The results in Figure 6a–c,g–i show that both the calibration results of rotation and time offset are satisfying. For example, all the rotation errors are smaller than . When the gyroscope noise density and bias noise density are smaller than and , the calibrated time offsets are smaller than the simulated IMU sampling period (5 ms). The results also show that the influence of changing accelerometer noises on the rotation and time offset calibration is negligible. This is reasonable since only the gyroscope-related parameters are involved in the first process of the incremental estimation (see Equation (15)).

Figure 6d–f compare the translation errors of calibration under different noises. As shown in Figure 6d, the growth of the translation error is moderate with the increase of noise densities of both gyroscope and accelerometer measurement. The error remains less than 0.025 m even when the measurement noise density increases to and . The moderate growth implies that the translational calibration is robust to IMU measurement noise. The solid curves in Figure 6f show that the translational calibration is robust to gyroscope bias noise. The curves in Figure 6e and the dash-dot curves in Figure 6f show that the translational calibration may get worse when the constant biases or the accelerometer bias noise density are large. However, the results are still satisfying, i.e., 0.025 m errors, when the constant biases are smaller than and , and the accelerometer bias noise is smaller than .

Figure 6 also shows that the calibration errors are not significantly influenced by changing time offsets, as the curves with the same type but different colors in each subplot do not have significant differences. Detailed calibration errors of spatial-temporal parameters and IMU biases in the presence of all different types of IMU noises with their first intensities are provided in Table 1. The results also show that the errors are not much different w.r.t. varying time offsets. The observation is further analyzed using real-world datasets.

Table 1.

Errors of extrinsic spatial-temporal parameters and IMU biases in the presence of all different types of IMU noises.

6.2. Overall Performance of Both the Incremental Estimation and the Bundle Adjustment on Public Dataset

6.2.1. Dataset

The overall performance of both the incremental estimation and the bundle adjustment is evaluated using the real-world EuRoC dataset [37]. The dataset contains eleven sequences. Five of them were recorded in a large machine hall (denoted as MH_01 to MH_05), and the other six were recorded in a room using the Vicon motion capture system (denoted as V1_01 to V1_03 and V2_01 to V2_03). By considering the illumination, texture, motion velocity, and motion blur, the dataset is classified into easy, medium, and difficult grades. The ground-truth gyroscope bias, accelerometer bias, spatial parameters (The ground-truth extrinsic spatial parameters provided by the dataset were calibrated by the Kalibr [9,10,11,12] toolbox, with [89.147953, 1.476930, 0.215286] deg in yaw, pitch, roll directions for , and [−0.021640, −0.064677, 0.009811] m in x, y, z directions for ), IMU body velocities, and flying trajectories are given in the dataset. The original dataset does not have time delays. We include them by further adding millisecond values to the image timestamps. There is a fixed time offset between the IMU and camera measurements after the addition. To verify the capability of on-the-fly initialization, the start time of the time-shifted sequence is randomly selected in each test, so that the proposed and compared methods can start without any prior information about when the platform begins to fly.

6.2.2. Robustness Performance Concerning Various Time Offsets

In this experiment, we compared the robustness performance of different methods when concerning various time offsets. In particular, the predefined time offset ranges from −100 to 100 ms with a step of 5 ms. The accuracy of calibrated extrinsic parameters and the sensor ego-motion estimation of visual-inertial odometry were both evaluated using the MH_03 sequence, which contains fast motion and significant illumination change.

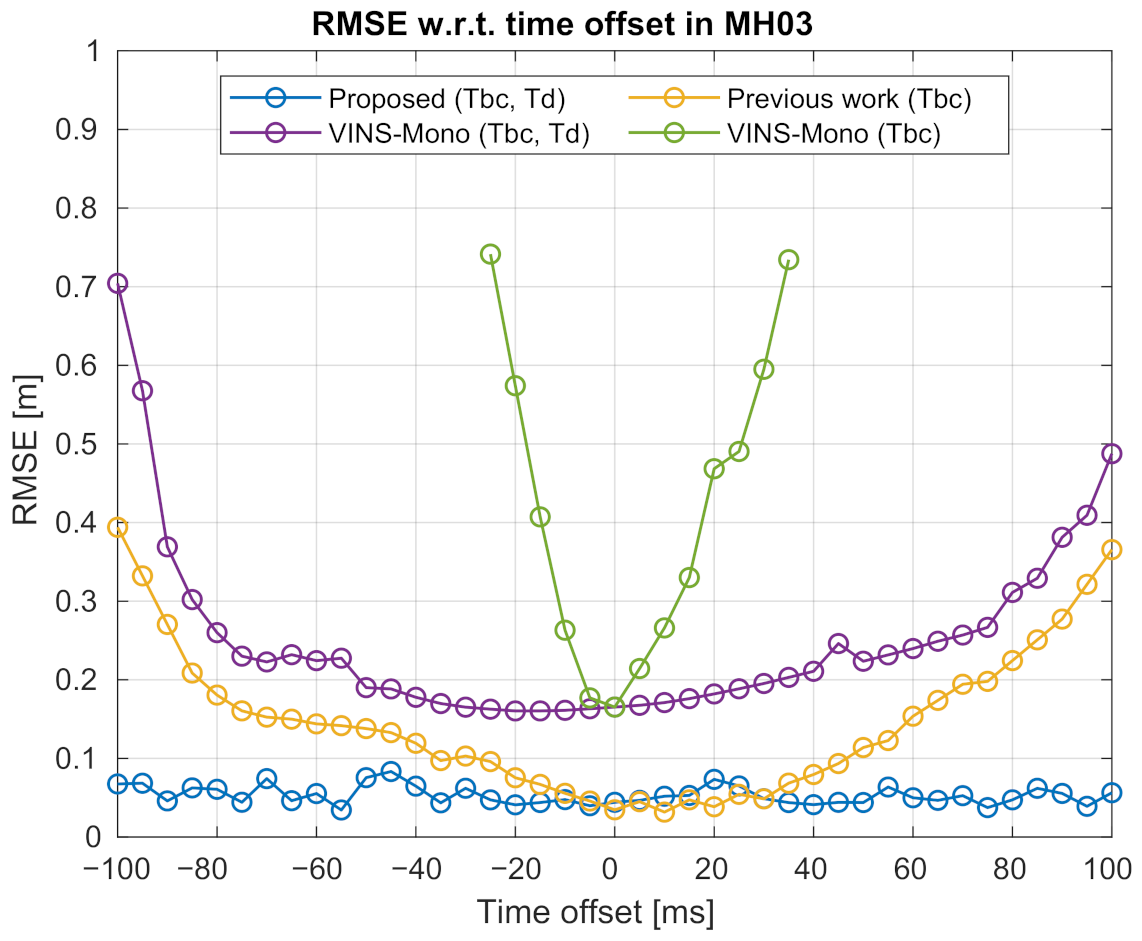

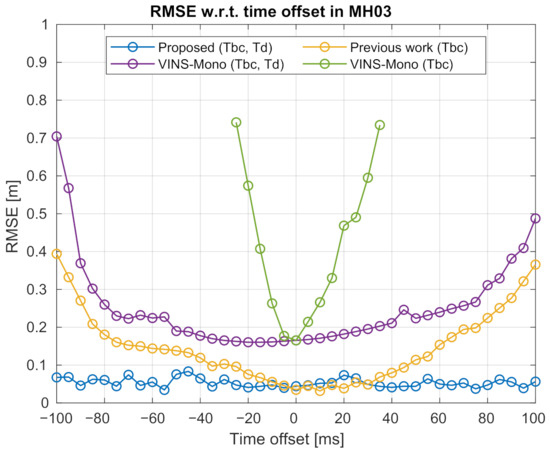

As shown in Figure 7 where the median RMSEs of the estimated trajectories over 25 tests are presented, the time-shifted sequences are tested with VINS-Mono [40], our previous method [36], and the proposed method.

Figure 7.

Comparison of the trajectory accuracy under various time offsets using the MH_03 sequence. The x-axis denotes the various time offsets. The y-axis shows the absolute translational root mean squared error (RMSE) of the estimated trajectory. The curves shown in the figure are, respectively, “Proposed (Tbc, Td)”—Results of the proposed method, which can perform simultaneous spatial-temporal calibration; “Previous work (Tbc)”—Results of our previous work [36], which only performs spatial calibration; “VINS-Mono (Tbc, Td)”—Results of VINS-Mono [40], which can perform simultaneous spatial-temporal calibration; “VINS-Mono (Tbc)”—Results of VINS-Mono, which only performs spatial calibration. Best viewed in color.

VINS-Mono (https://github.com/HKUST-Aerial-Robotics/VINS-Mono, accessed on 14 February 2021) is a popular open-source VIO system that can perform online spatial-temporal calibration. It provides three configurations for spatial calibration, i.e., “with extrinsic parameters”, “have initial extrinsic guess”, and “no extrinsic parameters”, as well as one configuration for temporal calibration, i.e., “estimate time offset”. For the “no extrinsic parameters” configuration, the public source code integrates a method called automatic estimator initialization and online extrinsic spatial calibration [18]. For the “estimate time offset” configuration, the code integrates a method developed in [22]. In our experiments, VINS-Mono is launched in all these configurations for inspection. The purple curve in Figure 7 is the results when VINS-Mono is launched under “no extrinsic parameters” and “estimate time offset” configurations for fairly comparing with the proposed method. In this case, the extrinsic spatial and temporal parameters can be continuously optimized by the bundle adjustment as new measurements arrive. The green curve in the same figure shows the result when VINS-Mono is launched under the single “no extrinsic parameters” configuration while the temporal calibration ability is disabled. Note that all these methods are launched without given any initial guess about extrinsic spatial or temporal parameters. In the experiments, we find out that the results of “VINS-Mono (Tbc)” have significantly large errors when the time offset surpasses 35 or −25 ms. These results are not plotted for limiting the range of the y-axis. The performance of “VINS-Mono (Tbc, Td)” is much better than “VINS-Mono (Tbc)” as the latter one does not calibrate the temporal parameters.

Our previous method can calibrate extrinsic spatial parameters online without considering the temporal parameters. The results of using our previous method are shown in the yellow curve with a legend of “Previous work (Tbc)”. This shows that the previous work can accurately estimate the sensor trajectory only when the time offset is within −10 to 35 ms. The trajectory accuracy of the previous work deteriorates remarkably when the time offset increases. The average RMSE of the previous work is 0.146 m. The blue curve is the result of the method proposed in this work. The trajectory accuracy of the proposed method is better than our previous work, especially when the time offset surpasses 35 or −10 ms. The proposed method also achieves much lower RMSE in all the time offsets compared to the “VINS-Mono (Tbc, Td)” method. The trajectory estimated by the proposed method exhibits consistent accuracy under different time offsets, with an average error of 0.035 m. On the contrary, although “VINS-Mono (Tbc, Td)” has consistent accuracy when the time offset is between −75 and 75 ms, its performance deteriorates remarkably when the time offset increases. The reason is that the authors used image feature velocity for modeling and compensating the temporal misalignment. The usage is under the assumption that in a short period, an image feature moves at a constant velocity on the image plane. However, when the time offset is large, this assumption could be violated. The feature velocity cannot compensate well for large temporal misalignment.

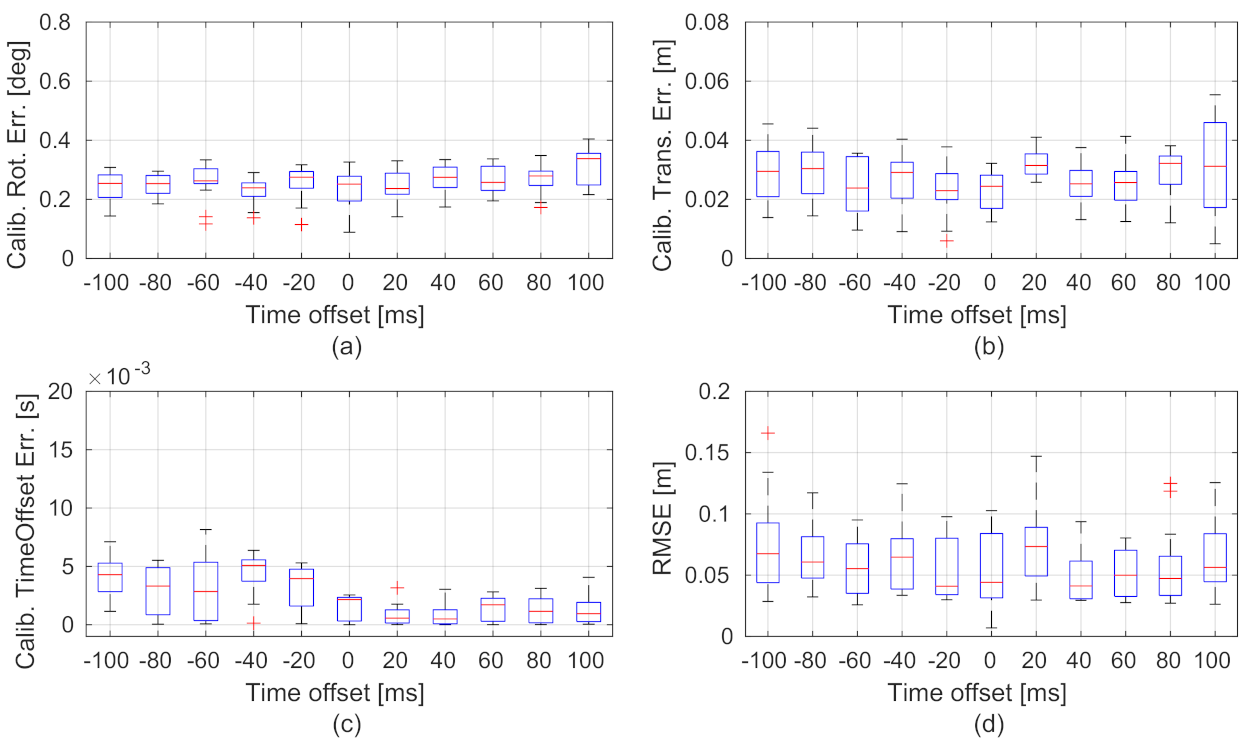

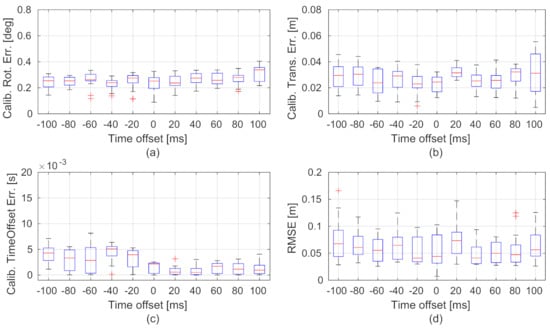

The statistical visualizations of the spatial-temporal calibration errors and trajectory accuracy of our method under various time offsets are, respectively, shown in Figure 8a–d. The results show that the calibration errors and trajectory accuracy do not have much difference under various time offsets. The performance of extrinsic calibration and ego-motion estimation of the proposed method is robust to asynchronous visual and inertial measurements.

Figure 8.

Statistical visualizations of the spatial-temporal calibration errors (a–c) and trajectory accuracy (d) under various time offsets using the MH_03 sequence. The x-axes of the subplots show the various time offsets. The box plots are the results of 25 tests using randomly selected start times.

6.2.3. Convergence Performance

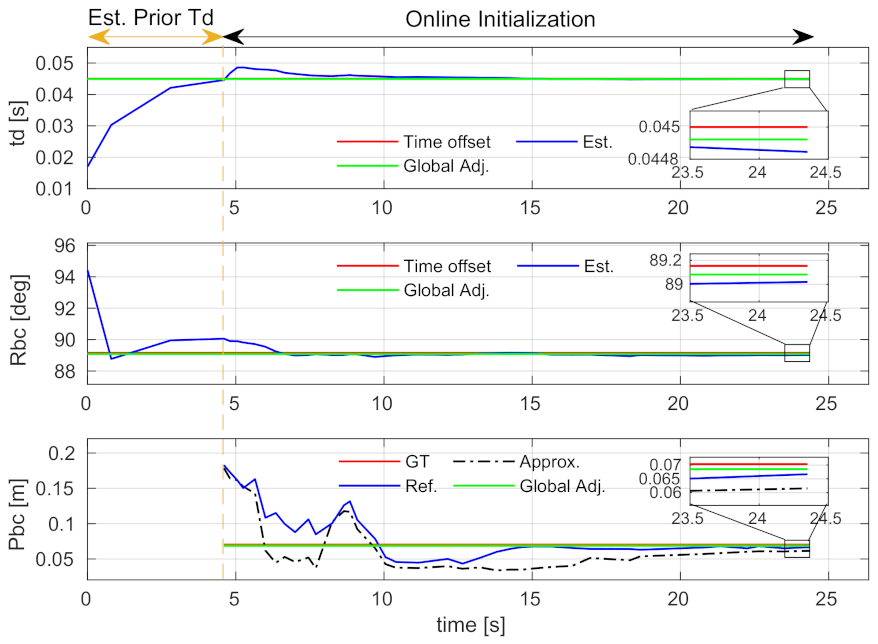

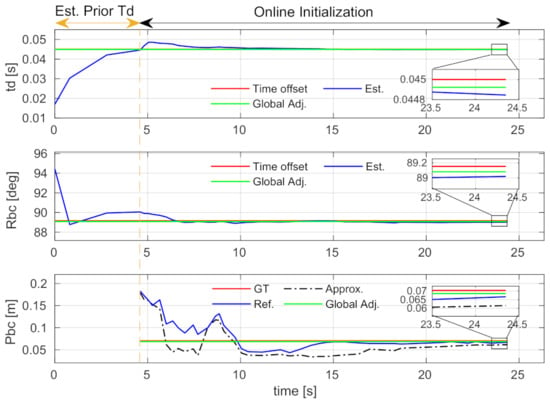

The convergence performance of spatial-temporal calibration and initial value estimation is studied using the V2_01 sequence with a 45 ms time offset. The time-varied estimation results of the spatial-temporal parameters and the initial states are, respectively, shown in Figure 9 and Figure 10. Note that, as described in Section 4.3, if a new time offset estimated by Equation (16) is larger than the IMU sampling interval, our system will be relaunched. The sections before the vertical dash lines in the figures show a relaunching action. These sections are denoted as “Est. Prior Td”. Since the approximation and refinement processes are skipped in these sections, the result of translation and all results of the initial states are not available.

Figure 9.

Comparison of the calibrated spatial and temporal parameters using the V2_01 sequence. The section labeled by “Est. Prior Td” denotes a stage where we update the time offset and relaunch the system. Abbreviations: Est.—Estimated; Global Adj.—Global adjustment; GT—Ground-truth; Approx.—Approximation; Ref.—Refinement. Best viewed in color.

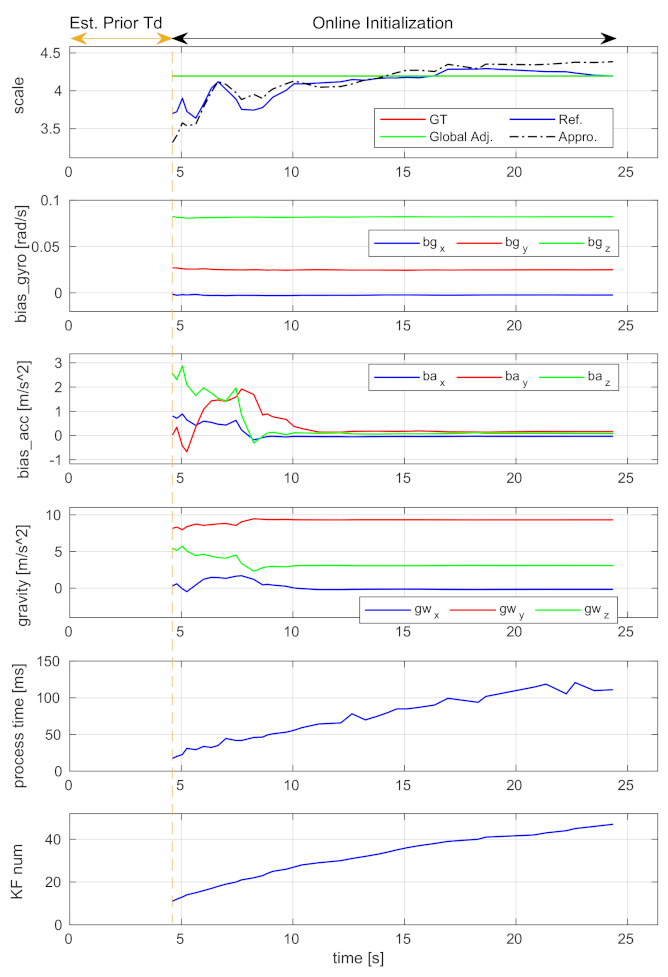

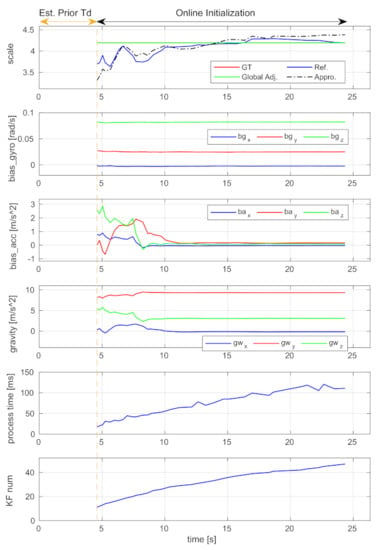

Figure 10.

The scale, gyroscope bias (bias_gyro), accelerometer bias (bias_acc), gravity vector, processing time expended by each execution, and keyframe number (KF num) using the V2_01 sequence.

The “Online Initialization” sections after the vertical dash lines are the relaunched results. The diagrams of the estimated time offset, extrinsic rotation, and gyroscope bias show that these parameters can converge to stable values within a few seconds, and the stable values are very close to the ground-truth. The proposed incremental estimator can effectively estimate them. The diagrams of the translation and scale show that the refined results (i.e., blue curves) are better than the approximated ones (i.e., black dash-dot curves). The results are even better if the global adjustment is performed (i.e., green curves). These indicate that the proposed incremental estimator can estimate the spatial-temporal parameters from coarse-to-fine, and the bundle adjustment can effectively improve the estimation.

One thing to note is that the curves of accelerometer bias and gravity exhibit severe oscillation in the first few seconds. This is because the platform did not have enough excitation in the beginning. The values become estimable with the arrival of more keyframes.

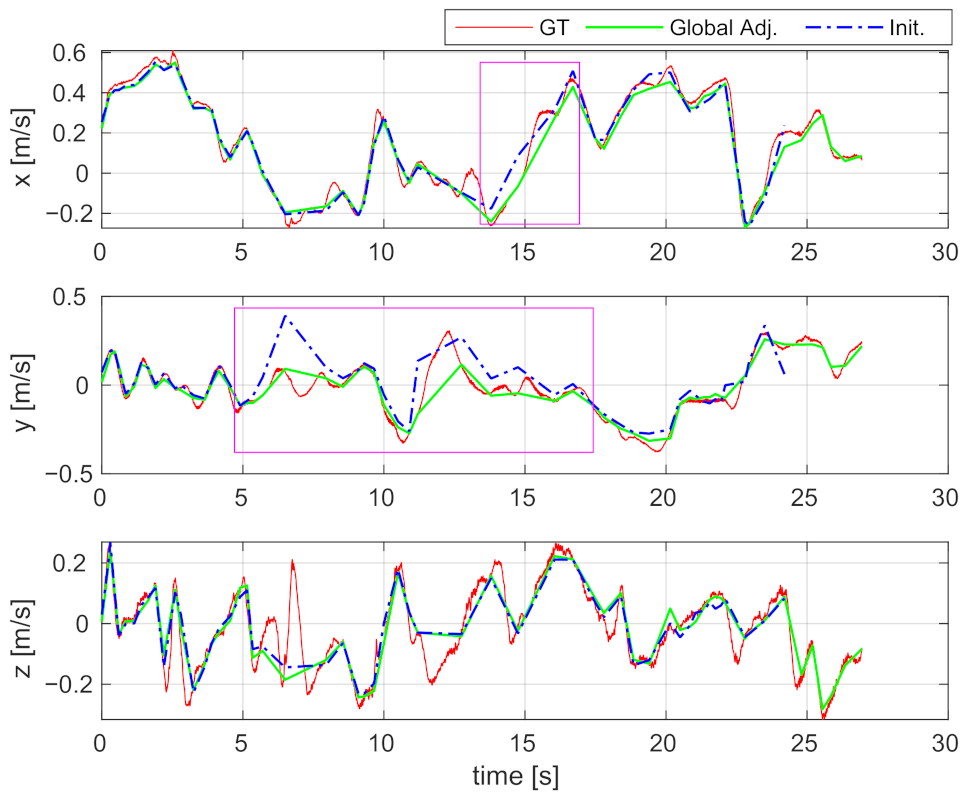

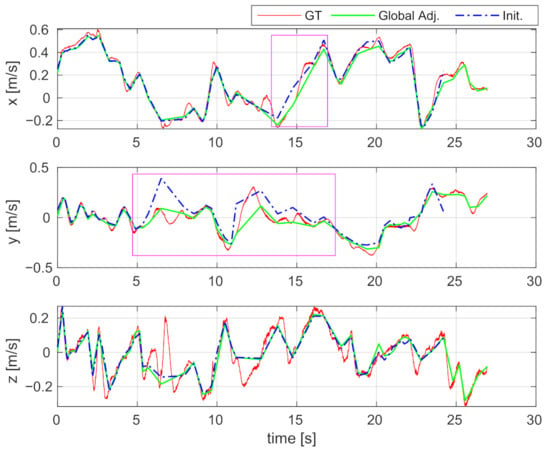

6.2.4. Velocity Estimation

The estimated IMU body velocities are shown in Figure 11. Since the estimated results and ground-truth are expressed in different coordinate systems, we transform the estimated results to help readers better compare with the ground-truth. The estimated velocities in the diagrams (i.e., blue dash-dot curves) are almost consistent with the ground-truth (i.e., red curves), indicating that the velocities are well estimated by the proposed incremental estimator. In the sections marked by pink boxes, the results of global adjustment are even closer to the ground-truth, which indicates that the accuracy of velocity estimation can be further improved by performing a global adjustment. The RMSE of the estimated velocities decreased from 0.093 m/s to 0.046 m/s by applying global adjustment. The consistency between the estimated results and the ground-truth also shows that the metric-scale can be correctly estimated, since otherwise, the magnitude of estimated velocity would differ from the ground-truth.

Figure 11.

Comparison of the estimated IMU body velocities using the V2_01 sequence of the EuRoC dataset. Red curve: Ground-truth velocity (GT); Blue dash-dot curve: The velocity estimated by online initialization (Init.); Green curve: The velocity corrected by global adjustment (Global Adj.). Best viewed in color.

6.2.5. Computational Complexity Analysis

In Figure 10, the keyframe number and the processing time expended by each execution of the incremental estimator are plotted. It shows that the processing time is approximately linear to the number of keyframes, indicating that the proposed incremental estimator has linear time complexity. To analyze the increased computation load on bundle adjustment due to the calculation of spatial-temporal parameters, we perform the global and local bundle adjustments with or without optimizing these parameters. The execution times are shown in Table 2. The global adjustment takes about 10 s and the local adjustment takes about 1.5 s for optimization. In detail, by further calculating the extrinsic spatial-temporal parameters, the global adjustment takes an extra 2.709 s and the local adjustment takes an extra 0.087 s. Note that since the two adjustments are middle-scale or large-scale optimization problems as they optimize thousands of variables, they are usually executed in separate threads in practice. In addition, the global adjustment is only performed one time when the incremental estimation is completed, and the local adjustment is performed only when a new keyframe is detected. Therefore, the extra time cost will not affect the real-time pose tracking of visual-inertial odometry.

Table 2.

Execution Times of Global and Local Adjustments With or Without Optimizing the Spatial-Temporal Parameters .

6.2.6. Accuracy on the Whole Dataset

We also studied the overall performance using the whole dataset. The various time offsets are preset to 0 ms, 50 ms, and 100 ms for comparison. Each sequence is tested 25 times under each time offset. VINS-Mono is launched under “no extrinsic parameters” and “estimate time offset” configurations. Feng’s method [44] is also compared. Table 3 shows the estimation errors of the spatial-temporal parameters and the absolute translational RMSE of the estimated trajectories. The average rotational and translational errors using the parameters calibrated by our method are, respectively, and m, which are better than VINS-Mono ( and m) and Feng’s method ( and m). The average temporal error using the parameter calibrated by our method is 0.773 ms. The value seems less accurate than VINS-Mono (0.133 ms). The reason is VINS-Mono treats the time offset as a vision factor by interpolating the location of features on the image plane, which exhibits better accuracy on temporal calibration than ours. Despite the low accuracy in temporal calibration, the trajectory RMSEs of our method are smaller than VINS-Mono and Feng’s method on most sequences, implying that it has a better performance on sensor pose estimation. The dominance of our method owes to its better accuracy in spatial calibration.

Table 3.

Spatial-Temporal Calibration Errors and Keyframe Trajectory Accuracy on the EuRoC Dataset .

Table 4 shows the detailed errors of the estimated gyroscope biases, accelerometer biases, and velocities. The mean, standard deviation, and the maximum values are the statistical results over 75 tests (There are three types of predefined time offsets, and each sequence is tested 25 times at each time offset. Thus, a sequence has tested a total of 75 times.). The results indicate that the IMU biases can be accurately estimated. The maximum errors for gyroscope bias and accelerometer bias are, respectively, 0.00158 rad/s and 0.1219 /. The maximum error for velocity is 0.0861 m/s, which is less than a few centimeters per second even for the difficult sequences, e.g., V1_03, V2_03, MH_04, and MH_05 sequence.

Table 4.

Errors of the Estimated Initial Values on the EuRoC Dataset .

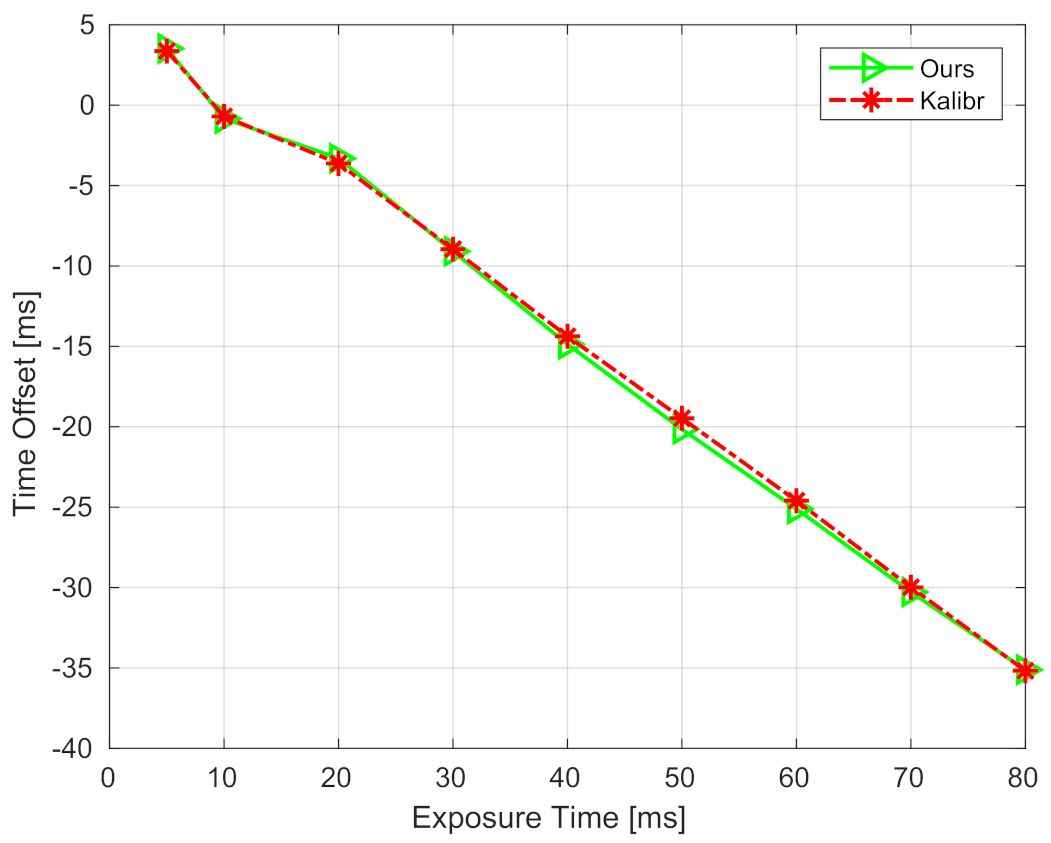

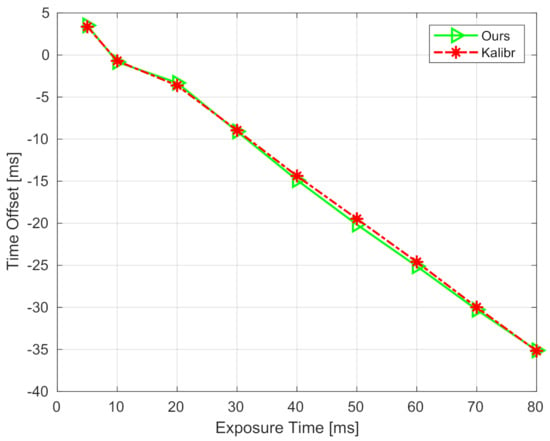

6.3. Real Sensor Experiments

The Intel RealSense Depth Camera D435i (https://www.intelrealsense.com/depth-camera-d435i/, accessed on 14 February 2021) was used in the real sensor experiment. This sensor contains a three-axis accelerometer with a sample rate of 250 Hz, a three-axis gyroscope with the sample rates of 200 or 400 Hz, two global infrared cameras, and one rolling shutter RGB camera. In this experiment, we used the accelerometer, the gyroscope, and the left infrared camera. The infrared camera was launched at 15 Hz with a resolution of 640 × 480. Since the accelerometer and the gyroscope run at different rates, the gyroscope was launched at 400 Hz and its measurements were down-sampled and linearly interpolated to synchronize with the accelerometer. The synchronized accelerometer and gyroscope data constitute the six-axis IMU measurements, with a rate of 250 Hz. According to official documents, the inertial sensors and cameras can be triggered to synchronize. However, we find that actually there is a noticeable time offset between IMU and camera measurements, and its value is related to the exposure time of the camera.

To evaluate the robustness performance of the proposed method on real sensors, we set nine different camera exposure times, from 5 to 80 ms. For each exposure time, five sequences facing toward a checkerboard were collected for offline calibration, and ten sequences in a living room were collected for testing the proposed method. In this experiment, the state-of-art toolbox Kalibr [9,10] was adopted to offline-calibrate the extrinsic spatial-temporal parameters. The offline-calibrated results are regarded as ground-truth values and used to compare with the proposed method. The comparison of calibrated time offsets under different exposure times is shown in Figure 12. It can be seen that, first, the time offset is approximately linear to the exposure time, with a slope of about −0.5. The time offset is about zero when the exposure time is set to 10 ms. This means that one can obtain well-aligned IMU and camera measurements when setting the exposure time to 10 ms. However, as the exposure time increases, the estimated time offset becomes negative, and its absolute value increases. The reason is that the middle of exposure time is recorded as the timestamp of an image. Second, the time offsets calibrated by our method (i.e., green curve) are close to those calibrated by Kalibr (i.e., red curve). Note that Kalibr is an offline method, which consumes more time and computation resources than ours. The comparison indicates that the proposed method is comparable to offline methods. The calibration errors of extrinsic rotation and translation are also shown in Table 5. It can be noticed that all the errors of rotation and translation are, respectively, smaller than 0.45 degrees and 0.017 m, even though the exposure time increases. These results illustrate that the proposed method can accurately calibrate the extrinsic spatial and temporal parameters, and it is robust to various exposure times when applying to a real visual-inertial sensor.

Figure 12.

Calibrated time offsets under different exposure times of RealSense-D435i.

Table 5.

Calibration Errors of Extrinsic Spatial Parameters under Different Exposure Times .

7. Conclusions and Future Work

This paper presented an online initialization and spatial-temporal calibration method for monocular visual-inertial odometry. The method used two short-term motion interpolation and an incremental estimator for online initialization and spatial-temporal calibration, and used bundle adjustment to fuse the camera and IMU measurements and adjust the estimated system states. The method does not rely on any prior knowledge about the spatial or temporal configuration. Thus, it is suitable for the specification-free and asynchronous VIO sensors. The performance of the proposed method was evaluated using both synthetic sequences and public datasets. The results showed that the initial states and spatial-temporal parameters could be accurately estimated and converge in a short time, and they could be well corrected by the introduced bundle adjustment. The proposed method is concluded to have a competitive accuracy compared with the existing methods.

One shortcoming of this work is that the proposed method is particularly designed for monocular VIOs. In practical applications, there are various types of odometers, like stereo/duo visual-inertial odometry, Lidar-visual-inertial odometry, Encoder-visual-inertial odometry, GPS-visual-inertial odometry, etc. In the future, we will extend the proposed method by applying it to other types of odometers.

Author Contributions

Conceptualization, W.H.; methodology, W.H.; software, W.H.; validation, W.H.; formal analysis, W.H.; investigation, W.H.; resources, H.L.; data curation, W.H.; writing—original draft preparation, W.H.; writing—review and editing, W.H., W.W. and H.L.; visualization, W.H.; supervision, W.W. and H.L.; project administration, H.L.; funding acquisition, H.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Key R&D Program of China (No. 2020AAA0108904), Science and Technology Plan of Shenzhen (Nos. JCYJ20190808182209321, JCYJ20200109140410340).

Conflicts of Interest

The authors do not have conflict of interest.

Appendix A

Appendix A.1. Preliminaries

The background geometric concepts used in Section 4.2 and the remaining part of this Appendix are as follows.

Appendix A.1.1. First-Order Approximation

The exponential map of a rotational vector is equivalent to a standard matrix exponential (i.e., Rodrigues’ rotation formula):

A first-order approximation of the exponential map is:

Appendix A.1.2. Adjoint Property

Given a Lie element and a rotation , the adjoint property is:

Appendix A.1.3. BCH Linear Approximation

The BCH (Baker–Campbell–Hausdorff [58]) linear approximation for and in the Lie algebra of a Lie group is:

where and are, respectively, the inverses of left-Jacobian matrix and right-Jacobian matrix . The additive operation of a small perturbation on Lie algebra can therefore be approximated as follows:

Appendix A.2. Gauss–Newton Algorithm

The proposed bundle adjustment considering the time offset in (27) is essentially a least-squares non-convex function, which can be rewritten as a differentiable object function . Our goal is to determine the optimum design parameter , that minimizes the objective function:

where the variables belong to some manifold . A typical technique to minimize this expression is Gauss–Newton method [55], which iteratively solves the Gauss–Newton update equation on an operating point to find the value of , i.e., a “small" change to , and then uses the to update . The method iterates until the change becomes sufficiently small.

The Gauss–Newton update equation is defined as:

with

where is the block-tridiagonal information matrix. The jacobian of errors w.r.t variables highly depends on the updating manner of the variables. In the following sections, we first define the updating rules of variables, and then derive the Jacobians of different errors.

Appendix A.3. State Update

We define the small change as an element of the tangent space at . The update equations of the system states are as follows:

Based on these update equations, we derive the Jacobians w.r.t. the vectors , , , , , , , , and .

Appendix A.4. Jacobians of Rotation Errors

The Jacobians of rotation error between consecutive keyframes i and j w.r.t. the vectors , , and are as follows.

By letting

,

,

, we have:

Here, the shorthand is used in the deduction.

By letting , , and , we have:

Here, , , , and are used in the deduction. In summary, the Jacobians of are:

Appendix A.5. Jacobians of Feature Residual Errors

We define the residual error between the reprojection of and the pixel location of the matched feature as . Here, is the kth point observed at the ith keyframe. It is expressed in the local camera frame. Since does not appear in , the Jacobian of w.r.t. is zero. By representing using , the Jacobians of w.r.t. the system states are:

where and are the focal lengths of the camera. is derived from the projection function of a pinhole camera model.

Since is linear in and and the retraction is simply a vector sum, the Jacobians of w.r.t. , are simplified as the matrix coefficients of and . We only need to focus on the following remaining Jacobians:

Here, we used the shorthand for convenience. is the right-Jacobian matrix of . In summary, the Jacobians of are:

References

- Lin, Y.; Gao, F.; Qin, T.; Gao, W.; Liu, T.; Wu, W.; Yang, Z.; Shen, S. Autonomous aerial navigation using monocular visual-inertial fusion. J. Field Robot. 2018, 35, 23–51. [Google Scholar] [CrossRef]

- Bloesch, M.; Omari, S.; Hutter, M.; Siegwart, R. Robust visual inertial odometry using a direct EKF-based approach. In Proceedings of the 2015 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Hamburg, Germany, 28 September–2 October 2015; pp. 298–304. [Google Scholar]

- Jones, E.; Soatto, S. Visual-inertial navigation, mapping and localization: A scalable real-time causal approach. Int. J. Robot. Res. 2011, 30, 407–430. [Google Scholar] [CrossRef]

- Huai, Z.; Huang, G. Robocentric visual-inertial odometry. In Proceedings of the 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Madrid, Spain, 1–5 October 2018; pp. 6319–6326. [Google Scholar]

- Dong, J.; Fei, X.; Soatto, S. Visual-Inertial-Semantic Scene Representation for 3D Object Detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition 2017, Honolulu, HI, USA, 21–26 July 2017; pp. 960–970. [Google Scholar]

- Yang, Z.; Gao, F.; Shen, S. Real-time monocular dense mapping on aerial robots using visual-inertial fusion. In Proceedings of the 2017 IEEE International Conference on Robotics and Automation (ICRA), Singapore, 29 May–3 June 2017; pp. 4552–4559. [Google Scholar]

- Oskiper, T.; Samarasekera, S.; Kumar, R. Multi-sensor navigation algorithm using monocular camera, IMU and GPS for large scale augmented reality. In Proceedings of the 2012 IEEE International Symposium on Mixed and Augmented Reality (ISMAR), Atlanta, GA, USA, 5–8 November 2012; pp. 71–80. [Google Scholar]

- Li, P.; Qin, T.; Hu, B.; Zhu, F.; Shen, S. Monocular visual-inertial state estimation for mobile augmented reality. In Proceedings of the 2017 IEEE International Symposium on Mixed and Augmented Reality (ISMAR), Nantes, France, 9–13 October 2017; pp. 11–21. [Google Scholar]

- Furgale, P.; Barfoot, T.D.; Sibley, G. Continuous-time batch estimation using temporal basis functions. In Proceedings of the 2012 IEEE International Conference on Robotics and Automation, Saint Paul, MN, USA, 14–18 May 2012; pp. 2088–2095. [Google Scholar]

- Furgale, P.; Rehder, J.; Siegwart, R. Unified temporal and spatial calibration for multi-sensor systems. In Proceedings of the 2013 IEEE/RSJ International Conference on Intelligent Robots and Systems, Tokyo, Japan, 3–7 November 2013; pp. 1280–1286. [Google Scholar]

- Maye, J.; Furgale, P.; Siegwart, R. Self-supervised calibration for robotic systems. In Proceedings of the 2013 IEEE Intelligent Vehicles Symposium (IV), Gold Coast, QLD, Australia, 23–26 June 2013; pp. 473–480. [Google Scholar]

- Rehder, J.; Nikolic, J.; Schneider, T.; Hinzmann, T.; Siegwart, R. Extending kalibr: Calibrating the extrinsics of multiple IMUs and of individual axes. In Proceedings of the 2016 IEEE International Conference on Robotics and Automation (ICRA), Stockholm, Sweden, 16–21 May 2016; pp. 4304–4311. [Google Scholar]

- Rehder, J.; Siegwart, R.; Furgale, P. A general approach to spatiotemporal calibration in multisensor systems. IEEE Trans. Robot. 2016, 32, 383–398. [Google Scholar] [CrossRef]

- Weiss, S.; Achtelik, M.; Lynen, S.; Chli, M.; Siegwart, R. Real-time onboard visual-inertial state estimation and self-calibration of mavs in unknown environments. In Proceedings of the 2012 IEEE International Conference on Robotics and Automation, Saint Paul, MN, USA, 14–18 May 2012; pp. 957–964. [Google Scholar]

- Kelly, J.; Sukhatme, G.S. Visual-inertial sensor fusion: Localization, mapping and sensor-to-sensor self-calibration. Int. J. Robot. Res. 2011, 30, 56–79. [Google Scholar] [CrossRef]

- Li, M.; Mourikis, A. High-precision, consistent EKF-based visual-inertial odometry. Int. J. Robot. Res. 2013, 32, 690–711. [Google Scholar] [CrossRef]

- Li, M.; Yu, H.; Zheng, X.; Mourikis, A. High-fidelity sensor modeling and self-calibration in vision-aided inertial navigation. In Proceedings of the 2014 IEEE International Conference on Robotics and Automation (ICRA), Hong Kong, China, 31 May–7 June 2014; pp. 409–416. [Google Scholar]

- Yang, Z.; Shen, S. Monocular visual-inertial state estimation with online initialization and camera-IMU extrinsic calibration. IEEE Trans. Autom. Sci. Eng. 2017, 14, 39–51. [Google Scholar] [CrossRef]

- Huang, W.; Liu, H.; Wan, W. An Online Initialization and Self-Calibration Method for Stereo Visual-Inertial Odometry. IEEE Trans. Robot. 2020, 36, 1153–1170. [Google Scholar] [CrossRef]

- Kelly, J.; Sukhatme, G.S. A general framework for temporal calibration of multiple proprioceptive and exteroceptive sensors. In Experimental Robotics; Springer: Berlin/Heidelberg, Germany, 2014; pp. 195–209. [Google Scholar]

- Ling, Y.; Bao, L.; Jie, Z.; Zhu, F.; Li, Z.; Tang, S.; Liu, Y.; Liu, W.; Zhang, T. Modeling Varying Camera-IMU Time Offset in Optimization-Based Visual-Inertial Odometry. In Proceedings of the European Conference on Computer Vision (ECCV) 2018, Munich, Germany, 8–14 September 2018; pp. 484–500. [Google Scholar]

- Qin, T.; Shen, S. Online temporal calibration for monocular visual-inertial systems. In Proceedings of the 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Madrid, Spain, 1–5 October 2018; pp. 3662–3669. [Google Scholar]

- Mur-Artal, R.; Tardós, J. Visual-inertial monocular SLAM with map reuse. IEEE Robot. Autom. Lett. 2017, 2, 796–803. [Google Scholar] [CrossRef]

- Campos, C.; Montiel, J.; Tardós, J. Fast and Robust Initialization for Visual-Inertial SLAM. In Proceedings of the 2019 International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 20–24 May 2019; pp. 1288–1294. [Google Scholar]

- Campos, C.; Montiel, J.; Tardós, J. Inertial-Only Optimization for Visual-Inertial Initialization. arXiv 2020, arXiv:2003.05766. [Google Scholar]

- Martinelli, A. Closed-form solution of visual-inertial structure from motion. Int. J. Comput. Vision 2014, 106, 138–152. [Google Scholar] [CrossRef]

- Kaiser, J.; Martinelli, A.; Fontana, F.; Scaramuzza, D. Simultaneous state initialization and gyroscope bias calibration in visual inertial aided navigation. IEEE Robot. Autom. Lett. 2016, 2, 18–25. [Google Scholar] [CrossRef]