Dynamically Controlling Offloading Thresholds in Fog Systems

Abstract

1. Introduction

1.1. Contributions

- We propose a fog node architecture that dynamically decides whether to process received tasks locally or offload them to neighboring fog nodes. The decision is based on a dynamic threshold that considers the queuing delay of the primary fog node and the availability (i.e., the queuing delay) of its neighbors.

- We investigate computational offloading and the associated computational resource management using an online dynamic system, with the aim of solving a multi-objective problem: minimizing delay, minimizing energy consumption, and maximizing throughput.

- We conducted extensive experiments to evaluate the performance of the proposed scheme and to compare it against various benchmarks.

- This paper extends our previous work [1] by introducing a dynamic offloading threshold, which is used in an online model for evaluating service delay.
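
The local-versus-offload decision described in the first contribution can be illustrated with a minimal sketch. It is not the authors' implementation: the function name `choose_target`, the millisecond units, and the rule of ignoring transmission delay between fog nodes are assumptions made only for illustration.

```python
from typing import Dict


def choose_target(queue_delay_ms: float,
                  threshold_ms: float,
                  neighbor_delays_ms: Dict[str, float]) -> str:
    """Return "local" or the name of the neighbor that should receive the task.

    Assumption: a task stays local while the primary node's queuing delay is
    within the offloading threshold. Above the threshold, the task goes to the
    neighbor with the smallest queuing delay, but only if that neighbor is
    less loaded than the primary node. Transmission delay is ignored here.
    """
    if queue_delay_ms <= threshold_ms or not neighbor_delays_ms:
        return "local"
    best = min(neighbor_delays_ms, key=neighbor_delays_ms.get)
    if neighbor_delays_ms[best] < queue_delay_ms:
        return best
    return "local"


if __name__ == "__main__":
    print(choose_target(5.0, 9.0, {"B": 1.0}))             # within threshold
    print(choose_target(12.0, 9.0, {"B": 4.0, "C": 7.0}))  # offload to B
    print(choose_target(12.0, 9.0, {"B": 20.0}))           # neighbors busier
```

A fixed `threshold_ms` corresponds to a static scheme; the contribution of this paper is to let that value change over time.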

1.2. Paper Organization

2. Related Work

2.1. Computational Offloading

2.2. Dynamic Server Energy Management

2.3. Comparison of the State-of-the-Art

3. System Modelling and Constraints

3.1. System Model

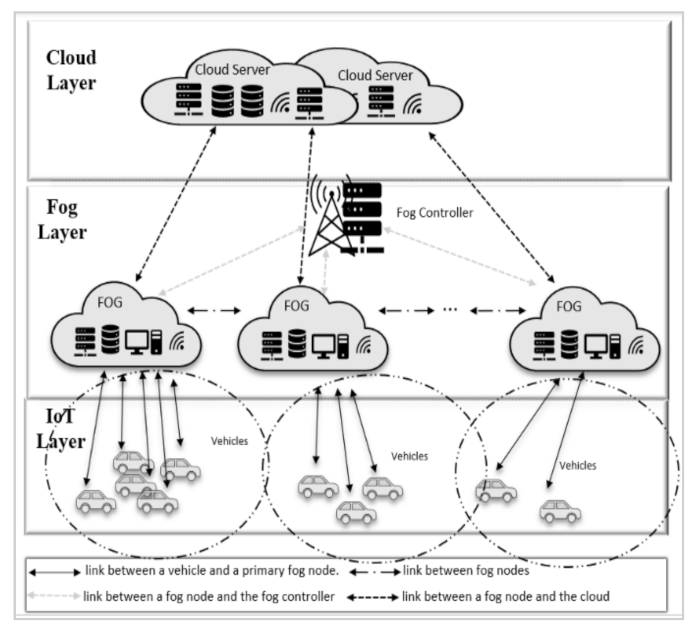

3.1.1. Network Diagram

- The IoT devices layer: This layer is composed of mobile vehicles, represented as vehicle nodes, each containing an actuator and a collection of sensors. Each sensor produces a task and labels it as “non-urgent” or “urgent”. Non-urgent tasks include data such as current position, speed, and path. Urgent tasks require a quicker response and can have stringent Quality of Service (QoS) requirements. An urgent task may, for example, contain a video stream of the area around a moving vehicle, which requires low-latency processing. This is necessary, for instance, in self-driving vehicles.

- Fog computing layer: This layer comprises a series of fog nodes and a fog controller. Fog nodes are located in roadside units (RSUs) installed alongside a road. If fog nodes are within communication range of each other, they can interact and share data [34]. Hence, fog nodes form an ad hoc network to exchange data. All fog nodes are linked to the fog controller, which is responsible for managing fog resources and controlling fog nodes. Fog nodes process two types of tasks. Urgent tasks are given priority, and their processing results are sent back to the vehicle. Non-urgent tasks are processed and their results are transferred to the cloud for further analysis and storage, e.g., for retrieval by traffic management organizations.

- Cloud computing layer: This layer is composed of a set of cloud servers, hosted within one or more data centers. This layer is able to aggregate traffic information across a geographical area over time.
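
The task handling described for the fog computing layer (urgent tasks first, with results returned to the vehicle; non-urgent results forwarded to the cloud) can be sketched with a priority queue. The class `FogTaskQueue` and its method names are hypothetical and only illustrate the ordering rule; they are not part of iFogSim or of the authors' code.

```python
import heapq
from itertools import count
from typing import List, Tuple

URGENT, NON_URGENT = 0, 1  # a lower value is served first


class FogTaskQueue:
    """Waiting queue that serves urgent tasks before non-urgent ones."""

    def __init__(self) -> None:
        self._heap: List[Tuple[int, int, str]] = []
        self._seq = count()  # keeps arrival order within one priority class

    def push(self, task_id: str, urgent: bool) -> None:
        priority = URGENT if urgent else NON_URGENT
        heapq.heappush(self._heap, (priority, next(self._seq), task_id))

    def pop(self) -> Tuple[str, str]:
        """Return the next task and where its result is sent."""
        priority, _, task_id = heapq.heappop(self._heap)
        destination = "vehicle" if priority == URGENT else "cloud"
        return task_id, destination

    def __len__(self) -> int:
        return len(self._heap)


if __name__ == "__main__":
    queue = FogTaskQueue()
    queue.push("position", urgent=False)
    queue.push("video", urgent=True)
    queue.push("speed", urgent=False)
    while len(queue):
        print(queue.pop())
```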

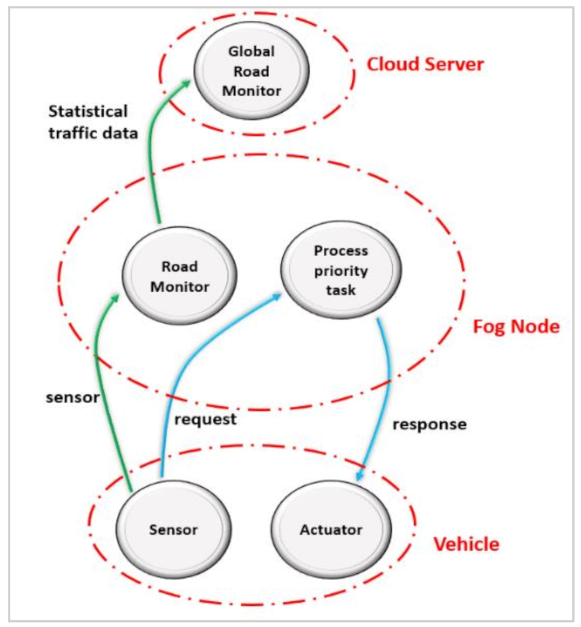

3.1.2. Application Module Description

- Road Monitor: This module is placed at fog nodes. When a vehicle comes within communication range of a fog node, a sensor immediately sends data to the connected fog node for analysis. These data contain the current position, the speed of the vehicle, the weather, and the road conditions. After the module has processed these data, the results are transmitted to a cloud server for further processing.

- Global Road Monitor: This module is placed at a cloud data center and receives data that have already been processed by the Road Monitor module.

- Process Priority Task: This module is placed at fog nodes and is responsible for processing priority requests from a user. The results are then sent back to the user.

An application in iFogSim is specified as a directed acyclic graph, DAG = (M, E), where M = {m1, m2, m3, …, mn} is the set of deployed application modules (e.g., the Process Priority Task, Road Monitor, and Global Road Monitor modules) and E is the set of edges describing data dependencies between application modules, as illustrated in Figure 2.
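
As a rough illustration of the DAG = (M, E) application model, the sketch below encodes the modules as graph nodes and the data dependencies as directed edges, then checks acyclicity with the Python standard library. The edge list follows the two control loops used in the experiments; treating the sensor and actuator as graph nodes, and the lower-case identifiers, are assumptions of this sketch rather than iFogSim API calls.

```python
from graphlib import CycleError, TopologicalSorter
from typing import Dict, List, Set, Tuple

# E: data dependencies (source, destination). Node names are illustrative.
EDGES: List[Tuple[str, str]] = [
    ("sensor", "process_priority_task"),
    ("process_priority_task", "actuator"),
    ("sensor", "road_monitor"),
    ("road_monitor", "global_road_monitor"),
]


def predecessors(edges: List[Tuple[str, str]]) -> Dict[str, Set[str]]:
    """Map every node to the set of nodes it depends on."""
    preds: Dict[str, Set[str]] = {}
    for src, dst in edges:
        preds.setdefault(src, set())
        preds.setdefault(dst, set()).add(src)
    return preds


def execution_order(edges: List[Tuple[str, str]]) -> List[str]:
    """Return one valid processing order; raises CycleError on a cycle."""
    return list(TopologicalSorter(predecessors(edges)).static_order())


def is_acyclic(edges: List[Tuple[str, str]]) -> bool:
    try:
        execution_order(edges)
        return True
    except CycleError:
        return False


if __name__ == "__main__":
    print(execution_order(EDGES))
    print(is_acyclic(EDGES + [("actuator", "sensor")]))
```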

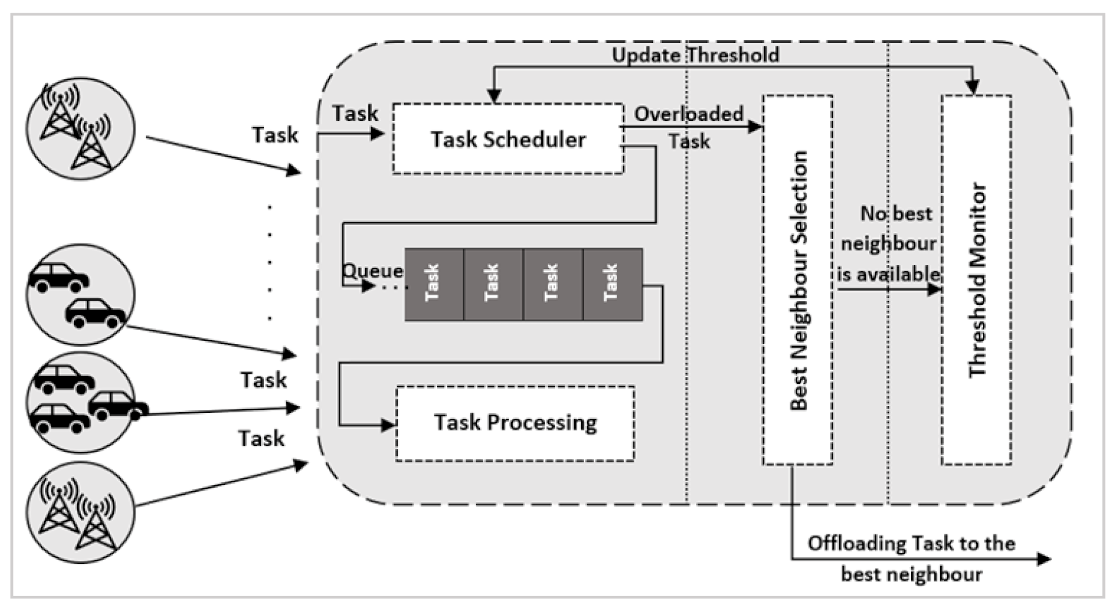

3.1.3. Fog Node Architecture

3.2. Types of Connections and Constraints

3.2.1. Connection between Vehicles and Fog Nodes

- Communication Constraints

- Processing Constraints

3.2.2. Connection between Fog Nodes

- Fog Node Waiting Queue

- Coverage Method

- Selecting the Best Neighboring Fog Node

3.2.3. Between Fog Nodes and the Cloud

4. Problem Formulation

4.1. Delay Minimization and Throughput Maximization

4.2. Energy Saving

5. Proposed Algorithms

5.1. Dynamic Offloading Threshold

Algorithm 1. Dynamic Offloading Threshold

5.2. Dynamic Resource Saving

6. Experimental Results

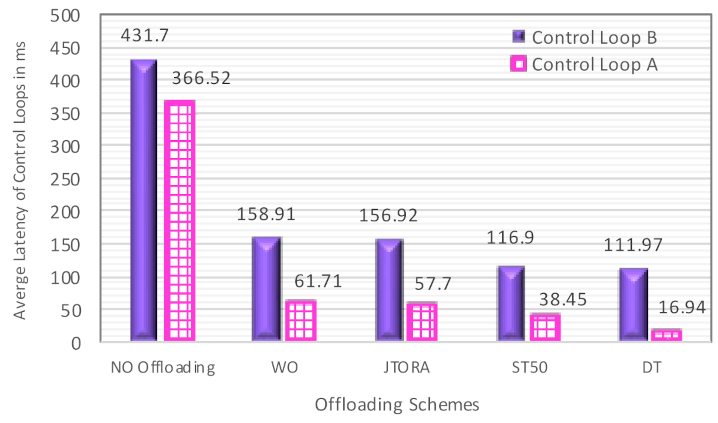

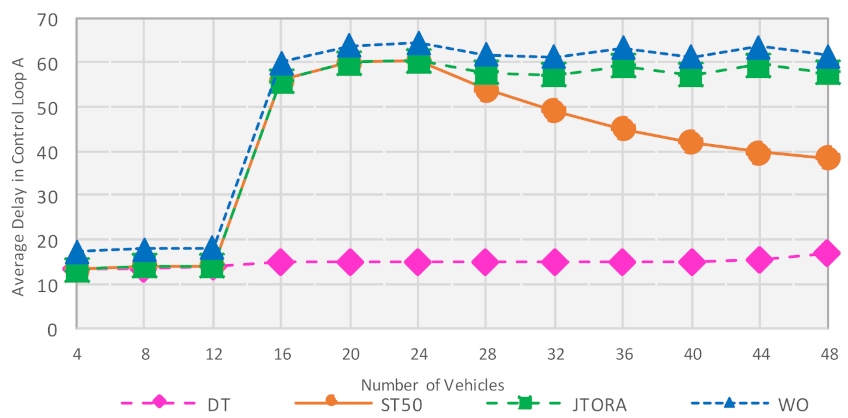

- Service latency is the average round-trip time for all tasks processed in the fog environment. Two control loops were used in the simulation. Control loop A (Sensor -> Process Priority Tasks -> Actuator) represents the path of priority requests. Control loop B (Sensor -> Road Monitor -> Global Road Monitor) represents the path of non-priority requests.

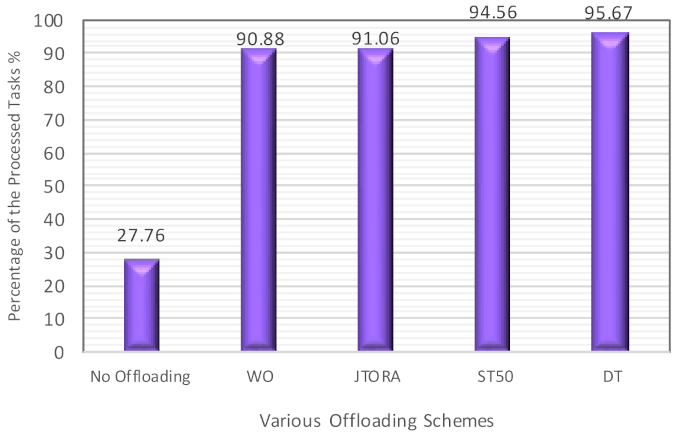

- Throughput is measured as the percentage of tasks processed within a time window.

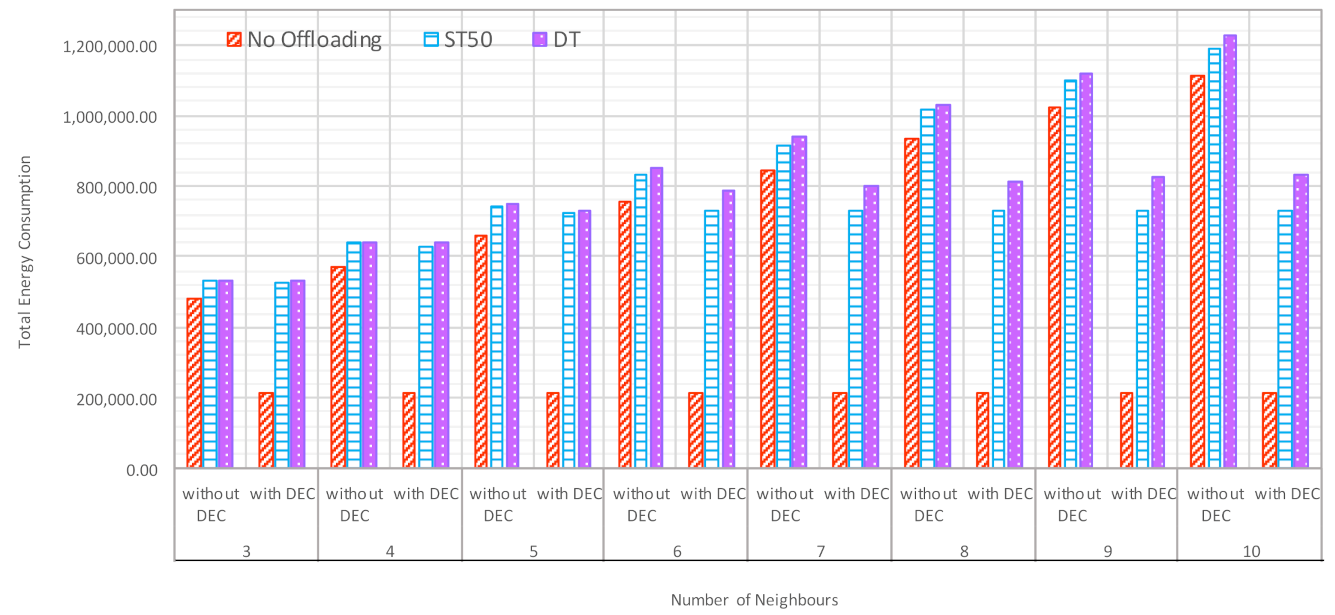

- Total energy consumption is the energy consumed in the fog environment by powering on fog nodes and processing tasks.
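
The first two metrics can be computed from per-task timestamps, as in the following sketch. The record layout (arrival time, completion time or `None`) and the exact throughput definition (tasks that arrived in the window and finished by its end, divided by tasks that arrived in the window) are assumptions chosen for illustration; the simulator's own accounting may differ.

```python
from statistics import mean
from typing import List, Optional, Tuple

# (arrival time in ms, completion time in ms, or None if never completed)
Task = Tuple[float, Optional[float]]


def average_latency_ms(tasks: List[Task]) -> float:
    """Average round-trip time over completed tasks (needs at least one)."""
    return mean(finish - start for start, finish in tasks if finish is not None)


def throughput_percent(tasks: List[Task],
                       window_start: float,
                       window_end: float) -> float:
    """Percentage of tasks arriving in the window that finish by its end."""
    arrived = [t for t in tasks if window_start <= t[0] < window_end]
    if not arrived:
        return 0.0
    processed = [t for t in arrived if t[1] is not None and t[1] <= window_end]
    return 100.0 * len(processed) / len(arrived)


if __name__ == "__main__":
    log = [(0.0, 5.0), (1.0, 12.0), (2.0, None), (3.0, 9.0)]
    print(average_latency_ms(log))
    print(throughput_percent(log, 0.0, 10.0))
```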

6.1. Performance Comparisons with Various Computation Offloading Schemes

6.2. Impact of the Proposed Scheme and Different Offloading Schemes on Delay and Throughputs

6.3. Impact of Increasing Number of Vehicles on Delay and Throughputs with Different Offloading Schemes

6.4. Impact of Increasing Number of Neighbors on Delay, Throughputs, and Energy with Various Offloading Schemes

7. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Alenizi, F.; Rana, O. Minimising Delay and Energy in Online Dynamic Fog Systems. In Proceedings of the International Conference on Internet of Things and Embedded Systems (IoTE 2020), London, UK, 28–29 November 2020; Volume 14, pp. 139–158.

- Arkian, H.R.; Diyanat, A.; Pourkhalili, A. MIST: Fog-based data analytics scheme with cost-efficient resource provisioning for IoT crowdsensing applications. J. Netw. Comput. Appl. 2017, 82, 152–165.

- Sarkar, S.; Misra, S. Theoretical modelling of fog computing: A green computing paradigm to support IoT applications. IET Netw. 2016, 5, 23–29.

- Mahmud, R.; Kotagiri, R.; Buyya, R. Fog Computing: A Taxonomy, Survey and Future Directions. In Smart Sensors, Measurement and Instrumentation; Springer Science and Business Media LLC: Berlin/Heidelberg, Germany, 2018; pp. 103–130.

- Hu, P.; Dhelim, S.; Ning, H.; Qiu, T. Survey on fog computing: Architecture, key technologies, applications and open issues. J. Netw. Comput. Appl. 2017, 98, 27–42.

- Brasil. Decreto—Lei nº 227, de 28 de Fevereiro de 1967. Dá Nova Redação ao Decreto-lei nº 1.985, de 29 de Janeiro de 1940 (Código de Minas). Brasília. 1967. Available online: http://www.planalto.gov.br/ccivil_03/Decreto-Lei/Del0227.htm (accessed on 19 October 2020).

- Ma, K.; Bagula, A.; Nyirenda, C.; Ajayi, O. An iot-based fog computing model. Sensors 2019, 19, 2783.

- Xiao, Y.; Krunz, M. QoE and power efficiency tradeoff for fog computing networks with fog node cooperation. In Proceedings of the IEEE INFOCOM 2017—IEEE Conference on Computer Communications, Atlanta, GA, USA, 1–4 May 2017; pp. 1–9.

- Jamil, B.; Shojafar, M.; Ahmed, I.; Ullah, A.; Munir, K.; Ijaz, H. A job scheduling algorithm for delay and performance optimization in fog computing. Concurr. Comput. Pr. Exp. 2019, 32, 5581.

- Dastjerdi, A.V.; Gupta, H.; Calheiros, R.N.; Ghosh, S.K.; Buyya, R. Fog Computing: Principles, architectures, and applications. In Internet of Things; Elsevier BV: Amsterdam, The Netherlands, 2016; pp. 61–75.

- Liu, L.; Chang, Z.; Guo, X.; Mao, S.; Ristaniemi, T. Multiobjective Optimization for Computation Offloading in Fog Computing. IEEE Internet Things J. 2018, 5, 283–294.

- Zhao, Z.; Bu, S.; Zhao, T.; Yin, Z.; Peng, M.; Ding, Z.; Quek, T.Q.S. On the Design of Computation Offloading in Fog Radio Access Networks. IEEE Trans. Veh. Technol. 2019, 68, 7136–7149.

- Zhu, Q.; Si, B.; Yang, F.; Ma, Y. Task offloading decision in fog computing system. China Commun. 2017, 14, 59–68.

- Chen, M.; Hao, Y. Task Offloading for Mobile Edge Computing in Software Defined Ultra-Dense Network. IEEE J. Sel. Areas Commun. 2018, 36, 587–597.

- Al-Khafajiy, M.; Baker, T.; Al-Libawy, H.; Maamar, Z.; Aloqaily, M.; Jararweh, Y. Improving fog computing performance via Fog-2-Fog collaboration. Futur. Gener. Comput. Syst. 2019, 100, 266–280.

- Yousefpour, A.; Ishigaki, G.; Jue, J.P. Fog Computing: Towards Minimizing Delay in the Internet of Things. In Proceedings of the 2017 IEEE International Conference on Edge Computing (EDGE), Honolulu, HI, USA, 25–30 June 2017; pp. 17–24.

- Gao, X.; Huang, X.; Bian, S.; Shao, Z.; Yang, Y. PORA: Predictive Offloading and Resource Allocation in Dynamic Fog Computing Systems. IEEE Internet Things J. 2019, 7, 72–87.

- Tang, Q.; Xie, R.; Yu, F.R.; Huang, T.; Liu, Y. Decentralized Computation Offloading in IoT Fog Computing System With Energy Harvesting: A Dec-POMDP Approach. IEEE Internet Things J. 2020, 7, 4898–4911.

- Wang, Q.; Chen, S. Latency-minimum offloading decision and resource allocation for fog-enabled Internet of Things networks. Trans. Emerg. Telecommun. Technol. 2020, 31, 3880.

- Mukherjee, M.; Kumar, S.; Shojafar, M.; Zhang, Q.; Mavromoustakis, C.X. Joint Task Offloading and Resource Allocation for Delay-Sensitive Fog Networks. In Proceedings of the ICC 2019—2019 IEEE International Conference on Communications (ICC), Shanghai, China, 20–24 May 2019; pp. 1–7.

- Mukherjee, M.; Kumar, V.; Kumar, S.; Matamy, R.; Mavromoustakis, C.X.; Zhang, Q.; Shojafar, M.; Mastorakis, G. Computation offloading strategy in heterogeneous fog computing with energy and delay constraints. In Proceedings of the ICC 2020—2020 IEEE International Conference on Communications (ICC), Dublin, Ireland, 7–11 June 2020.

- Sun, H.; Yu, H.; Fan, G.; Chen, L. Energy and time efficient task offloading and resource allocation on the generic IoT-fog-cloud architecture. Peer Peer Netw. Appl. 2020, 13, 548–563.

- Meng, X.; Wang, W.; Zhang, Z. Delay-Constrained Hybrid Computation Offloading With Cloud and Fog Computing. IEEE Access 2017, 5, 21355–21367.

- Yin, L.; Luo, J.; Luo, H. Tasks Scheduling and Resource Allocation in Fog Computing Based on Containers for Smart Manufacturing. IEEE Trans. Ind. Inform. 2018, 14, 4712–4721.

- Mukherjee, M.; Guo, M.; Lloret, J.; Iqbal, R.; Zhang, Q. Deadline-Aware Fair Scheduling for Offloaded Tasks in Fog Computing With Inter-Fog Dependency. IEEE Commun. Lett. 2019, 24, 307–311.

- Qayyum, T.; Malik, A.W.; Khattak, M.A.K.; Khalid, O.; Khan, S.U. FogNetSim++: A Toolkit for Modeling and Simulation of Distributed Fog Environment. IEEE Access 2018, 6, 63570–63583.

- Marsan, M.A.; Meo, M. Queueing systems to study the energy consumption of a campus WLAN. Comput. Netw. 2014, 66, 82–93.

- Li, F.; Wang, X.; Cao, J.; Wang, R.; Bi, Y. A State Transition-Aware Energy-Saving Mechanism for Dense WLANs in Buildings. IEEE Access 2017, 5, 25671–25681.

- Monil, M.A.H.; Qasim, R.; Rahman, R.M. Energy-Aware VM consolidation approach using combination of heuristics and migration control. In Proceedings of the Ninth International Conference on Digital Information Management (ICDIM 2014) 2014, Phitsanulok, Thailand, 29 September–1 October 2014; pp. 74–79.

- Mosa, A.; Paton, N.W. Optimizing virtual machine placement for energy and SLA in clouds using utility functions. J. Cloud Comput. 2016, 5, 17.

- Mahadevamangalam, S. Energy-Aware Adaptation in Cloud Datacenters. Master’s Thesis, Blekinge Institute of Technology, Karlskrona, Sweden, October 2018.

- Monil, M.A.H.; Rahman, R.M. Implementation of modified overload detection technique with VM selection strategies based on heuristics and migration control. In Proceedings of the 2015 IEEE/ACIS 14th International Conference on Computer and Information Science (ICIS), Las Vegas, NV, USA, 28 June–1 July 2015; pp. 223–227.

- Monil, M.A.H.; Rahman, R.M. VM consolidation approach based on heuristics fuzzy logic, and migration control. J. Cloud Comput. 2016, 5, 755.

- Abedin, S.F.; Alam, G.R.; Tran, N.H.; Hong, C.S. A Fog based system model for cooperative IoT node pairing using matching theory. In Proceedings of the 2015 17th Asia-Pacific Network Operations and Management Symposium (APNOMS), Busan, Korea, 19–21 August 2015; pp. 309–314.

- Zohora, F.T.; Khan, M.R.R.; Bhuiyan, M.F.R.; Das, A.K. Enhancing the capabilities of IoT based fog and cloud infrastructures for time sensitive events. In Proceedings of the 2017 International Conference on Electrical Engineering and Computer Science (ICECOS), Palembang, Indonesia, 22–23 August 2017.

- Lee, G.; Saad, W.; Bennis, M. An online secretary framework for fog network formation with minimal latency. In Proceedings of the 2017 IEEE International Conference on Communications (ICC), Paris, France, 21–25 May 2017; pp. 1–6.

- El Kafhali, S.; Salah, K.; Alla, S.B. Performance Evaluation of IoT-Fog-Cloud Deployment for Healthcare Services. In Proceedings of the 2018 4th International Conference on Cloud Computing Technologies and Applications (Cloudtech), Brussels, Belgium, 26–28 November 2018.

- Liu, L.; Chang, Z.; Guo, X. Socially Aware Dynamic Computation Offloading Scheme for Fog Computing System With Energy Harvesting Devices. IEEE Internet Things J. 2018, 5, 1869–1879.

- Rahbari, D.; Nickray, M. Scheduling of fog networks with optimized knapsack by symbiotic organisms search. In Proceedings of the 2017 21st Conference of Open Innovations Association (FRUCT), Helsinki, Finland, 6–10 November 2017; pp. 278–283.

- Gupta, H.; Dastjerdi, A.V.; Ghosh, S.K.; Buyya, R. iFogSim: A toolkit for modeling and simulation of resource management techniques in the Internet of Things, Edge and Fog computing environments. Softw. Pr. Exp. 2017, 47, 1275–1296.

| Ref | Offloading Deployment | Architecture: IoT-Fog | Architecture: IoT-Fog-Cloud | Architecture: Fog-Cloud | Architecture: Fog | Fog Cooperation | Offloading Threshold | Communication: Vertical | Communication: Horizontal | Objective: Delay | Objective: Energy (Yes) | Energy: Relation with Delay | Energy: Which Energy | Energy: Which Device | Objective: Throughput | Evaluation Tool |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Tang et al. [18] | Offline |  | - | - | - | X | static |  | X |  |  | Energy constraint | Energy for data processing and data transmission | IoT | X | MATLAB |
| Wang and Chen [19] | Offline |  | - | - | - | X | static |  | X |  |  | Energy constraint | Energy for local processing, processing at fog nodes, transmitting tasks | IoT devices and fog nodes | X | Simulation |
| Liu et al. [11] | Offline | - |  | - | - | X | static |  | X |  |  | Trade-off | Energy spent by local processing and transmitting tasks | Mobile devices | X | Simulation |
| Mukherjee et al. [20] | Offline | - |  | - | - |  | static |  |  |  | X | X | X | X | X | Monte Carlo simulations |
| Zhu et al. [13] | Offline | - |  | - | - | X | static |  | X |  |  | Offloading policy is designed to minimize task execution delay and to save energy | The energy spent by uploading and receiving tasks | Mobile devices | X | Simulation (MATLAB) |
| Mukherjee et al. [21] | Offline | - |  | - | - |  | static |  |  |  |  | Minimize total systems cost which includes total energy consumption & total processing delay | Energy consumed by local computing and uploading tasks to fog nodes | End user devices | X | MATLAB |
| Chen and Hao [14] | Offline |  | - | - | - | X | static |  | X |  |  | Battery capacity | Energy for transmitting tasks to edge devices and for local processing | End user devices | X | Simulation |
| Sun et al. [22] | Offline | - |  | - | - | X | static |  | X |  |  | Selects the lowest overhead cost which involves total computational time plus total energy spent by either processing task at IoT devices or transmission tasks to fog or cloud | Processing and transmission power | IoT, fog nodes, cloud servers | X | iFogSim |
| Zhao et al. [12] | Offline | - |  | - | - | X | static |  | X |  |  | Minimizes the system cost which is the total offloading latency and the total energy consumption | Transmission and processing energy | Whole system: end user devices, fog nodes, and cloud servers | X | Simulation |
| Meng et al. [23] | Offline | - |  | - | - | X | static |  | X |  |  | Minimizing energy given delay constraint | Transmission + computational energy | Mobile terminal, fog nodes, and cloud servers | X | Simulation |
| Xiao and Krunz [8] | Offline | - | - |  | - |  | static |  |  |  |  | Trade-off | Transmission energy | Fog nodes | X | Simulation |
| Yousefpour et al. [16] | Online | - |  | - | - |  | static |  |  |  | X | X | X | X | X | Simulation |
| Yin et al. [24] | Online | - | - |  | - | X | static |  | X |  | X | X | X | X |  | Simulation |
| Al-Khafajiy et al. [15] | Online | - |  | - | - |  | static |  |  |  | X | X | X | X | X | MATLAB-based simulation |
| Gao et al. [17] | Online | - | - |  | - |  | static |  | X |  |  | Trade-off | Processing and transmitting tasks between fog nodes | Fog nodes | X | Simulation |
| Mukherjee et al. [25] | Online | - | - | - |  |  | static | X |  |  | X | X | X | X | X | Simulation |
| Alenizi and Rana [1] | Online | - | - |  | - |  | static |  |  |  |  | X | Powering on and processing tasks | Fog nodes |  | iFogSim |
| The proposed approach | Online | - | - |  | - |  | Dynamic |  |  |  |  | X | Powering on and processing tasks | Fog nodes |  | iFogSim |

| Fog Node Type | Primary Fog Node | Neighboring Fog Node | Neighboring Fog Node | Neighboring Fog Node | Neighboring Fog Node |
|---|---|---|---|---|---|
| Fog node | Fog node A | Fog node B | Fog node C | Fog node D | Fog node E |
| Threshold | 9 ms | 9 ms | 9 ms | 9 ms | 9 ms |

| Fog Node Type | Primary Fog Node | Neighboring Fog Node | Neighboring Fog Node | Neighboring Fog Node | Neighboring Fog Node |
|---|---|---|---|---|---|
| Fog node | Fog node B | Fog node A | Fog node F | Fog node G | Fog node H |
| Threshold | 6 ms | 6 ms | 6 ms | 6 ms | 6 ms |

| Symbol | Description |
|---|---|
|  | The initial offloading threshold and the current threshold |
| VQ | Average queueing delay of all the neighbors |
|  | New offloading threshold |
| x | . |
| Ns | All neighboring fog nodes |
| Qs | Set of the queueing delays of all its neighbors |
| QNeighbours | Set of all neighbors and their queueing delays |
|  | When the queuing delay reaches this threshold, the fog node might consider decreasing its offloading threshold |
| N | The best neighbor fog node |
| T | The arriving task |
| Q | Queuing delay in the primary fog node |
| p | A number greater than zero that determines how much to modify the offloading threshold based on the current offloading threshold |
| QN | Queueing delay of one neighbor |
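
To show how the quantities in this table could interact, the sketch below gives one possible threshold update rule. It is not a reconstruction of Algorithm 1: the update rule, the function name `update_threshold`, the parameter `decrease_trigger_ms`, and the choice to scale the step by `p` times the current threshold are all assumptions made for illustration.

```python
from statistics import mean
from typing import List


def update_threshold(current_ms: float,
                     initial_ms: float,
                     queue_delay_ms: float,            # Q in the table
                     neighbor_delays_ms: List[float],  # Qs in the table
                     p: float,
                     decrease_trigger_ms: float) -> float:
    """Return the new offloading threshold (hypothetical rule).

    Assumed behavior:
    - If the primary node is above its threshold and the neighbors are, on
      average (VQ), above it too, offloading would not help, so the
      threshold is raised by p times its current value.
    - If the primary queue has drained to the decrease trigger, the
      threshold is lowered by the same step, but never below the initial
      threshold.
    - Otherwise the threshold is left unchanged.
    """
    if p <= 0:
        raise ValueError("p must be greater than zero")
    vq = mean(neighbor_delays_ms)  # VQ: average neighbor queueing delay
    step = p * current_ms
    if queue_delay_ms > current_ms and vq > current_ms:
        return current_ms + step
    if queue_delay_ms <= decrease_trigger_ms and current_ms > initial_ms:
        return max(initial_ms, current_ms - step)
    return current_ms


if __name__ == "__main__":
    # Everyone is congested: the threshold grows.
    print(update_threshold(9.0, 9.0, 12.0, [10.0, 11.0], 0.5, 3.0))
    # Load has dropped: the threshold falls back toward its initial value.
    print(update_threshold(13.5, 9.0, 2.0, [1.0], 0.5, 3.0))
```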

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Alenizi, F.; Rana, O. Dynamically Controlling Offloading Thresholds in Fog Systems. Sensors 2021, 21, 2512. https://doi.org/10.3390/s21072512