Smartwatch User Authentication by Sensing Tapping Rhythms and Using One-Class DBSCAN

Abstract

1. Introduction

- We propose a new one-class classification algorithm, One-Class DBSCAN, which offers an additional solution to the one-class classification problem.

- We also propose a method that assesses the security level of a tapping rhythm and prompts the user to choose a more complex rhythm when the current one is too simple.

2. Related Work

2.1. Authentication Based on Physiological Biometrics

2.2. Authentication Based on Behavioral Biometrics

2.3. Authentication Based on Knowledge

3. Methodology

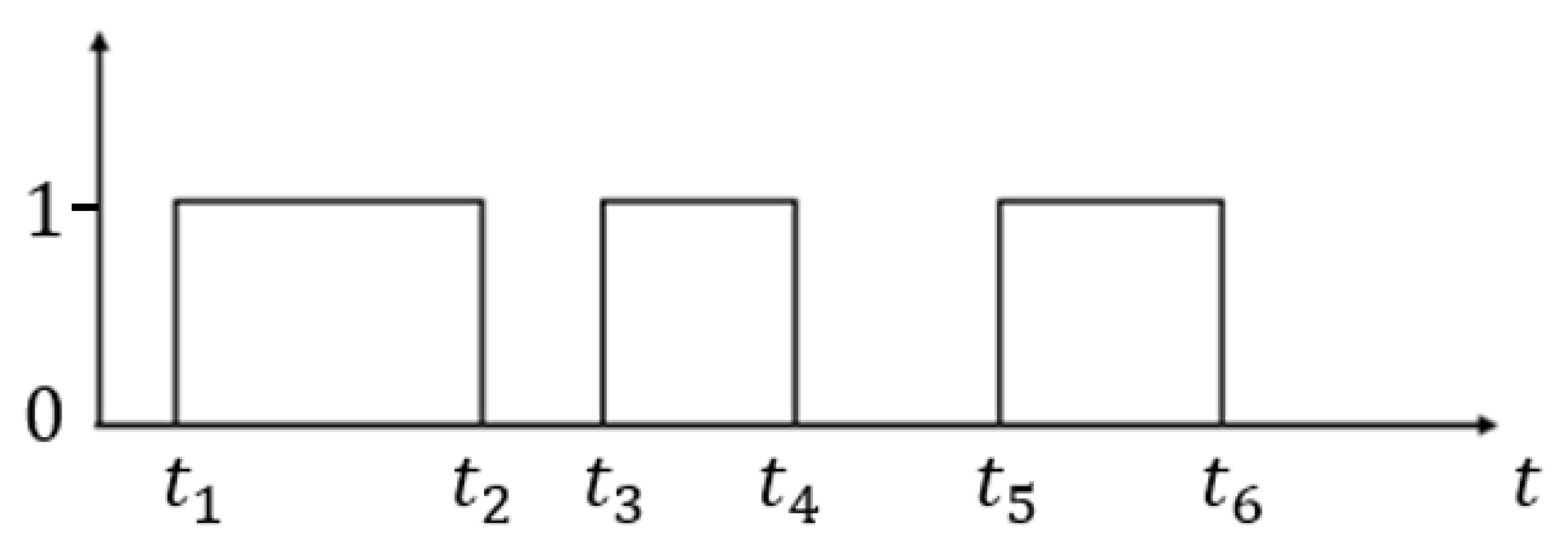

3.1. Feature Extraction

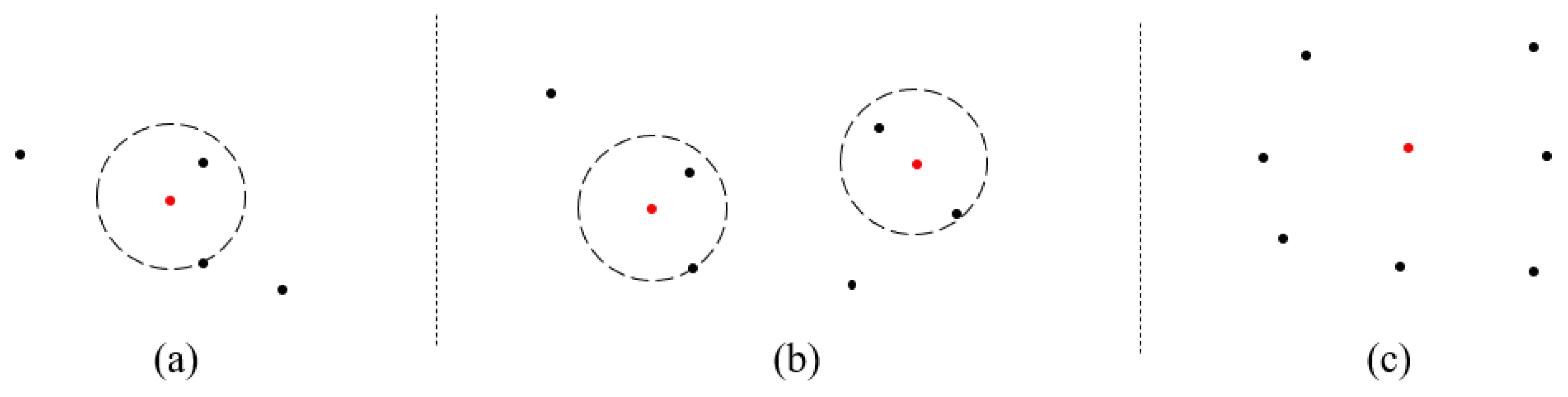

3.2. Model Training and Authentication

| Algorithm 1 One-Class DBSCAN |
|---|
| Input: |
| D: the dimension of the vector. |
| m: the size of the training data. |
| Training dataset: m vectors of dimension D. |
| ε: the parameter of One-Class DBSCAN that sets the distance threshold. |
| MinPts: the parameter of One-Class DBSCAN that sets the minimum number of data vectors within distance ε required to form a core object. |
| Function: |
| Output: the set of core objects. |

| Algorithm 2 Authentication |
|---|
| Input: |
| D: the dimension of the vector. |
| L: the number of core objects. |
| The set of core objects. |
| ε: the parameter of One-Class DBSCAN that sets the distance threshold. |
| v: the vector of the new sample. |
| Function: |
| Output: True or False, indicating whether the new sample belongs to this class. |
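The inputs and outputs listed for Algorithms 1 and 2 suggest a simple structure: training keeps only the core objects of the enrolled user's data, and authentication checks a new vector against them. The following is a minimal sketch under stated assumptions, not the authors' implementation: it assumes Euclidean distance, counts a point as its own neighbor (as in classic DBSCAN), and accepts a sample when it lies within the distance threshold of at least one core object. The names `train_one_class_dbscan`, `authenticate`, `eps`, and `min_pts` are hypothetical.

```python
import math
from typing import List, Sequence

Vector = Sequence[float]


def train_one_class_dbscan(data: Sequence[Vector], eps: float, min_pts: int) -> List[Vector]:
    """Return the training vectors that qualify as core objects.

    Assumption: a vector is a core object when at least `min_pts` training
    vectors (itself included) lie within Euclidean distance `eps` of it.
    """
    core_objects = []
    for x in data:
        neighbors = sum(1 for y in data if math.dist(x, y) <= eps)
        if neighbors >= min_pts:
            core_objects.append(x)
    return core_objects


def authenticate(core_objects: Sequence[Vector], eps: float, v: Vector) -> bool:
    """Accept `v` if it lies within `eps` of any core object (assumed rule)."""
    return any(math.dist(c, v) <= eps for c in core_objects)


# Toy 2-D data: three tightly grouped vectors and one outlier.
samples = [(0.0, 0.0), (0.05, 0.0), (0.0, 0.05), (1.0, 1.0)]
core = train_one_class_dbscan(samples, eps=0.1, min_pts=3)

print(core)                                   # [(0.0, 0.0), (0.05, 0.0), (0.0, 0.05)]
print(authenticate(core, 0.1, (0.02, 0.02)))  # True
print(authenticate(core, 0.1, (1.0, 1.0)))    # False
```

In this sketch the outlier at (1.0, 1.0) never becomes a core object, so a later sample near it is rejected even though it appeared in the training data. Training cost is quadratic in the number of training vectors, which stays small when only a handful of enrollment samples are collected.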

4. Experiment

4.1. Datasets and Evaluation Indicators

4.2. Implementation

| Algorithm 3 Evaluation process |


4.3. Ablation Study

4.4. Comparison

4.5. Running Time

5. Tapping Rhythm Security Improvement

6. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Seneviratne, S.; Hu, Y.; Nguyen, T.; Lan, G.; Khalifa, S.; Thilakarathna, K. A Survey of Wearable Devices and Challenges. IEEE Commun. Surv. Tutor. 2017, 19, 2573–2620.

2. Privacy Act 1988. Federal Register of Legislation. Available online: https://www.legislation.gov.au/Details/C2015C00279 (accessed on 1 January 2021).

3. Haghighi, M.S.; Nader, O.; Jolfaei, A. A Computationally Intelligent Hierarchical Authentication and Key Establishment Framework for the Internet of Things. IEEE Internet Things Mag. 2020, 3, 36–39.

4. Hoyle, R.; Templeman, R.; Armes, S.; Anthony, D.; Crandall, D.; Kapadia, A. Privacy behaviors of lifeloggers using wearable cameras. In Proceedings of the 2014 ACM International Joint Conference on Pervasive and Ubiquitous Computing, Seattle, WA, USA, 13–17 September 2014; pp. 571–582.

5. Saa, P.; Moscoso-Zea, O.; Lujan-Mora, S. Wearable Technology, Privacy Issues. In Proceedings of the International Conference on Information Technology & Systems, Libertad City, Ecuador, 10–12 January 2018; pp. 518–527.

6. Stojmenovic, I.; Wen, S.; Huang, X.; Luan, H. An Overview of Fog Computing and its Security Issues. Concurr. Comput. Pract. Exp. 2016, 28, 2991–3005.

7. Sametinger, J.; Rozenblit, J.; Lysecky, R.; Ott, P. Security Challenges for Medical Devices. Commun. ACM 2015, 58, 74–82.

8. Chen, X.; Li, C.; Wang, D.; Wen, S.; Zhang, J.; Nepal, S.; Ren, K. Android HIV: A Study of Repackaging Malware for Evading Machine-Learning Detection. IEEE Trans. Inf. Forensics Secur. 2020, 15, 987–1001.

9. Satyanarayanan, M. The emergence of edge computing. Computer 2016, 50, 30–39.

10. Weisong, S.; Schahram, D. The Promise of Edge Computing. Computer 2016, 49, 78–81.

11. Yu, J.; Hou, B. Survey on IMD and Wearable Devices Security Threats and Protection Methods. In Proceedings of the International Conference on Cloud Computing and Security, Haikou, China, 8–10 June 2018; pp. 90–101.

12. Internet of Things Security Study: Smartwatches. Available online: https://www.ftc.gov/system/files/documents/public_comments/2015/10/00050-98093.pdf (accessed on 1 January 2021).

13. Lee, J.J.; Noh, S.; Park, K.R.; Kim, J. Iris Recognition in Wearable Computer. In Proceedings of the International Conference on Biometric Authentication, Hong Kong, China, 15–17 July 2004; pp. 475–483.

14. Wang, T.; Song, Z.; Ma, J.; Xiong, Y.; Jie, Y. An anti-fake iris authentication mechanism for smart glasses. In Proceedings of the 2013 3rd International Conference on Consumer Electronics, Communications and Networks, Xianning, China, 20–22 November 2013; pp. 84–87.

15. van der Haar, D. CaNViS: A cardiac and neurological-based verification system that uses wearable sensors. In Proceedings of the Third International Conference on Digital Information, Networking, and Wireless Communications, Moscow, Russia, 3–5 February 2015; pp. 99–104.

16. Chun, S.Y.; Kang, J.H.; Kim, H.; Lee, C.; Oakley, I.; Kim, S.P. ECG based user authentication for wearable devices using short time Fourier transform. In Proceedings of the 39th International Conference on Telecommunications and Signal, Marrakech, Morocco, 20–23 October 2016; pp. 656–659.

17. Cornelius, C.; Peterson, R.; Skinner, J.; Halter, R.; Kotz, D. A wearable system that knows who wears it. In Proceedings of the 12th annual International Conference on Mobile Systems, Applications, and Services, Bretton Woods, NH, USA, 16–19 June 2014; pp. 55–67.

18. Yang, L.; Wang, W.; Zhang, Q. VibID: User identification through bio-vibrometry. In Proceedings of the 15th International Conference on Information Processing in Sensor Networks, Vienna, Austria, 11–14 April 2016; pp. 1–12.

19. Wang, Z.; Shen, C.; Chen, Y. Handwaving authentication: Unlocking your smartwatch through handwaving biometrics. In Proceedings of the Chinese Conference on Biometric Recognition, Beijing, China, 28–29 October 2017; pp. 545–553.

20. Kwapisz, J.R.; Weiss, G.M.; Moore, S.A. Activity Recognition using Cell Phone Accelerometers. ACM SIGKDD Explor. Newsl. 2010, 12, 74–82.

21. Zeng, Y.; Pande, A.; Zhu, J.; Mohapatra, P. WearIA: Wearable device implicit authentication based on activity information. In Proceedings of the IEEE International Symposium on A World of Wireless, Mobile and Multimedia Networks, Macau, China, 12–15 June 2017; pp. 1–9.

22. Yang, J.; Li, Y.; Xie, M. MotionAuth: Motion-based authentication for wrist worn smart devices. In Proceedings of the IEEE International Conference on Pervasive Computing and Communication Workshops, St. Louis, MO, USA, 23–27 March 2015; pp. 550–555.

23. Shen, C.; Wang, Z.; Si, C.; Chen, Y.; Su, X. Waving Gesture Analysis for User Authentication in Mobile Environment. IEEE Netw. Mag. 2020, 34, 57–63.

24. Wu, J.; Pan, G.; Zhang, D.; Qi, G.; Li, S. Gesture Recognition with a 3-D Accelerometer. In Proceedings of the International Conference on Ubiquitous Intelligence and Computing, Brisbane, Australia, 7–9 July 2009; pp. 25–38.

25. Akl, A.; Feng, C.; Valaee, S. A Novel Accelerometer-Based Gesture Recognition System. IEEE Trans. Signal Process. 2011, 59, 6197–6205.

26. Liu, J.; Zhong, L.; Wickramasuriya, J.; Vasudevan, V. uWave: Accelerometer-based personalized gesture recognition and its applications. Pervasive Mob. Comput. 2009, 5, 657–675.

27. Hutchins, B.; Reddy, A.; Jin, W.; Zhou, M.; Li, M.; Yang, L. Beat-PIN: A User Authentication Mechanism for Wearable Devices Through Secret Beats. In Proceedings of the Asia Conference on Computer and Communications Security, Incheon, Korea, 4–8 June 2018; pp. 101–115.

28. Shrestha, P.; Saxena, N. An Offensive and Defensive Exposition of Wearable Computing. ACM Comput. Surv. 2018, 50, 1–39.

29. Alzubaidi, A.; Kalita, J. Authentication of smartphone users using behavioral biometrics. IEEE Commun. Surv. Tutor. 2016, 18, 1998–2026.

30. Lin, G.; Wen, S.; Han, Q.L.; Zhang, J.; Xiang, Y. Software Vulnerability Detection Using Deep Neural Networks: A Survey. Proc. IEEE 2020, 108, 1825–1848.

31. Mare, S.; Markham, A.M.; Cornelius, C.; Peterson, R.; Kotz, D. ZEBRA: Zero-Effort Bilateral Recurring Authentication. In Proceedings of the 2014 IEEE Symposium on Security and Privacy, Berkeley, CA, USA, 18–21 May 2014; pp. 705–720.

32. Ren, Y.; Chen, Y.; Chuah, M.C.; Yang, J. Smartphone based user verification leveraging gait recognition for mobile healthcare systems. In Proceedings of the 2013 IEEE International Conference on Sensing, Communications and Networking, New Orleans, LA, USA, 24–27 June 2013; pp. 149–157.

33. Draffin, B.; Zhu, J.; Zhang, J. KeySens: Passive User Authentication through Micro-behavior Modeling of Soft Keyboard Interaction. In Proceedings of the International Conference on Mobile Computing, Applications, and Services, Paris, France, 7–8 November 2013; pp. 184–201.

34. Frank, M.; Biedert, R.; Ma, E.; Martinovic, I.; Song, D. Touchalytics: On the Applicability of Touchscreen Input as a Behavioral Biometric for Continuous Authentication. IEEE Trans. Inf. Forensics Secur. 2013, 8, 136–148.

35. De Luca, A.; Hang, A.; Brudy, F.; Lindner, C.; Hussmann, H. Touch me once and I know it’s you! Implicit authentication based on touch screen patterns. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, Austin, TX, USA, 5–10 May 2012; pp. 987–996.

36. Li, S.; Ashok, A.; Zhang, Y.; Xu, C.; Lindqvist, J.; Gruteser, M. Whose move is it anyway? Authenticating smart wearable devices using unique head movement patterns. In Proceedings of the IEEE International Conference on Pervasive Computing and Communications, Sydney, Australia, 14–19 March 2016; pp. 1–9.

37. Vasaki, P.; Chan, M.Y.; Adnan, B.A.A. Mobile Authentication Using Tapping Behavior. In Proceedings of the International Conference on Advances in Cyber Security, Penang, Malaysia, 30 July–1 August 2019; pp. 182–194.

38. Satvik, K.; Ahmed, S.A. Woodpecker: Secret Back-of-Device Tap Rhythms to Authenticate Mobile Users. In Proceedings of the 2020 IEEE International Conference on Systems, Man, and Cybernetics, Toronto, ON, Canada, 11–14 October 2020; pp. 2727–2733.

39. Ester, M.; Kriegel, H.P.; Sander, J.; Xu, X. A Density-Based Algorithm for Discovering Clusters in Large Spatial Databases with Noise. In Proceedings of the Second International Conference on Knowledge Discovery and Data Mining, Portland, OR, USA, 2–4 August 1996; pp. 226–231.

40. Comaniciu, D.; Meer, P. Mean shift: A robust approach toward feature space analysis. IEEE Trans. Pattern Anal. Mach. Intell. 2002, 24, 603–619.

41. Liu, F.T.; Ting, K.M.; Zhou, Z.H. Isolation forest. In Proceedings of the 2008 Eighth IEEE International Conference on Data Mining, Pisa, Italy, 15–19 December 2008; pp. 413–422.

42. Safavian, S.R.; Landgrebe, D. A survey of decision tree classifier methodology. IEEE Trans. Syst. Man Cybern. 1991, 21, 660–674.

43. Pregibon, D. Logistic regression diagnostics. Ann. Stat. 1981, 9, 705–724.

| N | 5 | 6 | 7 | 8 | 9 | 10 | Total |
|---|---|---|---|---|---|---|---|
| Num | 1700 | 1770 | 1330 | 790 | 260 | 260 | 6110 |

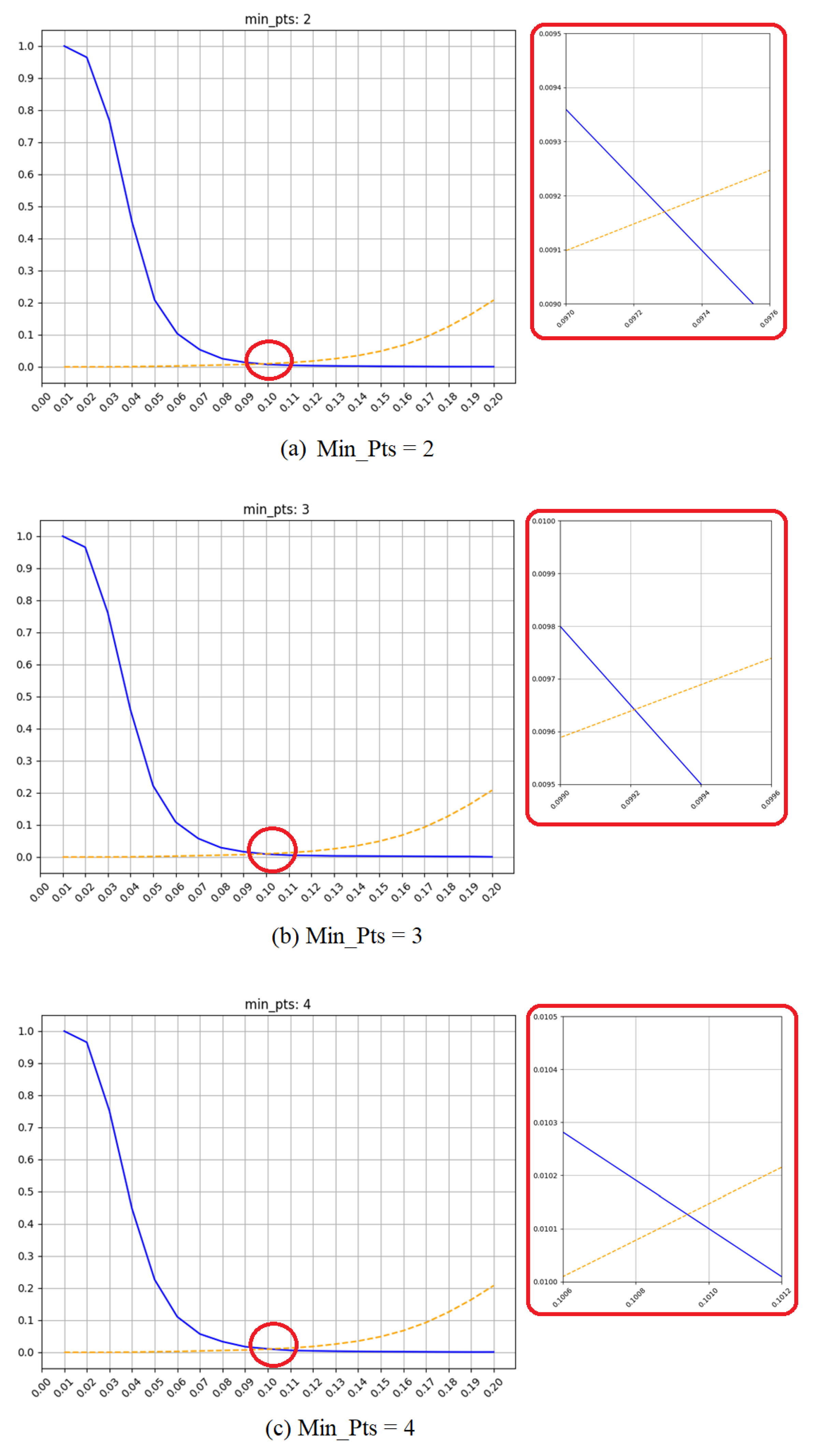

| MinPts (minimum number of neighbors) | 2 | 3 | 4 |
|---|---|---|---|
| ε (distance threshold) | 0.0973 | 0.0992 | 0.1009 |
| | 0.92% | 0.96% | 1.01% |

| N | 5 | 6 | 7 | 8 |
|---|---|---|---|---|
| | 1.11% | 0.89% | 0.85% | 0.63% |
| | 1.16% | 0.84% | 0.83% | 0.69% |

| Experiment | Optimal Parameter(s) | |
|---|---|---|
| Our method (our features and One-Class DBSCAN) | , | 0.92% |
| Method of Hutchins et al. [27] (features from [27] and Vector Comparison) | | 4.04% |
| Features from Hutchins et al. [27] and One-Class DBSCAN | , | 2.51% |
| Our features and Vector Comparison | | 1.06% |
| Mean Shift | | 53.4% |
| Isolation Forest | , | 30.6% |

| Experiment | Optimal Parameter(s) | | |
|---|---|---|---|
| Decision Tree | = gini, = best, = 3, = 0.8 | 7.0% | 24.9% |
| Logistic Regression | | 0.09% | 16.8% |

| N | Training avg (ms) | Training std (ms) | Authentication avg (ms) | Authentication std (ms) |
|---|---|---|---|---|
| 5 | 49.1 | 9.58 | 8.9 | 4.12 |
| 6 | 51.3 | 8.12 | 9.6 | 3.66 |
| 7 | 53.8 | 6.01 | 11.2 | 3.73 |
| 8 | 55.6 | 8.24 | 12.6 | 2.53 |
| avg (ms) | 52.45 | | 10.58 | |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zhang, H.; Xiao, X.; Ni, S.; Dou, C.; Zhou, W.; Xia, S. Smartwatch User Authentication by Sensing Tapping Rhythms and Using One-Class DBSCAN. Sensors 2021, 21, 2456. https://doi.org/10.3390/s21072456
