Manipulation Planning for Object Re-Orientation Based on Semantic Segmentation Keypoint Detection

Abstract

1. Introduction

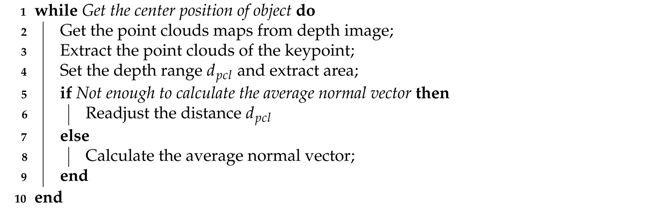

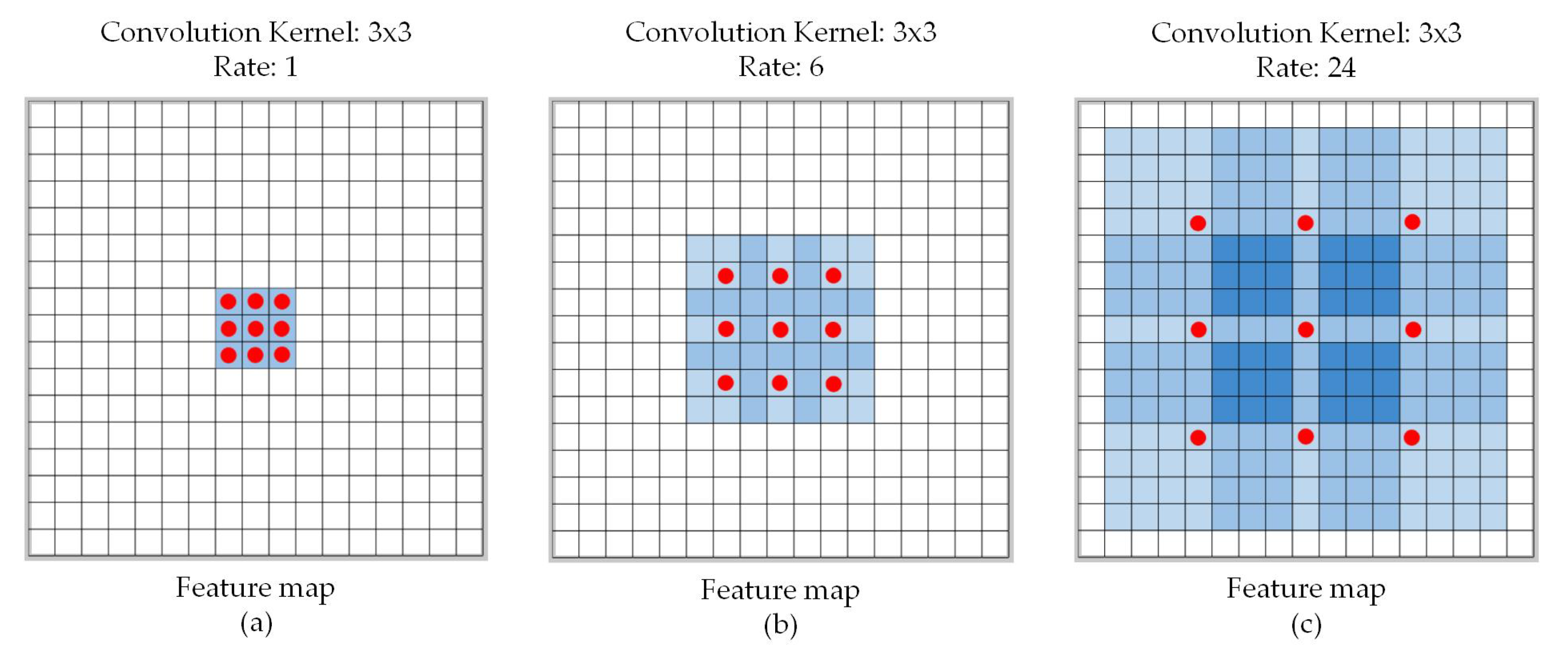

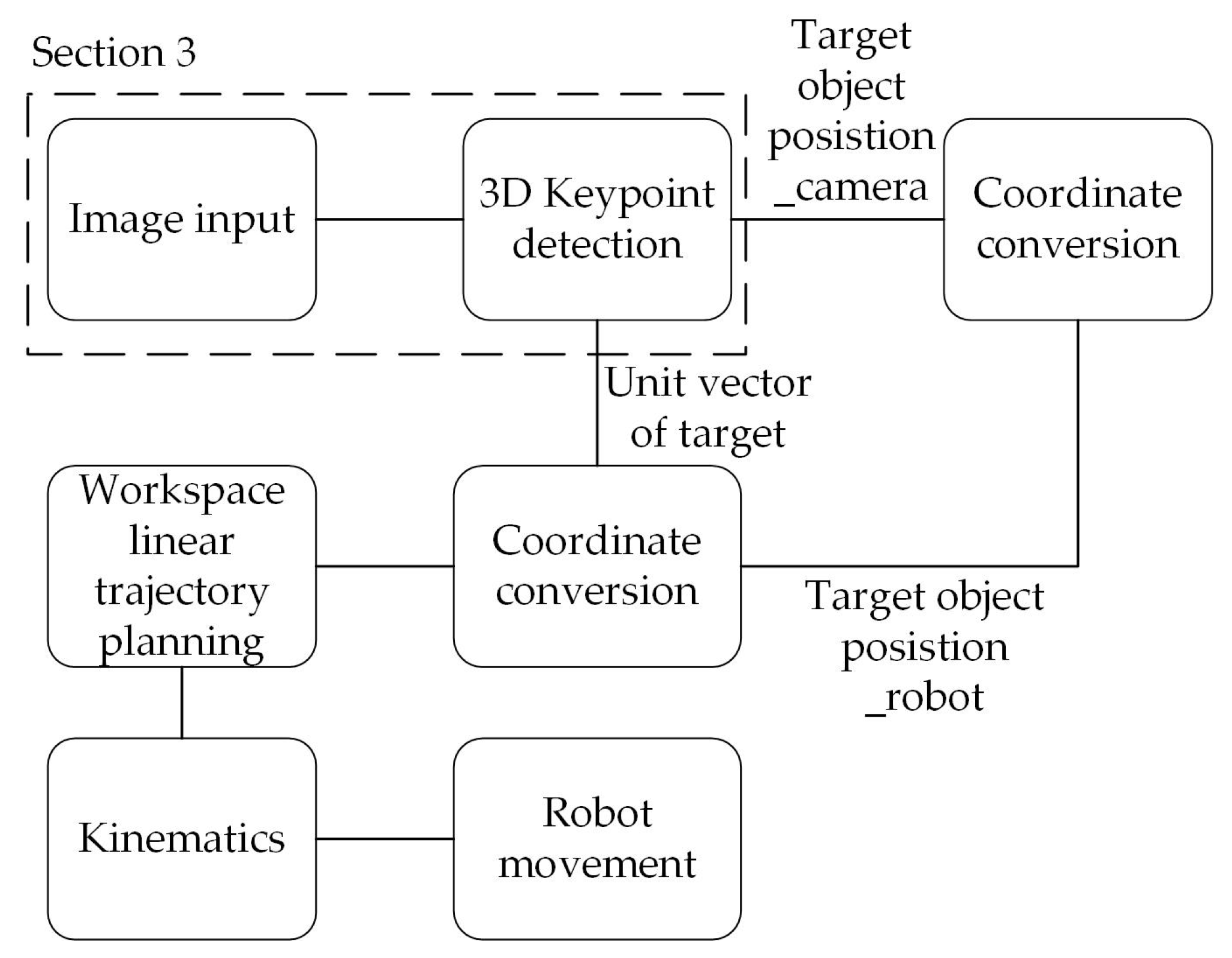

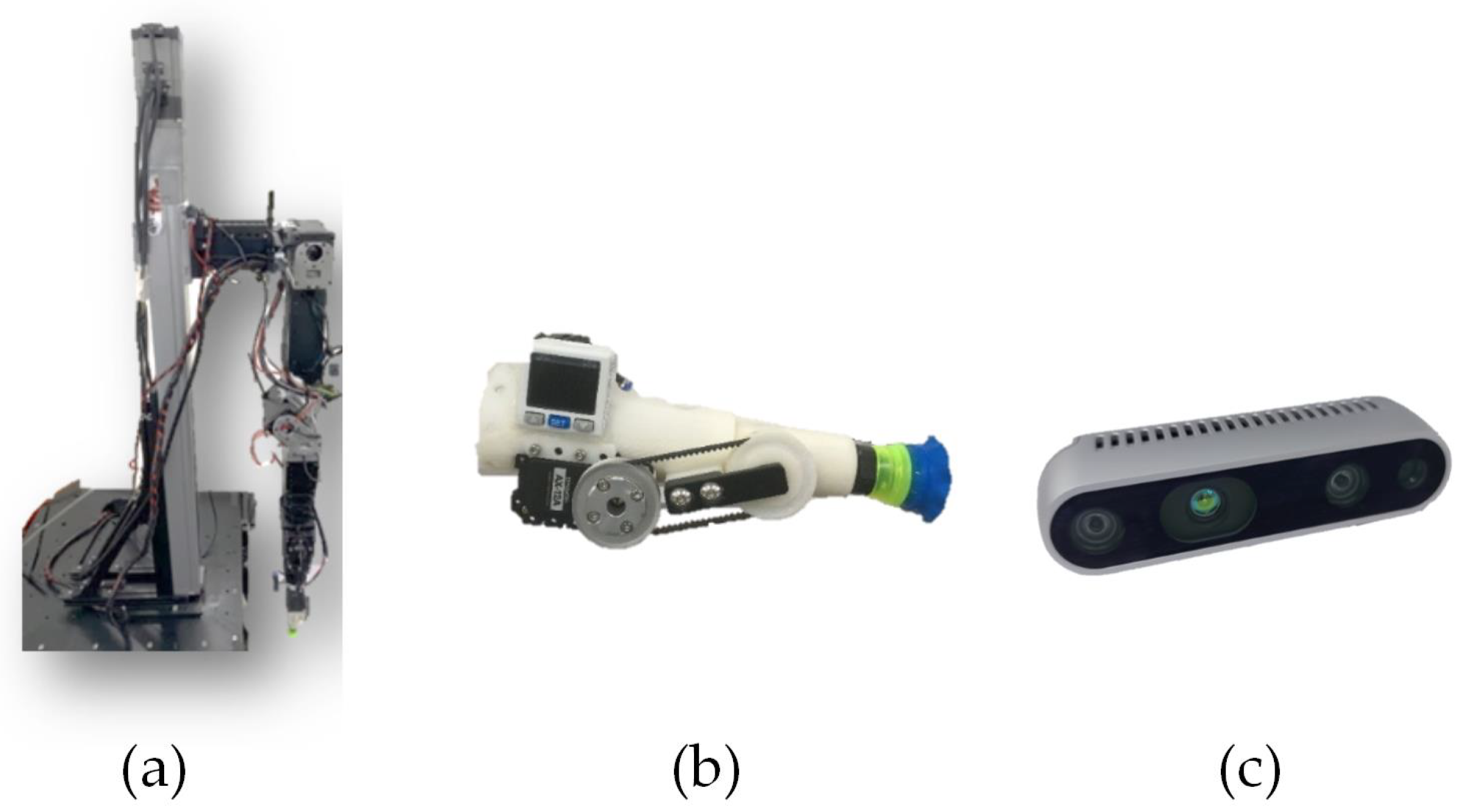

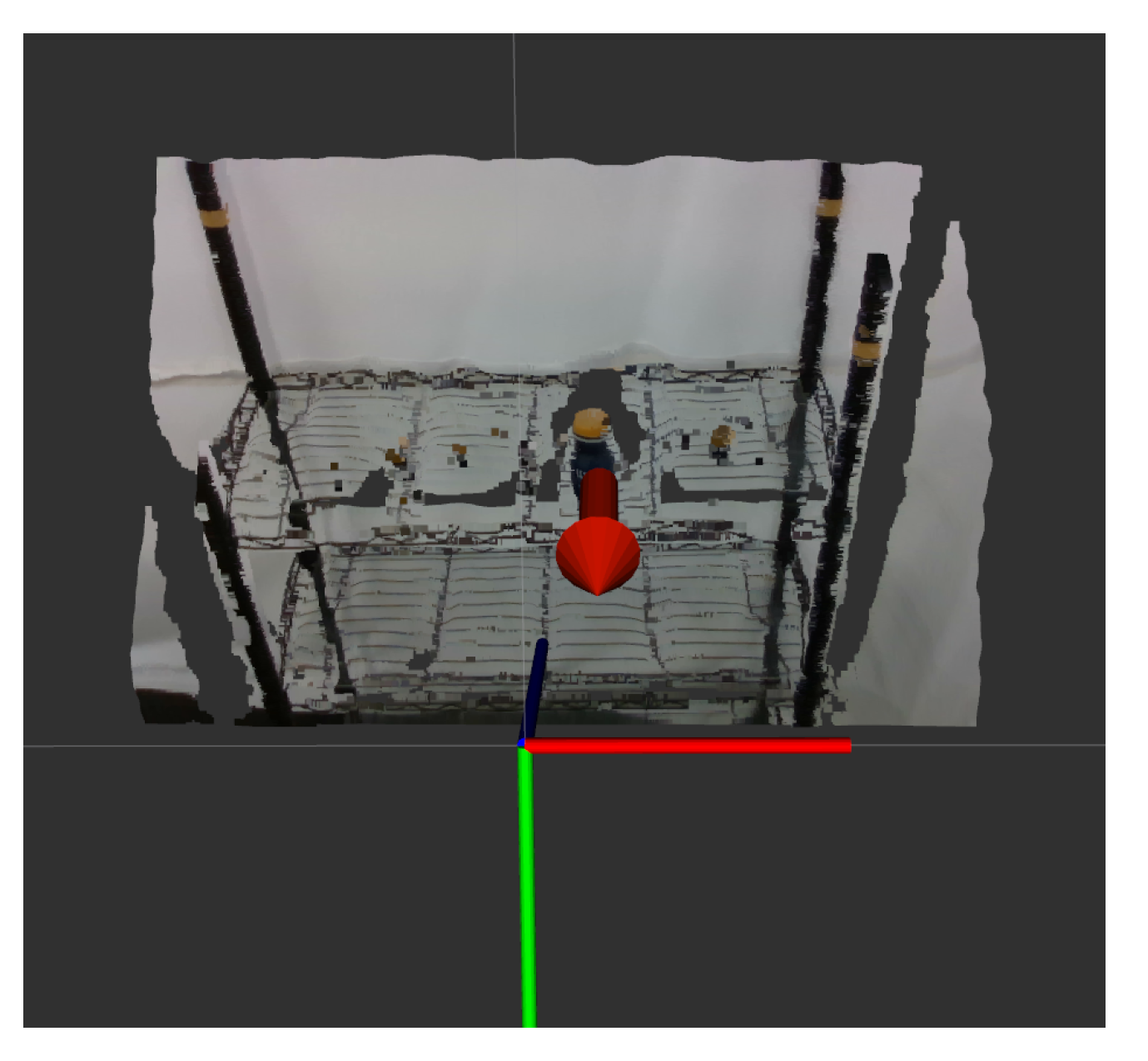

2. System Structure

3. 3D Keypoint Detection System

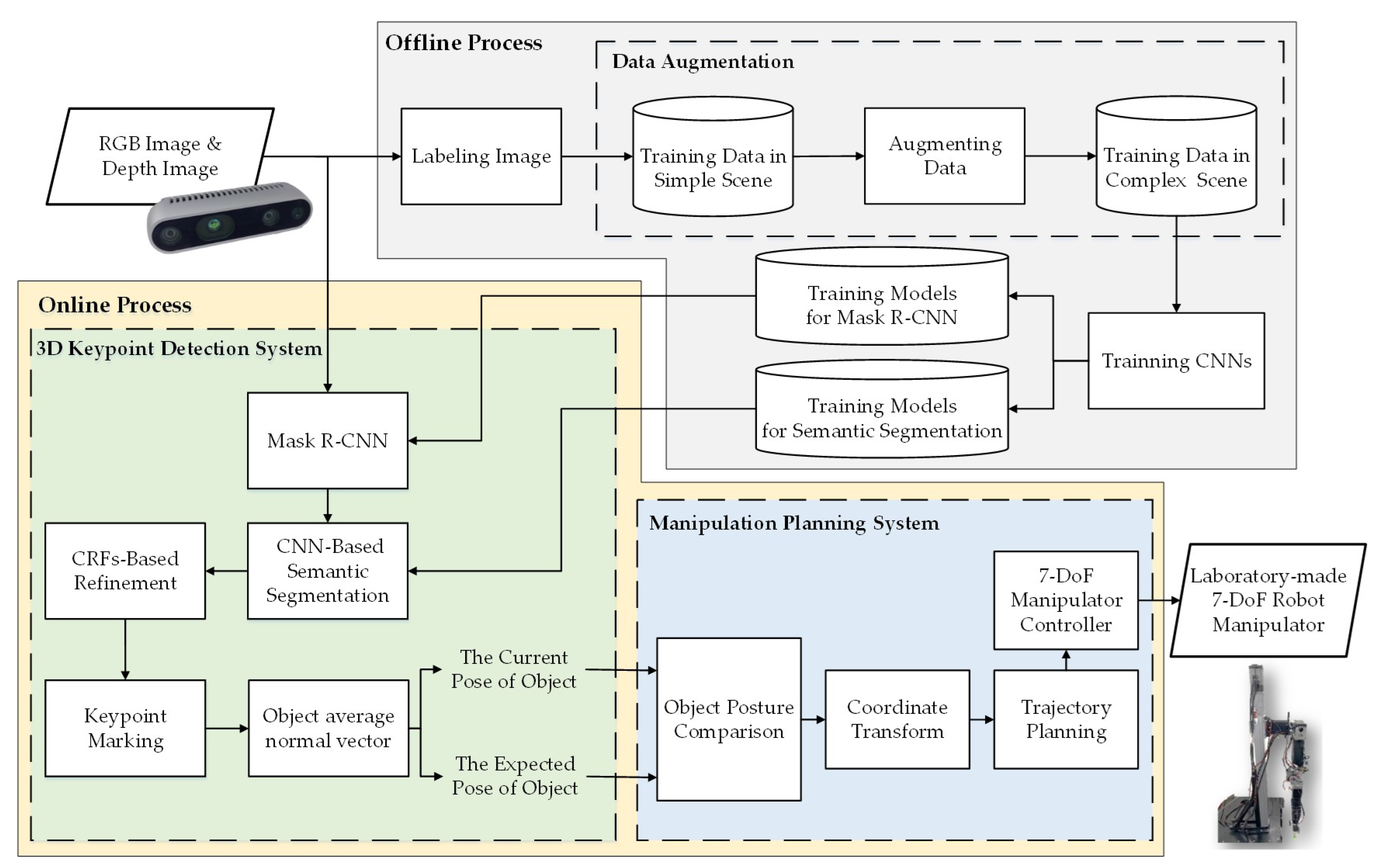

3.1. Mask R-CNN

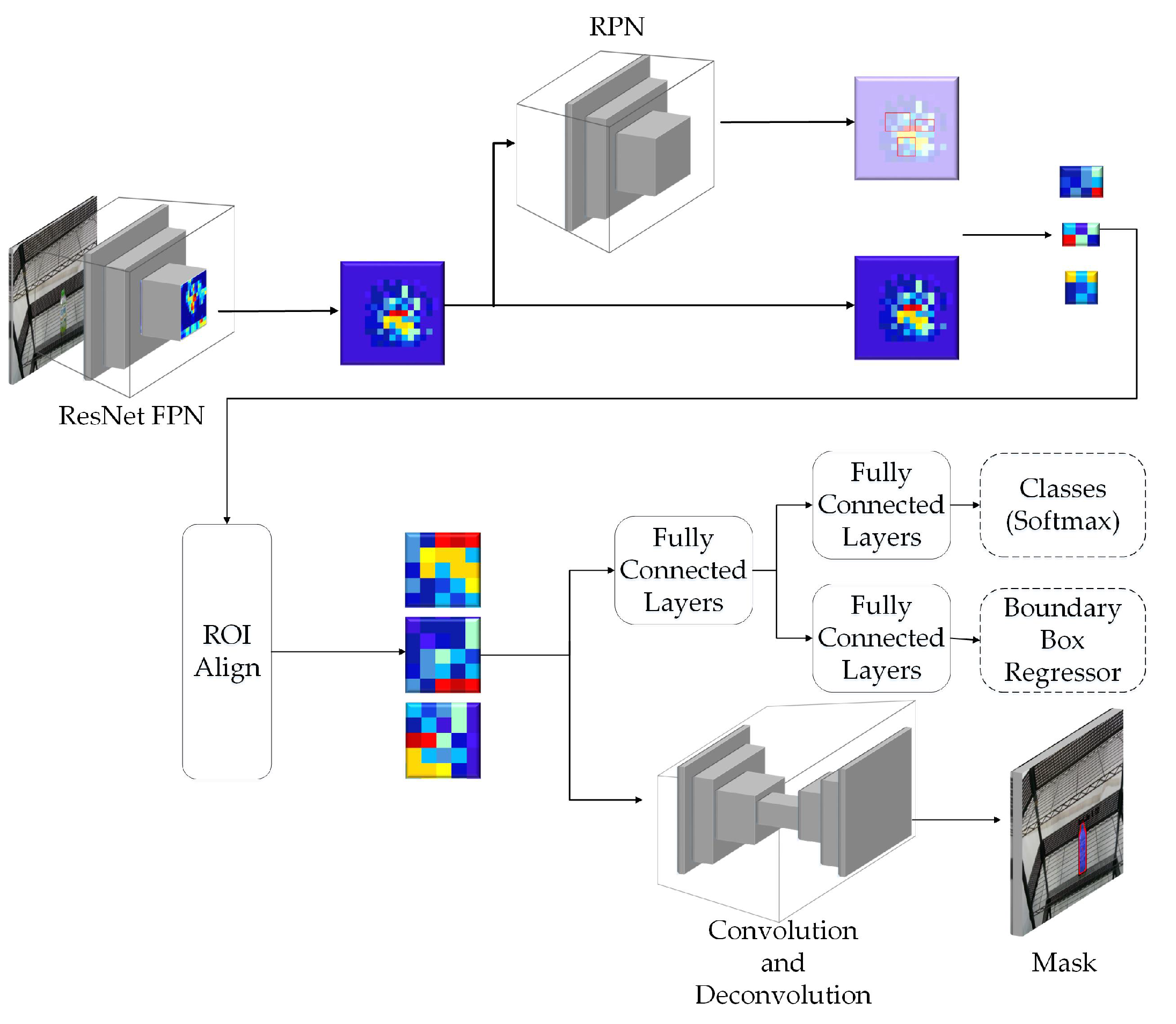

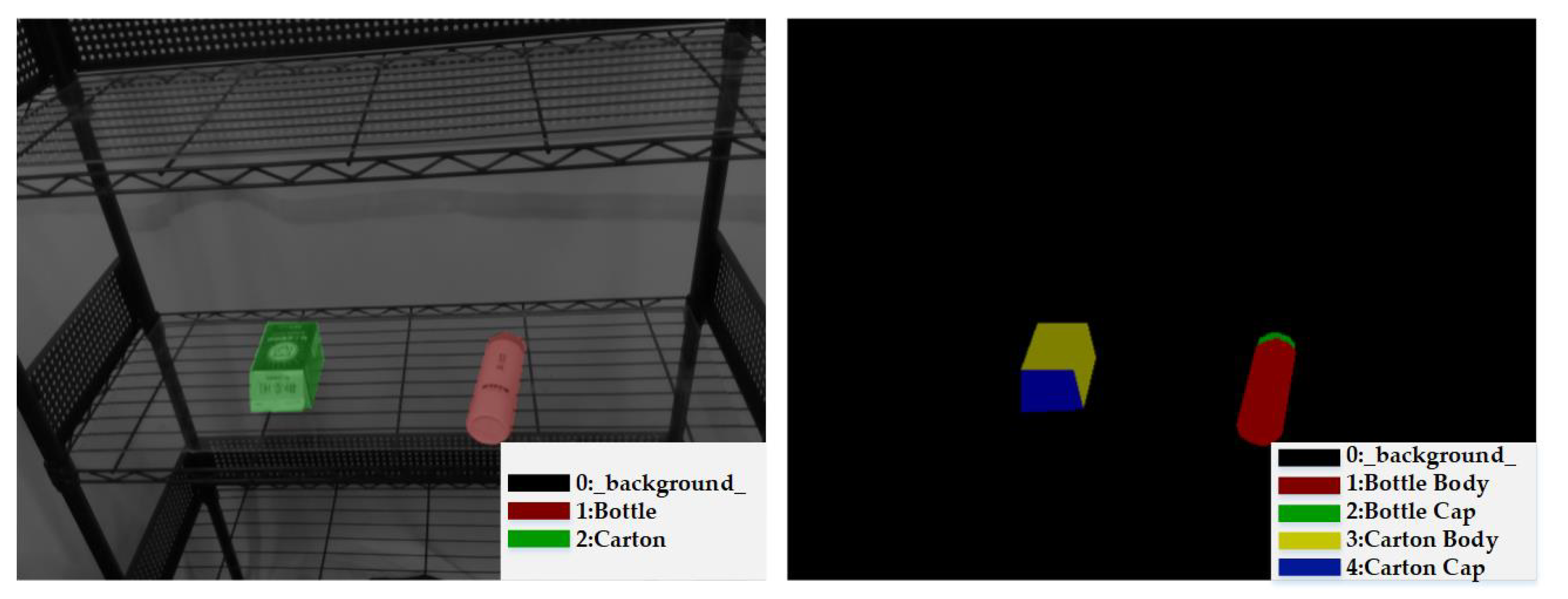

3.2. CNN-Based Semantic Segmentation

3.3. CRFs-Based Refinement

3.4. Keypoint Annotation and Object Average Normal Vector

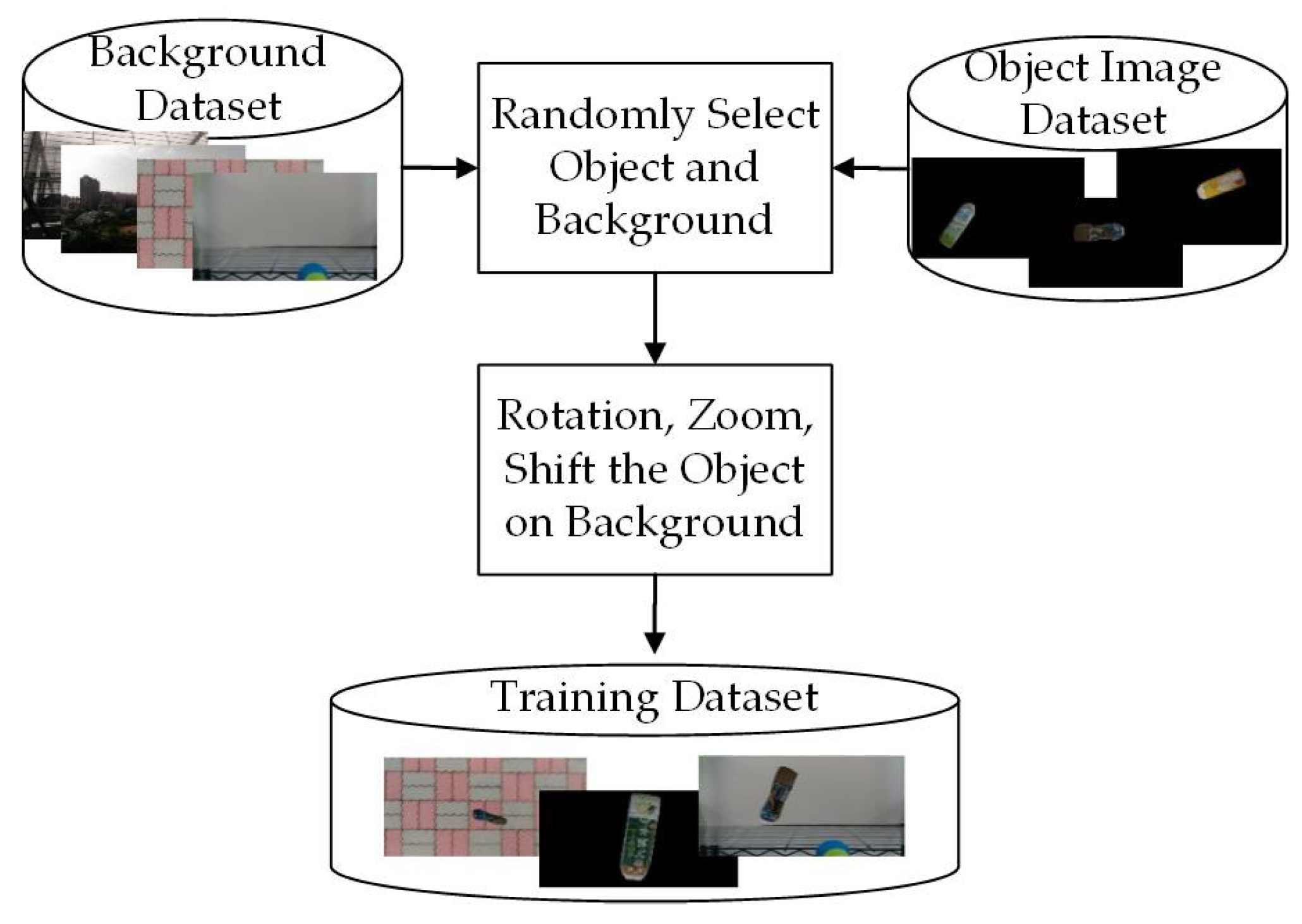
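Here is a minimal sketch of the 2D-to-3D keypoint step in Section 3.4. It makes three hypothetical assumptions: each keypoint is predicted as its own class in the segmentation label map, an aligned depth image in meters is available, and the camera follows an undistorted pinhole model with intrinsics `fx`, `fy`, `cx`, `cy`. The helper name `keypoint_3d` is illustrative and does not come from the paper.

```python
from typing import Optional, Sequence, Tuple


def keypoint_3d(
    labels: Sequence[Sequence[int]],
    depth_m: Sequence[Sequence[float]],
    keypoint_class: int,
    fx: float,
    fy: float,
    cx: float,
    cy: float,
) -> Optional[Tuple[float, float, float]]:
    """Back-project the centroid of one keypoint class to camera coordinates.

    Hypothetical helper (assumption, not the paper's code):
    labels[v][u] is the predicted class of pixel (u, v) and
    depth_m[v][u] is the aligned depth in meters (0 means no measurement).
    """
    us, vs, zs = [], [], []
    for v, row in enumerate(labels):
        for u, cls in enumerate(row):
            z = depth_m[v][u]
            if cls == keypoint_class and z > 0:
                us.append(u)
                vs.append(v)
                zs.append(z)
    if not us:
        return None  # keypoint not visible, or no valid depth on its pixels
    u_c = sum(us) / len(us)
    v_c = sum(vs) / len(vs)
    z_c = sum(zs) / len(zs)  # average depth tolerates isolated depth holes
    # Pinhole model without lens distortion: X = (u - cx) Z / fx, Y = (v - cy) Z / fy.
    return ((u_c - cx) * z_c / fx, (v_c - cy) * z_c / fy, z_c)
```

Averaging depth over all keypoint pixels, rather than reading a single pixel, is a design choice that keeps the estimate usable when individual depth readings are missing. With a RealSense camera, librealsense's `rs2_deproject_pixel_to_point` does the equivalent back-projection using the device's calibrated intrinsics.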

3.5. Data Augmentation for Training Dataset Generation

Algorithm 1: Get the average normal vector from point clouds.
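The body of Algorithm 1 does not appear here. One common way to get an average normal vector works on an organized point cloud, which stores one 3D point per depth pixel. It estimates a normal at every object pixel from the cross product of neighboring-point differences, orients each normal toward the camera, and averages the results. The sketch below follows that approach under these stated assumptions. Its function names are hypothetical. PCL, listed in the abbreviations, provides production normal estimators such as `pcl::NormalEstimation` and `pcl::IntegralImageNormalEstimation`.

```python
import math
from typing import Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def _sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def _unit(a: Vec3) -> Optional[Vec3]:
    n = math.sqrt(a[0] ** 2 + a[1] ** 2 + a[2] ** 2)
    return None if n == 0 else (a[0] / n, a[1] / n, a[2] / n)


def average_normal(points: Sequence[Sequence[Optional[Vec3]]],
                   mask: Sequence[Sequence[bool]]) -> Optional[Vec3]:
    """Average unit normal of the masked object in an organized point cloud.

    points[v][u] is the camera-frame point of pixel (u, v), or None if invalid;
    mask[v][u] is True for pixels that belong to the object (assumed layout).
    """
    acc = [0.0, 0.0, 0.0]
    for v in range(1, len(points) - 1):
        for u in range(1, len(points[0]) - 1):
            if not mask[v][u]:
                continue
            p = points[v][u]
            left, right = points[v][u - 1], points[v][u + 1]
            up, down = points[v - 1][u], points[v + 1][u]
            if None in (p, left, right, up, down):
                continue
            # Normal = cross product of horizontal and vertical central differences.
            n = _unit(_cross(_sub(right, left), _sub(down, up)))
            if n is None:
                continue
            # Flip so the normal faces the camera at the origin (n . p < 0).
            if n[0] * p[0] + n[1] * p[1] + n[2] * p[2] > 0:
                n = (-n[0], -n[1], -n[2])
            for i in range(3):
                acc[i] += n[i]
    return _unit((acc[0], acc[1], acc[2]))  # None if no valid object pixel
```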

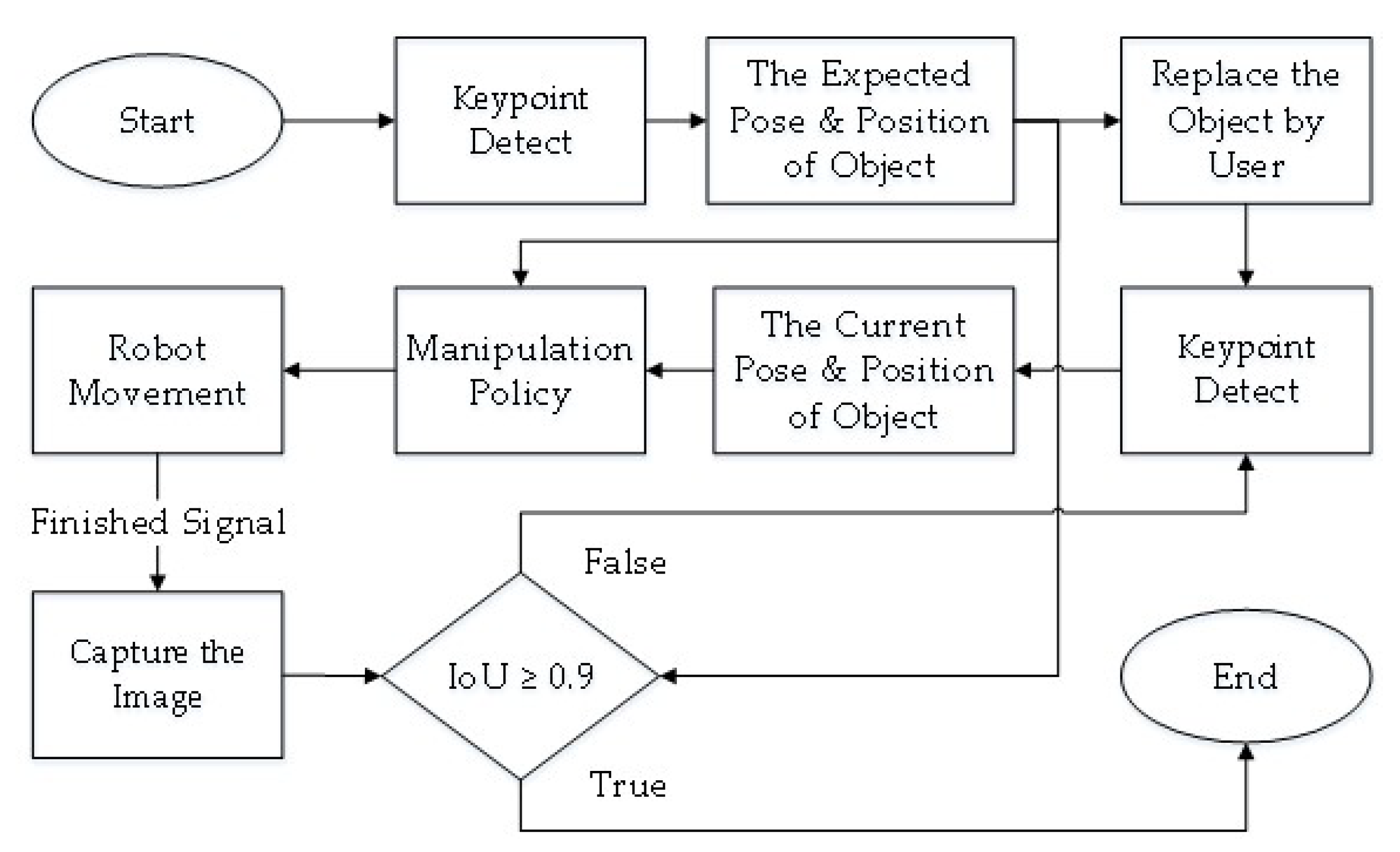
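Table 1 reports that a few dozen manually labeled photos and 10 random scenes were expanded into thousands of automatically generated training images. The text here does not describe the augmentation procedure itself. The sketch below therefore assumes a simple copy-paste scheme: masked object crops from labeled photos are pasted at random positions onto a background scene, and the label map is updated at the same time. Images are small grayscale integer grids only so that the example has no dependencies. All names are hypothetical.

```python
import random
from typing import List, Sequence, Tuple

Grid = List[List[int]]


def paste_object(image: Sequence[Sequence[int]], labels: Sequence[Sequence[int]],
                 crop: Sequence[Sequence[int]], crop_mask: Sequence[Sequence[bool]],
                 class_id: int, rng: random.Random) -> Tuple[Grid, Grid]:
    """Paste one masked object crop at a random position (assumed scheme).

    Returns new (image, labels) grids and leaves the inputs unchanged. Masked
    pixels overwrite both grids, so later pastes occlude earlier ones.
    """
    h, w = len(image), len(image[0])
    ch, cw = len(crop), len(crop[0])
    if ch > h or cw > w:
        raise ValueError("crop is larger than the background scene")
    top = rng.randint(0, h - ch)  # randint includes both end points
    left = rng.randint(0, w - cw)
    out_img = [list(row) for row in image]
    out_lbl = [list(row) for row in labels]
    for dv in range(ch):
        for du in range(cw):
            if crop_mask[dv][du]:
                out_img[top + dv][left + du] = crop[dv][du]
                out_lbl[top + dv][left + du] = class_id
    return out_img, out_lbl


def synthesize(background: Sequence[Sequence[int]],
               objects: Sequence[Tuple[Grid, List[List[bool]], int]],
               n_objects: int, rng: random.Random) -> Tuple[Grid, Grid]:
    """Build one synthetic sample; objects holds (crop, crop_mask, class_id)."""
    image = [list(row) for row in background]
    labels = [[0] * len(background[0]) for _ in background]  # 0 = background class
    for _ in range(n_objects):
        crop, crop_mask, class_id = rng.choice(objects)
        image, labels = paste_object(image, labels, crop, crop_mask, class_id, rng)
    return image, labels
```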

4. Manipulation Planning System

4.1. Object Pose Comparison

4.2. Robot Operation
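Slerp appears in the abbreviations, and Shoemake's quaternion-curve paper is among the references. It interpolates between two orientations at constant angular velocity. The minimal sketch below makes a hypothetical assumption: the robot operation moves the end effector from its current orientation to the target orientation through evenly spaced quaternion waypoints. The names are illustrative.

```python
import math
from typing import List, Tuple

Quat = Tuple[float, float, float, float]  # (w, x, y, z), unit length


def slerp(q0: Quat, q1: Quat, t: float) -> Quat:
    """Spherical linear interpolation between unit quaternions, t in [0, 1]."""
    dot = sum(a * b for a, b in zip(q0, q1))
    if dot < 0.0:
        # q and -q are the same rotation; flip to take the shorter arc.
        q1 = (-q1[0], -q1[1], -q1[2], -q1[3])
        dot = -dot
    if dot > 0.9995:
        # Nearly identical orientations: normalized linear interpolation is stable.
        r = [a + t * (b - a) for a, b in zip(q0, q1)]
        n = math.sqrt(sum(c * c for c in r))
        return (r[0] / n, r[1] / n, r[2] / n, r[3] / n)
    theta = math.acos(dot)
    s0 = math.sin((1.0 - t) * theta) / math.sin(theta)
    s1 = math.sin(t * theta) / math.sin(theta)
    return (s0 * q0[0] + s1 * q1[0], s0 * q0[1] + s1 * q1[1],
            s0 * q0[2] + s1 * q1[2], s0 * q0[3] + s1 * q1[3])


def orientation_waypoints(q_start: Quat, q_goal: Quat, steps: int) -> List[Quat]:
    """Evenly spaced orientations from start to goal, end points included."""
    if steps < 2:
        raise ValueError("steps must be at least 2")
    return [slerp(q_start, q_goal, i / (steps - 1)) for i in range(steps)]
```

Flipping the goal when the dot product is negative keeps the motion on the shorter of the two arcs, because `q` and `-q` describe the same rotation.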

5. Experimental Results

5.1. Image Recognition Result

5.1.1. CNN-Based Object Detection

5.1.2. Object Pose Estimation
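The pose-estimation accuracy table reports mean absolute errors (MAE) of position (cm) and rotation (degree) over 15 tests per scene. A minimal per-component MAE sketch follows. It assumes per-trial estimates and ground-truth values are available as equal-length tuples, and the names are hypothetical. Wrapping angle differences is an added assumption, included so that errors near ±180° are not overstated.

```python
from typing import List, Sequence


def wrap_deg(angle: float) -> float:
    """Wrap an angle difference into [-180, 180) degrees."""
    return (angle + 180.0) % 360.0 - 180.0


def mae(estimates: Sequence[Sequence[float]],
        ground_truth: Sequence[Sequence[float]],
        angular: bool = False) -> List[float]:
    """Per-component mean absolute error over N trials.

    Set angular=True for rotation errors in degrees, so that 359 vs 1
    counts as a 2-degree error rather than 358.
    """
    if not estimates or len(estimates) != len(ground_truth):
        raise ValueError("need the same non-zero number of estimates and ground truths")
    dims = len(estimates[0])
    totals = [0.0] * dims
    for est, gt in zip(estimates, ground_truth):
        for i in range(dims):
            diff = est[i] - gt[i]
            totals[i] += abs(wrap_deg(diff) if angular else diff)
    return [t / len(estimates) for t in totals]
```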

5.2. Real-World Demonstration

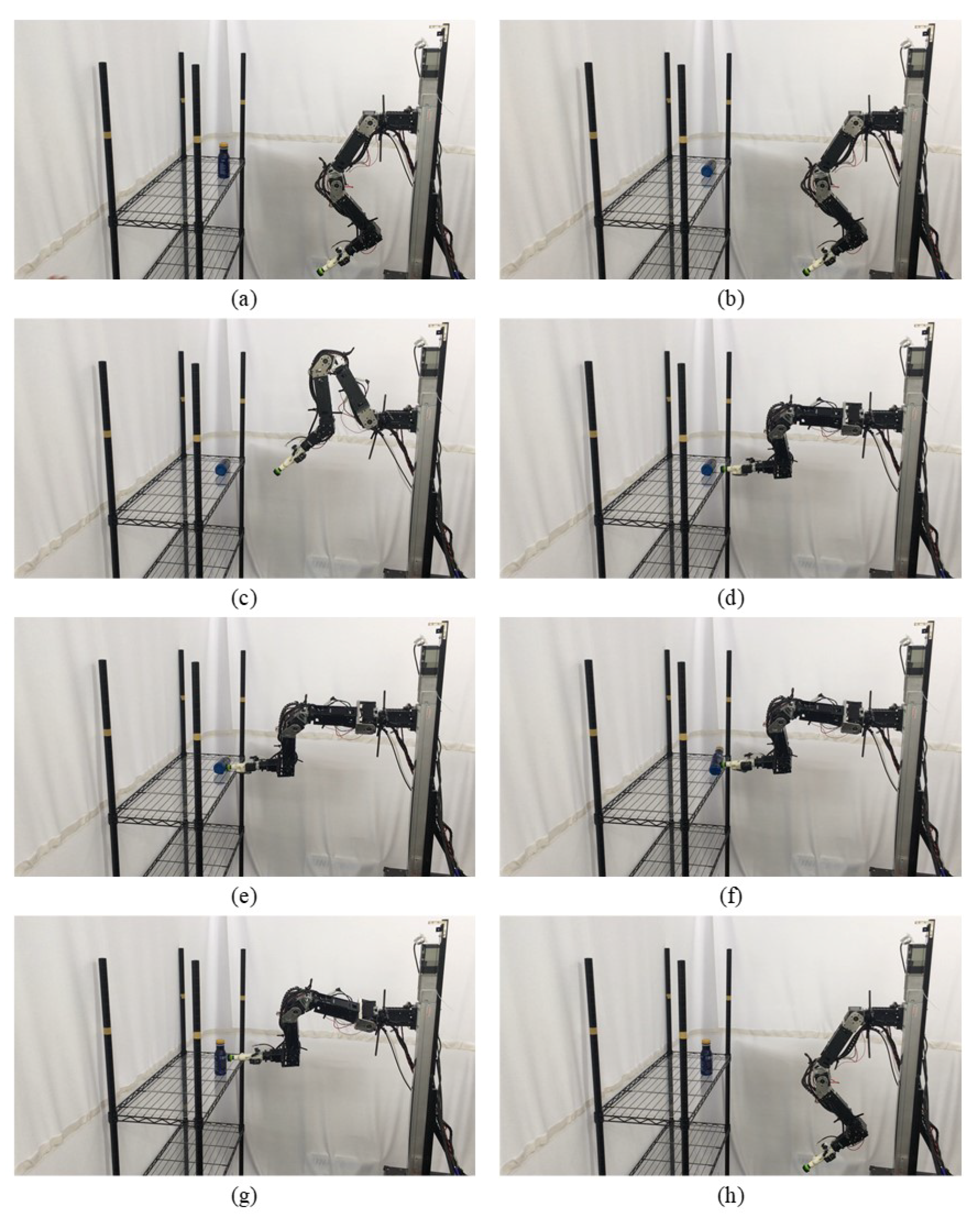

- (a) Capture the expected position and pose of the object.
- (b) Capture the current position and pose of the object.
- (c) Robot initial pose.
- (d) Move to the front of the object.
- (e) Pick up the object by suction.
- (f) Reorient the object.
- (g) Place the object in the expected pose.
- (h) Return to the initial state.

5.3. Computational Efficiency

6. Conclusions

Author Contributions

Funding

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| CRFs | Conditional Random Fields |
| DCNN | Deep Convolutional Neural Network |
| FOV | Field-of-View |
| FPN | Feature Pyramid Network |
| IoU | Intersection over Union |
| MAE | Mean Absolute Error |
| PCL | Point Cloud Library |
| R-CNN | Region-based Convolutional Neural Network |
| RoI | Region of Interest |
| Slerp | Spherical Linear Interpolation |
| SSD | Single Shot MultiBox Detector |
| YOLO | You Only Look Once |

References

- Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going deeper with convolutions. IEEE Comput. Soc. Conf. Comput. Vis. Pattern Recogn. 2015, 1–9.

- Qian, Y.; Woodland, P.C. Very deep convolutional neural networks for robust speech recognition. IEEE Workshop Spok. Lang. Technol. 2017, 1, 481–488.

- Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich feature hierarchies for accurate object detection and semantic segmentation. IEEE Comput. Soc. Conf. Comput. Vis. Pattern Recogn. 2014, 580–587.

- Girshick, R. Fast R-CNN. IEEE Int. Conf. Comput. Vis. 2015, 1440–1448.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. Neural Inform. Process. Syst. 2015, 91–99.

- He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask R-CNN. IEEE Int. Conf. Comput. Vis. 2017, 2980–2988.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. IEEE Comput. Soc. Conf. Comput. Vis. Pattern Recogn. 2016, 779–788.

- Redmon, J.; Farhadi, A. YOLO9000: Better, faster, stronger. IEEE Conf. Comput. Vis. Pattern Recogn. 2017, 187–213.

- Redmon, J.; Farhadi, A. YOLOv3: An incremental improvement. arXiv 2018, arXiv:1804.02767.

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. SSD: Single shot multibox detector. Eur. Conf. Comput. Vis. 2016, 21–37.

- Jiang, P.; Ishihara, Y.; Sugiyama, N.; Oaki, J.; Tokura, S.; Sugahara, A.; Ogawa, A. Depth image–based deep learning of grasp planning for textureless planar-faced objects in vision-guided robotic bin-picking. Sensors 2020, 20, 706.

- Lin, C.M.; Tsai, C.Y.; Lai, Y.C.; Li, S.A.; Wong, C.C. Visual object recognition and pose estimation based on a deep semantic segmentation network. IEEE Sens. J. 2018, 18, 9370–9381.

- Wu, Y.; Fu, Y.; Wang, S. Deep instance segmentation and 6D object pose estimation in cluttered scenes for robotic autonomous grasping. Ind. Robot 2020, 47, 593–606.

- Manuelli, L.; Gao, W.; Florence, P.; Tedrake, R. kPAM: KeyPoint affordances for category-level robotic manipulation. arXiv 2019, arXiv:1903.06684.

- Sun, X.; Xiao, B.; Wei, F.; Liang, S.; Wei, Y. Integral human pose regression. Comput. Sci. 2018, 11210, 536–553.

- Semochkin, A.N.; Zabihifar, S.; Efimov, A.R. Object grasping and manipulating according to user-defined method using key-points. In Proceedings of the IEEE International Conference on Developments in eSystems Engineering, Kazan, Russia, 7–10 October 2019; pp. 454–459.

- Vecerik, M.; Regli, J.-B.; Sushkov, O.; Barker, D.; Pevceviciute, R.; Rothörl, T.; Schuster, C.; Hadsell, R.; Agapito, L.; Scholz, J. S3K: Self-Supervised Semantic Keypoints for Robotic Manipulation via Multi-View Consistency. arXiv 2020, arXiv:2009.14711.

- Newbury, R.; He, K.; Cosgun, A.; Drummond, T. Learning to place objects onto flat surfaces in human-preferred orientations. arXiv 2020, arXiv:2004.00249.

- Mahler, J.; Liang, J.; Niyaz, S.; Laskey, M.; Doan, R.; Liu, X.; Ojea, J.A.; Goldberg, K. Dex-Net 2.0: Deep learning to plan robust grasps with synthetic point clouds and analytic grasp metrics. Robot. Sci. Syst. 2017, 58–72.

- Morrison, D.; Leitner, J.; Corke, P. Closing the loop for robotic grasping: A real-time, generative grasp synthesis approach. Robot. Sci. Syst. 2018, 21–31.

- Wada, K.; Okada, K.; Inaba, M. Joint learning of instance and semantic segmentation for robotic pick-and-place with heavy occlusions in clutter. Int. Conf. Robot. Autom. 2019, 9558–9564.

- Wan, W.; Igawa, H.; Harada, K.; Onda, H.; Nagata, K.; Yamanobe, N. A regrasp planning component for object reorientation. Autonom. Robot. 2019, 43, 1101–1115.

- Wan, W.; Mason, M.T.; Fukui, R.; Kuniyoshi, Y. Improving regrasp algorithms to analyze the utility of work surfaces in a workcell. IEEE Int. Conf. Robot. Autom. 2015, 4326–4333.

- Ali, A.; Lee, J.Y. Integrated motion planning for assembly task with part manipulation using re-grasping. Appl. Sci. 2020, 10, 749.

- Nguyen, A.; Kanoulas, D.; Caldwell, D.G.; Tsagarakis, N.G. Preparatory object reorientation for task-oriented grasping. In Proceedings of the IEEE International Conference on Intelligent Robots and Systems, Daejeon, Korea, 9–14 October 2016; pp. 893–899.

- Do, T.T.; Nguyen, A.; Reid, I. AffordanceNet: An end-to-end deep learning approach for object affordance detection. In Proceedings of the IEEE International Conference on Robotics and Automation, Brisbane, QLD, Australia, 21–25 May 2018; pp. 5882–5889.

- Lai, Y.-C. Task-Oriented Grasping and Tool Manipulation for Dual-Arm Robot (In Chinese). Ph.D. Thesis, Tamkang University, New Taipei City, Taiwan, 2020.

- Qin, Z.; Fang, K.; Zhu, Y.; Li, F.; Savarese, S. KETO: Learning keypoint representations for tool manipulation. arXiv 2019, arXiv:1910.11977.

- Shoemake, K. Animating rotation with quaternion curves. In Proceedings of the 12th Annual Conference on Computer Graphics and Interactive Techniques, New York, NY, USA, 22–26 July 1985; pp. 245–254.

- Long, J.; Shelhamer, E.; Darrell, T. Fully convolutional networks for semantic segmentation. IEEE Conf. Comput. Vis. Pattern Recogn. 2015, 3431–3440.

- Chen, L.-C.; Papandreou, G.; Kokkinos, I.; Murphy, K.; Yuille, A.L. DeepLab: Semantic image segmentation with deep convolutional nets, atrous convolution, and fully connected CRFs. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 40, 834–848.

- Wong, C.C.; Chien, S.Y.; Feng, H.M.; Aoyama, H. Motion planning for dual-arm robot based on soft actor-critic. IEEE Access 2021, 9, 26871–26885.

- Intel® RealSense™ Depth Module D400 Series Custom Calibration; Intel Corporation: Santa Clara, CA, USA, 2018.

| Item | Mask R-CNN | CNN-Based Semantic Segmentation |
|---|---|---|
| Backbone network | ResNet-101 | ResNet-101 |
| Number of objects | 3 | 6 |
| Number of random scenes | 10 | 10 |
| Manually labeled photos | 45 | 50 |
| Automatically generated photos | 4000 | 6000 |
| Resolution (pixels) | 640 × 480 | 640 × 480 |

| | PET Bottle | Carton Drink | Tomato Can |
|---|---|---|---|
| Original picture | | | |
| Output of Mask R-CNN | | | |
| Object extraction | | | |
| Output of CNN-based semantic segmentation | | | |
| CRFs-based refinement | | | |

| | PET Bottle | PET Bottle | PET Bottle |
|---|---|---|---|
| Original picture | | | |
| Output of Mask R-CNN | | | |
| Object extraction | | | |
| Output of CNN-based semantic segmentation | | | |
| CRFs-based refinement | | | |

| MAE Value | Position Error (cm) | | | Rotation Error (degree) | | | Total Test Number |
|---|---|---|---|---|---|---|---|
| Figure 10 (left) | 0.1426 | 0.6438 | 0.7446 | 3.6722 | 5.2038 | 2.7232 | 15 |
| Figure 10 (right) | 0.1227 | 0.5210 | 0.6892 | 2.8746 | 6.1624 | 1.9902 | 15 |
| Total average | 0.1327 | 0.5824 | 0.7319 | 3.2734 | 5.6831 | 2.3567 | 30 |

| Function | Method | Processing Time (s) | Proportion of Time |
|---|---|---|---|
| Visual Perception | Mask R-CNN | 0.258 | 14.8% |
| | CNN-Based Semantic Segmentation | 0.429 | 24.6% |
| | CRFs-Based Refinement (5 iterations) | 0.996 | 57.1% |
| Object Pose Estimation | Keypoint Annotation | 0.016 | 0.9% |
| | Normal Vector from Point Cloud | 0.043 | 2.4% |
| Total processing time | | 1.742 | |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Wong, C.-C.; Yeh, L.-Y.; Liu, C.-C.; Tsai, C.-Y.; Aoyama, H. Manipulation Planning for Object Re-Orientation Based on Semantic Segmentation Keypoint Detection. Sensors 2021, 21, 2280. https://doi.org/10.3390/s21072280
