Abstract

Precipitation has an important impact on people’s daily life and on disaster prevention and mitigation. However, numerical weather prediction models struggle to provide accurate rainfall nowcasts because of spin-up problems. Furthermore, existing rainfall nowcasting methods based on machine learning and deep learning cannot provide large-area rainfall nowcasting with high spatiotemporal resolution. This paper proposes a dual-input dual-encoder recurrent neural network, namely Rainfall Nowcasting Network (RN-Net), to solve this problem. It takes past grid rainfall data interpolated from automatic weather station observations, together with Doppler radar mosaic data, as input, and forecasts the grid rainfall data for the next 2 h. We conduct experiments on the Southeastern China dataset. With a threshold of 0.25 mm, RN-Net’s rainfall nowcasting threat scores reach 0.523, 0.503, and 0.435 within 0.5 h, 1 h, and 2 h, respectively. Compared with rainfall nowcasting from the Weather Research and Forecasting model, the threat scores are increased by nearly four times, three times, and three times, respectively.

1. Introduction

Precipitation is the main forecast element of Numerical Weather Prediction [1] (NWP), which has an important impact on people’s daily life [2,3] and disaster prevention and mitigation [4]. After years of development, the current short-term and medium-term NWP models have become more and more accurate. However, for rainfall nowcasting, it is difficult to give accurate forecast results due to spin-up [5] and other problems in NWP models.

In recent years, artificial intelligence has become the new engine of the global scientific and technological revolution, and some scholars have applied machine learning and deep learning to precipitation forecasting [6,7,8,9,10,11,12,13]. After Shi et al. [14] achieved precipitation intensity nowcasting by radar echo extrapolation, the approach emerged as a hot research topic in the meteorological community. They formulated radar echo extrapolation as a spatiotemporal prediction problem and used ConvLSTM, which introduces convolutional structures into LSTM, to predict future radar echo data from past radar echo data. They then used the Z–R relationship to convert the predicted radar echo data into precipitation intensity data. They conducted experiments on a dataset composed of radar echo data from 97 days of precipitation in Hong Kong in 2011–2013. ConvLSTM reached a Critical Success Index (CSI) of 0.577 with a threshold of 0.5 mm/h over the next 1.5 h, showing a strong ability to forecast precipitation intensity. In 2017, Shi et al. [15] further proposed TrajGRU to improve precipitation intensity nowcasting. It uses a generated optical flow [16] to realize a connection structure based on position changes, so that each point in the convolution structure is connected to points with higher correlation instead of a fixed number of surrounding points. In the experiment on the HKO-7 dataset, TrajGRU’s CSI reached 0.552 over the next 2 h with a threshold of 0.5 mm/h.

After Shi et al. [14] formulated radar echo extrapolation as a spatiotemporal prediction problem, many spatiotemporal prediction methods [17,18] adopted radar echo extrapolation as one of the problems used to evaluate their effectiveness. Wang et al. [17] proposed a spatiotemporal prediction method called PredRNN, which addresses the problem that the spatial features of each ConvLSTM layer are independent of each other across the time series. Spatiotemporal memory units are added to PredRNN and connected through a zigzag structure so that features can be propagated both spatially and temporally. They conducted experiments on the radar echo dataset in Guangzhou, and the mean square error of PredRNN is 30% higher than that of ConvLSTM. Bonnet et al. [19] applied the spatiotemporal prediction method PredRNN++ [20] to precipitation intensity nowcasting. PredRNN++ uses the Causal LSTM unit to integrate temporal and spatial features and the Gradient Highway Unit (GHU) to alleviate vanishing gradients. However, precipitation intensity nowcasting based on radar echo extrapolation has two main problems. The first is that radar echo data cannot reflect the real-world distribution of precipitation, because of the working principle of the radar and various noises. The second is that the precipitation intensity converted from radar echo data is inconsistent with the actual precipitation intensity, because the Z–R relationship is inaccurate.

Compared with the radar echo data used in precipitation intensity nowcasting, the rainfall data used in rainfall nowcasting can be directly measured by rain gauges and other equipment, and so reflect real-world precipitation more accurately. Currently, there are few rainfall nowcasting methods based on machine learning and deep learning. Zhang et al. [21] used a multi-layer perceptron to forecast the rainfall of the next 3 h at 56 weather stations in China. The forecast was derived from 13 physical factors related to precipitation in the surrounding area. Although the forecast results can meet the needs of nowcasting, the spatial resolution is too low to achieve large-area rainfall nowcasting.

Existing rainfall nowcasting methods based on machine learning and deep learning struggle to achieve rainfall nowcasting with high spatiotemporal resolution. To achieve this, we set the grid data interpolated from the rainfall data of dense automatic weather stations as the forecast object. Since the original data are directly measured by rain gauges, the grid data reflect the real-world rainfall distribution as closely as possible. In the grid data, the forecasting time resolution and spatial resolution are 30 min and 5 km, respectively, which meets the high spatiotemporal resolution requirement. As the forecast target is sequential grid data, we formulate this forecasting problem as a spatiotemporal prediction problem, which predicts future development from past spatiotemporal features [22]. Because rainfall depends on both rainfall intensity and rainfall duration, its evolution is more complicated and diverse. Therefore, we use both rainfall data and radar echo data as input to gain more meteorological spatiotemporal features that can support this complex forecasting.

Based on experiments with multiple models, we propose a dual-input dual-encoder RNN, namely Rainfall Nowcasting Network (RN-Net). RN-Net extracts spatiotemporal features of the rainfall and the radar echo data via dual encoders. Then, these features are combined by a fusion module. Finally, the fused features are fed into a predictor to make forecasts. In order to reasonably evaluate the effectiveness of rainfall nowcasting, we propose a new performance metric that combines multiple metrics in the field of meteorological and spatiotemporal prediction. In the experiment, 10 months of radar echo data and rainfall data in the southeastern coastal area of China were used as deep learning samples and compared with rainfall nowcasting of the Weather Research and Forecasting (WRF) [23] model. The results are expected to provide convenience for daily activities such as travel and irrigation, and provide a basis for early warning of natural disasters such as floods and mudslides.

2. Preliminary

2.1. Data Details

The radar echo data used in this article are Doppler radar mosaic data. Radar echo data contain various kinds of echo noise, such as non-meteorological echoes and interference echoes, which can mislead prediction. Therefore, we construct a singular point filter and a bilateral filter to filter the value domain and the spatial domain, which effectively eliminates pulsation and clutter while retaining the echo characteristics. In addition, a high-pass filter is constructed to remove data below 15 dBZ, so that only data related to precipitation are retained. Since the data are saved in an image format, we convert the radar echo data into pixel data.
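The thresholding and pixel conversion steps described above can be sketched as follows. This is a minimal illustration rather than the authors' code: the 15 dBZ cutoff comes from the text, while the 70 dBZ upper bound, the linear mapping to 1–255, and the function name `dbz_to_pixel` are assumptions made for the example. The singular point filter and the bilateral filter are omitted.

```python
def dbz_to_pixel(dbz_grid, min_dbz=15.0, max_dbz=70.0):
    """Zero out echoes below min_dbz and map the rest linearly to 1..255.

    min_dbz follows the 15 dBZ cutoff in the text. max_dbz and the linear
    mapping are assumptions chosen only for illustration.
    """
    pixels = []
    for row in dbz_grid:
        out_row = []
        for dbz in row:
            if dbz < min_dbz:
                # Treated as unrelated to precipitation and discarded.
                out_row.append(0)
            else:
                clipped = min(dbz, max_dbz)
                scale = (clipped - min_dbz) / (max_dbz - min_dbz)
                out_row.append(1 + round(scale * 254))
        pixels.append(out_row)
    return pixels
```

Reserving pixel value 0 for "no precipitation echo" keeps filtered cells distinguishable from weak but valid echoes, which map to 1 and above.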

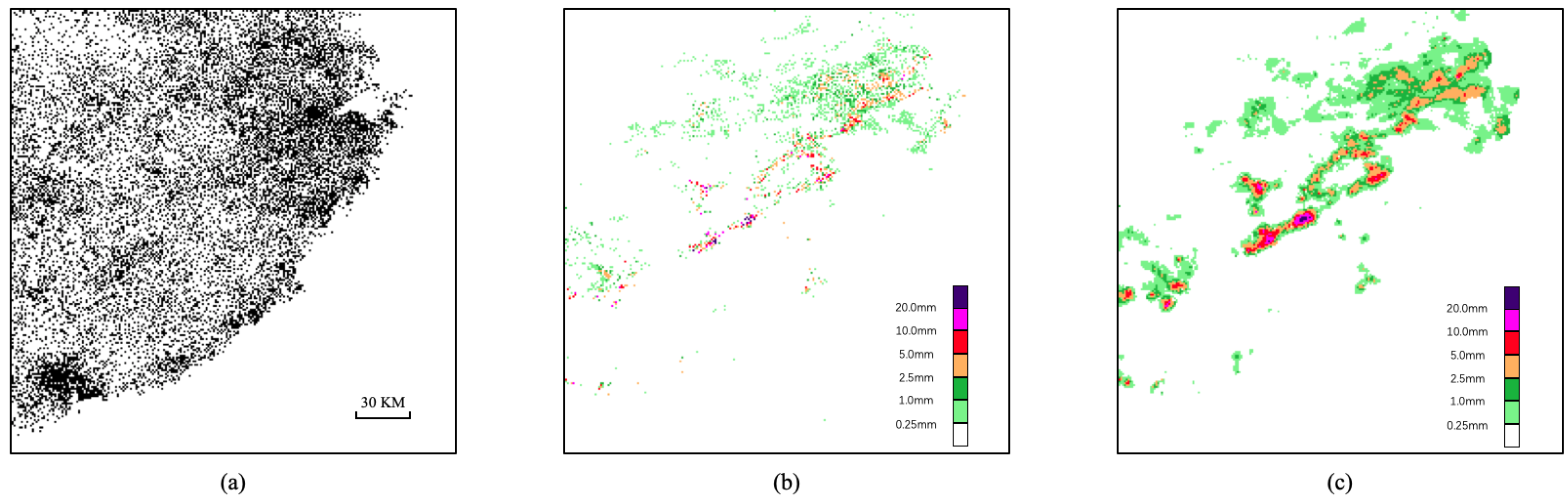

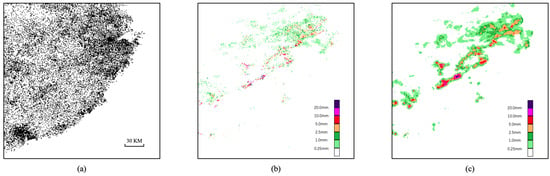

The interpolated rainfall data of the automatic weather stations are used as the rainfall data in this article. Automatic weather stations are widely distributed, and the distribution of the stations used in this paper is shown in Figure 1a. Rainfall data are usually measured by rain gauges. A rain gauge collects the rainfall in a specific area and divides the rainfall volume by the surface area to obtain the depth of rainfall. Inspired by the E-OBS dataset [24], we interpolate the rainfall point data into a uniform grid. We use Inverse Distance Weight [25] (IDW) to interpolate the rainfall data of 13,655 automatic weather stations in the forecasting area into a 240 × 240 grid. With such high-density data interpolation, the actual rainfall distribution is restored as closely as possible. Figure 1b,c shows the effect of interpolation. IDW uses the distance between the interpolation point and each sample point to weight a weighted average: the closer the sample point is to the interpolation point, the greater the weight. The critical equation is as follows:

$$\hat{z}(x, y) = \sum_{i=1}^{n} w_i z_i, \qquad w_i = \frac{d_i^{-p}}{\sum_{j=1}^{n} d_j^{-p}}, \qquad d_i = \sqrt{(x - x_i)^2 + (y - y_i)^2}$$

where $n$ is the number of selected sampling points closest to the interpolation point, which is set to 16 in the experiment. $(x_i, y_i)$ represents the coordinates of the sample point, and $(x, y)$ denotes the coordinates of the interpolation point. $z_i$ is the value at the sample point, $d_i$ is the distance between the sample point and the interpolation point, and $p$ is the distance exponent. $w_i$ is the weight of the sample point with respect to the interpolation point. Finally, we convert the interpolated rainfall data into pixel data.
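The IDW step can be illustrated with a short, dependency-free sketch. The 16 nearest stations follow the text; the exponent `power=2.0` is an assumed, commonly used value (the text does not state it), and the function name `idw_interpolate` and the tuple layout of `stations` are hypothetical. A production pipeline over 13,655 stations would normally use a spatial index rather than a full sort.

```python
import math


def idw_interpolate(stations, x, y, n=16, power=2.0):
    """Inverse-distance-weighted estimate at the point (x, y).

    stations: list of (xi, yi, value) tuples.
    n: number of nearest stations used (16 in the text).
    power: distance exponent; 2.0 is an assumption, not taken from the text.
    """
    # Distance from the interpolation point to every station.
    dists = [(math.hypot(xi - x, yi - y), value) for xi, yi, value in stations]
    nearest = sorted(dists, key=lambda pair: pair[0])[:n]

    # A station located exactly at the target point determines the value.
    for dist, value in nearest:
        if dist == 0.0:
            return value

    weights = [dist ** -power for dist, _ in nearest]
    total = sum(weights)
    return sum(w * value for w, (_, value) in zip(weights, nearest)) / total
```

For example, a point midway between two stations reporting 1.0 mm and 3.0 mm receives equal weights and is assigned 2.0 mm.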

Figure 1.

(a) Distribution map of automatic weather stations; (b) automatic weather station rainfall data of 9:00 a.m.–9:30 a.m. on 23 May 2017; (c) data after interpolation.

In addition, the WRF model is used as a baseline against which the 0–2 h rainfall nowcasting of RN-Net is compared. The WRF model [26] is configured with a one-domain nested grid system. The horizontal resolution of the domain is 5 km, with 240 × 240 grid points. The domain has 35 vertical layers, with the model top at 50 hPa. The boundary conditions are updated every 6 h from the 0.25° × 0.25° National Centers for Environmental Prediction (NCEP) Final Operational Model Global Tropospheric Analysis. The main physical parameterization schemes are shown in Table 1. The model is integrated every 6 h, the forecast time is 12 h, and the results are output every 30 min.

Table 1.

Physical parameterization schemes.

2.2. Problem Definition

Our goal is to forecast future interpolated automatic weather station rainfall data from past radar echo data and rainfall data. We formally define this problem as follows: at the current moment, we have access to the past radar echo data and the recent rainfall data. Our task is to predict the rainfall data for the following period and make the prediction as close as possible to the real rainfall data for that period. Specifically, our goal is to find a mapping f from the two input sequences to the forecast sequence.
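One way to write this mapping explicitly is given below. The symbols are assumptions introduced here for illustration only: $t$ is the current moment, $E$ denotes radar echo frames, $R$ rainfall frames, $\hat{R}$ forecast rainfall frames, and $m$, $n$, and $k$ are the numbers of input radar frames, input rainfall frames, and forecast frames. Each index counts frames at that data source's own time resolution.

$$\hat{R}_{t+1}, \dots, \hat{R}_{t+k} = f\left(E_{t-m+1}, \dots, E_{t};\; R_{t-n+1}, \dots, R_{t}\right)$$

With the settings given in Section 4.1 (1 h of radar data at 6 min, 2 h of rainfall data at 30 min, and a 2 h forecast at 30 min), this corresponds to $m = 10$, $n = 4$, and $k = 4$.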

3. Method

3.1. Network Structure

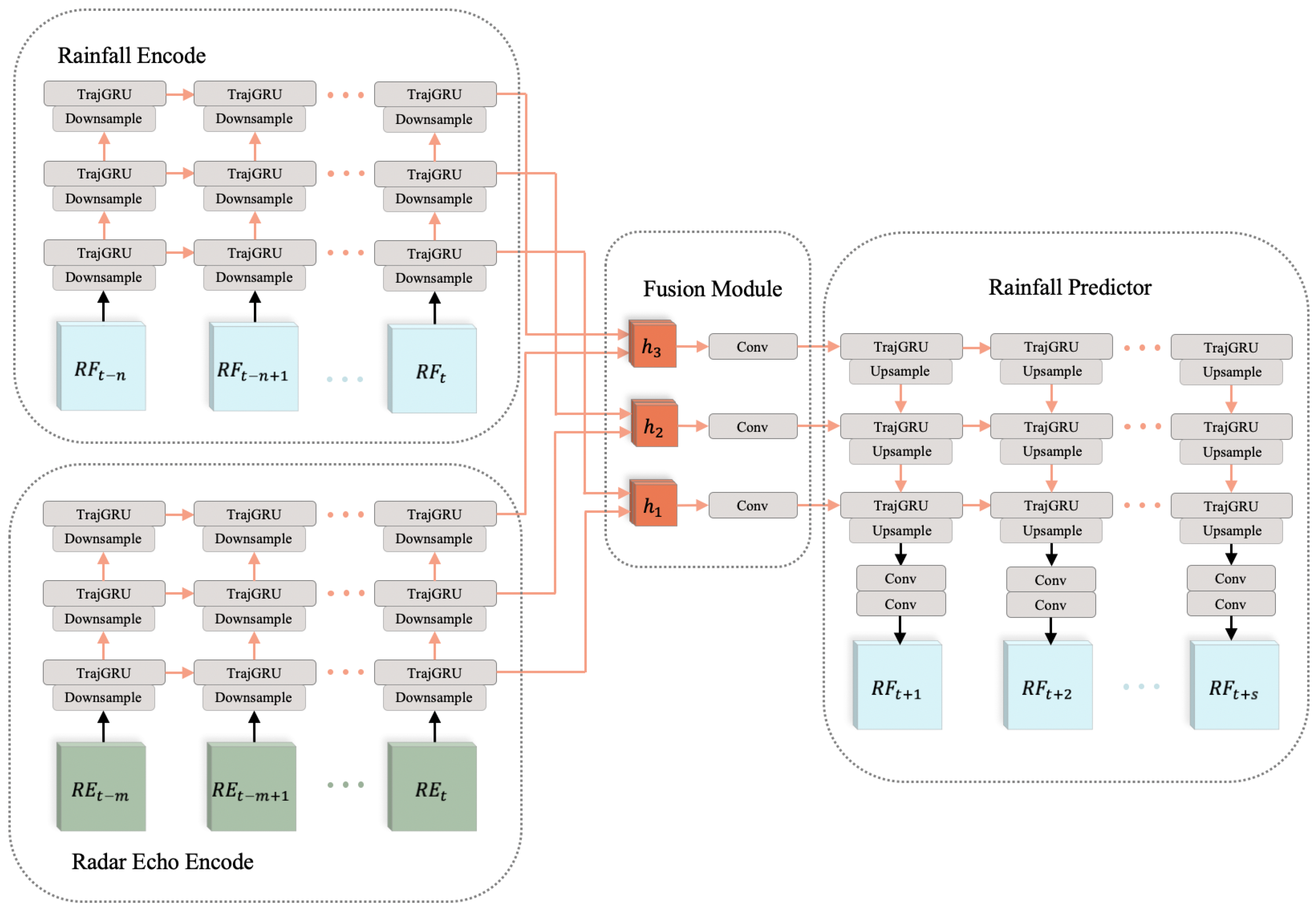

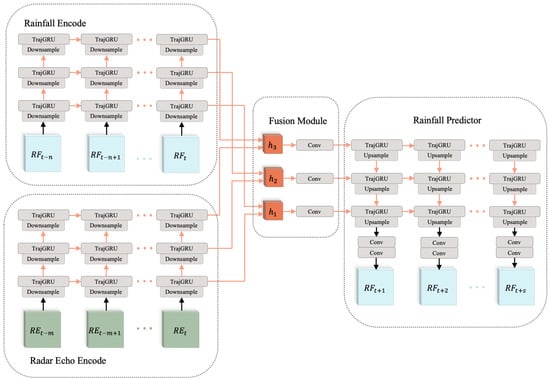

In order to achieve high spatiotemporal resolution rainfall nowcasting, our model needs to obtain sufficient meteorological spatiotemporal features to support the forecast. Meanwhile, its RNN unit also needs to have stronger feature extraction and transmission capabilities. The network structure of RN-Net is shown in Figure 2.

Figure 2.

RN-Net consists of four parts: Radar Echo Encoder, Rainfall Encoder, Fusion module, and Rainfall Predictor. The Radar Echo Encoder and the Rainfall Encoder encode spatiotemporal features of the radar echo data and the recent rainfall data, respectively. Then, the fusion module combines the radar echo feature and the rainfall feature so as to provide more spatiotemporal feature support for nowcasting. Finally, the rainfall predictor receives the fused feature and makes forecasts.

Inspired by LightNet [27], RN-Net contains two encoders, a fusion module, and a predictor. The time resolution of the rainfall data from automatic weather stations is 30 min, whereas that of the radar echo data is 6 min. This difference is too large for the two types of data to be encoded by the same encoder. RN-Net therefore uses a radar echo encoder and a rainfall encoder to encode the two kinds of data separately and generate spatiotemporal features. The fusion module, composed of CNN layers, fuses the spatiotemporal features of the two data sources. Finally, the fused spatiotemporal features are input to the predictor, which outputs the forecast of future rainfall. We detail each component as follows.

Radar Echo Encoder or Rainfall Encoder: The two encoders have the same network structure and parameters. Each encoder has a three-layer structure, and each layer is composed of an RNN layer and a downsample unit. The downsample unit helps the model capture the high-level spatial features of the input data, so as to better extract the spatiotemporal features. The input of the first layer is the radar echo data or the rainfall data. The hidden features at each time point of this layer are then input into the downsample unit of the next layer, and the hidden state at the last time point is used as the output of the encoder for this layer. The second and third layers continue the same process. The final output of the encoder is the hidden state of each layer.

Fusion Module: The radar echo data contain rich meteorological features, but because of various noises they cannot accurately reflect the real-world rainfall distribution. The rainfall data of the automatic weather stations reflect the actual rainfall distribution. To obtain accurate rainfall nowcasting, the hidden features of the two data sources are combined. The fusion module superimposes the two sets of hidden features and then fuses them deeply through a CNN.
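The fusion step can be pictured with a small, dependency-free sketch. It interprets "superimposes" as channel-wise concatenation and stands in for the CNN with a single 1 × 1 convolution; both choices, along with the function name `fuse` and the nested-list layout, are assumptions for illustration. The actual layer settings are those listed in Table 2, and a real implementation would use PyTorch convolution layers.

```python
def fuse(radar_feat, rain_feat, weights, bias):
    """Concatenate two feature maps along channels, then apply a 1x1 convolution.

    radar_feat, rain_feat: nested lists shaped [channels][height][width],
        with identical height and width.
    weights: [out_channels][in_channels], where in_channels is the total
        number of channels of both inputs.
    bias: [out_channels].
    """
    stacked = radar_feat + rain_feat  # channel-wise concatenation
    height, width = len(stacked[0]), len(stacked[0][0])
    fused = []
    for w_row, b in zip(weights, bias):
        # Each output channel is a weighted sum over all input channels.
        plane = [[b + sum(w * ch[i][j] for w, ch in zip(w_row, stacked))
                  for j in range(width)]
                 for i in range(height)]
        fused.append(plane)
    return fused
```

Because the 1 × 1 convolution mixes channels at every grid cell, the learned weights decide how much each output feature relies on the radar branch versus the rainfall branch.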

Rainfall Predictor: The structure of the rainfall predictor is similar to that of an encoder. It also has three layers, each composed of an RNN layer and an upsample unit. The difference is that two CNN layers are added to the output part, which helps generate forecast data from the spatiotemporal features. When forecasting, the input of the predictor is the fused spatiotemporal hidden features. The third layer expands the corresponding fused hidden state over the future period. Then, the hidden state at each time step is input to the upsample unit to generate the lower-level spatial hidden state, which is input into the next layer of the predictor. The second and first layers continue the same process. Finally, the two CNN layers output the rainfall nowcast based on the low-level spatiotemporal features.

TrajGRU is the RNN unit used in RN-Net. It is improved based on ConvGRU and overcomes the problem that the connection structure between the memory states in other convRNNs is fixed. For input data, TrajGRU and ConvGRU both use convolution as the connection structure, which makes it possible to obtain the spatial features of the input data. For memory state, TrajGRU uses a structure generation network to dynamically generate the optical flow between states as the connection structure. Such a flexible connection structure can more efficiently learn complex motion patterns such as rotation and zooming in spatiotemporal features. The settings (the kernel size, channels and stride) of each component of RN-Net are detailed in Table 2.

Table 2.

Various settings in RN-Net, including channels, kernel, and stride.

In addition to RN-Net, we also try two other dual-input dual-encoder methods. When ConvLSTM is used as the RNN unit of RN-Net, the radar echo encoder/rainfall encoder and predictor need to transmit cells and hidden states simultaneously, and the fusion module needs to fuse the two features separately. When using PredRNN as the backbone network of RN-Net, the radar echo encoder/rainfall encoder and the predictor need to transmit cell, hidden state, and spatiotemporal memory at the same time, and spatiotemporal memory needs to be interspersed with zigzags in the network. The fusion module needs to fuse the three features separately.

3.2. Implementation Details

The proposed neural networks are implemented with PyTorch [28] and are trained end-to-end. All network parameters are initialized with a normal distribution. All models are optimized with L2 loss, and they are trained using the Adam optimizer [29] with a starting learning rate of 10. The training process is stopped after 40,000 iterations, and the batch size of each iteration is set to 4. The rainfall data and radar echo data are normalized to the range [0, 1] before being used as network input.

4. Experiment

In this part, we evaluate the proposed models on the Southeastern China dataset. In Section 4.1, we introduce the details of the dataset. In Section 4.2, we introduce a new rainfall nowcasting performance metric, which combines multiple evaluation metrics from the meteorological field and the spatiotemporal prediction field. Section 4.3 compares RN-Net with other methods, including eight deep learning methods and the WRF model. We visualize two representative examples for further analysis in Section 4.4. Our experimental platform runs Ubuntu 16.04 with 32 GB of memory and two Nvidia RTX 2080 GPUs.

4.1. Dataset

Radar Echo Data: We use the data from the southeast coast of China. The data are stored in a 240 × 240 grid, and its spatial resolution is 5 km. The time resolution is 6 min, and the time range includes May to September in 2018 and 2019.

Rainfall Data: The spatial range and spatial resolution of the rainfall data from automatic weather stations are the same as those of the radar echo data. The original time resolution is 10 min; because the values are small and the spatial distribution after interpolation is sparse, the time resolution is converted to 30 min.
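The conversion from 10 min to 30 min frames can be sketched as below. The sketch assumes the conversion is a cell-wise sum of three consecutive 10 min frames, which is the natural choice for accumulated rainfall but is not stated explicitly in the text; the function name `accumulate_frames` is hypothetical.

```python
def accumulate_frames(frames, group=3):
    """Sum every `group` consecutive frames cell by cell.

    frames: list of grids, each a nested list [height][width].
    Trailing frames that do not fill a complete group are dropped.
    """
    out = []
    for start in range(0, len(frames) - group + 1, group):
        chunk = frames[start:start + group]
        height, width = len(chunk[0]), len(chunk[0][0])
        out.append([[sum(frame[i][j] for frame in chunk) for j in range(width)]
                    for i in range(height)])
    return out
```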

In the dataset, 207 days are used for training, 29 days for validation, and 57 days for testing. The data on some days are incomplete due to equipment failure or other reasons. Our task can be defined as nowcasting the rainfall data of the next 2 h, based on the rainfall data of the past 2 h and the radar echo data of the past 1 h.
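The task definition above implies a fixed window layout: 4 past rainfall frames (2 h at 30 min), 10 past radar frames (1 h at 6 min), and 4 future rainfall frames. A minimal sketch of how one sample could be cut from the two series follows. The function name `make_sample`, the indexing convention, and the assumption that both series start at the same instant are illustrative, not taken from the paper.

```python
def make_sample(rain, radar, now, rain_in=4, radar_in=10, rain_out=4, ratio=5):
    """Build one (radar input, rainfall input, rainfall target) sample.

    rain: list of 30 min rainfall frames.
    radar: list of 6 min radar echo frames.
    now: index of the first rainfall frame to be forecast.
    ratio: radar frames per rainfall frame (30 min / 6 min = 5).
    Both series are assumed to start at the same instant.
    """
    radar_now = now * ratio  # radar index aligned with the current moment
    if now < rain_in or radar_now < radar_in:
        raise ValueError("not enough history before index %d" % now)
    if now + rain_out > len(rain) or radar_now > len(radar):
        raise ValueError("not enough data after index %d" % now)
    return (radar[radar_now - radar_in:radar_now],
            rain[now - rain_in:now],
            rain[now:now + rain_out])
```

Days with incomplete data would need to be skipped or split before windows are drawn, so that no sample spans a gap.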

4.2. Performance Metric

In our methods, high spatiotemporal resolution rainfall nowcasting is formulated as a spatiotemporal prediction problem. The forecast result consists of four frames of interpolated rainfall data, each accumulated over 0.5 h, covering the next 2 h; it is compared with the actual interpolated automatic weather station rainfall data to evaluate the forecast. To make a reasonable evaluation, we define a new performance metric by combining various evaluation metrics from the fields of meteorology and spatiotemporal prediction.

Commonly used metrics for rainfall nowcasting in the meteorological field include the threat score (TS), probability of detection (POD), and false alarm rate (FAR). In the experiment, we use the thresholds 0.25 mm, 1 mm, and 2.5 mm to calculate these metrics. The thresholds are chosen according to rainfall levels, and the correspondence is shown in Table 3. To show the effect of rainfall nowcasting in the next 2 h, we evaluate three periods: within 0.5 h, 1 h, and 2 h. The forecast rainfall and actual rainfall in each of these periods are accumulated and used as evaluation data. In the field of spatiotemporal prediction, the forecasting results are evaluated frame by frame, and the evaluation result for a period is the average of the per-frame results in that period. Applying this idea to our evaluation, we also average the multi-frame evaluation results within 1 h and 2 h, including the Critical Success Index (CSI), POD, and FAR.

Table 3.

Correspondence between threshold and rainfall level.

In addition, since the data are all two-dimensional grid data and are saved in the image format, we introduce two metrics, MAE and MSE, which respectively calculate the L1 distance and L2 distance between the truth data and the forecast data.

CSI and TS have the same calculation formula. TS evaluates accumulated rainfall in the period, and CSI is the average value of multiple frame evaluations in the period. The following are the calculation equations for these six evaluation metrics:

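$$\mathrm{MAE} = \frac{1}{w h} \sum_{i=1}^{w} \sum_{j=1}^{h} \left| \hat{x}_{i,j} - x_{i,j} \right|$$

$$\mathrm{MSE} = \frac{1}{w h} \sum_{i=1}^{w} \sum_{j=1}^{h} \left( \hat{x}_{i,j} - x_{i,j} \right)^2$$

$$\mathrm{TS} = \mathrm{CSI} = \frac{N_A}{N_A + N_B + N_C}$$

$$\mathrm{POD} = \frac{N_A}{N_A + N_C}$$

$$\mathrm{FAR} = \frac{N_B}{N_A + N_B}$$

Here, $w$ and $h$ are the width and height of the rainfall data, respectively. $\hat{x}_{i,j}$ and $x_{i,j}$ are the forecast rainfall and truth rainfall at the coordinates $(i, j)$. $N_A$, $N_B$, $N_C$, and $N_D$ represent the numbers of true-positive, false-positive, false-negative, and true-negative grid points.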
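The metrics can be computed from two grids as in the following dependency-free sketch. It uses the standard contingency-table definitions of CSI (equivalently TS), POD, and FAR and the mean-normalized forms of MAE and MSE. Two details are assumptions: a cell counts as a rain event when its value is greater than or equal to the threshold, and an undefined ratio (zero denominator) is returned as NaN. The function names are hypothetical.

```python
def contingency(forecast, truth, threshold):
    """Count true positives, false positives, false negatives, true negatives."""
    na = nb = nc = nd = 0
    for f_row, t_row in zip(forecast, truth):
        for f, t in zip(f_row, t_row):
            f_event, t_event = f >= threshold, t >= threshold
            if f_event and t_event:
                na += 1
            elif f_event:
                nb += 1
            elif t_event:
                nc += 1
            else:
                nd += 1
    return na, nb, nc, nd


def skill_scores(forecast, truth, threshold):
    """Return (CSI, POD, FAR); a score with zero denominator is NaN."""
    na, nb, nc, _ = contingency(forecast, truth, threshold)
    nan = float("nan")
    csi = na / (na + nb + nc) if na + nb + nc else nan
    pod = na / (na + nc) if na + nc else nan
    far = nb / (na + nb) if na + nb else nan
    return csi, pod, far


def mae_mse(forecast, truth):
    """Mean absolute error and mean squared error over all grid cells."""
    diffs = [f - t
             for f_row, t_row in zip(forecast, truth)
             for f, t in zip(f_row, t_row)]
    count = len(diffs)
    mae = sum(abs(d) for d in diffs) / count
    mse = sum(d * d for d in diffs) / count
    return mae, mse
```

The true-negative count does not enter any of the three skill scores, which is why they remain informative when most grid cells are dry.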

Finally, the performance metric includes five evaluation results: cumulative rainfall within 0.5 h, 1 h, and 2 h, and the average values of the first two frames within 1 h and of the four frames within 2 h. Such a performance metric reflects not only the forecasting effect of the method on rainfall at different time resolutions, but also its spatiotemporal prediction capability.
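The difference between the accumulated score (TS) and the frame-averaged score (CSI) can be made concrete with a small sketch. The event rule (value greater than or equal to the threshold) and the handling of frames without any event (NaN, which propagates into the mean) are assumptions; the function names are hypothetical.

```python
def csi(forecast, truth, threshold):
    """Critical Success Index of one forecast grid against one truth grid."""
    hits = false_alarms = misses = 0
    for f_row, t_row in zip(forecast, truth):
        for f, t in zip(f_row, t_row):
            if f >= threshold and t >= threshold:
                hits += 1
            elif f >= threshold:
                false_alarms += 1
            elif t >= threshold:
                misses += 1
    total = hits + false_alarms + misses
    return hits / total if total else float("nan")


def accumulate(frames):
    """Cell-wise sum of a list of grids."""
    height, width = len(frames[0]), len(frames[0][0])
    return [[sum(frame[i][j] for frame in frames) for j in range(width)]
            for i in range(height)]


def ts_accumulated(forecast_frames, truth_frames, threshold):
    """TS: score the rainfall accumulated over the whole period."""
    return csi(accumulate(forecast_frames), accumulate(truth_frames), threshold)


def csi_frame_mean(forecast_frames, truth_frames, threshold):
    """CSI: score each 0.5 h frame separately, then average."""
    scores = [csi(f, t, threshold)
              for f, t in zip(forecast_frames, truth_frames)]
    return sum(scores) / len(scores)
```

As a usage example, take two frames in which the forecast gives 0.2 mm and the truth 0.3 mm in the same cell. At a 0.25 mm threshold, each frame is a miss, so the frame-averaged CSI is 0, while the accumulated values (0.4 mm forecast, 0.6 mm truth) both exceed the threshold and the TS is 1. The two scores therefore measure different aspects of the same forecast.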

4.3. Experimental Results and Analysis

We try three deep learning model input methods: rainfall data as a single input, radar echo data as a single input, and rainfall data and radar echo data as dual inputs. The single-input method using rainfall data is similar to the radar echo extrapolation method, in that it forecasts future development from past data of the same type. The single-input method using radar echo data is used to test whether radar echo data are effective for rainfall nowcasting. The dual-input model can simultaneously obtain the meteorological spatiotemporal features of radar echo data and rainfall data. Three deep learning models are tried for each input method: ConvLSTM, TrajGRU, and PredRNN. The experiment therefore contains nine deep learning methods, and the best one is the dual-input dual-encoder RNN that uses TrajGRU, namely RN-Net.

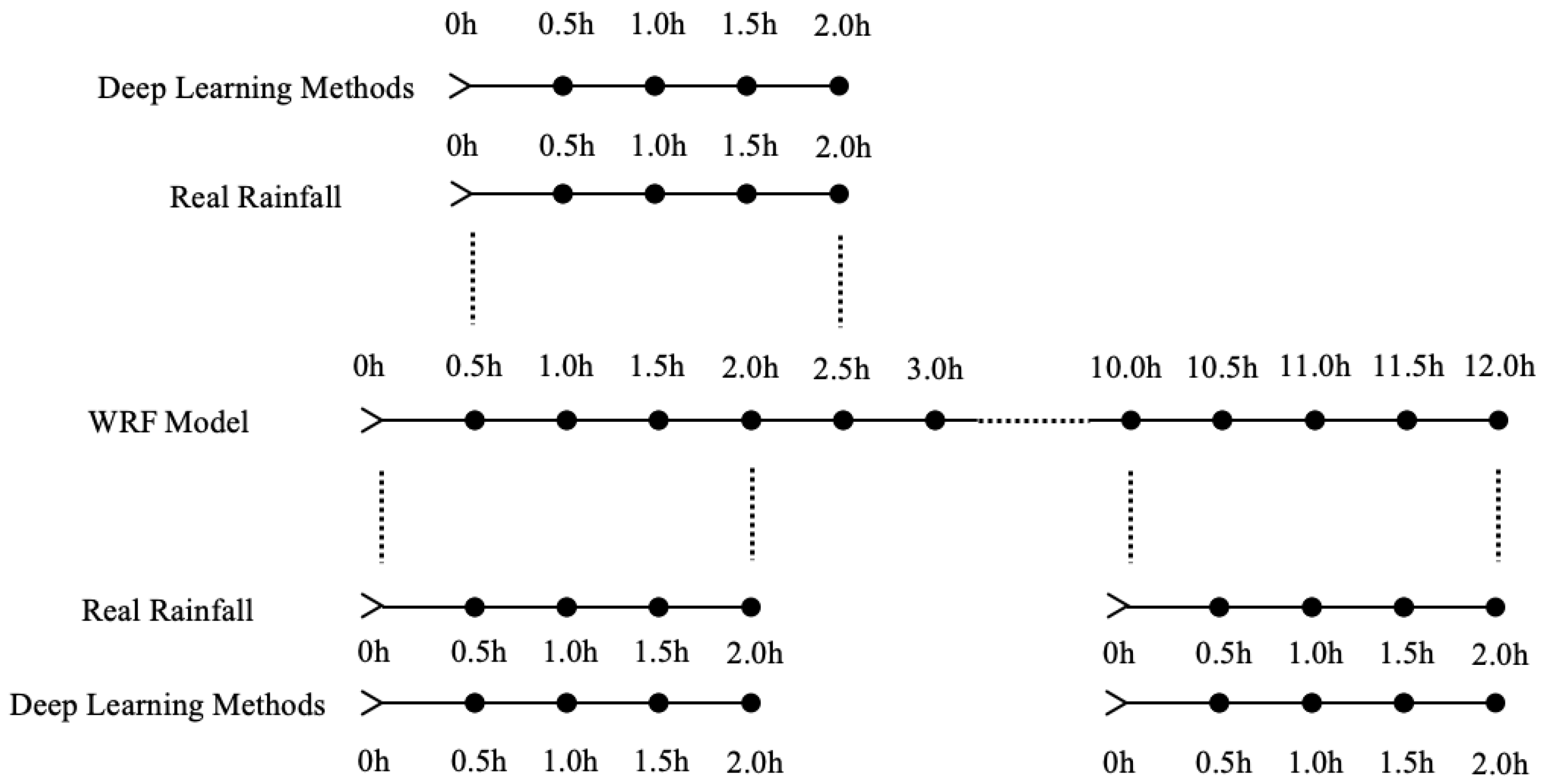

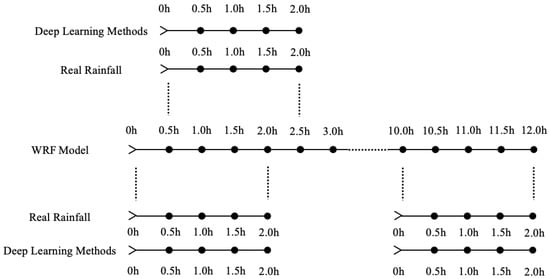

In addition, to compare with the traditional method, the WRF model is run to obtain rainfall nowcasts. Its spatial scope is the same as that of the dataset, and its time scope covers the testing set. The WRF model is integrated every 6 h and forecasts rainfall for the next 12 h with a time resolution of 0.5 h. Our deep learning methods forecast the rainfall for the next 2 h every 0.5 h. To compare the two types of rainfall nowcasting methods, we designed a special comparison method, as shown in Figure 3. First, we extract 2 h of data every 0.5 h from the 12 h WRF model rainfall forecast, giving a total of 21 sets of data. Then, we compare each set with the corresponding real rainfall. However, the WRF model has a spin-up period whose duration cannot be determined, and the forecast in this period is usually not used. To avoid the spin-up period, the best evaluation result among the 21 sets is used as the WRF model evaluation result for the 12 h forecast. Meanwhile, we also compare the rainfall forecasts of our deep learning methods for these 21 time periods with the corresponding real rainfall, and the average of the 21 evaluation results is used as the deep learning evaluation result for the same 12 h. We apply this procedure to all 12 h WRF model forecasts in the testing set, which are initialized every 6 h. This comparison method not only resolves the different forecasting frequencies of the two methods, but also avoids the spin-up period of the WRF model.
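The window extraction and the best-versus-mean rule can be sketched as follows. A 12 h forecast at 0.5 h resolution has 24 frames, and a 2 h window has 4 frames, which yields 24 − 4 + 1 = 21 windows. The function names and the `higher_is_better` flag (for metrics such as FAR, MAE, and MSE, where "best" means lowest) are assumptions for illustration.

```python
def sliding_windows(frames, size=4):
    """All consecutive windows of `size` frames, shifted by one frame."""
    return [frames[i:i + size] for i in range(len(frames) - size + 1)]


def compare_scores(wrf_scores, dl_scores, higher_is_better=True):
    """Summarize one 12 h WRF run against the deep learning forecasts.

    wrf_scores: one score per 2 h window of the WRF forecast.
    dl_scores: one score per corresponding deep learning forecast.
    Returns (best WRF score, mean deep learning score).
    """
    best_wrf = max(wrf_scores) if higher_is_better else min(wrf_scores)
    mean_dl = sum(dl_scores) / len(dl_scores)
    return best_wrf, mean_dl
```

Taking the best WRF window but the mean deep learning score gives the WRF model the benefit of the doubt, so any remaining advantage of the deep learning methods is a conservative estimate.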

Figure 3.

Schematic diagram of comparison method between our deep learning methods and the WRF model.

The evaluation results of cumulative rainfall nowcasting within 0.5 h, 1 h, and 2 h are shown in Table 4, Table 5 and Table 6, respectively. The average values of the multi-frame 0.5 h rainfall forecast evaluation results within 1 h and 2 h are shown in Table 7 and Table 8, respectively. When comparing the performance of rainfall nowcasting methods, TS and CSI are the main basis. RN-Net has the highest TS and CSI in Table 4, Table 5, Table 6, Table 7 and Table 8 among all rainfall nowcasting methods. Next, we compare the evaluation results of the different methods from the following three aspects.

Table 4.

Evaluation results of 0.5 h cumulative rainfall nowcasting. The best performance is reported using red, and the second best is reported using blue. ‘↑’ means that the higher the score the better, while ‘↓’ means that the lower the score the better. ‘’ means the skill score at the mm rainfall threshold in 0.5 h. RE, RF, and RE-RF, respectively, indicate that the method uses radar echo data, rainfall data, or both as input data. TrajGRU, ConvLSTM, and PredRNN are RNN units or backbone networks used in this method.

Table 5.

Evaluation results of 1 h cumulative rainfall nowcasting. ‘’ means the skill score at the mm rainfall threshold in 1 h.

Table 6.

Evaluation results of 2 h cumulative rainfall nowcasting. ‘’ means the skill score at the mm rainfall threshold in 2 h.

Table 7.

Average evaluation results of two frames of rainfall nowcasting in 1 h. ‘’ means the skill score at the mm rainfall threshold in 0.5 h.

Table 8.

Average evaluation results of four frames of rainfall nowcasting in 2 h. ‘’ means the skill score at the mm rainfall threshold in 0.5 h.

Comparison between Deep Learning Methods and WRF Model: As shown in Table 4, Table 5, Table 6, Table 7 and Table 8, the deep learning methods are better than the WRF model. Compared with WRF model rainfall nowcasting, RN-Net’s TS within 0.5 h, 1 h, and 2 h at the 0.25 mm threshold is increased by nearly four times, three times, and three times, respectively, and RN-Net’s CSI within 1 h and 2 h at the 0.25 mm threshold is increased by nearly four times and three times, respectively. The rainfall nowcasting of the WRF model is not effective, and its FAR is extremely high. This result is caused by two factors. First, the WRF model does not use the latest truth data but depends completely on WRF simulations, which usually have deviations in time and location. Second, such methods are manually designed by meteorological experts and can hardly benefit from a large amount of historical data. In addition, the WRF model performs better at low time resolution than at high time resolution, while the deep learning methods show the opposite. This is because the deep learning methods use high time resolution data as training data.

Comparison of Different Input Methods: The rainfall extrapolation method, which is analogous to radar echo extrapolation, performs poorly, and is even worse than the methods that use only radar echo data as input. This is because the rainfall data carry too few spatiotemporal features to support the forecasts. The dual-input methods are better than the single-input methods.

Comparison of Different Network Components: PredRNN, which has the best performance in the three radar echo extrapolation models, has the worst effect in rainfall nowcasting. This is because the scale and quality of the dataset are not enough to support the training of complex deep learning models. The method that uses PredRNN will be more prone to overfitting during the training. Moreover, although the method using TrajGRU is superior to the method using ConvLSTM on TS/CSI and POD, it is slightly inferior to the latter on MSE, MAE, and FAR. This is due to the mechanism of TrajGRU to generate the state connection structure, and this needs further improvement.

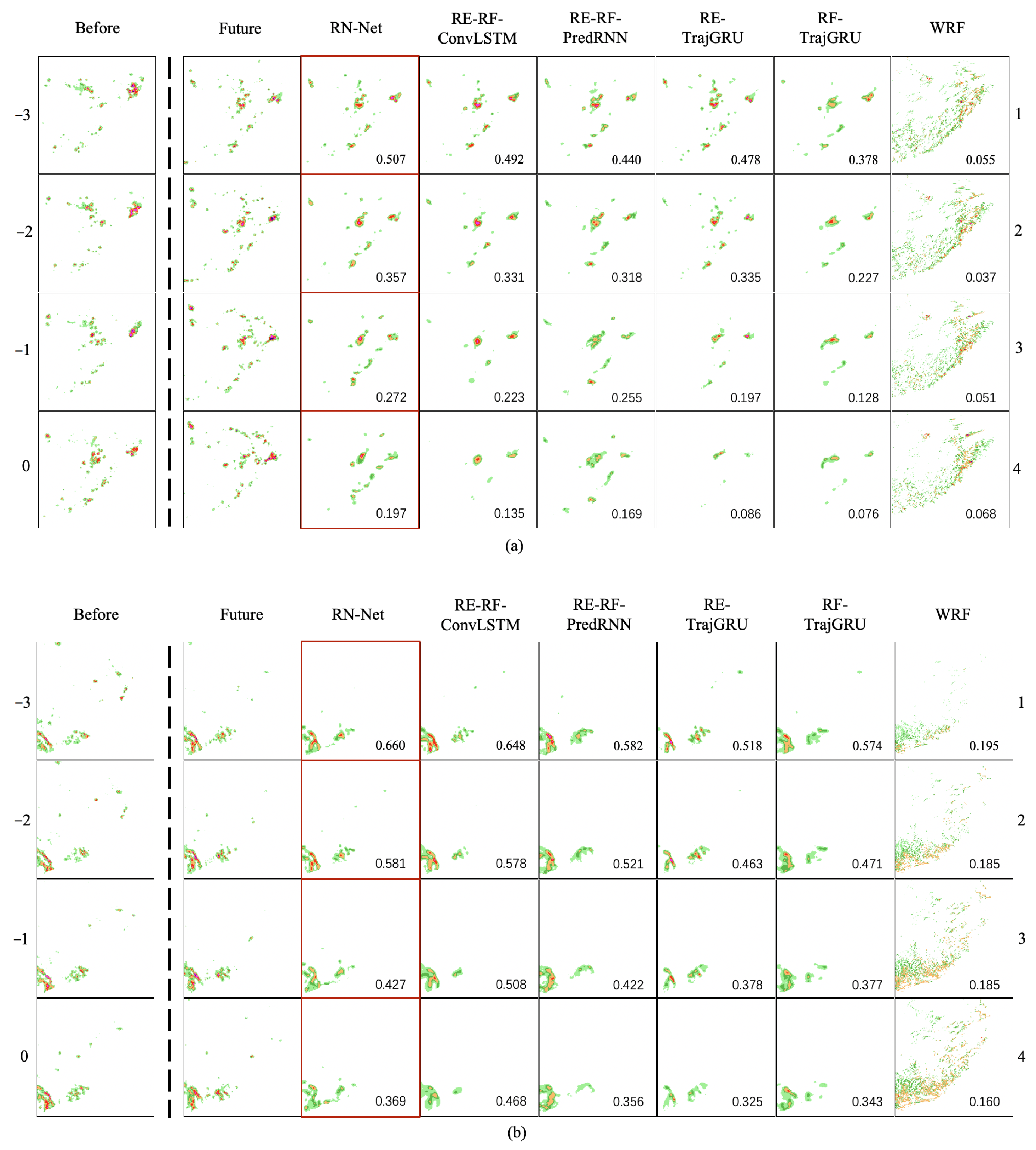

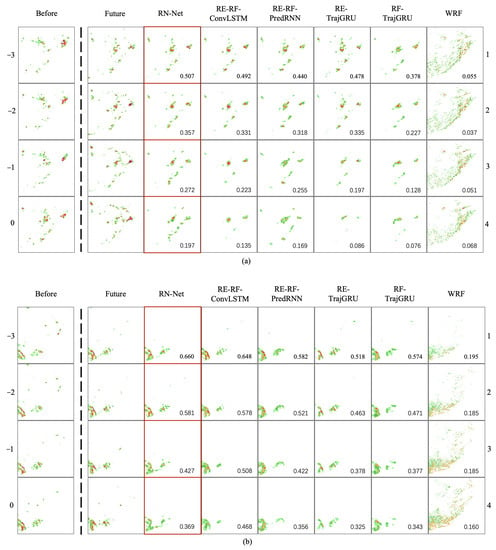

4.4. Visualization Results

Figure 4 visualizes two representative cases for RN-Net, RE-RF-ConvLSTM, RE-RF-PredRNN, RE-TrajGRU, RF-TrajGRU, and the WRF model. From this figure, we observe that all of the deep learning methods except RF-TrajGRU make accurate forecasts in the first hour, which is consistent with the evaluation metrics. The rainfall data used as input to RF-TrajGRU do not provide enough meteorological spatiotemporal features to support rainfall nowcasting, and there are deviations even in the first half-hour. Moreover, the rainfall forecast by the deep learning methods gradually disappears after one hour. Among the three dual-input methods, this disappearance problem is most serious for the method using ConvLSTM. It is due to the structure of the RNN [30] and the distance loss function [31]. The WRF model does not show the same problem. However, WRF model forecasts often contain large-scale false alarms, which is consistent with its evaluation metrics.

Figure 4.

Visualization of two representative rainfall nowcasting cases. In (a,b), from left to right, are the actual rainfall data of the past two hours, the actual rainfall data of the next two hours, and the rainfall nowcasting made by RN-Net, RE-RF-ConvLSTM, RE-RF-PredRNN, RE-TrajGRU, RF-TrajGRU, and the WRF model. The value in each forecast frame is the CSI with 0.25 mm as the threshold for this frame.

5. Conclusions

In this paper, we propose a model (namely RN-Net) for rainfall nowcasting. RN-Net is a deep neural network with dual inputs and dual encoders. RN-Net provides a more sufficient basis for forecasting by fusing the spatiotemporal features of rainfall data and radar data. On the one hand, it overcomes the drawback that conventional forecasting methods cannot mine knowledge from historical data. On the other hand, it provides high spatiotemporal resolution forecasting that other deep learning methods cannot achieve. We conduct experiments on the Southeastern China dataset. In the experiment, RN-Net is much better than the WRF model. However, compared with the accuracy of precipitation intensity nowcasting [14,15,17,18,20], RN-Net’s rainfall nowcasting still has room for improvement. Moreover, the generalization ability of RN-Net may be poor, because rainfall is affected by topography, climate, season, and other factors, and our dataset only contains summer and autumn data from Southeast China.

In order to further improve the accuracy and generalization of rainfall nowcasting, we will extend our current work in three aspects. Firstly, we will increase the scale of the dataset and expand the area it covers. Secondly, we will add more input data to provide more meteorological spatiotemporal features for forecasting. Finally, we plan to try other novel deep learning networks in future work.

Author Contributions

Conceptualization, X.W., J.G. and F.Z.; methodology, F.Z.; software, F.Z.; validation, X.W., J.G. and F.Z.; formal analysis, F.Z.; investigation, F.Z.; resources, J.G. and F.Z.; data curation, F.Z.; writing—original draft preparation, F.Z.; writing—review and editing, F.Z., M.W. and L.G.; visualization, F.Z.; supervision, F.Z.; project administration, F.Z.; funding acquisition, J.G. and X.W. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the National Natural Science Foundation of China (Project No. 41975066).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The raw/processed data required to reproduce these findings cannot be shared at this time as the data also forms part of an ongoing study.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Knievel, J.C.; Ahijevych, D.A.; Manning, K.W. Using temporal modes of rainfall to evaluate the performance of a numerical weather prediction model. Mon. Weather Rev. 2004, 132, 2995–3009.

2. Muhandhis, I.; Susanto, H.; Asfari, U. Determining Salt Production Season Based on Rainfall Forecasting Using Weighted Fuzzy Time Series. J. Appl. Comput. Sci. Math. 2020, 14, 23–27.

3. Zhou, J.; Zhang, Y.; Tian, S.; Lai, S. Forecasting Rainfall with Recurrent Neural Network for irrigation equipment. IOP Conf. Ser. Earth Environ. Sci. 2020, 510, 042040.

4. Osanai, N.; Shimizu, T.; Kuramoto, K.; Kojima, S.; Noro, T. Japanese early-warning for debris flows and slope failures using rainfall indices with Radial Basis Function Network. Landslides 2010, 7, 325–338.

5. Chu, Q.; Xu, Z.; Chen, Y.; Han, D. Evaluation of the ability of the Weather Research and Forecasting model to reproduce a sub-daily extreme rainfall event in Beijing, China using different domain configurations and spin-up times. Hydrol. Earth Syst. Sci. 2018, 22, 3391.

6. Hong, W.C. Rainfall forecasting by technological machine learning models. Appl. Math. Comput. 2008, 200, 41–57.

7. Sumi, S.M.; Zaman, M.F.; Hirose, H. A rainfall forecasting method using machine learning models and its application to the Fukuoka city case. Int. J. Appl. Math. Comput. Sci. 2012, 22, 841–854.

8. Cramer, S.; Kampouridis, M.; Freitas, A.A.; Alexandridis, A.K. An extensive evaluation of seven machine learning methods for rainfall prediction in weather derivatives. Expert Syst. Appl. 2017, 85, 169–181.

9. Moon, S.H.; Kim, Y.H.; Lee, Y.H.; Moon, B.R. Application of machine learning to an early warning system for very short-term heavy rainfall. J. Hydrol. 2019, 568, 1042–1054.

10. Parmar, A.; Mistree, K.; Sompura, M. Machine Learning Techniques for Rainfall Prediction: A Review. In Proceedings of the 2017 International Conference on Innovations in Information Embedded and Communication Systems (ICIIECS), Coimbatore, India, 17–18 March 2017.

11. Adewoyin, R.; Dueben, P.; Watson, P.; He, Y.; Dutta, R. TRU-NET: A Deep Learning Approach to High Resolution Prediction of Rainfall. arXiv 2020, arXiv:2008.09090.

12. Ayzel, G.; Heistermann, M.; Sorokin, A.; Nikitin, O.; Lukyanova, O. All convolutional neural networks for radar-based precipitation nowcasting. Procedia Comput. Sci. 2019, 150, 186–192.

13. Tian, L.; Li, X.; Ye, Y.; Xie, P.; Li, Y. A Generative Adversarial Gated Recurrent Unit Model for Precipitation Nowcasting. IEEE Geosci. Remote Sens. Lett. 2019, 17, 601–605.

14. Shi, X.; Chen, Z.; Wang, H.; Yeung, D.Y.; Wong, W.K.; Woo, W.C. Convolutional LSTM network: A machine learning approach for precipitation nowcasting. Adv. Neural Inf. Process. Syst. 2015, 28, 802–810.

15. Shi, X.; Gao, Z.; Lausen, L.; Wang, H.; Yeung, D.Y.; Wong, W.K.; Woo, W.C. Deep learning for precipitation nowcasting: A benchmark and a new model. arXiv 2017, arXiv:1706.03458.

16. Woo, W.C.; Wong, W.K. Operational application of optical flow techniques to radar-based rainfall nowcasting. Atmosphere 2017, 8, 48.

17. Wang, Y.; Long, M.; Wang, J.; Gao, Z.; Yu, P.S. PredRNN: Recurrent Neural Networks for Predictive Learning Using Spatiotemporal LSTMs. 2017. Available online: http://ise.thss.tsinghua.edu.cn/~mlong/doc/predrnn-nips17.pdf (accessed on 10 March 2021).

18. Wang, Y.; Zhang, J.; Zhu, H.; Long, M.; Wang, J.; Yu, P.S. Memory in memory: A predictive neural network for learning higher-order non-stationarity from spatiotemporal dynamics. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 9154–9162.

19. Bonnet, S.M.; Evsukoff, A.; Morales Rodriguez, C.A. Precipitation Nowcasting with Weather Radar Images and Deep Learning in São Paulo, Brasil. Atmosphere 2020, 11, 1157.

20. Wang, Y.; Gao, Z.; Long, M.; Wang, J.; Yu, P.S. PredRNN++: Towards A Resolution of the Deep-in-Time Dilemma in Spatiotemporal Predictive Learning. In Proceedings of the International Conference on Machine Learning, Long Beach, CA, USA, 16–20 June 2019; pp. 5123–5132.

21. Zhang, P.; Jia, Y.; Gao, J.; Song, W.; Leung, H.K. Short-term rainfall forecasting using multi-layer perceptron. IEEE Trans. Big Data 2018, 6, 93–106.

22. Oprea, S.; Martinez-Gonzalez, P.; Garcia-Garcia, A.; Castro-Vargas, J.A.; Orts-Escolano, S.; Garcia-Rodriguez, J.; Argyros, A. A Review on Deep Learning Techniques for Video Prediction. arXiv 2020, arXiv:2004.05214.

23. Skamarock, W.C.; Klemp, J.B.; Dudhia, J.; Gill, D.O.; Barker, D.M.; Duda, M.G.; Huang, X.Y.; Wang, W.; Powers, J.G. A Description of the Advanced Research WRF Version 3. Available online: https://apps.dtic.mil/sti/pdfs/ADA487419.pdf (accessed on 11 March 2021).

24. Cornes, R.C.; van der Schrier, G.; van den Besselaar, E.J.; Jones, P.D. An ensemble version of the E-OBS temperature and precipitation data sets. J. Geophys. Res. Atmos. 2018, 123, 9391–9409.

25. Chen, F.W.; Liu, C.W. Estimation of the spatial rainfall distribution using inverse distance weighting (IDW) in the middle of Taiwan. Paddy Water Environ. 2012, 10, 209–222.

26. Skamarock, W.C.; Klemp, J.B.; Dudhia, J. Prototypes for the WRF (Weather Research and Forecasting) Model. Available online: https://www.researchgate.net/profile/Jimy_Dudhia/publication/242446432_Prototypes_for_the_WRF_Weather_Research_and_Forecasting_model/links/0c9605314d71c38bae000000/Prototypes-for-the-WRF-Weather-Research-and-Forecasting-model.pdf (accessed on 10 March 2021).

27. Geng, Y.A.; Li, Q.; Lin, T.; Jiang, L.; Xu, L.; Zheng, D.; Yao, W.; Lyu, W.; Zhang, Y. LightNet: A dual spatiotemporal encoder network model for lightning prediction. In Proceedings of the 25th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, Anchorage, AK, USA, 4–8 August 2019; pp. 2439–2447.

28. Paszke, A.; Gross, S.; Massa, F.; Lerer, A.; Bradbury, J.; Chanan, G.; Killeen, T.; Lin, Z.; Gimelshein, N.; Antiga, L.; et al. PyTorch: An imperative style, high-performance deep learning library. arXiv 2019, arXiv:1912.01703.

29. Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. arXiv 2014, arXiv:1412.6980.

30. Le, P.; Zuidema, W. Quantifying the vanishing gradient and long distance dependency problem in recursive neural networks and recursive LSTMs. arXiv 2016, arXiv:1603.00423.

31. Tran, Q.K.; Song, S.K. Computer vision in precipitation nowcasting: Applying image quality assessment metrics for training deep neural networks. Atmosphere 2019, 10, 244.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).