Abstract

We developed an open source library called RcdMathLib for solving multivariate linear and nonlinear systems. RcdMathLib supports on-the-fly computing on low-cost and resource-constrained devices, e.g., microcontrollers. The decentralized processing is a step towards ubiquitous computing enabling the implementation of Internet of Things (IoT) applications. RcdMathLib is modular and layer-based, whereby different modules allow for algebraic operations such as vector and matrix operations or decompositions. RcdMathLib also comprises a utilities module providing sorting and filtering algorithms as well as methods for generating random variables. It enables solving linear and nonlinear equations based on efficient decomposition approaches such as the Singular Value Decomposition (SVD) algorithm. The open source library also provides optimization methods such as the Gauss–Newton and Levenberg–Marquardt algorithms for solving problems of regression smoothing and curve fitting. Furthermore, a positioning module permits computing positions of IoT devices using algorithms such as trilateration. This module also enables the optimization of the position by performing a method to reduce multipath errors on the mobile device. The library is implemented and tested on resource-limited IoT devices as well as on full-fledged operating systems. The open source software library is hosted on a GitLab repository.

1. Introduction

Algorithms and scientific computing are workhorses of many numerical libraries that support users to solve technical and scientific problems. These libraries use mathematics and numerical algebraic computations, which contribute to a growing body of research in engineering and computational science. This leads to new disciplines and academic interests. The use of computers has accelerated the trend as well as enhanced the deployment of numerical libraries and approaches in scientific and engineering communities. Originally, computers were built for numerical and scientific applications. Konrad Zuse built a mechanical computer in 1938 to perform repetitive and cumbersome calculations. A specific problem, from the area of static engineering, requires performing tedious calculations to design load-bearing structures by solving systems of linear equations [1]. Howard Aiken independently developed an electro-mechanical computing device that can execute predetermined commands typed on a keyboard in the notation of mathematics and translated into numerical codes. These are stored on punched cards and perforated magnetic tapes or drums [2,3].

The first software libraries of numerical algorithms were developed in the programming language ALGOL 60, including procedures for solving linear systems of equations or eigenvalue problems [4]. This software was rewritten in FORTRAN and ported to the LINPACK and EISPACK software packages [5,6]. Cleve Moler implemented a user-friendly interface to give his students easy access to LINPACK and EISPACK without writing Fortran programs [7]. He called the interface MATLAB (Matrix Laboratory), which was so successful that he founded a company called MathWorks. MATLAB is now a full-featured computing platform.

Ubiquitous computing on resource-limited devices has become an important issue in the Internet of Things (IoT) and the Machine to Machine (M2M) communication technologies, enabling the implementation of various applications such as health monitoring or vehicle tracking and detection. IoT is an emerging and challenging technology that facilitates the realization of computing services in various areas by using advanced communication protocols, technologies, and intelligent data analytical software [8]. The M2M communication in combination with the Radio-Frequency Identification (RFID), localization, observation by sensors, and controlling of actuators provides context-aware intelligent decisions as well as high-quality services. Computing plays a key role in implementing such applications, particularly by applying local and decentralized processing on mobile and ubiquitous devices. This affords in-network and local context-aware decisions without the use of external computing services (e.g., cloud services). Therefore, we provide an open source software library for numerical linear algebra called RcdMathLib (Mathematical Library for Resource-constrained devices) [9]. This software library is suitable for devices with limited resources such as microcontrollers or portable computing devices. These devices are mostly low-cost, are equipped with low-end processors, and have limited memory and energy resources. RcdMathLib supports decentralized, on-the-fly numerical computing locally on a mobile device. The decentralized numerical calculations allow for pushing the application-level knowledge into the mobile device and avoiding the communication as well as the calculation on a central unit such as a processing server. The decentralization reduces latency and processing time and enhances the real-time capability because the path that the data must travel is shortened.
Furthermore, RcdMathLib provides useful algorithms such as the Singular Value Decomposition (SVD) which has become an indispensable tool in science and engineering [10]. SVD is applied for image compression and restoration or biomedical applications, for example, noise reduction of biomedical signals [11].

RcdMathLib allows for computing on mobile devices as well as embedded systems, providing algorithms for the solution of linear and nonlinear multivariate systems of equations. The solution of these equation systems is achieved by using robust matrix decomposition algorithms. The software library offers an optimization module for curve fitting or for solving problems of regression smoothing. Applications can be built and organized as modules using the RcdMathLib; as an example, we offer a localization module. This is an application module for distance- and Direct-Current (DC)-pulsed, magnetic-based localization systems. The localization module allows for a position estimation of a mobile device. Localization enables the realization of context-aware computing applications in combination with mobile devices. In this sense, the software library enables the computation of the localization on mobile systems. RcdMathLib also enables the optimization of the estimated location by using an adaptive approach based on the SVD, Levenberg–Marquardt (LVM) algorithms, and the Position Dilution of Precision (PDOP) value [12,13,14]. In addition, the localization module provides an algorithm for multipath detection and mitigation that enables an accurate localization of the mobile device in Non-Line-of-Sight (NLoS) scenarios. RcdMathLib can also be used on a full-fledged device such as a Personal Computer (PC) or a computing server.

In this article, we present the RcdMathLib and briefly address the difficulties in using linear algebra methods as well as the techniques to overcome the limitations of resource-constrained devices. Our main contributions are:

- An open source library for numerical computations on resource-limited devices and embedded systems. The software permits a user or a mobile device to solve multivariate linear equation systems based on efficient algorithms such as the Householder method or the Moore–Penrose inverse. The Moore–Penrose inverse is implemented by using the SVD method.

- A module for solving multivariate nonlinear equation systems as well as optimization and curve fitting problems on a resource-constrained device on the basis of the SVD algorithm.

- A utilities module providing various algorithms such as the Shell sort algorithm or the Box–Muller method to generate normally distributed random variables.

- A localization module for positioning systems that use distance measurements or DC-pulsed, magnetic signals. This module enables an adaptive, optimized localization of mobile devices.

- A software routine to locally reduce multipath errors on mobile devices.

Guckenheimer observed that we are conducting increasingly complex computations built upon the assumption that the underlying numerical approaches are complete and reliable. Furthermore, we tend to ignore numerical analysis when using mathematical software packages [15]. Thus, the user must be aware of the limitations of the algorithms and be able to choose appropriate numerical methods. The use of inappropriate methods can lead to incorrect results. Therefore, we briefly address certain difficulties in using linear algebra algorithms.

The remainder of this article is structured as follows: Firstly, we review related works in Section 2. We present the architecture as well as describe the modules of the software library in Section 3. We introduce the implementation issues in Section 4 and the usage of the RcdMathLib in Section 5. In Section 6, we evaluate the algorithms on a resource-limited device and on a low-cost, single-board computer. Finally, we conclude our article and give an outlook on future works in Section 7.

2. Related Work

Computing software especially for linear algebra is indispensable in science and engineering for the implementation of applications like text classification or speech recognition. To the best of our knowledge, there are few mathematical libraries for resource-limited devices, and most of them are limited to simple algebraic operations.

Libraries for numerical computation such as the GNU Scientific Library (GSL) are suitable for Digital Signal Processors (DSPs) or Linux-based embedded systems. For example, the commercially available Arm Performance Libraries (ARMPL) offer basic linear algebra subprograms, fast Fourier transform routines for real and complex data as well as some mathematical routines such as exponential, power, and logarithmic routines. Nonetheless, these routines do not support resource-constrained devices such as microcontrollers [16].

The C standard mathematical library includes mathematical functions defined in <math.h>. This mathematical library is widely used for microcontrollers, since it is a part of the C compiler. It provides only basic mathematical functions for instance trigonometric functions (e.g., sin, cos) or exponentiation and logarithmic functions (e.g., exp, log) [17].

Our research shows that there are very few attempts to build numerical computation libraries that can run on microcontrollers: Texas Instruments® (Dallas, Texas, USA) provides for its MSP430 and MSP432 devices the IQmath and Qmath Libraries, which contain a collection of mathematical routines for C programmers. However, this collection is restricted to basic mathematical functions such as trigonometric and logarithmic functions [18].

Libfixmatrix is a matrix computation library for microcontrollers. This library includes basic matrix operations such as multiplication, addition, and transposition. Equation solving and matrix inversion are implemented by the QR decomposition. Libfixmatrix is only suitable for tasks involving small matrices [19].

MicroBLAS is a simple, tiny, and efficient library designed for PC and microcontrollers. It provides basic linear algebra subprograms on vectors and matrices [20].

Other libraries for microcontrollers are the MatrixMath and BasicLinearAlgebra libraries. However, these libraries offer limited functionalities and are restricted to the Arduino platform [21,22].

The Python programming language is becoming widely used in scientific and numeric computing. The Python package NumPy (Numeric Python) is used for manipulating large arrays and matrices of numeric data [23]. The Scientific Python (SciPy) extends the functionality of NumPy with numerous mathematical algorithms [24]. Python is widely used for PC and single-board computers such as the Raspberry Pi. A new programming language largely compatible with Python called MicroPython is optimized to run on microcontrollers [25]. MicroPython includes the math and cmath libraries, which are restricted to basic mathematical functions. The mainline kernel of MicroPython supports only the ARM Cortex-M processor family. CircuitPython is a derivative of the MicroPython created to support boards like the Gemma M0 [26]. Various mathematical libraries are outlined in Table 1, which reveals their capabilities, limitations, and supported platforms.

Table 1.

Comparison of mathematical libraries. FFT, Fast Fourier Transform.

3. Library Architecture and Description

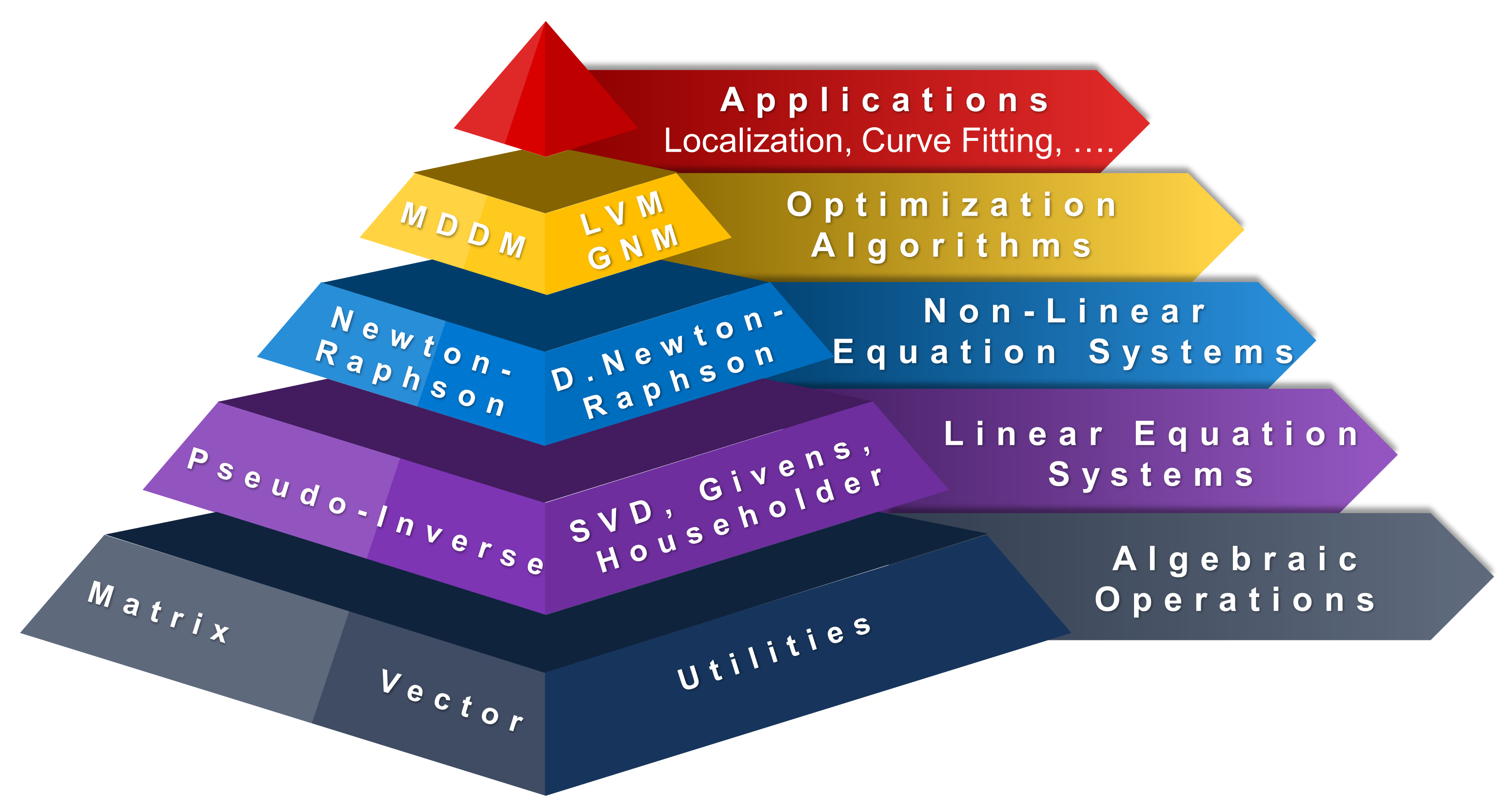

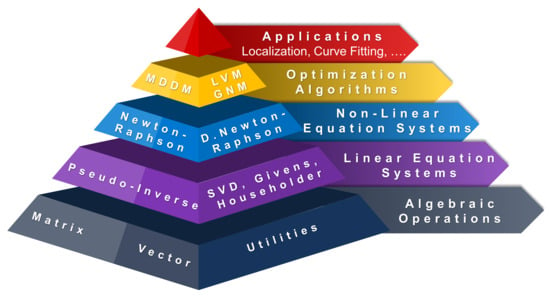

RcdMathLib has a pyramidal and a modular architecture as illustrated in Figure 1, whereby each layer rests upon the underlying layers. For example, the linear algebra module layer rests on the basic algebraic module layer. Each module layer is composed of several submodules such as the matrix or vector submodules. The submodules can be built up from the underlying submodules, for example, the pseudo-inverse submodule is based on the SVD, the Householder, and the Givens submodules. For the sake of brevity, Figure 1 presents only the sublayers. The software layers will be briefly addressed in Section 3.1 through Section 3.3.

Figure 1.

Architecture of the RcdMathLib. SVD, Singular Value Decomposition. D. Newton-Raphson, Damped Newton-Raphson. LVM, Levenberg–Marquardt Method. GNM, Gauss–Newton Method. MDDM, Multipath Distance Detection and Mitigation.

3.1. Linear Algebra Module Layer

The module layer of linear algebra is composed of the following submodules:

- Basic operations submodule: provides algebraic operations such as addition or multiplication of vectors or matrices. This submodule distinguishes between vector and matrix operations.

- Matrix decomposition submodule: allows for the decomposition of matrices by using algorithms such as Givens, Householder, or the SVD. The SVD method is implemented using the Golub–Kahan–Reinsch algorithm [27,28].

- Pseudo-inverse submodule: enables the computation of the inverse of square as well as of rectangular matrices. The matrix inverse can be calculated by using the Moore–Penrose, Givens, or Householder algorithms [29].

- Linear solve submodule: permits the solution of under-determined and over-determined linear equation systems. We solve the linear equation systems using two matrix decompositions: the SVD and QR factorizations. The first method uses the Moore–Penrose inverse, while the second approach applies the Householder or the Givens algorithms in combination with the back substitution method. We also provide the Gaussian Elimination (GE) with a pivoting algorithm, which is based on the LU decomposition. We use the GE-based method only for testing purposes or for devices with very limited stack memory. We suggest using the SVD- or the QR-based methods due to their numerical stability and their support of non-square matrices [30].

- Utilities submodule: offers filtering algorithms such as median, average, or moving average. Furthermore, it provides the Shell algorithm to put elements of a vector in a certain order as well as the Box–Muller method to generate normally distributed random variables [31,32].
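As an illustration of the utilities submodule, the following minimal sketch shows an iterative Shell sort and a median filter built on top of it. The function names `shell_sort` and `median_filter` are hypothetical and are not taken from the RcdMathLib API, and the gap sequence n/2, n/4, ..., 1 is an assumption; the library may use a different sequence.

```c
#include <stddef.h>

/* Iterative Shell sort (assumed gap sequence n/2, n/4, ..., 1).
 * Sorts v in ascending order, in place, without recursion. */
void shell_sort(double *v, size_t n)
{
    for (size_t gap = n / 2; gap > 0; gap /= 2) {
        for (size_t i = gap; i < n; i++) {
            double tmp = v[i];
            size_t j = i;
            /* Gapped insertion sort: shift larger elements to the right. */
            while (j >= gap && v[j - gap] > tmp) {
                v[j] = v[j - gap];
                j -= gap;
            }
            v[j] = tmp;
        }
    }
}

/* Median of n samples (n > 0). The buffer is sorted as a side effect. */
double median_filter(double *v, size_t n)
{
    shell_sort(v, n);
    return (n % 2) ? v[n / 2] : 0.5 * (v[n / 2 - 1] + v[n / 2]);
}
```

Because the sort is iterative and works in place, it needs only a constant amount of stack memory, which is the property that matters on devices with a small stack. Applied to five distance samples that contain one outlier, the median discards the outlier without any explicit threshold.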

3.2. Non-Linear Algebra Module Layer

The nonlinear algebra module includes the following submodules:

- Optimization submodule: enables the optimization of an approximate solution by using Nonlinear Least Squares (NLS) methods such as modified Gauss–Newton (GN) or the LVM algorithms. These methods are iterative and need a start value as an approximate solution. Moreover, the user should give a pointer to an error function and to a Jacobian matrix. The modified GN and the LVM algorithms will be briefly described in Section 3.2.1 and Section 3.2.2.

- Nonlinear equations submodule: allows for the solution of multivariate nonlinear equation systems by using Newton–Raphson and damped Newton–Raphson methods [33]. The user must deliver a start value as well as a pointer to nonlinear equation systems to solve, and a pointer to the appropriate Jacobian matrix.

3.2.1. Gauss–Newton Algorithm

The Gauss–Newton algorithm works iteratively to find the solution that minimizes the sum of the squared errors. During the iteration process, we cache the iterate $\mathbf{x}$ with the minimal sum of squares to prevent the divergence of the GN algorithm [34]. The solution computed by the GN algorithm can be used as a start value for the subsequent LVM algorithm if the start value is unknown or the GN algorithm diverges.

3.2.2. Levenberg–Marquardt Algorithm

The LVM algorithm is also a numerical optimization approach for solving NLS problems [35,36,37]. The LVM algorithm can be used for optimization or fitting problems. The LVM method proceeds iteratively as follows:

$$\mathbf{x}^{(k+1)} = \mathbf{x}^{(k)} + \mathbf{s}^{(k)} \tag{1}$$

where $\mathbf{x}^{(k)}$ is the k-th approximation of the searched solution and $\mathbf{s}^{(k)}$ is the k-th error correction vector. The LVM improves the approximate solution in each iteration step by calculating the correction vector as follows [38,39]:

$$\left(J_f^T J_f + \mu I\right)\mathbf{s}^{(k)} = -J_f^T \mathbf{f} \tag{2}$$

where $\mu > 0$ is the damping parameter, $\mathbf{f}$ is the error function, and $J_f$ is the Jacobian matrix, both evaluated at $\mathbf{x}^{(k)}$. The LVM algorithm has the advantage over the GN method that the matrix on the left side of Equation (2) is no longer singular. This is accomplished by the factor $\mu$ regularizing the matrix $J_f^T J_f$. The LVM method is described in Algorithm 1.

| Algorithm 1 LVM algorithm |

|

3.3. Localization Module Layer

Localization of users or IoT devices is indispensable for Localization-Based Services (LBSs) such as tracking in smart buildings, advertising in shopping centers, or routing and navigation in large public buildings [40]. Indoor Localization Systems (ILSs) are used to locate IoT or mobile devices inside environments where the Global Positioning System (GPS) cannot be deployed. Numerous technologies have been evaluated for ILSs, for example, Ultra-Wideband (UWB) [41], Wireless Local Area Network (WLAN) [42], ultrasound [43], magnetic signals [44], or Bluetooth [45].

3.3.1. Introduction and Layer Description

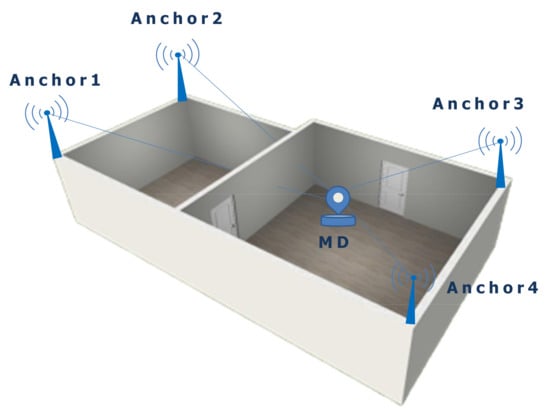

The Localization Module (LM) layer provides algorithms to calculate as well as optimize a position of an ILS. At the current stage of development, we provide two example applications of the LM as submodules: a distance-based as well as a DC-pulsed, magnetic-based submodule of ILSs. We also offer a common positioning submodule comprising shared algorithms like the trilateration algorithm [46].

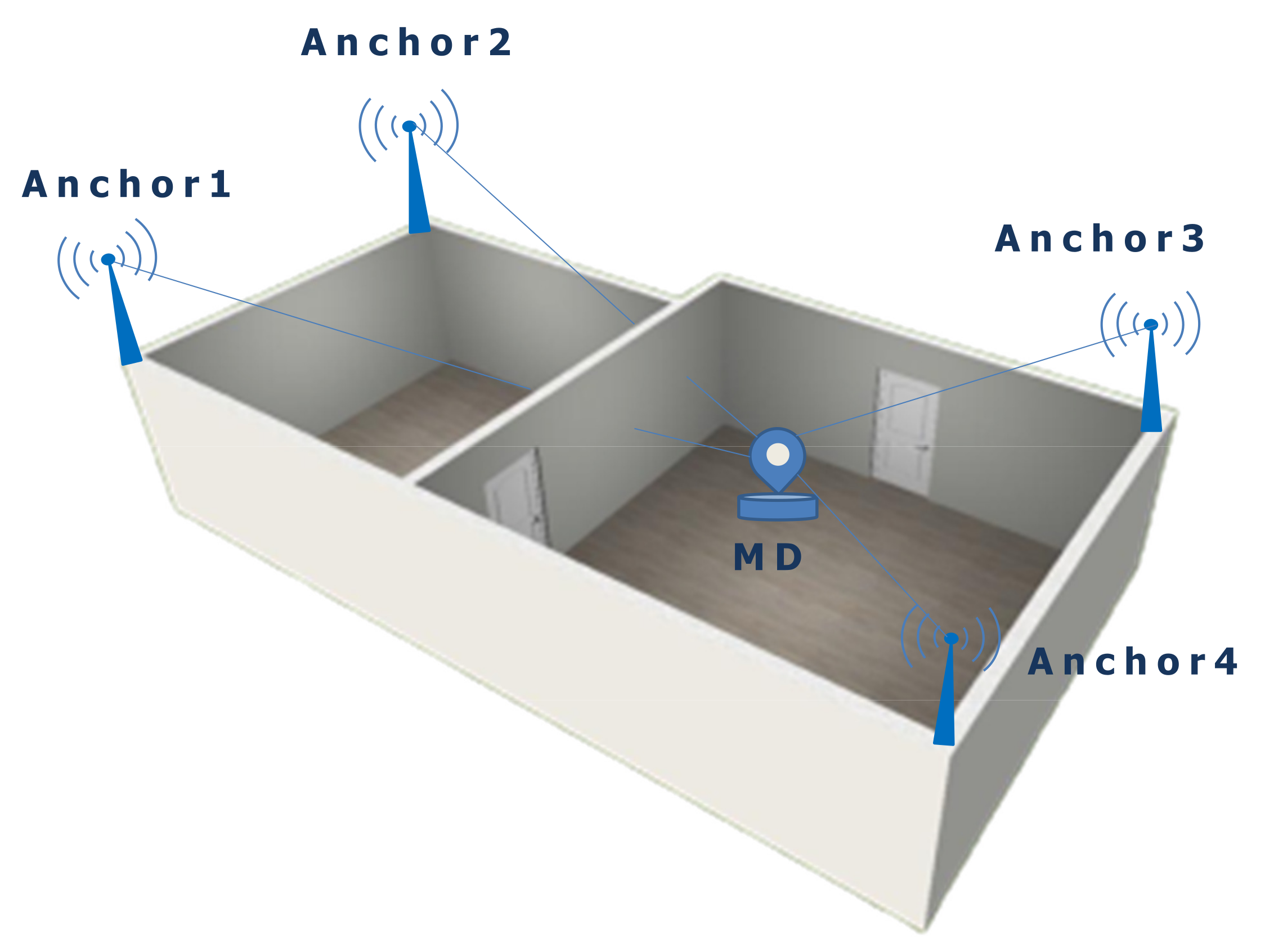

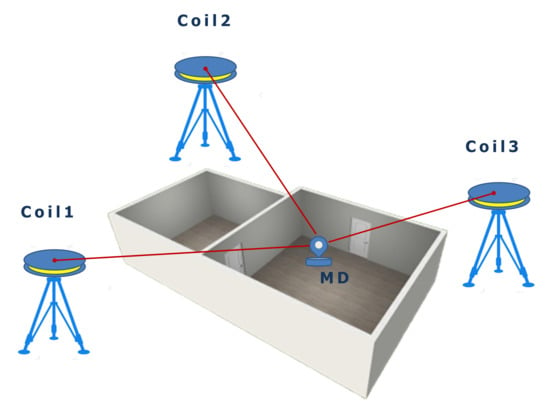

Figure 2 illustrates the principle of a distance-based ILS composed of four anchors with known positions and one mobile device. The distances between the anchors and the mobile device can be measured by using UWB or ultrasound sensors. The mobile device performs distance measurements to the four anchors. Furthermore, the collected distances are preprocessed using the median filter from the utilities submodule to remove outliers (see Section 3.1). Finally, the mobile device locally calculates a three-dimensional position using the trilateration algorithm provided by the RcdMathLib.

Figure 2.

Distance-based Indoor Localization System. MD, Mobile Device.

The trilateration algorithm computes the position of an unknown point with the coordinates $(x, y, z)$ and distances $d_i$ to the reference positions $(x_i, y_i, z_i)$ for $i = 1, \dots, n$. This problem requires the estimation of a vector $\mathbf{x}$ such that:

$$A\mathbf{x} = \mathbf{b}$$

where the matrix $A$ and the vector $\mathbf{b}$ have the following forms [46,47,48]:
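One linearization that is consistent with this description is given here as an assumption and not as the exact form used in the cited references. With $d_i$ the measured distance to the anchor at $(x_i, y_i, z_i)$, expanding the sphere equation $(x-x_i)^2 + (y-y_i)^2 + (z-z_i)^2 = d_i^2$ and treating $x^2 + y^2 + z^2$ as an additional unknown yields a system that is linear in four unknowns:

```latex
A = \begin{pmatrix}
1 & -2x_1 & -2y_1 & -2z_1 \\
\vdots & \vdots & \vdots & \vdots \\
1 & -2x_n & -2y_n & -2z_n
\end{pmatrix}, \qquad
\mathbf{x} = \begin{pmatrix} x^2 + y^2 + z^2 \\ x \\ y \\ z \end{pmatrix}, \qquad
\mathbf{b} = \begin{pmatrix}
d_1^2 - x_1^2 - y_1^2 - z_1^2 \\
\vdots \\
d_n^2 - x_n^2 - y_n^2 - z_n^2
\end{pmatrix}
```

Under this assumption, four anchors give a square system, and additional anchors give an over-determined system that is solved in the least-squares sense.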

The solution is given by:

$$\mathbf{x} = A^{+}\mathbf{b}$$

where $A^{+}$ is the pseudo-inverse of the matrix A. The pseudo-inverse matrix is computed by using the pseudo-inverse submodule of the RcdMathLib (see Section 3.1). The quality q of the calculated position is given by:

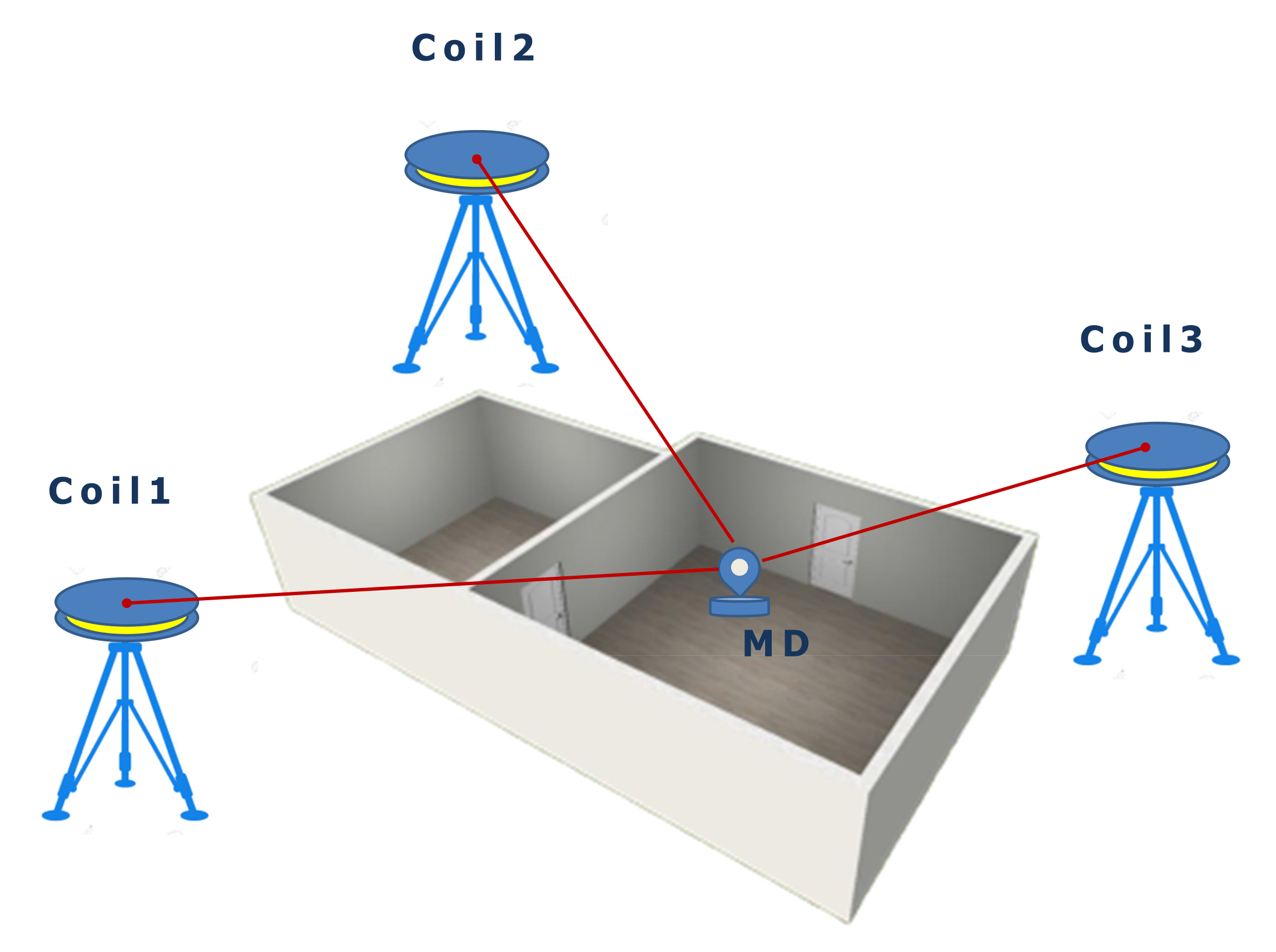

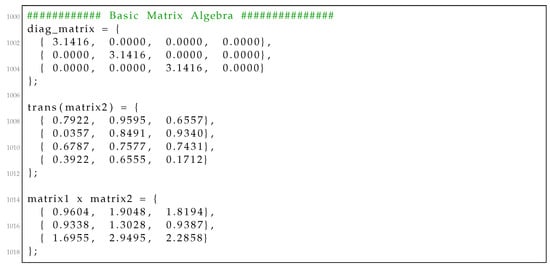

Figure 3 illustrates the principle of a magnetic-based ILS composed of various coils with known positions and a mobile device. This system enables the calculation of the position of the mobile device by measuring the magnetic fields of the coils, which act as anchors. The magnetic signals are artificially generated by the coils using a pulsed direct current. The collected measurement data are preprocessed by the utilities submodule to remove outliers and to calibrate the magnetic data. Finally, the position is calculated on the mobile device.

Figure 3.

Magnetic-based indoor localization system. MD, Mobile Device.

The magnetic field generated from the coil i is equal to [47,49]:

$$B_i = \frac{K}{r_i^3}\sqrt{1 + 3\sin^2\theta_i} \tag{8}$$

In this setting, $K = \mu_0 N I F / (4\pi)$, where $N$ describes the number of turns of the wire, I is the current running through the coil, F expresses the base area of the coil, $\mu_0$ is the permeability of free space, $r_i$ is the distance between the mobile device and coil i, and $\theta_i$ is the mobile device elevation angle relative to the coil plane. The distance and the elevation angle are equal to:

$$r_i = \sqrt{(x - x_i)^2 + (y - y_i)^2 + (z - z_i)^2}, \qquad \theta_i = \arcsin\frac{z - z_i}{r_i}$$

Equation (8) is a nonlinear equation system with the unknown coordinates x, y, and z, which can be solved by applying the LVM algorithm from the optimization submodule of the RcdMathLib, whereby $(x_i, y_i, z_i)$ and $(x, y, z)$ are the coordinates of the i-th coil and the mobile device.

3.3.2. Multipath Distance Detection and Mitigation and Position Optimization Algorithm

The localization module is not restricted to simple position calculations but also performs complex tasks such as the Multipath Distance Detection and Mitigation (MDDM) by using other modules of the RcdMathLib. The MDDM algorithm reduces the effects of multipath fading on the radio signals exchanged between a mobile device and Reference Stations (RSs) with known locations. The MDDM approach is based on the Robust Position Estimation in Ultrasound Systems (RoPEUS) algorithm [50]. The MDDM is adapted for precise distance-based ILSs such as Ultra-Wideband (UWB)-based localization systems, and it is also optimized for resource-limited devices [12]. The MDDM algorithm is summarized in Algorithm 2.

| Algorithm 2 MDDM algorithm | |

| 1: function recog_mitigate_multipath_alg() | |

| 2: ; ; | |

| 3: distances , ; | ▹ distance measures to n RSs |

| 4: while do | |

| 5: ; | ▹ choose k RSs |

| 6: | ▹ Compute a position related to k RSs |

| 7: ; | ▹ residuals |

| 8: ; | |

| 9: ; | |

| 10: ; | |

| 11: end while | |

| 12: | ▹ the solution |

| 13: | ▹ calculate PDOP-value of |

| 14: if then | |

| 15: LVM_ALG() | ▹ optimize the position |

| 16: end if | |

| 17: end function |

3.4. Documentation and Examples’ Modules

RcdMathLib includes a module that provides an Application Programming Interface (API) documentation. The API documentation is in Portable Document Format (PDF) and in Hypertext Markup Language (HTML) format. It is generated from the C source code by using the Doxygen tool [51]. The software reference documentation covers the description of the implemented functions as well as their passing parameters. In addition, the example module comprises samples of each module to familiarize the users with the API. The example module has the same structure as the RcdMathLib.

4. Implementation Issues

Given a non-singular $n \times n$ matrix A and an n-vector $\mathbf{b}$, the fundamental problem of linear algebra is to find an n-vector $\mathbf{x}$ such that $A\mathbf{x} = \mathbf{b}$. This fundamental problem emerges in various areas of science and engineering such as applied mathematics, physics, or electrical engineering [52]. Associated problems are finding the inverse, the rank, or projections of a matrix A. Attempting to solve the linear algebra problem using common theoretical approaches would face computational difficulties. For example, solving a () linear system with Cramer's Rule, which is a significant theoretical algorithm, might take more than a million years even when using fast computers [52].

We use the Givens, the Householder, and the SVD matrix decomposition algorithms. These decomposition methods enable the solution of various problems such as the computation of the inverse matrix, the linear equations, or the rank of a matrix. We do not use the Cholesky decomposition (), since it can become unstable due to the rounding errors that are equal to instead of . Although the Gaussian Elimination (GE) is an efficient algorithm to implement the LU factorization, we do not use it, since GE generally requires pivoting and is limited to square matrices. Furthermore, GE can be unstable in certain contrived cases; nonetheless, it performs well for the majority of practical problems [53,54].

We do not use the Classical Gram–Schmidt (CGS) and the Modified Gram–Schmidt (MGS) methods to implement the QR decomposition due to their numerical instability. Instead, we use the Householder and the Givens algorithms. Even though the Householder is more efficient than the Givens algorithm, the Givens method is easy to parallelize. The Givens and the Householder methods have a guaranteed stability but fail if the matrix is nearly rank-deficient or singular. The SVD algorithm can be used to avoid the rank deficiency problem. However, the SVD should not be computed explicitly by determining the eigenvalues of the symmetric matrix $A^T A$, due to the round-off errors in the calculation of the matrix $A^T A$. Therefore, we implement the SVD by using the Golub–Kahan–Reinsch (GKR) algorithm, which will be described in Section 4.1.

We calculate the pseudo-inverse matrix by using the Moore–Penrose method based on the SVD or the QR decomposition using the Householder or the Givens algorithms. The QR-based pseudo-inverse is computed as follows:

$$A^{+} = R^{-1} Q^{T}$$

where $R^{-1}$ is the inverse of an upper triangular matrix, and $Q^{T}$ is the transpose of an orthogonal matrix. We calculate the matrix $R^{-1}$ by using Algorithm 3 [55].

| Algorithm 3 Inverse of an upper triangular matrix |

|
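The inversion of an upper triangular matrix can be sketched with a column-wise back substitution. This is a generic formulation and not necessarily the variant used in Algorithm 3; the function name `upper_tri_inverse` and the row-major storage are assumptions.

```c
#include <stddef.h>

/* Invert an n x n upper triangular matrix R (row-major) into Rinv.
 * Returns 0 on success and -1 if a diagonal entry is zero (R is singular). */
int upper_tri_inverse(size_t n, const double *r, double *rinv)
{
    for (size_t i = 0; i < n * n; i++) {
        rinv[i] = 0.0;
    }
    for (size_t j = 0; j < n; j++) {
        if (r[j * n + j] == 0.0) {
            return -1;
        }
        rinv[j * n + j] = 1.0 / r[j * n + j];
        /* Back substitution for column j, from row j-1 up to row 0. */
        for (size_t i = j; i-- > 0;) {
            double sum = 0.0;
            for (size_t k = i + 1; k <= j; k++) {
                sum += r[i * n + k] * rinv[k * n + j];
            }
            rinv[i * n + j] = -sum / r[i * n + i];
        }
    }
    return 0;
}
```

The inverse of an upper triangular matrix is again upper triangular, so only the entries on and above the diagonal are computed and no pivoting is required.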

In general, we avoid the explicit calculation of matrix multiplications such as the construction of Householder matrices or of Givens matrices. The calculated triangular matrix R is stored over the matrix A. We also provide functions to avoid the explicit computation and storage of the transpose matrix such as the function that implicitly calculates the matrix–transpose–vector multiplication ($A^T \mathbf{x}$). We use the SVD algorithm to overcome the rank deficiency problem, for example, in the modified GN, the Newton–Raphson, and the damped Newton–Raphson methods. We use the Householder instead of the SVD method in the LVM algorithm to save computing time and stack memory. This optimization is possible because of the robustness of the LVM algorithm (see Section 3.2.2). We provide the iterative Shell sort algorithm that is suitable for resource-limited devices with a limited stack size. We use the Shell sort algorithm to implement the median filter.
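The implicit matrix-transpose-vector multiplication mentioned above can be sketched as follows. The function name `mat_trans_vec_mul` and the row-major layout are assumptions and do not reproduce the RcdMathLib API.

```c
#include <stddef.h>

/* y = A^T x for an m x n row-major matrix A, without forming A^T.
 * x has m entries and y has n entries. */
void mat_trans_vec_mul(size_t m, size_t n, const double *a,
                       const double *x, double *y)
{
    for (size_t j = 0; j < n; j++) {
        y[j] = 0.0;
    }
    /* Walk A row by row, so memory is accessed contiguously. */
    for (size_t i = 0; i < m; i++) {
        for (size_t j = 0; j < n; j++) {
            y[j] += a[i * n + j] * x[i];
        }
    }
}
```

Compared with building the transpose first, this saves m·n additional floating-point values of memory, which can be the difference between fitting and not fitting into the RAM of a microcontroller.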

4.1. Singular Value Decomposition

The SVD method has become a powerful tool for solving a wide range of problems in different application domains such as biomedical engineering, control systems, or signal processing [52]. We implemented the SVD approach based on the Golub–Kahan–Reinsch algorithm that works in two phases: a bidiagonalization of the matrix A and the reduction of the calculated bidiagonal matrix to a diagonal form.

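- First phase (bidiagonalization): An $m \times n$ matrix A is transformed to an upper bidiagonal matrix by using the Householder bidiagonalization, where $m \ge n$. The matrix A is transformed as follows:

  $$U_0^T A V_0 = \begin{pmatrix} B \\ 0 \end{pmatrix}$$

  where B is an $n \times n$ bidiagonal matrix equal to

  $$B = \begin{pmatrix} b_{11} & b_{12} & & \\ & b_{22} & \ddots & \\ & & \ddots & b_{n-1,n} \\ & & & b_{nn} \end{pmatrix}$$

- Second phase (reduction to the diagonal form): The bidiagonal matrix B is further reduced to a diagonal matrix $\Sigma$ by using orthogonal equivalence transformations as follows:

  $$U_1^T B V_1 = \Sigma = \mathrm{diag}(\sigma_1, \dots, \sigma_n)$$

  where $\Sigma$ is the matrix of the singular values and the matrices $U_1$ and $V_1$ are orthogonal. The singular vector matrices can be computed as follows:

  $$U = U_0 \begin{pmatrix} U_1 & 0 \\ 0 & I_{m-n} \end{pmatrix}, \qquad V = V_0 V_1$$

We implemented the first and second phases by the Golub–Kahan bidiagonal procedure and the Golub–Reinsch algorithm. Both algorithms will be described in detail in Section 4.1.1 and Section 4.1.2. In this description, we will mention some implementation issues.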

4.1.1. Golub–Kahan Bi-Diagonal Procedure

The reduction of matrix A to the upper bi-diagonal matrix B is accomplished by using a sequence of Householder reflections, where the matrix B has the same set of singular values as the matrix A [56]. First, a Householder transformation is applied from the left to zero out the sub-diagonal elements of the first column of the matrix A. Next, a Householder transformation is applied from the right to zero out the last ($n - 2$) elements of the first row. Repeating these steps a total of n times, the matrix A will be transformed to:

4.1.2. Golub–Reinsch Algorithm

The Golub–Reinsch algorithm is a variant of the QR iteration [28]. At each iteration i, the implicit symmetric QR algorithm is applied with the Wilkinson shift without forming the product $B^T B$. The algorithm has guaranteed convergence at a fast rate [52]. Starting from the bi-diagonalization of the matrix A obtained from the previous Golub–Kahan bi-diagonal procedure, the algorithm creates a sequence of bi-diagonal matrices, each with possibly smaller off-diagonal elements than the previous one. For simplicity, we write:

We calculate the Wilkinson shift that is equal to the eigenvalue of the right-hand corner sub-matrix of the matrix :

which is closer to . G. H. Golub and C. F. Van Loan suggest calculating the Wilkinson shift as follows [28]:

We calculate and such that:

and form the Givens rotation .

We apply the Givens rotation to the right of the matrix B:

The bidiagonal form is destroyed by the unwanted non-zero element (bulge) indicated by the “+” sign. Therefore, we apply a sequence of Givens rotations, alternately from the left and from the right, to chase the bulge.

We apply a Givens transformation to the left of the matrix to eliminate the unwanted sub-diagonal element. This reintroduces a bulge in the first row to the right of the super-diagonal element:

We apply the Givens rotations to remove the bulge in the matrix . This introduces a new bulge into the sub-diagonal of the third row, which is eliminated by the Givens rotation :

The matrix pair (, ) terminates the chasing process and delivers a new bi-diagonal matrix :

In general, the chasing process creates a new bi-diagonal matrix that is related to the matrix B as follows [28]:

where and are orthogonal. During the chasing process, we distinguish between the splitting, the cancellation, and the negligibility steps [57]:

At the i-th iteration, we assume that the matrix is equal to:

- Splitting:

- If the matrix entry is equal to zero, we split the matrix into two block-diagonal matrices whose singular values can be computed independently:

  where is the singular value decomposition of the matrix ; in this case, we compute the matrix first. If the split occurs at i equal to n, then the matrix is equal to and is a singular value.

- Cancellation:

- If the matrix entry is equal to zero, we split the matrix by using Givens rotations from the left to zero out row i as follows:

  where the bulge b is removed by using the Givens rotations for . Since the matrix element is equal to zero, the matrix splits again into two block-diagonal sub-matrices (see the splitting step).

- Negligibility:

- The values of the matrix elements or will be small but not exactly zero due to the finite precision arithmetic used by digital processors. Therefore, we require a threshold to decide when the elements or can be considered zero. Golub and Reinsch [58] recommend the following threshold rule:

  where is the machine precision. Björck [27] suggests the following approach:

  LINPACK [5] uses a variant of Björck’s approach that omits the factor in Equations (35) and (36).

5. Usage of the RcdMathLib

RcdMathLib can be used on PCs, resource-constrained devices such as microcontrollers, or on small single-board computers like Raspberry Pi devices. It is free software, available under the terms of the GNU Lesser General Public License as published by the Free Software Foundation, version (LGPLv) [59]. The RcdMathLib software is written in the C programming language and is built with the GNU Compiler Collection (GCC) for full-fledged devices and with embedded toolchains for resource-limited devices, for instance, the GNU ARM Embedded Toolchain. RcdMathLib can also be used on top of an Operating System (OS) for resource-constrained devices with minimal effort due to the modular architecture of the library. We support the RIOT-OS, which is an open source IoT OS [60]. RcdMathLib is interfaced with the RIOT-OS using the GNU Make utility, whereby the user only needs to choose the modules needed by setting the USE_MODULE-macro. The dependencies of the selected modules are resolved automatically, and the user can choose between single-precision and double-precision floating-point arithmetic depending on the available stack memory. An OS for resource-limited devices is recommended for the use of the RcdMathLib, but it is not required. A minimum stack size of 2560 bytes is needed to compute with floating-point numbers. The printf() function needs extra stack memory; therefore, a minimum stack size of 4608 bytes is required to work with double-precision floating-point arithmetic. We recommend a stack size of 8192 bytes.

The Linaro toolchain can be used on Linux or Windows to build applications for a target platform [61]. OpenOCD can be used for flashing the code to the target (chip) as well as for low-level or source-level debugging [62]. The source code as well as the API documentation of RcdMathLib can be downloaded from the GitLab repository [63]. Wiki pages on the homepage of the library help users to get started with RcdMathLib [9].

Simple Example

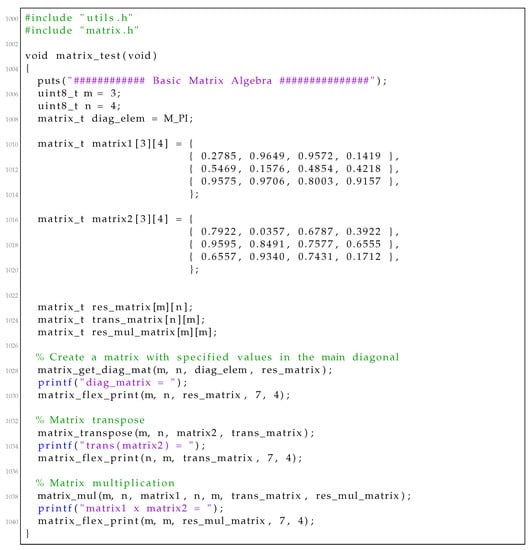

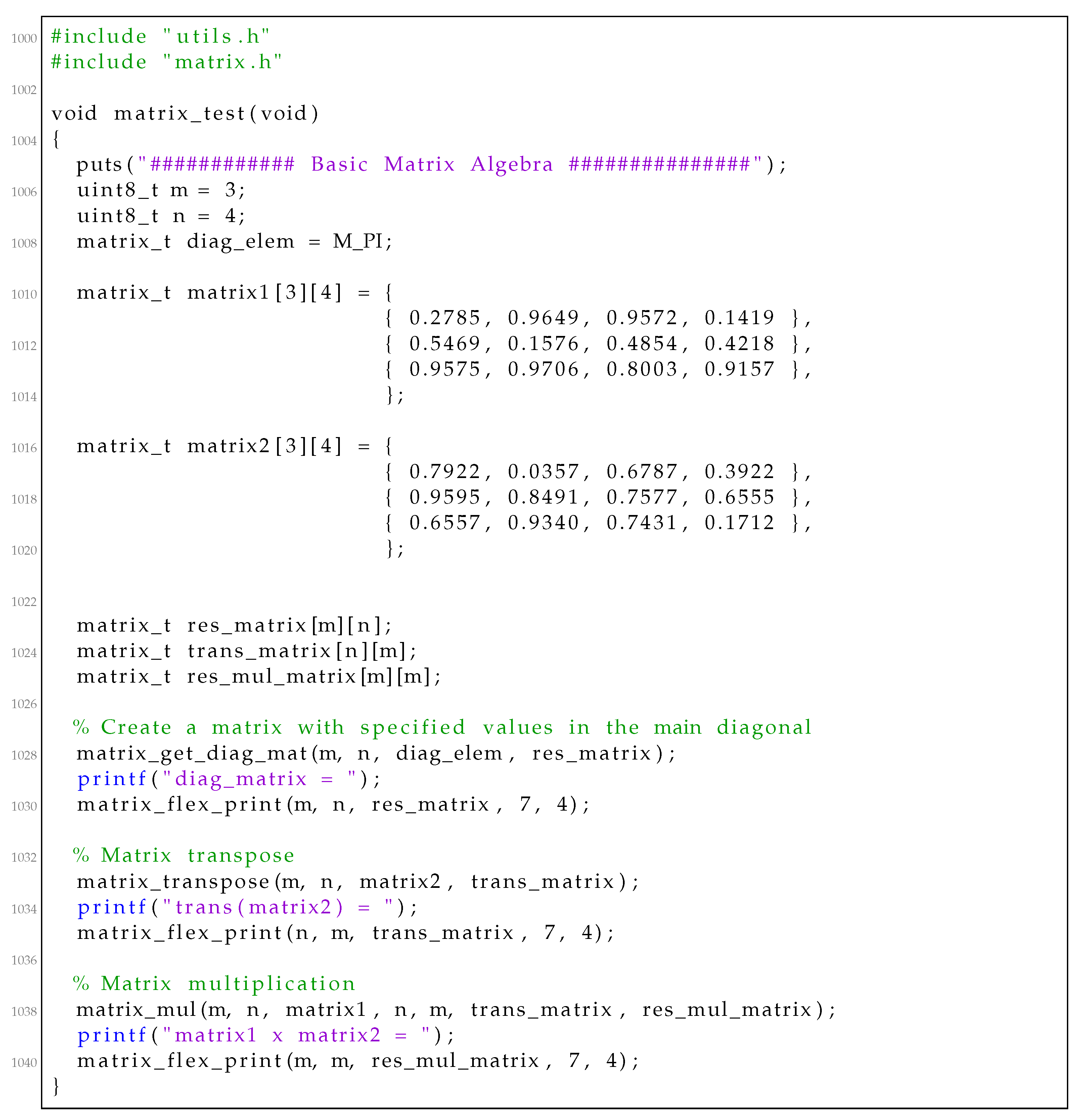

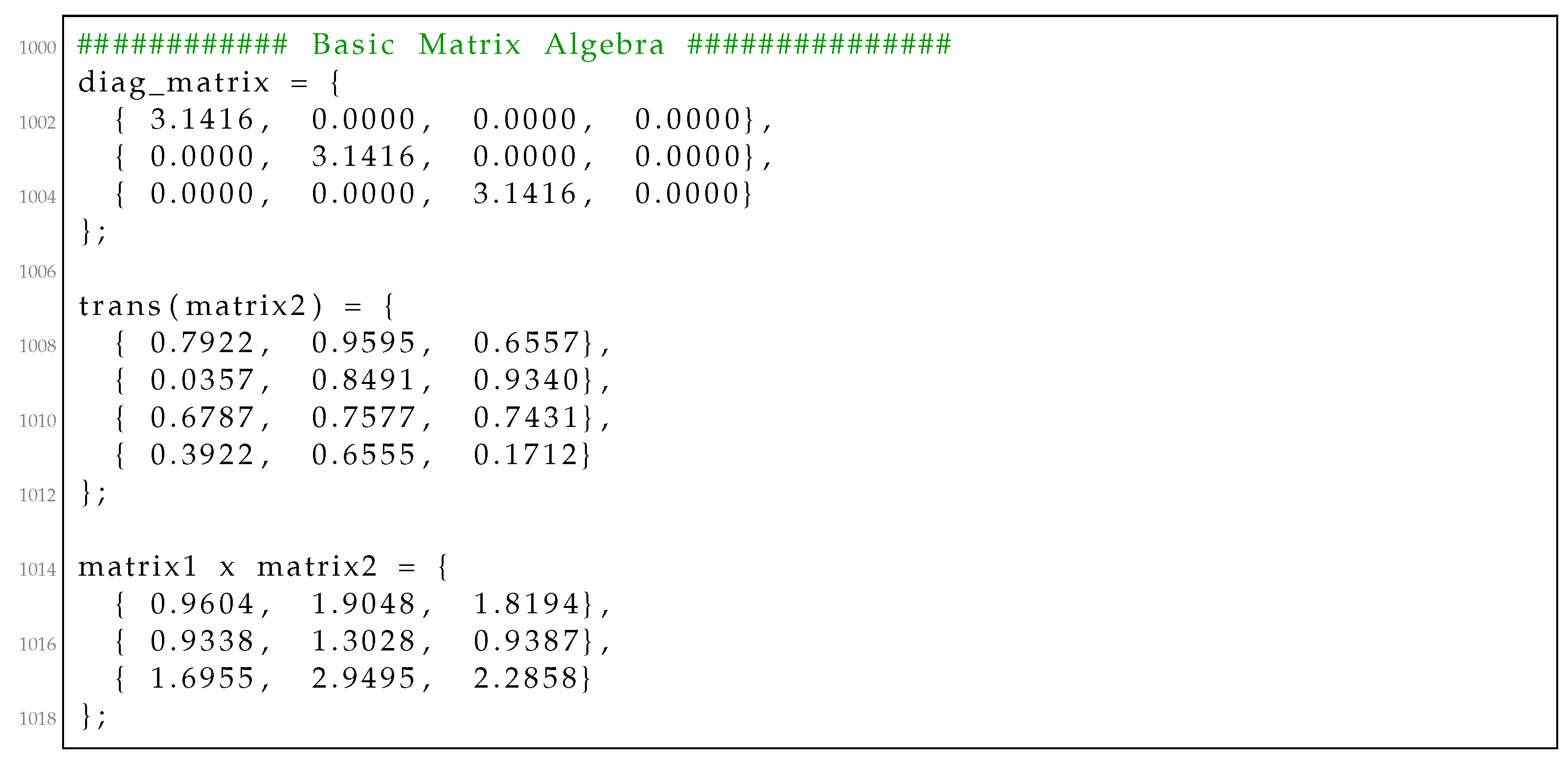

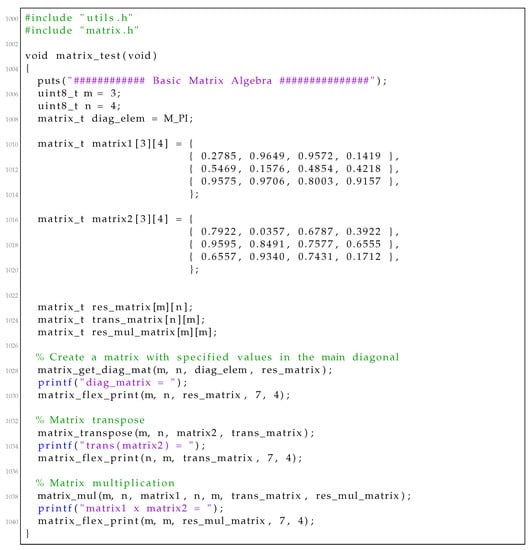

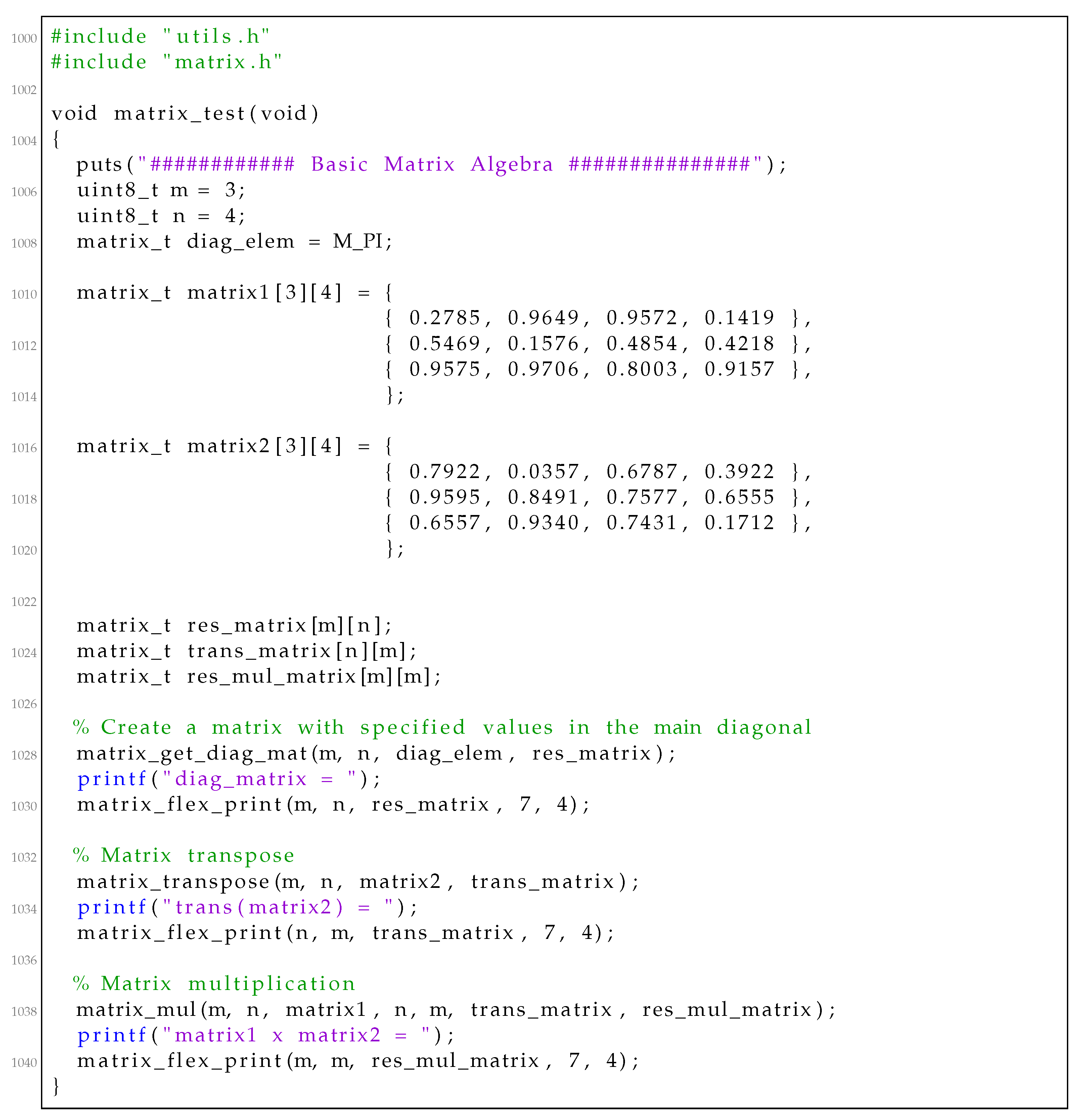

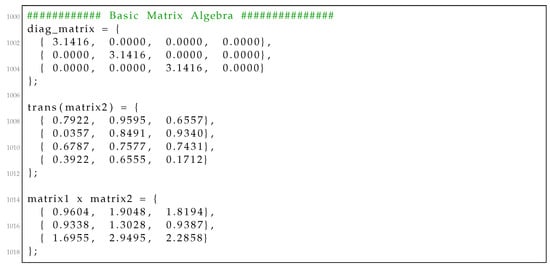

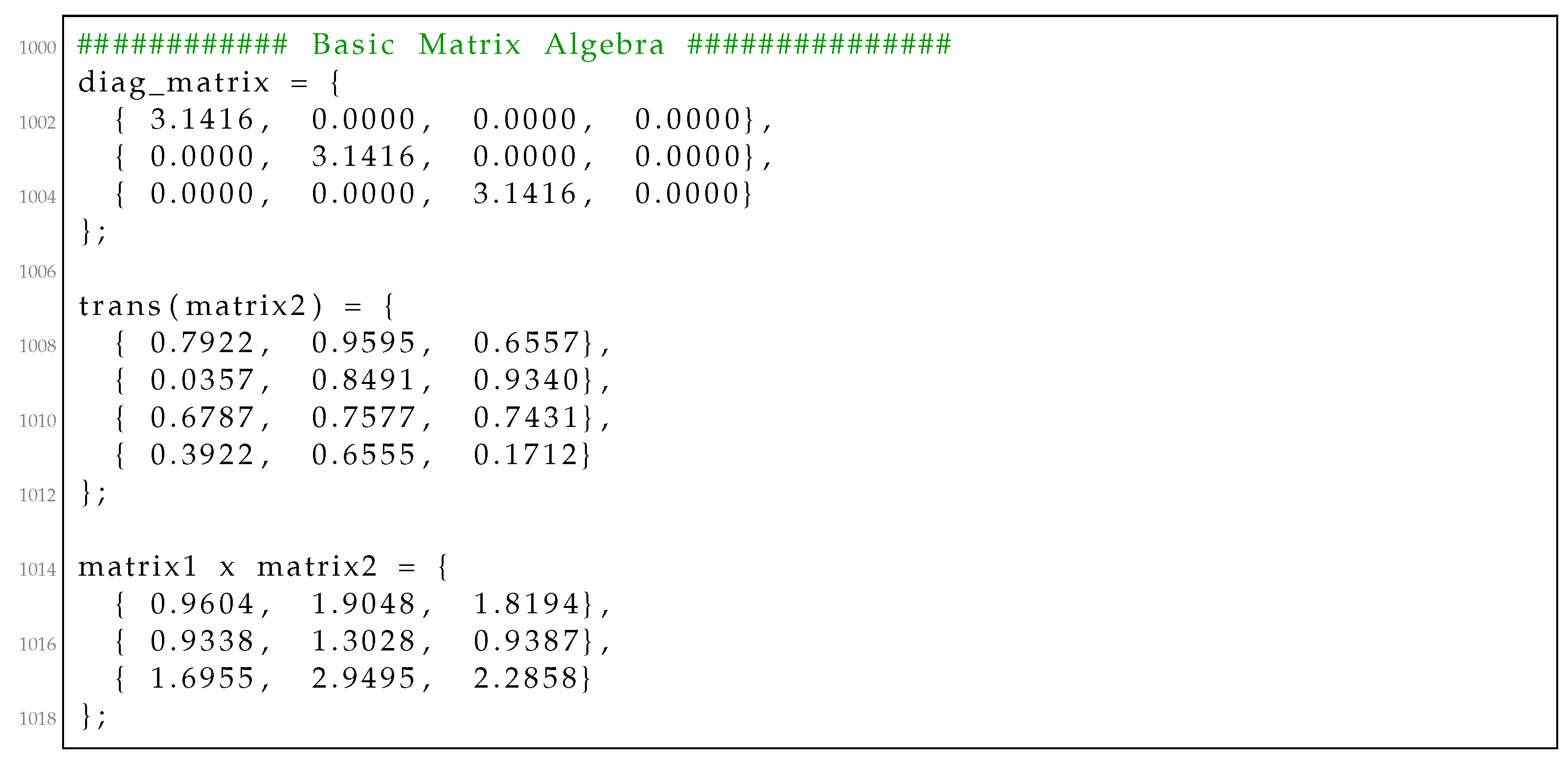

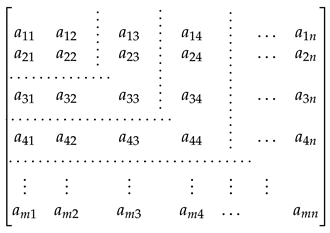

We present a simple example to demonstrate how to use RcdMathLib by defining two matrices in Listing 1. We create a matrix with specific values equal to on the main diagonal by calling the “matrix_get_diag_mat()” function. In the next step, we calculate the transpose of the second matrix by invoking the “matrix_get_transpose()” function. Finally, we multiply the first matrix by the transpose of the second matrix by executing the “matrix_mul()” function. In all three cases, the user must pass the dimensions of the matrices as well as a reference to the resulting matrix. The calculated results are presented in Listing 2.

Listing 1.

Example of basic matrix algebra.

Listing 2.

Outputs of the example of basic matrix algebra.
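
The three steps described above (diagonal matrix, transpose, and multiplication) can be sketched in plain C as follows. This sketch does not use the RcdMathLib API: the functions `sketch_diag`, `sketch_transpose`, and `sketch_mul`, their parameter order, and the row-major array layout are assumptions chosen for illustration, so the library's own signatures may differ. As in the description above, the caller passes the dimensions and a pointer to the result matrix.

```c
#include <stddef.h>

/* Fill an n-by-n row-major matrix with `value` on the main diagonal. */
static void sketch_diag(size_t n, double value, double *out)
{
    for (size_t i = 0; i < n; i++) {
        for (size_t j = 0; j < n; j++) {
            out[i * n + j] = (i == j) ? value : 0.0;
        }
    }
}

/* out (n-by-m) = transpose of in (m-by-n). */
static void sketch_transpose(size_t m, size_t n, const double *in,
                             double *out)
{
    for (size_t i = 0; i < m; i++) {
        for (size_t j = 0; j < n; j++) {
            out[j * m + i] = in[i * n + j];
        }
    }
}

/* out (m-by-p) = a (m-by-n) * b (n-by-p). */
static void sketch_mul(size_t m, size_t n, size_t p, const double *a,
                       const double *b, double *out)
{
    for (size_t i = 0; i < m; i++) {
        for (size_t k = 0; k < p; k++) {
            double sum = 0.0;
            for (size_t j = 0; j < n; j++) {
                sum += a[i * n + j] * b[j * p + k];
            }
            out[i * p + k] = sum;
        }
    }
}
```

For a 3 x 3 diagonal matrix D and a 2 x 3 matrix B, calling `sketch_transpose(2, 3, B, Bt)` followed by `sketch_mul(3, 3, 2, D, Bt, C)` yields the 3 x 2 product of D and the transpose of B.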

6. Evaluation of the Algorithms

We evaluated the linear as well as the nonlinear algebra modules on an STM32F407 Microcontroller Unit (MCU) based on the ARM Cortex-M4 core operating at 168 MHz and having a memory capacity of 192 KB RAM. In order to demonstrate the scalability of the algorithms implemented, we also evaluated the same algorithms on Raspberry Pi 3, which has more capacity (Quad Core GHz and 1 GB RAM) than the STM32F4-MCU.

6.1. Evaluation of the Linear Algebra Module

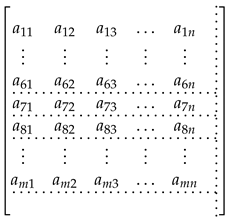

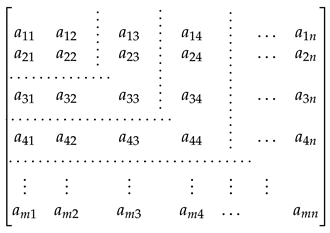

We evaluated the linear algebra module by using a () matrix A and a vector with uniformly distributed random numbers. The aim is to calculate and measure the mean execution time of the methods for solving linear equation systems. We evaluated the SVD-, QR-, and the LU-based algorithms for solving linear equation systems described in Section 3.1. The determined and the over-determined linear equation systems can be represented by the colon notation as follows:

where , and

where and $A(1:i, 1:n)$ is the sub-matrix of A with rows 1 up to i and columns 1 up to n. We use the same format as the corresponding colon notation in MATLAB. The determined and the over-determined linear equation systems are illustrated in the matrix form in Equations (39) and (40), respectively.

The horizontal and vertical dotted lines enclose the square and rectangular sub-matrices in Equations (39) and (40). We set the maximal row (m) and column (n) numbers to 10 and 5, respectively. We also measured the mean execution time of the matrix multiplication by using the same matrix A and a matrix B initialized with uniformly distributed random numbers. The matrix B has 5 rows and 10 columns, and the row number (m) of the matrix A varies from 1 to 10. The linear equation systems are solved by using three different decomposition algorithms: Golub–Kahan–Reinsch, Householder, and GE with pivoting. We measured the execution time of the matrix multiplications as well as of the methods for solving linear equation systems on the STM32F4 MCU and the Raspberry Pi 3.
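
As an illustration of the simplest of the three approaches, the following sketch solves a determined system by GE with partial (row) pivoting followed by back substitution. It is a generic textbook formulation under stated assumptions, not the library's implementation: the function name `ge_solve`, the fixed bound `MAX_N`, and the in-place modification of the inputs are choices made for this example.

```c
#include <assert.h>
#include <math.h>
#include <stddef.h>

#define MAX_N 5 /* maximal system size in this sketch */

/* Solve a * x = b for an n-by-n system using Gaussian elimination with
 * partial pivoting. a and b are overwritten.
 * Returns 0 on success and -1 if a zero pivot is encountered. */
static int ge_solve(size_t n, double a[MAX_N][MAX_N], double b[MAX_N],
                    double x[MAX_N])
{
    assert(n >= 1 && n <= MAX_N);

    for (size_t k = 0; k < n; k++) {
        /* Select the row with the largest absolute entry in column k. */
        size_t p = k;
        for (size_t i = k + 1; i < n; i++) {
            if (fabs(a[i][k]) > fabs(a[p][k])) {
                p = i;
            }
        }
        if (a[p][k] == 0.0) {
            return -1; /* singular matrix */
        }
        if (p != k) {
            for (size_t j = 0; j < n; j++) {
                double tmp = a[k][j];
                a[k][j] = a[p][j];
                a[p][j] = tmp;
            }
            double tmp = b[k];
            b[k] = b[p];
            b[p] = tmp;
        }
        /* Eliminate column k from the rows below the pivot row. */
        for (size_t i = k + 1; i < n; i++) {
            double f = a[i][k] / a[k][k];
            for (size_t j = k; j < n; j++) {
                a[i][j] -= f * a[k][j];
            }
            b[i] -= f * b[k];
        }
    }

    /* Back substitution on the upper triangular system. */
    for (size_t i = n; i-- > 0;) {
        double s = b[i];
        for (size_t j = i + 1; j < n; j++) {
            s -= a[i][j] * x[j];
        }
        x[i] = s / a[i][i];
    }
    return 0;
}
```

The over-determined case requires a least-squares formulation, for example via the Householder or Golub–Kahan–Reinsch decompositions, and is not covered by this sketch.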

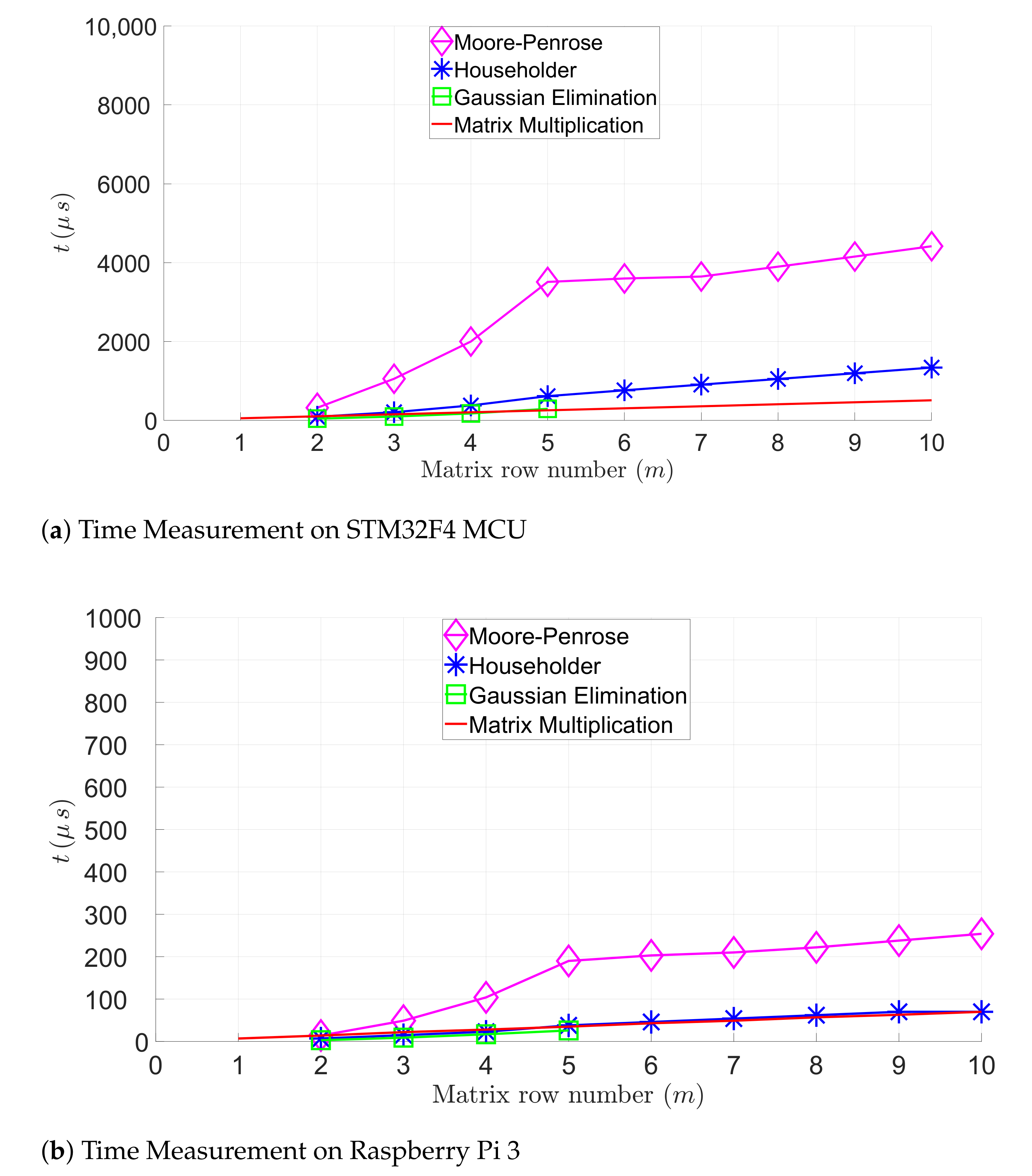

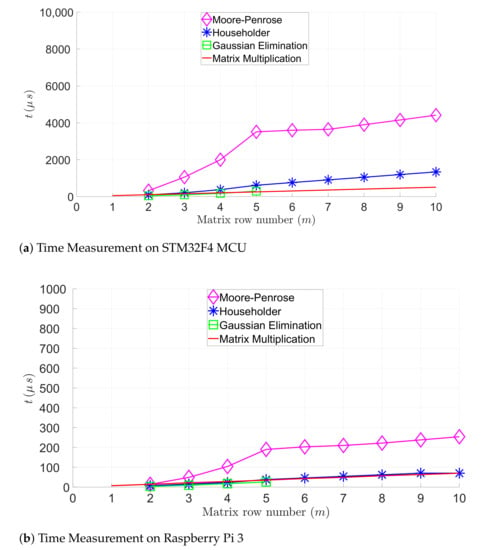

For solving linear equation systems, Figure 4 compares the execution time of the following algorithms: GE with pivoting, the Householder, and the Golub–Kahan–Reinsch. Furthermore, Figure 4 shows the execution time of the matrix multiplications. Figure 4a,b illustrate the execution time of these algorithms as a function of the row number of the matrix on the STM32F4 MCU and the Raspberry Pi 3. The Golub–Kahan–Reinsch-based algorithm has the largest execution time, since it is computationally more expensive than the other algorithms (see Table 2). Table 3 summarizes the mean execution time of the matrix evaluated by calculating an matrix or solving an linear equation system. These execution times are measured on the STM32F4 MCU and the Raspberry Pi 3. As expected, the Raspberry Pi 3 outperforms the STM32F4 MCU due to the limited computing capacity of the latter. However, the execution time for finding a solution with the Golub–Kahan–Reinsch algorithm remains in the microsecond range on the STM32F4 MCU, which would be sufficient for many IoT applications.

Figure 4.

Computing time evaluation of matrix multiplications and linear equation systems.

Table 2.

Complexity of the algorithms evaluated.

Table 3.

Mean execution time of computing or solving an linear equation system.

6.2. Evaluation of the Non-Linear Algebra Module

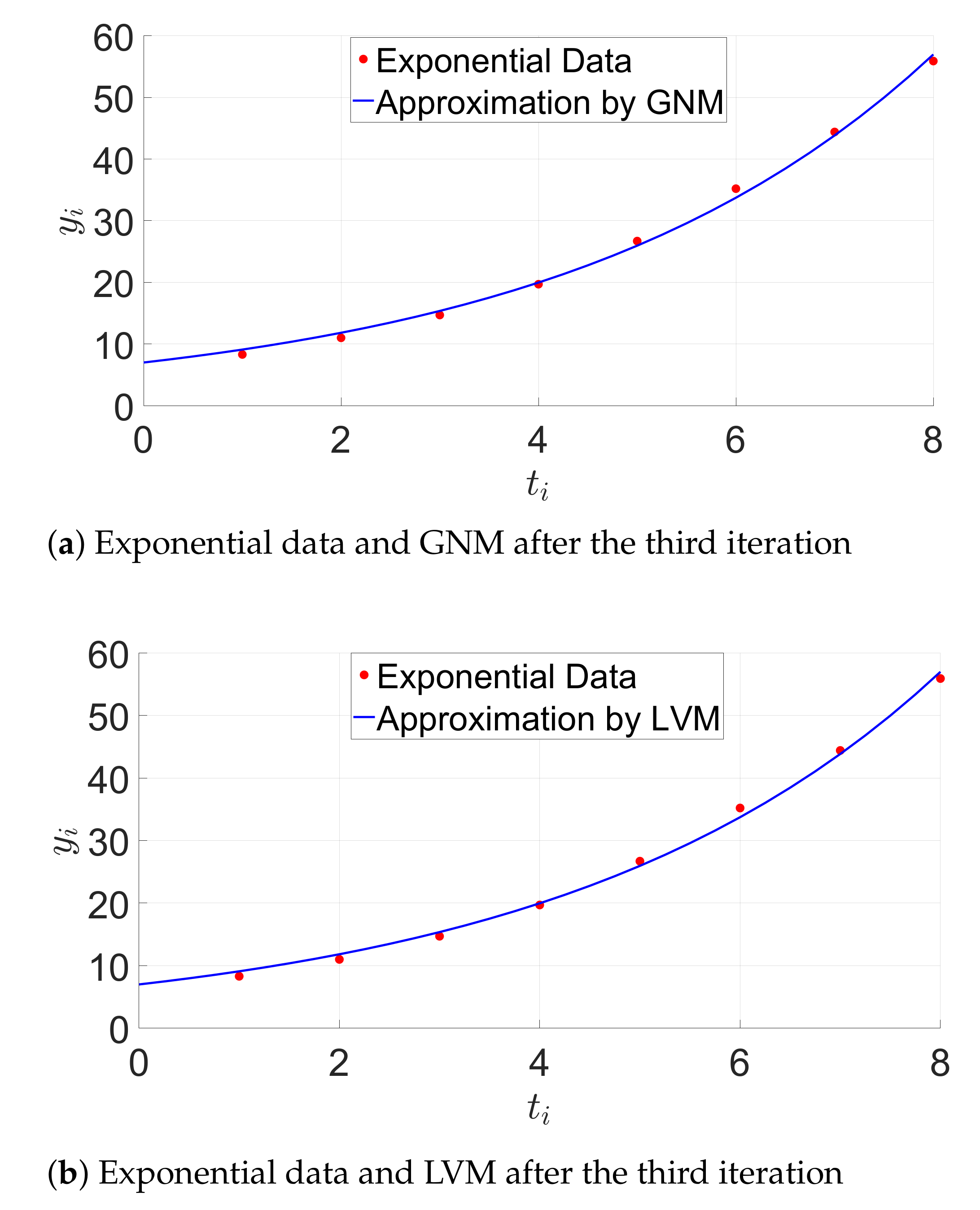

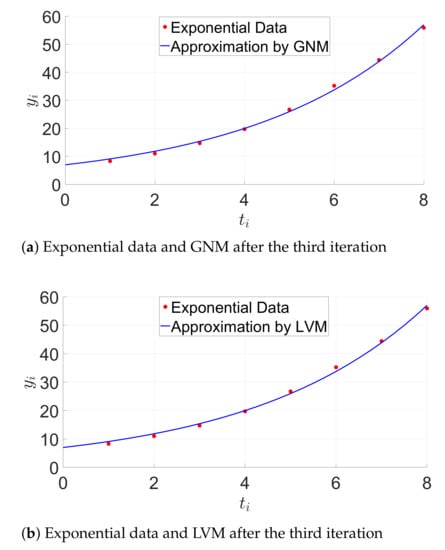

The nonlinear algebra module is evaluated by using exponential and sinusoidal data [64]. The least-squares optimization problems are solved by using the modified GN and LVM methods as described in Sections 3.2.1 and 3.2.2.

6.2.1. Evaluation with Exponential Data

Given the model function that is equal to:

where and is the initial guess. The data set is , whereby is equal to and is equal to .

The aim is to find the parameters () that most accurately match the model function by minimizing the sum of squares of the error function . The function computes the residual values and is equal to:

We introduce the error function vector :

The Jacobian matrix is equal to:

The initial squared residual is equal to . We obtain the solution by using the GN algorithm after three iterations. The corresponding squared residual is equal to , which indicates the improvement of the model. We obtain the solution by using the LVM algorithm after three iterations. The LVM algorithm shows nearly the same behavior as the modified GN method. This is confirmed by Figure 5.

Figure 5.

Exponential model approximation. GNM, Gauss–Newton Method. LVM, Levenberg–Marquardt Method.

Table 4 summarizes the average time per iteration required by the GNM and LVM algorithms on the STM32F4 MCU and the Raspberry Pi 3.

Table 4.

Mean execution time of the Gauss–Newton and Levenberg–Marquardt methods (per iteration) using exponential data.

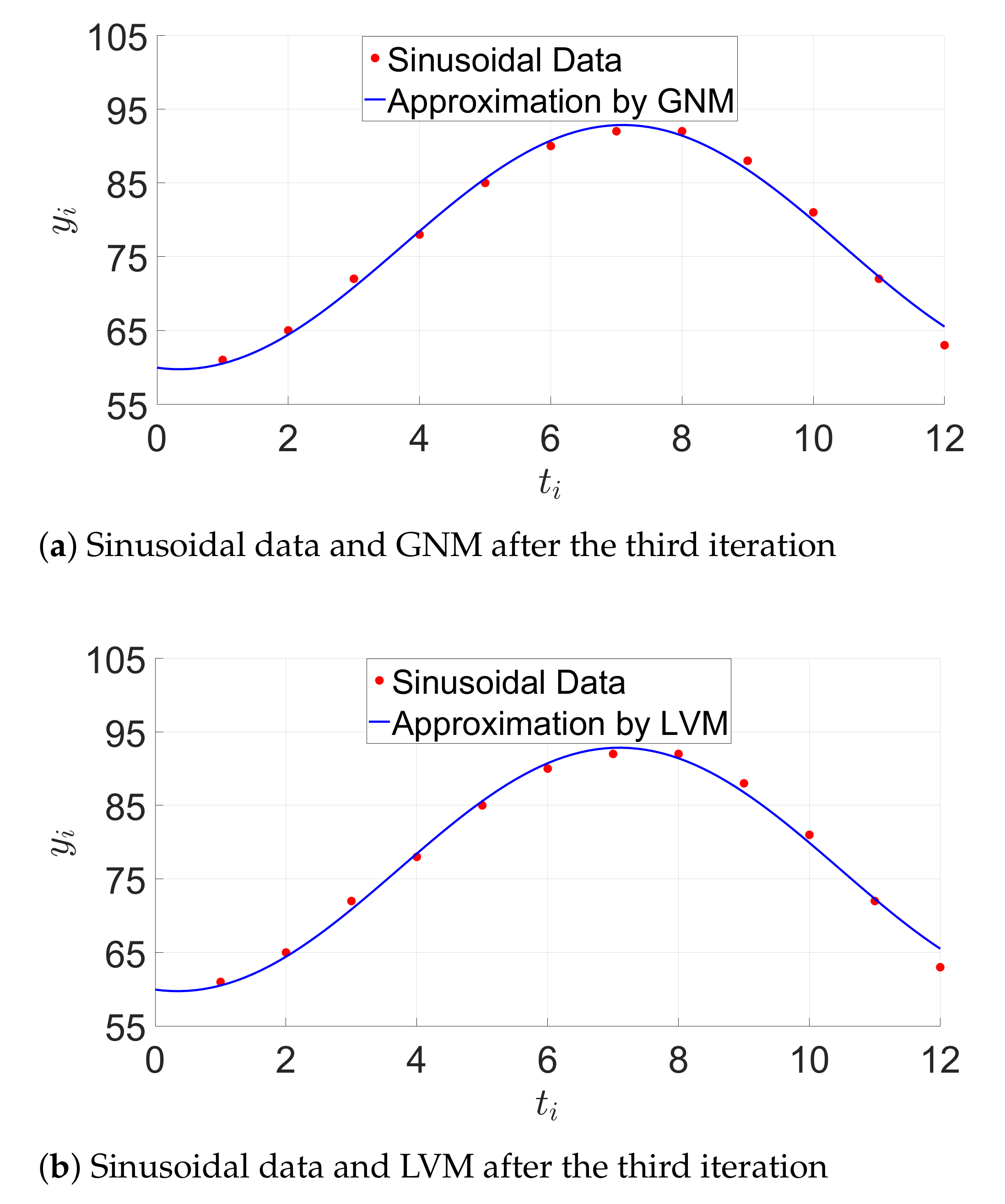

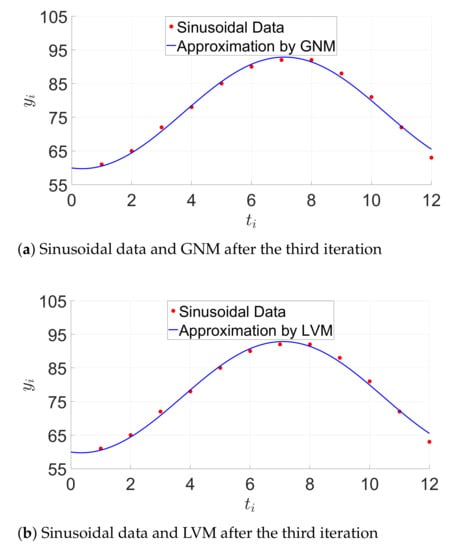

6.2.2. Evaluation with Sinusoidal Data

The model function is:

whereby, and is the initial guess. The set of data points is , where is equal to and is equal to . The error function is ; therefore, the error function vector is:

Thus, the Jacobian matrix is calculated using the partial derivatives in Equation (44) and is equal to:

The initial squared residual is equal to . After one iteration (), the LVM algorithm is slightly more efficient than the GN method (). Both algorithms show the same behavior after two iterations. Figure 6 shows the sinusoidal model after three iterations by using the GN and LVM algorithms.

Figure 6.

Sinusoidal model approximation. GNM, Gauss–Newton Method. LVM, Levenberg–Marquardt Method.

Table 5 summarizes the average time per iteration required by the GNM and LVM approaches on the STM32F4 MCU and the Raspberry Pi 3.

Table 5.

Mean execution time of the Gauss–Newton and Levenberg–Marquardt methods (per iteration) using sinusoidal data.

7. Conclusions and Outlook

We presented an open source library for linear and nonlinear algebra as well as an application module for localization that is suitable for resource-limited, mobile, and embedded systems. The library supports solving linear equations and performing matrix operations such as matrix decomposition and the calculation of the inverse or the rank of a matrix. It also provides utility algorithms such as sorting and filtering. Furthermore, the software enables solving nonlinear problems such as curve fitting or nonlinear equations. RcdMathLib allows for the localization of mobile devices by using localization algorithms such as trilateration. The localization can be further refined by using an adaptive optimization algorithm based on the SVD method. The localization software module facilitates the localization of the mobile device in NLoS scenarios by using a multipath distance detection and mitigation algorithm. RcdMathLib can serve as a basis for artificial intelligence techniques for mobile technologies with IoT, or as a tool in research and industry. Therefore, we intend to extend RcdMathLib with machine learning or digital signal processing algorithms. We also aim to extend the package with an additional algorithm for solving nonlinear problems called the Landweber method [65].

Author Contributions

Z.K. conceived the research, designed the software architecture, and implemented the software components of the RcdMathLib. Furthermore, he integrated the software components of the RcdMathLib in RIOT-OS, performed the experiments and data evaluation, and wrote all parts of the article. A.N. offered valuable tests and evaluation of the algorithms. He helped through the design of the localization algorithms. He wrote the “related works” part and contributed to the writing of the article part “Library Architecture and Description”. M.G. gave valuable suggestions and offered plenty of comments and useful discussions to the paper. J.S. gave valuable suggestions to the paper and reviewed the article. C.M. gave suggestions to the article and reviewed it. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The source code can be downloaded from the GitLab repository given in Reference [63].

Acknowledgments

We acknowledge the support of the Deutsche Forschungsgemeinschaft (DFG–German Research Foundation) and the Open Access Publishing Fund of Technical University of Darmstadt. The authors thank Naouar Guerchali for the support in the implementation and evaluation of the Levenberg–Marquardt algorithm. The authors thank Nikolas Voth for the final grammatical corrections of the paper.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Ceruzzi, P.E. The Early Computers of Konrad Zuse, 1935 to 1945. Ann. Hist. Comput. 1981, 3, 241–262.

- Ceruzzi, P.E. A History of Modern Computing, 2nd ed.; MIT Press: Cambridge, MA, USA, 2003.

- Lee, J.A.N. Computer Pioneers; IEEE Computer Society Press: Los Alamitos, CA, USA, 1995.

- Partlett, B. Handbook for Automatic Computation, Vol. II, Linear Algebra (J. H. Wilkinson and C. Reinsch). SIAM Rev. 1972, 14, 658–661.

- Dongarra, J.J.; Moler, C.B.; Bunch, J.R.; Stewart, G.W. LINPACK Users’ Guide; Society for Industrial and Applied Mathematics: Philadelphia, PA, USA, 1979.

- Garbow, B.S.; Boyle, J.M.; Dongarra, J.J.; Moler, C.B. Matrix Eigensystem Routines—EISPACK Guide Extension; Springer: Berlin/Heidelberg, Germany, 1977.

- Cleve Moler. A Brief History of MATLAB. In Technical Articles and Newsletters; MathWorks: Natick, MA, USA, 2019.

- Naveen, S.; Kounte, M.R. Key Technologies and challenges in IoT Edge Computing. In Proceedings of the 2019 Third International Conference on I-SMAC (IoT in Social, Mobile, Analytics and Cloud) (I-SMAC), Palladam, India, 12–14 December 2019; pp. 61–65.

- Kasmi, Z. Home of the RcdMathLib (Mathematical Library for Resource-Constrained Devices). 2021. Available online: https://git.imp.fu-berlin.de/zkasmi/RcdMathLib/-/wikis/Home (accessed on 25 February 2021).

- Strang, G. Linear Algebra and Its Applications, 4th ed.; Thomson, Brooks/Cole: Belmont, CA, USA, 2006.

- Schanze, T. Compression and Noise Reduction of Biomedical Signals by Singular Value Decomposition. IFAC-PapersOnLine 2018, 51, 361–366.

- Kasmi, Z. Open Platform Architecture for Decentralized Localization Systems Based on Resource-Constrained Devices. Ph.D. Thesis, Freie Universität Berlin, Department of Mathematics and Computer Science, Berlin, Germany, 2019.

- Madsen, K.; Nielsen, H.B.; Tingleff, O. Methods for Non-Linear Least Squares Problems, 2nd ed.; Technical University of Denmark: Lyngbyn, Denmark, 2004.

- Rose, J.A.; Tong, J.R.; Allain, D.J.; Mitchell, C.N. The Use of Ionospheric Tomography and Elevation Masks to Reduce the Overall Error in Single-Frequency GPS Timing Applications. Adv. Space Res. 2011, 47, 276–288.

- Guckenheimer, J. Numerical Computation in the Information Age. In SIAM NEWS; Society for Industrial and Applied Mathematics: Philadelphia, PA, USA, 1988.

- Arm Limited. Arm Performance Libraries. 2019. Available online: https://developer.arm.com/tools-and-software/server-and-hpc/arm-architecture-tools/arm-performance-libraries (accessed on 25 February 2021).

- Sanchez, J.; Canton, M.P. Microcontrollers: High-Performance Systems and Programming; CRC Press: Boca Raton, FL, USA, 2018.

- Texas Instruments Incorporated. MSP430 IQmathLib User’s Guide; Texas Instruments Incorporated: Dallas, TX, USA, 2015.

- Aimonen, P. Cross Platform Fixed Point Maths Library 2012–2019. Available online: https://github.com/PetteriAimonen/libfixmath (accessed on 25 February 2021).

- Nicolosi, A. A Simple, Tiny and Efficient BLAS Library, Designed for PC and Microcontrollers. 2018. Available online: https://github.com/alenic/microBLAS (accessed on 25 February 2021).

- Matlack, C. Minimal Linear Algebra Library. 2018. Available online: https://github.com/eecharlie/MatrixMath (accessed on 25 February 2021).

- Stewart, T. A Library for Representing Matrices and Doing Matrix Math on Arduino. 2018. Available online: https://github.com/tomstewart89/BasicLinearAlgebra (accessed on 25 February 2021).

- Oliphant, T.E. Guide to NumPy, 2nd ed.; CreateSpace Independent Publishing Platform: North Charleston, SC, USA, 2015.

- Nunez-Iglesias, J.; van der Walt, S.; Dashnow, H. Elegant SciPy: The Art of Scientific Python, 1st ed.; O’Reilly Media, Inc.: Sebastopol, CA, USA, 2017.

- Bell, C. MicroPython for the Internet of Things: A Beginner’s Guide to Programming with Python on Microcontrollers; Apress: Berkeley, CA, USA, 2017.

- Kurniawan, A. CircuitPython Development Workshop; PE Press: Berlin, Germany, 2018.

- Björck, A. Numerical Methods for Least Squares Problems; Society for Industrial and Applied Mathematics: Philadelphia, PA, USA, 1996.

- Golub, G.H.; Van Loan, C.F. Matrix Computations, 4th ed.; Johns Hopkins University Press: Baltimore, MD, USA, 2013.

- Barata, J.; Hussein, M. The Moore-Penrose Pseudoinverse. A Tutorial Review of the Theory. Braz. J. Phys. 2011, 42, 146–165.

- Kaw, A. Introduction to Matrix Algebra; University of South Florida: Tampa, FL, USA, 2008.

- Sengupta, S.; Korobkin, C.P. C++: Object-Oriented Data Structures; Springer: New York, NY, USA, 2012.

- Bailey, R. The Box-Muller Method and the T-distribution; Department of Economics Discussion Paper; University of Birmingham, Department of Economics: Birmingham, UK, 1992.

- Allgower, E.; Georg, K.; Research, U.; Foundation, N. Computational Solution of Nonlinear Systems of Equations; Lectures in Applied Mathematics; American Mathematical Society: Providence, RI, USA, 1990.

- Nocedal, J.; Wright, S. Numerical Optimization; Springer: New York, NY, USA, 2006.

- Chen, Y.Y.; Gao, Y. Two New Levenberg-Marquardt Methods for Non-smooth Nonlinear Complementarity Problems. Sci. Asia 2014, 40, 89.

- Song, L.; Gao, Y. On The Local Convergence of a Levenberg-Marquardt Method for Non-smooth Nonlinear Complementarity Problems. Sci. Asia 2017, 43, 377.

- Du, S.Q. Some Global Convergence Properties of the Levenberg-Marquardt Methods with Line Search. J. Appl. Math. Inform. 2013, 31, 373–378.

- Dahmen, W.; Reusken, A. Numerik für Ingenieure und Naturwissenschaftler; Springer: Berlin/Heidelberg, Germany, 2008.

- Guerchali, N. Untersuchung des Levenberg-Marquardt-Algorithmus zur Indoor-Lokalisierung für den STMF407-Mikrocontroller. Bachelor’s Thesis, Fachhochschule Aachen, Aachen, Germany, 2017.

- Ramnath, S.; Javali, A.; Narang, B.; Mishra, P.; Routray, S.K. IoT-Based Localization and Tracking. In Proceedings of the 2017 International Conference on IoT and Application (ICIOT), Nagapattinam, India, 19–20 May 2017; pp. 1–4.

- Reed, J.H. An Introduction to Ultra Wideband Communication Systems, 1st ed.; Prentice Hall Press: Upper Saddle River, NJ, USA, 2005.

- AeroScout Corporation. Available online: http://www.aeroscout.com (accessed on 25 February 2021).

- Holm, S. Ultrasound Positioning Based on Time-of-Flight and Signal Strength. In Proceedings of the 2012 International Conference on Indoor Positioning and Indoor Navigation (IPIN), Sydney, Australia, 13–15 November 2012; pp. 1–6.

- Pasku, V.; De Angelis, A.; De Angelis, G.; Arumugam, D.D.; Dionigi, M.; Carbone, P.; Moschitta, A.; Ricketts, D.S. Magnetic Field-Based Positioning Systems. IEEE Commun. Surv. Tutor. 2017, 19, 2003–2017.

- Chawathe, S.S. Low-Latency Indoor Localization Using Bluetooth Beacons. In Proceedings of the 2009 12th International IEEE Conference on Intelligent Transportation Systems, St. Louis, MO, USA, 4–7 October 2009; pp. 1–7.

- Norrdine, A. An Algebraic Solution to the Multilateration Problem. In Proceedings of the Third International Conference on Indoor Positioning and Indoor Navigation (IPIN2012), Sydney, Australia, 13–15 November 2012; pp. 1–5.

- Kasmi, Z.; Norrdine, A.; Blankenbach, J. Platform Architecture for Decentralized Positioning Systems. Sensors 2017, 17, 957.

- Kasmi, Z.; Guerchali, N.; Norrdine, A.; Schiller, J.H. Algorithms and Position Optimization for a Decentralized Localization Platform Based on Resource-Constrained Devices. IEEE Trans. Mob. Comput. 2019, 18, 1731–1744.

- Prigge, E.A.; How, J.P. Signal architecture for a distributed magnetic local positioning system. IEEE Sens. J. 2004, 4, 864–873.

- Prieto, J.C.; Croux, C.; Jiménez, A.R. RoPEUS: A New Robust Algorithm for Static Positioning in Ultrasonic Systems. Sensors 2009, 9, 4211.

- Van Heesch, D. Doxygen: Generate Documentation from Source Code. Available online: https://www.doxygen.nl/index.html (accessed on 25 February 2021).

- Datta, B.N. Numerical Linear Algebra and Applications, 2nd ed.; Society for Industrial and Applied Mathematics: Philadelphia, PA, USA, 2010.

- Trefethen, L.; Schreiber, R. Average-Case Stability of Gaussian Elimination. SIAM J. Matrix Anal. Appl. 1990, 11.

- Trefethen, L.; Bau, D. Numerical Linear Algebra; Other Titles in Applied Mathematics; Society for Industrial and Applied Mathematics: Philadelphia, PA, USA, 1997.

- Stewart, G.W. Matrix Algorithms: Volume 1, Basic Decompositions; Matrix Algorithms; Society for Industrial and Applied Mathematics: Philadelphia, PA, USA, 1998.

- Thisted, R.A. Elements of Statistical Computing: Numerical Computation; CRC Press/Taylor & Francis Group: Boca Raton, FL, USA, 1988.

- Gander, W.; Gander, M.J.; Kwok, F. Scientific Computing—An Introduction Using Maple and MATLAB; Springer Publishing Company, Incorporated: New York, NY, USA, 2014.

- Golub, G.H.; Reinsch, C. Singular Value Decomposition and Least Squares Solutions. Numer. Math. 1970, 14, 403–420.

- Free Software Foundation, Inc. GNU Lesser General Public License, Version 2.1. 2021. Available online: https://www.gnu.org/licenses/old-licenses/lgpl-2.1.en.html (accessed on 25 February 2021).

- Baccelli, E.; Hahm, O.; Günes, M.; Wählisch, M.; Schmidt, T.C. RIOT OS: Towards an OS for the Internet of Things. In Proceedings of the 2013 IEEE Conference on Computer Communications Workshops (INFOCOM WKSHPS), Turin, Italy, 14–19 April 2013; pp. 79–80.

- Hope, M. Linaro Toolchain Binaries. 2020. Available online: https://launchpad.net/linaro-toolchain-binaries (accessed on 25 February 2021).

- Team, O. OpenOCD—Open On-Chip Debugger Reference Manual; Samurai Media Limited: London, UK, 2015.

- Kasmi, Z. GitLab of the RcdMathLib (Mathematical Library for Resource-Constrained Devices). 2021. Available online: https://git.imp.fu-berlin.de/zkasmi/RcdMathLib (accessed on 25 February 2021).

- Croeze, A.; Pittman, L.; Reynolds, W. Solving Nonlinear Least-Squares Problems with the Gauss–Newton and Levenberg–Marquardt Methods; Technical Report; Department of Mathematics, Louisiana State University: Baton Rouge, LA, USA, 2012.

- Zhao, X.L.; Huang, T.Z.; Gu, X.M.; Deng, L.J. Vector Extrapolation based Landweber Method for Discrete ill-posed Problems. Math. Probl. Eng. 2017, 2017, 1375716.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).