Monophonic and Polyphonic Wheezing Classification Based on Constrained Low-Rank Non-Negative Matrix Factorization

Abstract

1. Introduction

2. Theoretical Background

2.1. Non-Negative Matrix Factorization

2.2. Spectral Sparseness

2.3. Temporal/Spectral Smoothness

3. Proposed Method

3.1. Time-Frequency Signal Representation

3.2. Stage I: Constrained Low-Rank Non-Negative Matrix Factorization

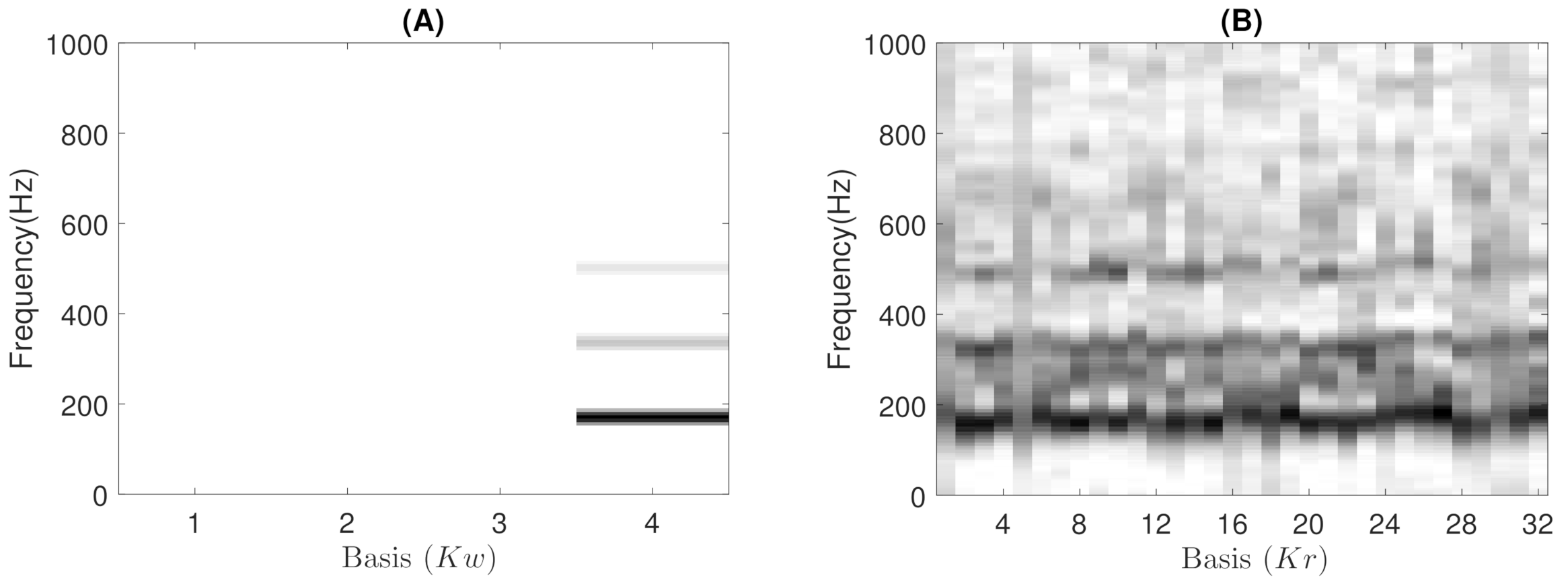

- Low-rank: The number of wheezing components should be much smaller than the number of normal respiratory components. This assumption concentrates the wheezing frequency components in as few bases as possible for their subsequent analysis, while normal respiratory sounds are modeled using a larger number of components. Experimental results showed that the best classification performance was obtained when and . In particular, when , the proposed CL-RNMF approach tends to converge very quickly at the expense of losing relevant wheezing content. On the other hand, when , the spectral wheezing patterns tend to be split into different components of the wheezing basis matrix.

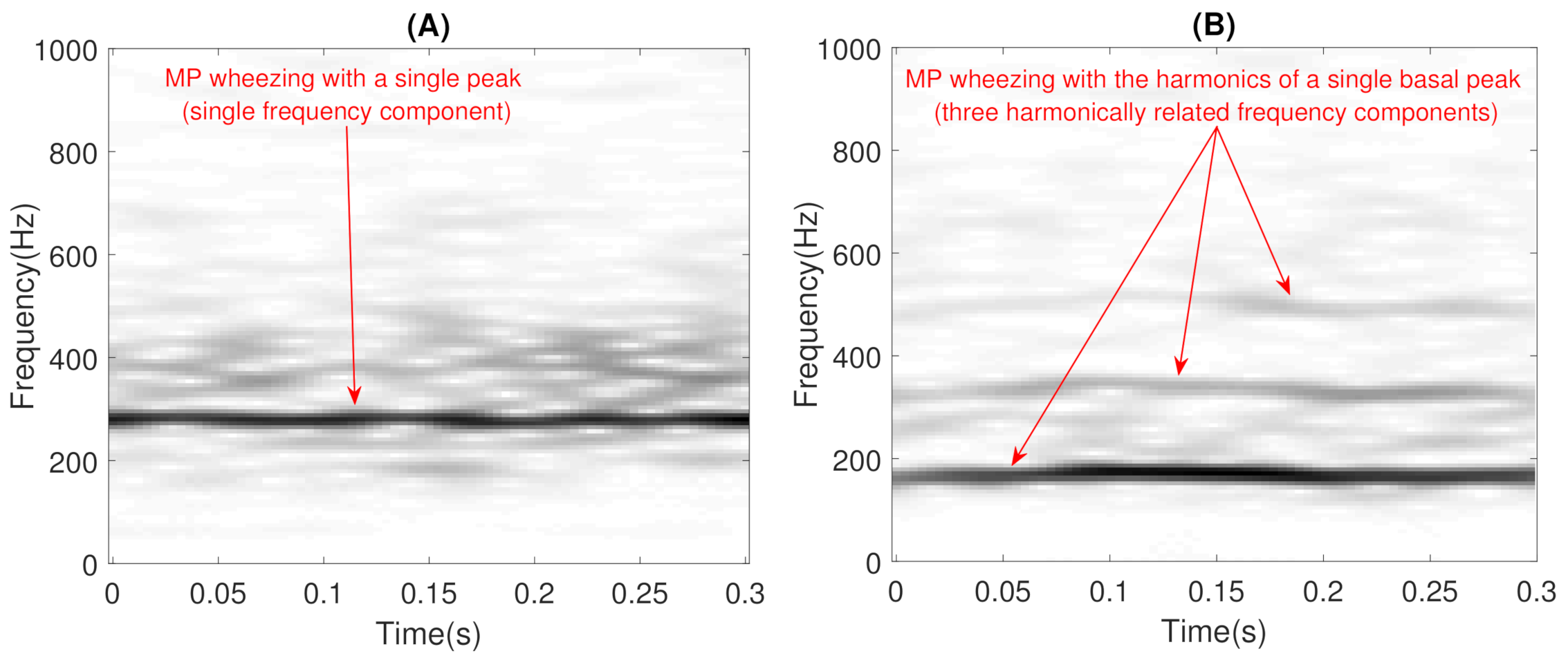

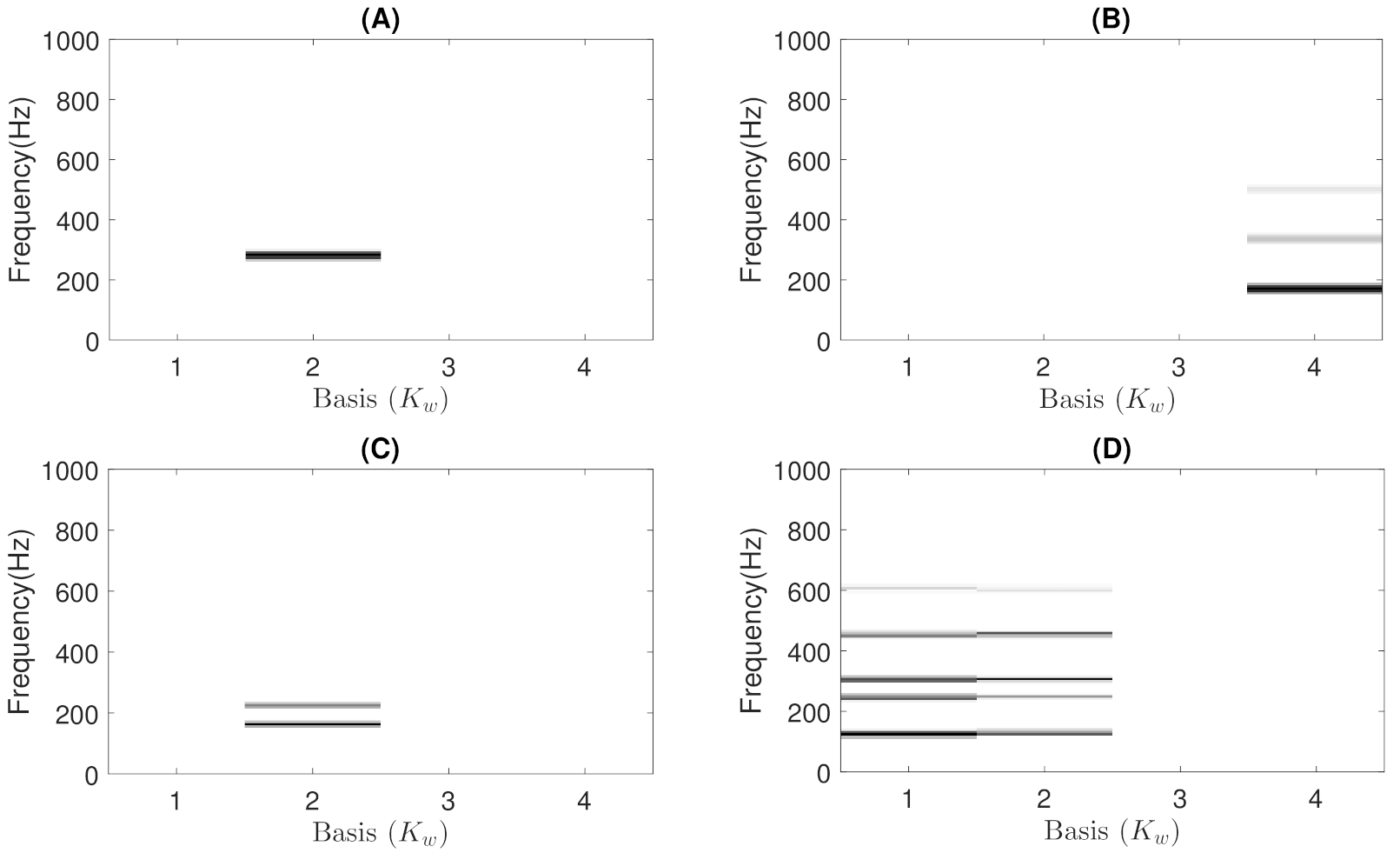

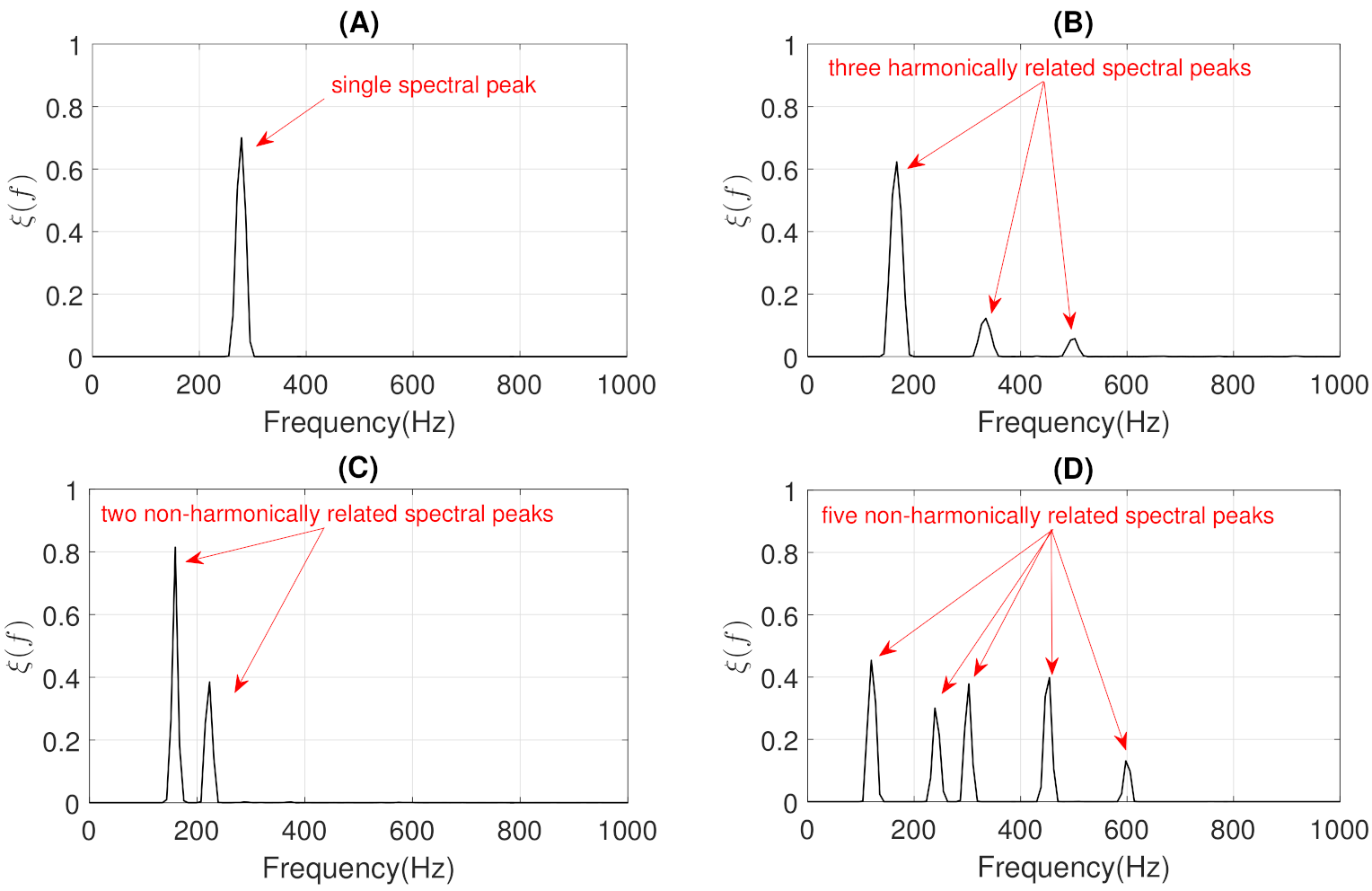

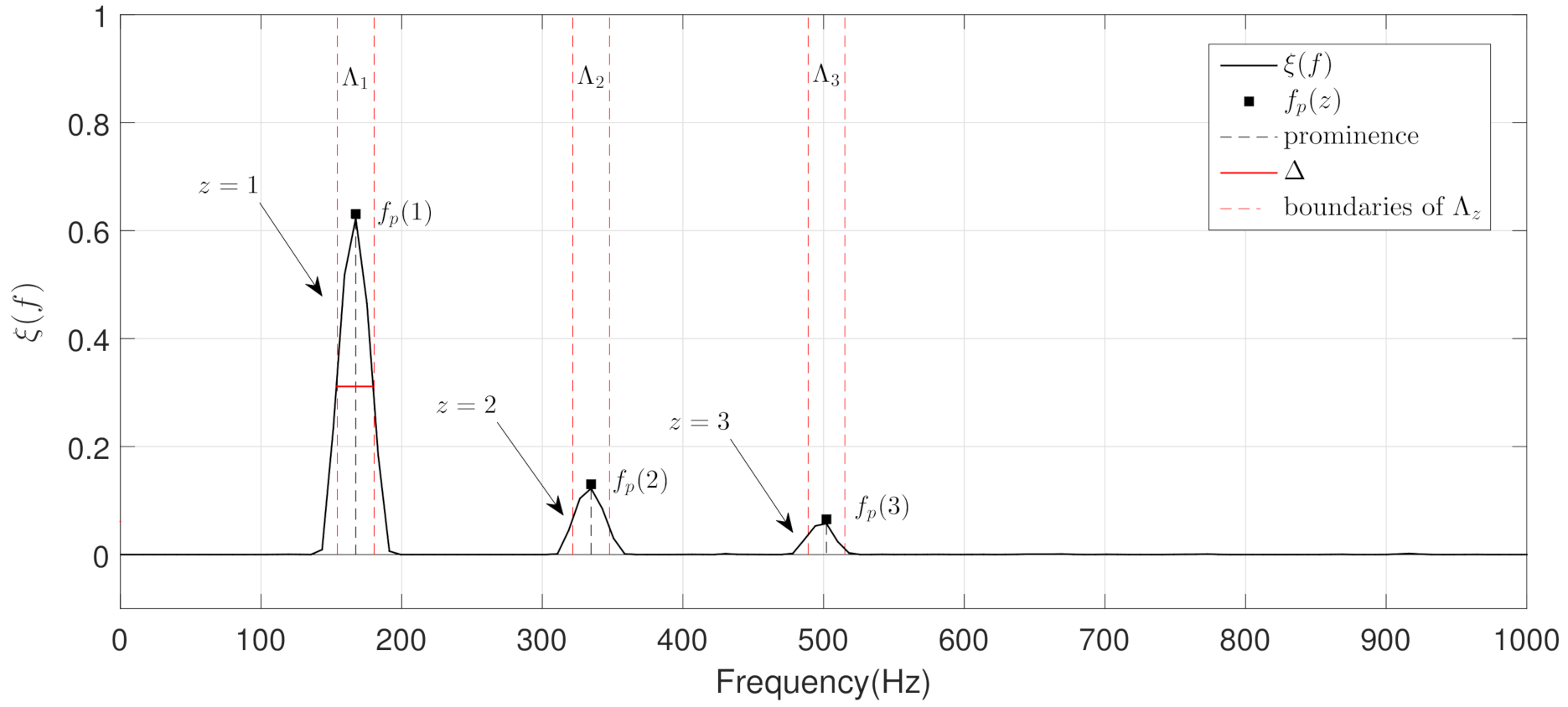

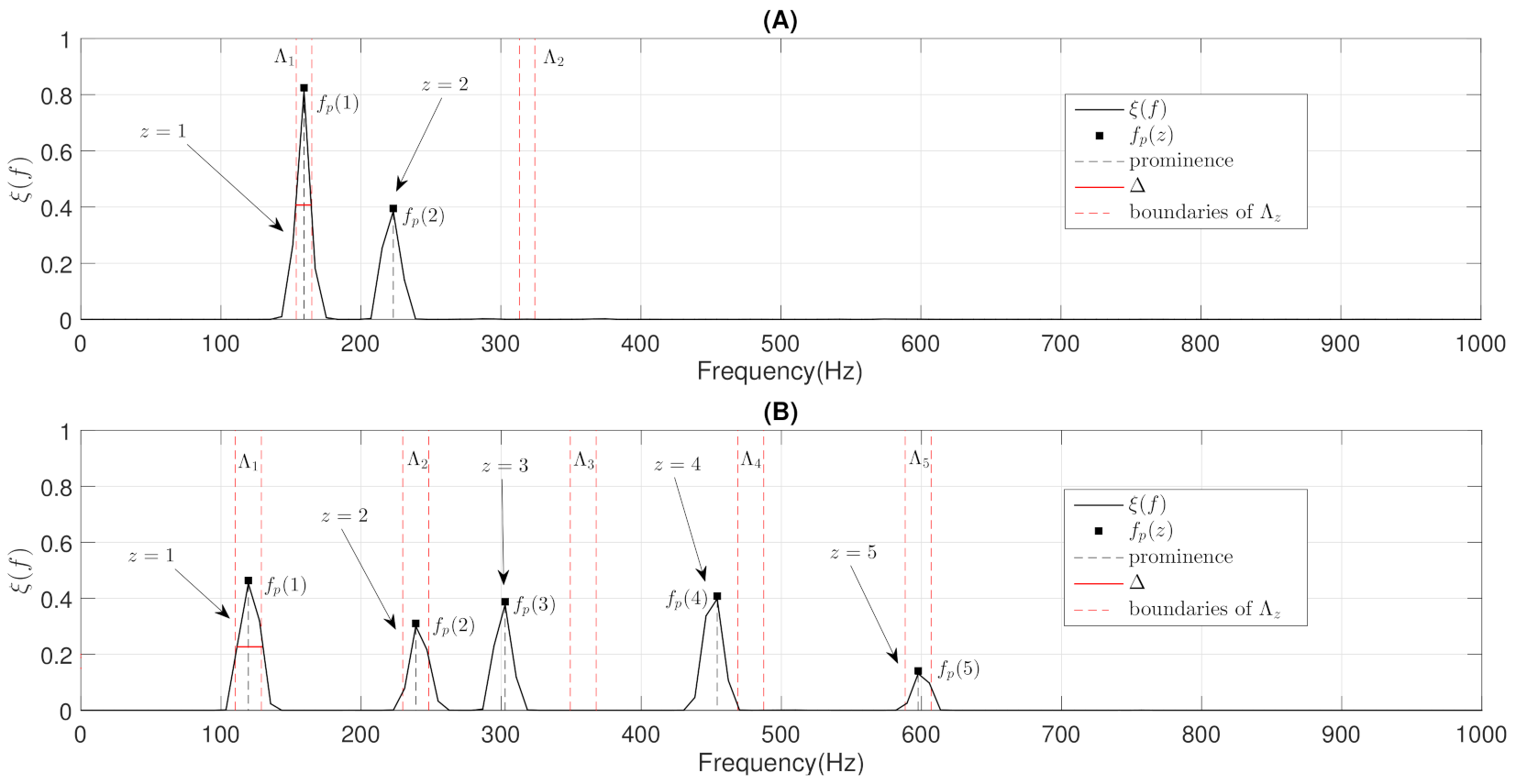

- Constraints: These characterize wheezing sounds and normal respiratory sounds by imposing opposite restrictions on each type of sound. The use of constraints allows the spectral wheezing patterns to be isolated from the spectral patterns of normal respiratory sounds. Therefore, in order to find an NMF decomposition that better reflects the spectro-temporal features of wheezing and normal respiratory sounds as they are observed in the real world, we propose incorporating sparseness and smoothness into the NMF decomposition process. As shown in Figure 1 and Figure 2, wheezing sounds can be considered sparse in frequency because both MP and PP wheezing are characterized by one or more narrowband spectral peaks. Moreover, wheezing sounds can be considered smooth or continuous events in time, that is, their magnitude spectrogram varies slowly over time. In contrast, normal respiratory sounds can be considered smooth in frequency, that is, they can be modeled using wideband spectral patterns. Therefore, the wheezing basis matrix should contain wheezing spectral patterns composed of one or more narrowband spectral peaks, depending on the spectral complexity of each wheezing, and the respiratory basis matrix should be composed of a set of wideband spectral patterns that model the behavior of normal respiratory sounds.
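To illustrate why opposite constraints separate the two sound types, the following Python sketch scores a synthetic narrowband (wheeze-like) spectral pattern and a wideband (breath-like) one. It uses Hoyer's L1/L2 sparseness measure and a normalized sum of squared first differences as a roughness score; both are common choices assumed here for illustration and are not necessarily the sparseness and smoothness definitions of Sections 2.2 and 2.3. The 64-bin spectra are hypothetical.

```python
import math


def hoyer_sparseness(x):
    """Hoyer's sparseness: 1 for a single non-zero bin, 0 for a flat vector."""
    n = len(x)
    l1 = sum(abs(v) for v in x)
    l2 = math.sqrt(sum(v * v for v in x))
    if n < 2 or l2 == 0:
        return 0.0
    return (math.sqrt(n) - l1 / l2) / (math.sqrt(n) - 1)


def roughness(x):
    """Sum of squared first differences normalized by the vector energy.
    Small values indicate a smooth (slowly varying) pattern."""
    energy = sum(v * v for v in x)
    if energy == 0:
        return 0.0
    return sum((x[i] - x[i - 1]) ** 2 for i in range(1, len(x))) / energy


F = 64
narrowband = [0.0] * F
narrowband[20] = 1.0  # hypothetical single narrowband peak (wheeze-like)
wideband = [math.exp(-((f - 32) / 20.0) ** 2) for f in range(F)]  # broad bump (breath-like)

for name, spectrum in (("narrowband", narrowband), ("wideband", wideband)):
    print(name, round(hoyer_sparseness(spectrum), 3), round(roughness(spectrum), 4))
```

The narrowband pattern is maximally sparse but rough across frequency, while the wideband pattern is smooth but far from sparse; this is the contrast that the sparseness and smoothness constraints exploit.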

| Algorithm 1: CL-RNMF. |
|---|
| Require: , , , , , , and M. |
| 1: Compute the normalized magnitude spectrogram using Equation (11). |
| 2: Initialize the wheezing and respiratory basis matrices and the wheezing and respiratory activations matrices with random non-negative values. |
| 3: Update the estimated wheezing basis matrix using Equation (14). |
| 4: Update the estimated respiratory basis matrix using Equation (15). |
| 5: Update the estimated wheezing activations matrix using Equation (16). |
| 6: Update the estimated respiratory activations matrix using Equation (17). |
| 7: Repeat Steps 3–6 until the algorithm converges (or until the maximum number of iterations M is reached). |
| 8: Compute the spectral energy distribution from using Equation (18). |
| return |
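The iterative core of Algorithm 1 (Steps 2–7) can be sketched in NumPy as below. This is a minimal sketch under stated assumptions, not the update rules of Equations (14)–(17): it assumes a generalized Kullback–Leibler cost with Lee–Seung-style multiplicative updates, an L1 sparseness penalty on the wheezing bases, squared-difference smoothness penalties along frequency for the respiratory bases and along time for the wheezing activations, and an L2 rescaling of the basis columns after each iteration. The function name `cl_rnmf_sketch`, the penalty weights, the default ranks and the fixed iteration count (standing in for a convergence test) are hypothetical; Steps 1 and 8 (Equations (11) and (18)) are not reproduced.

```python
import numpy as np

EPS = 1e-12  # guards against division by zero


def _smoothness_grad_parts(M):
    """Positive and negative parts of the gradient of
    sum_i ||M[i] - M[i-1]||^2 taken along axis 0."""
    neighbour_sum = np.zeros_like(M)
    neighbour_count = np.zeros_like(M)
    neighbour_sum[1:] += M[:-1]
    neighbour_count[1:] += 1
    neighbour_sum[:-1] += M[1:]
    neighbour_count[:-1] += 1
    return 2 * neighbour_count * M, 2 * neighbour_sum


def cl_rnmf_sketch(V, k_w=1, k_r=8, lam_sparse=0.1, lam_freq_smooth=0.1,
                   lam_time_smooth=0.1, n_iter=200, seed=0):
    """Hypothetical constrained low-rank KL-NMF: V ~ Ww @ Hw + Wr @ Hr."""
    V = np.asarray(V, dtype=float)
    rng = np.random.default_rng(seed)
    F, T = V.shape
    Ww = rng.random((F, k_w)) + EPS  # wheezing bases (low rank)
    Wr = rng.random((F, k_r)) + EPS  # respiratory bases
    Hw = rng.random((k_w, T)) + EPS  # wheezing activations
    Hr = rng.random((k_r, T)) + EPS  # respiratory activations

    def ratio():
        # V divided by the current reconstruction, used by every KL update
        return V / (Ww @ Hw + Wr @ Hr + EPS)

    for _ in range(n_iter):
        # Step 3: wheezing bases, KL + L1 sparseness along frequency
        Ww *= (ratio() @ Hw.T) / (Hw.sum(axis=1) + lam_sparse)
        # Step 4: respiratory bases, KL + smoothness along frequency
        pos, neg = _smoothness_grad_parts(Wr)
        Wr *= (ratio() @ Hr.T + lam_freq_smooth * neg) / (
            Hr.sum(axis=1) + lam_freq_smooth * pos + EPS)
        # Step 5: wheezing activations, KL + smoothness along time
        pos, neg = _smoothness_grad_parts(Hw.T)
        Hw *= (Ww.T @ ratio() + lam_time_smooth * neg.T) / (
            Ww.sum(axis=0)[:, None] + lam_time_smooth * pos.T + EPS)
        # Step 6: respiratory activations, plain KL update
        Hr *= (Wr.T @ ratio()) / (Wr.sum(axis=0)[:, None] + EPS)
        # Rescale basis columns to unit L2 norm; each product W @ H is unchanged
        for W, H in ((Ww, Hw), (Wr, Hr)):
            norms = np.linalg.norm(W, axis=0) + EPS
            W /= norms
            H *= norms[:, None]
    return Ww, Wr, Hw, Hr
```

Splitting each penalty gradient into a positive part (added to the denominator) and a negative part (added to the numerator) keeps every factor non-negative, which is why the smoothness terms appear as a neighbour sum in the numerator and a weighted self term in the denominator.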

3.3. Stage II: Harmonic Structure Analysis

- The objective of the first step is to locate, in terms of frequency, all the narrowband spectral peaks detected in the previous Stage I. To this end, we locate the most prominent frequency of each spectral peak. Each of these frequencies was computed with the findpeaks function of the MATLAB software [74], which gave satisfactory results in several preliminary analyses. Figure 7 shows the location, in terms of frequency, of each spectral peak for the MP example previously shown in Figure 1B.

- The objective of the second step is to check whether the different spectral peaks are harmonically related. We assume that the first spectral peak represents the basal peak. The wheezing is therefore classified as MP if all the remaining spectral peaks are located at harmonic frequencies (integer multiples) of the basal peak; otherwise, it is classified as PP. From the width of the main lobe of the basal peak and the value of its most prominent frequency, the spectral intervals where the possible harmonic frequencies should be located are calculated using Equation (20), in which each spectral interval is bounded by a lower limit i and an upper limit j, in terms of frequency. The first interval is associated with the basal peak, and the remaining intervals correspond to the frequency ranges where the harmonic frequencies should be located. Note that the width of the main lobe was obtained by positioning the reference line beneath the peak at a vertical distance equal to half the peak prominence [74].
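Peak location and main-lobe width, as described in the two steps above, can be approximated without MATLAB. The following pure-Python sketch follows the prominence and half-prominence width definitions documented for findpeaks: the prominence is the height of the peak above the higher of the two lowest points reached on each side before a higher sample (or the signal edge) is found, and the width is measured where a reference line at half the prominence crosses the peak, using linear interpolation. It is not the MATLAB function itself; `find_peaks_half_prominence`, `min_prominence` and `bin_hz` are hypothetical names, and flat-topped peaks are handled only crudely.

```python
def find_peaks_half_prominence(x, min_prominence, bin_hz=1.0):
    """Return (frequency, prominence, width) for each local maximum of x whose
    prominence is at least min_prominence. Frequency and width are in Hz when
    bin_hz is the spacing between spectral bins."""
    n = len(x)
    peaks = []
    for i in range(1, n - 1):
        # Local maximum (the first sample of a flat top counts as the peak)
        if not (x[i] > x[i - 1] and x[i] >= x[i + 1]):
            continue
        # Walk outwards until a higher sample or the signal edge is reached,
        # keeping the lowest value seen on each side.
        left_min = right_min = x[i]
        j = i
        while j > 0 and x[j - 1] <= x[i]:
            j -= 1
            left_min = min(left_min, x[j])
        k = i
        while k < n - 1 and x[k + 1] <= x[i]:
            k += 1
            right_min = min(right_min, x[k])
        # Prominence: height above the higher of the two side minima
        prominence = x[i] - max(left_min, right_min)
        if prominence <= 0 or prominence < min_prominence:
            continue
        # Reference line beneath the peak at half the prominence
        ref = x[i] - prominence / 2
        a = i
        while x[a] > ref:  # first sample at or below ref on the left
            a -= 1
        left = a + (ref - x[a]) / (x[a + 1] - x[a])
        b = i
        while x[b] > ref:  # first sample at or below ref on the right
            b += 1
        right = b - (ref - x[b]) / (x[b - 1] - x[b])
        peaks.append((i * bin_hz, prominence, (right - left) * bin_hz))
    return peaks
```

Applied to a spectral energy distribution sampled every `bin_hz` hertz, the first element of each tuple gives the peak frequency and the third gives the main-lobe width used to build the harmonic intervals.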

| Algorithm 2: Harmonic structure analysis. |
|---|
| Require: spectral energy distribution computed in Stage I. |
| 1: From the spectral energy distribution, detect the number of narrowband spectral peaks. |
| if only one spectral peak is detected then |
| return Wheezing category = MP |
| else |
| 2: Locate the most prominent frequency of each spectral peak. |
| 3: Compute the spectral intervals using Equation (20). |
| if every remaining spectral peak lies within one of the harmonic intervals then |
| return Wheezing category = MP |
| else |
| return Wheezing category = PP |
| end if |
| end if |
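Algorithm 2 can be sketched in plain Python as follows. Because Equation (20) is not reproduced here, the sketch assumes that the n-th harmonic interval is centred at n times the basal frequency and has the same width as the main lobe of the basal peak, and that the basal peak is the lowest-frequency peak; both are assumptions, as are the names `harmonic_intervals` and `classify_wheeze`.

```python
def harmonic_intervals(f0, width, count):
    """Hypothetical intervals n*f0 +/- width/2 for n = 1..count (n = 1 is the basal peak)."""
    return [(n * f0 - width / 2, n * f0 + width / 2) for n in range(1, count + 1)]


def classify_wheeze(peak_freqs, basal_width):
    """Sketch of Algorithm 2: return 'MP' or 'PP' from the peak frequencies (Hz)
    and the main-lobe width of the basal peak (Hz)."""
    if not peak_freqs:
        raise ValueError("at least one spectral peak is required")
    if len(peak_freqs) == 1:
        return "MP"  # a single narrowband peak
    freqs = sorted(peak_freqs)
    f0 = freqs[0]  # assumed: the basal peak is the lowest-frequency peak
    count = int(freqs[-1] // f0) + 1  # enough intervals to cover the highest peak
    harmonics = harmonic_intervals(f0, basal_width, count)[1:]  # skip the basal interval
    for f in freqs[1:]:
        if not any(lo <= f <= hi for lo, hi in harmonics):
            return "PP"  # at least one peak is not harmonically related
    return "MP"
```

For example, peaks at 200, 405 and 598 Hz with a 20 Hz basal main lobe fall inside the intervals around 400 Hz and 600 Hz and are classified as MP, whereas peaks at 200 Hz and 330 Hz are classified as PP.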

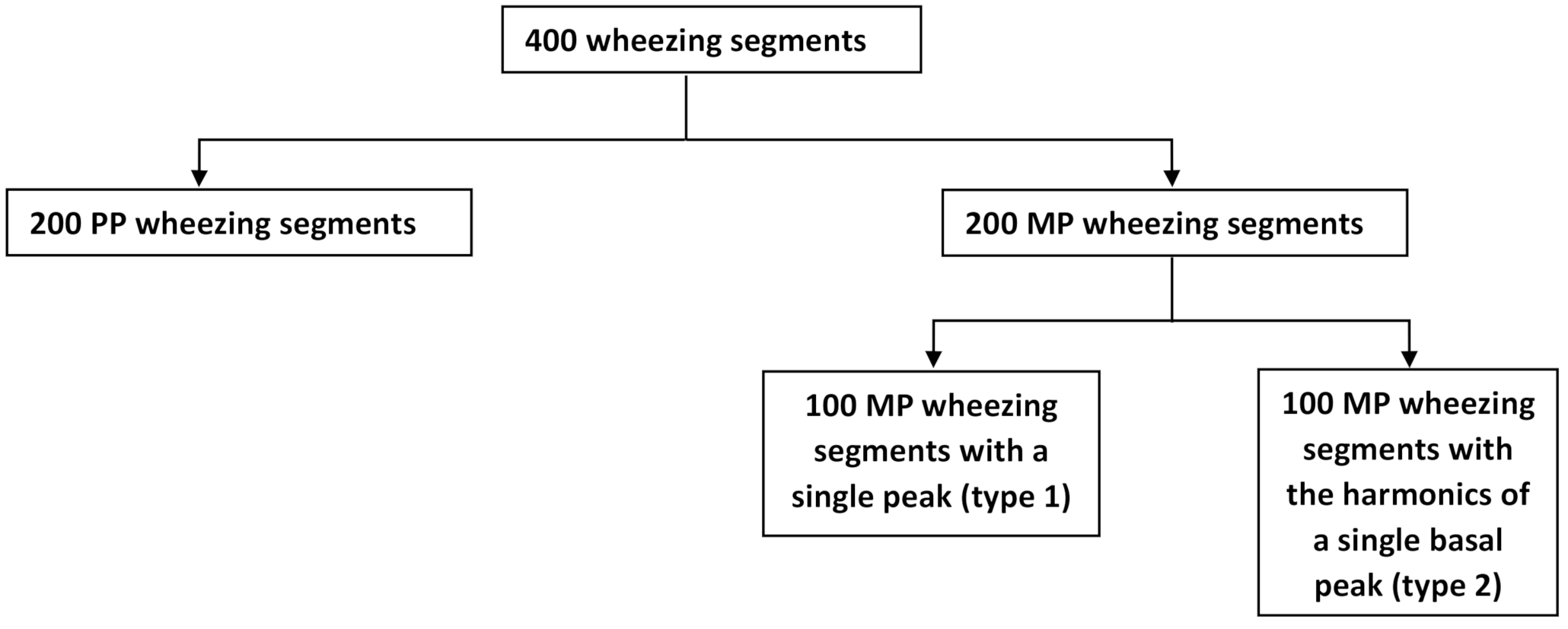

4. Experimental Results and Discussion

4.1. Data Collection

4.2. Experimental Setup

4.3. Evaluation Metrics

4.4. State-of-the-Art Method for Comparison

4.5. Accuracy Results

- In terms of overall accuracy, the proposed method improves on UPER by about 8.25 (SVM), 12 (KNN), and 10.5 (ELM) percentage points.

- In terms of PP accuracy, the proposed method improves on UPER by about 4 (SVM), 7.1 (KNN), and 5.5 (ELM) percentage points.

- In terms of MP accuracy, the proposed method improves on UPER by about 12.5 (SVM), 17 (KNN), and 15.5 (ELM) percentage points.

- In terms of MP Type 1 accuracy, the proposed method improves on UPER by about 5 (SVM), 10 (KNN), and 8 (ELM) percentage points.

- In terms of MP Type 2 accuracy, the proposed method improves on UPER by about 20 (SVM), 24 (KNN), and 23 (ELM) percentage points.
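The improvements listed above are absolute differences (in percentage points) between rows of the accuracy table, which the following Python snippet reproduces from the published values. The column labels (overall, PP, MP, MP Type 1, MP Type 2) are inferred from the table values and from the evaluation terms, not copied from the original header.

```python
# Accuracy (%) per column, in the column order of the accuracy table.
# Column labels are inferred, not taken from the original header.
COLUMNS = ("overall", "PP", "MP", "MP Type 1", "MP Type 2")
RESULTS = {
    "Proposed Method": (92.0, 91.5, 92.5, 91.0, 94.0),
    "UPER (SVM)": (83.75, 87.5, 80.0, 86.0, 74.0),
    "UPER (KNN)": (80.0, 84.4, 75.5, 81.0, 70.0),
    "UPER (ELM)": (81.5, 86.0, 77.0, 83.0, 71.0),
}


def improvements(results, reference="Proposed Method"):
    """Absolute gain (percentage points) of `reference` over every other row."""
    ref = results[reference]
    return {
        name: dict(zip(COLUMNS, (round(a - b, 2) for a, b in zip(ref, row))))
        for name, row in results.items()
        if name != reference
    }


for name, gains in improvements(RESULTS).items():
    print(name, gains)
```

The largest gain for every classifier falls in the last column, which matches the discussion of harmonic MP wheezing below.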

- (i) Due to the time-frequency overlapping problem, normal respiratory sounds often mask wheezing sounds, hiding relevant medical information [5]. While the proposed method (based on CL-RNMF) removes as much of the acoustic interference from normal respiratory sounds as possible, UPER relies on the PER feature obtained from the sub-band energy of the wavelet coefficients, so the presence of normal respiratory sounds interferes with the selection of the optimal sub-bands that actually belong to the wheezing components.

- (ii) UPER has more difficulty discriminating between PP wheezing and MP wheezing composed of a basal peak and its harmonics, since it achieves its worst performance in terms of . This is because UPER is based on energy and ignores the spectral location of the components that model the harmonic behavior of MP wheezing. The results in Table 2 suggest that MP/PP classification based on the spectral location of the harmonic structure, as performed by the proposed method, is more reliable than classification based on the energy of the wheezing spectral components, as performed by UPER.

5. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A. Terms of the Multiplicative Update Rules

References

- World Health Organization. Chronic Respiratory Diseases. Available online: https://www.who.int/health-topics/chronic-respiratory-diseases#tab=tab_1 (accessed on 30 December 2020).

- World Health Organization. Asthma. Available online: https://www.who.int/news-room/fact-sheets/detail/asthma (accessed on 30 December 2020).

- World Health Organization. Chronic Obstructive Pulmonary Disease. Available online: http://www.emro.who.int/health-topics/chronic-obstructive-pulmonary-disease-copd/index.html (accessed on 30 December 2020).

- Sarkar, M.; Madabhavi, I.; Niranjan, N.; Dogra, M. Auscultation of the respiratory system. Ann. Thorac. Med. 2015, 10, 158.

- Pasterkamp, H.; Kraman, S.S.; Wodicka, G.R. Respiratory sounds: Advances beyond the stethoscope. Am. J. Respir. Crit. Care Med. 1997, 156, 974–987.

- Lozano-Garcia, M.; Fiz, J.A.; Martinez-Rivera, C.; Torrents, A.; Ruiz-Manzano, J.; Jane, R. Novel approach to continuous adventitious respiratory sound analysis for the assessment of bronchodilator response. PLoS ONE 2017, 12, e0171455.

- Ulukaya, S.; Serbes, G.; Kahya, Y.P. Wheeze type classification using non-dyadic wavelet transform based optimal energy ratio technique. Comput. Biol. Med. 2019, 104, 175–182.

- Andrès, E.; Gass, R.; Charloux, A.; Brandt, C.; Hentzler, A. Respiratory sound analysis in the era of evidence-based medicine and the world of medicine 2.0. J. Med. Life 2018, 11, 89.

- Torre-Cruz, J.; Canadas-Quesada, F.; Carabias-Orti, J.; Vera-Candeas, P.; Ruiz-Reyes, N. A novel wheezing detection approach based on constrained non-negative matrix factorization. Appl. Acoust. 2019, 148, 276–288.

- Leng, S.; San Tan, R.; Chai, K.T.C.; Wang, C.; Ghista, D.; Zhong, L. The electronic stethoscope. Biomed. Eng. Online 2015, 14, 1–37.

- Sen, I.; Saraclar, M.; Kahya, Y.P. A comparison of SVM and GMM-based classifier configurations for diagnostic classification of pulmonary sounds. IEEE Trans. Biomed. Eng. 2015, 62, 1768–1776.

- Salazar, A.J.; Alvarado, C.; Lozano, F.E. System of heart and lung sounds separation for store-and-forward telemedicine applications. Rev. Fac. Ingeniería Univ. Antioq. 2012, 64, 175–181.

- Douros, K.; Grammeniatis, V.; Loukou, I. Crackles and Other Lung Sounds. In Breath Sounds; Springer International Publishing: Cham, Switzerland, 2018; Chapter 12; pp. 225–236.

- Lozano, M.; Fiz, J.A.; Jané, R. Automatic differentiation of normal and continuous adventitious respiratory sounds using ensemble empirical mode decomposition and instantaneous frequency. IEEE J. Biomed. Health Inform. 2015, 20, 486–497.

- Rao, A.; Huynh, E.; Royston, T.J.; Kornblith, A.; Roy, S. Acoustic methods for pulmonary diagnosis. IEEE Rev. Biomed. Eng. 2018, 12, 221–239.

- Pramono, R.X.A.; Bowyer, S.; Rodriguez-Villegas, E. Automatic adventitious respiratory sound analysis: A systematic review. PLoS ONE 2017, 12, e0177926.

- Rocha, B.M.; Pessoa, D.; Marques, A.; Carvalho, P.; Paiva, R.P. Automatic Classification of Adventitious Respiratory Sounds: A (Un) Solved Problem? Sensors 2021, 21, 57.

- Jin, F.; Krishnan, S.; Sattar, F. Adventitious sounds identification and extraction using temporal–spectral dominance-based features. IEEE Trans. Biomed. Eng. 2011, 58, 3078–3087.

- Gurung, A.; Scrafford, C.G.; Tielsch, J.M.; Levine, O.S.; Checkley, W. Computerized lung sound analysis as diagnostic aid for the detection of abnormal lung sounds: A systematic review and meta-analysis. Respir. Med. 2011, 105, 1396–1403.

- Sakai, T.; Kato, M.; Miyahara, S.; Kiyasu, S. Robust detection of adventitious lung sounds in electronic auscultation signals. In Proceedings of the 21st IEEE International Conference on Pattern Recognition (ICPR2012), Tsukuba, Japan, 11–15 November 2012; pp. 1993–1996.

- Liu, X.; Ser, W.; Zhang, J.; Goh, D.Y.T. Detection of adventitious lung sounds using entropy features and a 2-D threshold setting. In Proceedings of the 2015 10th IEEE International Conference on Information, Communications and Signal Processing (ICICS), Singapore, 2–4 December 2015; pp. 1–5.

- Matsutake, S.; Yamashita, M.; Matsunaga, S. Abnormal-respiration detection by considering correlation of observation of adventitious sounds. In Proceedings of the 2015 23rd IEEE European Signal Processing Conference (EUSIPCO), Nice, France, 31 August–4 September 2015; pp. 634–638.

- Nakamura, N.; Yamashita, M.; Matsunaga, S. Detection of patients considering observation frequency of continuous and discontinuous adventitious sounds in lung sounds. In Proceedings of the 2016 38th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Orlando, FL, USA, 16–20 August 2016; pp. 3457–3460.

- İçer, S.; Gengeç, Ş. Classification and analysis of non-stationary characteristics of crackle and rhonchus lung adventitious sounds. Digit. Signal Process. 2014, 28, 18–27.

- Yamashita, M.; Himeshima, M.; Matsunaga, S. Robust classification between normal and abnormal lung sounds using adventitious-sound and heart-sound models. In Proceedings of the 2014 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Florence, Italy, 4–9 May 2014; pp. 4418–4422.

- Aykanat, M.; Kılıç, Ö.; Kurt, B.; Saryal, S. Classification of lung sounds using convolutional neural networks. EURASIP J. Image Video Process. 2017, 65, 1–9.

- Bardou, D.; Zhang, K.; Ahmad, S.M. Lung sounds classification using convolutional neural networks. Artif. Intell. Med. 2018, 88, 58–69.

- Ma, Y.; Xu, X.; Li, Y. LungRN+NL: An improved adventitious lung sound classification using non-local block resnet neural network with mixup data augmentation. In Proceedings of the Interspeech 2020, 21st Annual Conference of the International Speech Communication Association, Virtual Event, Shanghai, China, 25–29 October 2020; pp. 2902–2906.

- Demir, F.; Ismael, A.M.; Sengur, A. Classification of Lung Sounds With CNN Model Using Parallel Pooling Structure. IEEE Access 2020, 8, 105376–105383.

- Sovijarvi, A.; Dalmasso, F.; Vanderschoot, J.; Malmberg, L.; Righini, G.; Stoneman, S. Definition of terms for applications of respiratory sounds. Eur. Respir. Rev. 2000, 10, 597–610.

- Meslier, N.; Charbonneau, G.; Racineux, J. Wheezes. Eur. Respir. J. 1995, 8, 1942–1948.

- Baughman, R.P.; Loudon, R.G. Lung sound analysis for continuous evaluation of airflow obstruction in asthma. Chest 1985, 88, 364–368.

- Cortes, S.; Jane, R.; Fiz, J.; Morera, J. Monitoring of wheeze duration during spontaneous respiration in asthmatic patients. In Proceedings of the 27th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Shanghai, China, 17–18 January 2006; pp. 6141–6144.

- Qiu, Y.; Whittaker, A.; Lucas, M.; Anderson, K. Automatic wheeze detection based on auditory modelling. Proc. Inst. Mech. Eng. Part H J. Eng. Med. 2005, 219, 219–227.

- Zhang, J.; Ser, W.; Yu, J.; Zhang, T. A novel wheeze detection method for wearable monitoring systems. In Proceedings of the IEEE International Symposium on Intelligent Ubiquitous Computing and Education, Chengdu, China, 15–16 May 2009; pp. 331–334.

- Lin, B.S.; Wu, H.D.; Chen, S.J. Automatic wheezing detection based on signal processing of spectrogram and back-propagation neural network. J. Healthc. Eng. 2015, 6, 649–672.

- Kochetov, K.; Putin, E.; Azizov, S.; Skorobogatov, I.; Filchenkov, A. Wheeze detection using convolutional neural networks. In EPIA Conference on Artificial Intelligence; Springer: Cham, Switzerland, 2017; pp. 162–173.

- Kandaswamy, A.; Kumar, C.S.; Ramanathan, R.P.; Jayaraman, S.; Malmurugan, N. Neural classification of lung sounds using wavelet coefficients. Comput. Biol. Med. 2004, 34, 523–537.

- Le Cam, S.; Belghith, A.; Collet, C.; Salzenstein, F. Wheezing sounds detection using multivariate generalized Gaussian distributions. In Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing, Taipei, Taiwan, 19–24 April 2009; pp. 541–544.

- Wisniewski, M.; Zielinski, T.P. Tonality detection methods for wheezes recognition system. In Proceedings of the IEEE 19th International Conference on Systems, Signals and Image Processing (IWSSIP), Vienna, Austria, 11–13 April 2012; pp. 472–475.

- Wisniewski, M.; Zielinski, T.P. Joint application of audio spectral envelope and tonality index in an e-asthma monitoring system. IEEE J. Biomed. Health Inform. 2015, 19, 1009–1018.

- Chien, J.C.; Wu, H.D.; Chong, F.C.; Li, C.I. Wheeze detection using cepstral analysis in gaussian mixture models. In Proceedings of the 29th IEEE Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Lyon, France, 22–26 August 2007; pp. 3168–3171.

- Bahoura, M. Pattern recognition methods applied to respiratory sounds classification into normal and wheeze classes. Comput. Biol. Med. 2009, 39, 824–843.

- Bahoura, M.; Pelletier, C. Respiratory sounds classification using Gaussian mixture models. In Proceedings of the IEEE Canadian Conference on Electrical and Computer Engineering, Niagara Falls, ON, Canada, 2–5 May 2004; Volume 3, pp. 1309–1312.

- Mayorga, P.; Druzgalski, C.; Morelos, R.; Gonzalez, O.; Vidales, J. Acoustics based assessment of respiratory diseases using GMM classification. In Proceedings of the IEEE Annual International Conference of the IEEE Engineering in Medicine and Biology, Buenos Aires, Argentina, 31 August–4 September 2010; pp. 6312–6316.

- Taplidou, S.A.; Hadjileontiadis, L.J. Wheeze detection based on time-frequency analysis of breath sounds. Comput. Biol. Med. 2007, 37, 1073–1083.

- Jain, A.; Vepa, J. Lung sound analysis for wheeze episode detection. In Proceedings of the 30th IEEE Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Vancouver, BC, Canada, 20–25 August 2008; pp. 2582–2585.

- Mendes, L.; Vogiatzis, I.; Perantoni, E.; Kaimakamis, E.; Chouvarda, I.; Maglaveras, N.; Tsara, V.; Teixeira, C.; Carvalho, P.; Henriques, J.; et al. Detection of wheezes using their signature in the spectrogram space and musical features. In Proceedings of the 37th IEEE Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Milan, Italy, 25–29 August 2015; pp. 5581–5584.

- Oletic, D.; Bilas, V. Asthmatic wheeze detection from compressively sensed respiratory sound spectra. IEEE J. Biomed. Health Inform. 2018, 22, 1406–1414.

- Torre-Cruz, J.; Canadas-Quesada, F.; García-Galán, S.; Ruiz-Reyes, N.; Vera-Candeas, P.; Carabias-Orti, J. A constrained tonal semi-supervised non-negative matrix factorization to classify presence/absence of wheezing in respiratory sounds. Appl. Acoust. 2020, 161, 107188.

- De La Torre Cruz, J.; Cañadas Quesada, F.J.; Carabias Orti, J.J.; Vera Candeas, P.; Ruiz Reyes, N. Combining a recursive approach via non-negative matrix factorization and Gini index sparsity to improve reliable detection of wheezing sounds. Expert Syst. Appl. 2020, 147, 113212.

- Nagasaka, Y. Lung Sounds in Bronchial Asthma. Allergol. Int. 2012, 61, 353–363.

- Mason, R.C.; Murray, J.F.; Nadel, J.A.; Gotway, M.B. Murray & Nadel’s Textbook of Respiratory Medicine E-Book; Elsevier Health Sciences: Amsterdam, The Netherlands, 2015.

- Taplidou, S.A.; Hadjileontiadis, L.J. Analysis of wheezes using wavelet higher order spectral features. IEEE Trans. Biomed. Eng. 2010, 57, 1596–1610.

- Forgacs, P. The functional basis of pulmonary sounds. Chest 1978, 73, 399–405.

- Jácome, C.; Oliveira, A.; Marques, A. Computerized respiratory sounds: A comparison between patients with stable and exacerbated COPD. Clin. Respir. J. 2017, 11, 612–620.

- Hashemi, A.; Arabalibiek, H.; Agin, K. Classification of wheeze sounds using wavelets and neural networks. In International Conference on Biomedical Engineering and Technology; IACSIT Press: Singapore, 2011; Volume 11, pp. 127–131.

- Naves, R.; Barbosa, B.H.; Ferreira, D.D. Classification of lung sounds using higher-order statistics: A divide-and-conquer approach. Comput. Methods Programs Biomed. 2016, 129, 12–20.

- Ulukaya, S.; Sen, I.; Kahya, Y.P. A novel method for determination of wheeze type. In Proceedings of the 23rd Signal Processing and Communications Applications Conference (SIU), Malatya, Turkey, 16–19 May 2015; pp. 2001–2004.

- Ulukaya, S.; Sen, I.; Kahya, Y.P. Feature extraction using time-frequency analysis for monophonic-polyphonic wheeze discrimination. In Proceedings of the 2015 37th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Milan, Italy, 25–29 August 2015; pp. 5412–5415.

- Lee, D.D.; Seung, H.S. Learning the parts of objects by non-negative matrix factorization. Nature 1999, 401, 788–791.

- Lee, D.D.; Seung, H.S. Algorithms for non-negative matrix factorization. Adv. Neural Inf. Process. Syst. 2001, 23, 556–562.

- Canadas-Quesada, F.; Ruiz-Reyes, N.; Carabias-Orti, J.; Vera-Candeas, P.; Fuertes-Garcia, J. A non-negative matrix factorization approach based on spectro-temporal clustering to extract heart sounds. Appl. Acoust. 2017, 125, 7–19.

- Dia, N.; Fontecave-Jallon, J.; Gumery, P.Y.; Rivet, B. Denoising Phonocardiogram signals with Non-negative Matrix Factorization informed by synchronous Electrocardiogram. In Proceedings of the 2018 26th IEEE European Signal Processing Conference (EUSIPCO), Rome, Italy, 3–7 September 2018; pp. 51–55.

- Torre-Cruz, J.; Canadas-Quesada, F.; Vera-Candeas, P.; Montiel-Zafra, V.; Ruiz-Reyes, N. Wheezing sound separation based on constrained non-negative matrix factorization. In Proceedings of the 2018 10th International Conference on Bioinformatics and Biomedical Technology, Amsterdam, The Netherlands, 18–24 May 2018; pp. 18–24.

- De La Torre Cruz, J.; Cañadas Quesada, F.J.; Ruiz Reyes, N.; Vera Candeas, P.; Carabias Orti, J.J. Wheezing Sound Separation Based on Informed Inter-Segment Non-Negative Matrix Partial Co-Factorization. Sensors 2020, 20, 2679.

- Févotte, C.; Bertin, N.; Durrieu, J.L. Nonnegative matrix factorization with the Itakura-Saito divergence: With application to music analysis. Neural Comput. 2009, 21, 793–830.

- Liutkus, A.; Fitzgerald, D.; Badeau, R. Cauchy nonnegative matrix factorization. In Proceedings of the IEEE Workshop on Applications of Signal Processing to Audio and Acoustics (WASPAA), New Paltz, NY, USA, 18–21 October 2015; pp. 1–5.

- Canadas-Quesada, F.J.; Vera-Candeas, P.; Ruiz-Reyes, N.; Carabias-Orti, J.; Cabanas-Molero, P. Percussive/harmonic sound separation by non-negative matrix factorization with smoothness/sparseness constraints. EURASIP J. Audio Speech Music Process. 2014, 2014, 26.

- Laroche, C.; Kowalski, M.; Papadopoulos, H.; Richard, G. A structured nonnegative matrix factorization for source separation. In Proceedings of the 2015 23rd IEEE European Signal Processing Conference (EUSIPCO), Nice, France, 31 August–4 September 2015; pp. 2033–2037.

- Eggert, J.; Korner, E. Sparse coding and NMF. In Proceedings of the 2004 IEEE International Joint Conference on Neural Networks (IEEE Cat. No. 04CH37541), Budapest, Hungary, 25–29 July 2004; Volume 4, pp. 2529–2533.

- Virtanen, T. Monaural sound source separation by nonnegative matrix factorization with temporal continuity and sparseness criteria. IEEE Trans. Audio Speech Lang. Process. 2007, 15, 1066–1074.

- Marxer, R.; Janer, J. Study of regularizations and constraints in NMF-based drums monaural separation. In Proceedings of the International Conference on Digital Audio Effects Conference (DAFx-13), Maynooth, Ireland, 2–6 September 2013.

- Prominence Criterion of a Peak According to the MATLAB Software. Available online: https://es.mathworks.com/help/signal/ref/findpeaks.html?searchHighlight=findpeak&s_tid=doc_srchtitle#buff2uu (accessed on 30 December 2020).

- The r.a.l.e. Repository. Available online: http://www.rale.ca (accessed on 30 December 2020).

- Stethographics Lung Sound Samples. Available online: http://www.stethographics.com (accessed on 30 December 2020).

- 3m Littmann Stethoscopes. Available online: https://www.3m.com (accessed on 30 December 2020).

- East Tennessee State University Pulmonary Breath Sounds. Available online: http://faculty.etsu.edu (accessed on 30 December 2020).

- ICBHI 2017 Challenge. Available online: https://bhichallenge.med.auth.gr (accessed on 30 December 2020).

- Lippincott NursingCenter. Available online: https://www.nursingcenter.com (accessed on 30 December 2020).

- Thinklabs Digital Stethoscope. Available online: https://www.thinklabs.com (accessed on 30 December 2020).

- Thinklabs Youtube. Available online: https://www.youtube.com/channel/UCzEbKuIze4AI1523_AWiK4w (accessed on 30 December 2020).

- Emedicine/Medscape. Available online: https://emedicine.medscape.com/article/1894146-overview#a3 (accessed on 30 December 2020).

- E-Learning Resources. Available online: https://www.ers-education.org/e-learning/reference-database-of-respiratory-sounds.aspx (accessed on 30 December 2020).

- Respiratory Wiki. Available online: http://respwiki.com/Breath_sounds (accessed on 30 December 2020).

- Easy Auscultation. Available online: https://www.easyauscultation.com/lung-sounds-reference-guide (accessed on 30 December 2020).

- Colorado State University. Available online: http://www.cvmbs.colostate.edu/clinsci/callan/breath_sounds.htm (accessed on 30 December 2020).

- Chang, C.C.; Lin, C.J. LIBSVM: A library for support vector machines. ACM Trans. Intell. Syst. Technol. (TIST) 2011, 2, 1–27.

| Terms | Definitions |
|---|---|
| True PP | PP wheezing segments correctly classified |
| True MP | MP wheezing segments correctly classified |
| False PP | PP wheezing segments misclassified as MP |
| False MP | MP wheezing segments misclassified as PP |
| True MP Type 1 | MP Type 1 wheezing segments correctly classified |
| True MP Type 2 | MP Type 2 wheezing segments correctly classified |
| False MP Type 1 | MP Type 1 wheezing segments misclassified as PP |
| False MP Type 2 | MP Type 2 wheezing segments misclassified as PP |
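Per-class accuracy can be computed from these terms as the fraction of the segments of a class that are correctly classified. The formulas in the following Python sketch are an assumption (the evaluation equations are not reproduced here), the function names are hypothetical, and the counts in the example are invented for illustration; the MP Type 1 and MP Type 2 accuracies follow the same pattern, e.g. `class_accuracy(true_mp_type1, false_mp_type1)`.

```python
def class_accuracy(correct, misclassified):
    """Fraction of the segments of one class that were classified correctly."""
    total = correct + misclassified
    if total == 0:
        raise ValueError("class has no segments")
    return correct / total


def summary(true_pp, false_pp, true_mp, false_mp):
    """Assumed overall, PP and MP accuracies built from the terms above."""
    return {
        "overall": class_accuracy(true_pp + true_mp, false_pp + false_mp),
        "PP": class_accuracy(true_pp, false_pp),
        "MP": class_accuracy(true_mp, false_mp),
    }


# Hypothetical counts, for illustration only
print(summary(true_pp=90, false_pp=10, true_mp=80, false_mp=20))
```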

| Algorithm | Overall accuracy | PP accuracy | MP accuracy | MP Type 1 accuracy | MP Type 2 accuracy |
|---|---|---|---|---|---|
| Proposed Method | 92% | 91.5% | 92.5% | 91% | 94% |
| UPER (SVM) [7] | 83.75% | 87.5% | 80% | 86% | 74% |
| UPER (KNN) [7] | 80% | 84.4% | 75.5% | 81% | 70% |
| UPER (ELM) [7] | 81.5% | 86% | 77% | 83% | 71% |

| Scheme | Training Set | Validation Set | SVM | KNN | ELM |
|---|---|---|---|---|---|
| LOO | 399 (99.75%) | 1 (0.25%) | 83.75% | 80% | 81.5% |
| LPO (p = 80) | 320 (80%) | 80 (20%) | 81.5% | 79.25% | 80% |
| LPO (p = 160) | 240 (60%) | 160 (40%) | 80.5% | 77.75% | 79.5% |
| LPO (p = 240) | 160 (40%) | 240 (60%) | 78.25% | 74.75% | 77.25% |
| LPO (p = 320) | 80 (20%) | 320 (80%) | 76.25% | 71.75% | 75.25% |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


De La Torre Cruz, J.; Cañadas Quesada, F.J.; Ruiz Reyes, N.; García Galán, S.; Carabias Orti, J.J.; Peréz Chica, G. Monophonic and Polyphonic Wheezing Classification Based on Constrained Low-Rank Non-Negative Matrix Factorization. Sensors 2021, 21, 1661. https://doi.org/10.3390/s21051661
