Olive Tree Biovolume from UAV Multi-Resolution Image Segmentation with Mask R-CNN

Abstract

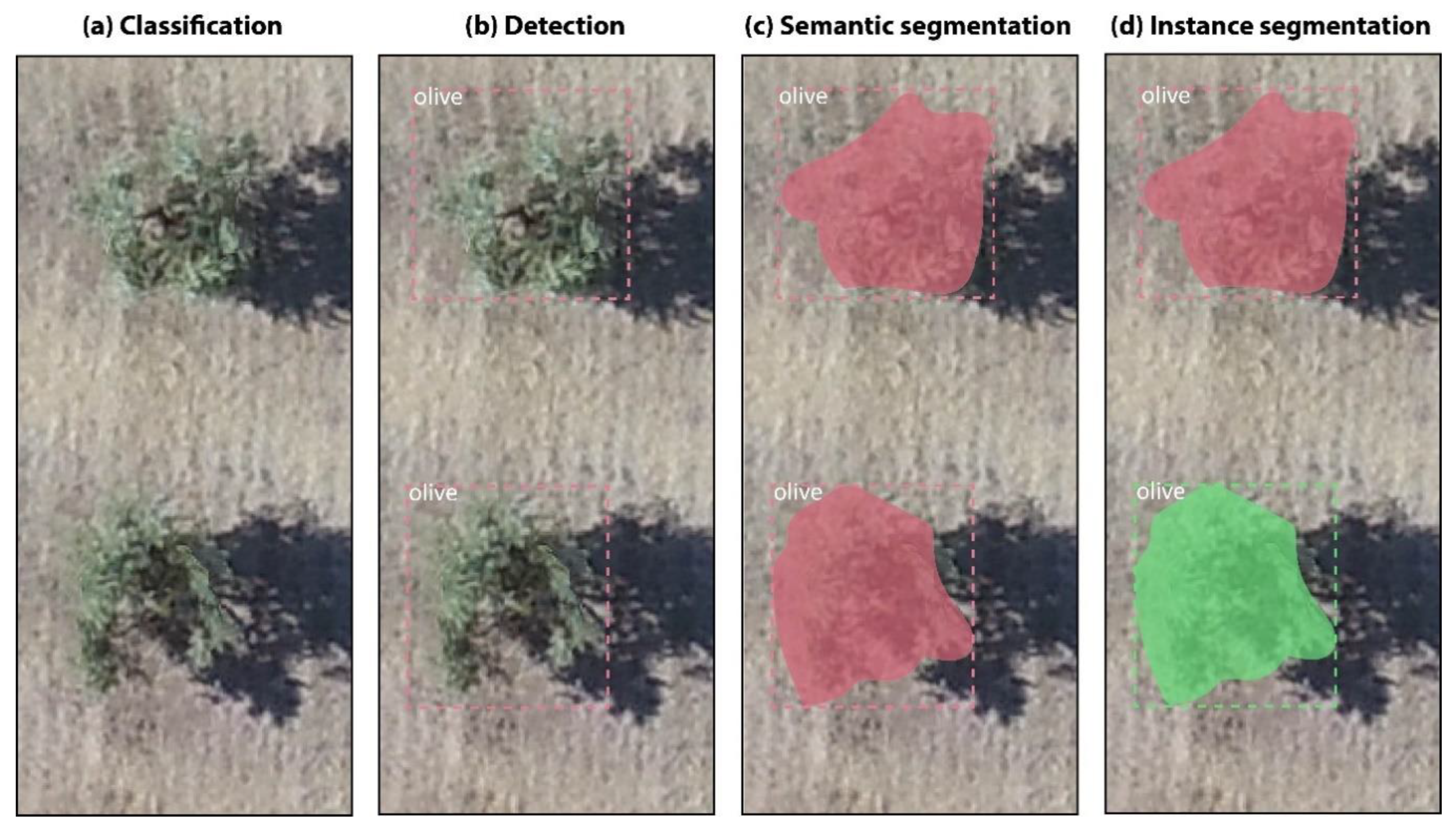

1. Introduction

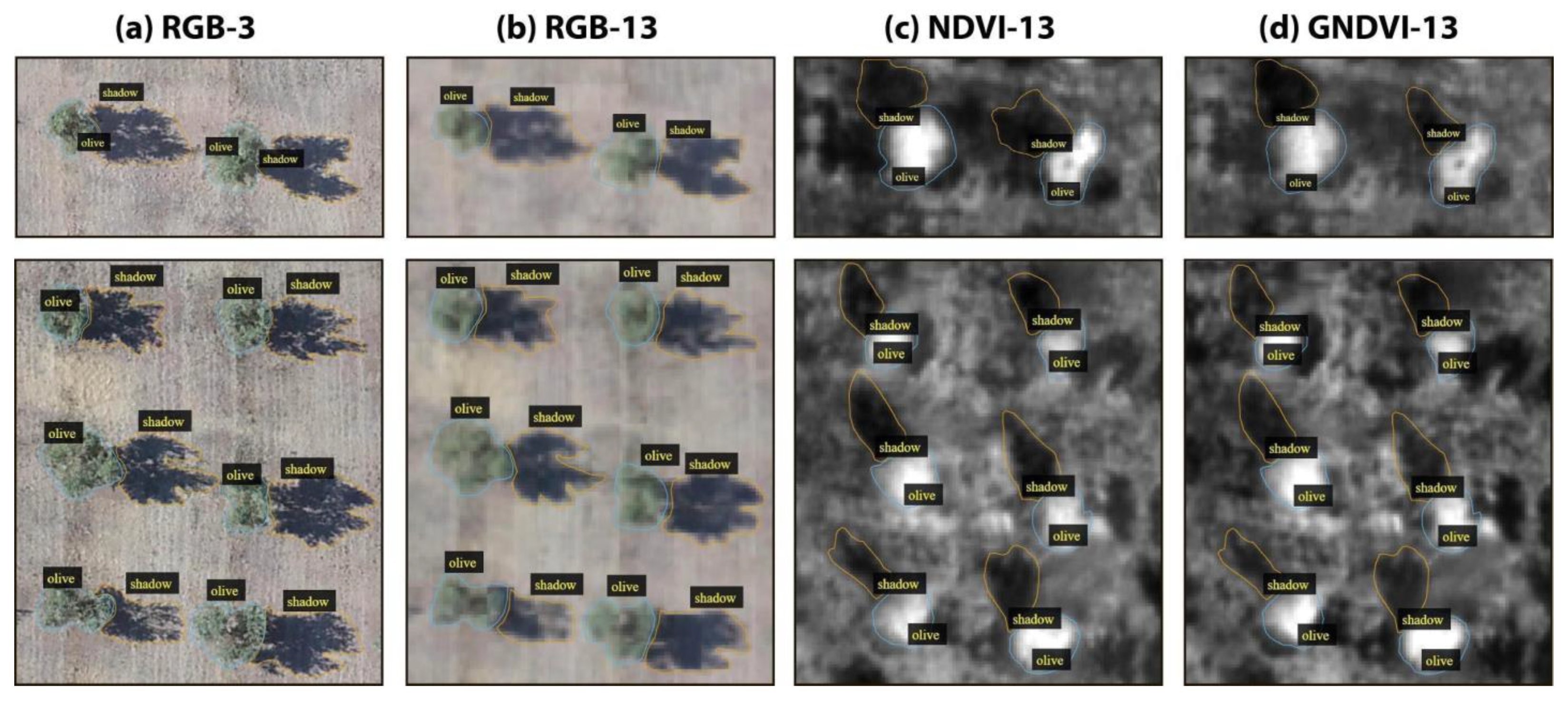

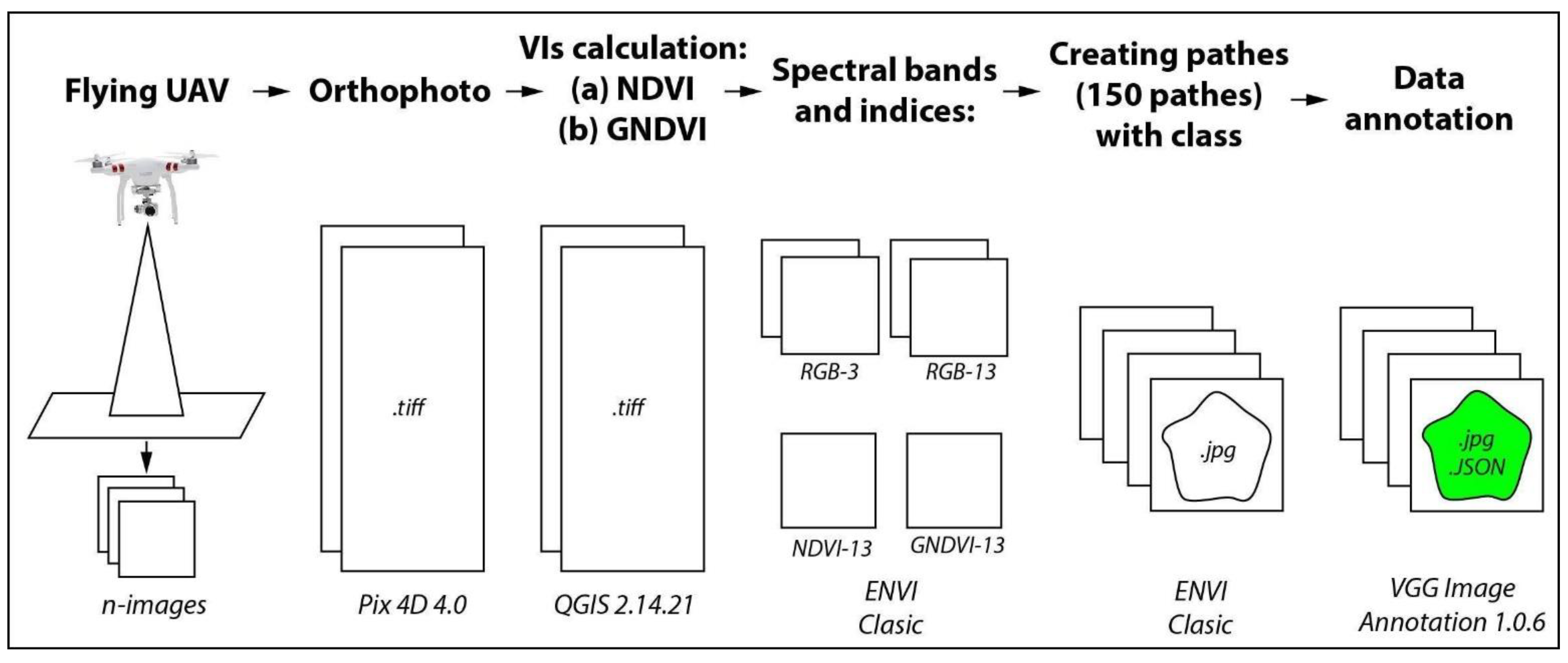

- We have built a new annotated dataset of multispectral orthoimages for olive tree crown segmentation, called the OTCSS-dataset. The OTCSS-dataset is organized into four subsets covering different spectral bands and vegetation indices (RGB, NDVI, and GNDVI) at two spatial resolutions (3 cm/pixel and 13 cm/pixel).

- We evaluated the Mask R-CNN instance segmentation model for olive tree crown segmentation and shadow segmentation in UAV images. We present a model based on the fusion of RGB images and vegetation indices that improves segmentation over models without image fusion.

- We estimated the biovolume of olive trees based on the area of their crowns and their height inferred from their shadow length.

- Our results show that NDVI or GNDVI spectral index information at 13 cm/pixel resolution is sufficient to accurately estimate the biovolume of olive trees.

2. Related Works

3. Materials and Methods

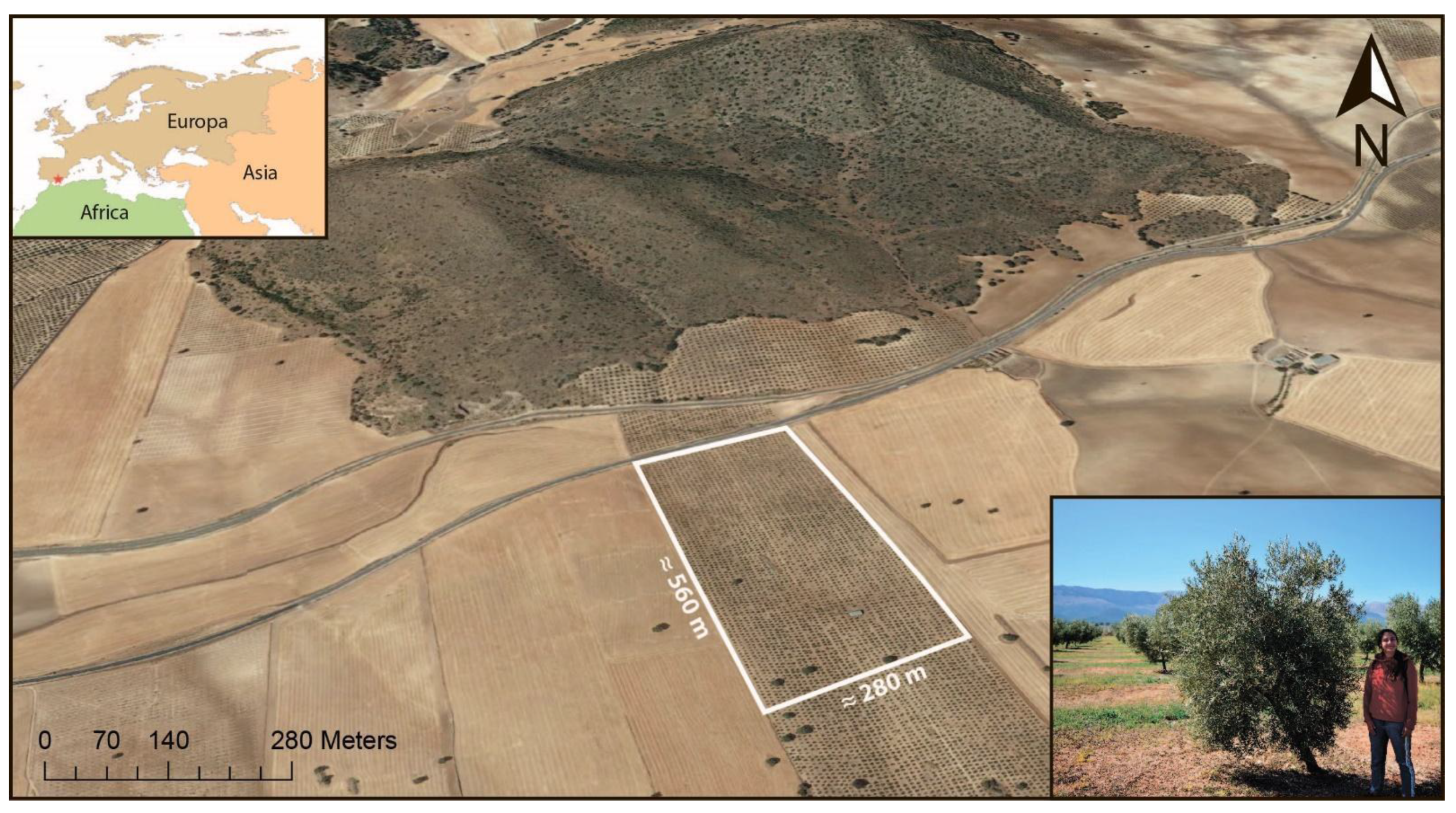

3.1. Study Area

3.2. UAV RGB and Multispectral Images

- (1) In February 2019, we flew a Sequoia multispectral sensor installed on the Parrot Disco-Pro AG UAV (Parrot SA, Paris, France) that captured four spectral bands (green, red, red edge, and near-infrared (NIR)). The spatial resolution of the multispectral image was 13 cm/pixel. We then derived the vegetation indices detailed in the introduction: the normalized difference vegetation index (NDVI) (1) [38] and the green normalized difference vegetation index (GNDVI) (2) [14].

- (2) In July 2019, to obtain a finer spatial resolution, we flew the native RGB Hasselblad 20-megapixel camera of the DJI-Phantom 4 UAV (DJI, Shenzhen, China). The spatial resolution of the RGB image was 3 cm/pixel. These RGB images were then resampled to 13 cm/pixel by spatial averaging so they could be compared with the multispectral images. For both flights, the images were donated by the company Garnata Drone S.L. (Granada, Spain).
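The two vegetation indices referenced as (1) and (2) have standard definitions: NDVI = (NIR − Red) / (NIR + Red) and GNDVI = (NIR − Green) / (NIR + Green). The following is a minimal per-pixel sketch of those definitions, not the processing chain used in the study. The function names, the sample reflectance values, and the convention of returning 0.0 when both bands are zero are assumptions made for illustration.

```python
def normalized_difference(a: float, b: float) -> float:
    """Return (a - b) / (a + b); 0.0 if both inputs are zero (assumed convention)."""
    total = a + b
    if total == 0:
        return 0.0
    return (a - b) / total


def ndvi(nir: float, red: float) -> float:
    """Normalized difference vegetation index: (NIR - Red) / (NIR + Red)."""
    return normalized_difference(nir, red)


def gndvi(nir: float, green: float) -> float:
    """Green NDVI: (NIR - Green) / (NIR + Green)."""
    return normalized_difference(nir, green)


# Illustrative reflectance values for one vegetated pixel (made up, not study data).
pixel = {"green": 0.10, "red": 0.05, "nir": 0.45}
print(round(ndvi(pixel["nir"], pixel["red"]), 3))
print(round(gndvi(pixel["nir"], pixel["green"]), 3))
```

Both indices range from −1 to 1, with dense green vegetation producing values closer to 1.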

3.3. OTCSS-Dataset Construction

3.4. Mask R-CNN

3.5. Experimental Setup

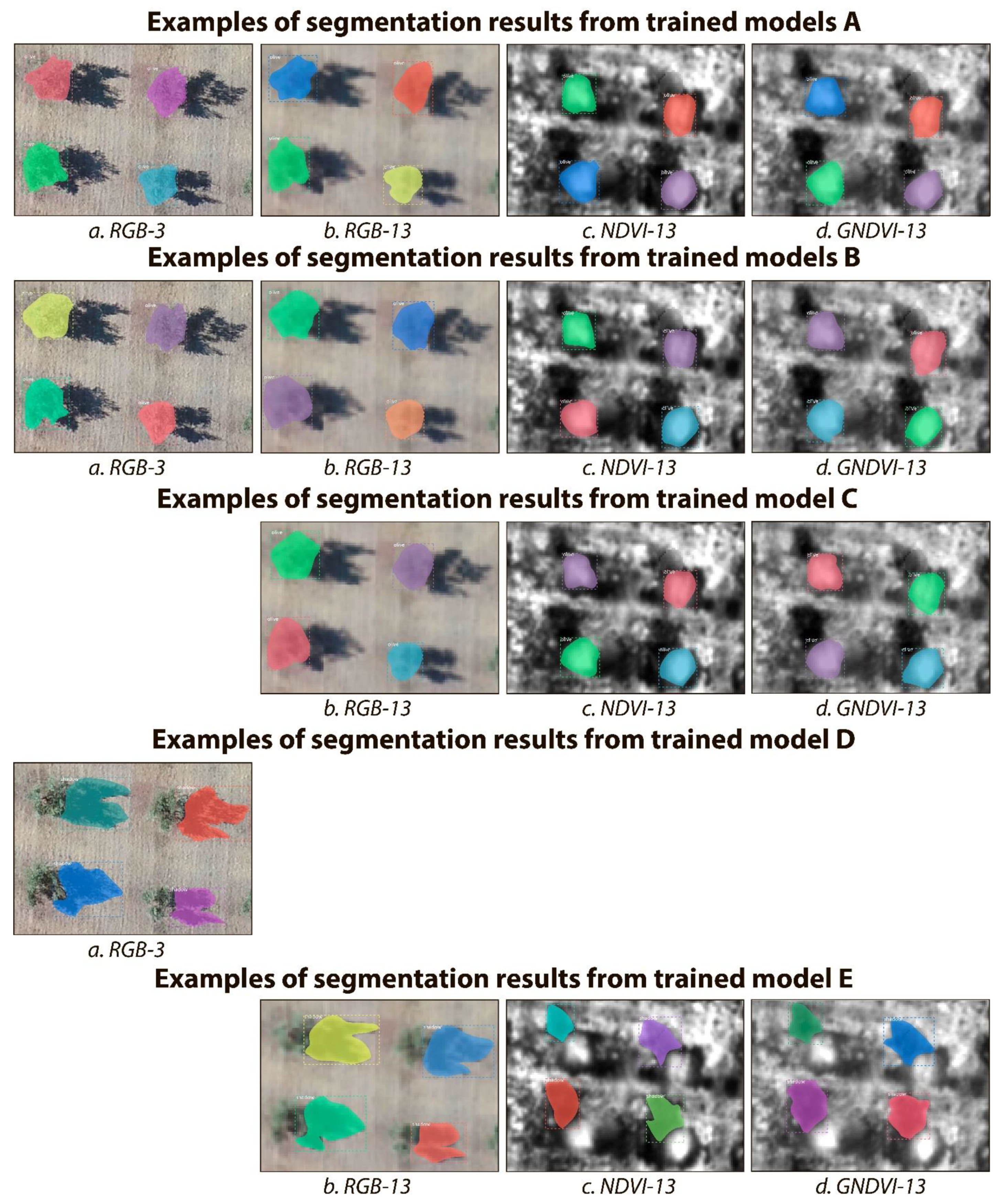

- For tree crown estimation, we trained a model on each subset of data separately (i.e., RGB-3, RGB-13, NDVI-13, and GNDVI-13), both without data augmentation (group A of models) and with data augmentation (group B of models), where augmentation consisted of scaling, rotation, translation, and horizontal and vertical shear. We also tested whether data fusion could improve the generalization of the final model, that is, whether training a single model (model C) on all the RGB, NDVI, and GNDVI data together at 13 cm/pixel could yield a single general model capable of accurately segmenting olive tree crowns regardless of the input (i.e., RGB-13, NDVI-13, or GNDVI-13).

- For tree shadow estimation, we trained a single model (model D) with data augmentation on the RGB-3 subset, so that tree heights were estimated from the dataset with the highest spatial resolution. That model was then applied to the four subsets of data. We also tested whether data fusion could improve the generalization of the final model, that is, whether training a single model (model E) on all the RGB, NDVI, and GNDVI data together at 13 cm/pixel could yield a single general model capable of accurately segmenting olive tree shadows regardless of the input (i.e., RGB-13, NDVI-13, or GNDVI-13).
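The experimental design above can be summarized as a small configuration table. This is only an organizational sketch: the `Experiment` class, its field names, and the `build_experiments` helper are hypothetical, and `augmentation=None` marks the cases where the text does not state whether augmentation was used.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

SUBSETS_13CM = ("RGB-13", "NDVI-13", "GNDVI-13")
ALL_SUBSETS = ("RGB-3",) + SUBSETS_13CM


@dataclass(frozen=True)
class Experiment:
    name: str                       # label used in the text (A, B, C, D, E)
    target: str                     # "crown" or "shadow"
    train_subsets: Tuple[str, ...]  # subsets seen by one trained model
    augmentation: Optional[bool]    # None = not stated in the text


def build_experiments() -> List[Experiment]:
    experiments = []
    # Groups A and B: one crown model per subset, without / with augmentation.
    for subset in ALL_SUBSETS:
        experiments.append(Experiment(f"A/{subset}", "crown", (subset,), False))
        experiments.append(Experiment(f"B/{subset}", "crown", (subset,), True))
    # Model C: a single crown model trained on the fused 13 cm/pixel data.
    experiments.append(Experiment("C", "crown", SUBSETS_13CM, None))
    # Model D: a single shadow model trained on RGB-3 with augmentation.
    experiments.append(Experiment("D", "shadow", ("RGB-3",), True))
    # Model E: a single shadow model trained on the fused 13 cm/pixel data.
    experiments.append(Experiment("E", "shadow", SUBSETS_13CM, None))
    return experiments


for exp in build_experiments():
    print(exp.name, exp.target, exp.train_subsets, exp.augmentation)
```

Under this reading, groups A and B contribute four crown models each, and models C, D, and E are single models, for eleven trained models in total.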

3.6. Metrics for CNN Performance Evaluation
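The results tables report true positives (TP), false positives (FP), and false negatives (FN) together with precision, recall, and F1. A minimal sketch of the standard definitions of these three metrics follows. The function name is hypothetical, and the rule used to decide whether a predicted segment counts as a true positive (for example, an IoU threshold) is outside the scope of this sketch.

```python
from typing import Tuple


def precision_recall_f1(tp: int, fp: int, fn: int) -> Tuple[float, float, float]:
    """Standard detection metrics from TP / FP / FN counts.

    precision = TP / (TP + FP)
    recall    = TP / (TP + FN)
    F1        = 2 * precision * recall / (precision + recall)
    Ratios with a zero denominator are reported as 0.0 (assumed convention).
    """
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return precision, recall, f1


# Counts taken from one RGB-13 row of the crown results (TP=119, FP=0, FN=1).
p, r, f1 = precision_recall_f1(tp=119, fp=0, fn=1)
print(f"precision={p:.4f} recall={r:.4f} F1={f1:.4f}")
```

Some tabulated values differ from this computation in the last digit (for example, a recall of 0.9916 where 119/120 rounds to 0.9917), which suggests the table entries were truncated rather than rounded.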

3.7. Biovolume Calculation from Tree Crown and Tree Shadow Estimations

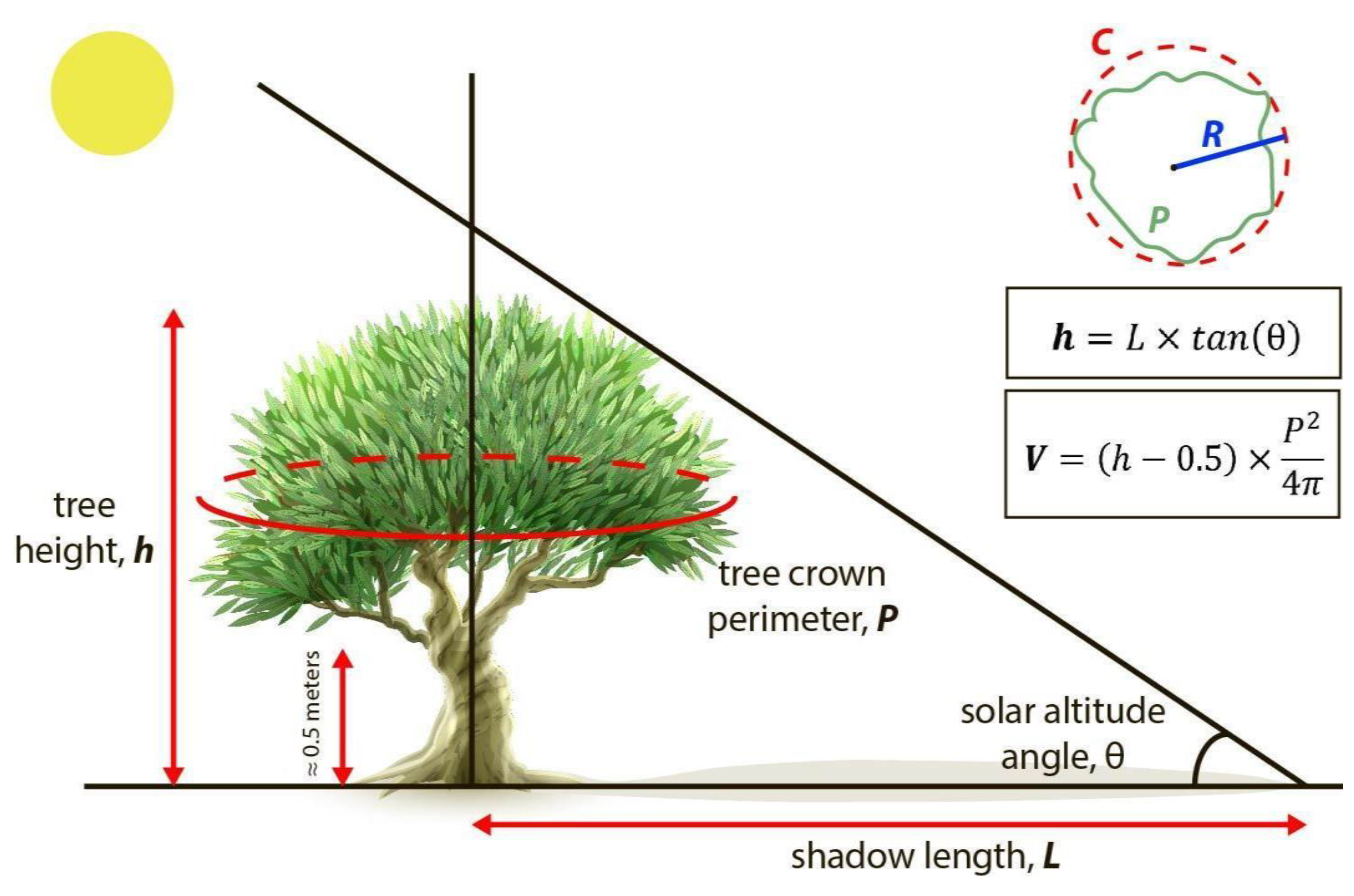

- For the tree crown surface (S), we first obtained the perimeter (P) of the tree crown polygon and then calculated the area of a circle with the same perimeter, that is, S = P²/(4π).

- For tree height (h), we followed [44] to derive tree heights from tree shadows. On flat terrain, the height of the tree (h) can be calculated from the length of the shadow (L) and the angle (θ) between the horizon and the sun altitude in the sky. The tree shadow length was derived from the shadow polygons as the distance from the tree crown polygon to the far end of the shadow polygon, using QGIS 2.14.21. The angle between the horizon and the sun altitude can be calculated from the geographical position (latitude and longitude) and the date and time of image acquisition (7) [45]. The flight time and date of the DJI-Phantom 4 Pro drone was 10:51, 9 February 2019, at coordinates 37°23′57″ N 3°24′47″ W, so θ was 29.61°. The flight time and date of the Parrot Disco-Pro AG was 18:54, 19 June 2019, at coordinates 37°23′57″ N 3°24′47″ W, so θ was 26.22° [46]: h = L × tan(θ) (7)

- Finally, for the tree canopy volume (V), we approximated the biovolume in m³ by multiplying the tree crown surface (S) in m² by the tree height minus 0.5 m (h − 0.5) in m. We systematically subtracted 0.5 m from the tree height to exclude the lower part of the tree trunk, which has no branches (on average about 0.5 m in height) (Figure 5). Although we could only take six ground-truth samples of canopy biovolume, we assessed the overall accuracy by comparing, for each individual tree i of the N trees, the approximate canopy volume estimated from ground-truth measurements (VG) with the approximate canopy volume derived from the Mask R-CNN segmentation of tree crowns and shadows (VM).
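The three steps above can be sketched as follows. This is an illustrative sketch under stated assumptions, not the authors' code: the function names are hypothetical, the height relation is taken to be the flat-terrain form h = L × tan(θ), and clamping the canopy height at zero for trees shorter than 0.5 m is an added safeguard that the text does not mention.

```python
import math

TRUNK_OFFSET_M = 0.5  # branch-free lower trunk excluded from the canopy


def crown_area_from_perimeter(perimeter_m: float) -> float:
    """Area (m^2) of a circle with the same perimeter: S = P^2 / (4 * pi)."""
    return perimeter_m ** 2 / (4 * math.pi)


def height_from_shadow(shadow_length_m: float, sun_elevation_deg: float) -> float:
    """Tree height (m) on flat terrain: h = L * tan(theta)."""
    return shadow_length_m * math.tan(math.radians(sun_elevation_deg))


def biovolume(perimeter_m: float, height_m: float) -> float:
    """Canopy biovolume (m^3): V = S * (h - 0.5).

    The canopy height is clamped at 0 (assumption, not stated in the text).
    """
    canopy_height = max(height_m - TRUNK_OFFSET_M, 0.0)
    return crown_area_from_perimeter(perimeter_m) * canopy_height


# Ground-truth tree 1 from the biovolume table: P = 6.3 m, h = 2.5 m.
print(round(biovolume(6.3, 2.5), 2))

# Tree 1 on RGB-3: shadow length 4.3 m with theta = 29.61 degrees.
h = height_from_shadow(4.3, 29.61)
print(round(h, 1))
```

For ground-truth tree 1 this gives about 6.32 m³, close to the 6.31 listed in the table, and the 4.3 m shadow gives a height of about 2.4 m, matching the tabulated height. Estimated volumes in the table may not reproduce exactly from the rounded P, L, and h values shown there.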

4. Experimental Results

4.1. Tree Crown and Tree Shadow Segmentation with RGB and Vegetation Indices Images

4.2. Results of Tree Biovolume Calculations

5. Discussion and Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| CNN | Convolutional Neural Network |
| OTCSS | Olive Tree Crown and Shadow Segmentation dataset |
| UAV | Unmanned Aerial Vehicle |
| IoU | Intersection over Union |
| VGG | Visual Geometry Group |
| RGB | Red-Green-Blue |
| R-CNN | Region-based CNN |
| NIR | Near-infrared |
| NDVI | Normalized Difference Vegetation Index |
| GNDVI | Green Normalized Difference Vegetation Index |
| GPU | Graphics Processing Unit |
| CPU | Central Processing Unit |

References

1. Martínez-Valderrama, J.; Guirado, E.; Maestre, F.T. Unraveling Misunderstandings about Desertification: The Paradoxical Case of the Tabernas-Sorbas Basin in Southeast Spain. Land 2020, 9, 269.
2. Olive Oil in the EU. Available online: https://ec.europa.eu/info/food-farming-fisheries/plants-and-plant-products/plant-products/olive-oil_en (accessed on 6 April 2020).
3. Scagliarini, M. Xylella, l’UE Cambierà le Misure di Emergenze: Ridotta L’area di Taglio. Available online: https://www.lagazzettadelmezzogiorno.it/news/home/1184219/xylella-l-ue-cambiera-le-misure-di-emergenze-ridotta-l-area-di-taglio.html (accessed on 24 July 2020).
4. Brito, C.; Dinis, L.-T.; Moutinho-Pereira, J.; Correia, C.M. Drought Stress Effects and Olive Tree Acclimation under a Changing Climate. Plants 2019, 8, 232.
5. Sofo, A.; Manfreda, S.; Fiorentino, M.; Dichio, B.; Xiloyannis, C. The Olive Tree: A Paradigm for Drought Tolerance in Mediterranean Climates. Hydrol. Earth Syst. Sci. 2008, 12, 293–301.
6. Guirado, E.; Tabik, S.; Alcaraz-Segura, D.; Cabello, J.; Herrera, F. Deep-Learning Versus OBIA for Scattered Shrub Detection with Google Earth Imagery: Ziziphus Lotus as Case Study. Remote Sens. 2017, 9, 1220.
7. Guirado, E.; Alcaraz-Segura, D.; Cabello, J.; Puertas-Ruíz, S.; Herrera, F.; Tabik, S. Tree Cover Estimation in Global Drylands from Space Using Deep Learning. Remote Sens. 2020, 12, 343.
8. Guirado, E.; Blanco-Sacristán, J.; Rodríguez-Caballero, E.; Tabik, S.; Alcaraz-Segura, D.; Martínez-Valderrama, J.; Cabello, J. Mask R-CNN and OBIA Fusion Improves the Segmentation of Scattered Vegetation in Very High-Resolution Optical Sensors. Sensors 2021, 21, 320.
9. Brandt, M.; Tucker, C.J.; Kariryaa, A.; Rasmussen, K.; Abel, C.; Small, J.; Chave, J.; Rasmussen, L.V.; Hiernaux, P.; Diouf, A.A.; et al. An Unexpectedly Large Count of Trees in the West African Sahara and Sahel. Nature 2020, 587, 78–82.
10. Stateras, D.; Kalivas, D. Assessment of Olive Tree Canopy Characteristics and Yield Forecast Model Using High Resolution UAV Imagery. Agriculture 2020, 10, 385.
11. Lukas, V.; Novák, J.; Neudert, L.; Svobodova, I.; Rodriguez-Moreno, F.; Edrees, M.; Kren, J. The Combination of UAV Survey and Landsat Imagery for Monitoring of Crop Vigor in Precision Agriculture. ISPRS Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2016, 41B8, 953–957.
12. Candiago, S.; Remondino, F.; De Giglio, M.; Dubbini, M.; Gattelli, M. Evaluating Multispectral Images and Vegetation Indices for Precision Farming Applications from UAV Images. Remote Sens. 2015, 7, 4026–4047.
13. Cárdenas, D.A.G.; Valencia, J.A.R.; Velásquez, D.F.A.; Gonzalez, J.R.P. Dynamics of the Indices NDVI and GNDVI in a Rice Growing in Its Reproduction Phase from Multispectral Aerial Images Taken by Drones. In Proceedings of the Advances in Information and Communication Technologies for Adapting Agriculture to Climate Change II, Cali, Colombia, 22 November 2018; Springer: Cham, Switzerland, 2018; pp. 106–119.
14. Gitelson, A.A.; Kaufman, Y.J.; Merzlyak, M.N. Use of a Green Channel in Remote Sensing of Global Vegetation from EOS-MODIS. Remote Sens. Environ. 1996, 58, 289–298.
15. Basso, M.; Stocchero, D.; Ventura Bayan Henriques, R.; Vian, A.; Bredemeier, C.; Konzen, A. Proposal for an Embedded System Architecture Using a GNDVI Algorithm to Support UAV-Based Agrochemical Spraying. Sensors 2019, 19, 5397.
16. Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet Classification with Deep Convolutional Neural Networks. In Proceedings of the 25th International Conference on Neural Information Processing Systems-Volume 1, Red Hook, NY, USA, 3 December 2012; Curran Associates Inc.: Red Hook, NY, USA, 2012; pp. 1097–1105.
17. Zhang, W.; Tang, P.; Zhao, L. Remote Sensing Image Scene Classification Using CNN-CapsNet. Remote Sens. 2019, 11, 494.
18. Gonzalez-Fernandez, I.; Iglesias-Otero, M.A.; Esteki, M.; Moldes, O.A.; Mejuto, J.C.; Simal-Gandara, J. A Critical Review on the Use of Artificial Neural Networks in Olive Oil Production, Characterization and Authentication. Crit. Rev. Food Sci. Nutr. 2019, 59, 1913–1926.
19. Holloway, J.; Mengersen, K. Statistical Machine Learning Methods and Remote Sensing for Sustainable Development Goals: A Review. Remote Sens. 2018, 10, 1365.
20. DeLancey, E.R.; Simms, J.F.; Mahdianpari, M.; Brisco, B.; Mahoney, C.; Kariyeva, J. Comparing Deep Learning and Shallow Learning for Large-Scale Wetland Classification in Alberta, Canada. Remote Sens. 2020, 12, 2.
21. He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask R-CNN. arXiv 2018, arXiv:1703.06870.
22. Tabik, S.; Peralta, D.; Herrera-Poyatos, A.; Herrera, F. A Snapshot of Image Pre-Processing for Convolutional Neural Networks: Case Study of MNIST. Int. J. Comput. Intell. Syst. 2017, 10, 555–568.
23. Zhang, Q.; Qin, R.; Huang, X.; Fang, Y.; Liu, L. Classification of Ultra-High Resolution Orthophotos Combined with DSM Using a Dual Morphological Top Hat Profile. Remote Sens. 2015, 7, 16422–16440.
24. Safonova, A.; Tabik, S.; Alcaraz-Segura, D.; Rubtsov, A.; Maglinets, Y.; Herrera, F. Detection of Fir Trees (Abies Sibirica) Damaged by the Bark Beetle in Unmanned Aerial Vehicle Images with Deep Learning. Remote Sens. 2019, 11, 643.
25. Natesan, S.; Armenakis, C.; Vepakomma, U. ResNet-based tree species classification using UAV images. In Proceedings of the ISPRS-International Archives of the Photogrammetry, Remote Sensing and Spatial Information Sciences; Copernicus GmbH: Enschede, The Netherlands, 2019; Volume XLII-2-W13, pp. 475–481.
26. Fan, Z.; Lu, J.; Gong, M.; Xie, H.; Goodman, E.D. Automatic Tobacco Plant Detection in UAV Images via Deep Neural Networks. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2018, 11, 876–887.
27. Kitano, B.T.; Mendes, C.C.T.; Geus, A.R.; Oliveira, H.C.; Souza, J.R. Corn Plant Counting Using Deep Learning and UAV Images. IEEE Geosci. Remote Sens. Lett. 2019, 1–5.
28. Wu, H.; Wiesner-Hanks, T.; Stewart, E.L.; DeChant, C.; Kaczmar, N.; Gore, M.A.; Nelson, R.J.; Lipson, H. Autonomous Detection of Plant Disease Symptoms Directly from Aerial Imagery. Plant Phenome J. 2019, 2.
29. Castelão Tetila, E.; Brandoli Machado, B.; Menezes, G.K.; Oliveira, A.d.S.; Alvarez, M.; Amorim, W.P.; de Souza Belete, N.A.; da Silva, G.G.; Pistori, H. Automatic Recognition of Soybean Leaf Diseases Using UAV Images and Deep Convolutional Neural Networks. IEEE Geosci. Remote Sens. Lett. 2019, 17, 1–5.
30. Csillik, O.; Cherbini, J.; Johnson, R.; Lyons, A.; Kelly, M. Identification of Citrus Trees from Unmanned Aerial Vehicle Imagery Using Convolutional Neural Networks. Drones 2018, 2, 39.
31. Neupane, B.; Horanont, T.; Hung, N.D. Deep Learning Based Banana Plant Detection and Counting Using High-Resolution Red-Green-Blue (RGB) Images Collected from Unmanned Aerial Vehicle (UAV). PLoS ONE 2019, 14.
32. dos Santos, A.A.; Marcato Junior, J.; Araújo, M.S.; Di Martini, D.R.; Tetila, E.C.; Siqueira, H.L.; Aoki, C.; Eltner, A.; Matsubara, E.T.; Pistori, H.; et al. Assessment of CNN-Based Methods for Individual Tree Detection on Images Captured by RGB Cameras Attached to UAVs. Sensors 2019, 19, 3595.
33. Redmon, J.; Farhadi, A. YOLOv3: An Incremental Improvement. arXiv 2018, arXiv:1804.02767.
34. Onishi, M.; Ise, T. Automatic Classification of Trees Using a UAV Onboard Camera and Deep Learning. arXiv 2018, arXiv:1804.10390.
35. Zhao, T.; Yang, Y.; Niu, H.; Wang, D.; Chen, Y. Comparing U-Net Convolutional Network with Mask R-CNN in the Performances of Pomegranate Tree Canopy Segmentation. In Proceedings of the Multispectral, Hyperspectral, and Ultraspectral Remote Sensing Technology, Techniques and Applications VII, Honolulu, HI, USA, 24–26 September 2018; International Society for Optics and Photonics: Bellingham, WA, USA, 2018; Volume 10780, p. 107801J.
36. Lobo Torres, D.; Queiroz Feitosa, R.; Nigri Happ, P.; Elena Cué La Rosa, L.; Marcato Junior, J.; Martins, J.; Olã Bressan, P.; Gonçalves, W.N.; Liesenberg, V. Applying Fully Convolutional Architectures for Semantic Segmentation of a Single Tree Species in Urban Environment on High Resolution UAV Optical Imagery. Sensors 2020, 20, 563.
37. Gurumurthy, V.A.; Kestur, R.; Narasipura, O. Mango Tree Net--A Fully Convolutional Network for Semantic Segmentation and Individual Crown Detection of Mango Trees. arXiv 2019, arXiv:1907.06915.
38. Measuring Vegetation (NDVI & EVI). Available online: https://earthobservatory.nasa.gov/features/MeasuringVegetation (accessed on 19 December 2020).
39. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. arXiv 2015, arXiv:1512.03385.
40. COCO-Common Objects in Context. Available online: https://cocodataset.org/#keypoints-eval (accessed on 21 November 2020).
41. TensorFlow 2 Object Detection API Tutorial—TensorFlow 2 Object Detection API Tutorial Documentation. Available online: https://tensorflow-object-detection-api-tutorial.readthedocs.io/en/latest/ (accessed on 18 December 2020).
42. Sasaki, Y. The Truth of the F-Measure. Teach Tutor Mater 2007, 1–5. Available online: https://www.cs.odu.edu/~mukka/cs795sum09dm/Lecturenotes/Day3/F-measure-YS-26Oct07.pdf (accessed on 20 February 2021).
43. Rezatofighi, H.; Tsoi, N.; Gwak, J.; Sadeghian, A.; Reid, I.; Savarese, S. Generalized Intersection over Union: A Metric and A Loss for Bounding Box Regression. arXiv 2019, arXiv:1902.09630.
44. Barlow, J.F.; Harrison, G. Shaded by Trees? Trees in focus. In Practical Care and Management; APN 5; Arboricultural Advisory and Information Service: Alice Holt Lodge, Wrecclesham, Farnham GU10 4LH, UK, 1999; pp. 1–8. Available online: https://www.trees.org.uk/Trees.org.uk/files/d1/d13a81b7-f8f5-4af3-891a-b86ec5b1a507.pdf (accessed on 10 February 2021).
45. Wolter, P.T.; Berkley, E.A.; Peckham, S.D.; Singh, A.; Townsend, P.A. Exploiting Tree Shadows on Snow for Estimating Forest Basal Area Using Landsat Data. Remote Sens. Environ. 2012, 121, 69–79.
46. SunCalc Sun Position- Und Sun Phases Calculator. Available online: https://www.suncalc.org (accessed on 7 April 2020).
47. Yeom, J.; Jung, J.; Chang, A.; Ashapure, A.; Maeda, M.; Maeda, A.; Landivar, J. Comparison of Vegetation Indices Derived from UAV Data for Differentiation of Tillage Effects in Agriculture. Remote Sens. 2019, 11, 1548.
48. Chen, Y.; Li, C.; Ghamisi, P.; Jia, X.; Gu, Y. Deep fusion of remote sensing data for accurate classification. IEEE Geosci. Remote Sens. Lett. 2017, 14, 1253–1257.
49. Handique, B.K.; Khan, A.Q.; Goswami, C.; Prashnani, M.; Gupta, C.; Raju, P.L.N. Crop Discrimination Using Multispectral Sensor Onboard Unmanned Aerial Vehicle. Proc. Natl. Acad. Sci. India Sect. Phys. Sci. 2017, 87, 713–719.
50. Hunt, E.R.; Horneck, D.A.; Spinelli, C.B.; Turner, R.W.; Bruce, A.E.; Gadler, D.J.; Brungardt, J.J.; Hamm, P.B. Monitoring Nitrogen Status of Potatoes Using Small Unmanned Aerial Vehicles. Precis. Agric. 2018, 19, 314–333.
51. Varkarakis, V.; Bazrafkan, S.; Corcoran, P. Deep neural network and data augmentation methodology for off-axis iris segmentation in wearable headsets. Neural Netw. 2020, 121, 101–121.
52. Ulku, I.; Barmpoutis, P.; Stathaki, T.; Akagunduz, E. Comparison of Single Channel Indices for U-Net Based Segmentation of Vegetation in Satellite Images. In Proceedings of the Twelfth International Conference on Machine Vision (ICMV 2019), Amsterdam, The Netherlands, 31 January 2020; Volume 11433, p. 1143319.
53. Jiménez-Brenes, F.M.; López-Granados, F.; de Castro, A.I.; Torres-Sánchez, J.; Serrano, N.; Peña, J.M. Quantifying Pruning Impacts on Olive Tree Architecture and Annual Canopy Growth by Using UAV-Based 3D Modelling. Plant Methods 2017, 13, 55.
54. Estornell Cremades, J.; Velázquez Martí, B.; López Cortés, I.; Salazar Hernández, D.M.; Fernández-Sarría, A. Estimation of wood volume and height of olive tree plantations using airborne discrete-return LiDAR data. GISci. Remote Sens. 2014, 17–29.

| Tree Crown Subset | # of Training Images | # of Training Segments | # of Testing Images | # of Testing Segments | Total Images | Total Segments |
|---|---|---|---|---|---|---|
| RGB-3 | 120 | 480 | 30 | 120 | 150 | 600 |
| RGB-13 | 120 | 480 | 30 | 120 | 150 | 600 |
| NDVI-13 | 120 | 480 | 30 | 120 | 150 | 600 |
| GNDVI-13 | 120 | 480 | 30 | 120 | 150 | 600 |
| Total | 480 | 1920 | 120 | 480 | 600 | 2400 |

| Tree Shadow Subset | # of Training Images | # of Training Segments | # of Testing Images | # of Testing Segments | Total Images | Total Segments |
|---|---|---|---|---|---|---|
| RGB-3 | 120 | 480 | 30 | 120 | 150 | 600 |
| RGB-13 | 120 | 480 | 30 | 120 | 150 | 600 |
| NDVI-13 | 120 | 480 | 30 | 120 | 150 | 600 |
| GNDVI-13 | 120 | 480 | 30 | 120 | 150 | 600 |
| Total | 480 | 1920 | 120 | 480 | 600 | 2400 |

| Testing Subset | TP | FP | FN | Precision | Recall | F1 |
|---|---|---|---|---|---|---|
| Tree crowns, models A (trained without data augmentation) | | | | | | |
| RGB-3 | 120 | 0 | 0 | 1.0000 | 1.0000 | 1.0000 |
| RGB-13 | 119 | 0 | 1 | 1.0000 | 0.9916 | 0.9958 |
| NDVI-13 | 114 | 2 | 6 | 0.9827 | 0.9500 | 0.9660 |
| GNDVI-13 | 110 | 0 | 10 | 1.0000 | 0.9166 | 0.9564 |
| Tree crowns, models B (trained with data augmentation) | | | | | | |
| RGB-3 | 120 | 0 | 0 | 1.0000 | 1.0000 | 1.0000 |
| RGB-13 | 118 | 0 | 2 | 1.0000 | 0.9833 | 0.9915 |
| NDVI-13 | 118 | 13 | 2 | 0.9007 | 0.9833 | 0.9401 |
| GNDVI-13 | 118 | 12 | 2 | 0.9076 | 0.9833 | 0.9439 |
| Tree crowns, model C (data fusion at 13 cm/pixel) | | | | | | |
| RGB-13 | 119 | 0 | 1 | 1.0000 | 0.9916 | 0.9958 |
| NDVI-13 | 116 | 0 | 4 | 1.0000 | 0.9666 | 0.9830 |
| GNDVI-13 | 109 | 0 | 11 | 1.0000 | 0.9083 | 0.9519 |

| Testing Subset | TP | FP | FN | Precision | Recall | F1 |
|---|---|---|---|---|---|---|
| Tree shadows, model D (trained on RGB-3 with data augmentation) | | | | | | |
| RGB-3 | 120 | 0 | 0 | 1.0000 | 1.0000 | 1.0000 |
| Tree shadows, model E (data fusion at 13 cm/pixel) | | | | | | |
| RGB-13 | 119 | 0 | 1 | 1.0000 | 0.9916 | 0.9958 |
| NDVI-13 | 111 | 0 | 9 | 1.0000 | 0.9250 | 0.9610 |
| GNDVI-13 | 117 | 0 | 3 | 1.0000 | 0.9750 | 0.9873 |

Ground truth (GT) compared with estimates from Models A & D (tested on RGB-3) and Models C & E (tested on RGB-13, NDVI-13, and GNDVI-13). N: tree number; P: crown perimeter (m); L: shadow length (m); h: tree height (m); V: biovolume (m³).

| N | GT P | GT h | GT V | RGB-3 P | RGB-3 L | RGB-3 h | RGB-3 V | RGB-13 P | RGB-13 L | RGB-13 h | RGB-13 V | NDVI-13 P | NDVI-13 L | NDVI-13 h | NDVI-13 V | GNDVI-13 P | GNDVI-13 L | GNDVI-13 h | GNDVI-13 V |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 6.3 | 2.5 | 6.31 | 6.6 | 4.3 | 2.4 | 6.70 | 7.1 | 4.1 | 2.3 | 7.34 | 7.7 | 3.6 | 1.8 | 6.00 | 9.4 | 3.6 | 1.8 | 8.95 |
| 2 | 6.5 | 2.6 | 7.06 | 6.5 | 4.8 | 2.7 | 7.40 | 8.0 | 4.3 | 2.4 | 9.89 | 8.2 | 4.5 | 2.2 | 9.18 | 8.2 | 4.5 | 2.2 | 9.18 |
| 3 | 8.3 | 3.0 | 13.70 | 8.8 | 4.6 | 2.6 | 13.02 | 10.0 | 5.8 | 3.3 | 22.25 | 10.0 | 5.2 | 2.6 | 16.4 | 10.6 | 5.2 | 2.6 | 18.42 |
| 4 | 8.5 | 3.0 | 14.37 | 8.5 | 5.2 | 2.9 | 14.11 | 8.7 | 5.1 | 2.9 | 14.34 | 9.1 | 4.8 | 2.4 | 12.28 | 10.6 | 4.8 | 2.4 | 16.66 |
| 5 | 8.1 | 2.9 | 12.53 | 8.1 | 5.4 | 3.1 | 13.41 | 8.1 | 5.9 | 3.4 | 14.89 | 8.4 | 4.5 | 2.2 | 9.63 | 9.2 | 4.5 | 2.2 | 11.56 |
| 6 | 8.7 | 3.0 | 15.05 | 8.4 | 5.9 | 3.3 | 16.02 | 8.5 | 5.1 | 2.9 | 13.78 | 9.2 | 5.0 | 2.5 | 13.21 | 10.1 | 5.0 | 2.5 | 15.93 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Safonova, A.; Guirado, E.; Maglinets, Y.; Alcaraz-Segura, D.; Tabik, S. Olive Tree Biovolume from UAV Multi-Resolution Image Segmentation with Mask R-CNN. Sensors 2021, 21, 1617. https://doi.org/10.3390/s21051617
