Evaluation and Selection of Video Stabilization Techniques for UAV-Based Active Infrared Thermography Application

Abstract

1. Introduction

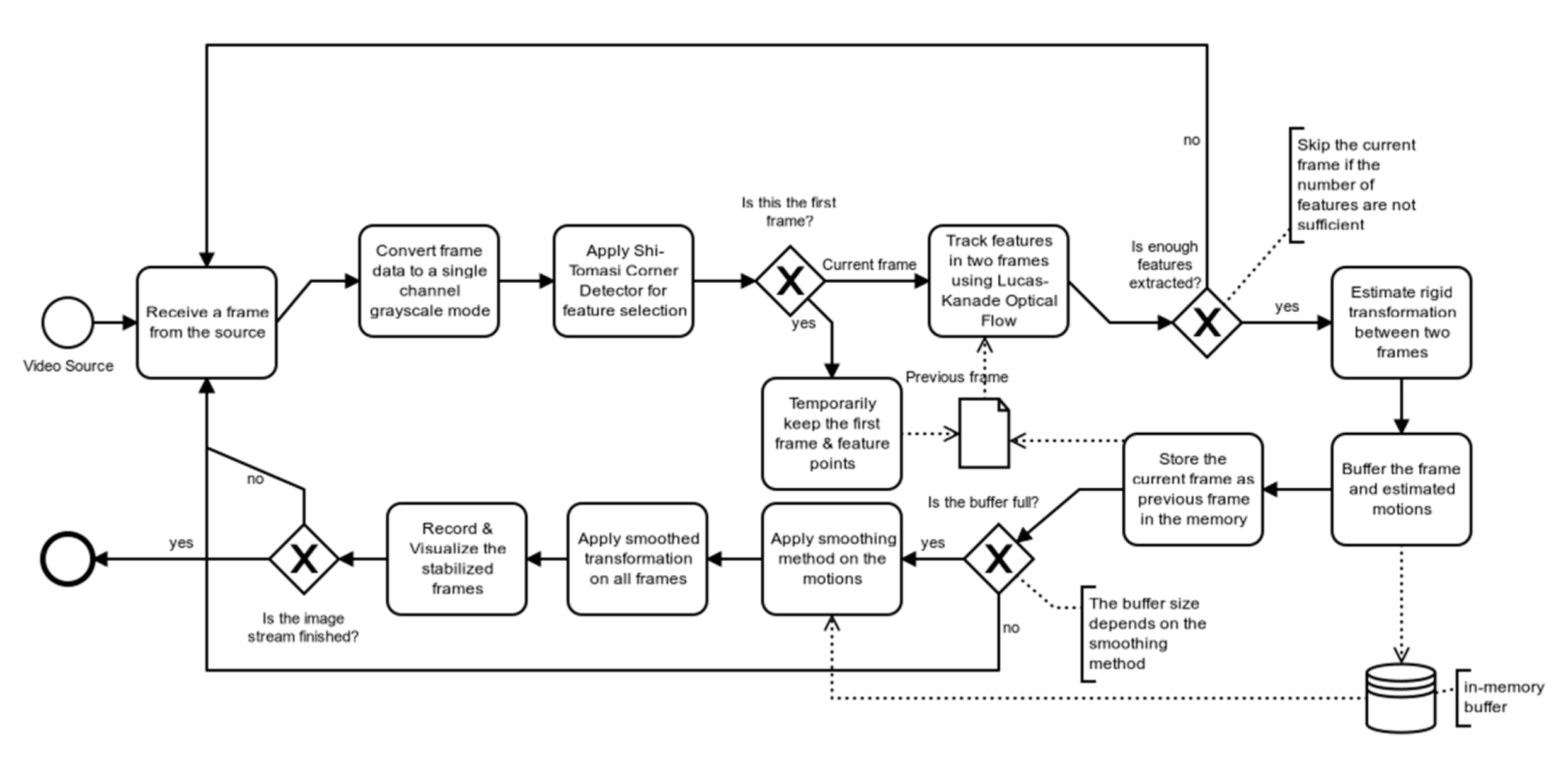

1.1. General UAV Applications

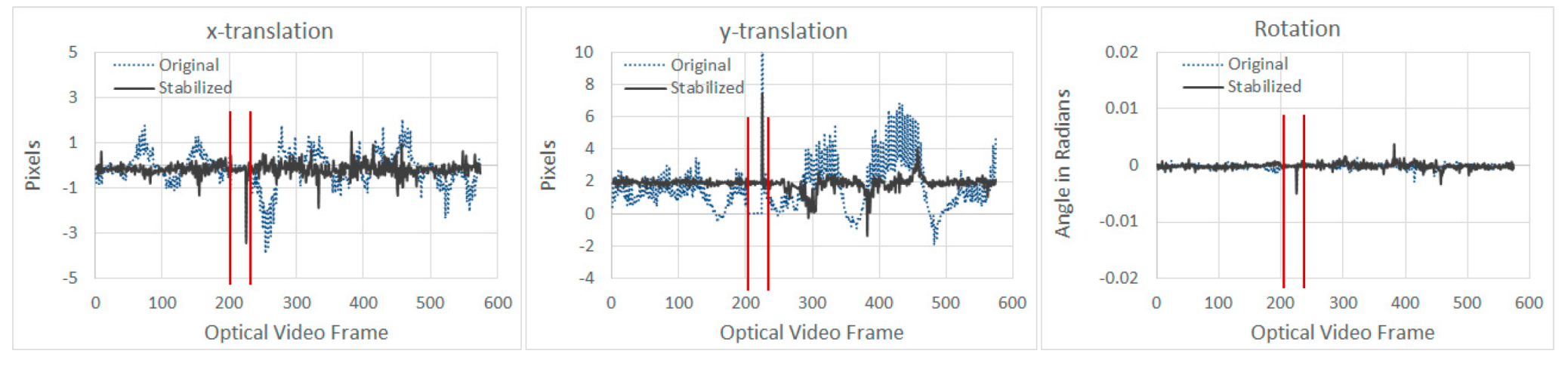

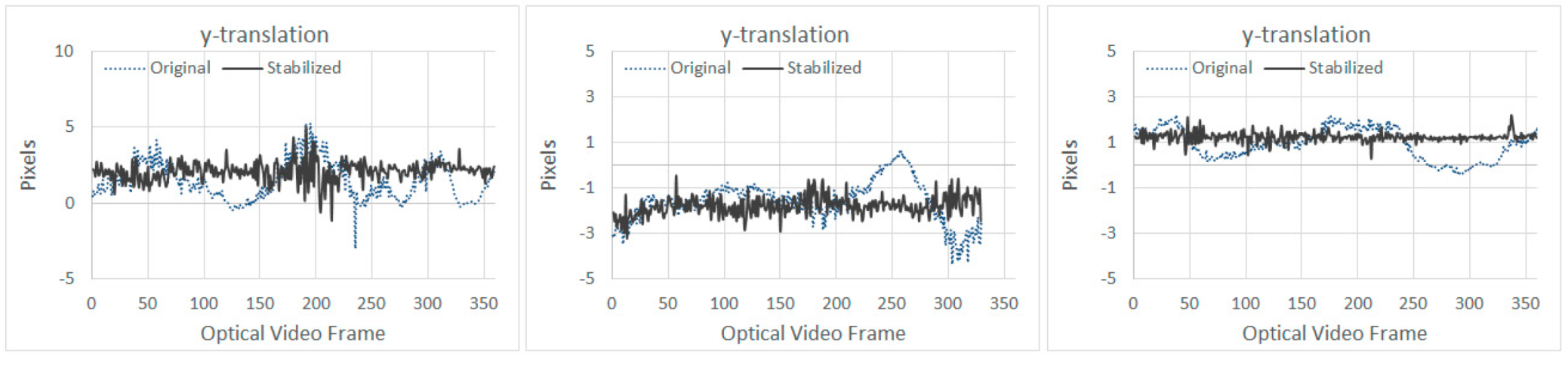

1.2. Passive Thermography and UAV Applications

1.3. Active Thermography and UAV Applications

1.4. Video Stabilization for UAV Applications

2. Experimental Setup

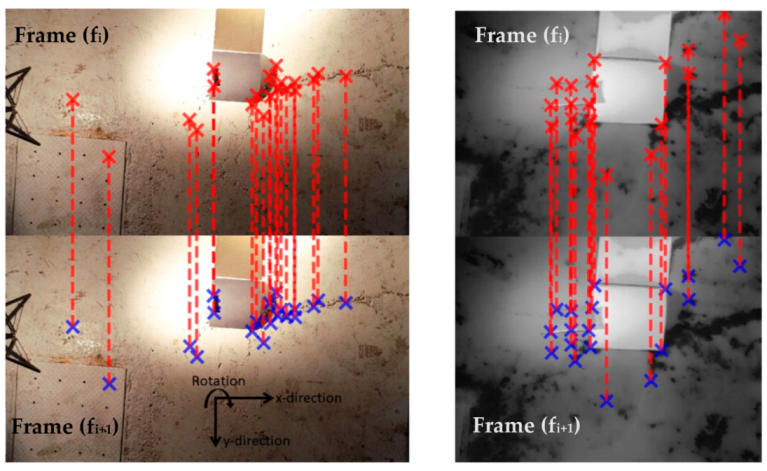

3. Methodology

4. Results

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Row | Defect Type | Quantity | Size | Depth |
|---|---|---|---|---|
| 1 | Circular | 4 | 5 cm (diameter) | 2.2 cm |
| 2 | Circular | 4 | 5 cm (diameter) | 1 cm (filled) |
| 3 | Circular | 4 | 5 cm (diameter) | (filled) |
| 4 | Circular | 4 | 5 cm (diameter) | (filled) |
| 5 | Rectangular | 1 | 2 × 24 cm | 2 cm |

| Smoothing Algorithm | x-Translation Lower Bound (pixels) | x-Translation Upper Bound (pixels) | y-Translation Lower Bound (pixels) | y-Translation Upper Bound (pixels) | Rotation Lower Bound (radians) | Rotation Upper Bound (radians) | MS-SSIM Min | MS-SSIM Max | MS-SSIM Average | Average BB Percent |
|---|---|---|---|---|---|---|---|---|---|---|
| Original | −4.54 | 2.28 | −1.98 | 7.74 | 0.00 | 0.00 | 0.72 | 1.00 | 0.86 | 0.00 |
| EMA-1s | −3.18 | 1.50 | 0.25 | 10.97 | 0.00 | 0.00 | 0.75 | 0.99 | 0.88 | 0.08 |
| EMA-3s | −4.32 | 1.42 | −0.97 | 8.55 | 0.00 | 0.01 | 0.80 | 0.99 | 0.90 | 4.85 |
| EMA-5s | −24.55 | 1.36 | 0.00 | 42.68 | 0.00 | 0.00 | 0.84 | 0.99 | 0.92 | 12.67 |
| GF-1s | −3.08 | 1.81 | 1.31 | 7.86 | 0.00 | 0.00 | 0.74 | 0.96 | 0.88 | 0.00 |
| GF-3s | −1.06 | 1.14 | 1.33 | 5.00 | 0.00 | 0.00 | 0.81 | 0.95 | 0.88 | 0.00 |
| GF-5s | −1.87 | 0.85 | 1.22 | 4.29 | 0.00 | 0.00 | 0.83 | 0.96 | 0.90 | 0.20 |
| LBW | −5.94 | 2.65 | −2.42 | 10.43 | 0.00 | 0.00 | 0.71 | 1.00 | 0.87 | 0.00 |
| LR | −1.23 | 5.00 | 0.68 | 4.20 | 0.00 | 0.00 | 0.78 | 0.91 | 0.86 | 0.12 |
| SMA-1s | −4.71 | 2.05 | −1.20 | 9.94 | 0.00 | 0.00 | 0.73 | 0.99 | 0.87 | 0.00 |
| SMA-3s | −3.13 | 6.10 | 0.03 | 9.94 | 0.00 | 0.00 | 0.70 | 0.99 | 0.88 | 1.05 |
| SMA-5s | −2.28 | 1.40 | −0.04 | 7.64 | 0.00 | 0.00 | 0.75 | 0.99 | 0.88 | 3.56 |
| SVR-LR | −0.92 | 7.71 | 0.74 | 4.19 | 0.00 | 0.00 | 0.79 | 0.91 | 0.86 | 0.34 |
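The row labels in the table above (SMA-1s, EMA-3s, GF-5s and so on) presumably denote a simple moving average, an exponential moving average and a Gaussian filter applied to the estimated camera trajectory over windows of 1, 3 or 5 s. The following is a minimal pure-Python sketch of that idea, not the authors' implementation: the helper names, the conversion of a window in seconds to an odd sample count, the EMA factor alpha = 2/(N + 1) and the Gaussian sigma = N/6 are all assumptions. As in point-feature-matching stabilization, the per-frame motion (here a single hypothetical x-shift component) is accumulated into a trajectory, the trajectory is smoothed, and the difference between the smoothed and raw trajectories gives the correction to apply to each frame.

```python
import math
from typing import Callable, List

# Hypothetical helpers: names, window conversion, EMA factor and Gaussian sigma are assumptions.


def window_size(seconds: float, fps: float) -> int:
    """Convert a window length in seconds to an odd number of samples (assumed convention)."""
    n = max(1, int(round(seconds * fps)))
    return n if n % 2 == 1 else n + 1


def sma(signal: List[float], n: int) -> List[float]:
    """Centred simple moving average; the window is truncated at the ends of the signal."""
    half = n // 2
    out = []
    for i in range(len(signal)):
        lo, hi = max(0, i - half), min(len(signal), i + half + 1)
        out.append(sum(signal[lo:hi]) / (hi - lo))
    return out


def ema(signal: List[float], n: int) -> List[float]:
    """Causal exponential moving average with smoothing factor alpha = 2 / (n + 1)."""
    if not signal:
        return []
    alpha = 2.0 / (n + 1)
    out = [signal[0]]
    for x in signal[1:]:
        out.append(alpha * x + (1.0 - alpha) * out[-1])
    return out


def gaussian(signal: List[float], n: int) -> List[float]:
    """Centred Gaussian-weighted average (sigma = n / 6); weights renormalised at the ends."""
    half = n // 2
    sigma = max(n / 6.0, 1e-6)
    offsets = range(-half, half + 1)
    kernel = [math.exp(-0.5 * (k / sigma) ** 2) for k in offsets]
    out = []
    for i in range(len(signal)):
        acc = wsum = 0.0
        for k, w in zip(offsets, kernel):
            j = i + k
            if 0 <= j < len(signal):
                acc += w * signal[j]
                wsum += w
        out.append(acc / wsum)
    return out


def stabilising_correction(
    dx: List[float], smoother: Callable[[List[float], int], List[float]], n: int
) -> List[float]:
    """Accumulate inter-frame motion into a trajectory, smooth it, return smoothed minus raw."""
    trajectory, total = [], 0.0
    for d in dx:
        total += d
        trajectory.append(total)
    smooth = smoother(trajectory, n)
    return [s - t for s, t in zip(smooth, trajectory)]


if __name__ == "__main__":
    # Hypothetical inter-frame x-shifts (pixels) for a short jittery clip at an assumed 30 fps.
    shifts = [1.0, -2.0, 3.0, -1.0, 2.0, -3.0, 1.0, 0.5]
    n = window_size(0.1, 30)  # 3 samples
    for name, f in (("SMA", sma), ("EMA", ema), ("GF", gaussian)):
        print(name, [round(c, 2) for c in stabilising_correction(shifts, f, n)])
```

The same correction would be computed separately for the y-translation and rotation components. The centred SMA and Gaussian filters use future samples and therefore suit offline processing, whereas the EMA uses only past samples and so trails the raw motion.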

| Smoothing Algorithm | x-Translation Lower Bound (pixels) | x-Translation Upper Bound (pixels) | y-Translation Lower Bound (pixels) | y-Translation Upper Bound (pixels) | Rotation Lower Bound (radians) | Rotation Upper Bound (radians) | MS-SSIM Min | MS-SSIM Max | MS-SSIM Average | Average BB Percent |
|---|---|---|---|---|---|---|---|---|---|---|
| Original | −1.74 | 1.55 | −1.90 | 4.59 | 0.00 | 0.00 | 0.86 | 1.00 | 0.97 | 0.00 |
| EMA-1s | −1.37 | 1.11 | −0.46 | 3.71 | 0.00 | 0.00 | 0.92 | 1.00 | 0.98 | 0.40 |
| EMA-3s | −1.14 | 0.88 | −0.48 | 3.99 | 0.00 | 0.00 | 0.89 | 1.00 | 0.98 | 8.64 |
| EMA-5s | −1.09 | 0.83 | −0.74 | 3.99 | 0.00 | 0.00 | 0.85 | 1.00 | 0.99 | 19.33 |
| GF-1s | −1.29 | 0.99 | −0.07 | 3.45 | 0.00 | 0.00 | 0.92 | 1.00 | 0.98 | 0.00 |
| GF-3s | −0.76 | 0.45 | 0.16 | 3.34 | 0.00 | 0.00 | 0.93 | 0.99 | 0.98 | 0.10 |
| GF-5s | −0.70 | 0.42 | 0.33 | 2.93 | 0.00 | 0.00 | 0.93 | 0.99 | 0.99 | 0.55 |
| LBW | −2.34 | 2.14 | −2.00 | 5.49 | 0.00 | 0.00 | 0.92 | 1.00 | 0.98 | 0.00 |
| LR | −0.70 | 0.39 | 1.37 | 2.45 | 0.00 | 0.00 | 0.92 | 0.99 | 0.98 | 0.57 |
| SMA-1s | −1.90 | 1.71 | −1.36 | 4.74 | 0.00 | 0.00 | 0.92 | 1.00 | 0.98 | 0.00 |
| SMA-3s | −1.38 | 1.10 | −0.36 | 3.57 | 0.00 | 0.00 | 0.92 | 1.00 | 0.98 | 2.05 |
| SMA-5s | −0.98 | 0.79 | −0.77 | 4.65 | 0.00 | 0.00 | 0.93 | 1.00 | 0.98 | 6.64 |
| SVR-LR | −0.73 | 0.35 | 1.26 | 2.43 | 0.00 | 0.00 | 0.88 | 0.99 | 0.98 | 1.05 |
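The LR row presumably corresponds to replacing the camera trajectory with a linear-regression fit. A minimal sketch, assuming an ordinary least-squares straight line fitted against the frame index over the whole clip; the closed-form fit stands in for a machine-learning library, and the helper names `fit_line` and `linear_trajectory` are illustrative assumptions rather than the authors' code.

```python
from typing import List, Tuple

# Hypothetical helpers illustrating a straight-line (linear-regression) trajectory fit.


def fit_line(y: List[float]) -> Tuple[float, float]:
    """Ordinary least-squares fit y ≈ a + b * t, with t = 0, 1, ..., len(y) - 1."""
    n = len(y)
    if n < 2:
        raise ValueError("need at least two samples")
    t_mean = (n - 1) / 2.0
    y_mean = sum(y) / n
    sxx = sum((t - t_mean) ** 2 for t in range(n))
    sxy = sum((t - t_mean) * (v - y_mean) for t, v in enumerate(y))
    b = sxy / sxx
    a = y_mean - b * t_mean
    return a, b


def linear_trajectory(dx: List[float]) -> Tuple[List[float], List[float]]:
    """Return the raw cumulative trajectory and its least-squares straight-line fit."""
    trajectory, total = [], 0.0
    for d in dx:
        total += d
        trajectory.append(total)
    a, b = fit_line(trajectory)
    return trajectory, [a + b * t for t in range(len(trajectory))]


if __name__ == "__main__":
    raw, smooth = linear_trajectory([1.0, 3.0, -1.0, 3.0])
    # Per-frame correction: smoothed minus raw trajectory.
    print([round(s - r, 2) for s, r in zip(smooth, raw)])
```

Fitting one line to the whole clip removes all curvature from the path. That suits a UAV holding an approximately constant position or velocity, but it would misrepresent deliberate manoeuvres, for which a windowed or piecewise fit would be needed.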

| Smoothing Algorithm | Optical Video OSM | Thermal Video OSM |
|---|---|---|
| Original | 0.00 | 0.00 |
| EMA-1s | 7.92 | 25.34 |
| EMA-3s | 11.80 | 16.15 |
| EMA-5s | −92.48 | 2.64 |
| GF-1s | 17.28 | 29.12 |
| GF-3s | 37.85 | 37.09 |
| GF-5s | 39.84 | 39.63 |
| LBW | −10.64 | 5.23 |
| LR | 30.52 | 42.26 |
| SMA-1s | −0.57 | 14.22 |
| SMA-3s | −8.26 | 24.76 |
| SMA-5s | 14.47 | 24.33 |
| SVR-LR | 25.15 | 31.60 |

| Smoothing Algorithm | UAV Flown at 1.5 m Height OSM | UAV Flown at 2 m Height OSM | UAV Flown at 3 m Height OSM |
|---|---|---|---|
| Original | 0.00 | 0.00 | 0.00 |
| EMA-1s | −18.90 | 14.53 | 17.46 |
| EMA-3s | 0.38 | 13.28 | 27.57 |
| EMA-5s | −2.15 | 0.86 | 29.51 |
| GF-1s | 14.15 | 28.98 | 25.75 |
| GF-3s | 18.92 | 25.37 | 38.20 |
| GF-5s | 8.33 | 23.60 | 29.75 |
| LBW | −19.82 | −4.14 | −4.66 |
| LR | 20.24 | 33.97 | 43.82 |
| SMA-1s | −10.61 | 3.03 | 2.07 |
| SMA-3s | −29.68 | 18.08 | 10.40 |
| SMA-5s | −6.69 | 10.32 | 20.54 |
| SVR-LR | 22.93 | 32.79 | 44.75 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Pant, S.; Nooralishahi, P.; Avdelidis, N.P.; Ibarra-Castanedo, C.; Genest, M.; Deane, S.; Valdes, J.J.; Zolotas, A.; Maldague, X.P.V. Evaluation and Selection of Video Stabilization Techniques for UAV-Based Active Infrared Thermography Application. Sensors 2021, 21, 1604. https://doi.org/10.3390/s21051604
