Abstract

Indoor autonomous navigation refers to the ability of mobile agents to perceive and explore unknown indoor environments with the help of various sensors. It is a basic function of mobile agents and one of the most important. Although single-sensor navigation methods already perform well, multi-sensor fusion methods can still improve the perception and navigation abilities of mobile agents. This work summarizes multi-sensor fusion methods for mobile agents’ navigation by: (1) analyzing and comparing the advantages and disadvantages of individual sensors in the navigation task; (2) introducing the mainstream technologies of multi-sensor fusion, including various combinations of sensors and several widely recognized multi-modal sensor datasets. Finally, we discuss possible technical trends of multi-sensor fusion methods, especially the technical challenges they face in practical navigation environments.

1. Introduction

As early as the 1960s, science fiction films and TV works depicted the autonomous navigation of mobile agents: intelligent robots that travel freely in indoor spaces such as offices, factories, shopping malls, and hospitals, and help people in work, production, play, and study. In these scenarios, autonomous navigation is a basic function of mobile agents and one of the most important.

Autonomous indoor navigation of mobile agents refers to the abilities of autonomous localization and map construction in dynamic scenes [1]. This technology is based on the collection, analysis, and perception of environmental information, and carries out real-time localization and route planning by constructing a map. Perceiving the environment from sensor data is the key technique in mobile agent navigation. Historically, various sensors have been adopted for mobile agent navigation, such as cameras [2], light detection and ranging (LiDAR) [3], inertial measurement units (IMU) [4], ultra-wide band (UWB) [5], Wi-Fi [6], Bluetooth [7], ZigBee [8], infrared [9], and ultrasonic sensors [10]. According to the different principles and usages of these sensors, some scholars divide autonomous navigation into two categories: single-sensor navigation methods and multi-sensor fusion navigation methods [11].

In single-sensor navigation methods, the agent determines its navigation state in the environment from a single sensor [12]; cameras and LiDAR are the most widely used. Each sensor has specific advantages and limitations in navigation. For example, visual sensors are inexpensive and supported by many mature algorithms, but their perception accuracy is easily affected by changes in illumination [13]. LiDAR, in turn, provides data at a high frequency, but the resolution of the data is usually limited and scene content is not presented intuitively [13]. Compared to single-sensor navigation, multi-sensor fusion methods improve localization accuracy and robustness by collecting and fusing environmental information from different types of sensors [11]. In recent years, research on multi-sensor fusion methods for agent navigation has become an important trend.

The goal of this paper is not to present a complete summary of mobile agents’ navigation, but to focus on multi-sensor fusion methods in mobile agents’ indoor navigation. Although several related surveys have been published recently [14,15], this work differs from them in that we focus on multi-sensor fusion methods rather than on positioning [14] or on mapping and wayfinding [15]. Namely, we discuss the functionalities of the sensors and how they are fused in navigation, rather than pure algorithms or the navigation task itself.

Depending on where and when fusion takes place, it can occur at the data (feature) level, the model level, or the decision level [16]. According to the calculation method, fusion can be divided into rule-based and statistical (machine learning)-based fusion [17]. Considering the relationships among different channels, some literature divides them into three categories: complementary, mutually exclusive, and redundant [18]. In this work, considering that sensors play key roles in perception and decision making in navigation, we divide multi-sensor fusion models into two types: one dominant sensor combined with assisting sensors [19], and multiple sensors assisting each other without a dominant sensor [20]. We believe that this strategy helps to outline each sensor and to show the sensors’ advantages and disadvantages in the fusion procedure. To this end, we cover the fusion methods of different possible sensors in navigation, including not only traditional fusion methods but also methods introduced in recent years, such as deep learning and reinforcement learning for sensor fusion. In each section, we start with traditional methods and then gradually move to recent developments in the area.
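As a hedged illustration of statistical fusion at the decision level, the sketch below combines two independent position estimates by inverse-variance weighting. This is one common textbook rule rather than a method taken from the cited works; the function name `fuse_estimates`, the sensor labels, and all numeric values are assumptions chosen for illustration.

```python
import numpy as np


def fuse_estimates(means, variances):
    """Fuse independent estimates of the same quantity by inverse-variance weighting.

    means:     array-like of shape (k, d), one d-dimensional estimate per sensor
    variances: array-like of shape (k,), one scalar variance per sensor (assumed known)
    """
    means = np.asarray(means, dtype=float)
    variances = np.asarray(variances, dtype=float)
    weights = 1.0 / variances                      # more certain sensors weigh more
    fused_mean = (weights[:, None] * means).sum(axis=0) / weights.sum()
    fused_variance = 1.0 / weights.sum()           # never larger than the smallest input variance
    return fused_mean, fused_variance


# Hypothetical 2D position estimates (metres) from a camera pipeline and a LiDAR pipeline.
camera_xy, camera_var = [2.0, 1.0], 0.04
lidar_xy, lidar_var = [2.2, 1.1], 0.01
fused_xy, fused_var = fuse_estimates([camera_xy, lidar_xy], [camera_var, lidar_var])
print(fused_xy, fused_var)
```

In this sketch neither sensor is dominant; a dominant-sensor scheme would instead keep one estimate as the reference and use the other only to correct or constrain it.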

In addition, quite a few multi-sensor fusion methods have been proposed and validated through experiments in both indoor and outdoor environments, and a large portion of them are versatile across environments. Because indoor environments are generally more stable and safer than outdoor ones, we believe that some of these methods have the same potential in indoor navigation. For this reason, a few fusion methods originally proposed for outdoor environments are also included in our discussion, since they are worth referencing.

The remainder of this paper is organized as follows: we first briefly review some widely used sensors in Section 2; the main multi-sensor fusion methods for agent navigation and some well-known multi-modal datasets are introduced in Sections 3 and 4, respectively; and the discussions and possible technical trends of multi-sensor fusion methods are given in Section 5. Finally, Section 6 concludes the whole work.

4. Multi-Modal Datasets

To validate the performance of multi-sensor data fusion methods, some researchers have constructed multi-modal indoor simulation datasets, and a few of them are freely available online [105,106,107]. These public datasets contain at least two kinds of modal data, which helps to validate multi-sensor fusion methods. According to how the data are acquired, we divide multi-modal indoor simulation datasets into three types: datasets acquired by devices mounted on robots, datasets acquired by handheld devices, and datasets generated in virtual 3D environments.

4.1. Robot@Home: An Example of Datasets Acquired by Devices Mounted on Robots

The Robot-at-Home dataset is a multi-modal dataset published by J.R. Ruiz Sarmiento in 2017, which collects multi-modal data in home environments from the viewpoint of a robot platform [108]. It is a collection of raw and processed data from five domestic settings (36 rooms) compiled by a mobile robot equipped with four RGB-D cameras and a 2D laser scanner. In addition to the RGB-D camera and laser scanner data, the 3D room reconstruction, the semantic information, and the camera pose data are also provided (as shown in Figure 2). Therefore, the dataset is rich in contextual information about objects and rooms. The dataset contains more than 87,000 groups of data collected by the mobile robot at different times. It samples each scene multiple times to provide different perspectives of the same objects, which shows how objects are displaced over time. All modal data are correlated by time stamp. Figure 2 presents several types of data provided by the Robot-at-Home dataset. Several multi-modal fusion works are based on the Robot-at-Home dataset, such as multi-layer semantic structure analysis using RGB-D information combined with LiDAR data [109], visual odometry with the assistance of depth data [110], and path planning by the combination of depth data and LiDAR data [111].

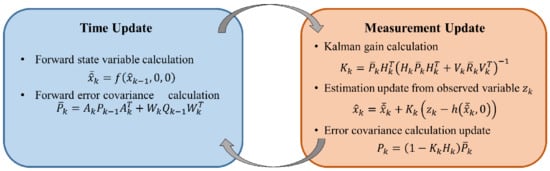

Figure 2.

Several types of data provided by the Robot-at-Home dataset: (a) RGB image, (b) depth image, (c) 2D LiDAR map, (d) 3D room reconstruction, and (e) 3D room semantic reconstruction [108].

The Robot-at-Home dataset was collected in real home environments and contains detailed semantic labels, which are also important in SLAM. There are other multi-modal datasets acquired by devices mounted on robots, such as the MIT Stata Center Dataset [112], TUMindoor Dataset [113], Fribourg Dataset [114], and KITTI Dataset [115]. These datasets also provide abundant multi-modal data and are widely used in navigation research [116,117,118].
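Because the modalities in such robot-mounted datasets are correlated by time stamp, a typical first step before fusion is to pair samples from two sensor streams. The following is a minimal sketch, assuming each stream is given as a sorted array of time stamps in seconds with at least two entries; the function name `associate_by_timestamp`, the tolerance `max_dt`, and the sample values are hypothetical and do not come from any dataset's own tooling.

```python
import numpy as np


def associate_by_timestamp(t_a, t_b, max_dt):
    """Pair each time stamp in t_a with its nearest neighbour in the sorted array t_b.

    Returns a list of (index_in_a, index_in_b) for pairs closer than max_dt seconds.
    """
    t_a = np.asarray(t_a, dtype=float)
    t_b = np.asarray(t_b, dtype=float)
    # Index of the first element of t_b that is >= each element of t_a,
    # clipped so that both a left and a right neighbour always exist.
    right = np.clip(np.searchsorted(t_b, t_a), 1, len(t_b) - 1)
    left = right - 1
    # Choose whichever neighbour is closer in time.
    nearest = np.where(t_a - t_b[left] <= t_b[right] - t_a, left, right)
    keep = np.abs(t_b[nearest] - t_a) <= max_dt
    return [(int(i), int(j)) for i, j in zip(np.nonzero(keep)[0], nearest[keep])]


# Hypothetical time stamps (seconds) of laser scans and RGB-D frames.
laser_t = [0.00, 0.10, 0.20, 0.50]
rgbd_t = [0.01, 0.12, 0.33]
pairs = associate_by_timestamp(laser_t, rgbd_t, max_dt=0.05)
print(pairs)
```

Samples without a sufficiently close partner are dropped rather than interpolated, which keeps the sketch simple at the cost of discarding some data.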

4.2. Microsoft 7 Scenes: An Example of Datasets Acquired by Handheld Devices

Microsoft 7 Scenes is a multi-modal dataset released by Microsoft in 2013. The dataset contains seven scenes, and the data were collected with a hand-held Kinect RGB-D camera at 640 × 480 resolution [119]. Each scene in the dataset contains 500–1000 sets of data, and each set of data is divided into three parts (as shown in Figure 3): an RGB image, a depth image, and a 4 × 4 homogeneous camera-to-world pose matrix. For each scene, the dataset is divided into a training set and a test set. In addition, the truncated signed distance function (TSDF) volume of the scene is also provided in the dataset. Users can use these data as the basis of multi-modal information fusion, or directly reconstruct the scene using the TSDF volumes provided by the dataset. In 2013, Ben Glocker et al. proposed a camera relocalization method based on Microsoft 7 Scenes, which achieved a good result [119]. Jamie Shotton et al. proposed a camera relocalization approach based on regression forests, which was evaluated on the Microsoft 7 Scenes dataset [120].

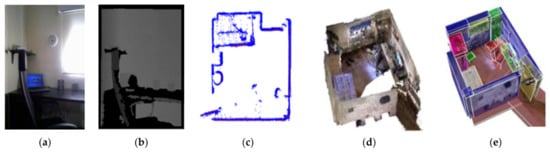

Figure 3.

Three types of data provided by the Microsoft 7 Scenes dataset: (a) RGB image, (b) depth image, and (c) TSDF volume for scene reconstruction [119].

The Microsoft 7 Scenes dataset has a large quantity of samples and rich details for a single room, and the difference between adjacent samples is small. Some samples also have motion blur, which makes the dataset suitable for training models that discriminate scene changes. However, the data of Microsoft 7 Scenes were collected by a hand-held RGB-D camera, and thus are not suitable for research on indoor robots where viewing angles are strictly constrained.
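To make concrete how an RGB-D frame and its 4 × 4 camera-to-world pose can be combined, the sketch below back-projects one pixel with known depth into world coordinates using a pinhole camera model. It is an illustrative sketch only: the intrinsic matrix `K`, the pose `T_world_cam`, and the pixel values are placeholder assumptions, not calibration data from the dataset, and depth is assumed to be already converted to metres.

```python
import numpy as np


def backproject_to_world(u, v, depth_m, K, T_world_cam):
    """Lift pixel (u, v) with metric depth to a 3D point in the world frame.

    K:           3 x 3 pinhole intrinsic matrix (assumed known)
    T_world_cam: 4 x 4 homogeneous camera-to-world pose matrix
    """
    fx, fy = K[0, 0], K[1, 1]
    cx, cy = K[0, 2], K[1, 2]
    # Pinhole model: pixel plus depth gives a homogeneous point in the camera frame.
    p_cam = np.array([(u - cx) * depth_m / fx,
                      (v - cy) * depth_m / fy,
                      depth_m,
                      1.0])
    # The pose matrix maps camera coordinates to world coordinates.
    return (T_world_cam @ p_cam)[:3]


# Placeholder intrinsics for a 640 x 480 image and a pose that is a pure translation.
K = np.array([[500.0, 0.0, 320.0],
              [0.0, 500.0, 240.0],
              [0.0, 0.0, 1.0]])
T_world_cam = np.eye(4)
T_world_cam[:3, 3] = [1.0, 2.0, 3.0]

point_world = backproject_to_world(420, 240, 2.0, K, T_world_cam)
print(point_world)
```

Applying this to every valid depth pixel of every frame yields a world-frame point cloud, which is the kind of input that volumetric representations such as TSDF volumes are built from.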

4.3. ICL-NUIM RGB-D Benchmark Dataset: An Example of Datasets Generated in Virtual 3D Environments

The ICL-NUIM RGB-D Benchmark Dataset was released by Imperial College London and the National University of Ireland in 2014. It simulates the agent motion and visual sensor pose in 3D virtual scenes [121]. The dataset contains two basic scenes: the living room and the office. There are 4601 sets of image data in each scene. Each set of data includes an RGB image, a depth image, and a 4 × 4 matrix that describes the camera pose. For each scene, the dataset provides two view modes: in the first, the camera moves freely in the scene, and the collected images have a variety of perspectives (as shown in Figure 4a); in the second, the camera rotates and moves at a fixed height, and the collected images only have a head-up perspective (as shown in Figure 4b). Images from different perspectives can correspond to different application scenarios. For example, multi-perspective images may be more suitable for simulating an indoor UAV [122], while head-up images are closer to the motion state of mobile robots [123,124].

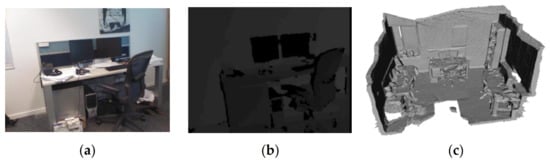

Figure 4.

Two view modes provided in the ICL-NUIM RGB-D Benchmark Dataset [121]: (a) the view mode with a variety of camera perspectives, and (b) the view mode with a head-up camera perspective.

Because the data in the ICL-NUIM RGB-D Benchmark Dataset are simulated 3D data, they differ from real scenes. The light in the 3D scene is ideal, and the details of the collected images are rich and balanced. There is no motion blur or illumination change in the images. These characteristics make the images of ICL-NUIM RGB-D visually unrealistic, and algorithms validated on ICL-NUIM RGB-D may not be fully effective in real practical applications.

4.4. Others

In addition to the datasets discussed above, Table 3 gives an overview of various multi-sensor agent navigation datasets, including UZH-FPV Drone Racing [125], TUM RGB-D Dataset [126], ScanNet [127], NYU V2 [128], InteriorNet [129], SceneNet RGB-D [130], and others [131,132,133,134,135,136,137,138,139,140,141,142,143,144]. These datasets meet the basic requirements for simulating and evaluating multi-sensor fusion in experiments.

Table 3.

A List of Multi-modal Datasets.

5. Discussions and Future Trends

5.1. Discussions

In this work, we discuss sensors and their fusion methods according to their dominant functionalities in fusion. This strategy helps to outline each sensor and to present the sensors’ advantages and disadvantages in the fusion procedure. However, because of space limitations, we did not present sensor data acquisition and data processing, such as feature extraction and sensor data representation, in detail. Since data acquisition and processing are also important factors in multi-modal information fusion, we refer readers to the related references listed in this work for details.

In addition, in the section on multi-modal datasets, the datasets were divided into three types according to where the sensors are mounted. We believe that how the sensors are placed on or around the robots is critical for multi-modal sensor fusion. Although other researchers have divided datasets according to the number of sensors involved or the scale of the accessible range for navigation, our categorization by sensor mounting style is a useful supplement to the previous work.

5.2. Future Trends

With the emergence and continuous development of various sensors, multi-sensor fusion will become an urgent need, which calls not only for more specific algorithms, but also for their application in more practical scenes. We briefly discuss several technical trends of multi-sensor fusion navigation methods.

5.2.1. Uniform Fusion Framework

The fusion methods discussed in this work are mostly bi-modal or tri-modal, and most of them are task-specific. Fusing more than three sensors makes the whole navigation system more complex. Some researchers have already studied the fusion of more than three kinds of sensors in robot navigation [20]. However, methods that fuse information from various sensors in a uniform framework are still lacking, and integrating such algorithms into robot navigation with an effective fusion strategy remains difficult. In the future, a fusion framework is needed that can fuse more modalities simultaneously in a uniform way.

5.2.2. Evaluation Methods

A multi-modal sensor fusion model needs a suitable evaluation method [145]. Several mature evaluation methods exist, such as the Monte Carlo strategy, real-time simulation, and application-specific calculation, along with SLAM metrics such as relative pose error (RPE) [146] and absolute trajectory error (ATE) [146]. However, they are not suitable for evaluating the performance of each sensor within the whole fusion system. Multi-sensor fusion methods need more effective evaluation methods that not only score the fusion system as a whole, but also outline the contribution and performance of each sensor in the fusion process.
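For reference, the two SLAM metrics named above can be sketched as follows. This is a simplified illustration, assuming that ground-truth and estimated trajectories are lists of 4 × 4 pose matrices that are already time-associated and expressed in the same frame (the usual trajectory alignment step is omitted); the function names and the toy trajectory are assumptions made for this sketch.

```python
import numpy as np


def ate_rmse(gt_poses, est_poses):
    """Absolute trajectory error: RMSE of position differences between paired poses."""
    gt_t = np.array([T[:3, 3] for T in gt_poses])
    est_t = np.array([T[:3, 3] for T in est_poses])
    return float(np.sqrt(np.mean(np.sum((gt_t - est_t) ** 2, axis=1))))


def rpe_translation_rmse(gt_poses, est_poses, delta=1):
    """Relative pose error: RMSE of the translational drift over `delta` frames."""
    errors = []
    for i in range(len(gt_poses) - delta):
        gt_rel = np.linalg.inv(gt_poses[i]) @ gt_poses[i + delta]     # true motion
        est_rel = np.linalg.inv(est_poses[i]) @ est_poses[i + delta]  # estimated motion
        err = np.linalg.inv(gt_rel) @ est_rel                          # residual motion
        errors.append(np.linalg.norm(err[:3, 3]))
    return float(np.sqrt(np.mean(np.square(errors))))


def translation_pose(x):
    """Helper for the toy example: a pose with identity rotation at position (x, 0, 0)."""
    T = np.eye(4)
    T[0, 3] = x
    return T


# Toy trajectory: the estimate overshoots every 1 m step by 10 cm.
gt = [translation_pose(x) for x in (0.0, 1.0, 2.0)]
est = [translation_pose(x) for x in (0.0, 1.1, 2.2)]
print(ate_rmse(gt, est), rpe_translation_rmse(gt, est))
```

Both functions return a single score for the estimated trajectory as a whole, which illustrates the limitation noted above: neither indicates how much each individual sensor contributed to the result.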

5.2.3. On-Line Learning and Planning

With the increasing demands of practical applications, agents may be required to complete tasks that they have not learned before. Such tasks require the agent to make appropriate judgments independently, which depends on the agent’s perception, on-line learning, and planning in unknown indoor environments. For example, the agent should be able to learn the names and locations of unknown objects in the scene, so as to respond effectively to the tasks and instructions given by users. The related techniques include interactive learning and knowledge transfer learning from multi-modal fusion data. With these methods, agents are expected to acquire the ability to correct themselves through continual learning, simulate human thinking, and make decisions in dynamic environments.

6. Conclusions

Multi-sensor fusion has become an important research direction in mobile agent navigation. In this work, we introduce the mainstream techniques of multi-sensor fusion for mobile agents’ indoor autonomous navigation, including single-sensor navigation methods, multi-sensor fusion navigation methods, some well-recognized multi-modal datasets, and future development trends. We believe that, with the increasing demand for human–computer interaction, mobile agents with multi-sensor fusion will become more intelligent and interactive in the future.

Author Contributions

Y.Q. completed the writing and the main analyses of the manuscript; M.Y. proposed the framework and ideas of the whole work and provided important guidance; J.Z. completed part of the literature review and summary; W.X. provided guidance on writing and analyses; B.Q. and J.C. made constructive suggestions for the final work. Y.Q. and M.Y. contributed equally to the work. All authors have read and agreed to the published version of the manuscript.

Funding

This work is supported by the National Key Research & Development Program of China (No. 2018AAA0102902), the National Natural Science Foundation of China (NSFC) (No. 61873269), the Beijing Natural Science Foundation (No. L192005), the CAAI-Huawei MindSpore Open Fund (CAAIXSJLJJ-20202-027A), the Guangxi Key Research and Development Program (AD19110137, AB18126063), and the Natural Science Foundation of Guangxi of China (2019GXNSFDA185006).

Conflicts of Interest

The authors declare no conflict of interest.

References

- Bresson, G.; Alsayed, Z.; Yu, L.; Glaser, S. Simultaneous localization and mapping: A survey of current trends in autonomous driving. IEEE Trans. Intell. Veh. 2017, 2, 194–220.

- Mur-Artal, R.; Montiel, J.M.M.; Tardos, J.D. ORB-SLAM: A versatile and accurate monocular SLAM system. IEEE Trans. Robot. 2015, 31, 1147–1163.

- Kohlbrecher, S.; Von Stryk, O.; Meyer, J.; Klingauf, U. A flexible and scalable SLAM system with full 3D motion estimation. In Proceedings of the 2011 IEEE International Symposium on Safety, Security, and Rescue Robotics, Kyoto, Japan, 31 October–5 November 2011; pp. 155–160.

- Huang, G. Visual-inertial navigation: A concise review. In Proceedings of the 2019 International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 20–24 May 2019; pp. 9572–9582.

- Liu, L.; Liu, Z.; Barrowes, B.E. Through-wall bio-radiolocation with UWB impulse radar: Observation, simulation and signal extraction. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2011, 4, 791–798.

- He, S.; Chan, S.-H.G. Wi-Fi Fingerprint-based indoor positioning: Recent advances and comparisons. IEEE Commun. Surv. Tutor. 2015, 18, 466–490.

- Faragher, R.; Harle, R. Location fingerprinting with Bluetooth low energy beacons. IEEE J. Sel. Areas Commun. 2015, 33, 2418–2428.

- Kaemarungsi, K.; Ranron, R.; Pongsoon, P. Study of received signal strength indication in ZigBee location cluster for indoor localization. In Proceedings of the 2013 10th International Conference on Electrical Engineering/Electronics, Computer, Telecommunications and Information Technology, Krabi, Thailand, 15–17 May 2013; pp. 1–6.

- Shin, Y.-S.; Kim, A. Sparse depth enhanced direct thermal-infrared SLAM beyond the visible spectrum. IEEE Robot. Autom. Lett. 2019, 4, 2918–2925.

- Freye, C.; Bendicks, C.; Lilienblum, E.; Al-Hamadi, A. Multiple camera approach for SLAM based ultrasonic tank roof inspection. In Image Analysis and Recognition, Proceedings of the ICIAR 2014, Vilamoura, Portugal, 22–24 October 2014; Springer: Cham, Switzerland, 2014; Volume 8815, pp. 453–460.

- Cadena, C.; Carlone, L.; Carrillo, H.; Latif, Y.; Scaramuzza, D.; Neira, J.; Reid, I.D.; Leonard, J.J. Past, present, and future of simultaneous localization and mapping: Toward the robust-perception age. IEEE Trans. Robot. 2016, 32, 1309–1332.

- Davison, A.J. Real-time simultaneous localisation and mapping with a single camera. In Proceedings of the Ninth IEEE International Conference on Computer Vision, Nice, France, 13–16 October 2003; Volume 2, pp. 1403–1410.

- Debeunne, C.; Vivet, D. A review of visual-LiDAR fusion based simultaneous localization and mapping. Sensors 2020, 20, 2068.

- Kunhoth, J.; Karkar, A.; Al-Maadeed, S.; Al-Ali, A. Indoor positioning and wayfinding systems: A survey. Hum. Cent. Comput. Inf. Sci. 2020, 10, 18.

- Otero, R.; Lagüela, S.; Garrido, I.; Arias, P. Mobile indoor mapping technologies: A review. Autom. Constr. 2020, 120, 103399.

- Maehara, Y.; Saito, S. The relationship between processing and storage in working memory span: Not two sides of the same coin. J. Mem. Lang. 2007, 56, 212–228.

- Town, C. Multi-sensory and multi-modal fusion for sentient computing. Int. J. Comput. Vis. 2006, 71, 235–253.

- Yang, M.; Tao, J. A review on data fusion methods in multimodal human computer dialog. Virtual Real. Intell. Hardw. 2019, 1, 21–38.

- Graeter, J.; Wilczynski, A.; Lauer, M. Limo: LiDAR-monocular visual odometry. In Proceedings of the 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Madrid, Spain, 1–5 October 2018; pp. 7872–7879.

- Zhang, J.; Singh, S. Visual-LiDAR odometry and mapping: Low-drift, robust, and fast. In Proceedings of the IEEE International Conference on Robotics & Automation, Seattle, WA, USA, 26–30 May 2015.

- Mur-Artal, R.; Tardos, J.D. ORB-SLAM2: An open-source SLAM system for monocular, stereo, and RGB-D cameras. IEEE Trans. Robot. 2017, 33, 1255–1262.

- Labbé, M.; Michaud, F. RTAB-map as an open-source LiDAR and visual simultaneous localization and mapping library for large-scale and long-term online operation. J. Field Robot. 2018, 36, 416–446.

- Klein, G.; Murray, D. Parallel tracking and mapping for small AR workspaces. In Proceedings of the 2007 6th IEEE and ACM International Symposium on Mixed and Augmented Reality, Nara, Japan, 13–16 November 2007; pp. 225–234.

- Engel, J.; Schöps, T.; Cremers, D. LSD-SLAM: Large-scale direct monocular SLAM. In Proceedings of the 2014 European Conference on Computer Vision, Zurich, Switzerland, 6–12 September 2014; Springer: Cham, Switzerland, 2014; pp. 834–849.

- Forster, C.; Zhang, Z.; Gassner, M.; Werlberger, M.; Scaramuzza, D. SVO: Semidirect visual odometry for monocular and multicamera systems. IEEE Trans. Robot. 2016, 33, 249–265.

- Taketomi, T.; Uchiyama, H.; Ikeda, S. Visual SLAM algorithms: A survey from 2010 to 2016. IPSJ Trans. Comput. Vis. Appl. 2017, 9, 16.

- Endres, F.; Hess, J.; Sturm, J.; Cremers, D.; Burgard, W. 3-D mapping with an RGB-D camera. IEEE Trans. Robot. 2014, 30, 177–187.

- Kerl, C.; Sturm, J.; Cremers, D. Dense visual SLAM for RGB-D cameras. In Proceedings of the 2013 IEEE/RSJ International Conference on Intelligent Robots and Systems, Tokyo, Japan, 3–7 November 2013; pp. 2100–2106.

- Grisetti, G.; Stachniss, C.; Burgard, W. Improved techniques for grid mapping with Rao-Blackwellized particle filters. IEEE Trans. Robot. 2007, 23, 34–46.

- Deschaud, J.E. IMLS-SLAM: Scan-to-model matching based on 3D data. In Proceedings of the IEEE International Conference on Robotics and Automation, Brisbane, Australia, 21–25 May 2018.

- Zhang, J.; Singh, S. LOAM: LiDAR odometry and mapping in real-time. In Proceedings of the Robotics: Science and Systems, Berkeley, CA, USA, 12–16 July 2014.

- Hess, W.; Kohler, D.; Rapp, H.; Andor, D. Real-time loop closure in 2D LiDAR SLAM. In Proceedings of the 2016 IEEE International Conference on Robotics and Automation (ICRA), Stockholm, Sweden, 16–21 May 2016; pp. 1271–1278.

- Zhang, R.; Hoflinger, F.; Reindl, L. Inertial sensor based indoor localization and monitoring system for emergency responders. IEEE Sens. J. 2013, 13, 838–848.

- Gui, J.; Gu, D.; Wang, S.; Hu, H. A review of visual inertial odometry from filtering and optimisation perspectives. Adv. Robot. 2015, 29, 1289–1301.

- Ye, H.; Chen, Y.; Liu, M. Tightly coupled 3D LiDAR inertial odometry and mapping. In Proceedings of the 2019 International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 20–24 May 2019.

- Mourikis, A.I.; Roumeliotis, S.I. A multi-state constraint Kalman filter for vision-aided inertial navigation. In Proceedings of the 2007 IEEE International Conference on Robotics and Automation, Rome, Italy, 10–14 April 2007; pp. 3565–3572.

- Young, D.P.; Keller, C.M.; Bliss, D.W.; Forsythe, K.W. Ultra-wideband (UWB) transmitter location using time difference of arrival (TDOA) techniques. In Proceedings of the Thirty-Seventh Asilomar Conference on Signals, Systems & Computers 2003, Pacific Grove, CA, USA, 9–12 November 2003.

- Porcino, D.; Hirt, W. Ultra-wideband radio technology: Potential and challenges ahead. IEEE Commun. Mag. 2003, 41, 66–74.

- Despaux, F.; Bossche, A.V.D.; Jaffrès-Runser, K.; Val, T. N-TWR: An accurate time-of-flight-based N-ary ranging protocol for Ultra-Wide band. Ad Hoc Netw. 2018, 79, 1–19.

- Iwakiri, N.; Kobayashi, T. Joint TOA and AOA estimation of UWB signal using time domain smoothing. In Proceedings of the 2007 2nd International Symposium on Wireless Pervasive Computing, San Juan, PR, USA, 5–7 February 2007.

- Al-Madani, B.; Orujov, F.; Maskeliūnas, R.; Damaševičius, R.; Venčkauskas, A. Fuzzy logic type-2 based wireless indoor localization system for navigation of visually impaired people in buildings. Sensors 2019, 19, 2114.

- Orujov, F.; Maskeliūnas, R.; Damaševičius, R.; Wei, W.; Li, Y. Smartphone based intelligent indoor positioning using fuzzy logic. Future Gener. Comput. Syst. 2018, 89, 335–348.

- Wietrzykowski, J.; Skrzypczynski, P. A fast and practical method of indoor localization for resource-constrained devices with limited sensing. In Proceedings of the 2020 IEEE International Conference on Robotics and Automation (ICRA), Paris, France, 31 May–31 August 2020; pp. 293–299.

- Guo, Y.; Wang, H.; Hu, Q.; Liu, H.; Liu, L.; Bennamoun, M. Deep learning for 3D point clouds: A survey. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 1.

- Lowe, D.G. Distinctive image features from scale-invariant keypoints. Int. J. Comput. Vis. 2004, 60, 91–110.

- Li, S.; Xu, C.; Xie, M. A robust O(n) solution to the perspective-n-point problem. IEEE Trans. Pattern Anal. Mach. Intell. 2012, 34, 1444–1450. [Google Scholar] [CrossRef] [PubMed]

- Besl, P.J.; McKay, N.D. A method for registration of 3-D shapes. IEEE Trans. Pattern Anal. Mach. Intell. 1992, 14, 239–256. [Google Scholar] [CrossRef]

- Pomerleau, F.; Colas, F.; Siegwart, R. A review of point cloud registration algorithms for mobile robotics. Found. Trends Robot. 2015, 4, 1–104. [Google Scholar] [CrossRef]

- Barone, F.; Marrazzo, M.; Oton, C.J. Camera calibration with weighted direct linear transformation and anisotropic uncertainties of image control points. Sensors 2020, 20, 1175. [Google Scholar] [CrossRef] [PubMed]

- Li, T.; Pei, L.; Xiang, Y.; Wu, Q.; Xia, S.; Tao, L.; Yu, W. P3-LOAM: PPP/LiDAR loosely coupled SLAM with accurate covariance estimation and robust RAIM in urban canyon environment. IEEE Sens. J. 2021, 21, 6660–6671. [Google Scholar] [CrossRef]

- Zhang, H.; Ye, C. DUI-VIO: Depth uncertainty incorporated visual inertial odometry based on an RGB-D camera. In Proceedings of the 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Las Vegas, NV, USA, 24 October 2020–24 January 2021; pp. 5002–5008. [Google Scholar]

- Sorkine, O. Least-squares rigid motion using SVD. Tech. Notes 2009, 120, 52. [Google Scholar]

- Triggs, B.; McLauchlan, P.F.; Hartley, R.I.; Fitzgibbon, A.W. Bundle adjustment—A modern synthesis. In Vision Algorithms: Theory and Practice, Proceedings of the International Workshop on Vision Algorithms, Corfu, Greece, 21–22 September 1999; Springer: Cham, Switzerland, 1999. [Google Scholar]

- Bouguet, J.Y. Pyramidal implementation of the affine Lucas Kanade feature tracker description of the algorithm. Intel Corp. 2001, 5, 1–10. [Google Scholar]

- Ojala, T.; Pietikainen, M.; Maenpaa, T. Multiresolution gray-scale and rotation invariant texture classification with local binary patterns. IEEE Trans. Pattern Anal. Mach. Intell. 2002, 24, 971–987. [Google Scholar] [CrossRef]

- Zhang, T.; Zhang, H.; Nakamura, Y.; Yang, L.; Zhang, L. Flowfusion: Dynamic dense RGB-D SLAM based on optical flow. In Proceedings of the 2020 IEEE International Conference on Robotics and Automation (ICRA), Paris, France, 31 May–31 August 2020. [Google Scholar]

- Xu, J.; Ranftl, R.; Koltun, V. Accurate optical flow via direct cost volume processing. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 5807–5815. [Google Scholar]

- Ma, L.; Stuckler, J.; Kerl, C.; Cremers, D. Multi-view deep learning for consistent semantic mapping with RGB-D cameras. In Proceedings of the 2017 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Vancouver, BC, Canada, 24–28 September 2017; pp. 598–605. [Google Scholar]

- Qi, X.; Liao, R.; Jia, J.; Fidler, S.; Urtasun, R. In 3D graph neural networks for RGBD semantic segmentation. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017. [Google Scholar]

- Liao, Y.; Huang, L.; Wang, Y.; Kodagoda, S.; Yu, Y.; Liu, Y. Parse geometry from a line: Monocular depth estimation with partial laser observation. In Proceedings of the 2017 IEEE International Conference on Robotics and Automation (ICRA), Singapore, 29 May–3 June 2017; pp. 5059–5066. [Google Scholar]

- Shin, Y.S.; Park, Y.S.; Kim, A. Direct visual SLAM using sparse depth for camera-LiDAR system. In Proceedings of the 2018 International Conference on Robotics and Automation, Brisbane, Australia, 21–25 May 2018. [Google Scholar]

- De Silva, V.; Roche, J.; Kondoz, A. Fusion of LiDAR and camera sensor data for environment sensing in driverless vehicles. arXiv 2017, arXiv:1710.06230. [Google Scholar]

- Scherer, S.; Rehder, J.; Achar, S.; Cover, H.; Chambers, A.; Nuske, S.; Singh, S. River mapping from a flying robot: State estimation, river detection, and obstacle mapping. Auton. Robot. 2012, 33, 189–214. [Google Scholar] [CrossRef]

- Huang, K.; Xiao, J.; Stachniss, C. Accurate direct visual-laser odometry with explicit occlusion handling and plane detection. In Proceedings of the 2019 International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 20–24 May 2019. [Google Scholar]

- Pascoe, G.; Maddern, W.; Newman, P. Direct visual localisation and calibration for road vehicles in changing city environments. In Proceedings of the 2015 IEEE International Conference on Computer Vision Workshop (ICCVW), Santiago, Chile, 7–13 December 2015; pp. 98–105. [Google Scholar]

- Zhen, W.; Hu, Y.; Yu, H.; Scherer, S. LiDAR-enhanced structure-from-motion. In Proceedings of the 2020 IEEE International Conference on Robotics and Automation (ICRA), Paris, France, 31 May–31 August 2020; pp. 6773–6779. [Google Scholar]

- Park, C.; Moghadam, P.; Kim, S.; Sridharan, S.; Fookes, C. Spatiotemporal camera-LiDAR calibration: A targetless and structureless approach. IEEE Robot. Autom. Lett. 2020, 5, 1556–1563. [Google Scholar] [CrossRef]

- Kummerle, J.; Kuhner, T. Unified intrinsic and extrinsic camera and LiDAR calibration under uncertainties. In Proceedings of the 2020 IEEE International Conference on Robotics and Automation (ICRA), Paris, France, 31 May–31 August 2020; pp. 6028–6034. [Google Scholar]

- Zhu, Y.; Li, C.; Zhang, Y. Online camera-LiDAR calibration with sensor semantic information. In Proceedings of the 2020 IEEE International Conference on Robotics and Automation (ICRA), Paris, France, 31 May–31 August 2020; pp. 4970–4976. [Google Scholar]

- Delmerico, J.; Scaramuzza, D. A benchmark comparison of monocular visual-inertial odometry algorithms for flying robots. In Proceedings of the 2018 IEEE International Conference on Robotics and Automation (ICRA), Brisbane, Australia, 21–25 May 2018; pp. 2502–2509. [Google Scholar] [CrossRef]

- Sun, S.-L.; Deng, Z.-L. Multi-sensor optimal information fusion Kalman filter. Automatica 2004, 40, 1017–1023. [Google Scholar] [CrossRef]

- Weiss, S.; Siegwart, R. Real-time metric state estimation for modular vision-inertial systems. In Proceedings of the 2011 IEEE International Conference on Robotics and Automation, Shanghai, China, 9–13 May 2011; pp. 4531–4537. [Google Scholar] [CrossRef]

- Lynen, S.; Achtelik, M.W.; Weiss, S.; Chli, M.; Siegwart, R. A robust and modular multi-sensor fusion approach applied to MAV navigation. In Proceedings of the 2013 IEEE/RSJ International Conference on Intelligent Robots and Systems, Tokyo, Japan, 3–7 November 2013; pp. 3923–3929. [Google Scholar]

- Bloesch, M.; Omari, S.; Hutter, M.; Siegwart, R. Robust visual inertial odometry using a direct EKF-based approach. In Proceedings of the 2015 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Hamburg, Germany, 28 September–2 October 2015; pp. 298–304. [Google Scholar]

- Leutenegger, S.; Lynen, S.; Bosse, M.; Siegwart, R.; Furgale, P. Keyframe-based visual–inertial odometry using nonlinear optimization. Int. J. Robot. Res. 2015, 34, 314–334. [Google Scholar] [CrossRef]

- Qin, T.; Li, P.; Shen, S. VINS-Mono: A robust and versatile monocular visual-inertial state estimator. IEEE Trans. Robot. 2018, 34, 1004–1020. [Google Scholar] [CrossRef]

- Li, M.; Mourikis, A.I. High-precision, consistent EKF-based visual-inertial odometry. Int. J. Robot. Res. 2013, 32, 690–711. [Google Scholar] [CrossRef]

- Kim, C.; Sakthivel, R.; Chung, W.K. Unscented FastSLAM: A robust and efficient solution to the SLAM problem. IEEE Trans. Robot. 2008, 24, 808–820. [Google Scholar] [CrossRef]

- Thrun, S.; Montemerlo, M. The graph SLAM algorithm with applications to large-scale mapping of urban structures. Int. J. Robot. Res. 2006, 25, 403–429. [Google Scholar] [CrossRef]

- Chen, C.; Wang, B.; Lu, C.X.; Trigoni, N.; Markham, A. A survey on deep learning for localization and mapping: Towards the age of spatial machine intelligence. arXiv 2020, arXiv:2006.12567. [Google Scholar]

- Clark, R.; Wang, S.; Wen, H.; Markham, A.; Trigoni, N. Vinet: Visual-inertial odometry as a sequence-to-sequence learning problem. In Proceedings of the 2017 AAAI Conference on Artificial Intelligence, San Francisco, CA, USA, 4–9 February 2017. [Google Scholar]

- Han, L.; Lin, Y.; Du, G.; Lian, S. DeepVIO: Self-supervised deep learning of monocular visual inertial odometry using 3D geometric constraints. In Proceedings of the 2019 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Macau, China, 3–8 November 2019; pp. 6906–6913. [Google Scholar]

- Benini, A.; Mancini, A.; Longhi, S. An IMU/UWB/vision-based extended Kalman filter for mini-UAV localization in indoor environment using 802.15.4a wireless sensor network. J. Intell. Robot. Syst. 2013, 70, 461–476. [Google Scholar] [CrossRef]

- Masiero, A.; Perakis, H.; Gabela, J.; Toth, C.; Gikas, V.; Retscher, G.; Goel, S.; Kealy, A.; Koppányi, Z.; Błaszczak-Bak, W.; et al. Indoor navigation and mapping: Performance analysis of UWB-based platform positioning. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2020, 549–555. [Google Scholar] [CrossRef]

- Queralta, J.P.; Almansa, C.M.; Schiano, F.; Floreano, D.; Westerlund, T. UWB-based system for UAV localization in GNSS-denied environments: Characterization and dataset. In Proceedings of the 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Las Vegas, NV, USA, 24 October 2020–24 January 2021; pp. 4521–4528. [Google Scholar]

- Zhu, Z.; Yang, S.; Dai, H.; Li, F. Loop detection and correction of 3D laser-based SLAM with visual information. In Proceedings of the 31st International Conference on Computer Animation and Social Agents (CASA 2018), Beijing, China, 21–23 May 2018; Association for Computing Machinery (ACM): New York, NY, USA, 2018; pp. 53–58. [Google Scholar]

- Pandey, G.; McBride, J.R.; Savarese, S.; Eustice, R.M. Visually bootstrapped generalized ICP. In Proceedings of the IEEE International Conference on Robotics & Automation, Shanghai, China, 9–13 May 2011. [Google Scholar]

- Ratz, S.; Dymczyk, M.; Siegwart, R.; Dubé, R. Oneshot global localization: Instant LiDAR-visual pose estimation. In Proceedings of the 2020 IEEE International Conference on Robotics and Automation (ICRA), Paris, France, 31 May–31 August 2020. [Google Scholar]

- Zhang, J.; Ramanagopal, M.S.; Vasudevan, R.; Johnson-Roberson, M. LiStereo: Generate dense depth maps from LiDAR and stereo imagery. In Proceedings of the 2020 IEEE International Conference on Robotics and Automation (ICRA), Paris, France, 31 May–31 August 2020; pp. 7829–7836. [Google Scholar]

- Liang, J.; Patel, U.; Sathyamoorthy, A.J.; Manocha, D. Realtime collision avoidance for mobile robots in dense crowds using implicit multi-sensor fusion and deep reinforcement learning. arXiv 2020, arXiv:2004.03089v2. [Google Scholar]

- Surmann, H.; Jestel, C.; Marchel, R.; Musberg, F.; Elhadj, H.; Ardani, M. Deep reinforcement learning for real autonomous mobile robot navigation in indoor environments. arXiv 2020, arXiv:2005.13857. [Google Scholar]

- Hol, J.D.; Dijkstra, F.; Luinge, H.; Schon, T.B. Tightly coupled UWB/IMU pose estimation. In Proceedings of the 2009 IEEE International Conference on Ultra-Wideband, Vancouver, BC, Canada, 9–11 September 2009; pp. 688–692. [Google Scholar]

- Qin, C.; Ye, H.; Pranata, C.; Han, J.; Zhang, S.; Liu, M. R-lins: A robocentric LiDAR-inertial state estimator for robust and efficient navigation. arXiv 2019, arXiv:1907.02233. [Google Scholar]

- Moore, J.B. Discrete-time fixed-lag smoothing algorithms. Automatica 1973, 9, 163–173. [Google Scholar] [CrossRef]

- Shan, T.; Englot, B.; Meyers, D.; Wang, W.; Rus, D. Lio-sam: Tightly-coupled LiDAR inertial odometry via smoothing and mapping. arXiv 2020, arXiv:2007.00258v2. [Google Scholar]

- Velas, M.; Spanel, M.; Hradis, M.; Herout, A. CNN for IMU assisted odometry estimation using velodyne LiDAR. In Proceedings of the 2018 IEEE International Conference on Autonomous Robot Systems and Competitions (ICARSC), Lisbon, Portugal, 25–27 April 2018; pp. 71–77. [Google Scholar]

- Le Gentil, C.; Vidal-Calleja, T.; Huang, S. 3D LiDAR-IMU calibration based on upsampled preintegrated measurements for motion distortion correction. In Proceedings of the 2018 IEEE International Conference on Robotics and Automation (ICRA), Brisbane, Australia, 21–25 May 2018; pp. 2149–2155. [Google Scholar]

- Mueller, M.W.; Hamer, M.; D’Andrea, R. Fusing ultra-wideband range measurements with accelerometers and rate gyroscopes for quadrocopter state estimation. In Proceedings of the 2015 IEEE International Conference on Robotics and Automation (ICRA), Seattle, WA, USA, 26–30 May 2015; pp. 1730–1736. [Google Scholar]

- Corrales, J.A.; Candelas, F.A.; Torres, F. Hybrid tracking of human operators using IMU/UWB data fusion by a Kalman filter. In Proceedings of the 3rd International Conference on Intelligent Information Processing; Association for Computing Machinery (ACM), Amsterdam, The Netherlands, 12–15 March 2008; pp. 193–200. [Google Scholar]

- Zhang, M.; Xu, X.; Chen, Y.; Li, M. A lightweight and accurate localization algorithm using multiple inertial measurement units. IEEE Robot. Autom. Lett. 2020, 5, 1508–1515. [Google Scholar] [CrossRef]

- Ding, X.; Wang, Y.; Li, D.; Tang, L.; Yin, H.; Xiong, R. Laser map aided visual inertial localization in changing environment. In Proceedings of the 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Madrid, Spain, 1–5 October 2018; pp. 4794–4801. [Google Scholar]

- Zuo, X.; Yang, Y.; Geneva, P.; Lv, J.; Liu, Y.; Huang, G.; Pollefeys, M. Lic-fusion 2.0: LiDAR-inertial-camera odometry with sliding-window plane-feature tracking. arXiv 2020, arXiv:2008.07196. [Google Scholar]

- Jiang, G.; Yin, L.; Jin, S.; Tian, C.; Ma, X.; Ou, Y. A simultaneous localization and mapping (SLAM) framework for 2.5D map building based on low-cost LiDAR and vision fusion. Appl. Sci. 2019, 9, 2105. [Google Scholar] [CrossRef]

- Tian, M.; Nie, Q.; Shen, H. 3D scene geometry-aware constraint for camera localization with deep learning. In Proceedings of the 2020 IEEE International Conference on Robotics and Automation (ICRA), Paris, France, 31 May–31 August 2020; pp. 4211–4217. [Google Scholar]

- Robot@Home Dataset. Available online: http://mapir.isa.uma.es/mapirwebsite/index.php/mapir-downloads/203-robot-at-home-dataset (accessed on 9 January 2021).

- RGB-D Dataset 7-Scenes—Microsoft Research. Available online: https://www.microsoft.com/en-us/research/project/rgb-d-dataset-7-scenes/ (accessed on 9 January 2021).

- Imperial College London. ICL-NUIM RGB-D Benchmark Dataset. Available online: http://www.doc.ic.ac.uk/~ahanda/VaFRIC/iclnuim.html (accessed on 9 January 2021).

- Ruiz-Sarmiento, J.R.; Galindo, C.; Gonzalez-Jimenez, J. Robot@Home, a robotic dataset for semantic mapping of home environments. Int. J. Robot. Res. 2017, 36, 131–141. [Google Scholar] [CrossRef]

- Ruiz-Sarmiento, J.R.; Galindo, C.; Gonzalez-Jimenez, J. Building multiversal semantic maps for mobile robot operation. Knowl. Based Syst. 2017, 119, 257–272. [Google Scholar] [CrossRef]

- Jaimez, M.; Monroy, J.; Lopez-Antequera, M.; Gonzalez-Jimenez, J. Robust planar odometry based on symmetric range flow and multiscan alignment. IEEE Trans. Robot. 2018, 34, 1623–1635. [Google Scholar]

- Moreno, F.-A.; Monroy, J.; Ruiz-Sarmiento, J.-R.; Galindo, C.; Gonzalez-Jimenez, J. Automatic waypoint generation to improve robot navigation through narrow spaces. Sensors 2019, 20, 240. [Google Scholar] [CrossRef]

- Fallon, M.; Johannsson, H.; Kaess, M.; Leonard, J.J. The MIT Stata Center dataset. Int. J. Robot. Res. 2013, 32, 1695–1699. [Google Scholar] [CrossRef]

- Huitl, R.; Schroth, G.; Hilsenbeck, S.; Schweiger, F.; Steinbach, E. TUMindoor: An extensive image and point cloud dataset for visual indoor localization and mapping. In Proceedings of the 2012 19th IEEE International Conference on Image Processing, Orlando, FL, USA, 30 September–3 October 2012; pp. 1773–1776. [Google Scholar]

- Blanco-Claraco, J.-L.; Moreno-Dueñas, F.-Á.; González-Jiménez, J. The Málaga urban dataset: High-rate stereo and LiDAR in a realistic urban scenario. Int. J. Robot. Res. 2014, 33, 207–214. [Google Scholar] [CrossRef]

- Geiger, A.; Lenz, P.; Stiller, C.; Urtasun, R. Vision meets robotics: The KITTI dataset. Int. J. Robot. Res. 2013, 32, 1231–1237. [Google Scholar] [CrossRef]

- Rusli, I.; Trilaksono, B.R.; Adiprawita, W. RoomSLAM: Simultaneous localization and mapping with objects and indoor layout structure. IEEE Access 2020, 8, 196992–197004. [Google Scholar] [CrossRef]

- Nikoohemat, S.; Diakité, A.A.; Zlatanova, S.; Vosselman, G. Indoor 3D reconstruction from point clouds for optimal routing in complex buildings to support disaster management. Autom. Constr. 2020, 113, 103109. [Google Scholar] [CrossRef]

- Feng, D.; Haase-Schutz, C.; Rosenbaum, L.; Hertlein, H.; Glaser, C.; Timm, F.; Wiesbeck, W.; Dietmayer, K. Deep multi-modal object detection and semantic segmentation for autonomous driving: Datasets, methods, and challenges. IEEE Trans. Intell. Transp. Syst. 2020, 2020, 2972974. [Google Scholar] [CrossRef]

- Glocker, B.; Izadi, S.; Shotton, J.; Criminisi, A. Real-time RGB-D camera relocalization. In Proceedings of the IEEE International Symposium on Mixed & Augmented Reality, Adelaide, Australia, 1–4 October 2013. [Google Scholar]

- Shotton, J.; Glocker, B.; Zach, C.; Izadi, S.; Criminisi, A.; Fitzgibbon, A. Scene coordinate regression forests for camera relocalization in RGB-D images. In Proceedings of the 2013 IEEE Conference on Computer Vision and Pattern Recognition, Portland, OR, USA, 23–28 June 2013; pp. 2930–2937. [Google Scholar]

- Handa, A.; Whelan, T.; McDonald, J.; Davison, A.J. A benchmark for RGB-D visual odometry, 3D reconstruction and SLAM. In Proceedings of the 2014 IEEE International Conference on Robotics and Automation (ICRA), Hong Kong, China, 31 May–7 June 2014; pp. 1524–1531. [Google Scholar]

- Shetty, A.; Gao, G.X. UAV pose estimation using cross-view geolocalization with satellite imagery. In Proceedings of the 2019 International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 20–24 May 2019. [Google Scholar]

- Whelan, T.; Leutenegger, S.; Salas-Moreno, R.F.; Glocker, B.; Davison, A.J. Elasticfusion: Dense SLAM without a pose graph. In Proceedings of the Robotics: Science & Systems 2015, Rome, Italy, 13–17 July 2015. [Google Scholar]

- Tateno, K.; Tombari, F.; Laina, I.; Navab, N. CNN-SLAM: Real-time dense monocular SLAM with learned depth prediction. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 6565–6574. [Google Scholar]

- Delmerico, J.; Cieslewski, T.; Rebecq, H.; Faessler, M.; Scaramuzza, D. Are we ready for autonomous drone racing? The UZH-FPV drone racing dataset. In Proceedings of the 2019 International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 20–24 May 2019; pp. 6713–6719. [Google Scholar]

- Sturm, J.; Engelhard, N.; Endres, F.; Burgard, W.; Cremers, D. A benchmark for the evaluation of RGB-D SLAM systems. In Proceedings of the 2012 IEEE/RSJ International Conference on Intelligent Robots and Systems, Vilamoura-Algarve, Portugal, 7–12 October 2012; pp. 573–580. [Google Scholar]

- Dai, A.; Chang, A.X.; Savva, M.; Halber, M.; Funkhouser, T.; Niessner, M. ScanNet: Richly-annotated 3D reconstructions of indoor scenes. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 2432–2443. [Google Scholar]

- Silberman, N.; Hoiem, D.; Kohli, P.; Fergus, R. Indoor segmentation and support inference from RGBD images. In Proceedings of the 2012 European Conference on Computer Vision (ECCV), Firenze, Italy, 7–13 October 2012; pp. 746–760. [Google Scholar]

- Li, W.; Saeedi, S.; McCormac, J.; Clark, R.; Tzoumanikas, D.; Ye, Q.; Huang, Y.; Tang, R.; Leutenegger, S. Interiornet: Mega-scale multi-sensor photo-realistic indoor scenes dataset. arXiv 2018, arXiv:1809.00716. [Google Scholar]

- McCormac, J.; Handa, A.; Leutenegger, S.; Davison, A.J. Scenenet RGB-D: 5M photorealistic images of synthetic indoor trajectories with ground truth. arXiv 2016, arXiv:1612.05079. [Google Scholar]

- Gehrig, D.; Rebecq, H.; Gallego, G.; Scaramuzza, D. EKLT: Asynchronous photometric feature tracking using events and frames. Int. J. Comput. Vis. 2020, 128, 601–618. [Google Scholar] [CrossRef]

- Rodriguez-Gomez, J.; Eguiluz, A.G.; Dios, J.M.-D.; Ollero, A. Asynchronous event-based clustering and tracking for intrusion monitoring in UAS. In Proceedings of the 2020 IEEE International Conference on Robotics and Automation (ICRA), Paris, France, 31 May–31 August 2020; pp. 8518–8524. [Google Scholar]

- Kähler, O.; Prisacariu, V.A.; Murray, D.W. Real-time large-scale dense 3D reconstruction with loop closure. In Proceedings of the 2016 European Conference on Computer Vision (ECCV), Amsterdam, The Netherlands, 8–16 October 2016; pp. 500–516. [Google Scholar]

- Taira, H.; Okutomi, M.; Sattler, T.; Cimpoi, M.; Pollefeys, M.; Sivic, J.; Pajdla, T.; Torii, A. InLoc: Indoor visual localization with dense matching and view synthesis. IEEE Trans. Pattern Anal. Mach. Intell. 2019, 1. [Google Scholar] [CrossRef]

- Qi, C.R.; Yi, L.; Su, H.; Guibas, L.J. Pointnet++: Deep hierarchical feature learning on point sets in a metric space. arXiv 2017, arXiv:1706.02413. [Google Scholar]

- Li, Y.; Bu, R.; Sun, M.; Wu, W.; Di, X.; Chen, B. Pointcnn: Convolution on x-transformed points. arXiv 2018, arXiv:1801.07791. [Google Scholar]

- Long, J.; Shelhamer, E.; Darrell, T. Fully convolutional networks for semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 3431–3440. [Google Scholar]

- Badrinarayanan, V.; Kendall, A.; Cipolla, R. SegNet: A deep convolutional encoder-decoder architecture for image segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 2481–2495. [Google Scholar] [CrossRef] [PubMed]

- Eigen, D.; Fergus, R. Predicting depth, surface normals and semantic labels with a common multi-scale convolutional architecture. In Proceedings of the 2015 IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015; pp. 2650–2658. [Google Scholar]

- Behley, J.; Garbade, M.; Milioto, A.; Quenzel, J.; Behnke, S.; Stachniss, C.; Gall, J. SemanticKITTI: A dataset for semantic scene understanding of LiDAR sequences. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Korea, 27 October–2 November 2019; pp. 9296–9306. [Google Scholar]

- Xu, B.; Li, W.; Tzoumanikas, D.; Bloesch, M.; Davison, A.; Leutenegger, S. MID-Fusion: Octree-based object-level multi-instance dynamic SLAM. In Proceedings of the 2019 International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 20–24 May 2019. [Google Scholar]

- Zhang, S.; He, F.; Ren, W.; Yao, J. Joint learning of image detail and transmission map for single image dehazing. Vis. Comput. 2018, 36, 305–316. [Google Scholar] [CrossRef]

- Armeni, I.; Sax, S.; Zamir, A.R.; Savarese, S. Joint 2D–3D-semantic data for indoor scene understanding. arXiv 2017, arXiv:1702.01105. [Google Scholar]

- Tremblay, J.; To, T.; Sundaralingam, B.; Xiang, Y.; Fox, D.; Birchfield, S. Deep object pose estimation for semantic robotic grasping of household objects. arXiv 2018, arXiv:1809.10790. [Google Scholar]

- Bujanca, M.; Gafton, P.; Saeedi, S.; Nisbet, A.; Bodin, B.; O’Boyle, M.F.P.; Davison, A.J.; Kelly, P.H.J.; Riley, G.; Lennox, B.; et al. SLAMbench 3.0: Systematic automated reproducible evaluation of slam systems for robot vision challenges and scene understanding. In Proceedings of the 2019 International Conference on Robotics and Automation, Montreal, QC, Canada, 20–24 May 2019. [Google Scholar]

- Zhang, Z.; Scaramuzza, D. A tutorial on quantitative trajectory evaluation for visual(-inertial) odometry. In Proceedings of the 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Madrid, Spain, 1–5 October 2018; pp. 7244–7251. [Google Scholar]

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).