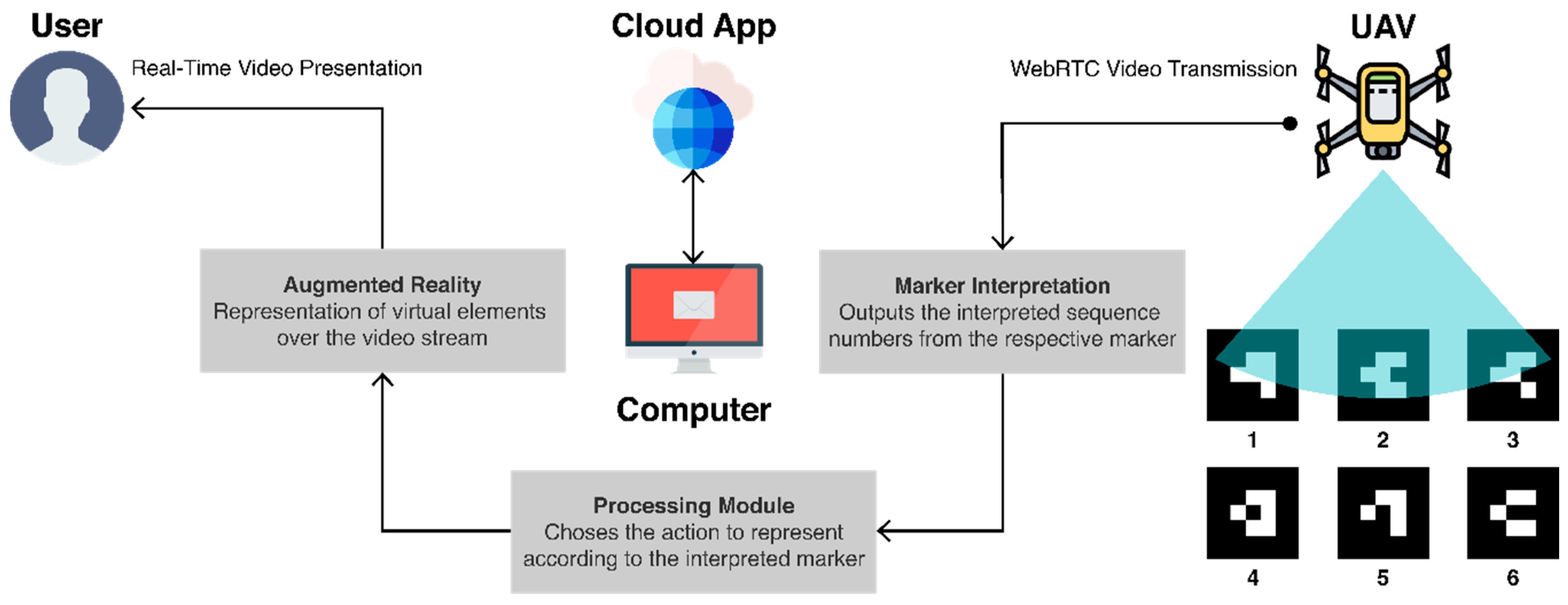

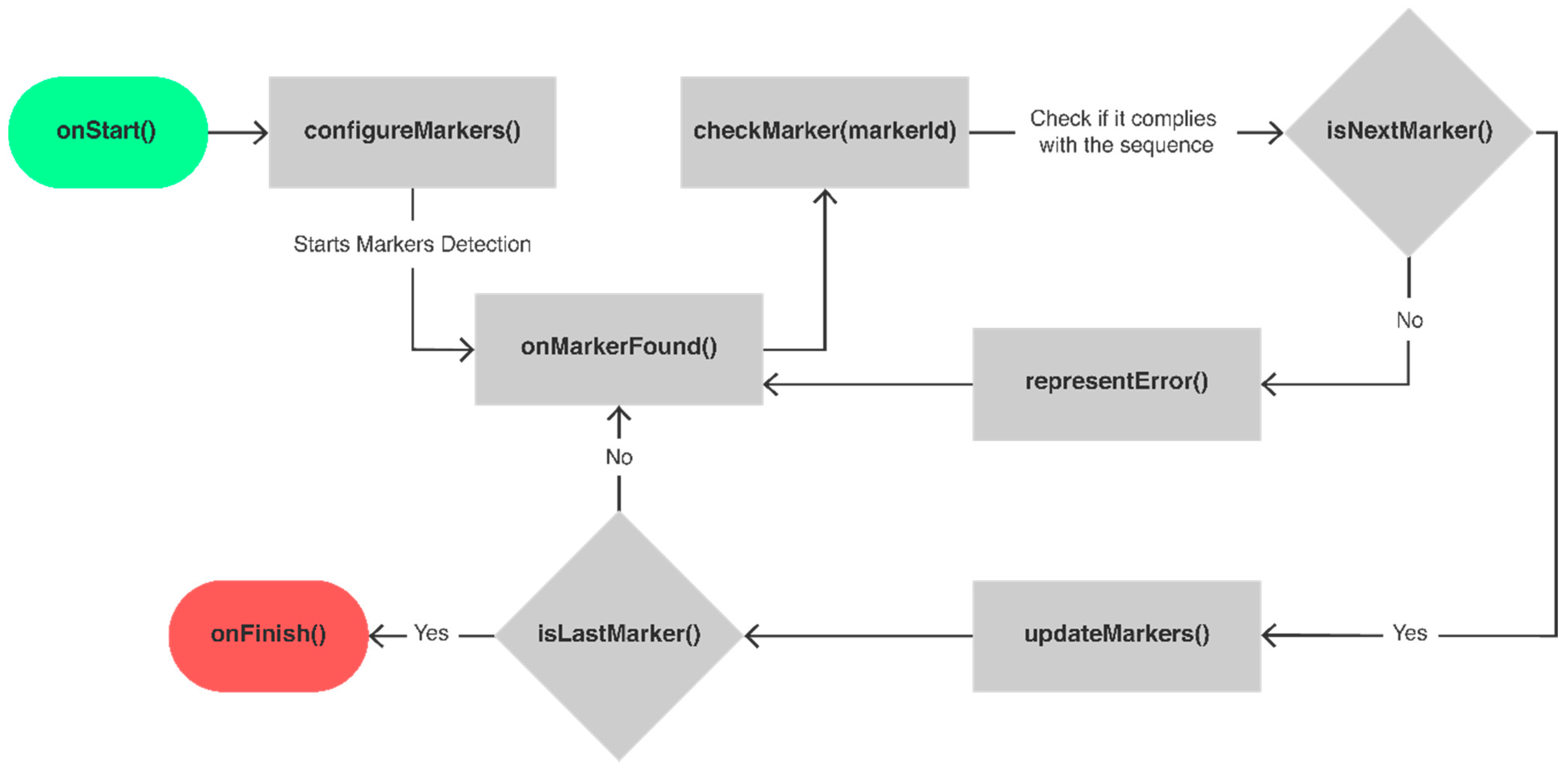

The development of the prototype was divided into iterations that helped improve the result. The assessment was separated into two phases. In the first phase, the solution was tested to validate the implementation logic and the success of the intended functionalities. Then, the performance of the prototype itself was evaluated to measure the quality of the experience built with Web AR. This section presents the two assessment phases and their results.

4.2.2. Evaluation of the Prototype

To assess the developed prototype, we designed and performed three different tests. In the first one, the objective was to verify how the marker detection technology behaves with different distances, perspectives, and camera specifications. The second one was intended to understand how fast a user could react to the representation of a virtual element, from the moment the solution detects a marker. The third evaluates, with users, the improvements that AR visual hints can bring to UAV training circuits relative to the perception of the trajectory.

Test 1—Detection of the marker according to different distances, perspectives, and cameras

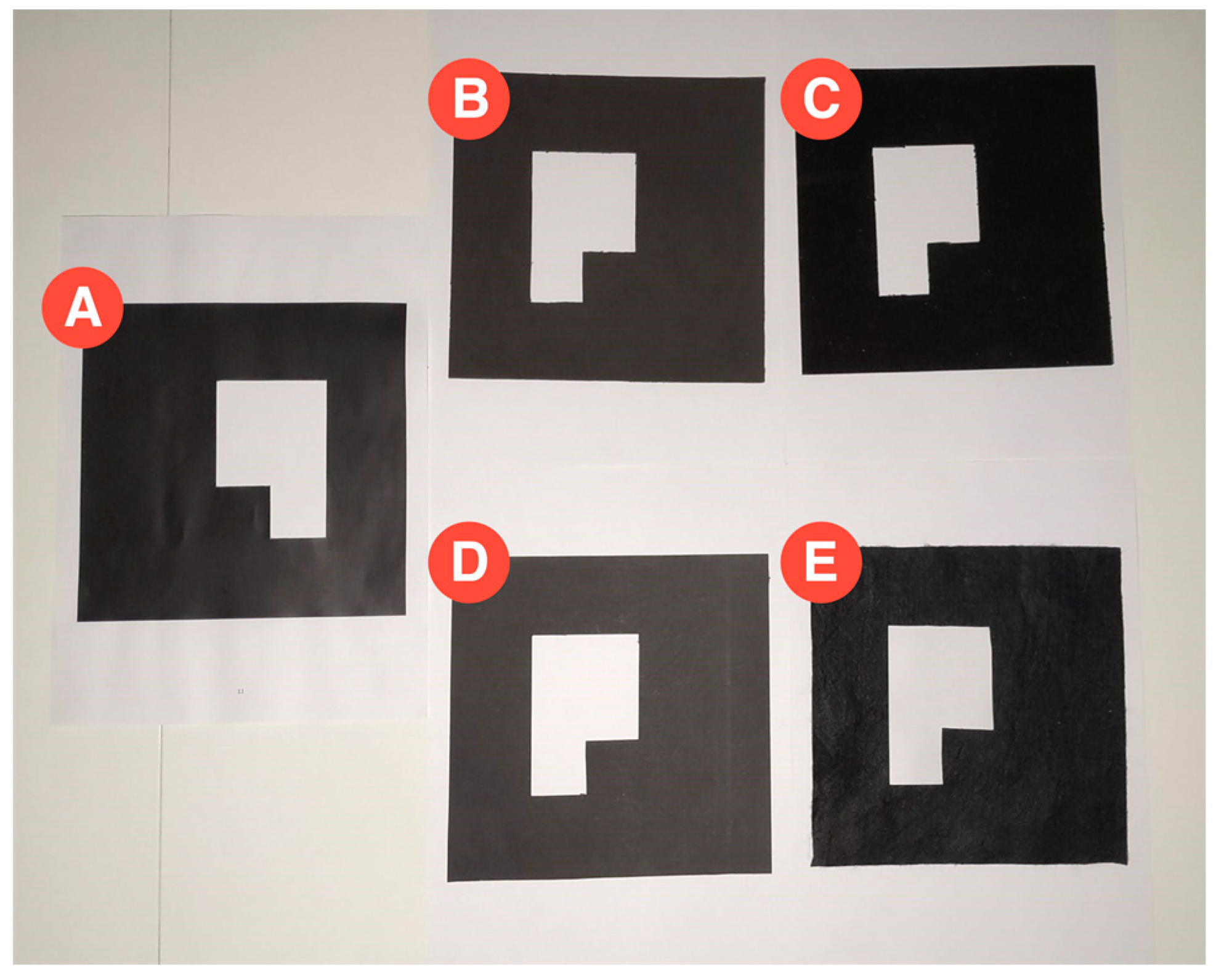

First, to evaluate the suitability of the technology used in the implementation, we defined three test scenarios based on a marker, measuring 18.5 cm by 18.5 cm, printed on an A4 page. In the first scenario, the marker was fixed to a wall, perpendicular to the ground, outdoors in cloudy weather. The second was similar, but the marker was fixed to the wall of an indoor garage lit by standard incandescent lights, at night. In the third, the marker was fixed on the ground and overflown by a drone with its camera pointing down. These tests were used to assess the efficiency of the detection at different distances and inclinations.

This experiment had four sets of seven iterations with three different devices: a low-mid range smartphone Xiaomi MI A2, an out-of-the-box DJI Phantom 4 drone (Figure 12), and a system to simulate a cost-effective modularly built UAV. The cost-effective equipment used to take the pictures was a Raspberry Pi 3 and a Pi NoIR Camera V2.1 with a still resolution of 8 MP. The pictures were captured in the default size of each device and saved in the JPG format.

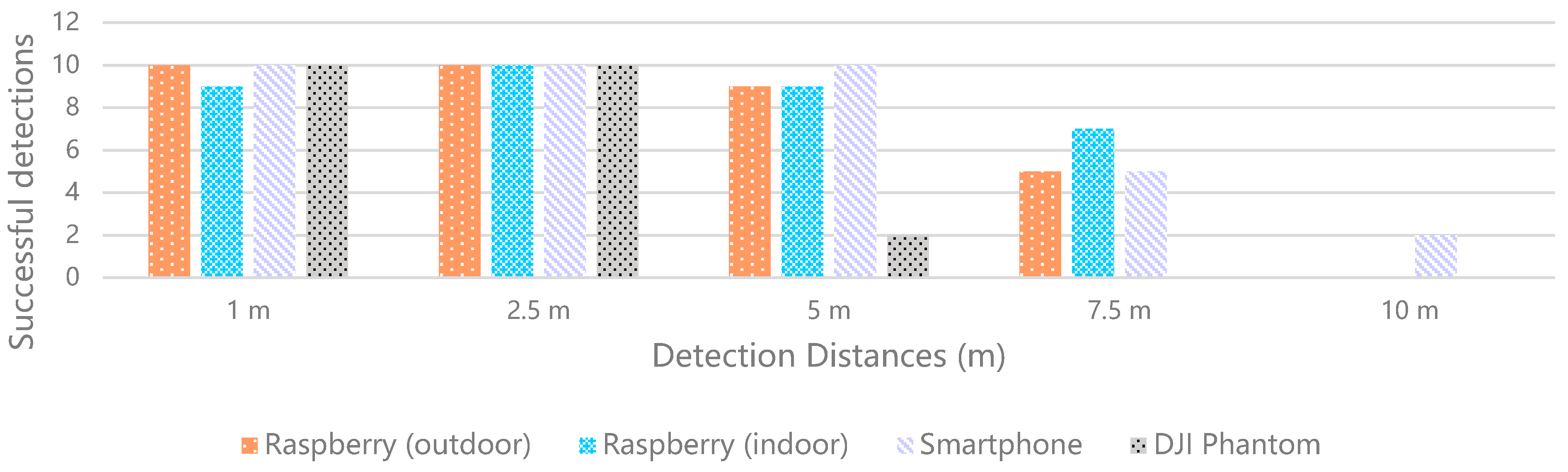

The smartphone and the drone captured pictures with 9 MP. Every iteration consisted of taking 10 pictures from different angles and positions, at one of the defined distances of 1 m, 2.5 m, 5 m, 7.5 m, 10 m, 12.5 m, and 15 m. In the case of the aerial drone footage, the distance was measured with the DJI GCS app, DJI GO 4. The first scenario therefore resulted in 140 pictures, 70 captured with the Raspberry Pi and 70 with the smartphone. The second scenario resulted in 70 pictures, captured with the Raspberry Pi, and the third scenario in 70 pictures, captured with the DJI drone. These pictures were loaded into the Web AR application to validate the detection, and the results are presented in Table 4.

These results show the percentage of successes for each iteration case. At 1 m, every iteration was successful, except for one picture captured by the Raspberry Pi in the garage, which had a shadow crossing the marker. At 2.5 m, every iteration was successful. At 5 m, only the pictures captured by the smartphone were fully successful. For the pictures captured by the Raspberry Pi outside and in the garage, the system failed to detect the marker in 1 picture of each set, giving both a success rate of 90%. The drone presented the worst results, with only 2 detections out of 10. At 7.5 m, both the Raspberry Pi outside and the smartphone presented a 50% success rate, whilst the Raspberry Pi in the garage showed better results, with 7 detections out of 10. The drone did not provide any detection at an altitude of 7.5 m. At 10 m, the marker was detected in only 2 of the smartphone pictures. At 12.5 m and 15 m, there was no detection. The smartphone samples were the only ones in which AR markers could be detected up to 10 m, whilst in the samples from the DJI Phantom drone the AR markers could only be detected up to 5 m. Additionally, the smartphone presented the best results for detection at the longest distance (Figure 13), with 2 true positives (TPs) in 10 samples, whilst the DJI Phantom presented the worst results, with a total of only 22 detections against 37 detections with the smartphone. The Raspberry Pi presented good results both outdoors and indoors with low luminosity.

In this context and according to the performance of the detection system, the results are interpreted as follows. The true positives (TPs) are the samples with detected AR markers; the true negatives (TNs) are the samples without AR markers and no detection; the false positives (FPs) are the samples without AR markers and with a detection; and the false negatives (FNs) are the samples with undetected AR markers. Every sample in the iterations contains an AR marker, which means that there are no TNs or FPs. These circumstances influence the precision and recall. Precision considers the TP samples relative to all the positive ones (i.e., TPs and FPs), so its value is 1 for every iteration, because there are no false positive samples. Recall considers the TP samples relative to the total of relevant samples (i.e., TPs and FNs), so it corresponds to the normalized success percentage of the 10 samples for each distance: the higher the recall, the better the performance of the system. These values are presented in Table 5, which excludes the samples for the distances of 12.5 m and 15 m, since the system did not work at those distances. The TP samples are presented in Figure 14.

Table 6 presents the average success rates for each distance, independently of the test scenario, whilst Table 7 presents the average success rates for each test scenario, independent of the distance. The analysis of these values shows that the distance with the best results was 2.5 m, whilst the test scenario with better performance was the one where the samples were captured with the smartphone. Regarding the performance of the proposed system, considering the tested characteristics, we can expect reliable results with distances of up to 5 m.
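The recall values and averages discussed above can be re-derived from the detection counts stated in the text. The following is a minimal sketch, not the authors' analysis script: the scenario labels and function names are illustrative, and the per-scenario average is assumed to be taken over the five distances up to 10 m only.

```python
from statistics import mean

# Distances (m) at which at least one scenario produced a detection.
DISTANCES_M = [1.0, 2.5, 5.0, 7.5, 10.0]
PICTURES_PER_CASE = 10

# Detections out of 10 pictures per distance, transcribed from the prose.
# The scenario labels are illustrative names, not taken from the tables.
DETECTIONS = {
    "Raspberry Pi (outdoor)": [10, 10, 9, 5, 0],
    "Smartphone (outdoor)": [10, 10, 10, 5, 2],
    "Raspberry Pi (garage)": [9, 10, 9, 7, 0],
    "DJI Phantom 4 (overflight)": [10, 10, 2, 0, 0],
}


def recall(tp: int, fn: int) -> float:
    """Recall = TP / (TP + FN)."""
    return tp / (tp + fn)


def recall_table() -> dict[str, list[float]]:
    """Recall per scenario and distance; every miss is a false negative."""
    return {
        name: [recall(tp, PICTURES_PER_CASE - tp) for tp in counts]
        for name, counts in DETECTIONS.items()
    }


def mean_recall_by_distance() -> dict[float, float]:
    """Average recall at each distance, across the four scenarios."""
    table = recall_table()
    return {
        d: mean(row[i] for row in table.values())
        for i, d in enumerate(DISTANCES_M)
    }


def mean_recall_by_scenario() -> dict[str, float]:
    """Average recall of each scenario over the five listed distances."""
    return {name: mean(row) for name, row in recall_table().items()}


if __name__ == "__main__":
    for d, r in mean_recall_by_distance().items():
        print(f"{d:>4} m: {r:.1%}")
    for name, r in mean_recall_by_scenario().items():
        print(f"{name}: {r:.1%}")
```

Since no sample lacks a marker, FP is zero throughout, so only recall varies between cases.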

By analyzing the pictures in which the marker was not detected, we found that shadows that partially change the color of the marker can negatively affect its segmentation and the subsequent detection. The solution is intended to work on the frames obtained from a video feed, preferably at 30 frames per second. This means that, in one second, the algorithm can receive 30 images to process: some may be blurred or present light artifacts, but there should also be good frames with a clean capture of the marker.
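The argument about video frames can be illustrated with a rough estimate. The sketch below assumes, purely for illustration, that each frame is detected independently with the still-picture success rate. Real consecutive frames share lighting and pose, so the figure is optimistic. The function name is hypothetical.

```python
def p_detect_within(per_frame_rate: float, frames: int) -> float:
    """Probability of at least one detection in `frames` frames.

    Assumes independent frames with the same per-frame success rate,
    which is a simplification: neighbouring video frames are correlated.
    """
    if not 0.0 <= per_frame_rate <= 1.0:
        raise ValueError("per_frame_rate must be between 0 and 1")
    if frames < 0:
        raise ValueError("frames must be non-negative")
    return 1.0 - (1.0 - per_frame_rate) ** frames


if __name__ == "__main__":
    # 20% is the drone's still-picture success rate at 5 m; 30 frames = 1 s.
    print(f"{p_detect_within(0.2, 30):.4f}")
```

Under this simplification, even a low per-picture success rate leaves a high chance of at least one detection within a second of video.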

The solution works from different angles and distances, which can go up to 10 m with the smartphone. This supports the development of a cost-effective AR experience for UAVs, which can be used for pilot training or the assessment of related solutions in a dynamic virtual environment, and which saves the user from spending money on physical obstacles for flying courses. Additionally, the success of the solution depends on the quality of the camera and the size of the marker. In this case, for cost-effective equipment, the identification of the marker is achievable from 7.5 m, which suggests that with a better camera and a bigger marker, the solution would work from a longer distance.

Test 2—Measurement of the user reaction time after the detection of a marker

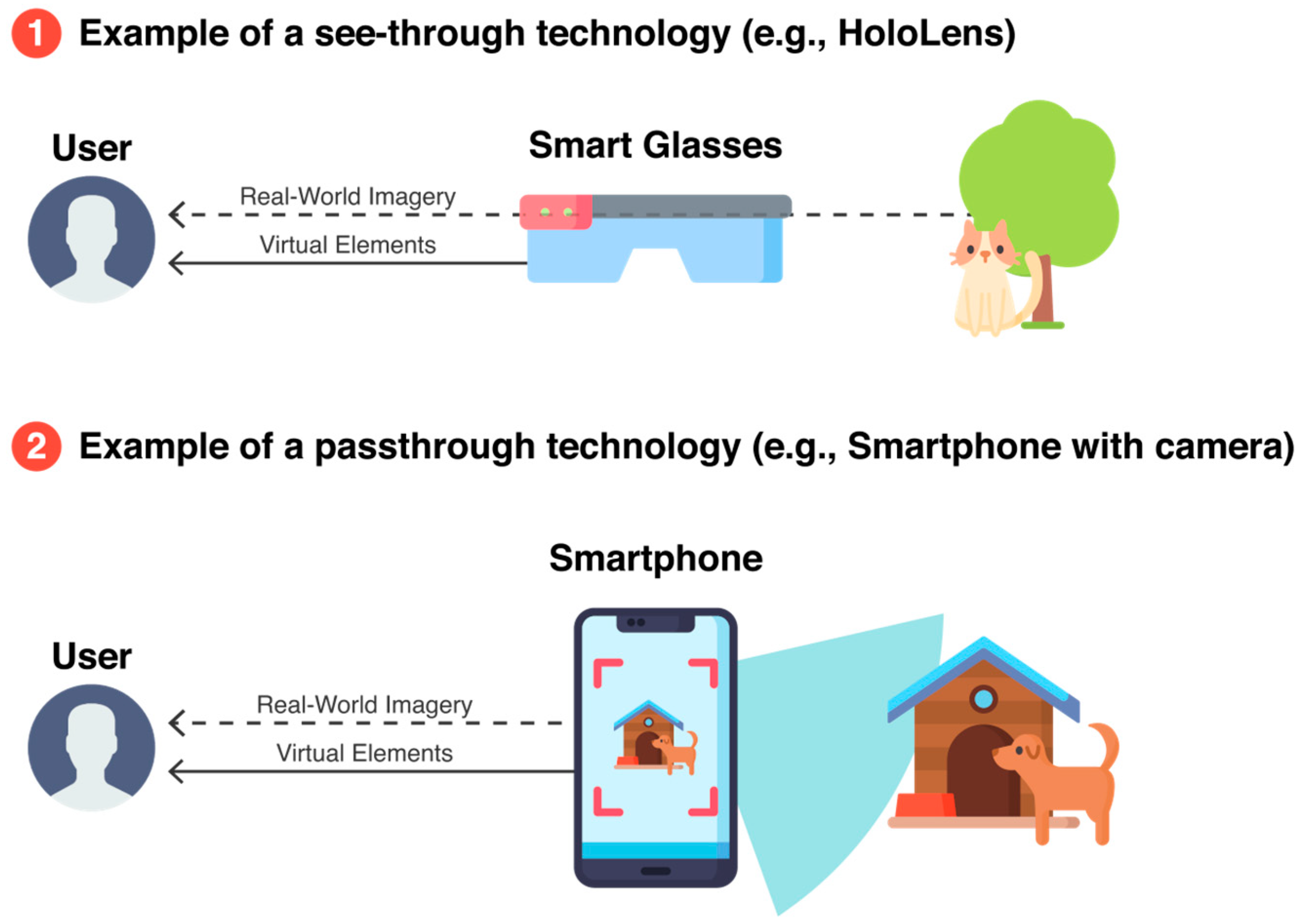

Next, to evaluate if the use of the solution was plausible in real time, we defined a test scenario consisting of a simple AR web application, a laptop, and a printed marker. This test was designed to measure how long a user would take to react after the system identified a marker and displayed the respective virtual element.

In the web application, we included the basic AR functionalities required in the development of the solution, which are the detection of a marker and the representation of a 3D model. In this application, we added a button to register timing in the event of a click. The time interval (∆t) considered for the measurements starts with the event triggered by the AR.js library when a marker is found and ends with the click of the register button, as in the following Equation (1). Each timing includes the delay from the mouse input.
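Written out from the definition above, the interval is the difference between two timestamps. The symbol names $t_{\text{click}}$ and $t_{\text{found}}$ are labels chosen here for illustration:

$$
\Delta t = t_{\text{click}} - t_{\text{found}}
$$

Here $t_{\text{found}}$ is the instant at which the library reports that the marker was found, and $t_{\text{click}}$ is the instant at which the click on the register button is handled, so $\Delta t$ includes the mouse input delay.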

The web application was deployed locally, since the implementation of the system followed the pure front-end approach, which means that the AR part of the solution always runs on the client device. For these measurements, it is therefore not relevant whether the solution is deployed locally or cloud hosted. The experiment was performed using a laptop, its webcam, and a barcode marker, during the daytime, on a sunny day, in a room filled with natural sunlight. With the application running, we passed the marker in front of the webcam and clicked on the register button when the 3D model was presented. From the 108 registered samples, we discarded eight outliers that resulted from input errors, leaving 100 samples, presented in Table 8, sorted from highest to lowest, divided into 5 groups of 20 samples, and rounded to 3 decimal places.

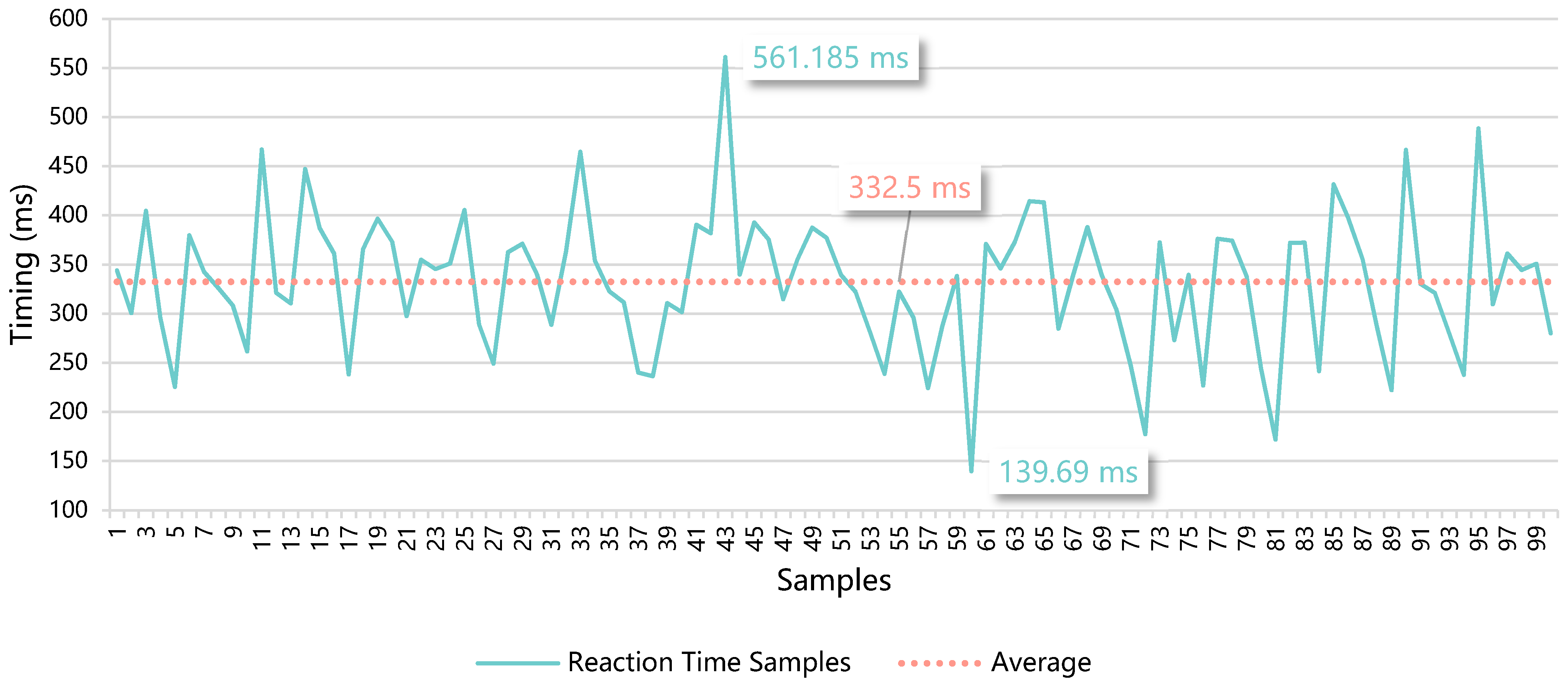

The average reaction time for the 100 samples was 332.5 ms, as represented in Figure 15. The median was 339.4 ms, with a standard deviation of 69.2 ms. The median is close to the average, which indicates that the timings are distributed evenly around it, and the standard deviation shows that the sample values do not differ significantly from the average. This means that, on average, the interval from the detection of a marker by the system to the reaction of the user to the respective virtual element is approximately a third of a second. This is a small interval that does not jeopardize the AR experience. The users can undergo a fluid interactive training experience in real time, with a real drone and in a real environment, which is enhanced by virtual elements presented through Web AR technologies.

Test 3—User testing to validate AR hints as an improvement in a simulated circuit

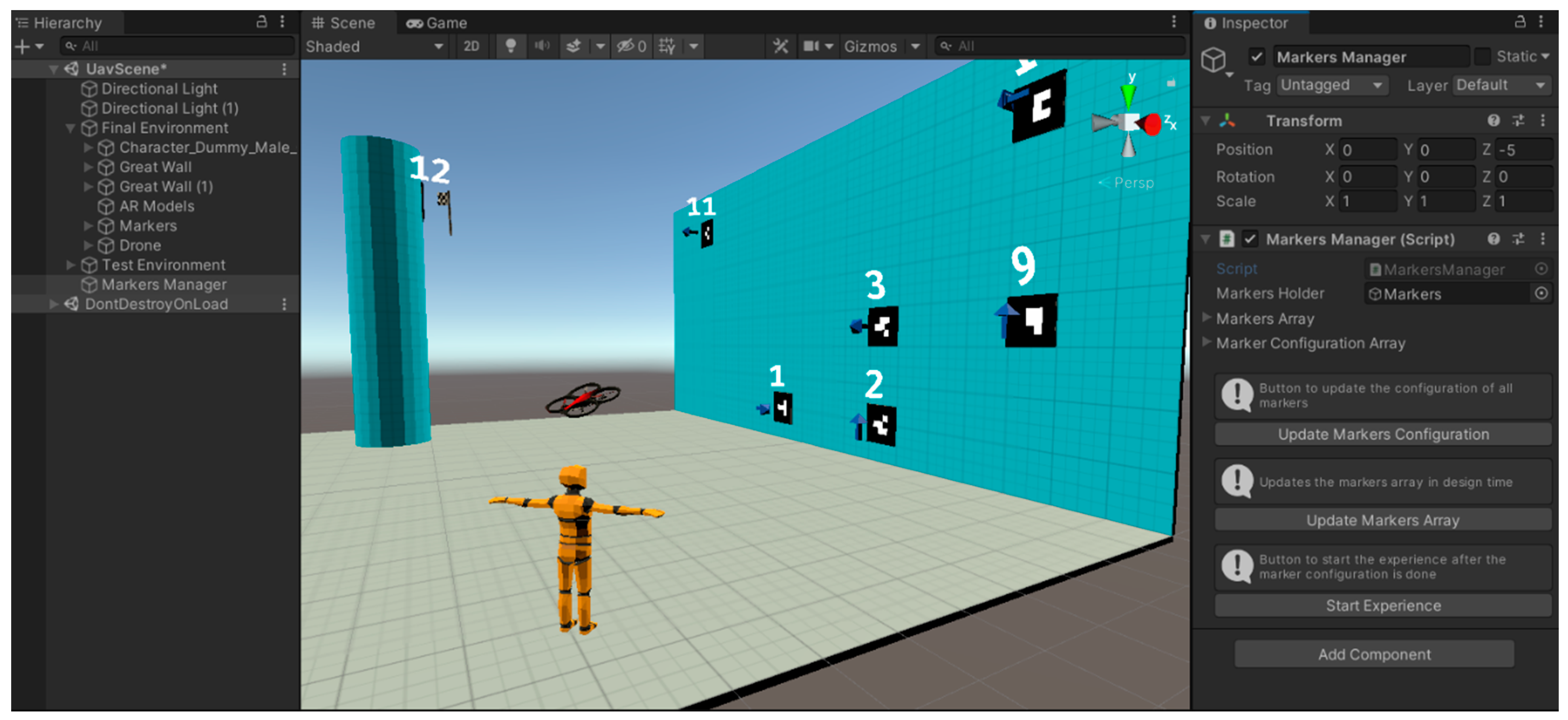

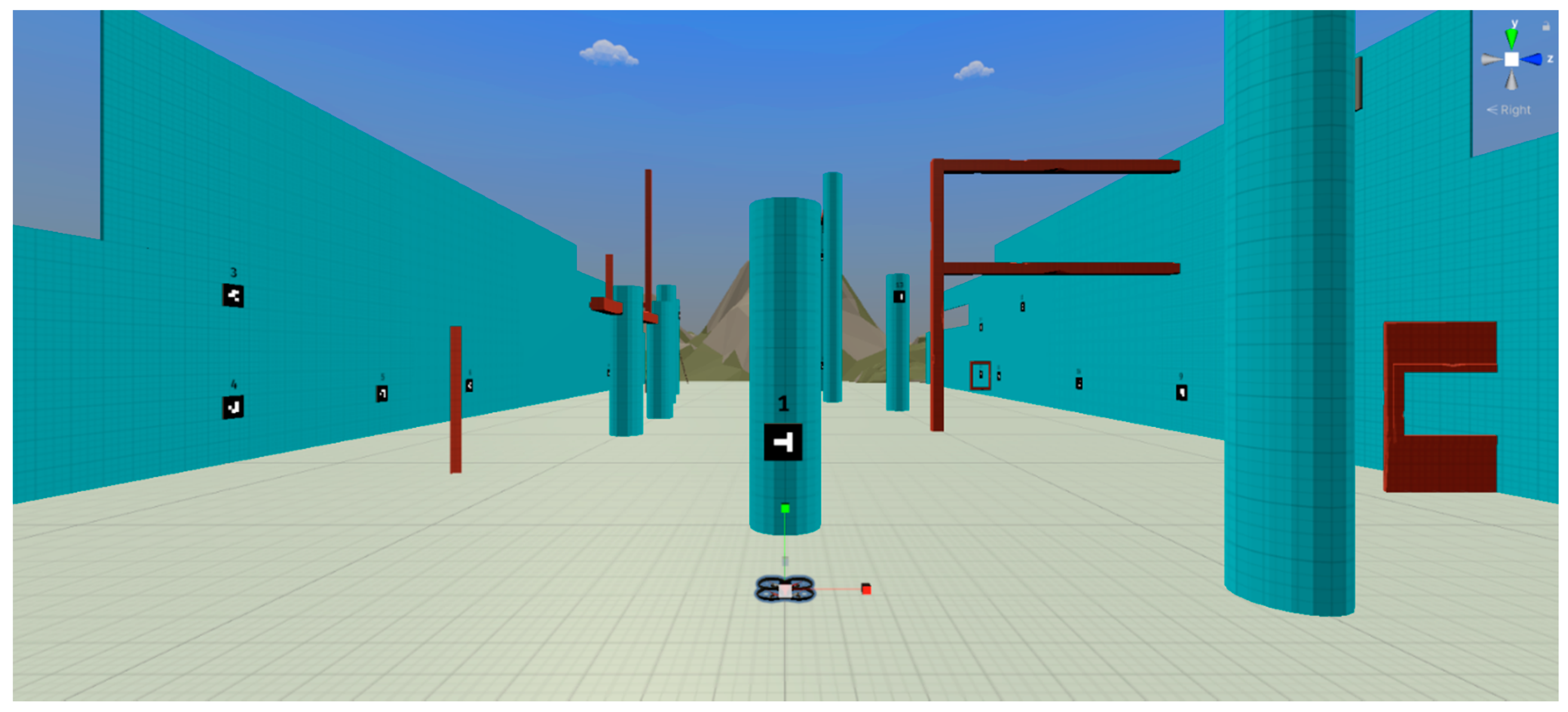

To validate the proposed training solution with users, we defined two test scenarios based on the same environment, presented in the virtual simulation created in Unity, where a quadcopter can be fully piloted through a keyboard or a gamepad, which is similar to the real UAV controllers (Figure 16). The simulator is available at the link presented in the Supplementary Materials section.

This assessment intends to validate whether the addition of dynamic AR visual hints with the POIs of an unknown trajectory improves the flight times. For that purpose, this test considers the times registered the first time the participants complete the two configured circuits, which correspond to the two test scenarios, represented by levels 1 and 2 in the simulation. The first level corresponds to a circuit made of 26 POIs, which create a trajectory of 443.56 m. The second level corresponds to a circuit also made of 26 POIs, which create a trajectory of 432.42 m.

In both levels, the participants must detect the markers in the correct order, which is represented with text above the markers, in this case, from 1 to 26. When a participant detects a marker, the simulation checks if it is part of the defined trajectory, as the next POI to visit, or not. These two situations trigger an audio feedback that represents success and failure. Additionally, when the participants detect the first marker, the stopwatch available in the simulation starts counting. When the participants arrive at the 26th marker, the recorded time is presented on the screen in the format “00:00:00:000”, showing hours, minutes, seconds, and milliseconds. The data were converted to minutes and rounded to three decimal places, to facilitate their presentation and interpretation.
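The conversion from the on-screen stopwatch format to minutes can be sketched as follows. This is an illustrative helper, not code from the simulator: the function name and the example timestamp are assumptions.

```python
def stopwatch_to_minutes(stamp: str) -> float:
    """Convert an 'HH:MM:SS:mmm' stopwatch string to minutes (3 decimals)."""
    parts = stamp.split(":")
    if len(parts) != 4:
        raise ValueError(f"expected 'HH:MM:SS:mmm', got {stamp!r}")
    hours, minutes, seconds, millis = (int(p) for p in parts)
    # Work in integer milliseconds to avoid accumulating float error.
    total_ms = ((hours * 60 + minutes) * 60 + seconds) * 1000 + millis
    return round(total_ms / 60_000, 3)


if __name__ == "__main__":
    # Hypothetical reading: 1 min 17.880 s.
    print(stopwatch_to_minutes("00:01:17:880"))
```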

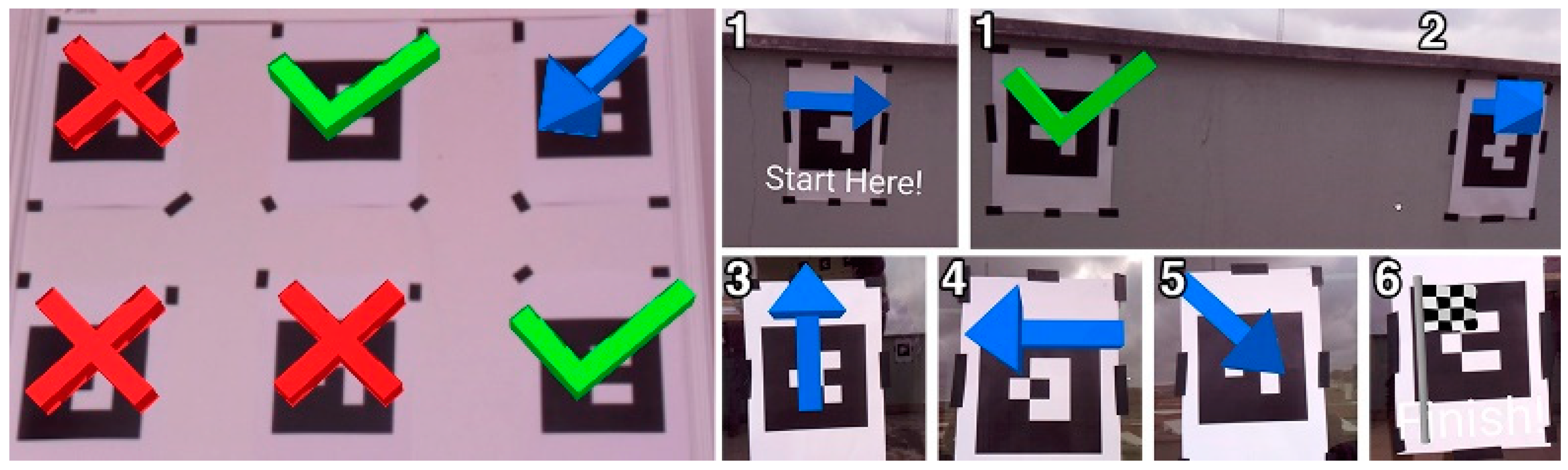

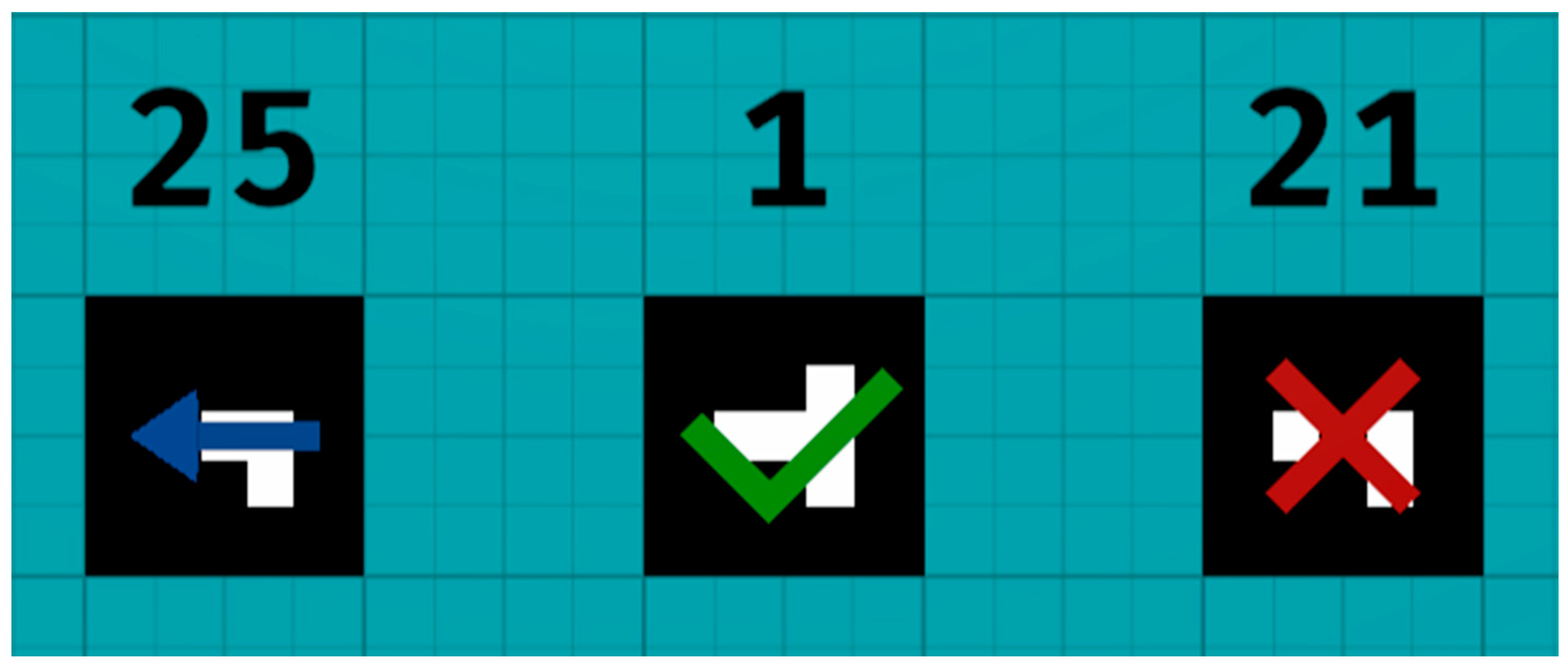

The difference between the two levels is the absence or presence of the visual hints. Level 1 only presents the markers with a numeral indicator on top, representing the order of the trajectory. Level 2 simulates the solution proposed in this paper by adding AR visual hints when the drone gets close to the markers, as presented in Figure 17. The visual hints are arrows pointing at the next marker, a check sign if the marker was already validated, and a cross if the detection does not follow the defined trajectory. To prevent the results of the second level from being influenced by an improvement in control skill, we provided a training level with the same environment, so that users could get used to the controls before starting the experience.
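The order check and the choice of visual hint can be sketched as a small state machine. This is a minimal illustration under assumptions, not the Unity implementation: the class and member names are hypothetical, and showing a check sign on the final marker is an assumed behavior, since there is no next marker to point at.

```python
from enum import Enum


class Hint(Enum):
    ARROW = "arrow"  # marker accepted, point at the next marker
    CHECK = "check"  # marker was already validated
    CROSS = "cross"  # marker is not the next POI in the trajectory


class CircuitTracker:
    """Tracks progress along markers numbered 1..poi_count."""

    def __init__(self, poi_count: int = 26) -> None:
        self.poi_count = poi_count
        self.next_poi = 1  # number of the marker expected next

    @property
    def finished(self) -> bool:
        return self.next_poi > self.poi_count

    def on_marker_detected(self, marker_id: int) -> Hint:
        if marker_id < self.next_poi:
            return Hint.CHECK
        if marker_id == self.next_poi:
            self.next_poi += 1
            # Assumption: the last marker has no successor, so show a check.
            return Hint.CHECK if self.finished else Hint.ARROW
        return Hint.CROSS


if __name__ == "__main__":
    tracker = CircuitTracker(poi_count=3)
    for marker in (2, 1, 1, 2, 3):
        print(marker, tracker.on_marker_detected(marker).value)
```

In the simulation, the success and failure outcomes also trigger audio feedback, which would hook into the same branches.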

As for the simulated quadcopter, it flies with a maximum speed of 6 m/s, either when moving along its horizontal plane or increasing and decreasing its altitude, and it rotates 90 degrees per second along its vertical axis, to perform turns leftwards and rightwards.

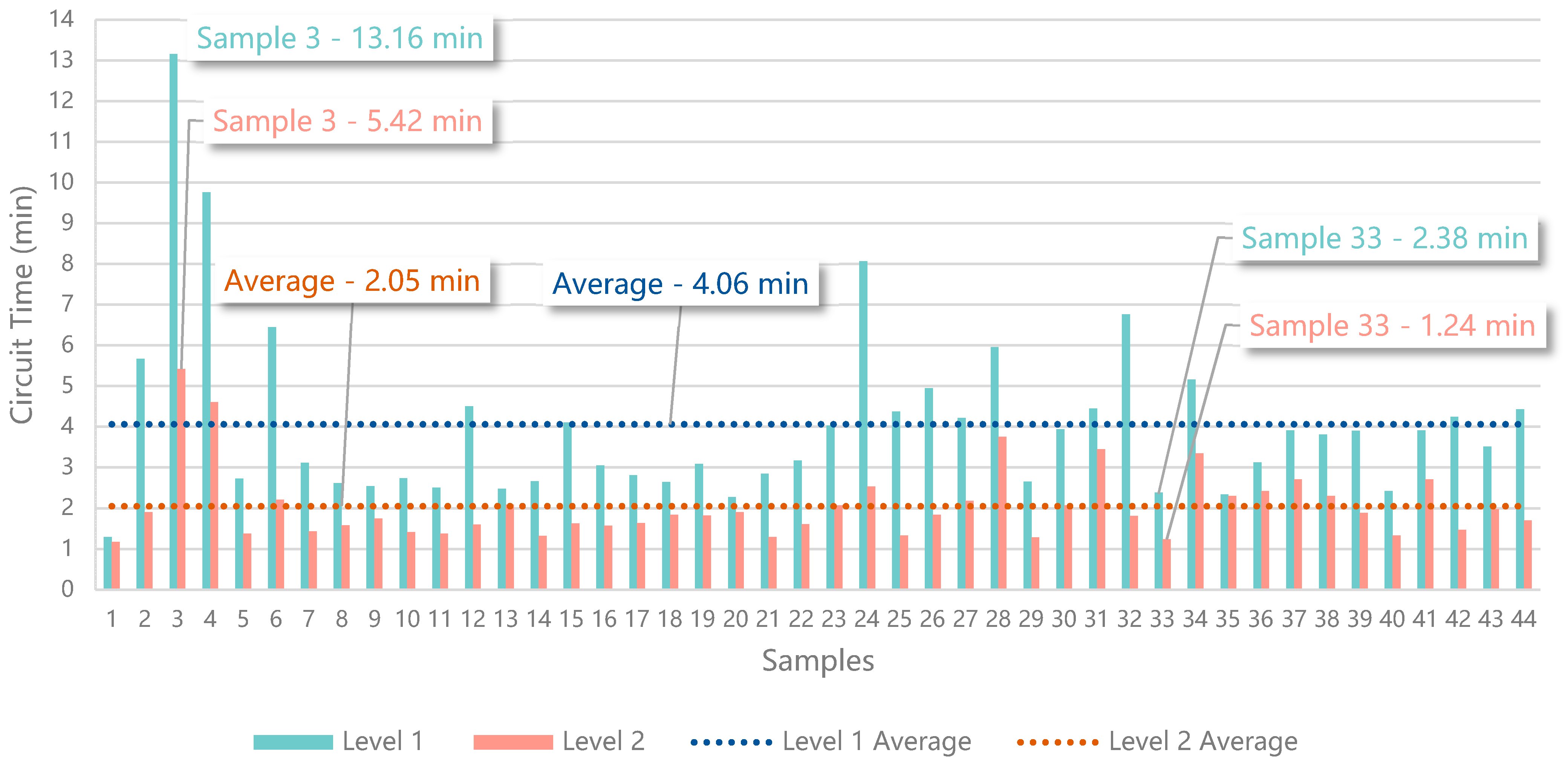

This evaluation was performed by 44 participants, 31 men and 13 women, with ages from 18 to 58. After the experience, each participant answered a Google Forms survey to upload the results, which are presented in Table 9 and Figure 18, where the first sample corresponds to the control values obtained by the authors that built the trajectory, for comparison purposes (i.e., 1.298 min in level 1 and 1.172 min in level 2).

The third sample had the highest values (i.e., 13.162 min in level 1 and 5.416 min in level 2), whilst the sample with the lowest values, besides the control sample, was sample 33 (i.e., 2.368 min in level 1 and 1.233 min in level 2). There are 5 samples that can be identified as outliers, which correspond to users with less dexterity and less experience in control interfaces (i.e., samples 3, 4, 6, 24, 32). Samples 13 (i.e., 2.480 min in level 1 and 2.085 min in level 2) and 35 (i.e., 2.339 min in level 1 and 2.303 min in level 2) describe the results from two participants who gave up on level 1 because they could not find the marker identified by the number 4, then finished level 2 and returned to finish level 1, failing to comply with the test rules. The average for level 1 is 4.061 min (i.e., 4 min, 3 s, and 660 ms), whilst the average for level 2 is 2.052 min (i.e., 2 min, 3 s, and 120 ms). The comparison of the average values shows that the participants were 1.979 times faster traveling the trajectory of level 2, which includes the simulation of the AR visual hints. Of the 44 participants, 5 reported that having to search for the trajectory in level 1 was annoying, but then enjoyed flying in level 2, following the AR visual hints. Additionally, 8 participants complained about the controls not being intuitive, which is a common feeling among users who are not used to piloting UAVs.
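The breakdown of the averages and the speed-up factor can be checked with a few lines of arithmetic. The sketch below uses only the two reported averages. The function names are illustrative.

```python
def split_minutes(minutes: float) -> tuple[int, int, int]:
    """Split decimal minutes into (minutes, seconds, milliseconds)."""
    total_ms = round(minutes * 60_000)
    mins, remainder_ms = divmod(total_ms, 60_000)
    secs, millis = divmod(remainder_ms, 1000)
    return mins, secs, millis


def speedup(baseline_min: float, improved_min: float) -> float:
    """How many times faster the improved time is than the baseline."""
    return baseline_min / improved_min


if __name__ == "__main__":
    level_1_avg, level_2_avg = 4.061, 2.052  # averages reported in the text
    print(split_minutes(level_1_avg))
    print(split_minutes(level_2_avg))
    print(round(speedup(level_1_avg, level_2_avg), 3))
```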

The results greatly support the use of AR visual hints to improve the performance of the participants learning to fly along a trajectory. The addition of the visual hints (i.e., arrow, check, and cross) in level 2 substantially decreased the time required to finish a similar trajectory, within the same environment configuration.