Displacement Estimation Based on Optical and Inertial Sensor Fusion

Abstract

1. Introduction

2. Materials and Methods

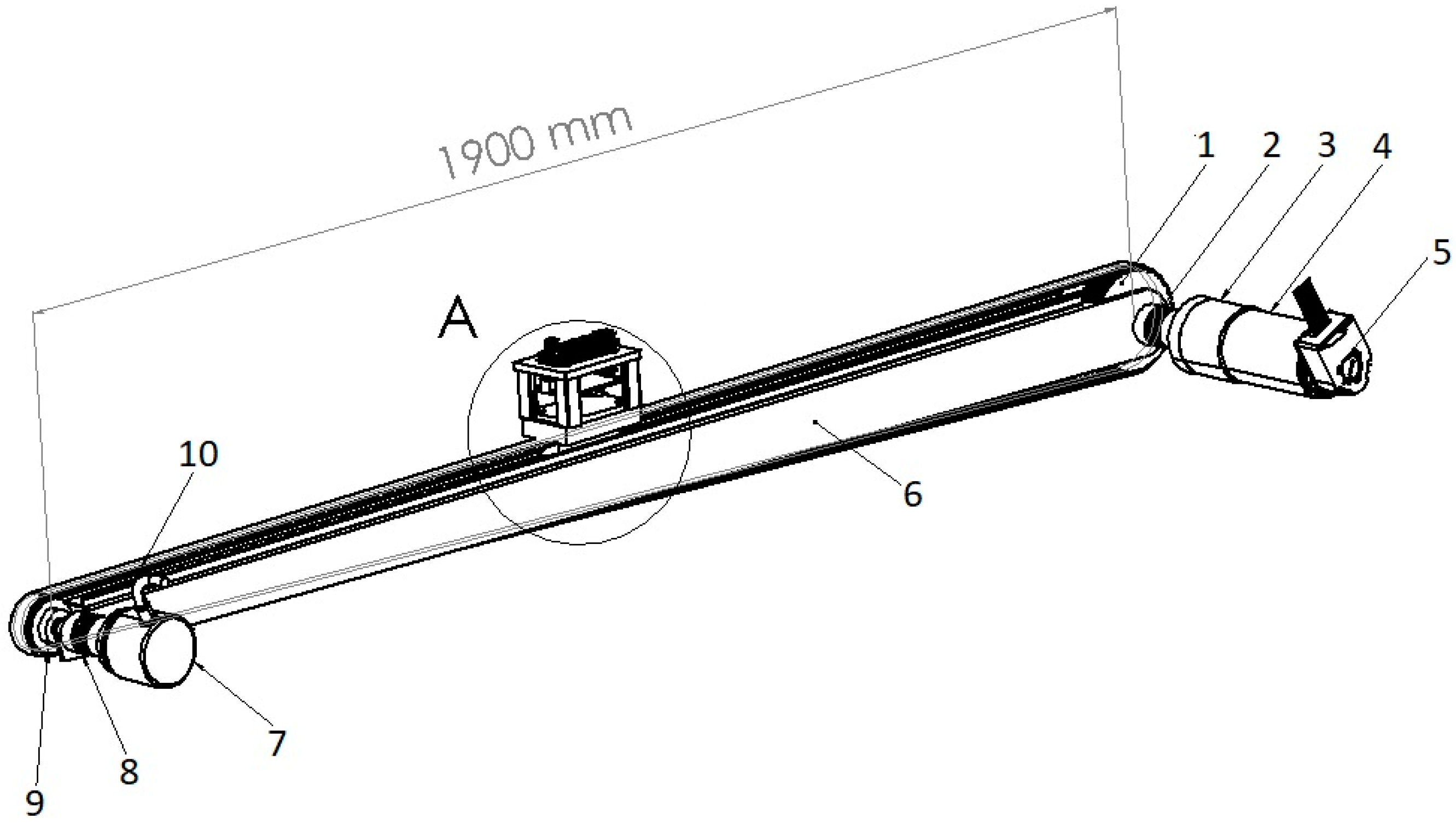

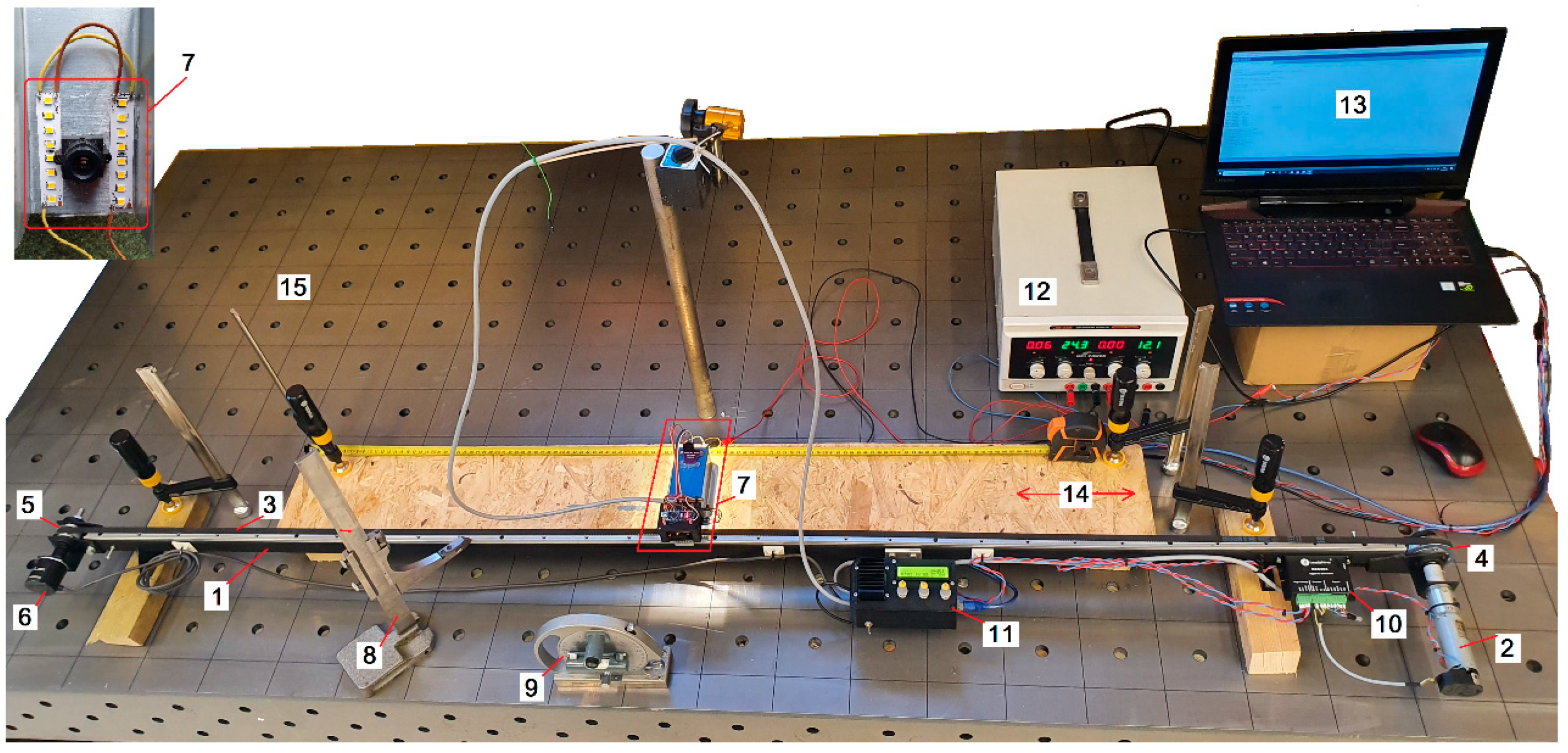

2.1. Designed Test Stand and Studied Sensors

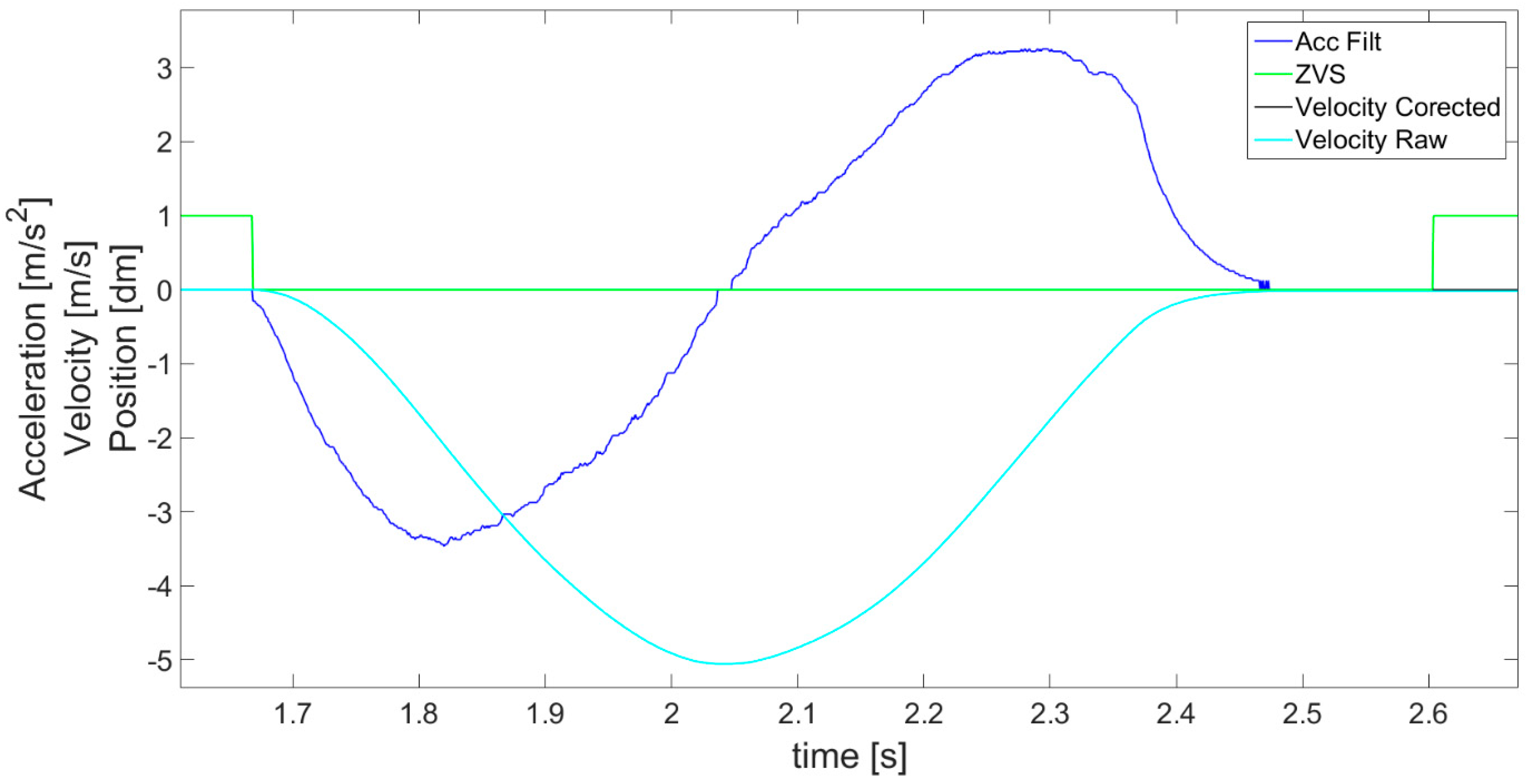

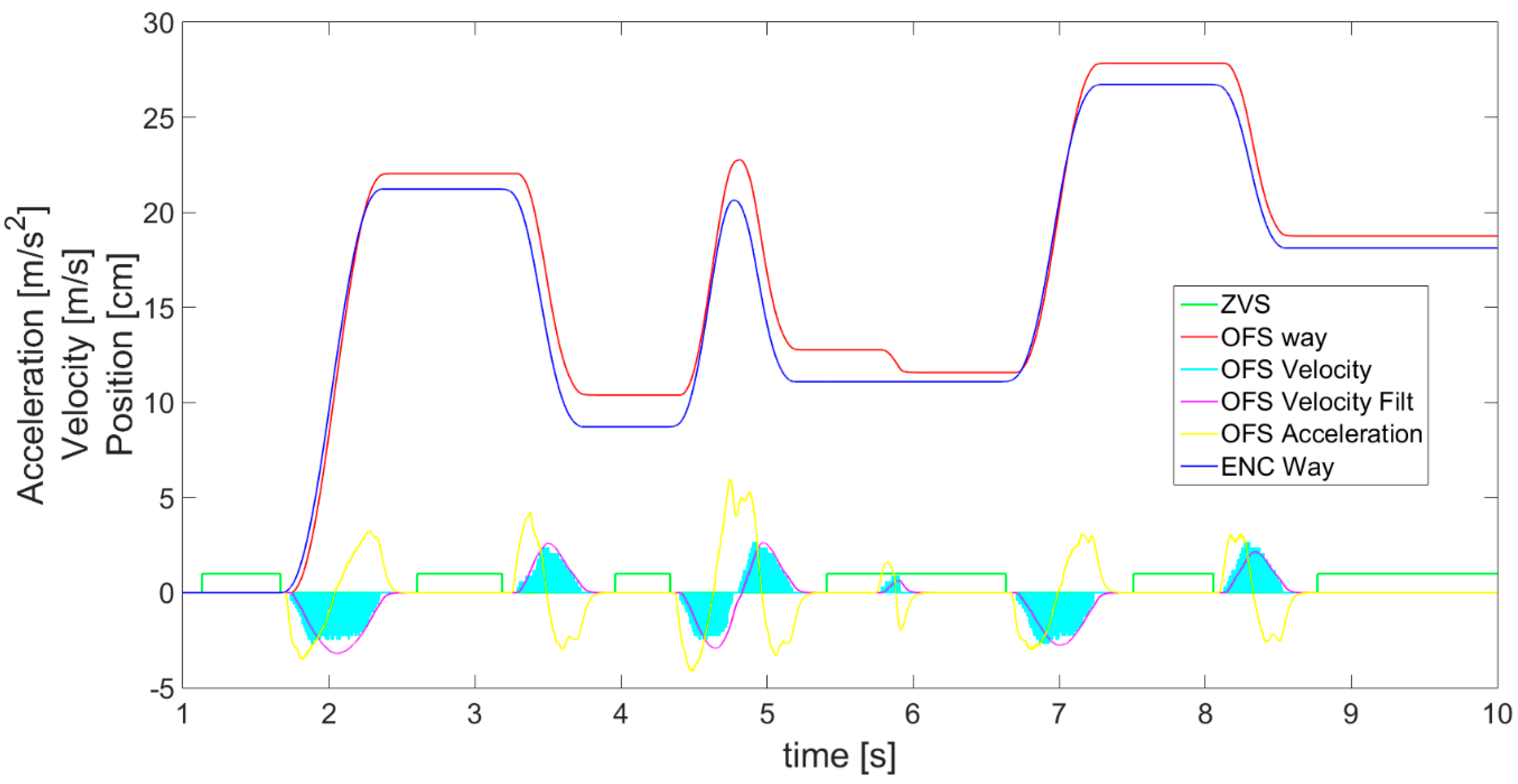

2.2. Accelerometer—Displacement Estimation Algorithm
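
Displacement can be recovered from accelerometer readings by integrating twice, first to velocity and then to position. The minimal Python sketch below illustrates that general technique under stated assumptions, and it is not the authors' algorithm. The samples are assumed to be evenly spaced by `dt` and already converted to m/s² along a single motion axis. The object is assumed to be at rest at the start, so the mean of the first few samples can be treated as a constant bias. The helper names `remove_bias` and `integrate_twice` are hypothetical.

```python
from typing import List, Sequence, Tuple


def remove_bias(accel: Sequence[float], n_static: int) -> List[float]:
    """Subtract the mean of the first `n_static` samples (assumed recorded at rest)."""
    if n_static <= 0 or n_static > len(accel):
        raise ValueError("n_static must be between 1 and len(accel)")
    bias = sum(accel[:n_static]) / n_static
    return [a - bias for a in accel]


def integrate_twice(accel: Sequence[float], dt: float) -> Tuple[List[float], List[float]]:
    """Trapezoidal double integration: acceleration -> velocity -> position.

    Assumes zero initial velocity and zero initial position.
    """
    if not accel:
        return [], []
    velocity = [0.0]
    position = [0.0]
    for k in range(1, len(accel)):
        # velocity update: area under the acceleration curve over one interval
        v = velocity[-1] + 0.5 * (accel[k - 1] + accel[k]) * dt
        # position update: area under the velocity curve over the same interval
        p = position[-1] + 0.5 * (velocity[-1] + v) * dt
        velocity.append(v)
        position.append(p)
    return velocity, position


if __name__ == "__main__":
    dt = 0.01
    samples = [1.0] * 101  # hypothetical input: constant 1 m/s^2 for 1 s
    _, pos = integrate_twice(samples, dt)
    print(f"displacement after 1 s: {pos[-1]:.4f} m")
```

Any residual bias b grows quadratically in the position estimate, as an error of b·t²/2. This is why accelerometer-only displacement drifts and why a second, drift-free sensor is useful.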

2.3. Optical Flow Sensor—Displacement Estimation Algorithm

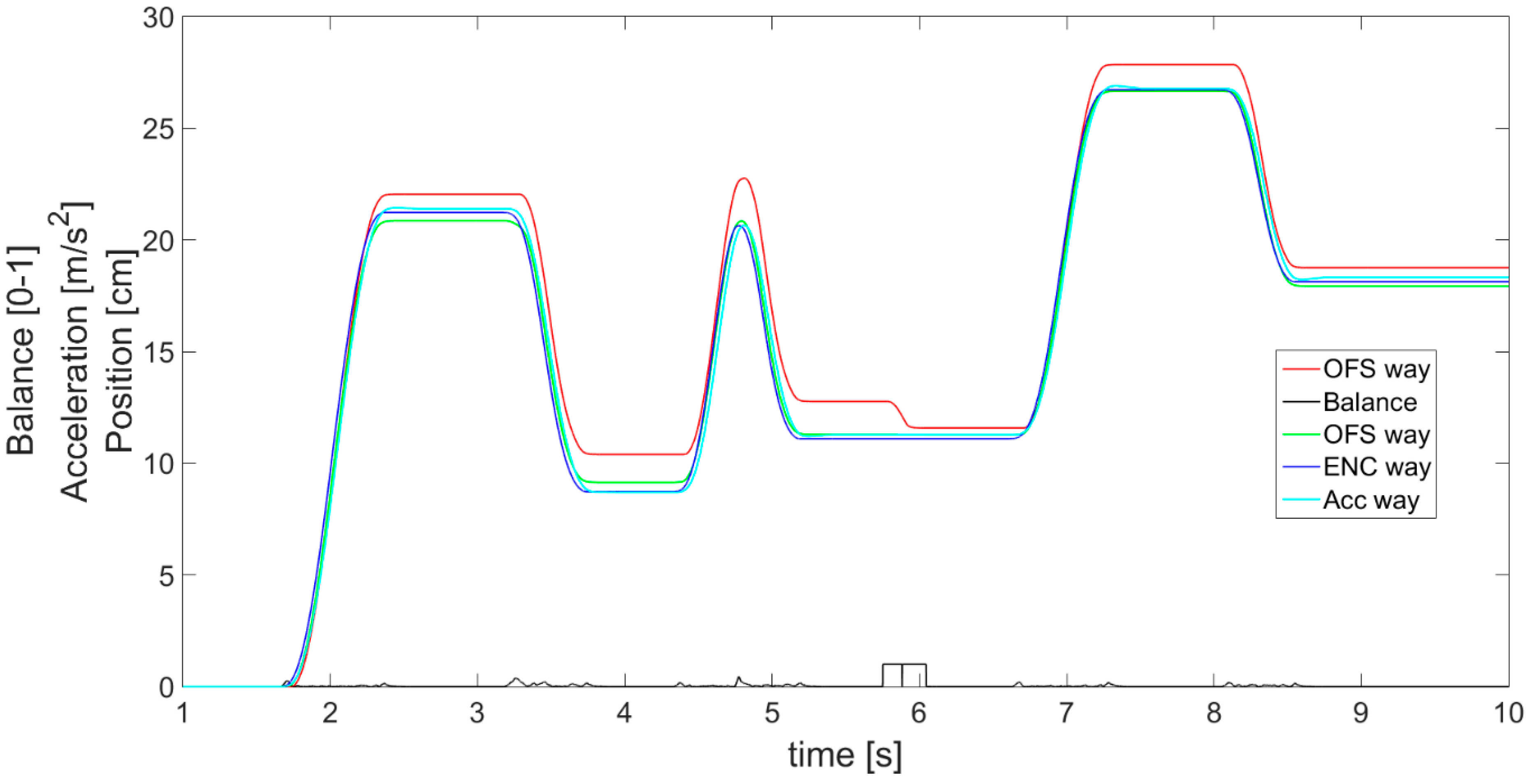

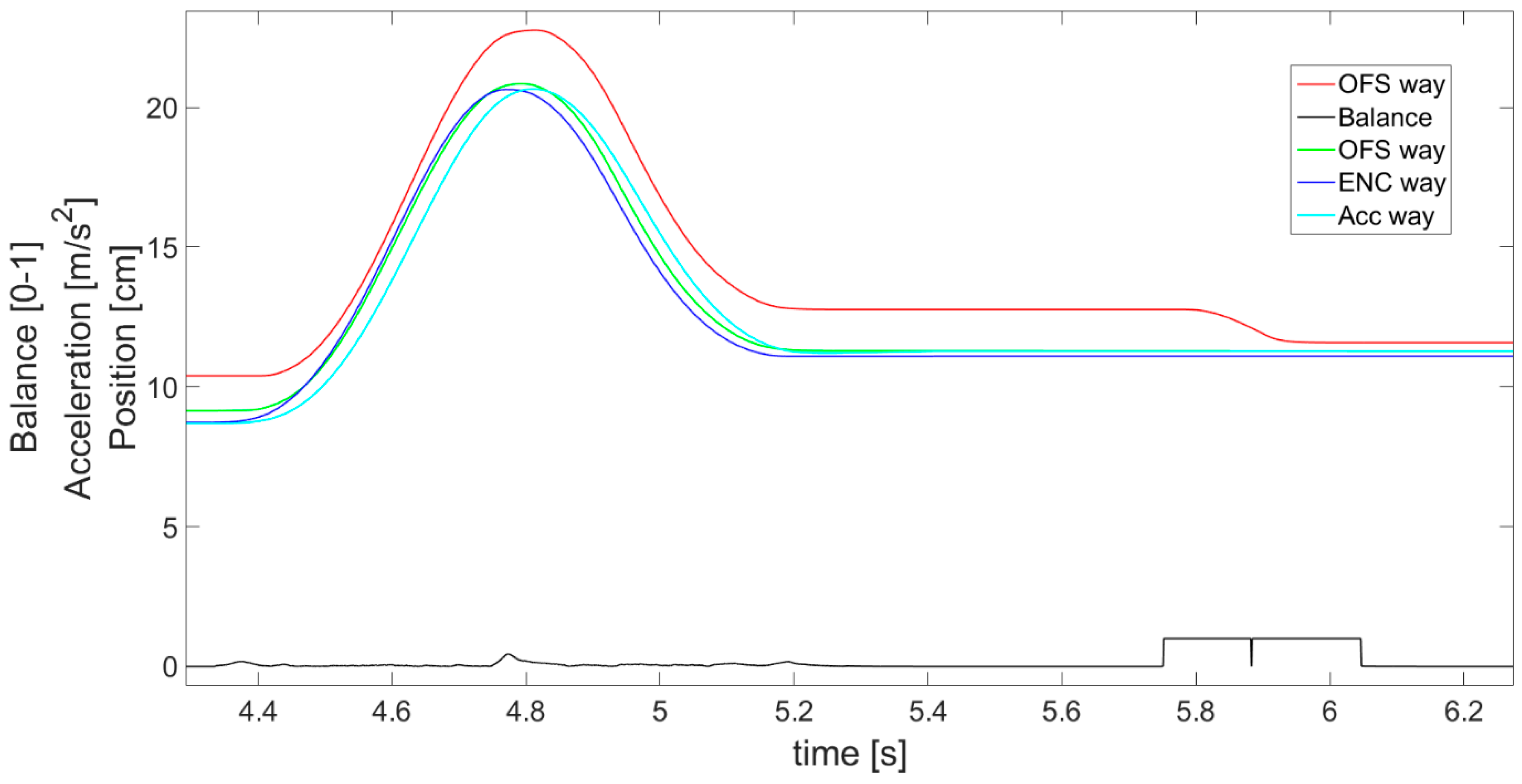
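
An optical flow sensor such as the PMW3901 reports motion as X and Y increments (counts) since the previous readout. A minimal sketch accumulates those counts into a planar trajectory. It assumes a linear counts-to-distance relationship at a fixed sensor height above the surface. The scale factor `cm_per_count` and the helpers `calibrate_scale` and `accumulate_displacement` are hypothetical. In practice the factor depends on height and optics and would have to be determined experimentally, for example by moving the sensor over a known distance.

```python
from typing import Iterable, List, Tuple


def calibrate_scale(total_counts: int, known_distance_cm: float) -> float:
    """Hypothetical calibration: distance per count from a run of known length."""
    if total_counts == 0:
        raise ValueError("no motion counts recorded during calibration")
    return known_distance_cm / total_counts


def accumulate_displacement(
    deltas: Iterable[Tuple[int, int]], cm_per_count: float
) -> List[Tuple[float, float]]:
    """Sum per-readout (dx, dy) counts and convert the running totals to centimetres."""
    x_counts = 0
    y_counts = 0
    track = []
    for dx, dy in deltas:
        # accumulate integer counts first so floating-point rounding does not build up
        x_counts += dx
        y_counts += dy
        track.append((x_counts * cm_per_count, y_counts * cm_per_count))
    return track


if __name__ == "__main__":
    scale = calibrate_scale(total_counts=500, known_distance_cm=10.0)
    print(accumulate_displacement([(10, 0), (15, -5), (-5, 0)], scale))
```

Keeping the running totals as integers and scaling only at output time means the count sum itself accumulates no rounding error. The remaining error comes from the scale factor and from the sensor.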

2.4. Sensor Fusion Algorithm—Complementary Filter

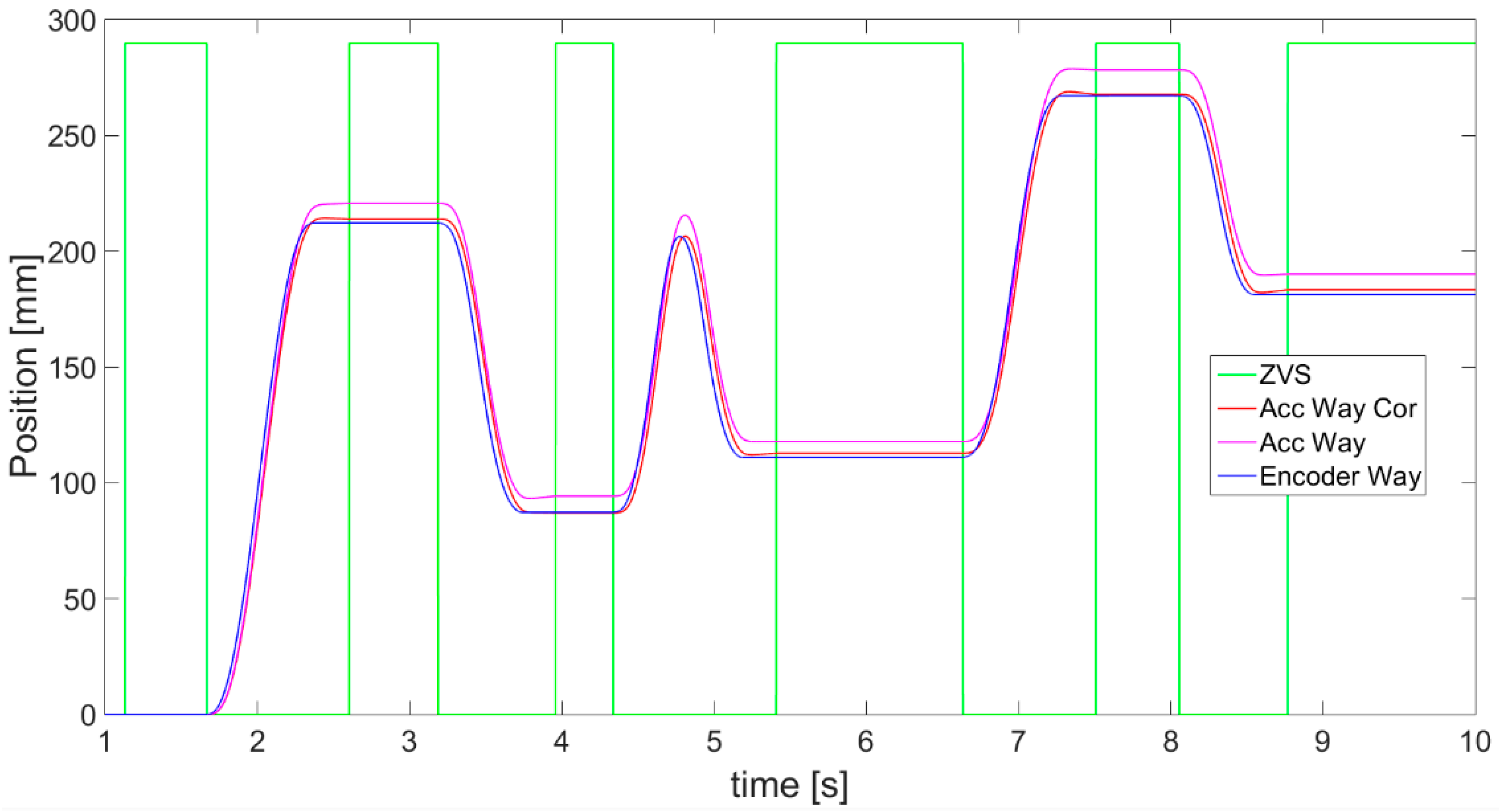

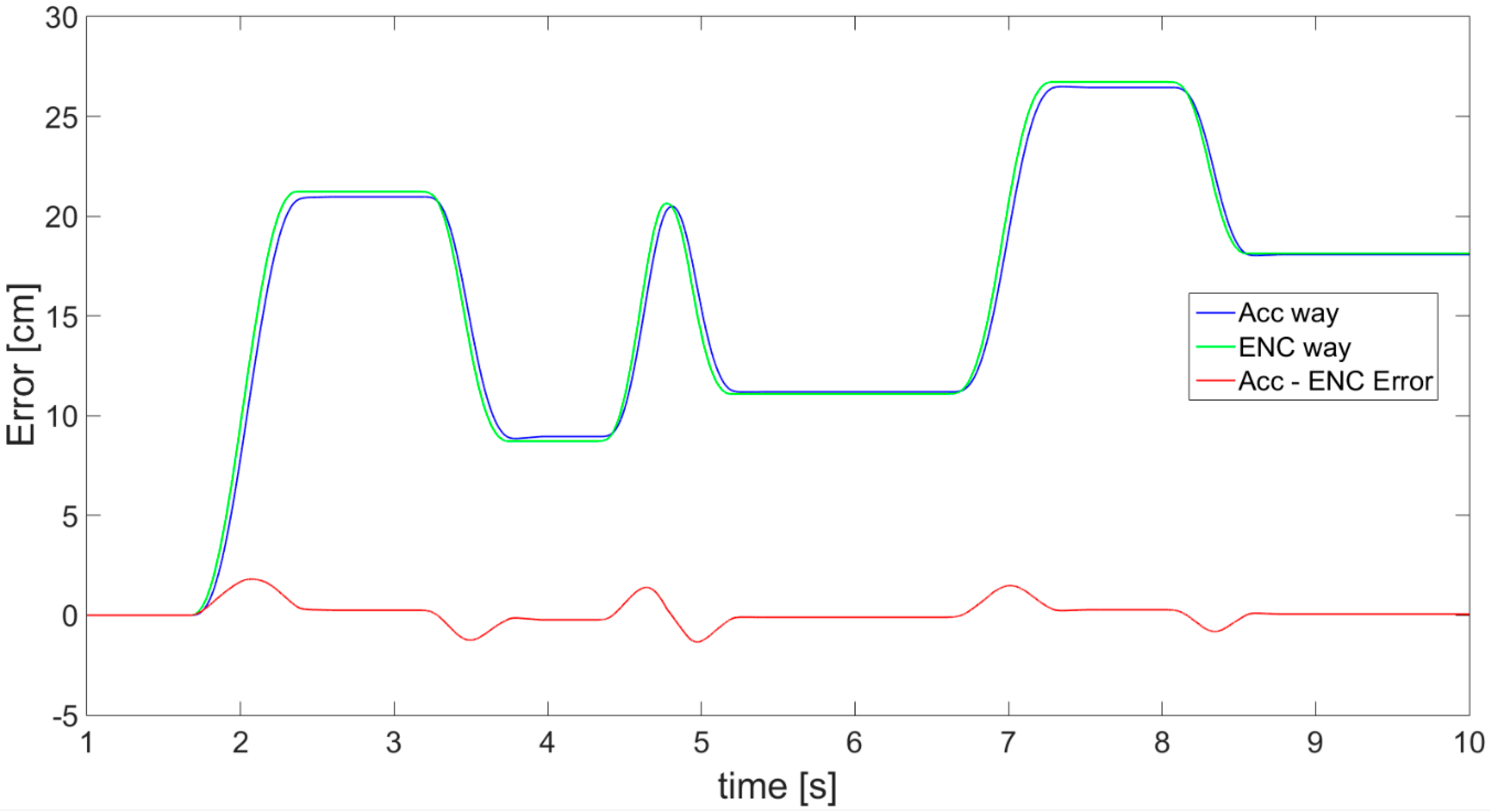
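
A complementary filter blends two estimates whose errors lie in different frequency bands. The sketch below is one common discrete form. It assumes the accelerometer-derived displacement is trusted for short-term changes, because its drift is a low-frequency error. The optical flow displacement is trusted for the long-term level. The weighting `alpha` and the function name `complementary_fuse` are illustrative assumptions, not the values or code used in this study.

```python
from typing import List, Sequence


def complementary_fuse(
    acc_disp: Sequence[float], ofs_disp: Sequence[float], alpha: float
) -> List[float]:
    """Fuse accelerometer and optical-flow displacement series sample by sample."""
    if len(acc_disp) != len(ofs_disp):
        raise ValueError("both inputs must have the same length")
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must lie in [0, 1]")
    if not acc_disp:
        return []
    fused = [ofs_disp[0]]  # assumption: start from the optical-flow reading
    for k in range(1, len(acc_disp)):
        # high-pass path: propagate the previous estimate with the accelerometer increment
        predicted = fused[-1] + (acc_disp[k] - acc_disp[k - 1])
        # low-pass path: pull the estimate toward the optical-flow displacement
        fused.append(alpha * predicted + (1.0 - alpha) * ofs_disp[k])
    return fused


if __name__ == "__main__":
    acc = [0.0, 0.11, 0.23, 0.37, 0.52]  # hypothetical drifting accelerometer estimate [cm]
    ofs = [0.0, 0.10, 0.20, 0.30, 0.40]  # hypothetical optical-flow estimate [cm]
    print(complementary_fuse(acc, ofs, alpha=0.98))
```

With sample period dt, the crossover time constant is approximately τ = α·dt/(1 − α). For example, α = 0.98 at dt = 0.01 s gives τ ≈ 0.49 s. Setting α = 0 reproduces the optical-flow series. Setting α = 1 follows only the accelerometer increments.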

3. Results

4. Discussion

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Olinski, M.; Gronowicz, A.; Ceccarelli, M.; Cafolla, D. Human Motion Characterization Using Wireless Inertial Sensors. In New Advances in Mechanisms, Mechanical Transmissions and Robotics; Corves, B., Lovasz, E.C., Hüsing, M., Maniu, I., Gruescu, C., Eds.; Springer: Cham, Switzerland, 2017; pp. 401–408.

- Aircraft Rotations Body Axes—National Aeronautics and Space Administration. Available online: https://www.grc.nasa.gov/www/k-12/airplane/rotations.html (accessed on 21 December 2020).

- Koenderink, J.J.; van Doorn, A.J. Facts on optic flow. Biol. Cybern. 1987, 56, 247–254.

- Raharijaona, T.; Serres, J.; Vanhoutte, E.; Ruffier, F. Toward an insect-inspired event-based autopilot combining both visual and control events. In Proceedings of the 3rd International Conference on Event-Based Control, Communication and Signal Processing (EBCCSP), Funchal, Portugal, 24–26 May 2017; pp. 1–7.

- Sanada, A.; Ishii, K.; Yagi, T. Self-Localization of an Omnidirectional Mobile Robot Based on an Optical Flow Sensor. J. Bionic Eng. 2010, 7, 172–176.

- Lee, S.; Song, J. Mobile Robot Localization Using Optical Flow Sensors. Int. J. Control Autom. Syst. 2004, 2, 485–493.

- Dahmen, H.; Mallot, H.A. Odometry for ground moving agents by optic flow recorded with optical mouse chips. Sensors 2014, 14, 21045–21064.

- Mafrica, S.; Servel, A.; Ruffier, F. Minimalistic optic flow sensors applied to indoor and outdoor visual guidance and odometry on a car-like robot. Bioinspir. Biomim. 2016, 11, 066007.

- Campbell, J.; Sukthankar, R.; Nourbakhsh, I. Techniques for evaluating optical flow for visual odometry in extreme terrain. Proc. IEEE Int. Conf. Intell. Robot. Syst. 2004, 4, 3704–3711.

- Ross, R.; Devlin, J. Analysis of real-time velocity compensation for outdoor optical mouse sensor odometry. In Proceedings of the 11th International Conference on Control Automation Robotics & Vision, Singapore, 7–10 December 2010; pp. 839–843.

- Yi, D.; Lee, T.; Cho, D. Afocal Optical Flow Sensor for Reducing Vertical Height Sensitivity in Indoor Robot Localization and Navigation. Sensors 2015, 15, 11208–11221.

- Yi, D.; Lee, T.; Cho, D. Afocal optical flow sensor for mobile robot odometry. In Proceedings of the 15th International Conference on Control, Automation and Systems (ICCAS), Busan, Korea, 13–16 October 2015; pp. 1393–1397.

- Tajti, F.; Szayer, G.; Kovács, B.; Barna, P.; Korondi, P. Optical flow based odometry for mobile robots supported by multiple sensors and sensor fusion. Automatika 2016, 57, 201–211.

- Shen, C.; Bai, Z.; Cao, H.; Xu, K.; Wang, C.; Zhang, H.; Wang, D.; Tang, J.; Liu, J. Optical Flow Sensor/INS/Magnetometer Integrated Navigation System for MAV in GPS-Denied Environment. J. Sens. 2016, 2016, 6105803.

- RoboteQ—OFS for Mobile Robots. Available online: www.roboteq.com (accessed on 21 December 2020).

- Zhu, R.; Wang, Y.; Yu, B.; Gan, X.; Jia, H.; Wang, B. Enhanced Heuristic Drift Elimination with Adaptive Zero-Velocity Detection and Heading Correction Algorithms for Pedestrian Navigation. Sensors 2020, 20, 951.

- Qiu, S.; Yang, Y.; Hou, J.; Ji, R.; Hu, H.; Wang, Z. Ambulatory estimation of 3D walking trajectory and knee joint angle using MARG Sensors. In Proceedings of the Fourth edition of the International Conference on the Innovative Computing Technology (INTECH 2014), Luton, UK, 13–15 August 2014; ISBN 978-1-4799-4233-6.

- Wang, Y.; Li, X.; Zou, J. A Foot-Mounted Inertial Measurement Unit (IMU) Positioning Algorithm Based on Magnetic Constraint. Sensors 2018, 18, 741.

- Chen, W.; Chen, R.; Chen, X.; Zhang, X.; Chen, Y. Comparison of EMG-based and Accelerometer-based Speed Estimation Methods in Pedestrian Dead Reckoning. J. Navig. 2011, 64, 265–280.

- Diaz, E.M. Inertial Pocket Navigation System: Unaided 3D Positioning. Sensors 2015, 15, 9156–9178.

- Ma, M.; Song, Q.; Gu, Y.; Li, Y.; Zhou, Z. An Adaptive Zero Velocity Detection Algorithm Based on Multi Sensor Fusion for a Pedestrian Navigation System. Sensors 2018, 18, 3261.

- Wu, Y.; Zhu, H.; Du, Q.; Tang, S. A Survey of the Research Status of Pedestrian Dead Reckoning Systems Based on Inertial Sensors. Int. J. Autom. Comput. 2019, 16, 65–83.

- Wang, Z.; Zhao, H.; Qiu, S.; Gao, Q. Stance-Phase detection for ZUPT-Aided foot-Mounted pedestrian navigation system. IEEE/ASME Trans. Mechatron. 2015, 20, 3170–3181.

- Ursel, T.W. Object Displacement Estimation with the Use of Microelectromechanical Accelerometer. In Proceedings of the International Conference MSM, Bialystok, Poland, 1–3 July 2020; pp. 1–4.

- Park, J.; Sim, S.; Jung, H.; Spencer, B. Development of a Wireless Displacement Measurement System Using Acceleration Responses. Sensors 2013, 13, 8377–8392.

- Zhou, Z.; Yang, S.; Ni, Z.; Qian, W.; Gu, C.; Cao, Z. Pedestrian Navigation Method Based on Machine Learning and Gait Feature Assistance. Sensors 2020, 20, 1530.

- Ceron, J.D.; Martindale, C.; López, D.M.; Kluge, F.; Eskofier, B. Indoor Trajectory Reconstruction of Walking, Jogging, and Running Activities Based on a Foot-Mounted Inertial Pedestrian Dead-Reckoning System. Sensors 2020, 20, 651.

- Kang, X.; Huang, B.; Qi, G. A Novel Walking Detection and Step Counting Algorithm Using Unconstrained Smartphones. Sensors 2018, 18, 297.

- Ho, N.H.; Truong, P.H.; Jeong, G.M. Step-Detection and Adaptive Step-Length Estimation for Pedestrian Dead-Reckoning at Various Walking Speeds Using a Smartphone. Sensors 2016, 16, 1423.

- Ursel, T.; Olinski, M. Estimation of objects instantaneous displacement using inertial sensors. IJES 2016, 4, 56–64. (In Polish)

- ADXL345 Accelerometer Datasheet. Available online: https://www.analog.com/media/en/technical-documentation/data-sheets/ADXL345.pdf (accessed on 21 December 2020).

- PMW3901 Optical Flow Sensor Datasheet. Available online: https://wiki.bitcraze.io/_media/projects:crazyflie2:expansionboards:pot0189-pmw3901mb-txqt-ds-r1.00-200317_20170331160807_public.pdf (accessed on 21 December 2020).

- ABC-RC.pl—ADXL345. Available online: https://abc-rc.pl/product-pol-7180-Akcelerometr-3-osiowy-GY-291-na-ADXL345-miernik-przyspieszenia.html (accessed on 21 December 2020).

- Amazon—PMW3901 Optical Flow Sensor Module. Available online: https://www.amazon.com/PMW3901-Optical-Sensor-Current-Translation/dp/B082BFMPG8 (accessed on 21 December 2020).

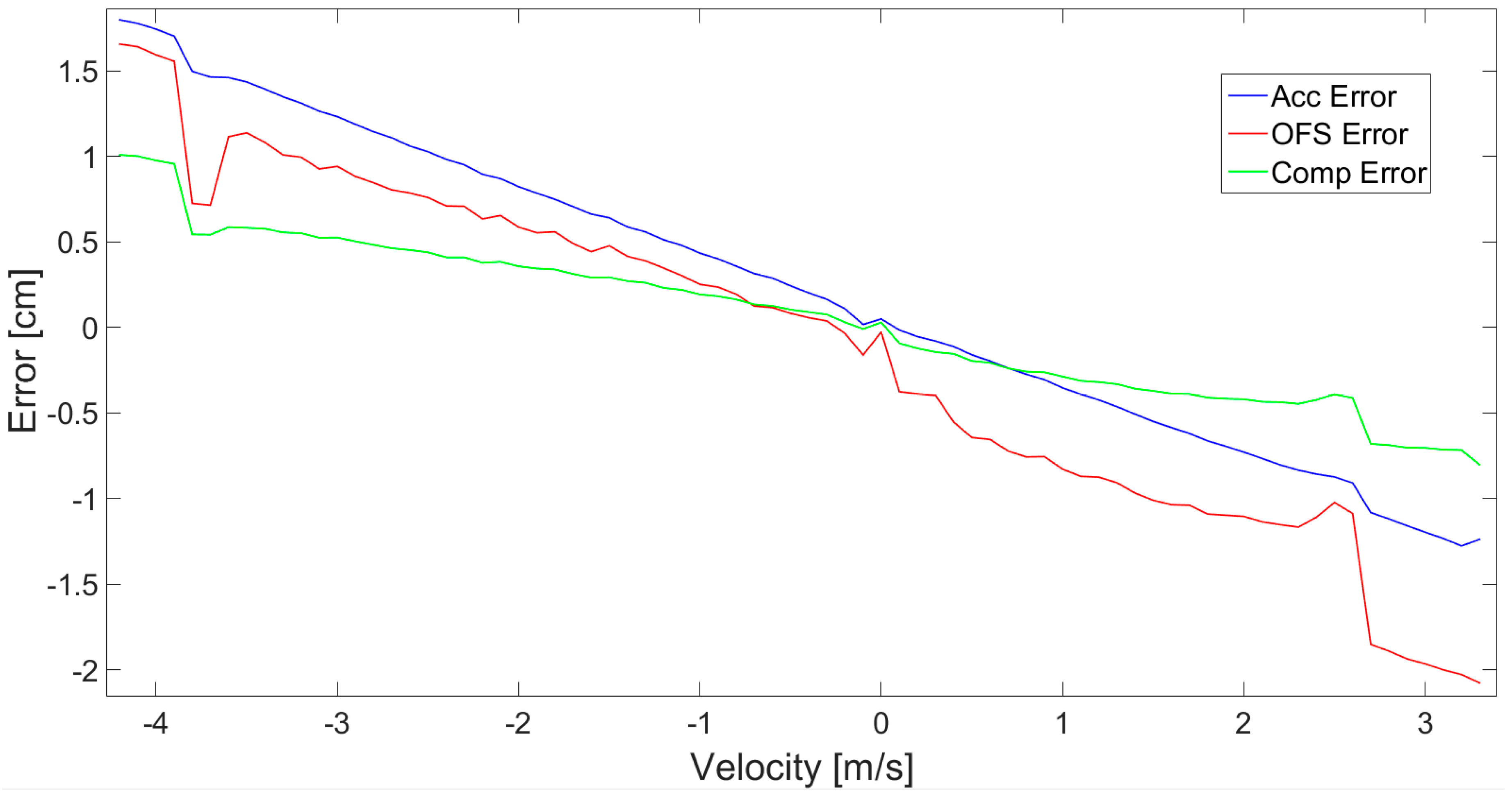

| Experimental Case | Max Error [cm] | Mean Error [cm] | Error Variance [cm] |
|---|---|---|---|
| Accelerometer | 2.61 | 0.1165 | 0.3245 |
| Optical flow sensor (OFS) | 2.132 | -0.812 | 0.6448 |
| Fusion | 1.0142 | 0.0331 | 0.1266 |
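
The table reports the maximum, average (mean), and variance of the displacement error for each case. A minimal sketch shows how such statistics could be computed from an estimated and a reference displacement series. It assumes three definitions, none confirmed by the source:

- the maximum is taken over absolute errors;
- the average is taken over signed errors, consistent with the negative value listed for the OFS;
- the variance is the population variance.

The function name `error_metrics` is hypothetical.

```python
import statistics
from typing import Dict, Sequence


def error_metrics(estimated: Sequence[float], reference: Sequence[float]) -> Dict[str, float]:
    """Summary statistics of the error (estimated - reference), in the inputs' units."""
    if len(estimated) != len(reference) or not estimated:
        raise ValueError("inputs must be non-empty and of equal length")
    errors = [e - r for e, r in zip(estimated, reference)]
    return {
        # assumption: maximum of the absolute error
        "max_abs": max(abs(e) for e in errors),
        # assumption: mean of the signed error, so a consistent bias shows its sign
        "mean": statistics.fmean(errors),
        # population variance; for errors in cm its unit is cm^2
        "variance": statistics.pvariance(errors),
    }


if __name__ == "__main__":
    est = [0.0, 1.2, 2.1, 2.9]  # hypothetical estimated displacement [cm]
    ref = [0.0, 1.0, 2.0, 3.0]  # hypothetical reference displacement [cm]
    print(error_metrics(est, ref))
```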

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Ursel, T.; Olinski, M. Displacement Estimation Based on Optical and Inertial Sensor Fusion. Sensors 2021, 21, 1390. https://doi.org/10.3390/s21041390