Social Collective Attack Model and Procedures for Large-Scale Cyber-Physical Systems

Abstract

1. Introduction

- We introduce a model of the social collective attack on CPS (SCAC), which targets physical systems by fully exploiting the characteristics of cyber-physical-social interactions and the integration of infrastructures such as smart grids.

- We extend MITRE ATT&CK [16], the most widely used framework for modeling cyber adversary behavior, to cover the cyber, physical, and social domains. In other words, we provide a systematic framework for describing security threats that are launched from the social domain, penetrate the cyber domain, and target the physical domain.

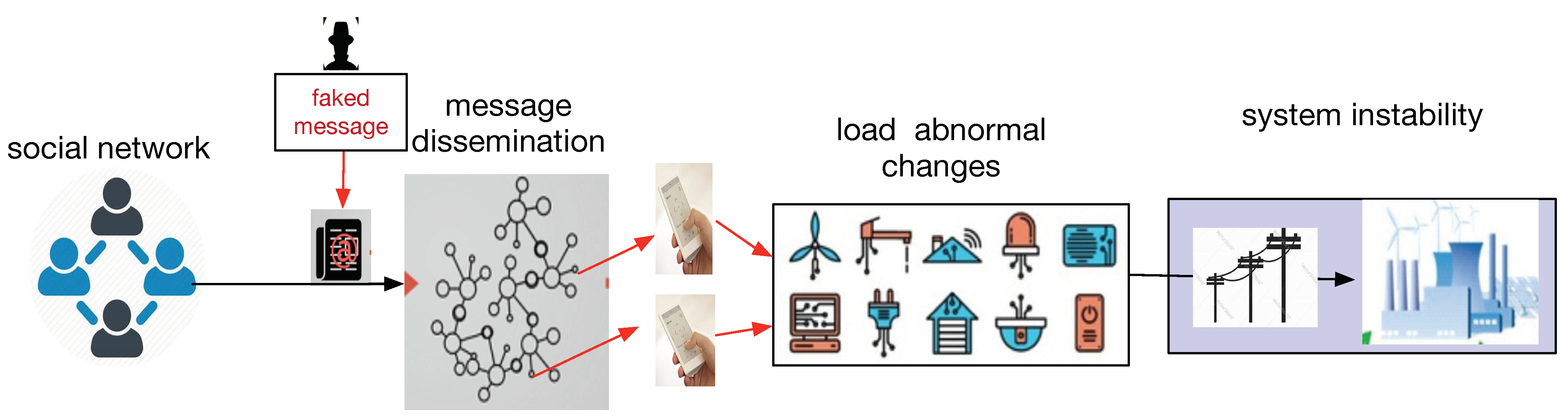

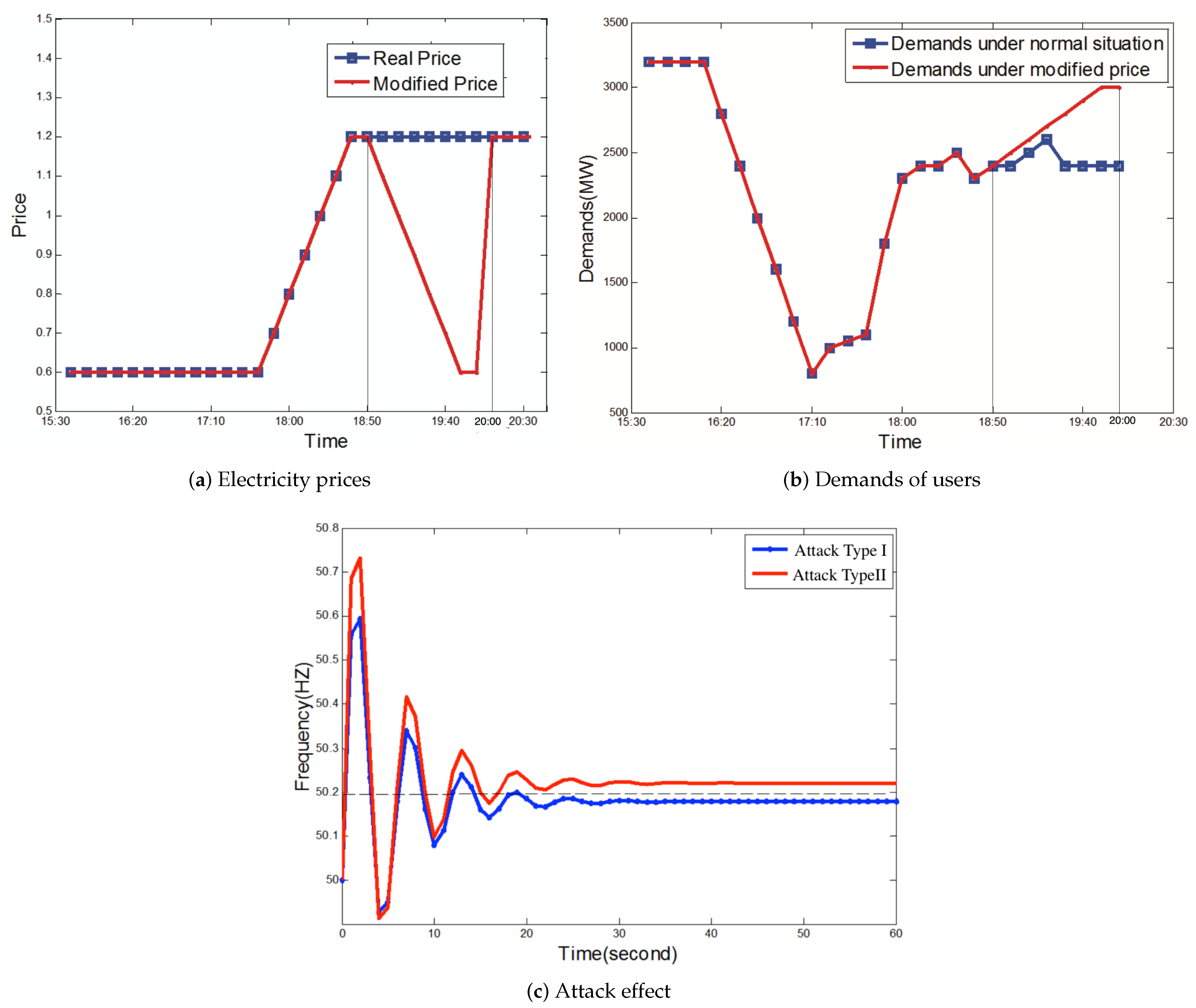

- We give an extensive analysis of an implementation of SCAC in a smart grid, called the Drastic Demand Change (DDC) attack, which uses disinformation to manipulate a large number of users into modifying their electricity consumption; the resulting sudden change in power load demand destabilizes the power grid. Simulation and experimental results show that the DDC attack can make a frequency deviation last longer than the permitted threshold, leading to the disconnection of power generators from the grid. Of the two methods for realizing the DDC attack, the reverse demands attack achieves a greater impact than the fast attack.
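
To make the instability mechanism concrete, the following is a minimal sketch, not the power system model used in this paper, assuming a single-area aggregated swing equation with a first-order droop governor. Every parameter value (inertia `H`, damping `D`, droop `R`, governor time constant `Tg`, the 50 Hz nominal frequency, the 0.2 Hz band, the demand change `DP`, and the reversal time `T_ATTACK`) is hypothetical. It compares a single-step demand increase (fast attack) with a slow decrease followed by an abrupt reversal to the same final demand (reverse demands attack), and reports the frequency nadir and the longest continuous time outside the band.

```python
"""Hypothetical single-area frequency response to a sudden demand change."""
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass(frozen=True)
class AreaParams:
    H: float = 5.0       # inertia constant [s] (hypothetical)
    D: float = 1.0       # load damping [pu/pu] (hypothetical)
    R: float = 0.05      # governor droop [pu] (hypothetical)
    Tg: float = 0.5      # governor/turbine time constant [s] (hypothetical)
    f_nom: float = 50.0  # nominal frequency [Hz] (assumed)


def simulate(load: Callable[[float], float], p: AreaParams = AreaParams(),
             t_end: float = 80.0, dt: float = 1e-3) -> List[Tuple[float, float]]:
    """Forward-Euler integration of
         2H d(df)/dt = dPm - dPL - D*df   (swing equation, per unit)
         Tg d(dPm)/dt = -dPm - df/R       (droop governor)
    Returns (time [s], frequency deviation [Hz]) samples."""
    df = dpm = 0.0
    trace = []
    for k in range(int(round(t_end / dt)) + 1):
        t = k * dt
        trace.append((t, df * p.f_nom))
        ddf = (dpm - load(t) - p.D * df) / (2 * p.H)
        ddpm = (-dpm - df / p.R) / p.Tg
        df, dpm = df + dt * ddf, dpm + dt * ddpm
    return trace


def longest_excursion(trace: List[Tuple[float, float]], band_hz: float) -> float:
    """Longest continuous time [s] during which |deviation| > band_hz."""
    longest = run = 0.0
    for (t0, f0), (t1, _) in zip(trace, trace[1:]):
        run = run + (t1 - t0) if abs(f0) > band_hz else 0.0
        longest = max(longest, run)
    return longest


DP, T_ATTACK, BAND_HZ = 0.06, 60.0, 0.2  # hypothetical values


def fast_attack(t: float) -> float:
    # Demand rises by DP in a single step at T_ATTACK.
    return DP if t >= T_ATTACK else 0.0


def reverse_demands_attack(t: float) -> float:
    # Demand drifts slowly down to -DP, then jumps to +DP at T_ATTACK:
    # same final demand as fast_attack, but the abrupt step is 2*DP.
    return -DP * t / T_ATTACK if t < T_ATTACK else DP


if __name__ == "__main__":
    for name, profile in (("fast", fast_attack), ("reverse", reverse_demands_attack)):
        tr = simulate(profile)
        nadir = min(f for _, f in tr)
        print(f"{name:8s} nadir={nadir:+.3f} Hz  "
              f"longest excursion={longest_excursion(tr, BAND_HZ):.2f} s")
```

With these hypothetical values the fast attack's nadir stays inside the band, while the reversal, whose abrupt step is twice as large, pushes the frequency outside it for a short interval. A protection scheme that disconnects generators after a sustained excursion would compare `longest_excursion` with its permitted duration.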

2. Related Work

2.1. Traditional Security Model of CPS

2.2. Attacks Initiated from Social Networks

2.3. Power System Attacks by Demand-Side Manipulation

- (1) Direct load control and related attack:

- (2) Indirect load control and related attack:

- (3) Social collective attack on the CPS:

3. Attack Model and Procedures

3.1. System Model of CPS Combined with the Social Domain

- Physical domain: The physical domain operates in the physical world and provides services to end users. Its elements include devices in the engineering domain, which interact with the cyber domain through sensors, actuators, and controllers. Sensors capture physical data and transmit it to the information system via communication channels. Actuators execute commands from controllers and act directly on the physical world. Controllers receive commands from the information system and convert these semantic commands into signals that the actuators can understand.

- Cyber domain: The cyber domain comprises the information system, which transmits the state of the physical system and sends control commands to the engineering equipment. Social media, which carries communication among end users, is also built on this information system. For electrical appliances, smart meters and smart home apps operate mainly in the cyber domain and interact with the physical systems.

- Social domain: The social domain contains two main roles: operators and CPS users. This paper focuses on CPS users, who receive services from the CPS and provide feedback to the system. The behavior of power users affects the operation of the physical system, and their opinions can in turn be influenced by communication among people on social media.
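
The following hypothetical sketch traces the coupling path described above: a social-domain user changes consumption after believing a post, a smart meter in the cyber domain reports it, and a controller converts a semantic command into a numeric set-point for the physical side. All class names, the one-time behaviour rule, and the `follow_load` command are illustrative assumptions, not elements of the model in this paper.

```python
"""Hypothetical sketch of the social -> cyber -> physical coupling path."""
from dataclasses import dataclass
from typing import List


# Social domain ---------------------------------------------------------
@dataclass
class PowerUser:
    """A CPS user whose consumption can be swayed by social media."""
    user_id: int
    demand_kw: float
    influenced: bool = False

    def receive_post(self, believes: bool, change_kw: float) -> None:
        # Hypothetical behaviour rule: a user who believes a post shifts
        # consumption once by change_kw (never below zero).
        if believes and not self.influenced:
            self.influenced = True
            self.demand_kw = max(0.0, self.demand_kw + change_kw)


# Cyber domain ----------------------------------------------------------
@dataclass
class SmartMeter:
    """Sensor role: measures one user's physical consumption."""
    user: PowerUser

    def read_kw(self) -> float:
        return self.user.demand_kw


@dataclass
class InformationSystem:
    """Collects meter readings that describe the physical system state."""
    meters: List[SmartMeter]

    def aggregate_load_kw(self) -> float:
        return sum(m.read_kw() for m in self.meters)


# Physical domain -------------------------------------------------------
@dataclass
class Controller:
    """Converts a semantic command into a numeric set-point for an actuator."""
    capacity_kw: float

    def to_setpoint(self, command: str, load_kw: float) -> float:
        # Hypothetical command vocabulary: only "follow_load" is understood.
        if command == "follow_load":
            return min(load_kw, self.capacity_kw)
        raise ValueError(f"unknown command: {command}")


users = [PowerUser(i, demand_kw=2.0) for i in range(100)]
info = InformationSystem([SmartMeter(u) for u in users])
before_kw = info.aggregate_load_kw()
for u in users[:40]:               # 40 users believe the same post
    u.receive_post(believes=True, change_kw=1.5)
after_kw = info.aggregate_load_kw()
setpoint_kw = Controller(capacity_kw=250.0).to_setpoint("follow_load", after_kw)
print(before_kw, after_kw, setpoint_kw)  # 200.0 260.0 250.0
```

Here 40 of 100 users raise consumption by 1.5 kW each, so the aggregate reported through the cyber domain rises from 200 kW to 260 kW, which exceeds the hypothetical 250 kW capacity the controller can dispatch.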

3.2. Model and Steps of Social Collective Attack on CPS

3.2.1. Reconnaissance and Planning

3.2.2. Disinformation Fabrication

- (1) Price-based approach:

- (2) Incentive-based approach:

- (3) Loss-avoidance approach:

- (4) Environment-aware approach:

3.2.3. Disinformation Propagation and Amplification on Social Media

3.2.4. Disinformation Exploitation

3.2.5. Evaluation and Calibration

3.3. Disinformation Fabrication and Exploitation Procedures

3.3.1. Disinformation Based on the Fast Attack

3.3.2. Disinformation Based on Reverse Demands Attack

- Gradually change demands: From attack action to , attackers gradually shift users' demands in one direction. Throughout this process, the changing demands must not trigger any alert, and the system must remain stable under the new demands.

- Abruptly change demands in reverse: Once the attacker controls a large number of users, action drastically changes their demands in the direction opposite to the impact of . For example, if attack action decreases users' demands, action increases them.
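
A minimal numerical sketch of the two phases, assuming (hypothetically) that the gradual phase consists of equal small decrements of aggregate demand and that the operator raises an alert only when the demand change between consecutive actions exceeds a fixed limit. With the values below, only the final action exceeds the limit, and its step (100 MW) equals the accumulated gradual shift plus the intended increase, twice the 50 MW step a fast attack with the same target would produce.

```python
"""Hypothetical demand schedule for the two phases of a reverse demands attack."""
from typing import List


def reverse_demands_schedule(base_mw: float, n_gradual: int, step_mw: float,
                             reverse_mw: float) -> List[float]:
    """Aggregate demand after each attack action (index 0 = before the attack).

    Gradual phase: n_gradual actions each lower demand by step_mw.
    Reverse phase: one final action raises demand to base_mw + reverse_mw.
    """
    schedule = [base_mw - step_mw * k for k in range(n_gradual + 1)]
    schedule.append(base_mw + reverse_mw)
    return schedule


def alert_indices(schedule: List[float], max_change_mw: float) -> List[int]:
    """Actions whose single-interval change exceeds a hypothetical alert limit."""
    return [i for i in range(1, len(schedule))
            if abs(schedule[i] - schedule[i - 1]) > max_change_mw]


schedule = reverse_demands_schedule(base_mw=1000.0, n_gradual=10,
                                    step_mw=5.0, reverse_mw=50.0)
print(schedule[-2], schedule[-1])          # 950.0 1050.0
print(alert_indices(schedule, 20.0))       # [11]
```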

4. Formal Description and Evaluation of the SCAC Model

4.1. Formal Description of the Physical System Instability Mechanism

4.2. Formal Evaluation of the SCAC Attack Effect

4.2.1. Search and Analyze the Social Relationships

4.2.2. Estimate the Number of Infected Users

4.2.3. Estimate Demand Change When Manipulated

5. Simulation Analysis of SCAC in a Smart Grid

5.1. Power System Model and Power Price Model

5.2. Simulation Evaluation

- Attack Type I: attackers only propagate the false notification on social media.

- Attack Type II: in addition to the false notification, attackers propagate false price messages that gradually alter the price users believe is in effect, until everyone eventually believes the false prices.
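
To illustrate how the reach of a false notification (as in Attack Type I) might be estimated, here is a sketch that swaps in a standard discrete-time susceptible-infected (SI) model on an Erdős–Rényi random graph; it is not the estimation procedure of Section 4.2. The graph size, average degree, transmission probability `beta`, the seed users, the fraction of reached users who act, and the per-user demand change are all hypothetical.

```python
"""Hypothetical SI spread of a false notification on a random social graph."""
import random
from typing import Dict, List


def random_graph(n: int, avg_degree: float, rng: random.Random) -> Dict[int, List[int]]:
    """Erdos-Renyi G(n, p) adjacency lists with p = avg_degree / (n - 1)."""
    p = avg_degree / (n - 1)
    adj: Dict[int, List[int]] = {i: [] for i in range(n)}
    for i in range(n):
        for j in range(i + 1, n):
            if rng.random() < p:
                adj[i].append(j)
                adj[j].append(i)
    return adj


def si_spread(adj: Dict[int, List[int]], seeds: List[int], beta: float,
              steps: int, rng: random.Random) -> List[int]:
    """Discrete-time SI model: at each step every infected user forwards the
    message to each uninfected neighbour independently with probability beta.
    Returns the number of infected users after each step (index 0 = seeds)."""
    infected = set(seeds)
    history = [len(infected)]
    for _ in range(steps):
        newly = {v for u in infected for v in adj[u]
                 if v not in infected and rng.random() < beta}
        infected |= newly
        history.append(len(infected))
    return history


rng = random.Random(42)
adj = random_graph(1000, avg_degree=8.0, rng=rng)
history = si_spread(adj, seeds=[0, 1, 2, 3, 4], beta=0.05, steps=20, rng=rng)
reached = history[-1]
act_fraction, change_kw = 0.3, 1.5        # hypothetical behaviour parameters
print(reached, reached * act_fraction * change_kw)  # users reached, expected kW shift
```

A user adjacent to several infected neighbours gets one independent chance per neighbour per step, which is the usual SI assumption; the demand estimate simply multiplies the number of users reached by an assumed acting fraction and per-user change.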

5.2.1. The Influence of Disinformation Contents on the Attack Effect

5.2.2. The Accuracy of Impact Evaluation

5.2.3. The Effectiveness of the Fast Attack and the Reverse Demands Attack

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- [1] Cho, C.; Chung, W.; Kuo, S. Cyberphysical Security and Dependability Analysis of Digital Control Systems in Nuclear Power Plants. IEEE Trans. Syst. Man Cybern. Syst. 2016, 46, 356–369.

- [2] Hu, F.; Lu, Y.; Vasilakos, A.V.; Hao, Q.; Ma, R.; Patil, Y.; Zhang, T.; Lu, J.; Xiong, N.N. Robust Cyber-Physical Systems: Concept, models, and implementation. Future Gener. Comput. Syst. FGCS 2016, 56, 449–475.

- [3] Mitchell, R.; Chen, I. Modeling and Analysis of Attacks and Counter Defense Mechanisms for Cyber Physical Systems. IEEE Trans. Reliab. 2016, 65, 350–358.

- [4] Nicholson, A.; Webber, S.; Dyer, S.; Patel, T.; Janicke, H. SCADA security in the light of Cyber-Warfare. Comput. Secur. 2012, 31, 418–436.

- [5] Liu, Y.; Ning, P.; Reiter, M.K. False Data Injection Attacks against State Estimation in Electric Power Grids. ACM Trans. Inf. Syst. Secur. 2011, 14.

- [6] Li, W.; Xie, L.; Deng, Z.; Wang, Z. False sequential logic attack on SCADA system and its physical impact analysis. Comput. Secur. 2016, 58, 149–159.

- [7] Zhou, Y.; Yu, F.R.; Chen, J.; Kuo, Y. Cyber-Physical-Social Systems: A State-of-the-Art Survey, Challenges and Opportunities. IEEE Commun. Surv. Tutor. 2020, 22, 389–425.

- [8] Jiang, L.; Tian, H.; Xing, Z.; Wang, K.; Zhang, K.; Maharjan, S.; Gjessing, S.; Zhang, Y. Social-aware energy harvesting device-to-device communications in 5G networks. IEEE Wirel. Commun. 2016, 23, 20–27.

- [9] Sun, H.; Wang, Z.; Wang, J.; Huang, Z.; Carrington, N.; Liao, J. Data-Driven Power Outage Detection by Social Sensors. IEEE Trans. Smart Grid 2016, 7, 2516–2524.

- [10] Xiong, G.; Zhu, F.; Liu, X.; Dong, X.; Huang, W.; Chen, S.; Zhao, K. Cyber-physical-social system in intelligent transportation. IEEE/CAA J. Autom. Sin. 2015, 2, 320–333.

- [11] Molina-García, A.; Bouffard, F.; Kirschen, D.S. Decentralized Demand-Side Contribution to Primary Frequency Control. IEEE Trans. Power Syst. 2011, 26, 411–419.

- [12] Noureddine, M.; Keefe, K.; Sanders, W.H.; Bashir, M. Quantitative Security Metrics with Human in the Loop. In Proceedings of the 2015 Symposium and Bootcamp on the Science of Security—HotSoS’15, Urbana, IL, USA, 21–22 April 2015; Association for Computing Machinery: New York, NY, USA, 2015.

- [13] Furnell, S.; Clarke, N. Power to the people? The evolving recognition of human aspects of security. Comput. Secur. 2012, 31, 983–988.

- [14] Mishra, S.; Li, X.; Pan, T.; Kuhnle, A.; Thai, M.T.; Seo, J. Price Modification Attack and Protection Scheme in Smart Grid. IEEE Trans. Smart Grid 2017, 8, 1864–1875.

- [15] Soltan, S.; Mittal, P.; Poor, H.V. BlackIoT: IoT Botnet of High Wattage Devices Can Disrupt the Power Grid. In Proceedings of the 27th USENIX Conference on Security Symposium—SEC’18, Baltimore, MD, USA, 15–17 August 2018; USENIX Association: Berkeley, CA, USA, 2018; pp. 15–32.

- [16] MITRE ATT&CK Knowledge Base. Available online: https://attack.mitre.org (accessed on 26 December 2020).

- [17] ATT&CK for Industrial Control Systems. Available online: https://collaborate.mitre.org/attackics/index.php/Main_Page (accessed on 26 December 2020).

- [18] Adversarial Misinformation and Influence Tactics and Techniques. Available online: https://github.com/misinfosecproject/amitt_framework (accessed on 26 December 2020).

- [19] Al Faruque, M.A.; Chhetri, S.R.; Canedo, A.; Wan, J. Acoustic Side-Channel Attacks on Additive Manufacturing Systems. In Proceedings of the 2016 ACM/IEEE 7th International Conference on Cyber-Physical Systems (ICCPS), Vienna, Austria, 11–14 April 2016; pp. 1–10.

- [20] The Spread of WeChat Rumors Should Be Cautious. Available online: https://www.sohu.com/a/55755551_114882 (accessed on 26 December 2020). (In Chinese).

- [21] Bompard, E.; Napoli, R.; Xue, F. Social and Cyber Factors Interacting over the Infrastructures: A MAS Framework for Security Analysis. In Intelligent Infrastructures; Negenborn, R.R., Lukszo, Z., Hellendoorn, H., Eds.; Springer: Dordrecht, The Netherlands, 2010; pp. 211–234.

- [22] Karnouskos, S. Stuxnet worm impact on industrial cyber-physical system security. In Proceedings of the IECON 2011—37th Annual Conference of the IEEE Industrial Electronics Society, Melbourne, Australia, 7–10 November 2011; pp. 4490–4494.

- [23] Wang, S.; Wang, D.; Su, L.; Kaplan, L.; Abdelzaher, T.F. Towards Cyber-Physical Systems in Social Spaces: The Data Reliability Challenge. In Proceedings of the 2014 IEEE Real-Time Systems Symposium, Rome, Italy, 2–5 December 2014; pp. 74–85.

- [24] Tang, D.; Fang, Y.; Zio, E.; Ramirez-Marquez, J.E. Resilience of Smart Power Grids to False Pricing Attacks in the Social Network. IEEE Access 2019, 7, 80491–80505.

- [25] Chen, C.; Wang, J.; Kishore, S. A Distributed Direct Load Control Approach for Large-Scale Residential Demand Response. IEEE Trans. Power Syst. 2014, 29, 2219–2228.

- [26] Maharjan, S.; Zhu, Q.; Zhang, Y.; Gjessing, S.; Başar, T. Demand Response Management in the Smart Grid in a Large Population Regime. IEEE Trans. Smart Grid 2016, 7, 189–199.

- [27] University of Oxford. Social Media Manipulation Rising Globally, New Report Warns. Available online: http://comprop.oii.ox.ac.uk/research/cybertroops2018/ (accessed on 16 November 2020).

- [28] Amini, S.; Pasqualetti, F.; Mohsenian-Rad, H. Dynamic Load Altering Attacks Against Power System Stability: Attack Models and Protection Schemes. IEEE Trans. Smart Grid 2018, 9, 2862–2872.

- [29] Pan, T.; Mishra, S.; Nguyen, L.N.; Lee, G.; Kang, J.; Seo, J.; Thai, M.T. Threat From Being Social: Vulnerability Analysis of Social Network Coupled Smart Grid. IEEE Access 2017, 5, 16774–16783.

- [30] Li, D.; Chiu, W.Y.; Sun, H. Chapter 7—Demand Side Management in Microgrid Control Systems. In Microgrid; Mahmoud, M.S., Ed.; Butterworth-Heinemann: Oxford, UK, 2017; pp. 203–230.

- [31] Earth Hour. Available online: http://www.earthhour.org (accessed on 16 November 2020).

- [32] Karlova, N.A.; Fisher, K.E. A social diffusion model of misinformation and disinformation for understanding human information behaviour. Inf. Res. 2013, 18, 1–12.

- [33] Tambuscio, M.; Ruffo, G.; Flammini, A.; Menczer, F. Fact-Checking Effect on Viral Hoaxes: A Model of Misinformation Spread in Social Networks. In Proceedings of the 24th International Conference on World Wide Web—WWW’15 Companion, Florence, Italy, 18–22 May 2015; Association for Computing Machinery: New York, NY, USA, 2015; pp. 977–982.

- [34] Miller, D.L. Introduction to Collective Behavior and Collective Action; Waveland Press: Long Grove, IL, USA, 2013.

- [35] Zhou, J.; Liu, Z.; Li, B. Influence of network structure on rumor propagation. Phys. Lett. A 2007, 368, 458–463.

- [36] Short, J.A.; Infield, D.G.; Freris, L.L. Stabilization of Grid Frequency Through Dynamic Demand Control. IEEE Trans. Power Syst. 2007, 22, 1284–1293.

- [37] Zhao, C.; Topcu, U.; Low, S.H. Optimal Load Control via Frequency Measurement and Neighborhood Area Communication. IEEE Trans. Power Syst. 2013, 28, 3576–3587.

- [38] Mishra, S.; Li, X.; Kuhnle, A.; Thai, M.T.; Seo, J. Rate alteration attacks in smart grid. In Proceedings of the 2015 IEEE Conference on Computer Communications (INFOCOM), Kowloon, Hong Kong, 26 April–1 May 2015; pp. 2353–2361.

- [39] Jokar, P.; Arianpoo, N.; Leung, V.C.M. Electricity Theft Detection in AMI Using Customers’ Consumption Patterns. IEEE Trans. Smart Grid 2016, 7, 216–226.

- [40] Wasserman, S. Network Science: An Introduction to Recent Statistical Approaches. In Proceedings of the 15th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining—KDD’09, Paris, France, 28 June–1 July 2009; Association for Computing Machinery: New York, NY, USA, 2009; pp. 9–10.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Zhu, P.; Xun, P.; Hu, Y.; Xiong, Y. Social Collective Attack Model and Procedures for Large-Scale Cyber-Physical Systems. Sensors 2021, 21, 991. https://doi.org/10.3390/s21030991

Zhu P, Xun P, Hu Y, Xiong Y. Social Collective Attack Model and Procedures for Large-Scale Cyber-Physical Systems. Sensors. 2021; 21(3):991. https://doi.org/10.3390/s21030991

Chicago/Turabian Style: Zhu, Peidong, Peng Xun, Yifan Hu, and Yinqiao Xiong. 2021. "Social Collective Attack Model and Procedures for Large-Scale Cyber-Physical Systems" Sensors 21, no. 3: 991. https://doi.org/10.3390/s21030991

APA Style: Zhu, P., Xun, P., Hu, Y., & Xiong, Y. (2021). Social Collective Attack Model and Procedures for Large-Scale Cyber-Physical Systems. Sensors, 21(3), 991. https://doi.org/10.3390/s21030991