1. Introduction

The rapid development and effectiveness of convolutional neural networks (CNNs) have contributed greatly to the fast-growing number of computer vision applications and tools. As the performance and maturity of computer vision technologies continue to improve, we can use them to enhance or assist human tasks under certain circumstances in order to reduce safety risks, increase work efficiency, and cut costs.

One growing trend in applying computer vision technologies is remote visual inspection (RVI) [1,2], where a human inspector inspects a video instead of being physically present on site. This is particularly beneficial when the assets to be inspected are difficult to access or located in dangerous environments. Typical applications of RVI include aircraft and spacecraft engines, oil and gas pipelines, and nuclear power stations.

In recent years, applying RVI to ship inspection has attracted great interest in the maritime sector, in particular for inspections inside cargo and ballast water tanks. Regular ship inspections are required by the International Maritime Organization (IMO) with a specific purpose: “All ships must be surveyed and verified so that relevant certificates can be issued to establish that the ships are designed, constructed, maintained and managed in compliance with the requirements of IMO” [3]. Traditionally, human inspectors travel to the location of the ship and manually inspect all required areas. The whole process is costly, and the inspectors are often exposed to hazards such as a shortage of oxygen in the tank, chemical toxicity, or other harsh conditions. To reduce inspection costs and increase personnel safety, leading ship classification societies (a ship classification society (https://en.wikipedia.org/wiki/Ship_classification_society) is a non-governmental organization that establishes and maintains technical standards for the construction and operation of ships and offshore structures) such as DNV GL (www.dnvgl.com; an internationally accredited registrar and classification society headquartered in Høvik, Norway), ABS (https://ww2.eagle.org/en.html; an American maritime classification society), and Lloyd’s Register (https://www.lr.org/en/; a technical and business services organization and maritime classification society registered in England and Wales) have been actively offering remote inspection services [4,5,6]. Remote inspection has become increasingly popular during the coronavirus crisis of 2020 [7]. However, current practice in remote ship inspection still requires human inspectors to watch all of the streamed videos and manually identify possible defects. The inspectors have to concentrate on several hours of inspection video, of which often only a few minutes capture actual defects such as cracks, corrosion, deformation, and leakage. Such human-based visual inspection of videos is tedious, and the inspectors may therefore overlook some defects.

To further improve the efficiency of remote ship inspection and increase inspection coverage, a promising approach is to integrate computer vision technologies into a drone-based ship inspection process to automate defect detection [8]. One pragmatic scenario is that the inspection videos taken by a drone flying in the ship tanks are streamed to cloud storage operated by the classification society. The stored videos are then processed by a computer vision-based inspection system that automatically detects a variety of defects typically occurring in ships. The detected defects are visualized in a form that humans can readily interpret, such as a bounding box annotating each defect. Among the typical defects, cracks are the most severe and have to be repaired before the ship can continue operating.

Another issue that arises when defects are detected automatically in videos rather than in single images is that many video frames capture the same defect, which is therefore detected many times. To save inspection time, it is important to recognize the same defect across video frames so that fewer frames need to be inspected. An object tracking approach therefore adds value to the automated inspection system by further reducing the number of video frames to be inspected.

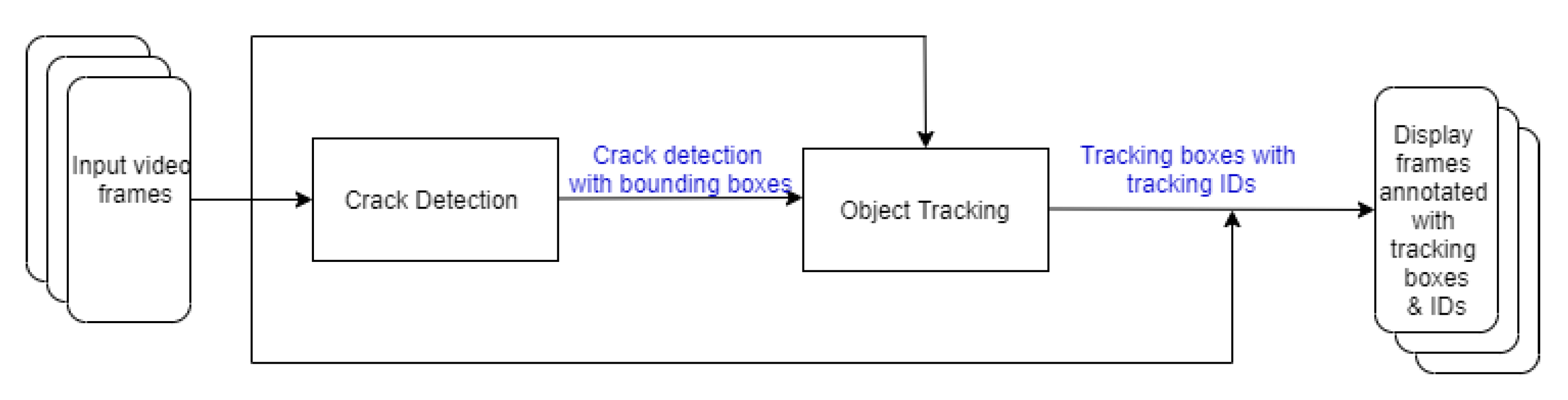

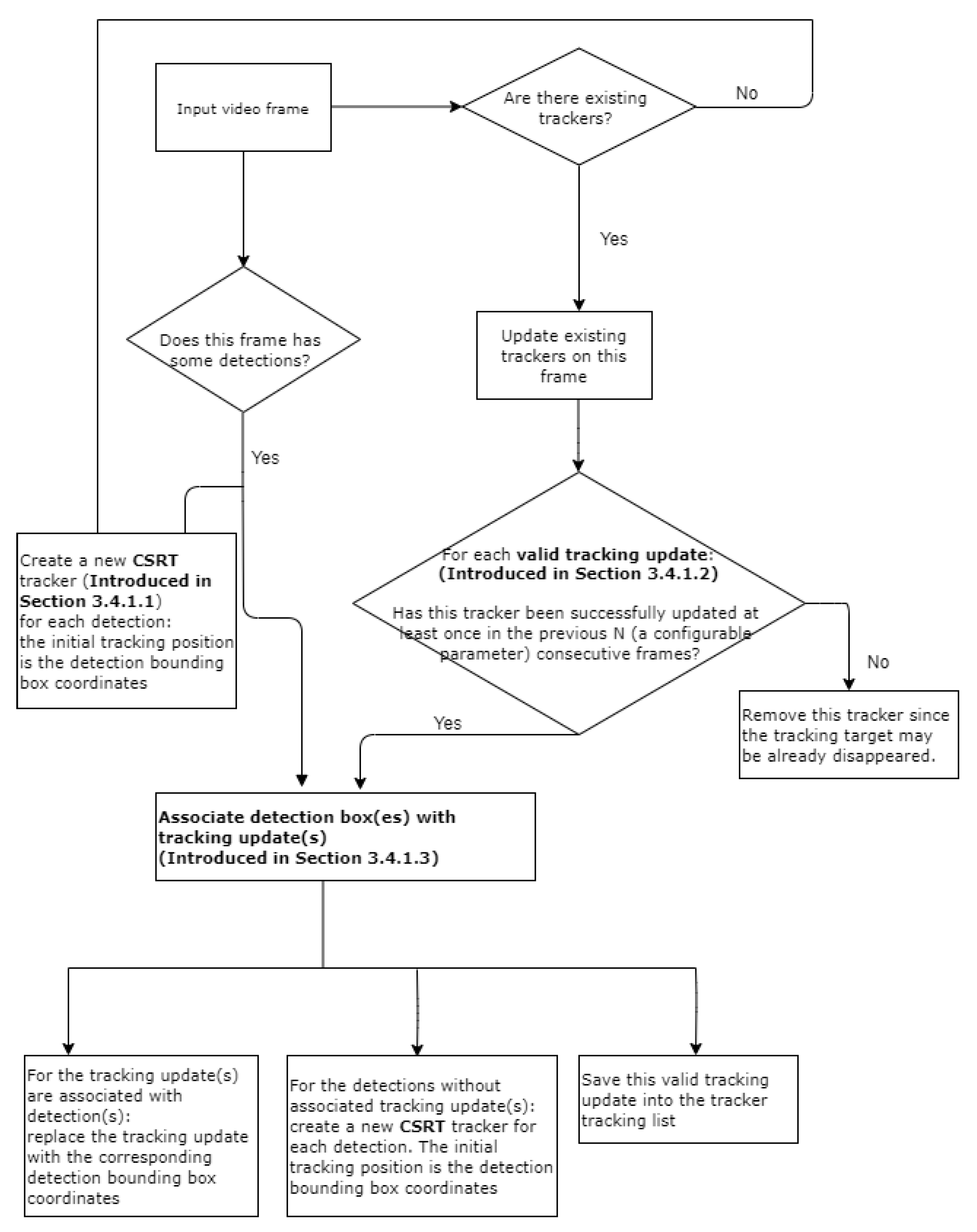

In this paper, we present a detection-based object tracking system which automates the detection of cracks in ship inspection videos and helps human inspectors focus their attention on a subset of the video frames for analysis and decision-making. The system consists of two main modules applied in sequence. The first is a CNN-based object detection model which detects cracks captured in ship inspection images and videos. The second is an object tracking module which tracks each crack found by the detection model over consecutive frames and maintains a unique index for the same crack.

The proposed detection-based crack tracking system has several distinguishing features, which constitute our main contributions, listed below.

The remainder of this paper is organized as follows. Related work is briefly summarized in Section 2. In Section 3, the proposed crack detection system is described in detail. The experimental results are presented in Section 4. The discussion and conclusions are presented in Section 5 and Section 6, respectively.

2. Related Work

There have been attempts to use image processing techniques and deep learning approaches to automate the detection of cracks in ship structures. A percolation-based crack detector was proposed to identify crack pixels in an image according to a set of rules [12]. This detector requires several parameters to be tuned to fit its rules, so its generalizability is unclear.

A CNN-based method was proposed to detect cracks in hull-stiffened plates [13]. The CNN model was trained on synthetic data which emulated only a limited set of crack locations and lengths. It is therefore not applicable to detecting cracks that occur in ships in operation.

In this paper, we investigate the feasibility of a CNN-based detection model trained on real crack images captured by our ship inspectors. In addition, the object detection model does not require parameter tuning when it is applied to unseen images.

2.1. Object Detection

Object detection is one of the common object recognition tasks. Its main objective is to locate specific objects in an image with bounding boxes and to classify the located objects [14].

CNN-based object detection has proven to be an effective solution for numerous computer vision applications. The most widely deployed object detection application is facial recognition [15,16,17]. Other well-known applications include video surveillance, image retrieval, and the self-driving systems of autonomous vehicles [18,19]. CNN models have become remarkably complex in the pursuit of human-level performance, i.e., the number of stacked layers keeps increasing. For instance, the ResNet (short for Residual Network) family, which is commonly used as the backbone network by many state-of-the-art object recognition models, includes the ResNet 50-layer, ResNet 101-layer, and ResNet 152-layer architectures [20]. Even the smallest of these, ResNet 50-layer, contains around 25 million trainable parameters [21]. Such complex architectures rely on enormous amounts of training data to optimize the huge parameter space of the model and achieve acceptable performance.

Real applications often lack sufficient labeled training data to train such complex CNN models effectively. Thanks to publicly accessible image datasets, it has become common practice in the computer vision community to first (pre-)train novel but complex CNN models on large-scale public datasets which have been collected extensively and are suitable for more general data-driven tasks. For a specific real application, the user (or data scientist) can use the pretrained CNN model as a starting point and continue training the same model on a customized dataset, which is generally small and specific to the application. This technique is called transfer learning, which is the improvement of learning in a new task through the transfer of knowledge from a related task that has already been learned [22].

2.2. Object Tracking in Videos

Object tracking in videos is a classical computer vision problem. It requires solving several sub-tasks including (1) detecting the object in a scene, (2) recognizing the same object in every frame of the video, and (3) distinguishing a specific object from other objects, both static and dynamic. The main technical challenges related to object tracking are data association, similarity measurement, correlation, matching/retrieval, reasoning with “strong” priors, and detection with very similar examples [23].

Object tracking algorithms can be categorized as either detection-based or detection-free. Detection-based tracking algorithms rely on a pretrained object detector to generate detection hypotheses, which are used to form tracking trajectories. Detection-free tracking algorithms require manual initialization of a target object in the first frame where it appears. Detection-based tracking algorithms are of particular interest because they can automatically start tracking newly detected objects and stop tracking objects that disappear.

When a specific application does not have a large set of videos that can be used to train a deep learning-based object tracking model, tracking approaches based on conventional computer vision techniques, such as motion models and visual appearance models, are more pragmatic. For instance, SORT was initially designed for online multiple object tracking (MOT) applications [10] and ranked as the best open source multiple object tracker on the 2D MOT benchmark 2015. It consists mainly of the three components listed below.

(1) An estimation model, which is the representation and motion model used to propagate a target’s identity into the next frame. The estimation model used in the original paper is a Kalman filter.

(2) A data association function, which assigns detections to existing targets. The assignment is solved optimally using the Hungarian algorithm (a combinatorial optimization algorithm that solves the assignment problem in polynomial time and which anticipated later primal-dual methods).

(3) A function for the creation and deletion of tracker identities, which creates or deletes unique identities when objects enter and leave the image.
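The data association component can be illustrated with a minimal sketch. It is not the SORT reference implementation: the names `iou` and `associate`, the threshold `iou_min=0.3`, and the example boxes are assumptions made for illustration, and an exhaustive search over permutations stands in for the Hungarian algorithm (it finds the same optimum but is only practical for a handful of boxes). The Kalman filter prediction is omitted; `tracks` is assumed to already hold the boxes predicted for the current frame.

```python
from itertools import permutations


def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def associate(tracks, detections, iou_min=0.3):
    """Pair predicted track boxes with detections so that the total IoU is maximal.

    Returns (matches, new_detections, lost_tracks), all expressed as indices.
    """
    n_t, n_d = len(tracks), len(detections)
    # Enumerate every one-to-one assignment of the smaller set into the larger one.
    if n_t <= n_d:
        candidates = (list(enumerate(p)) for p in permutations(range(n_d), n_t))
    else:
        candidates = ([(t, d) for d, t in enumerate(p)]
                      for p in permutations(range(n_t), n_d))
    best_pairs, best_score = [], -1.0
    for pairs in candidates:
        score = sum(iou(tracks[t], detections[d]) for t, d in pairs)
        if score > best_score:
            best_pairs, best_score = pairs, score
    # Reject assigned pairs whose overlap is too small to be the same object.
    matches = [(t, d) for t, d in best_pairs
               if iou(tracks[t], detections[d]) >= iou_min]
    matched_t = {t for t, _ in matches}
    matched_d = {d for _, d in matches}
    new_detections = [d for d in range(n_d) if d not in matched_d]  # create identities
    lost_tracks = [t for t in range(n_t) if t not in matched_t]     # deletion candidates
    return matches, new_detections, lost_tracks


# Hypothetical boxes: two existing tracks and three detections in the current frame.
tracks = [(0, 0, 10, 10), (20, 20, 30, 30)]
detections = [(21, 21, 31, 31), (1, 0, 11, 10), (50, 50, 60, 60)]
matches, new_detections, lost_tracks = associate(tracks, detections)
```

In this example each track is paired with the detection that overlaps it, and the third detection, which overlaps no track, is reported as a candidate for a new identity.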

If an initial position of an object is given (e.g., a detection of the object), many modern and popular trackers can provide good enough tracking performance. In particular, OpenCV 4.4.0 (Open Source Computer Vision: https://docs.opencv.org/master/d9/df8/group__tracking.html) already provides APIs for several popular trackers including Boosting [24], CSRT [11], GOTURN [25], KCF [26], MedianFlow [27], MIL [28], MOSSE [29], and TLD [30].

5. Discussions

5.1. Detection and Tracking Performance

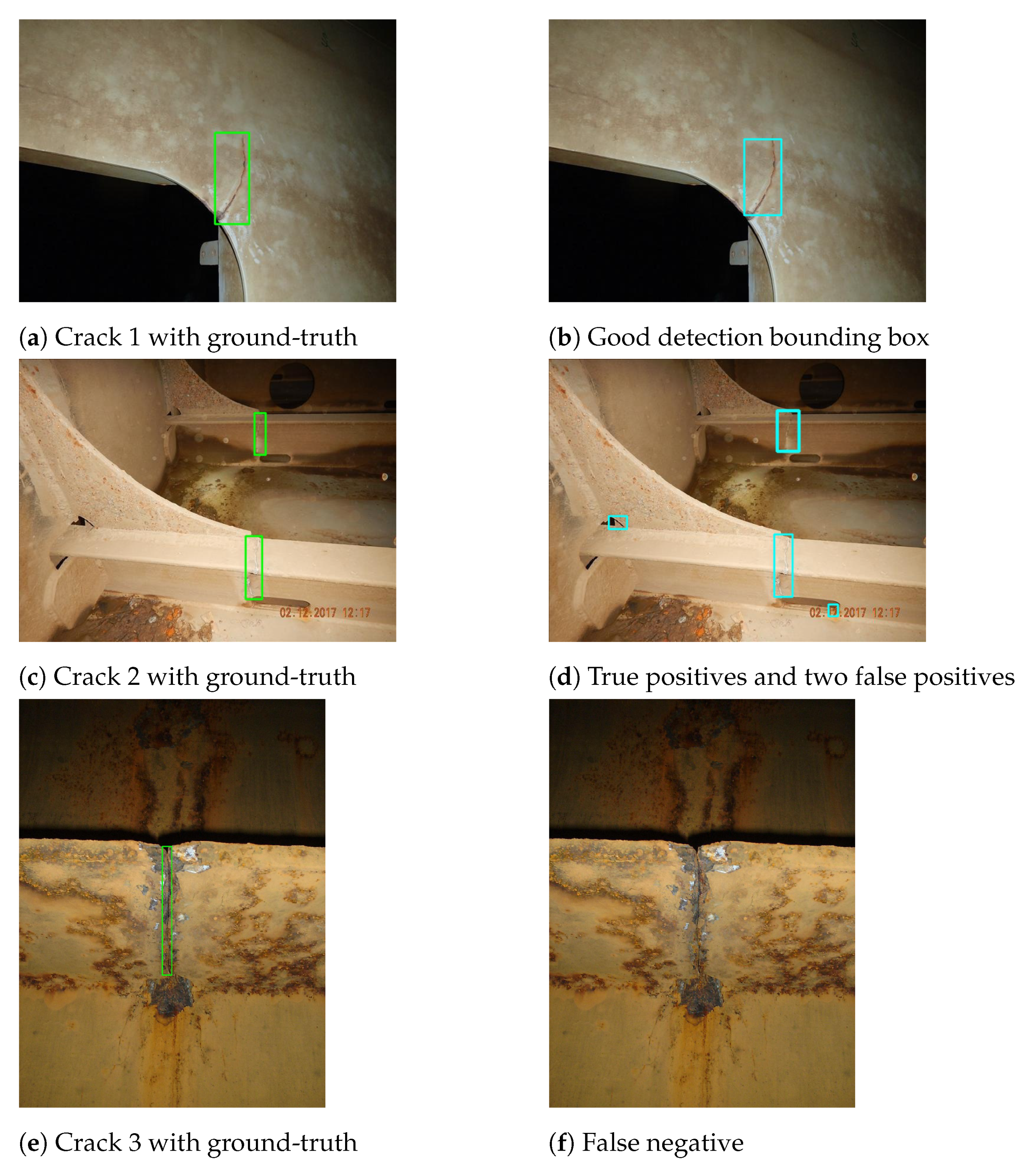

Several performance issues remain. The first is that there are still too many false negatives (FNs), although the object tracking approach adds value and reduces their number. Minimizing the number of FNs is critical in our application because cracks compromise the structural integrity of the ship hull and therefore have to be spotted and repaired.

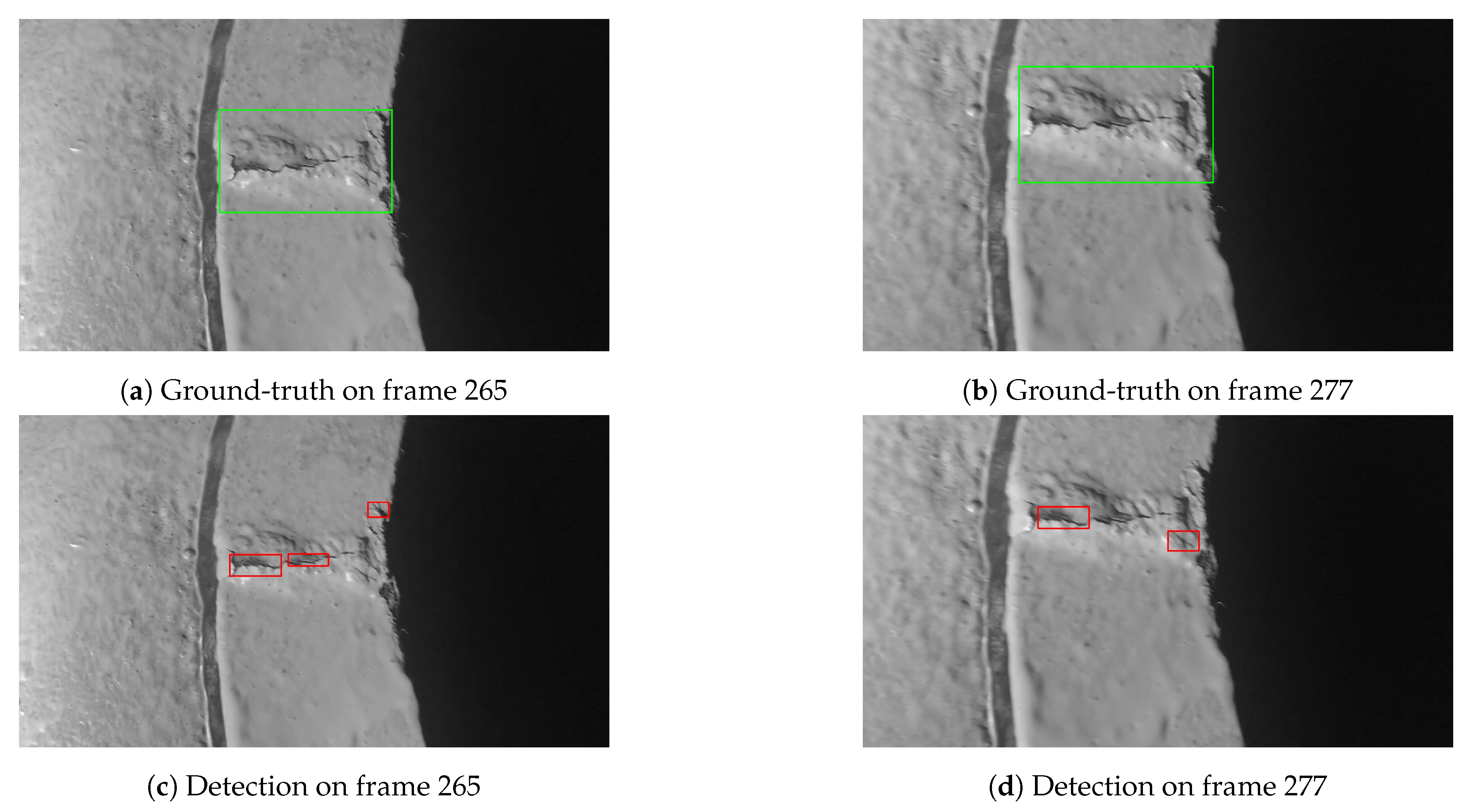

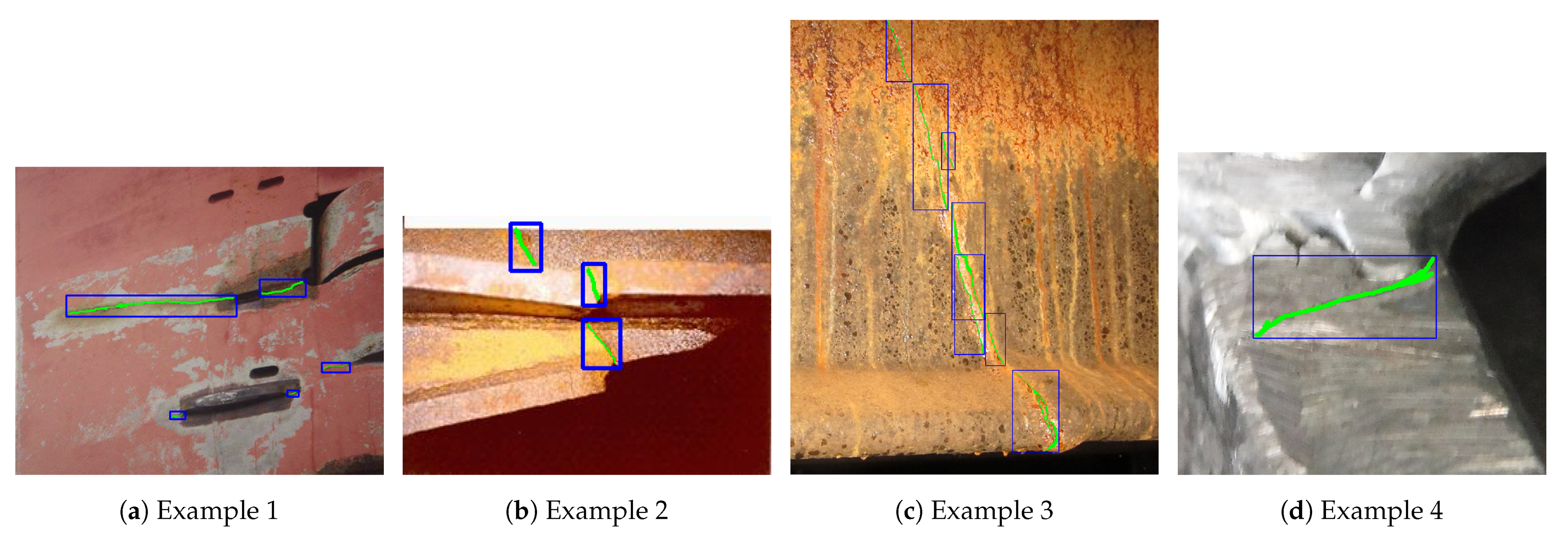

The second issue is that the customized RetinaNet model sometimes detects only a small part of an actual crack and produces severe detection jitter. One possible reason is that the training images contain a diverse range of crack scales. In addition, the irregular shapes and orientations of cracks often lead to very loose ground-truth annotations when the training images are annotated.

Figure 11 shows some example training images, in which the actual cracks were first labeled in green as a pixel-wise representation (for training a semantic segmentation model) and then localized using blue bounding boxes (for training an object detection model).

The third issue is related to the bounding box method. The actual cracks occupy only a small percentage of the bounding box areas. However, RetinaNet learns the whole bounding box as the potential “target object”, so it also learns a large number of background pixels as if they were part of the crack. In Section 6, some further work is suggested to address these issues.
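How small the crack share of a bounding box can be is easy to check on a synthetic example. The sketch below is illustrative only: the helper names `tight_box` and `crack_fraction` and the one-pixel-wide diagonal "crack" in a 100 × 100 mask are assumptions, not data from our training set.

```python
def tight_box(mask):
    """Smallest axis-aligned box (row0, col0, row1, col1), inclusive, around nonzero pixels."""
    rows = [r for r, row in enumerate(mask) if any(row)]
    cols = [c for c in range(len(mask[0])) if any(row[c] for row in mask)]
    return rows[0], cols[0], rows[-1], cols[-1]


def crack_fraction(mask):
    """Share of pixels inside the tight bounding box that belong to the crack."""
    r0, c0, r1, c1 = tight_box(mask)
    box_pixels = (r1 - r0 + 1) * (c1 - c0 + 1)
    crack_pixels = sum(sum(row) for row in mask)
    return crack_pixels / box_pixels


# Synthetic binary mask: a thin diagonal line standing in for a crack.
n = 100
mask = [[1 if r == c else 0 for c in range(n)] for r in range(n)]
fraction = crack_fraction(mask)  # 100 crack pixels in a 100 x 100 box
```

For this thin diagonal line, only 1% of the pixels inside the tightest horizontal bounding box belong to the crack; the remaining 99% are background that the detector nevertheless sees as part of the annotated object.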

In addition, the detection speed of the RetinaNet model varies significantly across GPU configurations. For instance, inference on 183 static images of various shapes under the GPU configuration described in Table 3 took 24.70 s in total, an average of 24.70 s/183 ≈ 0.135 s per image. In contrast, inference on the test video of 595 frames of identical shape under the GPU configuration described in Table 5 took 208.25 s in total, an average of 208.25 s/595 = 0.35 s per frame.
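To put these per-frame times in perspective, the short calculation below recomputes the two averages from the reported totals and extrapolates to a longer video. The function names are hypothetical, and the one-hour duration and the frame rate of 25 frames per second are assumptions chosen for illustration; they are not properties of our test footage.

```python
def average_seconds(total_seconds, n_items):
    """Average inference time per image or frame."""
    return total_seconds / n_items


def processing_hours(video_hours, fps, seconds_per_frame):
    """Hours needed to run the detector on every frame of a video."""
    frames = video_hours * 3600 * fps
    return frames * seconds_per_frame / 3600


image_avg = average_seconds(24.70, 183)   # static images, about 0.135 s each
video_avg = average_seconds(208.25, 595)  # video frames, 0.35 s each

# Assumed scenario: one hour of video at 25 frames per second, every frame processed.
estimate = processing_hours(1.0, 25, video_avg)  # about 8.75 hours
```

Under these assumptions, one hour of footage would take almost nine hours to process frame by frame, which is why detection speed matters when inspection videos last several hours.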

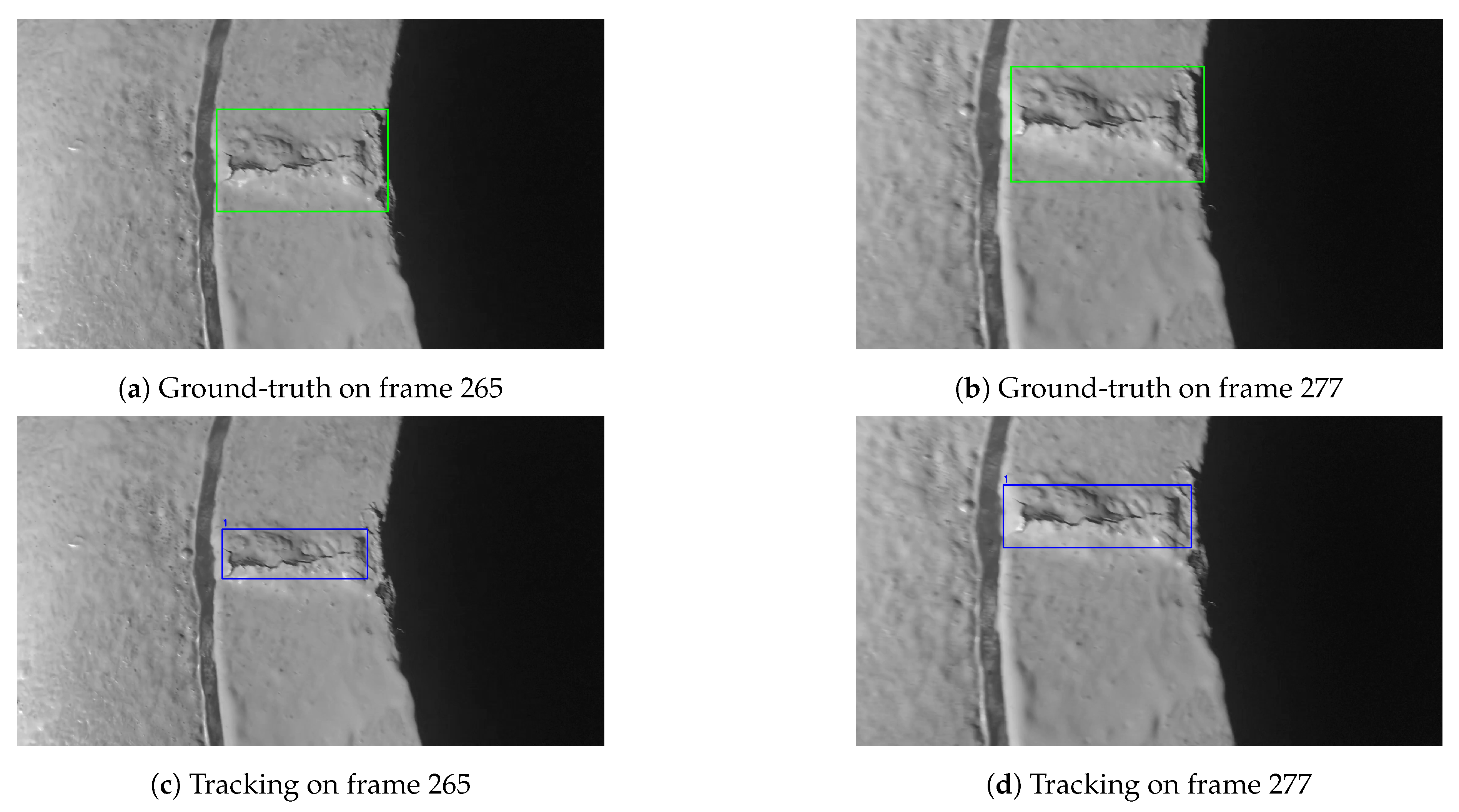

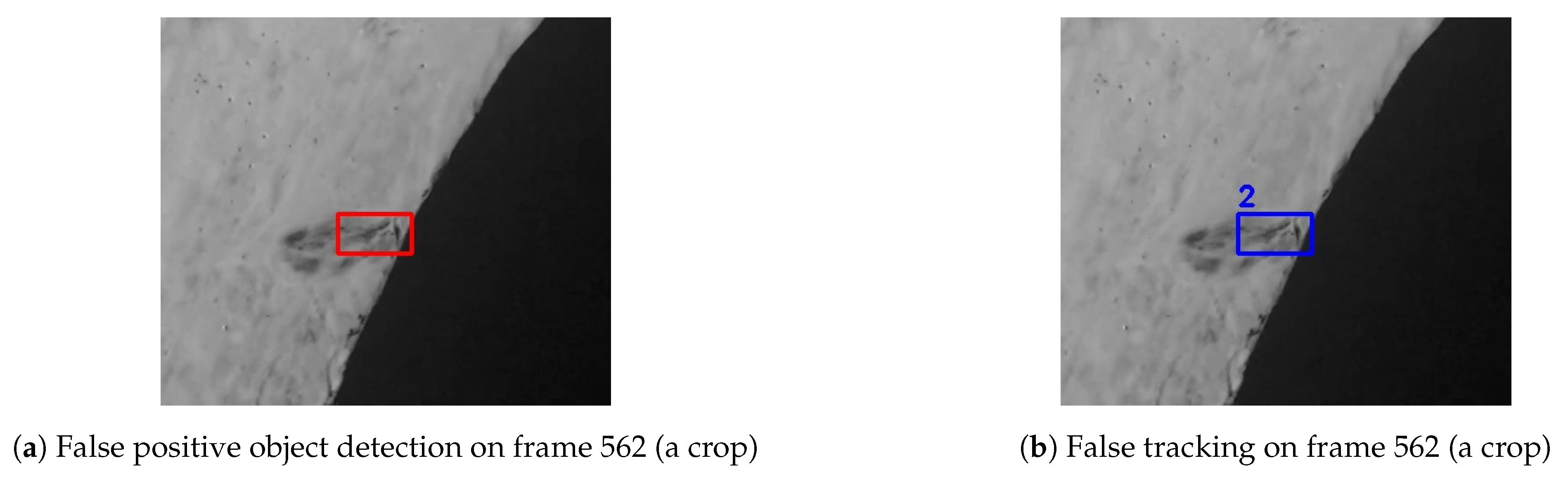

The accuracy of the tracking stage relies on the performance of the object detection model. The proposed tracking model can effectively fill intermittent FNs in the frames between two true positive (TP) detections through data association. One important measure for removing detection jitter is to consider both the IoU of the detection box and the tracking box and their respective areas, which is part of Algorithm A3. However, if the detection model predicts false positives (FPs) at the same location more than a certain number of times (a configurable parameter of the tracking model), the tracking model will lock onto this location and track it in the consecutive frames instead of removing those false detections. Thus, both improving the accuracy of the detection model and employing other postprocessing techniques are required to identify and remove false tracks.
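Both effects described above, the bridging of missed detections and the locking onto repeated detections, follow from the same kind of track bookkeeping. The sketch below is a simplified illustration rather than our tracking model: the `Track` class, the `step` function, and the parameters `min_hits=3` and `max_age=5` are hypothetical, and the box update that a visual tracker would supply during missed frames is left out.

```python
from dataclasses import dataclass


@dataclass
class Track:
    track_id: int
    hits: int = 0            # frames in which a detection was associated
    misses: int = 0          # consecutive frames without an associated detection
    confirmed: bool = False  # True once the track has been locked


def step(track, detected, min_hits=3, max_age=5):
    """Update one track for one frame and return 'pending', 'tracked', or 'dropped'."""
    if detected:
        track.hits += 1
        track.misses = 0
        if track.hits >= min_hits:
            track.confirmed = True  # repeated detections lock the location
    else:
        track.misses += 1
    if track.misses > max_age:
        return "dropped"
    return "tracked" if track.confirmed else "pending"


# 1 = the detector fired at this location in the frame, 0 = it did not.
pattern = [1, 1, 1, 0, 0, 1, 0, 0, 0, 0, 0, 0]
track = Track(track_id=1)
states = [step(track, bool(d)) for d in pattern]
```

In this run the track is confirmed at the third detection, stays alive through the two missed frames that follow (the filled FNs), and is dropped only after more than `max_age` consecutive misses. The same logic cannot tell whether the three confirming detections were TPs or FPs, which is how a repeated false detection becomes a false track.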

5.2. Validity

The training results of the object detection model (see Figure 5) show that the model achieved its best training results at the completion of Epoch 3. One possible explanation is that transfer learning with the pretrained backbone network provided initial backbone weights that were already close to their optimal values. More specifically, the backbone network is responsible for extracting the low-level image features. We therefore argue that a crack is best described as an assembly of low-level features.

The object detection model was trained, validated, and tested on static images captured by human inspectors to document findings. Those images were not intended for training machine learning models. In contrast, the detection-based tracking system was tested on video footage captured by a dedicated human inspector who consciously emulated the flying movements of a drone, using a different camera and light source. It can be argued that the video footage used for testing the tracking system is partly out-of-distribution. Therefore, the overall performance results of the detection-based tracking system are conservative. Improved detection and tracking performance can be expected in the future if the object detection model is re-trained on video footage captured by following a standardized process.

The training data of the object detection model also includes augmented images. We argue that the augmented images and the video footage have different distributions. Accordingly, the performance results of the detection-based system are conservative. We expect that the performance of the object detection model will improve in the future when it is trained on more video footage and fewer augmented images.

6. Conclusions and Further Work

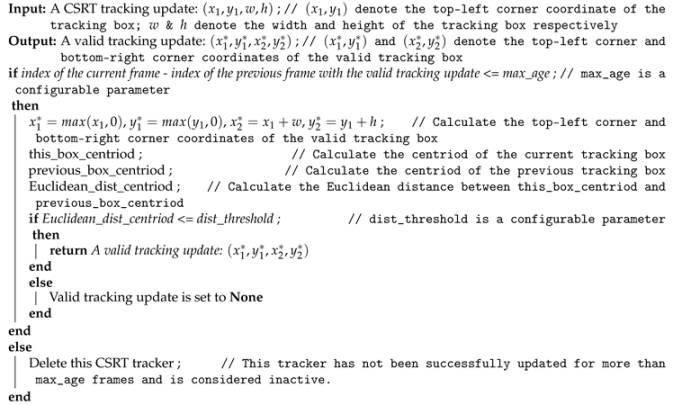

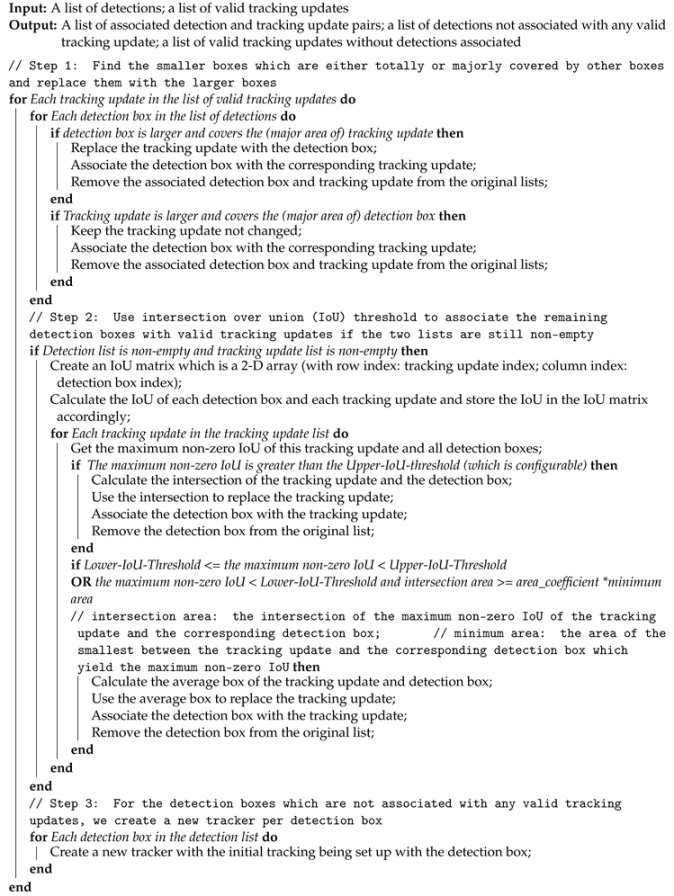

In this paper, a detection-based tracking system to detect and track cracks in ship inspection videos was proposed. The detection stage is a customized RetinaNet model with an optimal anchor setting and a postprocessing algorithm to remove redundant predictions. The tracking stage consists of two main components: (1) an enhanced CSRT tracker which, given an initial tracking target, predicts the tracking updates in the consecutive frames, and (2) a novel data association algorithm which associates detections with the existing trackers and maintains a tracking index for each unique tracking trajectory.

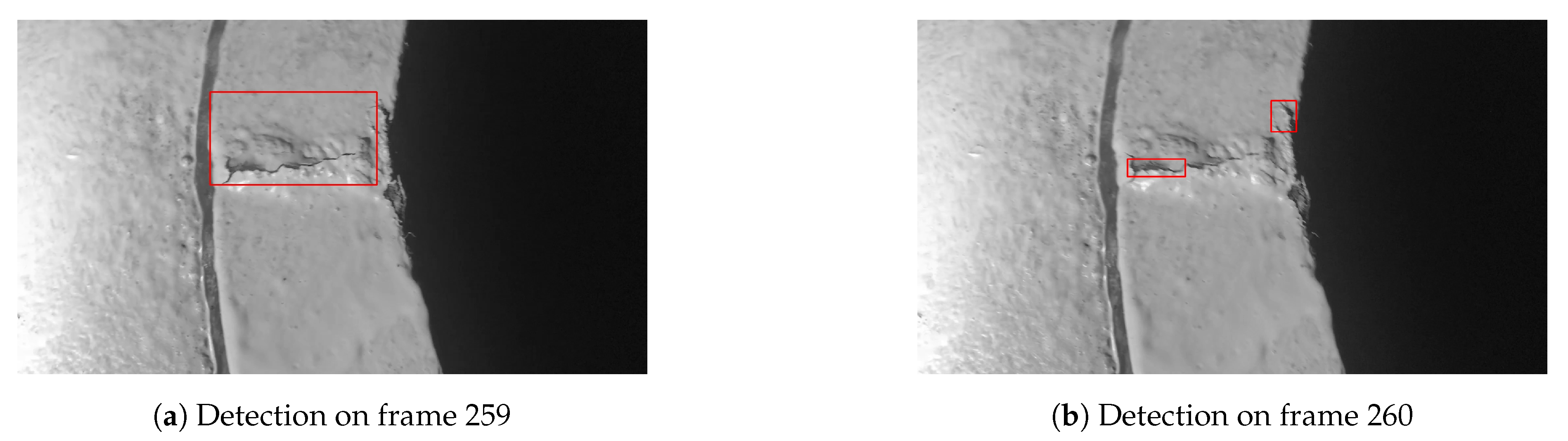

Most object detection models are prone to bounding box jitter [41]. In particular, we observed three noticeable artifacts in our test videos. First, the aspect ratios of the predicted bounding boxes may vary significantly between consecutive frames. Second, the model may predict several small bounding boxes, each of which covers only a part of the crack. Third, the model may fail to predict the crack at all in some frames of a sequence containing the crack. Detection bounding box jitter directly impacts the tracking performance. To avoid propagating detection jitter into the tracking stage, we developed a novel data association algorithm which considers not only the IoU of detection boxes and tracking updates, but also their respective areas when associating them with the same tracking trajectory. Our data association algorithm compensates for detection jitter and avoids initiating new trackers for fragmented detections, which essentially locate only a small part of an actual crack.
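The reason why areas help alongside the IoU can be seen in a small example. The sketch below is not our data association algorithm; it is one simple way to combine the two signals, and the function `belongs_to_track`, the thresholds `iou_min=0.3` and `cover_min=0.7`, and all box coordinates are assumptions for illustration. A fragment that covers only part of a tracked crack has a low IoU with the tracking box, yet it lies almost entirely inside it, so the intersection is compared with the area of the smaller box as a second test.

```python
def area(b):
    """Area of a box given as (x1, y1, x2, y2)."""
    return max(0.0, b[2] - b[0]) * max(0.0, b[3] - b[1])


def intersection(a, b):
    """Area of the overlap between two boxes."""
    w = min(a[2], b[2]) - max(a[0], b[0])
    h = min(a[3], b[3]) - max(a[1], b[1])
    return max(0.0, w) * max(0.0, h)


def belongs_to_track(det, trk, iou_min=0.3, cover_min=0.7):
    """Decide whether a detection should join an existing track instead of starting a new one."""
    inter = intersection(det, trk)
    union = area(det) + area(trk) - inter
    iou = inter / union if union > 0 else 0.0
    if iou >= iou_min:
        return True  # ordinary overlap, including moderate aspect-ratio jitter
    smaller = min(area(det), area(trk))
    # Fragment test: the smaller box is mostly contained in the larger one.
    return smaller > 0 and inter / smaller >= cover_min


track_box = (100, 100, 300, 200)   # hypothetical tracking update
jittered = (100, 90, 280, 220)     # same crack, different aspect ratio
fragment = (120, 110, 170, 150)    # small box around part of the crack
elsewhere = (400, 400, 450, 440)   # unrelated detection

results = [belongs_to_track(d, track_box) for d in (jittered, fragment, elsewhere)]
```

With an IoU test alone, the fragment (IoU of 0.1 with the tracking box) would start a new tracker; with the additional area test it is attached to the existing trajectory, while the unrelated detection is still left free to start a new one.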

This work has demonstrated the feasibility of applying CNN-based computer vision techniques to remote ship inspection. Our study showed that state-of-the-art object detection and tracking models and the relevant algorithms have to be customized or enhanced to extract patterns and features which properly represent the cracks in our images and videos. The customized RetinaNet model achieved a reasonable detection accuracy, as acknowledged by our ship inspectors. The results also demonstrate that the object tracking module adds value by reducing the number of FPs and FNs.

Further Work

To mature this model and facilitate its use in a production-ready system, we need to further reduce the number of FP detections. Several improvements are to be considered.

First, we may improve the RetinaNet detection model’s performance and combat overfitting by adding more training data, fine-tuning model hyperparameters, or using regularization techniques such as dropout.

Second, semantic segmentation models can learn the features of cracks at the pixel level. In particular, horizontal bounding boxes have some limitations for training a crack detector. Cracks often appear at different scales and with irregular shapes (e.g., mostly as lines), and the pixels representing the crack constitute a small percentage of all the pixels within the bounding box. As a result, the ground-truth annotations contain a large percentage of false positive pixels, and an object detection model trained on a dataset with such annotations learns some wrong features. We have been investigating the U-Net model [42], which was originally developed for biomedical image segmentation, in parallel with the RetinaNet model. Ensemble learning uses multiple learning models and combines the hypotheses generated by those constituent models to form a better one. The RetinaNet model is relatively heavy and relies heavily on computing power. When it is applied to a large volume of inspection videos, each of which may last a couple of hours, it will require substantially more processing time. Thus, it is desirable to integrate both the RetinaNet and U-Net models efficiently into the detection-based tracking system to improve the overall detection accuracy and robustness without compromising the detection speed too much.

Third, we have been continuously identifying and annotating more training images and video footage using semi-supervised learning to increase the size of our training dataset.

To further enable an automated inspection process, we are also developing a modular infrastructure in which data acquisition, data processing, data storage, data visualization, and inspection reporting will be integrated. The proposed detection-based tracking system will be the main component of the data processing module.